1. Introduction

Complicated components with numerous small key local features (KLFs) are commonly used in modern advanced manufacturing industries such as aerospace, marine engineering, and automotive sectors. These features, including edges, holes, grooves, and curved surfaces, often exhibit complex geometries and tight tolerances, which pose significant challenges for dimensional inspection and quality assurance. Automated and accurate 3D shape measurement of these features is critical for ensuring quality control, enhancing product reliability, and minimizing manufacturing costs [1,2,3]. As product designs become increasingly complex and lightweight, the demand for high-precision and intelligent inspection technologies continues to grow, driving the need for more effective measurement systems capable of handling diverse geometries under varying operational conditions.

Three-dimensional (3D) shape measurement techniques are generally classified into contact and non-contact approaches. Among contact-based methods, coordinate measuring machines (CMMs) equipped with tactile probes [4] offer exceptional precision but are inefficient at acquiring high-density 3D point cloud data. With the continued advancement of optical non-contact measurement technologies, line-structured light sensors based on active vision have been widely applied in industrial visual inspection and 3D shape measurement owing to their non-contact nature, high precision, and efficiency [5]. However, these sensors have limited fields of view and no intrinsic motion capability, making them unsuitable for measuring the full geometry of large objects. They are therefore typically mounted on high-precision motion platforms to enable large-area, multi-angle, and full-coverage measurements [6,7]. In recent years, structured light sensors have also been integrated with CMMs for 3D shape measurement; however, this combined approach [8] is unsuitable for online measurement because of its restrictive mechanical structure and limited measurement efficiency. In contrast to CMMs, industrial robots can efficiently execute complex spatial positioning and orientation tasks [9,10]. Equipped with a high-performance controller, a robot can also carry a vision sensor mounted on its end-effector to specified target locations. During online measurement, the spatial pose of the vision sensor changes as the end-effector moves to different positions, but the relative transformation between the sensor’s coordinate system and the end-effector remains fixed; determining this transformation is known as hand-eye calibration. Consequently, the accuracy of this calibration plays a pivotal role in the overall measurement accuracy of the system.

For hand-eye calibration, existing techniques are generally classified into three categories according to the calibration object: 2D-target-based methods, single-point or standard-sphere-based methods, and 3D-object-based methods. One of the most widely recognized hand-eye calibration methods based on a 2D calibration target was introduced by Shiu [11]. The spatial relationship between the sensor and the robot’s wrist frame was identified by analyzing the sensor’s motion in response to controlled movements of the robotic arm, typically modeled by the hand-eye calibration equation AX = XB, where A and B represent the relative motions of the robot wrist and the sensor, respectively, and X denotes the unknown transformation between the sensor frame and the wrist frame. Under general conditions, this equation has a closed-form solution, provided that at least two distinct robot motions with non-parallel rotation axes are used to resolve the inherent ambiguities in rotation and translation; the uniqueness of the solution therefore depends on the rotational characteristics of the robot’s movements. Since then, a wide range of solution methods have been developed for these calibration equations, generally categorized into linear and nonlinear algorithms [12,13]. Representative approaches include the distributed closed-loop method, the global closed-loop method, and various iterative techniques; however, these methods tend to be highly sensitive to measurement noise. In contrast to traditional hand-eye calibration methods, which often involve complex setups and labor-intensive manual procedures, Sung et al. [14] proposed a vision-based self-calibration method for industrial robotic applications. The approach uses a static 2D calibration plane and a camera mounted on the end-effector of a moving robot; by designing controlled translational and rotational movements of the robot arm, the spatial relationship between the robot and the camera can be accurately estimated without external intervention. This self-calibration strategy significantly reduces human involvement and makes robotic vision systems more practical to deploy in automated environments. To streamline the calibration of line-structured light sensors in robotic eye-in-hand systems, Wang et al. [15] introduced an integrated method that simultaneously determines the camera intrinsics, the hand-eye transformation, and the structured light plane parameters. Unlike traditional approaches that treat each parameter group separately, often resulting in complex and time-consuming workflows, this method performs all calibrations using a single image set acquired from multiple robot and target poses. By reusing the extrinsic results from camera calibration, the structured light plane and hand-eye parameters can be derived without additional data collection. The procedure requires only a low-cost planar checkerboard as the calibration target, making it both practical and accessible, and experiments demonstrated sub-millimeter accuracy with a total calibration time under 10 min. Pavlovčič et al. [16] proposed an efficient method that simultaneously estimates the intrinsic parameters of a laser profilometer and its hand-eye transformation with respect to an industrial robot. Unlike conventional approaches that rely on multiple calibration targets or sequential procedures, their method requires only a single reference geometry scanned from multiple viewpoints. By capturing the reference object from 15 different poses and numerically optimizing the transformation parameters to minimize geometric deviations, the approach achieves high accuracy with minimal setup. Experimental validation showed sub-millimeter calibration precision, with deviations below 0.105 mm on a robotic arm and 0.046 mm on a CNC system, and the fully automated workflow completes in under 10 min, which makes it well suited to regular on-site recalibration in industrial environments.

In contrast, Xu et al. [17] proposed a single-point-based calibration method that uses a single point, such as the center of a standard sphere, to compute the transformation, offering a relatively simple and practical implementation. Hand-eye calibration methods using a standard sphere of known radius have also been widely adopted to estimate the hand-eye transformation parameters [18]; these methods provide an intuitive and user-friendly way to determine both the rotation matrix and the translation vector.

Among the 3D-object-based methods, Liu et al. [19] designed a calibration target consisting of a small square with a circular hole. Feature points were extracted from line fringe images and used as reference points for calibration; however, the limited number of calibration points and the low precision of the extracted image features resulted in suboptimal calibration accuracy. Besides methods based on calibration objects, several approaches that use environmental images instead have been successfully applied to determine hand-eye transformation parameters, as reported by Sang [20] and by Nicolas and Qi [21]. To enhance the flexibility and autonomy of 3D measurement systems, Song et al. [22] proposed a hand-eye calibration method that does not rely on high-precision or specially designed calibration targets. Instead, it uses irregular, unstructured objects, capturing 3D point cloud data from multiple viewpoints to estimate the spatial relationship between the robot and the sensor. By combining a fast point feature histogram (FPFH)-based sample consensus check with an improved iterative closest point (ICP) registration algorithm that incorporates probabilistic modeling and covariance refinement, the method establishes the hand-eye transformation through robust point cloud alignment, and experiments confirmed that it can perform accurate calibration with arbitrary objects in real-world robotic applications. However, none of the methods mentioned above accounts for the robot positioning errors introduced by kinematic parameter errors during hand-eye calibration.
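The AX = XB model discussed above can be made concrete with a short least-squares sketch. It assumes the relative motions A_i (robot wrist) and B_i (sensor) are available as 4×4 homogeneous matrices whose rotation angles lie strictly between 0 and π. The rotation is solved from the axis-angle relation alpha_i = R_X beta_i with an SVD (orthogonal Procrustes) step, and the translation from (R_Ai - I) t_X = R_X t_Bi - t_Ai. This is a generic textbook two-step formulation, not the specific algorithm of any work cited here; the function names are illustrative.

```python
import numpy as np

def rodrigues(axis, angle):
    """Rotation matrix from an axis (any length) and an angle in radians."""
    k = np.asarray(axis, float) / np.linalg.norm(axis)
    K = np.array([[0, -k[2], k[1]], [k[2], 0, -k[0]], [-k[1], k[0], 0]])
    return np.eye(3) + np.sin(angle) * K + (1 - np.cos(angle)) * (K @ K)

def rotvec(R):
    """Axis-angle vector of a rotation matrix (assumes 0 < angle < pi)."""
    theta = np.arccos(np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0))
    w = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    return theta * w / (2.0 * np.sin(theta))

def solve_ax_xb(As, Bs):
    """Least-squares hand-eye matrix X (4x4) from motion pairs A_i X = X B_i."""
    # Rotation: alpha_i = R_X beta_i, solved as an orthogonal Procrustes problem.
    H = sum(np.outer(rotvec(B[:3, :3]), rotvec(A[:3, :3])) for A, B in zip(As, Bs))
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, 1.0, np.linalg.det(Vt.T @ U.T)])  # enforce det(R_X) = +1
    R_X = Vt.T @ D @ U.T
    # Translation: (R_A - I) t_X = R_X t_B - t_A, stacked over all motions.
    C = np.vstack([A[:3, :3] - np.eye(3) for A in As])
    d = np.concatenate([R_X @ B[:3, 3] - A[:3, 3] for A, B in zip(As, Bs)])
    t_X, *_ = np.linalg.lstsq(C, d, rcond=None)
    X = np.eye(4)
    X[:3, :3], X[:3, 3] = R_X, t_X
    return X
```

At least two motions with non-parallel rotation axes are required; with parallel axes, both the Procrustes step and the stacked translation system become rank-deficient, which is the ambiguity noted above.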

To overcome this limitation, many researchers have proposed various hand-eye calibration methods that account for the correction of robot kinematic parameter errors. Yin et al. [

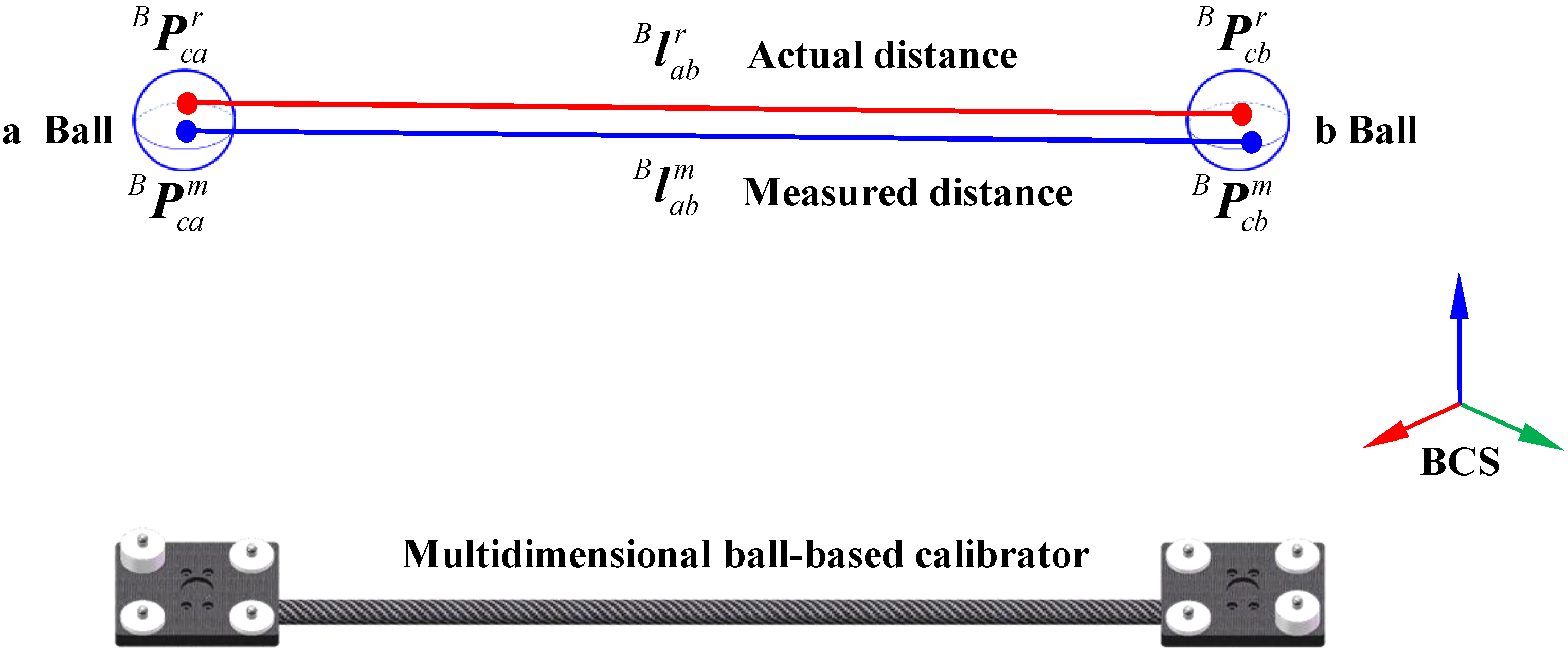

23] introduced an enhanced hand-eye calibration algorithm. Initially, hand-eye calibration is conducted using a standard sphere, without accounting for robot positioning errors. Then, both the robot’s kinematic parameters and the hand-eye relationship parameters are iteratively refined based on differential motion theory. Finally, a unified identification of hand-eye and kinematic parameter errors is accomplished using the singular value decomposition (SVD) method. However, the spherical constraint-based error model lacks absolute dimensional information, and cumulative sensor measurement errors further limit the accuracy of parameter estimation. Li et al. [

24] introduced a method that incorporates fixed-point information as optimization constraints to concurrently estimate both hand-eye transformation and robot kinematic parameters. However, the presence of measurement errors from visual sensors substantially compromises the robustness and generalizability of the resulting parameter estimations. To further optimize measurement system parameters, Mu et al. [

25] introduced a unified calibration method that simultaneously estimates hand-eye and robot kinematic parameters based on distance constraints. However, this method does not adequately address sensor measurement error correction during the solution of the hand-eye matrix, resulting in accumulated sensor inaccuracies that significantly degrade the precision of the derived relationship matrix.

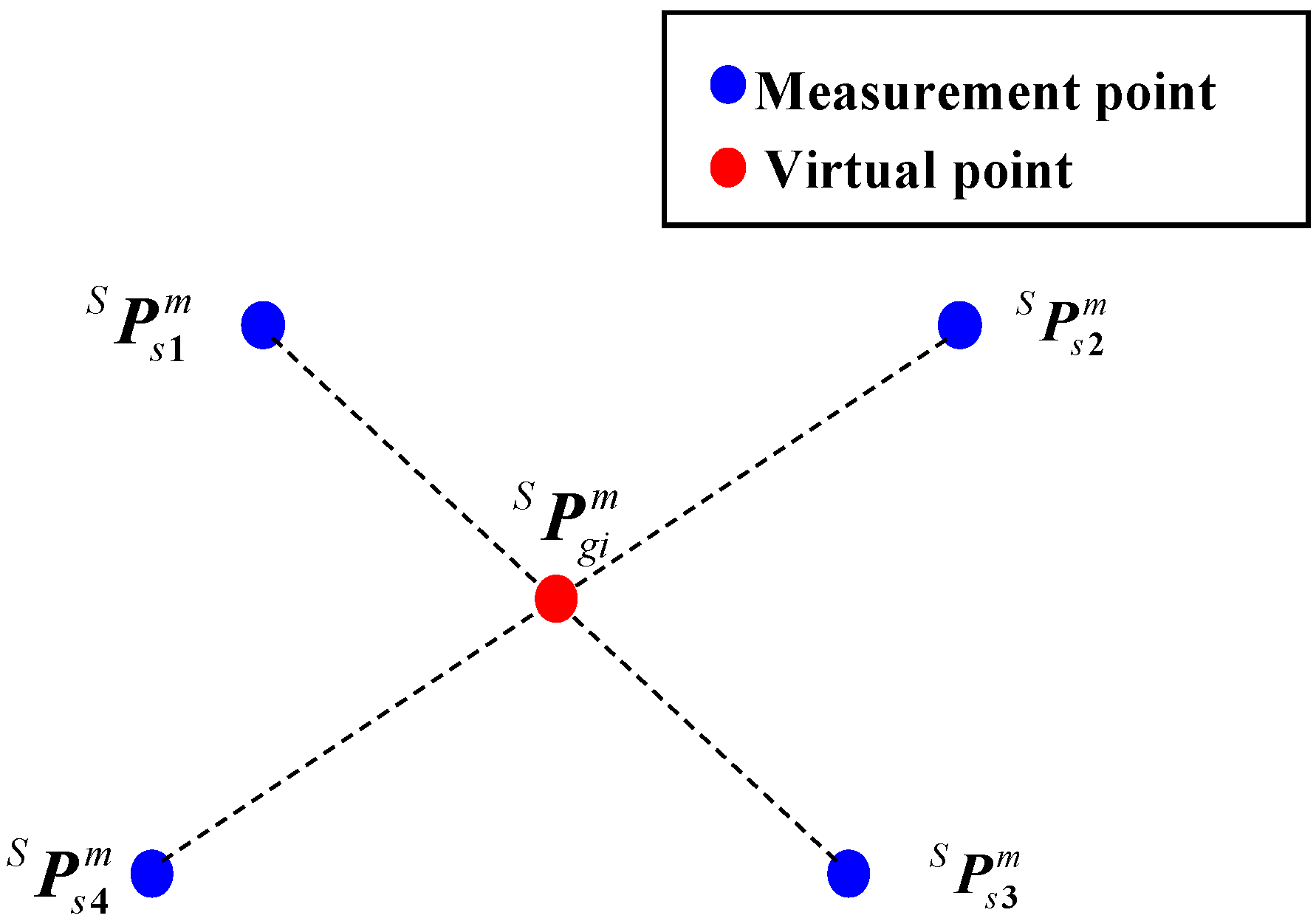

To address these limitations, we propose an accurate calibration method for a robotic flexible 3D scanning system based on a multidimensional ball-based calibrator (MBC) [26]. The method constructs a distance-based calibration model that jointly considers measurement errors, hand-eye parameter errors, and robot kinematic errors. Specifically, a preliminary hand-eye calibration method is introduced based on a single virtual point determined via the barycenter technique, with geometric-constraint-based optimization used to compensate the coordinate errors of the measurement points. A distance-constraint-based calibration method is then developed to further optimize both the hand-eye and kinematic parameters, linking system parameter errors to deviations in the measured coordinates of the single virtual point.
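A minimal sketch of the two geometric ingredients named above, under stated assumptions: the virtual point is taken as the barycenter of the sphere centers fitted on the MBC, and a distance residual compares a measured point-to-point distance with its CMM reference value. The helper names (to_base, virtual_point, distance_residual) and the choice of which point pairs enter the residual are hypothetical; the actual formulation is the one developed in Section 3.

```python
import numpy as np

def to_base(T_base_flange, X, p_scanner):
    """Map scanner-frame points (N x 3) into the robot base frame.

    T_base_flange: 4x4 flange pose reported by the robot controller.
    X:             4x4 hand-eye transformation (scanner -> flange), assumed known.
    """
    T = T_base_flange @ X
    return np.asarray(p_scanner, float) @ T[:3, :3].T + T[:3, 3]

def virtual_point(centers):
    """Barycenter of the fitted sphere centers (N x 3): one 'virtual' point."""
    return np.asarray(centers, float).mean(axis=0)

def distance_residual(q_i, q_j, d_ref):
    """Measured minus reference distance between two points (same units)."""
    return float(np.linalg.norm(np.asarray(q_i, float) - np.asarray(q_j, float)) - d_ref)
```

If the random errors of the N fitted centers are independent with equal variance, averaging them reduces the random error of the virtual point by a factor of about √N, which is one motivation for using a barycenter rather than a single sphere center.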

The remainder of this paper is organized as follows. Section 2 introduces the model of the measurement system. Section 3 outlines the proposed calibration methodology. Section 4 presents the experimental setup and accuracy validation. Finally, Section 5 concludes this study with a brief summary of the findings.

4. Experiments and Discussion

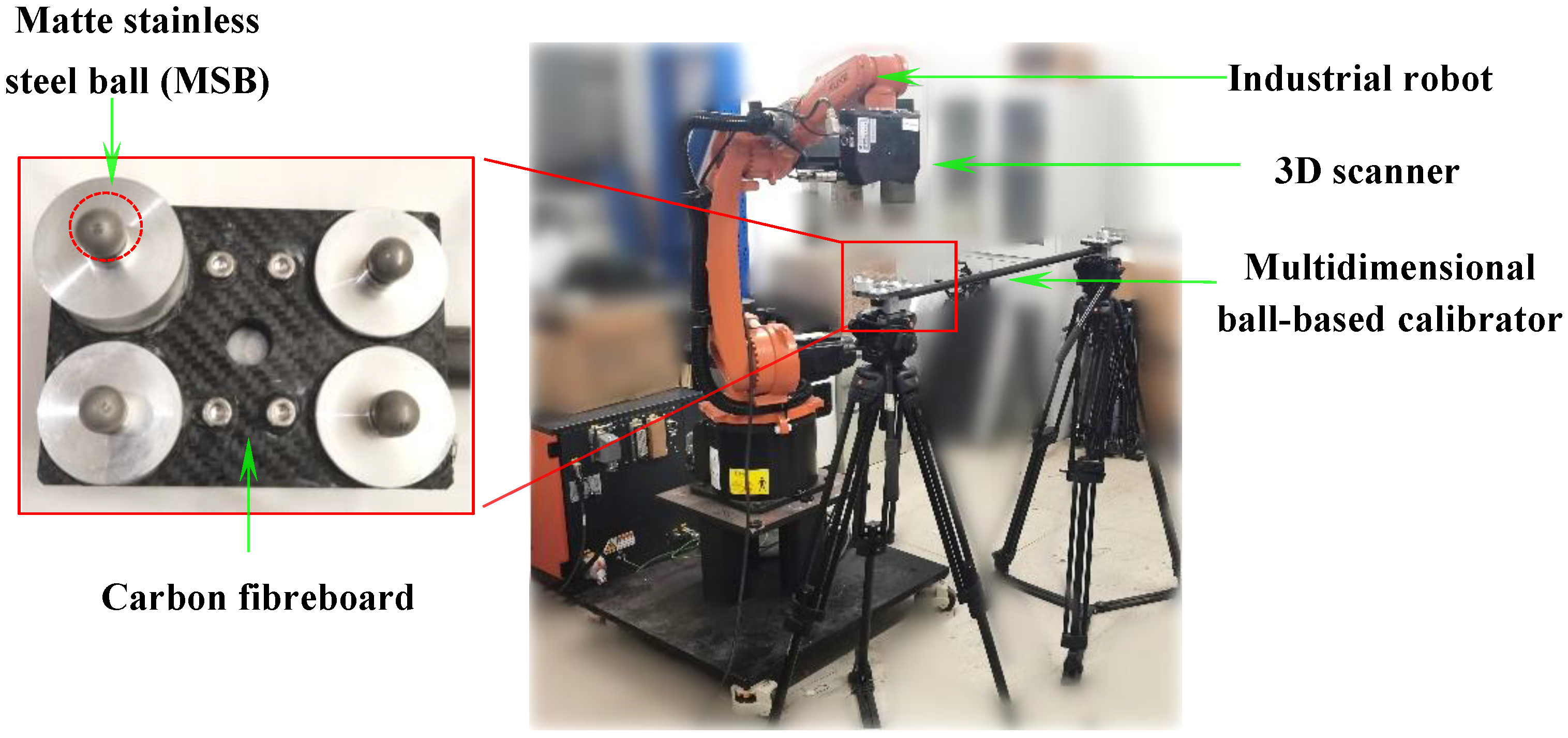

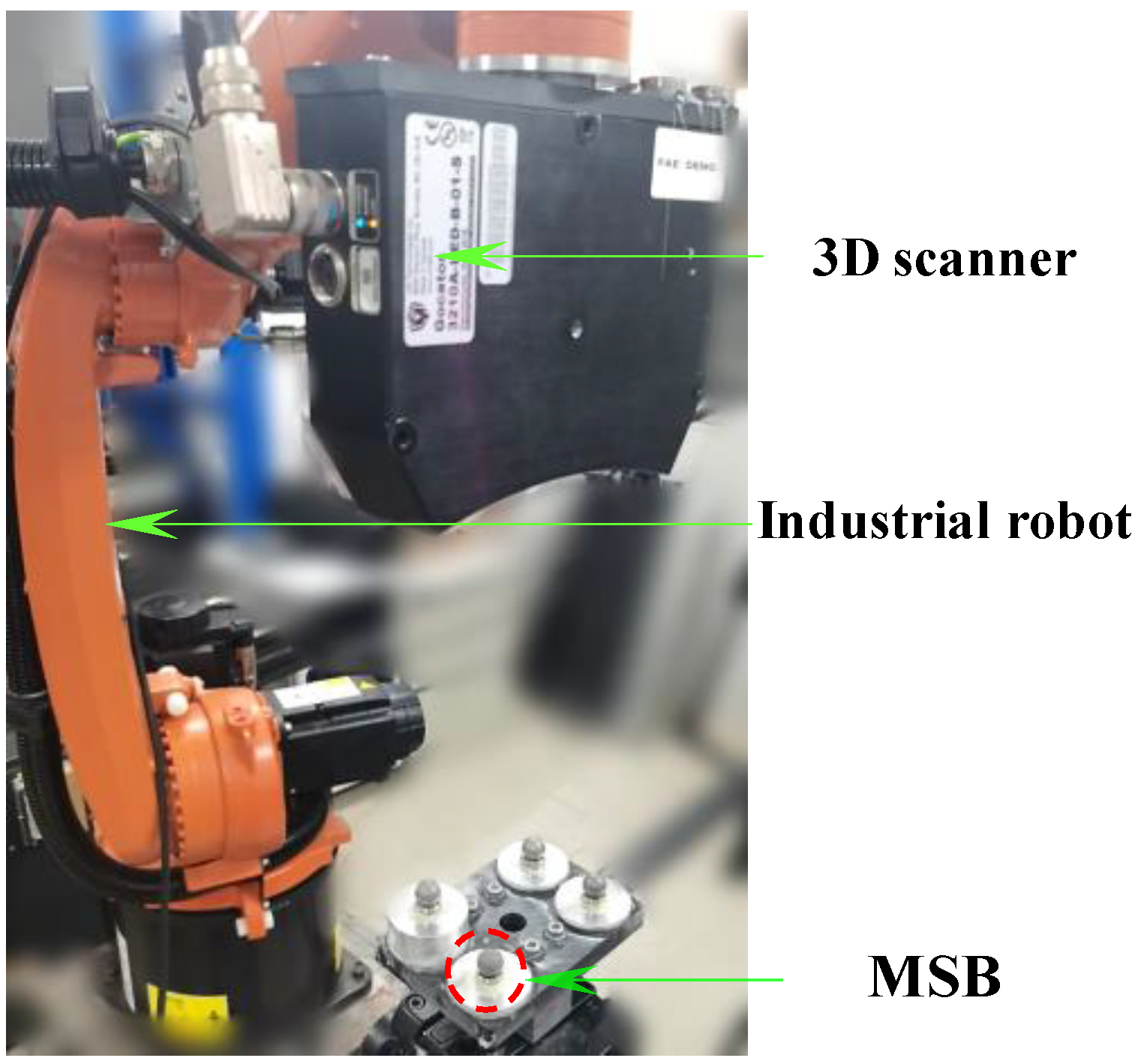

To verify the effectiveness of the proposed calibration method, a robot-scanner experimental system was established and corresponding validation experiments were conducted. As illustrated in Figure 5, the system mainly comprises a KUKA KR industrial robot (KUKA Robotics, Augsburg, Germany) with a repeatability of 0.04 mm and a structured light 3D scanner. The scanner is an LMI Gocator 3210 binocular fringe projection system (LMI Technologies Inc., Burnaby, BC, Canada), whose key performance specifications are listed in Table 1. The calibration experiments are presented in Section 4.1, and Section 4.2 assesses the calibration accuracy by measuring a metric artifact in accordance with VDI/VDE 2634 Part 3 [30]. All experiments were conducted in a stable laboratory environment, with the indoor temperature varying between 22 °C and 23 °C and the relative humidity between 55% and 60%.

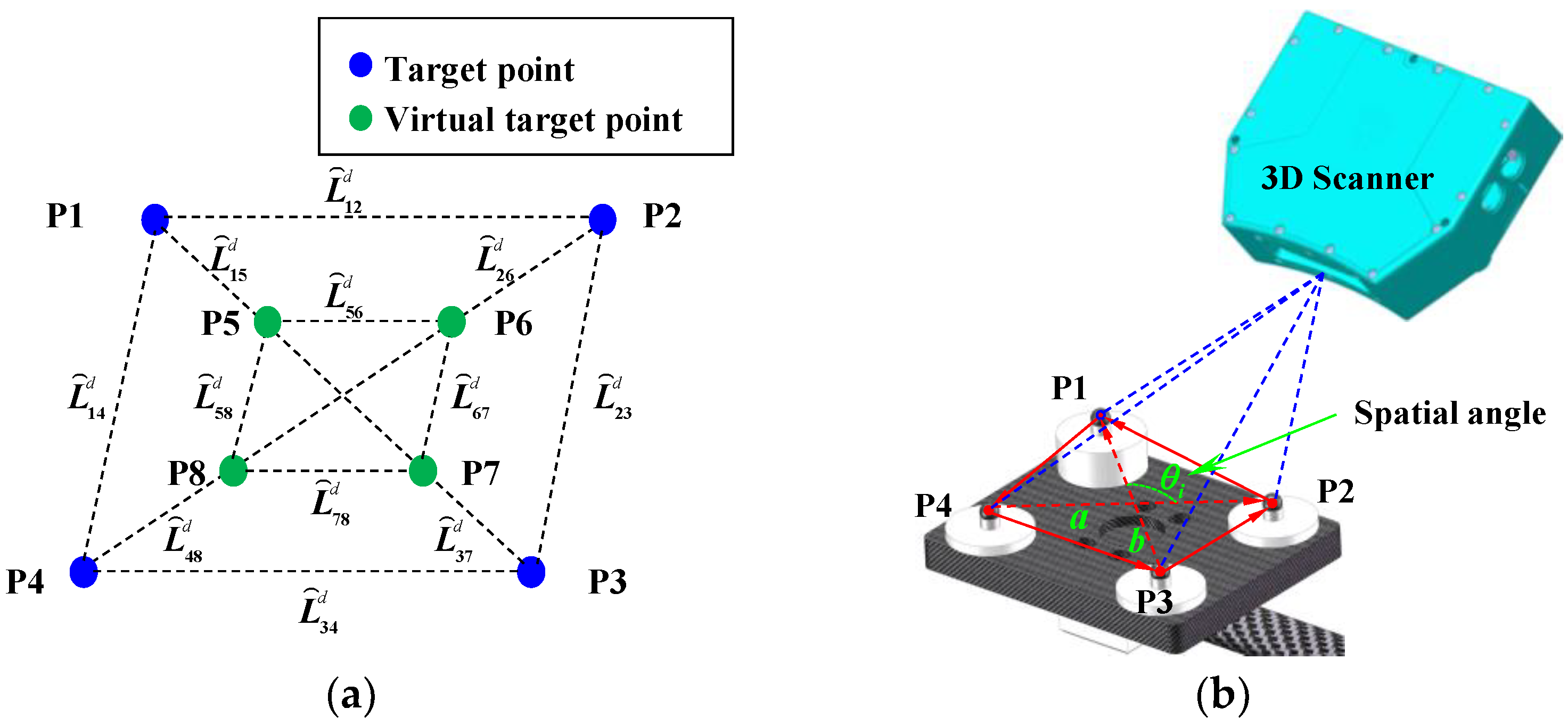

For precise calibration and performance evaluation, a customized multidimensional ball-based calibrator (MBC) with a length of 1000 mm was designed specifically for 3D scanner measurements. As shown in Figure 5, the calibrator is made of carbon fiber reinforced polymer (CFRP) and carries eight matte stainless steel balls (MSBs), each with a diameter of 12.70 mm and a roundness deviation of less than 0.002 mm. The spatial relationships among all balls were pre-calibrated using a Zeiss PRISMO coordinate measuring machine (Carl Zeiss Industrielle Messtechnik GmbH, Oberkochen, Germany), a high-precision CMM widely used in industrial metrology. The maximum inter-sphere distance on the calibrator is 1006.863 mm. According to the manufacturer, the CMM has a maximum permissible length measurement error (MPE) of

where L is the measured length in millimeters. For the typical inter-sphere distances in this study, this corresponds to a measurement error below 1.5 μm. A Type A uncertainty analysis based on repeated measurements, combined with Type B estimates (instrument specifications and environmental control), yields a conservative expanded uncertainty of ±2 μm (k = 2) for each sphere-center distance. These uncertainty estimates provide a reliable reference for evaluating the calibration accuracy of the proposed method.
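The uncertainty statement above follows the usual GUM-style combination of Type A and Type B components. The sketch below assumes that the Type A component is the standard deviation of the mean of repeated readings and that the two components are uncorrelated; the function name and inputs are illustrative, and no measured values from this study are used.

```python
import math
import statistics

def expanded_uncertainty(repeats_um, u_b_um, k=2.0):
    """Expanded uncertainty U = k * sqrt(u_A**2 + u_B**2), in micrometres.

    repeats_um: repeated readings of one distance (at least two values).
    u_b_um:     Type B standard uncertainty from specifications/environment.
    k:          coverage factor (k = 2 corresponds to roughly 95 % coverage).
    """
    n = len(repeats_um)
    u_a = statistics.stdev(repeats_um) / math.sqrt(n)  # Type A: std. dev. of the mean
    return k * math.sqrt(u_a ** 2 + u_b_um ** 2)
```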

4.1. Calibration Experiments

The accuracy of hand-eye calibration is known to be highly sensitive to the spatial distribution and diversity of the robot poses used during calibration. To ensure robust and accurate hand-eye calibration, we adopt the following pose selection strategy. First, at least 12–15 poses with varied orientations and positions are generated, covering a large portion of the robot’s reachable workspace. Second, the poses are chosen so that they are neither aligned along a single axis (collinearity) nor confined to a single plane, which increases the observability of the rotational and translational parameters. Third, every pose keeps the calibration target in the 3D scanner’s view without occlusion and with sufficient overlap for accurate point cloud registration. In particular, the end-effector orientations include rotations about different axes, and the translational displacements span different spatial quadrants.
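The collinearity and planarity criteria can be screened automatically before a calibration run. This sketch is an illustrative check rather than part of the proposed method: it inspects the singular values of the mean-centred end-effector positions, and the threshold rel_tol is an assumed value.

```python
import numpy as np

def pose_spread(positions, rel_tol=1e-3):
    """Classify end-effector positions (N x 3) as collinear, planar or spatial.

    The singular values of the mean-centred positions measure the spread along
    three orthogonal directions; values below rel_tol * largest are treated as
    zero (rel_tol is an illustrative threshold, not a recommended value).
    """
    P = np.asarray(positions, float)
    s = np.linalg.svd(P - P.mean(axis=0), compute_uv=False)
    if s[0] == 0.0:
        return "degenerate"  # all positions identical
    rank = int(np.sum(s > rel_tol * s[0]))
    return {1: "collinear", 2: "planar"}.get(rank, "spatial")
```

A similar test could be applied to the axis-angle vectors of the relative rotations to confirm that the orientation changes involve more than one rotation axis.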

To validate the effectiveness of this strategy, we conducted a comparison experiment with three pose configurations, as shown in Figure 6: (i) collinear poses, (ii) planar poses, and (iii) spatially distributed poses, all tested under the same conditions by comparing the radius error of a 0.5-inch MSB. The experimental steps were as follows. First, the hand-eye transformation matrix was estimated for each of the three pose configurations using a calibration method based on a single sphere [18]. The resulting point cloud data of the measured MSB were then transformed into the robot coordinate frame. Finally, the radius of the MSB was reconstructed and compared with its pre-calibrated reference value to obtain the radius error for each configuration, as summarized in Table 2. These results confirm that greater pose diversity significantly improves calibration accuracy and reduces numerical instability.
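The radius-error criterion used in this comparison can be reproduced with a standard algebraic least-squares sphere fit. The sketch assumes each partial scan is given in the scanner frame together with its 4×4 flange pose and a candidate hand-eye matrix X; the merged cloud is fitted and compared with the nominal 6.35 mm radius of a 0.5-inch MSB. Function names are hypothetical, and the fitting method is a common choice, not necessarily the one used to produce Table 2.

```python
import numpy as np

def fit_sphere(points):
    """Algebraic least-squares sphere fit; returns (center, radius).

    Solves |q|^2 = 2 c.q + d for c and d on mean-centred points q, then
    r = sqrt(d + |c|^2). Centring improves the numerical conditioning.
    """
    P = np.asarray(points, float)
    m = P.mean(axis=0)
    Q = P - m
    A = np.column_stack([2.0 * Q, np.ones(len(Q))])
    b = np.sum(Q ** 2, axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    c = sol[:3]
    return c + m, float(np.sqrt(sol[3] + c @ c))

def merged_radius_error(scans, flange_poses, X, r_ref=6.35):
    """Merge per-pose scans of one sphere in the base frame and compare radii.

    Errors in X (or in the flange poses) misalign the partial clouds, which
    shows up as a deviation of the fitted radius from r_ref (mm).
    """
    merged = []
    for pts, T_bf in zip(scans, flange_poses):
        T = T_bf @ X
        merged.append(np.asarray(pts, float) @ T[:3, :3].T + T[:3, 3])
    return fit_sphere(np.vstack(merged))[1] - r_ref
```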

Based on this analysis of robot pose diversity and its influence on calibration accuracy, we applied the proposed calibration method using the optimized pose configuration. The setup, procedure, and results of the calibration experiments conducted to validate the effectiveness and robustness of our method are as follows. First, an initial hand-eye calibration experiment was conducted following the method introduced in Section 3.1. The multidimensional ball-based calibrator was positioned within the robot’s workspace, and the robot was programmed to execute six translational movements and six orientation changes. To avoid singular configurations, the end-effector was translated along the X, Y, and Z axes of the robot’s base coordinate frame during the six translational movements. At each pose, the 3D scanner performed multiple measurements of the target spheres on the calibrator. The rotation matrix of the hand-eye transformation was computed from the first six sets of measurements according to Equation (7), and the translation vector was then determined from the remaining six sets, yielding the initial estimate of the hand-eye transformation matrix.
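The two-stage initial estimate can be illustrated with the standard single-sphere formulation. The sketch assumes the sphere center P is fixed in the base frame and p_k is its fitted center in the scanner frame at flange pose (R_k, t_k), so that P = R_k (R_X p_k + t_X) + t_k. Under pure translations (R_k = R_0) the unknowns P and t_X cancel and R_X follows from an SVD (Kabsch) fit; with orientation changes, t_X and P are then obtained jointly by linear least squares. This is a hedged sketch that need not coincide with Equation (7) of Section 3; all names are illustrative.

```python
import numpy as np

def kabsch(src, dst):
    """Rotation R minimizing sum ||R @ src_i - dst_i||^2 (rows are vectors)."""
    H = src.T @ dst
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, 1.0, np.linalg.det(Vt.T @ U.T)])  # exclude reflections
    return Vt.T @ D @ U.T

def rotation_from_translations(R0, t_flange, p_scan):
    """Hand-eye rotation R_X from pure flange translations at fixed orientation R0.

    From R0 R_X (p_k - p_mean) = -(t_k - t_mean), the scanner-frame shifts of
    the sphere center are aligned with the (negated) flange shifts.
    """
    t = np.asarray(t_flange, float)
    p = np.asarray(p_scan, float)
    src = p - p.mean(axis=0)        # sphere-center shifts seen by the scanner
    dst = -(t - t.mean(axis=0)) @ R0  # row-wise -R0^T (t_k - t_mean)
    return kabsch(src, dst)

def translation_from_rotations(R_X, R_flange, t_flange, p_scan):
    """Solve R_k t_X - P = -(R_k R_X p_k + t_k) for t_X and the sphere center P."""
    rows, rhs = [], []
    for R, t, p in zip(R_flange, t_flange, p_scan):
        rows.append(np.hstack([R, -np.eye(3)]))
        rhs.append(-(R @ R_X @ p + t))
    sol, *_ = np.linalg.lstsq(np.vstack(rows), np.concatenate(rhs), rcond=None)
    return sol[:3], sol[3:]  # t_X (flange frame), P (base frame)
```

Translating along three non-parallel base axes makes the shifts span 3D space, which is what keeps the Kabsch step well conditioned; the orientation changes must likewise involve several rotation axes for the stacked translation system to have full rank.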

Next, a high-precision hand-eye calibration experiment was performed. The MBC, mounted on a tripod, was successively placed at eight distinct vertical positions within the workspace, and at each position the robot’s end-effector executed eight different orientation changes. The 3D scanner captured the target spheres, and the coordinates of the sphere centers were extracted. From these measurements, a system parameter error identification model was constructed to estimate the kinematic parameter errors, which are presented in Table 3. The refined hand-eye transformation matrix was then determined through this high-precision calibration process, as shown below:

The entire calibration procedure is highly automated and completes within 8 min, enabling efficient on-site recalibration of the system when necessary without interrupting the standard workflow.
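The identification step described above, in which distance deviations are linked to kinematic and hand-eye parameter errors, is typically solved by linearizing the error model and iterating least-squares corrections. The generic sketch below assumes a user-supplied residual function (for example, measured minus CMM-reference sphere-center distances as a function of a stacked parameter vector) and uses a finite-difference Jacobian with a truncated SVD solve, so that poorly observable parameter directions are suppressed rather than amplified. The residual model, step size h, and thresholds are assumptions, not the paper’s error model.

```python
import numpy as np

def numeric_jacobian(f, x, h=1e-6):
    """Forward-difference Jacobian of a vector function f at x."""
    f0 = f(x)
    J = np.empty((f0.size, x.size))
    for j in range(x.size):
        dx = np.zeros_like(x)
        dx[j] = h
        J[:, j] = (f(x + dx) - f0) / h
    return J

def svd_step(J, r, rel_tol=1e-8):
    """Correction delta minimizing ||J delta + r|| via a truncated SVD."""
    U, s, Vt = np.linalg.svd(J, full_matrices=False)
    keep = s > rel_tol * s[0]  # drop directions the data cannot observe
    return -Vt[keep].T @ ((U[:, keep].T @ r) / s[keep])

def identify(residual_fn, x0, iters=20, tol=1e-10):
    """Gauss-Newton loop: x <- x + delta until the correction is negligible."""
    x = np.asarray(x0, float).copy()
    for _ in range(iters):
        r = residual_fn(x)
        delta = svd_step(numeric_jacobian(residual_fn, x), r)
        x += delta
        if np.linalg.norm(delta) < tol:
            break
    return x
```

In practice, an analytic Jacobian derived from the robot’s kinematic model would typically replace the finite differences, and the parameter vector would be scaled so that length and angle errors are numerically comparable.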

4.2. Accuracy Evaluation of the Proposed Calibration

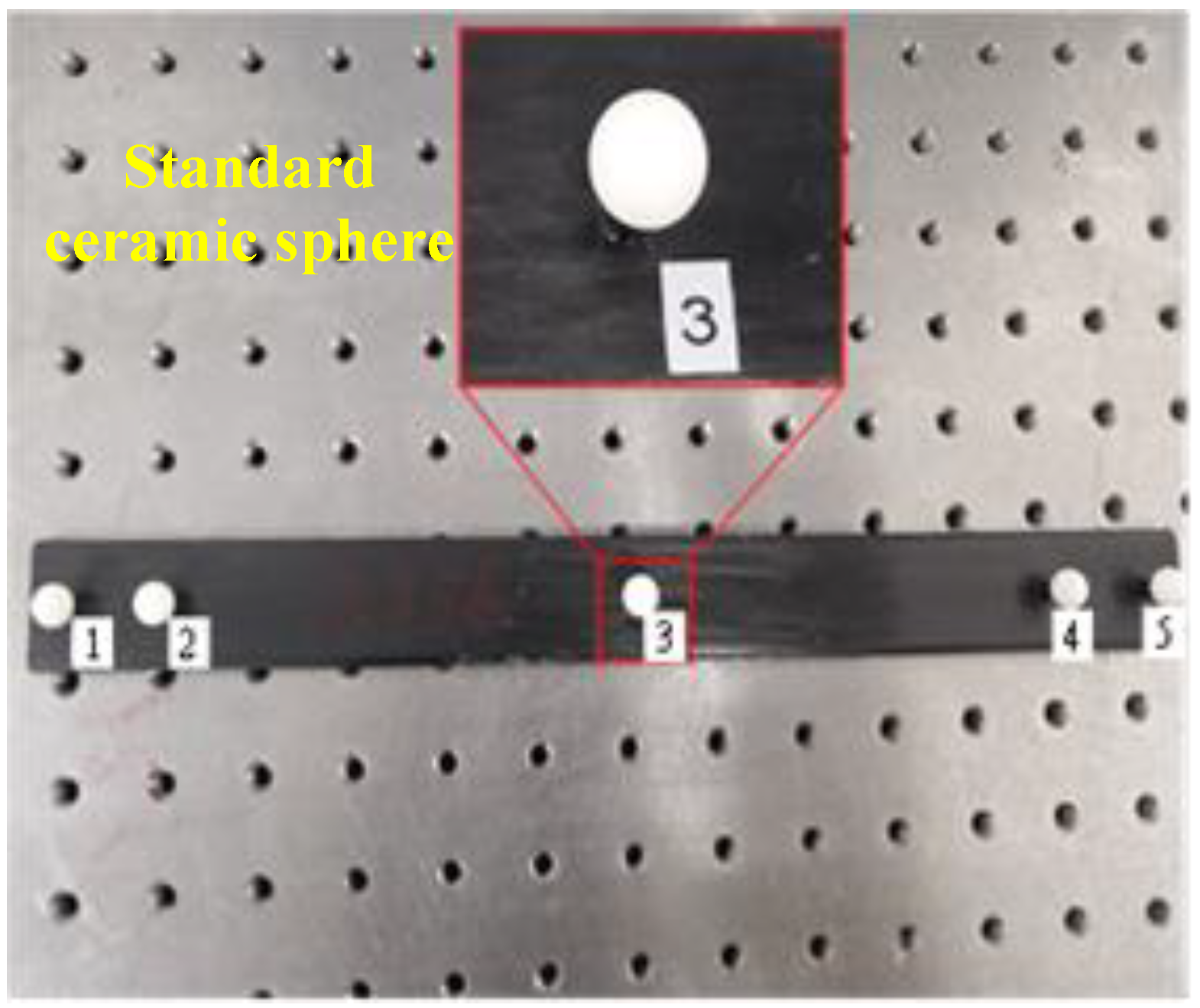

To evaluate the calibration accuracy, a standard scale was used, as shown in Figure 7. The scale consists of several homogeneous, precision-grade ceramic spheres, each with a diameter of 10.010 mm and a roundness deviation of 0.001 mm. The center-to-center distance between two selected spheres was pre-calibrated using a coordinate measuring machine (CMM) [30], yielding D15 = 299.546 mm.

The errors between the measured and reference distances of the sphere centers on the standard scale were then computed at ten different spatial positions, using the system parameters obtained both before and after calibration. The results are presented in Table 4. After calibration, the maximum error (MPE) decreased from 1.053 mm to 0.421 mm and the mean error (ME) from 0.814 mm to 0.373 mm. These results satisfy the accuracy requirements for scanning critical and hard-to-reach features, confirming the reliability and effectiveness of the proposed method.
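The two summary metrics can be computed directly from the ten distance measurements. The sketch assumes that the mean error is the mean absolute deviation from D15 (a signed mean would instead expose systematic bias); the function names are illustrative.

```python
def length_error_stats(measured_mm, reference_mm):
    """Return (maximum, mean) absolute deviation of measured lengths from a reference."""
    errors = [abs(m - reference_mm) for m in measured_mm]
    return max(errors), sum(errors) / len(errors)

def relative_reduction(before, after):
    """Fractional reduction of an error metric (0.6 means 60 % lower)."""
    return 1.0 - after / before
```

Applied to the reported summary values, relative_reduction(1.053, 0.421) and relative_reduction(0.814, 0.373) correspond to reductions of about 60% in the maximum error and about 54% in the mean error.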

To better understand the accuracy and robustness of the proposed calibration method, we performed a qualitative analysis of the major sources of error in the hand-eye and kinematic parameter calibration process. These error sources fall into three categories: (i) robot pose repeatability and mechanical uncertainty. Although the KUKA industrial robot employed in this study offers a high repeatability of ±0.04 mm, it is not immune to small deviations caused by joint backlash, compliance, or control jitter. These minor inconsistencies directly affect the transformation between the robot base and the end-effector and propagate into the final calibration results; (ii) 3D scanner measurement error. The LMI Gocator 3 series structured light scanner used in this study has a nominal accuracy better than 0.035 mm. In practice, however, factors such as surface reflectivity, ambient lighting, and sensor-to-target distance may introduce fluctuations in the measured point cloud, resulting in uncertainty in the extracted geometric features; and (iii) pose selection and geometric observability. As discussed in Section 4.1, poses that are collinear or confined to a single plane reduce the observability of the rotational and translational parameters, so an insufficiently diverse pose set makes the identified parameters more sensitive to the first two error sources.

In summary, while sensor noise and sphere fitting errors primarily affect the input data quality, the pose distribution and optimization formulation govern the observability and convergence of the calibration. These factors jointly determine the final calibration accuracy.

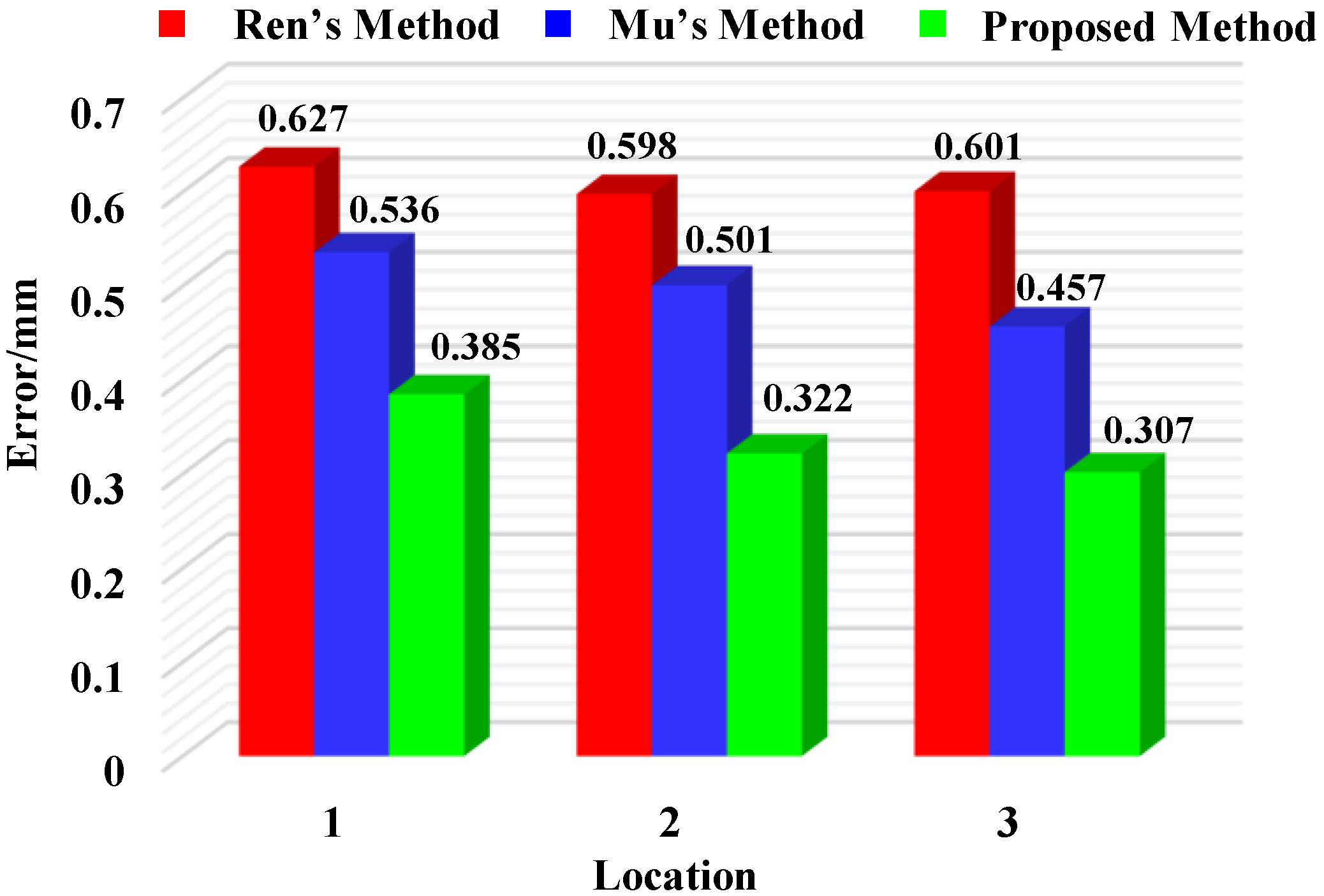

To further validate the effectiveness of the proposed calibration method, the mean sphere spacing errors at multiple positions were evaluated using the methods of Ren [18], Mu [25], and this study, as shown in Figure 8. With Ren’s method, the mean sphere spacing errors at positions 1 to 3 were 0.627 mm, 0.598 mm, and 0.601 mm, respectively. Mu’s method yielded mean errors of 0.536 mm, 0.501 mm, and 0.457 mm at the same positions, whereas the proposed method achieved lower mean errors of 0.385 mm, 0.322 mm, and 0.307 mm. These results show that the calibration technique developed in this study achieves the highest accuracy of the three methods at all three positions.
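Averaging the reported values over the three positions condenses the comparison into one number per method (unweighted means of the values quoted above, rounded to three decimals):

```latex
\begin{aligned}
\bar{e}_{\mathrm{Ren}}  &= \tfrac{1}{3}(0.627 + 0.598 + 0.601) \approx 0.609\ \mathrm{mm},\\
\bar{e}_{\mathrm{Mu}}   &= \tfrac{1}{3}(0.536 + 0.501 + 0.457) = 0.498\ \mathrm{mm},\\
\bar{e}_{\mathrm{prop}} &= \tfrac{1}{3}(0.385 + 0.322 + 0.307) = 0.338\ \mathrm{mm},\\
1 - \bar{e}_{\mathrm{prop}}/\bar{e}_{\mathrm{Ren}} &\approx 0.445, \qquad
1 - \bar{e}_{\mathrm{prop}}/\bar{e}_{\mathrm{Mu}} \approx 0.321.
\end{aligned}
```

On this basis, the proposed method lowers the average spacing error by roughly 44% relative to Ren’s method and 32% relative to Mu’s method.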