Abstract

Compressed sensing (CS) is widely used in modern resource-constrained sensor networks. However, achieving high-quality and robust signal reconstruction at low sampling rates and under noise interference remains challenging. Traditional CS methods have limited performance, so many deep learning-based CS models have been proposed. Although these models show strong fitting capabilities, they often cannot handle the complex noise found in sensor networks, which undermines their performance stability. To address these challenges, this paper proposes SBCS-Net, a framework that unfolds the iterative process of sparse Bayesian compressed sensing into a network built from convolutional neural networks (CNNs) and Transformers. The core of SBCS-Net is to optimize key sparse Bayesian learning (SBL) parameters through end-to-end learning. This allows the network to adaptively enforce signal sparsity and to model measurement noise probabilistically, while fully leveraging the feature extraction and global context modeling capabilities of deep learning modules. To evaluate its performance comprehensively, we conduct systematic experiments on multiple public benchmark datasets, including comparisons with advanced and traditional CS methods, noise robustness tests, ablation studies of key components, computational complexity analysis, and statistical significance tests. The experimental results consistently show that SBCS-Net outperforms many mainstream methods in both reconstruction accuracy and visual quality. In particular, it remains robust under challenging conditions such as extremely low sampling rates and strong noise. SBCS-Net therefore provides an effective solution for high-fidelity, robust signal recovery in sensor networks and related fields.

1. Introduction

The rapid development of sensing technology has placed higher demands on data acquisition and processing systems. Modern sensor networks, especially those used in environmental monitoring [1], remote sensing [2], and biomedical applications [3], generate massive amounts of data, posing a major challenge to conventional sampling and transmission capabilities. These networks are often deployed in resource-constrained environments and face many limitations, including low bandwidth, limited transmission capacity, real-time processing requirements, and the need for low energy consumption over long-term operation. Together, these factors create serious bottlenecks in data processing and operational efficiency.

Compressed sensing (CS) [4] is a breakthrough signal processing paradigm. It relaxes the sampling-rate requirement imposed by the Nyquist–Shannon sampling theorem [5] and offers a new approach to the challenges above. By exploiting the sparsity of signals in a suitable domain, CS can accurately reconstruct signals from far fewer measurements than traditional methods require, making it an effective tool for data acquisition and processing in many scientific and engineering fields [6,7]. In sensor networks, CS usually adopts an architecture of lightweight front-end sampling and complex back-end reconstruction; its typical workflow is shown in Figure 1. Resource-constrained sensor nodes perform low-complexity compressed sampling through random projections or structured measurements, mapping high-dimensional signals into a low-dimensional space. This significantly reduces data transmission volume, bandwidth usage, and energy consumption. The computationally intensive reconstruction task is offloaded to cloud or edge computing nodes, which use complex nonlinear algorithms to restore high-fidelity signals while meeting real-time requirements and avoiding overloading the sensor nodes. This division of labor effectively alleviates the bottlenecks in data transmission, energy consumption, and processing speed in resource-constrained sensor networks.

Figure 1. Sensor network.

Although CS theory has made significant progress, its practical application still faces serious challenges, above all achieving sufficient reconstruction accuracy and robustness under low sampling rates, complex signal structures, and noise interference. Traditional CS methods, whether based on convex optimization [8] or greedy algorithms, have clear limitations. For example, their reliance on hand-tuned hyperparameters (such as regularization weights, thresholds, step sizes, or stopping criteria) makes them inefficient and limits their accuracy; they also impose strict requirements on signal sparsity and are not robust. Although early Bayesian compressed sensing (BCS) [9] provides adaptive sparse learning and noise modeling from a probabilistic perspective to improve robustness, it has high computational complexity and remains sensitive to hyperparameters related to sparsity and noise precision. Meanwhile, deep learning-based methods avoid manual hyperparameter tuning and achieve significant performance gains through powerful nonlinear fitting. However, their designs usually focus on learning an end-to-end mapping and on network architecture, and may lack an explicit, adaptive probabilistic model of signal sparsity and measurement noise. When they encounter strong noise or extremely low sampling rates that are not adequately represented in the training data, their performance can become unstable and their generalization ability can degrade.

To compensate for the lack of explicit probabilistic modeling in existing deep learning CS methods, while exploiting the strong feature extraction and efficient computation of deep neural networks and giving the reconstruction process stronger theoretical guidance, structured sparsity constraints, and robust uncertainty handling, this paper proposes a deep Bayesian compressed sensing network (SBCS-Net). Its core design concept is that two capabilities of sparse Bayesian learning (SBL) [10], adaptive sparsity learning through the prior parameters and probabilistic noise modeling through the noise precision parameter, can provide key regularization and effective guidance for deep learning models in sensor network environments where information is extremely sparse or severely corrupted. Specifically, this study examines several key aspects of this fusion. First, it investigates how to combine the adaptive sparsity enhancement and probabilistic noise modeling capabilities of SBL with deep neural network architectures so as to overcome SBL's inherent computational limitations. Traditional SBL also suffers from hyperparameter sensitivity, especially in resource-constrained sensor networks that typically operate at low sampling rates. Second, it explores fusing the strength of convolutional neural networks (CNNs) [11] in fine-grained local feature extraction with the complementary strength of the Transformer [12] in capturing global contextual information within an iterative SBL framework, with the aim of significantly improving reconstruction accuracy and robustness for complex signal structures. 
Finally, the core goal of this study is to determine whether such a hybrid model, by learning key SBL parameters end-to-end, can strike a better balance between reconstruction fidelity, computational efficiency, and adaptability to different noise conditions and signal types, thereby providing a more robust and general solution for practical CS applications in challenging sensor network environments.

Rather than simply combining SBL with deep learning, SBCS-Net integrates the core inference mechanism of SBL into an iterative deep architecture composed of CNN and Transformer modules, and treats key SBL parameters (such as the prior precisions $\boldsymbol{\alpha}$ and the noise precision $\beta$) as components optimized internally through end-to-end learning. By learning these control parameters, SBCS-Net exploits SBL's inherent ability to adaptively enforce signal sparsity and to quantify and suppress noise, which is difficult to achieve explicitly in many purely data-driven deep learning models. At the same time, it overcomes the inherent limitations of SBL: neural networks, particularly with patch-based processing and GPU parallelism, significantly reduce its computational complexity, and end-to-end learning simplifies parameter optimization. The deep learning modules benefit in turn, because the fine-grained local detail captured by the CNN and the global dependency modeling of the Transformer provide the SBL module with higher-quality signal representations, enabling more accurate reconstruction under the guidance of the SBL probabilistic framework. This fusion of model-driven Bayesian principles and data-driven deep learning allows SBCS-Net to pursue both computational efficiency and reconstruction accuracy while offering robustness and generalization potential superior to many purely data-driven models under challenging conditions.

Therefore, through this effective fusion of model-driven and data-driven approaches, SBCS-Net is dedicated to achieving superior compressed sensing performance under challenging conditions. The main contributions of this paper include the following:

- A novel compressed sensing framework, SBCS-Net, is proposed, which achieves a deep iterative integration of the core principles of SBL with deep neural networks (CNN and Transformer). Through a unique structural design, SBCS-Net effectively combines SBL’s adaptive sparse modeling, CNN’s local feature extraction, and Transformer’s global contextual awareness capabilities. It aims to achieve higher reconstruction accuracy and better noise robustness, particularly under challenging conditions such as low sampling rates and strong noise.

- Leveraging the end-to-end learning capability of neural networks, key hyperparameters from traditional BCS—such as those controlling sparsity and noise precision—are transformed into learnable parameters within the network. This design not only significantly alleviates the difficulty of hyperparameter optimization inherent in BCS but also effectively reduces the overhead of large-scale matrix computations, while retaining the theoretical advantages of Bayesian inference in uncertainty modeling and adaptive sparsity.

- The proposed model has undergone extensive experimental validation on multiple public datasets covering diverse scenarios and has been comprehensively compared with current mainstream deep learning CS methods. Preliminary experimental results indicate that the proposed method can achieve superior reconstruction quality and visual effects under various conditions, especially in cases of low sampling rates and noisy measurements.

The remainder of this paper is organized as follows: Section 2 reviews related work in CS reconstruction methods and deep learning approaches. Section 3 presents the detailed architecture and methodology of SBCS-Net. Section 4 provides experimental results and comparative analysis. Finally, Section 5 concludes with discussions on the implications of this work and future directions.

2. Related Work

This section gives a brief presentation of related works, i.e., conventional CS theory and its Bayesian extension, DL-based models for image CS reconstruction, and Transformer architectures for modeling global dependencies. These works form the foundation of our proposed SBCS-Net framework.

2.1. Traditional Compressed Sensing

Traditional CS methods can be primarily categorized into two main schools: convex optimization algorithms and greedy algorithms.

Convex optimization methods, such as Basis Pursuit (BP) [13], the Iterative Soft-Thresholding Algorithm (ISTA) [14], and its accelerated variants (e.g., FISTA [15]), provide a solid mathematical foundation for CS by relaxing the NP-hard $\ell_0$-norm minimization problem into a tractable convex $\ell_1$-norm minimization problem. For instance, when the sensing matrix satisfies the well-known Restricted Isometry Property (RIP) and the signal is sufficiently sparse, BP is theoretically guaranteed to recover sparse signals exactly, or stably in the presence of noise. This mathematical completeness made these methods central to early CS theory. However, they typically entail high computational cost. BP, for example, may be solved by linear programming, with complexity that can reach $O(N^3)$ in some scenarios, where $N$ is the signal dimension; ISTA/FISTA have a low per-iteration cost but may require a large number of iterations, leading to high overall cost. Furthermore, their performance can degrade significantly at very low sampling rates or under complex noise.
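To make the iterative structure of ISTA concrete, the following is a minimal NumPy sketch for the $\ell_1$-regularized least-squares problem $\min_{\mathbf{x}} \tfrac{1}{2}\|\mathbf{y} - \mathbf{\Phi}\mathbf{x}\|_2^2 + \lambda\|\mathbf{x}\|_1$. The regularization weight `lam`, the iteration count, and the synthetic test problem are illustrative assumptions; the fixed step $1/L$, with $L$ the squared spectral norm of $\mathbf{\Phi}$, is the standard choice that guarantees convergence. Note that `lam` is exactly the kind of hand-tuned hyperparameter that traditional methods depend on.

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1: element-wise shrinkage toward zero."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def ista(Phi, y, lam, n_iter=5000):
    """Minimise 0.5 * ||y - Phi x||^2 + lam * ||x||_1 with a fixed step size 1/L."""
    L = np.linalg.norm(Phi, 2) ** 2          # Lipschitz constant of the gradient
    x = np.zeros(Phi.shape[1])
    for _ in range(n_iter):
        grad = Phi.T @ (Phi @ x - y)         # gradient of the data-fidelity term, O(MN)
        x = soft_threshold(x - grad / L, lam / L)
    return x

# Synthetic K-sparse test problem (illustrative sizes)
rng = np.random.default_rng(0)
N, M, K = 128, 64, 5
Phi = rng.standard_normal((M, N)) / np.sqrt(M)
x_true = np.zeros(N)
support = rng.choice(N, K, replace=False)
x_true[support] = rng.choice([-1.0, 1.0], K) * (1.0 + rng.random(K))
y = Phi @ x_true

x_hat = ista(Phi, y, lam=0.05)
rel_err = np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true)
print(f"relative error: {rel_err:.4f}")
```

A larger `lam` gives sparser estimates with more shrinkage bias; a smaller one reduces bias but slows convergence, which illustrates the accuracy/cost trade-off discussed above.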

Greedy algorithms, such as Matching Pursuit (MP), Orthogonal Matching Pursuit (OMP) [16], and Subspace Pursuit (SP) [17], build the sparse solution iteratively, selecting at each step the atom (or set of atoms) most correlated with the current measurement residual. This intuitive and efficient approach to signal approximation is their defining characteristic. A single OMP iteration mainly consists of computing correlations (approximately $O(MN)$ for an $M \times N$ sensing matrix) and solving a small least-squares problem. For a signal with sparsity $K$, the total complexity is roughly $O(KMN)$, so greedy methods are generally faster than convex optimization. However, their performance depends heavily on the signal's sparse structure and on properties of the measurement matrix such as the RIP or mutual coherence. When these conditions are not met, greedy algorithms are more prone to getting trapped in local optima and may lack universality for complex signals.
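The two steps of an OMP iteration (a correlation scan, then a least-squares refit on the selected atoms) can be sketched in NumPy as follows. This is a textbook-style illustration, assuming the sparsity $K$ is known and used as the stopping rule; the function name and the toy problem sizes are our own choices.

```python
import numpy as np

def omp(Phi, y, K):
    """Orthogonal Matching Pursuit: pick K atoms greedily, refitting by least squares."""
    residual = y.copy()
    support = []
    for _ in range(K):
        # Correlation step, about O(MN): atom most correlated with the residual
        idx = int(np.argmax(np.abs(Phi.T @ residual)))
        support.append(idx)
        # Small least-squares problem restricted to the selected atoms
        coef, *_ = np.linalg.lstsq(Phi[:, support], y, rcond=None)
        residual = y - Phi[:, support] @ coef
    x_hat = np.zeros(Phi.shape[1])
    x_hat[support] = coef
    return x_hat, sorted(support)

# Noiseless toy problem (illustrative sizes)
rng = np.random.default_rng(0)
N, M, K = 128, 96, 4
Phi = rng.standard_normal((M, N)) / np.sqrt(M)
x_true = np.zeros(N)
true_support = rng.choice(N, K, replace=False)
x_true[true_support] = rng.choice([-1.0, 1.0], K) * (1.0 + rng.random(K))
y = Phi @ x_true

x_hat, est_support = omp(Phi, y, K)
print(est_support, sorted(true_support.tolist()))
```

Because the residual is orthogonal to the atoms already chosen, an atom is not selected twice; an early wrong pick, however, is never undone, which is why OMP is sensitive to matrix coherence.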

Overall, these early methods laid the groundwork for CS theory: they pioneered signal recovery from undersampled data and provided recovery guarantees and complexity bounds under specific conditions. However, as demands grow for reconstruction quality (especially under non-ideal conditions), computational efficiency, and robustness, the inherent limitations of their original frameworks have become increasingly apparent.

2.2. Bayesian Compressed Sensing

To address certain limitations of traditional methods, BCS introduced a principled probabilistic modeling framework for the CS reconstruction problem. BCS models the reconstruction process using hierarchical Bayesian inference, providing a more comprehensive theoretical foundation for sparse signal recovery.

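Its core lies in the probabilistic representation of the measurement process:

$$p(\mathbf{y} \mid \mathbf{x}, \beta) = \mathcal{N}\left(\mathbf{y} \mid \mathbf{\Phi}\mathbf{x}, \beta^{-1}\mathbf{I}\right), \quad \text{i.e.,} \quad \mathbf{y} = \mathbf{\Phi}\mathbf{x} + \mathbf{n}, \quad \mathbf{n} \sim \mathcal{N}\left(\mathbf{0}, \beta^{-1}\mathbf{I}\right),$$

where the observation vector $\mathbf{y} \in \mathbb{R}^{M}$ is formed by the true signal $\mathbf{x} \in \mathbb{R}^{N}$ undergoing a linear transformation via the sensing matrix $\mathbf{\Phi} \in \mathbb{R}^{M \times N}$ and being superimposed with zero-mean Gaussian noise $\mathbf{n}$, where $\beta$ is the noise precision. This explicit noise modeling is key for BCS to handle uncertainty and enhance noise robustness.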

The key innovation of BCS lies in its hierarchical sparse prior structure. First, a zero-mean Gaussian prior is placed on each component $x_i$ of the signal (note: $x_i$ itself is a scalar component and thus not bolded):

$$p(x_i \mid \alpha_i) = \mathcal{N}\left(x_i \mid 0, \alpha_i^{-1}\right),$$

where the precision $\alpha_i$ controls sparsity. Subsequently, a conjugate Gamma hyperprior with shape $a$ and rate $b$ is placed on each $\alpha_i$:

$$p(\alpha_i) = \mathrm{Gamma}(\alpha_i \mid a, b),$$

to achieve adaptive learning of sparsity. This hierarchical structure is central to the adaptivity of Bayesian models.

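Based on this, the Gaussian posterior distribution of the signal $\mathbf{x}$,

$$p(\mathbf{x} \mid \mathbf{y}, \boldsymbol{\alpha}, \beta) = \mathcal{N}\left(\mathbf{x} \mid \boldsymbol{\mu}, \mathbf{\Sigma}\right),$$

can be inferred via Bayes' theorem, with its mean and covariance given by:

$$\boldsymbol{\mu} = \beta\,\mathbf{\Sigma}\mathbf{\Phi}^{\top}\mathbf{y}, \qquad \mathbf{\Sigma} = \left(\beta\,\mathbf{\Phi}^{\top}\mathbf{\Phi} + \mathbf{A}\right)^{-1},$$

where $\mathbf{A} = \mathrm{diag}(\boldsymbol{\alpha})$, and $\boldsymbol{\alpha} = [\alpha_1, \ldots, \alpha_N]^{\top}$ denotes the vector of individual precision parameters $\alpha_i$.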
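The posterior update translates directly into code. The NumPy sketch below computes $\boldsymbol{\mu}$ and $\mathbf{\Sigma}$ for given $\boldsymbol{\alpha}$ and $\beta$; the function name `bcs_posterior` and the toy sizes are hypothetical choices for illustration. It also shows the pruning effect of a very large $\alpha_i$, and the explicit $N \times N$ inversion is the step whose cost limits BCS at scale.

```python
import numpy as np

def bcs_posterior(Phi, y, alpha, beta):
    """Posterior N(mu, Sigma) of x given y, prior precisions alpha, noise precision beta.

    Sigma = (beta * Phi^T Phi + diag(alpha))^{-1},  mu = beta * Sigma Phi^T y
    """
    Sigma = np.linalg.inv(beta * Phi.T @ Phi + np.diag(alpha))  # O(N^3) inversion
    mu = beta * Sigma @ Phi.T @ y
    return mu, Sigma

rng = np.random.default_rng(1)
M, N = 20, 50
Phi = rng.standard_normal((M, N)) / np.sqrt(M)
y = rng.standard_normal(M)
alpha = np.ones(N)
alpha[0] = 1e8           # a very large precision pins x_0 near its prior mean of zero
mu, Sigma = bcs_posterior(Phi, y, alpha, beta=100.0)
print(mu[:3])
```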

This probabilistic treatment gives BCS several theoretical advantages. Automatic Relevance Determination (ARD) is achieved by inferring the hyperparameters $\boldsymbol{\alpha}$: the precisions of irrelevant components grow very large, which drives those components toward zero and prunes them. The posterior covariance $\mathbf{\Sigma}$ provides principled uncertainty quantification, and learning the noise precision $\beta$ allows observation noise to be handled more flexibly, giving BCS good noise robustness in theory. BCS thus offered the CS field an adaptive solution paradigm distinctly different from traditional optimization.
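One classical way to realize ARD is the fixed-point re-estimation used in Tipping's relevance vector machine: $\gamma_i = 1 - \alpha_i\Sigma_{ii}$ measures how well each $x_i$ is determined by the data, followed by $\alpha_i \leftarrow \gamma_i/\mu_i^2$ and $\beta \leftarrow (M - \sum_i \gamma_i)/\|\mathbf{y} - \mathbf{\Phi}\boldsymbol{\mu}\|_2^2$. The NumPy sketch below is a minimal, unoptimized illustration of that scheme, not the SBCS-Net procedure; the cap `alpha_max`, the initial values, the iteration count, and the toy problem are assumptions chosen for readability. Components whose $\alpha_i$ grows very large are effectively pruned.

```python
import numpy as np

def sbl_ard(Phi, y, n_iter=300, alpha_max=1e8):
    """Sparse Bayesian learning with MacKay-style fixed-point hyperparameter updates."""
    M, N = Phi.shape
    alpha = np.ones(N)                        # prior precisions, one per component
    beta = 1.0 / (0.1 * np.var(y))            # initial noise precision (heuristic)
    for _ in range(n_iter):
        Sigma = np.linalg.inv(beta * Phi.T @ Phi + np.diag(alpha))
        mu = beta * Sigma @ Phi.T @ y
        # gamma_i in [0, 1]: near 1 = well determined by data, near 0 = pinned by prior
        gamma = np.clip(1.0 - alpha * np.diag(Sigma), 1e-12, 1.0)
        alpha = np.minimum(gamma / (mu ** 2 + 1e-12), alpha_max)
        resid = y - Phi @ mu
        beta = max(M - gamma.sum(), 1e-6) / (resid @ resid + 1e-12)
    return mu, alpha, beta

# Noisy K-sparse toy problem (illustrative sizes)
rng = np.random.default_rng(0)
N, M, K, sigma = 128, 64, 5, 0.01
Phi = rng.standard_normal((M, N)) / np.sqrt(M)
x_true = np.zeros(N)
true_support = rng.choice(N, K, replace=False)
x_true[true_support] = rng.choice([-1.0, 1.0], K) * (1.0 + rng.random(K))
y = Phi @ x_true + sigma * rng.standard_normal(M)

mu, alpha, beta = sbl_ard(Phi, y)
print("estimated noise std:", 1.0 / np.sqrt(beta))
```

Every iteration repeats the $O(N^3)$ inversion, which makes the scaling problem discussed next very concrete.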

However, BCS also faces challenges in practice. Computing the posterior covariance requires inverting an $N \times N$ matrix, which costs $O(N^3)$ and makes BCS difficult to scale to large signals. Its performance also depends heavily on accurate estimation of the hyperparameters $\boldsymbol{\alpha}$ (the vector of all $\alpha_i$, or the parameters governing them) and $\beta$, which in traditional BCS often requires complex optimization or manual tuning. Finally, commonly used Gaussian priors may not adequately capture the complex sparse characteristics of real signals. These factors indicate that although the probabilistic framework of BCS offers profound insights for CS research, critical breakthroughs are still needed to apply it efficiently, robustly, and conveniently to diverse practical problems, especially regarding computational feasibility, automatic hyperparameter optimization, and complex prior representation. This also clarifies the direction for subsequent research, including the SBCS-Net proposed in this paper.
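A back-of-the-envelope calculation shows why the posterior covariance is the bottleneck and why block-wise processing helps. The sketch assumes a 256×256 image and a hypothetical 32×32 block size (an illustrative value, not taken from SBCS-Net), and counts only the storage of a dense float64 covariance plus the ratio of cubic inversion costs.

```python
def dense_covariance_bytes(n, bytes_per_entry=8):
    """Storage for a dense n x n covariance matrix (float64 by default)."""
    return n * n * bytes_per_entry

n_full = 256 * 256    # whole 256x256 image treated as one signal: N = 65536
n_block = 32 * 32     # hypothetical 32x32 block: N = 1024

print(dense_covariance_bytes(n_full) / 2**30, "GiB")   # 32.0 GiB
print(dense_covariance_bytes(n_block) / 2**20, "MiB")  # 8.0 MiB

# Cubic inversion cost: one full-image inversion vs. 64 independent block inversions
n_blocks = n_full // n_block                           # 64 blocks
print(n_full**3 / (n_blocks * n_block**3))             # 4096.0
```

Under these assumptions, splitting the image into blocks cuts the total inversion work by a factor of $64^2 = 4096$ and the per-block covariance to a few megabytes.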

2.3. Deep Compressed Sensing

In recent years, deep learning has brought great progress to the field of CS reconstruction, and its powerful nonlinear mapping ability has significantly improved the quality and application potential of reconstructed images. At present, DL-based compressed sensing methods can be roughly divided into two major directions: deep unfolding networks and end-to-end learning networks. Although these methods have made great progress, they still face continuous challenges and optimization needs in key aspects such as feature extraction efficiency, effective retention and transmission of information during iteration, performance stability under low sampling rates, and model generalization ability and noise robustness.

The core idea of deep unfolding networks is to map the iterative steps of a traditional optimization algorithm onto the layers of a neural network. This strategy combines model-driven theoretical guidance with data-driven parameter learning. By optimizing key internal parameters (such as iteration step sizes, sparsity thresholds, and learnable transformation modules) through end-to-end training, these networks usually achieve better reconstruction performance and faster convergence than the original algorithms, while retaining a degree of interpretability grounded in optimization theory. Many representative works have emerged in this direction. For example, ISTA-Net [18] and its successors build on proximal gradient descent, unfolding the ISTA iterations and replacing hand-crafted sparsity constraints with learned nonlinear transforms; FSOINet [19] performs the optimization in feature space. Unfolding networks based on ADMM, such as ADMM-CSNet [20] and ADMM-Net, improve on the original algorithm by introducing learnable parameters. AMP-Net [21], based on the AMP algorithm, focuses on denoising and on reducing blocking artifacts, while DRCAMP-Net [22] enlarges the receptive field by incorporating residual convolutions. Other unfolding methods include NeumNet [23], which uses the Neumann series to solve the inverse problem; TransCS, which combines an ISTA-based Transformer backbone with a CNN module for image CS (ICS) reconstruction; and OCTUF [24], which builds on the classic proximal gradient descent (PGD) method and iterates a Transformer with a cross-attention mechanism. To further improve convergence speed and modeling capability, some studies have begun to combine more advanced optimization strategies with novel sequence-modeling ideas. 
SSM-Net [25] unfolds the FISTA algorithm and integrates it with the dependency modeling of a Mamba-based state-space model (SSM), aiming to capture short- and long-range signal dependencies efficiently with linear complexity through the SSM while accelerating convergence via the momentum mechanism of FISTA. Despite this progress, as USB-Net [26] points out, many existing unfolding methods share common problems, such as the lack of information exchange between iterative reconstruction stages. This leads to inefficient feature extraction and information loss, which is particularly evident at low sampling rates as degraded detail in the reconstructed image. This is one of the core challenges that SBCS-Net addresses by integrating the Bayesian mechanism with advanced feature extraction and fusion modules.
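To illustrate the unfolding idea in its simplest form, the sketch below writes a fixed number of ISTA iterations as network "layers", each with its own step size and threshold. In a trained unfolding network these per-layer values (and, in methods such as ISTA-Net [18], learned nonlinear transforms) would be fitted by backpropagation; here they are plain NumPy arrays passed in, and the function name `unfolded_ista` is hypothetical. When every layer uses the classical values, the forward pass reduces exactly to ISTA.

```python
import numpy as np

def soft_threshold(v, tau):
    """Element-wise shrinkage toward zero (proximal operator of the l1 norm)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def unfolded_ista(Phi, y, steps, thresholds):
    """Forward pass of a len(steps)-layer unfolded ISTA network.

    Layer k: x <- soft_threshold(x - steps[k] * Phi^T (Phi x - y), thresholds[k]).
    In a learned network, steps[k] and thresholds[k] would be trainable parameters.
    """
    x = np.zeros(Phi.shape[1])
    for rho, theta in zip(steps, thresholds):
        x = soft_threshold(x - rho * Phi.T @ (Phi @ x - y), theta)
    return x

rng = np.random.default_rng(0)
M, N, n_layers = 32, 64, 10
Phi = rng.standard_normal((M, N)) / np.sqrt(M)
y = rng.standard_normal(M)
L = np.linalg.norm(Phi, 2) ** 2
lam = 0.05

# Layer parameters set to classical ISTA values (a trained network would learn them)
steps = np.full(n_layers, 1.0 / L)
thresholds = np.full(n_layers, lam / L)
x_unfolded = unfolded_ista(Phi, y, steps, thresholds)
```

The point of unfolding is that a network with only a handful of layers, whose parameters are tuned on data, can match what plain ISTA reaches only after many more iterations.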

Unlike deep unfolding networks, end-to-end learning networks directly learn a nonlinear mapping from compressed measurements to the original signals without explicitly relying on the framework of a traditional optimization algorithm. These methods take full advantage of the feature learning and end-to-end optimization capabilities of deep neural networks. CNN-based methods are a primary focus of research. The early ReconNet [27] pioneered the application of CNNs to CS recovery. Subsequent models such as CSNet+ (which learns both the sampling pattern and the reconstruction), DeepCodec [28], DR2-Net [29] (which employs residual learning for improved reconstruction), and DPA-Net [30] (which uses dual-path attention to capture structural and texture information separately) have further improved reconstruction performance. However, the inherently local receptive field of standard CNNs limits their ability to capture global image dependencies. To address this, methods such as MSCRLNet [31] employ multi-scale design and residual learning to enlarge the effective receptive field. To model long-range dependencies more directly, researchers have brought the Transformer architecture, originally developed for natural language processing, into the CS domain. TCS-Net, for instance, is a Transformer-based hierarchical framework that reconstructs images from patch-wise outlines to pixel-wise textures. Although the Transformer excels at global feature modeling thanks to self-attention, this core operation typically has computational complexity quadratic in the input sequence length $n$, i.e., $O(n^2)$, which may limit its use in scenarios that demand high computational efficiency.
Furthermore, methods based on Generative Adversarial Networks (GANs), such as CSGAN, Task-aware GAN [32], and Sub-pixel GAN [33], have shown potential for enhancing the visual realism and detail of reconstructed images through adversarial learning, but concerns remain about their training stability and the fidelity of the generated content. Other representative model-driven approaches, such as AutoBCS [34] and MAC-Net [35], have also contributed to this field. In general, these end-to-end or purely model-driven deep learning methods often surpass traditional algorithms in reconstruction speed and peak metrics and can learn their parameters automatically from data. However, their main limitations are their “black-box” nature, which lacks clear interpretability rooted in CS theory, and the strong dependence of their performance on the quantity and distribution of training data. When faced with out-of-distribution data or extremely adverse measurement conditions, their generalization ability and robustness can be severely challenged.

In summary, deep learning has injected strong momentum into CS reconstruction and achieved significant performance gains. However, many current leading methods innovate mainly at the level of network architecture engineering or training strategies, rather than building on the underlying theory of CS, such as signal sparsity and uncertainty modeling, and integrating it deeply with the representation power of deep learning. Substantial research space and pressing challenges remain in incorporating CS-specific prior knowledge into deep networks more fundamentally, substantially improving generalization and robustness in complex real-world applications, and balancing strong performance against computational efficiency and resource consumption.

2.4. Transformer

The Transformer [36] architecture, originally introduced for natural language processing, has demonstrated strong capabilities in capturing long-range dependencies due to its self-attention mechanism. In computer vision, the Transformer reformulates images as sequences of flattened patches and processes them using multi-head self-attention (MHSA) and positional encodings. Let $\mathbf{z}$ represent one such vectorized image patch from the input sequence; for each input patch $\mathbf{z}$, the positional encoding (PE) is added to retain spatial information:

$$\mathrm{PE}(pos, 2i) = \sin\left(\frac{pos}{10000^{2i/d}}\right), \qquad \mathrm{PE}(pos, 2i+1) = \cos\left(\frac{pos}{10000^{2i/d}}\right),$$

where $pos$ and $i$ are the positional index and dimension index, respectively, and $d$ is the embedding dimension. This encoding allows the Transformer to capture both spatial and semantic relationships across image patches.
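A minimal NumPy sketch of the sinusoidal encoding follows, assuming an even embedding dimension `d_model` (corresponding to $d$); the function name and the toy sizes are illustrative. The encoding is simply added to the patch embeddings.

```python
import numpy as np

def sinusoidal_pe(num_positions, d_model):
    """PE(pos, 2i) = sin(pos / 10000^(2i/d)), PE(pos, 2i+1) = cos(pos / 10000^(2i/d))."""
    pos = np.arange(num_positions)[:, None]            # (P, 1) positional indices
    i = np.arange(d_model // 2)[None, :]               # (1, d/2) dimension-pair indices
    angles = pos / np.power(10000.0, 2 * i / d_model)  # (P, d/2)
    pe = np.zeros((num_positions, d_model))
    pe[:, 0::2] = np.sin(angles)                       # even dimensions
    pe[:, 1::2] = np.cos(angles)                       # odd dimensions
    return pe

# 16 vectorized patches with 64-dimensional embeddings (illustrative sizes)
patches = np.random.default_rng(0).standard_normal((16, 64))
z = patches + sinusoidal_pe(16, 64)
```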

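In the Transformer, the MHSA mechanism plays a key role in capturing dependencies among different patches. For each attention head $h$, the queries $\mathbf{Q}_h$, keys $\mathbf{K}_h$, and values $\mathbf{V}_h$ are computed from the sequence of embedded patches $\mathbf{Z}$, and the head output is obtained by scaled dot-product attention:

$$\mathbf{Q}_h = \mathbf{Z}\mathbf{W}_h^{Q}, \quad \mathbf{K}_h = \mathbf{Z}\mathbf{W}_h^{K}, \quad \mathbf{V}_h = \mathbf{Z}\mathbf{W}_h^{V}, \qquad \mathrm{head}_h = \mathrm{softmax}\left(\frac{\mathbf{Q}_h\mathbf{K}_h^{\top}}{\sqrt{d_k}}\right)\mathbf{V}_h,$$

where $d_k$ is the dimension of each head and $\mathbf{W}_h^{Q}$, $\mathbf{W}_h^{K}$, $\mathbf{W}_h^{V}$ are learnable projection matrices. The head outputs are concatenated and linearly projected to form the MHSA output. By using multiple heads, the model can focus on various aspects of the input sequence, providing a richer representation of the image.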
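The attention computation can be sketched in a few lines of NumPy. This is a plain illustration of standard multi-head self-attention, not code from any cited model; the function name `mhsa`, the weight shapes, and the random initialization are assumptions. The $(n, n)$ weight matrix computed per head is the source of the $O(n^2)$ cost noted earlier.

```python
import numpy as np

def softmax(a, axis=-1):
    a = a - a.max(axis=axis, keepdims=True)   # subtract row max for numerical stability
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def mhsa(Z, Wq, Wk, Wv, Wo):
    """Multi-head self-attention over a sequence of n patch embeddings.

    Z: (n, d); Wq, Wk, Wv: (H, d, d_k); Wo: (H * d_k, d).
    """
    heads = []
    for h in range(Wq.shape[0]):
        Q, K, V = Z @ Wq[h], Z @ Wk[h], Z @ Wv[h]     # (n, d_k) each
        d_k = Q.shape[-1]
        A = softmax(Q @ K.T / np.sqrt(d_k))           # (n, n) weights: O(n^2) cost
        heads.append(A @ V)
    return np.concatenate(heads, axis=-1) @ Wo        # concatenate heads, project to d

rng = np.random.default_rng(0)
n, d, H = 16, 64, 4
d_k = d // H
Z = rng.standard_normal((n, d))
Wq, Wk, Wv = (rng.standard_normal((H, d, d_k)) / np.sqrt(d) for _ in range(3))
Wo = rng.standard_normal((H * d_k, d)) / np.sqrt(H * d_k)
out = mhsa(Z, Wq, Wk, Wv, Wo)
```

If all queries are zero, every row of the softmax is uniform and each output row becomes the same average of the values, a quick sanity check on the implementation.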

In the field of image CS, combining Transformer with CNN can help capture global and local dependencies. For example, TransCS [37] uses a two-stream structure where Transformer and CNN are fused together to improve performance by leveraging the strengths of both architectures.

2.5. Application Scenario: SBCS-Net in Sensor Networks

The practical application of a sophisticated algorithm like SBCS-Net within sensor networks is enabled by a specific architectural paradigm: lightweight front-end sampling and complex back-end reconstruction. In this model, the resource-constrained sensor node performs only a computationally simple linear projection to compress the acquired signal. This step’s primary purpose is to drastically reduce the amount of data for wireless transmission, thus saving critical energy and bandwidth.

The compressed, low-volume data is then sent to a powerful back-end server, which is where SBCS-Net is deployed. On this server, our algorithm executes its intensive reconstruction process to recover a high-fidelity signal. This division of labor makes it practical to leverage advanced reconstruction models, as their computational complexity does not burden the sensor nodes. Instead, the superior performance of SBCS-Net at the back-end enhances the overall efficiency and data quality of the entire sensor network system.

3. Proposed Method

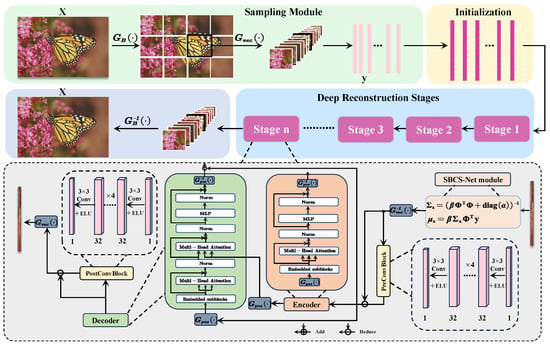

This section will elaborate on the proposed SBCS-Net framework. SBCS-Net adopts a staged and modular design, aiming to decompose the complex compressed sensing reconstruction task into a series of ordered and interconnected processing steps, ultimately achieving efficient compressive sampling from the original signal and precise recovery of the final high-quality image. Figure 2 is the framework diagram of SBCS-Net, clearly illustrating its overall data flow.

Figure 2. SBCS-Net framework.

The core workflow of this framework begins with the sampling module, which is responsible for acquiring low-dimensional compressed measurements from the original high-dimensional signal. These measurements are subsequently fed into an initial reconstruction stage, which utilizes a relatively simple mapping to quickly generate a preliminary estimate of the original signal. This preliminary estimate then serves as the input for the core deep reconstruction stage. The deep reconstruction stage employs an innovative iterative optimization mechanism, fusing Bayesian sparse estimation with deep learning techniques, to refine the signal through multi-level and systematic processing.

To ensure that all modules can be collaboratively optimized to achieve the best overall reconstruction performance, we have also designed a corresponding loss function for end-to-end network training. The following subsections will provide detailed explanations of these key components constituting SBCS-Net, along with their intrinsic working principles and optimization mechanisms.

3.1. Sampling Module

To achieve superior image reconstruction, the sampling module of SBCS-Net uses a data-driven, trainable sensing matrix. The module first divides the original image $\mathbf{X}$ into non-overlapping blocks of size $B \times B$ using a partitioning function $\mathcal{P}(\cdot)$, and then converts these blocks into one-dimensional vectors using a flattening function $\mathcal{F}(\cdot)$. The vectorized blocks are then linearly projected by a learnable sensing matrix $\mathbf{\Phi} \in \mathbb{R}^{M \times N}$. Here, $N = B^2$ denotes the dimension of a vectorized image block, and $M$ denotes the dimension of the compressed measurements at sampling rate $r$ (where $r = M/N$).

Unlike traditional methods that employ fixed random matrices, the sensing matrix in this module is an integral part of the network, with its parameters optimized through end-to-end learning. Specifically, $\mathbf{\Phi}$ is initialized from a chosen probability distribution before training and is updated throughout the training of SBCS-Net, together with all other learnable parameters, using the Adam optimizer on the final reconstruction loss. This data-driven optimization lets the sensing matrix adapt to the statistical properties of the training data and learn sampling patterns that best preserve information for subsequent reconstruction; its final element distribution is the outcome of this learning process. To simulate actual measurement scenarios more realistically, a Gaussian noise term $\mathbf{n}$ is introduced during sampling to represent the inherent imperfections and uncertainties of the measurement system. Combining these operations, the sampling module is expressed as:

$$\mathbf{y} = \mathcal{S}(\mathbf{X}) = \mathbf{\Phi}\,\mathcal{F}\big(\mathcal{P}(\mathbf{X})\big) + \mathbf{n}, \qquad \mathbf{n} \sim \mathcal{N}\left(\mathbf{0}, \beta^{-1}\mathbf{I}\right), \tag{9}$$

where $\mathcal{S}(\cdot)$ represents the entire sampling process. Compared with random matrices, a learned sensing matrix is generally more efficient for hardware implementation and requires fewer storage resources. Notably, SBCS-Net aims to obtain a universal sampling operator that generalizes well across different signal types and common noise levels by learning a single sensing matrix from diverse training data; the parameters of $\mathbf{\Phi}$ are therefore fixed after training. The model's adaptation to the characteristics of specific input signals then relies primarily on the learnable noise precision parameter $\beta$ in the Bayesian sparse estimation module of the subsequent deep reconstruction stage, together with the feature representation and generalization capabilities of the deep learning modules. Furthermore, explicitly introducing the noise term $\mathbf{n}$, governed by the precision parameter $\beta$, not only helps the model handle noise present in real measurements but also provides a basis for uncertainty quantification within the Bayesian framework. By accounting for the imperfections of real-world measurement, it enhances the robustness of the subsequent reconstruction.

According to Equation (9), the sampling process produces the compressed measurement vector $\mathbf{y}$. This vector is a compact, low-dimensional representation of the original high-dimensional signal and constitutes the core observational data on which all subsequent reconstruction stages rely.

3.2. Initial Reconstruction Stage

After obtaining the compressed measurement vector from the sampling module, the recovery process begins with the initial reconstruction stage. The objective of this stage is not to achieve a perfect reconstruction, but rather to provide an effective starting point for the subsequent deep iterative refinement. This is accomplished by applying a simple linear transformation that acts as an approximate inverse projection, mapping the low-dimensional measurements back to the high-dimensional signal space.

To perform this operation, we introduce an initial reconstruction matrix $\mathbf{Q}_{\text{init}}$. Crucially, this matrix is not a fixed pseudo-inverse; its parameters are learned end-to-end along with the rest of the network. Like the sensing matrix, which is optimized during training and then fixed during inference, $\mathbf{Q}_{\text{init}}$ is learned from data, but it is trained specifically to produce the most useful initial estimate for the subsequent stages. This data-driven approach allows the network to find an initial mapping that is superior to a simple fixed transpose operation. The resulting preliminary estimate $\mathbf{x}^{(0)}$ is calculated as:

$$\mathbf{x}^{(0)} = \mathbf{Q}_{\text{init}}\,\mathbf{y}. \tag{10}$$

Due to the inherent information loss during the compressed sensing process, the preliminary estimate obtained from this approximate inverse is naturally coarse and typically exhibits reconstruction artifacts, such as noise amplification and loss of structural details.

Despite these limitations, the preliminary estimate serves a critical purpose: rather than being a final output, it is the starting point for the deep reconstruction stages. A well-designed initial estimate provides preliminary structural information, which is more informative than a random or zero initialization, and thus helps guide the convergence of the subsequent iterative optimization and improves its efficiency. This initial reconstruction is therefore a necessary foundation for the refinement process that follows.
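One way to see why a learned initial matrix can outperform a fixed transpose or pseudo-inverse is to fit it by linear least squares on training pairs, as a closed-form stand-in for the end-to-end gradient training described above. The sketch below assumes toy signals that lie near a low-dimensional subspace; the sizes and the signal model are hypothetical and not taken from SBCS-Net.

```python
import numpy as np

def fit_initial_matrix(X, Y):
    """Least-squares Q minimizing ||X - Q @ Y||_F over all N_b x M matrices.

    X: (N_b, T) training blocks as columns; Y: (M, T) their measurements.
    Solves Y.T @ Q.T = X.T in the least-squares sense.
    """
    return np.linalg.lstsq(Y.T, X.T, rcond=None)[0].T

def relative_error(Q, X, Y):
    return np.linalg.norm(X - Q @ Y) / np.linalg.norm(X)

rng = np.random.default_rng(0)
n_pixels, M, T = 64, 16, 2000
basis = rng.normal(size=(n_pixels, 8))          # toy signals near an 8-dim subspace
X = basis @ rng.normal(size=(8, T)) + 0.01 * rng.normal(size=(n_pixels, T))
phi = rng.normal(size=(M, n_pixels)) / np.sqrt(M)
Y = phi @ X

candidates = {
    "transpose": phi.T,
    "pseudo-inverse": np.linalg.pinv(phi),
    "least-squares fit": fit_initial_matrix(X, Y),
}
errors = {name: relative_error(Q, X, Y) for name, Q in candidates.items()}
for name, err in errors.items():
    print(f"{name:>18}: {err:.3f}")
```

On the training data the least-squares fit can never be worse than any other linear map, including the two fixed choices. End-to-end training instead tunes the matrix for the final loss after all stages, so the learned matrix need not coincide with this fit.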

3.3. Deep Reconstruction Stage

The deep reconstruction stage is the core component of SBCS-Net. Its design objective is to achieve high-quality image reconstruction through a hybrid, multi-stage iterative optimization process that progressively refines the signal. The overall workflow follows Algorithm 1 and comprises n optimization stages, indexed by $k = 1, \ldots, n$. For the first stage ($k = 1$), the input is the preliminary estimate $\mathbf{x}^{(0)}$ described in Section 3.2; each subsequent stage k ($k > 1$) further refines the output $\mathbf{x}^{(k-1)}$ of the preceding stage.

Algorithm 1. Forward propagation for SBCS-Net.

Require: number of iterations n; measurements; sensing matrix; initial reconstruction matrix; trainable parameters.

Ensure: reconstructed image.

Within each optimization stage k, a structured processing architecture integrates the theoretical guidance of Bayesian sparse estimation with the representation capabilities of deep learning. Specifically, the input signal of the current stage is first analyzed and its sparse representation updated by the Bayesian sparse estimation module, which is based on a probabilistic model and detailed in Section 3.3.1. The output of this module is then passed to a series of cascaded deep learning modules responsible for deep feature extraction, complex pattern recognition, and the fusion and refinement of global and local information; their composition and working mechanisms are described in Sections 3.3.2, 3.3.3, 3.3.4, and 3.3.5. The mechanism that produces the stage output after all modules have been applied is described in Section 3.3.6.
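The stage-wise control flow described above can be sketched as a simple loop. This is a hypothetical skeleton rather than the authors' implementation: each stage is an arbitrary callable standing in for the Bayesian module, Pre-block, encoder–decoder, Post-block, and vectorization, and the toy stage in the demo is a plain gradient (Landweber) step on the data-fidelity term, chosen only because its behaviour is easy to check.

```python
import numpy as np

def sbcs_forward(y, q_init, stages):
    """Run the n-stage refinement loop.

    y: measurement vector; q_init: initial reconstruction matrix;
    stages: list of n callables, each mapping x^(k-1) -> x^(k).
    Returns the final estimate and the list of all intermediate estimates.
    """
    x = q_init @ y                  # x^(0): initial reconstruction (Section 3.2)
    history = [x]
    for stage in stages:            # k = 1, ..., n
        x = stage(x)
        history.append(x)
    return x, history

def make_toy_stage(phi, y):
    """Hypothetical stand-in stage: one gradient step on 0.5 * ||y - phi @ x||^2."""
    step = 1.0 / np.linalg.norm(phi, 2) ** 2   # step 1 / sigma_max^2 keeps the residual non-increasing
    return lambda x: x + step * phi.T @ (y - phi @ x)

rng = np.random.default_rng(0)
phi = rng.normal(size=(16, 64)) / 4.0
x_true = rng.random(64)
y = phi @ x_true
n_stages = 8                        # mirrors the iteration count reported in Section 4.1
x_final, history = sbcs_forward(y, phi.T, [make_toy_stage(phi, y) for _ in range(n_stages)])
residuals = [np.linalg.norm(y - phi @ x) for x in history]
print([round(float(r), 3) for r in residuals])
```

In SBCS-Net each stage would carry its own learned parameters and network weights; the skeleton only shows how the estimate of stage k−1 flows into stage k.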

3.3.1. Bayesian Sparse Estimation

To enforce signal sparsity and handle measurement noise in a principled, model-driven way, each reconstruction stage begins with this module, which supplies a probabilistically regularized signal for the subsequent deep learning-based refinement. Its core task is to update the sparse representation of the signal in a probabilistic sense by combining the reconstruction from the previous stage, $\mathbf{x}^{(k-1)}$ (or the initial estimate $\mathbf{x}^{(0)}$ when $k = 1$), with the parameters $\boldsymbol{\alpha}_k$ and $\beta_k$ learned at the current stage. The method builds on the probabilistic framework of BCS, which assumes that the measurements are corrupted by Gaussian noise and places a zero-mean Gaussian prior with individual precision hyperparameters on the signal coefficients to promote sparsity.

In SBCS-Net, the noise robustness and adaptivity of this module are achieved primarily by turning the key hyperparameters of traditional BCS, namely the precision vector that controls sparsity and the noise precision, into trainable parameters of stage k, denoted $\boldsymbol{\alpha}_k$ and $\beta_k$. These parameters are optimized through end-to-end training of the entire network, guided by the final reconstruction loss. In particular, learning the noise precision $\beta_k$ allows the model to adjust, based on the training data, how it perceives and responds to the noise level in the observations.

Under this probabilistic setting and parameter-learning mechanism, the posterior distribution of the signal at stage k can be approximated by a Gaussian. Its posterior covariance matrix $\boldsymbol{\Sigma}_k$ and posterior mean $\boldsymbol{\mu}_k$ (see Algorithm 1) are computed, respectively, as:

In these formulas, the learned noise precision $\beta_k$ directly modulates how strongly the estimate from the previous stage influences the current posterior mean $\boldsymbol{\mu}_k$ (acting in part indirectly through $\boldsymbol{\Sigma}_k$). Thus, when noise is high (or the previous stage's result is more uncertain), a smaller learned $\beta_k$ reduces reliance on potentially noisy data (or uncertain input) and shifts weight toward the sparse structure implied by the Gaussian prior, thereby suppressing noise. Conversely, a larger $\beta_k$ places more trust in the data from the previous stage. This adaptive adjustment is key to improving the quality of signal recovery. The sparse estimate for the current stage is this posterior mean:

$$\hat{\mathbf{x}}^{(k)} = \boldsymbol{\mu}_k. \tag{13}$$

This estimate $\hat{\mathbf{x}}^{(k)}$, the output of the Bayesian sparse estimation module at the current stage, integrates information from the preceding stage, the currently learned parameters $\boldsymbol{\alpha}_k$ and $\beta_k$, and the regularization effect of the probabilistic model. It provides the subsequent deep learning modules with a signal estimate that has already undergone initial sparsity enhancement and noise-aware weighting. Because the required matrix operations (the matrix inversion in Equation (11) and the matrix-vector multiplications in Equation (12)) are embedded in the network's computation graph and optimized by the deep learning framework, this module avoids the separate and potentially time-consuming iterative hyperparameter estimation of traditional BCS, which keeps the computational cost manageable.
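The sketch below uses the textbook sparse Bayesian learning posterior as an assumption: prior x ~ N(0, diag(α)⁻¹) and likelihood y ~ N(Φx, β⁻¹I), which give Σ = (βΦᵀΦ + diag(α))⁻¹ and μ = βΣΦᵀy. SBCS-Net's stage-k Equations (11) and (12), which also involve the previous-stage estimate and learned α_k and β_k, may differ in detail. The demo illustrates two points from the text: well-chosen precisions recover a sparse signal, and with a uniform α a smaller β pulls the posterior mean toward the prior mean of zero.

```python
import numpy as np

def sbl_posterior(phi, y, alpha, beta):
    """Gaussian posterior of a standard sparse Bayesian learning model.

    Prior:      x ~ N(0, diag(alpha)^-1)  (alpha: per-coefficient precisions)
    Likelihood: y ~ N(phi @ x, beta^-1 I) (beta: noise precision)
    Returns (Sigma, mu) with Sigma = (beta phi^T phi + diag(alpha))^-1
    and mu = beta Sigma phi^T y.
    """
    sigma = np.linalg.inv(beta * phi.T @ phi + np.diag(alpha))
    mu = beta * sigma @ phi.T @ y
    return sigma, mu

rng = np.random.default_rng(0)
M, N = 20, 50
phi = rng.normal(size=(M, N)) / np.sqrt(M)
x = np.zeros(N)
support = rng.choice(N, size=5, replace=False)
x[support] = rng.normal(size=5)
y = phi @ x                                   # noise-free measurements

# Oracle precisions: tiny on the true support, huge elsewhere (forces sparsity).
alpha_oracle = np.full(N, 1e6)
alpha_oracle[support] = 1e-2
_, mu_oracle = sbl_posterior(phi, y, alpha_oracle, beta=1e4)
print("oracle error:", np.linalg.norm(mu_oracle - x))

# With uniform alpha, a smaller beta (more assumed noise) shrinks mu toward 0.
alpha_flat = np.ones(N)
for beta in (0.1, 1.0, 100.0):
    _, mu = sbl_posterior(phi, y, alpha_flat, beta)
    print(f"beta={beta:>6}: ||mu|| = {np.linalg.norm(mu):.3f}")
```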

3.3.2. Pre-Block: Local Feature Optimization

While the Bayesian estimate provides a good sparse signal, it may lack fine-grained textures or contain minor artifacts. After the sparse estimate produced by the Bayesian sparse estimation module is reshaped into an image-domain representation, it retains its theoretical sparsity advantages but may still render local details poorly, for example through smoothing effects or slight artifacts. To address this and provide high-quality input for the subsequent global feature modeling, the image-domain estimate is fed into the Pre-block, which uses the local feature extraction and non-linear mapping capabilities of CNNs to perform initial local feature enhancement and artifact suppression. This is the first point at which the Bayesian sparse estimate and the local perception of CNNs work together to improve the signal representation.

This module typically consists of several convolutional layers, batch normalization layers, and non-linear activation functions. Its operation at stage k is formulated as a residual learning process:

$$\mathbf{X}^{(k)}_{\text{pre}} = \hat{\mathbf{X}}^{(k)} - \mathcal{F}^{(k)}_{\text{pre}}\big(\hat{\mathbf{X}}^{(k)}\big),$$

where $\hat{\mathbf{X}}^{(k)}$ denotes the image-domain representation of the sparse estimate and $\mathcal{F}^{(k)}_{\text{pre}}$ represents the CNN of the Pre-block at stage k. By learning and subtracting the correction term $\mathcal{F}^{(k)}_{\text{pre}}(\hat{\mathbf{X}}^{(k)})$, the network is guided to identify and eliminate local defects in the input, such as residual noise, blurred edges, or blocking artifacts, while enhancing useful textures and structural details. This design enables the Pre-block to effectively clean up the result of Bayesian sparse estimation. Its output, $\mathbf{X}^{(k)}_{\text{pre}}$, is a cleaner and more informative signal at the local-feature level and provides a good foundation for the global context analysis performed by the Transformer encoder.
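The residual form of the Pre-block, output = input − F(input), can be illustrated with a single-channel toy network. This sketch makes simplifying assumptions: F is two 3 × 3 convolutions with a ReLU in between (no batch normalization, no multiple channels), and the kernels are hand-set rather than learned. Choosing F(x) = x − blur(x) means that subtracting the correction leaves a blurred image, so the block behaves like a simple denoiser; with a zero second kernel the correction branch vanishes and the input passes through unchanged.

```python
import numpy as np

def conv3x3(img, kernel):
    """'Same'-size 3x3 cross-correlation with zero padding (single channel)."""
    h, w = img.shape
    padded = np.pad(img, 1)  # zero padding by default
    out = np.zeros((h, w))
    for di in range(3):
        for dj in range(3):
            out += kernel[di, dj] * padded[di:di + h, dj:dj + w]
    return out

def pre_block(x_img, k1, k2):
    """Residual correction x - F(x) with F = conv(k2) o ReLU o conv(k1)."""
    correction = conv3x3(np.maximum(conv3x3(x_img, k1), 0.0), k2)
    return x_img - correction

identity = np.zeros((3, 3))
identity[1, 1] = 1.0
box = np.full((3, 3), 1.0 / 9.0)

rng = np.random.default_rng(0)
noisy = 0.5 + 0.05 * rng.normal(size=(32, 32))   # flat gray patch plus noise

# Hand-set (hypothetical) kernels: F(x) = x - box_blur(x), so x - F(x) = box_blur(x).
denoised = pre_block(noisy, identity, identity - box)
inner = (slice(1, -1), slice(1, -1))              # ignore zero-padded borders
print(noisy[inner].std(), denoised[inner].std())
```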

3.3.3. Encoder: Global Feature Modeling

A purely local approach struggles to capture the long-range dependencies that are essential for overall structural integrity. Although the Pre-block output $\mathbf{X}^{(k)}_{\text{pre}}$ has enhanced local details, its representation may still be largely confined to neighborhood information. To capture long-range dependencies and the overall structure across regions of the image, we feed it into the Transformer encoder module $\mathcal{E}^{(k)}$. Whereas the Bayesian sparse estimation and Pre-block modules focus mainly on sparsity and local characteristics, the encoder adds a global feature modeling mechanism to SBCS-Net. This is a key step in the deep fusion of Bayesian methods, CNNs, and Transformers to capture broader context.

Before entering the encoder, the input is first divided into a sequence of image patches by a patch partitioning operation. Because the self-attention mechanism of the Transformer does not itself perceive the order or spatial position of elements in a sequence, a positional encoding (PE) is added to each patch embedding to incorporate spatial information. Let $\mathbf{Z}_0$ denote the resulting initial sequence of patch embeddings with positional information.
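Patch partitioning and positional encoding can be sketched as follows. The text does not specify the encoding type, so the fixed sinusoidal encoding of the original Transformer is used here as an assumption. The learnable linear embedding that usually maps each flattened patch to the model dimension is also omitted, so the embedding dimension is simply P × P, with P = 8 taken from Section 4.1 on the assumption that it denotes the Transformer patch size.

```python
import numpy as np

def patchify(img, p):
    """Split an (H, W) image into non-overlapping p x p patches, flattened row-major.

    Returns an array of shape (num_patches, p * p), patches in raster order.
    """
    h, w = img.shape
    if h % p or w % p:
        raise ValueError("image size must be divisible by the patch size")
    return img.reshape(h // p, p, w // p, p).transpose(0, 2, 1, 3).reshape(-1, p * p)

def sinusoidal_pe(num_tokens, dim):
    """Fixed sinusoidal positional encoding (dim must be even)."""
    pos = np.arange(num_tokens)[:, None]
    i = np.arange(dim // 2)[None, :]
    angles = pos / np.power(10000.0, 2 * i / dim)
    pe = np.zeros((num_tokens, dim))
    pe[:, 0::2] = np.sin(angles)   # even dimensions
    pe[:, 1::2] = np.cos(angles)   # odd dimensions
    return pe

P = 8
img = np.random.default_rng(0).random((32, 32))
patches = patchify(img, P)                 # (16, 64)
Z0 = patches + sinusoidal_pe(*patches.shape)
print(patches.shape, Z0.shape)
```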

The sequence $\mathbf{Z}_0$ is then fed into the Transformer encoder $\mathcal{E}^{(k)}$, which consists of L identical encoder layers stacked sequentially. Each encoder layer l ($l = 1, \ldots, L$) takes the output $\mathbf{Z}_{l-1}$ of the previous layer, with $\mathbf{Z}_0$ as the input to the first layer, and produces an output $\mathbf{Z}_l$. The core components of each layer are an MHSA module and a feed-forward network (FFN), with layer normalization (LN) and residual connections applied for stable training and effective representation. Schematically, the operations within the l-th encoder layer can be expressed as:

The output of the entire encoder after L such layers is $\mathbf{Z}^{(k)} = \mathbf{Z}_L$. The overall encoder operation can thus be summarized as:

$$\mathbf{Z}^{(k)} = \mathcal{E}^{(k)}(\mathbf{Z}_0). \tag{17}$$

The MHSA module models the relationships among all elements of the input sequence. It computes multiple self-attention “heads” in parallel, allowing the model to jointly attend to information from different representation subspaces at different positions. Each head operates on query (Q), key (K), and value (V) matrices, typically derived from the input $\mathbf{Z}$ through learnable linear projections $\mathbf{Q} = \mathbf{Z}\mathbf{W}^{Q}$, $\mathbf{K} = \mathbf{Z}\mathbf{W}^{K}$, and $\mathbf{V} = \mathbf{Z}\mathbf{W}^{V}$, where $\mathbf{W}^{Q}$, $\mathbf{W}^{K}$, and $\mathbf{W}^{V}$ are parameter matrices. The core computation of a single head is scaled dot-product attention:

$$\operatorname{Attention}(\mathbf{Q}, \mathbf{K}, \mathbf{V}) = \operatorname{softmax}\!\left(\frac{\mathbf{Q}\mathbf{K}^{\top}}{\sqrt{d_k}}\right)\mathbf{V},$$

where $d_k$ is the dimension of the key vectors. The outputs of the heads are concatenated and linearly projected to produce the final MHSA output.
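A compact NumPy version of multi-head self-attention, written to mirror the description above (projections, per-head scaled dot-product attention, concatenation, output projection), is sketched below. The weight matrices are random placeholders, not trained SBCS-Net parameters. The final check illustrates why positional encoding is needed: without it, permuting the input tokens merely permutes the outputs.

```python
import numpy as np

def softmax(a, axis=-1):
    a = a - a.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(Z, Wq, Wk, Wv, Wo, num_heads):
    """Z: (S, d) token sequence; W*: (d, d) projections. Returns (output, weights)."""
    S, d = Z.shape
    dk = d // num_heads                        # per-head key dimension d_k

    def split_heads(M):                        # (S, d) -> (heads, S, dk)
        return M.reshape(S, num_heads, dk).transpose(1, 0, 2)

    Q, K, V = (split_heads(Z @ W) for W in (Wq, Wk, Wv))
    weights = softmax(Q @ K.transpose(0, 2, 1) / np.sqrt(dk))   # (heads, S, S)
    heads = weights @ V                                          # (heads, S, dk)
    concat = heads.transpose(1, 0, 2).reshape(S, d)              # concatenate heads
    return concat @ Wo, weights

rng = np.random.default_rng(0)
S, d, h = 16, 64, 4
Z = rng.normal(size=(S, d))
Wq, Wk, Wv, Wo = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(4))
out, w = multi_head_self_attention(Z, Wq, Wk, Wv, Wo, num_heads=h)
print(out.shape, w.shape)  # (16, 64) (4, 16, 16)

perm = rng.permutation(S)
out_perm, _ = multi_head_self_attention(Z[perm], Wq, Wk, Wv, Wo, num_heads=h)
print(np.allclose(out_perm, out[perm]))  # True
```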

By stacking these layers, the encoder can dynamically capture the interdependencies among all regions of the input image, regardless of spatial distance. Its output, $\mathbf{Z}^{(k)}$, is a feature representation that encodes global context, capturing the content of each image patch and its relations within the overall image structure. This provides crucial global guidance for the subsequent decoder.

3.3.4. Decoder: Signal Reconstruction to Image Space

To synthesize a coherent image, the abstract global context must be combined effectively with concrete local details. The globally informed features $\mathbf{Z}^{(k)}$ generated by the Transformer encoder (Section 3.3.3) and the Pre-block output $\mathbf{X}^{(k)}_{\text{pre}}$, which serves as a reference for local details, are processed jointly by the Transformer decoder module $\mathcal{D}^{(k)}$. The primary task of the decoder is to fuse the abstract high-level features in $\mathbf{Z}^{(k)}$ with the concrete low-level features in $\mathbf{X}^{(k)}_{\text{pre}}$ and to map the combined information back into structured image space, producing an optimized reconstruction that balances global consistency and local detail.

Like the encoder, $\mathcal{D}^{(k)}$ typically consists of multiple stacked decoder layers. Each decoder layer usually contains a masked self-attention module (operating on representations derived from $\mathbf{X}^{(k)}_{\text{pre}}$ and the layer's own intermediate states), a cross-attention module that attends to the encoder output $\mathbf{Z}^{(k)}$, and an FFN, with LN and residual connections employed throughout.

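The decoder network $\mathcal{D}^{(k)}$ thus takes both the local feature map $\mathbf{X}^{(k)}_{\text{pre}}$ and the global context $\mathbf{Z}^{(k)}$ as inputs and produces an output that represents the learned enhancement or refinement. Consistent with the additive residual connection shown in Figure 2, the output of the decoder stage, $\mathbf{X}^{(k)}_{\text{dec}}$, is obtained by adding this learned refinement back to the Pre-block output:

$$\mathbf{X}^{(k)}_{\text{dec}} = \mathbf{X}^{(k)}_{\text{pre}} + \mathcal{D}^{(k)}\big(\mathbf{X}^{(k)}_{\text{pre}}, \mathbf{Z}^{(k)}\big).$$
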
In this way, the decoder combines the long-range dependencies and global structure captured by the encoder (carried by $\mathbf{Z}^{(k)}$ and processed by $\mathcal{D}^{(k)}$) with the local details preserved in $\mathbf{X}^{(k)}_{\text{pre}}$, which reach the output directly through the skip connection. This step is crucial for ensuring that the reconstructed signal has both an accurate global layout and clear local content. Its output, $\mathbf{X}^{(k)}_{\text{dec}}$, is a more refined image-space signal, modulated and enhanced by global information, and serves as the input to the subsequent Post-block.
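The residual fusion X_dec = X_pre + D(X_pre, Z) can be sketched with a single cross-attention layer standing in for the decoder. This is a simplification under stated assumptions: the masked self-attention, FFN, and layer normalization of a full decoder layer are omitted, and the weights are random placeholders. Queries come from the Pre-block tokens while keys and values come from the encoder output, which is where local detail and global context meet.

```python
import numpy as np

def softmax(a, axis=-1):
    a = a - a.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(x_tokens, z_tokens, Wq, Wk, Wv):
    """Queries from the local (Pre-block) tokens, keys/values from the encoder output."""
    Q, K, V = x_tokens @ Wq, z_tokens @ Wk, z_tokens @ Wv
    weights = softmax(Q @ K.T / np.sqrt(K.shape[1]))   # (S_x, S_z)
    return weights @ V

def decoder_fuse(x_pre, z, Wq, Wk, Wv, Wo):
    """Toy decoder: X_dec = X_pre + D(X_pre, Z), with D = cross-attention + output projection."""
    refinement = cross_attention(x_pre, z, Wq, Wk, Wv) @ Wo
    return x_pre + refinement                          # additive skip connection

rng = np.random.default_rng(0)
S, d = 16, 64
x_pre = rng.normal(size=(S, d))        # Pre-block output, as tokens
z = rng.normal(size=(S, d))            # encoder output
Wq, Wk, Wv, Wo = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(4))

x_dec = decoder_fuse(x_pre, z, Wq, Wk, Wv, Wo)
print(x_dec.shape)
# With a zero output projection the decoder adds nothing and X_pre passes straight through.
print(np.allclose(decoder_fuse(x_pre, z, Wq, Wk, Wv, np.zeros((d, d))), x_pre))
```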

3.3.5. Post-Block: Final Refinement and Output Stage

Although the decoder output $\mathbf{X}^{(k)}_{\text{dec}}$ already fuses global and local information and is of relatively high quality, its details can still be improved, for example by eliminating minor artifacts introduced by the complex transformations or by sharpening edges. For this purpose, we introduce the Post-block to perform final local feature optimization and quality enhancement. This CNN-based module is structurally similar to the Pre-block. It can be regarded as the final polishing step of the whole processing pipeline: once sparsity guided by Bayesian sparse estimation, local details extracted by CNNs, and global context captured by the Transformer have been fused, the Post-block ensures the visual quality of the final output. The corresponding Post-block operation is represented as:

Here, the Post-block CNN at stage k learns to identify and remove residual noise, artifacts, or undesired smoothness from $\mathbf{X}^{(k)}_{\text{dec}}$, while further sharpening image details and enhancing local contrast. The resulting Post-block output $\mathbf{X}^{(k)}_{\text{post}}$ is a visually clearer reconstruction with richer details and fewer artifacts. It is the final reconstruction of stage k and balances global structural integrity with local detail fidelity.

3.3.6. Final Output Stage: Reconstructed Signal Generation

After Bayesian sparse estimation and the chain of deep learning modules (the Pre-block, the Transformer encoder–decoder, and the Post-block), the signal $\mathbf{X}^{(k)}_{\text{post}}$ generated at stage k incorporates all of the optimization performed in that stage. To prepare it for final image stitching or, if $k < n$, for use as the input to the next stage, it is first vectorized:

$$\mathbf{x}^{(k)} = \operatorname{vec}\big(\mathbf{X}^{(k)}_{\text{post}}\big).$$

Here, $\mathbf{x}^{(k)}$ is the fully processed vectorized signal of stage k, ready to enter stage $k+1$ or to serve as the final output.

When all n stages are completed ($k = n$), the final vectorized signal $\mathbf{x}^{(n)}$ is obtained. To produce the final reconstructed image $\tilde{\mathbf{X}}$, this vector is mapped back to the full image space in two steps:

$$\tilde{\mathbf{X}} = \mathcal{M}\big(\mathcal{R}(\mathbf{x}^{(n)})\big).$$

In this process, $\mathcal{R}(\cdot)$ first rearranges the one-dimensional vector into block-wise two-dimensional image data, and $\mathcal{M}(\cdot)$ then reassembles these blocks at their correct spatial positions in the original image to form the complete image $\tilde{\mathbf{X}}$. These two steps preserve the structural integrity and visual continuity of the reconstructed image.
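Vectorization and the two-step inverse mapping (reshape, then merge) are pure index bookkeeping. A minimal sketch, assuming non-overlapping square blocks in raster order with a hypothetical block size of 32, shows that the forward and inverse mappings undo each other exactly.

```python
import numpy as np

def image_to_block_vectors(img, b):
    """Split an (H, W) image into non-overlapping b x b blocks, one row vector per block."""
    h, w = img.shape
    return img.reshape(h // b, b, w // b, b).transpose(0, 2, 1, 3).reshape(-1, b * b)

def block_vectors_to_image(vecs, b, h, w):
    """Inverse mapping: reshape vectors into b x b blocks, then merge them in raster order."""
    blocks = vecs.reshape(h // b, w // b, b, b)          # reshape: 1-D vectors -> 2-D blocks
    return blocks.transpose(0, 2, 1, 3).reshape(h, w)    # merge: blocks -> full image

img = np.arange(64 * 96, dtype=float).reshape(64, 96)
vecs = image_to_block_vectors(img, b=32)                 # 2 x 3 grid of blocks
restored = block_vectors_to_image(vecs, 32, 64, 96)
print(vecs.shape, np.array_equal(restored, img))         # (6, 1024) True
```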

3.4. Loss Function

SBCS-Net is an end-to-end framework that reconstructs the original input image from its compressed measurements $\mathbf{y}$, with sampling and reconstruction optimized within a unified architecture. The sampling module generates compressed measurements that feed the reconstruction pipeline, which consists of an initial coarse recovery by the learned initial reconstruction matrix followed by n iterative hybrid reconstruction stages. These stages integrate sparsity-inducing constraints derived from Bayesian principles, most notably the adaptive handling of measurement uncertainty through the learned noise precision parameters.

During training, the framework jointly optimizes the sampling and reconstruction modules to minimize the difference between the reconstructed image $\tilde{\mathbf{X}}$ and its ground truth $\mathbf{X}$. Through the precision parameter that models noise in the sampling process, the framework can balance measurement fidelity against noise robustness, so that the final output obtained after all reconstruction stages achieves high fidelity in both local details and global structure.

To quantify reconstruction quality and guide optimization, we adopt the Mean Squared Error (MSE) [38] loss, a simple and differentiable measure of the pixel-wise difference between the reconstructed and ground-truth images. The loss function of SBCS-Net is:

$$\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N}\big\|\tilde{\mathbf{X}}_i - \mathbf{X}_i\big\|_F^2,$$

where $\mathbf{X}_i$ denotes the i-th training sample, $\tilde{\mathbf{X}}_i$ its reconstruction after all stages, and N the total number of training samples. This differentiable objective enables gradient-based, collaborative optimization of the sampling and reconstruction modules, improving the robustness and accuracy of reconstruction by minimizing pixel-wise error. The noise precision parameter in the sampling process also acts as an implicit regularizer for reconstruction, strengthening the framework's ability to handle measurement uncertainty while maintaining high fidelity.

The MSE loss adopted by SBCS-Net interacts with the Bayesian sparse estimation module of the deep reconstruction stage in three ways. First, through end-to-end training, the MSE loss directly drives the optimization of the module's internal parameters, such as the sparsity precision and the noise precision. These parameters are therefore not set independently of the reconstruction task; they are adjusted so that the output of the Bayesian module, after processing by the subsequent deep learning modules, maximally improves the final reconstruction under the MSE criterion. Integrating model parameters into a discriminative learning framework and optimizing them in a data-driven manner has proven successful in model-unfolding methods such as ISTA-Net and ADMM-Net. Second, the MSE loss is theoretically compatible with Bayesian estimation, because the posterior mean is the Minimum Mean Squared Error (MMSE) estimator; by optimizing MSE end to end, SBCS-Net can be viewed as learning a complex mapping that approximates this MMSE-optimal estimate. Third, the Bayesian framework introduces beneficial inductive biases into MSE-driven learning: its sparse priors and noise modeling guide the network towards more robust and structurally sound solutions, especially for under-sampled and noisy data. The MSE loss and the Bayesian framework therefore act synergistically to enhance reconstruction performance.
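The statement that the posterior mean is the MMSE estimator can be checked numerically in the simplest scalar Gaussian case. This is an illustration of the general principle, not a model of SBCS-Net: with prior x ~ N(0, 1/α) and observation y = x + n, n ~ N(0, 1/β), the posterior mean is β/(α + β)·y. Scanning linear estimators c·y and picking the one with the lowest empirical MSE recovers the same coefficient.

```python
import numpy as np

alpha, beta = 1.0, 4.0                  # prior precision, noise precision
rng = np.random.default_rng(0)
T = 200_000
x = rng.normal(0.0, 1.0 / np.sqrt(alpha), size=T)
y = x + rng.normal(0.0, 1.0 / np.sqrt(beta), size=T)

c_posterior = beta / (alpha + beta)     # posterior-mean coefficient: E[x | y] = c * y
grid = np.linspace(0.0, 1.0, 101)
empirical_mse = [np.mean((c * y - x) ** 2) for c in grid]
c_best = grid[int(np.argmin(empirical_mse))]
print(c_posterior, c_best, min(empirical_mse))
```

The minimum achievable MSE here is 1/(α + β) = 0.2, which is also the posterior variance, so minimizing the MSE criterion and computing the Bayesian posterior mean lead to the same estimator in this setting.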

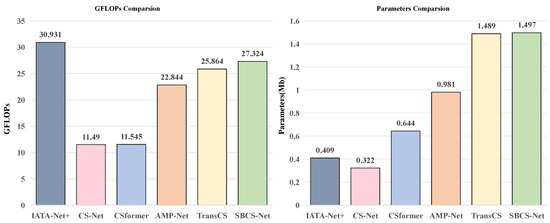

3.5. Computational Complexity Analysis

The computational complexity of SBCS-Net is analyzed per image block over n iterative reconstruction stages. Let $N_b$ denote the number of pixels in a block and M the number of measurements per block. The initial sampling and reconstruction phases consist mainly of matrix-vector products, with a cost of approximately $\mathcal{O}(M N_b)$ per block.

Within each of the n deep reconstruction stages, the Bayesian sparse estimation module requires an $N_b \times N_b$ matrix inversion per block ($N_b$ being the number of pixels per block), so its dominant per-stage cost is $\mathcal{O}(N_b^3)$. The CNN-based Pre-block and Post-block contribute a cost proportional to their per-block computational load, which is determined by their depth and convolutional filter configurations. Similarly, the Transformer encoder and decoder, with L layers processing sequences of S tokens (derived from each block) with embedding dimension d, have a cost of roughly $\mathcal{O}\big(L(S^2 d + S d\, d_{\text{ff}})\big)$, where $d_{\text{ff}}$ is the hidden dimension of the feed-forward network.

SBCS-Net achieves significant computational advantages over traditional BCS methods, which often incur a cost cubic in the total number of image pixels for full-image matrix inversion, plus separate and costly hyperparameter optimization. These advantages stem from (1) block-wise processing, which reduces the base of the cubic term from the full image size to the block size, and (2) end-to-end learning of the SBL parameters ($\boldsymbol{\alpha}_k$, $\beta_k$) within n fixed stages, which bypasses traditional iterative hyperparameter inference. Although the integrated deep learning modules add their own computational cost, the architecture is designed to strike a practical balance between reconstruction quality and computational load, making SBCS-Net a more scalable solution for CS applications.
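The gain from block-wise processing can be made concrete with order-of-magnitude operation counts. The sketch assumes a cubic cost for inverting a square matrix and uses a hypothetical 256 × 256 image split into 32 × 32 blocks; these sizes are illustrative and are not taken from the experiments.

```python
def inversion_cost(dim):
    """Order-of-magnitude cost of inverting a dim x dim matrix: O(dim^3)."""
    return dim ** 3

def blockwise_inversion_cost(height, width, block):
    """Total cost when each block x block patch gets its own (block^2) x (block^2) inversion."""
    n_blocks = (height // block) * (width // block)
    return n_blocks * inversion_cost(block * block)

H = W = 256      # hypothetical image size
B = 32           # hypothetical block size
full = inversion_cost(H * W)
blockwise = blockwise_inversion_cost(H, W, B)
print(f"full image: {full:.2e}, block-wise: {blockwise:.2e}, ratio: {full // blockwise}")
```

Because the total cost falls from the cube of the pixel count to the pixel count times the squared block size, the ratio equals the square of the number of blocks, here 64² = 4096.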

4. Experimental Results

This section presents comprehensive experimental evaluations of the proposed SBCS-Net framework. We first describe the experimental settings, including dataset preparation, training details, and implementation specifications. Then, through extensive comparisons with state-of-the-art methods on multiple benchmark datasets, we demonstrate the superior reconstruction results of SBCS-Net. Furthermore, we analyze the framework’s robustness under various noise conditions and evaluate its computational complexity. Finally, we perform detailed ablation studies to validate the effectiveness of each key component in our architecture.

4.1. Experimental Settings

The training data for SBCS-Net are primarily based on the BSD500 [39] dataset. Known for its extensive content and diverse features, this dataset provides a solid foundation for addressing various sensor network applications: its richness helps SBCS-Net learn both scenario-specific visual features (e.g., for environmental monitoring) and general image priors, improving its ability to process diverse sensor data, including remote sensing, urban surveillance, and some biomedical imagery. We therefore merge all subsets of BSD500 (training, validation, and test) for training and combine them with data augmentation, so that SBCS-Net learns highly generalizable feature representations and reconstruction mappings and is more practical and robust in complex real-world visual environments. For each original image, we first normalize the pixel values to the [0, 1] range and then randomly crop 200 fixed-size patches, yielding a total of 100,000 training patches. To further increase data diversity and improve generalization, we apply data augmentation to these patches, mainly random horizontal and vertical flips, small-angle rotations, and scale adjustments. All preprocessed patches are finally converted into single-channel grayscale tensors. Set11 [40] is used as an independent validation set during training. Final performance evaluation is conducted on multiple public benchmark datasets: BSD100 [41], Set5 [42], Urban100 [43], UCMerced [44], and BrainImages. These datasets cover natural scenes, urban landscapes, remote sensing, and medical images, broadly representing the visual data that sensors may acquire in practice, and thus allow a comprehensive assessment of SBCS-Net's performance and generalization.

The model implementation follows configurations typical of deep learning-based compressed sensing methods such as ISTA-Net. The key parameters are set as follows: patch size P = 8, initial step size 1.0, and number of iterations n = 8. During training, two core Bayesian parameters are learnable: the sparsity precision $\boldsymbol{\alpha}$, which regulates image sparsity, and the noise precision $\beta$, which models measurement noise. These parameters are optimized jointly with the weights of the rest of the network via backpropagation. Training runs for 200 epochs with a batch size of 64. The initial learning rate is 0.001, with periodic decay between epochs 101 and 150, followed by a fixed learning rate for the final 50 epochs. The Adam optimizer is used to tune all learnable parameters, including $\boldsymbol{\alpha}$ and $\beta$, to improve the fidelity of the reconstructed images and the overall robustness of the model.

The performance of SBCS-Net is compared with several leading compressed sensing reconstruction methods, including CSformer [45], ISTA-Net+, CSNet, AMP-Net, and TransCS, most of which combine traditional iterative algorithms with deep learning. Performance is evaluated mainly with two objective image quality metrics: Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM). PSNR measures pixel-level reconstruction error. For a ground-truth image $\mathbf{X}$ and a reconstructed image $\tilde{\mathbf{X}}$ of size $H \times W$, it is defined as:

$$\operatorname{PSNR} = 10\log_{10}\frac{\mathrm{MAX}^2}{\frac{1}{HW}\sum_{i=1}^{H}\sum_{j=1}^{W}\big(\mathbf{X}(i,j) - \tilde{\mathbf{X}}(i,j)\big)^2},$$

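where MAX is the maximum possible pixel value (1 for our normalized images). SSIM is designed to align more closely with human perception of image quality by comparing luminance (l), contrast (c), and structure (s). For two image patches $\mathbf{a}$ and $\mathbf{b}$, it is defined as:

$$\operatorname{SSIM}(\mathbf{a}, \mathbf{b}) = \frac{(2\mu_a\mu_b + C_1)(2\sigma_{ab} + C_2)}{(\mu_a^2 + \mu_b^2 + C_1)(\sigma_a^2 + \sigma_b^2 + C_2)},$$
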
where $\mu$ and $\sigma^2$ denote the mean and variance of each patch, $\sigma_{ab}$ is the covariance between the two patches, and $C_1$ and $C_2$ are small constants that stabilize the division; the final score is the average over all image patches. SSIM is therefore particularly useful for evaluating the structural integrity and visual fidelity of reconstructed images. For both metrics, higher values generally indicate better reconstruction quality. All comparison models are obtained from their publicly available source code and tested with their default recommended configurations. For a fair comparison, all competing methods that require training are trained on the same BSD500-derived images as SBCS-Net. All experiments are conducted on a server equipped with an Intel Xeon 8336 CPU (Santa Clara, CA, USA) and an NVIDIA GeForce RTX 4090 GPU (Santa Clara, CA, USA), using the PyTorch 1.9.0 deep learning framework.
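Both metrics can be computed with a few lines of NumPy. This is a minimal sketch, not the evaluation code behind Table 1: SSIM here averages over uniform 7 × 7 sliding windows with C1 = (0.01·MAX)² and C2 = (0.03·MAX)², and other implementations (for example, the original formulation with an 11 × 11 Gaussian window) can give slightly different values. `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view  # NumPy >= 1.20

def psnr(x, x_hat, max_val=1.0):
    """Peak signal-to-noise ratio in dB; max_val is 1 for images normalized to [0, 1]."""
    mse = np.mean((x - x_hat) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(max_val ** 2 / mse))

def ssim(x, x_hat, max_val=1.0, win=7):
    """Mean SSIM over all win x win sliding windows (uniform weights)."""
    c1, c2 = (0.01 * max_val) ** 2, (0.03 * max_val) ** 2
    a = sliding_window_view(x, (win, win))          # (H-win+1, W-win+1, win, win)
    b = sliding_window_view(x_hat, (win, win))
    mu_a, mu_b = a.mean(axis=(-2, -1)), b.mean(axis=(-2, -1))
    var_a, var_b = a.var(axis=(-2, -1)), b.var(axis=(-2, -1))
    cov = ((a - mu_a[..., None, None]) * (b - mu_b[..., None, None])).mean(axis=(-2, -1))
    ssim_map = ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2))
    return float(ssim_map.mean())

rng = np.random.default_rng(0)
clean = rng.random((64, 64))
noisy = np.clip(clean + 0.05 * rng.normal(size=clean.shape), 0.0, 1.0)
print(f"PSNR = {psnr(clean, noisy):.2f} dB, SSIM = {ssim(clean, noisy):.4f}")
```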

4.2. Comparisons

4.2.1. Comparisons with State-of-the-Art Methods

In this section, we comprehensively evaluate the proposed SBCS-Net by comparing its performance with state-of-the-art methods, including ISTA-Net, CSNet, CSformer, AMP-Net, and TransCS, on the benchmark datasets Urban100, BSD100, Set5, UCMerced, and BrainImages. We conduct both quantitative and qualitative analyses to demonstrate the superiority of SBCS-Net, using Table 1 for numerical comparisons and Figure 3 for visual assessment.

Table 1.

PSNR (dB) and SSIM comparisons of different methods on the Urban100, BSD100, Set5, UCMerced, and BrainImages datasets at multiple sampling rates. In the table, red indicates the best values and blue the second-best values.

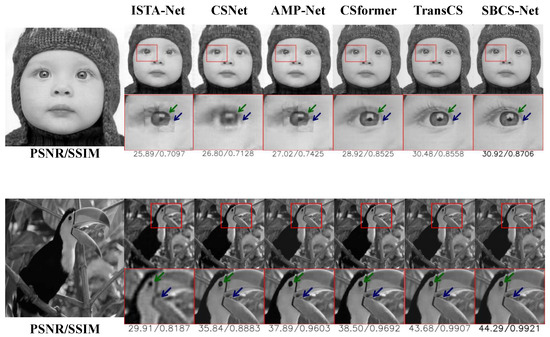

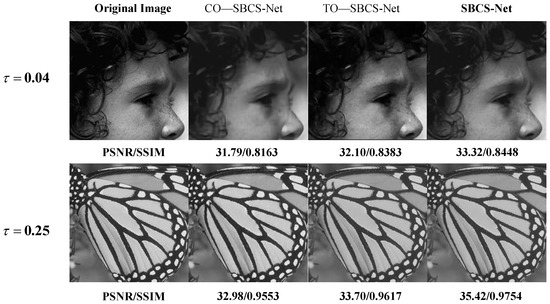

Figure 3.

Reconstruction results of the baby and bird images of our SBCS-Net and various competing methods. The sampling rates of the first row and second row are 0.04 and 0.25, respectively. Green and blue arrows are used to contrast the details of the two reconstructed images at their respective marked positions. Please zoom in for better comparisons.

Table 1 compares the PSNR and SSIM of ISTA-Net+, CSNet, CSformer, AMP-Net, TransCS, and the proposed SBCS-Net on five benchmark datasets (Set5, Urban100, BSD100, UCMerced, and BrainImages) at multiple sampling rates (). The best result at each sampling rate is marked in red and the second-best in blue. SBCS-Net performs best overall, with its largest gains in reconstruction quality at low sampling rates. On Set5, SBCS-Net achieves the best PSNR and SSIM (red) at every sampling rate. Its advantage is largest at the challenging low sampling rates ( and ); at higher sampling rates ( and ) it still leads, although by a smaller margin over the second-best methods (blue). On Urban100, which contains more complex textures, SBCS-Net is slightly behind CSformer at and TransCS at (marked in blue), but it still clearly outperforms the remaining methods, and at sampling rates and it achieves the best results (red), surpassing TransCS in both PSNR and SSIM. On BSD100, SBCS-Net achieves the best results at all sampling rates (all red), its most consistent advantage among the five datasets, which indicates stable reconstruction across images of varying complexity.

On the UCMerced remote sensing dataset, SBCS-Net performs best at most sampling rates (red): for example, it reaches 21.38 dB at compared with CSformer’s 21.06 dB, and 34.35 dB at compared with TransCS’s 33.97 dB. It falls slightly behind TransCS only at (37.98 dB vs. 38.02 dB) and regains the lead at with 40.18 dB, showing good adaptability to the varied land-cover features in remote sensing images. On the BrainImages dataset, SBCS-Net achieves 26.46 dB at compared with CSformer’s 25.94 dB, and 36.49 dB at compared with TransCS’s 35.62 dB. Although it trails TransCS at and (e.g., 30.17 dB vs. 30.97 dB), it achieves the best performance at with 40.17 dB, indicating robust handling of the high-contrast structures in medical images.
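Because PSNR is logarithmic in the MSE, a dB gap between two methods maps to a ratio of their errors: with the same MAX_I, ΔPSNR = PSNR_a − PSNR_b = 10·log10(MSE_b / MSE_a), so MSE_a / MSE_b = 10^(−ΔPSNR/10). The short Python sketch below applies this to the PSNR pairs quoted above. The function name `mse_ratio` is hypothetical, and since the table entries are dataset-averaged PSNR values (an average of logarithms), the resulting ratios are indicative rather than exact.

```python
def mse_ratio(psnr_a, psnr_b):
    """Return MSE_a / MSE_b given PSNR_a and PSNR_b in dB (same MAX_I for both)."""
    return 10 ** (-(psnr_a - psnr_b) / 10)


# (SBCS-Net PSNR, competitor PSNR, comparison) pairs quoted in the text.
pairs = [
    (21.38, 21.06, "UCMerced, vs. CSformer"),
    (34.35, 33.97, "UCMerced, vs. TransCS"),
    (37.98, 38.02, "UCMerced, vs. TransCS"),
    (26.46, 25.94, "BrainImages, vs. CSformer"),
    (36.49, 35.62, "BrainImages, vs. TransCS"),
    (30.17, 30.97, "BrainImages, vs. TransCS"),
]

for ours, theirs, label in pairs:
    ratio = mse_ratio(ours, theirs)
    print(f"{label}: dPSNR = {ours - theirs:+.2f} dB, MSE ratio = {ratio:.3f}")
```

For example, the +0.32 dB UCMerced gain over CSformer corresponds to roughly 7% lower MSE, while the 0.80 dB BrainImages deficit against TransCS corresponds to roughly 20% higher MSE.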

The advantages of SBCS-Net are evident not only in the quantitative metrics but also in the visual quality of the reconstructed images. Figure 3 shows reconstructions of the “Baby” and “Parrot” images at sampling rates of 0.04 and 0.25, respectively. At a sampling rate of 0.04, SBCS-Net recovers fine details such as the eyelashes in the “Baby” image, whereas the other methods fail to capture these features and produce blurred or distorted regions. SBCS-Net achieves an SSIM of 0.8706 on this example, higher than all competing methods, which is consistent with its better preservation of image structure and edge sharpness. Similarly, at 0.25, SBCS-Net recovers complex textures, particularly on the head of the “Parrot”. Its SSIM of 0.9921, again the highest among the compared methods, indicates that its reconstruction is structurally closest to the original image, with higher fidelity in texture details. In contrast, methods such as CSNet and AMP-Net produce over-smoothed reconstructions or artifacts, whereas SBCS-Net yields clear, visually superior results, reflecting its ability to model both local and global image features.

The key to SBCS-Net’s performance lies in its hybrid architecture, which combines Bayesian sparse estimation with convolutional and attention mechanisms. This integration lets SBCS-Net capture local details while also modeling long-range dependencies, a combination that many baseline methods lack. SBCS-Net is also robust at low sampling rates, where both traditional and deep learning-based approaches often struggle to maintain reconstruction quality. Together, its quantitative results and visual fidelity make SBCS-Net a significant advance in compressed sensing reconstruction and a robust, efficient solution for high-quality image recovery.

4.2.2. Statistical Significance Analysis

To further validate the performance advantages of SBCS-Net over other advanced methods from a statistical perspective, and to assess the stability and reliability of its results, we conducted additional statistical evaluations. These include a per-sampling-rate comparison with a representative SOTA method on the UCMerced dataset and a statistical ranking of multiple algorithms.

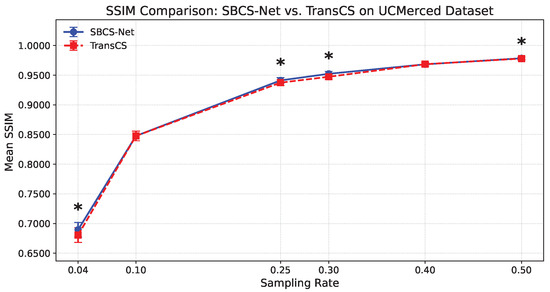

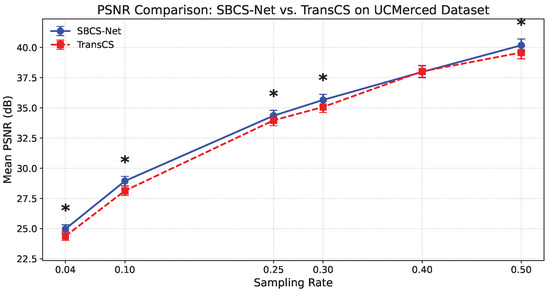

We first compared the reconstruction performance of SBCS-Net with that of TransCS, a recent high-performing method, at different sampling rates on the UCMerced remote sensing dataset. Figures 4 and 5 show the Mean SSIM and Mean PSNR (dB) of the two methods, respectively. Each data point for SBCS-Net (blue solid line) and TransCS (red dashed line) carries error bars that represent the spread of results over multiple independent experiments and thus reflect the stability of reconstruction performance.

Figure 4.

Mean SSIM comparison on UCMerced. * Indicates SBCS-Net significantly outperforms TransCS (Wilcoxon one-sided test, ).

Figure 5.

Mean PSNR comparison on UCMerced. * Indicates SBCS-Net significantly outperforms TransCS (Wilcoxon one-sided test, ).

In the figures, an asterisk (*) marks the sampling rates at which SBCS-Net is statistically significantly better than TransCS. As shown in Figure 4, at sampling rates of 0.04, 0.25, 0.30, and 0.50, the SSIM of SBCS-Net is significantly higher than that of TransCS according to the one-sided Wilcoxon test (). Figure 5 shows that the PSNR of SBCS-Net is significantly higher than that of TransCS at all tested sampling rates (one-sided Wilcoxon test, ). These pairwise tests provide strong statistical support for the advantage of SBCS-Net over TransCS on the UCMerced dataset.
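The one-sided Wilcoxon signed-rank test compares paired scores, for example the per-image PSNR of SBCS-Net and TransCS on the same images. The following Python sketch computes the exact one-sided p-value by enumerating the null distribution of the positive-rank sum W+ with a subset-sum recursion. It is a minimal illustration, not the authors' analysis code: the function name and the per-image values are hypothetical, and the exact p-value assumes no tied absolute differences. In practice, `scipy.stats.wilcoxon(x, y, alternative="greater")` provides this test with tie handling.

```python
import numpy as np


def wilcoxon_signed_rank_greater(a, b):
    """Exact one-sided Wilcoxon signed-rank test of H1: a tends to be larger than b.

    a, b: paired scores, e.g. per-image PSNR of two methods on the same images.
    Returns (W_plus, p_value). Zero differences are discarded; tied |differences|
    are not averaged, so the exact p-value assumes there are no ties.
    """
    d = np.asarray(a, dtype=np.float64) - np.asarray(b, dtype=np.float64)
    d = d[d != 0]
    n = d.size
    if n == 0:
        raise ValueError("all paired differences are zero")
    # Rank |d| from 1 (smallest) to n (largest).
    ranks = np.empty(n, dtype=np.int64)
    ranks[np.argsort(np.abs(d), kind="stable")] = np.arange(1, n + 1)
    w_plus = int(ranks[d > 0].sum())
    # Under H0 each rank is positive with probability 1/2. counts[s] is the number
    # of the 2**n sign patterns whose positive ranks sum to s (subset-sum DP).
    max_sum = n * (n + 1) // 2
    counts = [1] + [0] * max_sum
    for r in range(1, n + 1):
        for s in range(max_sum, r - 1, -1):
            counts[s] += counts[s - r]
    p_value = sum(counts[w_plus:]) / 2 ** n
    return w_plus, p_value


# Hypothetical per-image PSNR values (dB) for two methods on the same 8 images.
ours = [31.20, 29.80, 33.55, 30.05, 28.70, 32.40, 30.90, 29.55]
base = [30.90, 29.90, 33.00, 29.60, 28.50, 32.00, 30.20, 29.40]
w, p = wilcoxon_signed_rank_greater(ours, base)
print(f"W+ = {w}, one-sided p = {p:.4f}")  # W+ = 35, one-sided p = 0.0078
```

In this toy example, only the smallest difference is negative, so W+ = 36 − 1 = 35, and just two of the 256 equally likely sign patterns reach a rank sum of at least 35.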

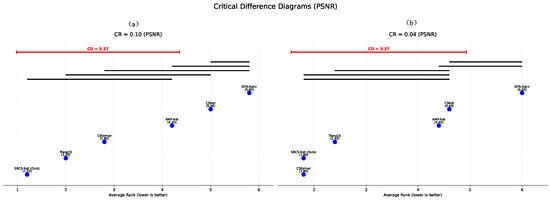

To evaluate the performance of SBCS-Net among a broader range of SOTA methods and to perform statistical ranking, we employed Critical Difference (CD) Diagrams for analysis. This method, typically based on the Friedman test followed by post hoc multiple comparison tests (such as the Nemenyi test), visually presents the average rankings of multiple algorithms under different conditions and the statistically significant differences between them.

Figure 6 shows the comparison of average rankings on the PSNR metric for SBCS-Net against several mainstream compressed sensing reconstruction algorithms, including TransCS, CSformer, AMP-Net, CSNet, and ISTA-Net+, at compression/sampling rates (CRs) of 0.10 and 0.04, respectively. In the diagram, a lower average rank indicates better performance. The Critical Difference value (CD = 3.37) is marked on the diagram. If the difference in average ranks between two algorithms exceeds this CD value, their performance difference is considered statistically significant; conversely, if algorithms are connected by the same thick black line, the performance difference between them is not statistically significant.

Figure 6.

Critical difference diagrams for PSNR performance of SBCS-Net against SOTA methods at different CR. The diagram shows average ranks. Algorithms connected by a thick black line do not have statistically significant performance differences. The CD value is 3.37. (a) Results at CR = 0.10. (b) Results at CR = 0.04.

As shown in Figure 6a (CR = 0.10), SBCS-Net achieves the lowest average rank (1.20) and is not connected to the other methods by a thick black line, indicating a statistically significant advantage over most competing methods at this sampling rate. Under the more challenging condition of CR = 0.04 (Figure 6b), SBCS-Net (Ours) and CSformer share the best average rank of 1.80, with no statistically significant difference between them. Both rank ahead of TransCS (2.40) and the lower-ranked AMP-Net, CSNet, and ISTA-Net+; by the CD criterion above, only differences in average rank larger than 3.37 are statistically significant.
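The CD value can be reproduced with the Nemenyi formula CD = q_α·sqrt(k(k+1)/(6N)), where k is the number of algorithms and N the number of datasets. Assuming k = 6 algorithms, N = 5 datasets (the five benchmarks of Table 1), and α = 0.05 (q_0.05 = 2.850), this gives 2.850·sqrt(42/30) ≈ 3.37, matching the reported value; the reported average ranks are multiples of 0.2, which is also consistent with N = 5. These settings are inferred, not stated in the text. The Python sketch below computes the CD and the average ranks that a CD diagram plots; the function names are hypothetical.

```python
import math

import numpy as np

# Nemenyi critical values q_alpha at alpha = 0.05 (Studentized range statistic
# divided by sqrt(2)), keyed by the number of compared algorithms k.
Q_ALPHA_005 = {2: 1.960, 3: 2.343, 4: 2.569, 5: 2.728, 6: 2.850,
               7: 2.949, 8: 3.031, 9: 3.102, 10: 3.164}


def nemenyi_cd(k, n_datasets):
    """Critical difference CD = q_alpha * sqrt(k (k + 1) / (6 N)) at alpha = 0.05."""
    return Q_ALPHA_005[k] * math.sqrt(k * (k + 1) / (6.0 * n_datasets))


def average_ranks(scores):
    """Average rank of each algorithm; scores has shape (N datasets, k algorithms).

    Higher scores (e.g., PSNR) are better, so the best algorithm gets rank 1.
    Tied scores share the mean of the ranks they occupy, as in the Friedman test.
    """
    scores = np.asarray(scores, dtype=np.float64)
    ranks = np.empty_like(scores)
    for i, row in enumerate(scores):
        r = np.empty(row.size)
        r[np.argsort(-row, kind="stable")] = np.arange(1, row.size + 1)
        for v in np.unique(row):
            tied = row == v
            r[tied] = r[tied].mean()
        ranks[i] = r
    return ranks.mean(axis=0)


print(f"CD = {nemenyi_cd(6, 5):.2f}")  # k = 6, N = 5 (assumed) -> CD = 3.37
```

Two algorithms then differ significantly only if their average ranks, as returned by `average_ranks`, are more than CD apart.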

4.3. Noise Robustness Analysis

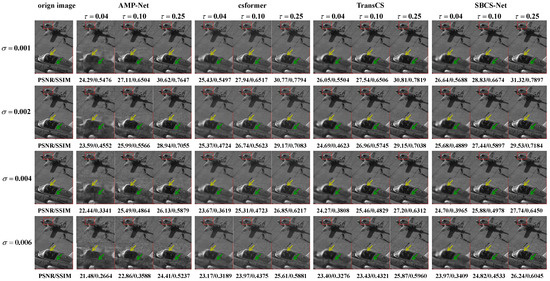

Robustness under various noise levels is a crucial metric for evaluating the reliability of CS reconstruction algorithms. To analyze the noise robustness of SBCS-Net, we conducted experiments under different Gaussian noise levels () and sampling rates (). The quantitative results are shown in Table 2, and qualitative visual comparisons are presented in Figure 7.

Table 2.

PSNR (dB) and SSIM on BSD100 with Gaussian noise at various levels and sampling rates . Red color in the table footer indicates the optimal values, and blue color indicates the sub-optimal values.

Figure 7.

Visual comparison of image CS methods on the airplane image from the BSD100 dataset at different sampling rates . The first, second, and third rows correspond to the original image corrupted by Gaussian noise of different variances (), respectively.

Table 2 shows that SBCS-Net achieves the highest PSNR and SSIM across all Gaussian noise levels and sampling rates. At a Gaussian noise level of and a sampling rate of , SBCS-Net achieves a PSNR of 26.75 dB and an SSIM of 0.7399, outperforming AMP-Net (25.68 dB, 0.7138) and CSformer (25.79 dB, 0.7151). As the Gaussian noise level increases, the performance of SBCS-Net degrades gradually, but it retains a clear advantage over the competing methods. Table 3 evaluates performance under salt-and-pepper noise and shows the same pattern across noise levels and sampling rates . For instance, at a salt-and-pepper noise level of and , SBCS-Net achieves a PSNR of 19.84 dB and an SSIM of 0.6209, surpassing TransCS (19.54 dB, 0.6159) and CSformer (18.27 dB, 0.6093). Even at a higher noise level of , SBCS-Net keeps a narrow lead, with a PSNR of 17.04 dB and an SSIM of 0.5591 at , compared with 16.99 dB and 0.5523 for TransCS. This consistent performance under both Gaussian and salt-and-pepper noise indicates that SBCS-Net adapts well to different noise types, which makes it well suited to practical applications where noise characteristics vary.
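For readers reproducing the noise experiments, the following Python sketch shows one common way to corrupt a normalized image with the two noise types. The details are assumptions, not taken from the paper: noise is applied in the image domain (as the Figure 7 caption suggests), Gaussian noise is parameterized by its standard deviation and clipped to [0, 1], and the salt-and-pepper density is the fraction of corrupted pixels, split evenly between 0 and 1. The function names and parameter values are hypothetical.

```python
import numpy as np


def add_gaussian_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma, clip to [0, 1]."""
    noisy = img + rng.normal(0.0, sigma, size=img.shape)
    return np.clip(noisy, 0.0, 1.0)


def add_salt_and_pepper(img, density, rng):
    """Replace a fraction `density` of pixels with 0 (pepper) or 1 (salt), equally likely."""
    noisy = np.array(img, dtype=np.float64, copy=True)
    u = rng.random(noisy.shape)
    noisy[u < density / 2] = 0.0                       # pepper
    noisy[(u >= density / 2) & (u < density)] = 1.0    # salt
    return noisy


rng = np.random.default_rng(0)
clean = rng.random((64, 64))                                    # stand-in image
noisy_g = add_gaussian_noise(clean, sigma=0.1, rng=rng)         # illustrative level
noisy_sp = add_salt_and_pepper(clean, density=0.05, rng=rng)    # illustrative level
```

Gaussian noise perturbs every pixel slightly, whereas salt-and-pepper noise leaves most pixels untouched and drives a few to the extremes, which is why the two call for different handling in the reconstruction network.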

Table 3.

PSNR (dB) and SSIM on BSD100 with salt-and-pepper noise at various levels and sampling rates . Red color in the table footer indicates the optimal values, and blue color indicates the sub-optimal values.

SBCS-Net’s effective handling of different noise types stems from the adaptive Bayesian sparse estimation module and the complementary roles of the CNN and Transformer. For Gaussian noise, the Bayesian sparse estimation module relies on its built-in Gaussian noise model, given in Equation (1), and captures the noise statistics through the end-to-end learned noise precision parameter , which allows the signal to be separated from the noise probabilistically. The CNN and Transformer modules then refine the output of the Bayesian estimation by smoothing residual noise, sharpening image details, and reinforcing the overall structure. For non-Gaussian impulse noise such as salt-and-pepper noise, the emphasis shifts. Although the Gaussian noise assumption of the Bayesian module does not match impulse noise exactly, the module still provides an important preliminary regularization of the signal through end-to-end learned parameters such as the noise precision and the sparsity precision . It adaptively adjusts how strongly it relies on severely corrupted data and uses the sparse prior to help suppress large isolated outliers, giving the subsequent deep learning modules a cleaner input. The main impulse-noise removal is then performed by the deep learning modules: the CNN module, trained in a data-driven manner, identifies and repairs local, high-magnitude salt-and-pepper noise points, while the Transformer module, with its global context modeling, preserves the overall image structure and long-range dependencies during this local restoration and prevents secondary distortions.
This division of labor and close collaboration among the modules gives SBCS-Net its robustness in complex noise environments.
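The Gaussian noise model behind the Bayesian module can be illustrated with the classical sparse Bayesian learning posterior. For y = Φx + n with noise n ~ N(0, β⁻¹I) and prior x_i ~ N(0, α_i⁻¹), the posterior of x is Gaussian with Σ = (βΦᵀΦ + diag(α))⁻¹ and μ = βΣΦᵀy: β (noise precision) sets how strongly the measurements are trusted, and a large α_i (sparsity precision) shrinks coefficient i toward zero. The Python sketch below is this textbook formulation, not SBCS-Net's Equation (1) or its unrolled network; the symbols, the fixed (non-learned) values of α and β, and the toy sensing matrix are assumptions, and the usual iterative re-estimation of α and β is omitted.

```python
import numpy as np


def sbl_posterior(Phi, y, alpha, beta):
    """Posterior N(mu, Sigma) of x for y = Phi @ x + n under the classical SBL model.

    Prior:  x_i ~ N(0, 1 / alpha_i)   (alpha: per-coefficient sparsity precisions)
    Noise:  n   ~ N(0, I / beta)      (beta: scalar noise precision)
    """
    precision = beta * Phi.T @ Phi + np.diag(alpha)    # posterior precision matrix
    Sigma = np.linalg.inv(precision)
    mu = np.linalg.solve(precision, beta * Phi.T @ y)  # = beta * Sigma @ Phi.T @ y
    return mu, Sigma


rng = np.random.default_rng(0)
m, n = 20, 50                                    # 20 measurements, length-50 signal
Phi = rng.standard_normal((m, n)) / np.sqrt(m)   # random Gaussian sensing matrix
support = [3, 17, 41]
x = np.zeros(n)
x[support] = [1.0, -2.0, 1.5]
y = Phi @ x + 0.01 * rng.standard_normal(m)

alpha = np.full(n, 1e6)      # large precision: coefficient is shrunk toward zero
alpha[support] = 1e-2        # small precision: coefficient is left almost free
mu, Sigma = sbl_posterior(Phi, y, alpha, beta=1e4)
print(np.round(mu[support], 3))
```

With the precisions set this way, the posterior mean recovers the three active coefficients from only 20 measurements and keeps the remaining ones close to zero, which is the sparsity-promoting effect that learned α and β values are meant to produce adaptively.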

Figure 7 shows the qualitative reconstruction results of the compared methods under Gaussian noise of different intensities, further illustrating the robustness of SBCS-Net. Consider a particularly adverse setting: a Gaussian noise level of and a sampling rate of . Here, SBCS-Net reconstructs many intricate details of the aircraft structure, such as wing edges and fuselage textures, whereas the other methods produce severely degraded or blurred reconstructions. Consistent with this visual improvement, SBCS-Net achieves an SSIM of 0.6450 in this setting, higher than AMP-Net (0.5879), CSformer (0.6217), and TransCS (0.6312). The higher SSIM indicates that SBCS-Net’s reconstruction is structurally closer to the original image and better preserves structural integrity and perceptual quality. These visual and quantitative results reflect SBCS-Net’s ability, described above, to recover high-quality images from noisy measurements through the adaptive noise modeling of its Bayesian sparse estimation module and the combined action of its CNN and Transformer modules.