Gait Environment Recognition Using Biomechanical and Physiological Signals with Feed-Forward Neural Network: A Pilot Study

Abstract

1. Introduction

2. Data Collection and Experimental Design

2.1. Participants

2.2. Sensor Setup

2.3. Gait Environment

3. ML Framework

3.1. Data Collection and Preprocessing

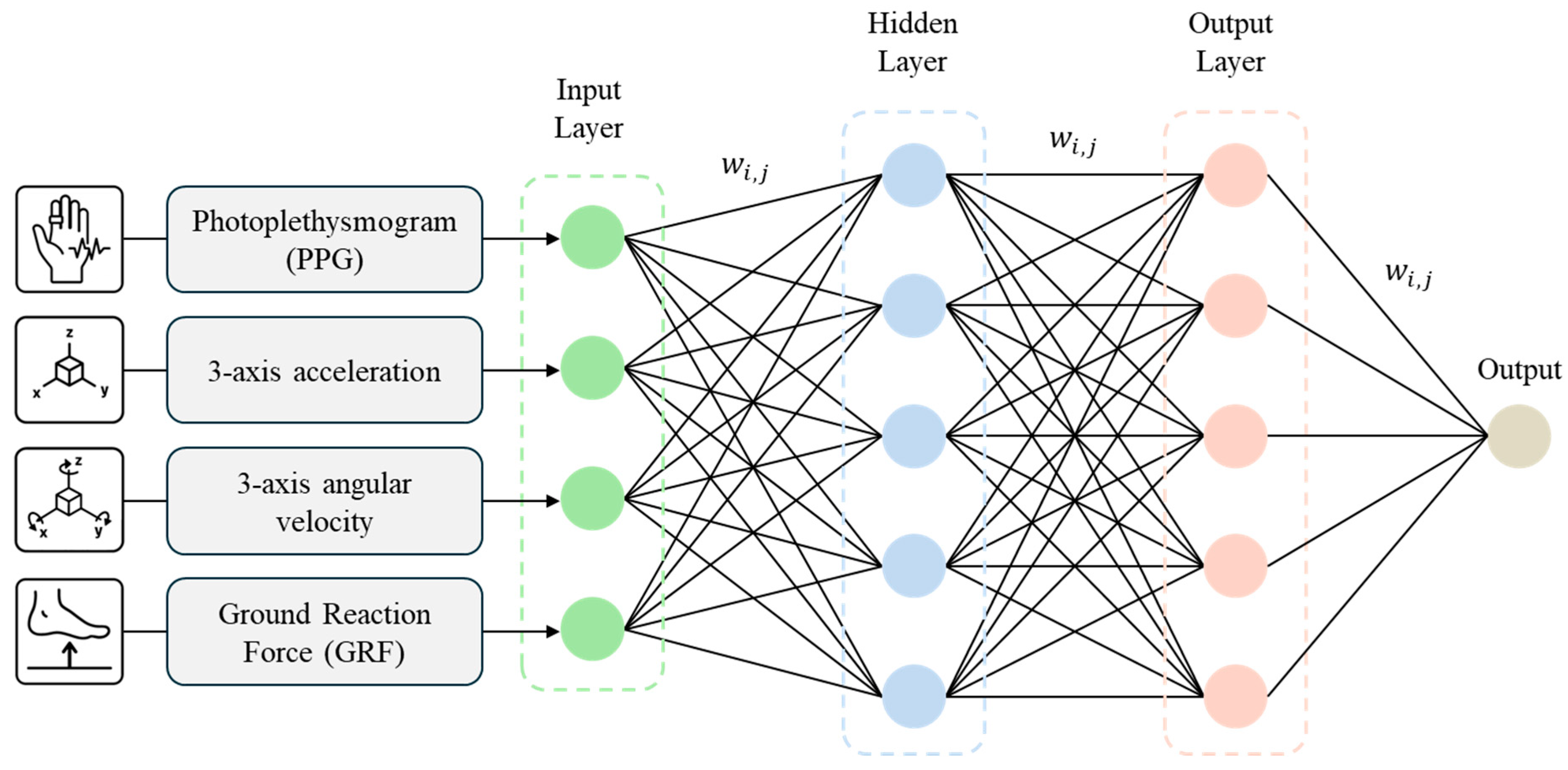

3.2. FFNN

4. Results and Discussion

4.1. Gait Environment Analysis

4.2. Performance Comparison by Sensor Configuration

4.3. Comparative Analysis and Discussion

4.3.1. Classification Performance for Gait Environments

4.3.2. Performance According to Sensor Combinations

4.3.3. Model Architecture and Real-Time Applicability

4.3.4. Limitations of the Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AR | Ascending Ramp |
| AS | Ascending Stairs |
| CNN | Convolutional Neural Network |
| DR | Descending Ramp |
| DS | Descending Stairs |
| FFNN | Feed-Forward Neural Network |
| GRF | Ground Reaction Force |
| GSR | Galvanic Skin Response |
| IMU | Inertial Measurement Unit |
| LG | Level Ground |
| LSTM | Long Short-Term Memory |
| ML | Machine Learning |
| PPG | Photoplethysmogram |


| Subject | LG | DR | AR | DS | AS | Total |
|---|---|---|---|---|---|---|
| S01 | 2996 | 2200 | 3361 | 767 | 675 | 9999 |
| S02 | 2821 | 1655 | 486 | 1557 | 1170 | 7689 |
| S03 | 3688 | - | 1364 | 1456 | 1655 | 8163 |
| S04 | 3127 | 2245 | 2244 | 1853 | 1857 | 11,326 |
| S05 | 3222 | 1569 | 1463 | 1652 | 1950 | 9856 |
| Total | 15,854 | 7669 | 8918 | 7285 | 7307 | 47,033 |

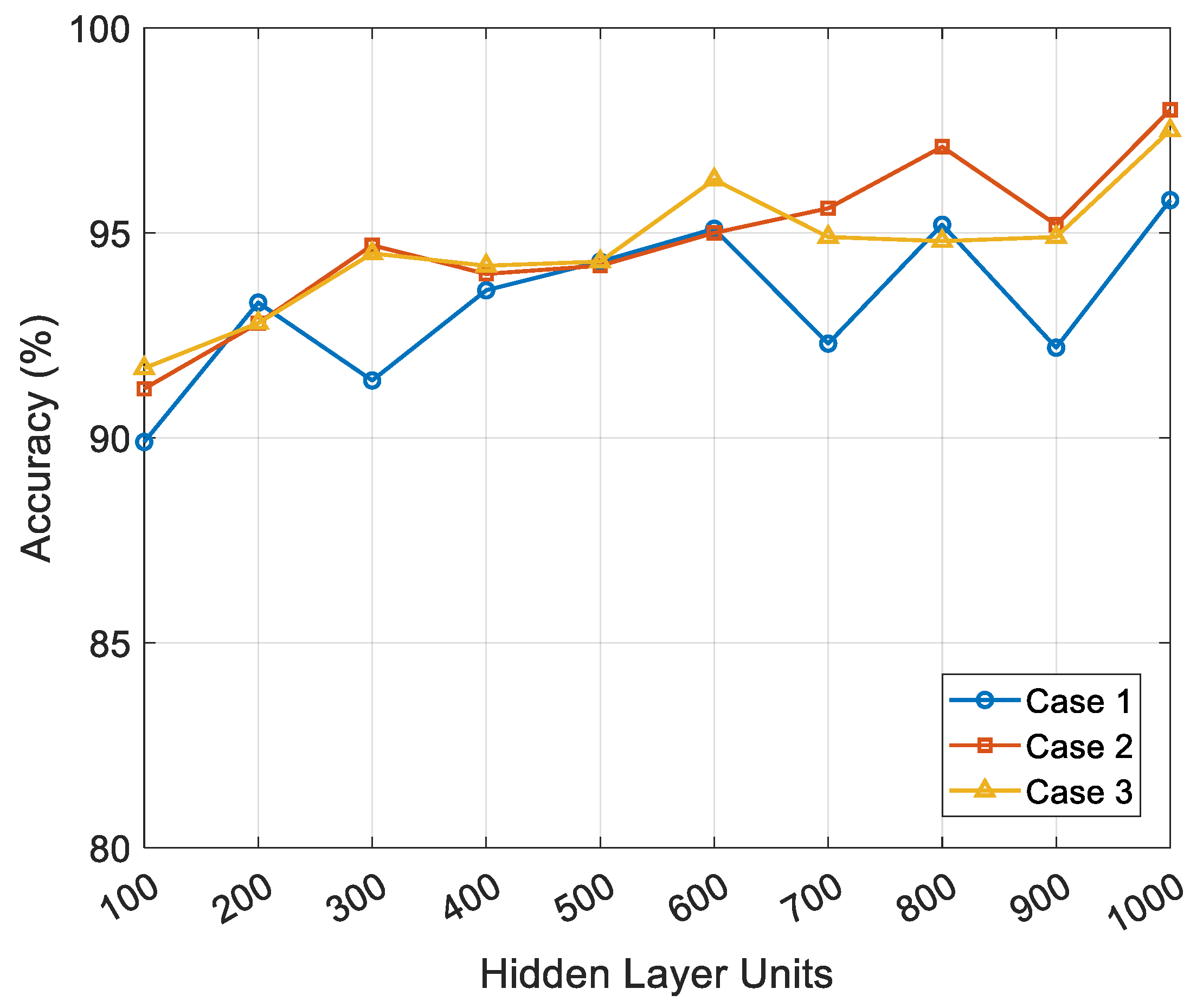

| | Hidden Layer Units | Case 1 Accuracy (%) | Case 2 Accuracy (%) | Case 3 Accuracy (%) |
|---|---|---|---|---|
| 1st | 100 | 89.9 | 91.2 | 91.7 |
| 2nd | 200 | 93.3 | 92.8 | 92.8 |
| 3rd | 300 | 91.4 | 94.7 | 94.5 |
| 4th | 400 | 93.6 | 94.0 | 94.2 |
| 5th | 500 | 94.3 | 94.2 | 94.3 |
| 6th | 600 | 95.1 | 95.0 | 96.3 |
| 7th | 700 | 92.3 | 95.6 | 94.9 |
| 8th | 800 | 95.2 | 97.1 | 94.8 |
| 9th | 900 | 92.2 | 95.2 | 94.9 |
| 10th | 1000 | 95.8 | 98.0 | 97.5 |
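The table above varies only the number of hidden units, so a minimal sketch of a feed-forward classifier with a configurable hidden width may help make that hyperparameter concrete. Everything beyond the five output classes and the unit counts is an assumption made for illustration: the single hidden layer, the ReLU activation, the He-style initialisation, the input size `N_FEATURES`, and the helper names `init_ffnn`, `forward`, and `n_parameters` are hypothetical and are not taken from the study.

```python
import math
import random

CLASSES = ["LG", "DR", "AR", "DS", "AS"]  # five gait environments
N_FEATURES = 32  # hypothetical input size; the real feature count is not given here


def init_ffnn(n_hidden, n_features=N_FEATURES, n_classes=len(CLASSES), seed=0):
    """Create weights for one hidden layer of width n_hidden (assumed architecture)."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        scale = math.sqrt(2.0 / rows)  # He-style scaling, an assumption
        return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

    return {
        "W1": matrix(n_features, n_hidden),
        "b1": [0.0] * n_hidden,
        "W2": matrix(n_hidden, n_classes),
        "b2": [0.0] * n_classes,
    }


def dense(x, weights, bias):
    """Affine map x @ weights + bias for a single input vector."""
    return [
        sum(xi * row[j] for xi, row in zip(x, weights)) + bias[j]
        for j in range(len(bias))
    ]


def forward(params, x):
    """Return softmax class probabilities for one feature vector."""
    hidden = [max(0.0, v) for v in dense(x, params["W1"], params["b1"])]  # ReLU (assumed)
    logits = dense(hidden, params["W2"], params["b2"])
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def n_parameters(params):
    """Count trainable weights and biases."""
    return (
        sum(len(row) for row in params["W1"]) + len(params["b1"])
        + sum(len(row) for row in params["W2"]) + len(params["b2"])
    )


for units in (100, 1000):
    params = init_ffnn(units)
    probs = forward(params, [0.5] * N_FEATURES)
    print(units, n_parameters(params), CLASSES[probs.index(max(probs))])
```

Under these assumptions the parameter count grows linearly with the hidden width, which is the cost that has to be weighed against the accuracy differences listed in the table.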

| Actual \ Predicted | LG | DR | AR | DS | AS | Recall | F1-score |
|---|---|---|---|---|---|---|---|
| LG | 15,396 | 288 | 299 | 67 | 28 | 95.76% | 96.43% |
| DR | 161 | 7114 | 110 | 47 | 45 | 95.15% | 93.94% |
| AR | 183 | 128 | 8419 | 40 | 52 | 95.43% | 94.92% |
| DS | 74 | 63 | 48 | 7045 | 85 | 96.31% | 96.51% |
| AS | 39 | 76 | 42 | 86 | 7097 | 96.69% | 96.91% |
| Precision | 97.12% | 92.76% | 94.40% | 96.71% | 97.13% | Macro F1-score | 95.83% |

(a) Case 1
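As a worked check of how the per-class figures in a confusion matrix of this kind are obtained, the sketch below recomputes precision, recall, and F1-score from the Case 1 counts, treating rows as actual classes and columns as predicted classes as labelled in the table. The function name `per_class_metrics` and the variable names are illustrative, not taken from the study; the per-class results should agree with the tabulated values up to rounding.

```python
CLASSES = ["LG", "DR", "AR", "DS", "AS"]

# Case 1 counts: rows are actual classes, columns are predicted classes.
CASE_1 = [
    [15396, 288, 299, 67, 28],
    [161, 7114, 110, 47, 45],
    [183, 128, 8419, 40, 52],
    [74, 63, 48, 7045, 85],
    [39, 76, 42, 86, 7097],
]


def per_class_metrics(matrix):
    """Return a (precision, recall, f1) tuple for each class of a square confusion matrix."""
    n = len(matrix)
    metrics = []
    for k in range(n):
        true_pos = matrix[k][k]
        actual = sum(matrix[k])                          # row sum: samples of class k
        predicted = sum(matrix[r][k] for r in range(n))  # column sum: predictions of class k
        recall = true_pos / actual
        precision = true_pos / predicted
        f1 = 2 * precision * recall / (precision + recall)
        metrics.append((precision, recall, f1))
    return metrics


metrics = per_class_metrics(CASE_1)
for name, (precision, recall, f1) in zip(CLASSES, metrics):
    print(f"{name}: precision={precision:.2%} recall={recall:.2%} F1={f1:.2%}")
```

The same function can be applied to the Case 2 and Case 3 matrices by swapping in their counts.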

| Actual \ Predicted | LG | DR | AR | DS | AS | Recall | F1-score |
|---|---|---|---|---|---|---|---|
| LG | 15,638 | 120 | 108 | 35 | 25 | 98.19% | 98.35% |
| DR | 89 | 7432 | 84 | 32 | 27 | 96.97% | 96.68% |
| AR | 86 | 65 | 8666 | 17 | 25 | 97.82% | 97.47% |
| DS | 22 | 18 | 23 | 7158 | 47 | 98.49% | 98.08% |
| AS | 39 | 76 | 42 | 86 | 7097 | 96.69% | 97.48% |
| Precision | 98.51% | 96.38% | 97.12% | 97.68% | 98.28% | Macro F1-score | 97.73% |

(b) Case 2

| Actual \ Predicted | LG | DR | AR | DS | AS | Recall | F1-score |
|---|---|---|---|---|---|---|---|
| LG | 15,549 | 184 | 164 | 39 | 29 | 97.39% | 97.73% |
| DR | 127 | 7373 | 74 | 28 | 25 | 96.67% | 96.40% |
| AR | 117 | 53 | 8623 | 30 | 24 | 97.47% | 97.08% |
| DS | 37 | 19 | 26 | 7131 | 47 | 98.22% | 98.05% |
| AS | 24 | 40 | 31 | 57 | 7182 | 97.93% | 98.11% |
| Precision | 98.08% | 96.14% | 96.69% | 97.89% | 98.29% | Macro F1-score | 97.50% |

(c) Case 3

| Sensor Combination | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| IMU only | 92.3 | 92.24 | 92.44 | 92.34 |
| IMU + smart insole | 93.2 | 93.13 | 93.36 | 93.25 |
| IMU + GSR | 94.4 | 94.26 | 94.42 | 94.34 |
| IMU + smart insole + GSR | 98.0 | 97.60 | 97.63 | 97.73 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Seo, K.-J.; Lee, J.; Cho, J.-E.; Kim, H.; Kim, J.H. Gait Environment Recognition Using Biomechanical and Physiological Signals with Feed-Forward Neural Network: A Pilot Study. Sensors 2025, 25, 4302. https://doi.org/10.3390/s25144302
