From Photogrammetry to Virtual Reality: A Framework for Assessing Visual Fidelity in Structural Inspections

Abstract

1. Introduction

2. Related Work

- (1)

- (2) Excluding studies utilizing augmented reality or mixed reality technologies.

- (3) Only considering studies investigating VR models grounded in real-world data of the physical structures.

- (4) Excluding studies primarily focusing on VR usability tests.

- (1) The 360-degree camera filming adopted in [15]. Deploying a 360-degree camera in the field is an affordable way to capture the in situ condition of a structure. Depending on the structure’s size, multiple 360-degree images must be collected from various areas of interest. For example, in [15], 27 360-degree images were collected to cover views from both the top and bottom of the bridge. The images were then edited in Photoshop to remove the tripods and correct lighting discrepancies. However, this method has limitations. For large structures, extensive effort is required to collect enough 360-degree images to cover all areas of interest, especially inaccessible ones. In addition, correctly aligning a large volume of images to form a cohesive VR model can be challenging.

- (2) Photogrammetry adopted in [16,17,18]. Built upon Structure-from-Motion and Multi-View Stereo (SfM-MVS) [26,27], photogrammetry rapidly reconstructs a 3D model of a structure from a large volume of 2D digital images taken from different camera positions. In [17], the researchers used a UAV to collect images of historic structures and employed the off-the-shelf software Agisoft Metashape [28] for 3D model reconstruction. In [16], a different platform, 3DFlow Zephyr Aerial [29], was used to reconstruct the 3D point cloud, the mesh, and ultimately the texture-mapped model of the tunnel walls.

- (3) The 3D LiDAR scanning adopted in [12,13,14]. LiDAR is another remote sensing method; it uses laser light to measure distances and create a high-fidelity 3D point cloud of a structure [30]. In [14], 11 LiDAR scans were conducted on a 53.9 m long truss bridge to create the 3D point cloud; the data collection process lasted around 5 h and 45 min. In [12,13], researchers used a Leica ScanStation P40 to establish the 3D point clouds for a masonry bridge and a reinforced concrete box girder bridge. Because raw LiDAR scans have no color information, additional field images were collected and mapped back to the LiDAR point clouds using off-the-shelf capabilities embedded in the LiDAR device.
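To make the photogrammetry item above more concrete, the following is a minimal sketch of two-view triangulation, the geometric idea by which SfM-MVS recovers a 3D point from images taken at different camera positions. It is not the procedure used by Agisoft Metashape or 3DFlow Zephyr; it uses the simple midpoint method and assumes that camera centers and viewing-ray directions are already known. The function name `triangulate_midpoint` and all inputs are hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Dot product of two 3D vectors."""
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Estimate a 3D point from two viewing rays.

    Each ray is c + s * d, where c is a camera center and d is the
    direction through the matched image feature (assumed known here).
    The estimate is the midpoint of the shortest segment joining the rays.
    """
    w = tuple(a - b for a, b in zip(c1, c2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; no stable intersection")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = tuple(ci + s * di for ci, di in zip(c1, d1))
    p2 = tuple(ci + t * di for ci, di in zip(c2, d2))
    return tuple((u + v) / 2.0 for u, v in zip(p1, p2))


if __name__ == "__main__":
    # Two hypothetical cameras 1 m apart, both observing the same feature.
    point = triangulate_midpoint(
        (0.0, 0.0, 0.0), (0.5, 0.0, 2.0),
        (1.0, 0.0, 0.0), (-0.5, 0.0, 2.0),
    )
    print(point)
```

In practice, SfM pipelines estimate the camera poses themselves and refine many such points jointly, so this sketch illustrates only the final geometric step.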

3. Motivation and Research Gap

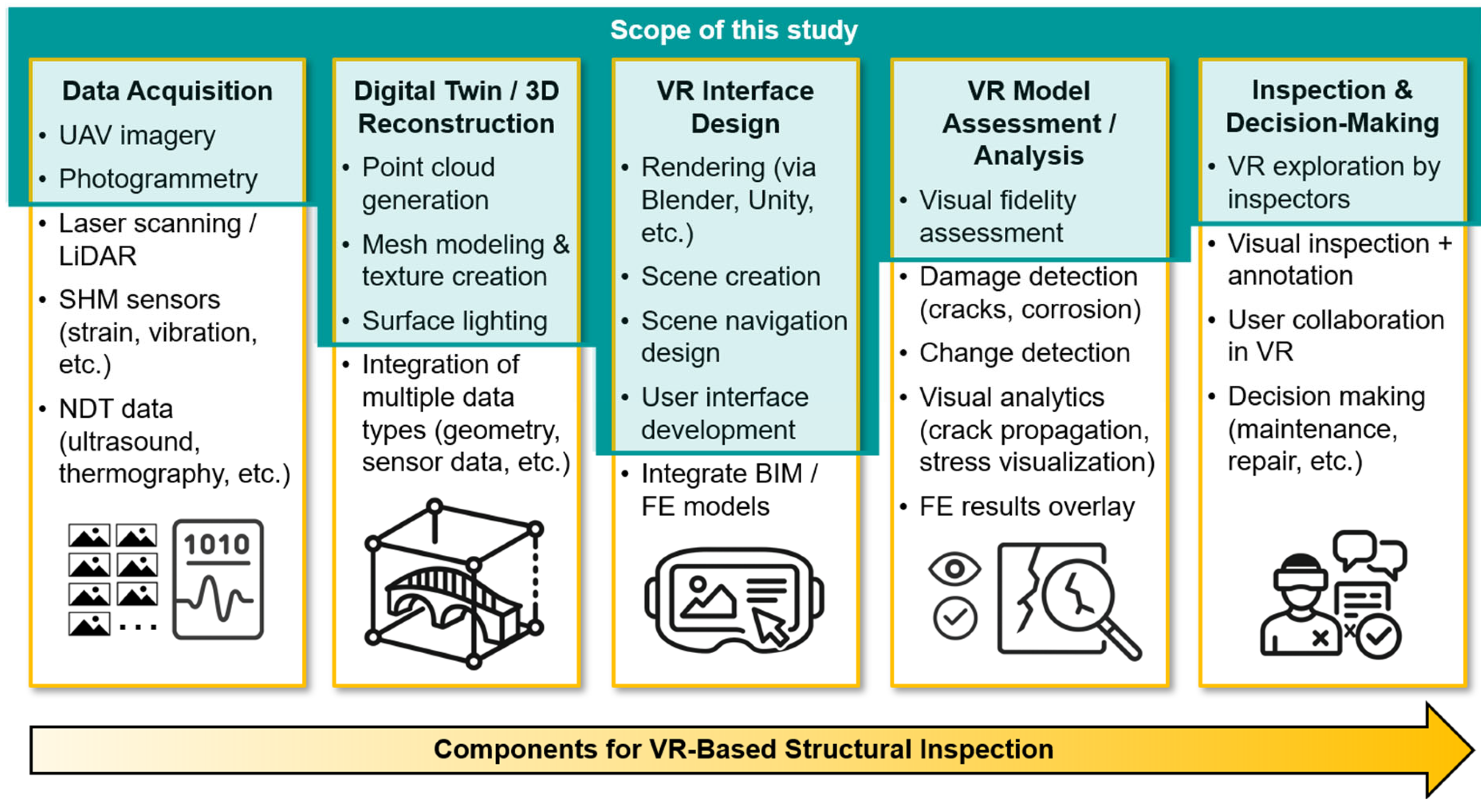

4. Scope of This Study

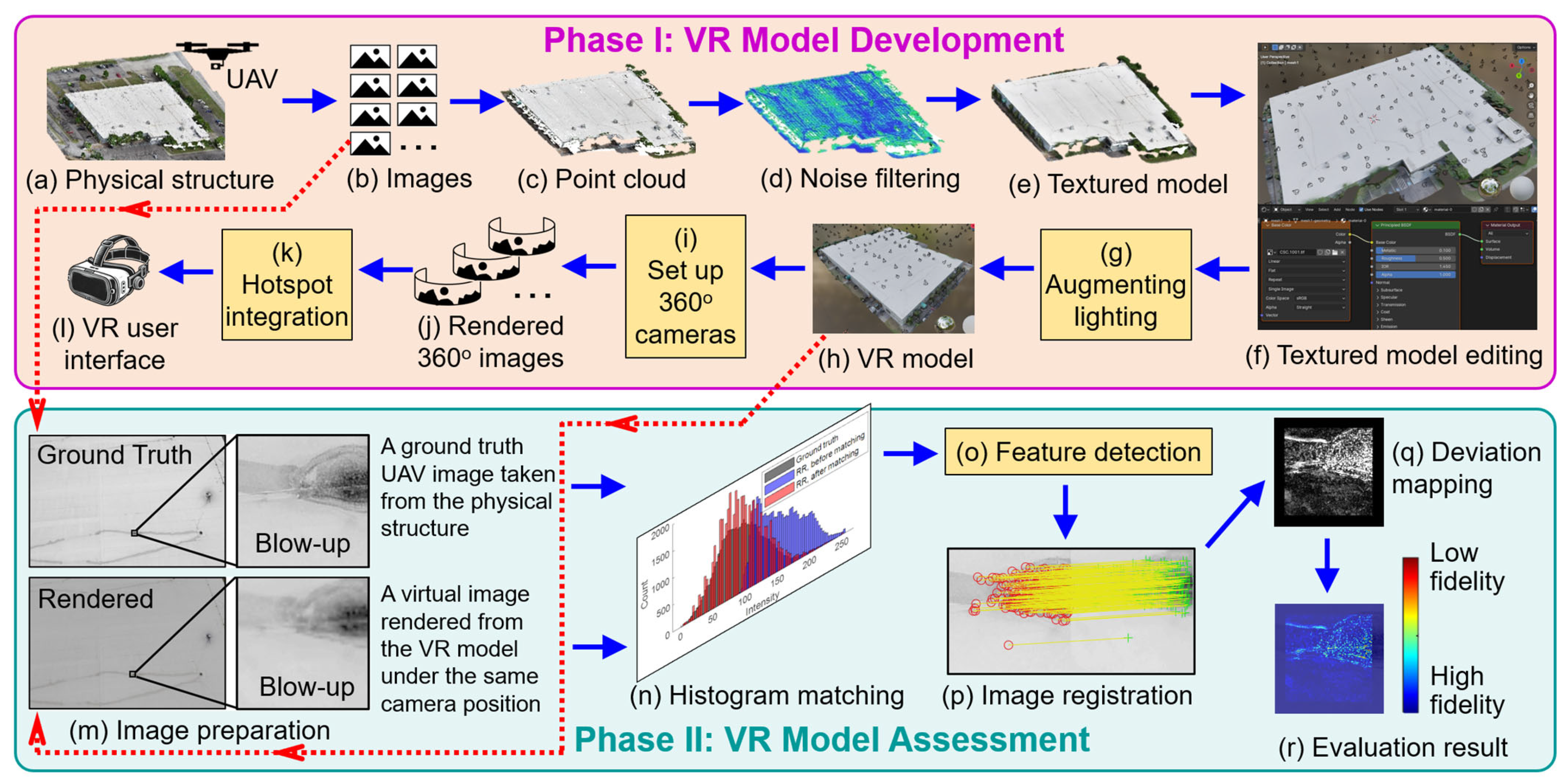

5. Methodology

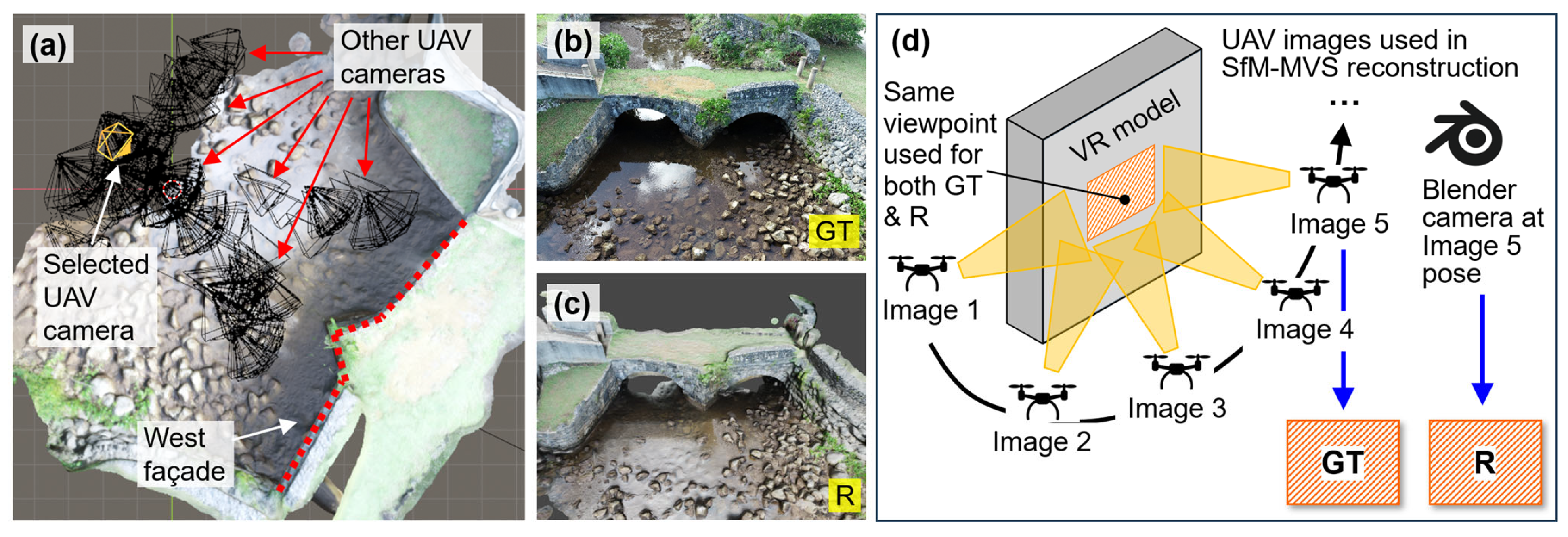

5.1. VR Model Development

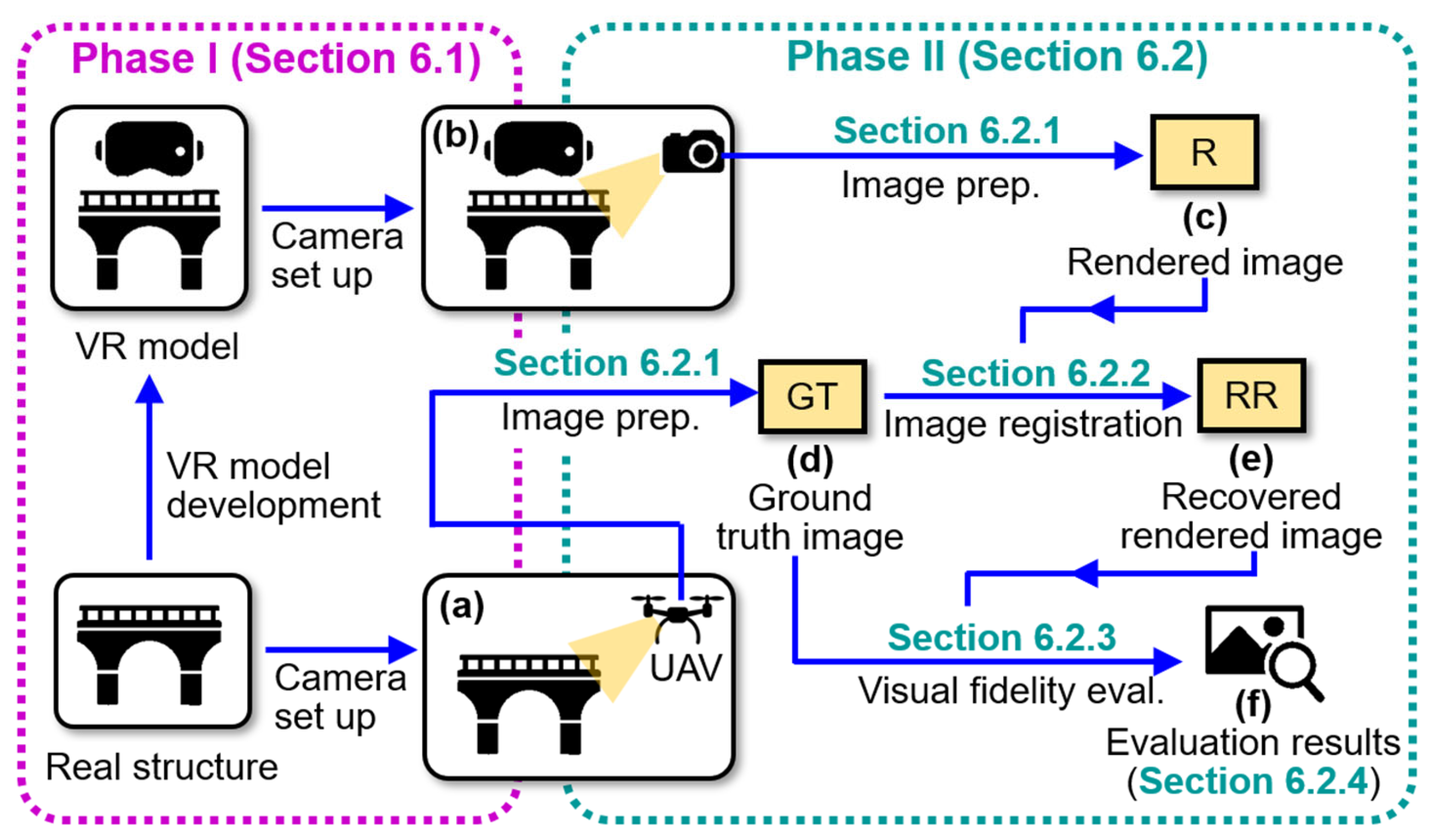

5.2. VR Model Assessment

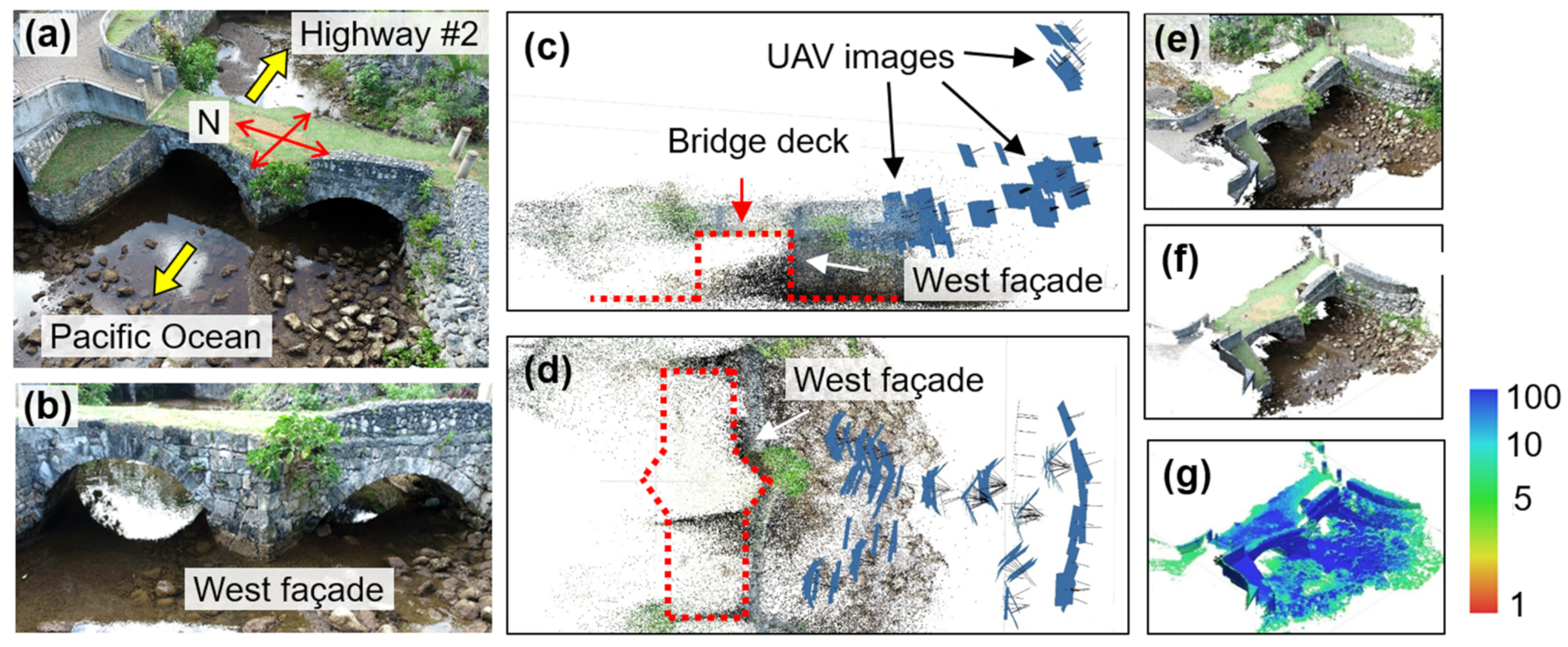

6. Bridge Validation

6.1. VR Model Development

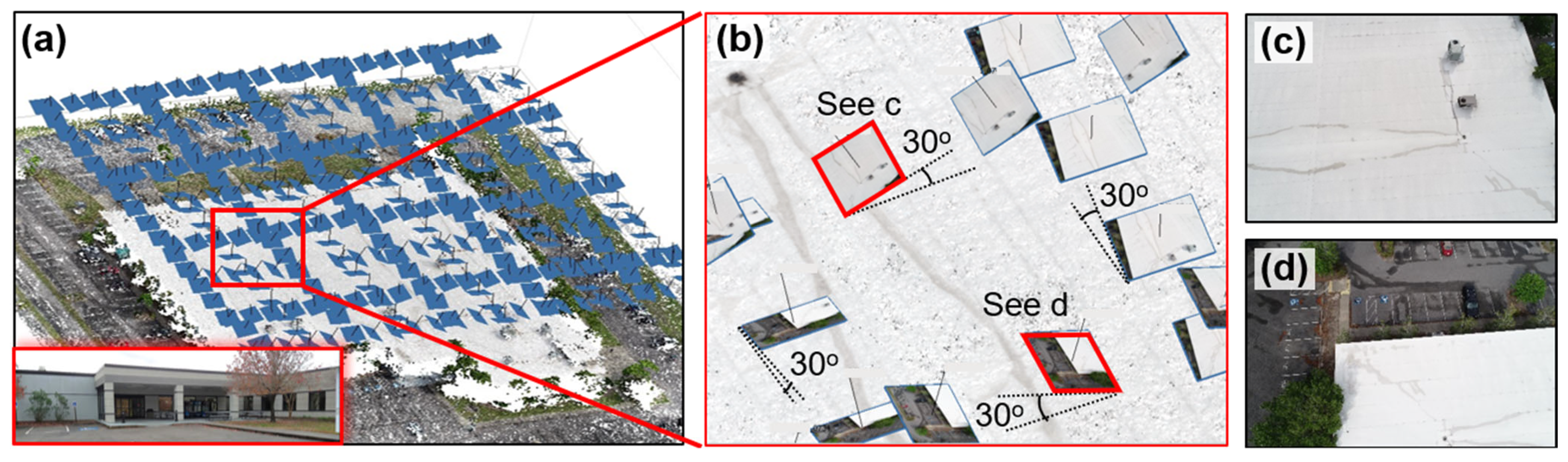

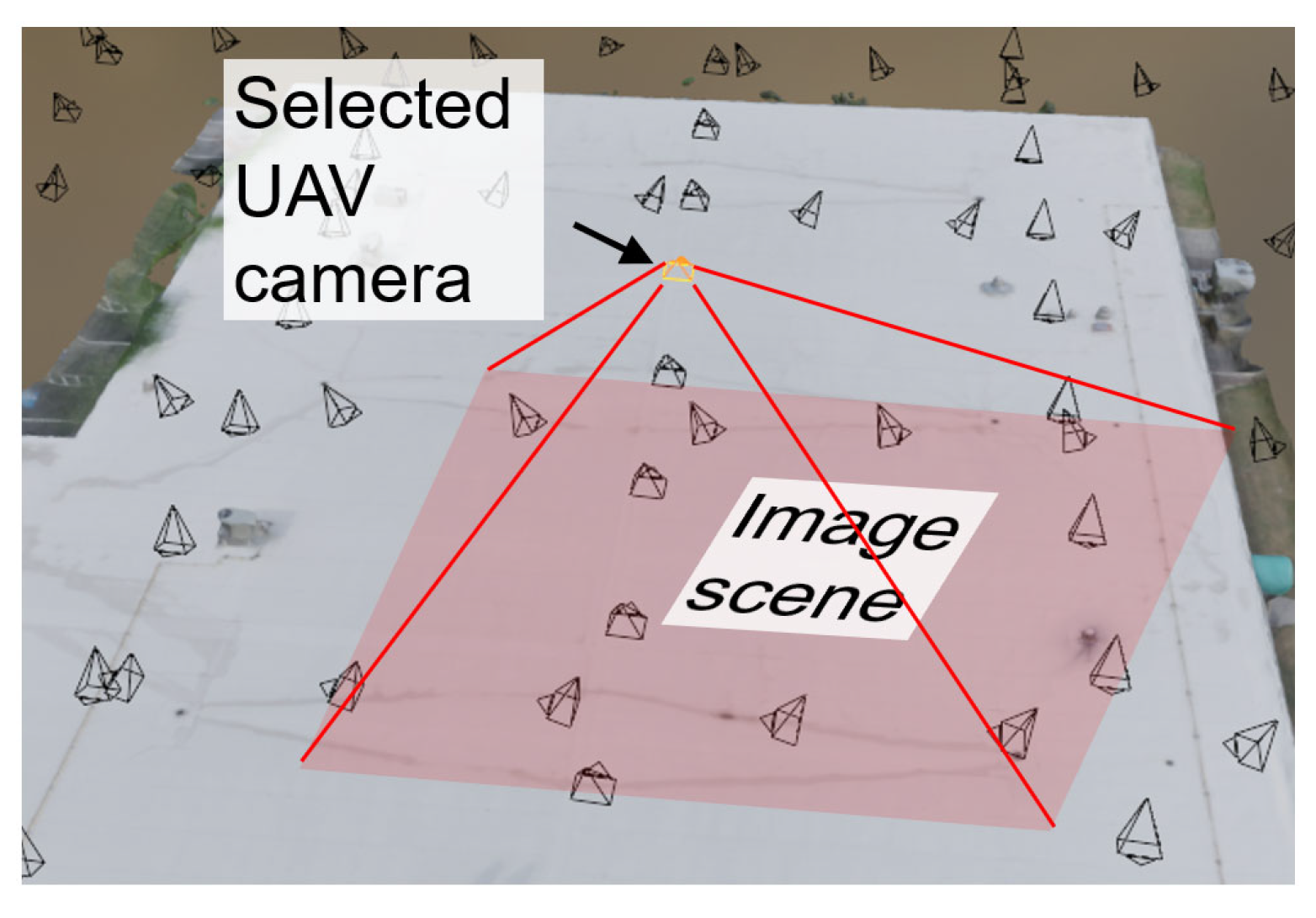

6.1.1. Point Cloud Reconstruction via SfM-MVS

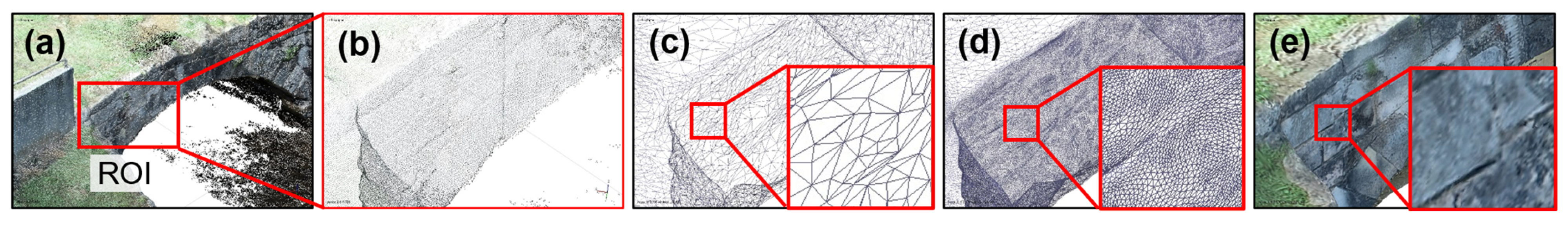

6.1.2. Textured Model Generation

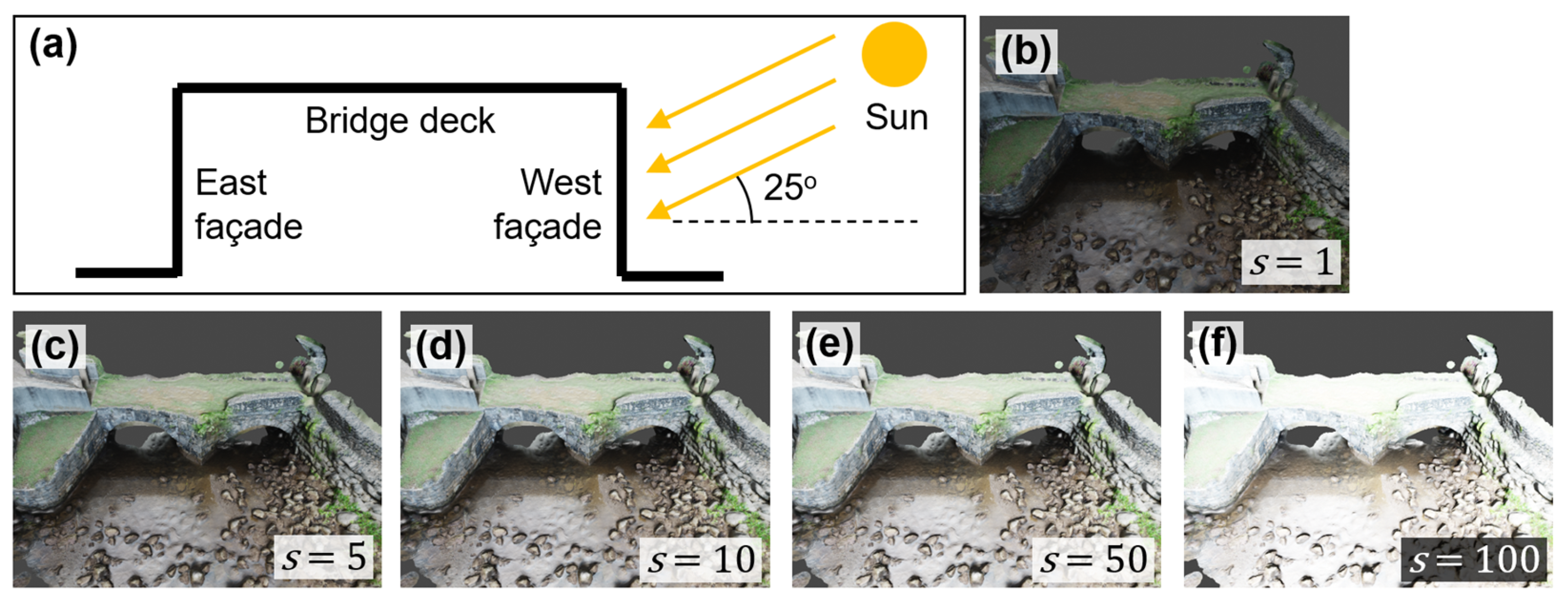

6.1.3. Texture Augmentation

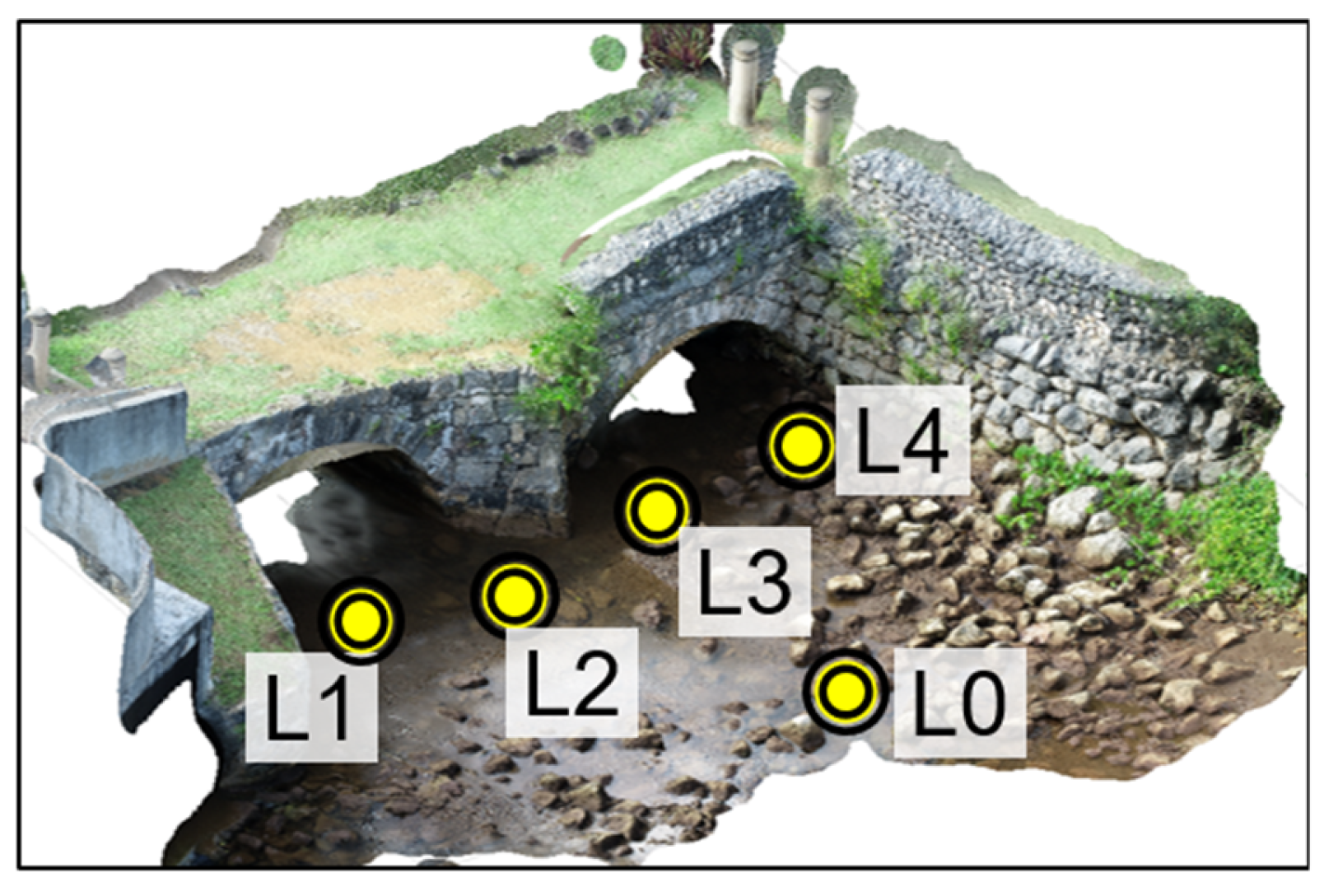

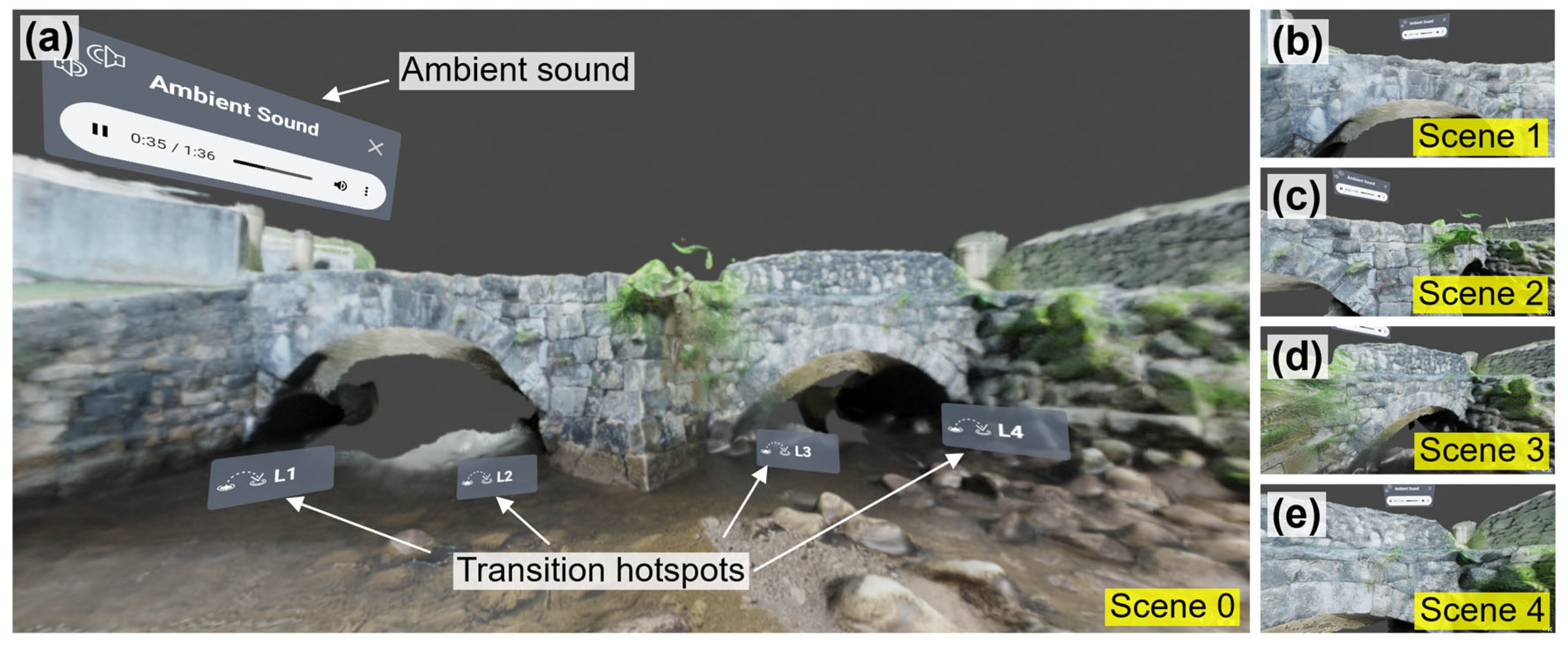

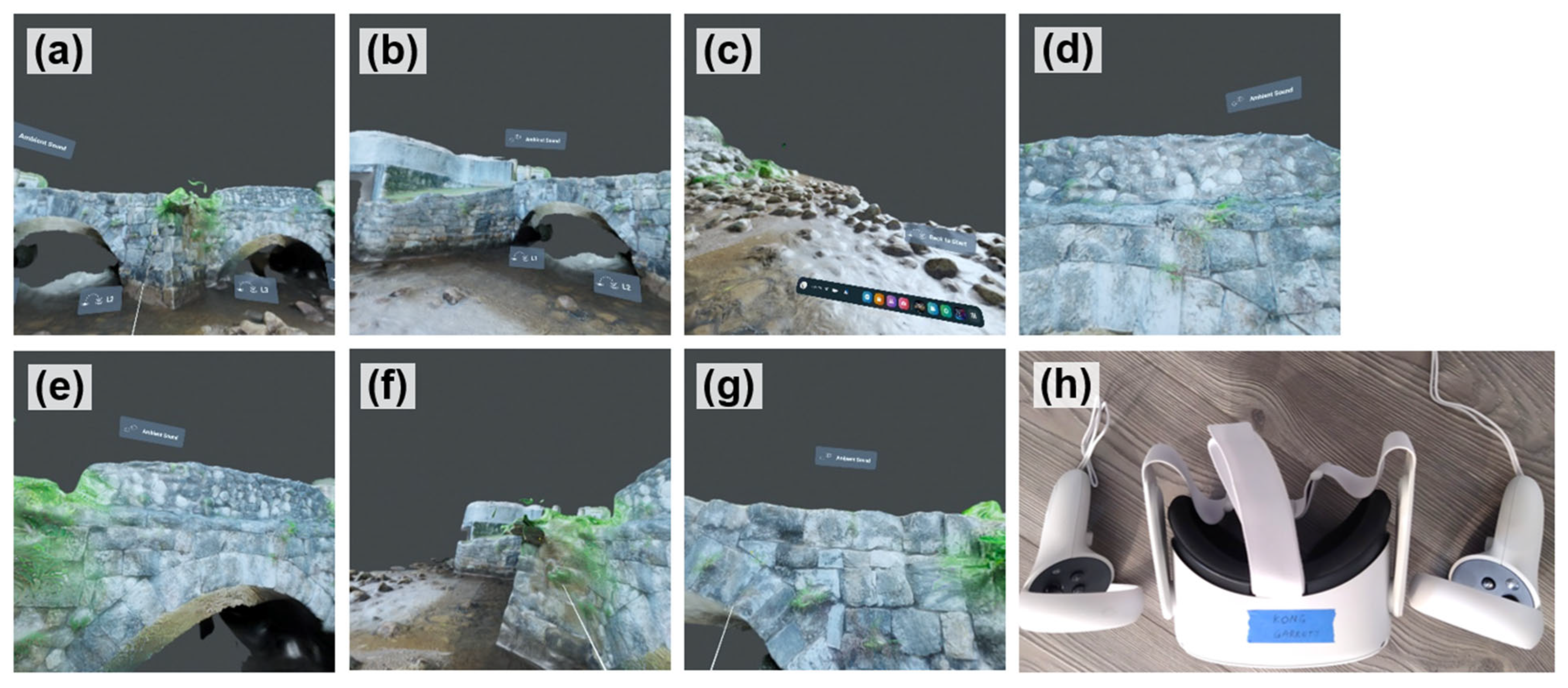

6.1.4. VR Scene Creation

6.1.5. VR User Interface

6.1.6. Implementation via VR Headset

6.2. VR Model Assessment

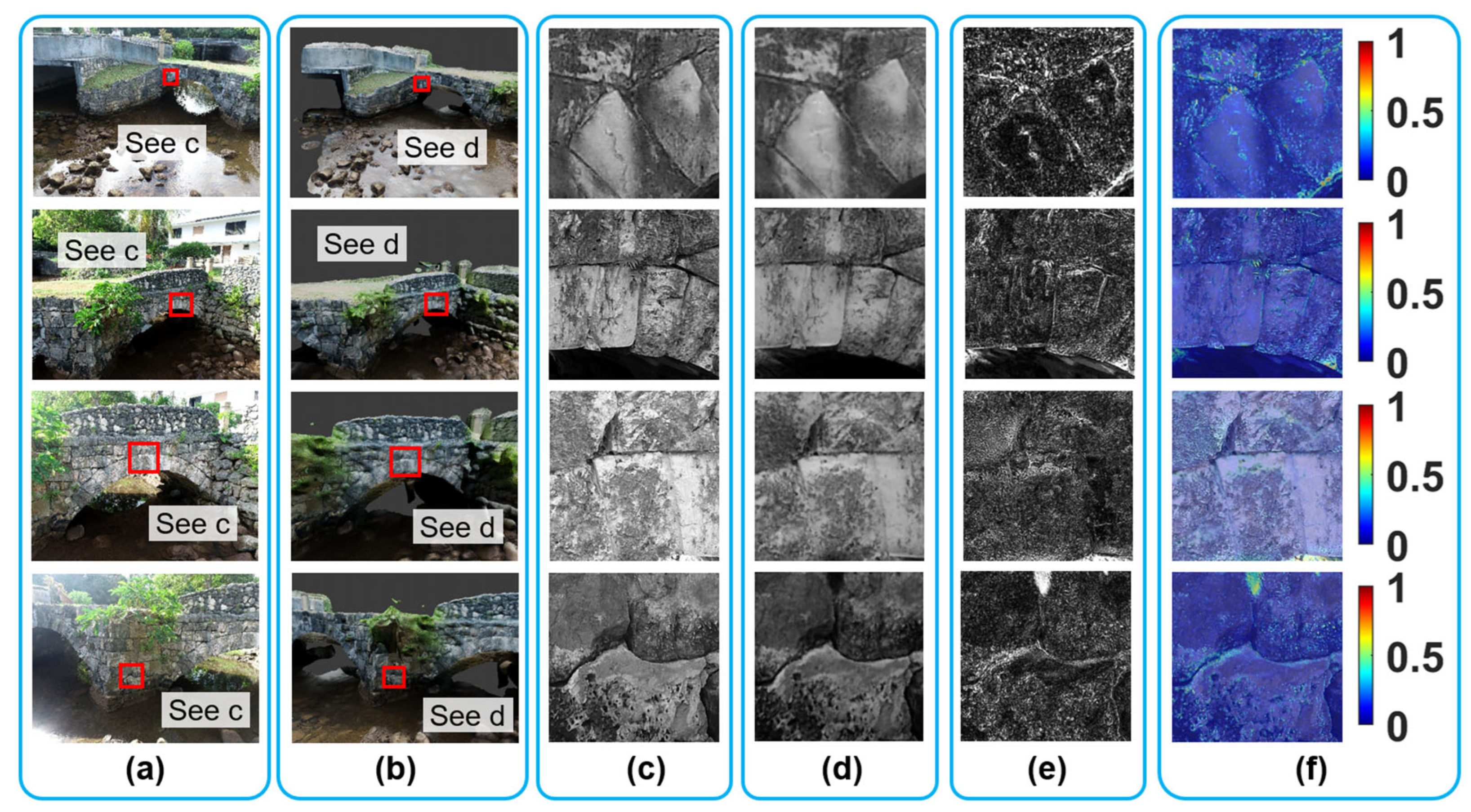

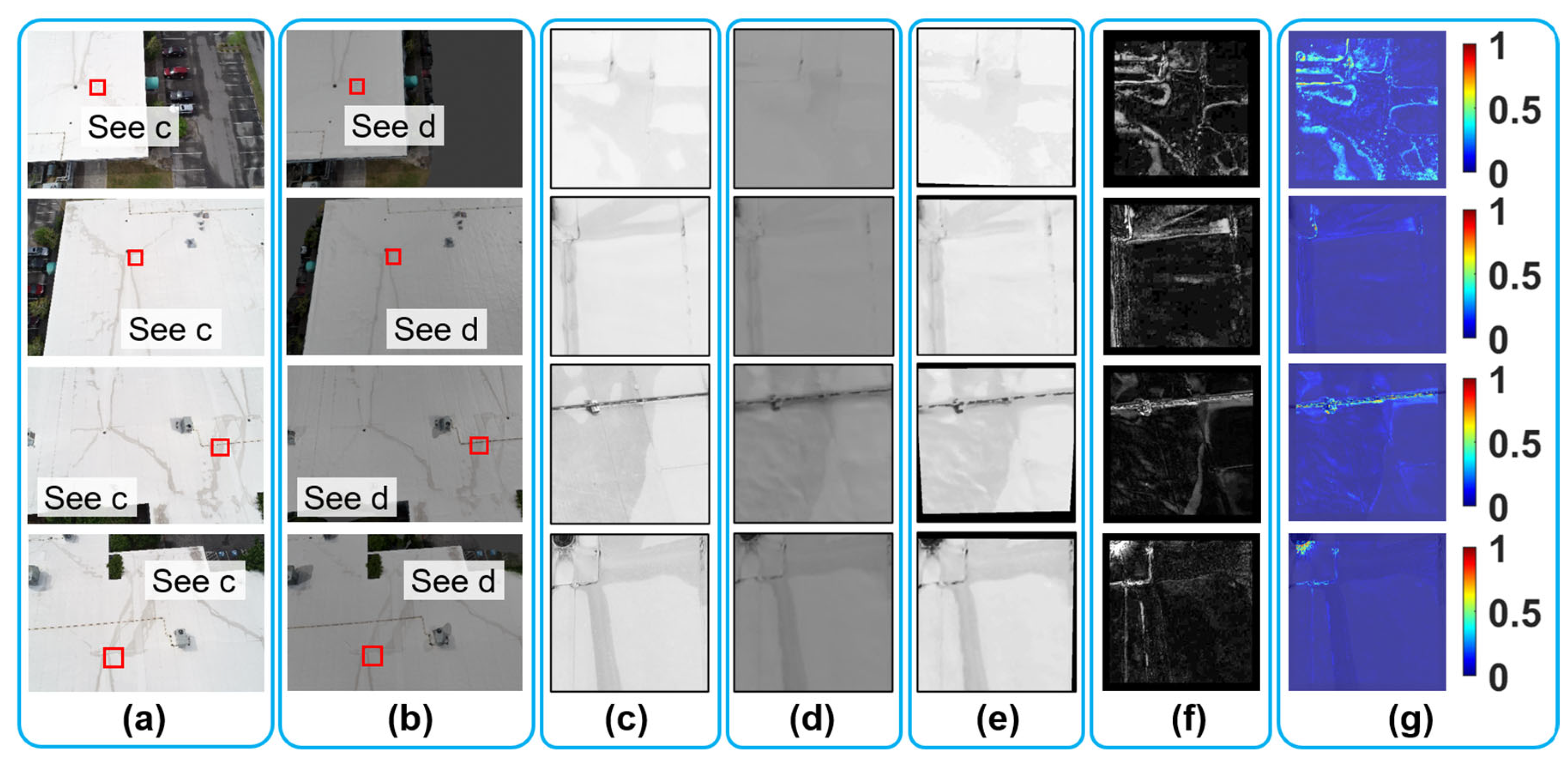

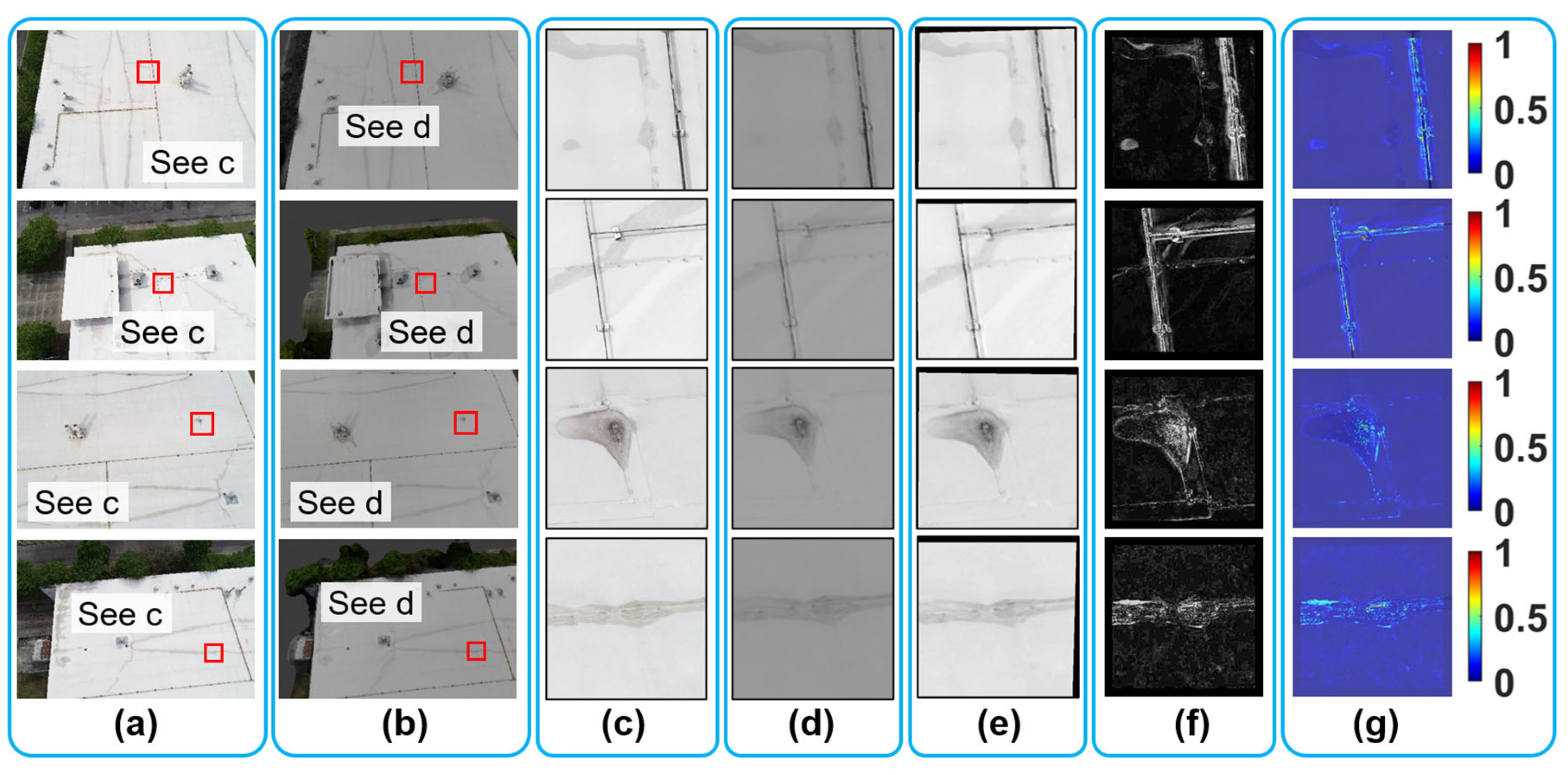

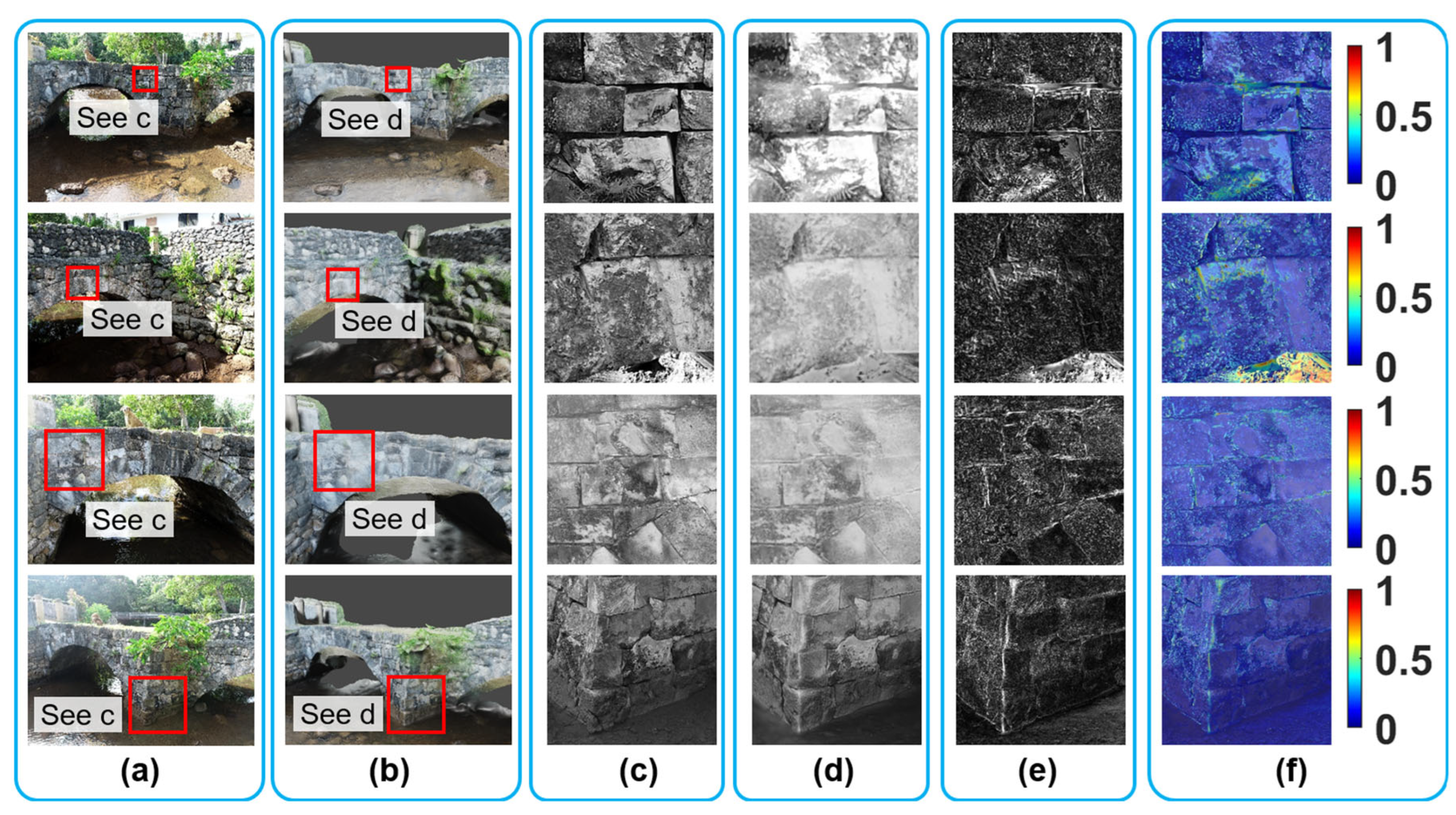

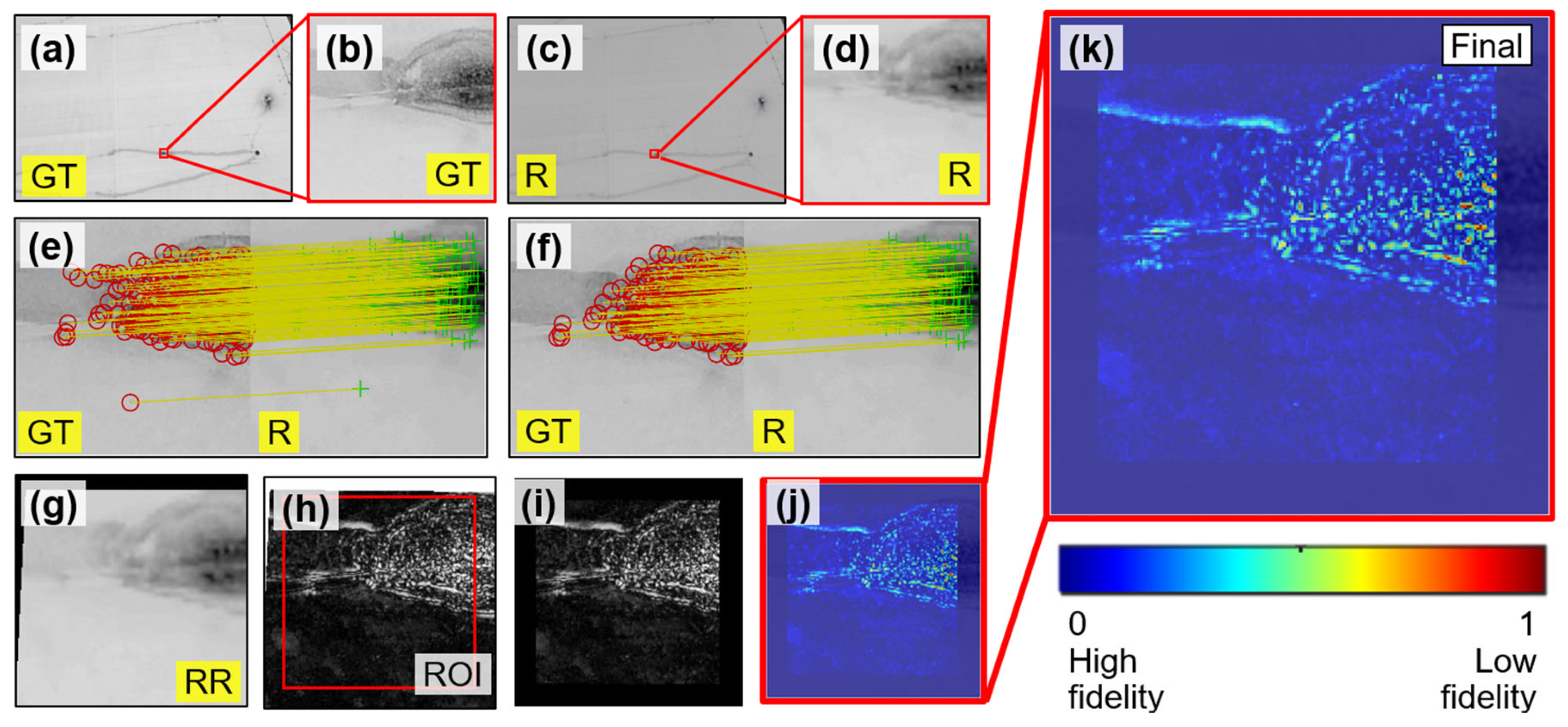

6.2.1. Image Preparation

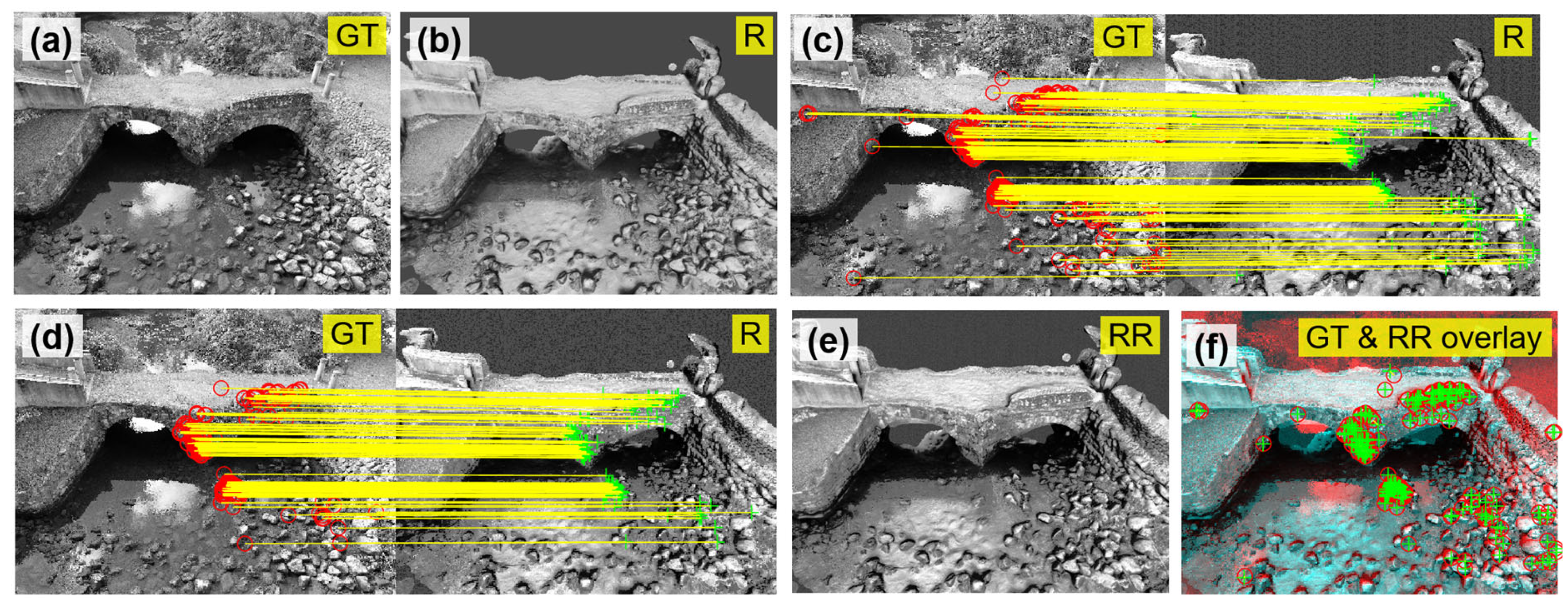

6.2.2. Image Registration

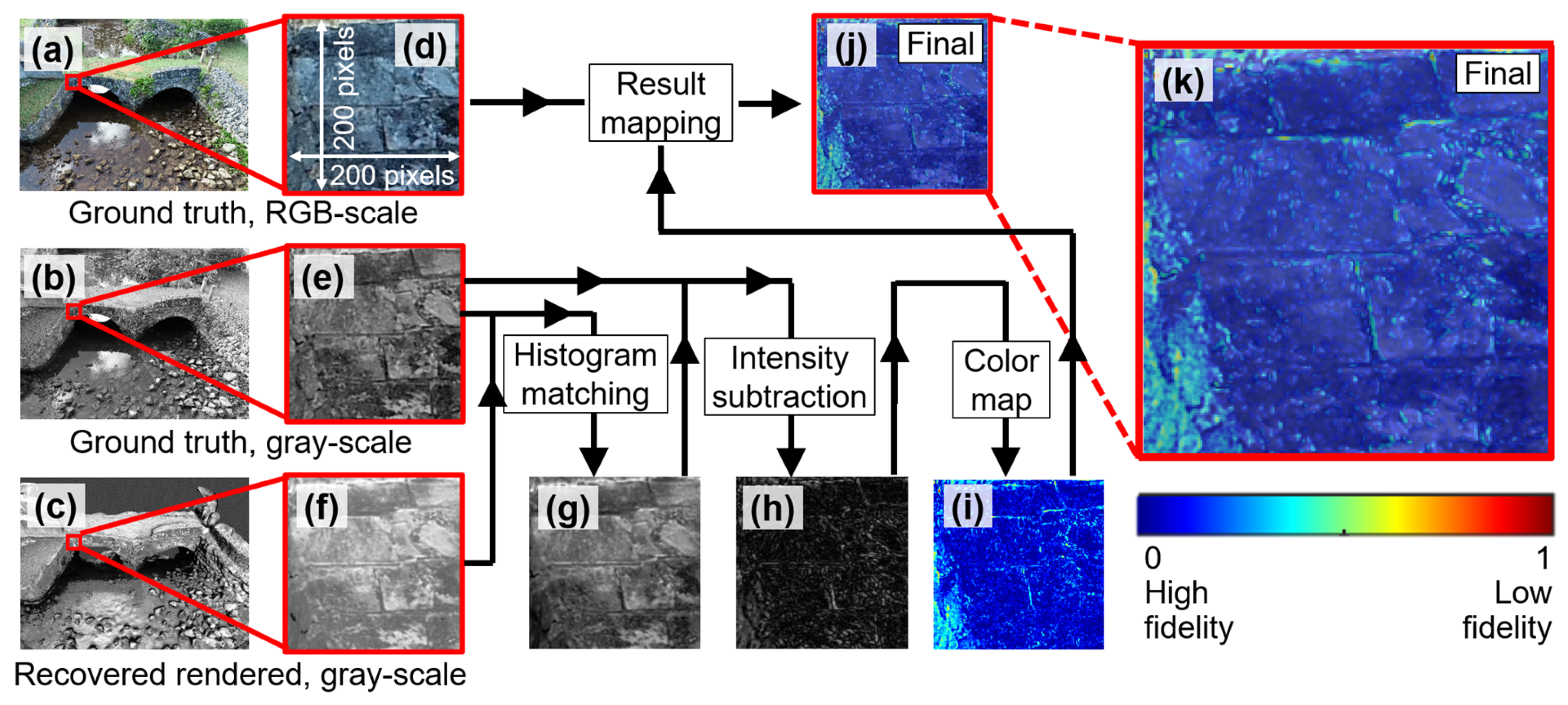

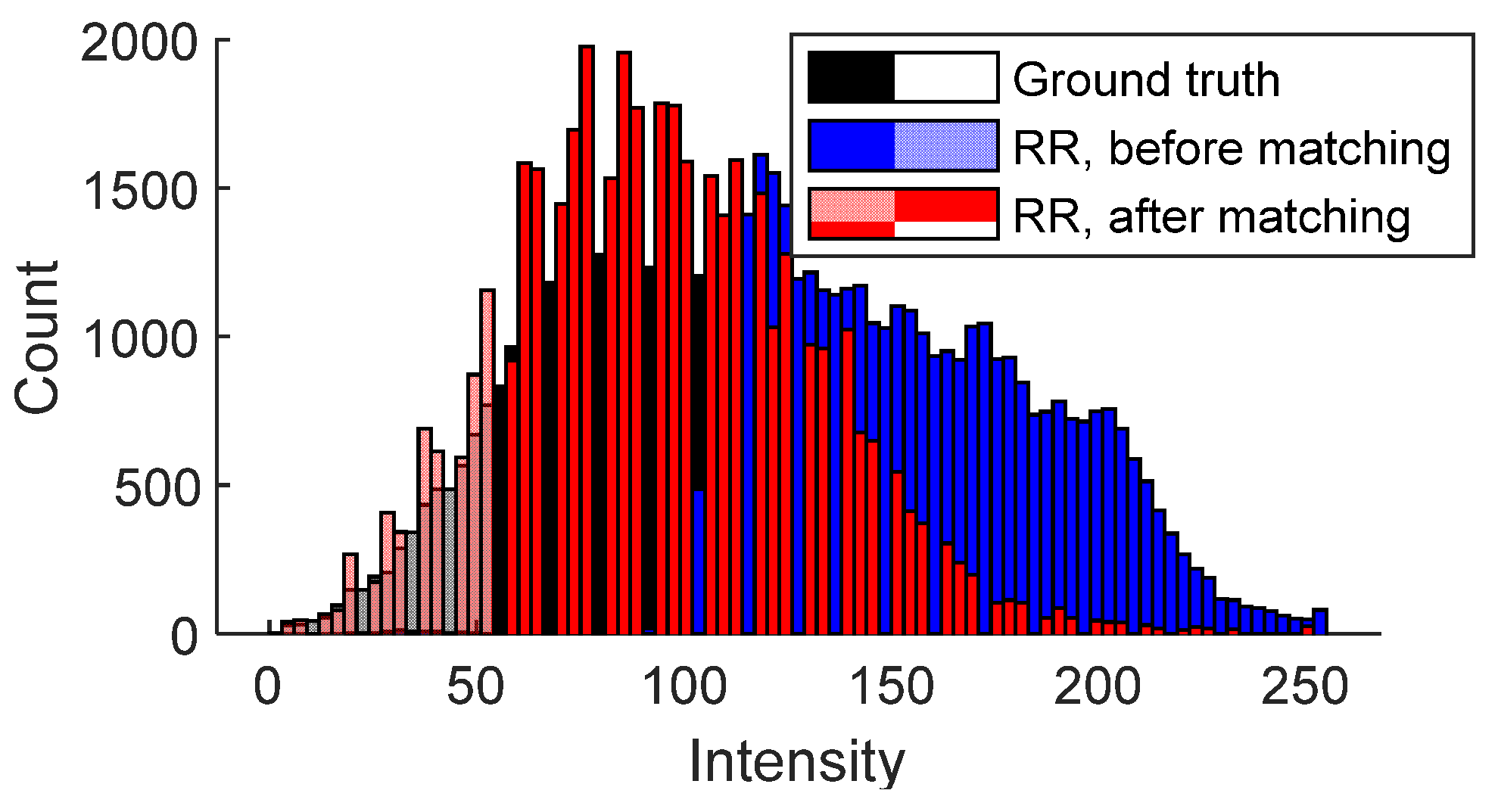

6.2.3. Visual Fidelity Evaluation

6.2.4. Evaluation Results

7. Building Validation

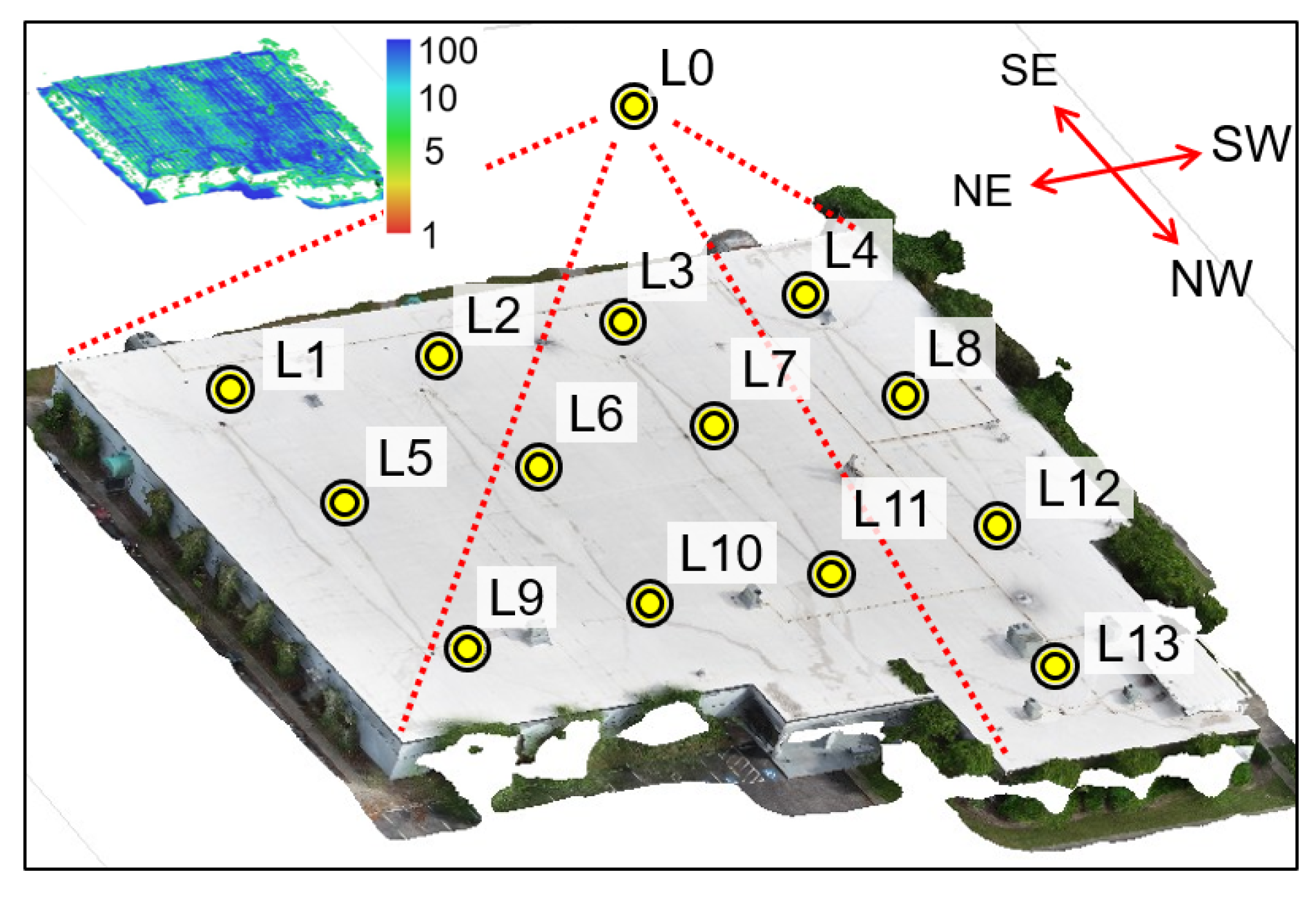

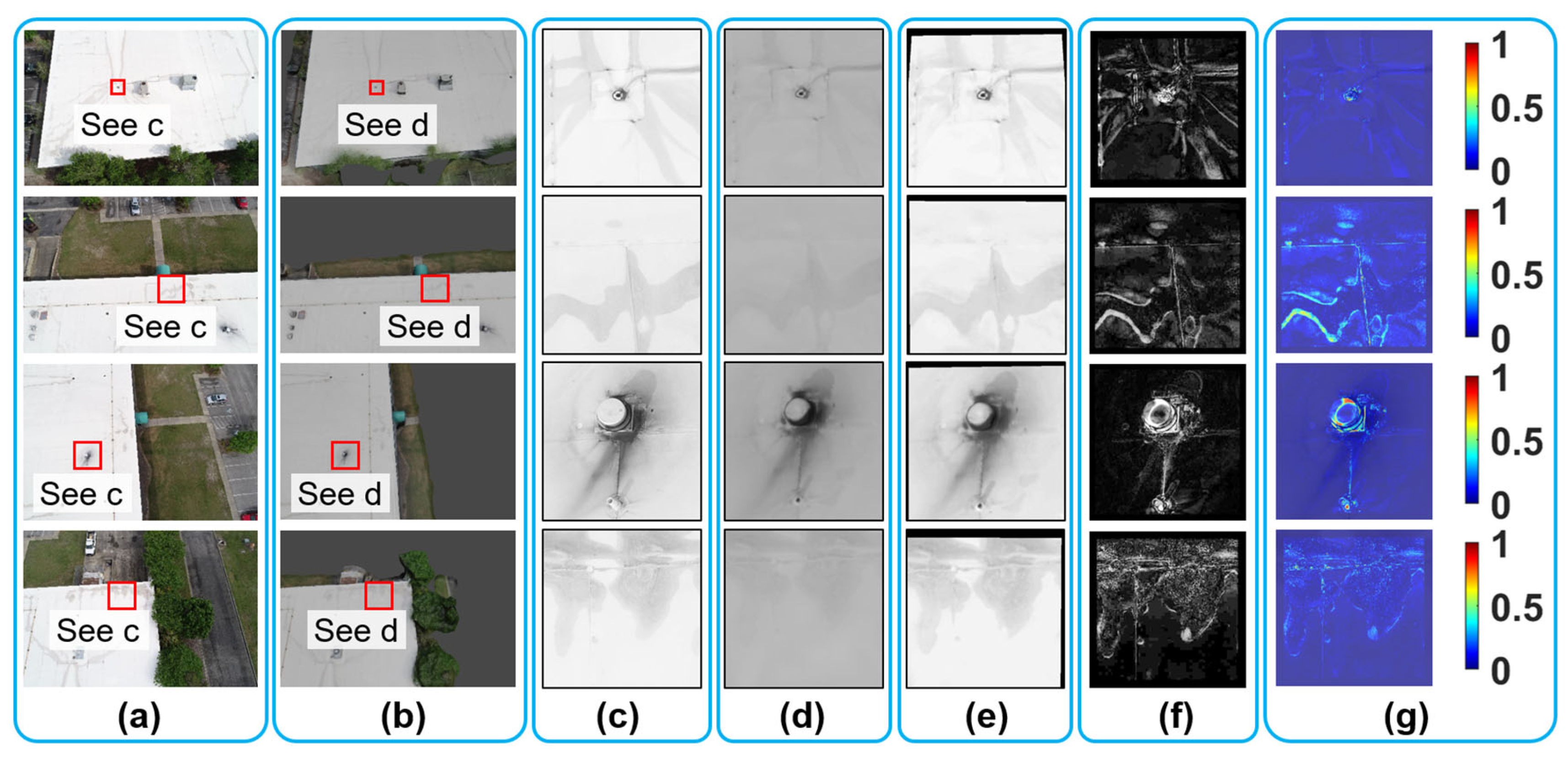

7.1. VR Model Development

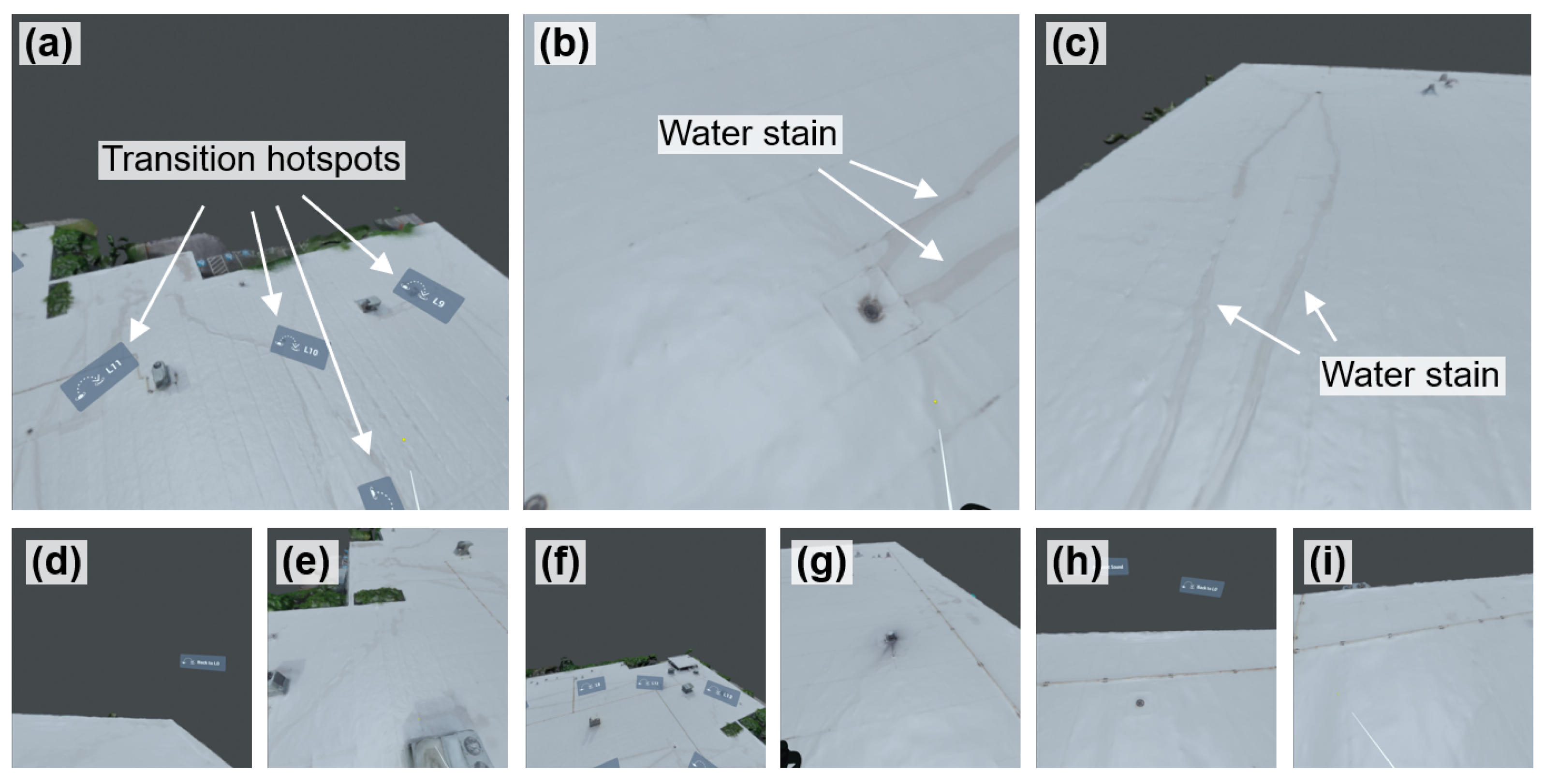

7.2. VR Model Assessment

8. Discussion

8.1. Discussion of Two Validation Studies

8.2. Limitations

8.3. Future Work

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

References

- American Society of Civil Engineers (ASCE). 2021 Infrastructure Report Card. Available online: https://infrastructurereportcard.org/ (accessed on 1 June 2025).

- Understanding Dam Failures, Association of State Dam Safety Officials. Available online: https://damsafety.org/dam-failures (accessed on 1 June 2025).

- Overview of Dams, ASCE Infrastructure Report Card. Available online: https://infrastructurereportcard.org/cat-item/dams-infrastructure/ (accessed on 1 June 2025).

- US Department of Transportation, Highway Bridge Inspections. Available online: https://www.transportation.gov/testimony/highway-bridge-inspections (accessed on 1 June 2025).

- FEMA Fact Sheet: FEMA National Dam Safety Program Overview. Available online: https://www.fema.gov/sites/default/files/documents/fema_nsdp-overview-fact-sheet.pdf (accessed on 1 June 2015).

- Deng, Z.; Huang, M.; Wan, N.; Zhang, J. The current development of structural health monitoring for bridges: A review. Buildings 2023, 13, 1360.

- An, Y.; Chatzi, E.; Sim, S.H.; Laflamme, S.; Blachowski, B.; Ou, J. Recent progress and future trends on damage identification methods for bridge structures. Struct. Control. Health Monit. 2019, 26, e2416.

- Graybeal, B.A.; Phares, B.M.; Rolander, D.D.; Moore, M.; Washer, G. Visual inspection of highway bridges. J. Nondestruct. Eval. 2002, 21, 67–83.

- Jordan, S.; Moore, J.; Hovet, S.; Box, J.; Perry, J.; Kirsche, K.; Lewis, D.; Tse, Z.T.H. State-of-the-art technologies for UAV inspections. IET Radar Sonar Navig. 2018, 12, 151–164.

- Sony, S.; Laventure, S.; Sadhu, A. A literature review of next-generation smart sensing technology in structural health monitoring. Struct. Control. Health Monit. 2019, 26, e2321.

- Fayyad, T.M.; Taylor, S.; Feng, K.; Hui, F.K.P. A scientometric analysis of drone-based structural health monitoring and new technologies. Adv. Struct. Eng. 2025, 28, 122–144.

- Omer, M.; Margetts, L.; Hadi Mosleh, M.; Hewitt, S.; Parwaiz, M. Use of gaming technology to bring bridge inspection to the office. Struct. Infrastruct. Eng. 2019, 15, 1292–1307.

- Omer, M.; Margetts, L.; Mosleh, M.H.; Cunningham, L.S. Inspection of concrete bridge structures: Case study comparing conventional techniques with a virtual reality approach. J. Bridge Eng. 2021, 26, 05021010.

- Luleci, F.; Chi, J.; Cruz-Neira, C.; Reiners, D.; Catbas, F.N. Fusing infrastructure health monitoring data in point cloud. Autom. Constr. 2024, 165, 105546.

- Napolitano, R.; Blyth, A.; Glisic, B. Virtual environments for visualizing structural health monitoring sensor networks, data, and metadata. Sensors 2018, 18, 243.

- Attard, L.; Debono, C.J.; Valentino, G.; Di Castro, M.; Osborne, J.A.; Scibile, L.; Ferre, M. A comprehensive virtual reality system for tunnel surface documentation and structural health monitoring. In Proceedings of the 2018 IEEE International Conference on Imaging Systems and Techniques (IST), Krakow, Poland, 16–18 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

- Bacco, M.; Barsocchi, P.; Cassará, P.; Germanese, D.; Gotta, A.; Leone, G.R.; Moroni, D.; Pascali, M.A.; Tampucci, M. Monitoring ancient buildings: Real deployment of an IoT system enhanced by UAVs and virtual reality. IEEE Access 2020, 8, 50131–50148.

- Yasin Yiğit, A.; Uysal, M. Virtual reality visualisation of automatic crack detection for bridge inspection from 3D digital twin generated by UAV photogrammetry. Measurement 2025, 242, 115931.

- Getuli, V.; Capone, P.; Bruttini, A.; Isaac, S. BIM-based immersive Virtual Reality for construction workspace planning: A safety-oriented approach. Autom. Constr. 2020, 114, 103160.

- Wang, P.; Bai, X.; Billinghurst, M.; Zhang, S.; Zhang, X.; Wang, S.; He, W.; Yan, Y.; Ji, H. AR/MR remote collaboration on physical tasks: A review. Robot. Comput.-Integr. Manuf. 2021, 72, 102071.

- Zhang, M.; Shu, L.; Luo, X.; Yuan, M.; Zheng, X. Virtual reality technology in construction safety training: Extended technology acceptance model. Autom. Constr. 2022, 135, 104113.

- Hartless, J.F.; Ayer, S.K.; London, J.S.; Wu, W. Comparison of building design assessment behaviors of novices in augmented-and virtual-reality environments. J. Archit. Eng. 2020, 26, 04020002.

- Jáuregui, D.V.; White, K.R. Implementation of virtual reality in routine bridge inspection. Transp. Res. Rec. 2003, 1827, 29–35.

- Google Scholar. Available online: https://scholar.google.com/ (accessed on 1 June 2025).

- ScienceDirect. Available online: https://www.sciencedirect.com/ (accessed on 1 June 2025).

- Ullman, S. The interpretation of structure from motion. Proc. R. Soc. Lond. Ser. B Biol. Sci. 1979, 203, 405–426.

- Andrew, A.M. Multiple view geometry in computer vision. Kybernetes 2001, 30, 1333–1341.

- AgiSoft Metashape. Available online: http://www.agisoft.com/downloads/installer/ (accessed on 7 January 2025).

- 3Dflow. Available online: https://www.3dflow.net/3df-zephyr-pro-3d-models-from-photos/ (accessed on 1 June 2025).

- Kaartinen, E.; Dunphy, K.; Sadhu, A. LiDAR-based structural health monitoring: Applications in civil infrastructure systems. Sensors 2022, 22, 4610.

- Unity. Available online: https://unity.com/ (accessed on 1 June 2025).

- Kolor. Panotour. Available online: http://www.kolor.com/panotour/ (accessed on 1 June 2025).

- Stratbox. Introducing Stratbox Connect. 2024. Available online: https://www.imagedreality.com/stratbox-connect/ (accessed on 2 October 2024).

- Savini, F.; Marra, A.; Cordisco, A.; Giallonardo, M.; Fabbrocino, G.; Trizio, I. A complex Virtual Reality system for the management and visualization of bridge data. SCIRES-IT-Sci. Res. Inf. Technol. 2022, 12, 49–66.

- Luleci, F.; Li, L.; Chi, J.; Reiners, D.; Cruz-Neira, C.; Catbas, F.N. Structural health monitoring of a foot bridge in virtual reality environment. Procedia Struct. Integr. 2022, 37, 65–72.

- Luleci, F.; Catbas, F.N. Bringing site to the office: Decision-making in infrastructure management through virtual reality. Autom. Constr. 2024, 166, 105675.

- Shao, W.; Sasaki, A.; Kakizaki, K.I.; Araki, S.; Mukai, T. Inspection Support System for Aging Buildings Using Virtual Reality Technology. In Proceedings of the 2022 IEEE International Conference on Consumer Electronics-Taiwan, Taipei, Taiwan, 6–8 July 2022; pp. 47–48.

- Ma, X.; Liu, Y.; Wang, J.; Wang, D.; Liu, Y. Application of the virtual reality technology in structural safety monitoring of shield tunnels. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2020; Volume 960, No. 2; p. 022064.

- Fabbrocino, G.; Savini, F.; Marra, A.; Trizio, I. Virtual investigation of masonry arch bridges: Digital procedures for inspection, diagnostics, and data management. In Proceedings of the International Conference of the European Association on Quality Control of Bridges and Structures, Padua, Italy, 29 August–1 September 2021; Springer International Publishing: Cham, Switzerland, 2021; pp. 979–987.

- Hameed, A.; Perkis, A. Authenticity and presence: Defining perceived quality in VR experiences. Front. Psychol. 2024, 15, 1291650.

- Gilbert, S.B. Perceived Realism of Virtual Environments Depends on Authenticity. Presence 2016, 25, 322–324.

- Wang, A.; Thompson, M.; Uz-Bilgin, C.; Klopfer, E. Authenticity, interactivity, and collaboration in virtual reality games: Best practices and lessons learned. Front. Virtual Real. 2021, 2, 734083.

- Lavoué, G.; Larabi, M.C.; Váša, L. On the efficiency of image metrics for evaluating the visual quality of 3D models. IEEE Trans. Vis. Comput. Graph. 2015, 22, 1987–1999.

- Onozawa. Final Historical Structures Report Taleyfak Spanish Bridge, Tollai Talaifak, Agat, Guam. 2008. Available online: https://www.pacificpreservation.org/plans (accessed on 7 January 2025).

- Lizama, D.J. Colonization to Construction: Bridging the Gap Between Ancient Chamorro, Spanish Colonial & Modern Architecture on Guam. Undergraduate Honors Theses, University of San Francisco, San Francisco, CA, USA, 2016. Available online: https://repository.usfca.edu/honors/9 (accessed on 1 June 2025).

- Kong, X.; Hucks, R.G. Preserving our heritage: A photogrammetry-based digital twin framework for monitoring deteriorations of historic structures. Autom. Constr. 2023, 152C, 104928.

- Kingsland, K. Comparative analysis of digital photogrammetry software for cultural heritage. Digit. Appl. Archaeol. Cult. Herit. 2020, 18, e00157.

- Kong, X. Identifying Geomorphological Changes of Coastal Cliffs through Point Cloud Registration from UAV Images. Remote Sens. 2021, 13, 3152.

- Zhao, S.; Kang, F.; Li, J.; Ma, C. Structural health monitoring and inspection of dams based on UAV photogrammetry with image 3D reconstruction. Autom. Constr. 2021, 130, 103832.

- Blender, Version 3.5. 2023. Available online: https://www.blender.org/ (accessed on 1 June 2025).

- VIAR360. Version 1.8.17. Available online: https://www.viar360.com/ (accessed on 1 June 2025).

- Kong, X.; Fegely, A.; De Backer, W.; Gray, M.; Hitt, G.; Kerns, R. Work-in-Progress: Developing an Interactive, Immersive, 360-Degree Virtual Media for Enhancing Student Learning in Additive Manufacturing. In Proceedings of the 2022 ASEE Annual Conference & Exposition, Minneapolis, MN, USA, 26–29 June 2022.

- Kong, X.; Fegely, A.; Hitt, G.W.; Garcia, D.; Montgomery, K.; Law, D. i360°VR: An interactive 360-degree virtual reality approach for engineering education. In Proceedings of the 2025 ASEE Annual Conference, Montreal, QC, Canada, 22–25 June 2025; Available online: https://nemo.asee.org/public/conferences/365/papers/45936/view (accessed on 1 June 2025).

- Uplifting Pad Texture, Samuel Francis Johnson. Available online: https://pixabay.com/sound-effects/search/ambient/ (accessed on 1 June 2025).

- Meta Quest 2. Available online: https://www.meta.com/quest/products/quest-2/ (accessed on 15 July 2023).

- The MathWorks, Inc. MATLAB, version R2024a; The MathWorks, Inc.: Natick, MA, USA, 2024.

- Shi, J.; Tomasi, C. Good features to track. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; IEEE: Piscataway, NJ, USA, 1994; pp. 593–600.

- Leutenegger, S.; Chli, M.; Siegwart, R.Y. BRISK: Binary robust invariant scalable keypoints. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 2548–2555.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; Volume 15, No. 50. p. 10-5244.

- Kong, X.; Smyl, D. Investigation of the condominium building collapse in Surfside, Florida: A video feature tracking approach. Structures 2022, 43, 533–545.

- Kong, X.; Li, J. Vision-based fatigue crack detection of steel structures using video feature tracking. Comput.-Aided Civ. Infrastruct. Eng. 2018, 33, 783–799.

- Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the IJCAI’81: 7th International Joint Conference on Artificial Intelligence, Vancouver, BC, Canada, 24–28 August 1981; Volume 2, pp. 674–679.

- Tomasi, C.; Kanade, T. Detection and tracking of point. Int. J. Comput. Vis. 1991, 9, 137–154.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Tanchenko, A. Visual-PSNR measure of image quality. J. Vis. Commun. Image Represent. 2014, 25, 874–878.

- Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

- Pix4Dcapture. Available online: https://www.pix4d.com/product/pix4dcapture/ (accessed on 1 February 2021).

- Double Grid Mode. Pix4Dcapture. Available online: https://support.pix4d.com/migrated/hc/en-us/articles/115002496206 (accessed on 1 June 2025).

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Slocum, R.K.; Parrish, C.E. Simulated imagery rendering workflow for UAS-based photogrammetric 3D reconstruction accuracy assessments. Remote Sens. 2017, 9, 396.

- Liu, W.; Zhang, P.; Lei, Y.; Huang, X.; Yang, J.; Ng, M. A generalized framework for edge-preserving and structure-preserving image smoothing. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 6631–6648.

- Hsieh, Y.A.; Tsai, Y.J. Machine learning for crack detection: Review and model performance comparison. J. Comput. Civ. Eng. 2020, 34, 04020038.

- Mishra, M.; Lourenço, P.B. Artificial intelligence-assisted visual inspection for cultural heritage: State-of-the-art review. J. Cult. Herit. 2024, 66, 536–550.

- Mansuri, L.E.; Patel, D.A. Artificial intelligence-based automatic visual inspection system for built heritage. Smart Sustain. Built Environ. 2022, 11, 622–646.

- Buia, A.; Stockhausen, F.; Filmann, N.; Hanisch, E. 2D vs. 3D imaging in laparoscopic surgery—Results of a prospective randomized trial. Langenbeck’s Arch. Surg. 2017, 402, 1241–1253.

- Molinari, G.; Ragonesi, T.; Hool, S.-L.; Mantokoudis, G.; Presutti, L.; Caversaccio, M.; Anschuetz, L. Surgical implications of 3D vs. 2D endoscopic ear surgery: A case–control study. Eur. Arch. Oto-Rhino-Laryngol. 2020, 277, 3323–3330.

- Kulke, L.; Pasqualette, L. Learning from 360-degree videos on a VR headset compared to 2D videos on a monitor. Cogent Educ. 2024, 11, 2355386.

- Makransky, G.; Klingenberg, S. Virtual reality enhances safety training in the maritime industry: An organizational training experiment with a non-WEIRD sample. J. Comput. Assist. Learn. 2022, 38, 1127–1140.

- Andersen, M.S.; Makransky, G. The validation and further development of a multidimensional cognitive load scale for virtual environments. J. Comput. Assist. Learn. 2021, 37, 183–196.

- Wang, W.; Cheng, Y.; Lee, H.; Lin, C.; Huang, Y. Impact of anxiety and confidence in virtual reality-mediated learning transferred to hands-on tasks. J. Comput. Assist. Learn. 2023, 39, 1368–1381.

- Ragan, E.D.; Bowman, D.A.; Kopper, R.; Stinson, C.; Scerbo, S.; McMahan, R.P. Effects of Field of View and Visual Complexity on Virtual Reality Training Effectiveness for a Visual Scanning Task. IEEE Trans. Vis. Comput. Graph. 2015, 21, 794–807.

- Hidding, J.; Bucher, J.; Heiliger, C.; Andrade, D.; Trupka, L.; Halmen, M.; Werner, J.; Karcz, K.; Frank, A. Laparoscopy training of novices with complex curved instruments using 2D- and 3D-visualization. Langenbeck’s Arch. Surg. 2024, 409, 109.

- Koller, S.; Ebert, L.C.; Martinez, R.M.; Sieberth, T. Using virtual reality for forensic examinations of injuries. Forensic Sci. Int. 2019, 295, 30–35.

| Authors | Journal/Proceeding | Publisher | Year | Reference |
|---|---|---|---|---|
| Napolitano et al. | MDPI Sensors | MDPI | 2018 | [15] |
| Attard et al. | IEEE IST Conference | IEEE | 2018 | [16] |
| Omer et al. | Structure and Infrastructure Engineering | Taylor & Francis | 2019 | [12] |
| Bacco et al. | IEEE Access | IEEE | 2020 | [17] |
| Omer et al. | Journal of Bridge Engineering | ASCE | 2021 | [13] |
| Luleci et al. | Automation in Construction | Elsevier | 2024 | [14] |
| Yiğit and Uysal | Measurement | Elsevier | 2025 | [18] |

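| VR Scene | Camera Location | Theme | Common Features | Transition Hotspots |
|---|---|---|---|---|
| Scene 0 | L0 | Bridge overview; link to other scenes | Pre-defined point of view; background ambient sound | Visit Scenes 1, 2, 3, and 4 |
| Scene 1 | L1 | Detailed inspections of a localized area at a close distance | Pre-defined point of view; background ambient sound | Visit Scenes 0 and 2 |
| Scene 2 | L2 | Detailed inspections of a localized area at a close distance | Pre-defined point of view; background ambient sound | Visit Scenes 0, 1, and 3 |
| Scene 3 | L3 | Detailed inspections of a localized area at a close distance | Pre-defined point of view; background ambient sound | Visit Scenes 0, 2, and 4 |
| Scene 4 | L4 | Detailed inspections of a localized area at a close distance | Pre-defined point of view; background ambient sound | Visit Scenes 0 and 3 |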
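The transition hotspots listed in the scene table can be read as an undirected graph of scenes. The sketch below encodes the table as an adjacency map and checks two properties a scene designer might care about: that every hotspot has a return link, and how many hops separate two scenes. The names `TRANSITIONS`, `is_symmetric`, and `shortest_route` are hypothetical and are not part of the VR authoring software used in the study.

```python
from collections import deque
from typing import Dict, List, Optional

# Scene index -> scenes reachable through its transition hotspots,
# transcribed from the scene table above.
TRANSITIONS: Dict[int, List[int]] = {
    0: [1, 2, 3, 4],
    1: [0, 2],
    2: [0, 1, 3],
    3: [0, 2, 4],
    4: [0, 3],
}


def is_symmetric(graph: Dict[int, List[int]]) -> bool:
    """Return True if every hotspot A -> B has a matching hotspot B -> A."""
    return all(a in graph.get(b, []) for a, nbrs in graph.items() for b in nbrs)


def shortest_route(
    graph: Dict[int, List[int]], start: int, goal: int
) -> Optional[List[int]]:
    """Breadth-first search for a route with the fewest scene transitions."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in graph[path[-1]]:
            if nxt not in visited:
                visited.add(nxt)
                queue.append(path + [nxt])
    return None  # goal is unreachable from start


if __name__ == "__main__":
    print(is_symmetric(TRANSITIONS))
    print(shortest_route(TRANSITIONS, 1, 4))
```

Because Scene 0 links to every other scene, any two scenes are at most two transitions apart, which is consistent with its role as the overview scene.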

| Terminology | Definition | Source |
|---|---|---|
| Ground truth | UAV-captured image taken directly from the field during site inspection. | UAV camera |
| Rendered | Image generated in Blender from the VR model under a matched camera position. | VR model |
| Recovered rendered | Rendered image after geometric transformation and histogram matching to align with the ground truth image. | Transformed in MATLAB [56] |
| Deviation map | Color-coded map showing per-pixel intensity difference between recovered rendered and ground truth images. | Extracted from registered images |
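As an illustration of two terms defined in the table above, the following sketch performs histogram matching and computes a per-pixel deviation map on small 8-bit grayscale images. The study performs these steps in MATLAB [56]; this pure-Python version is only an assumed, simplified equivalent. It presumes the geometric transformation has already been applied, so both images are the same size and pixel-aligned. The function names `match_histogram` and `deviation_map` are hypothetical.

```python
from typing import List

Image = List[List[int]]  # 8-bit grayscale image, row-major


def _cdf(img: Image) -> List[float]:
    """Cumulative distribution of intensity levels 0..255."""
    counts = [0] * 256
    for row in img:
        for value in row:
            counts[value] += 1
    total = sum(counts)
    cdf, running = [], 0
    for count in counts:
        running += count
        cdf.append(running / total)
    return cdf


def match_histogram(source: Image, reference: Image) -> Image:
    """Remap source intensities so their distribution follows the reference."""
    src_cdf, ref_cdf = _cdf(source), _cdf(reference)
    lookup, r = [], 0
    for s in range(256):
        # Smallest reference level whose CDF reaches the source CDF at s.
        while r < 255 and ref_cdf[r] < src_cdf[s]:
            r += 1
        lookup.append(r)
    return [[lookup[value] for value in row] for row in source]


def deviation_map(image_a: Image, image_b: Image) -> Image:
    """Per-pixel absolute intensity difference between two aligned images."""
    if len(image_a) != len(image_b) or any(
        len(ra) != len(rb) for ra, rb in zip(image_a, image_b)
    ):
        raise ValueError("images must have identical dimensions")
    return [
        [abs(a - b) for a, b in zip(ra, rb)]
        for ra, rb in zip(image_a, image_b)
    ]


if __name__ == "__main__":
    rendered = [[0, 0], [10, 10]]            # dark, hypothetical rendered image
    ground_truth = [[100, 100], [200, 200]]  # brighter, hypothetical field image
    recovered = match_histogram(rendered, ground_truth)
    print(recovered)
    print(deviation_map(recovered, ground_truth))
```

The color coding mentioned in the table would be a display step applied to the returned difference values and is omitted here.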

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kong, X.; Pettijohn, T.F., II; Torikyan, H. From Photogrammetry to Virtual Reality: A Framework for Assessing Visual Fidelity in Structural Inspections. Sensors 2025, 25, 4296. https://doi.org/10.3390/s25144296
