Abstract

This paper proposes a deeply integrated model called CAS-GNN, aiming to solve the collaborative path-planning problem for multi-agent vehicles operating in dynamic environments. Our proposed model integrates CAS-UNet and Graph Neural Network (GNN), and, by introducing a dynamic edge enhancement module and a dynamic edge weight update module, it improves the accuracy of obstacle boundary recognition in complex scenarios and adaptively changes the influence of different edges during the information transmission process. We generate data through online trajectory optimization to enhance the model’s adaptability to dynamic environments. Simulation results show that our proposed CAS-GNN model has good performance in path planning. In a dynamic scenario involving six vehicles, our model achieved a success rate of 92.8%, a collision rate of 0.0836%, and a trajectory efficiency of 64%. Compared with the traditional A-GNN model, our proposed CAS-GNN model improves the planning success rate by 2.7% and the trajectory efficiency by 8%, while reducing the collision rate by 23%.

1. Introduction

Path planning plays an important role in intelligent transportation systems [1], which makes it a crucial aspect of autonomous driving technology. With the development of autonomous driving technology, research on single-vehicle path planning is gradually becoming more mature. Early research on traditional single-vehicle planning focused on using conventional algorithms to identify optimal or sub-optimal paths in known environments. Among the classic algorithms in the path-planning field, the A* algorithm has demonstrated excellent performance on rasterized maps [2]; it is a classic algorithm for solving single-vehicle planning problems and is especially suitable for finding the shortest path in a static environment, with a high success rate and efficiency. On this basis, references [3,4,5] propose improved A* algorithms to address the issues of low exploration efficiency, excessive turns, and rough paths of the A* algorithm, respectively.

Sampling-based searching algorithms, such as the Rapidly-exploring Random Trees (RRT) algorithm and the RRT* algorithm, have also been used to solve the single-vehicle path planning problem [6,7,8]. These sampling-based searching algorithms can rapidly generate candidate paths in high-dimensional complex spaces and gradually converge through optimization. Based on this, references [9,10,11] propose improved RRT* algorithms to address the slow convergence speed of the RRT* algorithm.

Reference [12] proposed a path planning algorithm based on Model Predictive Control (MPC), which considers the vehicle dynamics model and realizes continuous constraints in path planning. In reference [13], a metaheuristic algorithm was used to solve the path planning problem. In the early research, metaheuristic algorithms included fuzzy logic algorithms [14], simulated annealing algorithms [15], etc. Since then, they have expanded to encompass advanced methods such as neural networks [16], genetic algorithms [17], ant colony optimization [18], etc. Metaheuristic algorithms possess strong generalization ability and can adapt to various environments.

With the development of multi-vehicle collaboration technology, multi-unmanned vehicle systems (MUV) have been gradually applied in complex scenarios such as logistics and urban transportation [19]. Thus, the multi-vehicle routing problem has attracted the attention of researchers. Multi-vehicle path planning involves a tradeoff between local obstacle avoidance for each vehicle and overall collaborative efficiency. In recent years, researchers have proposed four kind of algorithms, i.e., the optimization-based planning algorithm [20], sampling-based search algorithm [21,22,23], deep reinforcement learning-based algorithm [24,25,26], and hybrid architecture algorithm. They are all considered to solve the multi-vehicle path planning problem.

The optimization-based algorithm formulates objective functions which include path smoothness, energy consumption, and safe obstacle-avoidance distances. It also establishes constraints, such as vehicle dynamic limitations and collision avoidance. The algorithm then employs mathematical optimization algorithms to determine the optimal path. Wang et al. proposed an MPC path planning and control model [20] which can adaptively adjust the obstacle avoidance weight, thereby solving the problems of easy planning failure and poor obstacle avoidance performance of MPC caused by changes in external parameters.

Sample-based search algorithms identify optimal paths by generating and evaluating candidate routes through a configuration space. These algorithms employ random or heuristic strategies to create samples and then use cost evaluation to select the best solution. Representative algorithms include Rapidly-exploring Random Tree (RRT*) and its improved versions (e.g., Dynamic RRT*, and algorithms derived from A*). Zhao et al. proposed the Dynamic RRT algorithm [21]. They constructed a heuristic sampling subset through path length estimation and decomposed the path optimization problem by integrating the concept of dynamic programming. This algorithm balances convergence speed and path length when navigating environments with randomly distributed obstacles. Researchers have studied conflict detection and resolution strategies to more effectively address conflict problems in multi-vehicle path planning. Time-window-based conflict detection algorithms [22] can predict potential collision risks of vehicles at future time points in real time. The Conflict-Based Search (CBS) algorithm [23] improves problem-solving efficiency by decoupling path planning from conflict resolution in a hierarchical manner.

The hybrid architecture algorithm uses optimization techniques to ensure that vehicle dynamics and safety constraints are strictly enforced during the planning phase. Xu et al. proposed a hybrid planner [27]. The V-Hybrid A* algorithm was employed for global path searches. The optimization algorithm was then used to refine trajectories. This algorithm generated safe, efficient, and smooth cooperative trajectories for multiple vehicles within an unstructured conflict area.

Neural networks improve a system’s ability to adapt to dynamic and unstructured environments. This capability enables the system to better adapt to dynamic and unstructured environments. Li et al. proposed a geometric GNN (GeoGNN) [28] that allows each robot to process sensory data from its neighbors. This model can achieve path planning without a global map and significantly improves path efficiency in complex scenarios. Among these neural network approaches, attention-based Graph Neural Networks (GNNs) show remarkable value in multi-vehicle path planning. GNN models represent each vehicle and its surrounding environment as a graph structure, where the edges represent interactions among vehicles. The network automatically learns the priority of interactions among different vehicles by using an attention mechanism. Liu et al. proposed a trajectory prediction framework based on a multi-agent, multi-modal graph attention isomorphic network (GAIN) [29] to effectively understand and aggregate long-term interactions among agents. Shi et al. created the UniMP model [30], which uses a graph transformer to effectively propagate information throughout the network. Ma et al. proposed a graph neural network A-GNN [31] based on the U-Net architecture and attention mechanism, which achieved multi-vehicle predictive navigation control.

In practical applications, multi-vehicle path planning often integrates multi-source data, including vision, LiDAR, and map information. It also needs a unified decision-making framework based on such data. Existing multi-vehicle dynamic path planning approaches have several problems and limitations. Traditional algorithms relying on searches or optimization perform well in single-vehicle planning scenarios. However, when dealing with multi-vehicle collaboration and dynamic environments, they struggle to balance global optimality, real-time responsiveness, and vehicle dynamics constraints. Metaheuristic algorithms typically suffer from slow convergence and result in instability [32]. Deep reinforcement learning-based algorithms demand substantial amounts of training data and are susceptible to fluctuations during the training process [33], thereby yielding less-than-optimal practical outcomes. Furthermore, current algorithms still show relatively low planning success rates and trajectory efficiency in multi-vehicle, unconstrained scenarios. Their collision avoidance rates also need further enhancement.

In addressing the aforementioned problems, this paper introduces a dynamic path planning model for multiple unmanned autonomous vehicles. This method integrates CAS-UNet [34] with Graph Neural Networks (GNNs) and is referred to as CAS-GNN. Building upon this foundation, we incorporate dynamic edge enhancement to improve the accuracy of obstacle boundary recognition using the CAS-GNN model in complex scenarios. Additionally, the model uses a dual-channel feature interaction strategy coupled with a dynamic edge weight generation mechanism. We use online trajectory optimization to generate training data, strengthening the model’s generalization capability in intricate obstacle avoidance situations. Our simulation results indicate that, compared to the conventional A-GNN model, the proposed CAS-GNN model achieves a success rate of 92.8% in path planning across six vehicle dynamics scenarios. Additionally, it reduces the collision rate by 23% and improves trajectory efficiency by 8%. This model significantly improves collaborative decision-making capabilities among multi-agent vehicles operating in dynamic and complex environments. By leveraging a GNN constructed with an attention mechanism based on the CAS-UNet model, we demonstrate improved performance in dynamic path planning for multiple vehicles within unconstrained scenarios.

2. Problem Model

In this paper, we assume an unconstrained environment without traffic rules or routes, which includes N vehicles and N obstacles. The i-th vehicle and the i-th obstacle nodes are represented as , respectively, where are the initial coordinates, is the initial orientation, is the vehicle’s speed, , are the target coordinates of the vehicle, and r is the radius of the obstacle. Since the obstacles are stationary, the parameter of the obstacle is set as 0, and the target coordinate of the obstacle is set as . The topological relationship of these nodes are constructed with a hybrid connection mode. The goal of this paper is to establish a Graph Neural Network (GNN) model based on the CAS-UNet architecture with an attention mechanism to safely and effectively control multiple vehicles, allowing them to reach their target positions in an unconstrained environment.

The vehicle model in this paper is based on the Kinematic Bicycle Model. We assume that the vehicle is a rigid body moving on a two-dimensional plane; the steering radius and the nonlinear characteristics of the tires are ignored. From time T to T + 1, the motion equations can be described as:

where, is the steering angle of the vehicle’s front wheels, represents the vehicle’s acceleration, is the time step, and and are adjustable parameters representing speed damping and steering response coefficients, respectively.

Based on Equation (1), two objectives should be achieved during the dynamic path planning process, i.e., guiding the vehicle to the target position, and preventing collisions with other vehicles or obstacles. Let and be the cost of a vehicle to obstacle collision and the cost of a vehicle to vehicle collision, respectively, which can be denoted as:

where is the predicted time step, and is the position vector , where represents the position vector of the -th vehicle at time , represents the position vector of the -th obstacle in the scene, represents the radius of the -th obstacle, and represents the safety distance of the obstacle, is the indicator function showing whether the -th vehicle and the -th obstacle collide, with a value of 1 if a collision occurs, and 0 otherwise, and represents the weight coefficient of the obstacle collision cost. Let be the target cost to penalize the distance between the current position and the target position of vehicles, which can be denoted as:

where represents the target position vector of the i-th vehicle, represents the direction angle of the -th vehicle at time , represents the target direction angle of the i-th vehicle, represents the weight coefficient for position error, and represents the weight coefficient for angle error.

Finally, we use the Sequential Least Squares Programming (SLSQP) algorithm to iteratively search for the minimum value of the objective function under a given constraint.

Our model uses two core constraints, and , to achieve effective collision avoidance. Specifically, is responsible for maintaining a safe distance between vehicles, while is used to maintain a safe distance between vehicles and obstacles. In addition, we introduce the constraint, which guides each vehicle to approach its preset target position and orientation as closely as possible. The trajectory smoothness is optimized by imposing constraints on the vehicle’s steering angle and acceleration.

3. CAS-GNN Path Planning

We propose a graph neural network model named CAS-GNN. This model guides each vehicle to its destination while preventing collisions with other vehicles and obstacles.

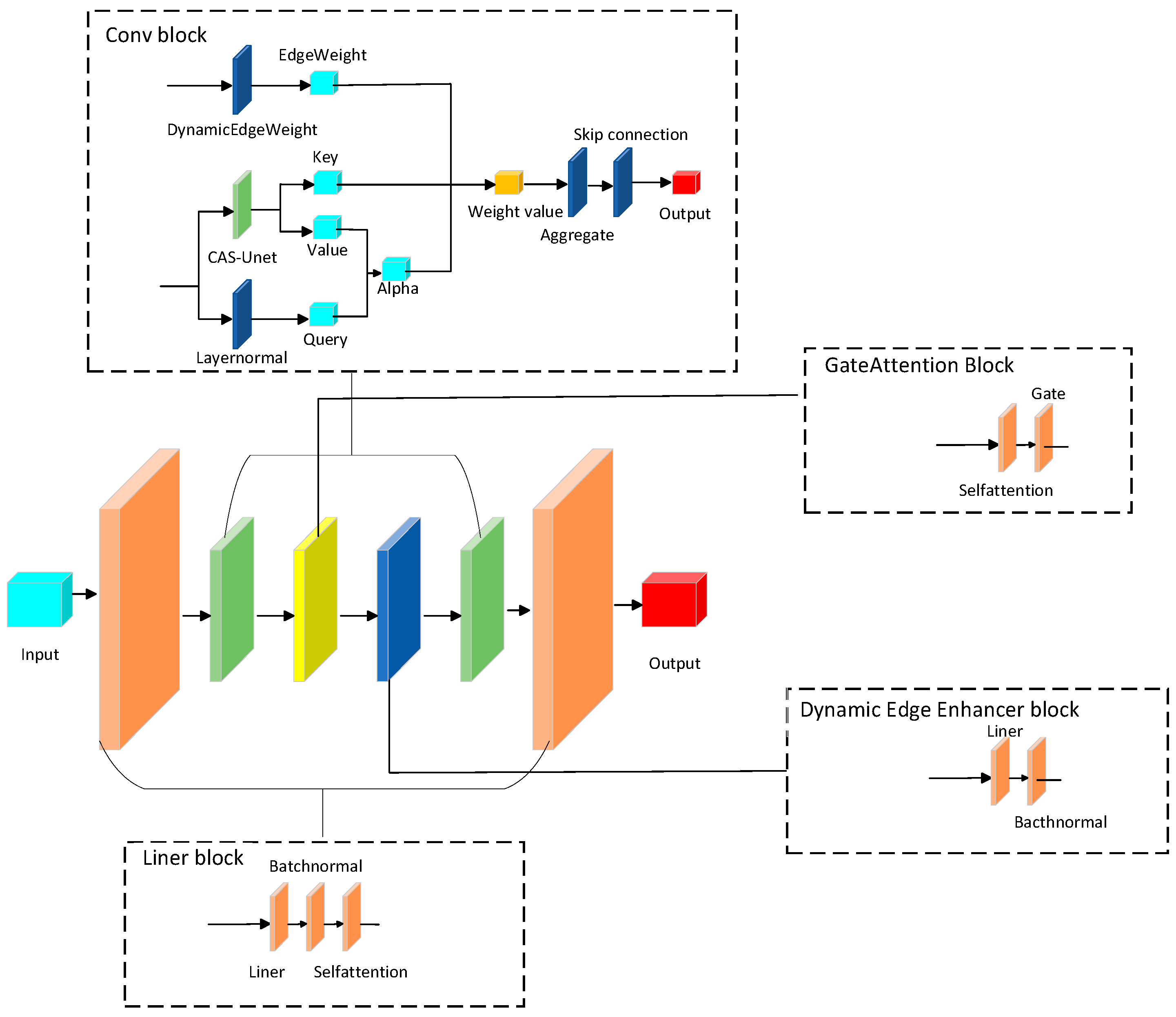

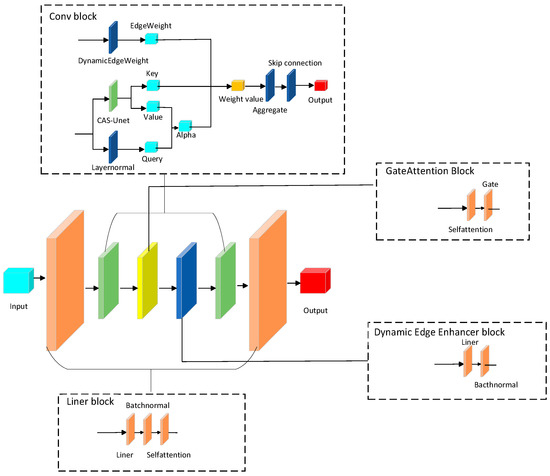

The CAS-GNN model integrates the CAS-UNet architecture, which enhances the network’s adaptability and generalization capabilities. This architecture includes an attention gate module, a cross-channel attention mechanism, and a dynamic edge weight generation module. Compared to the traditional U-Net, our model demonstrates greater flexibility and expressiveness when processing graph data. The model synergizes graph neural networks, attention mechanisms, and physical constraints to facilitate dynamic scene modeling and prediction. The proposed CAS-GNN model is illustrated in Figure 1.

Figure 1.

CAS-GNN model.

In this paper, we utilize a dynamic heterogeneous graph to model the traffic scene. The eight-dimensional input node features of each node, denoted as , are transformed into higher-dimensional latent vectors through a linear transformation followed by an activation function (ReLU). This process can be expressed as:

where is a trainable weight matrix.

Our proposed CAS-GNN model comprises L residual graph layers. Each layer incorporates four essential modules: graph convolution, gated multi-head attention, dynamic edge enhancement, and residual feature fusion. Within these layers, nodes employ an attention-based mechanism to effectively capture information from neighboring entities. The inclusion of residual connections within the framework facilitates the efficient transfer of prior information, thereby enabling the effective integration of multimodal data.

3.1. Graph Convolutional Layer

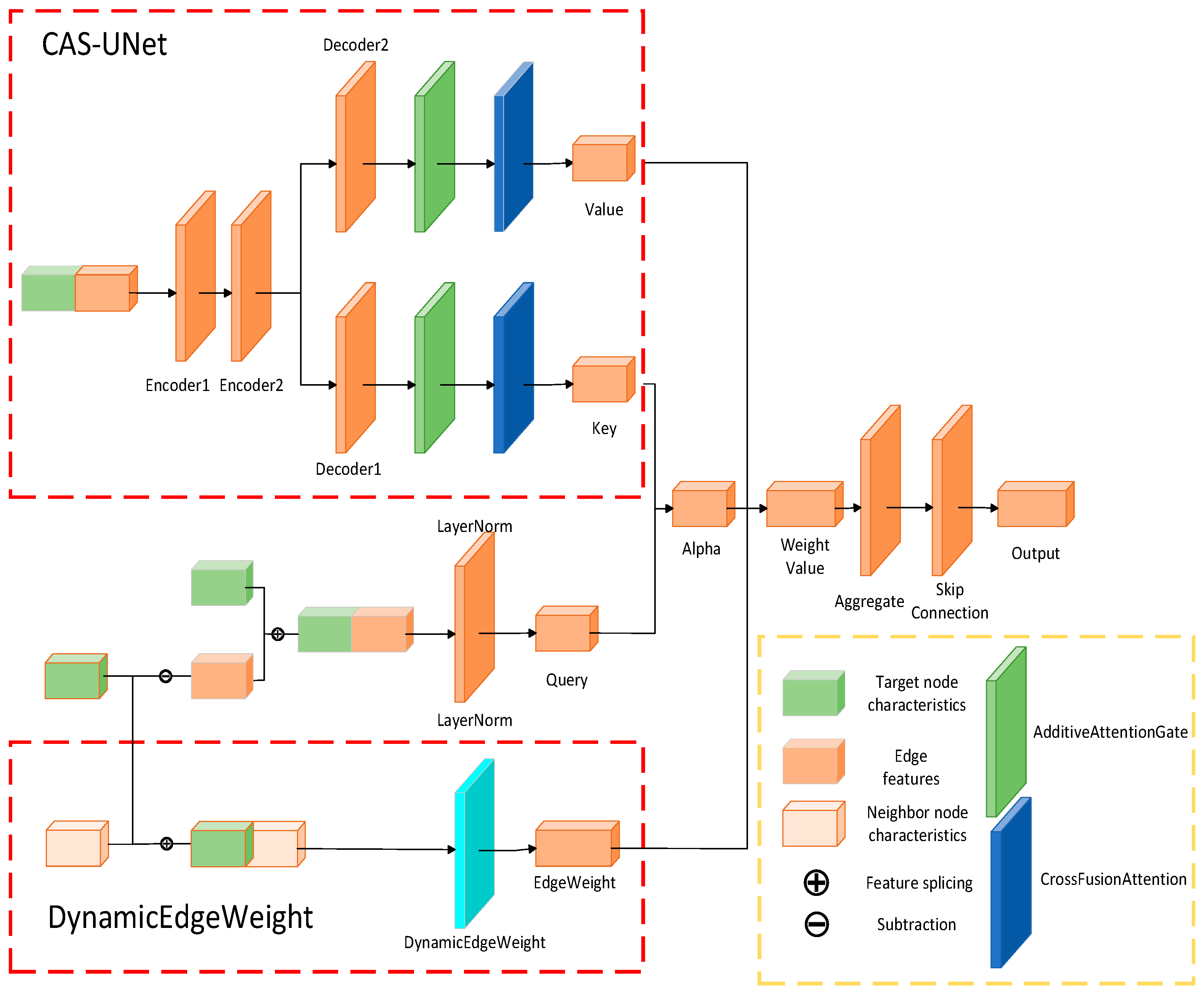

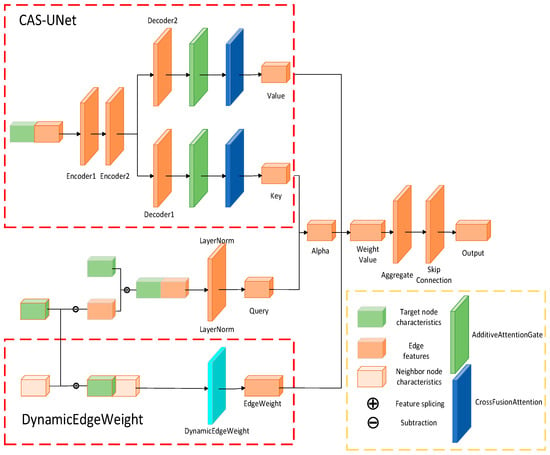

The graph convolutional layer is alternately composed of graph convolution blocks and self-attention layers. The graph convolution block consists of two parts: the CAS-UNet module and the dynamic edge weight update module, as shown in Figure 2.

Figure 2.

The Graph Convolution Block Structure.

The graph convolution block extracts information from neighboring nodes while integrating edge weights and node features. This process enhances the capability of feature representation through the improved CAS-UNet structure, ultimately yielding updated features for the nodes. The procedure for convoluting blocks is outlined as follows.

- Calculate dynamic edge weights.

For the edge , the dynamic edge weight can be calculated as

where is a multilayer perceptron (Multilayer, MLP), which consists of three linear transformations, i.e., LayerNorm, ReLU activation, and Sigmoid. is the Sigmoid function to ensure that the output is within the range [0, 1].

- 2.

- Construct the key, value, and query of the attention mechanism.

Let be the difference between the node features and the neighbor nodes features, which can be denoted as

By layer normalization, query can be obtained as

Then, by using CAS-UNet scheme, we can calculate the key and value:

CAS-UNet adopts an improved encoder-decoder architecture, where the encoder extracts node features through linear blocks and the decoder reconstructs these features and generates predictions. CAS-UNet promotes feature gating and fusion by introducing an Additive Attention Gate (AAG). Additionally, the model optimizes the interrelationship between feature channels through a cross-fusion channel attention module to enhance the effectiveness of fusion.

- 3.

- Calculate the attention coefficients. First, the model uses the scaled dot-product to calculate the unnormalized attention scores:

Then, for each target node i, normalize over all neighboring nodes using softmax:

Attention coefficient after applying Dropout :

- 4.

- Use the attention coefficients and dynamic edge weights to weight the values and construct the messages.

- 5.

- Use the Message Passing framework to aggregate information from neighbors:

After obtaining the aggregated result , a node’s own skip connection is also introduced, and the node’s intrinsic information is obtained through linear mapping:

where is a weight matrix that represents the transformation from input features to output features, and is a bias vector that adjusts the output of the linear transformation.

- 6.

- Fusion of the β gating mechanism. The β gating mechanism provides an adaptive fusion strategy which dynamically adjusts the contribution of information by learning the gating parameter :

The final output of the module is :

The output undergoes a self-attention layer and a series of residual operations, resulting in the final output :

where ReLU is the activation function, BN refers to the batch normalization layer, and represents the features obtained from after passing through a self-attention layer.

3.2. Gated Multi-Head Attention Mechanism

Calculate the attention coefficient :

where represents the input features of node used for attention computation in the current module, denotes the transformation matrix for the query, which converts the features of node into a query vector, represents the input features of the neighboring node used for attention computation, denotes the transformation matrix for the key, and represents the vector dimension for each attention head, used to scale the dot-product attention. Softmax is an activation function that normalizes a vector of values into a probability distribution, where the sum of all probabilities equals 1.

The gating vector can be calculated as

where denotes the weight matrix used to compute the gating vector, and represents the bias term used to adjust the output of the linear transformation.

The fused information is used to update the node information:

where is the value transformation matrix, represents the scaling factor, set to 0.1 in this paper, and denotes the Hadamard product.

3.3. Dynamic Edge Enhancement

This layer dynamically generates edge features based on the current node features to enhance edge information. It also computes the updated edge values (edge features) using node features while simultaneously adjusting the node features.

- 1.

- Calculate the edge feature :

- 2.

- Map the concatenated features into the node-dimensional space using an MLP. Let be the mapped edge feature:

- 3.

- Calculate the average of the edge features of each node’s neighbors. For each node , the average edge feature of its neighboring nodes is denoted as :where is the set of neighboring nodes of node , indicating all the neighboring nodes connected to node , denotes the number of neighboring nodes, and is the edge feature mapping value from neighboring node to node .

- 4.

- By linear transformation and normalization on the current node features, we can obtain the updated intrinsic featurewhere is the weight matrix, and bnb_nbn is the bias vector.

- 5.

- By integrating the edge features into the node features, the final node feature can be obtained as

The CAS-Unet model adopts a hierarchical update strategy, alternating between edge enhancement and gated attention every two layers. The final control command is constrained by a dynamic range. This architecture employs CAS-UNet for multi-scale feature extraction, with the gating mechanism stabilizing the training process. The dynamic edge weights adaptively adjust the interaction intensity. The entire process can be simplified as:

where represents the node features after passing through all the previous linear layers, graph convolutional layers, and the gated attention and dynamic edge enhancement added at appropriate levels.

The final output is the vehicle control command:

where is the process of graph convolutional layer in Section 3.1, and are the coefficients of the final linear mapping layer, and tanh is the activation function.

4. Simulation

We create a simulation environment with multiple vehicles and obstacles to evaluate the CAS-GNN model we proposed. In the simulation, we assess its trajectory efficiency and planning success rate for path planning, as well as its obstacle avoidance performance.

4.1. Definition of the Dataset

The initial positions of the vehicles are distributed within a ±15 m range centered around the origin. The target point is located in the symmetric direction relative to the center of the initial positions, and the speed follows a normal distribution denoted as N(2.5, 5). Obstacles are randomly distributed within ±7 m on both sides of the vehicle’s driving path. Considering that the radii of these obstacles range from 1 to 3 m, and that the actual minimum distance between the vehicle and any obstacle is approximately 1 meter, it is required that obstacles be situated at least 5 m away from both start and end points. The input data for vehicles includes their starting position, starting angle, speed, and end position. Obstacle data encompasses their position and radius.

The dataset used for training is a simulation dataset, including 1–3 vehicles and 0–4 static obstacles. A total of approximately 20,961 trajectories were generated, with each trajectory consisting of 120 time steps. These labels were generated via the cost function presented in formula 2–5. By optimizing these objectives, the generated control commands are utilized as the labels for the model. The generated label data is partitioned into a training set and a validation set, with the ratio of the training set to the validation set being 4:1. Since that obstacles with various shapes can be assembled with using circular obstacles of different sizes, this experiment focuses on circular obstacles case.

4.2. CAS-GNN Training Details and Simulation Environment Setup

The training dataset for the CAS-GNN model is constructed as follows. We construct the multi-vehicle and multi-obstacle scene data, then we generate the environment information matrix and output control input-output pairs by data augmentation. The initial learning rate is set to 0.001. In the case that the validation loss does not decrease for 10 epochs, the learning rate is multiplied by 0.2. The minimum of learning rate is set to 0.00000001. The weight decay coefficient is set to 0.000005, the batch size is set to 4096, and early stopping is employed (training is stopped after 15 epochs without improvement). During the training procedure, the loss function computes the weighted mean square error (MSE) between the predicted value and the true value. Specifically, different weights are assigned to the error of each control command in accordance with the time step. Generally speaking, a higher weight is assigned to the earlier time step. In the presence of static obstacles, an additional loss term is calculated and weighted before being incorporated into the total loss. The final loss function yields the weighted total loss, and the model is trained by minimizing this loss value. The hardware configuration is shown in Table 1:

Table 1.

The configuration of the hardware environment where the experiment is located.

The following three metrics, which can evaluate navigation accuracy, safety, and path quality, are used to assess the performance of the proposed scheme.

- (PPSR) Path Planning success rate: The planning success rate is the proportion of collision-free arrivals that meet endpoint position deviation ≤ 2 m and heading angle deviation ≤ 0.1 radians, obtained by dividing the number of successfully planned scenarios by the total number of scenarios.

- Collision rate: This refers to the number of vehicle-obstacle/vehicle-vehicle collisions per unit travel distance, with geometric collisions accurately detected using the Separating Axis Theorem, obtained by dividing the number of collision scenes by the total number of scenes.

- Trajectory efficiency, which is the ratio of the ideal straight-line distance to the actual travel distance in successful cases. The trajectory efficiency reflects the optimality of the path.

4.3. Results

The path planning success rate (PPSR), collision rate, and trajectory efficiency of the proposed CAS-GNN model and the A-GNN model in various vehicle-obstacle scenarios are presented in Table 2.

Table 2.

The path planning success rate (PPSR), collision rate, and trajectory efficiency of the CAS-GNN model and the A-GNN model in various vehicle-obstacle scenarios.

Both models exhibit a decrease in PPSR as the number of obstacles or vehicles increases. However, when there is only one or two vehicles present, the PPSR of the CAS-GNN model is slightly lower than that of the A-GNN model. This discrepancy can be attributed to the inclusion of self-loop edges within the edge enhancement module of the CAS-GNN model, which becomes more pronounced with a smaller number of vehicles. Additionally, each vehicle has limited effective interaction relationships; thus, the multi-head mechanism may lead to dispersed attention. As the number of vehicles increases, it is observed that the CAS-GNN model achieves a better PPSR compared to the A-GNN model. Table 2 comparison of planning success rate, collision rate, and trajectory efficiency in scenarios with different obstacle densities.

Compared with the A-GNN model, the CAS-GNN model can obtain a lower collision rate and better trajectory efficiency.

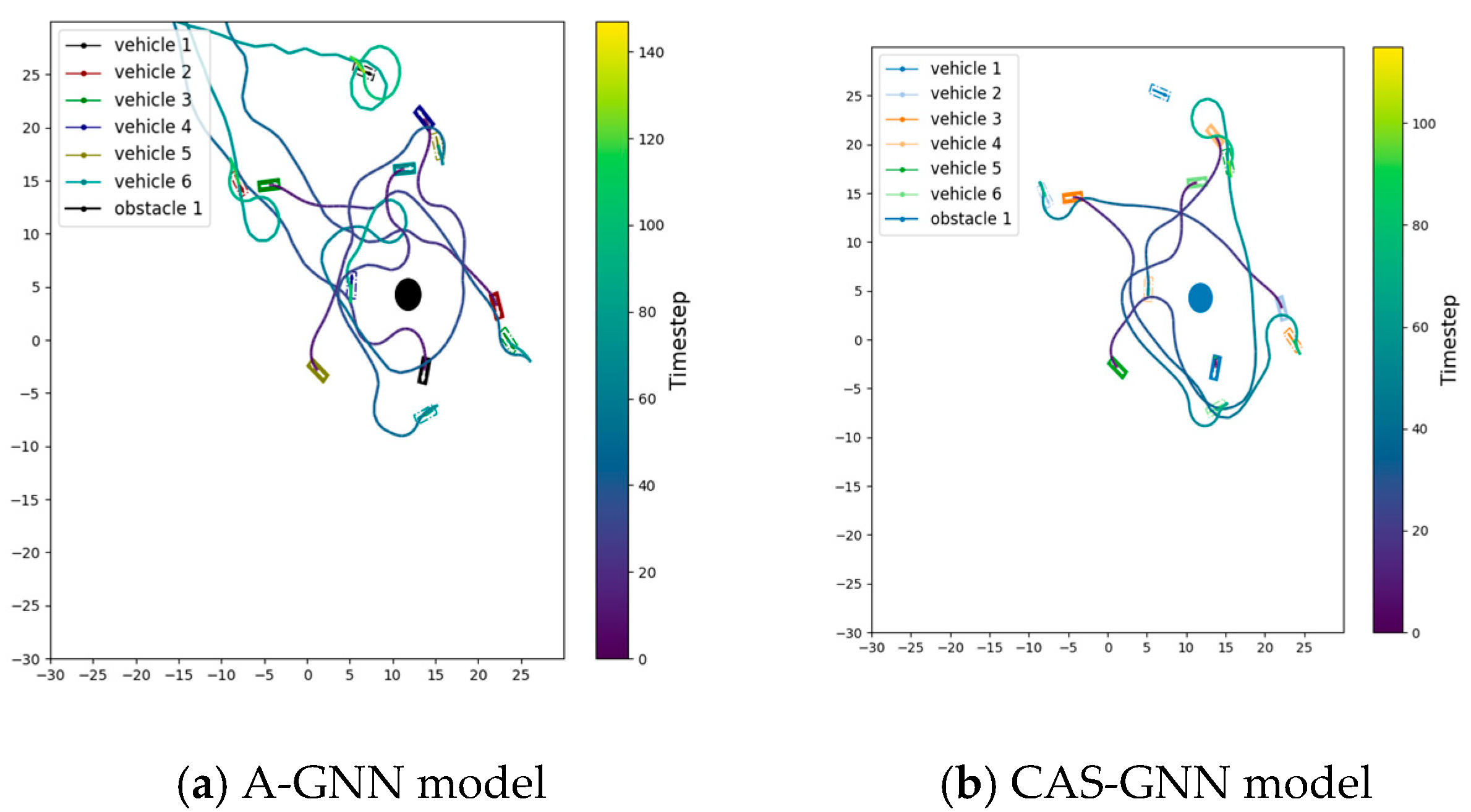

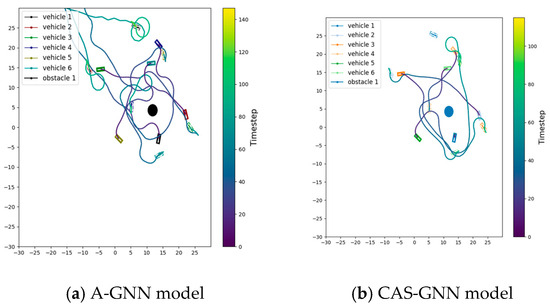

The trajectory graph of the two models with six vehicles and one obstacle is shown in Figure 3. As shown, the trajectory length of the CAS-GNN model is significantly shorter than that of the A-GNN model.

Figure 3.

Trajectory graph of the A-GNN model and the proposed CAS-GNN. It can be clearly observed that the CAS-GNN model proposed in this paper outperforms the A-GNN model in terms of efficiency.

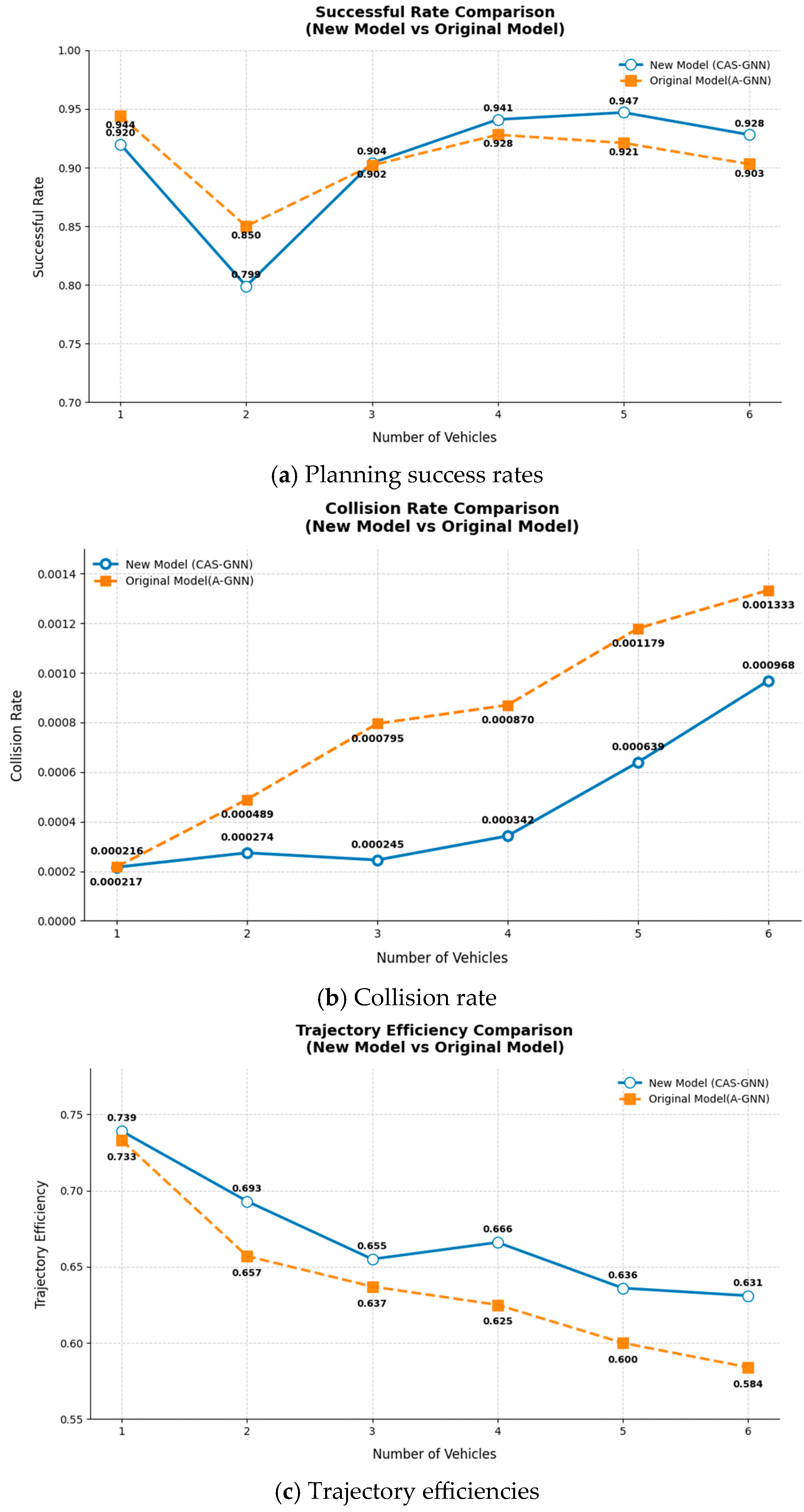

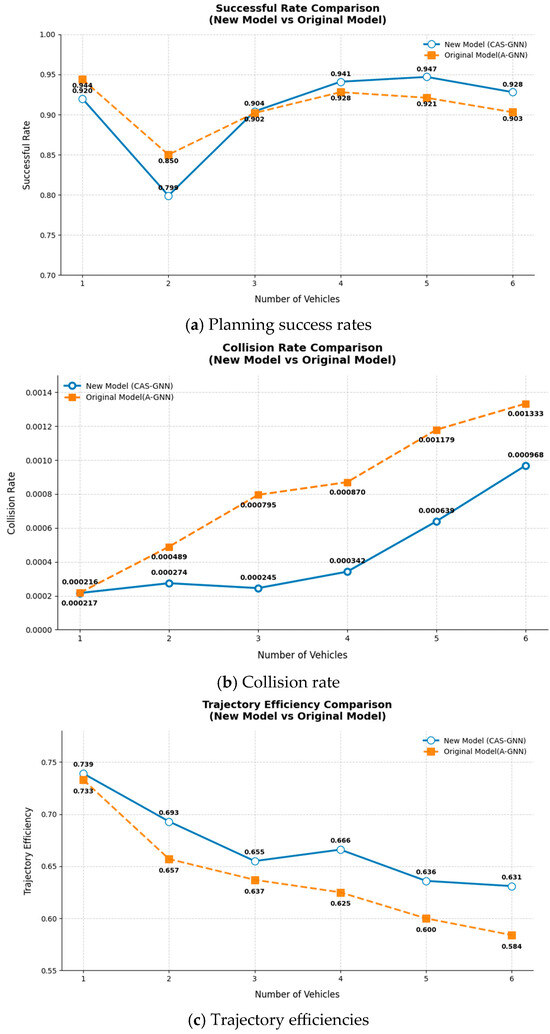

In the context of varying numbers of vehicles, Figure 4 presents a comparison of planning success rates, collision rates, and trajectory efficiencies between the CAS-GNN and A-GNN models. For the scenario involving six vehicles, the CAS-GNN model demonstrates an increase in the planning success rate of 2.7%, a reduction in the collision rate of 23%, and an enhancement in trajectory efficiency of 8%. The proposed CAS-GNN model is capable of generating smooth and safe paths, showcasing remarkable generalization ability.

Figure 4.

Comparisons of planning success rates, collision rates, and trajectory efficiencies between the CAS-GNN and A-GNN models with different numbers of vehicles.

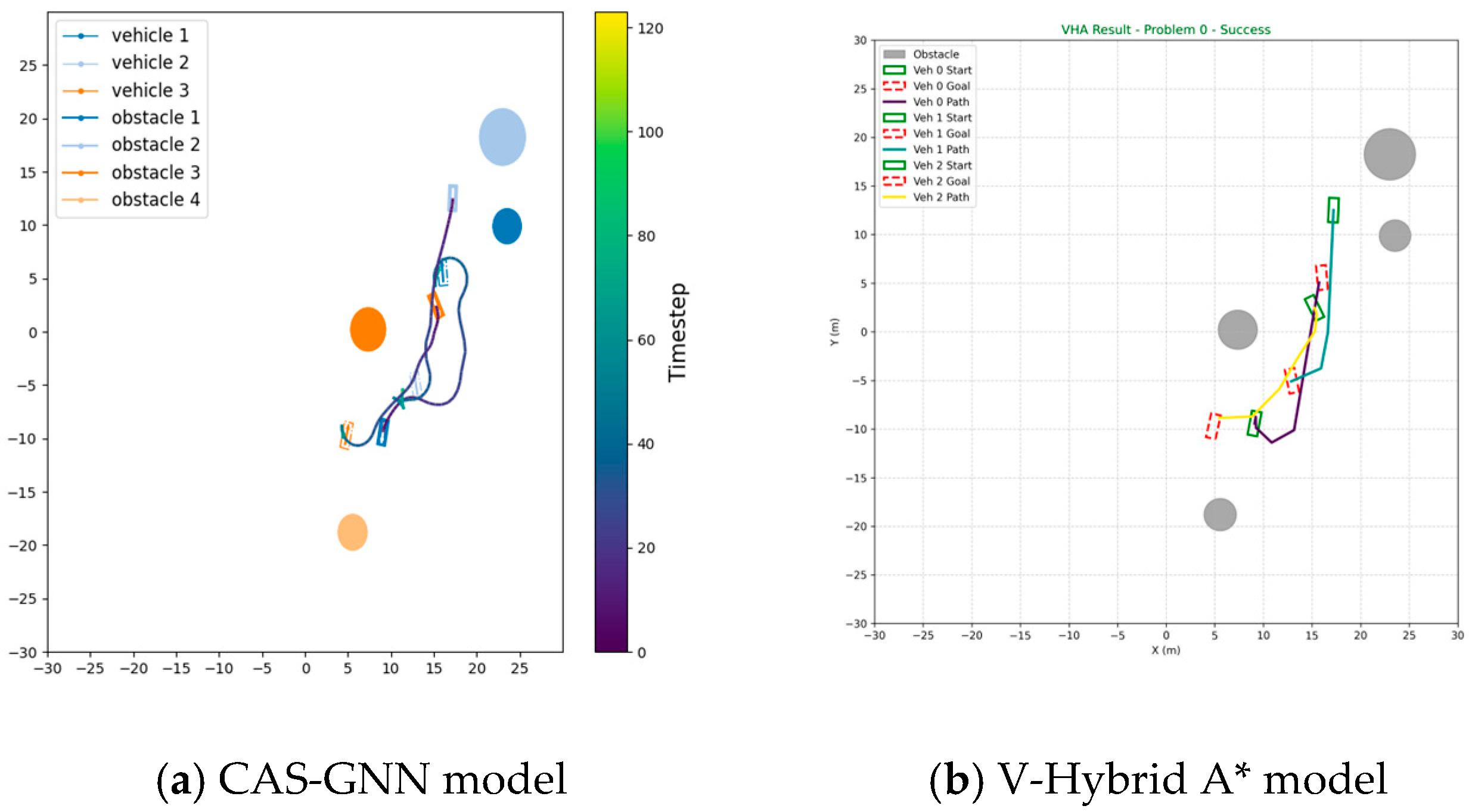

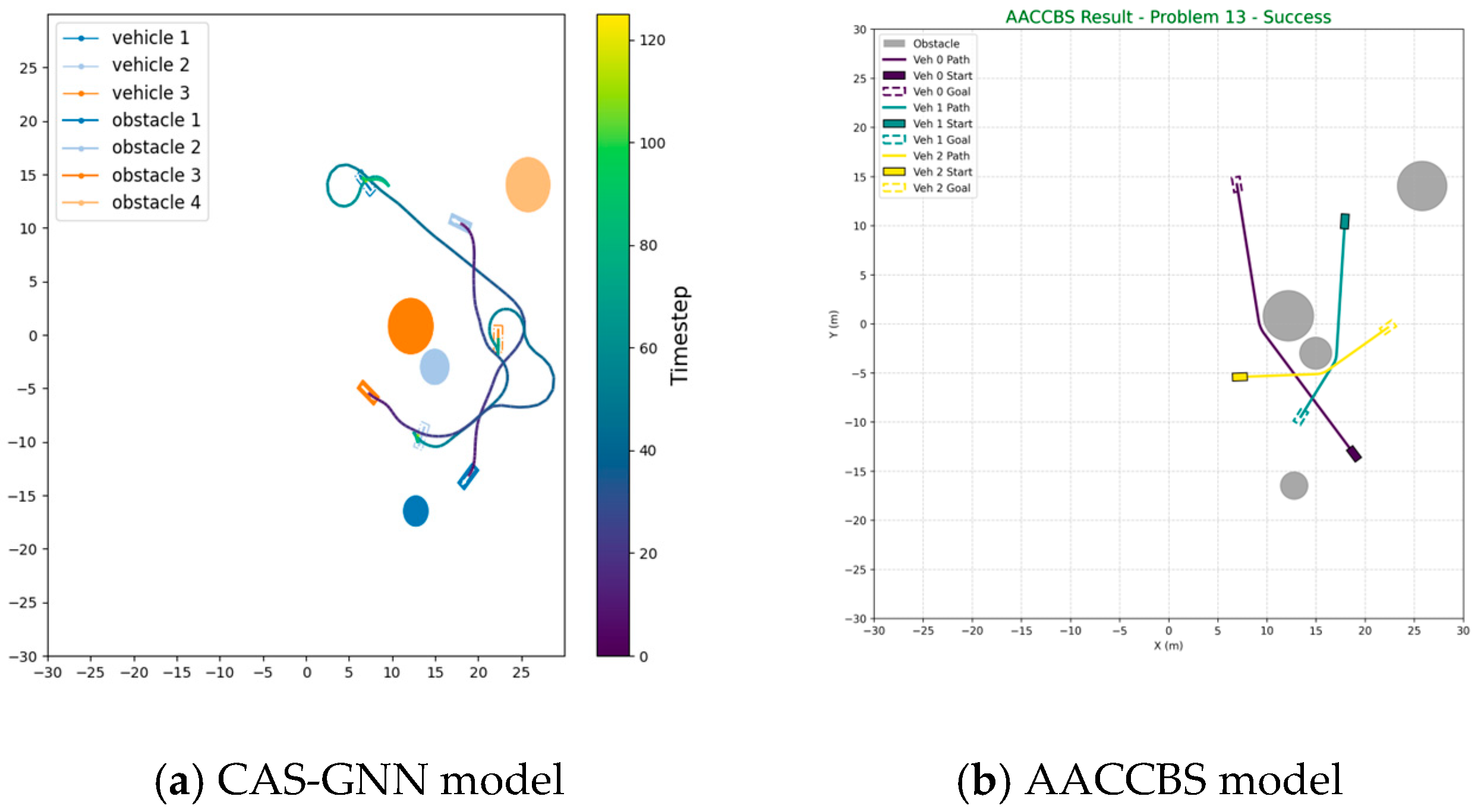

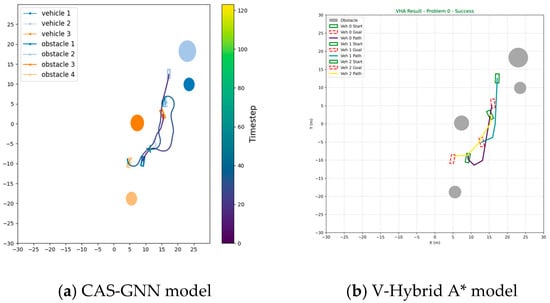

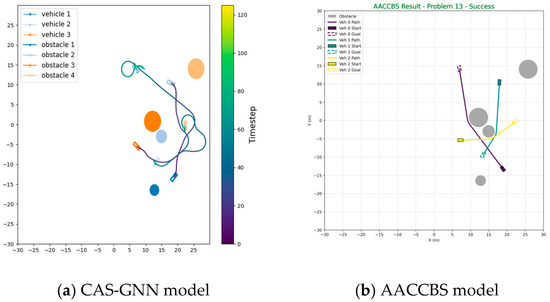

We now conduct a comparative analysis of our proposed CAS-GNN model against two traditional path-planning algorithms: Anytime A* Conflict-based Search (AACCBS) and V-Hybrid A*. This comparison is executed across 100 scenarios, each involving three vehicles and four obstacles. The paths generated by both AACCBS and V-Hybrid A* consist of discrete points; consequently, we implement an interpolation process for these paths in our simulation. The original straight-line trajectories produced by these two algorithms demonstrate high efficiency. However, following the interpolation process, the resulting paths exhibit a significantly elevated collision rate compared to that of our proposed algorithm. This discrepancy can be attributed to the fact that both AACCBS and V-Hybrid A* rely on search-based and optimization-based methods, which tend to perform inadequately in environments characterized by a high density of potential collisions. As illustrated in Table 3, the results clearly indicate that our proposed CAS-GNN algorithm surpasses both AACCBS and V-Hybrid A*. Figure 5 and Figure 6 presents the trajectories planned by all three models within a scenario featuring three vehicles and four obstacles.

Table 3.

Comparison of the performance of three models.

Figure 5.

Trajectory graph of the V-Hybrid A* model and the proposed CAS-GNN model.

Figure 6.

Trajectory graph of the AACCBS model and the proposed CAS-GNN model.

5. Conclusions

In this paper, we propose the CAS-GNN model, which integrates CAS-UNet with a graph neural network (GNN). The CAS-GNN model uses an attention-based graph neural network to construct a multi-vehicle interaction framework, facilitating distributed decision-making through the aggregation of global and local information. Furthermore, CAS-UNet efficiently extracts environmental features. Its lightweight architecture and residual connection design significantly surpass those of traditional U-Net models. Simulation results demonstrate that this model achieves a high planning success rate, enhanced trajectory efficiency, and a low collision rate across scenarios characterized by varying numbers of obstacles and multiple vehicles. Additionally, it exhibits strong generalization capabilities, enabling adaptation to diverse environmental conditions. This effectively enhances the path planning abilities of multiple autonomous vehicles operating in environments with both multiple vehicles and obstacles, thereby improving obstacle avoidance accuracy and robustness. It is important to note that the analysis conducted in this study was limited to scenarios involving homogeneous circular obstacles. Future research could extend the application of this algorithm to environments featuring heterogeneous shapes and dynamic obstacles. Moreover, subsequent investigations may explore the performance of the model by incorporating vehicle dynamics models.

Author Contributions

Conceptualization, Y.J.; Methodology, Y.J. and R.S.; Software, R.S.; Validation, R.S.; Formal analysis, Y.W.; Data curation, R.S. and Z.Z.; Writing—original draft, R.S.; Writing—review & editing, Y.J.; Visualization, Z.L.; Supervision, Y.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Fundamental Research Funds for the Central Universities (No. 3122025075).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data is unavailable due to privacy and ethical restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sánchez-Ibáñez, J.R.; Pérez-del-Pulgar, C.J.; García-Cerezo, A. Path planning for autonomous mobile robots: A review. Sensors 2021, 21, 7898. [Google Scholar] [CrossRef] [PubMed]

- Li, C.; Yao, L.; Mi, C. Fusion Algorithm Based on Improved A* and DWA for USV Path Planning. J. Mar. Sci. Appl. 2024, 24, 1–14. [Google Scholar] [CrossRef]

- Wang, H.; Lou, S.; Jing, J.; Wang, Y.; Liu, W.; Liu, T.; Arya, Y. The EBS-A* algorithm: An improved A* algorithm for path planning. PLoS ONE 2022, 17, e0263841. [Google Scholar] [CrossRef] [PubMed]

- Hou, Y.; Gao, H.; Wang, Z.; Du, C. Path planning for mobile robots based on improved A* algorithm. In International Conference on Neural Computing for Advanced Applications; Springer Nature Singapore: Singapore, 2022; pp. 169–183. [Google Scholar]

- Luo, L. An Improved A* Algorithm for AGV Path Planning. In Proceedings of the 2023 IEEE International Conference on Image Processing and Computer Applications (ICIPCA), Changchun, China, 11–13 August 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 739–743. [Google Scholar]

- Noreen, I.; Khan, A.; Habib, Z. Optimal path planning using RRT* based approaches: A survey and future directions. Int. J. Adv. Comput. Sci. Appl. 2016, 7, 97–107. [Google Scholar] [CrossRef]

- Tu, H.; Deng, Y.; Li, Q.; Song, M.; Zheng, X. Improved RRT global path planning algorithm based on Bridge Test. Robot. Auton. Syst. 2024, 171, 104570. [Google Scholar] [CrossRef]

- Hu, B.; Cao, Z.; Zhou, M. An efficient RRT-based framework for planning short and smooth wheeled robot motion under kinodynamic constraints. IEEE Trans. Ind. Electron. 2020, 68, 3292–3302. [Google Scholar] [CrossRef]

- Xin, P.; Wang, X.; Liu, X.; Wang, Y.; Zhai, Z.; Ma, X. Improved bidirectional RRT* algorithm for robot path planning. Sensors 2023, 23, 1041. [Google Scholar] [CrossRef]

- Rahman, A.; Kundu, A.; Banerjee, S. IQ-RRT*: A path planning algorithm based on informed-RRT* and quick-RRT. Int. J. Comput. Sci. Eng. 2025, 28, 303–313. [Google Scholar]

- Guo, S.; Gong, J.; Shen, H.; Yuan, L.; Wei, W.; Long, Y. DBVSB-P-RRT*: A path planning algorithm for mobile robot with high environmental adaptability and ultra-high speed planning. Expert Syst. Appl. 2025, 266, 126123. [Google Scholar] [CrossRef]

- Liu, C.; Lee, S.; Varnhagen, S.; Tseng, H.E. Path planning for autonomous vehicles using model predictive control. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; IEEE: Piscataway, NJ, USA, 2017. [Google Scholar]

- Yahia, H.S.; Mohammed, A.S. Path planning optimization in unmanned aerial vehicles using meta-heuristic algorithms: A systematic review. Environ. Monit. Assess. 2023, 195, 30. [Google Scholar] [CrossRef]

- Wang, M. Fuzzy logic based robot path planning in unknown environment. In Proceedings of the 2005 International Conference on Machine Learning and Cybernetics, Guangzhou, China, 18–21 August 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 2. [Google Scholar]

- Shi, K.; Wu, Z.; Jiang, B.; Karimi, H.R. Dynamic path planning of mobile robot based on improved simulated annealing algorithm. J. Frankl. Inst. 2023, 360, 4378–4398. [Google Scholar] [CrossRef]

- Yang, S.; Luo, C. A neural network approach to complete coverage path planning. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2004, 34, 718–724. [Google Scholar] [CrossRef] [PubMed]

- Lamini, C.; Benhlima, S.; Elbekri, A. Genetic algorithm based approach for autonomous mobile robot path planning. Procedia Comput. Sci. 2018, 127, 180–189. [Google Scholar] [CrossRef]

- Liu, J.; Yang, J.; Liu, H.; Tian, X.; Gao, M. An improved ant colony algorithm for robot path planning. Soft Comput. 2017, 21, 5829–5839. [Google Scholar] [CrossRef]

- Bagherzadeh, M.; Savehshemshaki, S.; Lucia, W. Guaranteed collision-free reference tracking in constrained multi unmanned vehicle systems. IEEE Trans. Autom. Control 2021, 67, 3083–3089. [Google Scholar] [CrossRef]

- Wang, X.; Cui, T.; Sun, W.; Li, Y. Path Planning and Steering Obstacle Avoidance Control Method of Intelligent Vehicle. Automob. Appl. Technol. 2025, 50, 27–33. [Google Scholar] [CrossRef]

- Zhao, P.; Chang, Y.; Wu, W.; Luo, H.; Zhou, Z.; Qiao, Y.; Li, Y.; Zhao, C.; Huang, Z.; Liu, B.; et al. Dynamic RRT: Fast feasible path planning in randomly distributed obstacle environments. J. Intell. Robot. Syst. 2023, 107, 48. [Google Scholar] [CrossRef]

- Orsini, F.; Gecchele, G.; Gastaldi, M.; Rossi, R. Real-time conflict prediction: A comparative study of machine learning classifiers. Transp. Res. Procedia 2021, 52, 292–299. [Google Scholar] [CrossRef]

- Sharon, G.; Stern, R.; Felner, A.; Sturtevant, N.R. Conflict-based search for optimal multi-agent pathfinding. Artif. Intell. 2015, 219, 40–66. [Google Scholar] [CrossRef]

- Gong, H.; Wang, P.; Ni, C.; Cheng, N. Efficient path planning for mobile robot based on deep deterministic policy gradient. Sensors 2022, 22, 3579. [Google Scholar] [CrossRef]

- Wang, Q.; Liu, H.; Gao, K.; Zhang, L. Improved multi-agent reinforcement learning for path planning-based crowd simulation. IEEE Access 2019, 7, 73841–73855. [Google Scholar] [CrossRef]

- Shi, Q.; Liu, M.; Zhang, S.; Lan, X. Reinforcement Learning for Multi-Agent Path Finding in Large-Scale Warehouses via Distributed Policy Evolution. IEEE Robot. Autom. Lett. 2025, 10, 7843–7850. [Google Scholar] [CrossRef]

- Xu, B.; Wang, G.; Yang, Z.; Bian, Y.; Wang, X.; Hu, M. Multi-vehicle collaborative trajectory planning in unstructured conflict areas based on v-hybrid a. IEEE Trans. Intell. Transp. Syst. 2024, 25, 12722–12735. [Google Scholar] [CrossRef]

- Lu, W.; Lin, Q.; Meng, L.; Li, C.; Liang, B. Collaborative Navigation of Multiple Autonomous Mobile Robots via Geometric Graph Neural Network. IEEE Trans. Ind. Electron. 2025, 1–10. [Google Scholar] [CrossRef]

- Liu, Y.; Qi, X.; Sisbot, E.A.; Oguchi, K. Multi-agent trajectory prediction with graph attention isomorphism neural network. In Proceedings of the 2022 IEEE Intelligent Vehicles Symposium (IV), Aachen, Germany, 4–9 June 2022; IEEE: Piscataway, NJ, USA, 2022. [Google Scholar]

- Shi, Y.; Huang, Z.; Feng, S.; Zhong, H.; Wang, W.; Sun, Y. Masked label prediction: Unified message passing model for semi-supervised classification. arXiv 2020, arXiv:2009.03509. [Google Scholar]

- Ma, Y.; Khan, Q.; Cremers, D. Multi agent navigation in unconstrained environments using a centralized attention based graphical neural network controller. In Proceedings of the 2023 IEEE 26th International Conference on Intelligent Transportation Systems (ITSC), Bilbao, Spain, 24–28 September 2023; IEEE: Piscataway, NJ, USA, 2023. [Google Scholar]

- Ab Wahab, M.N.; Nefti-Meziani, S.; Atyabi, A. A comparative review on mobile robot path planning: Classical or meta-heuristic methods? Annu. Rev. Control 2020, 50, 233–252. [Google Scholar] [CrossRef]

- Aradi, S. Survey of deep reinforcement learning for motion planning of autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2020, 23, 740–759. [Google Scholar] [CrossRef]

- You, Z.; Yu, H.; Xiao, Z.; Peng, T.; Wei, Y. CAS-UNet: A Retinal Segmentation Method Based on Attention. Electronics 2023, 12, 3359. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).