Restoring Anomalous Water Surface in DOM Product of UAV Remote Sensing Using Local Image Replacement

Abstract

1. Introduction

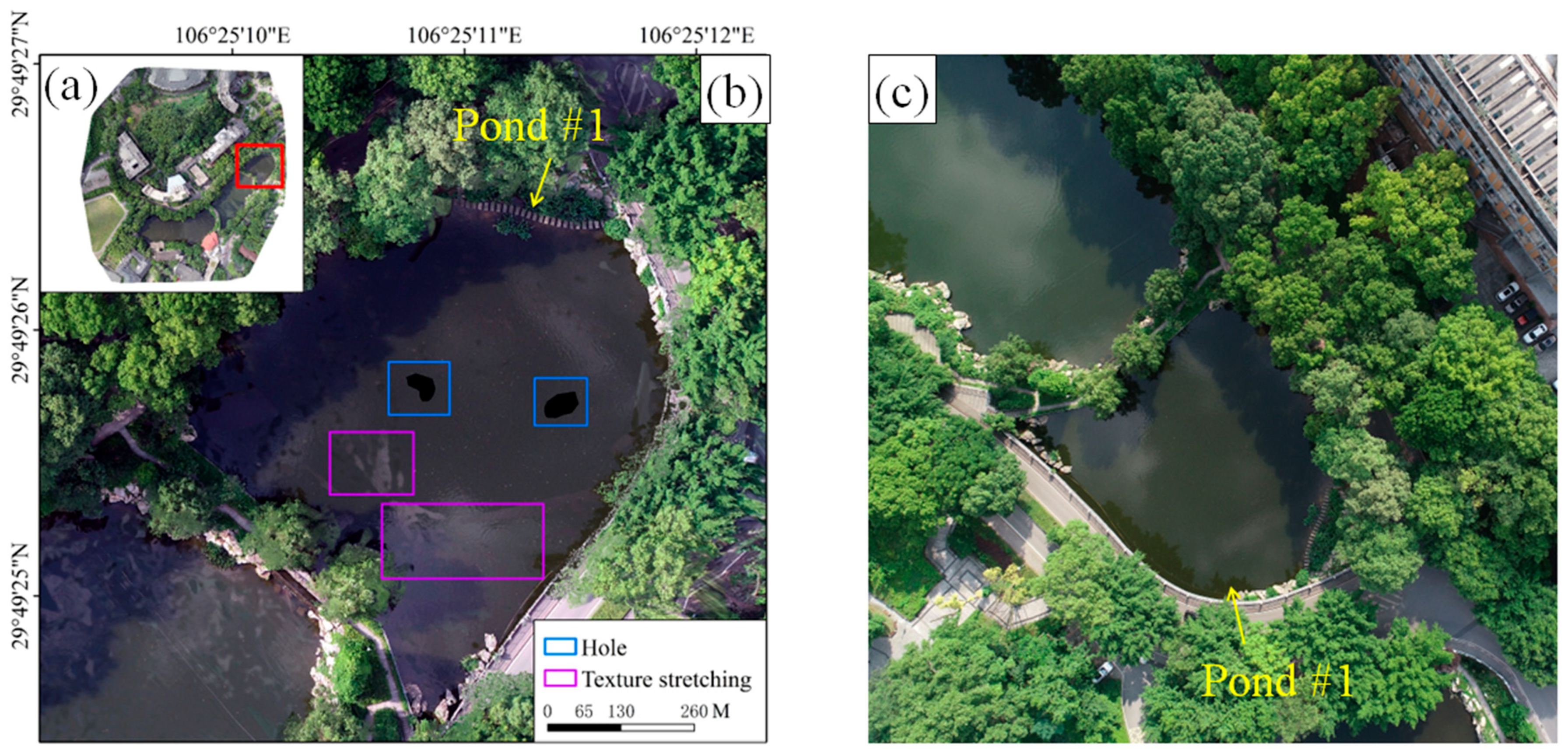

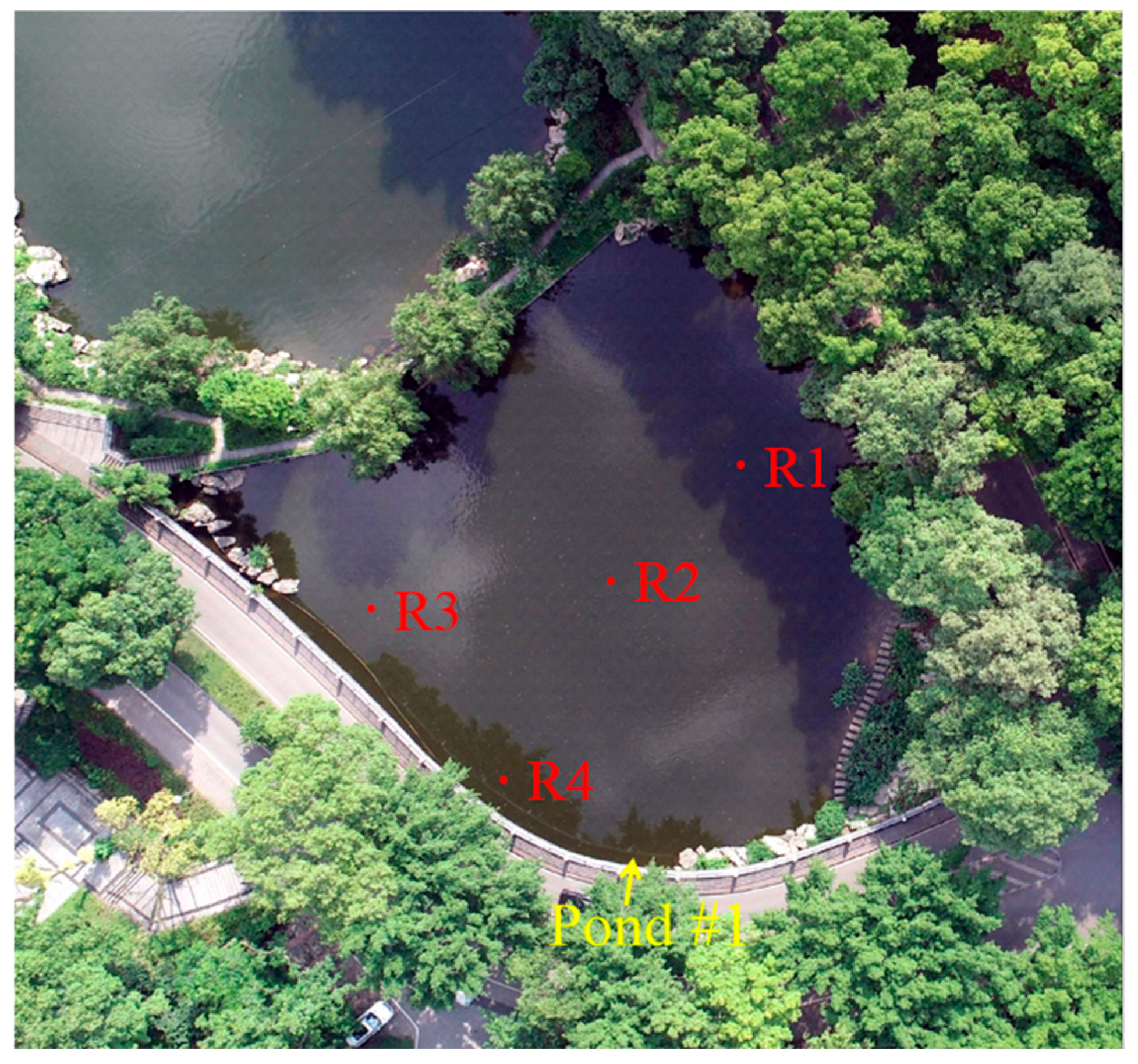

2. Study Area and Data

2.1. Study Area and DOM

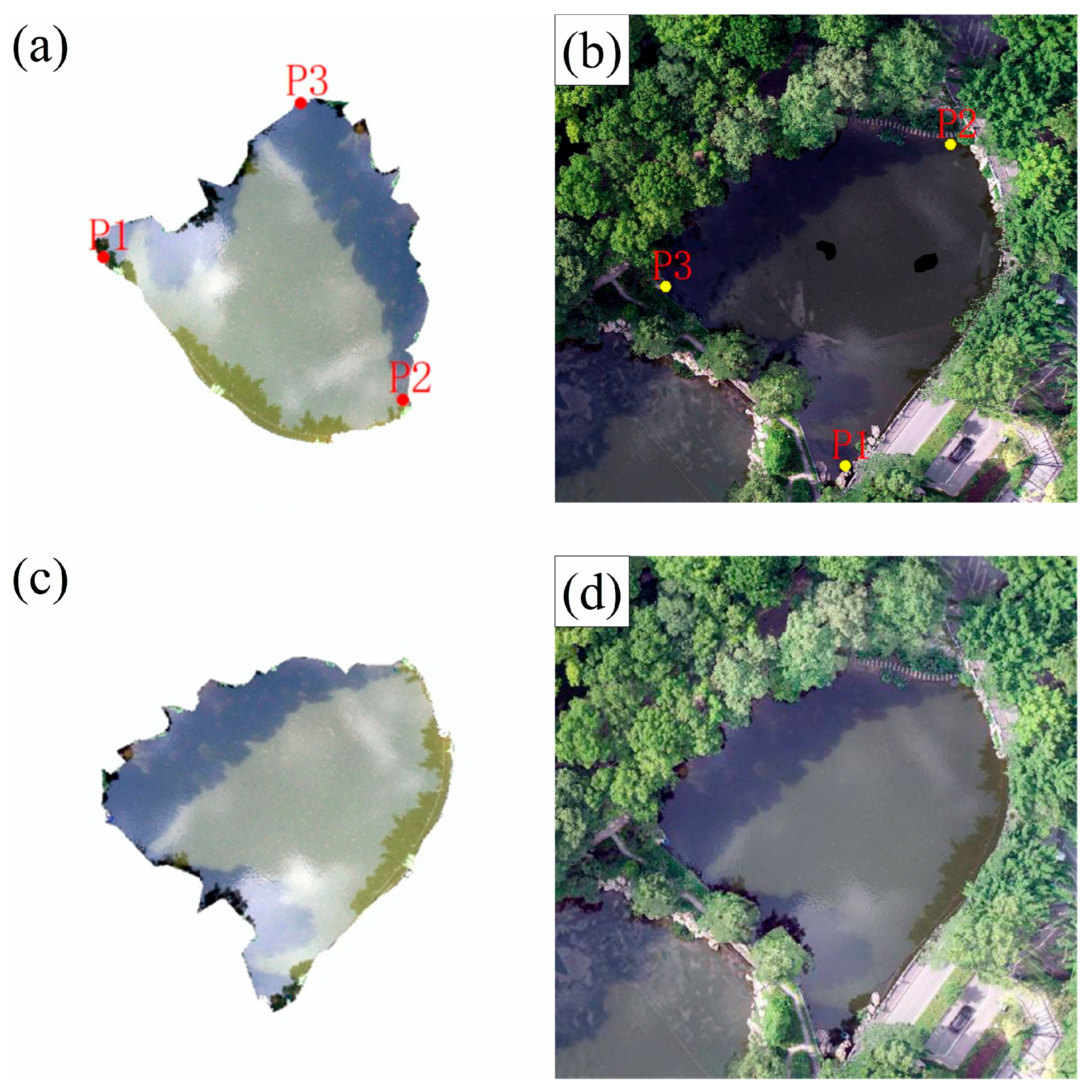

2.2. Single Image Selection

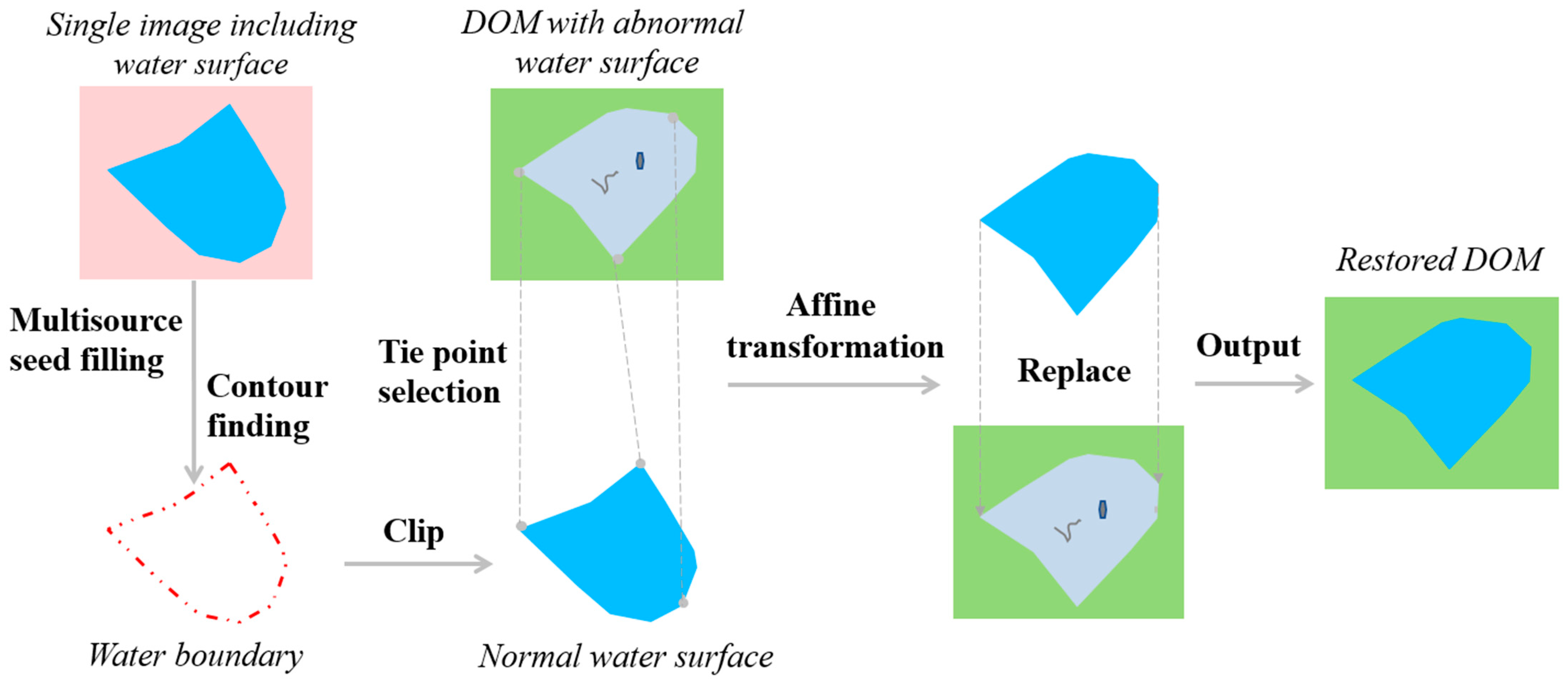

3. Methodology

3.1. Boundary Extraction of Normal Water Surface

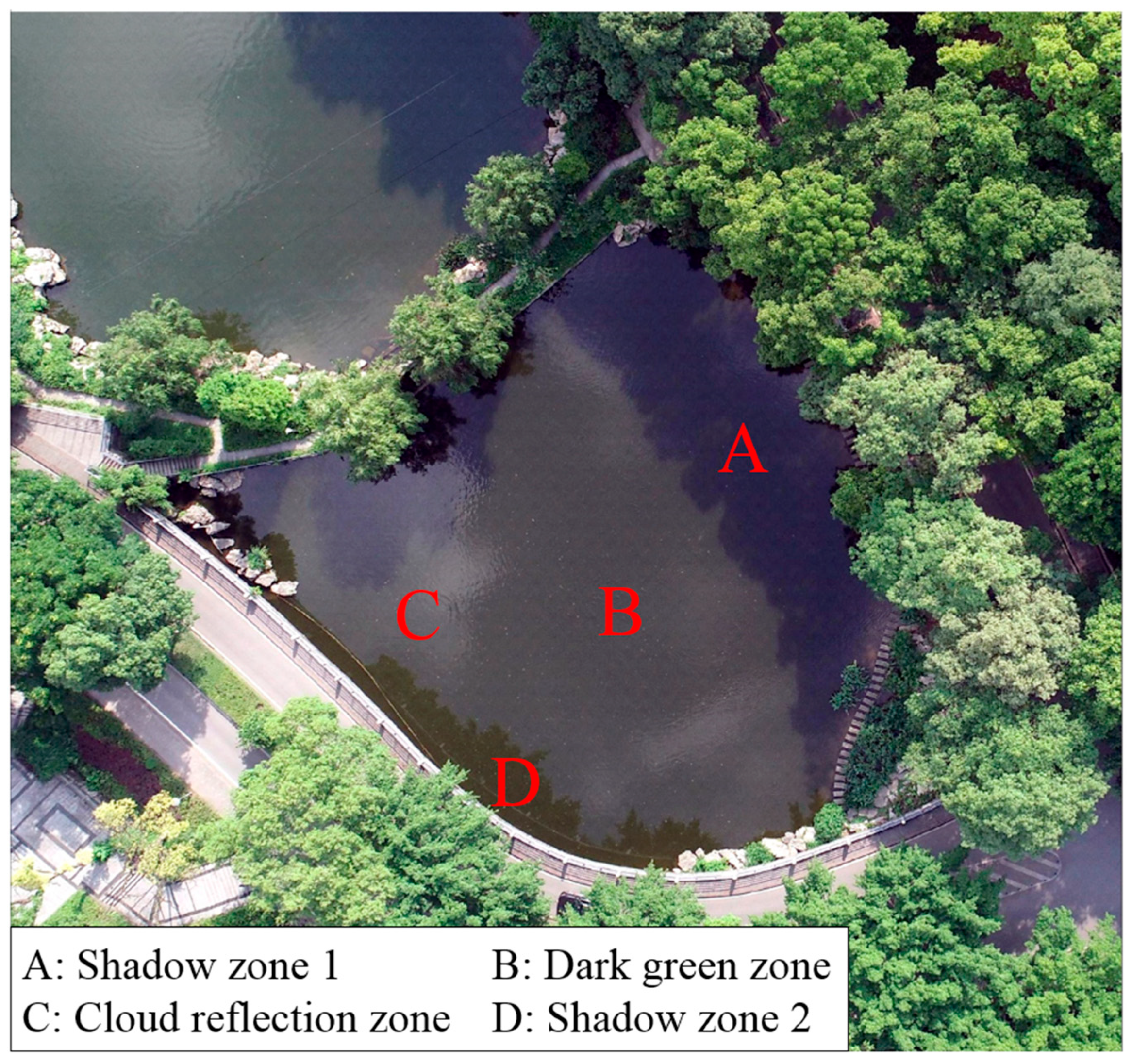

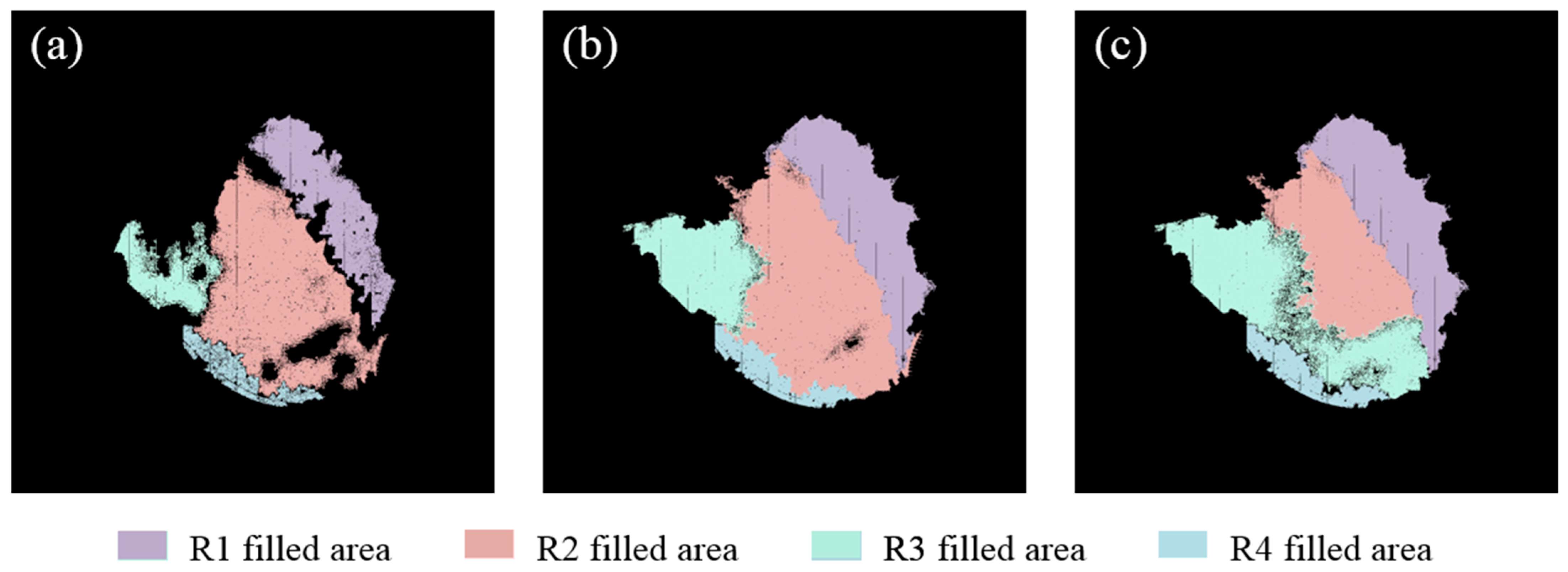

3.1.1. Water Surface Zoning

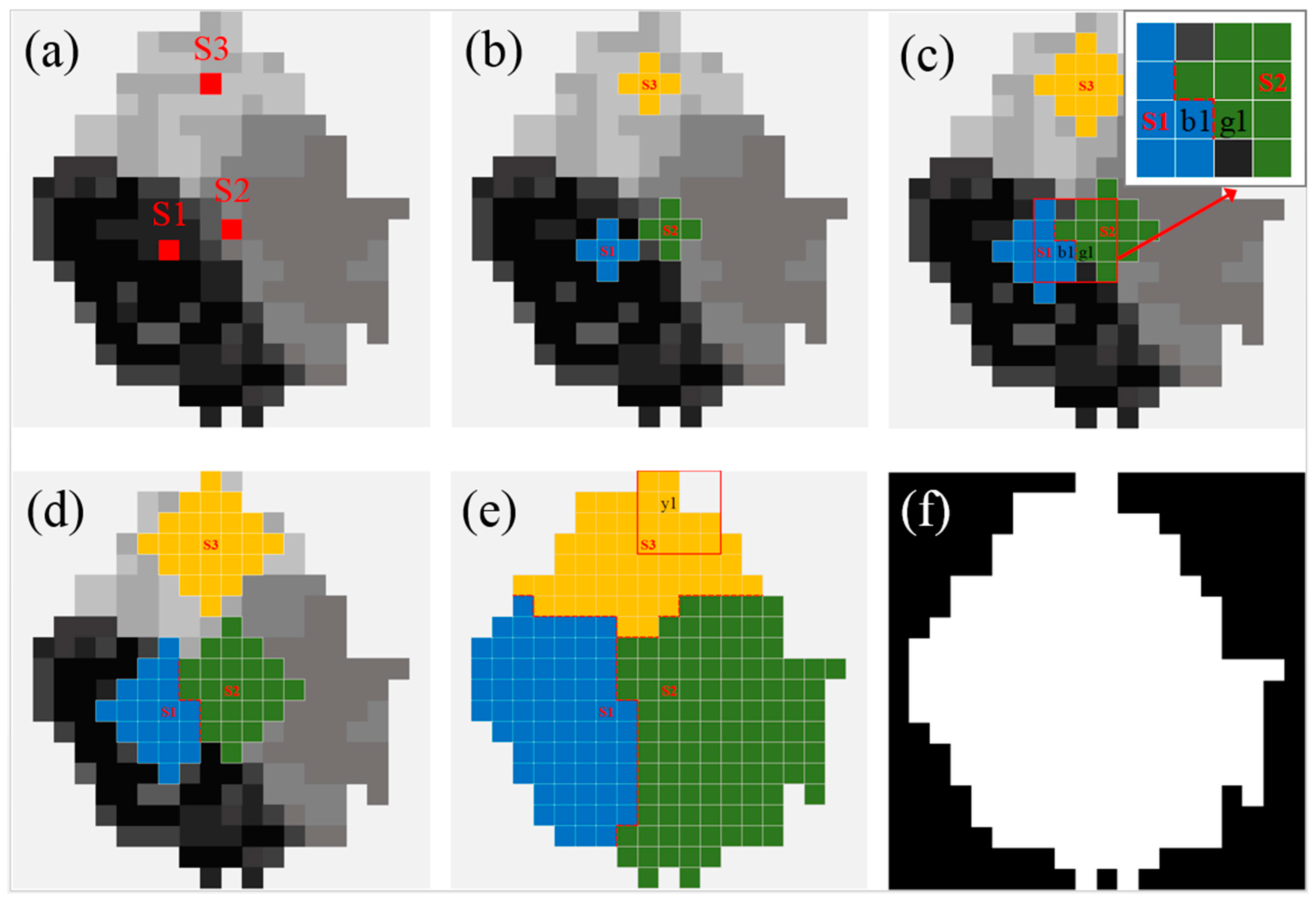

3.1.2. Water Surface Acquisition with Multisource Seed Filling

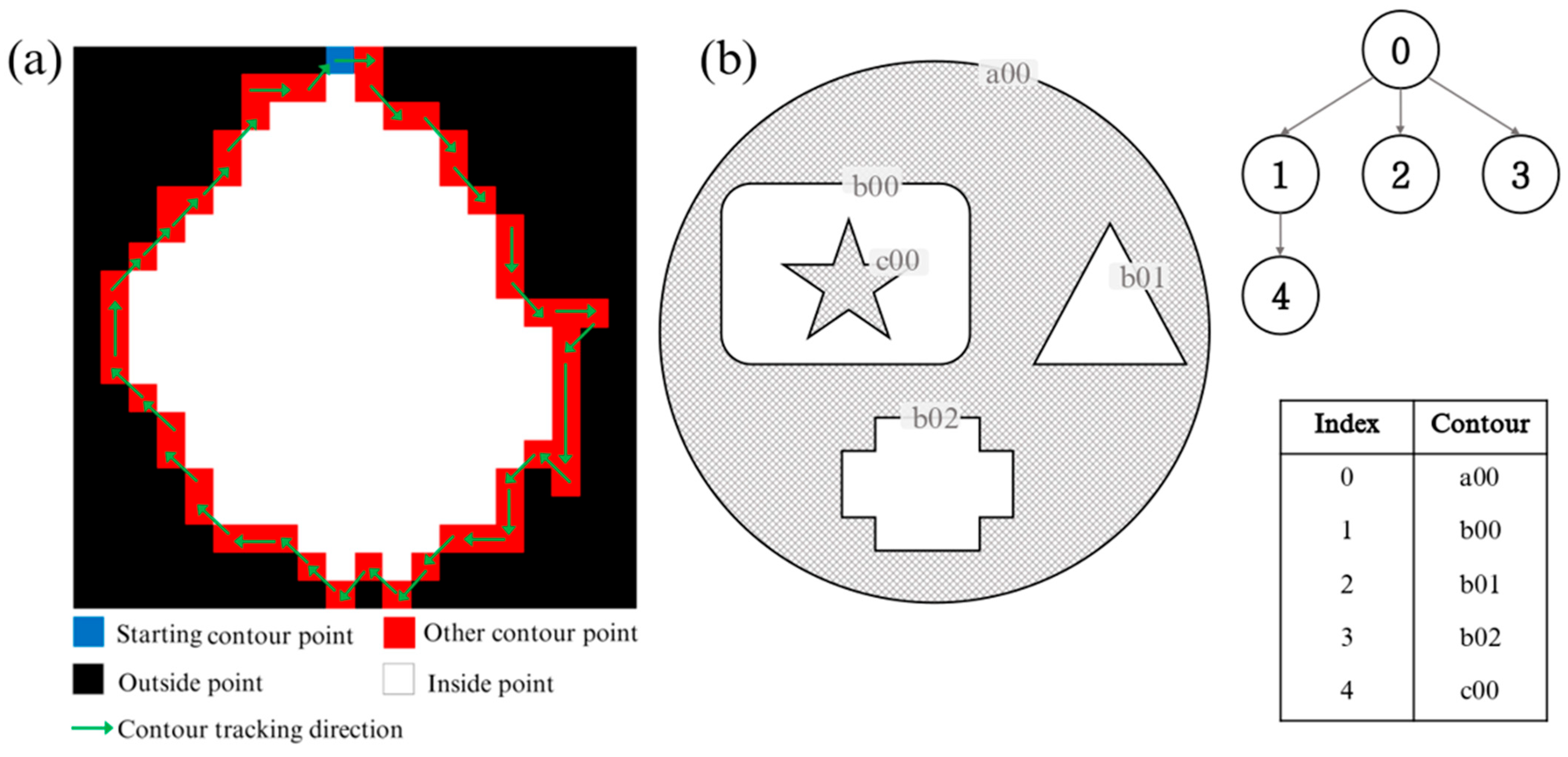

3.1.3. Water Boundary Extraction with Contour Finding

3.1.4. Color Difference Threshold Selection

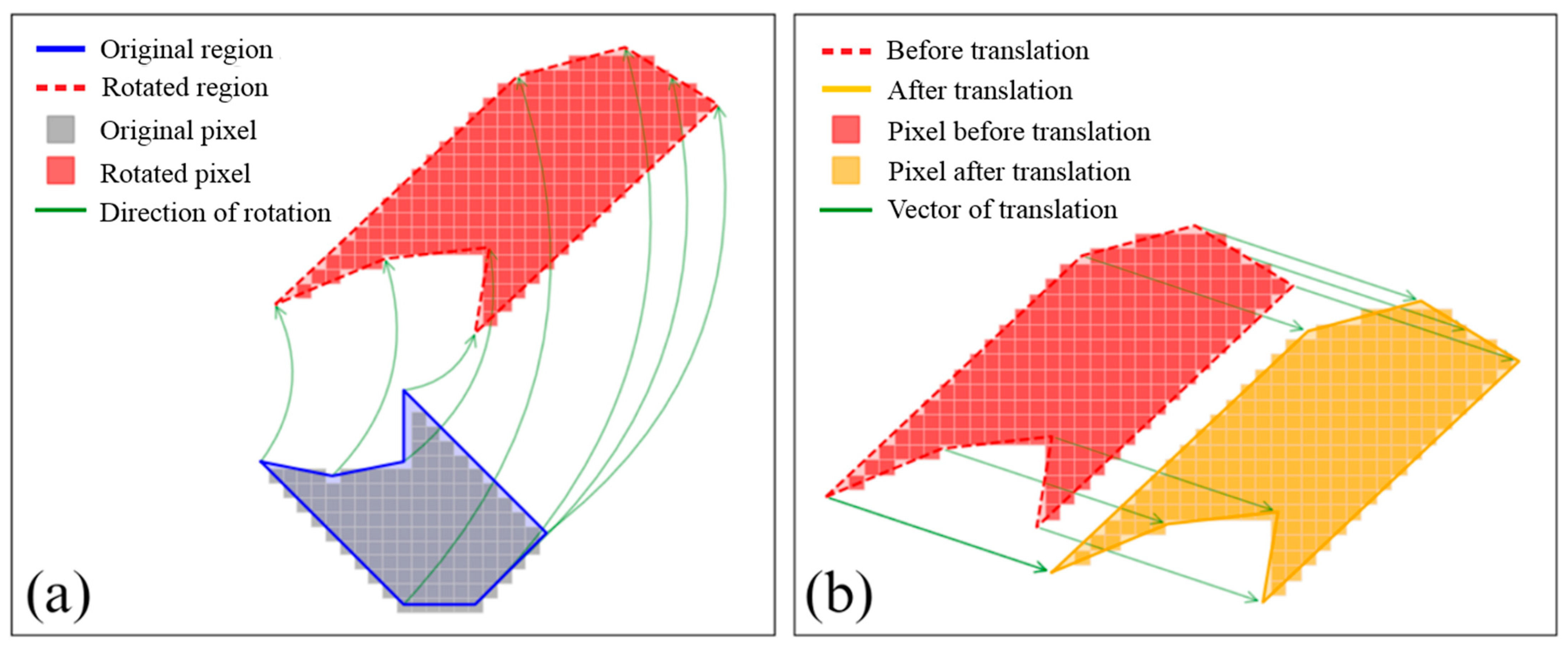

3.2. Affine Transformation of Normal Water Surface

4. Results

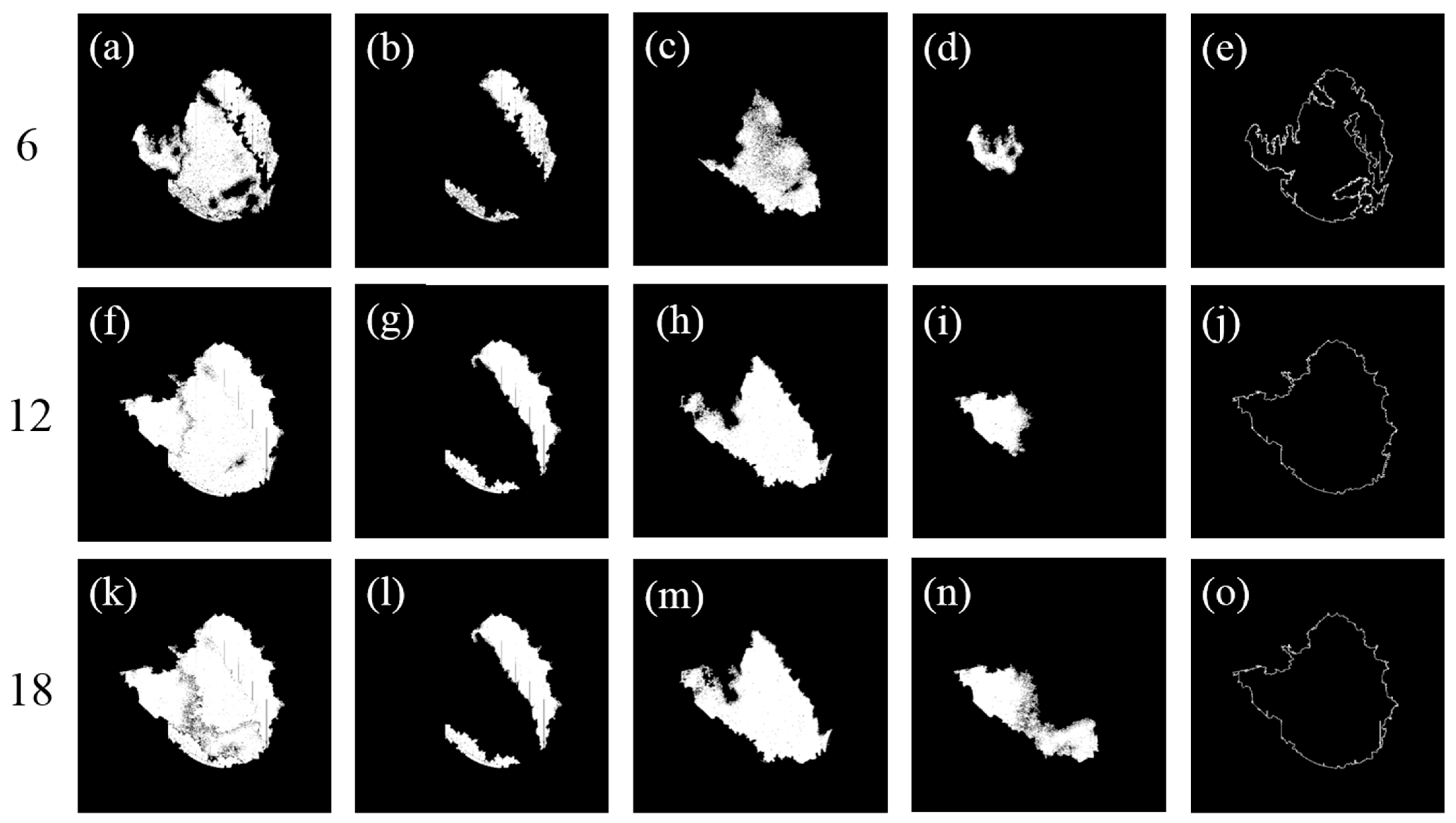

4.1. Extracted Normal Water Surface

4.1.1. Selected Multisource Seed Points

4.1.2. Determined Color Difference Threshold

4.2. Restored DOM

4.3. Quantitative Assessment

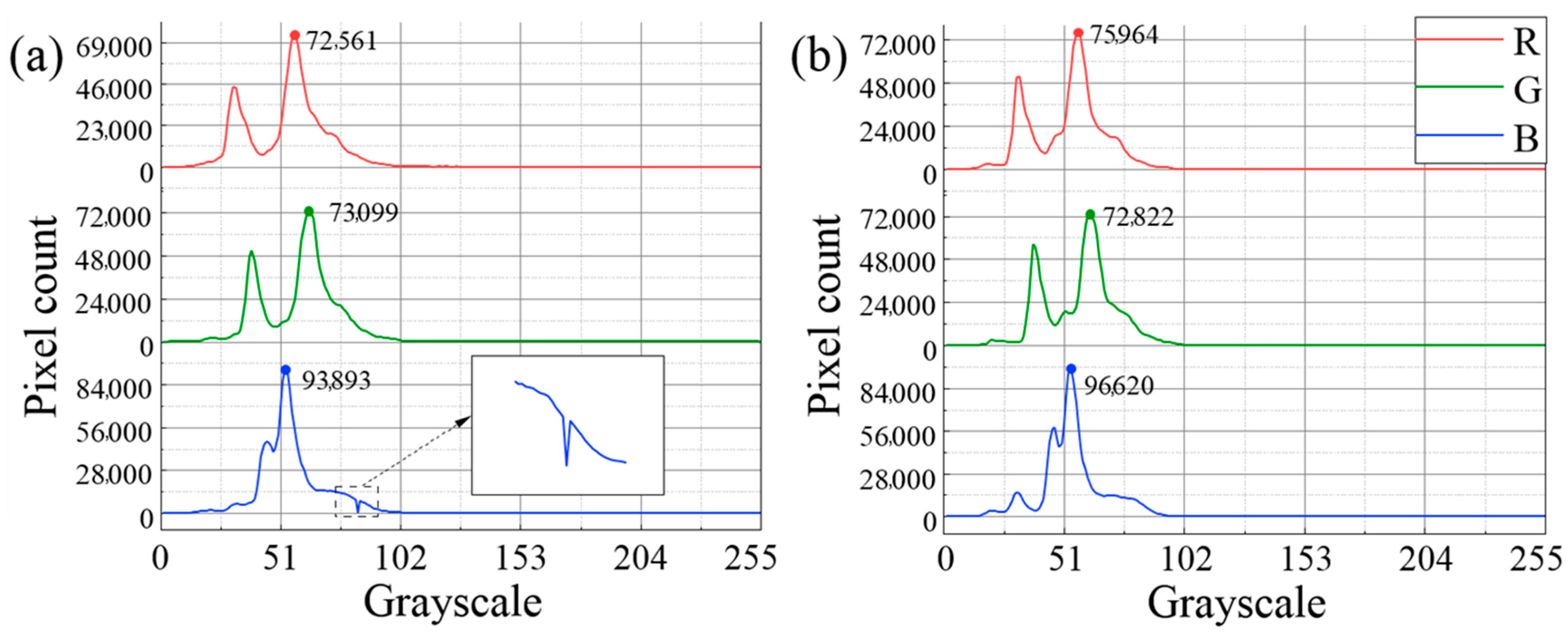

- (1) Histogram analysis based on the distribution of band grayscale values [25]. Before the restoration (Figure 11a), the water surface area exhibited a more dispersed distribution of grayscale values in the blue, green, and red bands due to the texture stretching and data holes. There was an obvious abnormal valley in the blue band near the grayscale value of 80, and the count of pixels with the grayscale value of 52 was 93,893. After the restoration (Figure 11b), the distribution of grayscale values in each band became significantly concentrated. The count of pixels with the grayscale value of 52 in the blue band increased to 96,620, and the abnormal valley disappeared, fully reflecting the color homogeneity of the water surface [26].

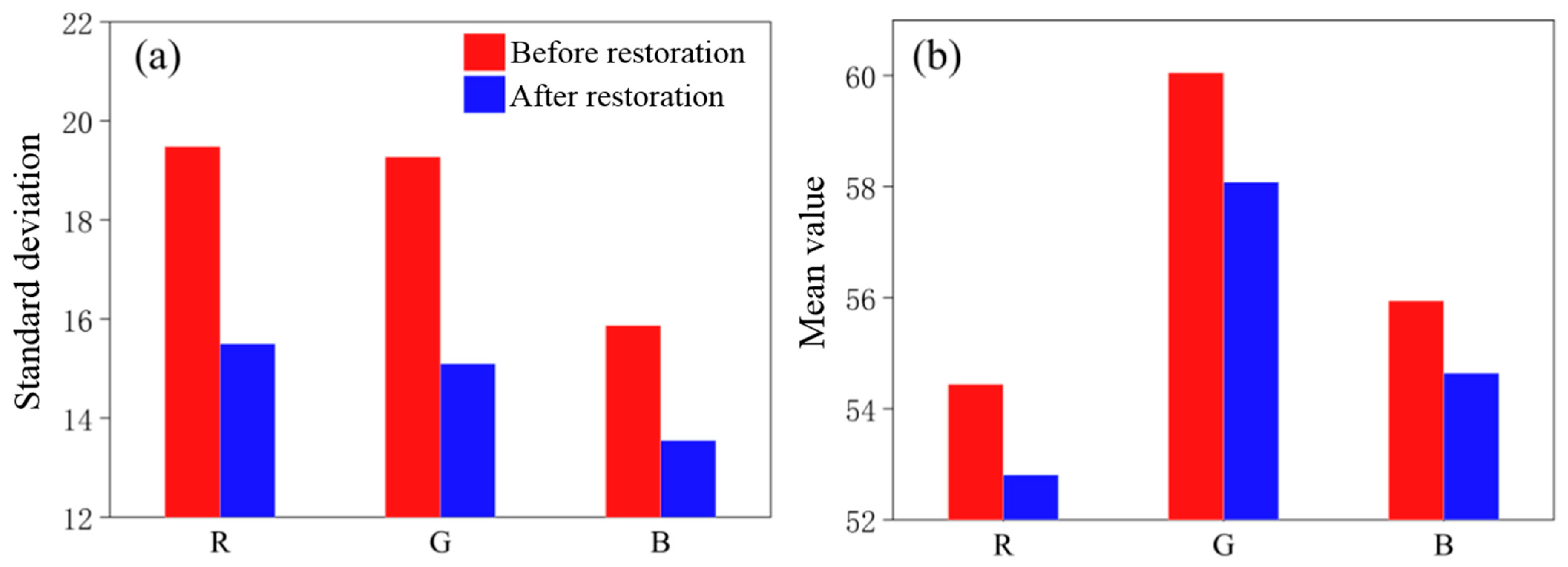

- (2) The standard deviation of band grayscale values. As indicated in Figure 12a, the standard deviations of the grayscale values of the three bands were significantly reduced after the restoration, confirming that the spectral consistency of the restored water surface had been notably improved. The color difference was reduced and color homogeneity was enhanced.

- (3) The mean value of band grayscale values. As shown in Figure 12b, the highlight overexposure caused by texture stretching in the original DOM led to abnormally high RGB mean values in the water surface area [27]. After restoration, the mean values of RGB bands were significantly reduced, reflecting a more realistic water surface reflectance.
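The three indicators above (per-band histogram, standard deviation, and mean) can be computed over the water surface pixels in a few lines. The following is a minimal sketch, not the authors' implementation: the function name `band_statistics`, the boolean water mask, and the blue-green-red channel order are all assumptions made for illustration.

```python
import numpy as np


def band_statistics(image, water_mask):
    """Per-band histogram, mean, and standard deviation inside a mask.

    image      -- H x W x 3 uint8 array; channel order assumed blue, green, red
    water_mask -- H x W boolean array, True for water surface pixels (assumed input)
    """
    stats = {}
    for index, name in enumerate(("blue", "green", "red")):
        values = image[..., index][water_mask]  # 1-D array of masked grayscale values
        stats[name] = {
            # hist[g] is the number of masked pixels with grayscale value g
            "hist": np.bincount(values, minlength=256),
            "mean": float(values.mean()),
            "std": float(values.std()),
        }
    return stats


def compare_restoration(original, restored, water_mask):
    """Change in mean and standard deviation per band (restored minus original)."""
    before = band_statistics(original, water_mask)
    after = band_statistics(restored, water_mask)
    return {
        name: {
            "mean_change": after[name]["mean"] - before[name]["mean"],
            "std_change": after[name]["std"] - before[name]["std"],
        }
        for name in before
    }
```

Under these assumptions, a negative `std_change` and `mean_change` in every band would correspond to the reductions reported for Figure 12a and Figure 12b, and `hist[52]` for the blue band would be the pixel count quoted in item (1).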

5. Discussion

5.1. Applicable Scenarios

5.2. Appropriate Single Image Selection

5.3. Edge Effect and Smoothing

5.4. Other Limitations and Potential Improvements

6. Conclusions

- The proposed multisource seed filling algorithm outperforms the single-seed version because it runs the filling processes synchronously in all color zones. Sharing the global traversal record among all filling processes is the key to resolving border conflicts between adjacent filling zones.

- As a key parameter of the multisource seed filling algorithm, the optimal color difference threshold can only be determined by repeatedly testing a series of candidate thresholds. Each test comprises multisource seed filling and boundary extraction, and the tested threshold is judged by the integrity and continuity of the filled area and of the extracted boundary of the entire water surface.

- After image replacement, the water surface anomalies in the DOM were effectively eliminated. The standard deviations and mean values of the RGB bands in the water area both decreased after restoration, indicating that the restored DOM is of higher quality than the original one. The restored water area achieved the desired color consistency and spatial continuity.
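The synchronous filling with a shared traversal record, and the repeated testing of candidate thresholds, can be sketched as follows. This is an illustrative sketch under stated assumptions rather than the authors' code: the function names are hypothetical, the color difference is taken as the Euclidean distance to the seed color in the image's own channel space, neighbors are 4-connected, and the filled pixel count is used only as a crude stand-in for the integrity and continuity judgment described above.

```python
from collections import deque

import numpy as np


def multisource_seed_fill(image, seeds, threshold):
    """Grow every seed one breadth-first layer per round.

    image     -- H x W x 3 array
    seeds     -- list of (row, col) seed points, one per color zone
    threshold -- maximum color difference to a zone's seed color (assumed metric)

    Returns an H x W label map: 0 = unfilled, k = filled by the k-th seed.
    The label map doubles as the traversal record shared by all zones, so a
    pixel claimed by one zone is never revisited by another.
    """
    height, width = image.shape[:2]
    pixels = image.astype(np.float64)
    labels = np.zeros((height, width), dtype=np.int32)

    zones = []
    for zone_id, (row, col) in enumerate(seeds, start=1):
        labels[row, col] = zone_id
        zones.append((zone_id, pixels[row, col].copy(), deque([(row, col)])))

    growing = True
    while growing:
        growing = False
        for zone_id, seed_color, frontier in zones:
            # Expand exactly one layer so that all zones advance in step.
            for _ in range(len(frontier)):
                row, col = frontier.popleft()
                for d_row, d_col in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    n_row, n_col = row + d_row, col + d_col
                    if not (0 <= n_row < height and 0 <= n_col < width):
                        continue
                    if labels[n_row, n_col] != 0:  # already claimed by some zone
                        continue
                    difference = np.linalg.norm(pixels[n_row, n_col] - seed_color)
                    if difference <= threshold:
                        labels[n_row, n_col] = zone_id
                        frontier.append((n_row, n_col))
            if frontier:
                growing = True
    return labels


def sweep_thresholds(image, seeds, candidates):
    """Filled pixel count per candidate threshold (a proxy, not the paper's criterion)."""
    return {
        threshold: int(np.count_nonzero(multisource_seed_fill(image, seeds, threshold)))
        for threshold in candidates
    }
```

Because each zone advances only one layer per round, two adjacent zones meet roughly midway between their seeds instead of one zone overrunning the other, which is the border conflict behavior the shared record is meant to handle. A real threshold test would also run boundary extraction on the label map and inspect the result.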

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, M.; Lin, J. Retrieving individual tree heights from a point cloud generated with optical imagery from an unmanned aerial vehicle (UAV). Can. J. Forest Res. 2020, 50, 1012–1024.

- Li, J.; Yao, Y.; Duan, P.; Chen, Y.; Li, S.; Zhang, C. Studies on Three-Dimensional (3D) Modeling of UAV Oblique Imagery with the Aid of Loop-Shooting. ISPRS Int. J. Geo-Inf. 2018, 7, 356.

- Yang, G.; Li, C.; Wang, Y.; Yuan, H.; Feng, H.; Xu, B.; Yang, X. The DOM Generation and Precise Radiometric Calibration of a UAV-Mounted Miniature Snapshot Hyperspectral Imager. Remote Sens. 2017, 9, 642.

- Liu, X.; Yang, F.; Wei, H.; Gao, M. Shadow Compensation from UAV Images Based on Texture-Preserving Local Color Transfer. Remote Sens. 2022, 14, 4969.

- Alvarado-Robles, G.; Solís-Muñoz, F.J.; Garduño-Ramón, M.A.; Osornio-Ríos, R.A.; Morales-Hernández, L.A. A Novel Shadow Removal Method Based upon Color Transfer and Color Tuning in UAV Imaging. Appl. Sci. 2021, 11, 11494.

- Wang, S.; Yu, C.; Sun, Y.; Gao, F.; Dong, J. Specular reflection removal of ocean surface remote sensing images from UAVs. Multimed. Tools Appl. 2018, 77, 11363–11379.

- Yao, F. DewaterGAN: A Physics-Guided Unsupervised Image Water Removal for UAV in Coastal Zone. IEEE Trans. Geosci. Remote 2024, 62, 4212015.

- Lin, J.; Zuo, H.; Ye, Y.; Liao, X. Histogram-based Autoadaptive Filter for Destriping NDVI Imagery Acquired by UAV-loaded Multispectral Camera. IEEE Geosci. Remote Sens. 2019, 16, 648–652.

- Lv, J.; Jiang, G.; Ding, W.; Zhao, Z. Fast Digital Orthophoto Generation: A Comparative Study of Explicit and Implicit Methods. Remote Sens. 2024, 16, 786.

- Li, J.; Zhu, S.; Gao, Y.; Zhang, G.; Xu, Y. Change Detection for High-Resolution Remote Sensing Images Based on a Multi-Scale Attention Siamese Network. Remote Sens. 2022, 14, 3464.

- ContextCapture Master. Available online: https://docs.bentley.com/LiveContent/web/ContextCapture%20Help-v17/en/GUID-249266F7-ACB4-4E81-B466-389E4CE10CAE.html (accessed on 24 April 2025).

- Bentley Introduces ContextCapture Supporting Reality Modeling for Infrastructure Project Delivery. Available online: https://reliabilityweb.com/news/article/bentley_introduces_contextcapture_supporting_reality_modeling_for_infr (accessed on 24 April 2025).

- Berber, M.; Munjy, R.; Lopez, J. Kinematic GNSS positioning results compared against Agisoft Metashape and Pix4dmapper results produced in the San Joaquin experimental range in Fresno County, California. J. Geod. Sci. 2021, 11, 48–57.

- Heckbert, P. A seed fill algorithm. In Graphics Gems; Glassner, A., Ed.; Academic Press: London, UK, 1990; pp. 275–277.

- Pechyen, C.; Trif, C.; Cherdhirunkorn, B.; Toommee, S.; Parcharoen, Y. Measurement of Ellman’s Essay Using a Smartphone Camera Coupled with an Image Processing Technique in CIE-LAB Color Space for the Detection of Two Pesticides in Water. Analytica 2025, 6, 4.

- Khoshnevis, B. Automated construction by contour crafting—Related robotics and information technologies. Autom. Constr. 2004, 13, 5–19.

- MacDonald, W.L. Developments in colour management systems. Displays 1996, 16, 203–211.

- Wilder, J.; Feldman, J.; Singh, M. The role of shape complexity in the detection of closed contours. Vis. Res. 2016, 126, 220–231.

- Anaya, M.V. Artificial intelligence based auto-contouring solutions for use in radiotherapy treatment planning of head and neck cancer. IPEM-Transl. 2023, 6–8, 100018.

- Bach, S.H.; Khoi, P.B.; Yi, S.Y. Application of QR Code for Localization and Navigation of Indoor Mobile Robot. IEEE Access 2023, 11, 28384–28390.

- Fan, Z.; Pi, Y.; Wang, M.; Kang, Y.; Tan, K. GLS–MIFT: A modality invariant feature transform with global-to-local searching. Inf. Fusion 2024, 105, 102252.

- Weibo, Z.; Qiuyan, D.; Xingyue, Z.; Dehua, L.; Xinran, M. A method for stitching remote sensing images with Delaunay triangle feature constraints. Geocarto Int. 2023, 38, 2285356.

- Chen, Q.; Feng, D. Feature matching of remote-sensing images based on bilateral local–global structure consistency. IET Image Process. 2023, 17, 3909–3926.

- Zhou, C.; Cao, J.; Hao, Q.; Cui, H.; Yao, H.; Ning, Y.; Zhang, H.; Shi, M. Adaptive locating foveated ghost imaging based on affine transformation. Opt. Express 2024, 32, 7119–7135.

- Xie, W.; Liu, B.; Li, Y.; Lei, J.; Chang, C.; He, G. Spectral Adversarial Feature Learning for Anomaly Detection in Hyperspectral Imagery. IEEE Trans. Geosci. Remote Sens. 2020, 58, 2352–2365.

- Xianglin, D.; Tariq, A.; Jamil, A.; Aslam, R.W.; Zafar, Z.; Bailek, N.; Zhran, M.; Almutairi, K.F.; Soufan, W. Advanced machine vision techniques for groundwater level prediction modeling geospatial and statistical research. Adv. Space Res. 2025, 75, 2652–2668.

- Štricelj, A.; Kačič, Z. Detection of objects on waters’ surfaces using CEIEMV method. Comput. Electr. Eng. 2015, 46, 511–527.

- Douglas, T.J.; Coops, N.C.; Drever, M.C. UAV-acquired imagery with photogrammetry provides accurate measures of mudflat elevation gradients and microtopography for investigating microphytobenthos patterning. Sci. Remote Sens. 2023, 7, 100089.

- Brown, M.; Lowe, D.G. Automatic Panoramic Image Stitching using Invariant Features. Int. J. Comput. Vis. 2007, 74, 59–73.

- Liu, Y.; Zheng, X.; Ai, G.; Zhang, Y.; Zuo, Y. Generating a High-Precision True Digital Orthophoto Map Based on UAV Images. ISPRS Int. J. Geo-Inf. 2018, 7, 333.

- Liu, H.; Li, H.; Wang, H.; Liu, C.; Qian, J.; Wang, Z.; Geng, C. Improved Detection and Location of Small Crop Organs by Fusing UAV Orthophoto Maps and Raw Images. Remote Sens. 2025, 17, 906.

- Long, J.; Chen, S.; Zhang, K.; Liu, Y.; Luo, Q.; Chen, Y. Global Sparse Texture Filtering for edge preservation and structural extraction. Comput. Graph. 2025, 128, 104213.

- Xu, F.; Liu, J.; Song, Y.; Sun, H.; Wang, X. Multi-Exposure Image Fusion Techniques: A Comprehensive Review. Remote Sens. 2022, 14, 771.

- Papia, E.M.; Kondi, A. Quantifying subtle color transitions in Mark Rothko’s abstract paintings through K-means clustering and Delta E analysis. J. Cult. Herit. 2025, 72, 194–204.

- Huang, R.; Zheng, Y. Image Structure-Induced Semantic Pyramid Network for Inpainting. Appl. Sci. 2023, 13, 7812.

- Yuan, S.; Zhao, W.; Gao, S.; Xia, S.; Hang, B.; Qu, H. An adaptive threshold-based quantum image segmentation algorithm and its simulation. Quantum Inf. Process. 2022, 21, 359.

- Long, J.; Luo, Q.; Zhang, C. Image smoothing algorithm based on texture intensity adaptation and edge consistency. Multimed. Syst. 2025, 31, 223.

- Brar, K.K.; Goyal, B.; Dogra, A.; Mustafa, M.A.; Majumdar, R.; Alkhayyat, A.; Kukreja, V. Image segmentation review: Theoretical background and recent advances. Inf. Fusion 2025, 114, 102608.

- Hartmann, W.; Havlena, M.; Schindler, K. Recent developments in large-scale tie-point matching. ISPRS J. Photogramm. 2016, 115, 47–62.

- Isikdogan, F.; Bovik, A.C.; Passalacqua, P. Surface Water Mapping by Deep Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4909–4918.

- Li, X.; Hu, Y.; Jie, Y.; Zhao, C.; Zhang, Z. Dual-Frequency Lidar for Compressed Sensing 3D Imaging Based on All-Phase Fast Fourier Transform. J. Opt. Photonics Res. 2023, 1, 74–81.

- Wang, H.; Zhang, G.; Cao, H.; Hu, K.; Wang, Q.; Deng, Y.; Gao, J.; Tang, Y. Geometry-Aware 3D Point Cloud Learning for Precise Cutting-Point Detection in Unstructured Field Environments. J. Field Robot. 2025, 190–195.

- Pang, G.; Shen, C.; Cao, L.; Anton, V.D.H. Deep Learning for Anomaly Detection: A Review. ACM Comput. Surv. 2021, 54, 1–38.

- Peterson, K.T.; Sagan, V.; Sloan, J.J. Deep learning-based water quality estimation and anomaly detection using Landsat-8/Sentinel-2 virtual constellation and cloud computing. GISci. Remote Sens. 2020, 57, 510–525.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, C.; Zhang, T.; Tao, L.; Lin, J. Restoring Anomalous Water Surface in DOM Product of UAV Remote Sensing Using Local Image Replacement. Sensors 2025, 25, 4225. https://doi.org/10.3390/s25134225
