Research on Super-Resolution Reconstruction of Coarse Aggregate Particle Images for Earth–Rock Dam Construction Based on Real-ESRGAN

Abstract

1. Introduction

1.1. Image Super-Resolution Reconstruction Algorithms for Ideal Degradation Models

1.2. Image Super-Resolution Reconstruction Algorithms for Real Degradation Models

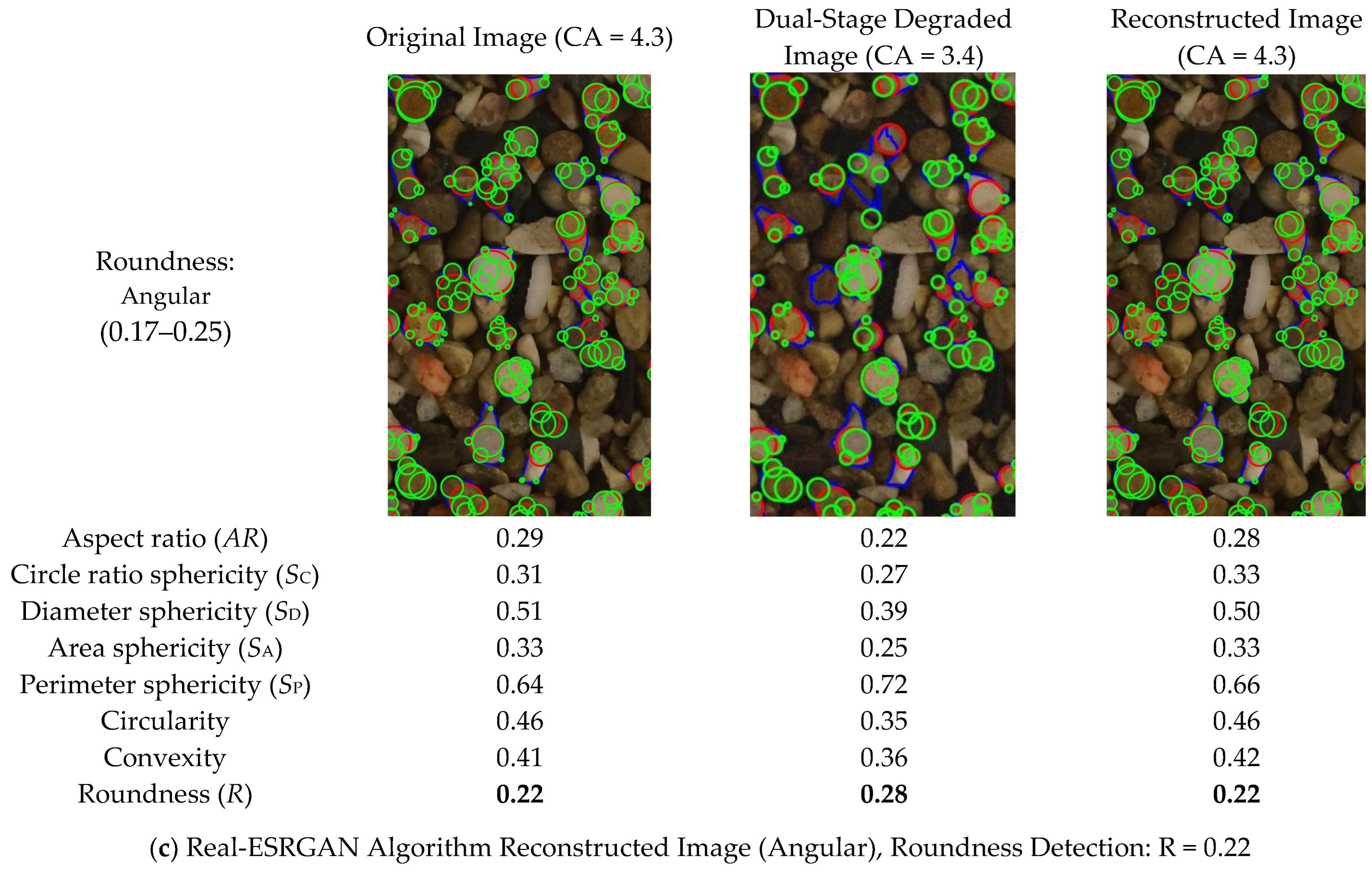

2. Dataset Construction

2.1. Particle Image Collection and Classification

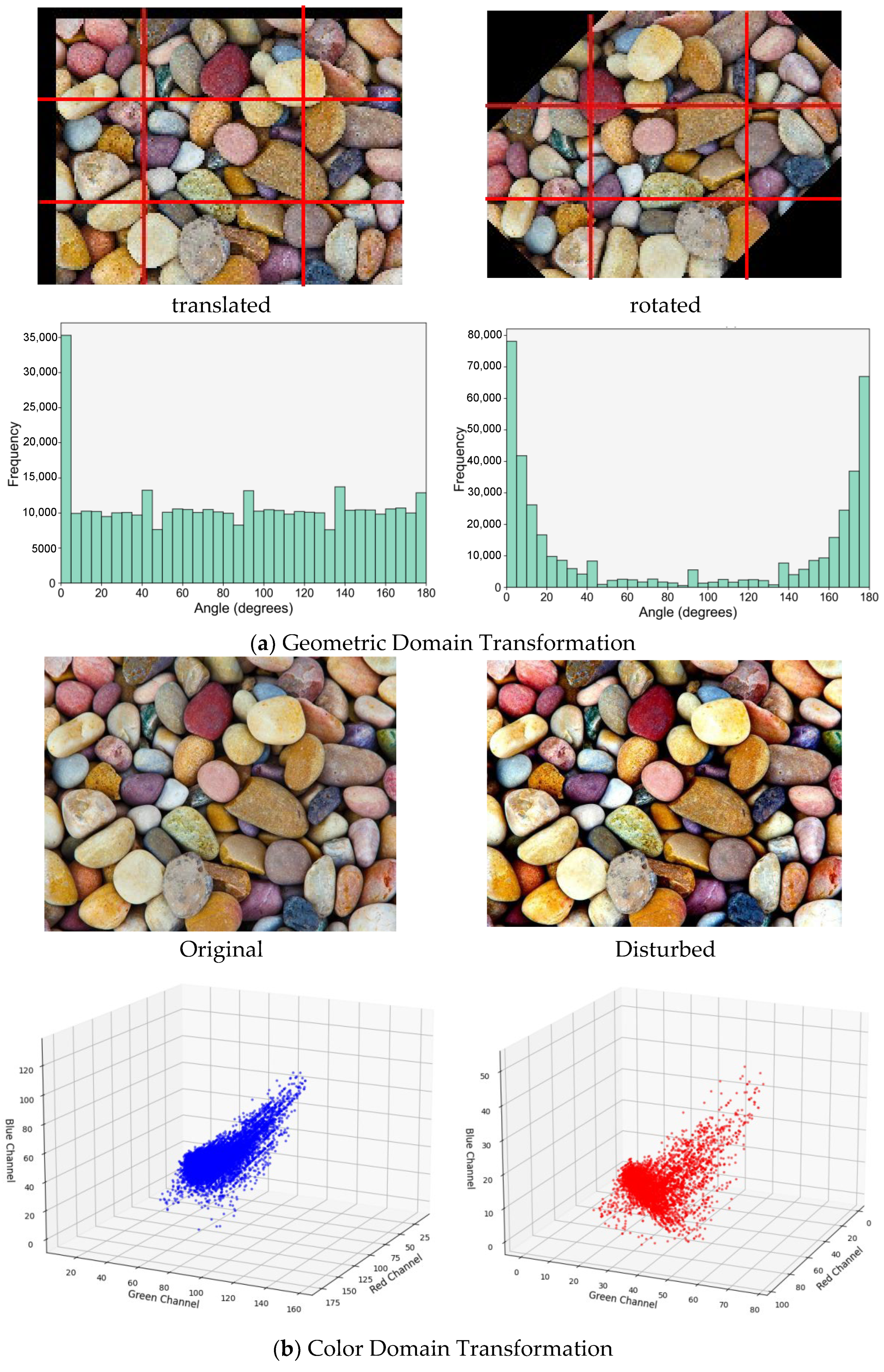

2.2. Data Augmentation and Quality Thresholds

2.3. Dual-Stage Image Degradation

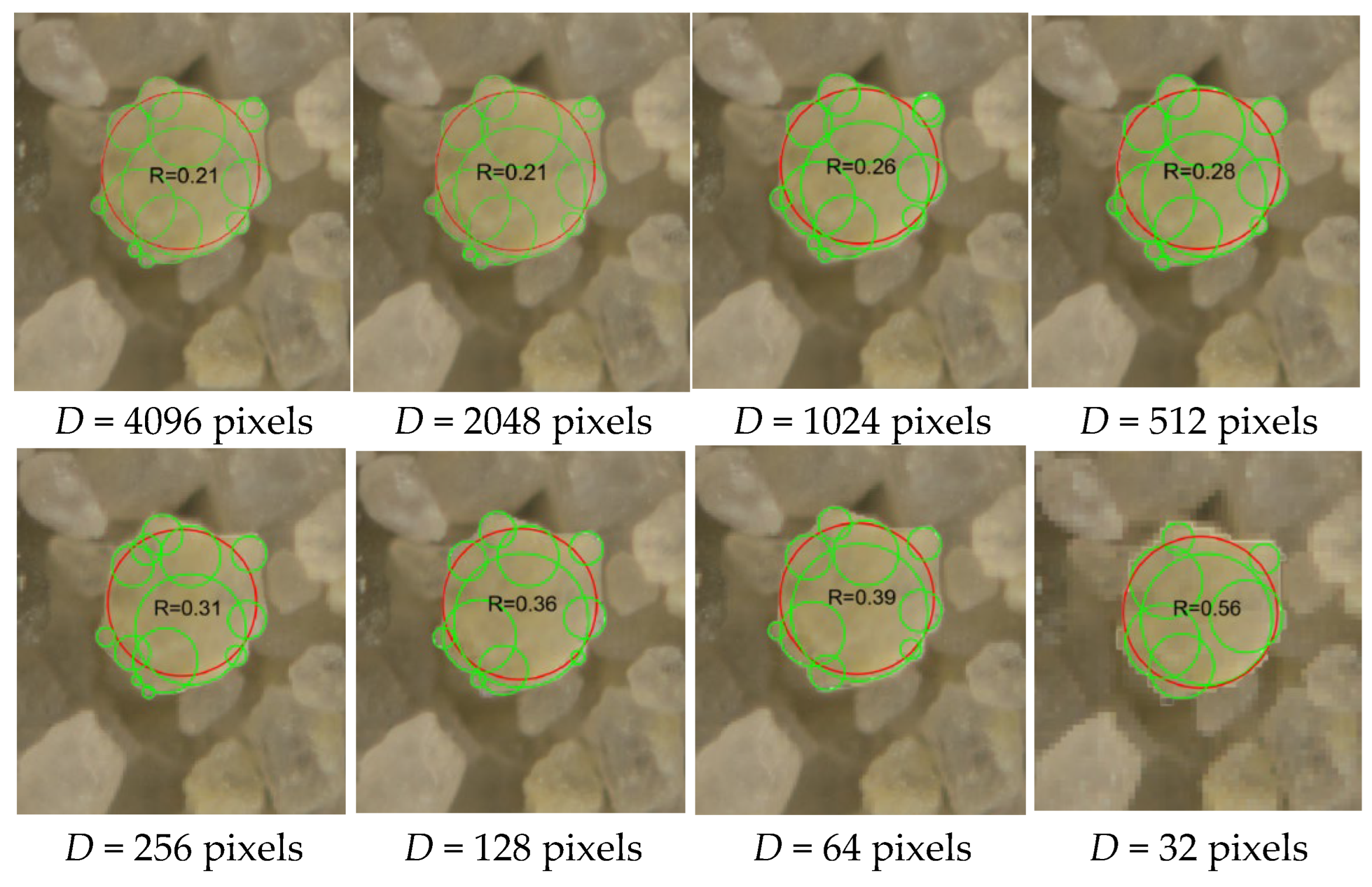

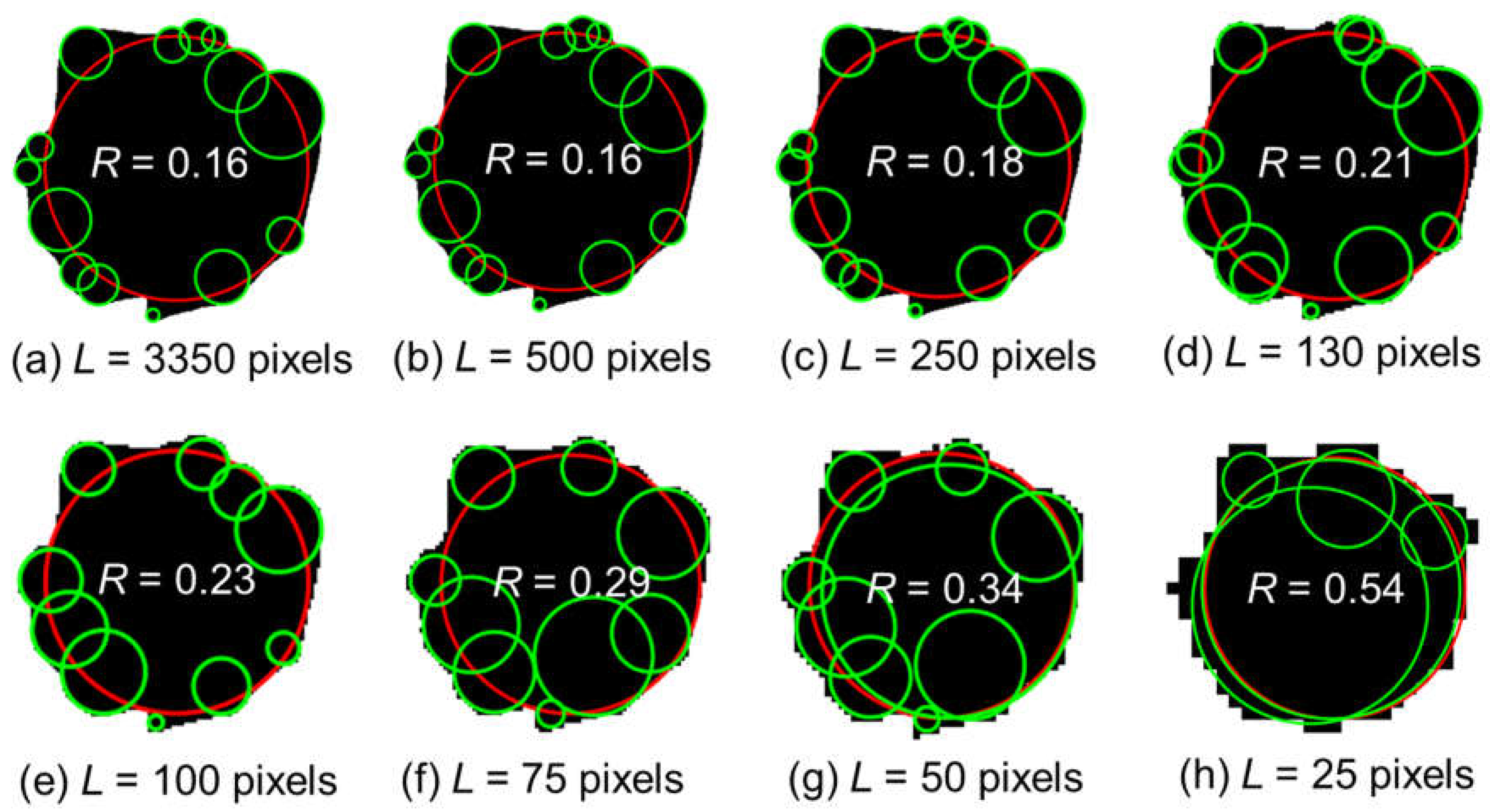

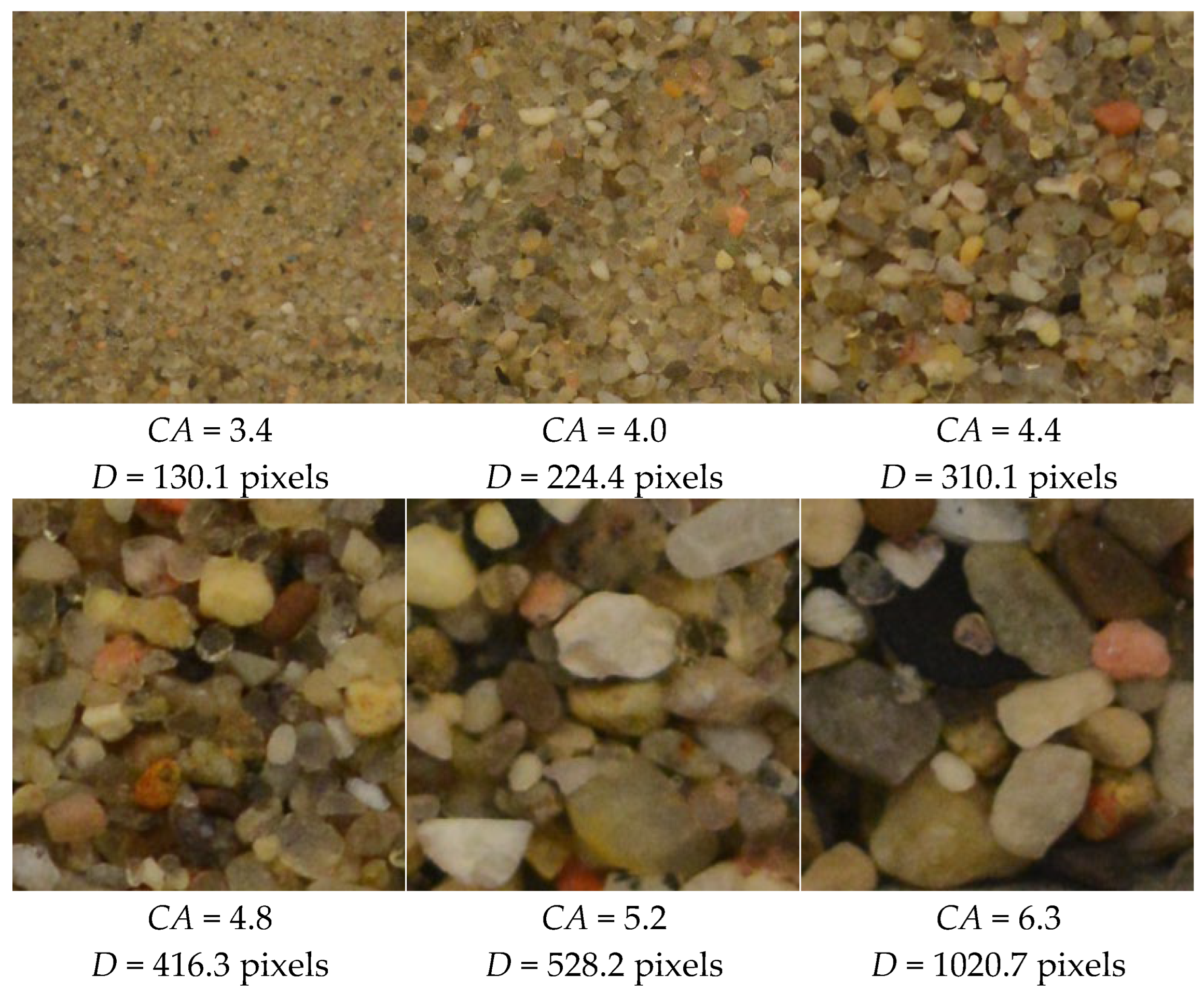
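The parameters of the dual-stage degradation are not given in this section, so the following is only a minimal sketch of the idea: one degradation pass (blur, downsample, add noise) applied twice in sequence to a grayscale image. The function names, the 3×3 box blur, the scale factor, and the noise level are all illustrative assumptions; the published Real-ESRGAN pipeline additionally uses randomized blur kernels, resizing modes, JPEG compression, and sinc filters, none of which are modeled here.

```python
import numpy as np

def box_blur(img, k=3):
    """Blur a 2D image with a k x k mean filter (edge-replicated borders)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros(img.shape, dtype=np.float64)
    # Sum the k*k shifted copies of the image, then average.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def degrade_once(img, rng, scale=2, noise_sigma=5.0):
    """One hypothetical degradation stage: blur -> downsample -> Gaussian noise."""
    blurred = box_blur(img)
    small = blurred[::scale, ::scale]  # naive decimation (assumption)
    noisy = small + rng.normal(0.0, noise_sigma, small.shape)
    return np.clip(noisy, 0.0, 255.0)

def dual_stage_degrade(img, seed=0):
    """Apply the same degradation stage twice, as in a second-order model."""
    rng = np.random.default_rng(seed)
    x = np.asarray(img, dtype=np.float64)
    x = degrade_once(x, rng)
    return degrade_once(x, rng)
```

With the default `scale=2`, each stage halves both image dimensions, so a 16 × 16 input yields a 4 × 4 output.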

2.4. Minimum Image Quality for Reliable Optical Characterizations of Soil Particle Shapes

2.5. The Necessity of Minimum Image Quality for Analyzing Particle Shapes in Assemblies

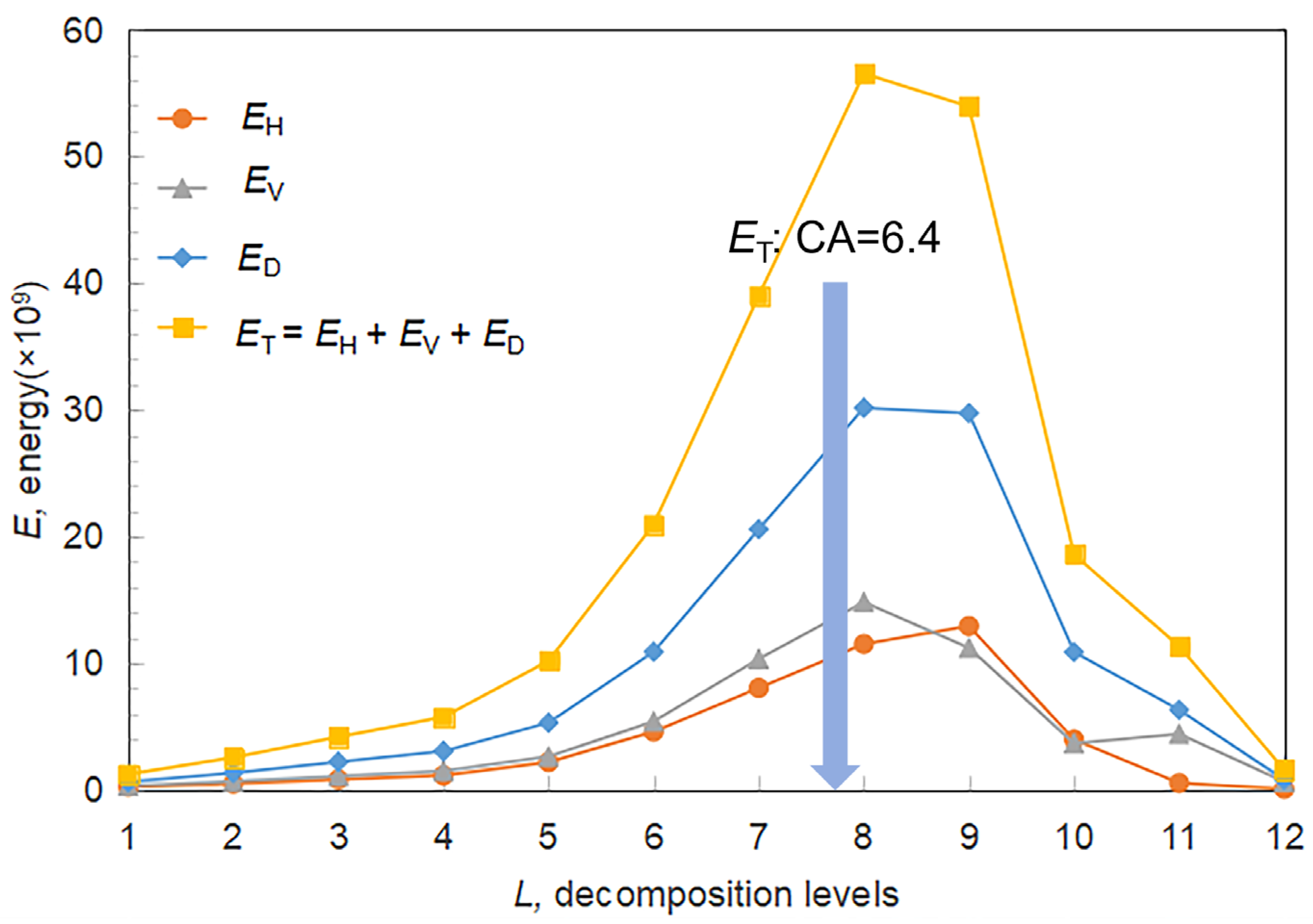

3. Wavelet-Based Image Quality Assessment and Resolution Metrics

3.1. Basic Principle of Wavelet Analysis

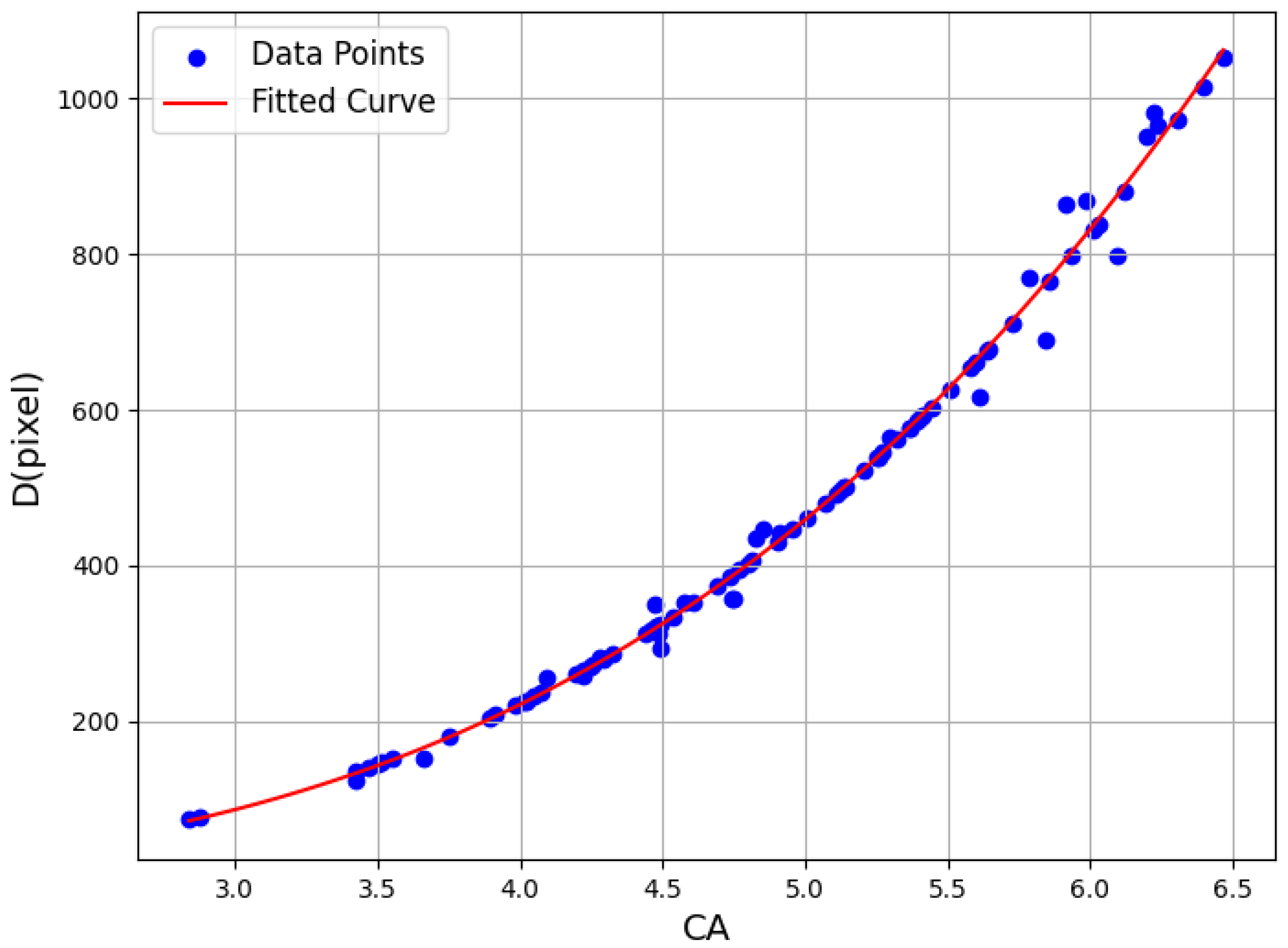
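As a rough illustration of the principle named in this subsection, a single-level 2D Haar decomposition can be written directly in NumPy. This is a sketch under assumptions: the section does not state which wavelet, normalization, or number of levels the authors use, and the function name `haar_dwt2` and the output names are hypothetical.

```python
import numpy as np

def haar_dwt2(image):
    """Single-level orthonormal 2D Haar transform of a grayscale image.

    Returns (approx, row_diff, col_diff, diag), each half the input size.
    """
    x = np.asarray(image, dtype=np.float64)
    # Crop to even dimensions so pixels group into 2 x 2 blocks.
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    x = x[:h, :w]
    a = x[0::2, 0::2]  # top-left pixel of each block
    b = x[0::2, 1::2]  # top-right
    c = x[1::2, 0::2]  # bottom-left
    d = x[1::2, 1::2]  # bottom-right
    approx = (a + b + c + d) / 2.0    # local average (scaled by 2)
    row_diff = (a + b - c - d) / 2.0  # top row minus bottom row
    col_diff = (a - b + c - d) / 2.0  # left column minus right column
    diag = (a - b - c + d) / 2.0      # diagonal difference
    return approx, row_diff, col_diff, diag
```

Because the transform is orthonormal, the summed squared coefficients equal the summed squared pixel values; sharper images put more of that energy into the three detail bands, which is what makes wavelet coefficients usable as a sharpness indicator.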

3.2. Determining the Mean Particle Length by Wavelet Analysis

3.3. Image Quality Evaluation Metrics

- (1) Peak Signal-to-Noise Ratio (PSNR)

- (2) Structural Similarity Index Measure (SSIM)

- (3) Wavelet Coefficients (CA)

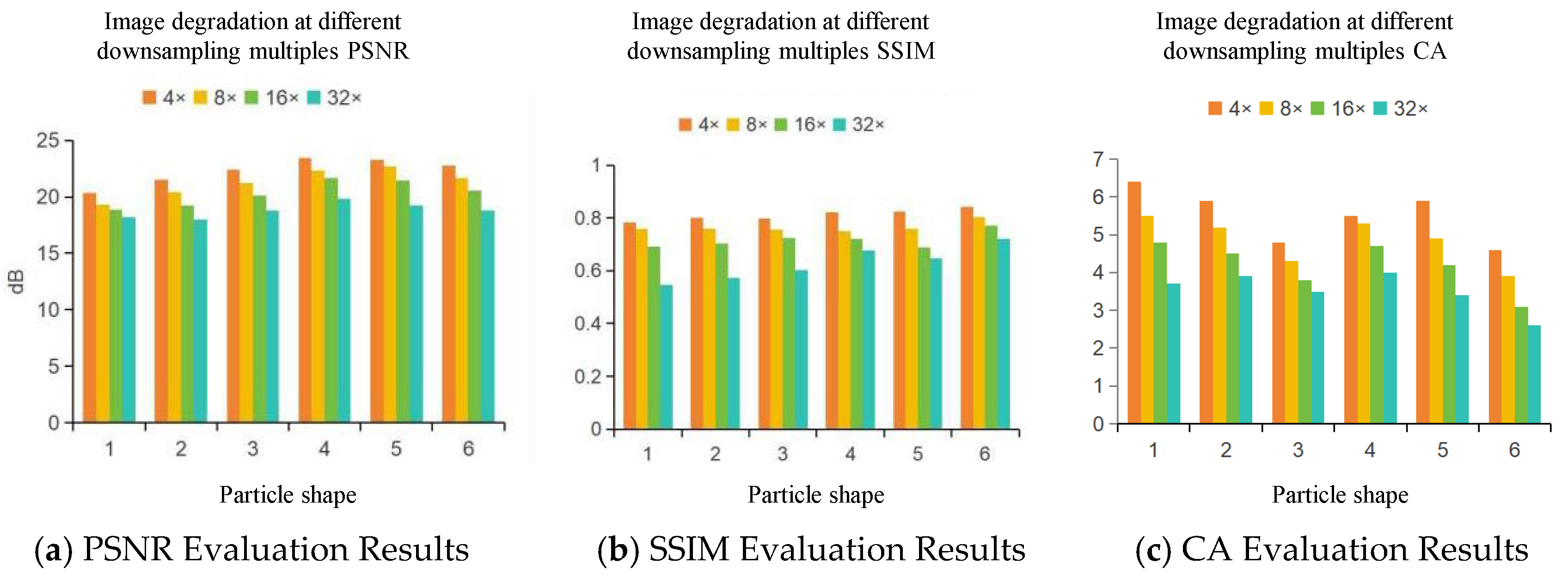
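For reference, PSNR follows the standard definition PSNR = 10·log10(MAX² / MSE), where MSE is the mean squared error between the reference and test images and MAX is the largest possible pixel value. The sketch below assumes 8-bit images (MAX = 255); the function name and the `max_value` parameter are illustrative and not taken from the paper.

```python
import numpy as np

def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-sized images."""
    ref = np.asarray(reference, dtype=np.float64)
    tst = np.asarray(test, dtype=np.float64)
    mse = np.mean((ref - tst) ** 2)
    if mse == 0:
        # Identical images: PSNR is unbounded.
        return float("inf")
    return 10.0 * np.log10(max_value ** 2 / mse)
```

A higher PSNR means the test image is closer to the reference pixel by pixel; it does not by itself capture structural or perceptual similarity, which is why SSIM and CA are reported alongside it.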

3.4. Dual-Stage Degradation Results Analysis

4. Coarse Granular Particle Image Reconstruction Method for Earth–Rock Dam Construction

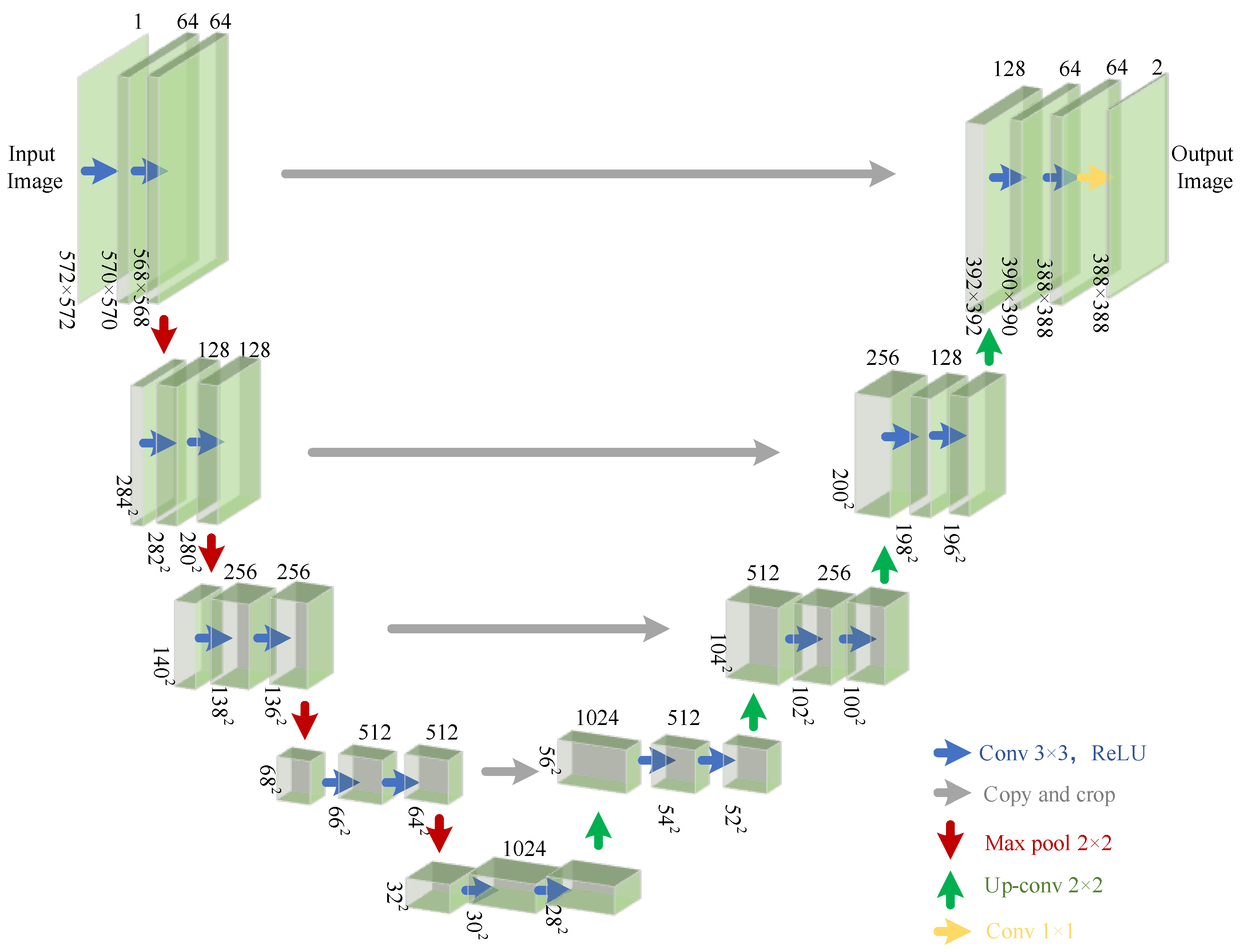

4.1. Generator Analysis

4.2. Discriminator Analysis

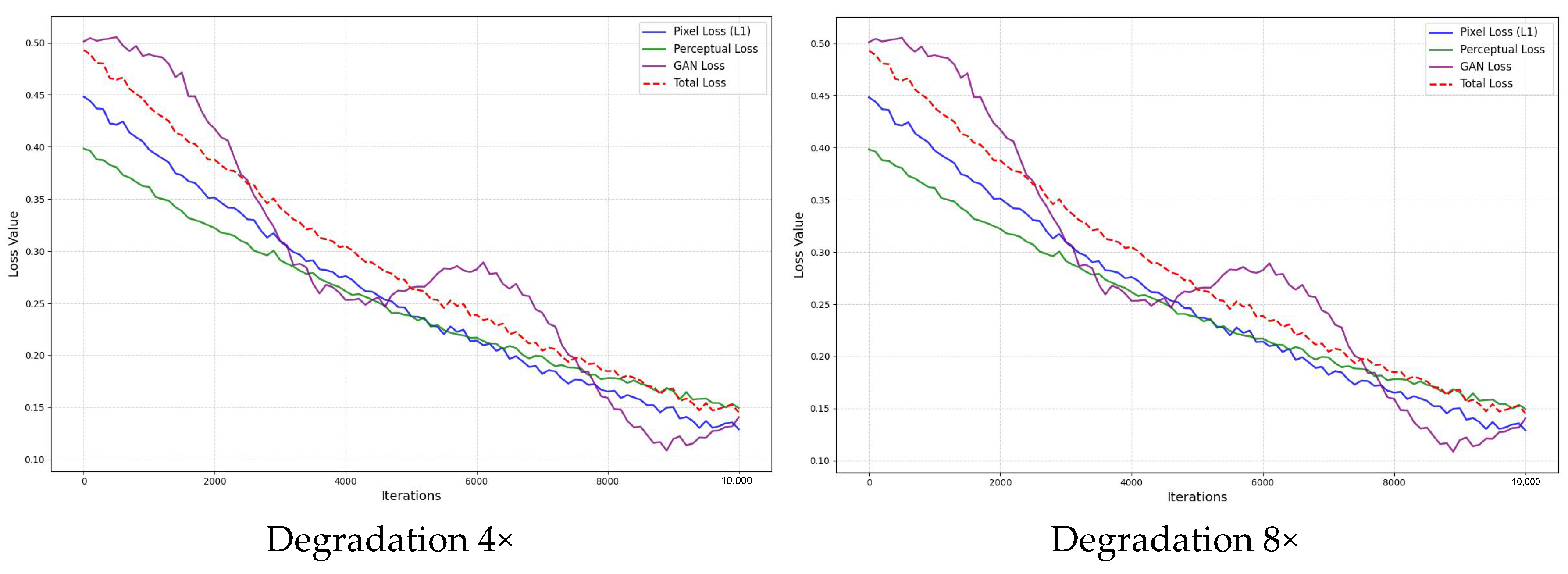

4.3. Loss Function

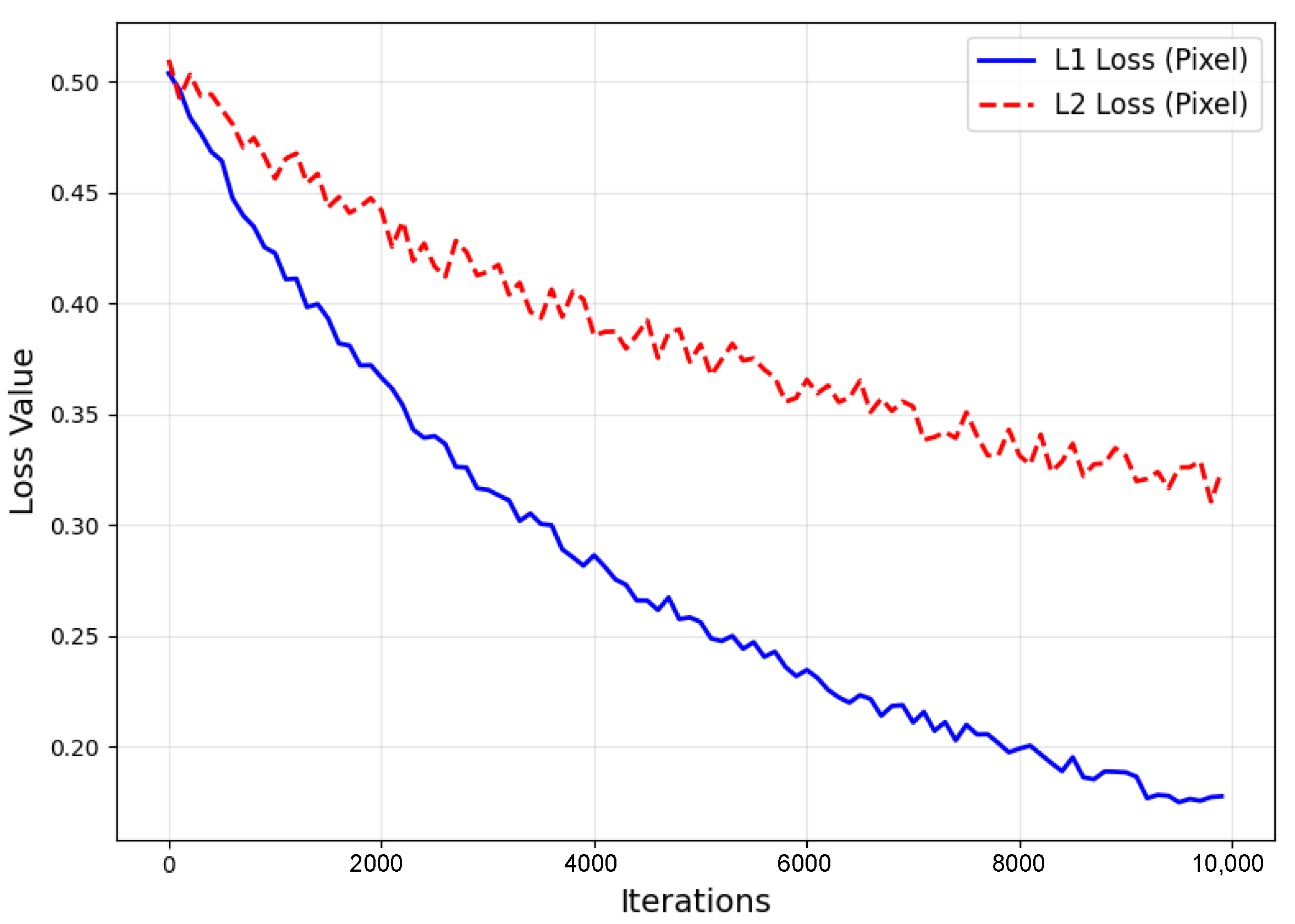

- (1) Pixel Loss

- (2) Perceptual Loss

- (3) Adversarial Loss
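The three terms listed above are typically combined into a single generator objective. The formulation below is one common form, given as an assumption rather than as the authors' exact definition: the norms, the feature extractor $\phi$, the adversarial form, and the weights $\lambda$ are not stated in this section. Here $x$ is the degraded input, $y$ the high-resolution target, $G$ the generator, and $D$ the discriminator.

```latex
\begin{aligned}
L_{\mathrm{pix}} &= \mathbb{E}\,\lVert G(x) - y \rVert_1 \\
L_{\mathrm{per}} &= \mathbb{E}\,\lVert \phi(G(x)) - \phi(y) \rVert_1 \\
L_{\mathrm{adv}} &= -\,\mathbb{E}\,\log D(G(x)) \\
L_{G} &= \lambda_{\mathrm{pix}} L_{\mathrm{pix}}
       + \lambda_{\mathrm{per}} L_{\mathrm{per}}
       + \lambda_{\mathrm{adv}} L_{\mathrm{adv}}
\end{aligned}
```

The pixel term anchors the output to the target, the perceptual term compares images in a pretrained network's feature space, and the adversarial term rewards outputs the discriminator judges to be real.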

5. Experimental Results and Analysis

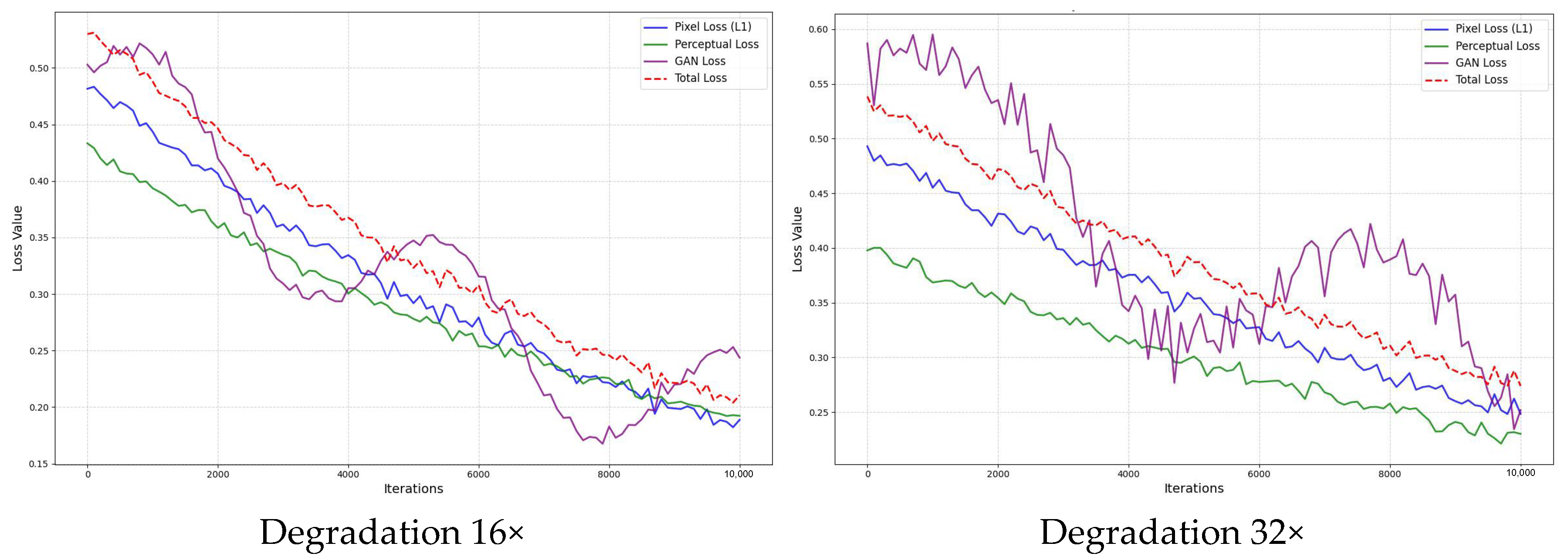

5.1. Image Reconstruction Results

5.2. Detailed Analysis

5.2.1. Original Image

5.2.2. Dual-Stage Degraded Image

5.2.3. Reconstructed Image

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


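| Shape Descriptor | Dmin | Hierarchy |
|---|---|---|
| Aspect ratio (AR) | 25 | Coarse descriptors: Assessing the main dimensions of particles |
| Circle ratio sphericity (SC) | 25 | Coarse descriptors: Assessing the main dimensions of particles |
| Diameter sphericity (SD) | 100 | Medium-coarse descriptor: Pertaining to the areas of particles |
| Area sphericity (SA) | 100 | Medium-coarse descriptor: Pertaining to the areas of particles |
| Perimeter sphericity (SP) | 130 | Fine descriptor: Pertaining to the perimeter of particles |
| Circularity | 130 | Fine descriptor: Pertaining to the perimeter of particles |
| Convexity | 250 | Very fine descriptors: Assessing the perimeters of particles |
| Roundness (R), very angular to angular (0 < R < 0.17) | 250 | Very fine descriptors: Assessing the perimeters of particles |
| Roundness (R), angular to rounded (0.17 < R < 0.70) | 130 | Very fine descriptors: Assessing the perimeters of particles |
| Roundness (R), rounded to well-rounded (0.70 < R < 1.0) | 75 | Very fine descriptors: Assessing the perimeters of particles |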

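| Shape Descriptor | CAmin | Hierarchy |
|---|---|---|
| Aspect ratio (AR) | 2.1 | Coarse descriptors: Assessing the main dimensions of particles |
| Circle ratio sphericity (SC) | 2.1 | Coarse descriptors: Assessing the main dimensions of particles |
| Diameter sphericity (SD) | 3.1 | Medium-coarse descriptor: Pertaining to the areas of particles |
| Area sphericity (SA) | 3.1 | Medium-coarse descriptor: Pertaining to the areas of particles |
| Perimeter sphericity (SP) | 3.4 | Fine descriptor: Pertaining to the perimeter of particles |
| Circularity | 3.4 | Fine descriptor: Pertaining to the perimeter of particles |
| Convexity | 4.1 | Very fine descriptors: Assessing the perimeters of particles |
| Roundness (R), very angular to angular (0 < R < 0.17) | 4.1 | Very fine descriptors: Assessing the perimeters of particles |
| Roundness (R), angular to rounded (0.17 < R < 0.70) | 3.4 | Very fine descriptors: Assessing the perimeters of particles |
| Roundness (R), rounded to well-rounded (0.70 < R < 1.0) | 2.9 | Very fine descriptors: Assessing the perimeters of particles |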

| Reconstruction Method | PSNR (dB) | SSIM | CA |
|---|---|---|---|
| SRGAN | 23.03 | 0.7606 | 4.4 |
| SRResNet | 23.34 | 0.7764 | 4.9 |
| ESRGAN | 23.37 | 0.7740 | 5.4 |
| Real-ESRGAN | 24.63 | 0.8402 | 6.2 |
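To make the comparison in the results table concrete, the short script below computes the gain of Real-ESRGAN over each baseline. The numbers are copied from the table; the dictionary layout and the helper name `gains_over` are illustrative only.

```python
# Metric values copied from the reconstruction results table.
results = {
    "SRGAN": {"psnr": 23.03, "ssim": 0.7606, "ca": 4.4},
    "SRResNet": {"psnr": 23.34, "ssim": 0.7764, "ca": 4.9},
    "ESRGAN": {"psnr": 23.37, "ssim": 0.7740, "ca": 5.4},
    "Real-ESRGAN": {"psnr": 24.63, "ssim": 0.8402, "ca": 6.2},
}

def gains_over(baseline, method="Real-ESRGAN"):
    """Absolute metric differences (method minus baseline), rounded to 4 places."""
    return {
        metric: round(results[method][metric] - results[baseline][metric], 4)
        for metric in results[method]
    }

for name in ("SRGAN", "SRResNet", "ESRGAN"):
    print(name, gains_over(name))
```

Against ESRGAN, the closest baseline on PSNR, Real-ESRGAN gains 1.26 dB in PSNR, 0.0662 in SSIM, and 0.8 in CA.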


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, S.; Gao, L.; Zhang, B.; Liu, Z.; Zhang, X.; Guan, L.; Zheng, J. Research on Super-Resolution Reconstruction of Coarse Aggregate Particle Images for Earth–Rock Dam Construction Based on Real-ESRGAN. Sensors 2025, 25, 4084. https://doi.org/10.3390/s25134084
