A Highly Efficient HMI Algorithm for Controlling a Multi-Degree-of-Freedom Prosthetic Hand Using Sonomyography

Abstract

1. Introduction

2. System and AI Model Development

2.1. Programming Environment and Tools

2.2. Classification of Different Hand Gestures Using Ultrasound Imaging

2.2.1. Feature Extraction

2.2.2. Classification

2.3. Replacing Ultrasound Gel and Gel Pads with a Sticky Silicone Pad

2.4. Designing a Novel Prosthetic Hand

3. Experiment and Results

3.1. Participants

3.2. Experimental Setup

3.3. Experiment 1: Performance of Offline Classification

Data Collection for Offline Testing

3.4. Experiment 2: Real-Time Functional Performance

Data Collection for Real-Time Classification Testing

3.5. Results

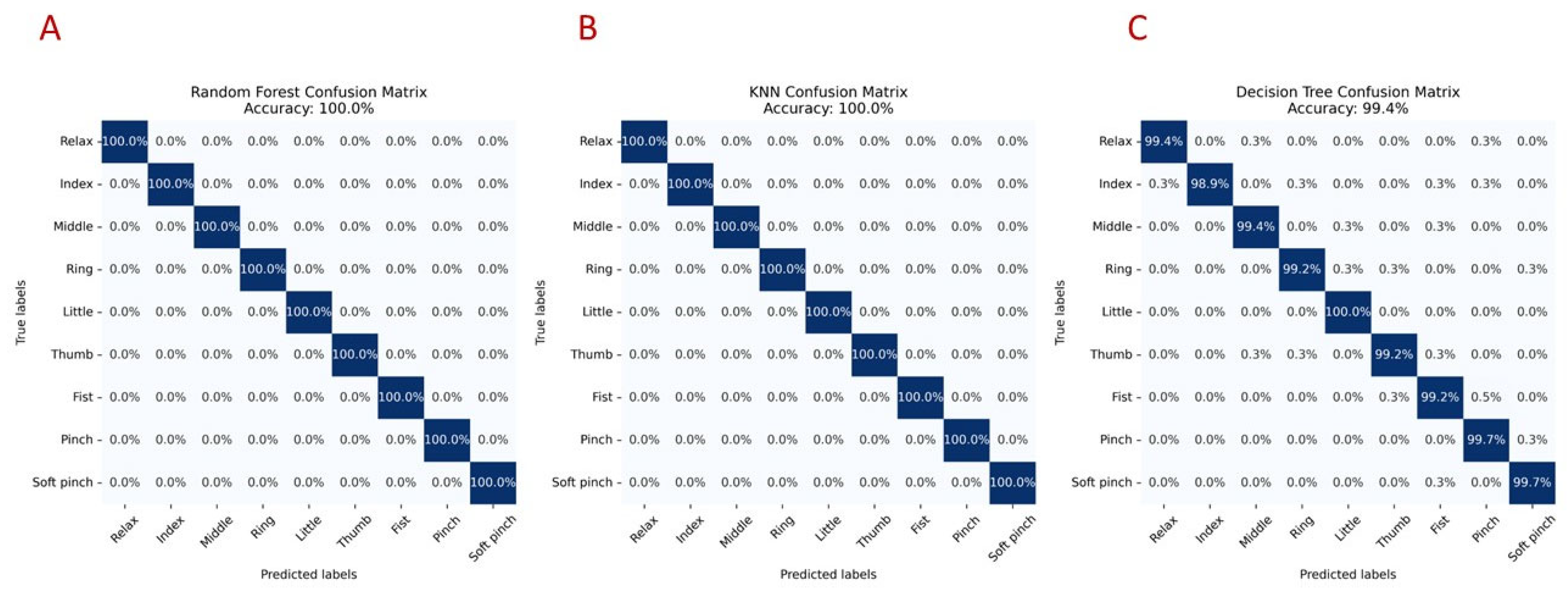

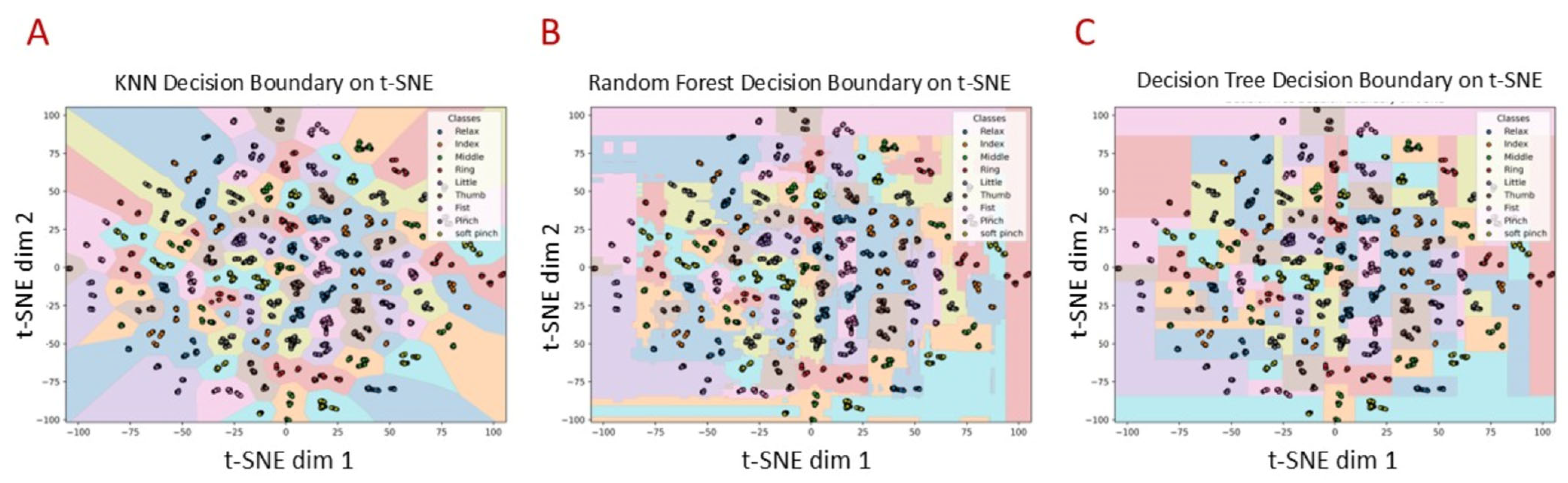

3.5.1. Offline Classification Results

3.5.2. Real-Time Performance Results

3.5.3. Evaluating the Potential of Using a Silicone Pad Instead of Ultrasound Gel or a Gel Pad

4. Discussion

Limitations and Future Work

5. Patents

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HMI | Human–Machine Interface |
| SMG | Sonomyography |
| EMG | Electromyography |
| EEG | Electroencephalography |
| MIRA | Myoelectric Implantable Recording Array |
| MM | Magnetomicrometry |
| SDA | Subclass Discriminant Analysis |
| PCA | Principal Component Analysis |
| SVM | Support Vector Machine |
| BP-ANN | Backpropagation Artificial Neural Network |
| DOF | Degree of Freedom |
| B&B | Box and Blocks |
| TB&B | Targeted Box and Blocks |
| ARAT | Action Research Arm Test |
| CNN | Convolutional Neural Network |
| RF | Random Forest |
| KNN | K-Nearest Neighbors |
| DTC | Decision Tree Classifier |
| SVR | Support Vector Regression |
| NNR | Nearest Neighbor Regression |
| DTR | Decision Tree Regression |
| FDS | Flexor Digitorum Superficialis |
| FPL | Flexor Pollicis Longus |
| FDP | Flexor Digitorum Profundus |
| ADL | Activity of Daily Living |

| ADL | Activity of Daily Living |


| Machine Learning Algorithm | Transfer Learning Model | Accuracy |
|---|---|---|
| Random Forest (RF) | InceptionResNetV2 | 100% |
| K-Nearest Neighbors (KNN) | InceptionResNetV2 | 100% |
| Decision Tree Classifier (DTC) | InceptionResNetV2 | 100% |
| Support Vector Machine (SVM) | InceptionResNetV2 | 100% |
| Random Forest (RF) | VGG19 | 100% |
| K-Nearest Neighbors (KNN) | VGG19 | 100% |
| Decision Tree Classifier (DTC) | VGG19 | 100% |
| Support Vector Machine (SVM) | VGG19 | 100% |
| Random Forest (RF) | VGG16 | 100% |
| K-Nearest Neighbors (KNN) | VGG16 | 100% |
| Decision Tree Classifier (DTC) | VGG16 | 100% |
| Support Vector Machine (SVM) | VGG16 | 100% |
| Multi-Layer Perceptron (MLP) | VGG16 | 23% |
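The classification table above pairs a pretrained convolutional backbone (InceptionResNetV2, VGG19 or VGG16) with a classical classifier. The sketch below covers only the classifier-comparison stage. It assumes each ultrasound frame has already been passed through a frozen backbone, for example VGG16 with its classification head removed and global average pooling, so that every frame becomes a fixed-length feature vector. The backbone step is not shown. The function name `compare_classifiers`, the hyperparameters and the 5-fold stratified cross-validation protocol are illustrative assumptions, not the authors' settings.

```python
from __future__ import annotations

import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def compare_classifiers(
    features: np.ndarray, labels: np.ndarray, n_splits: int = 5, seed: int = 0
) -> dict[str, float]:
    """Mean k-fold accuracy of four classical classifiers on fixed CNN features.

    Hypothetical helper: `features` is assumed to be an (n_frames, n_features)
    array from a frozen pretrained backbone; `labels` holds one gesture ID per frame.
    """
    models = {
        "RF": RandomForestClassifier(n_estimators=200, random_state=seed),
        # Distance- and kernel-based models are scaled; tree models do not need it.
        "KNN": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5)),
        "DTC": DecisionTreeClassifier(random_state=seed),
        "SVM": make_pipeline(StandardScaler(), SVC(kernel="rbf")),
    }
    # Stratified folds keep the gesture proportions equal in every fold.
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    return {
        name: float(cross_val_score(model, features, labels, cv=cv, scoring="accuracy").mean())
        for name, model in models.items()
    }


if __name__ == "__main__":
    from sklearn.datasets import make_blobs

    # Synthetic stand-in for 512-dimensional CNN features of four gesture classes.
    X, y = make_blobs(n_samples=300, centers=4, n_features=512, random_state=1)
    for name, acc in compare_classifiers(X, y).items():
        print(f"{name}: {acc:.3f}")
```

Consecutive ultrasound frames from the same trial are strongly correlated. To keep each trial entirely in either the training or the test fold, one design choice is to pass trial identifiers as `groups` to `cross_val_score` together with scikit-learn's `GroupKFold`, instead of shuffling individual frames with `StratifiedKFold`.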

| Regression Algorithm | Accuracy |
|---|---|
| Neural Network Regression (NNR) | 100% |
| Decision Tree Regression (DTR) | 91.72% |
| Support Vector Regression (SVR-L) | 55.96% |
| Support Vector Regression (SVR-P) | 55.38% |

| Test | Measure | Hand | A1 | A2 |
|---|---|---|---|---|
| B&B | Number of blocks | Left | 12 | 8 |
| B&B | Number of blocks | Right | 45 | 47 |
| TB&B (4 × 4) | Time (s) | Left | 86.66 | 136.79 |
| TB&B (4 × 4) | Time (s) | Right | 31.31 | 21.23 |
| TB&B (3 × 3) | Time (s) | Left | 41.40 | 67.18 |
| TB&B (3 × 3) | Time (s) | Right | 17.00 | 12.28 |
| ARAT | Score (total) | Left | 40 | 40 |
| ARAT | Score (total) | Right | 57 | 57 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nazari, V.; Zheng, Y.-P. A Highly Efficient HMI Algorithm for Controlling a Multi-Degree-of-Freedom Prosthetic Hand Using Sonomyography. Sensors 2025, 25, 3968. https://doi.org/10.3390/s25133968

Nazari V, Zheng Y-P. A Highly Efficient HMI Algorithm for Controlling a Multi-Degree-of-Freedom Prosthetic Hand Using Sonomyography. Sensors. 2025; 25(13):3968. https://doi.org/10.3390/s25133968

Chicago/Turabian Style: Nazari, Vaheh, and Yong-Ping Zheng. 2025. "A Highly Efficient HMI Algorithm for Controlling a Multi-Degree-of-Freedom Prosthetic Hand Using Sonomyography" Sensors 25, no. 13: 3968. https://doi.org/10.3390/s25133968

APA Style: Nazari, V., & Zheng, Y.-P. (2025). A Highly Efficient HMI Algorithm for Controlling a Multi-Degree-of-Freedom Prosthetic Hand Using Sonomyography. Sensors, 25(13), 3968. https://doi.org/10.3390/s25133968