Cross-Feature Hybrid Associative Priori Network for Pulsar Candidate Screening

Abstract

1. Introduction

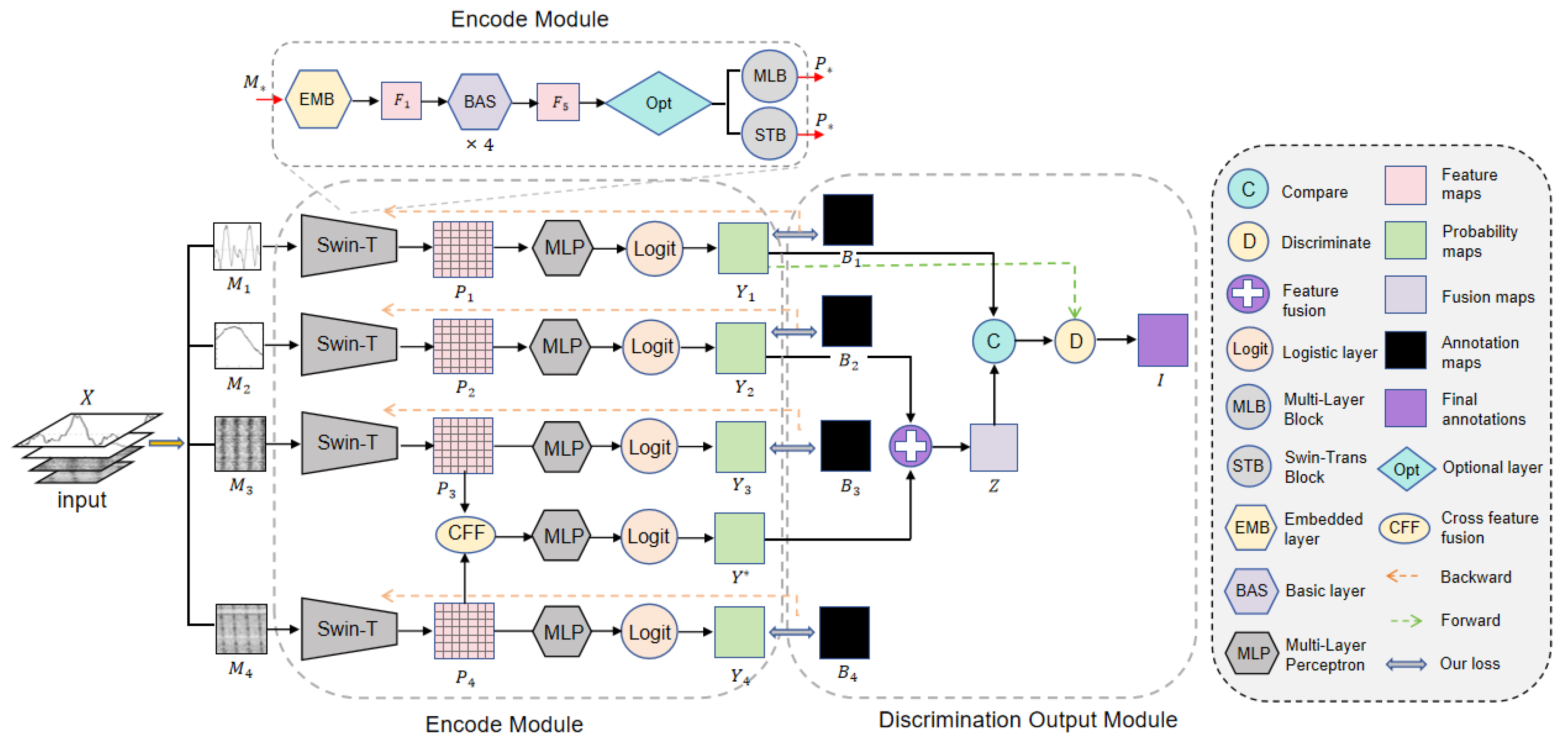

- A cross-feature hybrid associative priori network (CFHAPNet) and corresponding strategies are proposed. Through hybrid association, the method integrates multi-channel data composed of the heterogeneous subimages of each pulsar candidate (mean pulse profile, DM curve, time–phase, and frequency–phase plots). By tracking, fusing, and comparing feature weight mappings across channels, it addresses the challenge of jointly recognizing multi-view subimages that are otherwise relatively independent.

- The encoder is structurally enhanced by adjusting the downsampling scheme, adding optional layers, and introducing a cross-feature fusion (CFF) module. These modifications improve the model’s capacity to capture fine-grained textural details in multi-view subimages and refine the generation of weak semantic priors, raising Top-1 accuracy on Result Set I from 93.08% (Swin-T baseline) to 97.23%.

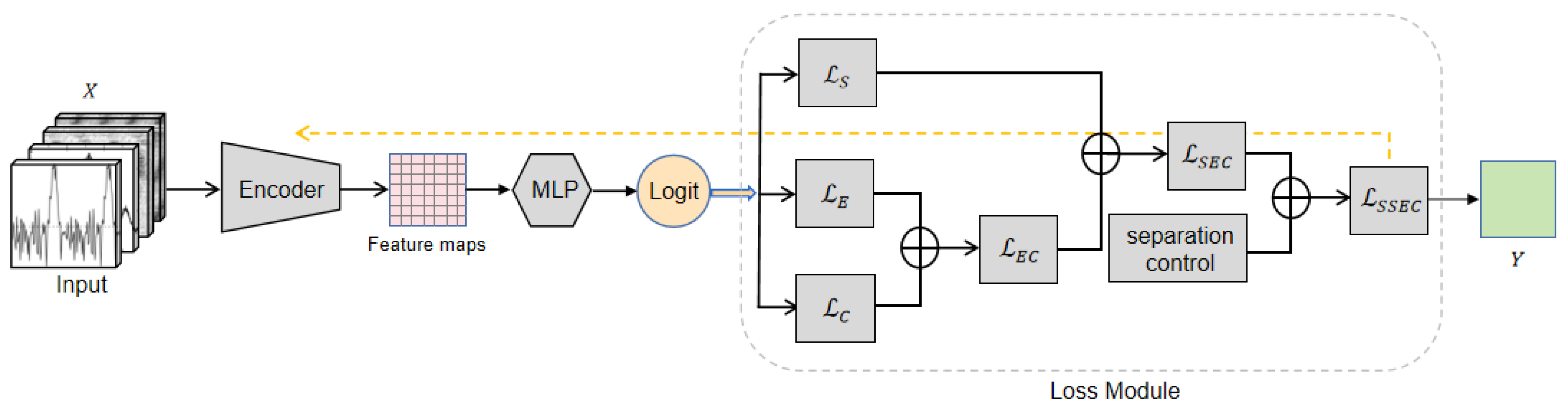

- The loss function is enhanced by introducing a cosine loss and learnable parameters to strengthen intra-class cohesion and inter-class separability. These refinements address the original loss function’s difficulty in discriminating hard samples and handling feature ambiguity, raising Top-1 accuracy on Result Set I from 97.23% to 98.63%.
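A minimal sketch of one common cosine-based classification loss, the large-margin cosine (CosFace-style) loss, is shown below for a single embedding. It is an assumption that CFHAPNet's cosine loss takes this form; the scale `s` (which a training framework could treat as a learnable parameter), the margin `m`, and the toy class prototypes are illustrative values, not settings from this work.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine_margin_loss(feature, class_weights, target, s=16.0, m=0.2):
    """Large-margin cosine loss for one sample (illustrative s and m).

    feature:       embedding vector of the candidate
    class_weights: one weight vector per class (e.g. [non-pulsar, pulsar])
    target:        index of the true class
    s:             logit scale; assumed learnable in a real training setup
    m:             additive cosine margin applied to the target class only
    """
    f = l2_normalize(feature)
    cosines = [sum(a * b for a, b in zip(f, l2_normalize(w))) for w in class_weights]
    logits = [s * (c - m) if k == target else s * c for k, c in enumerate(cosines)]
    # Numerically stable softmax cross-entropy on the scaled, margin-adjusted cosines.
    top = max(logits)
    log_sum = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum - logits[target]

weights = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical class prototypes
print(cosine_margin_loss([0.1, 0.9], weights, target=1))  # well-aligned sample
print(cosine_margin_loss([0.9, 0.1], weights, target=1))  # misaligned sample
```

Because the margin is subtracted only from the target-class cosine, a sample keeps incurring loss until its similarity to its own class clearly exceeds its similarity to the other class, which is one way to encourage both tighter classes and wider separation between them.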

2. Proposed Methods

2.1. Encoder Module

2.1.1. Optional Layer

2.1.2. Cross-Feature Fusion Module
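As a hedged illustration of fusing relatively independent views, the sketch below combines per-view feature vectors for the four subimages with softmax-normalized view weights. The view names, the per-view relevance scores, the vector size, and the weighted-sum form are all assumptions; this is a generic attention-style fusion, not the CFF module itself.

```python
import math

VIEWS = ["mean_pulse_profile", "dm_curve", "time_phase", "frequency_phase"]

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(x - top) for x in scores]
    total = sum(exps)
    return [e / total for e in exps]

def fuse_views(view_features, view_scores):
    """Weighted sum of per-view feature vectors.

    view_features: dict view name -> feature vector (same length for all views)
    view_scores:   dict view name -> unnormalized relevance score
                   (in a network these would come from learned layers)
    Returns the fused vector and the normalized weight of each view.
    """
    weights = softmax([view_scores[v] for v in VIEWS])
    dim = len(view_features[VIEWS[0]])
    fused = [0.0] * dim
    for w, v in zip(weights, VIEWS):
        for i, x in enumerate(view_features[v]):
            fused[i] += w * x
    return fused, dict(zip(VIEWS, weights))

# Hypothetical 2-D features and scores for one candidate.
features = {
    "mean_pulse_profile": [0.2, 0.8],
    "dm_curve": [0.4, 0.6],
    "time_phase": [0.9, 0.1],
    "frequency_phase": [0.7, 0.3],
}
scores = {"mean_pulse_profile": 0.5, "dm_curve": 0.5, "time_phase": 1.5, "frequency_phase": 1.5}
fused, weights = fuse_views(features, scores)
print(weights)
print(fused)
```

Views with higher scores dominate the fused vector, and the normalized weights can be inspected to see how much each subimage contributed to the fused representation.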

2.2. Discrimination Output Module

Algorithm 1: CFHAPNet strategy

2.3. Loss Function

2.3.1. Enhancement of Intra-Class Compactness

2.3.2. Separation Control

3. Experiments

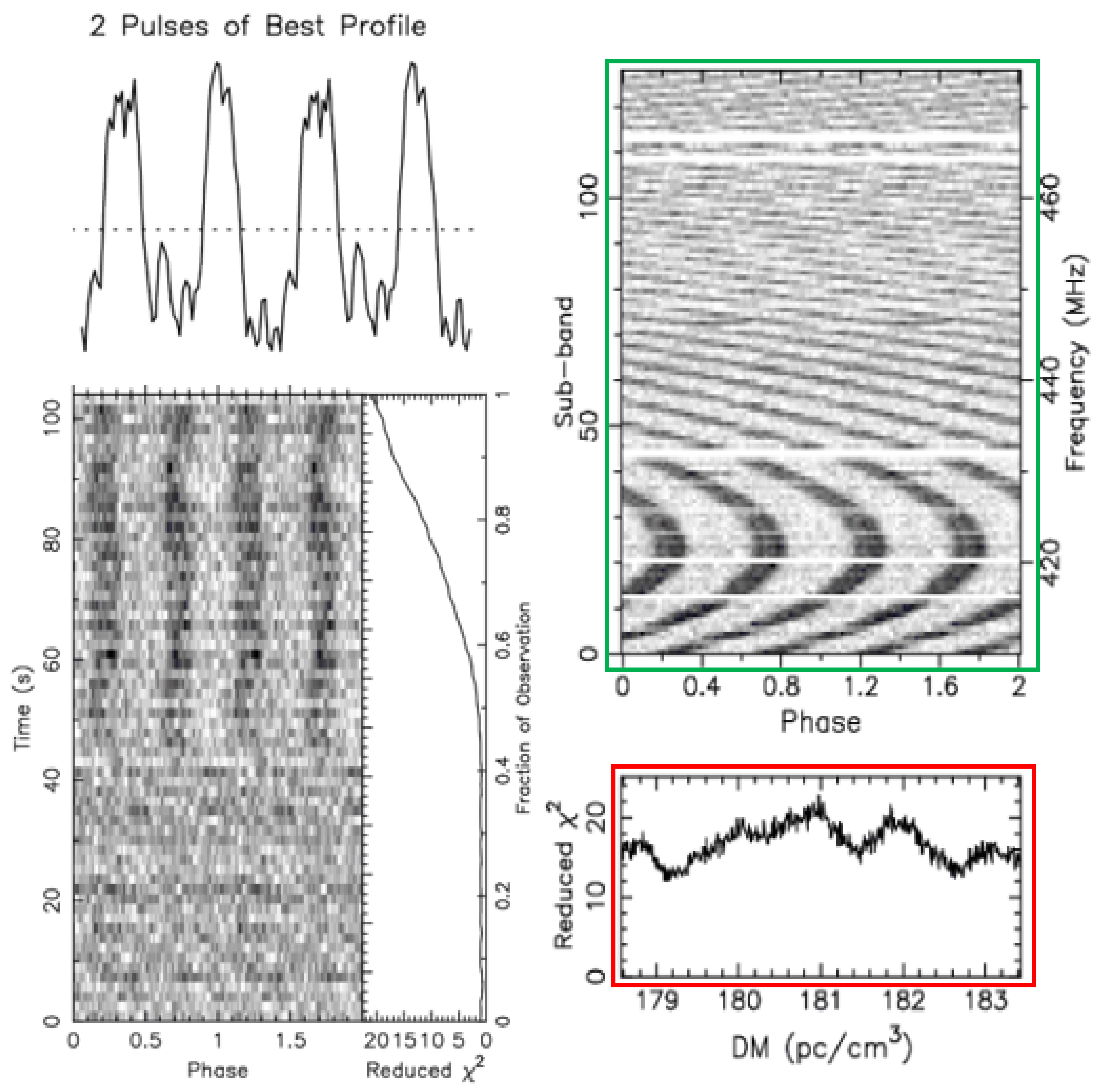

3.1. Data Preparation

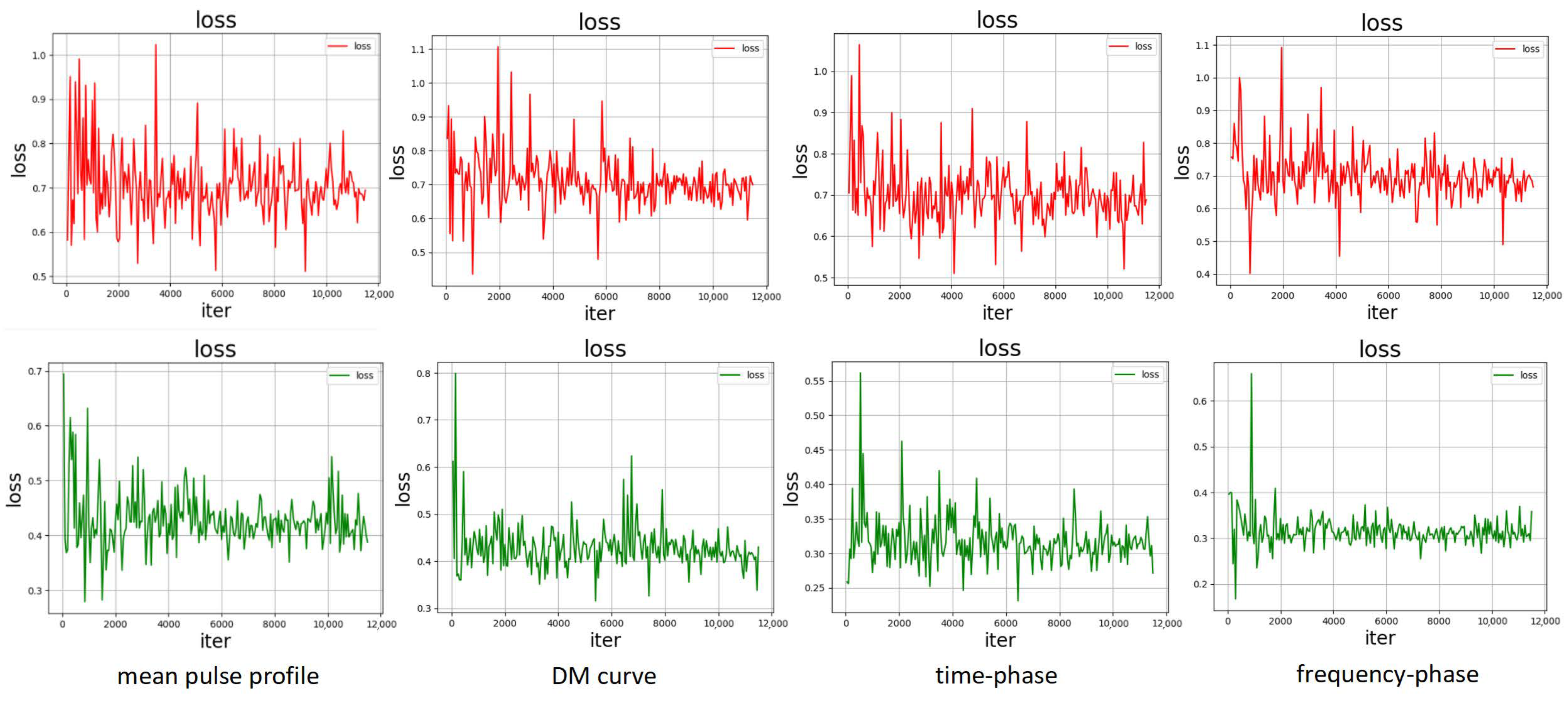

3.2. Training Configurations

3.3. Evaluation Metrics
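The retrieval-style results in Section 4 are reported as mAP and CMC rank-1 (CMC1). A minimal sketch of how these two metrics are commonly computed from a query-to-gallery distance matrix follows; the toy distances and labels are hypothetical, and the query/gallery protocol and distance function used in this work are not reproduced here.

```python
def rank_gallery(distances):
    """Gallery indices sorted from nearest to farthest."""
    return sorted(range(len(distances)), key=lambda j: distances[j])

def average_precision(ranked_matches):
    """AP over a fully ranked list of booleans (True = same label as the query)."""
    hits, precision_sum = 0, 0.0
    for rank, is_match in enumerate(ranked_matches, start=1):
        if is_match:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0

def map_and_cmc1(dist_matrix, query_labels, gallery_labels):
    """Mean average precision and CMC rank-1 accuracy over all queries."""
    aps, rank1_hits = [], 0
    for q, row in enumerate(dist_matrix):
        order = rank_gallery(row)
        matches = [gallery_labels[j] == query_labels[q] for j in order]
        aps.append(average_precision(matches))
        rank1_hits += matches[0]  # nearest gallery item has the correct label
    return sum(aps) / len(aps), rank1_hits / len(dist_matrix)

# Hypothetical example: 2 queries, 4 gallery items, labels 1 = pulsar, 0 = non-pulsar.
dist = [
    [0.1, 0.7, 0.3, 0.9],
    [0.2, 0.4, 0.8, 0.6],
]
print(map_and_cmc1(dist, query_labels=[1, 0], gallery_labels=[1, 0, 1, 0]))
```

Because the whole gallery is ranked, dividing by the number of hits is the same as dividing by the number of relevant gallery items, which is the usual definition of AP.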

4. Results

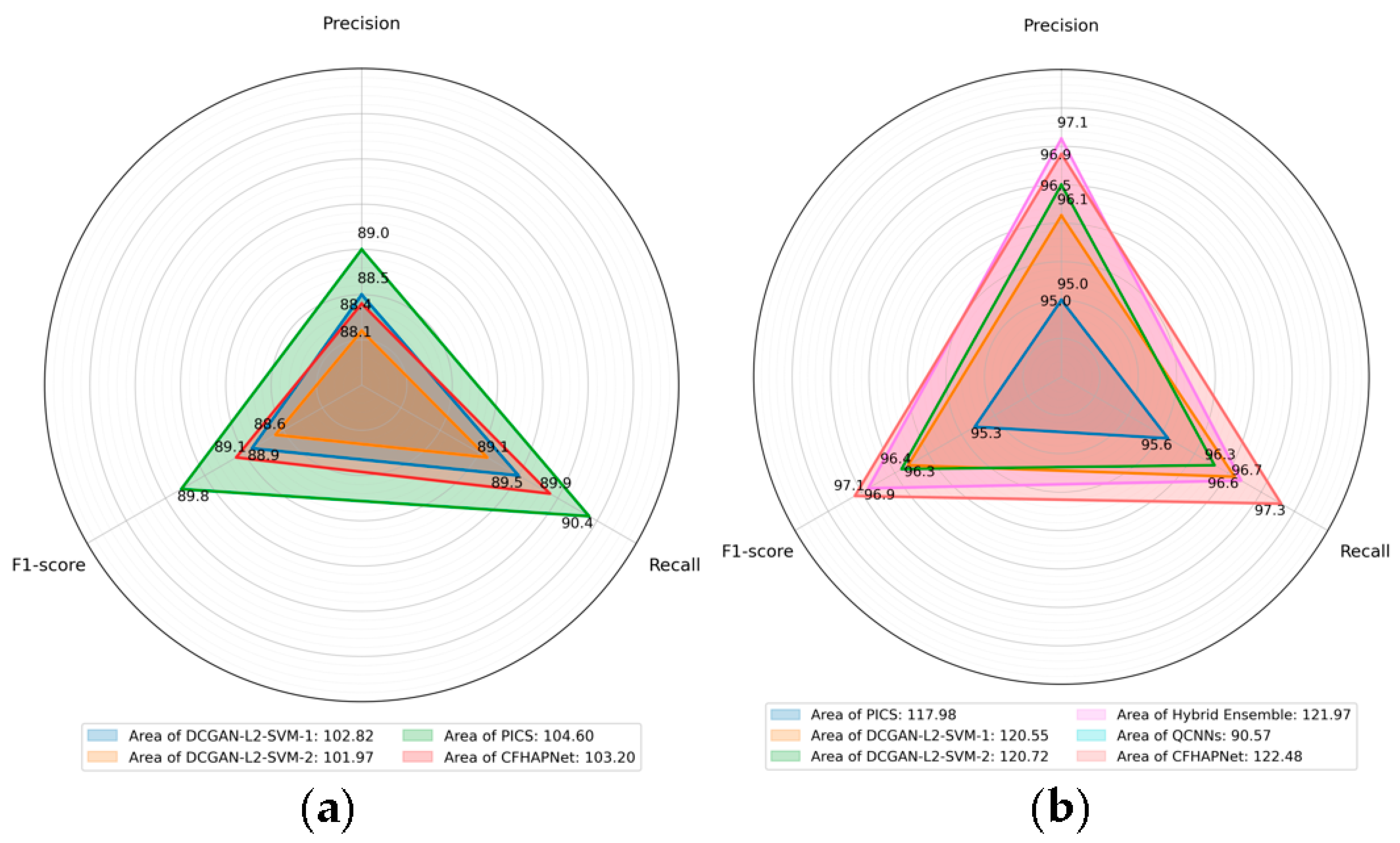

4.1. Comparison with SOTA Methods

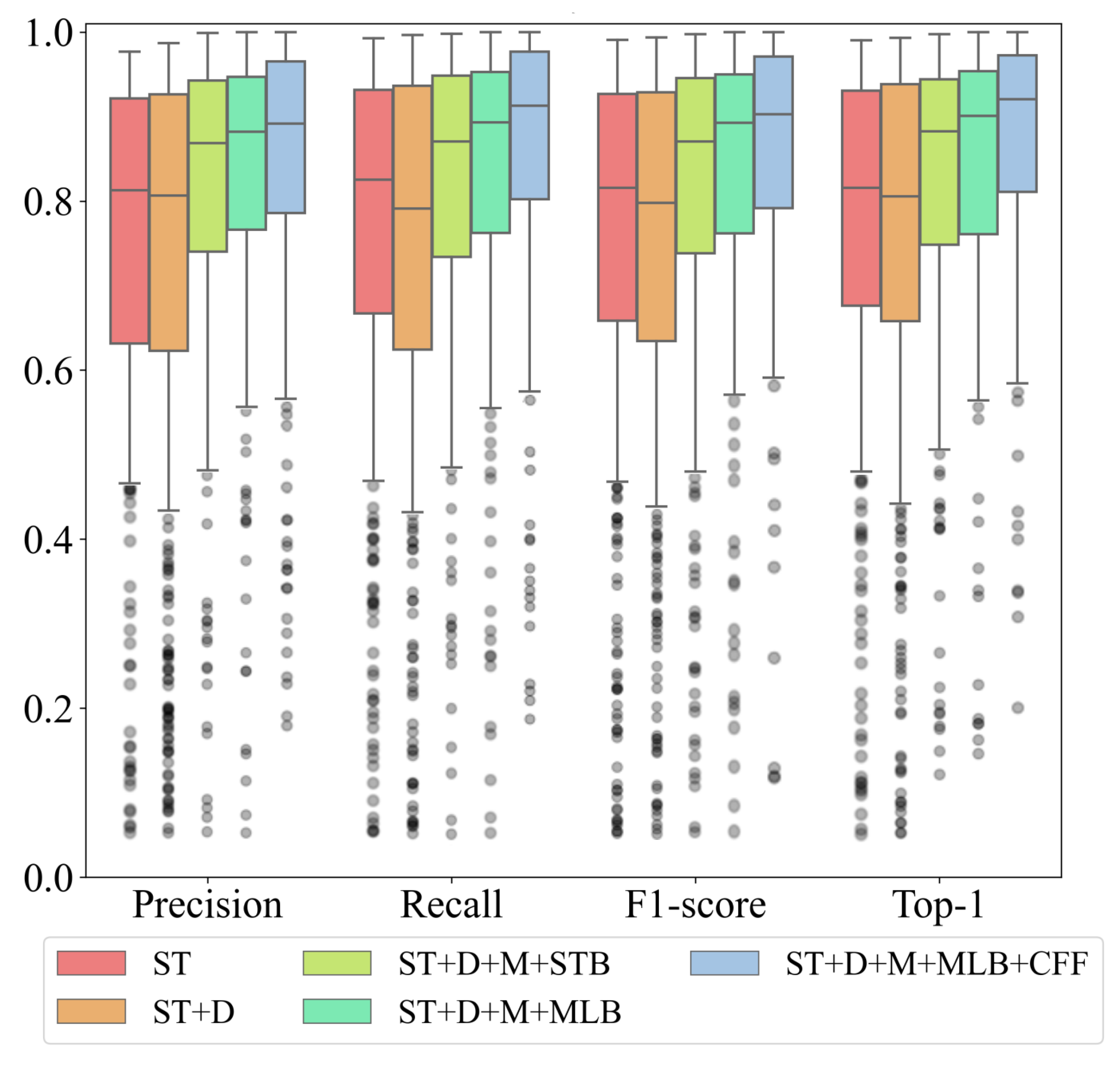

4.2. Ablation Results

4.2.1. CFHAPNet Model Validation

4.2.2. Structural Improvements of Encoder

4.3. Improvements of Loss Functions

4.3.1. Ablation Results

4.3.2. Generalization Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CFHAPNet | Cross-feature hybrid associative priori network |
| FAST | Five-hundred-meter Aperture Spherical Telescope |
| ConvNets | Convolutional neural networks |
| ViT | Vision Transformer |
| Swin-T | Swin Transformer |
| SOTA | State of the art |
| CFF | Cross-feature fusion |
| mAP | Mean average precision |
| CMC | Cumulative Matching Characteristics |

References

- Thanu, S.; Subha, V. Emerging Trends in Pulsar Star Studies: A Synthesis of Machine Learning Techniques in Pulsar Star Research. Ann. Comput. Sci. Inf. Syst. 2023, 38, 93–98.

- Sureja, S.; Gadhia, B. Advances in Pulsar Candidate Selection: A Neural Network Perspective. J. Soft Comput. Paradig. 2023, 5, 287–300.

- Zhang, H.; Wang, J.; Zhang, Y.; Du, X.; Wu, H.; Zhang, T. Review of artificial intelligence applications in astronomical data processing. Astron. Tech. Instruments 2023, 1, 1–15.

- Hobbs, G.B.; Bailes, M.; Bhat, N.D.R.; Burke-Spolaor, S.; Champion, D.J.; Coles, W.; Hotan, A.; You, X.P. Gravitational-wave detection using pulsars: status of the Parkes Pulsar Timing Array project. Publ. Astron. Soc. Aust. 2009, 26, 103–109.

- Sengar, R.; Bailes, M.; Balakrishnan, V.; Bernadich, M.I.; Burgay, M.; Barr, E.D.; Wongphechauxsorn, J. Discovery of 37 new pulsars through GPU-accelerated reprocessing of archival data of the Parkes multibeam pulsar survey. Mon. Not. R. Astron. Soc. 2023, 522, 1071–1090.

- Wongphechauxsorn, J.; Champion, D.J.; Bailes, M.; Balakrishnan, V.; Barr, E.D.; Bernadich, M.I.; van Straten, W. The High Time Resolution Universe Pulsar survey–XVIII. The reprocessing of the HTRU-S Low Lat survey around the Galactic Centre using a Fast Folding Algorithm pipeline for accelerated pulsars. Mon. Not. R. Astron. Soc. 2024, 527, 3208–3219.

- Parent, E.; Kaspi, V.M.; Ransom, S.M.; Freire, P.C.C.; Brazier, A.; Camilo, F.; Zhu, W.W. Eight millisecond pulsars discovered in the Arecibo PALFA survey. Astrophys. J. 2019, 886, 148.

- Lynch, R.S.; Boyles, J.; Ransom, S.M.; Stairs, I.H.; Lorimer, D.R.; McLaughlin, M.A.; Van Leeuwen, J. The Green Bank Telescope 350 MHz Drift-scan Survey II: Data Analysis and the Timing of 10 New Pulsars, Including a Relativistic Binary. Astrophys. J. 2013, 763, 81.

- Swiggum, J.K.; Pleunis, Z.; Parent, E.; Kaplan, D.L.; McLaughlin, M.A.; Stairs, I.H.; Surnis, M. The Green Bank North Celestial Cap Survey. VII. 12 New Pulsar Timing Solutions. Astrophys. J. 2023, 944, 154.

- Van Der Wateren, E.; Bassa, C.G.; Cooper, S.; Grießmeier, J.M.; Stappers, B.W.; Hessels, J.W.T.; Wucknitz, O. The LOFAR Tied-Array All-Sky Survey: Timing of 35 radio pulsars and an overview of the properties of the LOFAR pulsar discoveries. Astron. Astrophys. 2023, 669, A160.

- Zhang, B.; Shang, W.; Gao, X.; Li, Z.; Wang, X.; Ma, Y.; Li, Q. Synthetic design and analysis of the new feed cabin mechanism in Five-hundred-meter Aperture Spherical radio Telescope (FAST). Mech. Mach. Theory 2024, 191, 105507.

- Smits, R.; Kramer, M.; Stappers, B.; Lorimer, D.R.; Cordes, J.; Faulkner, A. Pulsar searches and timing with the Square Kilometre Array. Astron. Astrophys. 2009, 493, 1161–1170.

- Labate, M.G.; Waterson, M.; Alachkar, B.; Hendre, A.; Lewis, P.; Bartolini, M.; Dewdney, P. Highlights of the Square Kilometre Array low frequency (SKA-LOW) telescope. J. Astron. Telesc. Instruments Syst. 2022, 8, 011024.

- Ransom, S. PRESTO. Available online: https://www.cv.nrao.edu/~sransom/presto/ (accessed on 17 April 2023).

- Cai, N.; Han, J.L.; Jing, W.C.; Zhang, Z.; Zhou, D.; Chen, X. Pulsar Candidate Classification Using a Computer Vision Method from a Combination of Convolution and Attention. Res. Astron. Astrophys. 2023, 23, 104005.

- Sett, S.; Bhat, N.D.R.; Sokolowski, M.; Lenc, E. Image-based searches for pulsar candidates using MWA VCS data. Publ. Astron. Soc. Aust. 2023, 40, e003.

- Salal, J.; Tendulkar, S.P.; Marthi, V.R. Identifying pulsar candidates in interferometric radio images using scintillation. Astrophys. J. 2024, 974, 46.

- You, Z.Y.; Pan, Y.R.; Ma, Z.; Zhang, L.; Zhang, D.D.; Li, S.Y. Applying hybrid clustering in pulsar candidate sifting with multi-modality for FAST survey. Res. Astron. Astrophys. 2024, 24, 035022.

- Wang, Y.; Zheng, J.; Pan, Z.; Mingtao, L.I. An Overview of Pulsar Candidate Classification Methods. J. Deep. Space Explor. 2018, 5, 203–211+218.

- Tariq, I.; Qiao, M.; Wei, L.; Yao, S.; Zhou, C.; Ali, Z.; Spanakis-Misirlis, A. Classification of pulsar signals using ensemble gradient boosting algorithms based on asymmetric under-sampling method. J. Instrum. 2022, 17, P03020.

- Zhu, W.W.; Berndsen, A.; Madsen, E.C.; Tan, M.; Stairs, I.H.; Brazier, A.; Venkataraman, A. Searching for pulsars using image pattern recognition. Astrophys. J. 2014, 781, 117.

- Lyon, R.J.; Stappers, B.W.; Cooper, S.; Brooke, J.M.; Knowles, J.D. Fifty years of pulsar candidate selection: From simple filters to a new principled real-time classification approach. Mon. Not. R. Astron. Soc. 2016, 459, 1104–1123.

- Vafaei Sadr, A.; Bassett, B.A.; Oozeer, N.; Fantaye, Y.; Finlay, C. Deep learning improves identification of radio frequency interference. Mon. Not. R. Astron. Soc. 2020, 499, 379–390.

- Sadhu, A. Pulsar Star Detection: A Comparative Analysis of Classification Algorithms using SMOTE. Int. J. Comput. Inf. Technol. 2022, 11, 38–46.

- Bhat, S.S.; Prabu, T.; Stappers, B.; Ghalame, A.; Saha, S.; Sudarshan, T.B.; Hosenie, Z. Investigation of a machine learning methodology for the SKA pulsar search pipeline. J. Astrophys. Astron. 2023, 44, 36.

- Wang, Y.; Pan, Z.; Zheng, J.; Qian, L.; Li, M. A hybrid ensemble method for pulsar candidate classification. Astrophys. Space Sci. 2019, 364, 139.

- Guo, P.; Duan, F.; Wang, P.; Yao, Y.; Yin, Q.; Zhang, L. Pulsar candidate classification using generative adversary networks. Mon. Not. R. Astron. Soc. 2019, 490, 5424–5439.

- Song, J.R. The effectiveness of different machine learning algorithms in classifying pulsar stars and the impact of data preparation. J. Phys. Conf. Ser. 2023, 2428, 012046.

- Ding, X.; Zhang, Y.; Ge, Y.; Zhao, S.; Song, L.; Yue, X.; Shan, Y. UniRepLKNet: A Universal Perception Large-Kernel ConvNet for Audio, Video, Point Cloud, Time-Series and Image Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 5513–5524.

- Slabbert, D.; Lourens, M.; Petruccione, F. Pulsar classification: Comparing quantum convolutional neural networks and quantum support vector machines. Quantum Mach. Intell. 2024, 6, 56.

- Zhang, C.J.; Shang, Z.H.; Chen, W.M.; Miao, X.H. A review of research on pulsar candidate recognition based on machine learning. Procedia Comput. Sci. 2020, 166, 534–538.

- Ji, L.; Liu, N. Global-local transformer for aerial image semantic segmentation. In Proceedings of the Sixteenth International Conference on Graphics and Image Processing (ICGIP 2024), Nanjing, China, 8–10 November 2024; SPIE: Bellingham, WA, USA, 2025; Volume 13539, pp. 171–180.

- Lian, X.; Huang, X.; Gao, C.; Ma, G.; Wu, Y.; Gong, Y.; Li, J. A Multiscale Local–Global Feature Fusion Method for SAR Image Classification with Bayesian Hyperparameter Optimization Algorithm. Appl. Sci. 2023, 13, 6806.

- Dosovitskiy, A. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Bao, F.; Nie, S.; Xue, K.; Cao, Y.; Li, C.; Su, H.; Zhu, J. All are worth words: A ViT backbone for diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 22669–22679.

- Xia, C.; Wang, X.; Lv, F.; Hao, X.; Shi, Y. ViT-CoMer: Vision transformer with convolutional multi-scale feature interaction for dense predictions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 5493–5502.

- Yuan, L.; Chen, Y.; Wang, T.; Yu, W.; Shi, Y.; Jiang, Z.H.; Yan, S. Tokens-to-token ViT: Training vision transformers from scratch on ImageNet. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 558–567.

- Dong, X.; Bao, J.; Chen, D.; Zhang, W.; Yu, N.; Yuan, L.; Guo, B. CSWin transformer: A general vision transformer backbone with cross-shaped windows. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12124–12134.

- Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Shao, L. PVT v2: Improved baselines with pyramid vision transformer. Comput. Vis. Media 2022, 8, 415–424.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Amodei, D. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Hendricks, L.A.; Mellor, J.; Schneider, R.; Alayrac, J.B.; Nematzadeh, A. Decoupling the role of data, attention, and losses in multimodal transformers. Trans. Assoc. Comput. Linguist. 2021, 9, 570–585.

- Ding, X.; Zhang, X.; Han, J.; Ding, G. Scaling up your kernels to 31 × 31: Revisiting large kernel design in CNNs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11963–11975.

- Mehta, P.; Sagar, A.; Kumari, S. Domain Generalized Recaptured Screen Image Identification Using SWIN Transformer. arXiv 2024, arXiv:2407.17170.

- Shi, L.; Chen, Y.; Liu, M.; Guo, F. DuST: Dual Swin Transformer for Multi-modal Video and Time-Series Modeling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 4537–4546.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 10012–10022.

- Zhu, X.; Wu, Y.; Hu, H.; Zhuang, X.; Yao, J.; Ou, D.; Xu, D. Medical lesion segmentation by combining multimodal images with modality weighted UNet. Med. Phys. 2022, 49, 3692–3704.

- Prakash, A.; Chitta, K.; Geiger, A. Multi-modal fusion transformer for end-to-end autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 7077–7087.

- Liu, C.; Zou, W.; Hu, Z.; Li, H.; Sui, X.; Ma, X.; Guo, N. Bearing Health State Detection Based on Informer and CNN+ Swin Transformer. Machines 2024, 12, 456.

- Zhou, H.Y.; Yu, Y.; Wang, C.; Zhang, S.; Gao, Y.; Pan, J.; Li, W. A transformer-based representation-learning model with unified processing of multimodal input for clinical diagnostics. Nat. Biomed. Eng. 2023, 7, 743–755.

- Akbari, H.; Yuan, L.; Qian, R.; Chuang, W.H.; Chang, S.F.; Cui, Y.; Gong, B. VATT: Transformers for multimodal self-supervised learning from raw video, audio and text. Adv. Neural Inf. Process. Syst. 2021, 34, 24206–24221.

- Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A comprehensive study on center loss for deep face recognition. Int. J. Comput. Vis. 2019, 127, 668–683.

- Han, C.; Pan, P.; Zheng, A.; Tang, J. Cross-modality person re-identification based on heterogeneous center loss and non-local features. Entropy 2021, 23, 919.

- Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A discriminative feature learning approach for deep face recognition. In Proceedings of the Computer Vision – ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part VII 14. Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 499–515.

- Wen, S.; Liu, W.; Yang, Y.; Zhou, P.; Guo, Z.; Yan, Z.; Huang, T. Multilabel image classification via feature/label co-projection. IEEE Trans. Syst. Man Cybern. Syst. 2020, 51, 7250–7259.

- Dai, Y.; Li, X.; Liu, J.; Tong, Z.; Duan, L.Y. Generalizable person re-identification with relevance-aware mixture of experts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 16145–16154.

- Jiang, M.; Zhang, X.; Yu, Y.; Bai, Z.; Zheng, Z.; Wang, Z.; Yang, Y. Robust vehicle re-identification via rigid structure prior. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 4026–4033.

- Tan, L.; Zhang, Y.; Shen, S.; Wang, Y.; Dai, P.; Lin, X.; Ji, R. Exploring invariant representation for visible-infrared person re-identification. arXiv 2023, arXiv:2302.00884.

| Dataset | Positive | Negative | Total |
|---|---|---|---|
| Train | | | |
| PMPS | 700 | 7000 | 7700 |
| HTRU | 900 | 8500 | 9400 |
| FAST | 637 | 9658 | 10,295 |
| Test | | | |
| PMPS | 200 | 3000 | 3200 |
| HTRU | 296 | 2000 | 2296 |
| FAST | 123 | 998 | 1121 |

| Methods | Datasets | Precision (%) | Recall (%) | F1-Score (%) | Positive | Negative |
|---|---|---|---|---|---|---|
| DCGAN-L2-SVM-1 (Guo et al., 2019 [27]) | PMPS | 88.5 | 89.5 | 88.9 | 1500 RP + 8500 GP | 10,000 |
| DCGAN-L2-SVM-2 (Guo et al., 2019 [27]) | PMPS | 88.1 | 89.1 | 88.6 | 1500 RP + 8500 GP | 10,000 |
| PICS (Zhu et al., 2014 [21]) | PMPS | 89.0 | 90.4 | 89.8 | 2000 | 10,000 |
| CFHAPNet | PMPS | 88.4 | 89.9 | 89.1 | 900 | 10,000 |
| PICS (Zhu et al., 2014 [21]) | HTRU | 95.0 | 95.6 | 95.3 | 1196 | 10,500 |
| DCGAN-L2-SVM-1 (Guo et al., 2019 [27]) | HTRU | 96.1 | 96.6 | 96.3 | 696 RP + 9304 GP | 10,000 |
| DCGAN-L2-SVM-2 (Guo et al., 2019 [27]) | HTRU | 96.5 | 96.3 | 96.4 | 696 RP + 9304 GP | 10,000 |
| Hybrid Ensemble (Y. Wang et al., 2019 [26]) | HTRU | 97.1 | 96.7 | 96.9 | 1196 | 10,500 |
| QCNNs (Slabbert et al., 2024 [30]) | HTRU2 | 95.0 | 73.4 | 82.8 | 1639 | 14,620 |
| CFHAPNet | HTRU | 96.9 | 97.3 | 97.1 | 1196 | 10,500 |
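F1 is the harmonic mean of precision and recall, F1 = 2PR/(P + R). Recomputing it from the reported CFHAPNet precision and recall reproduces the tabulated F1 values to one decimal place, as the short check below shows.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (inputs in percent)."""
    return 2 * precision * recall / (precision + recall)

# CFHAPNet rows of the comparison table: (dataset, precision %, recall %, reported F1 %)
rows = [("PMPS", 88.4, 89.9, 89.1), ("HTRU", 96.9, 97.3, 97.1)]
for name, p, r, reported in rows:
    print(f"{name}: computed F1 = {f1_score(p, r):.2f}, reported = {reported}")
```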

| Subplot Categories | Image Size | Precision | Recall | F1-Score | Top-1 | ms/step |
|---|---|---|---|---|---|---|
| Mean pulse profile | 224 × 224 | 81.38 | 88.82 | 84.94 | 83.36 | 66 |
| DM curve | 224 × 224 | 82.14 | 87.72 | 84.84 | 84.52 | 64 |
| Time–phase | 224 × 224 | 86.87 | 86.74 | 86.79 | 87.06 | 70 |
| Frequency–phase | 224 × 224 | 87.58 | 86.38 | 86.98 | 87.53 | 69 |

| Result Set | Image Size | Precision | Recall | F1-Score | Top-1 |
|---|---|---|---|---|---|
| Mosaicking | 224 × 224 | 88.84 | 91.61 | 90.20 | 91.27 |
| Hybrid connection | 224 × 224 | 92.16 | 93.15 | 92.65 | 93.08 |
| Reverse | 224 × 224 | 88.42 | 88.77 | 88.59 | 89.61 |
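One plausible reading of the "Mosaicking" result set is that the four subplots are tiled into a single input image. The sketch below tiles four equally sized 2-D arrays into a 2 × 2 mosaic, for example four 112 × 112 subplots into one 224 × 224 input; the tile order, tile size, and the assumption that mosaicking works this way are illustrative, not details taken from this work.

```python
def mosaic_2x2(tiles):
    """Tile four equally sized 2-D arrays (lists of rows) into one 2 x 2 mosaic.

    Order: tiles[0] top-left, tiles[1] top-right, tiles[2] bottom-left, tiles[3] bottom-right.
    """
    if len(tiles) != 4:
        raise ValueError("expected exactly four tiles")
    h, w = len(tiles[0]), len(tiles[0][0])
    if any(len(t) != h or any(len(row) != w for row in t) for t in tiles):
        raise ValueError("all tiles must share the same height and width")
    top = [tiles[0][i] + tiles[1][i] for i in range(h)]     # concatenate rows side by side
    bottom = [tiles[2][i] + tiles[3][i] for i in range(h)]
    return top + bottom

# Four hypothetical 112 x 112 subplots, each filled with a constant equal to its view index.
size = 112
views = [[[float(k)] * size for _ in range(size)] for k in range(4)]
mosaic = mosaic_2x2(views)
print(len(mosaic), len(mosaic[0]))  # 224 224
```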

| Backbone | Image Size | Precision | Recall | F1-Score | Top-1 | ms/step |
|---|---|---|---|---|---|---|
| Mean pulse profile plot | | | | | | |
| Swin-T | 224 × 224 | 81.38 | 88.82 | 84.94 | 83.36 | 66 |
| Our Downsampler | 224 × 224 | 81.41 | 88.90 | 85.00 | 83.65 | 66 |
| +1 patch merging & STB | 224 × 224 | 81.52 | 89.06 | 85.12 | 83.94 | 66 |
| +1 patch merging & MLB | 224 × 224 | 81.92 | 89.35 | 85.47 | 84.24 | 68 |
| DM curve plot | | | | | | |
| Swin-T | 224 × 224 | 82.14 | 87.72 | 84.84 | 84.52 | 64 |
| Our Downsampler | 224 × 224 | 82.32 | 87.70 | 84.92 | 84.88 | 65 |
| +1 patch merging & STB | 224 × 224 | 82.94 | 87.91 | 85.35 | 85.17 | 65 |
| +1 patch merging & MLB | 224 × 224 | 83.45 | 87.95 | 85.64 | 85.37 | 66 |
| Time–phase plot | | | | | | |
| Swin-T | 224 × 224 | 86.87 | 86.72 | 86.79 | 87.06 | 70 |
| Our Downsampler | 224 × 224 | 87.02 | 86.80 | 86.91 | 87.69 | 68 |
| +1 patch merging & STB | 224 × 224 | 87.40 | 87.05 | 87.23 | 88.26 | 70 |
| +1 patch merging & MLB | 224 × 224 | 87.98 | 87.21 | 87.59 | 89.18 | 72 |
| Frequency–phase plot | | | | | | |
| Swin-T | 224 × 224 | 87.58 | 86.38 | 86.98 | 87.53 | 69 |
| Our Downsampler | 224 × 224 | 87.75 | 86.40 | 87.07 | 88.02 | 69 |
| +1 patch merging & STB | 224 × 224 | 88.12 | 86.51 | 87.31 | 88.65 | 70 |
| +1 patch merging & MLB | 224 × 224 | 88.73 | 86.83 | 87.77 | 89.58 | 70 |
| Result Set I | | | | | | |
| Swin-T | 224 × 224 | 92.16 | 93.15 | 92.65 | 93.08 | 269 |
| Our Downsampler | 224 × 224 | 92.62 | 93.61 | 92.86 | 93.82 | 270 |
| +1 patch merging & STB | 224 × 224 | 94.25 | 94.84 | 94.54 | 94.41 | 272 |
| +1 patch merging & MLB | 224 × 224 | 94.71 | 95.25 | 94.98 | 95.38 | 275 |
| +CFF Module | 224 × 224 | 96.54 | 97.67 | 97.10 | 97.23 | 277 |
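The "+1 patch merging" rows add a Swin-style downsampling stage. In the Swin Transformer, patch merging concatenates the features of each 2 × 2 group of neighboring patches (C to 4C channels at half the spatial resolution) and then applies a linear layer that reduces 4C to 2C. The sketch below shows that step in plain Python; the random projection weights stand in for learned ones, and the LayerNorm that Swin applies before the reduction is omitted for brevity.

```python
import random

def patch_merge(x, weight):
    """Swin-style patch merging on an H x W x C feature map given as nested lists.

    Each 2 x 2 neighborhood is concatenated into a 4C vector in Swin's order
    (row, col) = (0, 0), (1, 0), (0, 1), (1, 1), then projected to 2C by `weight`
    (2C rows of 4C values). Swin's LayerNorm before the projection is omitted.
    """
    height, width = len(x), len(x[0])
    if height % 2 or width % 2:
        raise ValueError("height and width must be even")
    out = []
    for i in range(0, height, 2):
        row = []
        for j in range(0, width, 2):
            merged = x[i][j] + x[i + 1][j] + x[i][j + 1] + x[i + 1][j + 1]  # list concat -> 4C
            row.append([sum(wk * m for wk, m in zip(w_row, merged)) for w_row in weight])
        out.append(row)
    return out

C = 3
rng = random.Random(0)
feature_map = [[[rng.random() for _ in range(C)] for _ in range(8)] for _ in range(8)]
projection = [[rng.gauss(0.0, 0.1) for _ in range(4 * C)] for _ in range(2 * C)]  # stands in for learned weights
merged = patch_merge(feature_map, projection)
print(len(merged), len(merged[0]), len(merged[0][0]))  # 4 4 6
```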

| Method | Mean Pulse Profile mAP | Mean Pulse Profile CMC1 | DM Curve mAP | DM Curve CMC1 | Time–Phase mAP | Time–Phase CMC1 | Frequency–Phase mAP | Frequency–Phase CMC1 |
|---|---|---|---|---|---|---|---|---|
| | 0.244 | 0.275 | 0.268 | 0.288 | 0.342 | 0.356 | 0.274 | 0.299 |
| | 0.513 | 0.541 | 0.546 | 0.559 | 0.583 | 0.605 | 0.587 | 0.614 |
| | 0.712 | 0.747 | 0.724 | 0.755 | 0.731 | 0.776 | 0.741 | 0.798 |
| | 0.816 | 0.834 | 0.818 | 0.849 | 0.855 | 0.882 | 0.867 | 0.894 |

| Method | Mean Pulse Profile mAP | Mean Pulse Profile CMC1 | DM Curve mAP | DM Curve CMC1 | Time–Phase mAP | Time–Phase CMC1 | Frequency–Phase mAP | Frequency–Phase CMC1 |
|---|---|---|---|---|---|---|---|---|
| | 0.158 | 0.166 | 0.166 | 0.181 | 0.195 | 0.205 | 0.191 | 0.206 |
| | 0.421 | 0.457 | 0.455 | 0.470 | 0.476 | 0.491 | 0.487 | 0.508 |
| | 0.618 | 0.644 | 0.622 | 0.657 | 0.644 | 0.659 | 0.653 | 0.677 |
| | 0.691 | 0.727 | 0.726 | 0.738 | 0.766 | 0.784 | 0.784 | 0.799 |

| Method | Mean Pulse Profile mAP | Mean Pulse Profile CMC1 | DM Curve mAP | DM Curve CMC1 | Time–Phase mAP | Time–Phase CMC1 | Frequency–Phase mAP | Frequency–Phase CMC1 |
|---|---|---|---|---|---|---|---|---|
| | 0.214 | 0.246 | 0.236 | 0.268 | 0.275 | 0.296 | 0.251 | 0.276 |
| | 0.508 | 0.527 | 0.515 | 0.531 | 0.556 | 0.571 | 0.557 | 0.578 |
| | 0.708 | 0.734 | 0.717 | 0.736 | 0.726 | 0.748 | 0.734 | 0.752 |
| | 0.771 | 0.802 | 0.785 | 0.816 | 0.835 | 0.857 | 0.837 | 0.869 |

| Loss Function (Top-1, %) | Mean Pulse Profile | DM Curve | Time–Phase | Frequency–Phase | Result Set I |
|---|---|---|---|---|---|
| Original loss | 84.24 | 85.37 | 89.18 | 89.58 | 97.23 |
| Enhanced loss | 84.98 | 86.02 | 90.84 | 91.52 | 98.63 |
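The first row of the loss comparison matches the Top-1 values of the best encoder configuration in the encoder ablation table (84.24, 85.37, 89.18, 89.58, and 97.23), so both rows can be read as Top-1 accuracy in percent. The short calculation below derives the gain of the second row (the enhanced loss) over the original loss for each input.

```python
views = ["Mean pulse profile", "DM curve", "Time–phase", "Frequency–phase", "Result Set I"]
original = [84.24, 85.37, 89.18, 89.58, 97.23]  # Top-1 (%) with the original loss
enhanced = [84.98, 86.02, 90.84, 91.52, 98.63]  # Top-1 (%) with the enhanced loss

for name, before, after in zip(views, original, enhanced):
    print(f"{name}: +{after - before:.2f} points")
```

The largest single-view gains appear on the time–phase and frequency–phase plots.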

| Datasets | Image Size | Precision | Recall | F1-Score | Top-1 |
|---|---|---|---|---|---|
| PMPS | 224 × 224 | 88.40 | 89.91 | 89.15 | 89.52 |
| HTRU | 224 × 224 | 96.91 | 97.30 | 97.10 | 97.40 |
| FAST | 224 × 224 | 97.52 | 98.43 | 97.97 | 98.63 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luo, W.; Xie, X.; Jiang, J.; Zhou, L.; Hu, Z. Cross-Feature Hybrid Associative Priori Network for Pulsar Candidate Screening. Sensors 2025, 25, 3963. https://doi.org/10.3390/s25133963


Chicago/Turabian Style: Luo, Wei, Xiaoyao Xie, Jiatao Jiang, Linyong Zhou, and Zhijun Hu. 2025. "Cross-Feature Hybrid Associative Priori Network for Pulsar Candidate Screening" Sensors 25, no. 13: 3963. https://doi.org/10.3390/s25133963

APA Style: Luo, W., Xie, X., Jiang, J., Zhou, L., & Hu, Z. (2025). Cross-Feature Hybrid Associative Priori Network for Pulsar Candidate Screening. Sensors, 25(13), 3963. https://doi.org/10.3390/s25133963