AI-Powered Mobile App for Nuclear Cataract Detection

Abstract

1. Introduction

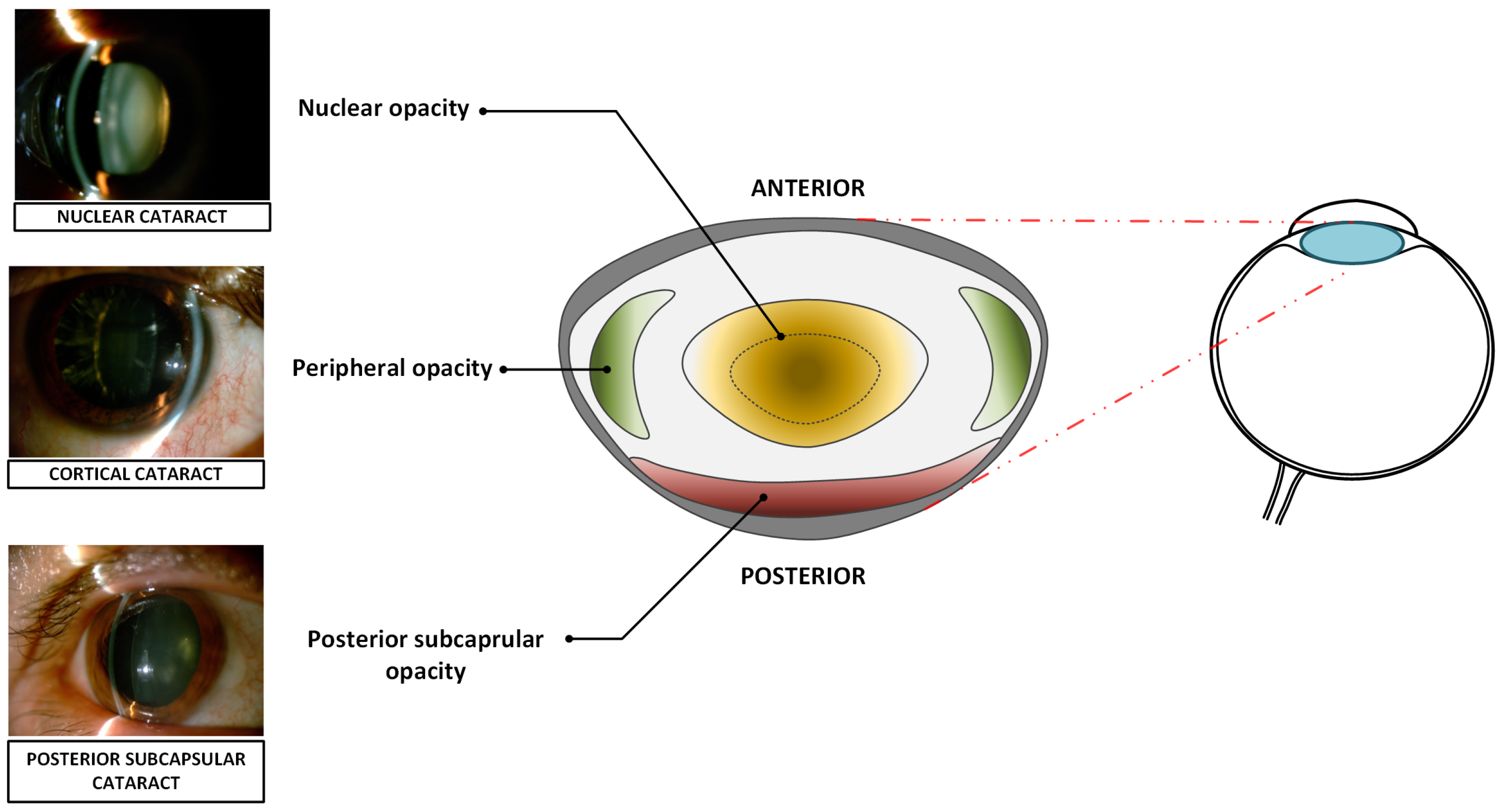

1.1. Cataract Types

- Nuclear cataract,

- Cortical cataract,

- Posterior subcapsular cataract.

1.2. Related Work

- a comparison of the effectiveness of six neural network architectures trained using real slit-lamp photos;

- a comprehensive comparison of the effectiveness of neural network models after conversion to meet the constraints of mobile devices;

- full source code of a mobile app designed for cataract classification, with software released on GitHub (version 1.0).

2. Materials and Methods

2.1. Publicly Available Datasets

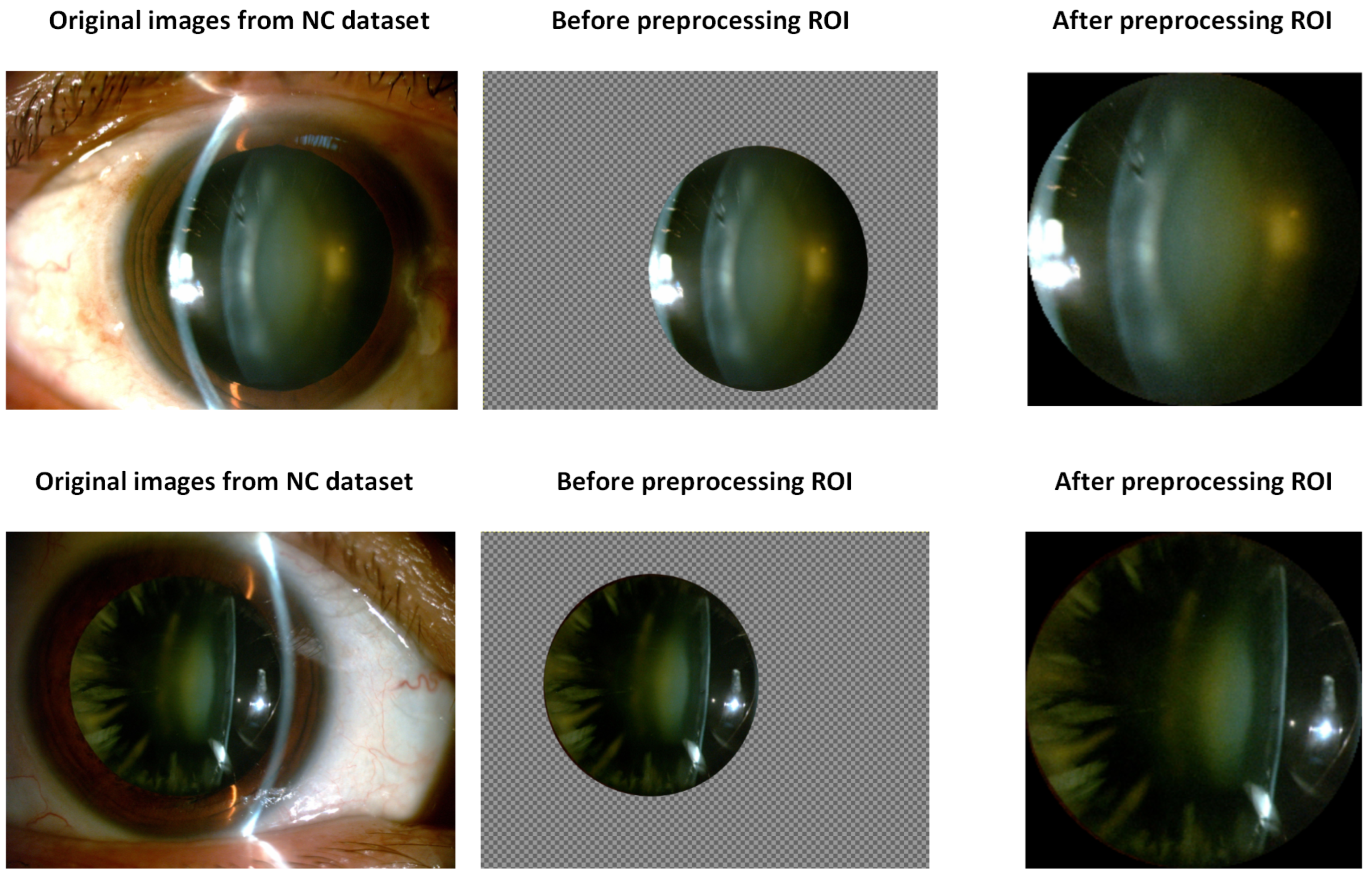

2.2. Extraction of Region-of-Interest (ROI) and Image Preprocessing

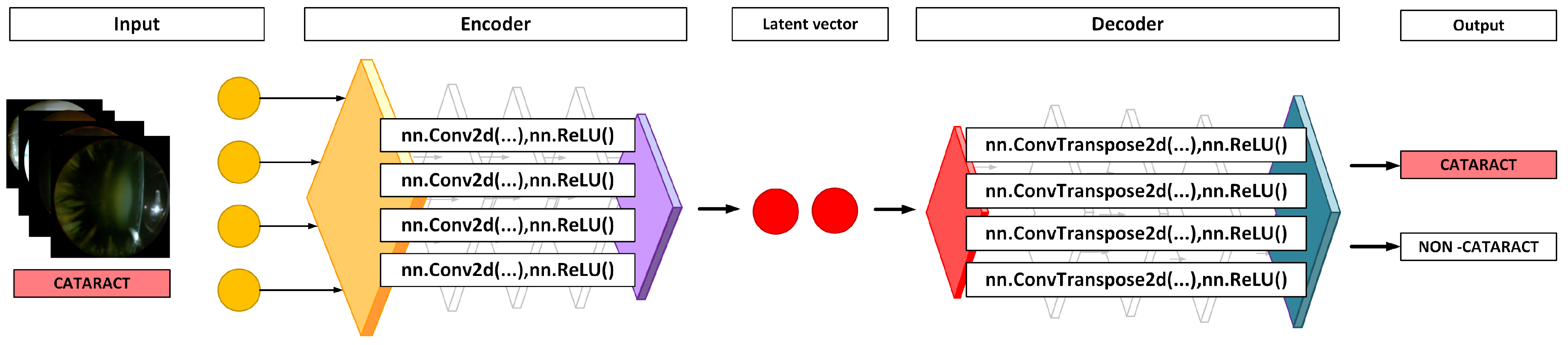

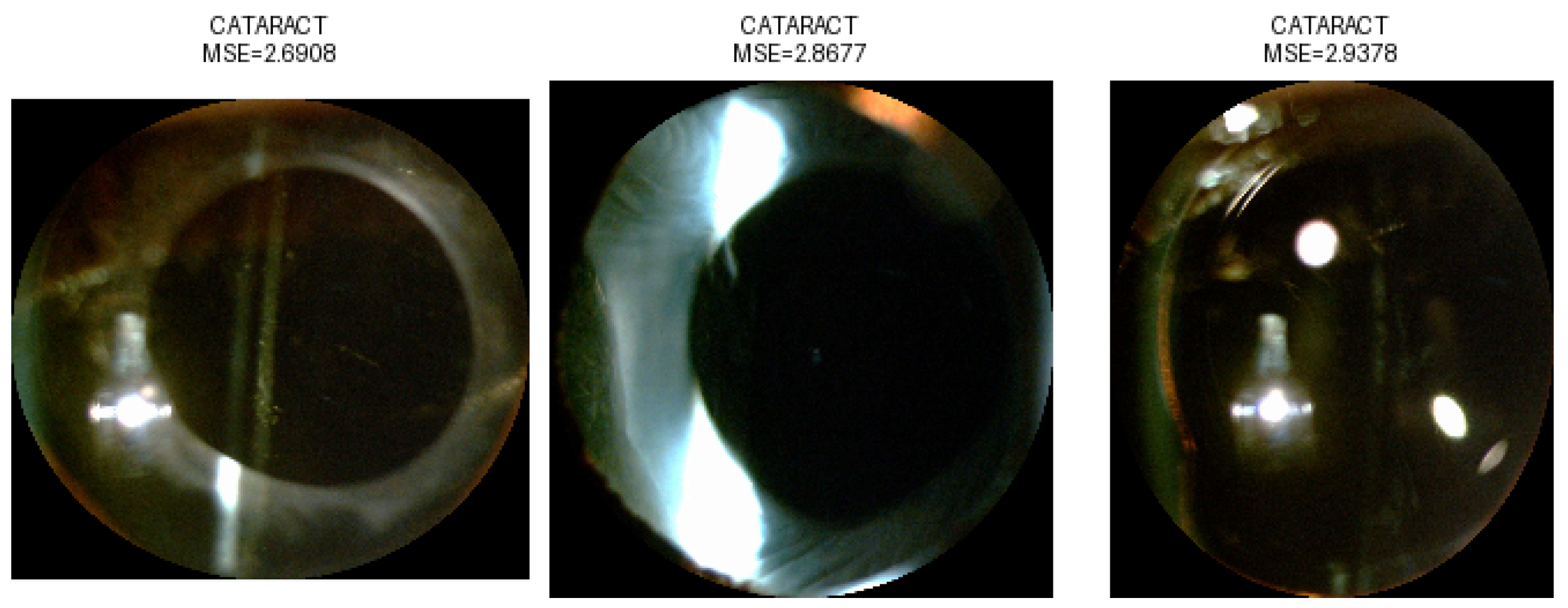

2.3. Anomaly Detection Using an Autoencoder

2.4. Tested Neural Network Architectures for LOCS III

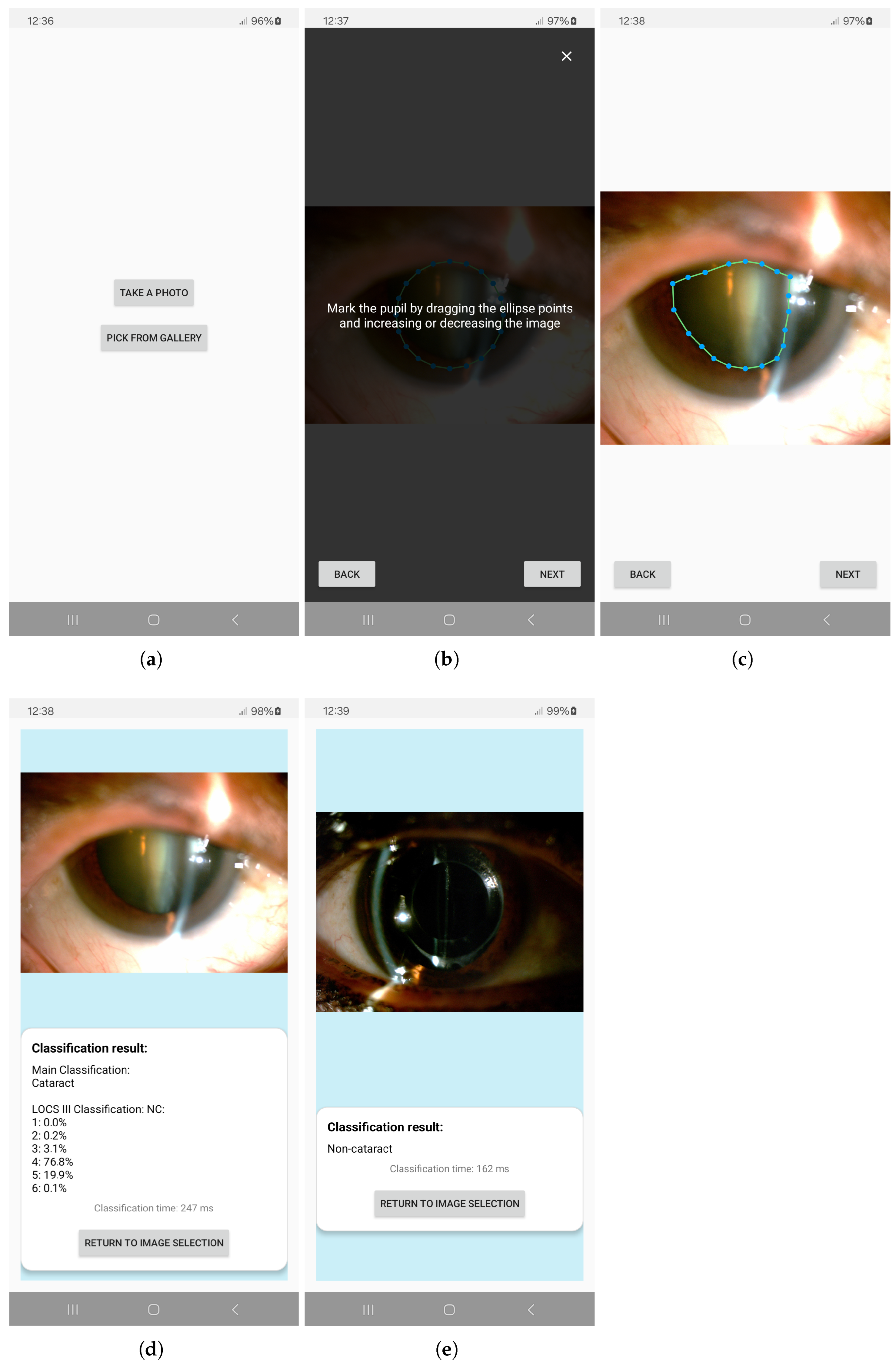

2.5. Android-Based Application

3. Results

3.1. Dataset

3.2. Anomaly Detection

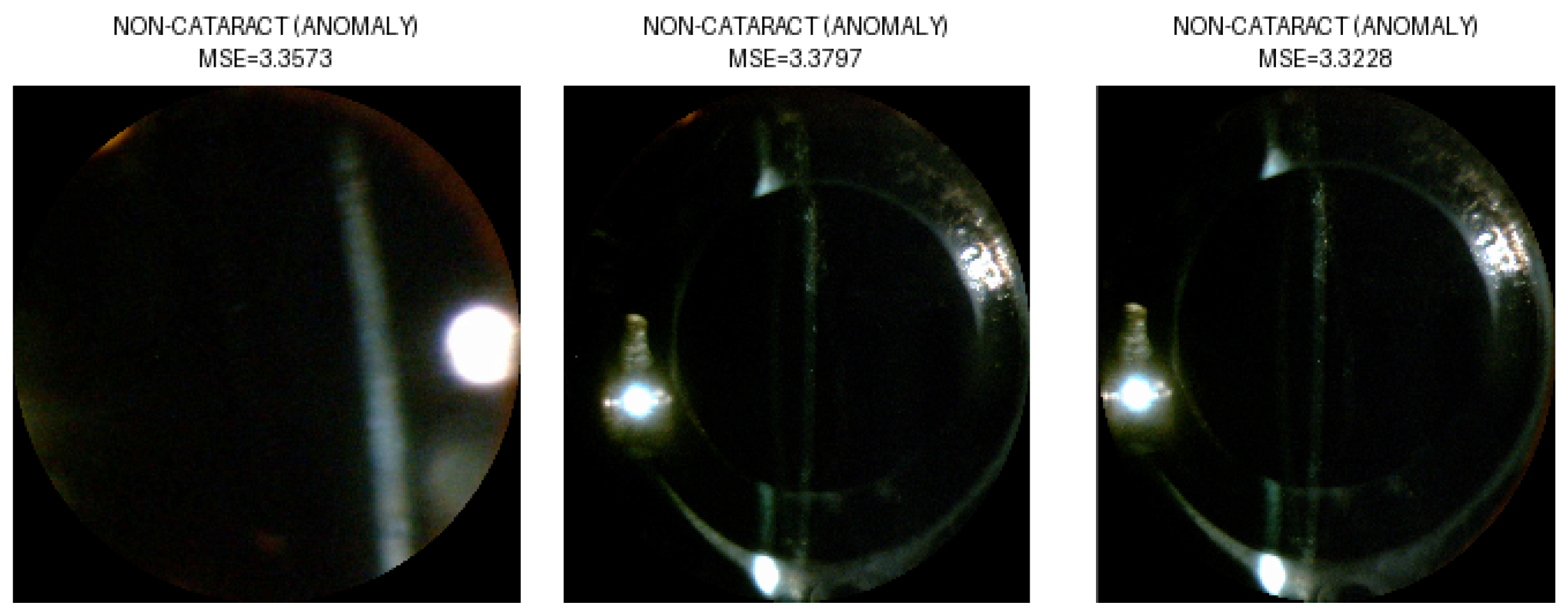

3.3. Selection of Neural Network for Second Stage of Classification

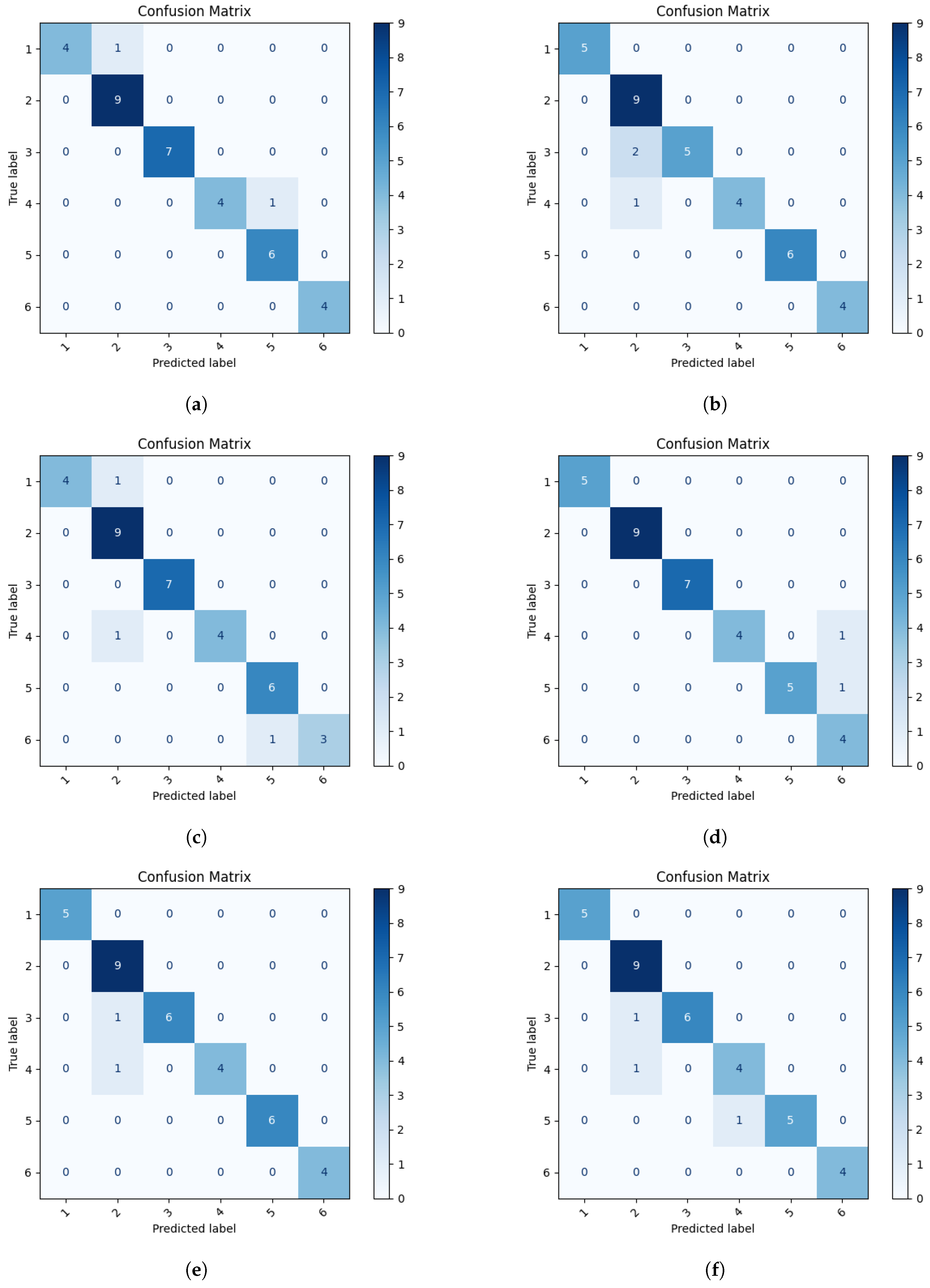

3.4. App Performance on Mobile Devices

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CAE | Convolutional Autoencoder |
| IOL | Intraocular Lens |
| LOCS III | Lens Opacities Classification System III |
| MSE | Mean Squared Error |
| NC | Nuclear Cataract |
| PIL | Python Imaging Library |
| ROI | Region of Interest |
| SVM | Support Vector Machines |


| Images from the NC Dataset | NC 1 | NC 2 | NC 3 | NC 4 | NC 5 | NC 6 | Total |
|---|---|---|---|---|---|---|---|
| Original | 54 | 247 | 129 | 93 | 70 | 34 | 627 |
| Selected | 42 | 90 | 63 | 49 | 58 | 33 | 335 |
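The per-grade counts in the table above can be checked and summarized with a few lines of Python. The sketch below is illustrative only: the dictionary and function names are hypothetical, and it assumes nothing about how the selected subset was chosen beyond the counts listed in the table.

```python
# Per-grade image counts copied from the table above (NC grades 1-6).
original = {1: 54, 2: 247, 3: 129, 4: 93, 5: 70, 6: 34}
selected = {1: 42, 2: 90, 3: 63, 4: 49, 5: 58, 6: 33}


def retention(before, after):
    """Fraction of images kept for each grade."""
    return {grade: after[grade] / before[grade] for grade in before}


def class_share(counts):
    """Share of the whole set that each grade represents."""
    total = sum(counts.values())
    return {grade: n / total for grade, n in counts.items()}


total_original = sum(original.values())
total_selected = sum(selected.values())
kept = retention(original, selected)
share_before = class_share(original)
share_after = class_share(selected)

for grade in sorted(original):
    print(
        f"NC {grade}: kept {kept[grade]:.1%}, "
        f"share {share_before[grade]:.1%} -> {share_after[grade]:.1%}"
    )
print(f"Total: {total_selected}/{total_original} images kept")
```

The row totals agree with the table (627 and 335). The counts also show that grade 2 drops from roughly 39% of the original images to roughly 27% of the selected ones, so the selected subset is less dominated by a single grade.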

| Stage | Classification | Network Architecture | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|
| Stage II: NC | 1, 2, 3, 4, 5, 6 | VGG16 | 0.944 | 0.962 | 0.934 | 0.941 |
| | | ResNet50 | 0.913 | 0.945 | 0.921 | 0.932 |
| | | VGG11 | 0.916 | 0.937 | 0.914 | 0.918 |
| | | ResNet18 | 0.945 | 0.963 | 0.940 | 0.952 |
| | | MobileNetV3 | 0.942 | 0.974 | 0.945 | 0.955 |
| | | EfficientNet | 0.911 | 0.931 | 0.916 | 0.913 |

| Neural Network | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| VGG16 | 0.821 | 0.831 | 0.820 | 0.820 |
| ResNet50 | 0.824 | 0.852 | 0.823 | 0.824 |
| VGG11 | 0.848 | 0.857 | 0.848 | 0.846 |
| ResNet18 | 0.681 | 0.784 | 0.680 | 0.658 |
| MobileNetV3 | 0.633 | 0.737 | 0.632 | 0.615 |
| EfficientNet | 0.638 | 0.698 | 0.612 | 0.669 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ignatowicz, A.A.; Marciniak, T.; Marciniak, E. AI-Powered Mobile App for Nuclear Cataract Detection. Sensors 2025, 25, 3954. https://doi.org/10.3390/s25133954
