Enhancing Infotainment Services in Integrated Aerial–Ground Mobility Networks

Abstract

1. Introduction

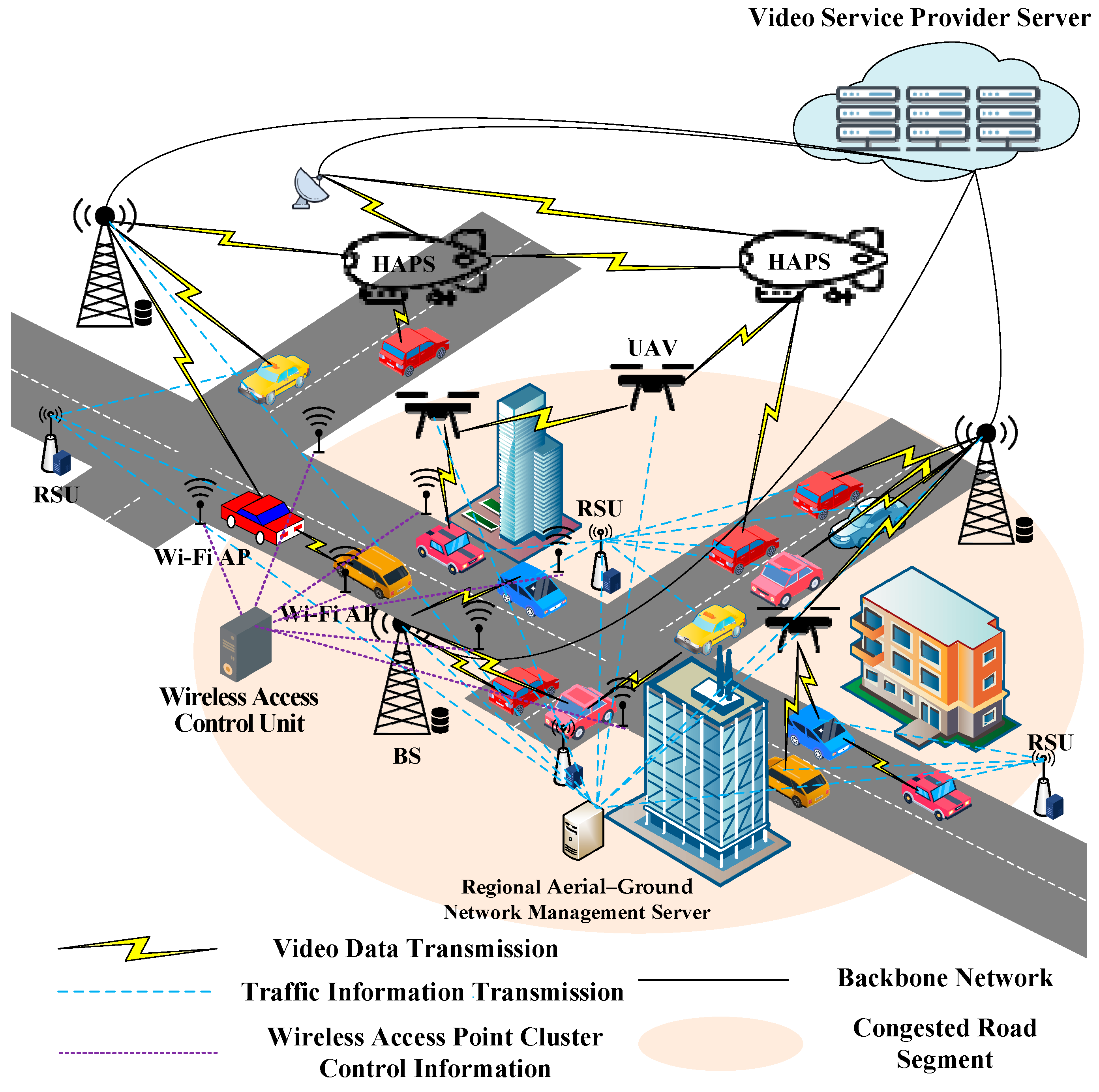

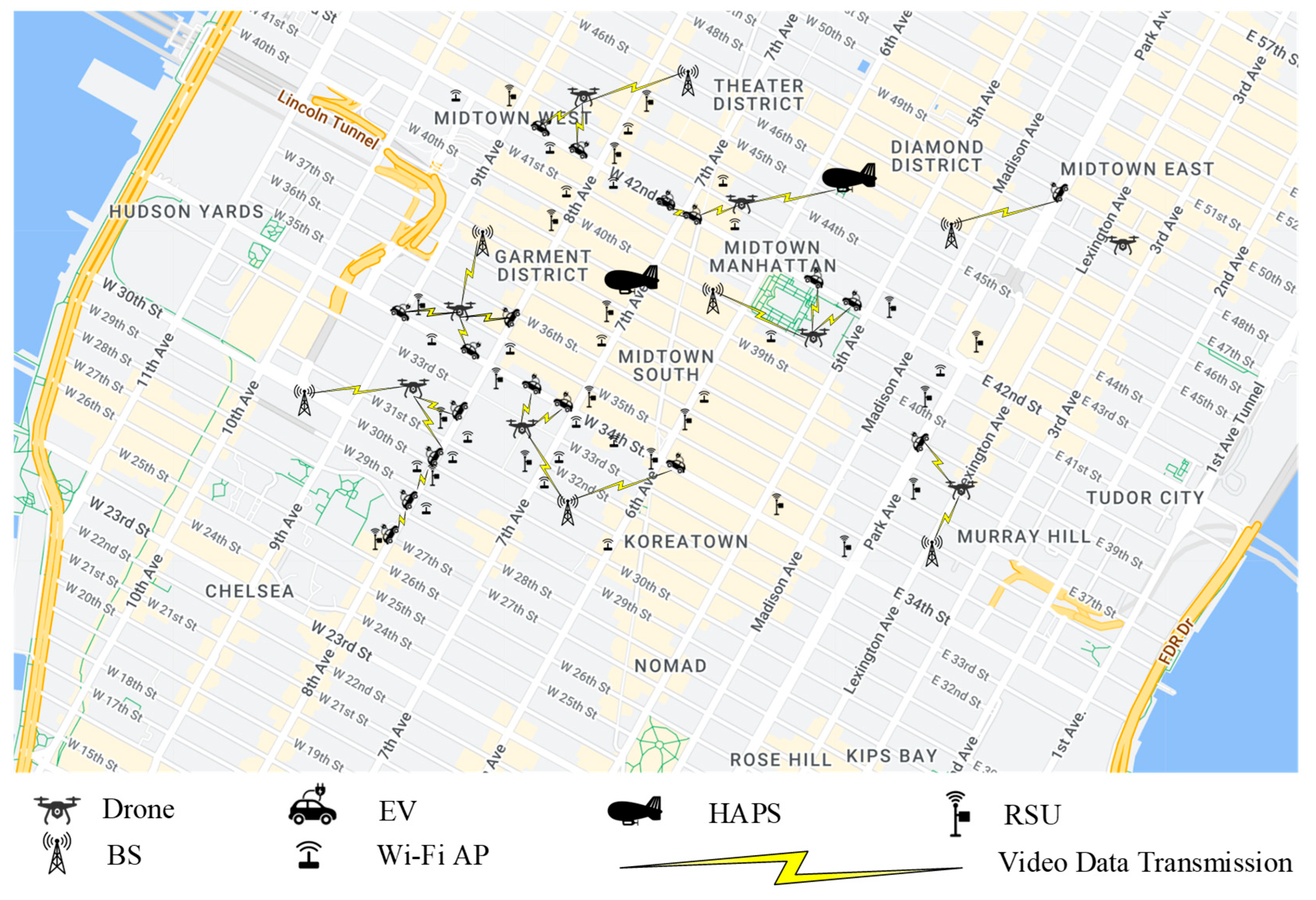

- A multi-layer hybrid network combining RSUs, drones, and HAPs interconnected via high-speed wireless links (VLC, mmWave, and THz) to provide robust connectivity for moving EVs.

- A dynamic, priority-based bandwidth allocation mechanism that adapts in real time to EV speed and service requirements, reserving capacity for high-priority tasks while efficiently managing lower-priority traffic.

- An aerial-assisted load balancing strategy whereby drones and HAPs function as mobile relays, redistributing unused bandwidth from low-demand to congested zones to support group streaming efficiently.

- A graceful video degradation scheme that anticipates network dead zones and preloads non-live content to prevent buffering interruptions.

2. Related Work

3. Research Methodology and Rationale Adopted in This Work

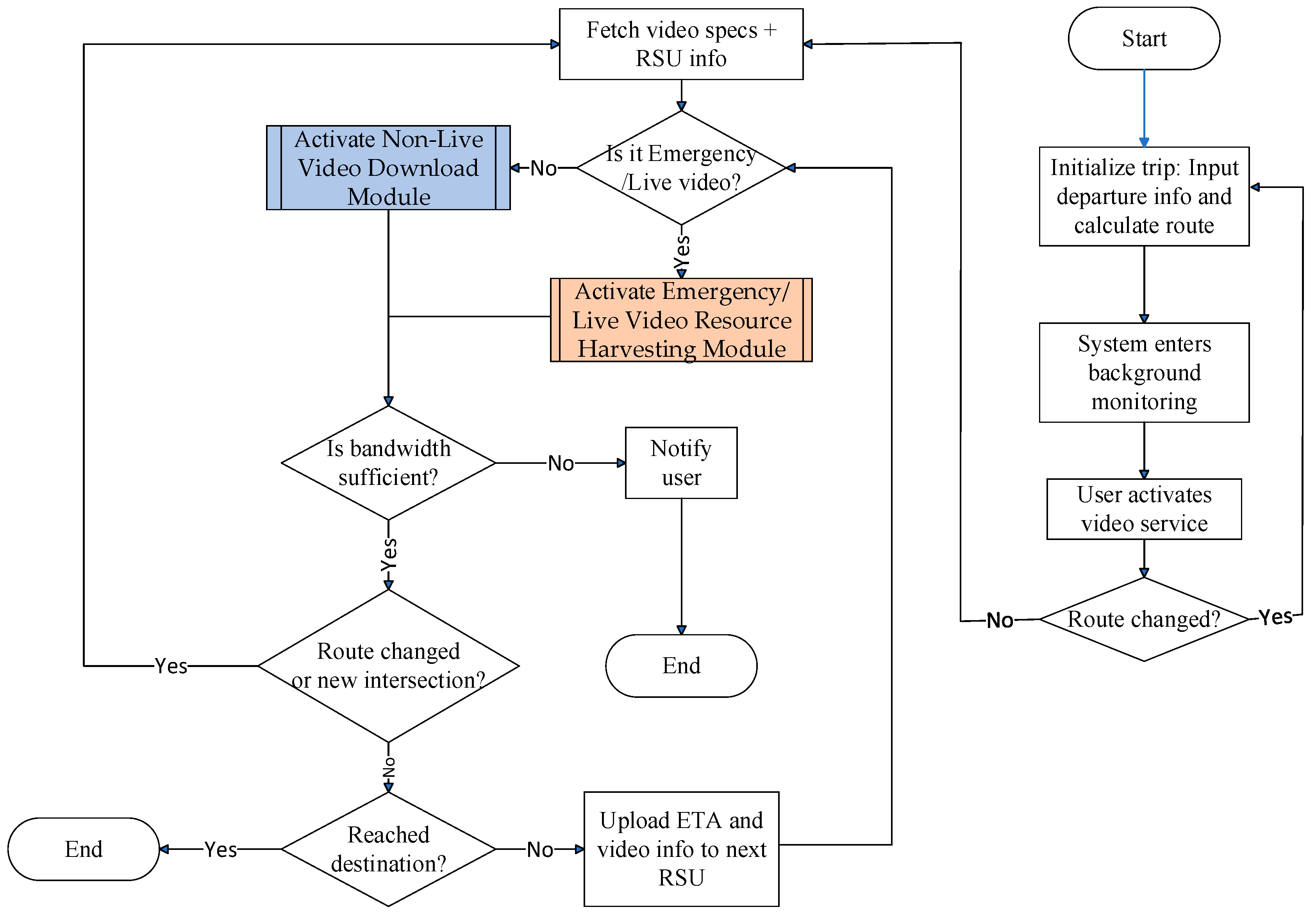

3.1. Adaptive Video Resource Manager Module

- Step 1:

- Prior to initiating the EV’s journey, the system retrieves historical traffic data stored in the EV’s onboard database. Based on the user-defined parameters (origin, departure time, and intended destination), the navigation system calculates the optimal driving route. Here, and denote the origin and destination of EV σ, respectively. , , and represent the starting point of the EV’s driving route, the i-th waypoint, and terminus of the route, respectively. These two equations define the route structure as an ordered list of segments and are used for logical referencing throughout the system. Since the route is obtained via third-party navigation systems (e.g., Google Maps API), the formulation reflects how the path is internally represented, rather than being derived from first principles.

- Step 2:

- This module transitions into background execution mode, continuously monitoring the system state.

- Step 3:

- If the travel plan changes during transit, the system responds by re-executing Step 1 to generate a revised route incorporating the changes.

- Step 4:

- The system performs an initial congestion evaluation when the passenger activates video streaming, determining if the EV’s route intersects with peak-time traffic bottlenecks. Subsequently, it communicates with the video service provider’s server to retrieve the relevant streaming specifications, including encoding formats and multicast relay node information.

- Step 5:

- The system calculates the intersection that can be reached within time from the current road segment: subject to: In these expressions, defines the EV’s planned route from the current location to the destination . denotes the estimated arrival time at waypoint , and Cur is the system timestamp at evaluation time. The optimization in Equation (3) is a heuristic for finding the most forward intersection that can be reached within the available reservation window . This enables predictive bandwidth allocation at RSUs ahead of the EV’s trajectory. These constraints operationalize the trade-off between lookahead planning and real-time mobility, and are not based on theoretical derivations but on logical control conditions specific to our system architecture.
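The lookahead in Step 5 can be illustrated with a minimal sketch. It assumes the route is available as a list of estimated arrival times (one per waypoint, non-decreasing along the route); the function name `farthest_reachable_waypoint` and its parameters are hypothetical and do not come from the paper.

```python
from typing import Optional, Sequence


def farthest_reachable_waypoint(
    etas: Sequence[float], now: float, window: float
) -> Optional[int]:
    """Return the index of the most forward waypoint whose estimated
    arrival time lies within `window` seconds of `now`, or None."""
    best = None
    for i, eta in enumerate(etas):
        if eta < now:
            continue  # waypoint already passed
        if eta - now <= window:
            best = i  # still inside the reservation window
        else:
            break  # ETAs are assumed non-decreasing along the route
    return best


# Hypothetical ETAs (seconds) for four upcoming intersections.
etas = [100.0, 160.0, 230.0, 310.0]
print(farthest_reachable_waypoint(etas, now=90.0, window=150.0))  # 2
```

The returned index marks how far ahead RSUs would be asked to reserve bandwidth under these assumptions.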

- Step 6:

- Each RSU along the EV’s route receives estimated arrival time, video specs, and multicast node configurations.

- Step 7:

- If the EV passenger’s application type is emergency/live streaming, the “Emergency/Live Video Resource Harvesting Module” is activated. Otherwise, the “Non-Live Video Download Module” is activated.

- Step 8:

- If the bandwidth is insufficient, the EV passenger is notified that the service cannot be executed and this module is terminated.

- Step 9:

- Should the passenger change the planned route, the system updates its operations by re-executing Step 5 of the process.

- Step 10:

- Prior to arriving at the next intersection, the system verifies whether the upcoming intersection corresponds to the EV’s designated destination. Here, tracks the current position in the waypoint list, and is a binary indicator denoting whether the destination has been reached. These logical expressions control the iteration and module continuation. They do not require mathematical derivation but are integral to the heuristic loop structure governing video resource management.

- Step 11:

- If , indicating that the EV has not yet reached its final destination, the system uploads the estimated arrival time at intersection , together with the associated video application specifications and multicast tree node details, to the RSU overseeing that roadway. The process then returns to Step 7 to resume execution.

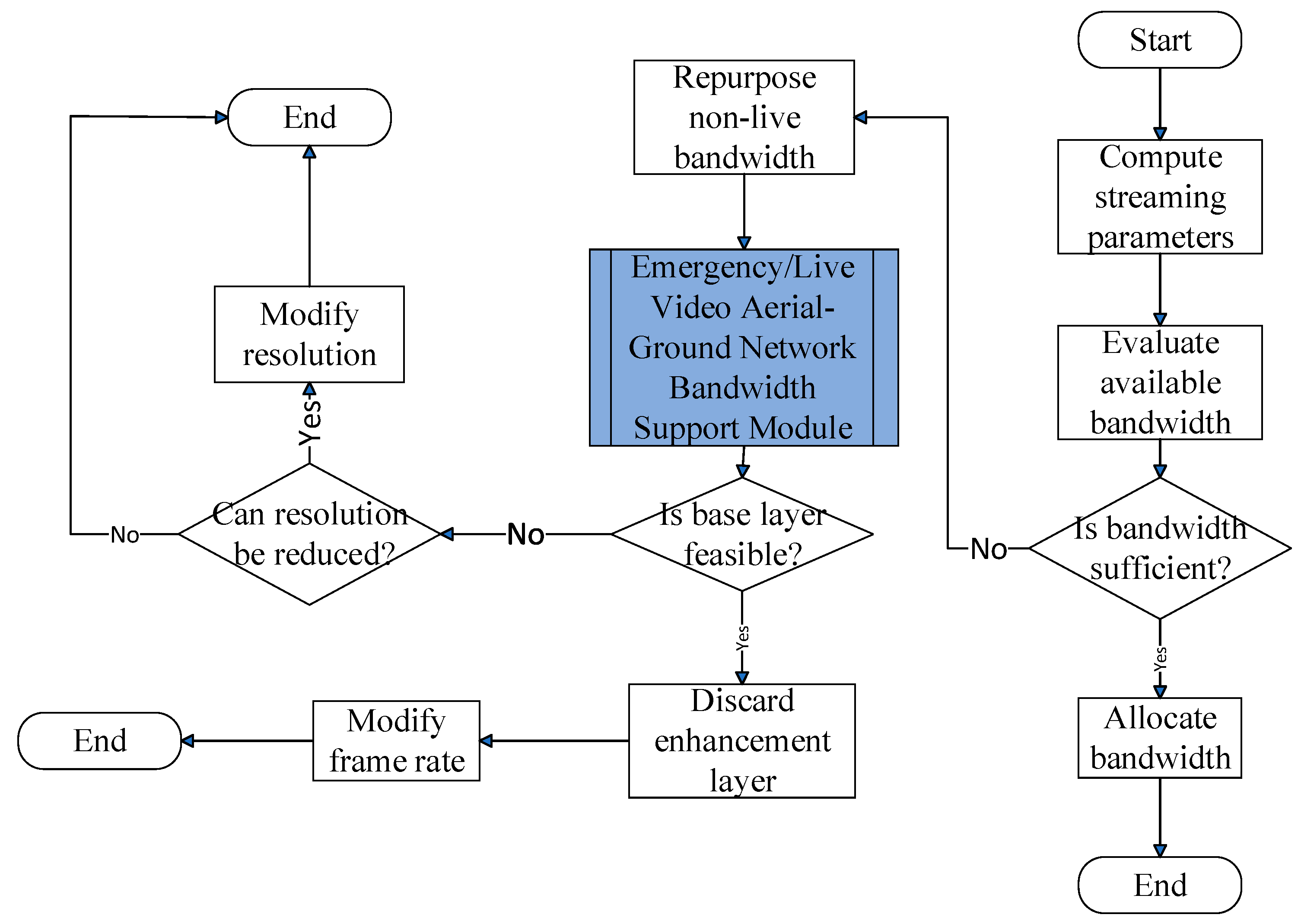

3.2. Emergency/Live Video Resource Harvesting Module

- Step 1:

- Using the EV’s computed segment arrival times and infotainment service specifications, the following equations are implemented: The binary indicator specifies whether the emergency or live video stream is bidirectional. Specifically, if , the stream is bidirectional; otherwise, if , it is considered unidirectional. The variables and represent the initial and terminal timestamps of video stream , respectively. In addition, and denote the i-th waypoint index and terminal destination index, respectively, in σ’s planned route. The parameters and indicate the number of encoding layers used for video upload and download, respectively, at time slot , while and denote the video resolution for upload and download. and track how many frames per second are being uploaded and downloaded for video v. enumerates the complete set of video layers, including the mandatory base layer and all optional enhancement layers. The resolution is classified into levels, with denoting the resolution level specified by the EV passenger for service streaming. Similarly, the video frame rate is classified into levels, with representing the frame rate level . This step defines the EV’s video service requirements over time through Equations (11)–(18), which model how the video stream is structured along the EV’s route. For each trip segment (denoted by time intervals ), the system determines the number of video layers to upload or download, the resolution level representing video quality, and the frame rate indicating playback smoothness. This enables precise tracking of video service demands throughout the EV’s journey.

- Step 2:

- This step determines time-sliced bandwidth needs using the emergency/live video stream parameters throughout the EV’s trip. It then assesses whether the cumulative bandwidth provisioned by base stations and distributed access point clusters along the planned route is sufficient to satisfy the minimum requirements for uninterrupted video transmission. Here, the indices and denote the base station and the member of the distributed access point cluster that provide the uplink bandwidth and for bidirectional video , respectively. Correspondingly, and represent the downlink bandwidth allocated by and to video . denotes the bandwidth requirement for video encoding, where corresponds to the index of the base or enhancement layer. The term denotes the index of the RSU overseeing the roadway through which the EV passes. Specifically, Equation (19) calculates the uplink bandwidth gap , which compares the total uplink bandwidth allocated by base stations and distributed access point clusters (via and ) against the sum of bandwidth requirements for each encoded video layer (up to ) based on resolution and frame rate (). When this gap is negative, Equation (20) identifies the RSU () responsible for the affected road segment . Equations (21) and (22) follow a parallel structure for the downlink, defining and the corresponding RSU index if there is a shortfall in downlink capacity. Together, these formulations systematically evaluate where bandwidth is insufficient and which RSU must respond. When a shortfall is detected, the RSU triggers a clustering mechanism [30] to dynamically select an optimal access point set (). This enables adaptive, distributed bandwidth control to meet the EV’s real-time video demands across each segment.
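As a rough illustration of the gap test described for Equations (19)–(22), the sketch below computes supplied-minus-required bandwidth for one time slot and lists the RSUs whose segments show a shortfall. The function names, argument names, and the example figures are assumptions made for illustration; the paper's own symbols are not reproduced here.

```python
from typing import List, Sequence


def bandwidth_gap(
    bs_alloc: Sequence[float],
    ap_alloc: Sequence[float],
    layer_requirements: Sequence[float],
    layers_used: int,
) -> float:
    """Supplied bandwidth minus the bandwidth needed by the encoded layers.
    A negative result indicates a shortfall for this time slot."""
    supplied = sum(bs_alloc) + sum(ap_alloc)
    required = sum(layer_requirements[:layers_used])
    return supplied - required


def rsus_with_shortfall(gaps: Sequence[float], rsu_ids: Sequence[str]) -> List[str]:
    """Return the RSUs responsible for slots where the gap is negative."""
    return [rsu for gap, rsu in zip(gaps, rsu_ids) if gap < 0]


# Hypothetical values in Mbit/s: two base stations, one access point,
# and a stream with one base layer plus two enhancement layers.
gap = bandwidth_gap([4.0, 2.0], [1.5], [3.0, 2.5, 2.5], layers_used=3)
print(gap)  # -0.5
print(rsus_with_shortfall([gap, 1.0], ["rsu_7", "rsu_8"]))  # ['rsu_7']
```

The same function would be applied separately to uplink and downlink allocations, mirroring the parallel structure of the two equation pairs.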

- Step 3:

- If sufficient bandwidth is available for the requested emergency/live video service, this step allocates the required resources to the EV. Upon successful allocation confirmation, the module terminates its operation.

- Step 4:

- If the bandwidth provided by base stations or distributed access point clusters is inadequate for supporting the required emergency or live service stream, the system investigates whether it is possible to reassign bandwidth originally reserved for non-live services within the EV’s communication range, as outlined in the subsequent procedures. Here, represents the index of non-live video. and denote the base station and distributed access point cluster members that have been allocating bandwidth to , respectively. and represent the uplink bandwidth that and can allocate to bidirectional video when the EV is within their transmission range at time slot . Similarly, and represent the downlink bandwidth that and can allocate to video . and respectively denote the amount of uplink and downlink bandwidth that can be reassigned from non-live video streams to support emergency or live video transmissions. Equations (23) and (25) calculate the reassignable uplink () and downlink () bandwidth, respectively. These quantities sum the available bandwidth originally allocated to non-live video sessions (indexed by ) from nearby base stations () and access point clusters () at time , but only if a bandwidth shortfall ( < 0 or < 0) is detected. Equations (24) and (26) then update the uplink and downlink shortfall metrics by incorporating the reassigned bandwidth. This process ensures that critical video services (e.g., emergency feeds) can opportunistically borrow bandwidth from non-critical streams without violating system constraints. In summary, Step 4 provides a fallback mechanism that temporarily reallocates bandwidth from non-live services to maintain uninterrupted delivery of high-priority live video content.
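The fallback in Step 4 can be sketched as two small functions: one that sums the reassignable non-live bandwidth only when a shortfall exists, and one that folds that amount back into the gap. This is a simplified reading of Equations (23)–(26); the function names and example values are hypothetical.

```python
from typing import Sequence


def reassignable_bandwidth(gap: float, non_live_allocs: Sequence[float]) -> float:
    """Bandwidth that may be borrowed from non-live streams.
    Nothing is borrowed unless the gap is negative (a shortfall)."""
    if gap >= 0:
        return 0.0
    return float(sum(non_live_allocs))


def updated_gap(gap: float, reassigned: float) -> float:
    """Shortfall metric after adding the reassigned bandwidth."""
    return gap + reassigned


# Hypothetical uplink case: 3 Mbit/s short, two non-live sessions nearby.
gap = -3.0
borrowed = reassignable_bandwidth(gap, [1.0, 1.5])
print(borrowed)                    # 2.5
print(updated_gap(gap, borrowed))  # -0.5, still short
```

In this example the gap remains negative after borrowing, which corresponds to the case where the module continues to the aerial–ground support steps.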

- Step 5:

- Once bandwidth has been successfully reallocated to meet the minimum requirements for priority stream v, the system notifies the originating wireless infrastructure nodes to reassign the bandwidth from μ to v. It then calls the “Non-Live Video Download Module” to reallocate the necessary bandwidth for the non-live service μ. Upon completing the reallocation, the module terminates its execution.

- Step 6:

- If or is negative for a given time slot, the system consults the regional aerial–ground network management server—responsible for managing multicast tree nodes—to determine whether base stations or distributed access point cluster members at each node can supply additional bandwidth.

- Step 7:

- After receiving replies from the regional aerial–ground network management servers, the system sets the priority order for bandwidth allocation from the base stations and distributed access point clusters at the multicast tree nodes to the emergency/live video according to the following formulas: These sequences are determined by the following conditions: Here, and represent the sequences of multicast tree nodes that can allocate uplink and downlink bandwidth to video at time , respectively. These sequences are sorted in descending order based on the bandwidth available at each node for the EV at time . and denote the number of nodes in the multicast trees and , respectively. and stand for the positions of the -th nodes in and , respectively.

- The four equations used at this step are designed to prioritize the selection of multicast tree nodes for bandwidth allocation, based on their real-time capacity to support emergency or live video streaming. Equations (27) and (28) define sorted sequences of uplink and downlink nodes, respectively, with the order determined by conditions (29) and (30), which compare cumulative bandwidth availability across candidate nodes. The sorting logic ensures that the most capable nodes, those contributing the highest usable bandwidth, are considered first. This approach embeds a greedy heuristic into the multicast selection process, optimizing throughput and minimizing transmission latency without requiring global optimization. By formulating these priorities explicitly, the framework balances responsiveness with computational efficiency and maintains adaptability across dynamic network states.

- Step 8:

- Based on the priority order of the multicast tree nodes, the system calculates the number of nodes that can provide bandwidth support: Here, and represent the number of multicast tree nodes supporting uplink and downlink bandwidth, respectively. and stand for the base stations and distributed access point cluster members covering the node in the sequence . and denote the uplink bandwidth that and can allocate to bidirectional video at time slot . Similarly, and represent the base stations and distributed access point cluster members covering node in sequence , while and denote the downlink bandwidth that and can allocate to video at time slot . Equations (31) and (32) compute the total bandwidth that can be leveraged by selectively including an appropriate number of high-priority nodes from the multicast tree sequences identified in the previous step. By incrementally summing the bandwidth from top-ranked nodes in and , the equations determine the minimum number of contributors ( and ) required to satisfy the uplink and downlink demands of video v. The operation ensures a balance between efficiency and sufficiency by terminating the accumulation process as soon as the aggregated capacity reaches or slightly exceeds the outstanding bandwidth deficit. This approach minimizes resource consumption while ensuring that video transmission requirements are satisfied. Overall, the formulation implements a localized optimization mechanism that is both computationally efficient and adaptive to real-time network conditions, consistent with the guiding principles of the proposed heuristic framework.
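Steps 7 and 8 together describe a greedy selection: rank multicast tree nodes by available bandwidth in descending order, then accumulate from the top until the deficit is covered. The sketch below is one plausible reading of that procedure; node identifiers, function names, and the figures are illustrative assumptions.

```python
from typing import Dict, List, Optional, Tuple


def rank_nodes(available_bw: Dict[str, float]) -> List[Tuple[str, float]]:
    """Sort nodes by the bandwidth they can offer, largest first."""
    return sorted(available_bw.items(), key=lambda item: item[1], reverse=True)


def min_nodes_to_cover(
    ranked: List[Tuple[str, float]], deficit: float
) -> Optional[int]:
    """Smallest number of top-ranked nodes whose combined bandwidth
    reaches the deficit, or None if all nodes together fall short."""
    if deficit <= 0:
        return 0
    total = 0.0
    for count, (_, bw) in enumerate(ranked, start=1):
        total += bw
        if total >= deficit:
            return count  # stop as soon as the deficit is covered
    return None


# Hypothetical per-node bandwidth offers in Mbit/s.
ranked = rank_nodes({"n1": 2.0, "n2": 5.0, "n3": 1.0})
print([node for node, _ in ranked])      # ['n2', 'n1', 'n3']
print(min_nodes_to_cover(ranked, 6.0))   # 2
print(min_nodes_to_cover(ranked, 10.0))  # None
```

Because the nodes are sorted in descending order, stopping at the first prefix that covers the deficit yields the minimum contributor count for that ordering.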

- Step 9:

- The system sends a request to the regional aerial–ground network management server overseeing the multicast tree nodes identified in the previous step, triggering the “Emergency/Live Video Aerial-Ground Network Bandwidth Support Module”. The server then evaluates whether base stations or distributed access point cluster members under its jurisdiction can relay bandwidth to video v via drones or aerial platforms.

- Step 10:

- If the data relay process successfully meets the requirements of , this module advances to Step 22 to resume its operation.

- Step 11:

- Remove the first and nodes from and , respectively, and update the and nodes as follows: As shown in Equations (33)–(36), the head elements of the and sequences (those already selected to fulfill the bandwidth demand) are removed, and the remaining nodes are reindexed to maintain the structural integrity of the sequences. The values of and , which track the total number of candidate nodes, are correspondingly decremented. This update ensures that future iterations of the allocation logic operate only on unassigned resources, thereby preventing redundancy and enforcing efficient use of the remaining network capacity. This iterative pruning aligns with the overall heuristic framework by ensuring adaptability and local optimization in a dynamic bandwidth allocation environment.

- Step 12:

- If the sequences and have not yet been fully processed (i.e., not completely emptied), the system returns to Step 8 and resumes execution.

- Step 13:

- If video stream does not contain enhancement layer encoding, the system skips directly to Step 20 for continued processing. Otherwise, it proceeds to the subsequent step.

- Step 14:

- The base layer bandwidth requirement for video stream v is calculated as follows: Equations (37) and (38) refine the estimated bandwidth requirements for the base layer of video stream v during time slot . The expressions integrate both initial bandwidth deficits (represented by and ) and cumulative contributions from additional base stations indexed by r = 2 to or , respectively. The term (⋅) denotes the r-th base station’s bandwidth support within the current uplink or downlink cluster. The scaling factor adjusts the effective uplink requirement to account for video-specific transmission demands (e.g., encoding complexity or priority weight). By integrating this hierarchical accumulation mechanism, these formulas ensure that the base layer, a critical component for maintaining minimal video quality, is reliably sustained. This approach aligns with the heuristic’s design by prioritizing robustness and continuity in emergency video delivery under constrained network conditions.

- Step 15:

- If both and are non-negative, it indicates that the bandwidth requirements for the base layer encoding of video stream have been met. In this case, the system discards the enhancement layer encoding and returns to Step 2, as specified by the following two equations; otherwise, it proceeds to the next step. This step serves as a validation checkpoint within the bandwidth allocation process. By confirming that both uplink and downlink base layer bandwidth deficits, and , are non-negative, the system ensures that the essential quality of the video stream v can be maintained without requiring additional enhancement layers. Setting and effectively signals the system to prioritize base layer transmission exclusively, simplifying resource allocation and reducing network load. This conditional check supports a fail-safe mechanism within the heuristic framework, allowing early termination of more complex allocation steps when base layer needs are already satisfied, thereby improving computational efficiency and responsiveness.

- Step 16:

- If video stream is identified as a live stream, the system proceeds to Step 20 for further execution. Otherwise, it continues to the subsequent step.

- Step 17:

- Bandwidth originally assigned to other live video streams by ground base stations or distributed access point cluster members within the EV’s coverage area is reallocated to the urgent application, as described below: Here, represents the index of the live video stream from which bandwidth is being reallocated. and denote the base stations and distributed access point cluster members originally allocating bandwidth to . and denote the uplink bandwidth that and can allocate to the urgent video stream when the EV is within their coverage at time slot . Similarly, and stand for the downlink bandwidth that and can allocate to the urgent video stream . This step details the process of dynamically reallocating bandwidth from existing live video streams to the emergency video stream v within the EV’s coverage area. Equations (41) and (42) calculate the additional uplink and downlink bandwidth that can be reclaimed by summing the resources allocated by base stations and distributed access point clusters currently serving other live streams (indexed by μ). The use of the Min[∙] function ensures that the reallocation does not exceed the bandwidth deficit, preventing over-allocation and preserving system stability. This step enables a responsive adjustment of network resources, prioritizing urgent traffic by temporarily borrowing capacity from less critical live streams, thus maintaining efficient utilization of limited bandwidth under dynamic network conditions.
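The capping role of the Min[∙] function in Step 17 can be shown with a short sketch. It assumes the deficit is expressed as a positive magnitude and that donors are visited in a fixed order; the per-donor split, the function name, and the stream labels are illustrative assumptions rather than the paper's formulation.

```python
from typing import Dict


def reclaim_from_live(deficit: float, donors: Dict[str, float]) -> Dict[str, float]:
    """Take bandwidth from other live streams, never more than the deficit.
    The total taken equals min(deficit, sum of donor bandwidth)."""
    taken: Dict[str, float] = {}
    remaining = max(deficit, 0.0)
    for stream, bw in donors.items():
        if remaining <= 0:
            break  # deficit already covered, leave later donors untouched
        share = min(bw, remaining)
        taken[stream] = share
        remaining -= share
    return taken


# Hypothetical case: the emergency stream is 3 Mbit/s short.
taken = reclaim_from_live(3.0, {"live_a": 2.0, "live_b": 2.5})
print(taken)                # {'live_a': 2.0, 'live_b': 1.0}
print(sum(taken.values()))  # 3.0
```

Recording how much was taken from each donor also makes it straightforward to identify which live streams may need their bandwidth restored afterwards, as Step 18 requires.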

- Step 18:

- If reallocating bandwidth from other live video streams to the emergency service results in the inability to smoothly download and play the affected live service streams, and are set to the bandwidth quota of the affected live service stream and the system returns to Step 6 to request aerial–ground network support for restoring bandwidth to the affected live service stream.

- Step 19:

- Once the bandwidth requirements for the EV passenger’s emergency service are met, the system proceeds to Step 22 to continue execution. Otherwise, it advances to the next step.

- Step 20:

- If the service in use permits frame rate adjustment and the current frame rate has not yet reached the minimum threshold defined by the system, the frame rate is recalibrated according to the following equations. The system then returns to Step 2 to resume execution. Here, and represent the uplink and downlink frame rates of video for the EV user. and indicate the RSU managing the segment between and where uplink and downlink bandwidth are insufficient at time slot . The variables and denote the initial and terminal timestamps of the video, respectively. The term stands for the frame rate level specified by the EV passenger for the corresponding service stream. This step introduces a dynamic frame rate adjustment mechanism to cope with bandwidth shortages when reallocating resources is insufficient. Equations (43)–(45) define how uplink and downlink frame rates ( and ) are reduced stepwise, guided by the RSU bandwidth insufficiency indicators ( and ). By decrementing the frame rate level when necessary, while respecting predefined minimum thresholds, this adaptive approach balances video quality degradation against network capacity constraints. This ensures continuous service availability for the EV passenger’s emergency video stream, preventing abrupt interruptions. Returning to Step 2 after adjustment enables the system to iteratively refine bandwidth allocation and video encoding parameters, thereby maintaining an optimal trade-off between performance and resource limitations under dynamic network conditions.

- Step 21:

- If the service supports resolution adjustment and the current resolution level remains above the system-defined minimum threshold, the system recalibrates the resolution using the following equations and returns to Step 2 to resume execution. In this context, and denote the resolution settings for uplink and downlink transmission, respectively, whereas indicates the resolution level as requested by the EV passenger for the service stream. This step implements an adaptive resolution adjustment mechanism to further optimize bandwidth usage when necessary. Equations (46) and (47) update the uplink and downlink resolution levels ( and ) by decrementing the current resolution when the bandwidth insufficiency flags ( and ) indicate resource constraints and the resolution is above the system-defined minimum. Equation (48) manages the resolution index update to ensure gradual stepping down through predefined resolution levels, avoiding abrupt quality drops. This approach allows the system to maintain video service continuity by trading off resolution quality dynamically, aligned with network conditions and user preferences.
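The degradation order used in this module (discard enhancement layers, then lower the frame rate, then lower the resolution) can be summarized as a single-step function that is called once per pass before returning to Step 2. The sketch is a simplification: it ignores the live versus emergency branching in Steps 16 to 19, treats level 1 as the lowest quality by default, and uses hypothetical names throughout.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class StreamQuality:
    layers: int      # 1 = base layer only
    fps_level: int   # higher value = higher frame rate (assumption)
    res_level: int   # higher value = higher resolution (assumption)


def degrade_once(
    q: StreamQuality, min_fps_level: int = 1, min_res_level: int = 1
) -> Optional[StreamQuality]:
    """Apply the next degradation step, or return None if none is left."""
    if q.layers > 1:
        return replace(q, layers=1)  # keep only the base layer
    if q.fps_level > min_fps_level:
        return replace(q, fps_level=q.fps_level - 1)
    if q.res_level > min_res_level:
        return replace(q, res_level=q.res_level - 1)
    return None


q: Optional[StreamQuality] = StreamQuality(layers=3, fps_level=2, res_level=2)
while q is not None:
    print(q)
    q = degrade_once(q)
```

Each printed state corresponds to one iteration of the allocation loop; when `degrade_once` returns `None`, no further quality reduction is available under these assumptions.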

- Step 22:

- If the EV passenger’s service bandwidth requirements have been met, the base stations, distributed access point clusters, or multicast tree nodes involved in the bandwidth allocation process are notified to assign the bandwidth to the EV passenger.

- Step 23:

- The bandwidth allocation results are transmitted back to the EV, and the execution of this module is terminated.

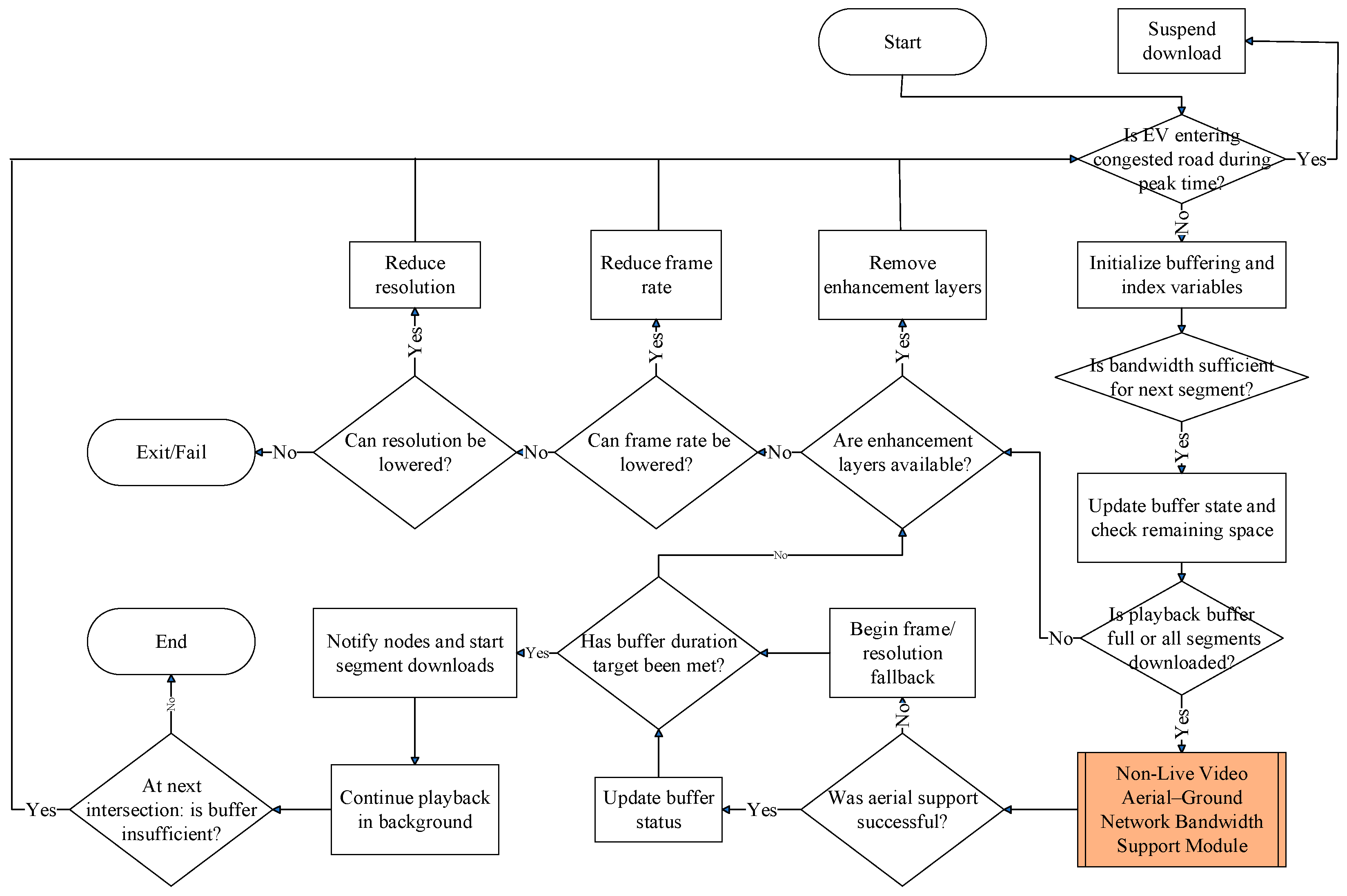

3.3. Non-Live Video Download Module

- Step 1:

- If the EV is traveling through a congested road segment during peak hours, the user’s non-live video download request is paused, and the operation of this module is halted.

- Step 2:

- The following variables are initialized: Here, represents the index of the last unplayed video segment stored in the EV for video . denotes the remaining playable video duration that can be pre-downloaded by the EV, while is the system-defined upper limit for pre-downloaded video playback time. The video stream consists of a base layer and one or more enhancement layers. is the total number of layers; , , and , respectively, stand for the number of encoding layers, resolution, and frame rate of the video segments. The resolution of v is categorized into levels, while the frame rate is categorized into levels. and stand for the resolution corresponding to level and the frame rate corresponding to level , respectively. This step establishes the initial state variables critical for managing non-live video downloads in dynamic vehicular environments. The variable tracks the index of the last unplayed video segment stored onboard, enabling the system to monitor playback progress and buffer status. represents the remaining duration of video content that the EV can pre-download, bounded by the system-defined upper limit , which prevents excessive buffering and optimizes storage use. The video encoding parameters (number of layers , resolution c, and frame rate ) are initialized to reflect the current streaming quality settings. These parameters account for layered video, allowing adaptive adjustment to network conditions. The resolution and frame rate are discretized into levels to facilitate systematic quality control. Collectively, these definitions provide a foundation for dynamically regulating bandwidth allocation and download strategies tailored to the EV’s route, storage, and video playback status, thereby ensuring seamless video delivery despite variable network conditions.

- Step 3:

- If the EV is navigating a congested road segment during peak hours, the system halts the passenger’s non-live video download request and suspends bandwidth allocation. Otherwise, based on the passenger’s non-live video playback settings and the remaining duration of preloaded video segments, this step evaluates whether the available bandwidth is sufficient to preload upcoming video segments using the following formula: The above equation is subject to the following conditions: Here, is the time at which video segment is downloaded. and represent the bandwidth allocated to non-live video segment from base station and distributed access point cluster member at time , respectively. The variable denotes the number of consecutive time slots following that are allocated for the continuous downloading of video segments. stands for the total number of video segments in video , and denotes the number of video segments that are preloaded. The variable represents the duration of the l-th video portion, while indicates the bit size of the base or enhancement layer for a given video portion. The term corresponds to the playback duration of segment . Additionally, refers to the remaining buffer capacity available on the EV prior to preloading, and denotes the total buffer storage capacity of the EV. This step evaluates whether the system can continue preloading upcoming non-live video segments for the EV passenger under current network and buffer conditions, or if downloads must be suspended due to congestion. Equation (54) maximizes the preload segment count by comparing the available downlink bandwidth aggregated from base stations () and distributed access points () over a window of consecutive time slots against the cumulative video segment size needed for smooth playback. Constraints (55)–(59) ensure that the total allocated bandwidth meets or exceeds the preload demand; the preloaded content does not surpass the remaining buffer space or total video length; segment download times are ordered sequentially within valid playback timeframes; and buffer capacity limits are respected to prevent overflow. Together, this formulation ensures that video preloading is adaptively managed to balance seamless playback and network resource availability, preventing unnecessary bandwidth allocation during congestion or limited buffer scenarios.
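A simplified view of the preload decision in Step 3 is a prefix search: segments must be downloaded in order, so the largest feasible count is the longest run of upcoming segments that fits the bandwidth budget, the free buffer space, and the preload duration limit. The sketch below encodes only those three constraints; all names, units, and numbers are assumptions for illustration, and the timing constraints of the original formulation are omitted.

```python
from typing import Sequence


def max_preload_segments(
    seg_bits: Sequence[int],
    seg_durations: Sequence[float],
    bandwidth_per_slot: Sequence[int],
    slot_seconds: float,
    free_buffer_bits: int,
    buffered_seconds: float,
    max_preload_seconds: float,
) -> int:
    """Count how many consecutive upcoming segments can be preloaded."""
    budget_bits = sum(bandwidth_per_slot) * slot_seconds
    used_bits = 0
    duration = buffered_seconds
    count = 0
    for bits, seconds in zip(seg_bits, seg_durations):
        if used_bits + bits > budget_bits:
            break  # not enough bandwidth over the allocated slots
        if used_bits + bits > free_buffer_bits:
            break  # would overflow the EV's buffer
        if duration + seconds > max_preload_seconds:
            break  # would exceed the preload duration limit
        used_bits += bits
        duration += seconds
        count += 1
    return count


# Hypothetical case: four 4 Mbit segments of 2 s each, three 1 s slots
# at 3 Mbit/s, 20 Mbit of free buffer, nothing buffered, 10 s limit.
print(max_preload_segments(
    [4_000_000] * 4, [2.0] * 4, [3_000_000] * 3, 1.0,
    free_buffer_bits=20_000_000, buffered_seconds=0.0,
    max_preload_seconds=10.0,
))  # 2
```

Here the bandwidth budget (9 Mbit) is the binding constraint, so only two segments are preloaded even though the buffer and duration limits would allow more.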

- Step 4:

- The following temporary variables are updated: Here, represents the updated remaining playable duration that can be pre-downloaded, denotes the updated remaining buffer storage on the EV, and stands for the index of the last segment remaining in the playback buffer after bandwidth allocation in the prior step. This step updates key temporary variables to reflect the impact of the preloading decisions made in Step 3. Equation (60) recalculates , the remaining playable video duration that still needs to be downloaded after accounting for the newly preloaded segments. Equation (61) updates the temporary buffer occupancy by adding the bit sizes of the newly downloaded video segments to the existing buffer level, ensuring accurate tracking of buffer usage. Finally, Equation (62) sets as the index of the latest segment buffered, marking the current boundary between downloaded and yet-to-be-downloaded content. Together, these updates maintain synchronization between buffer status, playback progression, and network resource allocation, supporting adaptive video streaming management in dynamic conditions.

- Step 5:

- If all non-live video portions have been successfully downloaded, or if is greater than or equal to the system-defined minimum playback duration required for preloading, the system proceeds to Step 15 for continued execution. Otherwise, the system advances to the following step.

- Step 6:

- The number of video portions that can continue to be downloaded is determined using the following formula: Equation (63) formulates a constrained optimization problem that minimizes the difference between the upper threshold and the sum of the playback durations of candidate segments plus the current buffered duration . This formulation ensures that additional buffering remains within safe limits, preventing resource waste and buffer overflow while still extending playback continuity. It reflects a practical trade-off between aggressive preloading and system stability, especially under fluctuating network conditions.
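One way to read the objective in Step 6 is as a search for the number of additional segments that brings the buffered duration as close as possible to the upper threshold. The sketch below assumes, as an interpretation not stated explicitly in the text, that the threshold may not be exceeded; the function name and values are hypothetical.

```python
from typing import Sequence, Tuple


def segments_toward_threshold(
    seg_durations: Sequence[float],
    buffered_seconds: float,
    upper_threshold_seconds: float,
) -> Tuple[int, float]:
    """Return (segment count, leftover headroom in seconds) for the
    longest run of segments that stays within the upper threshold."""
    total = buffered_seconds
    count = 0
    for seconds in seg_durations:
        if total + seconds > upper_threshold_seconds:
            break  # assumed constraint: do not overshoot the threshold
        total += seconds
        count += 1
    return count, upper_threshold_seconds - total


# Hypothetical case: 12 s already buffered, 30 s limit, 6 s segments.
print(segments_toward_threshold([6.0, 6.0, 6.0, 6.0], 12.0, 30.0))  # (3, 0.0)
```

With positive segment durations, each added segment shrinks the remaining headroom, so the longest feasible run also minimizes the difference described for Equation (63).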

- Step 7:

- Based on the video multicast tree node information provided by the video service provider, the system consults the regional aerial–ground network management servers responsible for managing the multicast tree nodes. After receiving responses, the system determines the order in which base stations and distributed access point clusters at the multicast tree nodes allocate bandwidth for video portion downloads using the following sequences: Here, represents the sequence of multicast tree nodes allocated to download bandwidth for the -th encoding layer, and denotes the position of the -th node in . and sequences, respectively, stand for the video segment indices and the number of video segments downloaded at each node. is the number of nodes in the sequences , , and . and denote the base stations and distributed access point cluster members covering node in sequence . and represent the bandwidth allocated by base stations and distributed access point clusters at node for non-live video portions. is the number of consecutive time slots used by node for video segment downloads after . This step orchestrates the allocation of bandwidth for downloading additional non-live video segments by leveraging multicast tree structures managed by regional aerial–ground servers. It constructs three sequences (, , and ) to define which nodes handle which segment layers and in what quantity. The accompanying constraints, as given in Equations (67)–(76), ensure that each node has sufficient bandwidth, segment assignments are sequential and non-overlapping, timing aligns with playback windows, and total buffer capacity is respected. Accordingly, this step ensures efficient, scalable segment preloading across distributed infrastructure while maintaining smooth playback and resource compliance.

- Step 8:

- The regional aerial–ground network management servers responsible for the multicast tree nodes calculated in the previous step are notified to activate the “Non-Live Video Aerial-Ground Network Bandwidth Support Module”. These servers will then determine whether their managed base stations or distributed access point clusters can relay bandwidth for video via drones or aerial platforms.

- Step 9:

- If the previous step fails to establish a drone or aerial platform relay path to provide bandwidth for the EV user, the system proceeds to Step 11. Otherwise, the following temporary variables are updated: This step handles the system’s response after successfully securing aerial or drone-based bandwidth relays for non-live video delivery. Upon confirmation from Step 8, it updates three key temporary variables to reflect the impact of newly preloaded segments: the remaining playable duration () is reduced by the playback time of the newly downloaded segments; the buffer usage () is increased according to the data size of the downloaded segments across all encoding layers; and the playback index () is advanced to indicate the last buffered segment. These updates maintain accurate system state and ensure continuity in subsequent scheduling decisions.

- Step 10:

- If is greater than or equal to the system-defined minimum playback duration for preloaded video segments, the system proceeds to Step 15. Otherwise, it continues to the subsequent step.

- Step 11:

- If video stream does not contain enhancement layer encoding, the system proceeds directly to Step 13. Otherwise, it continues to the subsequent step.

- Step 12:

- After removing the enhancement layer via Equation (80), the system returns to Step 3. As shown in Equation (80), the system discards all enhancement layers, thereby reducing both the data size and the complexity of the video stream.

- Step 13:

- If the service supports frame rate reduction and the current frame rate has not yet reached the minimum threshold defined by the system, this step adjusts the passenger’s video frame rate as specified below, then proceeds to Step 3 to continue processing. By lowering the frame rate using Equation (81) and updating the frame rate index as in Equation (82), the system decreases bandwidth demand while maintaining video continuity, then returns to Step 3 for further processing.

- Step 14:

- When resolution reduction is supported and the current level exceeds the minimum threshold, the system adapts the video resolution per the given equation and re-enters Step 3. By adjusting the resolution according to Equation (83) and updating the resolution index in Equation (84), this step reduces bandwidth usage while preserving playback.

- Step 15:

- The base stations, distributed access point clusters, and multicast tree nodes involved in the bandwidth allocation process are notified to download all video portions, and the following variables are updated: where represents the index of the video segment currently being played by the EV. This step updates key variables to reflect the current playback and buffering status: is set to the updated buffer capacity , is updated to the latest preloaded segment index , and the remaining video length is recalculated based on the total segments (), the updated last preloaded segment, and the current playback position . This ensures synchronization of system state with ongoing video playback and preloading.

- Step 16:

- This module continues video playback in the background mode until the EV user stops the video playback.

- Step 17:

- Upon arriving at the next intersection, if is less than , the system returns to Step 3 to resume execution.
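
The bookkeeping described in Step 9 can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the names `PreloadState` and `apply_preload` are hypothetical, and the sketch assumes that all segments have the same playback duration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PreloadState:
    """Hypothetical container for the three temporary variables of Step 9."""
    remaining_s: float       # playable duration still to be covered (seconds)
    buffer_used_bytes: int   # buffer space consumed so far
    last_buffered_idx: int   # index of the last buffered segment


def apply_preload(state: PreloadState,
                  segment_duration_s: float,
                  layer_sizes_bytes: List[List[int]]) -> PreloadState:
    """Return the state after preloading len(layer_sizes_bytes) new segments.

    layer_sizes_bytes[k] lists the sizes of every encoding layer of the
    k-th newly downloaded segment (assumption: equal-duration segments).
    """
    n_new = len(layer_sizes_bytes)
    added_bytes = sum(sum(layers) for layers in layer_sizes_bytes)
    return PreloadState(
        remaining_s=state.remaining_s - n_new * segment_duration_s,
        buffer_used_bytes=state.buffer_used_bytes + added_bytes,
        last_buffered_idx=state.last_buffered_idx + n_new,
    )
```

For example, preloading two 2-second segments whose layers total 500 and 400 bytes reduces the remaining duration by 4 s, adds 900 bytes to the buffer usage, and advances the index by two.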

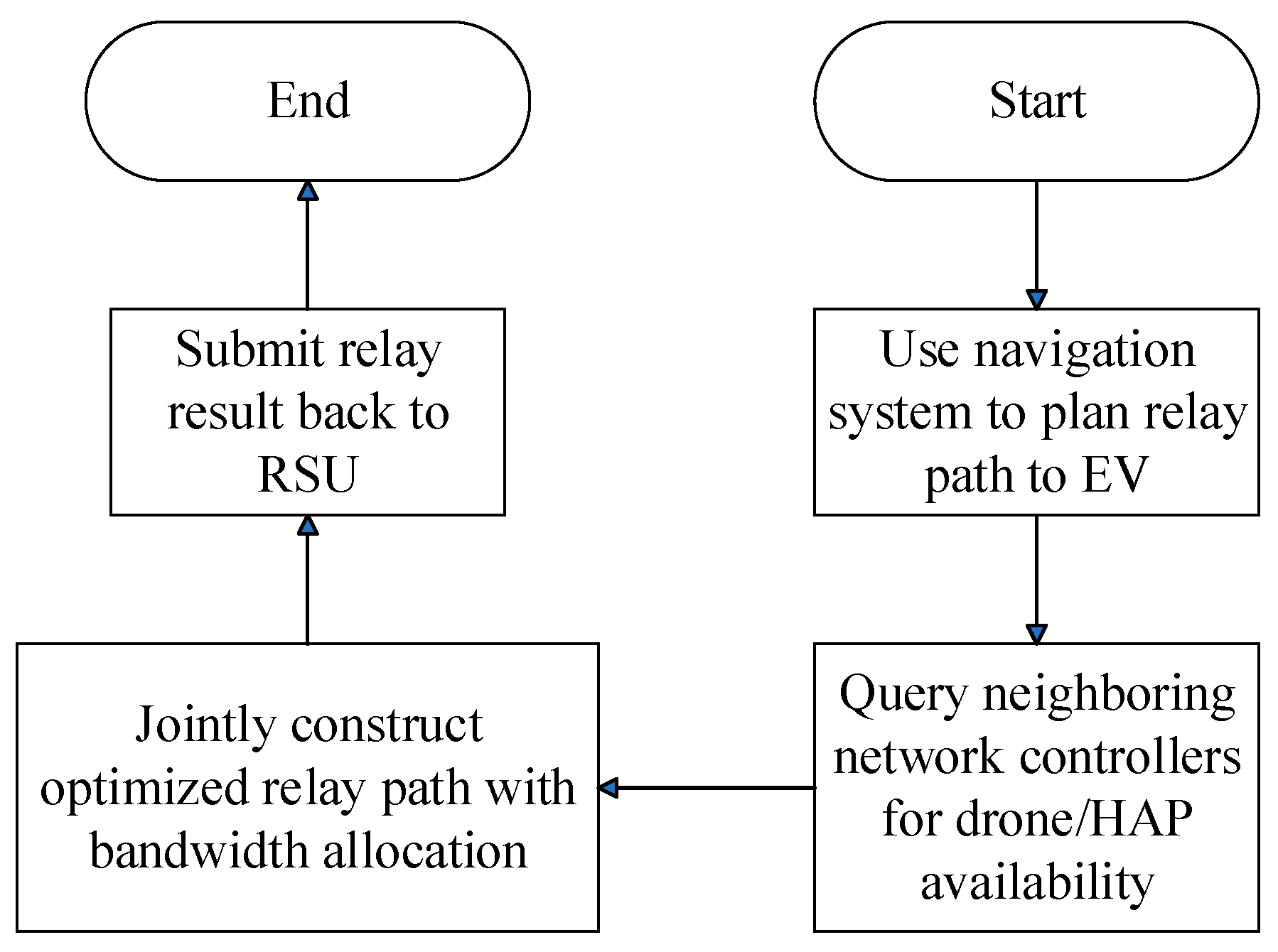
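
The fallback order in Steps 11–14 (drop enhancement layers first, then lower the frame rate, then lower the resolution) can be sketched as a single function that applies one degradation per call. The names `StreamProfile` and `degrade_once` and the two ladders are hypothetical placeholders; the actual adjustments are those of Equations (80)–(84). The text does not say what happens once no further degradation is possible, so the sketch simply returns `None` in that case.

```python
from dataclasses import dataclass, replace
from typing import Optional

# Hypothetical ladders, ordered from highest to lowest quality.
FRAME_RATES = (60, 30, 24)
RESOLUTIONS = ("2160p", "1440p", "720p")


@dataclass(frozen=True)
class StreamProfile:
    enhancement_layers: int        # number of enhancement layers still sent
    frame_rate_idx: int            # index into FRAME_RATES
    resolution_idx: int            # index into RESOLUTIONS
    allow_fps_reduction: bool = True
    allow_res_reduction: bool = True


def degrade_once(p: StreamProfile) -> Optional[StreamProfile]:
    """Apply one degradation in the order of Steps 11-14, or return None."""
    if p.enhancement_layers > 0:
        # Steps 11-12: discard all enhancement layers.
        return replace(p, enhancement_layers=0)
    if p.allow_fps_reduction and p.frame_rate_idx < len(FRAME_RATES) - 1:
        # Step 13: move one level down the frame rate ladder.
        return replace(p, frame_rate_idx=p.frame_rate_idx + 1)
    if p.allow_res_reduction and p.resolution_idx < len(RESOLUTIONS) - 1:
        # Step 14: move one level down the resolution ladder.
        return replace(p, resolution_idx=p.resolution_idx + 1)
    return None  # nothing left to degrade (behavior not specified in the text)
```

After each successful call the module would re-enter Step 3 with the returned profile, so the frame rate reaches its minimum before the resolution is touched.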

3.4. Emergency/Live Video Aerial-Ground Network Bandwidth Support Module

- Step 1:

- The regional aerial–ground network management server uses navigation software to determine the relay route from its managed base stations or distributed access point clusters to the EV’s location.

- Step 2:

- The server queries nearby regional aerial–ground network management servers for information on aerial platform and drone deployment within their respective coverage areas.

- Step 3:

- In collaboration with the surrounding regional aerial–ground network management servers, a bandwidth relay path is established with the EV requesting bandwidth using the following optimization formulas: subject to: where and represent the m-th uplink and downlink aerial–ground relay paths that can support ’s bandwidth demand. and are the numbers of uplink and downlink relay paths, while and denote the numbers of nodes along the m-th uplink and downlink relay paths, respectively. and represent the source base stations or distributed access point clusters for the uplink and downlink aerial–ground relay paths, respectively, while is the EV σ at the end of the relay path. and represent the remaining available bandwidth from and from to , respectively, while and denote the required bandwidths for uplink and downlink transmission. Unless otherwise noted, all parameters are as defined in Section 3.2. Equations (88) and (89) minimize the total bandwidth load across multiple relay paths, balancing the demands and available capacity. Paths and , defined in Equations (90) and (91), represent sequences of nodes, from base stations or access points to the EV, that must maintain sufficient bandwidth, as specified in Equations (92)–(97). The constraints ensure that each path terminates at the EV, that uplink and downlink bandwidth requirements do not exceed available resources, and that the overall allocation efficiently supports the EV’s streaming demands.

- Step 4:

- The bandwidth relay setup results are sent to the RSU.
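
The feasibility conditions described for Step 3 (each path ends at the EV, and every hop has enough remaining bandwidth for the demand) can be sketched as below. The function names and the link map are hypothetical. Choosing the feasible path with the fewest hops is only a stand-in for the objective in Equations (88) and (89), which is not reproduced here. The same check would be run once with the uplink link map and demand, and once with the downlink ones.

```python
from typing import Dict, List, Optional, Tuple

Link = Tuple[str, str]  # (from_node, to_node)


def path_is_feasible(path: List[str], ev: str,
                     residual: Dict[Link, float], demand: float) -> bool:
    """True if the path ends at the EV and every hop can carry the demand."""
    if len(path) < 2 or path[-1] != ev:
        return False
    return all(residual.get((a, b), 0.0) >= demand
               for a, b in zip(path, path[1:]))


def pick_relay_path(paths: List[List[str]], ev: str,
                    residual: Dict[Link, float],
                    demand: float) -> Optional[List[str]]:
    """Return the feasible path with the fewest hops, or None if none exists.

    Hop count is an illustrative tie-breaker, not the paper's objective.
    """
    feasible = [p for p in paths if path_is_feasible(p, ev, residual, demand)]
    return min(feasible, key=len, default=None)
```

A `None` result corresponds to the case in which no drone or aerial platform relay can be established for the request.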

3.5. Non-Live Video Aerial-Ground Network Bandwidth Support Module

- Step 1:

- The regional aerial–ground network management server uses navigation software to determine the relay route from its managed base stations or distributed access point clusters to the EV’s location.

- Step 2:

- The server queries neighboring regional aerial–ground network management servers for aerial platform and drone deployment information within their respective coverage areas.

- Step 3:

- In collaboration with the surrounding regional aerial–ground network management servers, an aerial–ground network relay path is established to pre-download video segments for the EV as follows: subject to: where represents the m-th aerial–ground network relay path that supports the bandwidth transfer for video from multicast tree node . is the number of aerial–ground relay paths for video segment transfer, while denotes the number of nodes along the m-th aerial–ground relay path. stands for the source base station or distributed access point cluster for the aerial–ground network relay path. represents, at time , the remaining bandwidth for downloading from node to node , and denotes, at time , the total bandwidth required for downloading video segments from node to node . Unless otherwise noted, all parameters are as defined in Section 3.3. Equation (98) formulates an optimization problem that minimizes the total weighted difference between the bandwidth used to download video segments over multiple aerial–ground relay paths and the sizes of the pre-fetched video segments, balancing network load and caching efficiency. Each relay path , defined in Equation (99), is a sequence of nodes, from source base stations or access point clusters to the EV, that transmit video data from multicast tree nodes to the EV. Constraint (100) ensures that the combined bandwidth provided by these relay paths meets or exceeds the demand needed to download all required video segments for smooth playback. Equation (101) guarantees that each relay path ends at the EV’s current location. Finally, inequality (102) enforces that the available bandwidth on each link along the relay path at time is sufficient to support the required video download rate, preventing network congestion or bottlenecks during preloading.

- Step 4:

- The finalized bandwidth relay settings are submitted to the RSU.
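
Constraint (100), as described above, requires the combined bandwidth of the chosen relay paths to cover the preloading demand. One way to illustrate this is a greedy selection by bottleneck bandwidth. This is a sketch under stated assumptions, not the optimization of Equation (98): the function names are hypothetical, the candidate paths are assumed to be link-disjoint (so their bottlenecks can be added), and the greedy rule replaces the weighted objective.

```python
from typing import Dict, List, Optional, Tuple

Link = Tuple[str, str]  # (from_node, to_node)


def bottleneck(path: List[str], residual: Dict[Link, float]) -> float:
    """Smallest remaining bandwidth over the hops of a path."""
    return min(residual.get((a, b), 0.0) for a, b in zip(path, path[1:]))


def select_paths(paths: List[List[str]], ev: str,
                 residual: Dict[Link, float],
                 demand: float) -> Optional[Tuple[List[List[str]], float]]:
    """Greedily add the widest paths ending at the EV until demand is met.

    Returns (chosen_paths, total_bandwidth), or None if the demand cannot
    be covered. Assumes link-disjoint candidate paths.
    """
    candidates = [(bottleneck(p, residual), p)
                  for p in paths if len(p) >= 2 and p[-1] == ev]
    candidates.sort(key=lambda c: c[0], reverse=True)
    chosen: List[List[str]] = []
    total = 0.0
    for capacity, path in candidates:
        if total >= demand:
            break
        if capacity <= 0.0:
            continue
        chosen.append(path)
        total += capacity
    return (chosen, total) if total >= demand else None
```

If the paths share links, summing bottlenecks overestimates the usable bandwidth, which is one reason the full formulation constrains each link separately.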

3.6. Decision Metrics, Context Parameters, and Optimization Framework

4. Simulation Results and Analysis

4.1. Computational Complexity Analysis

4.1.1. Comparison to the Baseline

4.1.2. Comparative Summary

4.1.3. Communication and Coordination Overhead

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- He, Y.; Wang, D.; Huang, F.; Zhang, R.; Min, L. Aerial-ground integrated vehicular networks: A UAV-vehicle collaboration perspective. IEEE Trans. Intell. Transp. Syst. 2024, 25, 5154–5169.

- Shaheen, S.; Mamyrbayev, O.; Hashmi, M.T.; Arshad, H.; Akhmediyarova, A.; Oralbekova, D. Location-Based Hybrid Video Streaming Protocol for VANETs. Int. J. Networked Distrib. Comput. 2025, 13, 1–15.

- Dong, P.; Li, S.; Gong, X.; Zhang, L. HVASR: Enhancing 360-degree video delivery with viewport-aware super resolution. Inf. Sci. 2025, 691, 121609.

- Palma, B.; Casanova-Salas, P.; Gimeno, J.; Pérez-Aixendri, M.; Riera, J.V. Exploring Immersive Solutions for Surgery in the Virtuality Continuum: A Review. Surgeries 2025, 6, 35.

- Garrido Abenza, P.P.; Malumbres, M.P.; Piñol, P.; López-Granado, O. A multi-channel packet scheduling approach to improving video delivery performance in vehicular networks. Computers 2024, 13, 16.

- Xue, Q.; Ji, C.; Ma, S.; Guo, J.; Xu, Y.; Chen, Q.; Zhang, W. A survey of beam management for mmWave and THz communications towards 6G. IEEE Commun. Surv. Tutor. 2024, 26, 1520–1559.

- Jiang, W.; Zhou, Q.; He, J.; Habibi, M.A.; Melnyk, S.; El-Absi, M.; Han, B.; Renzo, D.; Schotten, H.D.; Luo, F.; et al. Terahertz communications and sensing for 6G and beyond: A comprehensive review. IEEE Commun. Surv. Tutor. 2024, 26, 2326–2381.

- Song, Y.; Gong, Z.; Chen, Y.; Li, C. Distributed Massive MIMO With Low Resolution ADCs for Massive Random Access. IEEE J. Sel. Top. Signal Process. 2024, 18, 1381–1395.

- Wandelt, S.; Wang, S.; Zheng, C.; Sun, X. Aerial: A meta review and discussion of challenges toward unmanned aerial vehicle operations in logistics, mobility, and monitoring. IEEE Trans. Intell. Transp. Syst. 2024, 25, 6276–6289.

- Goudarzi, S.; Soleymani, S.A.; Anisi, M.H.; Jindal, A.; Xiao, P. Optimizing UAV-Assisted Vehicular Edge Computing with Age of Information: A SAC-Based Solution. IEEE Internet Things J. 2025, 12, 4555–4569.

- Saad, M.M.; Jamshed, M.A.; Tariq, M.A.; Nauman, A.; Kim, D. Knowledge-Empowered Distributed Learning Platform in Internet of Unmanned Aerial Agents to Support NR-V2X Communication. IEEE Internet Things J. 2025; early access.

- Su, Y.; Huang, L.; Liwang, M. Joint power control and time allocation for UAV-assisted IoV networks over licensed and unlicensed spectrum. IEEE Internet Things J. 2024, 11, 1522–1533.

- Abderrahim, W.; Amin, O.; Shihada, B. Data center-enabled high altitude platforms: A green computing alternative. IEEE Trans. Mob. Comput. 2024, 23, 6149–6162.

- Lou, Z.; Belmekki, B.E.Y.; Alouini, M.S. Coverage analysis of large-scale HAPS networks using directional beams. IEEE Trans. Aerosp. Electron. Syst. 2025; early access.

- Abbasi, O.; Yadav, A.; Yanikomeroglu, H.; Đào, N.D.; Senarath, G.; Zhu, P. HAPS for 6G networks: Potential use cases, open challenges, and possible solutions. IEEE Wirel. Commun. 2024, 31, 324–331.

- Shamsabadi, A.A.; Yadav, A.; Yanikomeroglu, H. Enhancing next-generation urban connectivity: Is the integrated HAPS-terrestrial network a solution? IEEE Commun. Lett. 2024, 8, 1112–1116.

- Chow, C.W. Recent advances and future perspectives in optical wireless communication, free space optical communication and sensing for 6G. J. Light. Technol. 2024, 42, 3972–3980.

- Elfikky, A.; Singh, M.; Boghdady, A.I.; Abd El-Mottaleb, S.A.; Mohsan, S.A.H.; Aly, M.H. Spatial diversity-based FSO links under adverse weather conditions: Performance analysis. Opt. Quantum Electron. 2024, 56, 826.

- Sathish, K.; Mahalingam, H.; Padmaja, K.; Makala, R.; Krishnaiah, N.R. An integrated design for improving spectral efficiency of FSO communication system in 6G applications. Int. J. Commun. Syst. 2024, 37, e5776.

- Seeling, P.; Reisslein, M. Video transport evaluation with H.264 video traces. IEEE Commun. Surv. Tutor. 2011, 14, 1142–1165.

- Barman, N.; Martini, M.G. User generated HDR gaming video streaming: Dataset, codec comparison, and challenges. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 1236–1249.

- Lee, M.; Song, H.; Park, J.; Jeon, B.; Kang, J.; Kim, J.G.; Lee, Y.L.; Kang, J.W.; Sim, D. Overview of versatile video coding (H.266/VVC) and its coding performance analysis. IEIE Trans. Smart Process. Comput. 2023, 12, 122–154.

- Bian, Y.; Sheng, X.; Li, L.; Liu, D. LSSVC: A Learned Spatially Scalable Video Coding Scheme. IEEE Trans. Image Process. 2024, 33, 3314–3327.

- Ye, Z.; Li, Q.; Ma, X.; Zhao, D.; Jiang, Y.; Ma, L.; Yi, B.; Muntean, G.M. VRCT: A viewport reconstruction-based 360 video caching solution for tile-adaptive streaming. IEEE Trans. Broadcast. 2023, 69, 691–703.

- Wang, Y.; Cheng, S.; Gao, N.; Bi, T.; Jiang, T. JSFBA: Joint Segment and Frame Bitrate Adaptation for Real-Time Video Analytics. IEEE Trans. Mob. Comput. 2025, 24, 4874–4888.

- Zhou, W.; Lu, Y.; Pan, W.; Chen, Z.; Qin, J. Dynamic Bitrate Adaptation and Bandwidth Allocation for MEC-Enabled Video Streaming. IEEE Commun. Lett. 2024, 28, 2121–2125.

- Xie, Y.; Xu, D.; Zhang, T.; Yu, K.; Hussain, A.; Guizani, M. VLC-Assisted Safety Message Dissemination in Roadside Infrastructure-Less IoV Systems: Modeling and Analysis. IEEE Internet Things J. 2024, 11, 8185–8198.

- Dai, X.; Xiao, Z.; Jiang, H.; Lui, J.C. UAV-assisted task offloading in vehicular edge computing networks. IEEE Trans. Mob. Comput. 2023, 23, 2520–2534.

- Zahoor, K.; Bilal, K.; Erbad, A.; Mohamed, A.; Guizani, M. Multicast at edge: An edge network architecture for service-less crowdsourced live video multicast. IEEE Access 2021, 9, 59508–59526.

- Freitas, M.; Souza, D.; Borges, G.; Cavalcante, A.M.; da Costa, D.B.; Marquezini, M.; Almeida, I.; Rodrigues, R.; Costa, J.C. Matched-decision AP selection for user-centric cell-free massive MIMO networks. IEEE Trans. Veh. Technol. 2023, 72, 6375–6391.

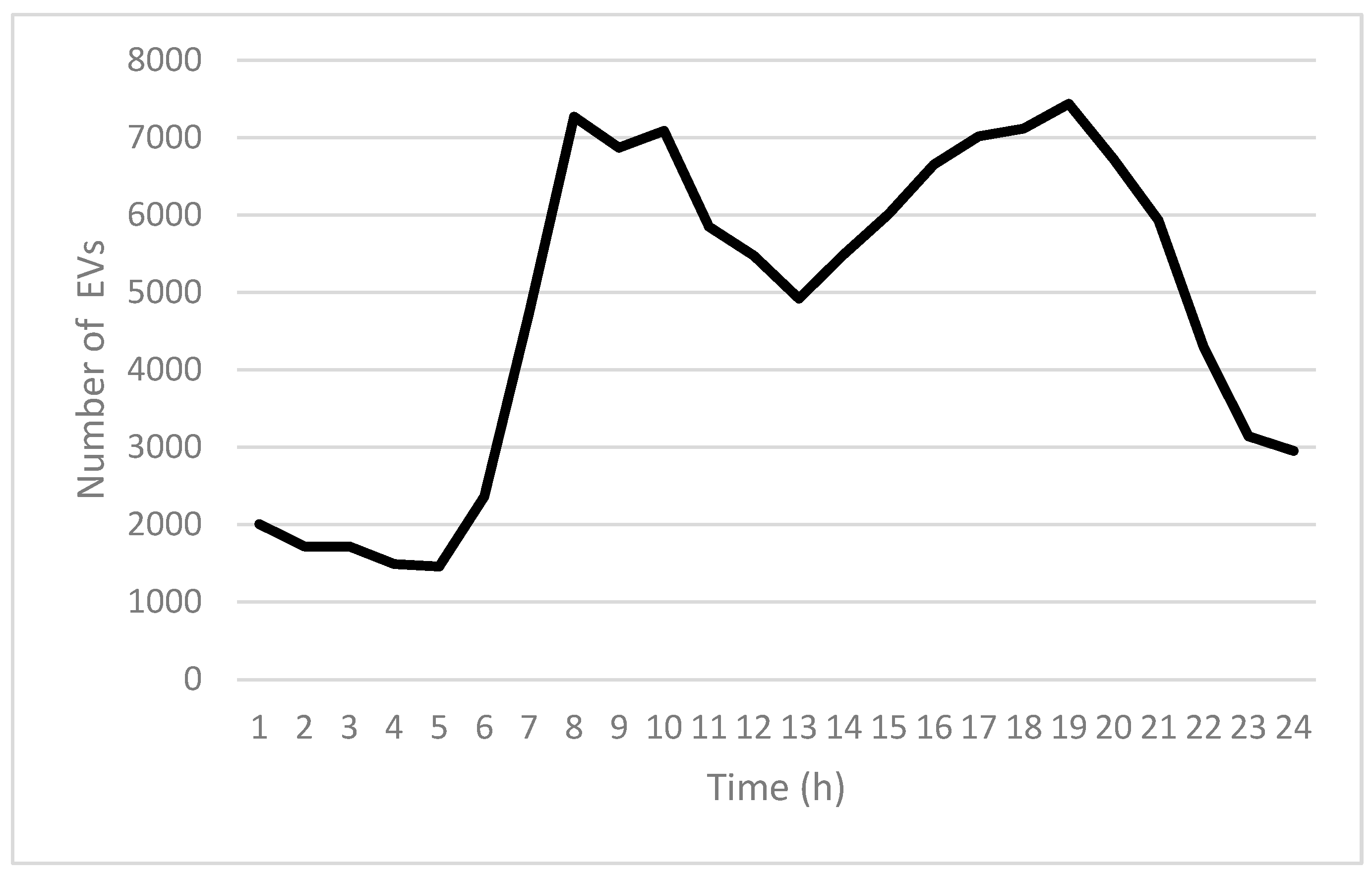

- New York City Vehicle Flow Statistics. Available online: https://www.nyc.gov/html/dot/html/about/datafeeds.shtml#trafficcounts (accessed on 3 April 2025).

- New York City Department of Transportation (NYC DOT). Annual Traffic Volume Report: Manhattan Vehicle Counts and Projections; NYC DOT: New York, NY, USA, 2024.

- International Energy Agency (IEA). Global EV Outlook 2024: Urban Adoption Trends and Infrastructure Demands; IEA: Paris, France, 2024.

- Smith, A.B.; Johnson, C.D.; Lee, E.F. Projected Penetration Rates of Electric Vehicles in Metropolitan Areas: A 2030 Outlook. Sustainability 2023, 15, 6789.

- Bai, F.; Sadagopan, N.; Helmy, A. IMPORTANT: A framework to systematically analyze the impact of mobility on performance of routing protocols for adhoc networks. Proc. IEEE Infocom 2003, 2, 825–835.

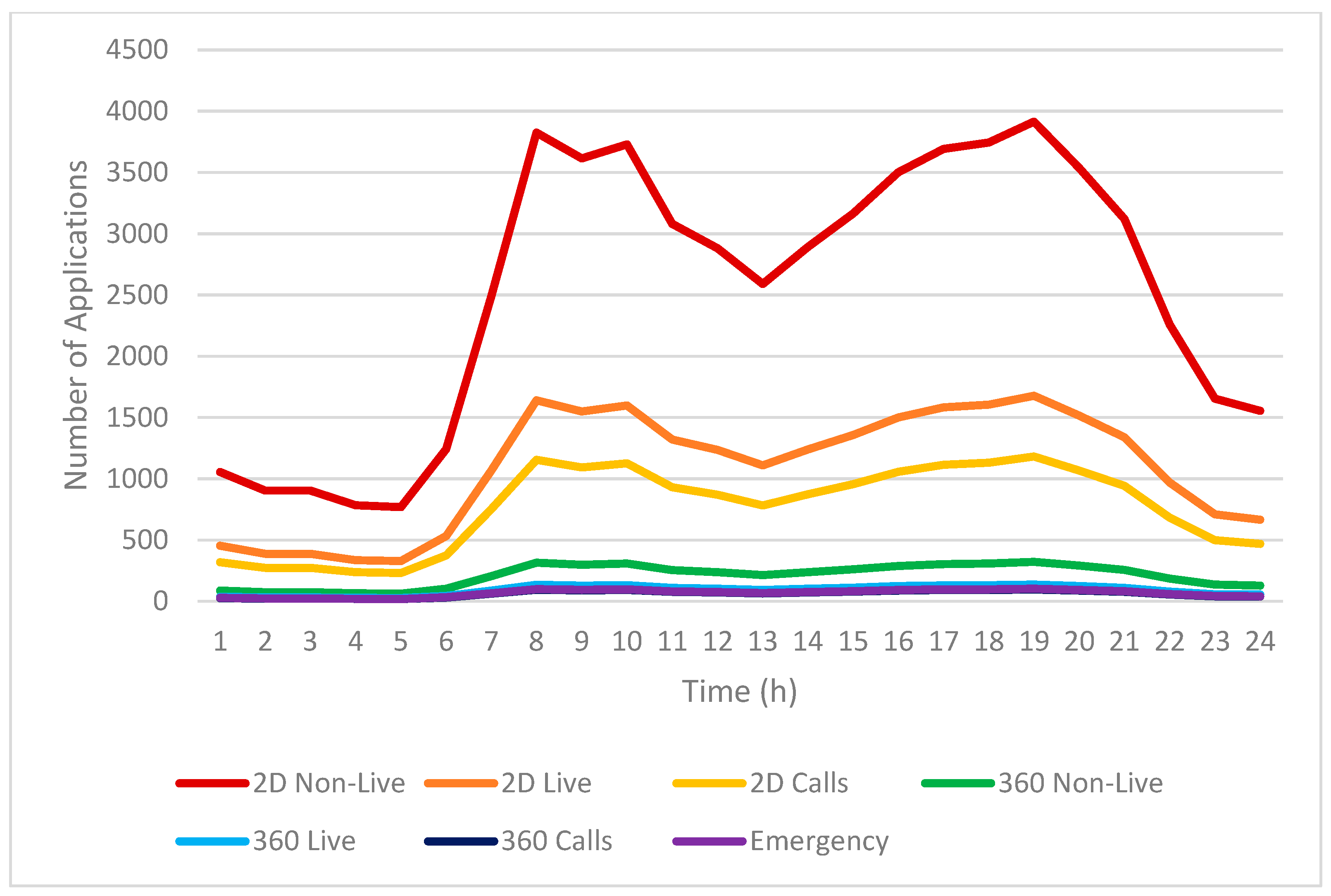

- Cisco Systems. Cisco Visual Networking Index (VNI): Mobile Video Traffic Forecast 2023–2028; Cisco: San Jose, CA, USA, 2024.

- IEEE 802.11ad Working Group. Multi-Gigabit Wireless Video Traffic Analysis in Dense Urban Environments. IEEE Access 2024, 12, 3456–3478.

- Zhang, X.; Imoize, A.L.; Do, D.-T. Terahertz and mmWave Spectrum Efficiency in 6G: A Capacity Optimization Framework. IEEE Access 2024, 12, 5678–5695.

- Time and Date AS. Historical Weather Data, Manhattan, New York, USA. Available online: https://www.timeanddate.com/weather/usa/new-york/historic (accessed on 13 April 2025).

- Lee, J.Y.; Park, S.I.; Kwon, S.; Lim, B.M.; Kim, H.M.; Hur, N.; Pesin, A.; Chevet, J.-C.; Llach, J.; Stein, A.J.; et al. Efficient transmission of multiple broadcasting services using LDM and SHVC. IEEE Trans. Broadcast. 2017, 64, 177–187.

- Nguyen, D.; Hung, N.V.; Phong, N.T.; Huong, T.T.; Thang, T.C. Scalable multicast for live 360-degree video streaming over mobile networks. IEEE Access 2022, 10, 38802–38812.

| Ref. | Focus/Contribution | Technology | Strength | Limitation |
|---|---|---|---|---|
| [1] | Drone–vehicle collaboration for improved coverage and reliability | Drone-assisted V2X | Enhances connectivity, supports mobility | Lacks support for adaptive video delivery |
| [2,3,4] | Challenges of network instability in urban vehicular environments | Cellular vehicular networks | Highlights QoS degradation factors | No mitigation strategy for infotainment traffic |
| [5,6] | Analyze 5G limitations in high-density, high-mobility conditions | 5G cellular, mmWave | Shows performance bottlenecks | Lacks scalability and robustness for infotainment |
| [7,8] | Introduction of 6G technologies for enhanced capacity and latency | Terahertz (THz), mMIMO | Promises significant gains in capacity | Underexplored in vehicular infotainment scenarios |
| [9] | Drones in smart transportation systems | Drone-assisted V2X | Flexible deployment, rural coverage | Static, lacks media awareness or adaptability |
| [10] | SDN and RL for drone-assisted edge computing | SDN, MEC, reinforcement learning | Efficient task offloading | Ignores video streaming and UAV–media coordination |
| [11] | Multi-drone learning for responsive network control | Distributed learning, multi-UAV | Enhances real-time adaptation | No support for video layer control |
| [12] | Spectrum sharing for drone–ground coexistence | Hybrid licensed/unlicensed bands | Improved PHY coexistence | Lacks scalable content delivery framework |
| [13,14,15,16] | HAPs for wide-area and persistent connectivity | HAPs (balloons, solar drones) | Low latency, wide coverage | No content-layer integration for infotainment |
| [17,18] | High-speed optical links between aerial platforms | FSO links | High data rate, interference-free | Weather-sensitive, ground-level use not addressed |
| [19] | Hybrid switching to overcome FSO reliability issues | FSO/RF hybrid | Resilient against weather interference | Focused on inter-HAP, not UAV–ground applications |
| [20,21,22] | SVC for layered video compression and transmission | H.264/H.265/H.266 | Improved compression and scalability | Lacks dynamic integration with network state |
| [23] | Adaptive video layer delivery | SVC | Flexible bandwidth usage | Not integrated with UAV or mobility systems |
| [24] | Tile-based 360° video delivery | Tiling, viewport-aware streaming | Reduces redundancy | No UAV or context-aware delivery support |
| [25] | Joint segment and frame bitrate adaptation | Bitrate adaptation | Improves QoE with fine-grained control | High computational complexity |
| [26] | Dynamic bitrate adaptation and bandwidth allocation | Adaptive streaming | Efficient resource utilization | Limited scalability in dynamic networks |
| [27] | VLC for short-range V2V communication | VLC | High-speed local data exchange | No integration with aerial relays or global delivery |

| Wireless Communication Technology | Maximum Communication Distance | Maximum Capacity |
|---|---|---|
| mmWave | 100 m | 20 Gbps |
| THz | 500 m | 240 Gbps |
| FSO | HAPs 20 km height | 335 Gbps |
| VLC | 14 m | 270 Mbps |
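
As an illustration of how the limits in this table could be used, the sketch below lists the link technologies whose range and capacity both cover a given hop. The function name `candidate_links` is hypothetical, and the FSO entry is interpreted as a 20 km range, which is an assumption drawn from the "HAPs 20 km height" cell rather than a stated distance limit.

```python
from typing import Dict, List, Tuple

# (maximum distance in metres, maximum capacity in Mbps), taken from the table.
# Assumption: FSO range is read as 20 km from "HAPs 20 km height".
LINK_LIMITS: Dict[str, Tuple[float, float]] = {
    "mmWave": (100.0, 20_000.0),
    "THz": (500.0, 240_000.0),
    "FSO": (20_000.0, 335_000.0),
    "VLC": (14.0, 270.0),
}


def candidate_links(distance_m: float, demand_mbps: float) -> List[str]:
    """Return the technologies that can span the distance and carry the demand."""
    return sorted(
        name
        for name, (max_distance_m, max_capacity_mbps) in LINK_LIMITS.items()
        if distance_m <= max_distance_m and demand_mbps <= max_capacity_mbps
    )
```

For instance, a 50 m hop that needs 200 Mbps rules out VLC on range alone, whereas a 300 m hop leaves only THz and FSO.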

| Video Classification | Resolution and Frame Rate Specifications | Expected Bandwidth |
|---|---|---|
| 2D video | 7680 × 4320, 60 fps | 135–156 Mbps |
| 2D video | 3840 × 2160, 60 fps | 38–44 Mbps |
| 2D video | 2560 × 1440, 60 fps | 17 Mbps |
| 2D video | 1280 × 720, 60 fps | 5 Mbps |
| 360-degree video | 7680 × 3840, 60 fps | 125–309 Mbps |
| 360-degree video | 4090 × 4090, 60 fps | 70–174 Mbps |
| 360-degree video | 2890 × 1920, 60 fps | 33–82 Mbps |
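
To give a sense of scale, the sketch below computes how many streams of one class fit on a link when every stream is budgeted at the upper bound of its expected bandwidth. The names are hypothetical, and the calculation ignores protocol overhead, multicast sharing, and channel variation, so it is only a rough upper bound.

```python
from typing import Dict, Tuple

# Upper bound of the expected bandwidth (Mbps) per stream, from the table.
UPPER_MBPS: Dict[Tuple[str, str], float] = {
    ("2D", "7680x4320"): 156.0,
    ("2D", "3840x2160"): 44.0,
    ("2D", "2560x1440"): 17.0,
    ("2D", "1280x720"): 5.0,
    ("360", "7680x3840"): 309.0,
    ("360", "4090x4090"): 174.0,
    ("360", "2890x1920"): 82.0,
}


def max_streams(capacity_mbps: float, video_class: Tuple[str, str]) -> int:
    """Whole number of streams of the given class that fit in the capacity."""
    return int(capacity_mbps // UPPER_MBPS[video_class])
```

With the capacities listed for the wireless technologies, a 20 Gbps mmWave link would carry at most 128 such 8K 2D streams, while a 270 Mbps VLC link would carry one.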

| Characteristic | This Work | [1] |
|---|---|---|
| Problem domain | Heuristic-driven video streaming and relay planning | Analytical coverage and sum-rate optimization |
| Dominant variables | M, L, R, N | D (channels or drones) |
| Worst-case complexity | – | |
| Operations cost | Sorting, mapping, path planning | Closed-form formulas + iterative convex solves |
| Scalability | Linear/log-linear in practical parameters | Polynomial in D; suitable for moderate drone sets |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, C.-J.; Chen, L.-C.; Cheng, Y.-S.; Hu, K.-W.; Jian, M.-E. Enhancing Infotainment Services in Integrated Aerial–Ground Mobility Networks. Sensors 2025, 25, 3891. https://doi.org/10.3390/s25133891
