P300 ERP System Utilizing Wireless Visual Stimulus Presentation Devices

Abstract

1. Introduction

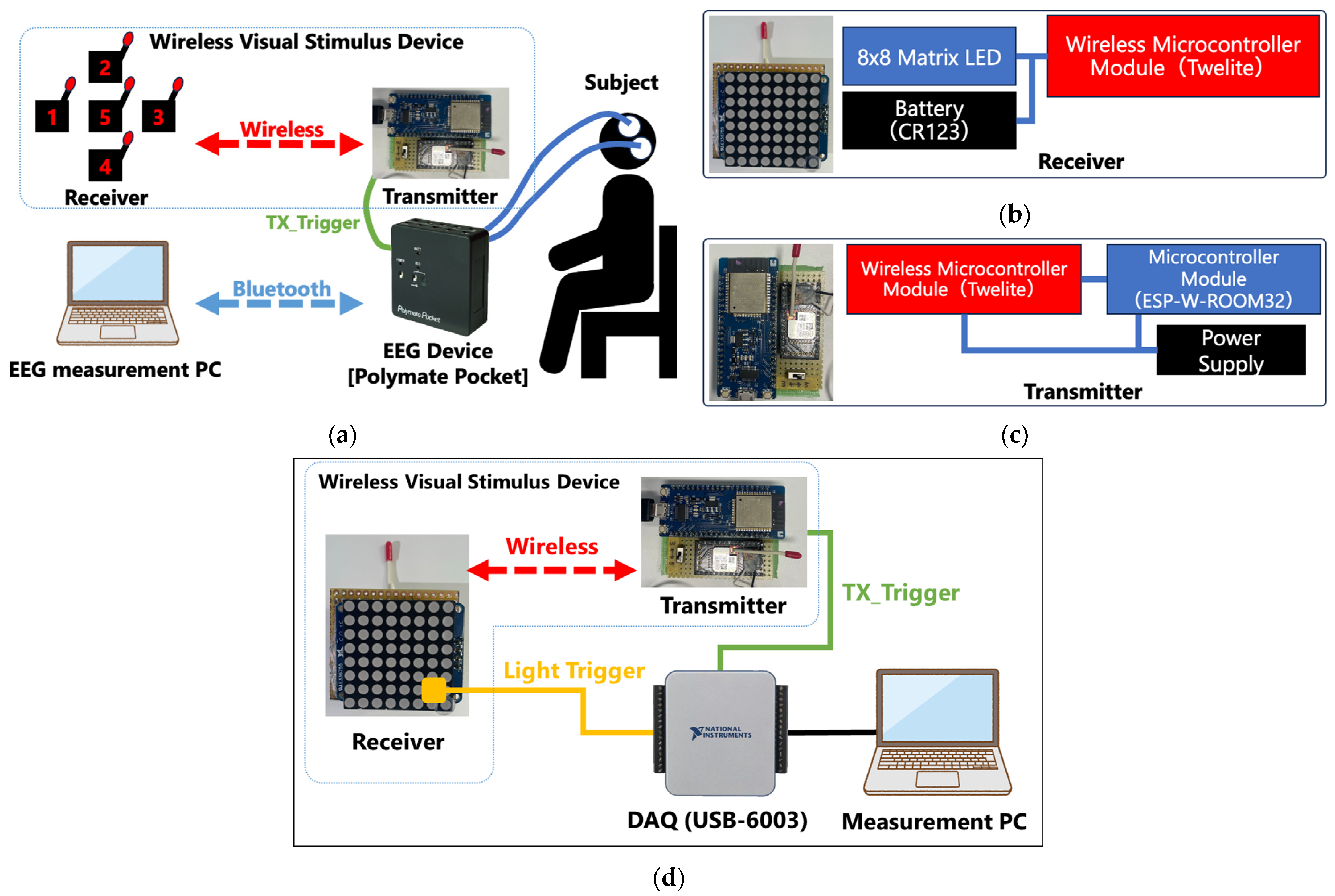

2. Proposed System

2.1. Hardware Configuration

2.2. Visual Stimulation Patterns

2.3. EEG Measurement Settings

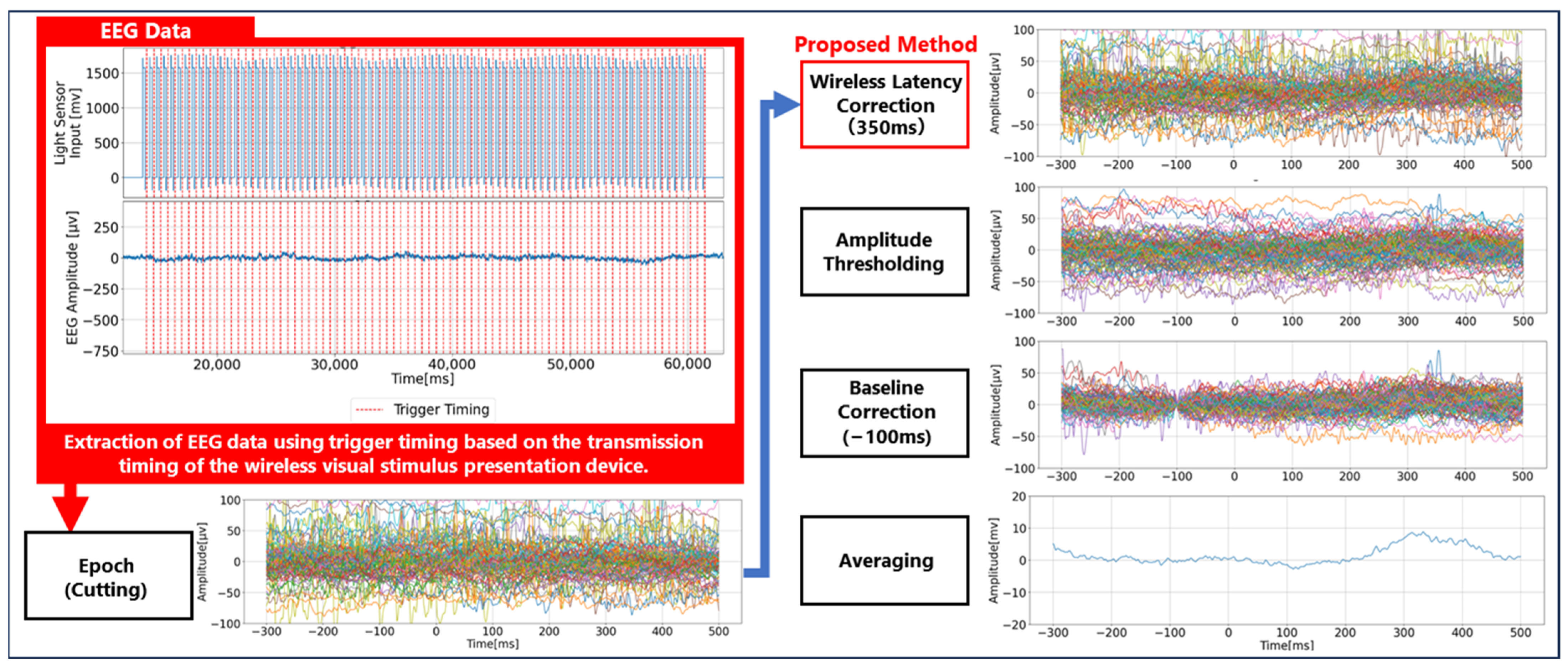

2.4. P300 Analysis Method

3. Experiment 1: Evaluation of Wireless Transmission Delay in Visual Stimulus Presentation Device

3.1. Experimental Setup for Evaluation of Wireless Transmission Delay

3.2. Experimental Results for Evaluation of Wireless Transmission Delay

4. Experiment 2: Evaluation of Waveform Characteristics Based on P300 Experiments Using Proposed Wireless Visual Stimulus Presentation Device

4.1. Experimental Setup for Evaluation of Waveform Characteristics

- Transmission signal-based corrected (TSC) method: a method using the proposed temporal correction from Experiment 1, where a fixed delay of 350 ms is added to the flash command timing to estimate stimulus onset.

- Actual visual onset-based (AVO) method: an ideal method using the actual visual onset time directly measured by the photodetector, serving as a reference for accurate P300 timing.

- Jitter-modeled correction (JMC) method: a method combining the measured visual onset time with a modeled delay distribution derived from Experiment 1, where random delays sampled from a Gaussian distribution (mean: 352.1 ms, standard deviation: 30.9 ms) are added to simulate real-world wireless latency characteristics.
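The three onset-estimation methods differ only in how the stimulus onset is timestamped before epoching. The sketch below shows this under stated assumptions. All times are in milliseconds. The function names and example values are hypothetical, and only the 350 ms, 352.1 ms and 30.9 ms constants come from the text.

For JMC, the code takes one plausible reading: the flash-command time plus a delay drawn from the Gaussian model. This text does not specify exactly how the authors combine that delay with the measured visual onset, so treat this as an illustration, not their implementation.

```python
import numpy as np

# Delay parameters quoted in the text (Experiment 1); all times are in ms.
TSC_FIXED_DELAY_MS = 350.0   # TSC: fixed delay added to the flash-command time
JMC_DELAY_MEAN_MS = 352.1    # JMC: mean of the modeled wireless delay
JMC_DELAY_SD_MS = 30.9       # JMC: standard deviation of the modeled delay


def onset_tsc(command_ms):
    """TSC: estimated onset = flash-command time + fixed 350 ms delay."""
    return np.asarray(command_ms, dtype=float) + TSC_FIXED_DELAY_MS


def onset_avo(photodetector_ms):
    """AVO: photodetector-measured visual onset, used as the timing reference."""
    return np.asarray(photodetector_ms, dtype=float)


def onset_jmc(command_ms, rng):
    """JMC (assumed reading): command time + delay drawn from N(352.1, 30.9^2)."""
    t = np.asarray(command_ms, dtype=float)
    return t + rng.normal(JMC_DELAY_MEAN_MS, JMC_DELAY_SD_MS, size=t.shape)


def timing_error(estimated_ms, reference_ms):
    """Per-trial onset error (estimate minus AVO reference), in ms."""
    return np.asarray(estimated_ms, dtype=float) - np.asarray(reference_ms, dtype=float)


# Hypothetical example: three flash commands and their measured visual onsets.
rng = np.random.default_rng(0)
cmd = np.array([1000.0, 2000.0, 3000.0])
measured = cmd + np.array([340.0, 360.0, 355.0])
err_tsc = timing_error(onset_tsc(cmd), onset_avo(measured))   # [10, -10, -5]
err_jmc = timing_error(onset_jmc(cmd, rng), onset_avo(measured))
```

With a fixed correction, TSC is exact only when the true delay happens to equal 350 ms. Its residual error is the trial-to-trial deviation of the wireless delay from that constant. JMC instead injects random jitter of the measured spread, so any smearing of the averaged P300 it produces reflects the modeled latency variability.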

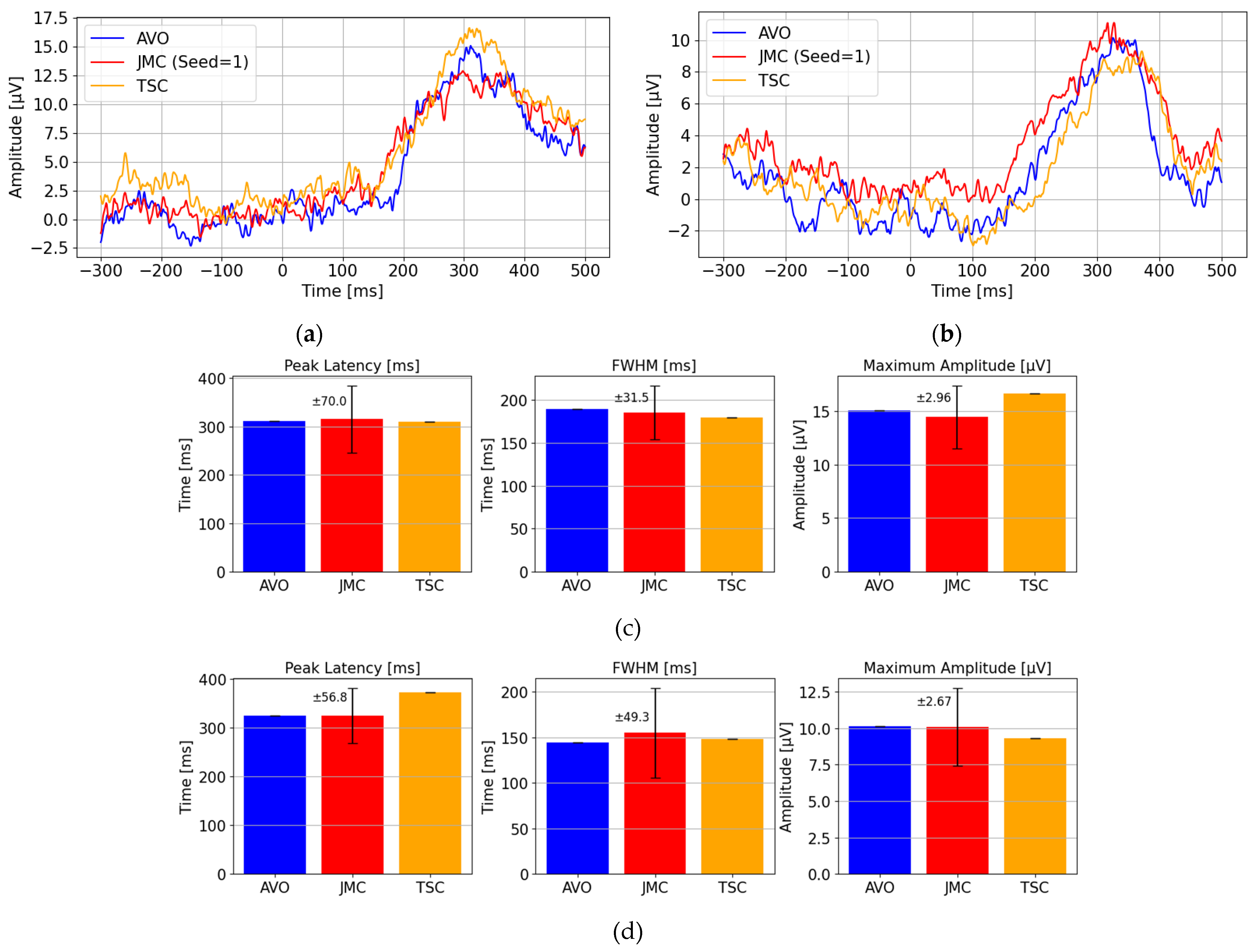

4.2. Experimental Results for Evaluation of Waveform Characteristics

- The peak latency (the time of maximum amplitude);

- The full width at half-maximum (FWHM) of the peak;

- The maximum amplitude.
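The three waveform characteristics above can be computed from an averaged, baseline-corrected ERP. The sketch below is one way to do this, not the authors' analysis code. It rests on several assumptions:

- The P300 is a positive peak.
- The half-maximum level is taken relative to the 0 µV baseline.
- The 250–600 ms search window is a hypothetical choice.
- Crossing times between samples are found by linear interpolation.

```python
import numpy as np


def p300_metrics(times_ms, erp_uv, window_ms=(250.0, 600.0)):
    """Return (peak latency [ms], FWHM [ms], maximum amplitude [uV]).

    Assumes erp_uv is a baseline-corrected average with a positive peak, so the
    half-maximum level is peak/2 relative to 0 uV. window_ms is hypothetical.
    """
    t = np.asarray(times_ms, dtype=float)
    x = np.asarray(erp_uv, dtype=float)
    in_win = np.flatnonzero((t >= window_ms[0]) & (t <= window_ms[1]))
    if in_win.size == 0:
        raise ValueError("search window contains no samples")
    k = in_win[np.argmax(x[in_win])]   # index of the maximum amplitude
    peak_amp, peak_lat = x[k], t[k]
    if peak_amp <= 0:
        raise ValueError("no positive peak in the search window")
    half = peak_amp / 2.0

    # Walk left from the peak while the signal stays at or above half-maximum.
    i = k
    while i > 0 and x[i - 1] >= half:
        i -= 1
    if i == 0:
        left = t[0]  # never dropped below half-maximum: clamp to first sample
    else:  # interpolate between x[i-1] < half and x[i] >= half
        left = t[i - 1] + (half - x[i - 1]) * (t[i] - t[i - 1]) / (x[i] - x[i - 1])

    # Walk right from the peak in the same way.
    j = k
    while j < len(x) - 1 and x[j + 1] >= half:
        j += 1
    if j == len(x) - 1:
        right = t[-1]  # clamp to last sample
    else:  # interpolate between x[j] >= half and x[j+1] < half
        right = t[j] + (x[j] - half) * (t[j + 1] - t[j]) / (x[j] - x[j + 1])

    return peak_lat, right - left, peak_amp


# Hypothetical check: a Gaussian-shaped peak at 400 ms (sigma 50 ms, 10 uV),
# sampled at 1 kHz. Its analytic FWHM is 2*sqrt(2*ln 2)*50, about 117.7 ms.
t = np.arange(-200.0, 1000.0, 1.0)
erp = 10.0 * np.exp(-((t - 400.0) ** 2) / (2 * 50.0 ** 2))
lat, fwhm, amp = p300_metrics(t, erp)
```

Under this definition, latency jitter across trials (as modeled by JMC) would be expected to lower the averaged peak amplitude and widen the FWHM, while leaving the latency roughly centered.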

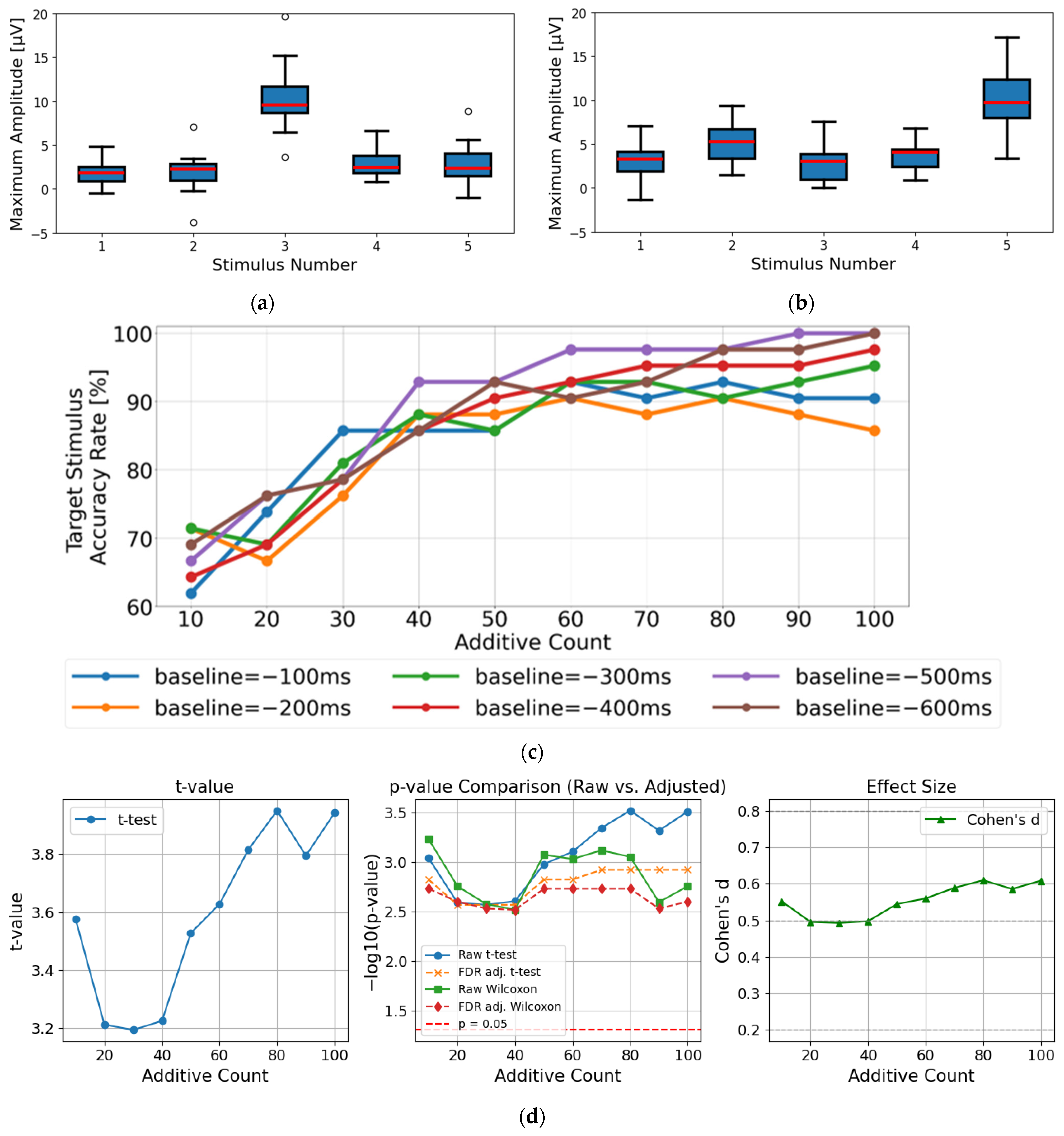

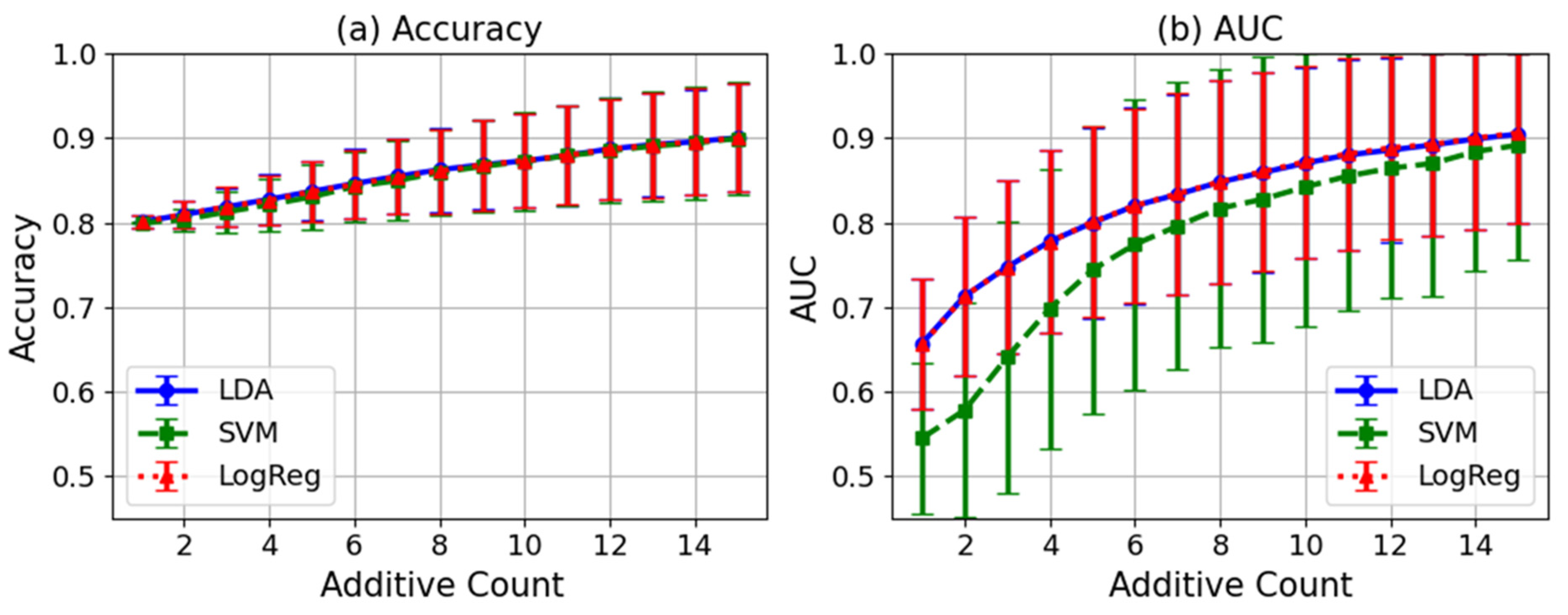

5. Experiment 3: Evaluation of P300 Detection Using Wireless Visual Stimulus Presentation Device in 21 Participants

5.1. Experimental Setup for Evaluation of P300 Detection

5.2. Experimental Results for Evaluation of P300 Detection

6. Discussion

6.1. Validity of Baseline Correction Timing at −500 ms/−600 ms

6.2. Effects of Ambient Lighting and Visual Perception

6.3. Influence of Wireless Transmission Delay on Classification Performance and Real-Time Applicability

6.4. Limitations and Future Challenges

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sasatake, Y.; Matsushita, K. P300 ERP System Utilizing Wireless Visual Stimulus Presentation Devices. Sensors 2025, 25, 3592. https://doi.org/10.3390/s25123592

Chicago/Turabian Style: Sasatake, Yuta, and Kojiro Matsushita. 2025. "P300 ERP System Utilizing Wireless Visual Stimulus Presentation Devices" Sensors 25, no. 12: 3592. https://doi.org/10.3390/s25123592
