The Design and Implementation of a Dynamic Measurement System for a Large Gear Rotation Angle Based on an Extended Visual Field

Abstract

1. Introduction

2. Basic Theory

3. System Solution Design

3.1. General System Design

3.2. Measurement Reference Plate Design

3.3. Measurement Reference Plate Calibration Method

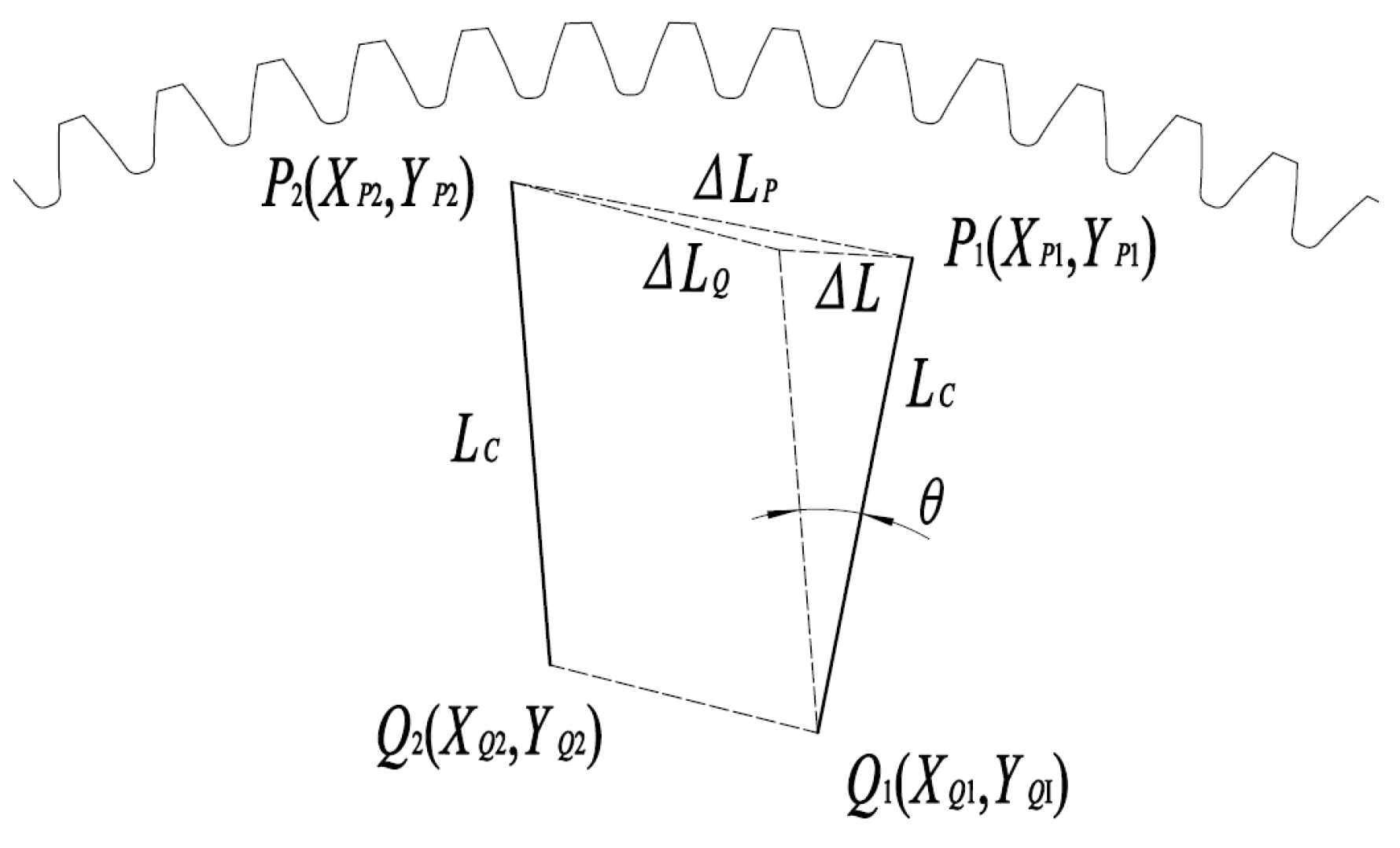

3.3.1. Coordinate System Establishment

3.3.2. Calibration Method for Reference Plate

4. Experiment and Verification

4.1. Experimental Setup

4.2. Experimental Environment Control

4.3. Pixel Equivalent Calibration Experiment

4.4. Calibration Experiment of Measurement Reference Plate

4.4.1. Transformation from Image Pixel Coordinates to Image Physical Coordinates

4.4.2. Transformation from Image Physical Coordinates to 2D Dot Array Calibration Plate Coordinates

4.4.3. Transformation from 2D Dot Array Calibration Plate Coordinates to Measurement Reference Plate Coordinates

4.5. Angle Rotation Measurement Experiment

4.5.1. Angle Rotation Measurement Procedure

- (1) The measurement reference plate was secured to the large gear with three magnetic mounts and leveled with a three-point leveling device.

- (2) Before the measurement program was started, the initial coordinates of the calibration circle center closest to the field-of-view center and of its right adjacent circle center were recorded in the 2D dot array calibration plate coordinate system.

- (3) The measurement program started the rotary table rotating at a constant angular velocity and simultaneously triggered the binocular cameras for continuous image acquisition.

- (4) The two cameras of the binocular system synchronously captured sequential images of the calibration target at fixed time intervals, producing one image sequence per camera.

4.5.2. Image Processing

- (1) From each image acquired by Camera 1, extract the image pixel coordinates of the calibration circle center closest to the field-of-view center and of its right adjacent circle center, and determine the pixel coordinates of the camera center in the same coordinate system. The circle center closest to the field-of-view center is chosen as the reference point because the region near the center of the field of view is least affected by lens distortion; its right neighbor is chosen mainly for programming convenience and ease of identification. The positional relationship of these three points is illustrated in Figure 7.

- (2) Using the pixel equivalent of Camera 1, convert the coordinates of these three points to the image physical coordinate system.

- (3) Starting from the initial coordinates of the two circle centers recorded in the 2D dot array calibration plate coordinate system (Section 4.5.1, step (2)), iteratively compute their calibration plate coordinates in every image frame using an 8-neighborhood coordinate extraction algorithm.

- (4) Compute the four transformation parameters from the coordinates of the two circle centers in both the image physical coordinate system (step (2)) and the 2D dot array calibration plate coordinate system (step (3)). Because the large gear rotates during the measurement, the image physical coordinate system rotates relative to the 2D dot array calibration plate coordinate system, so the four parameters change with the rotation angle and must be recomputed for every image.

- (5) Apply these four parameters to the image physical coordinates of the camera center to obtain the camera center coordinates in the 2D dot array calibration plate coordinate system.

- (6) Transform this point into the measurement reference plate coordinate system with the pre-calibrated four-parameter set. The resulting distribution of Camera 1 center coordinates in this system is shown in Figure 8a.
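Steps (4) to (6) rest on a four-parameter planar coordinate transformation. As a sketch, assuming it is the usual 2D similarity (Helmert) model with translations $\Delta x$, $\Delta y$, scale factor $k$ and rotation angle $\theta$ (these symbols and the sign convention are assumptions, since the original notation is not preserved here), with $(x, y)$ the image physical coordinates and $(X, Y)$ the 2D dot array calibration plate coordinates:

$$
\begin{aligned}
X &= \Delta x + k\,(x\cos\theta + y\sin\theta),\\
Y &= \Delta y + k\,(-x\sin\theta + y\cos\theta).
\end{aligned}
$$

Writing $z = x + iy$, $W = X + iY$ and $b = \Delta x + i\,\Delta y$, the model becomes $W = b + a\,z$ with $a = k\,e^{-i\theta}$. The two tracked circle centers $z_1 \mapsto W_1$ and $z_2 \mapsto W_2$ supply four real equations for the four unknowns, which gives the exact solution

$$
a = \frac{W_2 - W_1}{z_2 - z_1}, \qquad b = W_1 - a\,z_1, \qquad |k| = |a| .
$$

Taking $k > 0$ gives $\theta = -\arg a$. Because $(k, \theta)$ and $(-k, \theta + \pi)$ describe the same transformation, a scale factor near $-1$ paired with an angle near $\pi$ is equivalent to a scale near $+1$ with an angle near $0$. Steps (5) and (6) then chain two such maps for the camera center $z_c$, with $(a', b')$ the pre-calibrated parameters of the measurement reference plate:

$$
W_c = b + a\,z_c, \qquad P_c = b' + a'\,W_c = (b' + a'\,b) + a'a\,z_c .
$$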

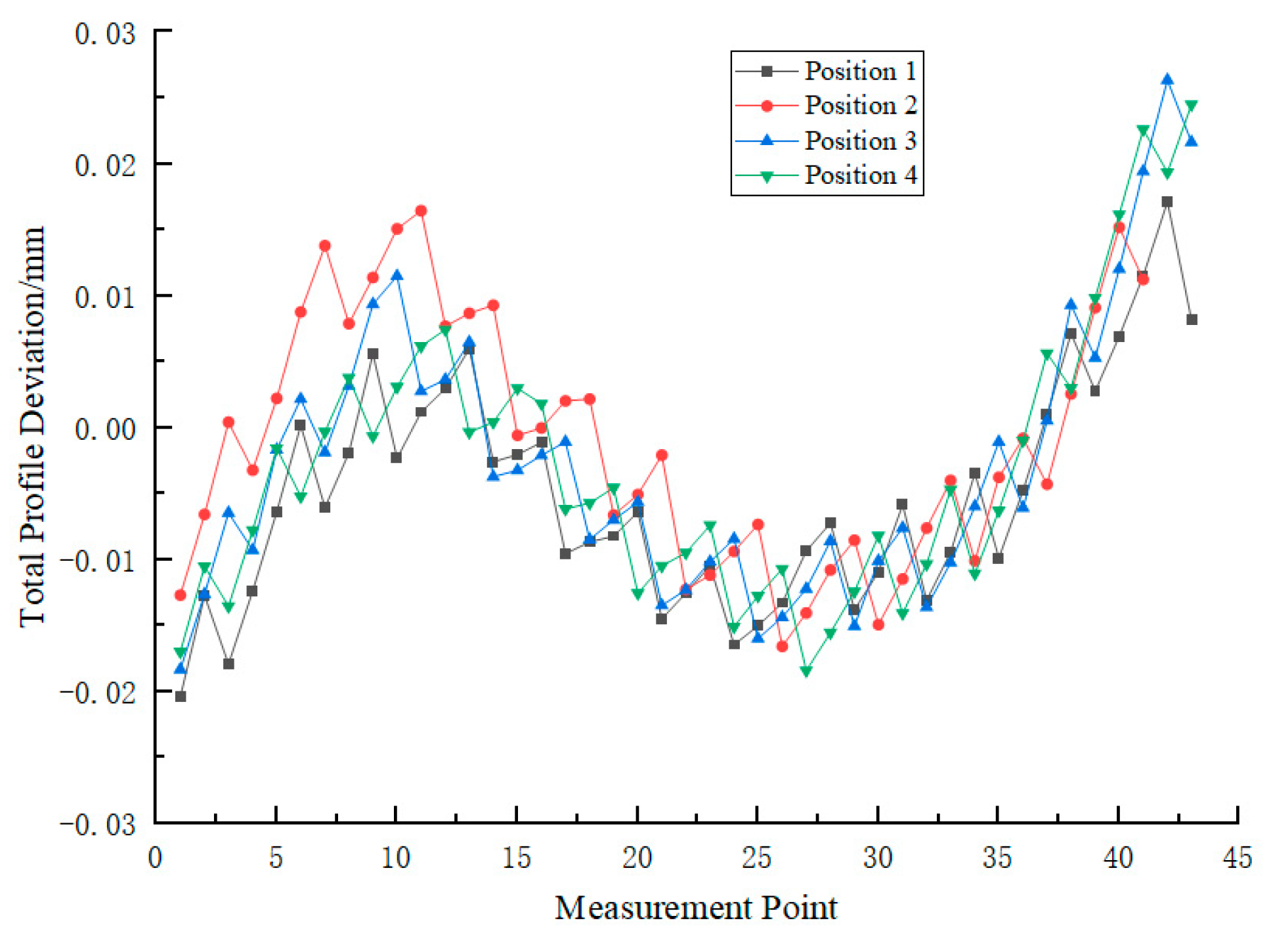

4.5.3. Analysis of Rotation Angle Measurement Results

4.5.4. Angle Measurement Application

4.5.5. System Optimization Prospects

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Camera | Physical Center Distance/mm | Pixel Center Distance/pixel | Pixel Equivalent/(μm/pixel) |
|---|---|---|---|
| 1 | 5 | 266.0175 | 18.7958 |
| 2 | 5 | 266.4734 | 18.7636 |
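The table is consistent with the pixel equivalent being the known physical spacing of two calibration circle centers divided by their measured spacing in pixels (5 mm / 266.0175 pixel ≈ 18.7958 μm/pixel for Camera 1). A minimal sketch of that calculation, together with the pixel-to-physical conversion used in step (2) of the image processing; the function names are illustrative, and the physical origin `(u0, v0)` and axis directions are assumptions, because this section does not state the paper's convention:

```python
def pixel_equivalent_um(center_distance_mm: float, center_distance_px: float) -> float:
    """Pixel equivalent in micrometres per pixel: physical spacing / pixel spacing."""
    return center_distance_mm * 1000.0 / center_distance_px


def pixel_to_physical_mm(u: float, v: float, u0: float, v0: float,
                         s_um: float) -> tuple[float, float]:
    """Convert image pixel coordinates (u, v) to image physical coordinates in mm.

    ASSUMPTION (hypothetical convention): the physical origin sits at pixel
    (u0, v0) and the physical axes are parallel to the pixel axes.
    """
    s_mm = s_um / 1000.0  # micrometres per pixel -> millimetres per pixel
    return (u - u0) * s_mm, (v - v0) * s_mm


if __name__ == "__main__":
    # Center distances taken from the pixel equivalent table
    for camera, d_mm, d_px in [(1, 5.0, 266.0175), (2, 5.0, 266.4734)]:
        s = pixel_equivalent_um(d_mm, d_px)
        print(f"Camera {camera}: {s:.4f} um/pixel")
```

Running it prints 18.7958 and 18.7636 μm/pixel, matching the tabulated values to the four decimals shown.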

| Points | Image Physical Coordinates | Points | Image Physical Coordinates |
|---|---|---|---|
| | (−7.7499, 4.5968) | | (−7.7462, 3.5232) |
| | (−7.7585, −5.3396) | | (−7.7394, −6.4343) |
| | (2.2464, −5.3572) | | (2.1792, −6.4728) |
| | (2.255, 4.6411) | | (2.2253, 3.4502) |
| | (−2.7518, −0.3647) | | (−2.7703, −1.4834) |
| | (−6.8633, 4.1014) | | (−6.9554, 4.0799) |
| | (−6.9426, −5.8532) | | (−6.9522, −5.9734) |
| | (3.1689, −5.8435) | | (3.1409, −5.8716) |
| | (3.0945, 4.1221) | | (3.0282, 4.0708) |
| | (−1.8856, −0.8683) | | (−1.9346, −0.9236) |

| Points | Image Physical Coordinates | Calibration Board Coordinates |
|---|---|---|
| | (−7.8233, 4.6096) | (−5, 170) |
| | (2.1557, −5.4125) | (−15, 160) |
| | (−7.7393, 3.6197) | (−360, 170) |
| | (2.237, −6.4059) | (−370, 160) |
| | (−6.9289, 4.1157) | (5, 15) |
| | (3.0512, −5.9063) | (15, 5) |
| | (−6.9941, 4.0914) | (360, 15) |
| | (3.0367, −5.8802) | (370, 5) |

| Points | Translation X | Translation Y | Scale Factor | Rotation Angle/rad |
|---|---|---|---|---|
| | 12.8328 | 165.4075 | −0.9999 | 3.1394 |
| | 367.7474 | 166.3997 | −0.9999 | 3.1391 |
| | 11.9367 | 10.8993 | −0.9999 | 3.1395 |
| | 366.9811 | 10.8884 | −0.9999 | 3.1386 |
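Two point correspondences determine the four parameters exactly. A minimal sketch, assuming the planar similarity model X = Δx + k(x cos θ + y sin θ), Y = Δy + k(−x sin θ + y cos θ) (the symbols, the sign convention and the function names are assumptions, not taken from the original): it solves the model in complex form from the pairs (−6.9289, 4.1157) → (5, 15) and (3.0512, −5.9063) → (15, 5) of the correspondence table and compares the result with the third row of the parameter table. Because a negative scale with θ + π is the same transformation, the sketch also converts the fit to that form, which is how the table reports it.

```python
import cmath
import math

Params = tuple[float, float, float, float]  # (dx, dy, k, theta), assumed model


def fit_four_param(src, dst) -> Params:
    """Solve dx, dy, k, theta exactly from two pairs src[i] -> dst[i].

    Assumed model in complex form: W = b + a*z, with b = dx + i*dy and
    a = k*exp(-i*theta), i.e. X = dx + k(x cos t + y sin t),
    Y = dy + k(-x sin t + y cos t). Returns the k > 0 form.
    """
    z1, z2 = complex(*src[0]), complex(*src[1])
    w1, w2 = complex(*dst[0]), complex(*dst[1])
    a = (w2 - w1) / (z2 - z1)  # scale and rotation in one complex number
    b = w1 - a * z1            # translation
    return b.real, b.imag, abs(a), -cmath.phase(a)


def negative_scale_form(p: Params) -> Params:
    """Same transform written with k < 0 and theta shifted by pi, in [0, 2*pi)."""
    dx, dy, k, theta = p
    return dx, dy, -k, (theta + math.pi) % (2.0 * math.pi)


def apply_four_param(p: Params, point) -> tuple[float, float]:
    """Map (x, y) with the assumed four-parameter model."""
    dx, dy, k, t = p
    x, y = point
    return (dx + k * (x * math.cos(t) + y * math.sin(t)),
            dy + k * (-x * math.sin(t) + y * math.cos(t)))


if __name__ == "__main__":
    src = [(-6.9289, 4.1157), (3.0512, -5.9063)]  # image physical coordinates
    dst = [(5.0, 15.0), (15.0, 5.0)]              # calibration board coordinates
    fitted = negative_scale_form(fit_four_param(src, dst))
    print("fitted:", [round(v, 4) for v in fitted])
    table_row = (11.9367, 10.8993, -0.9999, 3.1395)  # third row of the table
    for p in src:
        print(p, "->", [round(c, 4) for c in apply_four_param(table_row, p)])
```

Under this assumed convention the fitted values agree with the third parameter row to within about 10⁻⁴, and applying that row maps both image points back onto (5, 15) and (15, 5) to a similar tolerance.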

| Points | Calibration Board Coordinates |
|---|---|
| | (10.08196, 165.0369) |
| | (364.9811, 164.9096) |
| | (10.05311, 10.02708) |
| | (365.044, 9.970665) |

| Points | Reference Plate Coordinates | Points | Reference Plate Coordinates |
|---|---|---|---|
| | (−5.4162, 4.791) | | (349.5118, 5.0201) |
| | (−5.3551, −5.18) | | (349.5381, −4.9659) |
| | (4.5691, −5.1535) | | (359.5017, −5.0561) |
| | (4.5462, 4.7649) | | (359.5675, 5.0564) |
| | (−0.414, −0.1944) | | (354.5298, 0.0136) |
| | (−5.2281, 249.7988) | | (349.7845, 249.9674) |
| | (−5.2981, 239.7837) | | (349.7289, 240.0091) |
| | (4.6984, 239.7735) | | (359.7011, 239.9157) |
| | (4.7079, 249.7902) | | (359.7338, 250.0244) |
| | (−0.28, 244.7865) | | (354.7371, 244.9792) |

| Calibration Board | Translation X | Translation Y | Scale Factor | Rotation Angle/rad |
|---|---|---|---|---|
| 1# | −10.3268 | −165.2615 | −1.0003 | 3.1406 |
| 2# | −10.3247 | 234.7516 | −1.0001 | 3.1409 |
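Steps (5) and (6) of the image processing apply two four-parameter transformations in sequence (image physical coordinates to 2D dot array calibration plate coordinates, then to measurement reference plate coordinates). Under an assumed planar similarity model X = Δx + k(x cos θ + y sin θ), Y = Δy + k(−x sin θ + y cos θ) (symbols and sign convention are assumptions), two such transformations compose into a single one: the scales multiply, the angles add, and the combined translation is the outer transformation applied to the inner translation. The sketch below checks this numerically; the per-frame parameters borrow the first row of the four-parameter table purely as sample numbers, the outer parameters are the 1# row above, and the camera-center point is hypothetical.

```python
import math

Params = tuple[float, float, float, float]  # (dx, dy, k, theta), assumed model


def apply_four_param(p: Params, point: tuple[float, float]) -> tuple[float, float]:
    """X = dx + k(x cos t + y sin t), Y = dy + k(-x sin t + y cos t)."""
    dx, dy, k, t = p
    x, y = point
    return (dx + k * (x * math.cos(t) + y * math.sin(t)),
            dy + k * (-x * math.sin(t) + y * math.cos(t)))


def compose(outer: Params, inner: Params) -> Params:
    """Single parameter set equivalent to applying `inner` first, then `outer`."""
    # Combined translation = outer map applied to the inner translation vector
    dx, dy = apply_four_param(outer, (inner[0], inner[1]))
    return dx, dy, outer[2] * inner[2], outer[3] + inner[3]


if __name__ == "__main__":
    frame = (12.8328, 165.4075, -0.9999, 3.1394)    # sample per-frame parameters
    plate = (-10.3268, -165.2615, -1.0003, 3.1406)  # 1# calibration board row
    camera_center = (0.5, -0.25)                     # hypothetical, mm
    two_step = apply_four_param(plate, apply_four_param(frame, camera_center))
    one_step = apply_four_param(compose(plate, frame), camera_center)
    print(two_step, one_step)
```

Whether to compose once per frame or apply the two maps in turn is a matter of convenience; both give the same reference plate coordinates up to floating-point rounding.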

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Du, P.; Duan, Z.; Zhang, J.; Zhao, W.; Lai, E.; Jiang, G. The Design and Implementation of a Dynamic Measurement System for a Large Gear Rotation Angle Based on an Extended Visual Field. Sensors 2025, 25, 3576. https://doi.org/10.3390/s25123576