Automatic Number Plate Detection and Recognition System for Small-Sized Number Plates of Category L-Vehicles for Remote Emission Sensing Applications

Abstract

1. Introduction

- In this work, generic state-of-the-art license plate detection and recognition algorithms are tested with different types of Category L vehicles, and a performance comparison is presented.

- A cost-effective and energy-efficient Category L vehicle automatic number plate recognition (L-ANPR) system is developed to detect and recognize license plates on Category L vehicles.

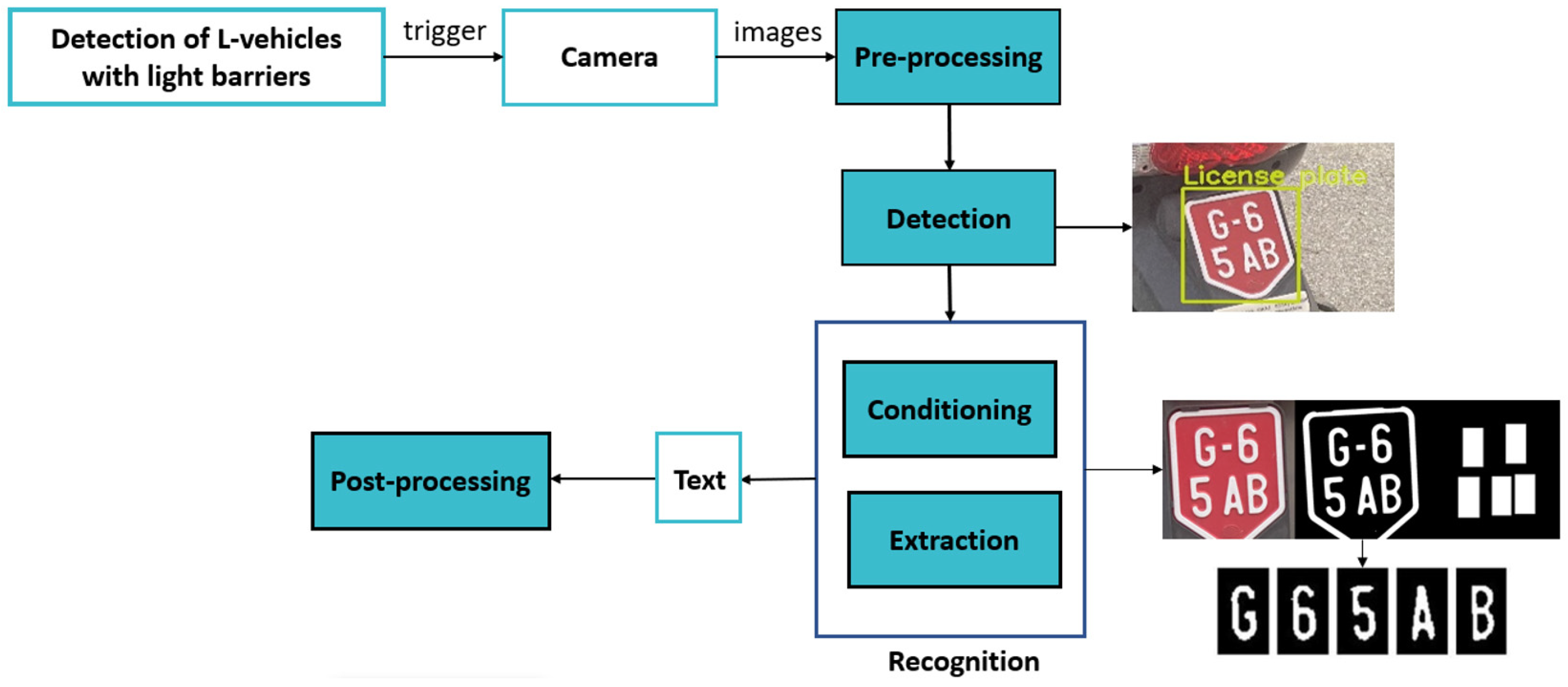

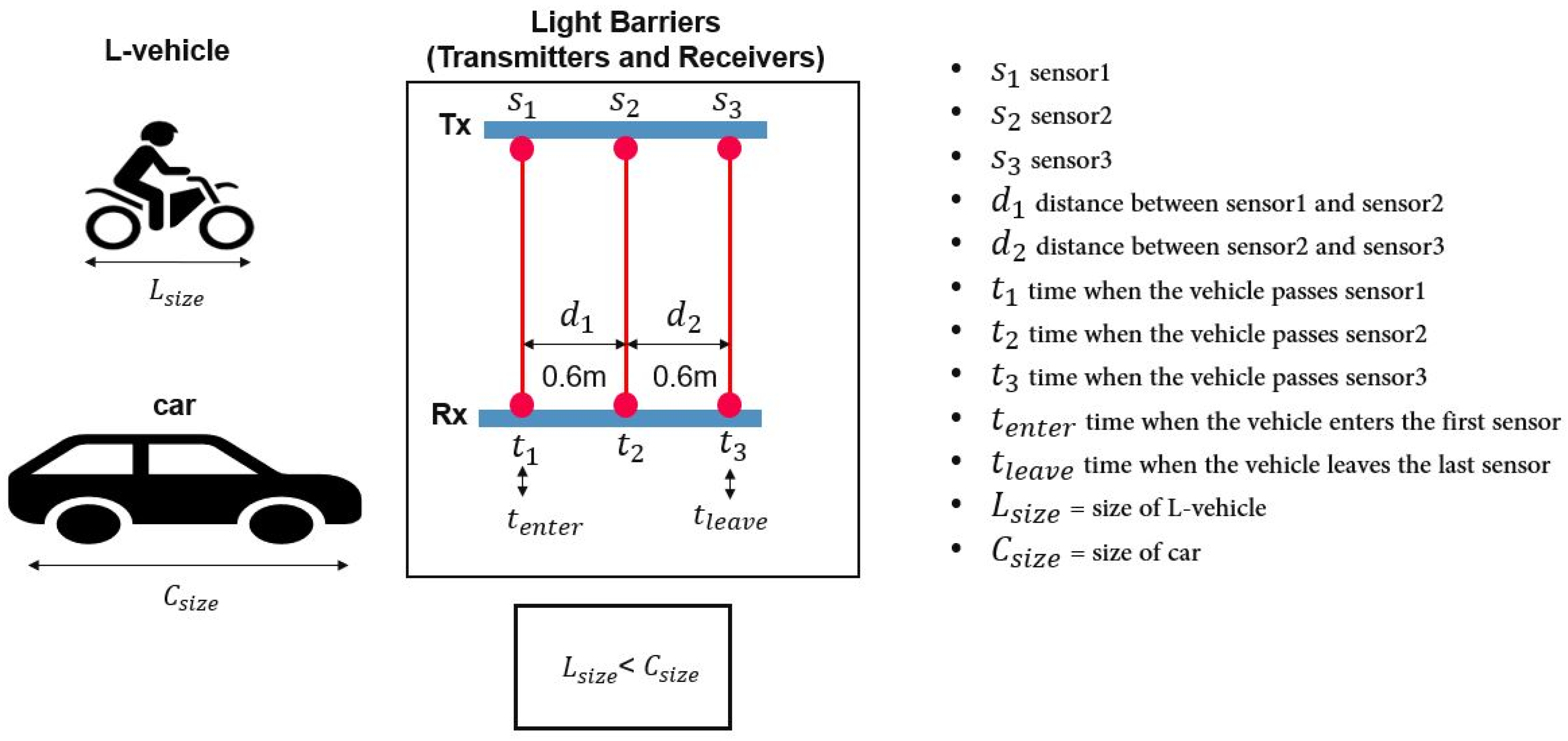

- The L-ANPR system identifies L-vehicles by calculating the size of passing vehicles with photoelectric sensors (light barriers), since L-vehicles are smaller than cars. Once an L-vehicle is identified, the light barriers trigger the L-ANPR detection system and the emission measurement devices to start monitoring. Because these systems run only when an L-vehicle passes, this technique significantly reduces energy consumption, data usage, data storage, and computational costs.
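
The size-based trigger can be illustrated with a minimal sketch. It assumes two light barriers mounted a known distance apart along the lane, each reporting when its beam is blocked and cleared. The speed then follows from the delay between the two barriers, and the length from how long the first beam stays blocked (assuming constant speed). The `BeamEvent` type, the 1.0 m spacing, and the 2.5 m length cut-off are hypothetical values chosen for illustration, not parameters from this work.

```python
from dataclasses import dataclass


@dataclass
class BeamEvent:
    """Times (s) at which one light barrier's beam was blocked and cleared."""
    t_blocked: float
    t_cleared: float


# Hypothetical cut-off for illustration; not a value from this work.
L_VEHICLE_MAX_LENGTH_M = 2.5


def speed_and_length(a: BeamEvent, b: BeamEvent, spacing_m: float) -> tuple[float, float]:
    """Estimate speed (m/s) and length (m); barrier A is passed before barrier B."""
    delay = b.t_blocked - a.t_blocked
    if delay <= 0:
        raise ValueError("barrier B must be blocked after barrier A")
    speed = spacing_m / delay
    # Barrier A stays blocked while the whole vehicle passes it (constant speed assumed).
    length = speed * (a.t_cleared - a.t_blocked)
    return speed, length


def is_l_vehicle(a: BeamEvent, b: BeamEvent, spacing_m: float = 1.0,
                 max_length_m: float = L_VEHICLE_MAX_LENGTH_M) -> bool:
    """Return True when the vehicle is short enough to be treated as an L-vehicle."""
    _, length = speed_and_length(a, b, spacing_m)
    return length < max_length_m


moped = (BeamEvent(0.00, 0.18), BeamEvent(0.10, 0.28))  # ~10 m/s, ~1.8 m long
car = (BeamEvent(0.00, 0.45), BeamEvent(0.10, 0.55))    # ~10 m/s, ~4.5 m long
print(is_l_vehicle(*moped), is_l_vehicle(*car))  # True False
```

Only vehicles classified as short would then start the plate detector and the emission measurement devices; in practice the cut-off would have to be tuned to the local fleet.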

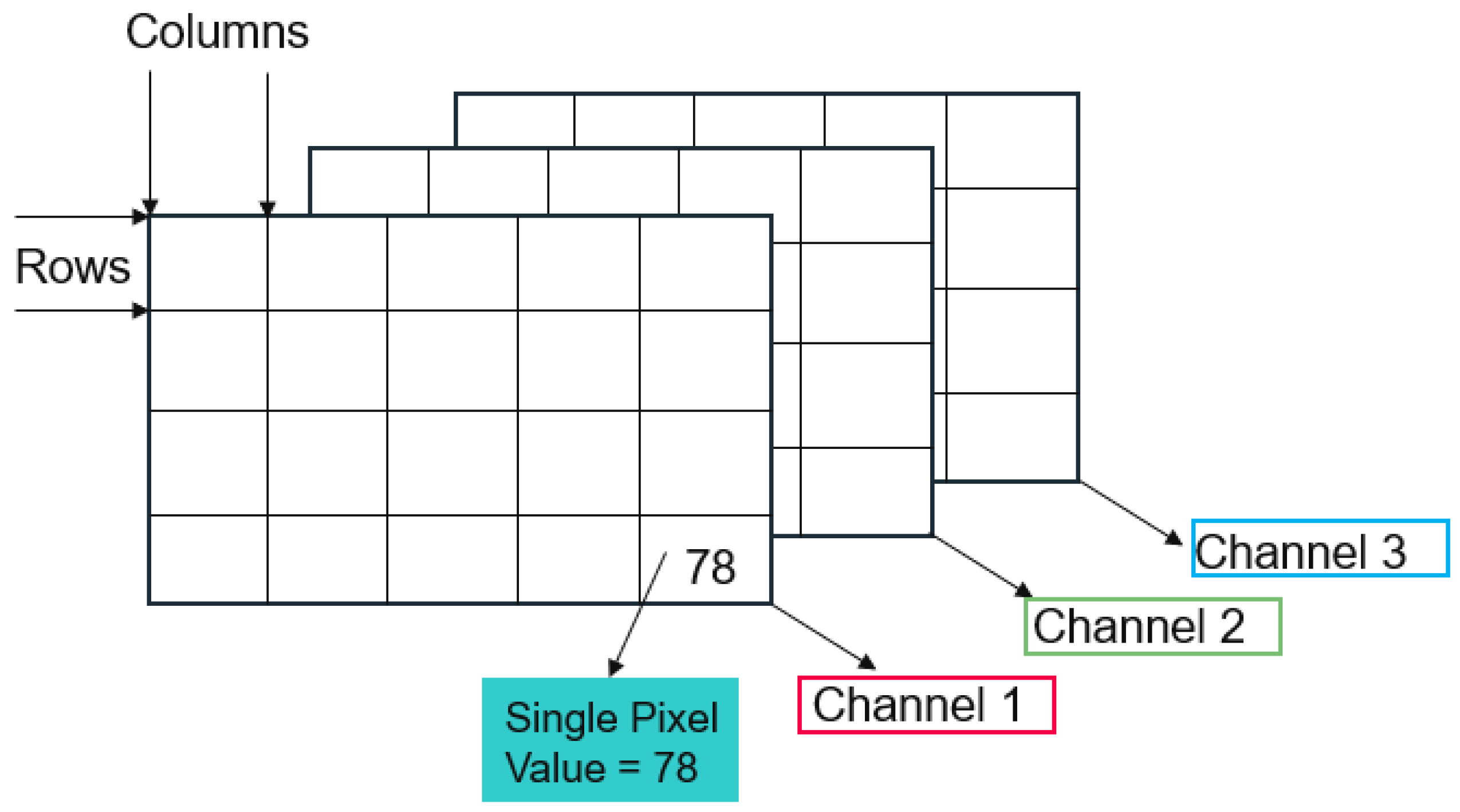

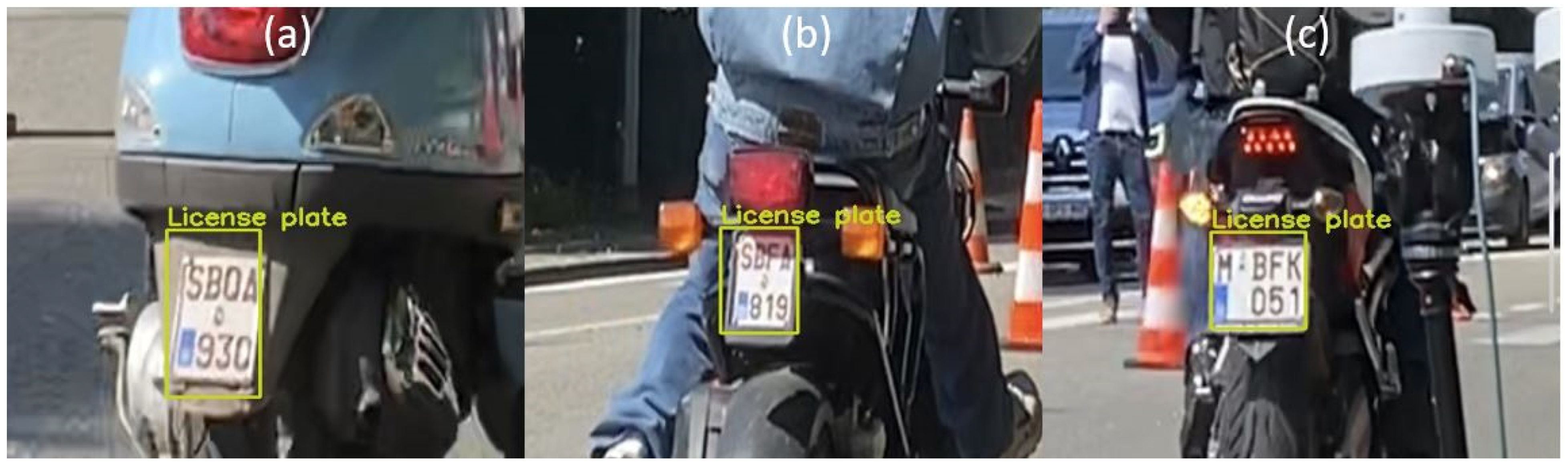

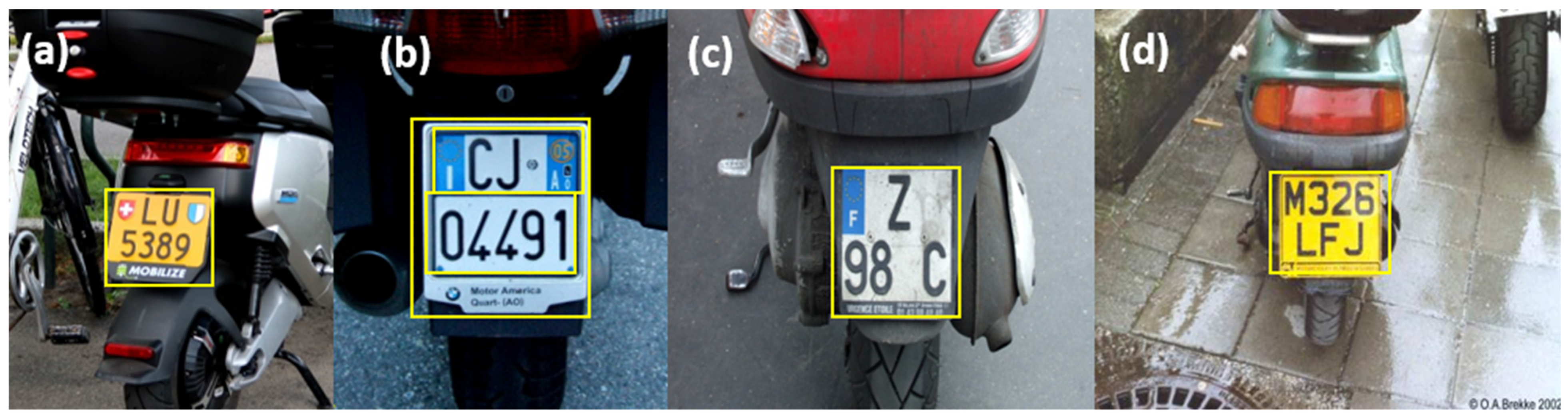

- The convolutional neural network (CNN)-based automatic detection model of the L-ANPR system is trained on thousands of license plate images of different types of Category L vehicles from different countries. The training data were collected from public online datasets, remote emission sensing device validation campaigns, and remote emission measurement campaigns performed in different European countries. The images were annotated with bounding boxes for training, and the trained weights were generated. The advantage of this automatic detection model is that a single model can detect tiny plates of different colors on mopeds as well as normal-sized car and bike plates.
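
As an illustration of the annotation step, the following sketch converts a pixel bounding box into one line of the Darknet/YOLO label format (class index, then box center, width, and height, all normalized to the image size), which is one of the formats LabelImg can export. It assumes a single license plate class with index 0; the function name and the example coordinates are hypothetical.

```python
def to_yolo_line(box: tuple[float, float, float, float], img_w: int, img_h: int,
                 class_id: int = 0) -> str:
    """Convert a pixel box (xmin, ymin, xmax, ymax) to a YOLO label line."""
    xmin, ymin, xmax, ymax = box
    if not (0 <= xmin < xmax <= img_w and 0 <= ymin < ymax <= img_h):
        raise ValueError("box must lie inside the image and have positive size")
    # YOLO labels store the box center and size as fractions of the image size.
    x_center = (xmin + xmax) / 2 / img_w
    y_center = (ymin + ymax) / 2 / img_h
    width = (xmax - xmin) / img_w
    height = (ymax - ymin) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


print(to_yolo_line((600, 400, 640, 430), 1280, 720))
# 0 0.484375 0.576389 0.031250 0.041667
```

A 40 × 30 pixel moped plate in a 1280 × 720 frame spans only about 3% of the image width, which shows why tiny plates are a demanding target for a detector trained alongside normal-sized plates.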

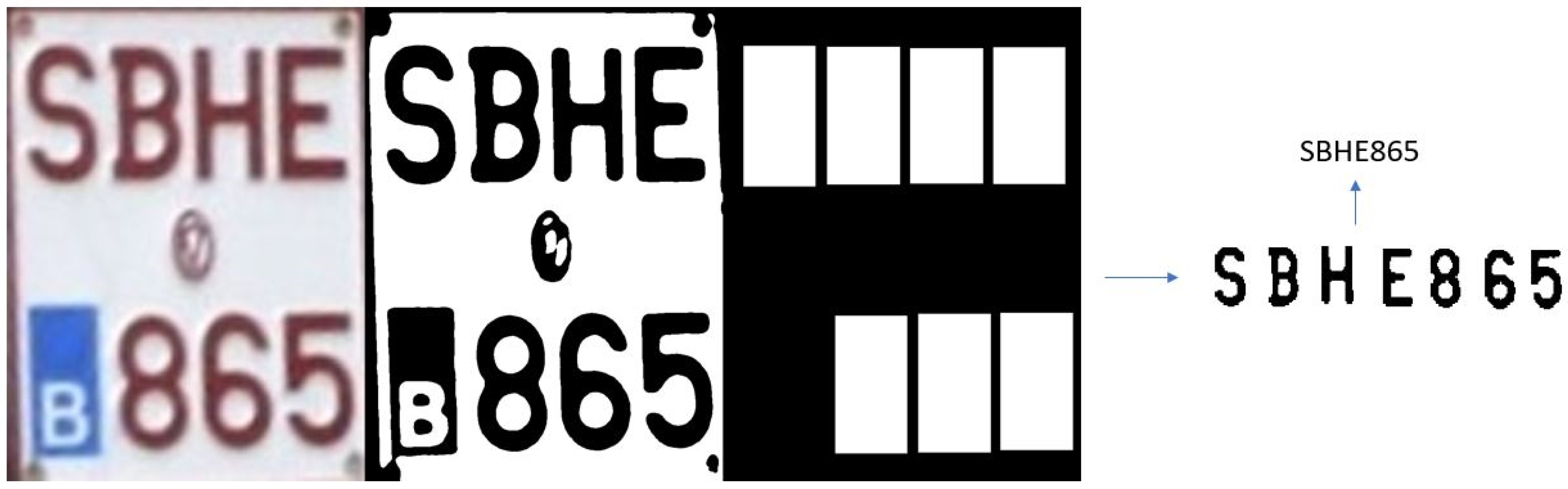

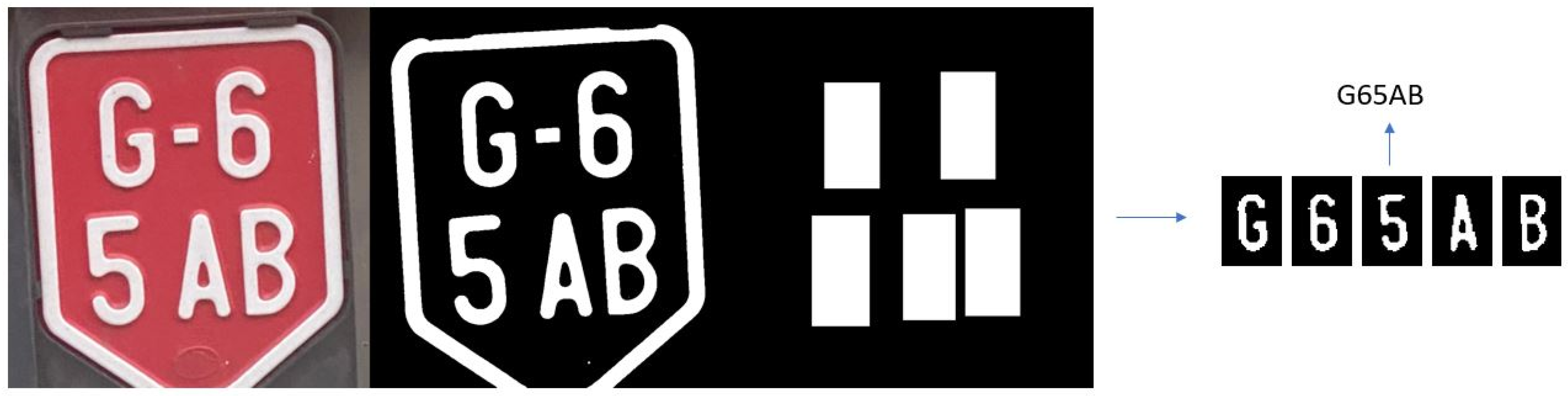

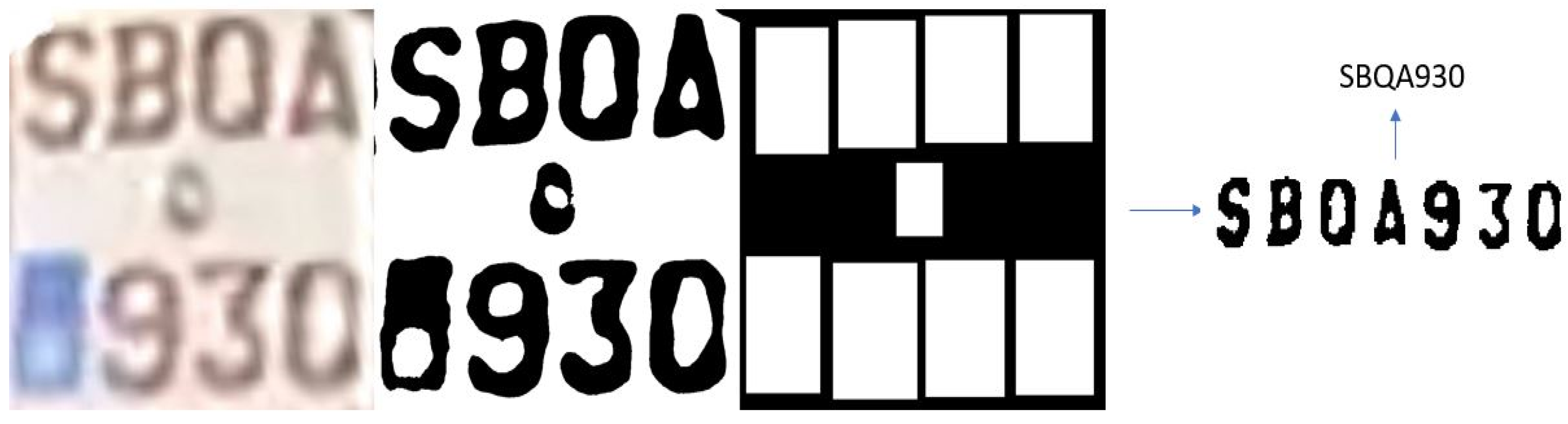

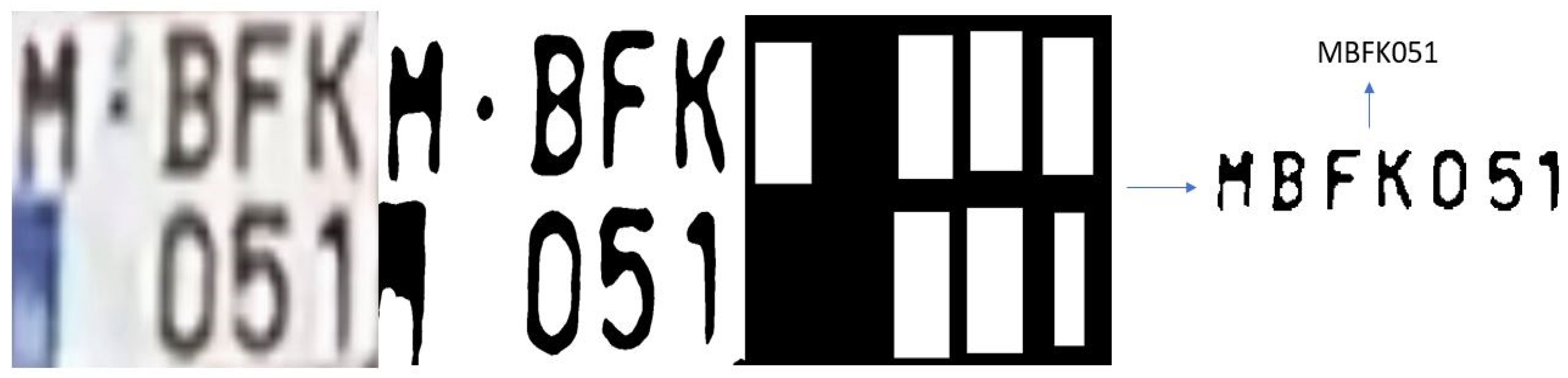

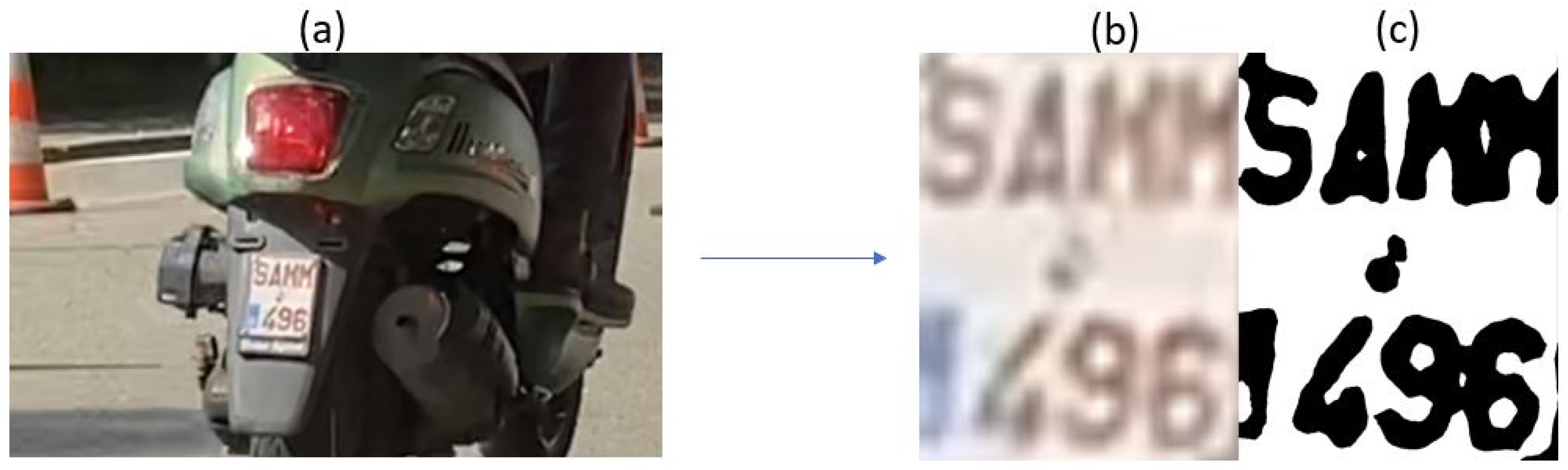

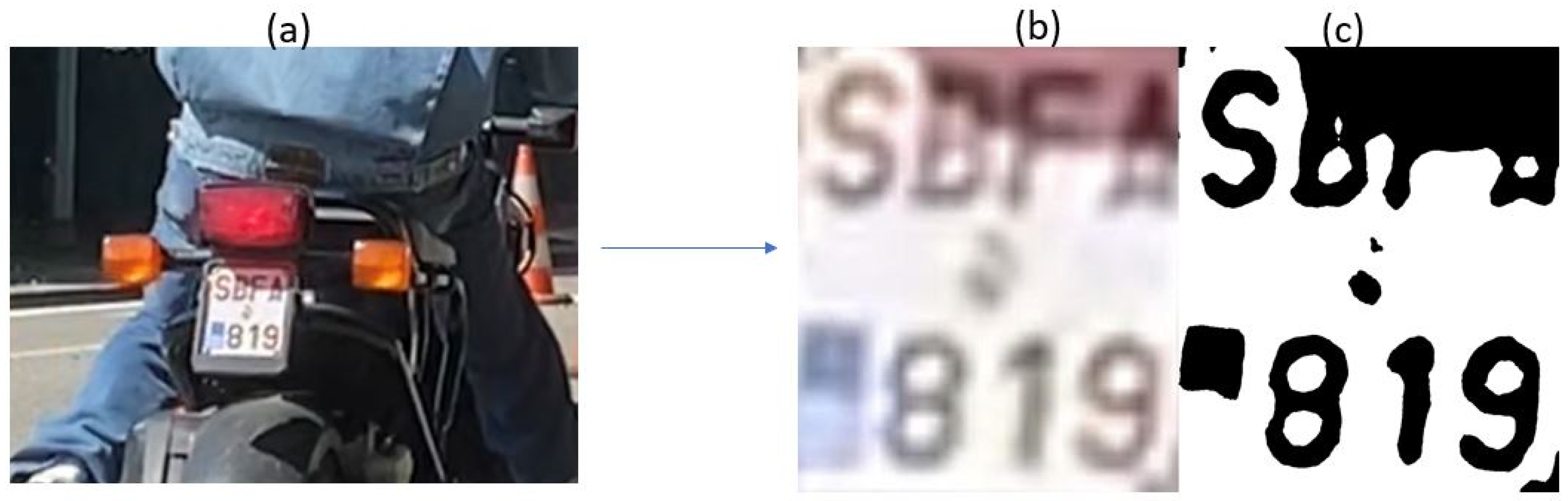

- The L-ANPR character recognition system is designed to recognize characters on the license plates of different types of Category L vehicles. Plate conditioning, background color-based thresholding, and dilation are applied to make small characters stand out from the background and to improve the performance of the optical character recognizer on small plates. The advantage of this character recognition algorithm is that it quickly recognizes both large characters on standard number plates and small characters of different colors on the tiny moped plates used in different countries. Additionally, because the L-ANPR system captures 90–120 frames per second, all frames can be reviewed during post-processing, so that as many characters as necessary can be recognized and any false recognitions made in real time can be corrected.

- Emission measurement campaigns have been conducted under the European Union-supported LENS project [24]. The L-ANPR system was deployed on roads together with remote emission sensing devices during validation campaigns in Austria and emission measurement campaigns in Belgium. It was tested on hundreds of Category L vehicles from different countries to evaluate its performance. A further advantage of deploying L-ANPR in the emission measurement campaigns is that a large amount of data on different types of L-vehicles was collected and then used to further train and improve the L-ANPR system.

- After evaluating the performance of the L-ANPR system, the reasons for false recognitions are highlighted, and solutions are proposed to improve the performance of ANPR systems in detecting the tiny plates of L-vehicles.
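
The plate-conditioning steps named above (enlarging the plate, thresholding according to the background color, and dilating the characters) can be sketched with NumPy alone. This is an illustrative stand-in, not the authors' implementation: it assumes a grayscale `uint8` plate crop, uses Otsu's method to choose the threshold, infers the background polarity from the plate border, applies a 3 × 3 dilation, and returns dark characters on a white background, the polarity the Tesseract documentation recommends for version 4 and later. The function names, the upscaling factor, and the number of dilation iterations are hypothetical.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Return the gray level t that maximizes Otsu's between-class variance."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / gray.size
    omega = np.cumsum(prob)                   # weight of the class <= t
    mu = np.cumsum(prob * np.arange(256))     # cumulative mean of the class <= t
    mu_total = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    sigma_b[~np.isfinite(sigma_b)] = 0.0      # empty classes contribute nothing
    return int(np.argmax(sigma_b))


def dilate(mask: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Binary dilation with a 3x3 square structuring element."""
    out = mask.astype(bool)
    h, w = out.shape
    for _ in range(iterations):
        padded = np.pad(out, 1, mode="constant", constant_values=False)
        grown = np.zeros_like(out)
        for dy in range(3):
            for dx in range(3):
                grown |= padded[dy:dy + h, dx:dx + w]
        out = grown
    return out


def condition_plate(gray: np.ndarray, scale: int = 3, iterations: int = 1) -> np.ndarray:
    """Upscale, binarize by background color, and thicken characters.

    Expects a uint8 grayscale plate crop; returns uint8 with black (0)
    characters on a white (255) background.
    """
    # Nearest-neighbor upscaling so small characters span more pixels.
    big = np.repeat(np.repeat(gray, scale, axis=0), scale, axis=1)
    bright = big > otsu_threshold(big)
    # The plate border is assumed to show mostly background.
    border = np.concatenate([bright[0, :], bright[-1, :], bright[:, 0], bright[:, -1]])
    light_background = border.mean() > 0.5
    # Characters are whichever class differs from the background.
    chars = ~bright if light_background else bright
    chars = dilate(chars, iterations)
    return np.where(chars, 0, 255).astype(np.uint8)
```

For a light plate with dark characters and for its inverted counterpart, `condition_plate` returns the same black-on-white image, which is the reason for choosing the polarity from the background instead of fixing it in advance.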
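
Because the L-ANPR system records many frames of each passing vehicle, per-frame OCR reads can be reconciled in post-processing. One simple approach is sketched below under the assumptions that all reads belong to the same plate and that spaces carry no meaning: keep the reads with the most common length and take a per-position majority vote. `vote_plate` and the example plate string are hypothetical and do not describe the review procedure used by the authors.

```python
from collections import Counter


def vote_plate(reads: list[str]) -> str:
    """Combine per-frame OCR reads of one plate by per-position majority vote."""
    cleaned = [r.replace(" ", "").upper() for r in reads if r and r.strip()]
    if not cleaned:
        return ""
    # Only reads of the most common length are aligned position by position.
    target_len = Counter(len(r) for r in cleaned).most_common(1)[0][0]
    aligned = [r for r in cleaned if len(r) == target_len]
    # zip(*aligned) yields the characters seen at each position across frames.
    return "".join(Counter(column).most_common(1)[0][0] for column in zip(*aligned))


frames = ["W12345A", "W1Z345A", "W12345A", "W12B45A", "W1234"]
print(vote_plate(frames))  # W12345A
```

Single-frame errors such as `Z` for `2` or `B` for `3` are outvoted, and truncated reads are discarded rather than misaligned.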

2. Materials and Methods

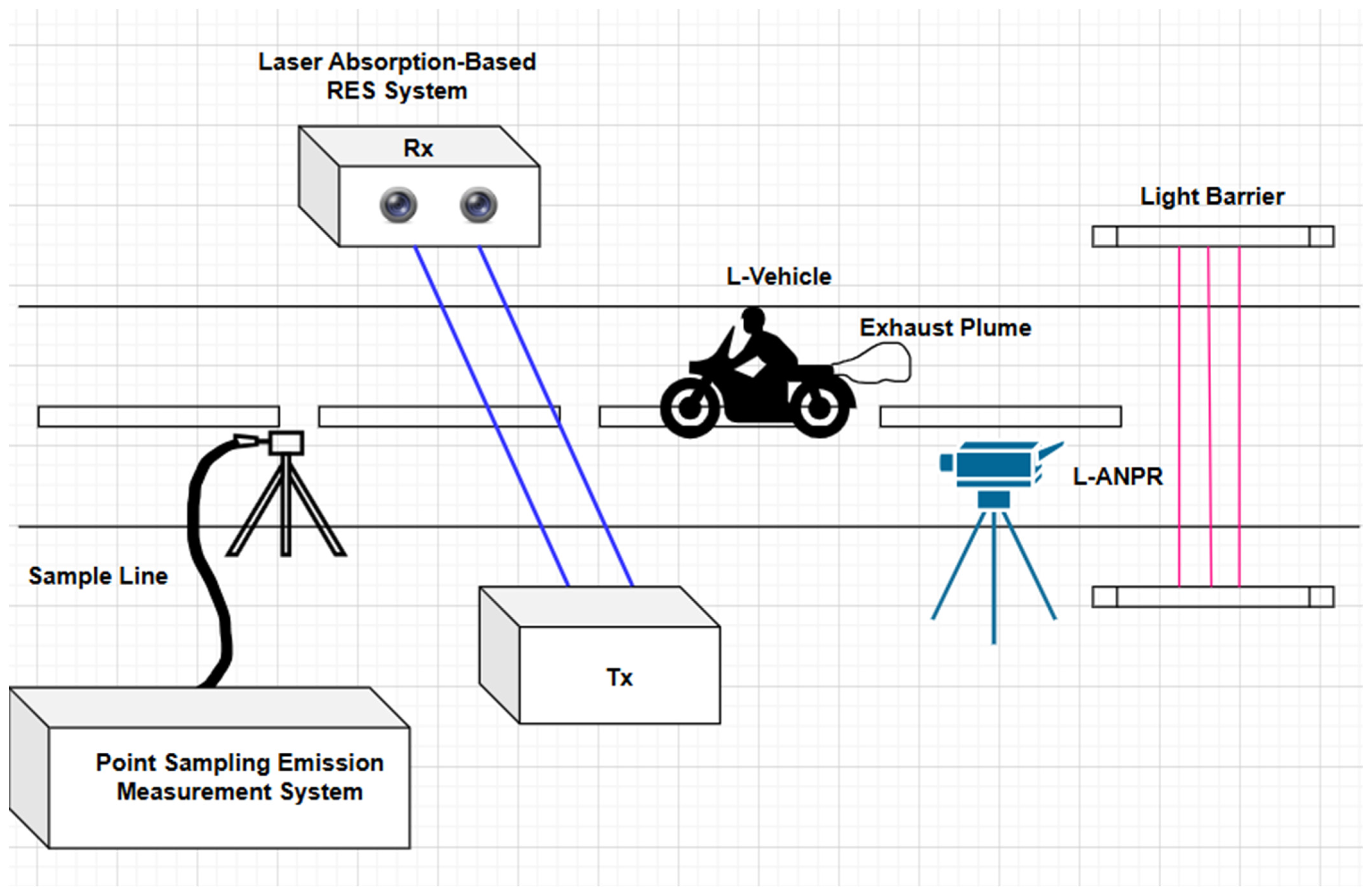

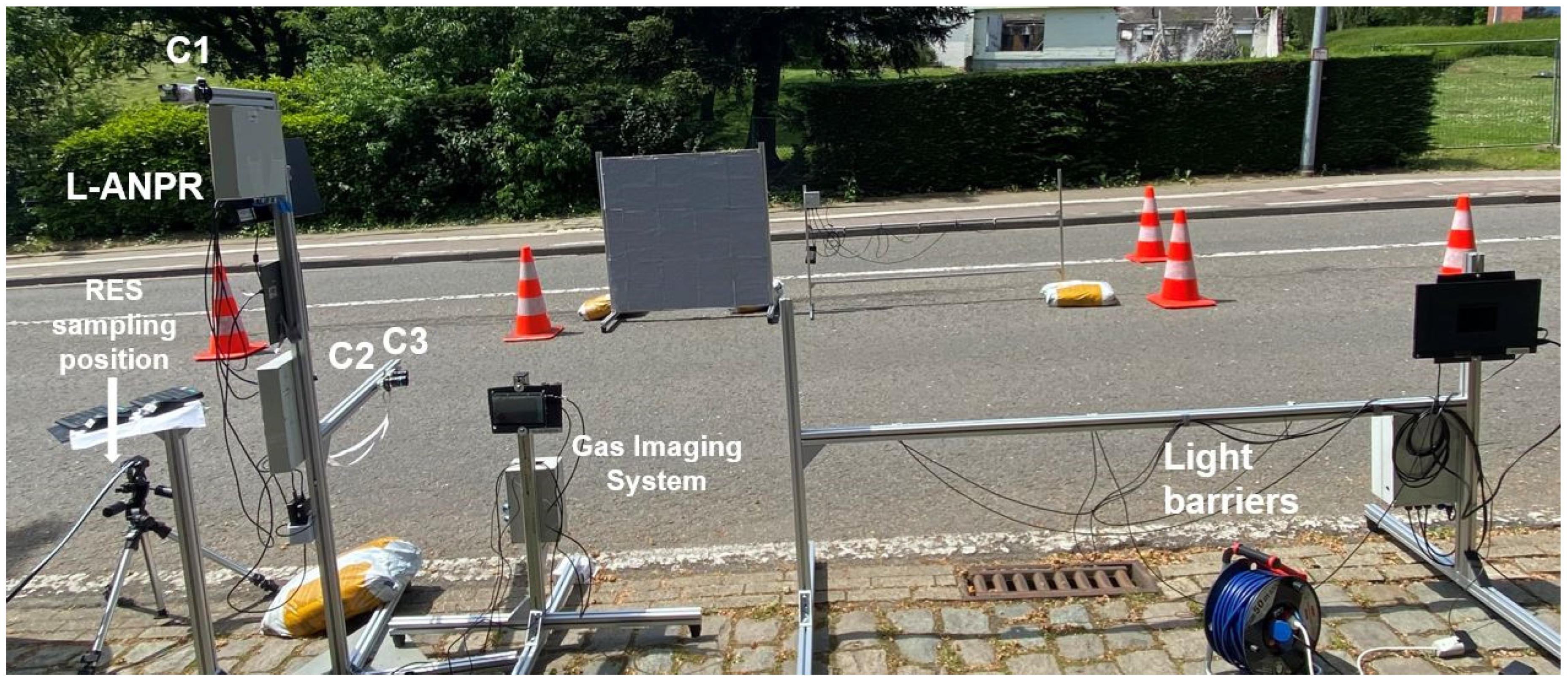

2.1. Roadside Emission Measurement Setup

2.2. L-Vehicle Automatic Number Plate Detection and Recognition (L-ANPR) System

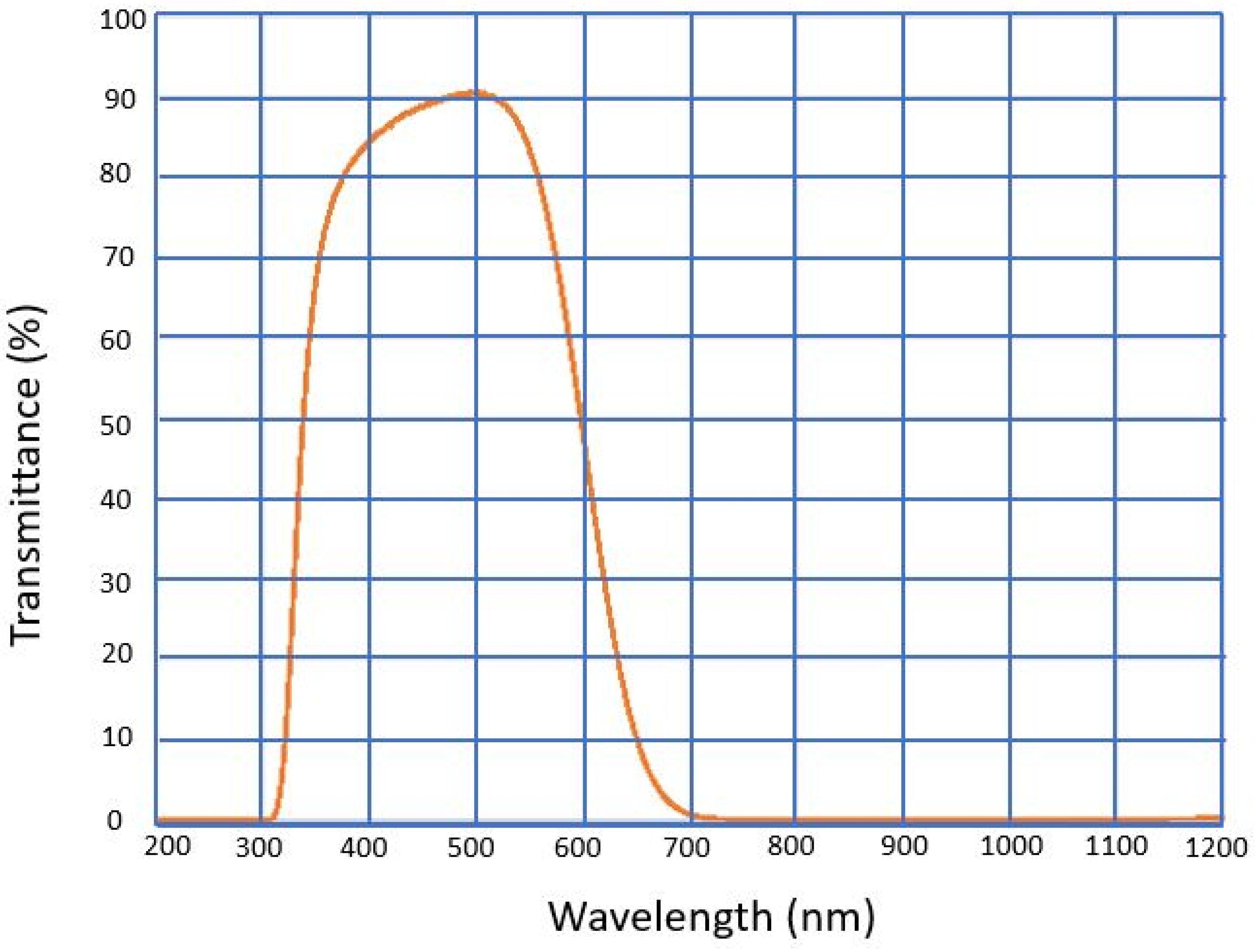

2.3. Hardware for L-ANPR

2.4. Detection of L-Vehicles with Light Barriers of L-ANPR System

Calculation of Size of Passing Vehicles

2.5. L-ANPR License Plate Detection

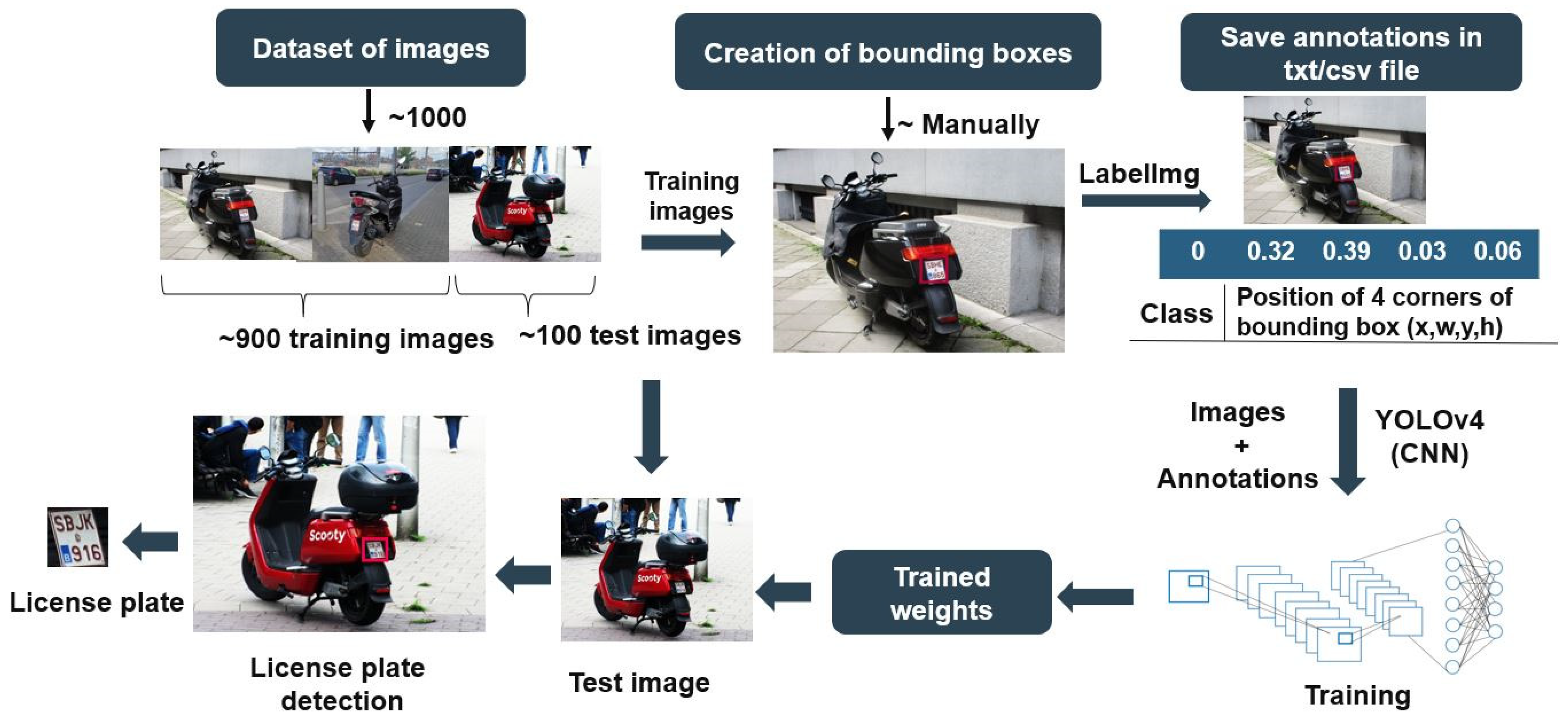

2.5.1. Dataset of License Plate Images

2.5.2. Creation of Bounding Boxes

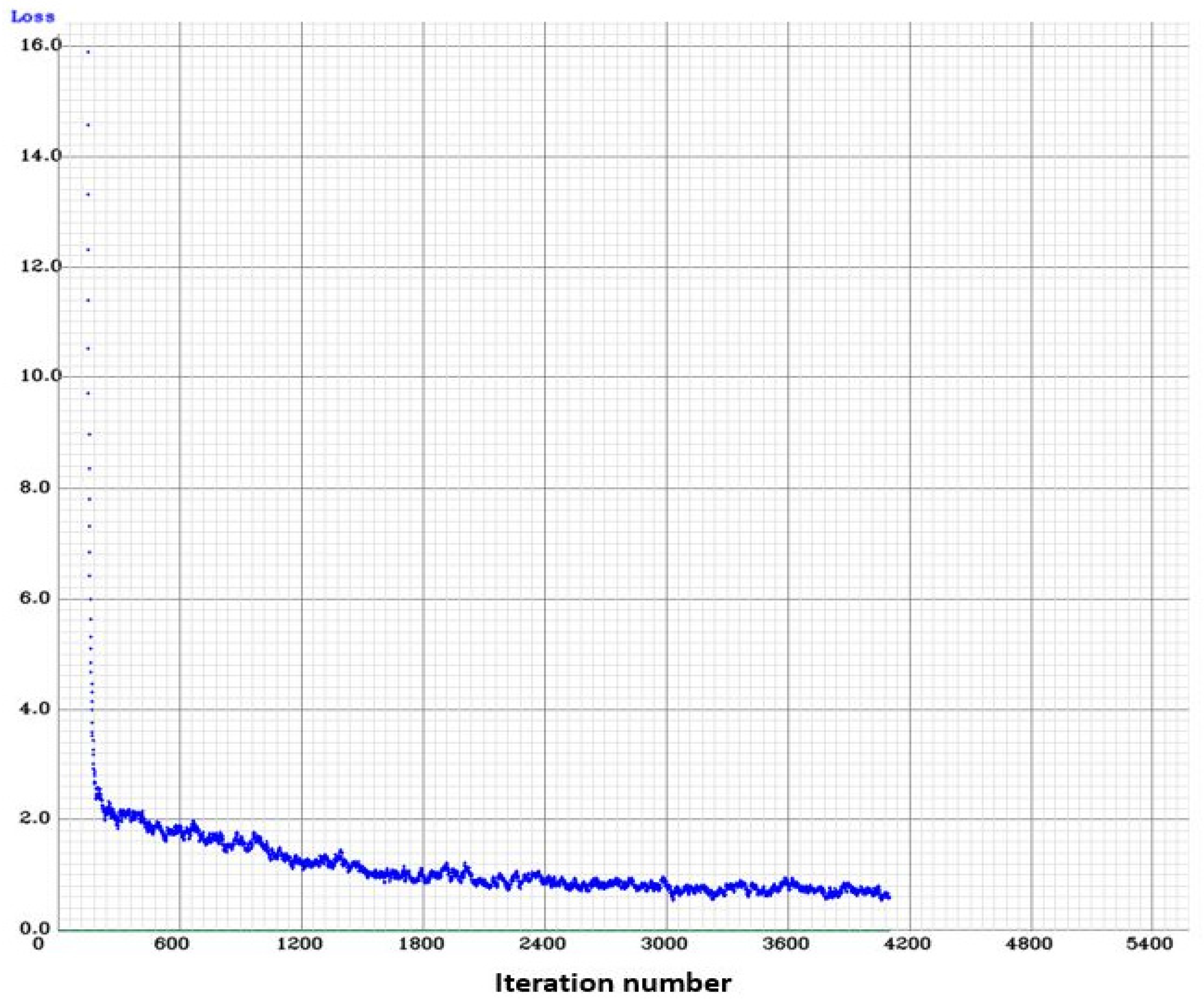

2.5.3. Object Detection Model—YOLOv4

2.5.4. Detection with Test Images

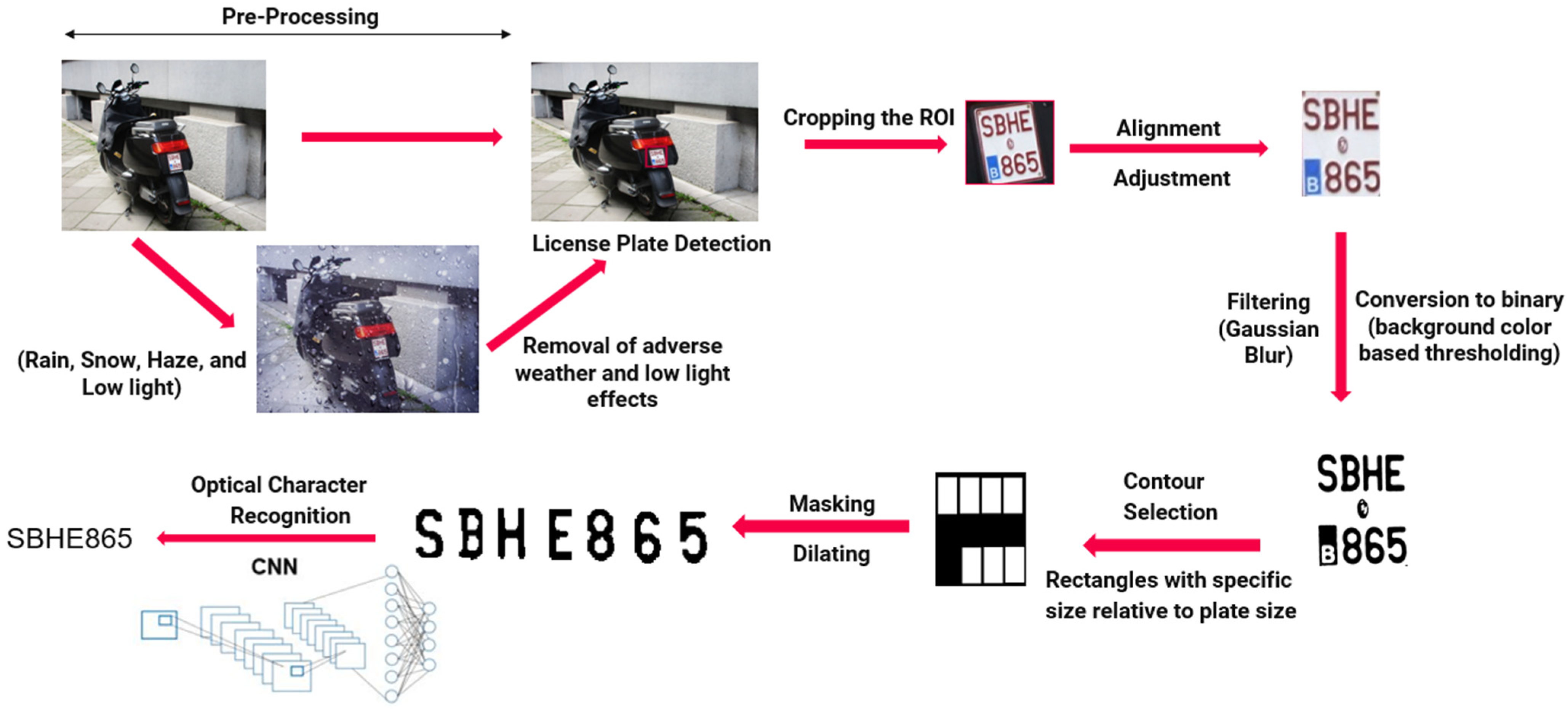

2.6. L-ANPR Character Recognition

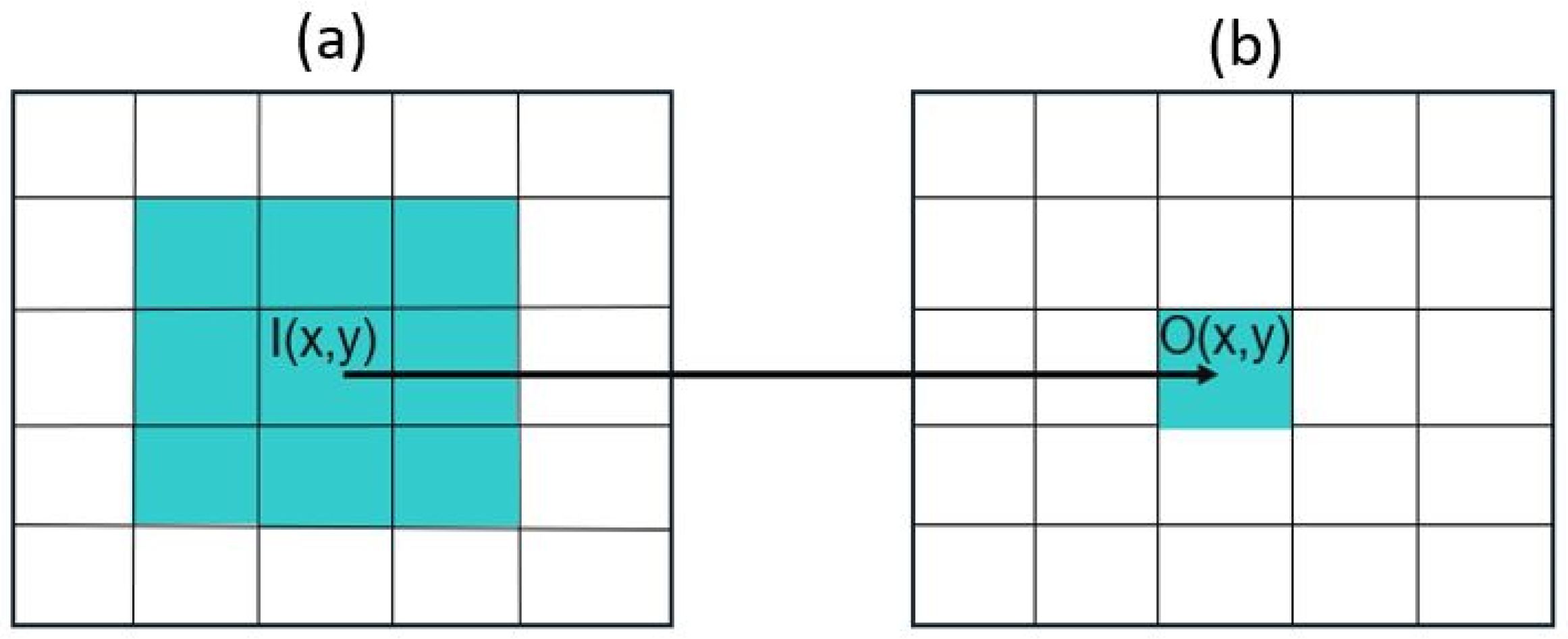

2.6.1. Pre-Processing: Removing Adverse Weather and Low Light Effects

2.6.2. Plate Conditioning

2.6.3. Character Extraction and Recognition

2.7. Device Validation Campaign L-ANPR Setup

2.8. Emission Measurement Campaign L-ANPR Setup

3. Results and Discussions

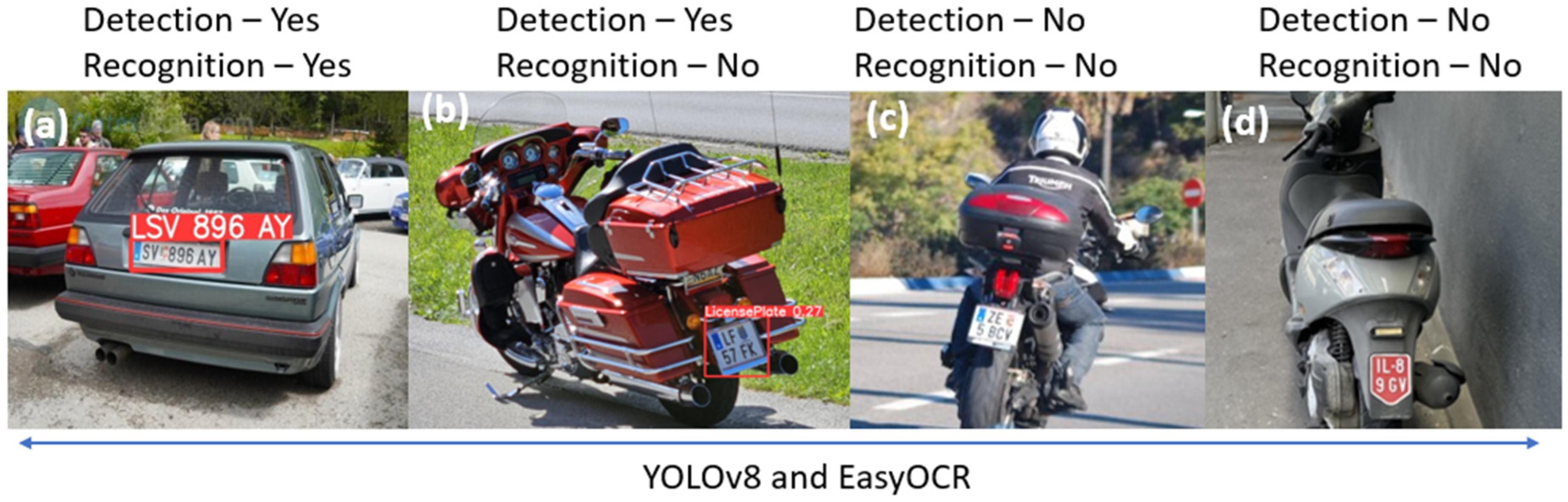

3.1. Performance Comparison of State-of-the-Art License Plate Detection and Recognition Algorithms on Category L Vehicles

3.2. L-ANPR License Plate Detection

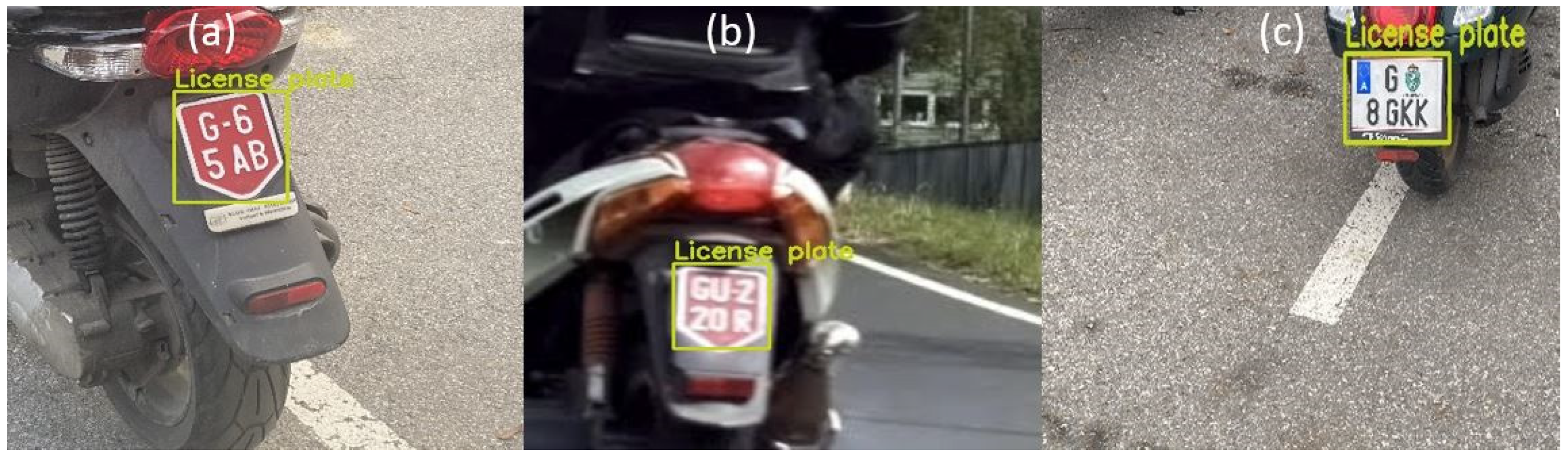

3.2.1. Testing with Images from Online Datasets

3.2.2. Testing with Images from Validation Campaign in Austria

3.2.3. Testing with Images from Emission Measurement Campaign in Belgium

3.3. L-ANPR License Plate Character Recognition

3.3.1. Testing with Images from Online Datasets

3.3.2. Testing with Images from Validation Campaign in Austria

3.3.3. Testing with Images from Emission Measurement Campaign in Belgium

3.4. Limitations and Solutions

3.4.1. Low Resolution License Plates

3.4.2. Effects of Shadow and Sunlight

3.4.3. YOLOv4-Based Detection Algorithm

3.5. Adaptation of the L-ANPR System for Broader Applicability

4. Summary and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zhang, K.; Batterman, S. Air pollution and health risks due to vehicle traffic. Sci. Total Environ. 2013, 450–451, 307–316.
2. Bainschab, M.; Schriefl, M.A.; Bergmann, A. Particle number measurements within periodic technical inspections: A first quantitative assessment of the influence of size distributions and the fleet emission reduction. Atmos. Environ. X 2020, 8, 100095.
3. Davison, J.; Bernard, Y.; Borken-Kleefeld, J.; Farren, N.J.; Hausberger, S.; Sjödin, Å.; Tate, J.E.; Vaughan, A.R.; Carslaw, D.C. Distance-based emission factors from vehicle emission remote sensing measurements. Sci. Total Environ. 2020, 739, 139688.
4. Hansen, A.D.; Rosen, H. Individual measurements of the emission factor of aerosol black carbon in automobile plumes. J. Air Waste Manag. Assoc. 1990, 40, 1654–1657.
5. Bishop, G.A.; Starkey, J.R.; Ihlenfeldt, A.; Williams, W.J.; Stedman, D.H. IR long-path photometry: A remote sensing tool for automobile emissions. Anal. Chem. 1989, 61, 671A–677A.
6. Janhäll, S.; Hallquist, M. A novel method for determination of size-resolved, submicrometer particle traffic emission factors. Environ. Sci. Technol. 2005, 39, 7609–7615.
7. Hallquist, M.; Jerksjö, M.; Fallgren, H.; Westerlund, J.; Sjödin, Å. Particle and gaseous emissions from individual diesel and CNG Buses. Atmos. Chem. Phys. 2013, 13, 5337–5350.
8. Ghaffarpasand, O.; Ropkins, K.; Beddows, D.C.S.; Pope, F.D. Detecting high emitting vehicle subsets using Emission Remote Sensing Systems. Sci. Total Environ. 2023, 858, 159814.
9. CARES: City Air Remote Emission Sensing. Available online: https://cares-project.eu/ (accessed on 15 December 2024).
10. Knoll, M.; Penz, M.; Juchem, H.; Schmidt, C.; Pöhler, D.; Bergmann, A. Large-scale automated emission measurement of individual vehicles with point sampling. Atmos. Meas. Tech. 2024, 17, 2481–2505.
11. Imtiaz, H.H.; Schaffer, P.; Liu, Y.; Hesse, P.; Bergmann, A.; Kupper, M. Qualitative and quantitative analyses of automotive exhaust plumes for remote emission sensing application using Gas Schlieren Imaging Sensor System. Atmosphere 2024, 15, 1023.
12. Stedman, D.H.; Bishop, G.A. An Analysis of On-Road Remote Sensing As A Tool For Automobile Emissions Control. In Final Report to the Illinois Department of Energy and Natural Resources; ILENR/RE-AQ-90/05; University of Denver Press: Denver, CO, USA, 1990.
13. History of ANPR. Available online: https://www.anpr-international.com/history-of-anpr/ (accessed on 10 January 2025).
14. Ahmad, I.S.; Boufama, B.; Habashi, P.; Anderson, W.; Elamsy, T. Automatic License Plate Recognition: A comparative study. In Proceedings of the 2015 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Abu Dhabi, United Arab Emirates, 7–10 December 2015; pp. 635–640.
15. Paruchuri, H. Application of artificial neural network to ANPR: An overview. ABC J. Adv. Res. 2015, 4, 143–152.
16. Fakhar, A.G.S.; Hamid, M.S.; Kadmin, A.F.; Hamzah, R.A.; Aidil, M. Development of Portable Automatic Number Plate Recognition (ANPR) System on Raspberry Pi. Int. J. Electr. Comput. Eng. 2019, 9, 1805.
17. Nayak, V. Automatic Number Plate Recognition. Int. J. Adv. Trends Comput. Sci. Eng. 2020, 9, 3783–3787.
18. Salma; Saeed, M.; Ur Rahim, R.; Gufran Khan, M.; Zulfiqar, A.; Bhatti, M.T. Development of ANPR framework for Pakistani vehicle number plates using object detection and OCR. Complexity 2021, 2021, 5597337.
19. Rafek, S.N.; Kamarudin, S.N.; Mahmud, Y. Deep learning-based car plate number recognition (CPR) in videos stream. In Proceedings of the 2024 5th International Conference on Artificial Intelligence and Data Sciences (AiDAS), Bangkok, Thailand, 3–4 September 2024; pp. 538–543.
20. Al-Hasan, T.M.; Bonnefille, V.; Bensaali, F. Enhanced YOLOv8-based system for automatic number plate recognition. Technologies 2024, 12, 164.
21. Liu, R. Improved LKM-YOLOv10 vehicle licence plate recognition detection system based on YOLOv10. In Proceedings of the 2024 4th International Conference on Electronic Information Engineering and Computer Science (EIECS), Yanji, China, 27–29 September 2024; pp. 622–626.
22. Zunair, H.; Khan, S.; Hamza, A.B. RSUD20K: A dataset for road scene understanding in autonomous driving. In Proceedings of the 2024 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 27–30 October 2024; pp. 708–714.
23. Geetha, A.S. YOLOv4: A Breakthrough in Real-Time Object Detection. arXiv 2025.
24. L-Vehicles Emissions and Noise Mitigation Solutions. Available online: https://www.lens-horizoneurope.eu/ (accessed on 17 August 2024).
25. Raspberry Pi Documentation Camera Filters (Camera). Available online: https://www.raspberrypi.com/documentation/accessories/camera.html#hq-and-gs-cameras (accessed on 16 October 2024).
26. Raspberry Pi Documentation Filter Removal (Camera). Available online: https://www.raspberrypi.com/documentation/accessories/camera.html#ir-filter (accessed on 16 October 2024).
27. Photos of Vehicles and License Plates. Available online: https://platesmania.com/ (accessed on 17 January 2024).
28. Tzutalin. LabelImg. Git Code. 2015. Available online: https://github.com/tzutalin/labelImg (accessed on 15 January 2024).
29. Ning, M.; Lu, Y.; Hou, W.; Matskin, M. YOLOv4-object: An efficient model and method for Object Discovery. In Proceedings of the 2021 IEEE 45th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 12–16 July 2021.
30. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.
31. Chen, W.-T.; Huang, Z.-K.; Tsai, C.-C.; Yang, H.-H.; Ding, J.-J.; Kuo, S.-Y. Learning multiple adverse weather removal via two-stage knowledge learning and multi-contrastive regularization: Toward a unified model. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.
32. Yang, H.; Pan, L.; Yang, Y.; Liang, W. Language-driven all-in-one adverse weather removal. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 24902–24912.
33. Liu, Y.; Wang, X.; Hu, E.; Wang, A.; Shiri, B.; Lin, W. VNDHR: Variational single nighttime image Dehazing for enhancing visibility in intelligent transportation systems via hybrid regularization. IEEE Trans. Intell. Transp. Syst. 2025; early access.
34. Kerle, N.; Janssen, L.; Bakker, W.H. Principles of Remote Sensing: An Introductory Textbook; The International Institute for Geo-Information Science and Earth Observation (ITC): Enschede, The Netherlands, 2009.
35. Paper, R.P.; Chandel, R.C.; Gupta, G. Image Filtering Algorithms and Techniques: A Review. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2013, 3, 198–202.
36. Roy, P.; Dutta, S.; Dey, N.; Dey, G.; Chakraborty, S.; Ray, R. Adaptive thresholding: A comparative study. In Proceedings of the 2014 International Conference on Control, Instrumentation, Communication and Computational Technologies (ICCICCT), Kanyakumari District, India, 10–11 July 2014; pp. 1182–1186.
37. Gong, X.-Y.; Su, H.; Xu, D.; Zhang, Z.-T.; Shen, F.; Yang, H.-B. An overview of contour detection approaches. Int. J. Autom. Comput. 2018, 15, 656–672.
38. Said, K.A.; Jambek, A.B. Analysis of image processing using morphological erosion and dilation. J. Phys. Conf. Ser. 2021, 2071, 012033.
39. Xue, Y. Optical Character Recognition; Department of Biomedical Engineering, University of Michigan: Ann Arbor, MI, USA, 2014.
40. Aakash, P. Optical character recognition. Int. J. Sci. Res. Manag. 2016, 4, 409.
41. Smith, R. An overview of the Tesseract OCR engine. In Proceedings of the Ninth International Conference on Document Analysis and Recognition (ICDAR 2007), Curitiba, Brazil, 23–26 September 2007; Volume 2, pp. 629–633.
42. Tesseract-OCR. Tesseract-OCR/Tesseract: Tesseract Open Source OCR Engine (Main Repository). Available online: https://github.com/tesseract-ocr/tesseract (accessed on 16 August 2024).
43. L-Vehicles Emissions and Noise Mitigation Solutions. Available online: https://www.lens-horizoneurope.eu/demonstration-sites/ (accessed on 17 August 2024).
44. ARIJIT1080/Licence-Plate-Detection-and-Recognition-Using-YOLO-V8-Easyocr. Available online: https://github.com/Arijit1080/Licence-Plate-Detection-and-Recognition-using-YOLO-V8-EasyOCR.git (accessed on 1 May 2025).
45. Entbappy/License-Plate-Extraction-Save-Data-to-SQL-Database. Available online: https://github.com/entbappy/License-Plate-Extraction-Save-Data-to-SQL-Database.git (accessed on 1 May 2025).
46. Lu, Y.; Chen, Y.; Zhang, S. Research on the method of recognizing book titles based on paddle OCR. In Proceedings of the 2024 4th International Signal Processing, Communications and Engineering Management Conference (ISPCEM), Montreal, QC, Canada, 28–30 November 2024; pp. 1044–1048.
47. Photos of Vehicles and License Plates. Available online: https://platesmania.com/be/ (accessed on 17 January 2024).
48. Photos of Vehicles and License Plates. Available online: https://platesmania.com/be/gallery.php?ctype=1&format=9 (accessed on 2 May 2025).
49. Olav’s License Plate Pictures: Number Plate Photos. Available online: https://www.olavsplates.com/ (accessed on 25 May 2024).

| Configuration Parameter | Value |
|---|---|
| Batch size | 64 |
| Subdivision | 16 |
| Epochs | 100 |
| Classes | 1 |
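
The training parameters listed above map onto a Darknet YOLOv4 configuration file. A short sketch, assuming the AlexeyAB/darknet conventions (each batch is split into `subdivisions` mini-batches; the convolution before each of the three [yolo] layers needs `filters = (classes + 5) × 3`; `max_batches` should be at least `classes × 2000`, the number of training images, and 6000), derives the dependent values. Because Darknet counts iterations rather than epochs, converting 100 epochs requires the training-set size; the 4000 images used below are a hypothetical figure, and `yolov4_cfg_values` is an illustrative helper.

```python
import math


def yolov4_cfg_values(classes: int, batch: int, subdivisions: int,
                      epochs: int, n_train_images: int) -> dict:
    """Derive Darknet YOLOv4 cfg quantities from the training-table parameters."""
    if batch % subdivisions != 0:
        raise ValueError("batch must be divisible by subdivisions")
    # Darknet splits each batch into `subdivisions` mini-batches per GPU pass.
    mini_batch = batch // subdivisions
    # Each [yolo] head predicts 3 anchors x (4 box coords + 1 objectness + classes).
    filters = (classes + 5) * 3
    # Darknet trains for a number of iterations (batches), not epochs.
    iterations = math.ceil(epochs * n_train_images / batch)
    # Rule of thumb from the AlexeyAB/darknet README.
    max_batches = max(classes * 2000, n_train_images, 6000)
    return {
        "mini_batch": mini_batch,
        "filters": filters,
        "iterations_for_epochs": iterations,
        "recommended_max_batches": max_batches,
        # README advice: learning-rate steps at 80% and 90% of max_batches.
        "steps": (max_batches * 8 // 10, max_batches * 9 // 10),
    }


print(yolov4_cfg_values(classes=1, batch=64, subdivisions=16, epochs=100, n_train_images=4000))
```

With one class, each GPU pass processes 4 images and the [yolo]-preceding convolutions need 18 filters; under the assumed 4000 images, 100 epochs correspond to 6250 iterations.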

| Performance Metric | Value |
|---|---|
| Precision | 0.99 |
| Recall | 1.00 |
| F1-score | 0.99 |
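
The F1-score is the harmonic mean of precision and recall, F1 = 2PR/(P + R). A quick check confirms that the reported values are consistent: with P = 0.99 and R = 1.00, F1 = 198/199 ≈ 0.99497, which rounds to 0.99 at two decimals. The equivalent count-based form is shown with hypothetical counts (99 true positives, 1 false positive, 0 false negatives) that reproduce the same precision and recall.

```python
def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """Equivalent form: F1 = 2TP / (2TP + FP + FN)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


print(round(f1_from_pr(0.99, 1.00), 2))  # 0.99
print(f1_from_counts(99, 1, 0))          # hypothetical counts, same value as above
```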

| L-Vehicle Type | Data Source | Detection Accuracy |
|---|---|---|
| Big Bikes or Scooters | Public dataset | ~95% |
| Big Bikes or Scooters | Validation Campaign | ~90% |
| Big Bikes or Scooters | Measurement Campaign | ~90% |
| Mopeds | Public dataset | ~90% |
| Mopeds | Validation Campaign | ~85% |
| Mopeds | Measurement Campaign | ~85% |

| L-Vehicle Type | Data Source | Recognition Accuracy |
|---|---|---|
| Big Bikes or Scooters | Public dataset | ~75% |
| Big Bikes or Scooters | Validation Campaign | ~70% |
| Big Bikes or Scooters | Measurement Campaign | ~70% |
| Mopeds | Public dataset | ~70% |
| Mopeds | Validation Campaign | ~65% |
| Mopeds | Measurement Campaign | ~60% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Imtiaz, H.H.; Schaffer, P.; Hesse, P.; Kupper, M.; Bergmann, A. Automatic Number Plate Detection and Recognition System for Small-Sized Number Plates of Category L-Vehicles for Remote Emission Sensing Applications. Sensors 2025, 25, 3499. https://doi.org/10.3390/s25113499

Imtiaz HH, Schaffer P, Hesse P, Kupper M, Bergmann A. Automatic Number Plate Detection and Recognition System for Small-Sized Number Plates of Category L-Vehicles for Remote Emission Sensing Applications. Sensors. 2025; 25(11):3499. https://doi.org/10.3390/s25113499

Chicago/Turabian Style: Imtiaz, Hafiz Hashim, Paul Schaffer, Paul Hesse, Martin Kupper, and Alexander Bergmann. 2025. "Automatic Number Plate Detection and Recognition System for Small-Sized Number Plates of Category L-Vehicles for Remote Emission Sensing Applications" Sensors 25, no. 11: 3499. https://doi.org/10.3390/s25113499

APA Style: Imtiaz, H. H., Schaffer, P., Hesse, P., Kupper, M., & Bergmann, A. (2025). Automatic Number Plate Detection and Recognition System for Small-Sized Number Plates of Category L-Vehicles for Remote Emission Sensing Applications. Sensors, 25(11), 3499. https://doi.org/10.3390/s25113499