Abstract

To address the challenges of accurate indoor positioning in complex environments, this paper proposes a two-stage indoor positioning method, ResT-IMU, which integrates the ResNet and Transformer architectures. The method initially processes the IMU data using Kalman filtering, followed by the application of windowing to the data. Residual networks are then employed to extract motion features by learning the residual mapping of the input data, which enhances the model’s ability to capture motion changes and predict instantaneous velocity. Subsequently, the self-attention mechanism of the Transformer is utilized to capture the temporal features of the IMU data, thereby refining the estimation of movement direction in conjunction with the velocity predictions. Finally, a fully connected layer outputs the predicted velocity and direction, which are used to calculate the trajectory. During training, the RMSE loss is used to optimize velocity prediction, while the cosine similarity loss is employed for direction prediction. Theexperimental results demonstrate that ResT-IMU achieves velocity prediction errors of 0.0182 m/s on the iIMU-TD dataset and 0.014 m/s on the RoNIN dataset. Compared with the ResNet model, ResT-IMU achieves reductions of 0.19 m in ATE and 0.05 m in RTE on the RoNIN dataset. Compared with the IMUNet model, ResT-IMU achieves reductions of 0.61 m in ATE and 0.02 m in RTE on the iIMU-TD dataset and reductions of 0.32 m in ATE and 0.33 m in RTE on the RoNIN dataset. Compared with the ResMixer model, ResT-IMU achieves reductions of 0.13 m in ATE and 0.02 m in RTE on the RoNIN dataset. These improvements indicate that ResT-IMU offers superior accuracy and robustness in trajectory prediction.

1. Introduction

In recent years, localization technologies have become essential for a variety of applications, including navigation, tracking, and environmental perception. Accurate indoor localization is particularly crucial, as people spend a significant amount of time in indoor environments such as shopping centers, airports, and offices. Common indoor localization technologies, such as RFID [1], UWB [2], Wi-Fi positioning [3,4], Bluetooth positioning [5], infrared positioning [6], ultrasonic positioning [7], vision-based positioning [8], INS [9,10], geomagnetic positioning [11], and Zigbee positioning [12], each possess unique application scenarios and performance characteristics. However, many of these technologies necessitate additional hardware deployment. In contrast, Inertial Measurement Unit (IMU) technology, as an integrated component on modern smartphones, offers extensive application potential in GPS-limited scenarios by capturing high-precision motion states without the need for additional hardware. Depending on the implementation techniques, IMU localization can be categorized into traditional and deep learning-based approaches.

Traditional IMU localization typically employs methods such as step counting and zero-velocity updates to mitigate errors. Step counting involves monitoring gait information during walking or running to estimate position and distance by counting the number of steps taken. Zero-velocity updating identifies moments when the object is stationary and utilizes these instances to correct the velocity drift errors in the inertial navigation system (INS). Wang et al. [13] investigated the impact of IMU installation locations on the accuracy of pedestrian inertial navigation systems assisted by zero-velocity update techniques. Another approach involves using filters to combine IMU measurements with prior information to estimate position and velocity in real time, thereby correcting errors. The Kalman filter, a common example, effectively integrates the acceleration and angular velocity data from IMUs through its recursive data fusion algorithm. References [14,15,16,17] have investigated the use of filtering techniques in combination with IMU data to enhance the accuracy and robustness of navigation and positioning systems. Fusing IMU data with other sensor data is also a viable solution. For example, GPS provides globally accurate position information. When combined with IMU data, it can eliminate cumulative errors and reduce dependence on closed-loop systems. Magnetometers can measure the Earth’s magnetic field to assist the IMU in calibrating yaw angles, thereby reducing long-term accumulated errors and improving positioning accuracy. Eckenhoff et al. [18] proposed a multi-IMU, multi-camera visual-inertial navigation system named MIMC-VINS, which integrates information from all sensors.

Deep learning-based IMU localization leverages advanced machine learning techniques [19,20] to reduce errors in trajectory estimation from IMU data. Replacing traditional step prediction and zero-velocity prediction methods with neural networks is a viable approach. The advantage of this method lies in its ability to utilize deep learning models to learn and infer inertial odometry directly from raw IMU data, thereby simultaneously addressing errors in dynamic motion. This enhances the overall performance and reliability of the positioning system. Wagstaff et al. [21] and Brossard et al. [22] proposed methods to enhance the accuracy of zero-velocity aided inertial navigation systems by replacing the standard zero-velocity detector with a Long Short-Term Memory (LSTM) neural network. Neural networks can also be employed to dynamically adjust the parameters of filters to effectively mitigate the error issues associated with IMU localization. Brossard et al. [23] utilized deep neural networks to dynamically tune the noise parameters of filters, thereby enabling real-time determination of the most suitable covariance noise matrix.

Learning position transformations directly from IMU data is a method that warrants attention. Chen et al. [24,25] proposed a deep neural network framework that employs an LSTM model to learn position transformations from IMU data, thereby constructing an inertial odometry system. The LSTM model is capable of effectively modeling the temporal dynamics of human motion, thereby making it suitable for inertial navigation tasks. However, the sequential nature of LSTMs restricts their parallelization capabilities, leading to slower training speeds when dealing with very long sequences. Herath et al. [26] developed a ResNet-based deep learning architecture that focuses on extracting position information from IMU data to construct an inertial odometry system. However, ResNet itself may not fully capture the temporal dependencies crucial for inertial navigation, as it is primarily designed for extracting spatial features. Recently, the Transformer architecture [27] has emerged as a powerful alternative for sequence modeling. Unlike RNNs, the Transformer relies entirely on attention mechanisms to capture global dependencies between input and output. This allows for significantly more parallelization during training and can lead to superior performance in tasks such as machine translation. The Transformer’s ability to handle long-range dependencies and its efficiency in training make it a promising candidate for improving IMU localization [28].

This paper addresses the challenge of accurate indoor localization by proposing a novel two-stage network-based IMU localization model, named ResNet–Transformer Integrated Network for Inertial Measurement Units (ResT-IMUs). Unlike the two-stage methods in [29,30], which focus on switching between different localization techniques or fusing multiple sensor modalities, this method leverages deep learning techniques, combining ResNet and Transformer architectures to process IMU sensor data, learn human walking patterns, predict movement velocity, and calculate the trajectory of a target within an indoor environment. Specifically, the first stage of ResT-IMU predicts the instantaneous velocity from IMU data using a ResNet-based velocity branch model, while the second stage refines the estimation of the motion direction using a Transformer-based orientation branch model. The main contributions of this paper are as follows:

- This paper applies the Transformer architecture to motion trajectory prediction using IMU data. By leveraging the self-attention mechanism of the Transformer, it captures long-term dependencies in the IMU data. This not only improves the accuracy of the predictions but also enhances the model’s adaptability to complex dynamic environments.

- This paper proposes an innovative two-stage prediction framework. The model first predicts the instantaneous velocity and subsequently refines the estimation of the motion direction based on the predicted velocity. This staged approach enables the model to focus on specific prediction tasks at each stage, thereby enhancing the overall prediction accuracy and reliability.

- The model adaptively adjusts the size of the data window for direction prediction based on the predicted velocity. This dynamic adjustment mechanism enables the model to more flexibly handle different motion states, particularly during periods of drastic velocity changes, thereby allowing it to quickly focus on the most relevant data intervals. Additionally, velocity information is incorporated into the loss function for direction prediction, thereby making the loss calculation dependent not only on the accuracy of direction but also on the matching degree of velocity.

2. Improved ResT-IMU Model

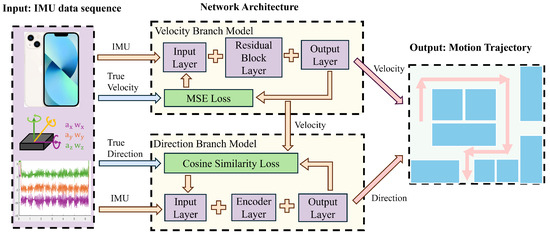

The architecture of the proposed ResT-IMU model is depicted in Figure 1. It takes the IMU acceleration and angular velocity, collected via a smartphone, as input. For the velocity branch, it employs a ResNet-based architecture to calculate velocity, thereby leveraging ResNet’s strong spatial feature extraction capabilities. For the direction branch, it uses a Transformer-based architecture to calculate direction, thereby taking advantage of the Transformer’s ability to handle long-range dependencies. This design integrates the strengths of existing techniques to address their limitations and enhance the accuracy of trajectory calculation.

Figure 1.

Architecture of the ResT-IMU model.

The model architecture comprises two main components: the velocity branch model and the direction branch model. The core of the velocity branch model is the ResNet network. First, it maps the acceleration and angular velocity data from the IMU from the input space to the feature space, thereby generating high-dimensional feature vectors. Then, the output layer maps these high-dimensional feature vectors to the output space. The network parameters are optimized using the MSE loss function, resulting in the generation of the velocity sequence as the final output of this branch. The core of the direction branch model is the encoder structure within the Transformer network. The encoder receives input vectors that have been processed through an embedding layer and augmented with positional information. It captures the temporal sequence features of the data using the self-attention mechanism. The output layer then maps the high-dimensional feature vectors to the output space. In the cosine similarity loss function, the network parameters are optimized by incorporating the input data and the velocity information from the velocity branch model. This results in the generation of the direction sequence. By combining the velocity sequence and the direction sequence, the final trajectory of motion is computed.

2.1. Data Preparation

The model in this paper was trained using the iIMU-TD dataset, which comprises a total of 2.41 h and 10.4 km of data, collected in both indoor and outdoor experimental environments. The dataset was captured using two smartphones. One smartphone, equipped with an IMU sensor, was used to capture the triaxial acceleration and triaxial angular velocity data during the experiments. The other smartphone used in the experiments was ASUS_A002A, which integrates Tango technology developed by Google. This technology provides localization tags for the trajectory through visual recognition, as shown in Figure 2. During the experiments, the data collector placed the Tango-enabled smartphone on the chest to record a more accurate trajectory. The other smartphone was held in hand in a manner that simulates everyday use, to collect IMU data at a sampling rate of 200 Hz. After the experiments, the raw IMU data were preprocessed through filtering and other operations and then saved for subsequent analysis and use.

Figure 2.

Experimental data collection setup.

In the experiments, the IMU data record dynamic information in the device coordinate system, rather than in the global coordinate system. To predict the trajectory of motion, these data must be transformed into the global coordinate system, as shown in the following Equations (1) and (2):

In the equations, and represent the acceleration and angular velocity vectors in the Tango device coordinate system at time i, while and represent the acceleration and angular velocity vectors in the IMU device coordinate system at time i. is the rotation matrix that transforms from the IMU device coordinate system to the Tango device coordinate system at time i. The quaternion representation of this rotation matrix is given by the following Equation (3):

In the equation, represents the quaternion that transforms from the IMU device coordinate system to the Tango device coordinate system at time i. and respectively represent the initial attitudes of the IMU and Tango devices in their own coordinate systems. represents the initial rotation quaternion from the IMU device coordinate system to the Tango device coordinate system, which is determined by fixing the initial relative attitude of the two devices.

2.2. Velocity Branch Model

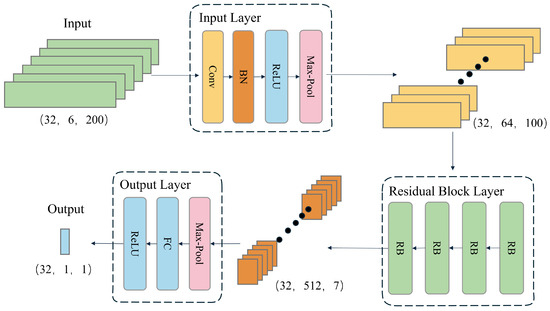

The velocity branch model is designed to predict the magnitude of instantaneous velocity from IMU data. Its architecture is depicted in Figure 3 and comprises three main components: the input module, the residual group module, and the output module. The input module first preprocesses the raw data collected by the IMU sensors. The data then flow through multiple residual blocks, which extract features by learning patterns in the data while mitigating the vanishing gradient problem using residual connections. Finally, the output module maps the extracted features to the predicted instantaneous velocity through global average pooling and a linear layer, thereby achieving accurate velocity estimation.

Figure 3.

Architecture of the velocity branch model.

The velocity branch model employs a time window of 200 data points as input, based on the data collected by the IMU sensors, to predict the instantaneous velocity of the last frame in the sequence. To adapt to the characteristics of IMU data, which are one-dimensional sequences, the model utilizes one-dimensional convolutional layers and pooling layers. These layers are specifically designed to process one-dimensional data efficiently. Additionally, a ReLU activation function is added to the output layer to ensure that the predicted velocity values are non-negative, which is essential for regression tasks involving speed magnitude. These modifications not only enhance the model’s suitability for handling long sequences of IMU data but also reduce the parameter count, making the model more efficient and better suited for processing extensive data sequences. With a stride of 10 for step-by-step prediction, the model can transform an IMU data sequence of length N into a velocity magnitude sequence of length . By applying this model, a series of discrete velocity values can be estimated from continuous IMU measurements, where each velocity value represents the speed magnitude of the last frame in a window of 200 data points.

2.2.1. Input Layer

The input layer performs preliminary processing on the input data and extracts initial features. A one-dimensional convolutional layer with a kernel size of 7 increases the number of input data channels from 6 to 64. Subsequently, a batch normalization layer normalizes the output of the convolutional layer, which accelerates training and enhances model stability. The normalized data are then activated by the ReLU activation function to introduce non-linearity. Finally, a max-pooling layer reduces the temporal dimension of the features by half while preserving important features.

2.2.2. Residual Block Layer

The model comprises four residual blocks. The number of output channels in each residual block increases according to the residual rules. After passing through the four residual blocks, the number of channels increases from 64 to 512. The addition of convolutional layers with a stride of 2 in the residual blocks reduces the temporal dimension from 100 to 7. This enables the model to extract more advanced features while removing redundant information, enhancing the model’s generalization ability.

2.2.3. Output Layer

The output layer maps the output features from the residual group to the final output space. The global average pooling layer compresses the temporal dimension of the features to 1, yielding the global average value for each channel. Subsequently, the fully connected layer regresses the 512-dimensional features to produce the final velocity prediction.

2.2.4. Loss Function

To objectively evaluate the model’s performance in the velocity prediction task, the MSE is adopted as the primary metric for quantifying the error. MSE is a widely used measure for comparing the differences between model predictions and actual observations, especially in continuous numerical prediction tasks such as velocity estimation. The formula for calculating the velocity loss using MSE is shown in the following Equation (4):

In the equation, n denotes the number of samples, represents the predicted velocity value for the i-th sample by the model, and is the corresponding actual velocity value. The smaller the value of MSE, the higher the prediction accuracy of the model, indicating a smaller difference between the predicted values and the actual values.

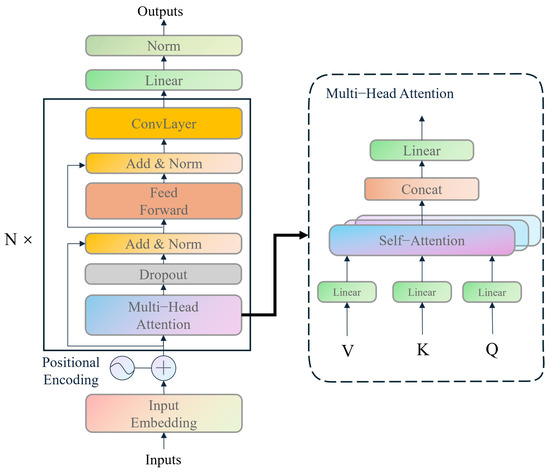

2.3. Direction Branch Model

The direction branch model is designed to predict the instantaneous direction vector from IMU data, taking into account the velocity magnitude predicted by the velocity branch model. The velocity magnitude is used not only for the dynamic adjustment of the data window but also in the loss function calculation. The model’s architecture, depicted in Figure 4, includes an input embedding layer, a positional encoding layer, an encoder layer, a fully connected layer, and an activation function. The embedding layer transforms the input into a higher-dimensional vector form, while the positional encoding layer adds a positional vector to each position. The encoder layer processes the input data through a multi-layer structure, with each head in the multi-head attention mechanism module performing self-attention computations, enabling the model to focus on the internal information of the sequence data. The fully connected layer converts the encoder’s output into a predicted direction vector, and the activation function introduces non-linearity to enhance the model’s expressive power. The proposed framework incorporates several novel modifications. Specifically, the decoder component of the Transformer architecture is omitted, as the objective is to derive an average velocity rather than predict a sequence of velocities within a window. This modification significantly enhances computational efficiency. Building on the insights from [31], a ConvLayer is integrated after each encoder layer to mitigate computational overhead and augment the model’s capacity to handle long sequence data. Ultimately, a linear layer and L2 normalization are appended after the encoder to generate the unit vector of velocity.

Figure 4.

Architecture of the direction branch model.

The direction branch model also employs a time window of 200 data points as input, based on the data collected by the IMU sensors, to predict the direction vector of the last frame in the sequence. Unlike the velocity branch model, it incorporates the velocity magnitude sequence to aid in direction prediction. Specifically, it discards certain data points based on the velocity magnitude, thereby dynamically adjusting the size of the window. With a stride of 10 for step-by-step prediction, the model can transform an IMU data sequence of length N into a direction vector sequence of length . Finally, by combining the velocity magnitude sequence, a complete velocity vector sequence is formed. By applying this model, a series of discrete velocity vectors can be estimated from continuous IMU measurements, where each velocity vector represents the velocity of the last frame in a window of 200 data points.

2.3.1. Embedding Layer

The embedding layer employs a convolutional layer with a kernel size of 3 to increase the number of input channels from 6 to 64, capturing local features within the time series and thereby enhancing the model’s expressive capability.

2.3.2. Positional Encoding

The role of positional encoding is to provide the model with positional information for each time step, thereby enabling the model to capture the sequential order of the series. The calculation formula is shown as follows:

where is the position in the sequence, i is the dimension, and is the dimensionality of the embedding.

2.3.3. Encoder Layer

The encoder consists of multiple encoder layers, each of which contains an attention layer that performs self-attention operations on the input sequence to extract the dependencies within the time series. The multi-head attention mechanism module in each encoder layer has multiple heads, each of which executes the self-attention mechanism to focus on different parts of the sequence data. The calculation is shown in the following Equation (6):

where Q, K, and V are the query, key, and value matrices, respectively, and is the dimension of the key vectors. The fully connected layer transforms the output of the encoder into the predicted direction vector, and the activation function introduces non-linearity, thereby enhancing the model’s expressive capability.

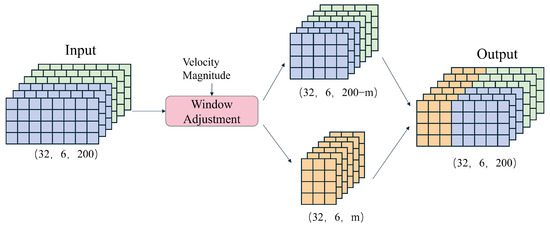

2.3.4. Adaptive Window Adjustment

This paper proposes a dynamic window adjustment strategy, illustrated in Figure 5, to enhance the accuracy of velocity prediction based on IMU data. This strategy dynamically adjusts the size of the data window based on the predicted velocity magnitudes, thereby optimizing computational efficiency and prediction accuracy.

Figure 5.

Dynamic window adjustment diagram.

The core idea of this strategy is to utilize the velocity prediction results from the preceding branch model to adjust the size of the data window in the direction branch model, thereby better capturing the motion features and enhancing prediction accuracy. The predicted velocities from the velocity branch model are first stored locally. Then, in the direction branch model, the size of the data window used for velocity direction prediction is dynamically adjusted based on the magnitude of velocity. This process is implemented through the following Equation (7):

In the equation, and represent the data of the j-th frame within a 200-frame window, m denotes the adjustment node, and its calculation is shown in the following Equation (8):

In the equation, v represents the velocity magnitude corresponding to the window.

The adjustment method takes the predicted velocity magnitude and the window data for direction prediction as inputs and outputs of the modified window data. Essentially, it does not change the window size but sets the values of the first m data points in the window to zero. The value of m increases with the velocity magnitude. This is because higher velocities are more likely to result in velocity changes, reducing the correlation between the current velocity and previous data points.

2.3.5. Loss Function

For the direction branch model, this paper proposes a composite loss function that integrates cosine similarity and the magnitude of the velocity vector to optimize the network’s prediction accuracy of the velocity vector direction, especially at higher speeds. The construction of this loss function is based on the following theoretical foundation: during high-speed motion, deviations in direction prediction can significantly impact the accuracy of the trajectory. Therefore, the objective of this function is to enable the network to focus more on reducing directional prediction errors for samples with higher velocity values during the training process, as shown in the following Equation (9):

By multiplying the cosine similarity with the velocity magnitude, the model ensures that both the direction and magnitude of the velocity are considered during training. Let x be the predicted velocity direction vector and y be the true velocity direction vector. The cosine similarity is calculated as shown in the following Equation (10):

3. Results

3.1. Velocity Prediction Error

The velocity branch model is capable of predicting the magnitude of velocity at each moment. This prediction serves not only to determine the mode of motion but also as a crucial metric for evaluating model performance, providing guidance for model assessment and optimization. The RoNIN dataset [26], created to support data-driven inertial navigation research, contains over 42.7 h of IMU sensor data and corresponding ground-truth 3D trajectory data from more than 100 different human subjects. These data were collected in various buildings, covering 276 sequences. In this section, experiments are conducted on different models using both the RoNIN dataset and the iIMU-TD dataset. Table 1 presents a comparison of different models in velocity prediction. The ResNet and LSTM network models are reproduced based on the work in [26], while the idea of the Encoder model is inspired by [28]. Additionally, the IMUNet [32] and ResMixer [33] models, both of which are based on the work in [26], represent the latest research in this area.

Table 1.

Comparison of velocity prediction errors for six models on two datasets.

As shown in Table 1, the velocity branch of the ResT-IMU model outperforms single-stage models in predicting velocity magnitude. Single-stage models, which consider both the magnitude and direction of velocity during training, may yield less accurate predictions of velocity magnitude compared with models that focus solely on predicting velocity magnitude, such as the velocity branch of the ResT-IMU model. On the iIMU-TD and RoNIN datasets, the ResT-IMU model achieves average prediction errors of 0.0182 m/s and 0.014 m/s, respectively, corresponding to percentage errors of approximately 1.55% and 1.91%. Compared with other models, ResT-IMU demonstrates significantly lower error rates. On the iIMU-TD dataset, ResT-IMU outperforms ResNet (0.0265 m/s, 2.26%), LSTM (0.0881 m/s, 7.51%), Encoder (0.0281 m/s, 2.39%), IMUNet (0.0293 m/s, 2.51%), and ResMixer (0.0246 m/s, 2.11%). On the RoNIN dataset, ResT-IMU outperforms ResNet (0.0225 m/s, 3.08%), LSTM (0.0294 m/s, 4.02%), Encoder (0.0241 m/s, 3.29%), IMUNet (0.0233 m/s, 3.11%), and ResMixer (0.0221 m/s, 2.92%). These results highlight the superior performance of ResT-IMU in velocity prediction, achieving the lowest error rates among all tested models.

3.2. Absolute Error and Relative Error

After obtaining the velocity sequence from the velocity branch model and inputting it along with the original IMU data into the direction branch model, the direction sequence can be obtained through training. By combining the velocity sequence and the direction sequence into a velocity vector sequence, the trajectory of the target can be derived. Comparisons were made on the RoNIN dataset and the iIMU-TD dataset for the ResNet, LSTM, Encoder, IMUNet, and ResMixer models that predict velocity vectors, as well as the proposed ResT-IMU model. Relative trajectory error and absolute trajectory error were used as evaluation metrics. The RTE calculates the positioning error for each trajectory point over the subsequent 1 minute, sums these errors, and then divides the result by the total number of trajectory points. The ATE calculates the average error value for all trajectory points. By considering both error metrics, a comprehensive evaluation and comparison of the localization accuracy of various models can be made. Table 2 presents a comparison of trajectory errors for the six models on the two different datasets.

Table 2.

Comparison of absolute and relative trajectory errors for six models on two datasets.

As shown in Table 2, the ResT-IMU model demonstrates superior performance in terms of absolute and relative trajectory errors on both the iIMU-TD and RoNIN datasets. Specifically, on the iIMU-TD dataset, the ResT-IMU model achieves the lowest ATE of 3.08 and the third-lowest RTE of 3.89, outperforming the ResNet, LSTM, Encoder, IMUNet, and ResMixer models. On the RoNIN dataset, the ResT-IMU model exhibits the lowest ATE of 3.64 and the lowest RTE of 2.60. The ResT-IMU model achieves the lowest error on both the iIMU-TD and RoNIN datasets, attributable to its two-stage analysis approach. This approach first predicts the velocity magnitude using a dedicated velocity branch, which focuses solely on the magnitude of the velocity. This dedicated branch allows the model to more accurately estimate the speed of the target, reducing the error in the velocity predictions. The direction branch then uses the predicted velocity magnitude along with the original IMU data to predict the direction of movement. By separating the prediction of velocity magnitude and direction, the model can more effectively capture the spatial and temporal features of the IMU data, leading to improved localization accuracy. For instance, compared with the ResNet model, the ResT-IMU model achieves a 5.21% reduction in ATE and a 4.41% reduction in RTE on the RoNIN dataset. On the iIMU-TD dataset, the ResT-IMU model achieves a 16.53% reduction in ATE and a 0.51% reduction in RTE compared with the IMUNet model. On the RoNIN dataset, the ResT-IMU model outperforms the IMUNet model with an 8.08% reduction in ATE and an 11.26% reduction in RTE. Additionally, compared with the ResMixer model, the ResT-IMU model achieves a 3.20% reduction in ATE and a 1.14% reduction in RTE on the RoNIN dataset.

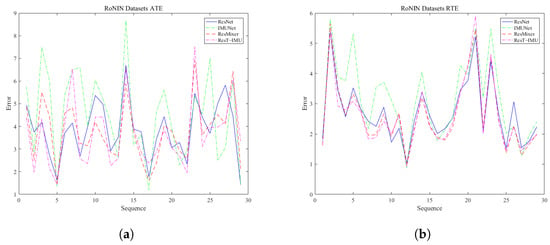

Figure 6 illustrates the error distribution of four network models on the RoNIN dataset. The pink line denotes the novel ResT-IMU model proposed in this study, while the other lines represent the ResNet, IMUNet, and ResMixer models. In terms of ATE, the ResT-IMU model outperforms the ResNet model in 20 of 29 trajectories, accounting for 69.0% of the total trajectories. It also outperforms the IMUNet model in 19 of 29 trajectories, equivalent to 65.5%. Furthermore, it surpasses the ResMixer model in 18 of 29 trajectories, representing 62.1% of the total trajectories. In terms of RTE, the ResT-IMU model outperforms the ResNet model in 21 of 29 trajectories, accounting for 72.4% of the total trajectories. It also outperforms the IMUNet model in 23 of 29 trajectories, equivalent to 79.3%. Additionally, it outperforms the ResMixer model in 17 of 29 trajectories, which is 58.6% of the total trajectories.

Figure 6.

Error distribution chart of four models on the RoNIN dataset. (a) shows the ATE of each trajectory for four different models on the RoNIN dataset, while (b) shows the RTE of each trajectory for four different models on the RoNIN dataset, where the pink line corresponds to the proposed ResT-IMU model.

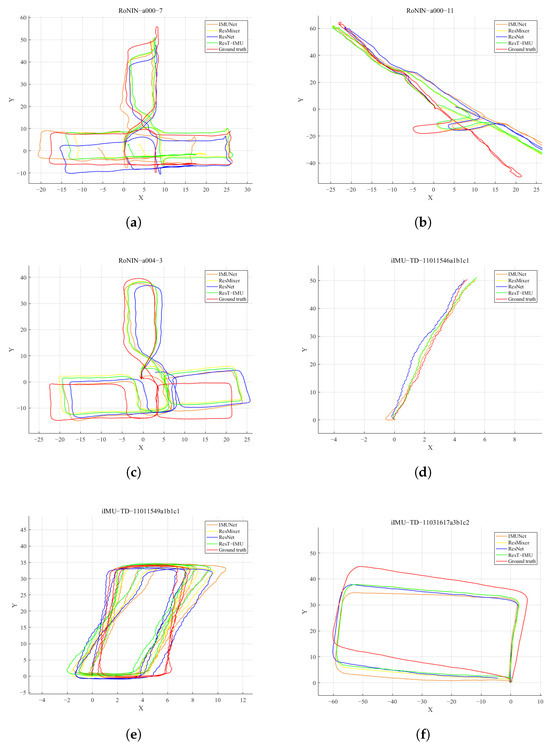

Figure 7 depicts the trajectories of four models on partial data from the RoNIN dataset and the iIMU-TD dataset. In the figure, the red trajectory represents the ground truth, while the green trajectory corresponds to the novel model proposed in this study, which is compared with the trajectories of other models represented by different colors. Due to the presence of errors, noise, and bias in the IMU, the double integration algorithm accumulates significant errors over time, leading to substantial deviations from the true trajectory even in a short period. Neural network-based methods effectively reduce the accumulation of errors and provide accurate estimations of the motion trajectories. As shown in Figure 7a, the ATEs of the ResNet, IMUNet, ResMixer, and ResT-IMU models on the trajectory prediction are 2.92 m, 4.15 m, 2.86 m, and 2.26 m, respectively. When the motion reaches the upper region of the trajectory, the trajectory predicted by the ResT-IMU model is significantly closer to the ground truth. As shown in Figure 7f, the ATEs of the four models are 4.07 m, 4.45 m, 4.06 m, and 3.90 m, respectively. The endpoint of the trajectory predicted by the ResT-IMU model is closer to the endpoint of the ground truth. The velocity branch of the model ensures that the overall trajectory scale is closer to reality, while the direction branch enhances the model’s fitting accuracy during both straight-line motion and turns. These features indicate that the new model achieves higher accuracy and stronger robustness in trajectory prediction.

Figure 7.

Trajectory comparison chart of different models on two datasets. (a–c) show the comparison of predicted trajectories and ground-truth trajectories for three data samples from the RoNIN dataset across different models. Similarly, (d–f) show the comparison of predicted trajectories and ground-truth trajectories for three data samples from the iIMU-TD dataset across different models. The red lines represent the ground-truth trajectories; the green lines correspond to the proposed ResT-IMU model.

3.3. Cross-Device Adaptability of the Model

To verify the generalization ability of the network model designed for smartphone IMU data in other application scenarios, additional experiments were conducted on the TLIO dataset [34]. This dataset was collected from a head-mounted device equipped with a Bosch BMI055 IMU sensor, capturing diverse motion patterns through a series of real-world activities. The dataset comprises over 400 sequences, totaling approximately 60 h of pedestrian data, covering a wide range of activities, including walking, standing, doing household chores, playing billiards, and climbing stairs. Table 3 presents a comparison of different models in terms of trajectory error on the this dataset.

Table 3.

Comparison of absolute and relative trajectory errors for six models on the TLIO datasets.

As shown in Table 3, the ResT-IMU model achieves nearly 2 m errors in both absolute and relative terms on the TLIO dataset. The absolute error is second only to the RoNIN (ResNet) algorithm, while the relative error outperforms other neural network methods. These results demonstrate that the proposed ResT-IMU model maintains high accuracy in other application scenarios, such as head-mounted IMU devices.

4. Conclusions

This paper presents a novel two-stage motion trajectory prediction model, ResT-IMU, which effectively enhances the accuracy and robustness of IMU-based localization. The proposed method leverages the self-attention mechanism of the Transformer architecture to capture temporal sequence features in IMU data, first predicting instantaneous velocity and then refining the estimation of motion direction based on the predicted velocity. The ResT-IMU model introduces a two-stage prediction framework that separates the prediction of instantaneous velocity and motion direction, allowing the model to focus on specific prediction tasks at each stage and thereby improving overall prediction accuracy and reliability. By combining ResNet for velocity prediction and Transformer for direction prediction, the model captures both spatial and temporal dependencies in IMU data, significantly improving trajectory prediction accuracy. The model also dynamically adjusts the size of the data window for direction prediction based on the predicted velocity, enabling it to handle different motion states more flexibly, especially during periods of drastic velocity changes.

Experimental results on the RoNIN and iIMU-TD datasets demonstrate that ResT-IMU outperforms existing models in both ATE and RTE. On the iIMU-TD dataset, ResT-IMU achieves an ATE of 3.08 and an RTE of 3.89, outperforming ResNet (ATE: 3.27, RTE: 3.73), LSTM (ATE: 3.87, RTE: 4.37), Encoder (ATE: 3.77, RTE: 3.95), IMUNet (ATE: 3.69, RTE: 3.91), and ResMixer (ATE: 3.21, RTE: 3.71). On the RoNIN dataset, ResT-IMU achieves an ATE of 3.64 and an RTE of 2.60, outperforming ResNet (ATE: 3.84, RTE: 2.72), LSTM (ATE: 4.22, RTE: 2.73), Encoder (ATE: 3.80, RTE: 2.78), IMUNet (ATE: 3.96, RTE: 2.93), and ResMixer (ATE: 3.76, RTE: 2.63). These results highlight the superior performance of ResT-IMU in reducing both ATE and RTE, thereby improving the overall accuracy of trajectory prediction.

Additional experiments on the TLIO dataset verify the model’s generalization ability in other application scenarios, with ResT-IMU achieving nearly 2 m errors in both absolute and relative terms, outperforming other neural network methods. Future research will focus on further optimizing the IMU-based localization algorithm, exploring more efficient network structures and training strategies to improve localization accuracy and model generalization ability, and reducing the algorithm’s power consumption to extend the device’s battery life while maintaining localization accuracy.

Author Contributions

Conceptualization, Y.Z.; methodology, Y.Z. and J.Z.; software, J.Z. and W.C.; validation, Y.Z., S.Y. and Q.C.; formal analysis, C.Z.; investigation, Y.Z., C.Z. and Q.C.; resources, Y.Z.; data curation, J.Z.; writing—original draft preparation, J.Z.; writing—review and editing, Y.Z.; visualization, W.C.; supervision, Y.Z.; project administration, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Jiangsu Graduate Research Fund Innovation Project grant number KYCX23_3059, and the APC was funded by Changzhou University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to considerations of privacy protection and ethical principles.

Acknowledgments

We are grateful to all our study participants for their support.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Yao, C.Y.; Hsia, W.C. An Indoor Positioning System Based on the Dual-Channel Passive RFID Technology. IEEE Sens. J. 2018, 18, 4654–4663. [Google Scholar] [CrossRef]

- Bregar, K. Indoor UWB Positioning and Position Tracking Data Set. Sci. Data 2023, 10, 744. [Google Scholar] [CrossRef]

- Shang, S.; Wang, L. Overview of WiFi fingerprinting-based indoor positioning. IET Commun. 2022, 16, 725–733. [Google Scholar] [CrossRef]

- Ma, Z.; Shi, K. Few-Shot learning for WiFi fingerprinting indoor positioning. Sensors 2023, 23, 8458. [Google Scholar] [CrossRef]

- De, B.G.; Quesada-Arencibia, A.; Garcia, C.R.; Rodriguez-Rodriguez, J.C.; Moreno-Diaz, R. A Protocol-Channel-based Indoor Positioning Performance Study for Bluetooth Low Energy. IEEE Access 2018, 6, 33440–33450. [Google Scholar]

- Tsai, C.Y.; Lin, Y.W.; Ku, H.M.; Lee, C.Y. Positioning System of Infrared Sensors Based on ZnO Thin Film. Sensors 2023, 23, 6818. [Google Scholar] [CrossRef]

- Khyam, M.O.; Xinde, L.; Ge, S.S.; Pickering, M.R. Multiple Access Chirp-Based Ultrasonic Positioning. IEEE Trans. Instrum. Meas. 2017, 66, 3126–3137. [Google Scholar] [CrossRef]

- Qin, J.; Li, M.; Li, D.; Zhong, J.; Yang, K. A survey on visual navigation and positioning for autonomous UUVs. Remote Sens. 2022, 14, 3794. [Google Scholar] [CrossRef]

- Lu, C.; Uchiyama, H.; Thomas, D.; Shimada, A.; Taniguchi, R.I. Indoor Positioning System Based on Chest-Mounted IMU. Sensors 2019, 19, 420. [Google Scholar] [CrossRef]

- Sun, M.; Wang, Y.; Joseph, W.; Plets, D. Indoor localization using mind evolutionary algorithm-based geomagnetic positioning and smartphone IMU sensors. IEEE Sens. J. 2022, 22, 7130–7141. [Google Scholar] [CrossRef]

- Kuo, Y.H.; Wu, E.H.K. Intelligent geomagnetic indoor positioning system. Electronics 2023, 12, 2227. [Google Scholar] [CrossRef]

- Uradzinski, M.; Guo, H.; Liu, X.; Yu, M. Advanced indoor positioning using zigbee wireless technology. Wirel. Pers. Commun. 2017, 97, 6509–6518. [Google Scholar] [CrossRef]

- Wang, Y.; Askari, S.; Shkel, A.M. Study on mounting position of IMU for better accuracy of ZUPT-aided pedestrian inertial navigation. In Proceedings of the 2019 IEEE International Symposium on Inertial Sensors and Systems (INERTIAL), Naples, FL, USA, 1–5 April 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–4. [Google Scholar]

- Yang, C.; Shi, W.; Chen, W. Robust M–M unscented Kalman filtering for GPS/IMU navigation. J. Geod. 2019, 93, 1093–1104. [Google Scholar] [CrossRef]

- Chen, J.; Zhou, B.; Bao, S.; Liu, X.; Gu, Z.; Li, L.; Zhao, Y.; Zhu, J.; Li, Q. A data-driven inertial navigation/Bluetooth fusion algorithm for indoor localization. IEEE Sens. J. 2021, 22, 5288–5301. [Google Scholar] [CrossRef]

- Feng, D.; Wang, C.; He, C.; Zhuang, Y.; Xia, X.G. Kalman-filter-based integration of IMU and UWB for high-accuracy indoor positioning and navigation. IEEE Internet Things J. 2020, 7, 3133–3146. [Google Scholar] [CrossRef]

- Xu, X.; Xu, X.; Zhang, T.; Wang, Z. In-motion filter-QUEST alignment for strapdown inertial navigation systems. IEEE Trans. Instrum. Meas. 2018, 67, 1979–1993. [Google Scholar] [CrossRef]

- Eckenhoff, K.; Geneva, P.; Huang, G. MIMC-VINS: A versatile and resilient multi-IMU multi-camera visual-inertial navigation system. IEEE Trans. Robot. 2021, 37, 1360–1380. [Google Scholar] [CrossRef]

- Zhu, C.; Wang, Q.; Xie, Y.; Xu, S. Multiview latent space learning with progressively fine-tuned deep features for unsupervised domain adaptation. Inf. Sci. 2024, 662, 17. [Google Scholar] [CrossRef]

- Zhu, C.; Zhang, L.; Luo, W.; Jiang, G.; Wang, Q. Tensorial multiview low-rank high-order graph learning for context-enhanced domain adaptation. Neural Netw. 2025, 181, 106859. [Google Scholar] [CrossRef]

- Wagstaff, B.; Kelly, J. LSTM-based zero-velocity detection for robust inertial navigation. In Proceedings of the 2018 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Nantes, France, 24–27 September 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–8. [Google Scholar]

- Brossard, M.; Barrau, A.; Bonnabel, S. RINS-W: Robust inertial navigation system on wheels. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macao, China, 4–8 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 2068–2075. [Google Scholar]

- Brossard, M.; Barrau, A.; Bonnabel, S. AI-IMU dead-reckoning. IEEE Trans. Intell. Veh. 2020, 5, 585–595. [Google Scholar] [CrossRef]

- Chen, C.; Lu, X.; Markham, A.; Trigoni, N. Ionet: Learning to cure the curse of drift in inertial odometry. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32. [Google Scholar]

- Chen, C.; Zhao, P.; Lu, C.X.; Wang, W.; Markham, A.; Trigoni, N. Deep-learning-based pedestrian inertial navigation: Methods, data set, and on-device inference. IEEE Internet Things J. 2020, 7, 4431–4441. [Google Scholar] [CrossRef]

- Herath, S.; Yan, H.; Furukawa, Y. Ronin: Robust neural inertial navigation in the wild: Benchmark, evaluations, & new methods. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 3146–3152. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30. [Google Scholar]

- Herath, S.; Caruso, D.; Liu, C.; Chen, Y.; Furukawa, Y. Neural inertial localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6604–6613. [Google Scholar]

- Yilmaz, A.; Temeltas, H. A multi-stage localization framework for accurate and precise docking of autonomous mobile robots (AMRs). Robotica 2024, 42, 1885–1908. [Google Scholar] [CrossRef]

- Zhang, L.; Bao, J.; Xu, Y.; Wang, Q.; Xu, J.; Li, D. From coarse to fine: Two-stage indoor localization with multisensor fusion. Tsinghua Sci. Technol. 2022, 28, 552–565. [Google Scholar] [CrossRef]

- Zhou, H.; Zhang, S.; Peng, J.; Zhang, S.; Li, J.; Xiong, H.; Zhang, W. Informer: Beyond efficient transformer for long sequence time-series forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 11106–11115. [Google Scholar]

- Zeinali, B.; Zanddizari, H.; Chang, M.J. Imunet: Efficient regression architecture for inertial imu navigation and positioning. IEEE Trans. Instrum. Meas. 2024, 73, 1–13. [Google Scholar] [CrossRef]

- Lai, R.; Tian, Y.; Tian, J.; Wang, J.; Li, N.; Jiang, Y. ResMixer: A lightweight residual mixer deep inertial odometry for indoor positioning. IEEE Sens. J. 2024, 24, 30875–30884. [Google Scholar] [CrossRef]

- Liu, W.; Caruso, D.; Ilg, E.; Dong, J.; Mourikis, A.I.; Daniilidis, K.; Kumar, V.; Engel, J. Tlio: Tight learned inertial odometry. IEEE Robot. Autom. Lett. 2020, 5, 5653–5660. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).