1. Introduction

Pine wilt disease (PWD), known for its rapid spread and high mortality rate, poses a significant threat to global pine forest ecosystems and has become one of the major forest diseases [1]. Timely detection and removal of infected trees are essential to prevent rapid disease proliferation, which can lead to extensive ecological degradation and significant economic losses [2]. In recent years, the widespread adoption of unmanned aerial vehicle (UAV) remote sensing and advancements in image resolution have enabled researchers to rapidly acquire high-resolution imagery across extensive forested areas, thereby facilitating the efficient and accurate detection of dead pine wood [3,4].

In this context, deep learning has emerged as a pivotal tool for automatically processing and extracting information from large-scale image datasets, and its application to dead pine wood detection has been extensively explored. Numerous studies have introduced methodological enhancements to address the inherent challenges of remote sensing-based dead pine wood detection. For instance, for UAV-based dead pine wood detection, Bai et al. [5] enhanced the Mamba model with an attention mechanism. Their method combines an SSM-centric Mamba backbone with a Path Aggregation Feature Pyramid Network for multi-scale feature fusion and employs depth-wise separable convolutions to improve convolution efficiency. Similarly, Wang et al. [6] developed a large-scale dead pine wood detection dataset by combining Digital Orthophoto Map and Digital Surface Model information. By leveraging the spectral and terrain features of remote sensing data, their method effectively reduces the misclassification of ambiguous targets. In a related study, Li et al. [7] employed an enhanced Mask R-CNN integrated with a prototype network to support the intelligent identification of individual trees infected by PWD. Furthermore, Cai et al. [8] fused high-resolution UAV RGB imagery with Sentinel-2 multispectral data to develop a YOLOv5-PWD-based model for detecting individual trees in PWD-affected forests, using data augmentation to improve detection performance. In addition, Wang et al. [9] proposed GAN_HRNet_Semi, a semi-supervised semantic segmentation model based on generative adversarial networks that integrates expansion prediction, grid vectorization, and forest bottom mask extraction strategies, providing a novel solution for precise, large-scale monitoring of PWD. Han et al. [10] produced confidence maps using Gaussian kernels, incorporated a multi-scale spatial attention mechanism, and applied a copy–paste augmentation technique to accurately detect dead pine wood caused by PWD in aerial remote sensing imagery. Lastly, You et al. [11] developed a general deep learning-based system that uses RGB UAV orthophotos for dead pine wood detection, enabling precise and efficient disease monitoring.

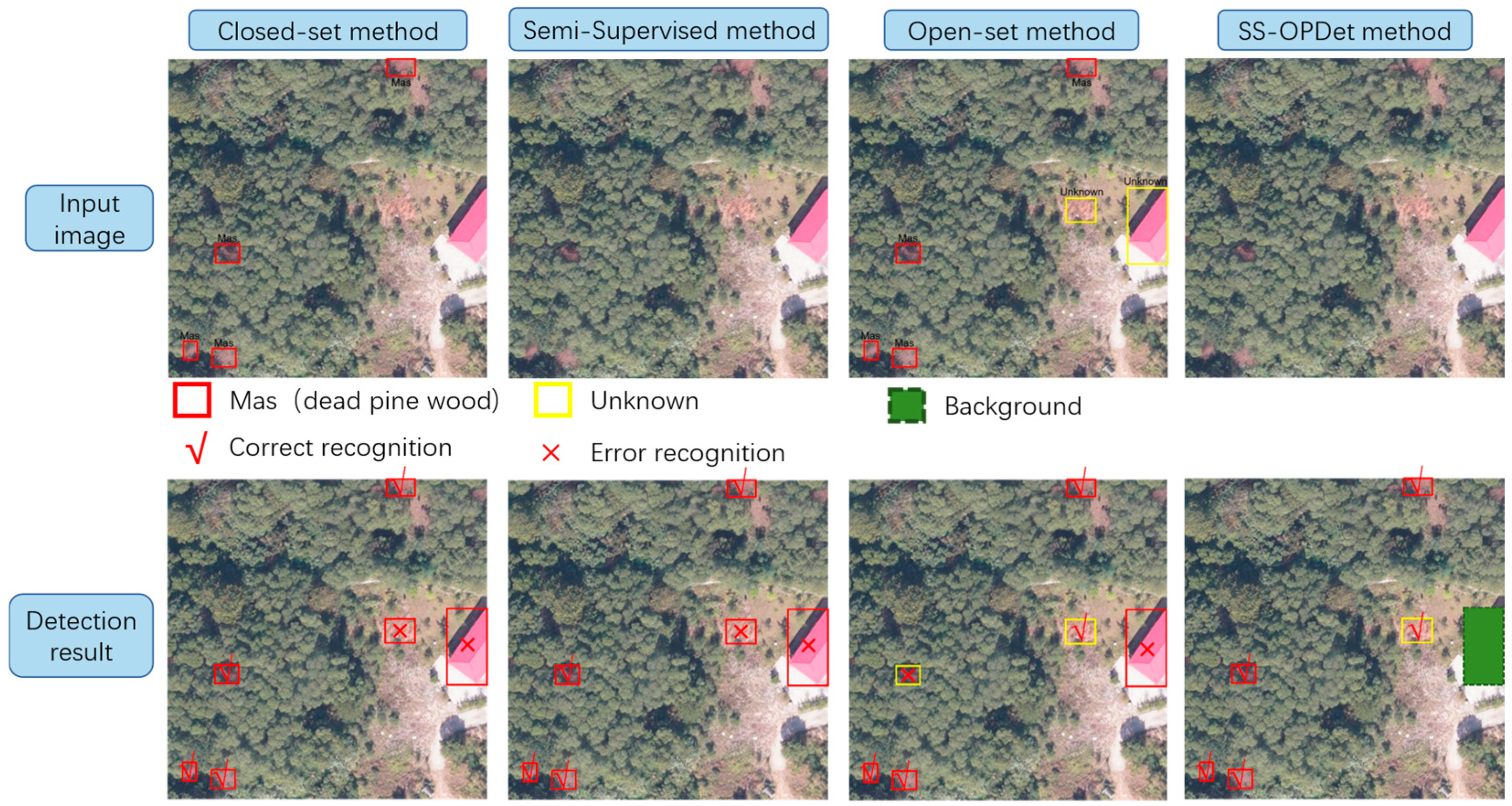

Although modern deep learning methods have significantly improved the accuracy and efficiency of dead pine wood detection, most of them still follow a closed-set object detection paradigm, which assumes that the training and testing sets contain identical target categories. As shown in the first column of Figure 1, the detection results include only the dead pine wood class from the input image. However, in real UAV imagery, unannotated interfering objects, such as loess, buildings, and fallen trees, often appear alongside dead pine wood. Because these objects are never labeled, the model cannot learn the characteristics of unknown categories during training. As a result, it may misclassify unknown objects as dead pine wood or erroneously label actual dead pine wood as background, leading to more false positives and false negatives and substantially degrading detection performance. For example, the red crosses in the red box in the second row, first column of Figure 1 highlight cases where unknown categories, such as loess and buildings, are incorrectly identified as dead pine wood. In addition, training such detectors on UAV imagery requires increasingly large annotated datasets, which places a substantial burden on manual annotation.

To reduce dependence on large volumes of annotated samples, researchers have proposed semi-supervised object detection (SSOD) methods. These methods combine a limited set of labeled data with a large pool of unlabeled data (e.g., images without annotations, as shown in Figure 1, first row, columns 2 and 4) and learn effectively across diverse data distributions through strategies such as pseudo-label generation, self-consistency regularization, and teacher–student frameworks. This paradigm significantly alleviates the manual annotation burden. For example, Huang et al. [12] adopted a two-stage hierarchical semi-supervised learning framework that optimizes the YOLOv7 model via a semi-supervised sample mining strategy, successfully extracting individual trees infected by pine wood nematode. Ben et al. [13] first generated preliminary tree crown training data using LiDAR-based unsupervised detection and then fine-tuned a RetinaNet-based RGB tree crown detector using a small set of high-quality manual annotations, enabling efficient extraction of individual crowns in forest environments. Wang et al. [9] used a GAN-based semi-supervised deep semantic segmentation framework that incorporates expansion prediction, grid vectorization, and forest mask extraction techniques to accurately identify standing trees exhibiting color changes caused by pine wilt disease. In a separate study, Zhao et al. [14] employed a cascade detector with Focal Loss and SmoothL1 Loss, achieving a 1.6% AP improvement over the Combating Noise method. Luo et al. [15] proposed a semi-supervised framework that integrates a channel-prior convolutional attention mechanism and an adaptive thresholding strategy based on a Gaussian mixture model, and applied it to UAV imagery to detect dead pine trees for pine wilt disease prevention and control, providing a low-cost and efficient monitoring solution. Finally, Vennervirta et al. [16] employed a semi-supervised object detection network to identify collapsed dead pine wood. Although the detection rate was relatively low, their findings emphasized the significant influence of site conditions, vegetation characteristics, and image resolution on detectability, providing valuable insights for future improvements in dead wood detection.
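The teacher–student pseudo-labeling loop shared by many SSOD methods can be sketched generically as follows. This is a minimal NumPy illustration of a Mean Teacher-style exponential moving average (EMA) update plus fixed-threshold pseudo-label selection; the function names, the dict-of-arrays stand-in for network weights, and the 0.7 threshold are illustrative assumptions, not the procedure of any specific work cited above.

```python
import numpy as np


def ema_update(teacher, student, momentum=0.999):
    """Mean Teacher-style update: teacher <- m * teacher + (1 - m) * student.

    `teacher` and `student` are dicts mapping parameter names to NumPy arrays,
    a hypothetical stand-in for real detector weights.
    """
    return {
        name: momentum * teacher[name] + (1.0 - momentum) * student[name]
        for name in teacher
    }


def select_pseudo_labels(boxes, scores, threshold=0.7):
    """Keep teacher detections on unlabeled images whose confidence >= threshold.

    boxes: (N, 4) array of [x1, y1, x2, y2]; scores: (N,) array in [0, 1].
    The fixed threshold is an assumed, illustrative value.
    """
    keep = scores >= threshold
    return boxes[keep], scores[keep]


# Toy usage: one EMA step and one round of pseudo-label filtering.
teacher = {"w": np.zeros(3)}
student = {"w": np.ones(3)}
teacher = ema_update(teacher, student, momentum=0.9)  # teacher["w"] is now ~0.1 everywhere

boxes = np.array([[0, 0, 10, 10], [5, 5, 20, 20], [30, 30, 40, 40]], dtype=float)
scores = np.array([0.9, 0.5, 0.75])
kept_boxes, kept_scores = select_pseudo_labels(boxes, scores)  # keeps boxes 0 and 2
```

In this pattern the student is trained on labeled images plus the selected pseudo-labels, while the teacher is updated only through the EMA step rather than by gradients, which tends to make its pseudo-labels more stable over training.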

However, most existing semi-supervised methods still rely on the closed-set assumption, making them prone to misclassifying unknown categories as dead pine wood. For example, as shown by the red cross in the red box in the second row, second column of Figure 1, unknown objects such as loess and buildings are incorrectly identified as dead pine wood. To overcome the limitations of this assumption when dealing with unknown targets, open-set object detection has been introduced [17,18,19,20,21,22,23]. This approach trains exclusively on known categories. As illustrated in the first row, third column of Figure 1, the input image includes both dead pine wood and unknown objects; however, only the known class (dead pine wood) is used for supervision during training. During inference, when the model encounters unseen objects, it classifies them as “unknown” or background. As shown by the red check mark in the red box in the second row, third column of Figure 1, loess is correctly detected as an unknown category. Although this mechanism provides clear benefits in handling visually similar but novel targets, detection errors may still occur. In the second row, third column, for instance, the yellow box with a yellow cross marks dead pine wood misclassified as unknown, and the red box with a red cross marks unknown categories incorrectly detected as dead pine wood. Moreover, current open-set detection methods still rely on large volumes of annotated data to maintain detection performance for known categories, which is a considerable obstacle when imagery must be annotated at scale. Consequently, semi-supervised open-set object detection is gradually becoming a new research focus [24,25,26,27]. Its primary objective is to fully leverage unlabeled data to enhance the model’s learning capacity while introducing effective mechanisms to distinguish unknown categories or treat them as background, thereby reducing false positives and missed detections. As shown in the second row, fourth column of Figure 1, all dead pine wood targets are correctly detected, and the previously misidentified unknown objects are correctly treated as background (green box). Hence, applying semi-supervised open-set object detection to dead pine wood detection is considered essential for improving detection robustness and reducing annotation costs.
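To make the closed-set versus open-set distinction concrete, the toy decision rule below assigns each detection to the known class, “unknown”, or background. It is a deliberately simplified sketch that assumes the detector outputs an objectness score and a single known-class (dead pine wood) confidence; the function name and threshold values are hypothetical, and learned open-set detectors such as those cited above do not necessarily use fixed thresholds.

```python
def open_set_label(known_score, objectness, obj_thresh=0.5, known_thresh=0.6):
    """Toy open-set decision for a single detection.

    known_score: confidence that the box is dead pine wood (the only known class).
    objectness: confidence that the box contains any object at all.
    Both thresholds are illustrative assumptions.
    """
    if objectness < obj_thresh:
        return "background"      # not object-like: suppress
    if known_score >= known_thresh:
        return "dead_pine_wood"  # object-like and confidently known
    return "unknown"             # object-like but unlike the known class


# A closed-set detector lacks the "unknown" branch, so an object-like patch of
# loess can only be reported as dead pine wood or dropped as background.
detections = [(0.92, 0.95), (0.30, 0.90), (0.80, 0.20)]
labels = [open_set_label(k, o) for k, o in detections]
# labels == ["dead_pine_wood", "unknown", "background"]
```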

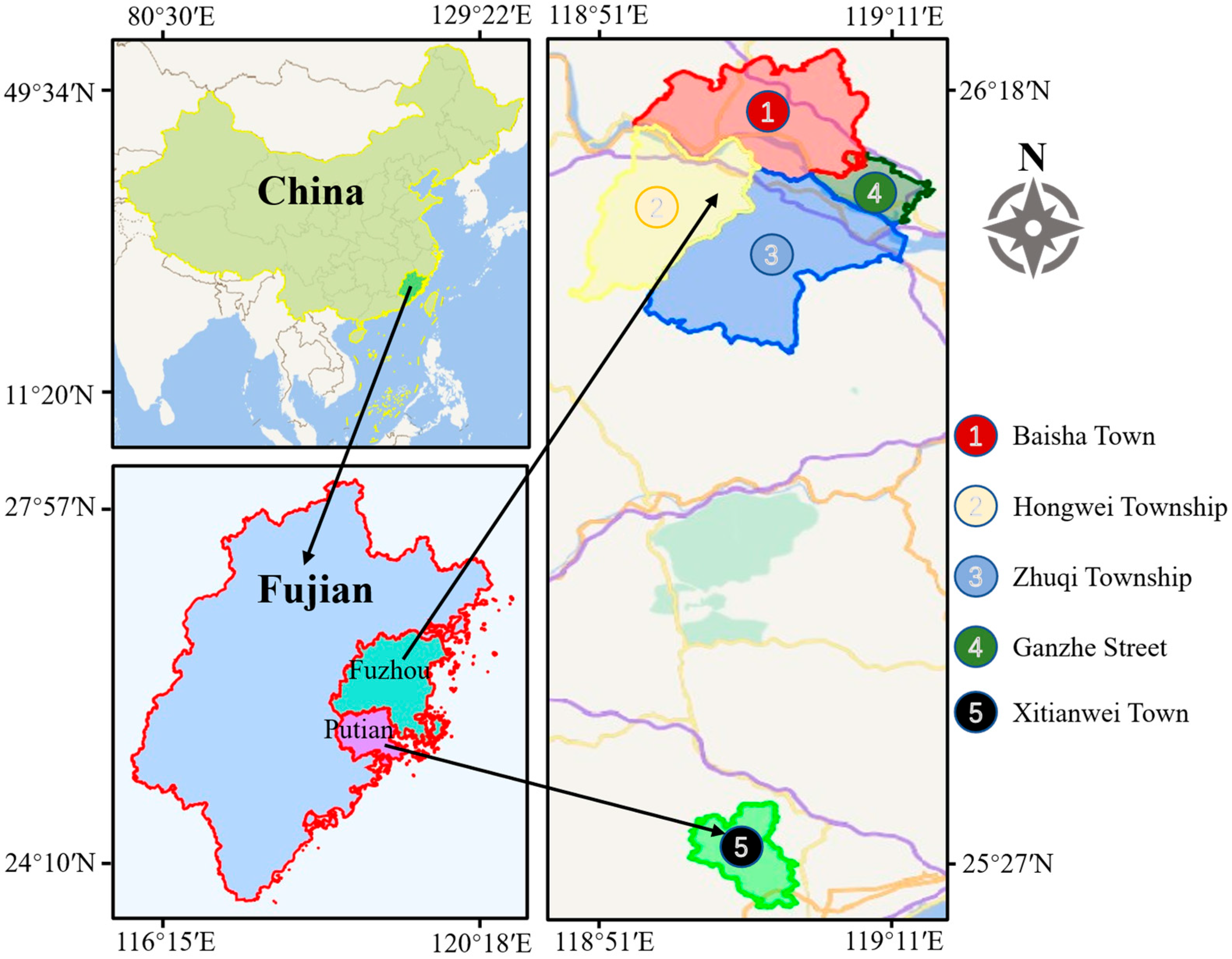

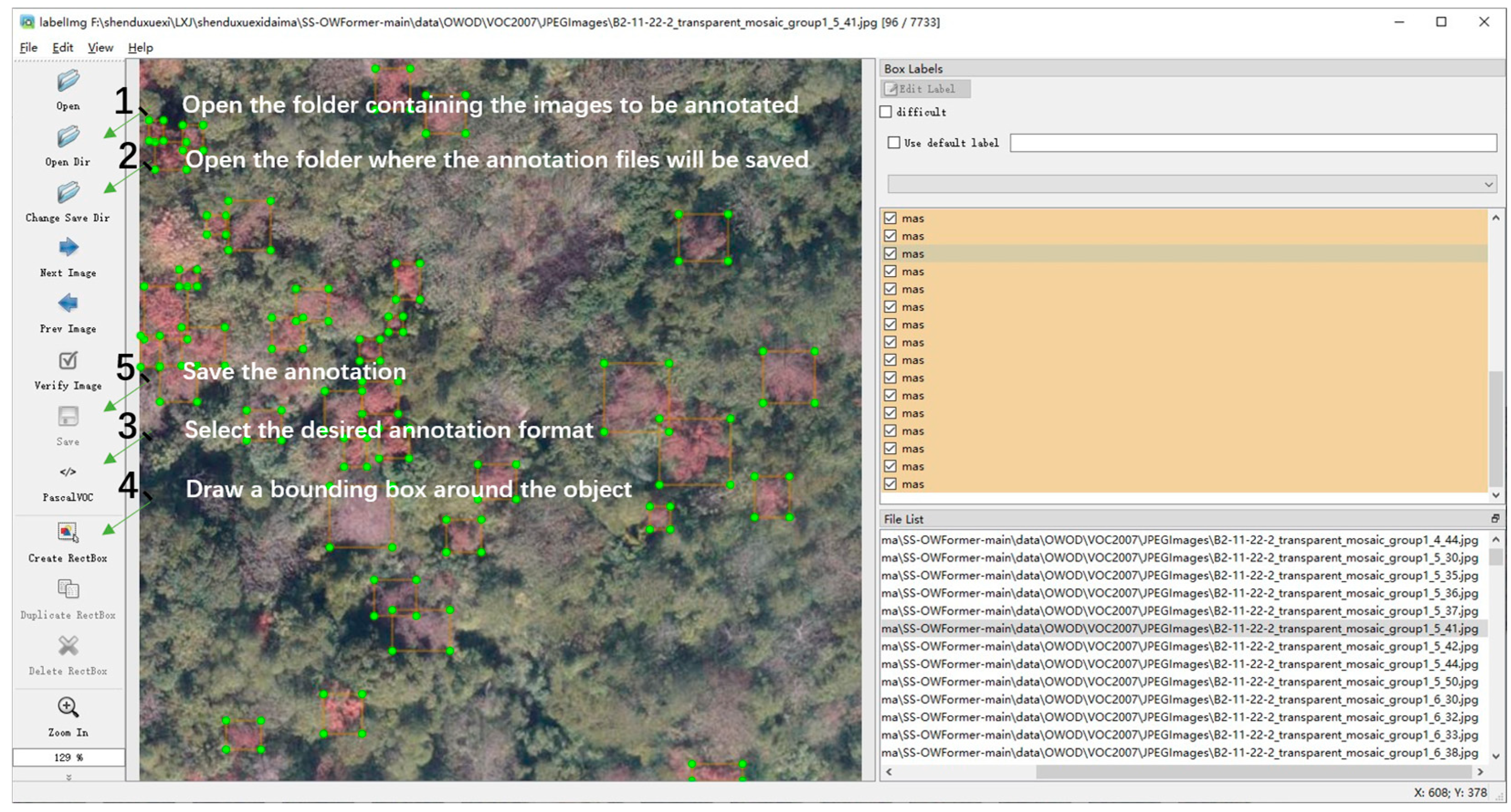

To evaluate the proposed SS-OPDet framework and assess its applicability and robustness in real forest environments, we constructed a large-scale dataset capturing the spatial distribution of dead pine wood. The dataset contains 29,575 dead pine wood samples and 7334 unknown-category samples, collected from multiple regions under diverse environmental conditions. Additionally, detailed cross-region experiments were conducted to mitigate spatial autocorrelation and validate the generalization capability of the model. Our main contributions are as follows:

- (1)

First Application of Semi-Supervised Open-Set Detection to Dead Pine Wood Recognition: Conventional methods based on closed-set assumptions or semi-supervised strategies cannot effectively handle unknown interfering targets. Using only half of the annotated data and introducing an “unknown” (or background) classification strategy, our approach effectively excludes novel, unseen categories, thereby significantly reducing both false positives and false negatives.

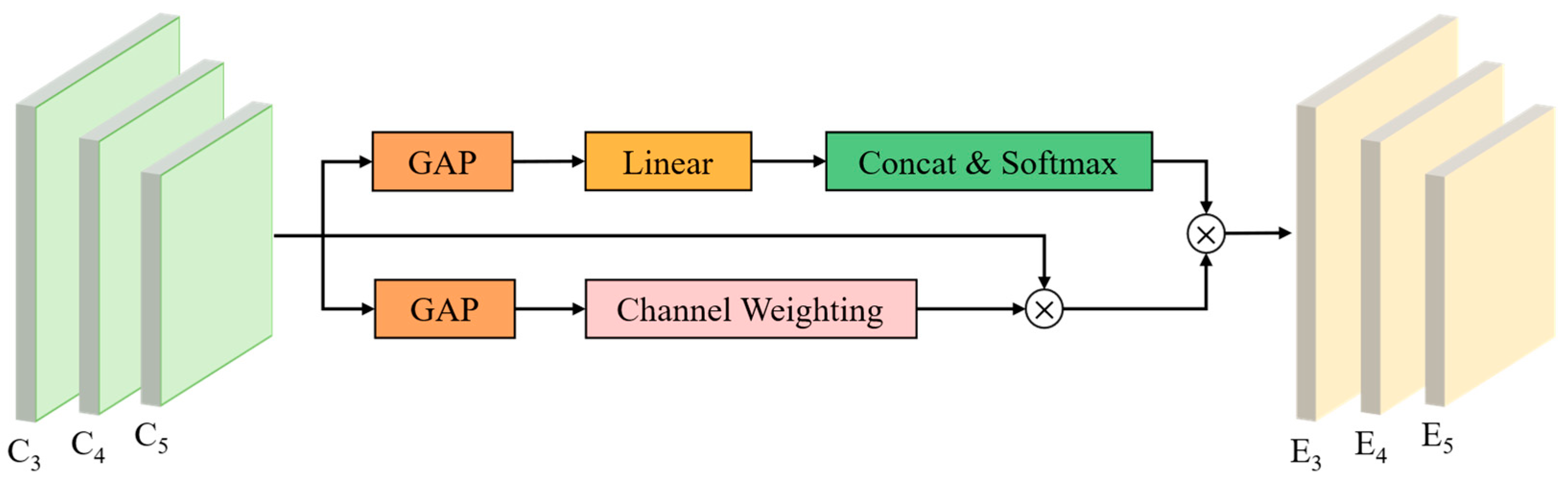

- (2)

Introduction of Two Novel Components, the Weighted Multi-scale Feature Fusion (WMFF) Module and the Dynamic Confidence Pseudo-Label Generation (DCPL) Strategy: The WMFF module performs weighted fusion of multi-scale feature maps to highlight the most discriminative features for dead pine wood detection. Meanwhile, the DCPL strategy divides predicted bounding boxes into high, medium, and low confidence levels, thereby improving pseudo-label quality and reducing label noise.

- (3)

Cross-Regional Validation and Adaptation Testing: Cross-regional experiments were conducted to evaluate the adaptability and generalization capability of SS-OPDet under varying forest conditions. With each of five distinct regions designated in turn as the test set, the proposed method consistently achieves strong detection performance across diverse environments, providing robust technical support for early intervention against pine wilt disease and for related detection applications.
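The weighted fusion idea behind WMFF can be illustrated with the minimal NumPy sketch below. It follows the fast normalized fusion scheme popularized by BiFPN: each input scale receives a non-negative scalar weight, the weights are normalized to sum to approximately one, and the resized maps are summed. Nearest-neighbour upsampling, the requirement that map sizes divide the largest map evenly, and the function names are our assumptions; the actual WMFF module may differ.

```python
import numpy as np


def upsample_nearest(x, out_h, out_w):
    """Nearest-neighbour resize of a (C, H, W) map by integer repetition.

    Assumes out_h and out_w are integer multiples of H and W.
    """
    _, h, w = x.shape
    return x.repeat(out_h // h, axis=1).repeat(out_w // w, axis=2)


def weighted_fusion(features, raw_weights, eps=1e-4):
    """Fuse multi-scale (C, H_i, W_i) maps with normalized non-negative weights.

    raw_weights stands in for one learnable scalar per input scale.
    """
    out_h = max(f.shape[1] for f in features)
    out_w = max(f.shape[2] for f in features)
    w = np.maximum(np.asarray(raw_weights, dtype=float), 0.0)  # ReLU keeps weights >= 0
    w = w / (w.sum() + eps)                                    # weights now sum to ~1
    resized = [upsample_nearest(f, out_h, out_w) for f in features]
    return sum(wi * fi for wi, fi in zip(w, resized))


# Two scales: a fine 4x4 map and a coarse 2x2 map, each with 2 channels.
fine = np.ones((2, 4, 4))
coarse = 2.0 * np.ones((2, 2, 2))
fused = weighted_fusion([fine, coarse], raw_weights=[1.0, 3.0], eps=0.0)
# Normalized weights are [0.25, 0.75], so every fused value is 0.25*1 + 0.75*2 = 1.75
```

Because the weights are learned, training can shift emphasis toward whichever scale best separates small dead crowns from the surrounding canopy; the small `eps` only guards against division by zero when all raw weights are clipped to zero.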

3. Results

3.1. Overall Performance Comparison

In this study, SS-OPDet exhibited superior performance in the task of dead pine wood detection. To verify the effectiveness of SS-OPDet, we compared it with several state-of-the-art methods, including OW-DETR [32] and SS-OWFormer. This experimental setup was designed to comprehensively evaluate the performance advantages of SS-OPDet in dead pine wood detection tasks.

In addition, SS-OPDet was further compared with recent fully supervised open-set detection methods, including OpenDet and Grounding DINO, to evaluate its performance under open-set conditions.

3.1.1. Quantitative Analysis

As shown in Table 1, SS-OPDet outperforms all other semi-supervised open-set detection methods across all evaluation metrics. Specifically, SS-OPDet achieves a known-class average precision (AP) of 84.73%, which is 2.29 percentage points higher than SS-OWFormer’s 82.44% and 3.39 percentage points higher than OW-DETR’s 81.34%, demonstrating a clear advantage in detecting known dead pine wood targets. Moreover, SS-OPDet attains a known-class recall of 94.48%, 1.67 percentage points higher than SS-OWFormer’s 92.81%, confirming its superior ability to retrieve known targets. In terms of open-set robustness, SS-OPDet achieves the lowest AOSE of 271, compared with 305 for SS-OWFormer and 433 for OW-DETR, demonstrating a stronger capability to exclude unknown targets and avoid false activations. Furthermore, SS-OPDet attains the lowest WI of 0.0917%, compared with 0.0958% for SS-OWFormer and 0.2131% for OW-DETR. These results confirm that SS-OPDet not only improves detection of known categories but also excels at suppressing the misclassification of unknown and confusing background objects (e.g., loess, buildings) as dead pine wood. In summary, SS-OPDet delivers more balanced and robust performance than the other semi-supervised open-set detection baselines, especially under complex forest conditions.
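For readers less familiar with the open-set metrics in Table 1, the sketch below computes them as they are commonly defined in the open-world detection literature: A-OSE counts unknown objects that are detected as a known class, and wilderness impact is WI = P_K / P_{K∪U} − 1, the relative drop in known-class precision when unknown objects are present (usually measured at a fixed recall level). The function names and toy inputs are illustrative; the exact evaluation protocol behind Table 1 is not reproduced here.

```python
def wilderness_impact(precision_known_only, precision_with_unknowns):
    """WI = P_K / P_{K+U} - 1.

    precision_known_only: known-class precision when evaluated on known objects only.
    precision_with_unknowns: known-class precision when unknown objects are also present.
    """
    return precision_known_only / precision_with_unknowns - 1.0


def absolute_open_set_error(labels_on_unknown_gt, known_classes):
    """A-OSE: number of detections matched to unknown ground-truth objects
    (e.g. loess, buildings) that were assigned a known class label."""
    return sum(1 for label in labels_on_unknown_gt if label in known_classes)


def pp_gain(new, old):
    """Difference in percentage points between two metrics given in percent."""
    return round(new - old, 2)


# Toy inputs (not the paper's data).
wi = wilderness_impact(0.90, 0.80)  # precision falls from 0.90 to 0.80, so WI is about 0.125
aose = absolute_open_set_error(
    ["dead_pine_wood", "unknown", "dead_pine_wood", "unknown"], {"dead_pine_wood"}
)  # two unknown objects mislabeled as dead pine wood -> 2

# Gaps between the known-class AP values reported above.
gap_vs_ss_owformer = pp_gain(84.73, 82.44)  # 2.29
gap_vs_ow_detr = pp_gain(84.73, 81.34)      # 3.39
```

A WI of zero means unknown objects cause no loss of known-class precision; Table 1 reports WI as a percentage.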

In addition, we compare SS-OPDet with two recent and representative open-set object detection methods, OpenDet and Grounding DINO. These models operate under full supervision (100% labeled data) and achieve higher known-class AP (86.48% and 87.35%, respectively). However, they exhibit markedly higher AOSE (386 and 352) and WI (2.6406% and 2.2875%), indicating a reduced ability to reject unknown targets and a greater tendency to misclassify background objects. In contrast, SS-OPDet, trained with only 50% labeled data, achieves the lowest AOSE (271) and the lowest WI (0.0917%) among all tested models. This highlights the open-set robustness and noise suppression capability of SS-OPDet in complex real-world scenarios where both label efficiency and unknown rejection are critical.

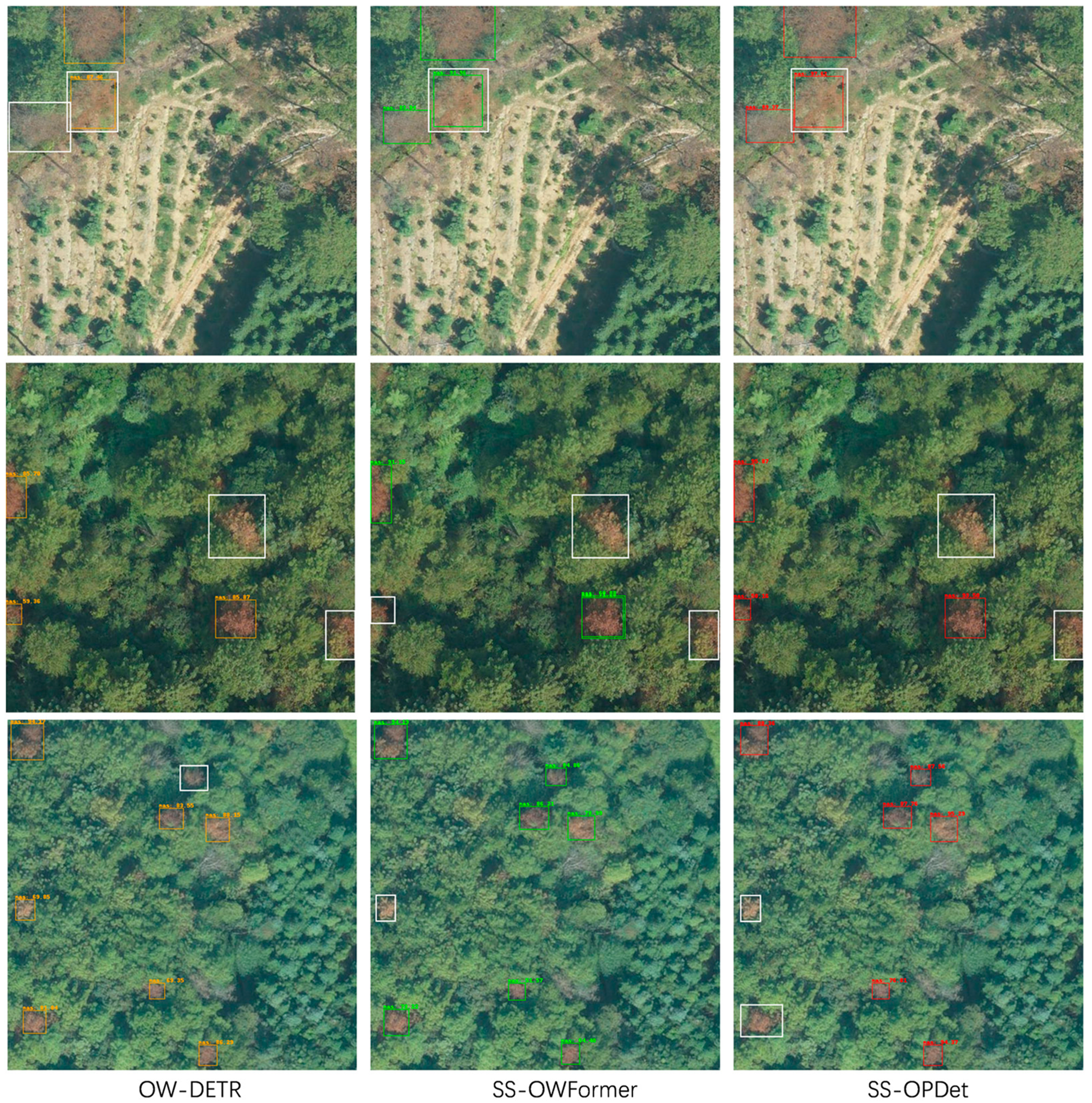

3.1.2. Qualitative Analysis

Figure 8 illustrates a visual comparison of dead pine wood detection results from OW-DETR, SS-OWFormer, and SS-OPDet across five representative scenes. Orange boxes represent the detections of OW-DETR, green boxes those of SS-OWFormer, and bright red boxes those of SS-OPDet. Each row corresponds to a distinct scene, with the left, middle, and right columns displaying the outputs of OW-DETR, SS-OWFormer, and SS-OPDet, respectively. OW-DETR (orange, left column) and SS-OWFormer (green, middle column) both identify some dead pine wood targets, yet both exhibit noticeable missed detections and false positives in multiple scenes. In contrast, SS-OPDet (red, right column) produces more complete and accurate detections across all five scenes, with few missed detections. We attribute this improvement to its design for semi-supervised open-set detection, which integrates two key components: the WMFF module for adaptive multi-scale feature fusion and the DCPL strategy for robust pseudo-label generation. Working together, these components allow SS-OPDet to exploit limited labeled data and abundant unlabeled data to exclude unknown categories and precisely detect known targets, yielding better detection performance across diverse scenes than the other methods.

3.1.3. Failure Case Analysis

Figure 9 illustrates representative failure cases from three distinct scenes for OW-DETR (left column), SS-OWFormer (middle column), and SS-OPDet (right column). Orange boxes represent the detections of OW-DETR, green boxes those of SS-OWFormer, and bright red boxes those of SS-OPDet. White bounding boxes highlight regions where all three methods make detection errors. All models occasionally miss small or partially occluded dead pine wood targets, and unknown objects are sometimes incorrectly detected as dead pine wood because of their high visual similarity. While SS-OPDet generally demonstrates superior robustness and precision, these failure cases indicate that it still faces challenges under complex conditions. Future work may focus on enhancing sensitivity to small objects, incorporating contextual information, and refining open-set rejection mechanisms to further improve detection reliability.

3.2. Ablation Experiments

To systematically evaluate the contribution of each component of SS-OPDet to its overall performance, we conducted a series of ablation experiments on the dead pine wood dataset. The corresponding results are summarized in Table 2. As additional modules are integrated, SS-OPDet exhibits consistent improvements across all evaluation metrics.

Specifically, introducing only the WMFF module raises the known-class AP from 82.44% (achieved by SS-OWFormer without WMFF) to 83.41% and the known-class recall from 92.81% to 93.16%. Meanwhile, AOSE drops from 305 to 286, indicating that the WMFF module enhances the model’s ability to capture fine-grained features of dead pine wood. This suggests that adaptive weighted fusion of multi-scale features improves the extraction of detailed target information, particularly against complex forest backgrounds, thereby reducing missed detections and false positives. When only the DCPL strategy is applied, the known-class AP increases to 83.34%, recall improves to 93.20%, and AOSE decreases to 282. These results show that DCPL, by dynamically adjusting the pseudo-label generation process, mitigates the influence of noisy pseudo-labels and enhances the model’s robustness and stability. In complex scenarios, DCPL facilitates accurate filtering of pseudo-labels, preventing irrelevant objects from being mislabeled as dead pine wood and thereby improving detection outcomes. Finally, when WMFF and DCPL are used together, SS-OPDet’s known-class AP reaches 84.73%, recall reaches 94.48%, AOSE drops to 271, and WI drops to 0.0917%. These results indicate that combining WMFF and DCPL not only enhances fine-grained feature extraction but also improves pseudo-label quality and noise suppression. The WMFF module reinforces multi-scale feature fusion to localize dead pine wood targets more precisely, while the DCPL strategy optimizes pseudo-label generation by improving the quality of medium-confidence pseudo-labels. Together, these components enable the model to detect known targets efficiently and reject unknown ones accurately under open-set conditions, resulting in the best detection performance among the tested configurations.

Figure 10 presents detection results across four distinct scenes for different configurations of SS-OPDet. In this figure, green boxes represent the detections of SS-OWFormer, purple boxes represent SS-OPDet with only the WMFF module, blue boxes represent SS-OPDet with only the DCPL strategy, and bright red boxes represent the full SS-OPDet configuration (with both WMFF and DCPL). Each row denotes a distinct scene, and the columns from left to right show these four configurations in the same order. SS-OWFormer, though able to detect some dead pine wood targets, suffers from significant missed detections and false positives in all scenes, reflecting its limited ability to handle multi-scale targets and suppress unknown interference. When only the WMFF module is applied (second column), the model leverages adaptive multi-scale feature fusion to better capture fine-grained details, improving detection in some scenes, although certain targets are still missed. With only the DCPL strategy applied (third column), the model filters predictions by confidence, discarding low-confidence noisy predictions and retaining certain medium-confidence true positives. This configuration partially alleviates missed detections but still struggles with very large or very small targets. In contrast, with both WMFF and DCPL integrated (right column, SS-OPDet), the model detects all dead pine wood targets in every scene with few false positives. We attribute this to the efficient multi-scale feature fusion provided by the WMFF module and the dynamic pseudo-label filtering introduced by the DCPL strategy. The synergy between the two components enables the model to filter out unknown distractors while maintaining focus on known targets, yielding the best detection performance across all scenes.
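The three-tier filtering behaviour described for DCPL can be sketched as below. This is a hypothetical illustration, not the authors' implementation: we assume that the “dynamic” thresholds are recomputed per batch as score quantiles (the 30th and 70th percentiles here), that high-confidence boxes become pseudo-labels with full weight, that medium-confidence boxes are retained with a loss weight equal to their score, and that low-confidence boxes are discarded.

```python
import numpy as np


def split_by_confidence(scores, low_q=0.3, high_q=0.7):
    """Partition detections into high / medium / low confidence tiers.

    Thresholds are recomputed from the current batch's score distribution
    (a hypothetical quantile choice), so they adapt as the teacher improves.
    """
    scores = np.asarray(scores, dtype=float)
    t_low, t_high = np.quantile(scores, [low_q, high_q])
    high = scores >= t_high
    low = scores < t_low
    medium = ~high & ~low
    return high, medium, low


def pseudo_label_weights(scores, low_q=0.3, high_q=0.7):
    """Per-box loss weights: 1 for high, the score itself for medium, 0 (dropped) for low."""
    scores = np.asarray(scores, dtype=float)
    high, medium, _ = split_by_confidence(scores, low_q, high_q)
    return np.where(high, 1.0, np.where(medium, scores, 0.0))


scores = [0.10, 0.20, 0.30, 0.40, 0.50, 0.60, 0.70, 0.80, 0.90, 0.95]
high, medium, low = split_by_confidence(scores)
weights = pseudo_label_weights(scores)
# Tier sizes are (3, 4, 3); weights are [0, 0, 0, 0.4, 0.5, 0.6, 0.7, 1, 1, 1]
```

One caveat of pure quantile thresholds is that some boxes always land in the low tier, even when every prediction in a batch is correct; pairing the quantiles with absolute floors or ceilings would be a natural refinement of this sketch.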

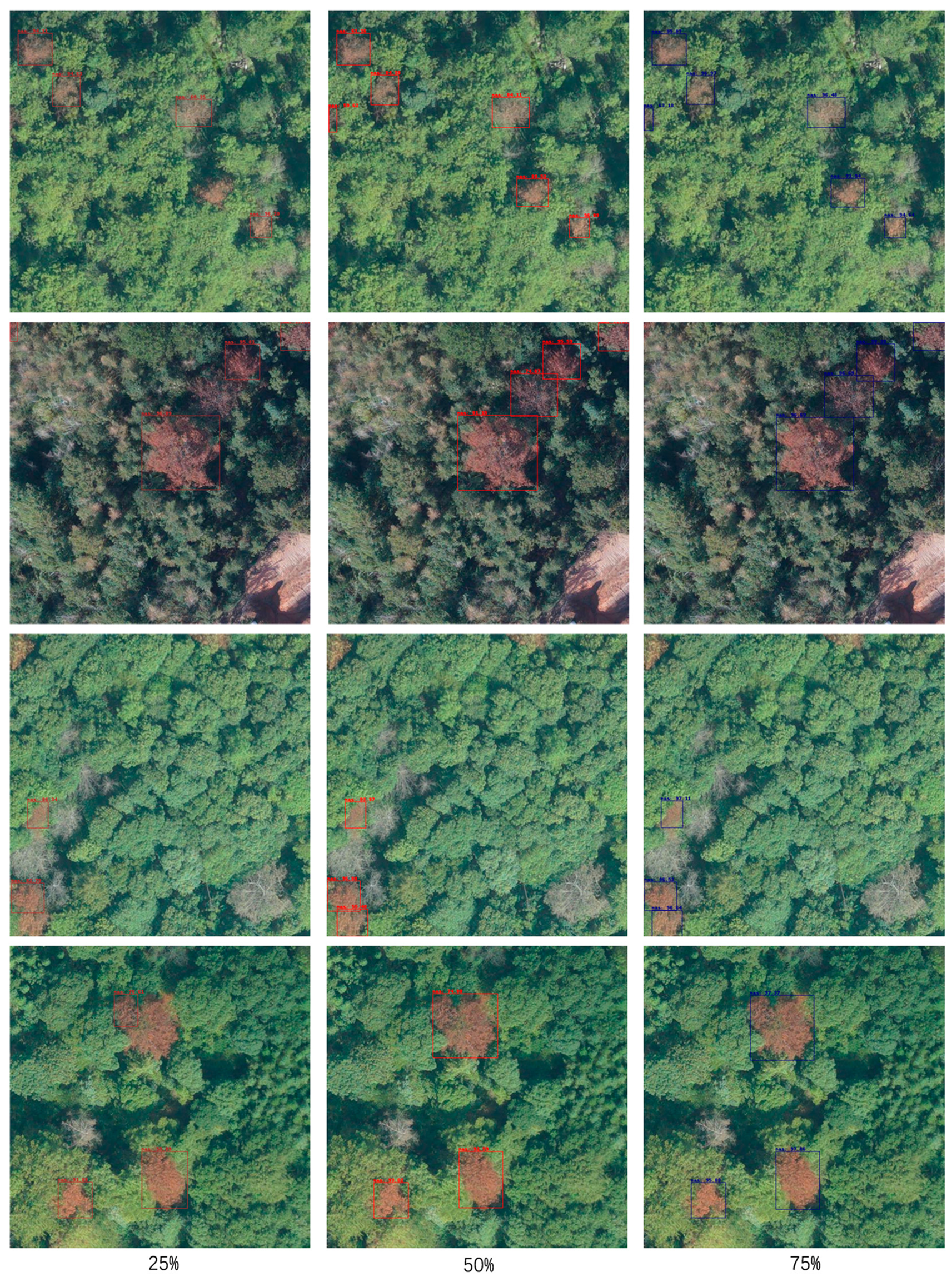

3.3. Experiments on Different Annotation Ratios

To further explore the potential of the semi-supervised learning strategy in reducing manual annotation effort, we conducted experiments using different annotation ratios. Specifically, we trained SS-OPDet using 75%, 50%, and 25% of the available annotated data, simulating practical scenarios where high annotation cost or data collection challenges limit the availability of labeled samples. It should be noted that in the earlier overall performance comparison experiments, we used 50% of the annotated data. This ratio was selected as it provides sufficient supervision for training while leveraging a substantial amount of unlabeled data, thereby enabling cost-effective detection without significant performance degradation.
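A labeled/unlabeled split at a given annotation ratio can be produced as sketched below. The text does not state whether sampling was done per image or per instance, so per-image sampling, the seed, and the helper name are assumptions. Shuffling once with a fixed seed and taking prefixes makes the splits nested (the 25% labeled subset is contained in the 50% subset, which is contained in the 75% subset), which keeps comparisons across ratios controlled.

```python
import random


def split_labeled_unlabeled(image_ids, labeled_ratio, seed=0):
    """Keep annotations for `labeled_ratio` of images; the rest become unlabeled.

    The same seed yields the same shuffled order, so smaller labeled sets are
    prefixes (subsets) of larger ones.
    """
    ids = sorted(image_ids)          # canonical order before shuffling
    random.Random(seed).shuffle(ids)
    n_labeled = round(labeled_ratio * len(ids))
    return ids[:n_labeled], ids[n_labeled:]


image_ids = [f"img_{i:03d}" for i in range(100)]
splits = {r: split_labeled_unlabeled(image_ids, r) for r in (0.75, 0.50, 0.25)}
# len(splits[0.50][0]) == 50, and the 25% labeled set is a subset of the 50% set
```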

As shown in Table 3, when 75% of the data is annotated, SS-OPDet achieves a known-class average precision (AP) of 87.42%, a known-class recall of 95.63%, and an AOSE of 250, the best detection performance among the three annotation ratios. Reducing the annotation ratio to 50% yields a known-class AP of 84.73%, a recall of 94.48%, and an AOSE of 271. Although performance drops slightly compared with the 75% setting, the overall detection capability remains relatively stable. When the annotation ratio is further reduced to 25%, the known-class AP decreases to 83.89%, recall to 93.43%, and AOSE increases to 278, showing that under low-annotation conditions the model’s performance declines somewhat but remains relatively satisfactory.

Overall, SS-OPDet maintains strong detection performance across varying annotation ratios and effectively utilizes unlabeled data to compensate for limited supervision. This significantly reduces the requirement for extensive manual labeling, offering an efficient and practical solution for large-scale forest monitoring in situations where data annotation is challenging. These findings also validate the use of a 50% annotation ratio as a representative setting, as it achieves a favorable balance between detection performance and annotation cost.

Figure 11 provides a visual comparison of detection results across four scenes under the three annotation ratios (75%, 50%, and 25%), for a total of 12 images (each row corresponds to a scene and each column to an annotation ratio). Dark red boxes represent detections under the 25% annotation ratio, bright red boxes under 50%, and dark blue boxes under 75%. At the 75% annotation ratio (third column), the model accurately detects all dead pine wood targets in each scene, demonstrating strong recognition of the known class when sufficient labeled data is available. With 50% annotation (second column), the model still detects all targets, with only a slight decline in overall detection performance. However, when the annotation ratio is reduced to 25% (first column), missed detections increase noticeably and overall detection performance suffers. Based on these observations, the 50% annotation ratio was selected as the representative setting for our experiments, as it achieves a favorable balance between detection performance and annotation cost.

3.4. Cross-Region Testing Experiments

To assess the generalization ability of SS-OPDet across different geographic regions and imaging conditions, a series of cross-region testing experiments were designed. The entire dataset was divided into five non-overlapping subsets based on geographical location, with each region containing images acquired under diverse conditions. This partitioning ensured both diversity and representativeness of the dataset, providing comprehensive test scenarios to evaluate the model’s adaptability to varying environmental conditions.

In these experiments, a leave-one-region-out cross-validation strategy was adopted, wherein each region was sequentially used as the test set while the remaining regions served as training and validation data. This approach effectively mitigates the influence of intra-region spatial autocorrelation, ensuring that the model is evaluated on entirely unseen regional data. Each training set included diverse environmental characteristics, while the corresponding test set represented real-world conditions in an unseen region, enabling a thorough assessment of SS-OPDet’s robustness and detection performance.
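The leave-one-region-out protocol can be expressed as a simple fold generator. The region names below are the five regions listed in Table 4; the sample list and function name are hypothetical, and carving a validation subset out of the training regions is omitted for brevity.

```python
from collections import defaultdict


def leave_one_region_out(samples):
    """Yield (test_region, train_ids, test_ids) for each region.

    samples: iterable of (image_id, region) pairs. Every image of the held-out
    region goes to the test fold, so no test image shares a region with training.
    """
    by_region = defaultdict(list)
    for image_id, region in samples:
        by_region[region].append(image_id)
    for test_region in sorted(by_region):
        test_ids = by_region[test_region]
        train_ids = [i for r, ids in by_region.items() if r != test_region for i in ids]
        yield test_region, train_ids, test_ids


# Hypothetical toy samples covering the five regions.
samples = [("a", "Putian"), ("b", "Baisha"), ("c", "Hongwei"),
           ("d", "Ganzhe"), ("e", "Zhuqi"), ("f", "Putian")]
folds = list(leave_one_region_out(samples))
# 5 folds; the Putian fold tests on ["a", "f"] and trains on the other four images
```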

We focused on known-class average precision (AP) and known-class recall as the primary evaluation metrics to examine the model’s ability to detect known targets and reject unknown ones. Because the number of unknown objects varies considerably across regions, AOSE was excluded from this evaluation, as it would lead to inconsistent comparisons.

Table 4 summarizes the detailed results of the cross-region evaluation. Notably, in the “Putian” region, SS-OPDet achieved a known-class AP of 76.13% and a known-class recall of 87.03%, lower than in the other regions (in the “Baisha,” “Hongwei,” “Ganzhe,” and “Zhuqi” regions, the known-class AP ranged from 84.81% to 87.64% and recall from 95.11% to 97.22%). This performance gap is likely attributable to the higher density of dead pine wood targets in the Putian region, more challenging acquisition conditions, and a greater repetition of similar scenes, which make it more difficult for the model to capture sufficiently distinctive target features.

Furthermore, averaged across all five regions, the known-class AP and recall were 84.27% and 94.46%, respectively. These values are highly consistent with the performance on the mixed (pooled) test set, where SS-OPDet achieved a known-class AP of 84.73%, a recall of 94.48%, and an AOSE of 271. This consistency further supports the applicability of SS-OPDet in real-world forest environments and highlights its ability to maintain high detection accuracy under diverse environmental conditions.
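The five-region averages can be cross-checked against the per-region figures reported above. Writing AP_K^{(r)} for the known-class AP of region r (our notation, not the paper's), the reported mean and the Putian values imply the mean of the remaining four regions:

$$
\overline{\mathrm{AP}}_K = \frac{1}{5}\sum_{r=1}^{5}\mathrm{AP}_K^{(r)} = 84.27\%
\;\Rightarrow\;
\frac{5 \times 84.27 - 76.13}{4} = \frac{345.22}{4} \approx 86.3\%,
\qquad
\frac{5 \times 94.46 - 87.03}{4} = \frac{385.27}{4} \approx 96.3\%
$$

Both implied four-region means (AP on the left, recall on the right) fall inside the reported ranges of 84.81–87.64% and 95.11–97.22%, so the averages are arithmetically consistent with the per-region results, and they show that the single Putian region lowers the five-region mean AP by roughly two percentage points.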

4. Discussion

The experimental results above confirm that SS-OPDet significantly outperforms existing methods and effectively addresses the challenges of semi-supervised open-set dead pine wood detection. In the overall performance comparison, SS-OPDet achieved higher known-class AP and recall than both OW-DETR and SS-OWFormer, together with lower AOSE and WI, indicating its superior ability to suppress unknown interference and reduce missed detections. The qualitative and quantitative comparisons show that the new modules we introduced (WMFF and DCPL) play a crucial role in these improvements: the WMFF module enhances the model’s ability to detect targets of varying sizes by fusing multi-scale features, while the DCPL strategy improves training by filtering out low-confidence pseudo-labels. The ablation study further confirms that each module contributes independently: either module alone yields notable improvements in detection accuracy, while their combination produces a synergistic effect that enables SS-OPDet to surpass the baseline models by a clear margin.

In addition, the experiments with different annotation ratios highlight the robustness and practicality of the semi-supervised approach employed in SS-OPDet. Even with only 25% of the data annotated, the model maintained relatively high known-class AP and recall (83.89% and 93.43%), with only modest degradation compared with the 50% and 75% annotation settings. These results demonstrate that SS-OPDet can effectively leverage unlabeled data to compensate for limited labeled samples, a desirable property in real-world scenarios where annotations are expensive or difficult to obtain. This underscores the advantage of the proposed semi-supervised strategy in significantly reducing manual labeling effort without severely compromising detection performance.

The cross-region testing experiments further demonstrate the strong generalization ability of SS-OPDet. The model maintained high detection accuracy across geographically distinct regions with different environmental conditions, and the average performance in cross-region tests closely matched that on the mixed dataset. This consistency indicates that SS-OPDet generalizes well to previously unseen regions, which is critical for practical deployment in large-scale forest monitoring. Although performance in one particularly challenging region (Putian) was somewhat lower due to dense targets and difficult imaging conditions, the method still achieved reasonably good results there, and such findings provide insights into potential areas for future improvement (e.g., handling very dense target scenarios). Overall, these results demonstrate that SS-OPDet effectively meets its design goals: it significantly improves detection of dead pine wood under open-set conditions and limited supervision, reducing false positives and missed detections in complex real-world environments.