Abstract

Welding robots are essential in modern manufacturing, providing high precision and efficiency in welding processes. To optimize their performance and minimize errors, their behavior must be simulated accurately. This paper presents a novel approach to enhance the simulation of welding robots using the Virtual, Augmented, and Mixed Reality (VAM) simulation platform. The VAM platform offers a dynamic and versatile environment for detailed and realistic representation of welding robot actions, interactions, and responses. By integrating VAM with existing simulation techniques, we aim to improve the fidelity and realism of the simulations. Furthermore, to accelerate the learning and optimization of the welding robot's behavior, we incorporate deep reinforcement learning (DRL) techniques. Specifically, DRL is used for task offloading and trajectory planning, allowing the robot to make intelligent decisions in real time. This integration not only improves the simulation's accuracy but also increases the robot's operational efficiency in smart manufacturing environments. Our approach demonstrates the potential of combining advanced simulation platforms with machine learning to advance the capabilities of industrial robots. In addition, experimental results show that an adaptive neuro-fuzzy inference system (ANFIS) used for inverse kinematics achieves higher accuracy and faster convergence than traditional control strategies such as PID and fuzzy logic control (FLC).

Keywords:

arc welding robot; VAM; digital twins; Unity; AR; simulation; motion planning; reinforcement learning

1. Introduction

In industrial manufacturing, effective human–robot collaboration [1] depends on Internet of Things (IoT) systems that reliably monitor human operators, even in the presence of the occlusions commonly found in robotic workcells. As the manufacturing sector advances toward greater intelligence and adaptability, industrial robots [2] have seen significant improvements in both their design and real-world application [3]. These improvements give them considerable flexibility while maintaining a high level of automation. These versatile machines are now widely used in domains such as welding, grinding, handling, and painting, as well as in specialized scenarios such as assembly [2,4].

With the advent of the new generation of Industrial Internet of Things technology [5,6], ubiquitous perception has become a key driver of advances in welding processes [7,8]. It plays a pivotal role in the exploration and implementation of innovative welding systems characterized by digitalization and automation, enabling welding equipment and processes to operate seamlessly across three-dimensional space and time with multi-dimensional perception and transparency [9]. Such capabilities are essential for improving product quality and production efficiency.

In recent years, welding robots [10,11,12] have become indispensable in modern manufacturing, changing the way welding processes are executed. These autonomous machines offer high efficiency, precision, and repeatability, enabling manufacturers to meet high production demands while ensuring consistent weld quality. As the demand for automated welding systems grows, so does the need for robust and accurate simulation techniques that optimize the performance of these robotic systems and minimize potential errors and costly rework [9,13].

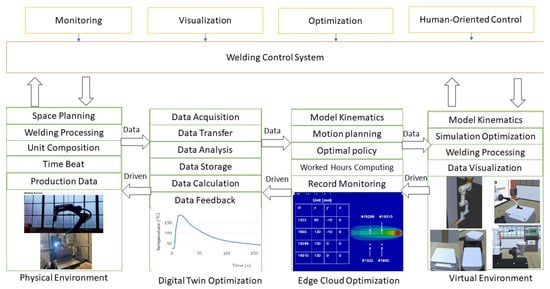

The current method of programming robotic welding relies primarily on teach-in programming. For complex weldments with many welds, however, this approach is time-consuming and makes it difficult to reach an optimal welding process. Because teach-in programming is manual, it lacks a comprehensive view of the entire workspace and welding operation, so the resulting process is often not fully optimized. Moreover, whenever the relative position of the welded parts and the robot changes, the welding procedure must be reprogrammed, which further increases the time required [14].

Arc welding robots also face long auxiliary times for wire shearing and gun cleaning. After a certain amount and duration of welding, the gun nozzle must be cleaned. Because the gun cleaning station is usually located away from the workpiece, the robot must move back and forth frequently between the weld seam and the station, and a poorly chosen cleaning position leads to empty runs that severely reduce welding efficiency [15].

In recent years, digital twin (DT) [16], Augmented Reality, and Virtual Reality technologies have been widely explored and applied in traditional manufacturing industries [17,18,19,20]. This approach combines knowledge from multiple disciplines to achieve real-time synchronization and accurate representation of the physical and digital worlds, integrating physical and information spaces [21]. This paper studies the arc welding workstation of a moving-arm digital welding system, which consists of a robot system, a welding system, a welding auxiliary system, a sensing system, and a control system, as illustrated in Figure 1.

Figure 1.

Overview of the system architecture.

In addition, machine learning [22] is often applied to welding robotics in smart manufacturing. Vision-based methods [22] are commonly used in this setting, and in this paper we also use machine learning [23] to optimize motion planning. However, comprehensive studies of human–robot collaboration supported by AI and the Internet of Things (AIoT) remain limited. In this paper, we explore optimal control and machine learning techniques for the VAM [24] problem. Model-based reinforcement learning, for instance, has been proposed for smart manufacturing tasks such as digital-twin-based manufacturing process optimization [25] and process control in Wire Arc Additive Manufacturing [26].

Differentiation from Previous Studies: Prior studies on welding robot simulation have addressed digital twins [13,19,27] and reinforcement learning [4,23] independently, often relying on traditional motion planning methods such as standard PRM [28] or iterative inverse kinematics solvers [12]. These methods are limited in scalability and real-time performance. In contrast, our approach integrates a Virtual, Augmented, and Mixed Reality (VAM)-based digital twin with deep reinforcement learning (DRL) for the real-time optimization of welding tasks, combined with enhanced motion planning.

While our approach builds on existing technologies such as digital twins [19], PRM [28], and ANFIS [12], it introduces the following contributions to welding robot simulation: (1) an integration of a VAM-based digital twin with DRL that enables real-time task offloading and trajectory optimization with 50 ms synchronization latency and 95% trajectory accuracy (Section 5.1); (2) an improved PRM algorithm with adaptive node sampling, dynamic edge connection, and cost-aware path optimization, achieving up to 30% shorter planning times than standard PRM (Table A3); and (3) a novel application of ANFIS to inverse kinematics, reducing the solving time to 15 ms compared with 80 ms for iterative methods (Table 1), which suits real-time applications. These advancements are validated in a water tank welding case study (Appendix A.3) and together offer a unified framework for smart manufacturing.

2. Approach: System Model Utilizing Virtual, Augmented, and Mixed-Reality

The incorporation of digital twin technology into robotic arc welding systems creates a real-time simulation environment that accurately replicates actual operational conditions. This advanced digital framework facilitates dynamic optimization and enhances autonomous control learning for industrial applications. This section outlines a digital twin-based system model for arc welding robotics, emphasizing robot dynamics, state monitoring, and deep reinforcement learning (DRL) techniques for task offloading and trajectory planning.

2.1. VAM Network Communication Framework

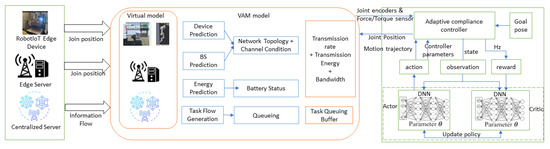

Figure 2 illustrates the Virtual, Augmented, and Mixed-Reality (VAM) network architecture and the AI-driven IoT system. The robotics edge network is modeled as a time-slotted system and represented as a directed graph $\mathcal{G} = (\mathcal{U}, \mathcal{S}, \mathcal{E})$, where $\mathcal{U}$ is the set of robotic devices, $\mathcal{S}$ is the set of base stations, and $\mathcal{E}$ defines the connectivity between devices and base stations. Each robotic unit $u_i \in \mathcal{U}$ is characterized by a parameter set $\Omega_i(t)$ describing its transmission power, location, and local server capacity, which determine how much data it can process or transmit and thus how resources are allocated to welding tasks:

$$\Omega_i(t) = \{P_i^{\max}(t),\ \ell_i(t),\ f_i(t)\}$$

where $P_i^{\max}(t)$ represents the maximum transmission power, $t$ is the current time slot, $\ell_i(t)$ indicates the location of $u_i$, and $f_i(t)$ denotes the computation capacity of the local server.

Figure 2.

VAM network structure and integration with DRL.

Similarly, each base station $s_j \in \mathcal{S}$ is described by its location and resources, which enable fast data exchange with the robot for real-time control:

$$\Omega_j(t) = \{\ell_j,\ W_j(t),\ F_j(t)\}$$

where $\ell_j$ denotes the base station's location, $W_j(t)$ represents the channel bandwidth, and $F_j(t)$ specifies the available computational resources.

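The transmission rates below determine how quickly data (e.g., sensor readings) move between the robot and the servers, and hence the achievable latency (e.g., the 50 ms synchronization latency reported in Section 5.1). The wireless data rate between device $u_i$ and small base station $s_j$ is

$$r_{ij}(t) = w_{ij}(t)\log_2\left(1 + \frac{p_i(t)\,h_{ij}(t)\,d_{ij}^{-\alpha}}{I}\right)$$

where $w_{ij}(t)$ is the allocated bandwidth, $p_i(t) \le P_i^{\max}(t)$ is the transmission power, $h_{ij}(t)$ is the channel gain, $d_{ij}$ represents the measured distance between $u_i$ and $s_j$, and $\alpha$ is the path-loss exponent. The total interference is denoted by $I$.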

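For communication between device $u_i$ and the macro base station (MBS) $s_0$,

$$r_{i0}(t) = w_{i0}(t)\log_2\left(1 + \frac{p_i(t)\,h_{i0}(t)\,d_{i0}^{-\alpha}}{I}\right)$$

where $w_{i0}(t)$ is the available bandwidth, $h_{i0}(t)$ is the channel gain, and $d_{i0}$ denotes the distance between the device and the MBS.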
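To make the rate expressions concrete, the sketch below evaluates a Shannon-type uplink rate. It assumes the form r = w * log2(1 + p * h * d^(-alpha) / I), a path-loss exponent `alpha` of 3, and interference given in watts; the function name and all numeric values are hypothetical placeholders, not parameters of the experimental setup.

```python
import math


def transmission_rate(bandwidth_hz: float, tx_power_w: float, channel_gain: float,
                      distance_m: float, interference_w: float, alpha: float = 3.0) -> float:
    """Uplink rate in bit/s, assuming r = w * log2(1 + p * h * d**(-alpha) / I)."""
    if min(bandwidth_hz, distance_m, interference_w) <= 0:
        raise ValueError("bandwidth, distance and interference must be positive")
    sinr = tx_power_w * channel_gain * distance_m ** (-alpha) / interference_w
    return bandwidth_hz * math.log2(1.0 + sinr)


# Hypothetical values: a nearby small base station vs. a more distant MBS.
r_sbs = transmission_rate(10e6, 0.2, 1.0, 50.0, 1e-9)
r_mbs = transmission_rate(20e6, 0.2, 1.0, 400.0, 1e-9)
print(f"SBS: {r_sbs / 1e6:.1f} Mbit/s, MBS: {r_mbs / 1e6:.1f} Mbit/s")
```

With these placeholder numbers, the nearby small base station yields a higher rate than the MBS despite having half the bandwidth, because the received power falls off as d^(-alpha).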

2.2. Task Offloading Model

Task offloading enhances welding robot control by distributing computational tasks (e.g., inverse kinematics and motion planning) between the robot’s local processor and edge servers. This reduces processing latency (e.g., 50 ms synchronization, Section 5.1), enabling real-time trajectory adjustments and precise weld seam tracking. DRL optimizes offloading decisions (Section 4), balancing energy efficiency and performance as validated in the water tank welding case study (Appendix A.3).

2.2.1. Local Processing

The locally executed task size $D_i^{\mathrm{loc}}(t)$ measures how much computation the robot handles locally; heavy tasks are offloaded to servers to reduce local energy use:

$$D_i^{\mathrm{loc}}(t) = \frac{f_i(t)\,\tau}{c}$$

where $f_i(t)$ is the available computational power (CPU cycles per second), $\tau$ denotes the time slot duration, and $c$ represents the required CPU cycles per bit.

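The local energy consumption for executing $D_i^{\mathrm{loc}}(t)$ must be balanced against the amount of completed work so that the robot can operate for long periods without overloading, which is critical for continuous welding:

$$E_i^{\mathrm{loc}}(t) = \kappa\,c\,D_i^{\mathrm{loc}}(t)\,f_i(t)^2 = \kappa\,f_i(t)^3\,\tau$$

where $\kappa$ is the energy coefficient.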
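The local processing model can be evaluated in a few lines. This sketch assumes D_loc = f * tau / c and the common dynamic-power energy model E_loc = kappa * c * D_loc * f^2; the function name, the value of `kappa`, and the example CPU figures are hypothetical.

```python
def local_processing(cpu_freq_hz: float, slot_s: float, cycles_per_bit: float,
                     kappa: float = 1e-28) -> tuple[float, float]:
    """Return (bits processed locally in one slot, energy in joules).

    Assumes D_loc = f * tau / c and E_loc = kappa * c * D_loc * f**2,
    which simplifies to kappa * f**3 * tau.
    """
    bits = cpu_freq_hz * slot_s / cycles_per_bit
    energy = kappa * cycles_per_bit * bits * cpu_freq_hz ** 2
    return bits, energy


# Hypothetical robot controller: 1.5 GHz CPU, 10 ms slot, 1000 cycles per bit.
bits, energy = local_processing(1.5e9, 0.01, 1000)
print(f"{bits:.0f} bits per slot, {energy * 1e3:.3f} mJ")
```

Because energy grows with the cube of the CPU frequency while throughput grows only linearly, lowering f_i and offloading the remainder can reduce the energy per processed bit.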

2.2.2. Edge Server Execution

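Tasks that are not processed locally are offloaded to an edge server. To prevent data backlogs and keep real-time performance consistent, the task queues at device $u_i$ and edge server $s_j$ evolve as

$$Q_i(t+1) = \max\Big\{Q_i(t) - D_i^{\mathrm{loc}}(t) - \sum_{j} D_{ij}^{\mathrm{off}}(t),\ 0\Big\} + A_i(t)$$

$$Q_j^{\mathrm{es}}(t+1) = \max\Big\{Q_j^{\mathrm{es}}(t) - D_j^{\mathrm{es}}(t),\ 0\Big\} + \sum_{i} D_{ij}^{\mathrm{off}}(t)$$

where $A_i(t)$ is the task arrival at device $u_i$, $D_{ij}^{\mathrm{off}}(t) \le r_{ij}(t)\,\tau$ is the amount of data offloaded from $u_i$ to $s_j$, and $D_j^{\mathrm{es}}(t) = F_j(t)\,\tau / c$ is the amount processed at the edge server.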

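Energy consumption for processing tasks at the edge server is

$$E_j^{\mathrm{es}}(t) = \varepsilon\,D_j^{\mathrm{es}}(t)$$

where $\varepsilon$ is the unit energy consumption coefficient (energy per processed bit).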

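Total energy consumption is computed as

$$E(t) = \sum_{i}\big(E_i^{\mathrm{loc}}(t) + p_i(t)\,\tau\big) + \sum_{j} E_j^{\mathrm{es}}(t)$$

where $p_i(t)\,\tau$ is the transmission energy spent on offloading.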

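The queue and energy bookkeeping for one time slot can be sketched as follows. This is a minimal illustration assuming max-plus queue updates, transmission energy p_i * tau, and edge energy proportional to the bits actually processed; the function `step_slot`, its arguments, and the example numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SlotResult:
    device_queues: list
    server_queues: list
    total_energy: float


def step_slot(q_dev, q_srv, arrivals, local_bits, offload, server_capacity,
              e_local, p_tx, slot_s, eps_srv):
    """Advance device and edge-server queues by one slot and sum the slot's energy.

    offload[i][j] = bits sent from device i to server j in this slot (assumed
    feasible, i.e. no larger than r_ij * slot_s or the device backlog).
    """
    n_srv = len(q_srv)
    # Device queues: Q_i(t+1) = max(Q_i - D_loc_i - sum_j D_off_ij, 0) + A_i
    new_dev = [max(q - local_bits[i] - sum(offload[i]), 0.0) + arrivals[i]
               for i, q in enumerate(q_dev)]
    # Edge queues: Q_j(t+1) = max(Q_j - D_es_j, 0) + sum_i D_off_ij
    inflow = [sum(row[j] for row in offload) for j in range(n_srv)]
    new_srv = [max(q - server_capacity[j], 0.0) + inflow[j] for j, q in enumerate(q_srv)]
    # Only bits already waiting at a server are processed, and only they cost energy.
    processed = [min(q, server_capacity[j]) for j, q in enumerate(q_srv)]
    energy = (sum(e_local)                       # local computation
              + sum(p * slot_s for p in p_tx)    # transmission energy p_i * tau
              + eps_srv * sum(processed))        # edge computation
    return SlotResult(new_dev, new_srv, energy)


res = step_slot(q_dev=[4000.0, 1000.0], q_srv=[2000.0], arrivals=[3000.0, 3000.0],
                local_bits=[1500.0, 1500.0], offload=[[2000.0], [0.0]],
                server_capacity=[5000.0], e_local=[0.003, 0.003], p_tx=[0.2, 0.0],
                slot_s=0.01, eps_srv=1e-7)
print(res)
```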
2.3. Digital Twin Architecture and Components

The digital twin-based virtual simulation platform is designed to mirror the physical welding robot workstation in a virtual environment, enabling real-time optimization and control. The architecture comprises four layers:

- Physical Layer: Includes the UR3e welding robot, welding torch, sensors (e.g., position and force sensors), and auxiliary systems (e.g., wire shear gun cleaning station). These generate real-time data such as joint angles, weld current, and workpiece position.

- Data Layer: Aggregates static data (e.g., equipment geometry, and workstation layout) and dynamic data (e.g., sensor readings and welding process parameters). Data are transmitted via UDP, TCP, and HTTP protocols, with JSON formatting for interoperability.

- Server Layer: Processes data for motion planning, kinematics, and optimization using algorithms such as the improved PRM and ANFIS. It synchronizes the physical and virtual models, updating the simulation in real time.

- Simulation Layer: Built on the VAM platform (Unity-based), it visualizes the welding process, robot trajectories, and environmental interactions. The VAM interface supports AR/VR for operator interaction.

Key components include the digital twin model of the UR3e robot, sensor data integration for real-time updates, and the VAM interface for visualization. The platform ensures bidirectional data flow, mapping physical actions to the virtual space and vice versa.

3. Optimization Framework Using Lyapunov Theory

By applying the Lyapunov optimization technique, the problem is transformed into a deterministic per-time-slot formulation, where network stability constraints ensure efficient task processing. A deep reinforcement learning-based algorithm is then employed to derive optimal computation offloading policies, thereby improving system efficiency and performance.

3.1. Objective Formulation

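The network efficiency metric, denoted as $\eta$, is defined as the long-term ratio between energy consumption and successfully executed computational tasks:

$$\eta = \lim_{T\to\infty}\frac{\sum_{t=0}^{T-1}\mathbb{E}[E(t)]}{\sum_{t=0}^{T-1}\mathbb{E}[D(t)]}$$

where $D(t) = \sum_i D_i^{\mathrm{loc}}(t) + \sum_j D_j^{\mathrm{es}}(t)$ is the amount of computation completed in slot $t$. The optimization problem seeks to minimize $\eta$ by jointly adjusting the transmission power, bandwidth, and computation resource allocations.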

3.2. Queue Stability and Lyapunov Drift

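To maintain stability in the task queues at both devices and edge servers, the Lyapunov drift-plus-penalty framework is used. The quadratic Lyapunov function is defined as

$$L(\mathbf{Q}(t)) = \frac{1}{2}\sum_{i}\big(Q_i(t) - \theta_i\big)^2 + \frac{1}{2}\sum_{j}\big(Q_j^{\mathrm{es}}(t) - \theta_j\big)^2$$

where $Q_i(t)$ and $Q_j^{\mathrm{es}}(t)$ are the task queue lengths for devices and edge servers, respectively, $\mathbf{Q}(t)$ collects all queue lengths, and $\theta_i$ and $\theta_j$ are perturbation parameters introduced to improve stability.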

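The conditional Lyapunov drift is

$$\Delta(\mathbf{Q}(t)) = \mathbb{E}\big[L(\mathbf{Q}(t+1)) - L(\mathbf{Q}(t)) \,\big|\, \mathbf{Q}(t)\big]$$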

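By incorporating an energy efficiency penalty term, the drift-plus-penalty function becomes

$$\Delta_V(\mathbf{Q}(t)) = \Delta(\mathbf{Q}(t)) + V\,\mathbb{E}\left[\frac{E(t)}{D(t)} \,\middle|\, \mathbf{Q}(t)\right]$$

where $V$ is a non-negative weighting factor that balances energy consumption minimization and queue stability.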
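Because the drift-plus-penalty expression is evaluated slot by slot, a controller can score candidate actions by their predicted next queue state. The sketch below is a sample-path simplification, assuming that the conditional expectation is replaced by a single predicted next state and that the penalty is energy per processed bit; the candidate actions and all numbers are invented for illustration.

```python
import numpy as np


def lyapunov(q: np.ndarray, theta: np.ndarray) -> float:
    """Perturbed quadratic Lyapunov function L = 0.5 * sum((Q - theta)^2)."""
    return 0.5 * float(np.sum((q - theta) ** 2))


def drift_plus_penalty(q_now, q_next, theta, energy, bits_done, V):
    """Sample-path drift-plus-penalty: L(Q') - L(Q) + V * E / D."""
    drift = lyapunov(q_next, theta) - lyapunov(q_now, theta)
    penalty = energy / max(bits_done, 1e-9)   # per-slot energy per processed bit
    return drift + V * penalty


# Queue vector = [device queue, edge-server queue] (toy units), no perturbation.
q = np.array([5.0, 3.0])
theta = np.zeros(2)
candidates = {  # action -> (predicted next queues, energy, bits processed)
    "process_locally": (np.array([4.0, 3.0]), 2.0, 1.0),
    "offload_to_edge": (np.array([2.0, 4.0]), 1.5, 3.0),
}
V = 10.0
scores = {name: drift_plus_penalty(q, qn, theta, e, d, V)
          for name, (qn, e, d) in candidates.items()}
best = min(scores, key=scores.get)
print(best, scores)
```

Raising `V` puts more weight on energy efficiency, while the drift term favors actions that shrink large backlogs; this is the trade-off that the weighting factor controls.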

3.3. Digital Twin-Predicted Perturbation

A distinctive feature of this framework is the use of digital twin simulations to predict the perturbation vector $\boldsymbol{\theta}$ from the current task queue observations:

$$\hat{\theta}_k(t) = D_k^{\max} + V\,\beta_k(t)$$

where $D_k^{\max}$ is the maximum task departure size of queue $k$, and $\beta_k(t)$ is a sensitivity measure of the energy efficiency metric. This dynamic tuning improves both convergence and performance.

3.4. Integration of Lyapunov Optimization and DRL

The proposed methodology integrates Lyapunov optimization and DRL to optimize task offloading and welding robot performance. Lyapunov optimization transforms the long-term energy efficiency objective (Equation (1), $\eta$) into a deterministic per-time-slot problem by minimizing the drift-plus-penalty function (Equation (4), $\Delta_V(\mathbf{Q}(t))$), which keeps the task queues at devices and edge servers stable (Section 3.2). The Lyapunov framework thus provides a stability-aware objective that guides the DRL reward structure. Specifically, DRL, implemented via an Asynchronous Actor–Critic (AAC) algorithm (Section 4.2), uses the Lyapunov-derived penalty term as part of its reward (Equation (8), $r$) to learn optimal policies for bandwidth allocation, transmission power, and task offloading. The digital twin predicts the perturbation parameters (Equation (7), $\boldsymbol{\theta}$) to improve DRL convergence (Section 3.3). This integration yields stable, energy-efficient task offloading, validated by reduced planning times in welding tasks (Section 5.1).

Role of Lyapunov Optimization: Lyapunov optimization maintains network stability by minimizing the task queue backlogs ($Q_i(t)$, $Q_j^{\mathrm{es}}(t)$) through the drift-plus-penalty function. It provides a mathematical framework to balance energy consumption and task processing, steering the DRL agent toward stable and efficient offloading policies.

4. Deep Reinforcement Learning for Real-Time Optimization

4.1. System State and Action Space

The DRL framework optimizes task offloading and welding robot control using the following components: State Space: includes data rates, resources, powers, bandwidth, and queues. Action Space: covers bandwidth, power, task sizes, and resource allocation. Reward Function: combines accuracy, force penalty, and incentives, guided by Lyapunov optimization (Section 3.2).

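The digital twin continuously monitors the physical network and constructs the current state vector:

$$\mathbf{s}(t) = \{\mathbf{R}(t),\ \mathbf{F}(t),\ \mathbf{P}^{\max}(t),\ \mathbf{w}(t),\ \mathbf{Q}(t)\}$$

where $\mathbf{R}(t)$ represents the wireless data rate matrix, $\mathbf{F}(t)$ is the vector of computation resources, $\mathbf{P}^{\max}(t)$ denotes the vector of maximum transmission powers, $\mathbf{w}(t)$ is the available bandwidth vector, and $\mathbf{Q}(t)$ comprises the queue lengths of both devices and edge servers.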
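A DRL actor or critic typically consumes the state as one flat numeric vector. The sketch below shows one assumed way to flatten the five state components with NumPy; the function name and the example sizes (two robots, one small base station, three queues) are hypothetical.

```python
import numpy as np


def build_state(rate_matrix, cpu_resources, max_powers, bandwidth, queues):
    """Flatten s(t) = {R, F, P_max, w, Q} into one float32 vector for the actor/critic."""
    parts = [np.asarray(x, dtype=np.float32).ravel()
             for x in (rate_matrix, cpu_resources, max_powers, bandwidth, queues)]
    return np.concatenate(parts)


# Two robots, one small base station, two device queues plus one edge queue.
s = build_state(rate_matrix=[[1.2e8], [0.9e8]],
                cpu_resources=[1.5e9, 1.5e9, 8e9],
                max_powers=[0.2, 0.2],
                bandwidth=[10e6],
                queues=[3500, 3000, 2000])
print(s.shape)
```

In practice, each component would usually be normalized before being fed to the networks, since rates, CPU frequencies, and queue lengths differ by many orders of magnitude.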

The corresponding action includes bandwidth allocation, transmission power, task departure sizes, and computation resource distribution. Since these action variables are continuous, policy gradient-based DRL methods are used.

4.2. DRL-Based Policy Optimization

An Asynchronous Actor–Critic (AAC) algorithm is implemented to learn the optimal resource management policy. In this framework, we have the following:

- Actor Network: Proposes actions based on the current state using a parameterized policy $\pi(\mathbf{a}(t) \mid \mathbf{s}(t);\boldsymbol{\phi})$.

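- Critic Network: Evaluates the proposed action by estimating the state value $V(\mathbf{s}(t);\boldsymbol{\psi})$ and computes the advantage function:

$$A(t) = r(t) + \gamma\,V(\mathbf{s}(t+1);\boldsymbol{\psi}) - V(\mathbf{s}(t);\boldsymbol{\psi})$$

where $\gamma \in [0, 1)$ is the discount factor.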

The networks are updated iteratively using gradients derived from the policy loss and temporal difference error, aiming to maximize the cumulative reward while satisfying system constraints.
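The critic's advantage estimate and the two losses can be sketched in NumPy as follows. This assumes the n-step bootstrapped returns commonly used in asynchronous actor–critic methods, which reduce to the one-step form r + gamma * V(s') - V(s) when only one step is used. The entropy bonus `beta` is an added assumption, and a real implementation would compute these losses in an autodiff framework so that gradients reach the actor and critic networks.

```python
import numpy as np


def n_step_advantages(rewards, values, bootstrap_value, gamma=0.99):
    """Discounted n-step returns G_t and advantages A_t = G_t - V(s_t).

    rewards: r_0..r_{T-1}; values: critic estimates V(s_0)..V(s_{T-1});
    bootstrap_value: V(s_T), or 0.0 if the episode terminated.
    """
    returns = np.zeros(len(rewards))
    g = bootstrap_value
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        returns[t] = g
    advantages = returns - np.asarray(values, dtype=float)
    return returns, advantages


def actor_critic_losses(log_probs, advantages, returns, values, entropy, beta=0.01):
    """Policy loss -mean(log pi * A) - beta * entropy, and critic loss 0.5 * MSE."""
    policy_loss = -np.mean(np.asarray(log_probs) * advantages) - beta * entropy
    value_loss = 0.5 * np.mean((np.asarray(returns) - np.asarray(values)) ** 2)
    return policy_loss, value_loss


rewards = [1.0, 0.0, 1.0]
values = [0.5, 0.5, 0.5]
returns, adv = n_step_advantages(rewards, values, bootstrap_value=0.0, gamma=0.5)
pl, vl = actor_critic_losses([-1.0, -2.0, -0.5], adv, returns, values, entropy=0.0)
print(returns, adv, pl, vl)
```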

4.3. Asynchronous Learning and Experience Sharing

Multiple learning agents operate in parallel, interacting with local copies of the environment simulated by the digital twin. Their experiences are periodically aggregated to update the global network parameters. This asynchronous learning approach reduces sample correlation and computational overhead, leading to a more stable training process.
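The asynchronous update pattern can be illustrated with Python threads sharing one parameter vector. This is a toy sketch: a quadratic loss toward a fixed goal stands in for the actor–critic loss, each worker's noisy samples stand in for experience from its own digital-twin copy, and Python's global interpreter lock means the threads interleave rather than run truly in parallel. All names are hypothetical.

```python
import threading

import numpy as np


class GlobalParams:
    """Shared parameters guarded by a lock (stand-in for the global network)."""

    def __init__(self, dim: int):
        self.w = np.zeros(dim)
        self.lock = threading.Lock()

    def pull(self) -> np.ndarray:
        with self.lock:
            return self.w.copy()

    def push(self, grad: np.ndarray, lr: float) -> None:
        with self.lock:
            self.w -= lr * grad


def worker(shared: GlobalParams, goal: np.ndarray, seed: int,
           steps: int = 200, batch: int = 8, lr: float = 0.1) -> None:
    rng = np.random.default_rng(seed)
    for _ in range(steps):
        local_w = shared.pull()                                 # sync local copy
        # "Experience" from this worker's environment copy: noisy observations of the goal.
        samples = goal + 0.1 * rng.standard_normal((batch, goal.size))
        grad = local_w - samples.mean(axis=0)                   # grad of 0.5*||w - sample||^2
        shared.push(grad, lr)                                   # asynchronous global update


goal = np.array([1.0, -2.0])
shared = GlobalParams(dim=2)
threads = [threading.Thread(target=worker, args=(shared, goal, seed)) for seed in range(4)]
for th in threads:
    th.start()
for th in threads:
    th.join()
print(shared.pull())
```

Because each worker pulls fresh parameters before computing its gradient, staleness is bounded by the number of workers, which keeps this simple scheme stable for a small learning rate.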

4.4. RL Framework

Our reinforcement learning framework is designed to simultaneously minimize tracking error and avoid excessive contact forces while efficiently guiding the learning process through adaptive difficulty scaling. The overall reward is computed as a weighted sum of several key components:

$$r = w_1\,r_x + w_2\,r_F + w_3\,\rho$$

where $r_x$ evaluates position and velocity accuracy, $r_F$ imposes a penalty based on the discrepancy between the desired and actual contact forces, $\rho$ incorporates task-specific success or failure incentives, and $w_1$, $w_2$, and $w_3$ are weighting factors.

To quantify the error in position and movement, we define

$$r_x = -\left(\|\mathbf{x}\| + \frac{\|\dot{\mathbf{x}}\|}{1 + \|\mathbf{x}\|}\right)$$

where $\mathbf{x}$ is the positional deviation from the target and $\dot{\mathbf{x}}$ is the velocity. This term encourages rapid movement when the error is high and more controlled motion as the target is approached.

The force penalty is expressed as

$$r_F = -\|\mathbf{F}_g - \mathbf{F}\|^2$$

with $\mathbf{F}_g$ representing the goal force and $\mathbf{F}$ the sensed force. This formulation sharply penalizes large differences between the two, thereby promoting delicate interactions during contact.

To gradually increase the challenge during training, we incorporate a dynamic reward scaling factor that is linked to the curriculum's current difficulty level:

$$\tilde{r} = (1 + \lambda)\,r$$

where $r$ is the composite reward given above and $\lambda$ reflects the training difficulty at the current episode. We set

$$\lambda = \frac{e}{N_e}$$

with $e$ denoting the current episode and $N_e$ the total number of episodes. This approach gradually challenges the agent as it becomes more proficient.
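The reward terms can be written as plain functions. Since the exact expressions are not fully specified here, this sketch assumes r_x = -(||x|| + ||x_dot|| / (1 + ||x||)), r_F = -||F_g - F||^2, a scaled reward (1 + lambda) * r with lambda = e / N_e, and placeholder weights `w`; treat all of these as illustrative assumptions rather than the tuned values used in training.

```python
import numpy as np


def tracking_term(x_err, x_vel):
    """r_x = -(||x|| + ||x_dot|| / (1 + ||x||)): velocity is penalized more near the target."""
    e = np.linalg.norm(x_err)
    return -(e + np.linalg.norm(x_vel) / (1.0 + e))


def force_term(f_goal, f_meas):
    """r_F = -||F_g - F||^2: quadratic, so large force errors dominate."""
    return -float(np.sum((np.asarray(f_goal) - np.asarray(f_meas)) ** 2))


def curriculum_level(episode, total_episodes):
    """lambda = e / N_e, capped at 1."""
    return min(episode / total_episodes, 1.0)


def reward(x_err, x_vel, f_goal, f_meas, task_bonus, episode, total_episodes,
           w=(1.0, 0.1, 1.0)):
    """Weighted composite reward, scaled by (1 + lambda)."""
    r = (w[0] * tracking_term(x_err, x_vel)
         + w[1] * force_term(f_goal, f_meas)
         + w[2] * task_bonus)
    return (1.0 + curriculum_level(episode, total_episodes)) * r


value = reward(x_err=[0.03, 0.04, 0.0], x_vel=[0.0, 0.0, 0.1],
               f_goal=[0.0, 0.0, 5.0], f_meas=[0.0, 0.0, 7.0],
               task_bonus=0.0, episode=50, total_episodes=100)
print(value)
```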

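Furthermore, the robot's commanded state is computed using a hybrid control law that combines position and force control:

$$\mathbf{x}_c = S\left(K_p^{x}\,\mathbf{x}_e + K_d^{x}\,\dot{\mathbf{x}}_e\right) + \mathbf{a}_x + (I - S)\left(K_p^{f}\,\mathbf{F}_e + K_i^{f}\int \mathbf{F}_e\,dt\right)$$

where $\mathbf{x}_e$ and $\dot{\mathbf{x}}_e$ denote the error in position and its rate, $\mathbf{a}_x$ is an auxiliary action generated by the policy, $\mathbf{F}_e$ represents the force error, and $K_p^{x}$, $K_d^{x}$, $K_p^{f}$, and $K_i^{f}$ are the respective control gains.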

The selection matrix $S$ modulates the contribution of the position and force controllers along each axis:

$$S = \mathrm{diag}(s_1, \ldots, s_6), \quad s_k \in [0, 1]$$

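A discrete-time version of the hybrid position/force law might look as follows. The sketch assumes a parallel structure in which S selects the position-controlled axes, a_x is added directly to the command, and the force integral is accumulated by rectangular integration; the class name, gains, and three-axis example (x and y under position control, z under force control) are hypothetical.

```python
import numpy as np


class ParallelPositionForceController:
    """Assumed hybrid law:
    x_c = S (Kp_x x_e + Kd_x dx_e) + a_x + (I - S)(Kp_f F_e + Ki_f * integral(F_e) dt)
    """

    def __init__(self, kp_x, kd_x, kp_f, ki_f, selection, dt):
        self.kp_x, self.kd_x = np.asarray(kp_x, float), np.asarray(kd_x, float)
        self.kp_f, self.ki_f = np.asarray(kp_f, float), np.asarray(ki_f, float)
        self.S = np.diag(np.clip(np.asarray(selection, float), 0.0, 1.0))  # 1 = position, 0 = force
        self.dt = dt
        self.f_int = np.zeros(self.S.shape[0])   # running integral of the force error

    def command(self, x_err, dx_err, f_err, a_x):
        x_err, dx_err = np.asarray(x_err, float), np.asarray(dx_err, float)
        f_err, a_x = np.asarray(f_err, float), np.asarray(a_x, float)
        self.f_int += f_err * self.dt             # rectangular integration of F_e
        u_pos = self.kp_x * x_err + self.kd_x * dx_err
        u_force = self.kp_f * f_err + self.ki_f * self.f_int
        eye = np.eye(self.S.shape[0])
        return self.S @ u_pos + a_x + (eye - self.S) @ u_force


ctrl = ParallelPositionForceController(kp_x=2.0, kd_x=0.1, kp_f=0.01, ki_f=0.001,
                                       selection=[1, 1, 0], dt=0.002)
cmd = ctrl.command(x_err=[0.01, 0.0, 0.0], dx_err=[0.0, 0.0, 0.0],
                   f_err=[0.0, 0.0, 5.0], a_x=[0.0, 0.0, 0.0])
print(cmd)
```

Allowing the entries of S to take values between 0 and 1 lets a policy blend position and force control per axis instead of switching between them.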
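Lastly, to handle environmental uncertainties, certain control parameters are randomly varied within a range that adapts to the curriculum level:

$$p \sim \mathcal{U}\big(p_0 - \lambda\,\Delta p,\ p_0 + \lambda\,\Delta p\big)$$

where $p_0$ is the nominal value of the parameter, $\Delta p$ is its maximum deviation, and $\lambda$ is the current curriculum level.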

In summary, Lyapunov optimization and DRL are combined to optimize welding robot control. The drift-plus-penalty function (Equation (4), Section 3.2) enters the DRL reward (Equation (8)) as a stability penalty, so the Asynchronous Actor–Critic (AAC) agent (Section 4.2) learns bandwidth allocation, power, and task offloading policies that keep the task queues stable while minimizing energy. This combination supports stable, energy-efficient real-time control, as reflected in the 50 ms synchronization latency achieved in the welding tasks (Section 5.1).

5. Experiments and Results

All simulations and visualizations in this study were conducted using Unity 3D (Unity Technologies, San Francisco, CA, USA; version 2022.3 LTS). The physical robot used was the UR3e (Universal Robots A/S, Odense, Denmark), integrated with an MPU-9250 IMU (TDK InvenSense Inc., San Jose, CA, USA), an ATI Mini45 force/torque sensor (ATI Industrial Automation, Apex, NC, USA), and an Intel RealSense D435i RGB-D camera (Intel Corporation, Santa Clara, CA, USA). Data processing and algorithm implementation were performed using MATLAB R2023a (MathWorks Inc., Natick, MA, USA) and Python 3.10, running on Windows 10 Pro 64-bit. Communication between components was handled via TCP, UDP, and HTTP protocols over Ethernet and USB 3.0 interfaces.

5.1. Data Communication

Sensor Systems: The system integrates IMUs, force/torque sensors, and RGB-D cameras on the welding robot; their models and key specifications, such as sampling rates and communication interfaces, are given below. In the experiments, lidar and camera sensing were also tested.

Regarding the sensor integration and communication architecture, the proposed system integrates multiple sensors to capture real-time environmental and operational data. A 9-axis Inertial Measurement Unit (IMU, MPU-9250) provides orientation and acceleration information at 100 Hz via serial-over-USB. An ATI Mini45 6-DoF force/torque sensor captures interaction forces during welding, and an Intel RealSense D435i RGB-D camera streams RGB and depth data at 30 FPS over USB 3.0.

Robot welding involves gathering data from various sources, including equipment data, resource data, and welding material information. This data collection spans multiple hardware and software systems, resulting in various data collection methods and interface types. The data are therefore heterogeneous because of their diverse sources.

The twin system continuously updates and optimizes the unit twin by interacting with the collected data. It achieves seamless communication and linkage of workshop data through defined data transmission relationships and interface exchanges. Figure 1 illustrates the basic flow of data communication in the virtual system.

In this paper, the digital twin system developed for welding workstations achieves precise and comprehensive data collection and integrated management of the welding process through the data communication flow described above. The collected data are transmitted to the platform for storage using communication protocols such as UDP and TCP over Ethernet. An API function retrieves controller information and transfers it to the digital twin workshop system in JSON format, and virtual–real synchronization is maintained by sampling and interpolating the real-time data.
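The sampling-and-interpolation step can be sketched as linear interpolation of timestamped joint-angle samples at the render time of the virtual model. This is an assumed approach for illustration (in the Unity-based VAM platform, the equivalent logic would run in a C# script); the function name and sample values are hypothetical.

```python
from bisect import bisect_right


def interpolate_joints(timestamps, samples, t_query):
    """Linearly interpolate joint-angle samples (one list per timestamp) at t_query.

    timestamps must be strictly increasing. Queries outside the recorded window
    are clamped to the first/last sample instead of being extrapolated.
    """
    if not timestamps:
        raise ValueError("no samples")
    if t_query <= timestamps[0]:
        return list(samples[0])
    if t_query >= timestamps[-1]:
        return list(samples[-1])
    k = bisect_right(timestamps, t_query)   # timestamps[k-1] <= t_query < timestamps[k]
    t0, t1 = timestamps[k - 1], timestamps[k]
    a = (t_query - t0) / (t1 - t0)
    return [(1 - a) * q0 + a * q1 for q0, q1 in zip(samples[k - 1], samples[k])]


ts = [0.00, 0.01, 0.02]                      # controller timestamps (s), e.g. 100 Hz
qs = [[0.0, 1.0], [0.1, 1.0], [0.3, 0.8]]    # two joint angles (rad) per sample
pose = interpolate_joints(ts, qs, 0.015)     # render time falling between two samples
print(pose)
```

Clamping outside the sampled window makes the virtual robot hold its last known pose during a communication gap rather than extrapolating motion that has not been measured.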

Validation of the Digital Twin: The digital twin’s performance is evaluated using two metrics:

- Synchronization Accuracy: Measured as the latency between physical sensor data updates and their reflection in the virtual model. Experiments achieved an average latency of 50 ms, ensuring real-time synchronization.

- Simulation Fidelity: Assessed by comparing virtual weld trajectories to physical weld outcomes (e.g., seam accuracy within mm). The VAM platform replicated the physical trajectories with 95% accuracy in simulation.

Tests are conducted on a welding task involving a water tank (Appendix A.3), with data transmitted via Ethernet and processed on a workstation (Intel i7, 32 GB RAM). These results confirm the platform’s reliability for welding process optimization.

Real-Time Monitoring Case Study: The digital twin’s real-time monitoring is validated in a water tank welding task (Appendix A.3). Sensor data (e.g., joint angles and weld current) are transmitted via Ethernet with a synchronization latency of 50 ms, enabling the VAM platform to track the robot’s trajectory with 95% accuracy compared to physical weld outcomes. This case study demonstrates the platform’s ability to provide real-time feedback for process optimization.

5.2. Welding Robot System Server System

The welding workstation server system processes, plans, and optimizes the welding process within the workshop. The welding system comprises various pieces of equipment working together to complete the welding operations. To ensure the overall operational stability and safety of the process, the server system simulates and optimizes the actual welding process beforehand by exchanging data between the physical and virtual robots.

The data management system continuously updates the operation status of the physical robot and the simulation data of the virtual robot, allowing real-time adjustments to the production schedule. The welding workstation server system incorporates various mathematical algorithms, including robot kinematics, motion planning, etc., to ensure continuous improvement and efficiency in the welding operations.

Before the real welding operation, the welding process is simulated in the virtual environment built on the VAM platform. This simulation verifies the inverse kinematics (IK) and motion planning algorithms before they are executed on the physical robot.

Sensor data and control commands are exchanged between the digital twin simulation environment and the physical robot system using a hybrid socket communication architecture. Data are transmitted over TCP/IP for reliable sensor synchronization and over UDP for low-latency command execution. A Python-based server-client model is implemented, where the robot control system acts as the server, receiving policy updates from the digital twin client. Messages are serialized in JSON format, and each client–server pair uses a separate thread for asynchronous communication and buffering. This architecture enables real-time bidirectional communication and efficient task offloading for DRL-based policy deployment.
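Because TCP delivers a byte stream rather than discrete messages, JSON messages need explicit framing. The sketch below assumes a 4-byte length prefix, which is one common choice and not necessarily the framing used in our system; the message fields are hypothetical, and `socket.socketpair()` stands in for a real robot/digital-twin connection.

```python
import json
import socket
import struct

HEADER = struct.Struct("!I")   # 4-byte big-endian payload length


def send_msg(sock: socket.socket, payload: dict) -> None:
    """Serialize to JSON and send it with a length prefix (TCP is a byte stream)."""
    data = json.dumps(payload).encode("utf-8")
    sock.sendall(HEADER.pack(len(data)) + data)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, looping because recv() may return fewer."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed mid-message")
        buf.extend(chunk)
    return bytes(buf)


def recv_msg(sock: socket.socket) -> dict:
    (length,) = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return json.loads(_recv_exact(sock, length).decode("utf-8"))


# Loopback demo: the "digital twin" side sends a policy update to the "robot" side.
twin_side, robot_side = socket.socketpair()
send_msg(twin_side, {"type": "policy_update",
                     "joint_targets": [0.0, -1.57, 1.2, 0.0, 1.57, 0.0]})
print(recv_msg(robot_side))
twin_side.close()
robot_side.close()
```

In the architecture described above, each client–server pair would run `recv_msg` in its own thread; UDP command packets need no length prefix because each datagram already carries exactly one message.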

5.3. Inverse Kinematics Experiment

Appendices A.1–A.5 give detailed descriptions of the experiments. The overall results are summarized in Table 1.

Table 1.

Summary of the experimental results.

| Metric | Gantry (Iterative) | Gantry (ANFIS) | Robot Arm (Iterative) | Robot Arm (ANFIS) |
|---|---|---|---|---|
| Training time | None | 15 min | None | ≈120 h |
| Solving time | 30 ms | 10 ms | 80 ms | 15 ms |
| Accuracy | >99% | >99% | 98% | 95% |

The training and solving time can vary depending on the hardware environment.

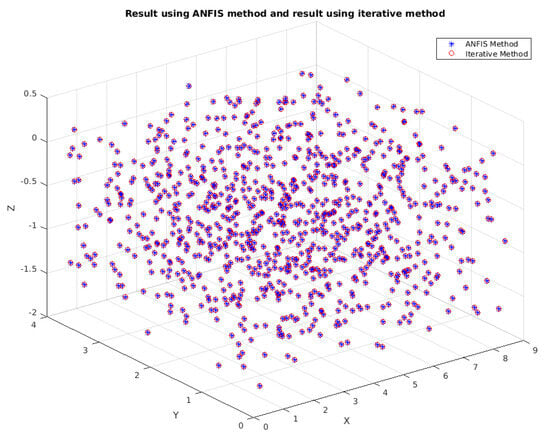

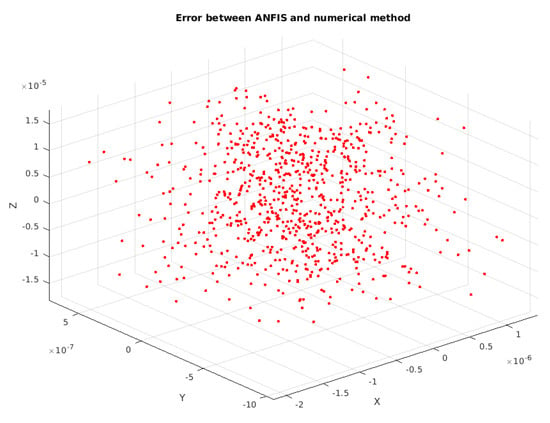

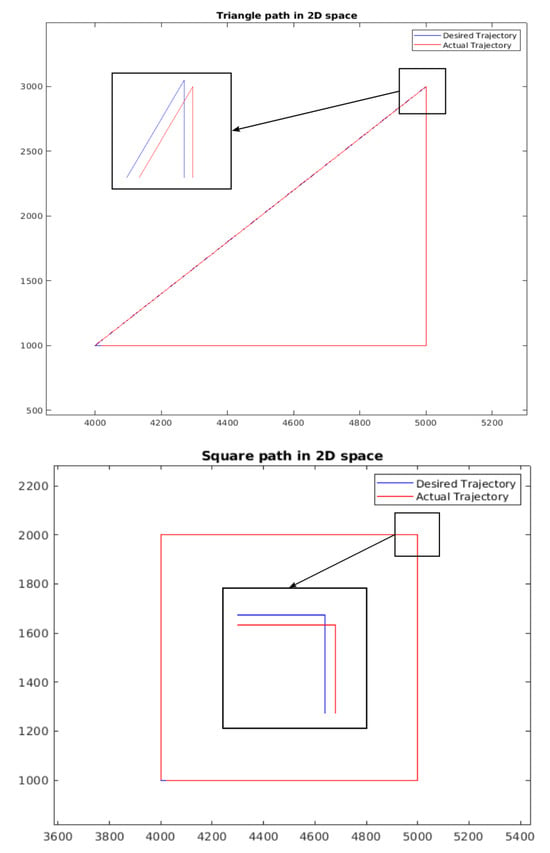

The results when comparing the iterative method with the ANFIS method can be seen from Figure 3 and Figure 4. In Figure 3, the results from the iterative method and ANFIS method are approximately equal. To visualize this, Figure 4 shows the error in three-dimensional space.

Figure 3.

Comparison of ANFIS and iterative methods for solving inverse kinematics. ANFIS solves faster (15 ms vs. 80 ms for the iterative method, Table 1) with similar accuracy. Annotations highlight the key differences in solving time and error trends.

Figure 4.

3D error distribution of ANFIS compared with the iterative method, with a color scale showing the error variation across the workspace. ANFIS achieves a lower average error than FLC (0.52 mm vs. 0.91 mm, Table 2).

Table 2.

Performance comparison of ANFIS with traditional methods (e.g., PID control, fuzzy logic, or classical machine learning algorithms).

We also tested the method on trajectory tracking. The results are shown in Figure 5.

Figure 5.

Trajectories in 2D space with zoomed-in views. Left: triangle trajectory; right: square trajectory.

Although this method is still not as accurate as some traditional methods (95% vs. 98% for the iterative solver on the robot arm, Table 1), its fast solving time makes it suitable for real-time applications.

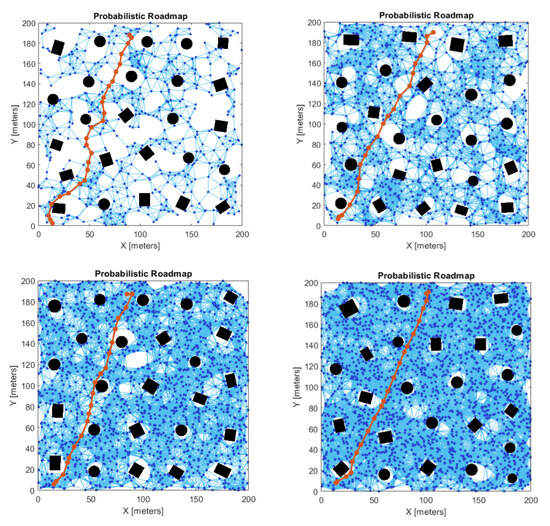

As Table 3 shows for the improved PRM algorithm, increasing the number of nodes yields a denser roadmap and shorter planning times, but the learning (roadmap construction) phase takes longer. In our experiments, beyond 5000 nodes the planning time no longer decreases significantly, while the overall time remains under 2 s, which is acceptable for welding applications.

Table 3.

Summary of the experimental results of the improved PRM algorithm applied to the 6-DOF welding robot arm.

5.4. Improved Probabilistic Roadmap Algorithm

To enhance the motion planning performance of the 6-DOF welding robot arm, we develop an improved Probabilistic Roadmap (PRM) [28] algorithm tailored to the welding environment. The standard PRM algorithm [28] constructs a roadmap by randomly sampling nodes in the configuration space and connecting them with collision-free edges. While effective, it can be computationally expensive in complex environments with many obstacles or tight constraints, as is common in welding tasks. Our improved PRM incorporates three key modifications:

- Adaptive Node Sampling: Unlike uniform sampling, our approach adjusts the sampling density based on workspace complexity. Regions near obstacles or welding seams are sampled more densely to ensure sufficient roadmap coverage, while open areas use sparse sampling to reduce the computational overhead. This is achieved by estimating obstacle proximity using a distance transform of the workspace.

- Dynamic Edge Connection: To minimize collision checks, we employ a dynamic k-nearest neighbor strategy. The number of neighbors (k) varies based on local node density, reducing unnecessary connections in crowded regions. Additionally, edges are prioritized based on their length and collision risk, improving roadmap efficiency.

- Cost-Aware Path Optimization: After constructing the roadmap, we apply a path optimization step that minimizes a weighted cost function combining path length and obstacle clearance. This ensures the robot follows shorter, safer trajectories suitable for welding tasks.
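The three modifications can be illustrated on a toy 2D point robot with circular obstacles. This sketch is not our 6-DOF planner: clearance to analytic circles replaces the distance transform, and the acceptance rule, density-based k, cost weight `w_clear`, and all parameter values are illustrative assumptions.

```python
import heapq

import numpy as np


def clearance(p, obstacles):
    """Distance from point p to the nearest circular obstacle boundary (< 0 inside)."""
    return min(np.linalg.norm(p - c) - r for c, r in obstacles)


def edge_free(p, q, obstacles, step=0.1):
    """Collision-check a straight edge by sampling points roughly every `step` units."""
    n = int(np.ceil(np.linalg.norm(q - p) / step)) + 1
    return all(clearance(p + s * (q - p), obstacles) > 0 for s in np.linspace(0.0, 1.0, n))


def adaptive_samples(n, obstacles, rng, size=10.0, d0=1.0, p_far=0.2):
    """Adaptive sampling: accept a free point with prob. max(p_far, exp(-clearance / d0))."""
    pts = []
    while len(pts) < n:
        p = rng.uniform(0.0, size, 2)
        d = clearance(p, obstacles)
        if d > 0 and rng.random() < max(p_far, np.exp(-d / d0)):
            pts.append(p)
    return np.array(pts)


def build_roadmap(nodes, obstacles, k_min=5, k_max=15, radius=1.0):
    """Dynamic k-nearest neighbours: fewer connections where local density is high."""
    dists = np.linalg.norm(nodes[:, None, :] - nodes[None, :, :], axis=-1)
    density = (dists < radius).sum(axis=1) - 1           # neighbours within `radius`
    k_node = np.clip(k_max - density // 2, k_min, k_max)
    edges = {i: set() for i in range(len(nodes))}
    for i in range(len(nodes)):
        for j in np.argsort(dists[i])[1:k_node[i] + 1]:   # index 0 is the node itself
            j = int(j)
            if j not in edges[i] and edge_free(nodes[i], nodes[j], obstacles):
                edges[i].add(j)
                edges[j].add(i)
    return edges


def edge_cost(p, q, obstacles, w_clear=0.5):
    """Cost-aware weight: edge length, inflated when the edge passes close to obstacles."""
    return np.linalg.norm(q - p) * (1.0 + w_clear / max(clearance((p + q) / 2, obstacles), 1e-3))


def plan(nodes, edges, start, goal, obstacles):
    """Dijkstra over the roadmap using the cost-aware edge weights."""
    dist, prev, heap = {start: 0.0}, {}, [(0.0, start)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == goal:
            break
        if d > dist[u]:
            continue                                       # stale heap entry
        for v in edges[u]:
            nd = d + edge_cost(nodes[u], nodes[v], obstacles)
            if nd < dist.get(v, np.inf):
                dist[v], prev[v] = nd, u
                heapq.heappush(heap, (nd, v))
    if goal not in dist:
        return None
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]


rng = np.random.default_rng(0)
obstacles = [(np.array([5.0, 5.0]), 1.5), (np.array([3.0, 7.5]), 1.0)]
start, goal = np.array([1.0, 1.0]), np.array([9.0, 9.0])
nodes = np.vstack([start, goal, adaptive_samples(300, obstacles, rng)])
edges = build_roadmap(nodes, obstacles)
path = plan(nodes, edges, 0, 1, obstacles)
print(path)
```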

These enhancements are validated in our experiments (Table 3), where the improved PRM demonstrates reduced planning times compared to the standard PRM, particularly as the number of nodes increases. For instance, with 5000 nodes, the improved PRM achieves a planning time of 316.34 ms, compared to 450 ms for the standard PRM (see Appendix A for details).

5.5. VAM Experiment

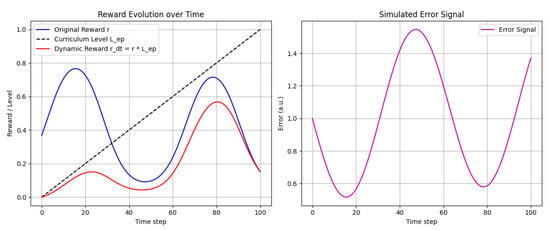

Experiments on reinforcement learning (RL), curriculum learning (CL), and domain randomization (DR) in robotic tasks provide a solid foundation for research on motion planning for arc welding robots. The following outlines how these concepts can be incorporated into our work.

- Curriculum Learning (CL): Purpose: Improve training efficiency for arc welding motion planning by progressively increasing task difficulty. Implementation: Start training with simple welding paths (straight lines with no obstacles), then gradually add complexity such as curves, multiple seams, and varying environments. Use metrics such as success rate or reward thresholds to adjust task difficulty adaptively.

- Domain Randomization (DR): Purpose: Improve the generalization of RL policies for sim-to-real transfer. Adaptation: Randomize environmental factors such as arc temperature, noise levels, and material reflectivity. Sample these parameters from Gaussian distributions biased toward the current curriculum difficulty level, as in the original peg-in-hole study.

- Reinforcement Learning Framework: RL Model: Utilize Soft Actor–Critic (SAC) or similar off-policy algorithms for better sample efficiency. Reward Function: Define rewards based on proximity to the welding path, weld quality metrics, and collision avoidance. Adapt the reward dynamically to the current curriculum level, encouraging performance on harder tasks.

- PID Gain Scheduling: Application in welding: Dynamically adjust control gains to increase precision near critical welding points, such as intersections or tight curves, similar to the force-control strategies used in the peg-in-hole study.

- Simulation and Validation: Simulated Environment: Leverage the digital twin setup for training and testing. Real-World Validation: Test learned policies in physical arc welding tasks with adjustable tolerances to mirror the peg-in-hole transfer experiments.
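The curriculum, domain-randomization, reward, and gain-scheduling ideas above can be sketched as follows. Everything here is hypothetical: the level names, the promotion and demotion thresholds (0.8 and 0.4), the randomized parameters and their Gaussian spreads, the reward weights, and the PID gains and curvature threshold are placeholders rather than tuned values, and the SAC learner itself is omitted.

```python
import random
from collections import deque

# Hypothetical curriculum levels, from straight obstacle-free seams to cluttered scenes.
LEVELS = ["straight", "curved", "multi_seam", "cluttered"]


class Curriculum:
    """Adaptive curriculum: promote or demote the level from a rolling success rate."""

    def __init__(self, window=20, promote=0.8, demote=0.4):
        self.level = 0
        self.history = deque(maxlen=window)
        self.promote, self.demote = promote, demote

    def record(self, success):
        self.history.append(bool(success))
        if len(self.history) < self.history.maxlen:
            return  # wait until the window is full
        rate = sum(self.history) / len(self.history)
        if rate >= self.promote and self.level < len(LEVELS) - 1:
            self.level += 1
            self.history.clear()
        elif rate <= self.demote and self.level > 0:
            self.level -= 1
            self.history.clear()


def randomize_domain(level, rng=random):
    """Domain randomization: Gaussian samples whose spread widens with the level (assumed)."""
    scale = 1.0 + level
    return {
        "sensor_noise_std": abs(rng.gauss(0.01, 0.005 * scale)),
        "reflectivity": min(1.0, max(0.0, rng.gauss(0.5, 0.1 * scale))),
        "arc_temp_offset_k": rng.gauss(0.0, 50.0 * scale),
    }


def reward(dist_to_path_mm, weld_quality, collided, level):
    """Level-dependent reward: seam tracking, weld quality, collision penalty."""
    w_track = 1.0 + 0.5 * level  # assumption: harder levels demand tighter tracking
    r = -w_track * dist_to_path_mm / 10.0 + weld_quality
    return r - 100.0 if collided else r


def scheduled_gains(curvature, near_junction, base=(2.0, 0.1, 0.05)):
    """PID gain scheduling: raise Kp and Kd near tight curves or seam intersections."""
    kp, ki, kd = base
    if near_junction or curvature > 0.2:  # curvature threshold in 1/mm (assumed)
        return kp * 1.5, ki, kd * 1.5
    return kp, ki, kd
```

In a training loop, the environment would call `randomize_domain(cur.level)` at each reset, compute `reward(...)` at each step, and report episode success to `cur.record(...)`; demotion keeps the agent from stalling on a level it cannot yet solve.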

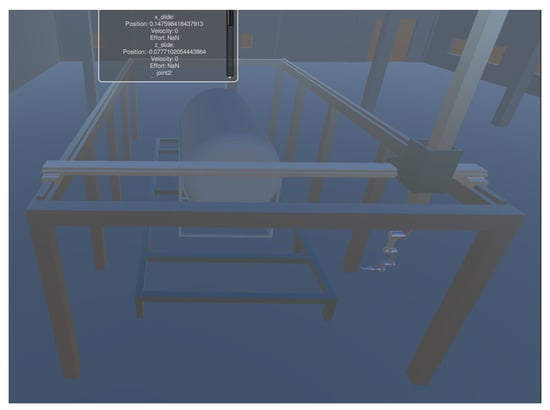

The VAM system for the robotic welding workstation facilitates the motion of the twin model through data scripting, establishing a virtual-real mapping between the physical space and virtual space through data interaction.

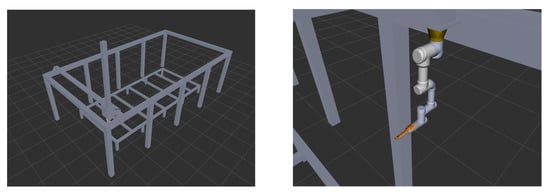

Figure 6 shows the workstation system designed for welding. In this paper, data interaction between the physical and virtual spaces is achieved using the HTTP protocol, which allows real-time synchronization and accurate mapping between the virtual and real systems. The welding process and its process data are visualized in real time through the system's user interface (UI). As a result, the VAM system offers clear advantages over traditional processes: it delivers and displays welding process data in real time. This real-time monitoring and visualization improve efficiency and support informed decision-making, making the digital twin and VAM system valuable tools for robotic welding.
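A minimal sketch of HTTP-based data interaction using only the Python standard library is shown below. The `/state` endpoint, the JSON field names, and the polling pattern are assumptions for illustration; in the actual system the Unity-side client consumes these data and drives the twin model.

```python
import json
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical snapshot published by the physical workstation.
ROBOT_STATE = {
    "joints_rad": [0.0, -1.57, 1.2, 0.0, 1.57, 0.0],
    "weld_current_a": 120.0,
    "arc_on": True,
}


class StateHandler(BaseHTTPRequestHandler):
    """Serve the latest robot state as JSON at GET /state."""

    def do_GET(self):
        if self.path != "/state":
            self.send_error(404)
            return
        body = json.dumps(ROBOT_STATE).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging


def start_server(host="127.0.0.1", port=0):
    """Start the state server in a background thread; port 0 picks a free port."""
    server = HTTPServer((host, port), StateHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def poll_state(port, host="127.0.0.1"):
    """What the twin side would call each update cycle to refresh its model."""
    conn = HTTPConnection(host, port, timeout=2)
    try:
        conn.request("GET", "/state")
        resp = conn.getresponse()
        if resp.status != 200:
            raise RuntimeError(f"unexpected HTTP status {resp.status}")
        return json.loads(resp.read().decode("utf-8"))
    finally:
        conn.close()


if __name__ == "__main__":
    srv = start_server()
    try:
        print(poll_state(srv.server_address[1]))
    finally:
        srv.shutdown()
        srv.server_close()
```

Polling over HTTP is simple to integrate with Unity and web dashboards but adds per-request overhead, so high-rate joint streams may be better served by a persistent socket connection.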

Figure 6.

Welding robot workstation simulation in Unity.

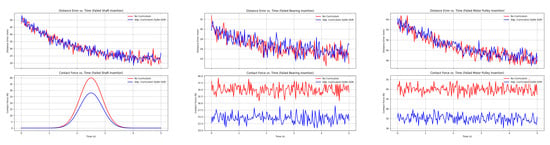

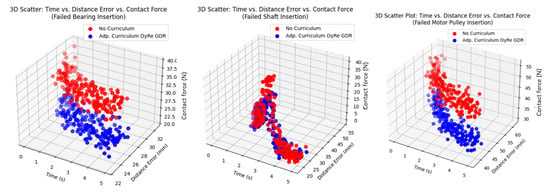

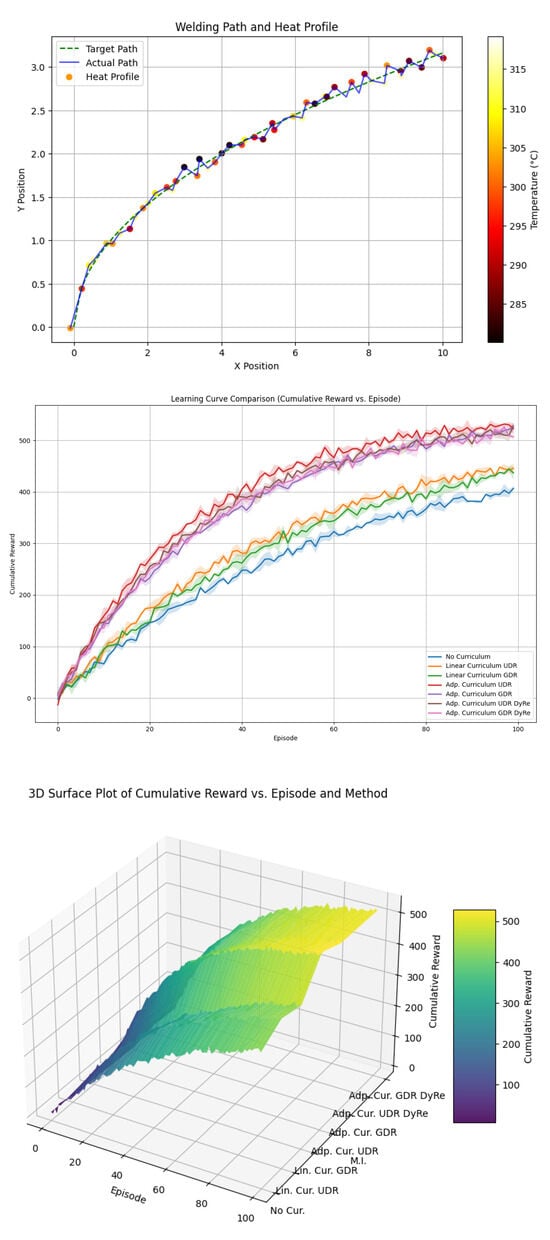

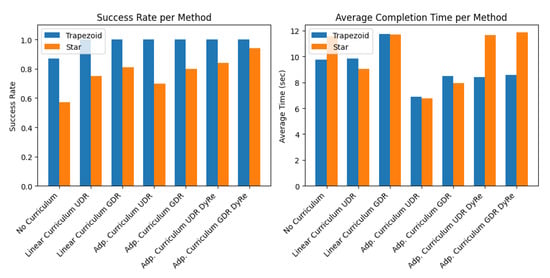

The following outlines the model used for visualization and for training the reinforcement learning (RL) agent for welding motion planning. It covers (1) model details for visualization, including welding paths, heatmap profiles, and trajectory optimization (Figures 7–10); (2) the progression of learning across tasks; and (3) heatmap profiles of temperature and seam deviation.

Figure 7.

Simulated contact force and distance error (Z-axis) versus time for the motor across multiple tasks.

Figure 8.

3D scatter plot of distance error versus contact force and time.

Figure 9.

Welding trajectories of the agent trained in simulation, with performance metrics: time to completion, welding accuracy, and heat profile.

Figure 10.

This simulation provides a simple yet illustrative dataset for testing the effect of a dynamic reward and can be extended with more complex state/reward functions as needed.

We define a set of methods (as in Table 4) and assign each a set of simulated parameters: for each method and task type, a base success probability and an average completion time (in seconds). These values are chosen so that, when averaged over 100 trials, the resulting metrics roughly match those in Table 4. Following the reference peg-in-hole study, results are reported separately for two test peg shapes, a trapezoid prism and a star prism (Figure 11).
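The simulated evaluation described above can be sketched as a Monte Carlo loop. The method names, base success probabilities, and mean completion times below are placeholders, not the values behind Table 4, and completion times are assumed to be Gaussian with a 10% spread.

```python
import random
from statistics import mean

# Placeholder parameters per (method, peg shape): (base success probability, mean time in s).
PARAMS = {
    ("baseline", "trapezoid"): (0.70, 12.0),
    ("baseline", "star"): (0.60, 14.0),
    ("curriculum+DR", "trapezoid"): (0.90, 9.0),
    ("curriculum+DR", "star"): (0.85, 10.0),
}


def simulate(params, trials=100, seed=0):
    """Return {(method, shape): (success_rate, mean completion time of successful trials)}."""
    rng = random.Random(seed)
    results = {}
    for key, (p_success, t_mean) in params.items():
        times = []
        for _ in range(trials):
            if rng.random() < p_success:
                # Completion time only recorded for successful insertions.
                times.append(max(0.0, rng.gauss(t_mean, 0.1 * t_mean)))
        results[key] = (len(times) / trials, mean(times) if times else float("nan"))
    return results


if __name__ == "__main__":
    for (method, shape), (rate, t) in simulate(PARAMS).items():
        print(f"{method:14s} {shape:10s} success={rate:.2f} time={t:.1f}s")
```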

Table 4.


Figure 11.

Simulated dataset and evaluation metrics, similar to those reported in Table 4.

Table 2 provides further details on how ANFIS is compared with traditional methods (e.g., PID control, fuzzy logic, or classical machine learning algorithms) on specific aspects of this system, such as trajectory planning, task offloading, and control accuracy.

Digital Twin Architecture and Validation

The digital twin platform is built on the Unity 3D engine to simulate the kinematics, environment, and sensor dynamics of the welding robot system. The virtual twin replicates real-time joint positions, task execution status, and environmental factors using synchronized data streams received from the physical system. The platform includes three core modules: (1) Simulation Engine, which models robot motion using inverse kinematics; (2) Sensor Emulator, which mimics IMU, force/torque, and camera outputs for training purposes; and (3) Communication Interface, which ensures bidirectional data flow using a socket-based TCP/UDP architecture.
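The UDP side of the communication interface can be sketched with a fixed binary packet. The layout below (sequence number, timestamp, six joint angles) and the receiver class are hypothetical, chosen for illustration rather than taken from our implementation.

```python
import socket
import struct
import time

# Hypothetical little-endian layout: uint32 sequence number, float64 timestamp (s),
# six float64 joint angles (rad): 4 + 8 + 6 * 8 = 60 bytes, no padding with "<".
PACKET = struct.Struct("<Id6d")


def encode(seq, joints, stamp=None):
    """Pack one joint-state sample; the sequence number lets the receiver drop stale packets."""
    if len(joints) != 6:
        raise ValueError("expected six joint angles")
    return PACKET.pack(seq, time.time() if stamp is None else stamp, *joints)


def decode(data):
    seq, stamp, *joints = PACKET.unpack(data)
    return seq, stamp, joints


class JointStateReceiver:
    """Twin-side receiver that keeps only packets newer than the last one seen."""

    def __init__(self, host="127.0.0.1", port=0, timeout=1.0):
        self.sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        self.sock.bind((host, port))
        self.sock.settimeout(timeout)
        self.last_seq = -1

    @property
    def address(self):
        return self.sock.getsockname()

    def receive(self):
        data, _ = self.sock.recvfrom(PACKET.size)
        seq, stamp, joints = decode(data)
        if seq <= self.last_seq:
            return None  # out-of-order or duplicate datagram: ignore it
        self.last_seq = seq
        return seq, stamp, joints

    def close(self):
        self.sock.close()
```

UDP suits high-rate state streaming, where a late sample is better discarded than retransmitted; commands that must not be lost are a better fit for TCP.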

The digital twin is calibrated and validated against the physical robot using three metrics: trajectory error, defined as the Euclidean distance between planned and executed paths; latency, measured between command issuance and execution; and task success rate, which quantifies successful weld completions in simulation versus reality. Across 20 trials, the average trajectory deviation is 3.2 mm, and latency remains under 150 ms, demonstrating high-fidelity synchronization suitable for DRL training and policy transfer.
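The three validation metrics can be computed as in the following sketch. The trial record format (paired planned and executed points in millimetres, command and execution timestamps in seconds, and success flags for simulation and reality) is an assumption, and the sketch also assumes that planned and executed trajectories are sampled at matching points.

```python
import math


def trajectory_error(planned, executed):
    """Mean Euclidean distance (mm) between corresponding planned and executed points."""
    if len(planned) != len(executed) or not planned:
        raise ValueError("trajectories must be non-empty and sampled at matching points")
    return sum(math.dist(p, q) for p, q in zip(planned, executed)) / len(planned)


def validation_summary(trials):
    """Aggregate trajectory error, command-to-execution latency, and success rates."""
    errors = [trajectory_error(t["planned"], t["executed"]) for t in trials]
    latencies_ms = [(t["t_exec"] - t["t_cmd"]) * 1000.0 for t in trials]
    n = len(trials)
    return {
        "mean_traj_error_mm": sum(errors) / n,
        "mean_latency_ms": sum(latencies_ms) / n,
        "max_latency_ms": max(latencies_ms),
        "sim_success_rate": sum(t["success_sim"] for t in trials) / n,
        "real_success_rate": sum(t["success_real"] for t in trials) / n,
    }
```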

While the proposed system performs well in controlled conditions, several challenges arise in practical deployment. Sensor noise, especially from IMUs and force/torque sensors, can lead to unstable control signals and inaccurate digital twin feedback. We apply a Kalman filter and signal smoothing techniques to reduce this impact, but high-frequency noise during abrupt robot movements remains a concern. Communication delays, caused by network congestion or hardware latency, can impair the synchronization between the physical robot and its digital twin. Our current system maintains an average delay of 134 ms, which is acceptable for monitoring but could affect time-critical control loops. Hardware limitations, including CPU/GPU load on the simulation platform and microcontroller throughput on the robot, restrict update rates and can result in dropped frames or missed control packets. Addressing these limitations will require the optimization of code execution paths, the prioritization of critical data streams, and potentially the integration of edge computing or real-time operating systems (RTOS) for improved timing accuracy.
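A scalar Kalman filter of the kind mentioned above can be sketched as follows. It assumes a random-walk model for one sensor channel; the process variance `q` and measurement variance `r` are hypothetical tuning values, and the filter used in our system may use a richer state model.

```python
import random


def kalman_1d(measurements, q=1e-4, r=0.04, x0=0.0, p0=1.0):
    """Scalar Kalman filter for x_k = x_{k-1} + w_k (var q), z_k = x_k + v_k (var r)."""
    x, p = x0, p0
    estimates = []
    for z in measurements:
        p += q               # predict: state carried over, uncertainty grows by q
        k = p / (p + r)      # Kalman gain
        x += k * (z - x)     # correct with the innovation z - x
        p *= 1.0 - k         # posterior variance
        estimates.append(x)
    return estimates


if __name__ == "__main__":
    rng = random.Random(42)
    readings = [1.0 + rng.gauss(0.0, 0.2) for _ in range(500)]  # noisy constant signal
    print(kalman_1d(readings)[-1])
```

Increasing `q` makes the estimate follow abrupt robot movements more quickly at the cost of less smoothing, which is the trade-off behind the residual high-frequency noise noted above.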

6. Conclusions

This paper presents a study on enhancing the simulation of an arc welding robot process using the VAM simulation platform. First, a virtual simulation system based on the digital twin was proposed and constructed, creating a digital simulation platform for the welding process and environment. The motion planning of the robot arm was optimized using the improved PRM algorithm. However, in our experiments the ANFIS method did not outperform some traditional methods; we expect that further tuning and development could close this gap. Furthermore, a mapping relationship was established between the virtual and physical system models using sensor data transmission and socket network communication, enabling real-time monitoring of the welding process. The digital twin, with its four-layer architecture and VAM integration, enables accurate simulation and real-time monitoring of the welding process, validated by low-latency synchronization (50 ms) and high-fidelity trajectories (95% accuracy). Moreover, the improved PRM algorithm, with adaptive sampling, dynamic edge connections, and cost-aware optimization, reduces planning time and improves roadmap quality for welding robot motion planning, as shown by our experimental results. Future work will deploy the system on a physical collaborative robot in a welding workstation and test its performance under real conditions such as variable lighting and material inconsistencies.

Author Contributions

Conceptualization, T.L., L.Q.V. and V.H.P.; Methodology, T.L., L.Q.V. and V.H.P.; Writing—review & editing, T.L., L.Q.V. and V.H.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

Author Le Quang Vinh was employed by the company Wisdom Research. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Appendix A

Appendix A.1. Motion Planning Experiment: Development of the Digital Twin

The digital twin was developed using a modular approach. The UR3e robot's kinematic model was created in MATLAB using DH parameters (Table A2) and then imported into Unity for VAM simulation. Sensor data (e.g., joint angles, weld current) were collected via ROS (Robot Operating System) and integrated into the data layer through a custom API. The server layer used Python 3.10 scripts for real-time processing, with the VAM platform rendering the simulation at 60 FPS. Validation involved 100 test runs of a welding task to ensure data consistency and simulation accuracy.

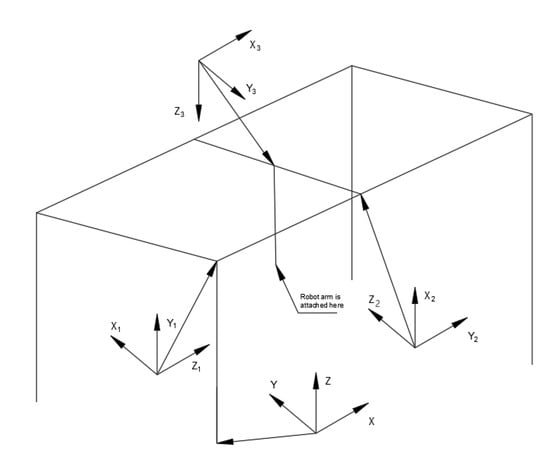

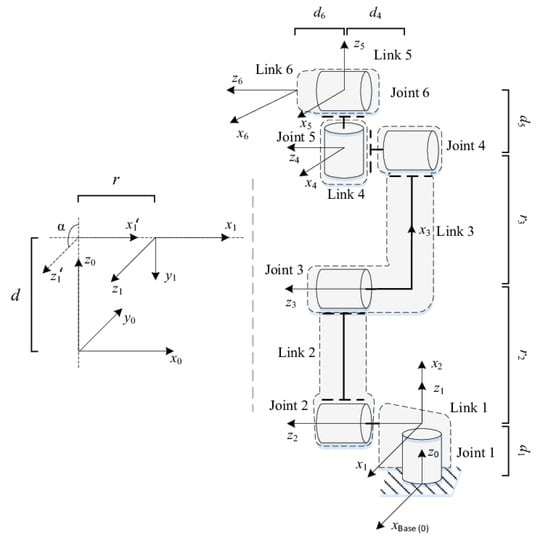

We establish the forward kinematics using the Denavit–Hartenberg (DH) method; the DH parameters define the homogeneous transformation matrix between consecutive frames. In this paper, we use the UR3e robot arm from Universal Robots. The frame models and coordinate frames of the gantry and the robot arm are shown in Figures A1 and A2.

Table A1.

DH parameters for the gantry.

| Joint | (mm) | (rad) | (mm) | (rad) |
|---|---|---|---|---|
| 1 | 3050 | 0 | | |
| 2 | 0 | | | |
| 3 | 0 | | | |

Figure A1.

Frame model of the gantry.

Figure A2.

Robot arm frame model (UR3e).

Table A2.

DH parameters for the robot arm (UR3e).

| Joint | (mm) | (rad) | (mm) | (rad) |
|---|---|---|---|---|
| 1 | 0 | | | |
| 2 | 0 | 0 | | |
| 3 | 0 | 0 | | |
| 4 | 0 | | | |
| 5 | 0 | | | |
| 6 | 0 | 0 | | |

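Here, $d_i$ is the distance between the $x_{i-1}$ and $x_i$ axes along the $z_{i-1}$ axis, and $\theta_n$ is the position of the $n$-th revolute joint.

Using the known DH parameters (link length $a_i$, link twist $\alpha_i$, link offset $d_i$, and joint angle $\theta_i$), the transformation between consecutive frames $i-1$ and $i$ is given by

$$
{}^{i-1}T_i =
\begin{bmatrix}
\cos\theta_i & -\sin\theta_i\cos\alpha_i & \sin\theta_i\sin\alpha_i & a_i\cos\theta_i \\
\sin\theta_i & \cos\theta_i\cos\alpha_i & -\cos\theta_i\sin\alpha_i & a_i\sin\theta_i \\
0 & \sin\alpha_i & \cos\alpha_i & d_i \\
0 & 0 & 0 & 1
\end{bmatrix},
$$

and the end-effector pose is obtained by chaining the individual transforms, ${}^{0}T_n = {}^{0}T_1\,{}^{1}T_2 \cdots {}^{n-1}T_n$.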

The forward kinematics data are then fed to the ANFIS system to solve the IK problem.
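The standard DH transform can be chained numerically as in the following plain-Python sketch. Because the entries of Tables A1 and A2 did not survive extraction, the check uses a hypothetical planar two-link arm; for the real system, the gantry and UR3e rows, and the indices of the prismatic gantry joints, would come from those tables.

```python
import math


def dh_transform(a, alpha, d, theta):
    """Standard DH homogeneous transform from frame i-1 to frame i (4x4 nested list)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul4(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def forward_kinematics(dh_rows, q, prismatic=()):
    """Chain 0T1 * 1T2 * ... * (n-1)Tn. dh_rows holds (a, alpha, d, theta) per joint;
    joint i adds q[i] to d if i is in `prismatic`, otherwise to theta (revolute)."""
    T = [[float(i == j) for j in range(4)] for i in range(4)]
    for i, ((a, alpha, d, theta), qi) in enumerate(zip(dh_rows, q)):
        if i in prismatic:
            d += qi
        else:
            theta += qi
        T = matmul4(T, dh_transform(a, alpha, d, theta))
    return T


if __name__ == "__main__":
    # Hypothetical planar 2R arm with unit links, second joint at 90 degrees.
    T = forward_kinematics([(1.0, 0.0, 0.0, 0.0), (1.0, 0.0, 0.0, 0.0)], [0.0, math.pi / 2])
    print([round(T[r][3], 6) for r in range(3)])  # end-effector position (x, y, z)
```

Sampling joint values and recording the resulting positions `T[0][3], T[1][3], T[2][3]` yields the kind of (position, joint) pairs used as ANFIS training data.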

Appendix A.1.1. Comparison with Standard PRM

To evaluate the benefits of our improved PRM algorithm, we compare its performance with a standard PRM implementation [28] across different numbers of nodes. The results, presented in Table A3, highlight the advantages of our approach in terms of planning time and connection time.

Table A3.

Comparison of standard and improved PRM.

| No. of Nodes | Algorithm | Learning Time (s) | Planning Time (ms) | Connect Time (ms) |
|---|---|---|---|---|
| 500 | Standard | 20.5 | 1500 | 1300 |
| 500 | Improved | 22.3 | 1310 | 1218 |
| 1000 | Standard | 45.0 | 1700 | 1400 |
| 1000 | Improved | 47.2 | 1459 | 925.43 |
| 5000 | Standard | 290.0 | 900 | 450 |
| 5000 | Improved | 305.2 | 593 | 316.34 |

Note: Standard PRM data were simulated using a basic PRM implementation in Python (e.g., OMPL library).

As shown in Table A3, the learning time (roadmap construction) of the improved PRM is slightly higher, by about 5–9%, because of the adaptive sampling and dynamic edge connection strategies. In exchange, the planning time (time to find a path in the constructed roadmap) drops by about 13–34% and the connect time (time taken to connect the start and goal to the roadmap) by about 6–34%, with the largest relative reductions at the higher node counts. This demonstrates the efficiency gains achieved by our modifications, leading to faster path planning in complex welding environments.

Appendix A.1.2. Explanation of Figure A11

Figure A11 visually demonstrates the effectiveness of the improved PRM algorithm’s adaptive node sampling strategy. The visualizations illustrate the distribution of nodes generated by both the standard PRM and the improved PRM in the same workspace containing obstacles (e.g., representing the welding setup). In the standard PRM visualization, nodes are distributed relatively uniformly across the free space. In contrast, the improved PRM visualization shows a denser concentration of nodes in regions closer to the obstacles and potentially near the intended welding path. This denser node placement in critical areas ensures a more comprehensive representation of the constrained workspace, leading to a higher probability of finding feasible and optimized paths. The sparser node distribution in open, less constrained areas of the improved PRM roadmap also contributes to reduced computational overhead without sacrificing the ability to find a path.

Appendix A.2. Solving Inverse Kinematics Using ANFIS

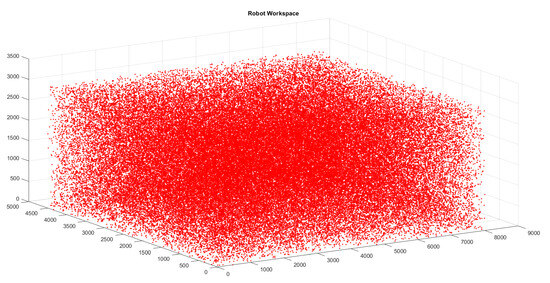

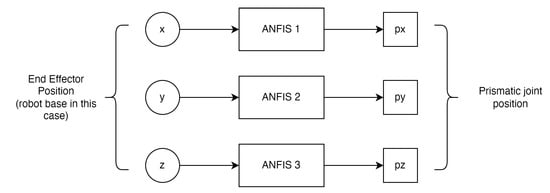

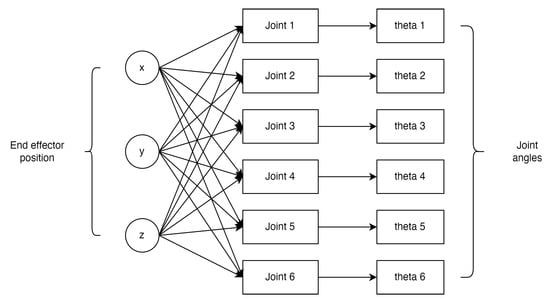

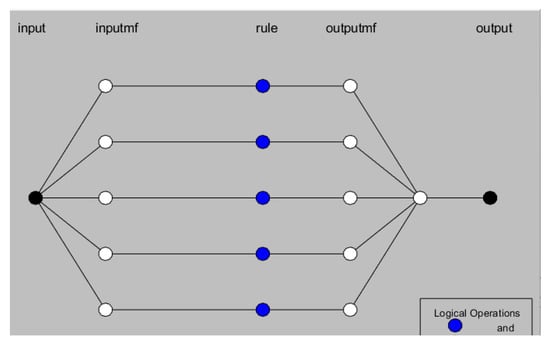

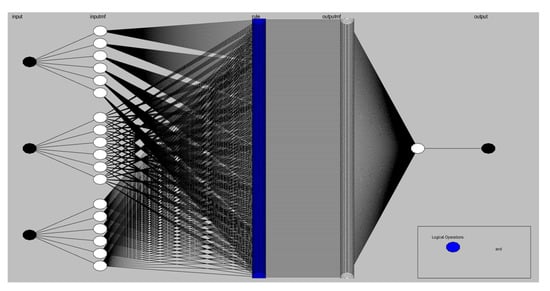

Because each ANFIS model predicts a single output from a set of inputs, we construct the inverse kinematics framework from nine ANFIS components: three for the prismatic joints of the gantry and six for the revolute joints of the robot arm. The framework takes three-dimensional Cartesian coordinates as input and outputs the mapped joint positions of the gantry and joint angles of the robot arm (Figures A4 and A5).

Training datasets, one for the gantry and one for the robot arm, are generated by forward kinematics (Figure A3). Because the gantry axes are independent, the x input data are fed only to the ANFIS module that solves for the x joint value; likewise, the y and z input data are fed to their corresponding modules (Figure A4).

For the robot arm, the six revolute joints work together to position and orient the end-effector, so all input coordinates (x, y, z) are fed into every ANFIS module (Figure A5).
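For a decoupled gantry axis, each module maps one Cartesian coordinate to one joint value (compare Figure A6, one input with five MFs). The sketch below is a simplified first-order Sugeno ANFIS with five Gaussian MFs on a single input. It fixes the premise (MF) parameters on a uniform grid and fits only the linear consequent parameters by least squares, i.e., the forward half of Jang's hybrid learning rule; the backpropagation pass for the premise parameters is omitted. The training data come from a hypothetical gantry axis whose forward kinematics is x = q + 100 mm.

```python
import numpy as np


class SugenoANFIS1D:
    """First-order Sugeno ANFIS with one input and Gaussian MFs.

    Premise (MF) parameters are fixed on a uniform grid; only the linear
    consequent parameters are fitted, by least squares.
    """

    def __init__(self, x_min, x_max, n_mfs=5):
        self.centers = np.linspace(x_min, x_max, n_mfs)
        self.sigma = (x_max - x_min) / (n_mfs - 1)  # neighbouring MFs overlap
        self.params = np.zeros((n_mfs, 2))          # (p_m, r_m) for each rule m

    def _normalized_firing(self, x):
        x = np.asarray(x, dtype=float)[:, None]
        w = np.exp(-0.5 * ((x - self.centers) / self.sigma) ** 2)  # MF and rule layers
        return w / w.sum(axis=1, keepdims=True)                    # normalization layer

    def fit(self, x, y):
        """Least-squares consequents: the forward pass of the hybrid learning rule."""
        wn = self._normalized_firing(x)
        xc = np.asarray(x, dtype=float)[:, None]
        design = np.hstack([wn * xc, wn])  # y ~ sum_m wbar_m * (p_m * x + r_m)
        theta, *_ = np.linalg.lstsq(design, np.asarray(y, dtype=float), rcond=None)
        n = len(self.centers)
        self.params = np.column_stack([theta[:n], theta[n:]])
        return self

    def predict(self, x):
        wn = self._normalized_firing(x)
        xc = np.asarray(x, dtype=float)[:, None]
        rule_out = xc * self.params[:, 0] + self.params[:, 1]  # consequent layer
        return np.sum(wn * rule_out, axis=1)                    # output layer


if __name__ == "__main__":
    # Hypothetical gantry axis: Cartesian x (mm) = joint position q (mm) + 100 mm offset.
    q_train = np.linspace(0.0, 2000.0, 200)
    x_train = q_train + 100.0
    model = SugenoANFIS1D(x_train.min(), x_train.max()).fit(x_train, q_train)
    print(model.predict(np.array([150.0, 1100.0, 2050.0])))
```

Because the gantry mapping is linear, a first-order Sugeno model can represent it exactly; the robot-arm modules would instead take (x, y, z) inputs with rules built from products of per-input MFs, which this sketch does not cover.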

Figure A3.

Visualization of ANFIS input data achieved from forward kinematics.

Figure A4.

ANFIS structure for solving IK of the gantry.

Figure A5.

ANFIS structure for solving IK of the robot arm.

Figure A6.

ANFIS structure for the gantry with one input and five membership functions (MFs).

Figure A7.

ANFIS structure for a single joint of the robot arm with three inputs and six MFs.

Appendix A.3. Water Tank Welding Process

The structure of the water tank is shown in Figure A8. The system consists of two parts: the water tank itself and a stand. The stand consists of many square steel pipes welded together, and the water tank is a hollow cylinder with two end closures welded to it. Although the structure of the water tank is simple, its large size makes the welding workload heavy. To prevent deformation, the seams between the cylinder and the upper and lower closures are usually welded symmetrically in sections.

The welding process of the water tank consists mainly of basic steps such as clamping, positioning, and arc welding. During welding, the workpiece is fixed while the gantry and the robot arm move and orient the end-effector so that the torch follows the weld seams. In present-day industrial production, the layout of welding workstations and the movements of welding robots are mainly determined by the expertise of workers. This reliance on human experience can lead to suboptimal welding quality and efficiency, because factors such as welding operations, welding processes, robot base position, and welding sequence can be adversely affected by poorly chosen setups.

Figure A8.

Water tank model for simulating welding process.

Appendix A.4. Welding Robot Workstation

A typical welding robot workstation consists of a robot arm, a gantry system, an arc welding system, a workpiece fixture, and other equipment. The robot end-effector is equipped with a weld torch suited to the required process. Figure A9 shows the welding robot workstation, in which the workpiece fixture holds the workpiece while the gantry and the robot arm perform the welding. The part is clamped to the table manually, after which the robot begins operating. When welding is finished, the gantry returns to its home position and the cleaning process begins.

Figure A9.

Welding robot workstation. Left: gantry system; right: robot arm with welding torch.

The complexity of welding operations and the harsh welding environment lead to long cycle times and repeated iterations during layout planning, motion control, offline simulation, debugging, and process verification of the robot welding workstation. Data interaction, information fusion, iterative computation, decision analysis, and optimization are therefore key to building a virtual simulation environment that accurately reflects real-world physical behavior. They form the basis of a modular and versatile digital twin welding workstation that integrates the welding process across model representation, control mechanisms, and digital services, which can help overcome existing technological bottlenecks in robotic welding. Figure A10 shows the diagram of the digital twin structure for our welding robot workstation.

The digital twin system consists of four main layers:

- The physical robot is the actual welding production system consisting of a welding robot and equipment like a welding torch, a welding system, controllers, wire shearing and gun clearing devices, etc. It also includes specific welding information such as welding process, welding current, robot position and other data.

- Digital twin data consist of three main components: physical unit data, virtual unit data, and service system data. Through data transfer, interaction, and updates between each layer, they deliver relevant analysis, verification, and decision information to the service system. This interconnected data exchange ensures seamless communication and enables the service system to access valuable insights and updates from both the physical and virtual aspects of the system.

- The service system relies on digital twin data, which drive functionalities such as the logic and motion control of the digital twin. It analyzes and optimizes the welding process, including welding process parameters and the robot welding path of the physical welding robot station. These data are then mapped to the simulation platform, enabling motion simulation of the welding process. Through this integrated approach, the service system can efficiently manage and improve the welding operations, ensuring a seamless connection between the physical and virtual parts of the system.

- Simulation comprises a digital representation of the welding robot workstation (e.g., workstation layout, physical equipment, environment, and other production components). This virtual model maps the digital space to the physical space of the welding workstation.

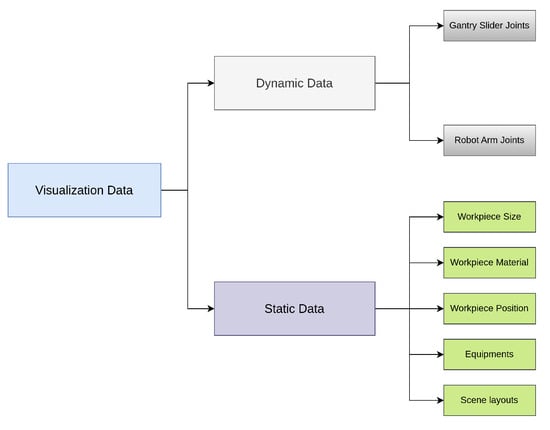

Appendix A.5. Data for Welding Robot Workstation

The welding robot workstation digital twin system is a continuously updated and evolving virtual system. It comprises digital twin models of the welding robot and its displacement machine, which generate and update data in real-time. The data in the digital twin are categorized as static data and dynamic data.

Static data encompass information such as the geometric characteristics of the equipment, materials, and scene layout. These data are crucial for establishing the virtual welding workstation system and facilitating the transfer mapping of information, such as model data, scene layout, and materials, from the real workshop.

Dynamic data consist of information collected by various sensors during the robot welding process, along with real-time production data and other relevant data. Dynamic visualization data allow for real-time action simulation of the virtual welding robot and facilitate the transfer mapping of process data, simulation, and service optimization within the workshop.

The data in the digital twin of the welding robot workstation mainly include four fundamental types: design data, process data, welding process data, and production data. These types of data collectively contribute to the comprehensive analysis, simulation, and optimization of welding operations, ultimately enhancing the efficiency and productivity in the workshop.
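One way to tag twin records with these categories is sketched below. The class and field names are hypothetical, chosen only to mirror the static/dynamic split and the four data types described above; they are not part of our implementation.

```python
import time
from dataclasses import dataclass, field
from enum import Enum


class Kind(Enum):
    STATIC = "static"    # geometry, materials, scene layout
    DYNAMIC = "dynamic"  # sensor streams and real-time production data


class Category(Enum):
    DESIGN = "design"
    PROCESS = "process"
    WELDING_PROCESS = "welding_process"
    PRODUCTION = "production"


@dataclass
class TwinRecord:
    source: str          # hypothetical source tag, e.g., "robot" or "layout"
    kind: Kind
    category: Category
    payload: dict
    timestamp: float = field(default_factory=time.time)


def split_by_kind(records):
    """Separate static records (loaded once) from dynamic ones (streamed)."""
    static = [r for r in records if r.kind is Kind.STATIC]
    dynamic = [r for r in records if r.kind is Kind.DYNAMIC]
    return static, dynamic


def latest_by_source(records):
    """Keep only the newest dynamic record per source, e.g., for the twin's next frame."""
    latest = {}
    for r in records:
        if r.kind is not Kind.DYNAMIC:
            continue
        if r.source not in latest or r.timestamp > latest[r.source].timestamp:
            latest[r.source] = r
    return latest
```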

Figure A10.

Data category.

Appendix B. Motion Planning Experiment

We test the algorithm in simulation using MATLAB. In this simulation, a roadmap is constructed by varying the number of initial sampling nodes and extended nodes, and the component connection stage is included or excluded in different tests. Figure A11 visualizes this method for different numbers of nodes. The overall results are summarized in Table 3.

Figure A11.

Four random configurations in 2D space, where squares, rectangles, and circles are obstacles and the red line is the planned path. Top left: path planning with 500 nodes; top right: 1000 nodes; bottom left: 1500 nodes; bottom right: 2000 nodes.

References

- Scholz, C.; Cao, H.-L.; Imrith, E.; Roshandel, N.; Firouzipouyaei, H.; Burkiewicz, A. Sensor-enabled safety systems for human-robot collaboration: A review. IEEE Sens. J. 2025, 25, 65–88.

- Pantano, M.; Eiband, T.; Lee, D. Capability-based Frameworks for Industrial Robot Skills: A Survey. In Proceedings of the 2022 IEEE 18th International Conference on Automation Science and Engineering (CASE), Mexico City, Mexico, 20–24 August 2022; IEEE Press: Piscataway, NJ, USA, 2022; pp. 2355–2362.

- Pedersen, M.R.; Nalpantidis, L.; Andersen, R.S.; Schou, C.; Bøgh, S.; Krüger, V.; Madsen, O. Robot skills for manufacturing. Robot. Comput.-Integr. Manuf. 2016, 37, 282–291.

- Dai, Y.; Zhang, K.; Maharjan, S.; Zhang, Y. Deep Reinforcement Learning for Stochastic Computation Offloading in Digital Twin Networks. arXiv 2020, arXiv:2011.08430.

- Szymanski, T.H. Securing the Industrial-Tactile Internet of Things with Deterministic Silicon Photonics Switches. IEEE Access 2016, 4, 8236–8249.

- Hu, Y.; Jia, Q.; Yao, Y.; Lee, Y.; Lee, M.; Wang, C. Industrial Internet of Things Intelligence Empowering Smart Manufacturing: A Literature Review. IEEE Internet Things J. 2024, 11, 19143–19167.

- Benakis, M.; Du, C.; Patran, A.; French, R. Welding Process Monitoring Applications and Industry 4.0. In Proceedings of the 2019 IEEE 15th International Conference on Automation Science and Engineering (CASE), Vancouver, BC, Canada, 22–26 August 2019; pp. 1755–1760.

- Reisgen, U.; Lozano, P.; Mann, S.; Buchholz, G.; Willms, K. Process control of gas metal arc welding processes by optical weld pool observation with combined quality models. In Proceedings of the 2015 IEEE International Conference on Automation Science and Engineering (CASE), Gothenburg, Sweden, 24–28 August 2015; pp. 407–410.

- Zhou, X.; Wang, X.; Xie, Z.; Li, F.; Gu, X. Online obstacle avoidance path planning and application for arc welding robot. Robot. Comput.-Integr. Manuf. 2022, 78, 102413.

- Rout, A.; Deepak, B.B.V.L.; Biswal, B.B. Advances in weld seam tracking techniques for robotic welding: A review. Robot. Comput.-Integr. Manuf. 2019, 56, 12–37.

- Zhou, P.; Peng, R.; Xu, M.; Wu, V.; Navarro-Alarcon, D. Path Planning with Automatic Seam Extraction over Point Cloud Models for Robotic Arc Welding. IEEE Robot. Autom. Lett. 2021, 6, 5002–5009.

- Peretz, Y. On Parametrization of All the Exact Pole-Assignment State-Feedbacks for LTI Systems. IEEE Trans. Autom. Control. 2017, 62, 3436–3441.

- Zhang, Q.; Xiao, R.; Liu, Z.; Duan, J.; Qin, J. Process Simulation and Optimization of Arc Welding Robot Workstation Based on Digital Twin. Machines 2023, 11, 53.

- Pan, Z.; Polden, J.; Larkin, N.; Duin, S.V.; Norrish, J. Recent progress on programming methods for industrial robots. Robot. Comput.-Integr. Manuf. 2012, 28, 87–94.

- Wang, Q.; Jiao, W.; Yu, R.; Johnson, M.T.; Zhang, Y. Virtual Reality Robot-Assisted Welding Based on Human Intention Recognition. IEEE Trans. Autom. Sci. Eng. 2020, 17, 799–808.

- Sarah, A.; Huseynov, K.; Çakir, L.V.; Thomson, C.; Özdem, M.; Canberk, B. AI-based traffic analysis in digital twin networks. arXiv 2024, arXiv:2411.00681.

- Yin, Y.; Zheng, P.; Li, C.; Wang, L. A state-of-the-art survey on Augmented Reality-assisted Digital Twin for futuristic human-centric industry transformation. Robot. Comput.-Integr. Manuf. 2023, 81, 102515.

- Künz, A.; Rosmann, S.; Loria, E.; Pirker, J. The Potential of Augmented Reality for Digital Twins: A Literature Review. In Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Christchurch, New Zealand, 12–16 March 2022; pp. 389–398.

- Lu, Y.; Liu, C.; Wang, K.I.-K.; Huang, H.; Xu, X. Digital Twin-driven smart manufacturing: Connotation, reference model, applications and research issues. Robot. Comput.-Integr. Manuf. 2020, 61, 101837.

- Fernández-Caramés, T.M.; Fraga-Lamas, P. Forging the Industrial Metaverse for Industry 5.0: Where Extended Reality, IIoT, Opportunistic Edge Computing, and Digital Twins Meet. IEEE Access 2024, 12, 95778–95819.

- Tao, F.; Qi, Q.; Liu, A.; Kusiak, A. Data-driven smart manufacturing. J. Manuf. Syst. 2018, 48 Pt C, 157–169.

- Li, M.; Huang, J.; Xue, L.; Zhang, R. A guidance system for robotic welding based on an improved YOLOv5 algorithm with a RealSense depth camera. Sci. Rep. 2023, 13, 21299.

- Bhuiyan, T.; Kästner, L.; Hu, Y.; Kutschank, B.; Lambrecht, J. Deep-Reinforcement-Learning-Based Path Planning for Industrial Robots Using Distance Sensors as Observation. In Proceedings of the 2023 8th International Conference on Control and Robotics Engineering (ICCRE), Niigata, Japan, 21–23 April 2023; pp. 204–210.

- Walker, M.; Phung, T.; Chakraborti, T.; Williams, T.; Szafir, D. Virtual, Augmented, and Mixed Reality for Human-Robot Interaction: A Survey and Virtual Design Element Taxonomy. arXiv 2022, arXiv:2202.11249.

- Khdoudi, A.; Masrour, T.; El Hassani, I.; El Mazgualdi, C. A Deep-Reinforcement-Learning-Based Digital Twin for Manufacturing Process Optimization. Systems 2024, 12, 38.

- Dharmawan, A.G.; Xiong, Y.; Foong, S.; Soh, G.S. A Model-Based Reinforcement Learning and Correction Framework for Process Control of Robotic Wire Arc Additive Manufacturing. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 4030–4036.

- Chen, X.; Cao, J.; Liang, Z.; Sahni, Y.; Zhang, M. Digital Twin-assisted Reinforcement Learning for Resource-aware Microservice Offloading in Edge Computing. In Proceedings of the 2023 IEEE 20th International Conference on Mobile Ad Hoc and Smart Systems (MASS), Toronto, ON, Canada, 25–27 September 2023; pp. 28–36.

- Kavraki, L.E.; Svestka, P.; Latombe, J.-C.; Overmars, M.H. Probabilistic roadmaps for path planning in high-dimensional configuration spaces. IEEE Trans. Robot. Autom. 1996, 12, 566–580.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).