Real-Time Seam Extraction Using Laser Vision Sensing: Hybrid Approach with Dynamic ROI and Optimized RANSAC

Abstract

1. Introduction

- A Dynamic ROI Transmission Mechanism: An adaptive ROI generation strategy is proposed that uses the geometric features of the laser stripe in historical frames and suppresses noise regions through inter-frame spatial correlation analysis.

- Slope-Constrained RANSAC Optimization: The conventional RANSAC algorithm is enhanced with historical slope constraints. Randomly sampled point pairs must satisfy a slope threshold, which accelerates iterative convergence.

- A Sequential Processing Architecture: A recursive workflow of “pre-arc baseline initialization → dynamic ROI updating → constrained fitting” is established, achieving efficient, real-time tracking throughout the welding process.

2. Methodology

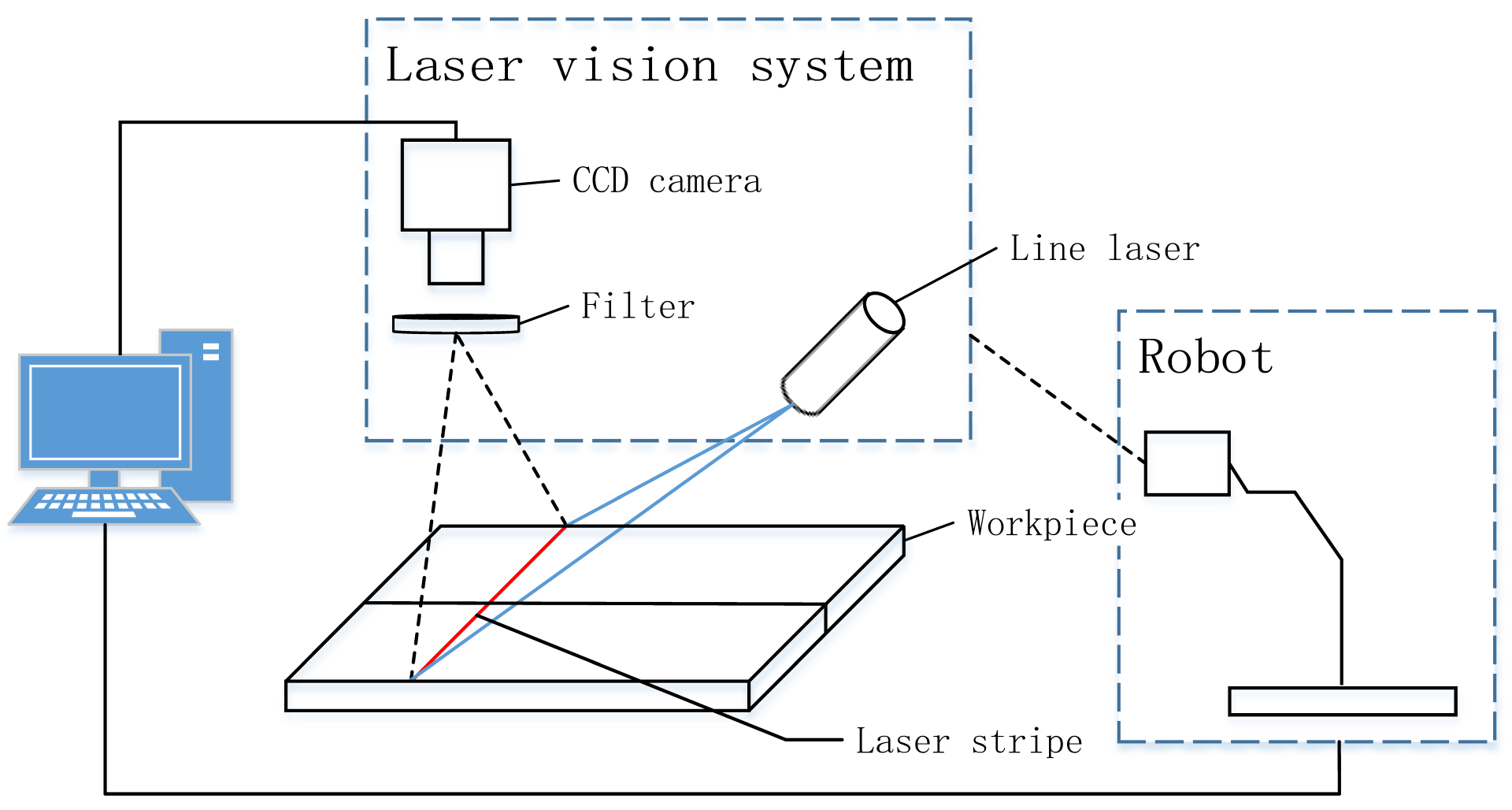

2.1. System Architecture and Data Acquisition

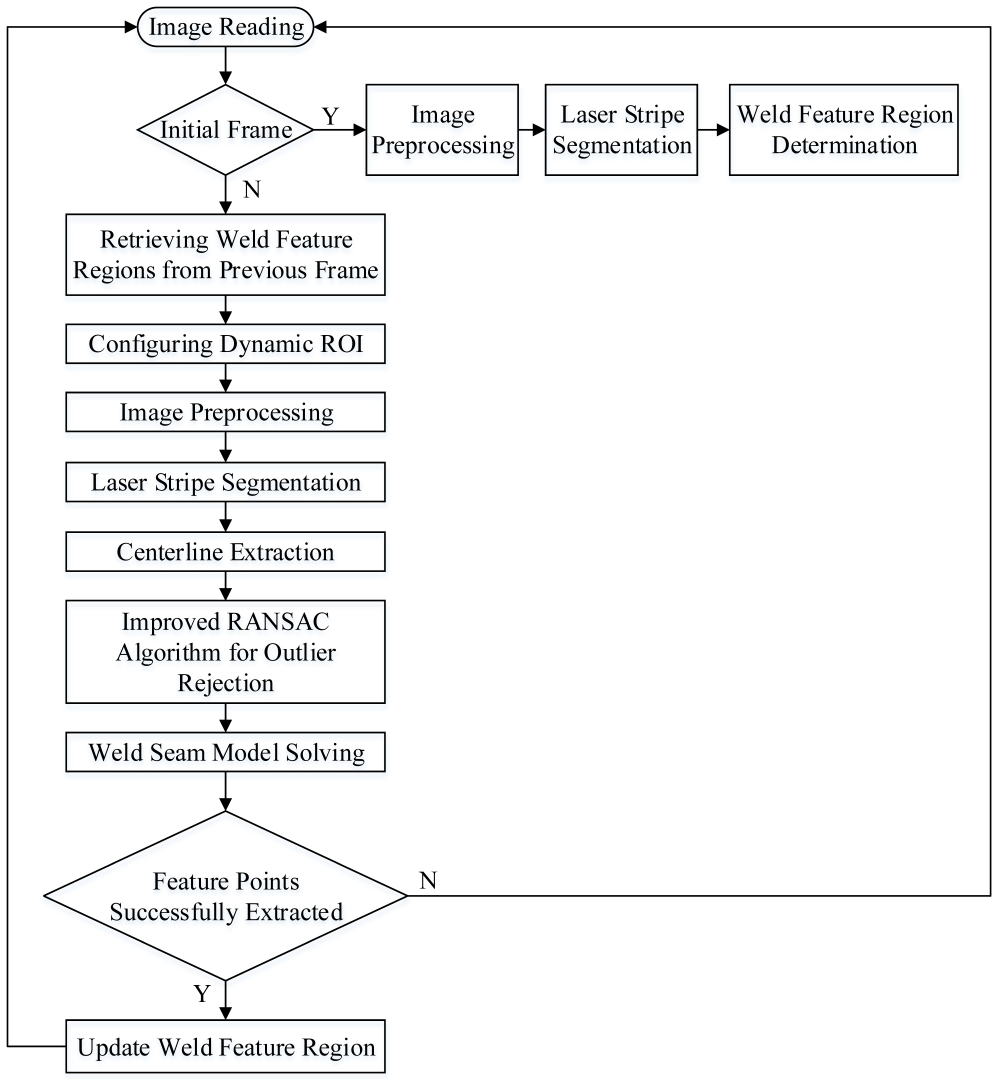

2.2. Proposed Algorithm

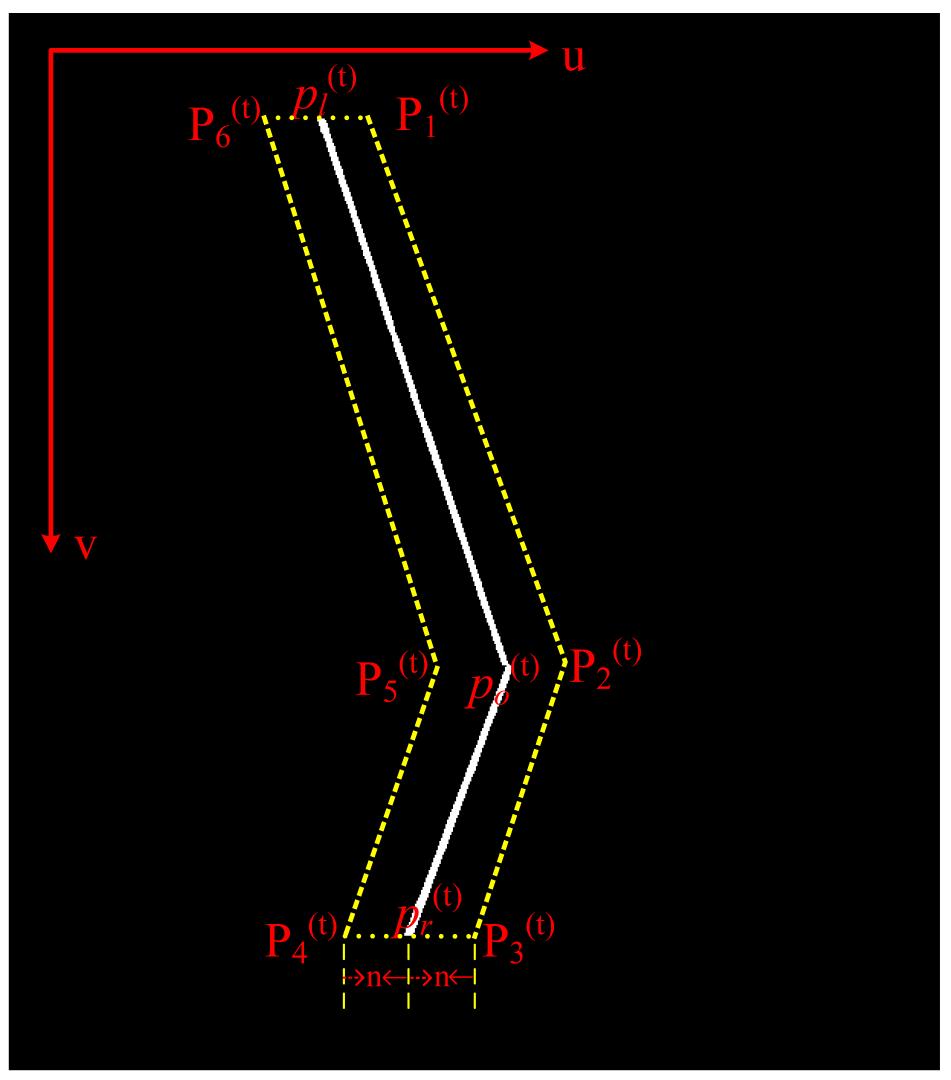

2.2.1. Dynamic ROI

| Algorithm 1 Core principle of dynamic ROI |
|---|
| Require: Initial frame without noise |
| Require: Subsequent frames |
| Ensure: Real-time laser stripe tracking |
| Initialization: |
| 1: Extract the initial ROI from the initial frame |
| Iterative processing: |
| 2: for each subsequent frame do |
| 3: Apply the previous ROI to the current frame |
| 4: Extract the new stripe region within the ROI |
| 5: Propagate the ROI to the next frame |
| 6: end for |
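The ROI propagation in Algorithm 1 can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the ROI is assumed to be the axis-aligned bounding box of the thresholded stripe pixels padded by a fixed margin, and the fixed intensity threshold, the margin, the full-frame fallback when the stripe is lost, and the names `stripe_roi` and `track` are all hypothetical.

```python
import numpy as np

def stripe_roi(mask: np.ndarray, margin: int = 20):
    """Bounding box (r0, r1, c0, c1) of stripe pixels, padded by `margin`
    and clipped to the image; returns None if the mask is empty."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None
    h, w = mask.shape
    r0 = max(int(rows.min()) - margin, 0)
    r1 = min(int(rows.max()) + margin + 1, h)
    c0 = max(int(cols.min()) - margin, 0)
    c1 = min(int(cols.max()) + margin + 1, w)
    return r0, r1, c0, c1

def track(frames, threshold=128, margin=20):
    """Propagate the ROI frame to frame (hypothetical parameters).

    frames[0] is assumed to be the noise-free pre-arc frame; every later
    frame is searched only inside the ROI found in the previous frame,
    so bright noise outside that window is ignored."""
    roi = stripe_roi(frames[0] > threshold, margin)  # initialization
    rois = [roi]
    for frame in frames[1:]:
        if roi is None:  # assumed fallback: stripe lost, search whole frame
            roi = (0, frame.shape[0], 0, frame.shape[1])
        r0, r1, c0, c1 = roi
        local = np.zeros(frame.shape, dtype=bool)
        # apply the previous ROI: threshold only inside the window
        local[r0:r1, c0:c1] = frame[r0:r1, c0:c1] > threshold
        roi = stripe_roi(local, margin)  # extract and propagate
        rois.append(roi)
    return rois
```

In this sketch a bright spatter blob far from the previous stripe position never enters the new ROI, which is the inter-frame correlation idea in its simplest form; the margin trades robustness to fast stripe motion against noise exclusion.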

2.2.2. Weld Seam Segmentation

Step 1: Thresholding

Step 2: Morphological Filtering
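Steps 1 and 2 can be sketched as follows. This assumes Otsu's method (listed in the references) as the global threshold and a binary opening with a k × k square structuring element as the morphological filter; the exact threshold rule and structuring element used in the paper are not given here, and the function names are hypothetical. Only NumPy is used, and the input is assumed to be an 8-bit grayscale image.

```python
import numpy as np

def otsu_threshold(gray: np.ndarray) -> int:
    """Otsu's method for an 8-bit image: the threshold t that maximizes the
    between-class variance; pixels > t are treated as foreground."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    p = hist / hist.sum()
    omega = np.cumsum(p)                # class-0 probability for each t
    mu = np.cumsum(p * np.arange(256))  # class-0 cumulative first moment
    mu_t = mu[-1]                       # global mean
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_t * omega - mu) ** 2 / (omega * (1.0 - omega))
    sigma_b[~np.isfinite(sigma_b)] = 0.0  # empty classes contribute nothing
    return int(np.argmax(sigma_b))

def _shift_stack(mask: np.ndarray, k: int) -> np.ndarray:
    """Stack of all k x k neighbourhood shifts of a boolean mask (zero-padded)."""
    r = k // 2
    padded = np.pad(mask, r, mode="constant", constant_values=False)
    h, w = mask.shape
    return np.stack([padded[i:i + h, j:j + w] for i in range(k) for j in range(k)])

def binary_open(mask: np.ndarray, k: int = 3) -> np.ndarray:
    """Opening = erosion followed by dilation with a k x k square element;
    removes isolated specks smaller than the element."""
    eroded = _shift_stack(mask, k).all(axis=0)
    return _shift_stack(eroded, k).any(axis=0)

def segment(gray: np.ndarray, k: int = 3) -> np.ndarray:
    """Threshold (Step 1) then morphological filtering (Step 2)."""
    return binary_open(gray > otsu_threshold(gray), k)
```

An opening preserves a stripe that is at least k pixels thick while deleting smaller isolated bright points such as small spatter; thin stripes would need a smaller element.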

Step 3: Laser Stripe Centerline Extraction

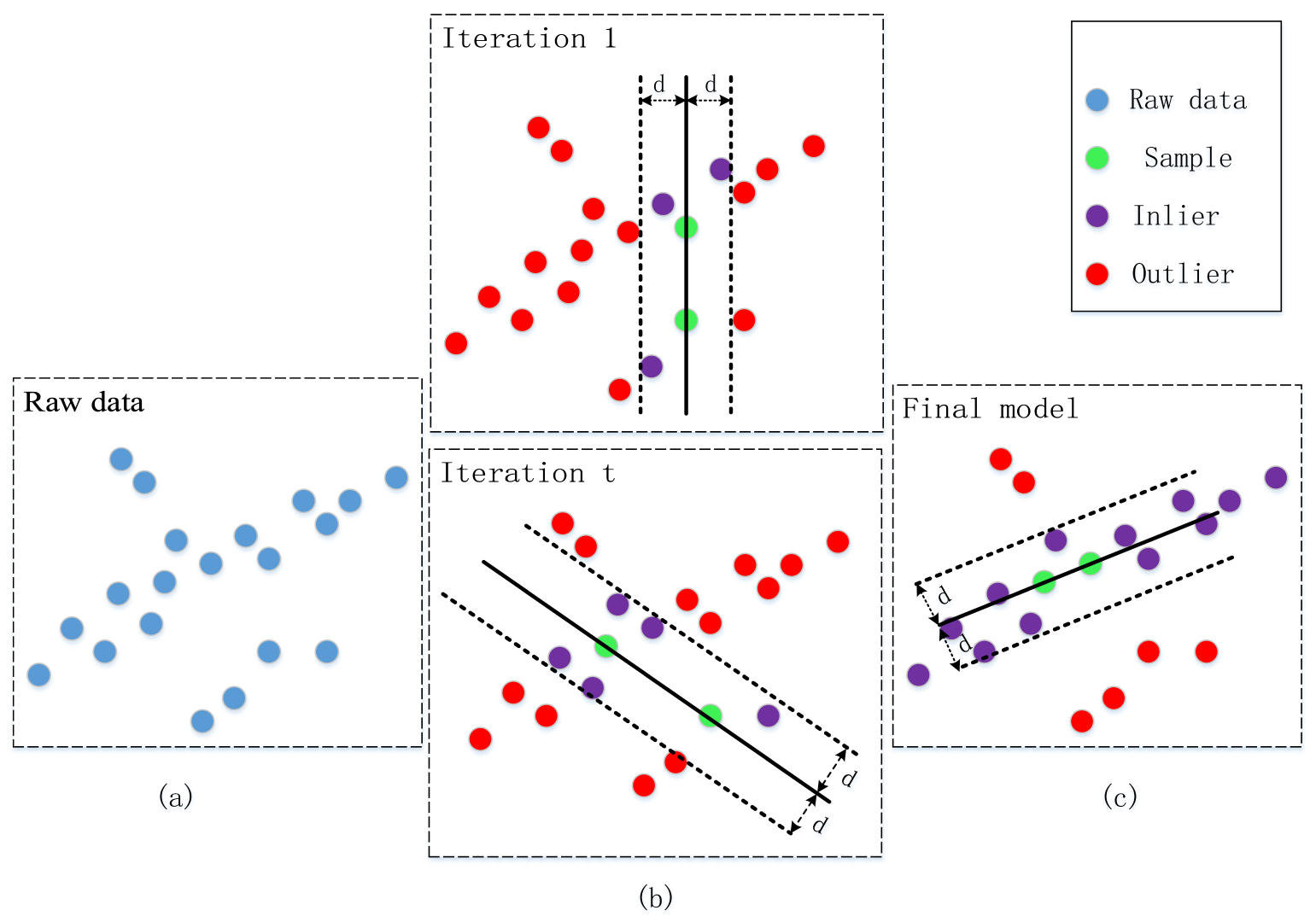
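For Step 3, one common sub-pixel approach is the gray-gravity (intensity-centroid) method, in which the stripe center in column $x$ is $y_c(x) = \sum_y y\,I(x,y) / \sum_y I(x,y)$ over the segmented stripe pixels. The sketch below implements only this plain form under that assumption; the improved gray-gravity method and Steger's detector in the reference list are more elaborate, and the function name is hypothetical.

```python
import numpy as np

def gray_gravity_centerline(gray: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Intensity-weighted centroid of the stripe in each image column.

    Returns an (M, 2) array of (x, y) sub-pixel center points, one per
    column that contains at least one masked pixel with nonzero intensity."""
    weights = np.where(mask, gray.astype(float), 0.0)
    col_sum = weights.sum(axis=0)
    rows = np.arange(gray.shape[0], dtype=float)[:, None]
    valid = col_sum > 0
    xs = np.nonzero(valid)[0].astype(float)
    ys = (weights * rows).sum(axis=0)[valid] / col_sum[valid]
    return np.column_stack([xs, ys])
```

The resulting (x, y) points form the laser stripe point set that the RANSAC stage fits.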

2.3. Optimized RANSAC Algorithm

- Step 1: The computation, in each iteration, of the Euclidean distance $d_i$ from each data point $(x_i, y_i)$, the $i$-th point of the set used by the RANSAC algorithm, to the candidate line model.

- Step 2: The classification of inliers and outliers using a preset distance threshold, $w$, which is determined from the laser stripe width extracted from the initial reference frame. A data point is classified as an inlier if its distance to the model does not exceed $w$, and as an outlier otherwise.

- Step 3: The selection of the model with the maximum number of inliers as the optimal laser stripe solution.
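The three steps above form the classical RANSAC loop. The sketch below is a plain fixed-iteration baseline for a 2D line, assuming the model is the line through two randomly sampled points and the inlier threshold is $w$; it illustrates the conventional algorithm discussed here, not the improved one, and the function names are hypothetical.

```python
import numpy as np

def point_line_distance(points: np.ndarray, p1, p2) -> np.ndarray:
    """Perpendicular distances from (N, 2) points to the line through p1 and p2."""
    (x1, y1), (x2, y2) = p1, p2
    a, b = y2 - y1, x1 - x2  # normal vector (a, b) of the line
    c = -(a * x1 + b * y1)   # so that a*x + b*y + c = 0 on the line
    return np.abs(a * points[:, 0] + b * points[:, 1] + c) / np.hypot(a, b)

def ransac_line(points: np.ndarray, w: float, iterations: int = 100, rng=None):
    """Conventional RANSAC with a fixed iteration count."""
    rng = np.random.default_rng(rng)
    best_inliers = np.zeros(len(points), dtype=bool)
    best_model = None
    for _ in range(iterations):
        i, j = rng.choice(len(points), size=2, replace=False)
        p1, p2 = points[i], points[j]
        if np.allclose(p1, p2):
            continue                                  # degenerate sample
        d = point_line_distance(points, p1, p2)       # Step 1: distances
        inliers = d <= w                              # Step 2: classify
        if inliers.sum() > best_inliers.sum():        # Step 3: keep best
            best_inliers, best_model = inliers, (tuple(p1), tuple(p2))
    return best_model, best_inliers
```

Every iteration evaluates all points regardless of how promising the sample is, and the loop always runs `iterations` times, which is exactly the cost the limitations below refer to.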

However, the conventional RANSAC algorithm has two limitations:

- (1) The stochastic sampling mechanism introduces inherent randomness and a trial-and-error nature, which necessitates excessive iterations and significantly degrades the computational efficiency;

- (2) The predetermined fixed iteration count fails to dynamically adapt to varying inlier ratios, leading to a suboptimal trade-off between the processing speed and estimation accuracy.
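For context on limitation (2), the RANSAC literature commonly derives the iteration count from the inlier ratio $e$, the sample size $s$ (two points for a line), and a target confidence $p$: $N = \lceil \log(1-p) / \log(1-e^s) \rceil$. This standard rule is shown only to illustrate how strongly the required count depends on $e$; the proposed method instead relies on the slope constraint and the no-improvement patience counter of Algorithm 2. The function name is hypothetical.

```python
import math

def ransac_iterations(confidence: float, inlier_ratio: float, sample_size: int = 2) -> int:
    """Iterations needed so that, with probability `confidence`, at least one
    sample of `sample_size` points contains only inliers."""
    if not (0 < inlier_ratio <= 1 and 0 < confidence < 1):
        raise ValueError("need 0 < inlier_ratio <= 1 and 0 < confidence < 1")
    good = inlier_ratio ** sample_size  # probability a sample is all inliers
    if good >= 1.0:
        return 1
    return math.ceil(math.log(1 - confidence) / math.log(1 - good))
```

For $p = 0.99$, an inlier ratio of 0.5 requires 17 iterations, 0.9 requires only 3, and 0.2 requires 113, so any single fixed count is either wasteful on clean frames or insufficient on noisy ones.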

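| Algorithm 2 Improved slope-constrained RANSAC algorithm with early termination |
|---|
| Require: Current frame laser stripe point set P; slope from the previous frame; maximum iterations K; distance threshold; slope tolerance; consecutive patience N |
| Ensure: Optimal line model and its inlier set |
| 1: Initialize the best inlier set as empty |
| 2: Initialize the optimal model |
| 3: Initialize the iteration counter to 0 |
| 4: Initialize the no-improvement counter to 0 |
| 5: while the iteration counter is below K and the no-improvement counter is below N do |
| 6: Increment the iteration counter |
| 7: repeat |
| 8: Randomly select two distinct points from P |
| 9: Compute the slope of the line through them |
| 10: until the slope lies within the slope tolerance of the previous slope |
| 11: Construct the line model through the two points |
| 12: Initialize an empty temporary inlier set |
| 13: for each point in P do |
| 14: Compute its distance to the line model |
| 15: if the distance is within the distance threshold then |
| 16: Add the point to the temporary inlier set |
| 17: end if |
| 18: end for |
| 19: if the temporary inlier set is larger than the best inlier set then |
| 20: Update the best inlier set |
| 21: Update the optimal model |
| 22: Reset the no-improvement counter to 0 |
| 23: else |
| 24: Increment the no-improvement counter |
| 25: end if |
| 26: end while |
| 27: return the optimal model and the best inlier set |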
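A minimal Python sketch of Algorithm 2 follows. It assumes the line is parameterized as $y = kx + b$ in image coordinates, that the distance threshold plays the role of $w$ from Step 2, and that the previous slope comes from the previous frame's fit. The names `slope_constrained_ransac` and `max_draws` are hypothetical; `max_draws` is an added safeguard that bounds the repeat-until loop so it cannot spin forever when no admissible pair exists, and pairs with equal x coordinates are skipped because their slope is undefined in this parameterization.

```python
import numpy as np

def slope_constrained_ransac(P, prev_slope, K, w, slope_tol, N,
                             max_draws=1000, rng=None):
    """Slope-constrained RANSAC with early termination (sketch).

    P: (M, 2) array of (x, y) stripe points. Returns ((k, b), inlier mask)."""
    rng = np.random.default_rng(rng)
    best_inliers = np.zeros(len(P), dtype=bool)
    best_model = None                   # (slope, intercept) of y = k*x + b
    k_iter, no_improve = 0, 0
    while k_iter < K and no_improve < N:
        k_iter += 1
        for _ in range(max_draws):      # repeat ... until slope is admissible
            i, j = rng.choice(len(P), size=2, replace=False)
            dx = P[j, 0] - P[i, 0]
            if dx == 0:
                continue                # vertical pair: slope undefined
            slope = (P[j, 1] - P[i, 1]) / dx
            if abs(slope - prev_slope) <= slope_tol:
                break
        else:
            break                       # no admissible pair found: stop early
        intercept = P[i, 1] - slope * P[i, 0]
        # perpendicular distance of every point to y = slope*x + intercept
        d = np.abs(slope * P[:, 0] - P[:, 1] + intercept) / np.hypot(slope, 1.0)
        inliers = d <= w
        if inliers.sum() > best_inliers.sum():
            best_inliers, best_model = inliers, (slope, intercept)
            no_improve = 0              # improvement: reset patience
        else:
            no_improve += 1             # no improvement: consume patience
    return best_model, best_inliers
```

The slope check rejects samples involving spatter or reflection points before the costly distance pass, and the patience counter stops the loop once N consecutive iterations fail to enlarge the consensus set, so clean frames terminate after only a few iterations.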

2.4. Weld Seam Feature Point Estimation

2.5. Coordinate Transformation

- Camera Calibration: Obtains the intrinsic matrix and removes lens distortion.

- Laser Plane Calibration: Establishes the mapping from the 2D laser plane coordinates to the 3D camera space.

- Hand–Eye Calibration: Solves for the transformation matrix between the robot tool (end-effector) coordinate system and the camera coordinate system.
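The three calibration results are typically chained to turn a stripe pixel into a 3D point usable by the robot. A minimal sketch, assuming lens distortion has already been removed, the laser plane is expressed in camera coordinates as $\mathbf{n} \cdot \mathbf{X} + d = 0$, and the hand–eye result is a 4 × 4 homogeneous matrix mapping camera coordinates into the tool frame; the function name and parameter layout are hypothetical.

```python
import numpy as np

def pixel_to_tool(u, v, K, plane, T_tool_cam):
    """Map an undistorted stripe pixel (u, v) to camera and tool coordinates.

    K          : 3x3 intrinsic matrix (camera calibration)
    plane      : (n, d) with laser plane n . X + d = 0 in the camera frame
                 (laser plane calibration)
    T_tool_cam : 4x4 homogeneous transform, camera frame -> tool frame
                 (hand-eye calibration)
    """
    ray = np.linalg.solve(K, np.array([u, v, 1.0]))  # viewing ray direction
    n, d = np.asarray(plane[0], dtype=float), float(plane[1])
    denom = n @ ray
    if abs(denom) < 1e-12:
        raise ValueError("viewing ray is parallel to the laser plane")
    s = -d / denom                       # scale where the ray meets the plane
    X_cam = s * ray                      # 3D point in the camera frame
    X_tool = T_tool_cam @ np.append(X_cam, 1.0)
    return X_cam, X_tool[:3]
```

Mapping into the robot base frame would additionally require the current tool pose reported by the robot controller.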

3. Experiments

3.1. Experimental Setup

3.2. Weld Seam Extraction Experiment

3.3. Ablation Experiment

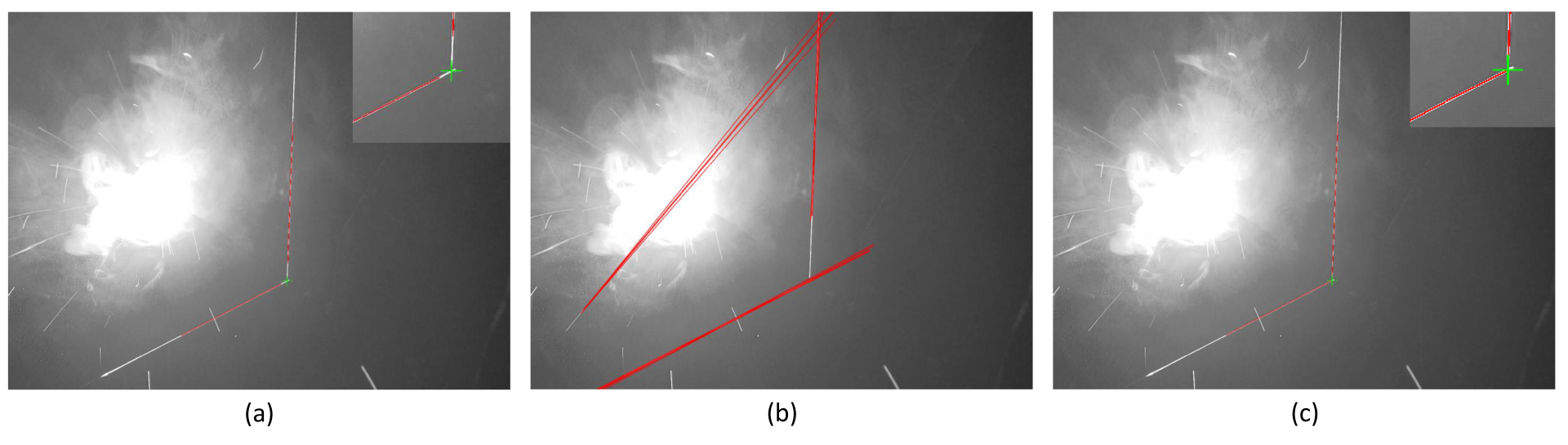

3.3.1. Impact of Dynamic ROI

3.3.2. Impact of Improved RANSAC Algorithm

3.4. Comparative Experiments

4. Discussion

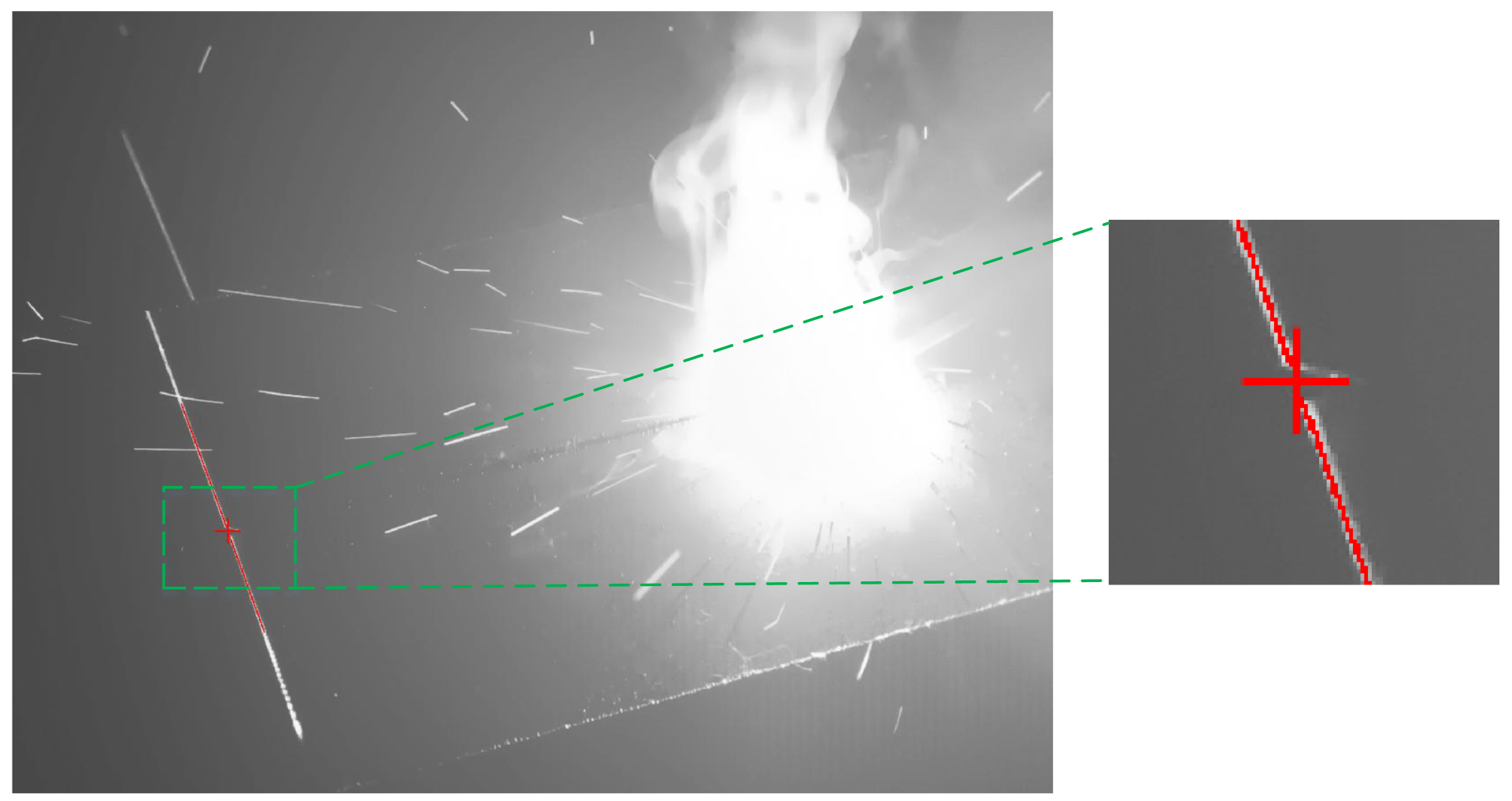

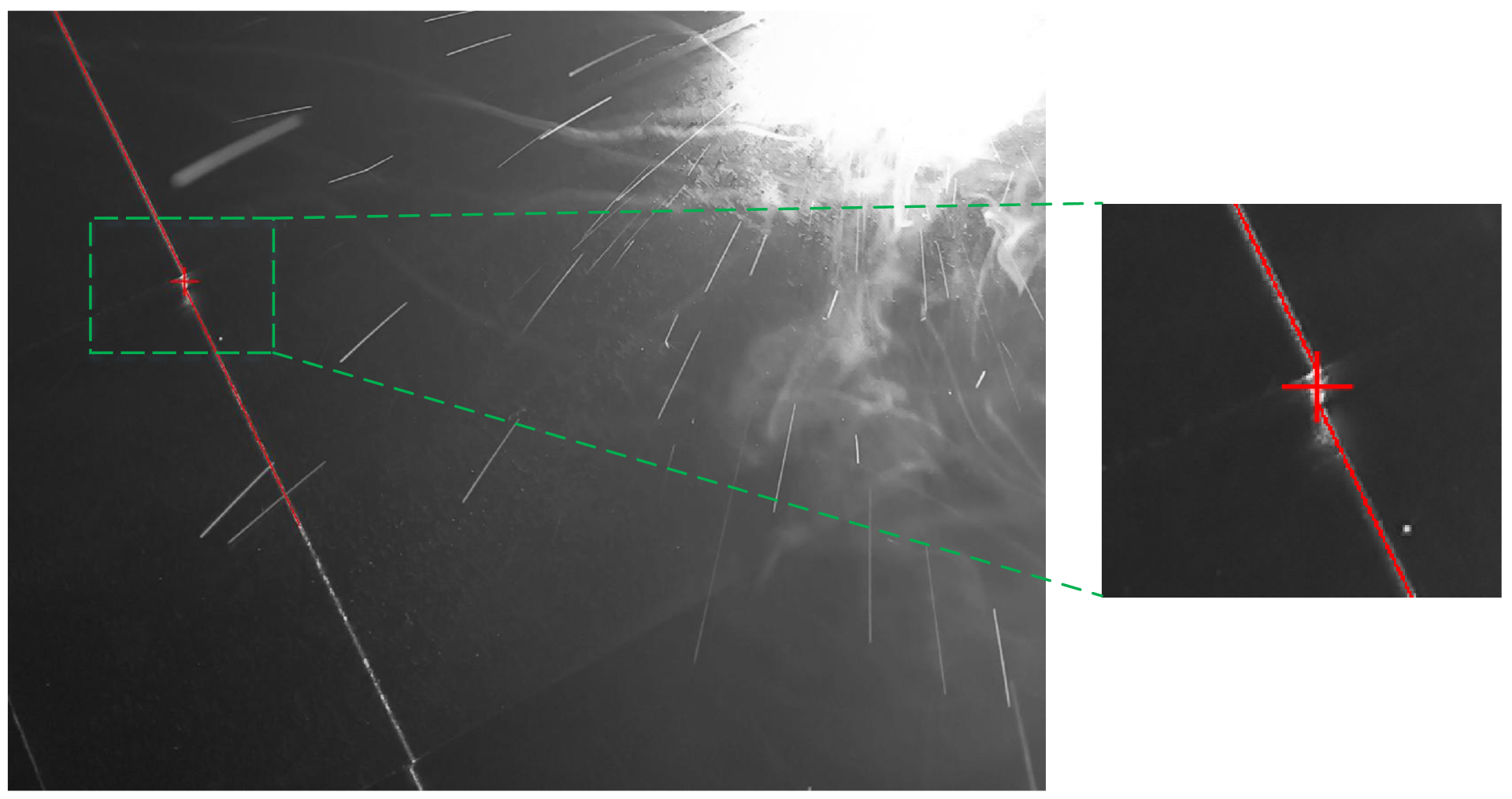

4.1. Interference Mechanism of Spatter and Surface Reflections

- Spatter Interference: The dispersion of molten metal spatter causes transient local occlusion and geometric distortion of the laser stripe. These artifacts may be misinterpreted as genuine stripe continuity, leading to fragmented centerline reconstruction. Moreover, fast-moving spatter particles overlap the laser stripe in both space and time, which makes separating the stripe's optical features considerably more difficult.

- Reflection Interference: Multiple reflections from highly reflective metal surfaces produce competing optical signals, creating ambiguity about the true stripe path during centerline extraction. In addition, photon saturation in specular reflection regions lowers the contrast of the valid stripe signal, degrading edge localization at the subpixel level.

4.2. Analysis of Dynamic ROI

The dynamic ROI provides two main benefits:

- Suppression of the arc-induced noise and spatter interference inherent to welding environments.

- Real-time processing at 30 fps.
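As a quick consistency check on the 30 fps figure, assuming the per-frame times reported in the ablation experiment cover the full extraction pipeline:

$$
t_{\text{frame}} = \frac{1000\ \text{ms}}{30} \approx 33.3\ \text{ms}, \qquad \frac{7.97\ \text{ms}}{33.3\ \text{ms}} \approx 0.24
$$

so the slowest dynamic-ROI case (fillet weld, 7.97 ms) would use roughly a quarter of the frame interval.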

4.3. Analysis of Improved RANSAC Algorithm

4.4. Analysis of Comparative Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Fang, W.; Xu, X.; Tian, X. A vision-based method for narrow weld trajectory recognition of arc welding robots. Int. J. Adv. Manuf. Technol. 2022, 121, 8039–8050.

- Lei, T.; Rong, Y.; Wang, H.; Huang, Y.; Li, M. A review of vision-aided robotic welding. Comput. Ind. 2020, 123, 103326.

- Wu, Q.; Li, Z.; Gao, C.; Biao, W.; Shen, G. Research on Welding Guidance System of Intelligent Perception for Steel Weldment. IEEE Sens. J. 2023, 23, 5220–5231.

- Jia, X.; Luo, J.; Li, K.; Wang, C.; Li, Z.; Wang, M.; Jiang, Z.; Veiko, V.P.; Duan, J.A. Ultrafast laser welding of transparent materials: From principles to applications. Int. J. Extrem. Manuf. 2025, 7, 032001.

- Fan, J.; Jing, F.; Yang, L.; Long, T.; Tan, M. A precise seam tracking method for narrow butt seams based on structured light vision sensor. Opt. Laser Technol. 2019, 109, 616–626.

- Zou, Y.; Wang, Y.; Zhou, W.; Chen, X. Real-time seam tracking control system based on line laser visions. Opt. Laser Technol. 2018, 103, 182–192.

- Wang, B.; Hu, S.J.; Sun, L.; Freiheit, T. Intelligent welding system technologies: State-of-the-art review and perspectives. J. Manuf. Syst. 2020, 56, 373–391.

- Rout, A.; Deepak, B.B.V.L.; Biswal, B.B. Advances in weld seam tracking techniques for robotic welding: A review. Rob. Comput. Integr. Manuf. 2019, 56, 12–37.

- Zhang, G.; Zhang, Y.; Tuo, S.; Hou, Z.; Yang, W.; Xu, Z.; Wu, Y.; Yuan, H.; Shin, K. A novel seam tracking technique with a four-step method and experimental investigation of robotic welding oriented to complex welding seam. Sensors 2021, 21, 3067.

- Banafian, N.; Fesharakifard, R.; Menhaj, M.B. Precise seam tracking in robotic welding by an improved image processing approach. Int. J. Adv. Manuf. Technol. 2021, 114, 251–270.

- Xu, F.; Hou, Z.; Xiao, R.; Xu, Y.; Wang, Q.; Zhang, H. A novel welding path generation method for robotic multi-layer multi-pass welding based on weld seam feature point. Measurement 2023, 216, 112910.

- Dinham, M.; Fang, G. Detection of fillet weld joints using an adaptive line growing algorithm for robotic arc welding. Rob. Comput. Integr. Manuf. 2014, 30, 229–243.

- Deng, L.; Lei, T.; Wu, C.; Liu, Y.; Cao, S.; Zhao, S. A weld seam feature real-time extraction method of three typical welds based on target detection. Measurement 2023, 207, 112424.

- Johan, N.F.; Mohd Shah, H.N.; Sulaiman, M.; Naji, O.A.A.M.; Arshad, M.A. Weld seam feature point extraction using laser and vision sensor. Int. J. Adv. Manuf. Technol. 2023, 127, 5155–5170.

- Wu, Q.Q.; Lee, J.P.; Park, M.H.; Jin, B.J.; Kim, D.H.; Park, C.K.; Kim, I.S. A study on the modified Hough algorithm for image processing in weld seam tracking. J. Mech. Sci. Technol. 2015, 29, 4859–4865.

- Muhammad, J.; Altun, H.; Abo-Serie, E. A robust butt welding seam finding technique for intelligent robotic welding system using active laser vision. Int. J. Adv. Manuf. Technol. 2016, 94, 13–29.

- Li, X.; Li, X.; Ge, S.S.; Khyam, M.O.; Luo, C. Automatic welding seam tracking and identification. IEEE Trans. Ind. Electron. 2017, 64, 7261–7271.

- Shao, W.J.; Huang, Y.; Zhang, Y. A novel weld seam detection method for space weld seam of narrow butt joint in laser welding. Opt. Laser Technol. 2018, 99, 39–51.

- Yu, S.; Guan, Y.; Hu, J.; Hong, J.; Zhu, H.; Zhang, T. Unified seam tracking algorithm via three-point weld representation for autonomous robotic welding. Eng. Appl. Artif. Intell. 2024, 128, 107535.

- Li, J.; Li, B.; Dong, L.; Wang, X.; Tian, M. Weld seam identification and tracking of inspection robot based on deep learning network. Drones 2022, 6, 216.

- Gao, A.; Fan, Z.; Li, A.; Le, Q.; Wu, D.; Du, F. YOLO-Weld: A Modified YOLOv5-Based Weld Feature Detection Network for Extreme Weld Noise. Sensors 2023, 23, 5640.

- Mobaraki, M.; Ahani, S.; Gonzalez, R.; Yi, K.M.; Van Heusden, K.; Dumont, G.A. Vision-based seam tracking for GMAW fillet welding based on keypoint detection deep learning model. J. Manuf. Process. 2024, 117, 315–328.

- Kang, S.; Qiang, H.; Yang, J.; Liu, K.; Qian, W.; Li, W.; Pan, Y. Research on a Feature Point Detection Algorithm for Weld Images Based on Deep Learning. Electronics 2024, 13, 4117.

- Lin, Z.; Shi, Y.; Wang, Z.; Li, B.; Chen, Y. Intelligent seam tracking of an ultranarrow gap during K-TIG welding: A hybrid CNN and adaptive ROI operation algorithm. IEEE Trans. Instrum. Meas. 2022, 72, 1–14.

- Otsu, N. A threshold selection method from gray-level histograms. Automatica 1975, 11, 23–27.

- Li, Y.; Zhou, J.; Huang, F.; Liu, L. Sub-pixel extraction of laser stripe center using an improved gray-gravity method. Sensors 2017, 17, 814.

- Steger, C. An unbiased detector of curvilinear structures. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 113–125.

- Jiang, C.; Li, W.L.; Wu, A.; Yu, W.Y. A novel centerline extraction algorithm for a laser stripe applied for turbine blade inspection. Meas. Sci. Technol. 2020, 31, 095403.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Li, W.; Mei, F.; Hu, Z.; Gao, X.; Yu, H.; Housein, A.A.; Wei, C. Multiple weld seam laser vision recognition method based on the IPCE algorithm. Opt. Laser Technol. 2022, 155, 108388.

| System Component | Specification |
|---|---|
| Line laser | Wavelength: 450 nm; optical power: 80 mW; laser stripe width: 1 mm |
| Bandpass filter | (450 ± 20) nm |
| Imaging device | CCD camera (2592 × 1944 pixels) @ 30 fps |
| Computing unit | Intel Core i7-11800H @ 2.3 GHz, 16 GB RAM |
| Robot | Fairino 6-DOF robotic arm; repeatability: ±0.02 mm |
| Hand–eye calibration accuracy | 1.2 mm |

| Parameter | Value |
|---|---|
| Welding process | GMAW |
| Welding materials | Q235 steel (8 mm thick) |
| Welding current | 117 A |
| Welding voltage | 22 V |
| Feed speed | 3 mm/s |
| Welding speed | 5 mm/s |
| Gas flow rate | 18 L/min |
| Wire diameter | 1.0 mm |
| Shielding gas | CO₂ |

| Method | Fillet Weld Time (ms) | Fillet Weld Acc. (%) | Butt Weld Time (ms) | Butt Weld Acc. (%) | Lap Weld Time (ms) | Lap Weld Acc. (%) |
|---|---|---|---|---|---|---|
| Dynamic ROI | 7.97 | 98.84 | 5.31 | 96.18 | 6.26 | 97.45 |
| Static ROI | 12.40 | 91.28 | 10.20 | 92.37 | 13.59 | 94.27 |

| Method (time, ms) | Fillet Weld | Butt Weld | Lap Weld |
|---|---|---|---|
| Original RANSAC | 21.26 | 10.79 | 12.63 |
| Improved RANSAC | 7.97 | 5.31 | 6.26 |
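Assuming the values in this table are per-frame processing times in milliseconds (the Improved RANSAC row matches the Dynamic ROI times of the ablation experiment), the speedup of the improved RANSAC over the original works out as:

$$
S = \frac{t_{\text{original}}}{t_{\text{improved}}}: \quad S_{\text{fillet}} = \frac{21.26}{7.97} \approx 2.67, \quad S_{\text{butt}} = \frac{10.79}{5.31} \approx 2.03, \quad S_{\text{lap}} = \frac{12.63}{6.26} \approx 2.02
$$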


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, G.; Zhang, Y.; Ai, Y.; Yu, B.; Xu, W. Real-Time Seam Extraction Using Laser Vision Sensing: Hybrid Approach with Dynamic ROI and Optimized RANSAC. Sensors 2025, 25, 3268. https://doi.org/10.3390/s25113268
