Quantitative Assessment of Facial Paralysis Using Dynamic 3D Photogrammetry and Deep Learning: A Hybrid Approach Integrating Expert Consensus

Abstract

Highlights

- The accuracy of the new approach was 95% or higher for all five facial expressions.

- The objective approach offers better assessment than the subjective approach.

- The automated objective assessment of facial paralysis is achievable.

- The deep learning approach enhanced dynamic 3D photogrammetry for facial paralysis assessment.

1. Introduction

2. Materials and Methods

2.1. Data Acquisition

2.2. Feature Engineering

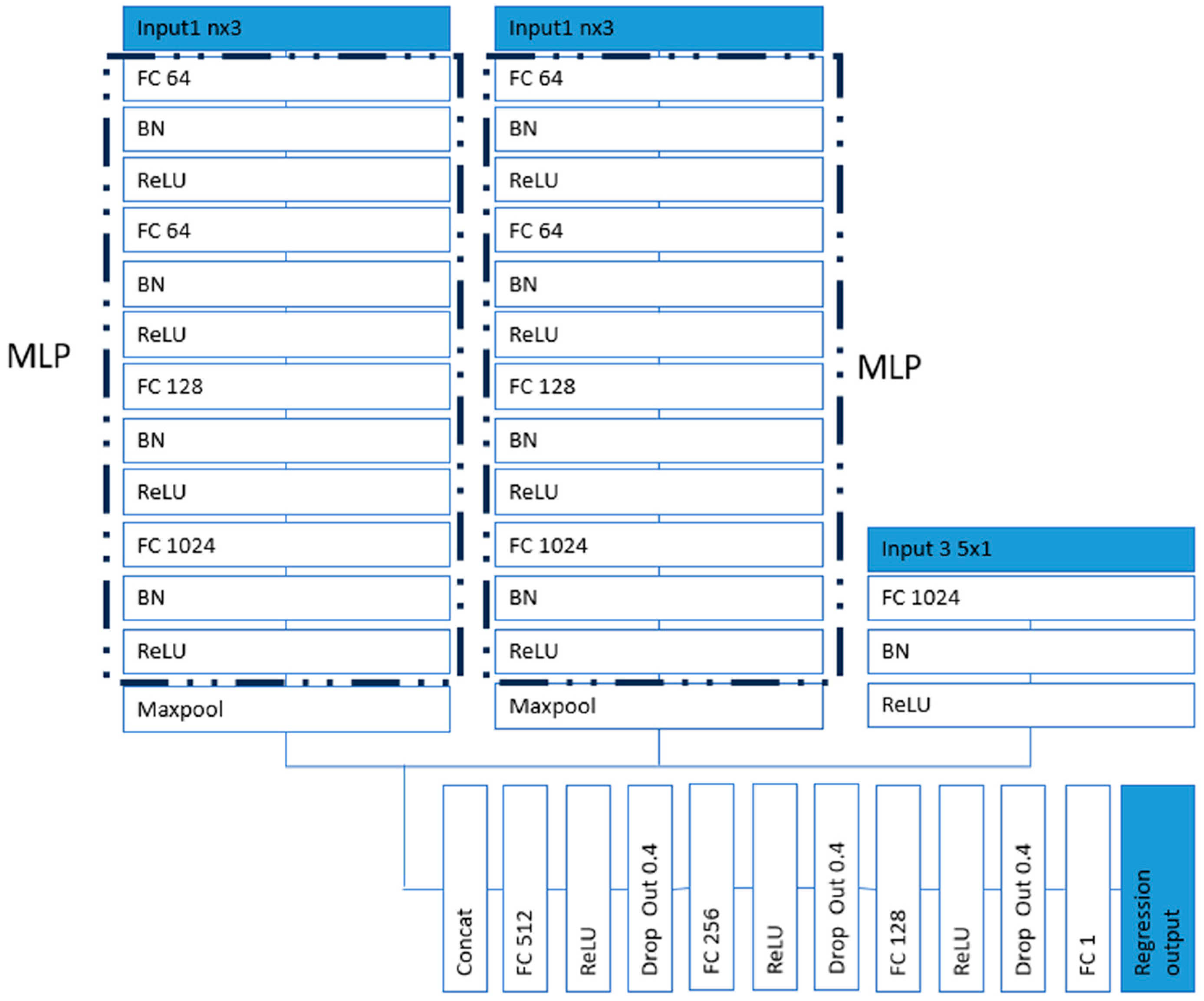

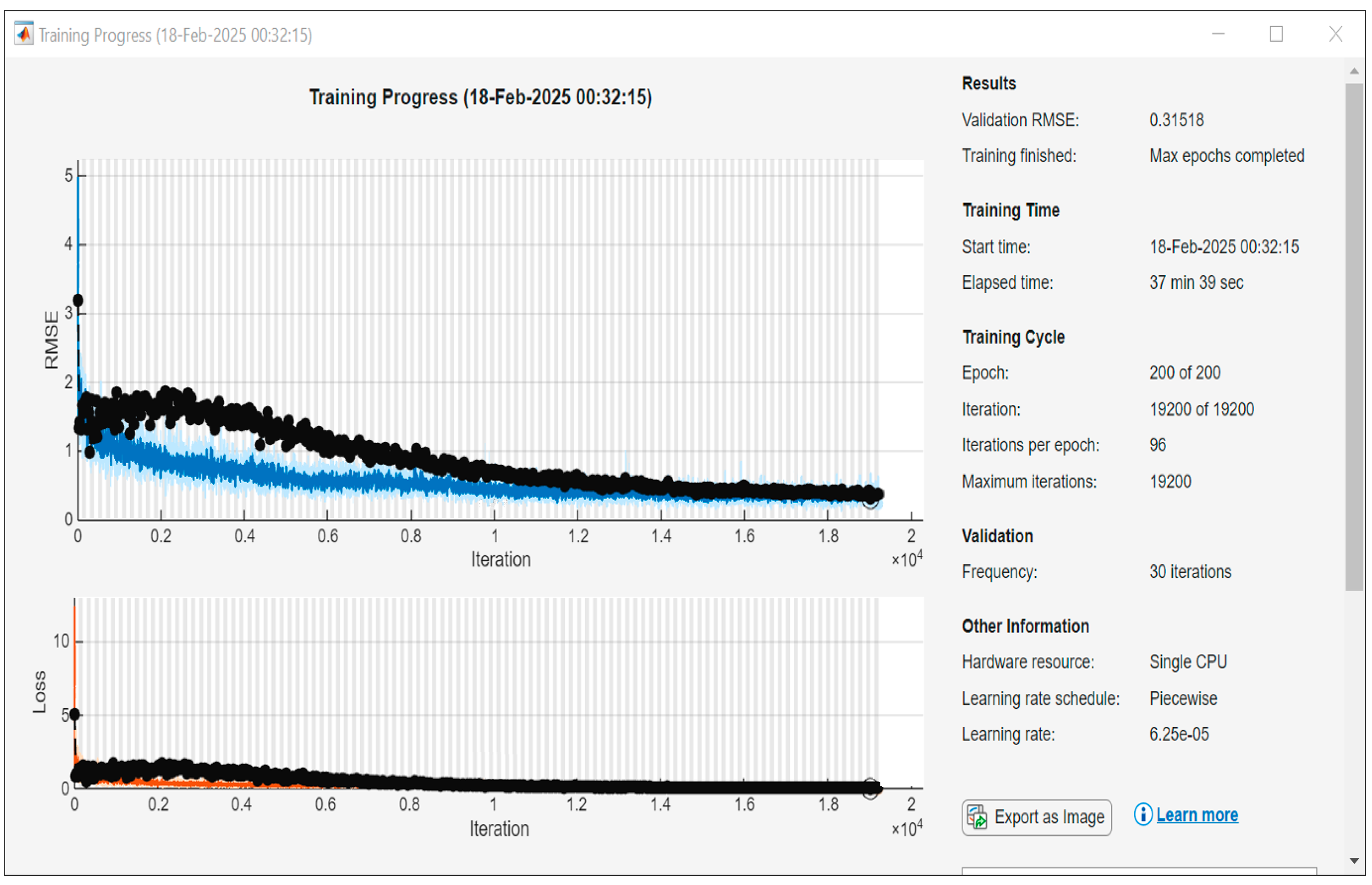

2.3. Network Architecture

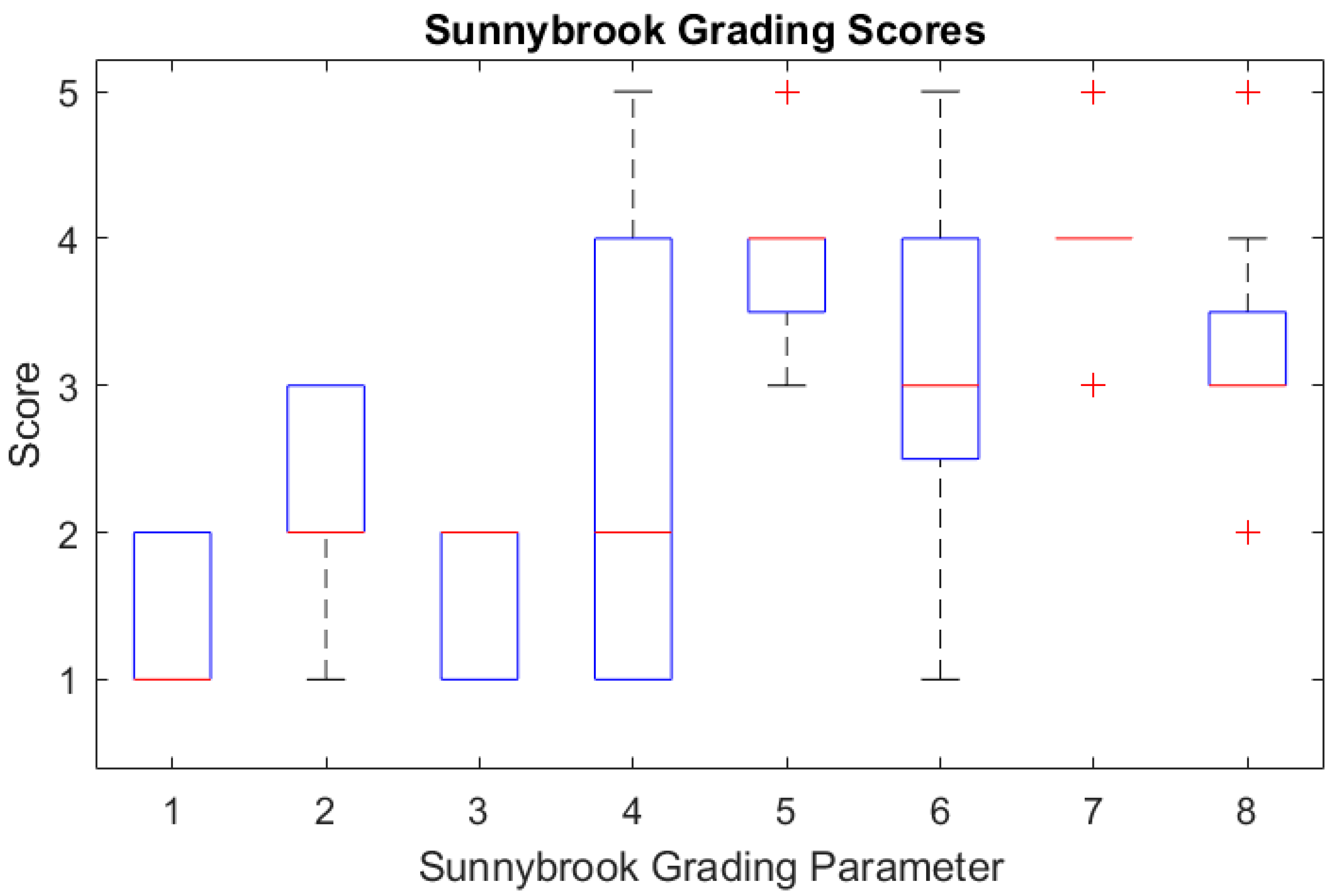

2.4. Facial Movement Observation

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Parameter | Observation | Grade |
|---|---|---|
| Resting Symmetry Score | | |
| Eye | Abnormal/normal | 1/2 |
| Cheek [nasolabial] | Absent/altered/normal | 1/2/3 |
| Mouth [drooped] | Abnormal/normal | 1/2 |
| Voluntary Movement Score | | |
| Forehead wrinkling | No movement to normal | 1–5 |
| Gentle eye closure | No movement to normal | 1–5 |
| Open mouth smiling | No movement to normal | 1–5 |
| Cheek puffing | No movement to normal | 1–5 |
| Lip puckering | No movement to normal | 1–5 |

| Expressions | Eyebrow Raising | Eye Closure | Smiling | Cheek Puffing | Lip Puckering |
|---|---|---|---|---|---|
| Assessor | 74.1% | 79.9% | 65.2% | 73.2% | 73.2% |
| PointNet | 100% | 97.3% | 95.0% | 100% | 95.7% |
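
The comparison in the table above can be summarized with a little arithmetic. The sketch below is illustrative only: the per-expression gaps and the unweighted means are derived here from the tabulated values and are not reported in the source, and the names `summarize`, `ASSESSOR`, and `POINTNET` are hypothetical. An unweighted mean also assumes each expression carries equal weight, which may not match the study's sample sizes.

```python
# Accuracy values (percent) copied from the table above.
EXPRESSIONS = ["Eyebrow Raising", "Eye Closure", "Smiling",
               "Cheek Puffing", "Lip Puckering"]
ASSESSOR = [74.1, 79.9, 65.2, 73.2, 73.2]
POINTNET = [100.0, 97.3, 95.0, 100.0, 95.7]


def summarize(assessor, pointnet):
    """Return per-expression gaps (percentage points) and both unweighted means."""
    gaps = [round(p - a, 1) for a, p in zip(assessor, pointnet)]
    mean_assessor = round(sum(assessor) / len(assessor), 2)
    mean_pointnet = round(sum(pointnet) / len(pointnet), 2)
    return gaps, mean_assessor, mean_pointnet


if __name__ == "__main__":
    gaps, mean_a, mean_p = summarize(ASSESSOR, POINTNET)
    for name, gap in zip(EXPRESSIONS, gaps):
        print(f"{name}: PointNet higher by {gap} points")
    print(f"Unweighted mean: assessor {mean_a}%, PointNet {mean_p}%")
```

On these figures the largest gap is for smiling, where assessor accuracy is lowest, and the smallest is for eye closure.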

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ju, X.; Ayoub, A.; Morley, S. Quantitative Assessment of Facial Paralysis Using Dynamic 3D Photogrammetry and Deep Learning: A Hybrid Approach Integrating Expert Consensus. Sensors 2025, 25, 3264. https://doi.org/10.3390/s25113264
