Abstract

Driven by the remarkable capabilities of machine learning, brain–computer interfaces (BCIs) are carving out an ever-expanding range of applications across a multitude of diverse fields. Notably, electroencephalogram (EEG) signals have risen to prominence as the most prevalently utilized signals within BCIs, owing to their non-invasive essence, exceptional portability, cost-effectiveness, and high temporal resolution. However, despite the significant strides made, the paucity of EEG data has emerged as the main bottleneck, preventing generalization of decoding algorithms. Taking inspiration from the resounding success of generative models in computer vision and natural language processing arenas, the generation of synthetic EEG data from limited recorded samples has recently garnered burgeoning attention. This paper undertakes a comprehensive and thorough review of the techniques and methodologies underpinning the generative models of the general EEG, namely the variational autoencoder (VAE), the generative adversarial network (GAN), and the diffusion model. Special emphasis is placed on their practical utility in augmenting EEG data. The structural designs and performance metrics of the different generative approaches in various application domains have been meticulously dissected and discussed. A comparative analysis of the strengths and weaknesses of each existing model has been carried out, and prospective avenues for future enhancement and refinement have been put forward.

1. Introduction

Electroencephalography (EEG) is a non-invasive approach of measuring the brain’s electrical fields. It records the voltage potentials generated by the flow of electric current in and around neurons by means of electrodes placed on the scalp. EEG research has a history of nearly a century, during which it has accumulated a wealth of experience in various application areas. It not only has an important foundation in clinical diagnosis but also plays a role in more modern brain-triggered neurorehabilitation treatments [1]. It aids in diagnosing various neurological disorders, such as epilepsy, tumors, cerebrovascular lesions, depression, and trauma-related issues. Furthermore, as a true neuroimaging method, EEG has seen expanded applications in translational neuroscience and computational neuroscience in recent years [1].

BCI (brain–computer interface) is a technology that facilitates interaction between humans and computers by capturing and interpreting the brain’s electrical signals. This activity can be in the form of EEG or other electrophysiological recordings, such as Magnetoencephalography (MEG), Electrocorticography (ECoG), functional Magnetic Resonance Imaging (fMRI), and functional Near-Infrared Spectroscopy (fNIRs) [2]. However, compared to other recording methods, the cost of obtaining EEG is relatively low, making it more accessible [2]. The concept of BCIs, introduced in the 1970s by Jacques Vidal [3], has been further developed thanks to advancements in computer technology, machine learning, and neuroscience.

With the advancement of deep learning, substantial data are required to train large models with strong generalization capabilities. Generative models are a main solution of data augmentation. In the past decades, generative models for augmenting EEG data have achieved significant success. However, there is currently a lack of reviews comparing the applications of generative models in EEG data augmentation. Existing reviews only focus on a single type of generative model (e.g., GAN [4]). Therefore, this review aims to provide a broader overview, primarily examining EEG generative models based on VAE, GAN, and diffusion models. For each type of model, we provide a detailed introduction to the principles, model architecture, datasets used, and experimental results. At the end of each section, we discuss the advantages and limitations of each model.

By organizing and analyzing the existing studies on generating EEG signals based on generative models, several review studies have been conducted in this field, as shown in Table 1. Previous work on EEG-based data generation has comprehensively analyzed the improvement of generative models as well as the design of the experimental process, including experimental design methods, data preprocessing methods, feature extraction methods, and the effect of using generative models on performance. However, most of these works only explored a single generative model or a single task domain, neglecting the overall perspective of the EEG generation domain. We have conducted an all-encompassing review of this topic, and in this paper, we synthesize representative research results on generative models used for various EEG tasks in recent years.

Table 1.

A comparison with existing reviews.

The remainder of this review is organized as follows: In Section 2, we discuss the importance of data augmentation for EEG and review the most widely used methods. In Section 3, we discuss the literature search criteria and methodology. In Section 4, we introduce the theoretical foundations of three mainstream generative models and describe the evaluation metrics used in this review. In Section 5, Section 6 and Section 7, we review the applications of VAEs, GANs, and diffusion models in EEG generation and summarize their respective advantages and disadvantages, respectively. In Section 8, we conclude this paper and offer our views on future directions for development.

2. Data Augmentation

The rapid development of BCI based on deep learning in recent years has been remarkable, but breakthroughs in deep learning often depend on large datasets, which determine the final performance of the model. Unfortunately, currently, there are very few open-source EEG datasets available. This scarcity is mainly because annotating EEG data requires specific expertise, and the process is time-consuming and the results can vary depending on the annotator. Additionally, due to the lack of data, deep learning models find it difficult to generalize to unseen research subjects. Without appropriate regularization, the scarcity of data can severely hinder model generalization and lead to overfitting [10,11]. In addition to the well-known challenges associated with data annotation and limited availability, it is essential to acknowledge that EEG signal variability is substantially affected by the underlying neurological pathology. Distinct disorders often give rise to specific alterations in brain activity, which are reflected in the EEG signal morphology and spectral characteristics. When combined with inherent inter-subject variability, these pathological differences significantly increase the complexity and heterogeneity of EEG datasets [12]. Due to the inherently high-dimensional nature of EEG signals (characterized by multiple electrode channels, long temporal sequences, and substantial inter-subject variability), training robust and generalizable models remains highly challenging. These complexities often lead to increased computational burden, elevated risk of overfitting, and limited model scalability under constrained data conditions. Moreover, the spatial and temporal correlations across channels further complicate feature extraction and classification [13]. Additionally, EEG signals are highly susceptible to various forms of artifacts and environmental noise, including ocular and muscular movements, which can significantly distort the underlying neural patterns. Without adequate preprocessing and filtering strategies, such artifacts may introduce substantial variability and degrade the reliability of feature extraction and classification.

To mitigate these challenges, data augmentation (DA) has emerged as a necessary strategy to expand the diversity and quantity of training data. EEG signals in real-world applications are influenced by multiple factors, including pathological heterogeneity (that is, differences in characteristics across various disorders), inter-channel coupling, artifacts and noise, and physiological variability both between subjects and within a subject. Therefore, augmentation methods that are carefully designed, such as injecting jitter in the time domain, randomly dropping individual channels, or perturbing spectral components, can expose the model during training to a wide range of possible signal distortions and thus enable robust extraction of critical features in practical settings. In the field of computer vision, researchers enhance image datasets through flipping, rotating, scaling, cropping, shifting, adding Gaussian noise, etc. In recent years, researchers have also utilized deep learning-based generative models to generate additional samples from existing ones.

Inspired by the achievements of data augmentation in the field of computer vision, DA in BCI is also divided into two categories: methods based on geometric manipulation and methods based on generative models. Geometric manipulation is one of the simplest and most effective DA methods. For EEG data, geometric manipulations include time domain enhancement, frequency domain enhancement, and spatial domain enhancement.

In the time domain, Schirrmeister et al. generated additional data by sliding and cropping on longer EEG signals using shorter time windows [14]. Yang et al. added Gaussian noise to motor imagery EEG samples to increase data diversity [15]. Lotte et al. enhanced datasets by splitting EEG signals into multiple time segments and then recombining different segments [16]. Mohsenvand et al. randomly selected a portion of the signal and set it to zero to enhance the model’s ability to learn the differences between different augmented samples [17]. Rommel et al. augmented data by inverting the temporal order in the EEG signals [18]. In this way, the model may learn to recognize samples that remained unchanged even when the temporal sequence had been reversed, thereby enhancing its ability to generalize to the data. The team also performed data augmentation by randomly changing the polarity of the signals in all channels, an operation that does not change the semantic information of the data and also helps the model to recognize and differentiate between different instances of augmentation from the same data point as it learns.

In the frequency domain, Mohsenvand et al. performed a Fourier transform on the EEG signal to obtain its frequency components, then randomly changed the phase of these components while keeping the amplitude constant, and finally converted these modified frequency components back to the time domain through an inverse Fourier transform to achieve data augmentation [17]. Schwabedal et al. generated new data sequences by changing the Fourier phase of signals to address class imbalance [19]. Cheng et al. selected a specific frequency band and filtered out all frequency components within this band from the signal to simulate frequency-specific interference or signal loss [20].

In the spatial domain, Zhang et al. rotated, translated, and added random noise to spectrograms based on short-time Fourier transform (STFT) for data augmentation [21]. Shovon et al. performed DA on motor imagery EEG spectrograms by rotating, flipping, scaling, and brightening them [22]. Sakai et al. established a dual strategy to amplify all time data and near-peak data for data augmentation [23]. Deiss et al. enhanced EEG datasets by flipping the electrodes on the left and right sides of the brain [24]. Saeed et al. first randomly permuted channels and then randomly sampled a binary mask to completely discard some channels to increase the model’s robustness in handling missing and disordered channels [25].

Data augmentation methods based on generative models refer to the use of additional deep learning-based generative models to synthesize training samples. Among the deep generative models that have recently been successful, VAEs (Variational Autoencoders), GANs (Generative Adversarial Networks), and diffusion models have demonstrated their practical capabilities with a solid theoretical foundation. This paper systematically summarizes the applications of these three generative models in generating EEG data.

3. Search Method

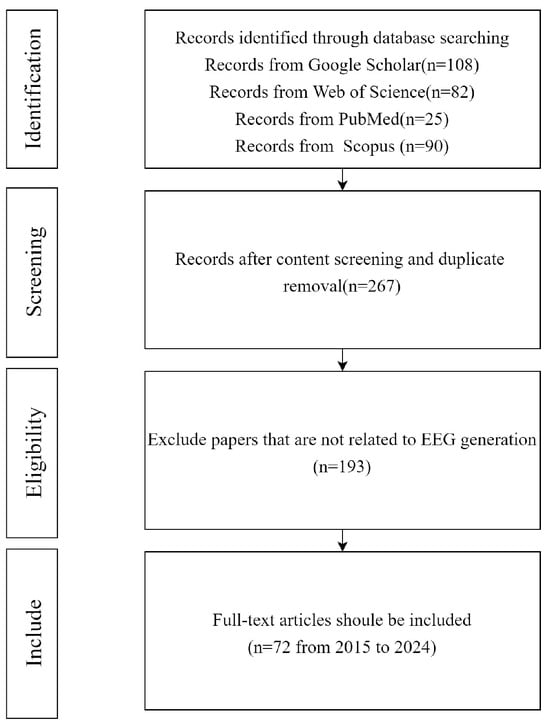

The main purpose of this paper is to study various generative models applied in different EEG-related tasks, emphasizing how these models generate EEG and the performance results they achieve. A literature review was conducted, as shown in Figure 1, across four databases including Google Scholar, Web of Science, PubMed, and Scopus, using the following group of keywords: (“Data augmentation” OR “Generate” OR “Synthesize”) AND (“Generative models” OR “GAN” OR “VAE” OR “Diffusion”) AND (“EEG” OR “Electroencephalography”). The initial search yielded 305 matching results in the databases, with publications ranging from 2015 to 2024, including 38 duplicate articles. After manually screening the remaining 267 papers, 195 were found to be irrelevant for this review (for example, the papers did not emphasize the role of generative models in EEG generation but focused more on the analysis of EEG signals). Thus, we ultimately selected 72 papers. After identifying papers that met the criteria based on our selection criteria, we extracted key information from each paper, including the following: authors, year of publication, main purpose of the article, dataset used, type of model, evaluation metrics used, and final experimental results.

Figure 1.

Selection criteria.

4. Basic Concepts

4.1. VAE

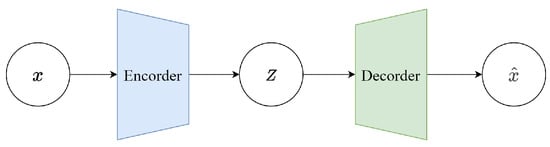

Variational Autoencoders (VAEs) were proposed by Kingma et al. in 2013 as a generative network structure based on Variational Bayes (VB) inference [26]. Unlike traditional autoencoders that describe the latent space numerically, it characterizes observations of the latent space probabilistically. This means that the output of the decoder is not the direct values of x, but the parameters of the probability distribution of x. Therefore, it has tremendous application value in data generation. Figure 2 illustrates a simplified schematic of a VAE. The model consists of two parts: the encoder and the decoder. The encoder’s function is to map the input data x to a representation in the latent space z. In a VAE, the encoder does not output a fixed z but instead outputs two parameters: the mean and the variance (in practice, the logarithm of the variance is often used for numerical stability). These parameters define a probability distribution, typically assumed to be Gaussian, meaning z is sampled from N(,I). This probabilistic output is what differentiates a VAE from a traditional autoencoder. The decoder’s function is to map the latent representation z back to the data space, essentially reconstructing the input data x. In a VAE, the decoder learns the conditional probability (x∣z), which is the probability of generating data point x given the latent representation z. The output of the decoder can be viewed as a “reconstruction” of the original input data.

Figure 2.

VAE architecture.

During the training of a VAE, there are two goals: ensuring that the distribution of the latent representations output by the encoder is as close as possible to the prior distribution (usually a standard normal distribution) and ensuring that the data reconstructed from the latent representation are as similar as possible to the original data. To achieve these goals, the loss function of a VAE consists of two parts: reconstruction loss and Kullback–Leibler (KL) divergence. Reconstruction loss is typically the difference between the input data and the reconstructed data, like mean squared error loss or cross-entropy loss. KL (Kullback–Leibler) divergence measures the divergence between the distribution of the latent representations output by the encoder and the prior distribution. This metric encourages the encoder’s output distribution of z not to stray too far, thereby avoiding “overfitting” to specific features of the training data:

In this equation, the term quantifies the divergence between the encoder’s approximate posterior and the prior . Here, denotes the i-th input sample, and is its latent representation of dimension k. The encoder defines , where is the mean vector and is the covariance matrix (typically diagonal, with entries ). The prior is chosen as the standard normal . In the closed-form KL expression, is the sum of the diagonal variances, its determinant, and log denotes the natural logarithm. Minimizing this KL divergence encourages the learned posterior to stay close to the fixed prior.

The final goal of VAE is as follows:

where denotes the input data sample, and is the corresponding reconstruction generated by the decoder. The index represents the feature dimensions of each input vector. The reconstruction loss ensures that the reconstructed data are as close as possible to the original input. The terms and are the mean and (diagonal) covariance output by the encoder for sample , where and , with k being the dimensionality of the latent variable z. The notation denotes the squared of the mean vector, defined as , and refers to the sum of the logarithms of the diagonal elements of the covariance matrix, given by .

4.2. GAN

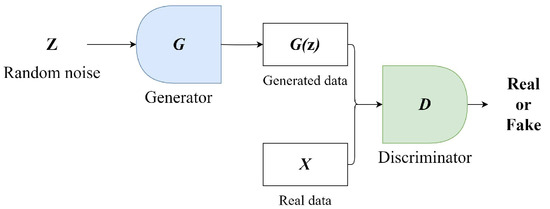

The GAN model was designed by Ian J. Goodfellow in 2014 [27]. GANs consist of two independently operating networks: a generator (G) and a discriminator (D). Figure 3 presents a simplified diagram of a GAN. The goal of the generator network is to learn and mimic the distribution of real data. It begins with a random noise vector z and tries to create samples that resemble real data. The discriminator is a binary classifier tasked with evaluating the output of the generator and differentiating between the fake data produced by the generator and actual real data. During training, the two networks are trained in parallel. The generator strives to produce data that appear more realistic over time, while the discriminator focuses on improving its ability to distinguish between real and fake data. This process involves a minimax game, where the discriminator tries to maximize its accuracy, while the generator aims to minimize the discriminator’s success. The ultimate aim is that through this competitive and learning process, the generator becomes adept at creating high-quality data indistinguishable from real data, while the discriminator becomes proficient at accurately identifying what is real and what is not [27].

Figure 3.

GAN architecture.

Traditional GANs, while promising in generative modeling, often face issues with training instability, mode collapse, and vanishing gradients. Arjovsky et al. introduced WGAN, which effectively addresses these issues by adopting the Wasserstein distance as the objective function [28]. WGAN boasts superior theoretical properties, such as continuity and differentiability, leading to a more stable training process. The Wasserstein distance intuitively represents the minimum “cost” of transferring “mass” from one distribution to another, relying less on gradients, thus mitigating the vanishing gradient problem. This method provides meaningful results even when two distributions do not overlap on low-dimensional manifolds. The key to WGAN is maintaining the K-Lipschitz continuity of the discriminator during training, proposing a method of weight clipping after each gradient update to preserve this continuity. The Wasserstein GAN loss function is obtained by the Kantorovich–Rubinstein duality:

In this formulation, the generator G aims to minimize the estimated Wasserstein distance between the model distribution and the real data distribution, while the discriminator (a 1-Lipschitz function) seeks to maximize this distance. The term denotes the expected discriminator score over real data samples, while is the expected discriminator score over generated samples.

4.3. Diffusion Model

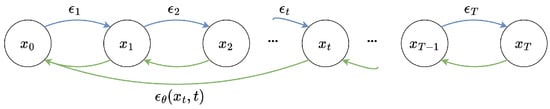

Diffusion models are also a type of latent variable model used for data generation, where the latent variables to have the same dimensions as the data and represent hidden states that gradually change during the diffusion process. Diffusion models consist of two processes: the forward process and the reverse process. Figure 4 illustrates a simplified schematic of a denoised diffusion probabilistic model.

Figure 4.

Denoised diffusion probabilistic model. Blue arrows represent the forward diffusion process, green arrows represent the reverse denoising process.

The forward process in the diffusion model is a fixed Markov chain that progressively adds Gaussian noise to the data, following a changing variance schedule to . This process defines the transformation path of the data from its original state to a state of complete random noise. The distinction of diffusion models from other latent variable models lies in their use of an approximate posterior probability , which describes the process of reverting from completely noisy data back to the original data state:

In this equation, represents the joint distribution of latent variables across all time steps, given the original data point . This formula describes how we would gradually add noise to a noise-free data point. is the specific conditional distribution, indicating that each step follows a normal distribution with as the mean and as the variance.

The reverse process of the diffusion model is a Markov chain starting from , where each step is controlled by learned Gaussian transition probabilities. These transitions are modeled using Gaussian distributions, with and being parameters learned by the model. The full reverse process can be written as follows:

The reverse process starts from a predefined prior . denotes the joint distribution of all latent variables from time point 0 to T, where , represent the specific conditional probability distributions modeled as Gaussian distributions parameterized by . is the conditional mean of the latent variable at time point given the latent variable at time point t, and is the conditional covariance matrix. These parameters are learned through the model to determine the transformation at each step of the reverse process.

A neural network, named the denoising U-Net, is trained to carry out the reverse diffusion process, predicting and removing noise from the noisy input to recover the original variables. The loss function for this process is expressed as follows:

where represents the true Gaussian noise, is the neural network that predicts the noise, and denotes the time step.

4.4. Classical Paradigms in BCI

In EEG-based BCI systems, classical paradigms such as motor imagery (MI), P300, and steady-state visual evoked potentials (SSVEPs) define the structure and characteristics of the neural signals to be processed. Although these paradigms were originally designed to facilitate specific communication or control tasks, they now also serve as practical categorizations for assessing EEG data generation techniques. Given the diversity of signal characteristics and task requirements across paradigms, generative models are typically evaluated within the context of one or more of these established BCI paradigms. In this section, we briefly introduce the most commonly used paradigms, which serve as the foundation for organizing the subsequent discussion on generative model applications.

Motor imagery (MI), as the name implies, is the process of mentally simulating an action in the brain without physically executing the action. According to studies by Jeannerod [29], MI can be considered as a conscious access to the content of motor intentions, which is typically carried out unconsciously during the preparation phase of movement. Jeannerod concluded that conscious motor imagery and unconscious motor preparation operate through the same mechanisms and are functionally identical. This explains why psychological practices using MI training can improve motor performance [29]. Due to the functional similarity between MI and motor execution (ME), the brain areas activated by both are highly overlapping. By analyzing EEG data and identifying the activation patterns in different brain regions, it is possible to determine the user’s intent, thereby achieving direct communication and control between the brain and external systems. Commonly focused areas for motor imagery include the left and right hands, both feet, and the tongue. During motor imagery, the cerebral cortex generates two types of rhythm signals with significant changes: the 8–15 Hz rhythm and the 18–24 Hz rhythm. When engaging in motor imagery, neuron cells are activated, metabolism speeds up, and the electrical rhythmic energy in the contralateral motor sensory area of the cerebral cortex is significantly reduced, whereas that of the ipsilateral motor sensory areas is increased. This phenomenon is known as event-related desynchronization (ERD) and event-related synchronization (ERS). Therefore, a variety of control commands can be generated by actively controlling the amplitude of the and rhythms in the left and right brain.

Emotion is a complex psychological state manifested through physical behavior and physiological activities [30]. When an organism perceives a situation that necessitates a response, automatic psychological and physiological reactions occur. Emotions impact both individuals’ health and their decision-making processes. There is currently extensive research being conducted in the field of emotion recognition, which is a crucial branch of affective computing and plays a significant role in understanding people’s thoughts and behaviors. Based on the type of signals used, emotion recognition can be categorized into two types: non-physiological signals and physiological signals. Non-physiological signals include vocal tone, body posture, gestures, facial expressions, and other similar signals. Physiological signals include electroencephalography (EEG), body temperature (T), electrocardiogram (ECG), electromyography (EMG), galvanic skin response (GSR), and respiration (RSP). Emotion recognition technology aims to identify two main parameters behind emotions: valence, which represents the change from unpleasant to pleasant, and arousal, which measures the change from calm to excited [31].

Brain activity is often influenced by external stimuli, such as flashing LEDs and sounds. The altered EEG activity can be collected and decoded to control real or virtual objects or external prosthetics [32]. The most commonly used external stimulation paradigms are the P300 and steady-state visual evoked potential (SSVEP) paradigms. This is because they both exhibit high signal response and signal-to-noise ratio. The classification accuracy rate and signal detection time impact the overall performance of BCI systems. These metrics are crucial for calculating the information transfer rate (ITR), a key performance indicator for a BCI system. A BCI system based on P300 or SSVEP typically has higher ITR values than other types of BCI systems [33].

The P300 is among the most widely studied event-related potentials (ERPs). It can be detected by averaging EEG signals in response to specific events. The reaction to unusual stimuli triggers the P300 component, a positive peak in ERP, typically ranging from 5 to 10 microvolts, occurring 220 to 500 milliseconds after the event. P300 recognition has been instrumental in developing essential communication tools and devices for patients with motor neuron diseases. BCI systems utilizing P300 provide these patients with affordable, portable, and non-invasive communication devices, potentially enhancing their quality of life. Despite advances in P300-based BCI, challenges remain in detecting and interpreting P300 signals. These waveforms are often high-dimensional and have a low signal-to-noise ratio (SNR). Additionally, P300 signals are known to be non-stationary, exhibiting significant variability across subjects [33].

SSVEP offers advantages such as minimal training requirements, high classification accuracy, and a high information transfer rate (ITR), making it widely regarded as one of the most effective paradigms for high-throughput BCI. SSVEP stands for steady-state visual evoked potential, which is an oscillatory response generated in the brain when a person views a visual stimulus with a frequency of 6 Hz or more. The brain’s natural oscillations may respond to such a stimulus. These SSVEP signals are most noticeable in the occipital region (visual cortex), with their primary frequency corresponding to the stimulus and its harmonics [34].

Epilepsy is a disease caused by abnormal excitation of brain cells leading to unprovoked seizures, with some main causes being hypoglycemia, malformations, and hypoxia during birth [35]. Epileptic seizures can occur at any time, leading to loss of consciousness and potentially resulting in injury or even death. Generally, epileptic seizures are classified into two main types: generalized seizures and partial seizures, depending on whether the seizure affects a part or all of the brain areas. In generalized seizures, all parts of the brain are affected; in partial seizures, only a specific region of the brain is involved [36]. Currently, for many patients with epilepsy, pharmacological treatments are not always effective, making it crucial to predict the occurrence of these seizures.

In addition to the widely adopted paradigms such as motor imagery (MI), P300, and SSVEP, several other BCI paradigms have been proposed to expand the scope and flexibility of EEG-based systems. These include paradigms based on real movement, somatosensory stimulation, and auditory and olfactory stimuli, as well as covert and overt attention. Other less common but potentially valuable approaches include observation-based paradigms, slow cortical potentials (SCPs), reflexive semantic conditioning, and passive paradigms where brain activity is recorded without any explicit task [32]. While these paradigms have received comparatively less attention in the context of EEG data generation, they offer promising avenues for future exploration, particularly in hybrid systems or personalized BCI applications.

4.5. Evaluation Metrics

To comprehensively assess the performance of generative models applied to EEG data, various evaluation metrics have been used in the literature. These metrics can be broadly categorized into four groups based on their underlying purpose and analytical focus: (1) Downstream task performance, which refers to how well the generated data support specific EEG analysis tasks such as classification, regression, or brain–computer interface (BCI) applications. High performance in these tasks indicates that the synthetic data preserve essential discriminative features of the original EEG. (2) Generative quality, focusing on how realistic and diverse the generated EEG signals are. Common metrics in this category include Fréchet Inception Distance (FID), Inception Score (IS), and signal quality indices that quantify the fidelity and variability of generated samples. (3) Task-specific metrics, which evaluate how well the generated data fulfill the specific requirements of particular EEG applications. For example, in brain–computer interface (BCI) systems, information transfer rate (ITR) is commonly used to measure the efficiency of information transmission. (4) Interpretability and visualization, which involve qualitative assessments such as visual inspection of waveforms, topographic maps, or latent space projections (e.g., t-SNE), helping to understand what the generative model learns and how it represents EEG data.

This categorization facilitates a more nuanced understanding of each study’s contributions and evaluation focus. Table 2 and Table 3 summarize the evaluation metrics used in this review.

Table 2.

Evaluation metrics used in this review.

Table 3.

Evaluation metrics used in this review (generative quality).

5. VAE for EEG

Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs) are considered among the most valuable methods in the field of unsupervised learning, with more and more applications in the domain of deep learning. In this section, we first introduce the basic theoretical knowledge about VAEs, followed by a systematic review and summary of the recent applications of VAEs in the field of EEG generation.

5.1. Review of Related Work

In this section, we reviewed the various applications of VAEs in EEG generation, and Table 4 summarizes all the papers on the use of VAEs across various EEG tasks.

Table 4.

Studies that used VAEs in EEG tasks.

5.1.1. VAE Models for Motor Imagery

Ozan et al. demonstrated the feasibility of using Conditional Variational Autoencoders (cVAEs) to generate multi-channel EEG signals under specific conditions [43]. The cVAE includes a stochastic encoder and a deterministic decoder. The encoder learns the variational posterior distribution of the latent representation, reconstructs the input signal through the decoder, and generates new samples during inference using a conditional variable. The data used in the experiments came from the PhysioBank EEG Motor Imagery Dataset, considering data from 100 participants who performed motor imagery tasks involving the right hand, left hand, and both feet. The experimental results indicate that the generated EEG segments can exhibit spectro-temporal characteristics under specific task conditions, including event-related desynchronization (ERD) patterns in the and bands for different tasks such as right-hand, left-hand, and both-feet movement imagery. These generated signals show significant frequency and spatial distribution differences across different task conditions.

Yang et al. proposed a model that combines Conditional Variational Autoencoders (CVAEs) and GAN for the generation and recognition of four classes of motor imagery EEG signals [44]. The CVAE-GAN integrates the encoder–decoder network of CVAE with the generative model of GAN. The encoder learns the latent representation z of data x under the condition of a specific class y, and the decoder uses this to predict the class. The GAN’s generator and discriminator work to produce data that approximate the real data distribution and distinguish between real and synthetic data. This study utilized both private [44] and public (BCI competition IV 2a) datasets. The authors processed the data by short-time Fourier transform (STFT) to convert the MI-EEG signals into a series of time–frequency images. The authors compared the model’s performance with other models (CVAE, CNN, CGAN) using metrics such as Inception Score (IS), Fréchet Inception Distance (FID), and Sliced Wasserstein Distance (SWD). The experimental findings indicate that CVAE-GAN performed best in terms of IS and SWD metrics, while CNN performed better in the FID metric but did not perform well in the other metrics. The augmented training dataset significantly improved the MI-EEG recognition performance, with experimental results showing that CVAE-GAN achieved higher classification accuracy across different datasets. In addition, the authors found that the generated data also exhibited significant power decreases and increases in the same electrode regions as the real data, corresponding to ERD/ERS phenomena.

George et al. detailed the study of data augmentation strategies in EEG-based decoding of motor imagery, where six data augmentation methods were employed to synthesize EEG motor imagery experimental data with the goal of improving decoding performance [47]. These methods include Trial Averaging (AVG), Temporal Slicing (RT), Frequency Slicing (RF), Noise Addition (NS), Cropping (CPS), and Variational Autoencoder (VAE). The generated data were assessed using four metrics: predictive accuracy, FID, t-Distributed Stochastic Neighbor Embedding (t-SNE) plots, and topographic head maps. The study showed that synthetic data shared similar characteristics with real data, and applying these methods resulted in an average accuracy increase of 3% and 12% on two public datasets, respectively. After applying augmentation techniques to the two datasets, the accuracy improvement with VAE compared to other augmentation methods was not significant. Additionally, VAE showed higher FID values compared to some other methods (e.g., adding noise), indicating that the data generated by VAE were slightly less similar to the statistical distribution of the original data. Furthermore, VAE was the most computationally expensive among all augmentation techniques, suggesting a need for a trade-off consideration when using VAE as an augmentation method in resource-constrained situations.

Zancanaro et al. introduced a model called vEEGNet, which is not only used for classification tasks in motor imagery but also for generating EEG signals [53]. vEEGNet comprises VAE and a feed-forward neural network. It utilizes the EEGNet [56] architecture within the VAE, with the encoder comprising temporal convolution, spatial convolution, and separable convolution, followed by a fully connected layer. vEEGNet’s decoder mirrors the encoder structure, employing transposed convolutions and upsampling layers. The feed-forward neural network is employed to classify EEG signals, with the output layer containing four neurons, each corresponding to one of the four motor imagery task categories. The model was tested on the BCI Competition IV 2a dataset, achieving an average classification accuracy of 71.95% and a kappa score of 0.63 across all subjects. The performance of vEEGNet was comparable to that of the other models, with accuracies ranging from 70% to 80% and a standard deviation of 8.78%, suggesting that performance varied considerably between subjects. Additionally, the reconstructed signals could identify motor-related cortical potentials (MRCPs), which are specific EEG components closely associated with motor execution or imagery.

5.1.2. VAE Models for Emotion Recognition

Luo et al. introduced sVAE to solve the problem of data scarcity in emotion recognition [39]. This model adds a data augmentation strategy to the standard VAE. Two strategies were compared: one involving the complete use of all generated data, and the other focusing solely on the highest-quality synthetic data. To evaluate the quality of the data, classifiers trained on the original dataset (Support Vector Machine (SVM) or Deep Neural Network (DNN) with shortcut layers) were used to classify the generated data. The experimental results demonstrated that using sVAE with a DNN classifier, the accuracy rates on SEED and DEAP datasets increased by 4.2% and 4.4%, respectively, compared to the baseline.

Bao et al. introduced a data augmentation model called VAE-D2GAN [45]. This model extracts emotional features from EEG signals using Differential Entropy (DE) and transforms these features into topological images as inputs. It includes an encoder, a generator, and dual discriminators ( and ). The encoder maps real samples into the latent space, the generator creates artificial samples based on the latent vectors, and the discriminators and distinguish between real and generated samples. The model’s performance was evaluated on two public emotional EEG datasets, SEED [57] and SEED-IV [58]. After using data enhancement, the recognition accuracy reaches 92.5% for SEED and 82.3% for SEED-IV, which are 1.5% and 3.5% higher than the recognition accuracy without data enhancement, respectively.

Bethge et al. introduced a model named EEG2Vec, which utilizes a framework based on Conditional Variational Autoencoders (cVAEs) aimed at learning generative–discriminative representations from EEG [46]. This method can predict emotional states and generate synthetic EEG data related to specific participants or emotions. EEG2Vec has three main components: a feature encoder, a decoder, and a classifier. The authors used EEGNet as the backbone network of the feature encoder. The latent representations obtained after data processing by the encoder are reconstructed in the decoder, and the classifier is used to predict emotional states. The final experimental results demonstrated that merging the original data with 20% synthetic data could increase the classification accuracy from 66% to 69%. Moreover, by adding 20% synthetic EEG data to the training set, the model significantly enhanced emotion recognition accuracy in some participants. Specifically, for participants 12 and 11, the emotion recognition accuracy increased by 42.85% and 31.57%, respectively.

Wang et al. introduced a Multimodal Domain Adaptive Variational Autoencoder (MMDA-VAE) approach, aimed at addressing the issue of limited calibration samples in EEG-based emotion recognition [50]. MMDA-VAE constructs a Multimodal Variational Autoencoder (MVAE) that projects multimodal data into a shared space. Through adversarial learning and cycle consistency regularization, it reduces the distribution differences across domains within the shared latent representation layer, thereby facilitating knowledge transfer. On this shared latent space, MMDA-VAE further trains a cross-domain classifier for emotion state recognition. The experiments were carried out using two datasets, SEED and SEED-IV. The results showed that MMDA-VAE significantly outperforms other modality fusion methods, such as Feature Level Fusion (FLF) based on SVM and Bimodal Deep Autoencoder (BDAE), achieving an average accuracy of 89.64% on the SEED dataset and 73.82% on the SEED-IV dataset. MMDA-VAE shows higher average accuracy on both datasets compared to common domain adaptation methods (e.g., TCA, DDC, DAN, etc.). The authors also conducted experiments on cross-subject emotion recognition, demonstrating MMDA-VAE’s good performance in handling cross-subject domain adaptation problems, with an average accuracy of 85.07% on the SEED dataset and 75.52% on the SEED-IV dataset.

Ahmed et al. utilized a deep learning model known as CNN-VAE to interpret and visualize the disentangled representation of individual-specific EEG topographic maps [54]. The main contribution of this study is to interpret the disentangled representation of VAE by activating only one potential component while setting the rest of the components to zero (since zero is the mean of the distribution), thereby identifying the role of each component in capturing the generative factors within the topographic maps. The experiment used the DEAP dataset, which contains multi-channel EEG recordings of 32 participants while watching 40 one-minute music video clips [59]. The researchers transformed the original EEG signals into EEG topographic maps that retained spatial information, further generating 40 × 40 sized EEG topographic head maps. Experimental results showed that when using all latent components as input for the decoder, metrics such as Mean Absolute Error (MAE), Mean Squared Error (MSE), Structural Similarity Index (SSIM), and Mean Absolute Percentage Error (MAPE) approached ideal values, indicating good performance of CNN-VAE in EEG topographic image reconstruction. In addition, the researchers found that by training the decoder by activating only one latent variable component and setting the others to zero, each latent variable made a different contribution to capturing the generative aspects in the topographic maps.

Tian et al. introduced a model named DEVAE-GAN, which integrates spatiotemporal features to generate high-quality artificial EEG data, aiming to address the current problem of data scarcity in EEG-based emotion recognition tasks [55]. DEVAE-GAN combines VAE and GAN, with a special emphasis on introducing dual encoders to concurrently capture the spatiotemporal characteristics of EEG signals. One encoder is designated for extracting processed time features, while another encoder is tasked with extracting spatial information distributed according to EEG electrode locations. These two types of information are considered latent variables. By merging these two vectors, a new latent variable distribution is formed, which contains complementary information. The authors conducted experiments using the SEED dataset, which contains EEG signals from fifteen subjects, categorized into positive, negative, or neutral emotions. The experimental results show that the method achieved an average classification accuracy of 97.21% on the SEED dataset, which is a 5% improvement over the original dataset. Additionally, the authors measured the similarity between the generated data and the original data using various metrics such as JS (Jensen–Shannon) divergence, KL divergence, and WD (Wasserstein distance). The results indicate that DEVAE-GAN performs the best in generating high-quality artificial samples that are similar to the distribution of the original data.

5.1.3. VAE Models for External Stimulation

Aznan et al. utilized VAE as generative model to generate EEG signals, aiming to enhance the performance of steady-state visual evoked potential (SSVEP) classification [37]. The authors trained each generative model solely on the Video-Stimuli dataset under a single experimental condition. The VAE used 1D convolutions to encode features from given EEG data samples, which were used to parameterize a Gaussian distribution. Latent representations sampled from this distribution were passed to the decoder part of the model, which transformed the latent representations back into the original EEG data. The authors assessed the feasibility of improving classification results by using synthetic data, merging synthetic data (30 samples per class) with real data to form a training set. The results indicated that including synthetic data positively influenced the generalization ability across different subjects. In addition, the study revealed that pre-training on synthetic data improved the cross-subject generalization of the model, especially when pre-training with data generated by VAE, where there was a significant improvement in classification accuracy.

5.1.4. VAE Models for Epilepsy

Li et al. proposed a model named CR-VAE for generating medical time series data [51]. Unlike traditional recursive VAEs, this model uses a multi-head decoder, where each decoder head is in charge of producing a different dimension of the data. By applying a penalty that induces sparsity to the weights of the decoder and encouraging certain weights to be zero, this model learns a sparse adjacency matrix that encodes causal relationships between all pairs of variables. This matrix allows the decoder to strictly follow the principles of Granger causality, making the data generation process transparent. The EEG dataset used by the authors is a real intracranial EEG recording dataset from patients with drug-resistant epilepsy. Compared with TGAN, VRNN, and VRAE, CR-VAE showed better performance both qualitatively and quantitatively.

5.1.5. VAE Models for Other EEG Applications

Krishna et al. introduced an RNN-based VAE that enhances the performance of EEG-based speech recognition systems by generating more meaningful EEG features through a novel constrained loss function [40]. Unlike previous speech recognition methods, this model does not depend on extra features such as acoustic or phonetic features. Building upon the VAE framework, the model includes an additional component in the loss function (ASR model loss), enabling the generation of more significant EEG features directly from the raw data, thereby improving the performance of the speech recognition system. The model’s encoder employs a single-layer Long Short-Term Memory (LSTM) network to transform input EEG features into latent representations. The authors then connect the output of one dimension (the fifth dimension) to the ASR model to guide the VAE in generating EEG features most relevant to speech production. The authors conducted experiments on both isolated speech recognition and continuous speech recognition using datasets from [41,42]. For isolated speech recognition, the classifier model trained and tested with EEG features generated by the VAE model showed a performance improvement of 4.55% over the baseline model using original 30-dimensional EEG features. For continuous speech recognition, experiments using EEG features generated by the VAE model consistently outperformed the baseline, and the word error rate (WER) was lower than previous methods for the larger test set corpus size.

5.2. Conclusions

This section summarizes the VAE models used in EEG tasks, demonstrating remarkable effectiveness across a range of EEG applications. VAE provides a solid probabilistic framework by learning latent representations of raw data to generate new data samples. By compressing high-dimensional EEG data into low-dimensional latent representations, VAE reduces computational costs and speeds up calculations. It is particularly useful for understanding and modeling complex biological signals like EEG. Compared to traditional autoencoders, the key improvement of VAE lies in modeling latent variables as an isotropic Gaussian distribution. Due to the independence of its latent variables, VAE can better capture and generate the features of complex data like EEG.

Despite VAE’s good performance in generating EEG data, the quality of the generated data is still inferior to that of real data and data generated by GAN models. The generated data may contain some noise and inaccurate signal features, which can affect the performance of downstream applications. Additionally, VAE performs poorly when dealing with complex EEG data, such as multi-channel EEG data or high-frequency signals. Most importantly, although VAE models have shown excellent performance in various EEG tasks, the understanding of how they recognize and generate specific EEG patterns is still insufficient. Future research could focus on improving the interpretability of these models.

6. GAN for EEG

As one of the hottest deep learning models currently, GANs (Generative Adversarial Networks) are applied in various fields. This section systematically reviews the various applications of GAN models in the field of EEG generation.

6.1. Review of Related Work

In this section, we reviewed the various applications of GANs in EEG generation. As in Section 5, the reviewed studies are also categorized into five groups: motor imagery, emotion recognition, external stimulation, epilepsy detection, and other EEG applications.

6.1.1. GAN Models for Motor Imagery

Currently, EEG-based motor imagery signals have been utilized in a range of healthcare applications, such as neurorehabilitation [29], restoring lost or damaged limb functions through the control of prosthetics or exoskeletons, replacing walking functions for those unable to walk with robotic wheelchairs, and for spelling and cursor control. However, MI-EEG signals are intricate and possess high-dimensional structures. Consequently, it is necessary to employ advanced machine learning and deep learning (DL) algorithms to analyze and interpret these brain data. Generative deep learning models are often used to enhance and improve training data. Since Goodfellow et al. proposed GANs, there have been numerous advancements in generating image, audio, and video data, highlighting their significant potential for various applications. However, the potential of GANs has not yet been explored in dealing with the challenge of limited dataset sizes in EEG tasks. We review recent studies that have demonstrated employing GANs for data augmentation can significantly enhance the performance of MI-based BCI systems. Table 5 summarizes the studies of GAN models in motor imagery tasks.

Table 5.

Studies that used GANs in MI tasks.

In 2018, Abdelfattah et al. proposed the Recurrent Generative Adversarial Network (RGAN) [60]. This model shares the same design philosophy with conventional GAN models, but it incorporates Recurrent Neural Networks (RNNs) in the generator part. EEG signals are continuous time series where subsequent samples may be related to previous ones, indicating the presence of temporal dependencies. Such dependencies mean that a sample in the signal could be influenced by the preceding sample. RNNs are particularly suited for handling this type of data because they can capture the time dynamics and long-term dependencies in sequence data. The generator consists of four hidden layers: the initial two layers are RNNs that capture the temporal dependencies within the signals, followed by two fully connected (FC) layers, each comprising 128 neurons. The input to the generator is a noise vector matching the dimensions of the real signal, and the output is a generated signal sample that is then used as input for the discriminator. The authors conducted two experiments. The first one compared the RGAN with the Autoencoder (AE) and Variational Autoencoder (VAE). They assessed each model by measuring the reconstruction error, using a 5-fold cross-validation to separate the dataset, and averaged the results across 109 subjects. The results indicated that the RGAN attained an average signal reconstruction accuracy of 89.8% ± 3.5% across the dataset of 109 subjects. In contrast, the average signal reconstruction accuracy for AE and VAE was 54.9% ± 6.5% and 69.9% ± 3.7%, respectively.

The second experiment (RGAN Augmentation under Reduced-Data Conditions) assessed how RGAN-based data augmentation affects the classification accuracy of three models: Deep Neural Network (DNN), Random Forest Trees (RFTs), and SVM [60]. For this assessment, the authors initially evaluated the mean and standard deviation of each model using 100% of the training data from each subject’s dataset. They then conducted two additional experiments with 25% and 50% of the original dataset size, respectively, while augmenting the remaining samples using the RGAN model. The performance of the different classification models (DNN, SVM, RFT) significantly improved with RGAN augmentation. With 25% of the dataset size and RGAN augmentation, the classification accuracy (mean ± standard deviation) for the three models was , , and . When using 50% of the dataset and augmenting with RGAN, the DNN’s performance was notably superior to that of SVM and RFT, with increases of approximately 21% and 14%, respectively. With RGAN-augmented datasets, even at smaller dataset sizes (25% and 50% of the original size), classification models were able to achieve performance close to that with 100% dataset size.

Hartmann et al. introduced a GAN named EEG-GAN, which is designed to generate EEG signals [62]. To address training stability issues, the researchers improved the training process of WGAN to make it more suitable for generating EEG signals. A key improvement in WGAN is the introduction of gradient penalty (GP), which resolves the issues caused by weight clipping in the original WGAN. Building on this, the authors further proposed a method to increase training stability by gradually relaxing the gradient constraints. Moreover, during the training process, they adopted a training approach that progressively increases resolution, starting from a lower resolution and gradually advancing to the target resolution. This method helps enhance the quality of the generated signals and reduces instability during training. They also employed advanced techniques such as batch normalization, equalized learning rate, and pixel normalization to further improve the model’s performance and stability. For EEG signal generation, the authors chose a conventional CNN. They explored different upsampling and downsampling methods, including nearest-neighbor upsampling, linear interpolation, and cubic interpolation, and compared their impacts on the quality of the generated signals. To assess the quality of the generated EEG signals, the authors used the following metrics: IS, FID, Euclidean Distance (ED), and SWD. The experiment utilized a dataset of EEG signals produced by subjects performing simple motor tasks (such as resting or moving the hand). These signals were recorded with a 128-electrode EEG system and downsampled to 250 Hz. Experimental results showed that EEG-GAN is capable of generating samples that closely resemble real EEG signals in both the time and frequency domains.

Zhang et al. proposed a Conditional Deep Convolutional Generative Adversarial Network (cDCGAN) to generate EEG signals for data augmentation [63]. The loss function of cDCGAN is similar to that of GAN but introduces conditional information during the generation and discrimination processes. During the generation process, the model first converts the EEG signals into time–frequency representations (TFRs) and uses a two-dimensional convolutional kernel to learn the time–frequency features. Then, it generates waveform EEG signals through the inverse process of wavelet transform. The authors evaluated the model using the BCI Competition II dataset III. Experimental results show that by adjusting the ratio of synthetic data to original data during mixed training, the classification accuracy improved from 85% (0.5) to 90% (2). Additionally, the authors compared the synthetic EEG TFR with the original EEG TFR; it can be seen that the synthetic data closely match the original data in major time–frequency features and includes some additional features.

Roy et al. proposed a novel approach, MIEEG-GAN, for generating motor imagery EEG signals in [64]. The model consists of two main components: a generator (G) and a discriminator (D), both constructed using Bidirectional Long Short-Term Memory (Bi-LSTM) neurons. The experiments utilized Dataset 2b from the BCI Competition IV [76]. The study compared the first-order features between generated and original EEG signals, finding similarities that indicate the GAN’s capability to capture the temporal relations inherent in real EEG signals. The authors conducted a short-time Fourier transform (STFT) analysis to compare the time–frequency characteristics of real and synthetic EEG data. The results showed that synthetic and real EEG signals exhibit similar power spectral density (PSD) characteristics in the -band (13–32 Hz), although the synthetic signals were noisier than the real ones. Furthermore, the Welch method was used to analyze the PSD, revealing similar patterns of power decrease in specific frequency ranges between real and synthetic signals. This indicates that synthetic EEG signals can effectively simulate the statistical properties of real EEG signals. The authors noted that with more training trials involving motor imagery tasks, the model is expected to better learn key neural patterns such as event-related desynchronization (ERD) and event-related synchronization (ERS), thereby enhancing the utility of the synthetic signals for classification tasks.

Debie et al. proposed a privacy-preserving GAN for generating and classifying EEG data while protecting data privacy [65]. The main goal of this method is to generate realistic EEG signals without disclosing sensitive features of the original data. The experiments were conducted using the Graz dataset A, which includes data from nine healthy subjects performing four motor imagery tasks: imagining movements of the left hand, right hand, both feet, and the tongue [66]. Differential privacy, mentioned in the article, is a technique to protect individual privacy, especially during the publication and analysis of statistical databases. It protects personal information from being disclosed by ensuring that the output of an algorithm does not significantly change whether or not any individual’s data are included or removed from the database. In the process of synthesizing EEG data, the authors trained two models using Differential Privacy Stochastic Gradient Descent (DP-SGD): a non-private GAN (NP-GAN) and a privacy-preserving GAN (PP-GAN). NP-GAN is a GAN trained normally to generate synthetic EEG data without incorporating privacy measures. PP-GAN, on the other hand, integrates differential privacy techniques during the GAN training process to ensure that the generated data maintain high fidelity while protecting the privacy of subjects. Over 1000 training epochs, the loss functions of the generator and discriminator were monitored. The loss functions in the training of NP-GAN and PP-GAN indicated that the models gradually achieved the capability to generate high-quality synthetic data. The authors investigated the impact of the noise multiplier on the performance of PP-GAN, especially regarding the quality of generated data and privacy protection. A higher noise multiplier offers stronger privacy protection but may affect the quality of the generated data, making the appropriate selection of the noise multiplier key to balancing privacy protection and data quality. Increasing the training data to 150 artificially generated samples improved the classification accuracy of the utilized classifiers, while using 200 artificially generated samples resulted in poor outcomes.

Luo et al., in reconstructing EEG signals, did not employ the traditional time mean squared error but introduced a novel reconstruction algorithm based on GAN that incorporates Wasserstein distance [77] and a temporal–spatial-frequency (TSF-MSE) loss function [67]. The entire WGAN-EEG framework comprises three parts: a deep generator, a TSF-MSE loss calculator, and a discriminator network. In the first part, the authors employed the same layout of “B residual blocks” as proposed by He et al. [78], where 16 B residual blocks are applied to the original EEG signals to extract deep features of the generator. The second part is the TSF-MSE loss calculator, which inputs both the generator’s reconstructed EEG signals and the real signals to extract common spatial patterns (CSPs) and PSD features. Then, using the extracted features, the loss is calculated based on the TSF-MSE loss function, which decomposes the reconstruction error into three components: temporal MSE between time steps (), which reflects signal continuity over time; spatial MSE between EEG channels (), which measures spatial consistency across electrodes; and frequency MSE between signal batches (), which captures the preservation of spectral information in the generated signals. The authors utilized three datasets: the Action Observation (AO) dataset [79], Grasp and Lift (GAL) dataset [80], and BCI competition IV dataset 2a [81]. Through experiments, the authors found that under the same sensitivity conditions, the similarity of reconstruction effects of the framework on different datasets is AO > GAL > MI, indicating that the high-sensitivity WGAN framework reconstructed low-sensitivity EEG signals well, but the low-sensitivity model could not accurately reconstruct high-sensitivity EEG signals. For reconstructions of the same sensitivity, the WGAN framework outperformed the GAN framework in terms of average spectral results and Brain Electrical Activity Mapping (BEAM) results. For reconstructions of different sensitivities, the high-sensitivity model performed better in terms of average spectral differences and BEAM. Specifically, the signals reconstructed by the WGAN framework exhibit more distinct ERD/ERS patterns in the BEAM results. According to quantitative analysis, the WGAN framework exhibited higher classification accuracy. The classification accuracy rates of the WGAN-reconstructed signals on the AO dataset, GAL dataset, and MI dataset were 67.67%, 73.89%, and 64.01%, respectively, showing improvements of 4.1%, 4.11%, and 2.03% compared to the original data, respectively.

Zhang et al. researched and compared different DA methods to improve the classification performance of MI data [21]. The study included traditional methods (geometric transformations, AE, VAE) as well as DCGANs. Compared to traditional GAN models, DCGANs replace the pooling layers with fractional-strided convolutions in the generator and strided convolutions in the discriminator. The authors utilized DCGANs to obtain spectral graphs of MI data, which were subsequently classified by CNN to verify the performance improvement after data augmentation. Two datasets were used: BCI competition IV datasets 1 and 2b, with evaluation metrics including FID, average classification accuracy, and average kappa value. Compared to the baseline, the average classification accuracy of the CNN method without data augmentation was 74.5% ± 4.0% for dataset 1 and 80.6% ± 3.2% for dataset 2b. Different DA methods (NA-CNN, VAE-CNN, and DCGAN-CNN) provided higher accuracy, with DCGAN-CNN’s classification accuracy being 12.6% higher than the baseline for dataset 2b and 8.7% higher for dataset 1. In dataset 1, the accuracy of the CNN-DCGAN model was 5% higher than the average accuracy of VAE and AE, thus outperforming the best classification method mentioned among the DA methods previously. Furthermore, in terms of classification accuracy for dataset 2b, DCGAN’s accuracy was 5.6% and 10% higher than VAE and AE, respectively.

In 2021, Fahimi et al. proposed a framework based on DCGAN for generating artificial EEG to augment the training set, thereby improving the performance of BCI classifiers [68]. During the training process, the authors first extracted feature vectors from a subset of the target participant’s data using a pre-trained Deep Convolutional Neural Network (DCNN) model. Then, they trained the GAN using these feature vectors as a condition to generate new EEG data. The generated EEG data were merged with the original training set to form an augmented training set for training the BCI system’s classifier. The experiment included 14 healthy participants aged between 21 and 29 years. Participants were asked to perform a motor task under two different conditions: a focused attention condition and a diverted attention condition, which included opening and closing the right hand. The authors used an end-to-end DCNN as the baseline classifier to classify the EEG data of participants under both focused and diverted attention conditions. The results showed that, without data augmentation, the classifier achieved an average accuracy of 80.09% under the focused attention condition, which dropped to 73.04% under the diverted attention condition. After augmenting with data generated by DCGANs, the classification performance significantly improved. Under the focused attention condition, the accuracy increased by 5.45% and it increased by 7.32% under the diverted attention condition. The study also compared the effects of data augmentation using Variational Autoencoders (VAEs) and time–frequency domain segmentation and recombination (S&R) methods. The results showed that the DCGAN significantly outperformed these two methods in the diverted attention condition.

Song et al. proposed a new framework based on GANs, named CS-GAN (Common Spatial GAN), aimed at improving the accuracy of cross-subject EEG signal classification by generating high-quality data [69]. Unlike traditional GANs’ discriminators, the discriminator of CS-GAN includes two modules: one focused on discriminating EEG signals, and the other focused on maintaining the spatial features of the EEG signals (referred to as the CS-M). This module utilizes a spatial filter to process the input EEG data and enhances the spatial properties of the data during the training process. The training of CS-GAN employs not only the traditional adversarial loss but also introduces two special loss functions: covariance loss (cov-loss) and eigenvalue loss (ev-loss), which are used to maintain the spatial patterns of the generated data similar to the original data and enhance the distinction between different categories, respectively. The experiments used the BCI competition IV dataset 2a, which contains EEG data of nine subjects performing four types of motor imagery tasks (left hand, right hand, both feet, and tongue). The experimental results show that using 100 real samples for adaptive training yields an average classification accuracy of 59.40%, while using 3000 generated samples achieves an average accuracy of 67.97%, which is 15.85% higher than the leave-one-subject-out (LOO) test result of 52.12%. Compared to other data augmentation methods, the classification accuracy of the CS-GAN method is also higher: adding Gaussian noise achieves 64.60%, segmentation and recombination achieves 64.54%, VAE achieves 55.73%, and DCGAN achieves 54.20%. The authors’ ablation experiments further validate the effectiveness of each module in the CS-GAN method. Removing all modules results in a classification accuracy of 56. 97%, removing the common spatial module (CS-M) results in 57.33%, removing the covariance loss (cov-loss) results in 65.54%, and removing the eigenvalue loss (ev-loss) results in 66.02%.

Xu et al. enhanced the dataset for classifying left and right hand motor imagery (MI) in stroke patients by generating additional electroencephalography (EEG) data using Cycle-Consistent Adversarial Networks (CycleGANs) [70]. The research team utilized the EEG2Image method based on the Modified S-transform (MST) to convert EEG data into EEG topographies, a method capable of preserving the frequency domain characteristics and spatial information of the EEG signals. MST improves upon the S-transform by introducing adaptive parameters to optimize the accuracy of high-frequency energy calculations, thereby better focusing on the time–frequency characteristics of EEG data. In data processing, researchers first downsampled the original EEG data, then used MST to extract activities in the mu and beta frequency bands, and converted this information into 2D images through polar projection. Subsequently, researchers employed CycleGAN to learn and generate stroke patients’ motor imagery EEG data. CycleGAN includes two generators (G and F) and two discriminators ( and ), used for unpaired image-to-image translation between two data domains. In this study, one domain was the EEG topographies of healthy subjects, and the other was the EEG topographies of stroke patients. CycleGAN ensured consistency of information through cycle consistency loss when converting from one domain to another and then back again. Experiments compared the impact of adding different quantities of generated data on classification accuracy. When the amount of generated data equaled the original data volume, classification performance significantly improved. However, as more generated data were added, the increase in classification accuracy began to plateau, and in some cases, even slightly decreased. In addition, the authors found that the generated data also exhibited ERD characteristics in the rhythm.

Xie et al. proposed a novel algorithm that combines Long Short-Term Memory Generative Adversarial Networks (LGANs) and Multi-Output Convolutional Neural Networks (MoCNNs) for MI classification and introduced an attention network to enhance model performance [71]. The LGAN’s generator consists of a fully connected layer and four convolutional layers, aimed at generating realistic MI data and establishing a mapping between categories and data. The discriminator is composed of three convolutional layers, one LSTM layer, and a fully connected layer. MI data often exhibit strong temporal features, which are challenging to recognize with convolutional layers alone. The LSTM can extract temporal information from the MI data’s time series. Thus, the features extracted by the CNN are fed into the LSTM layer. The discriminator’s fully connected layer serves as the final output network. The MoCNN model includes a convolutional layer feature extraction network and three sub-classification networks. Specifically, the feature extraction network shares the same structure as the convolutional layers in the discriminator, and they share parameters. During the training of MoCNN, the feature extraction network is not trained, and the output of each convolutional layer is input into the sub-classification networks. Each sub-classification network completes the classification task based on the features it receives and then outputs the classification results. The authors used two datasets for experimentation: BCI competition IV dataset 2a and BCI Competition IV dataset 2b. The experimental results indicate that on dataset 2a, after data augmentation using the proposed model, the average classification accuracy reached 83. 99%, significantly higher than other generative adversarial network methods (e.g., InfoGAN at 75.30% and WGAN at 60.87%). On dataset 2b, the classification accuracy reached 94.31%, significantly outperforming other methods (e.g., FBCSP combined with CNN at 82.39% and attention network combined with CNN at 87.60%). Confusion matrix analysis showed that data augmentation increased the overall classification accuracy by approximately 8%, and the kappa value increased from 0.688 to 0.787. By evaluating the generated data using the correlation coefficient (R value) and mutual information score (I value), it was found that the data generated using this method had the highest similarity to the real data, especially in the left-hand and right-hand MI data.

Raoof et al. proposed a conditional input-based GAN model for generating spatiotemporal electroencephalograph (EEG) data related to motor imagery [72]. Besides the generator and discriminator, this model also includes an encoder and a decoder, which together form an autoencoder. This autoencoder is used to encode EEG data into a low-dimensional (i.e., latent) space and recover the original data from this space. All four components adopt Gated Recurrent Unit (GRU) structures to generate high-quality EEG data through conditional input. The authors conducted experiments using an EEG dataset comprising 10 subjects. Evaluation metrics include the inverted Kolmogorov–Smirnov test, KL divergence, classification experiments (LSTM, SVM, 1D TCNN), and visualization techniques (PCA, t-SNE). The experimental results show that the data generated by the model are highly similar to real data in terms of statistical properties, classification performance, and feature space distribution, achieving an accuracy of 94.1% in classification tasks. This significantly enhances the performance of motor imagery classification and also indirectly verifies that the synthetic data preserve frequency response features related to ERDs.

Dong et al. proposed an EEG data augmentation method based on DCGAN to address issues caused by data scarcity or imbalance in EEG data classification [73]. The DCGAN in this study uses one-dimensional (1D) convolutional layers to handle EEG time series data. The generator takes random Gaussian noise as input, which passes through two dense layers and Parametric Rectified Linear Unit (PReLU) activation functions, and then generates EEG data through five transposed convolution blocks with different kernel sizes and strides. The discriminator receives either real or generated EEG data as input, extracts features through five 1D convolutional blocks, each consisting of a 1D convolutional layer, batch normalization layer, and PReLU activation function. Additionally, a dropout layer is added after each convolutional block to prevent overfitting. Finally, two dense layers are used to determine the authenticity of the input data. The authors evaluated the model using the publicly available EEG Motor Movement/Imagery dataset from Physionet. The authors analyzed the generated data using Fast Fourier Transform (FFT) and Continuous Wavelet Transform (CWT) and found that the generated data were similar to the real data in terms of temporal and frequency characteristics. The frequency distribution trends of the generated data and the real data are similar, primarily concentrated in the range of 0–10 Hz. Additionally, the power spectral maps of the generated signals and the real signals match well within the range of 0–2.5 Hz and 0–1 s.

Yin et al. propose an unsupervised end-to-end subject adaptation method named GITGAN for EEG motor imagery analysis, aiming to improve cross-subject data adaptability in BCI systems through GAN, thereby enhancing the system’s generalization capability [74]. GITGAN combines strategies for outlier removal, data augmentation, and generative adversarial learning to facilitate EEG data transfer between different subjects. The entire process starts with data preprocessing, followed by a generator, encoder, and domain discriminator, and finally, classification in the target domain. In the outlier removal process, the authors propose cbaDBSCAN (class-balanced auto-adaptive DBSCAN). The improved DBSCAN algorithm clusters data separately for each class, dynamically adjusting clustering parameters such as the radius and the minimum number of neighbors (minPts) based on class characteristics to ensure reasonable clustering results. For data augmentation, the authors use the MixUp method to increase the data volume, mitigating the reduction in data caused by outlier removal. After data preprocessing, generative adversarial learning is employed. The encoder and generator structures transfer features from the source data to the target data domain, creating a target data-centered space that maintains the integrity of the target data’s latent representation. Additionally, a label consistency mechanism is introduced to ensure that the class information of the source data is preserved during transfer. Experiments conducted on the OpenBMI [75] and BCI Competition IV dataset 2a show that the accuracy, F1-score, and kappa value of GITGAN are significantly superior to those of other comparison methods (EEGITNet, EEGResNet, EEG-Adapt, ADAST, SLARDA, TSMNet). Through the Layer-Wise Relevance Propagation (LRP) technique, the authors also analyze the interpretability of the GITGAN model. LRP results show that GITGAN can accurately identify brain regions associated with motor imagery tasks.

6.1.2. GAN Models for Emotion Recognition