Highlights

This paper presents the GCN-Transformer, a novel deep learning model that integrates Graph Convolutional Networks (GCNs) and Transformers to enhance multi-person pose forecasting. The model effectively captures both spatial and temporal dependencies, improving the performance of pose forecasting. Additionally, a new evaluation metric, Final Joint Position and Trajectory Error (FJPTE), is introduced to provide a more comprehensive assessment of movement dynamics. These contributions establish GCN-Transformer as a state-of-the-art solution in pose forecasting.

- What are the main findings?

  - We introduce GCN-Transformer, a novel architecture combining Graph Convolutional Networks (GCNs) and Transformers for multi-person pose forecasting.

  - We propose a new evaluation metric, Final Joint Position and Trajectory Error (FJPTE), which comprehensively assesses both local and global movement dynamics.

- What is the implication of the main finding?

  - GCN-Transformer achieves state-of-the-art performance on the CMU-Mocap, MuPoTS-3D, SoMoF Benchmark, and ExPI datasets, demonstrating superior generalization across different motion scenarios.

  - The proposed FJPTE metric improves the evaluation of pose forecasting models by accounting for both movement trajectory and final position, enabling better assessments of motion realism.

Abstract

Multi-person pose forecasting involves predicting the future body poses of multiple individuals over time, involving complex movement dynamics and interaction dependencies. Its relevance spans various fields, including computer vision, robotics, human–computer interaction, and surveillance. This task is particularly important in sensor-driven applications, where motion capture systems, including vision-based sensors and IMUs, provide crucial data for analyzing human movement. This paper introduces GCN-Transformer, a novel model for multi-person pose forecasting that leverages the integration of Graph Convolutional Network and Transformer architectures. We integrated novel loss terms during the training phase to enable the model to learn both interaction dependencies and the trajectories of multiple joints simultaneously. Additionally, we propose a novel pose forecasting evaluation metric called Final Joint Position and Trajectory Error (FJPTE), which assesses both local movement dynamics and global movement errors by considering the final position and the trajectory leading up to it, providing a more comprehensive assessment of movement dynamics. Our model uniquely integrates scene-level graph-based encoding and personalized attention-based decoding, introducing a novel architecture for multi-person pose forecasting that achieves state-of-the-art results across four datasets. The model is trained and evaluated on the CMU-Mocap, MuPoTS-3D, SoMoF Benchmark, and ExPI datasets, which are collected using sensor-based motion capture systems, ensuring its applicability in real-world scenarios. Comprehensive evaluations on the CMU-Mocap, MuPoTS-3D, SoMoF Benchmark, and ExPI datasets demonstrate that the proposed GCN-Transformer model consistently outperforms existing state-of-the-art (SOTA) models according to the VIM and MPJPE metrics. 
Specifically, based on the MPJPE metric, GCN-Transformer shows a 4.7% improvement over the closest SOTA model on CMU-Mocap, a 4.3% improvement on MuPoTS-3D, a 5% improvement on the SoMoF Benchmark, and a 2.6% improvement on the ExPI dataset. Unlike other models, whose performance fluctuates across datasets, GCN-Transformer performs consistently, demonstrating its robustness in multi-person pose forecasting and providing a solid foundation for applying GCN-Transformer in different domains.

1. Introduction

Pose forecasting is a machine learning task that predicts future poses based on a historical sequence of poses. This task is inherently challenging, as it requires models to anticipate movements several seconds into the future, thereby necessitating the capture of intricate temporal dynamics. The goal of pose forecasting is to provide accurate predictions of future poses, which can have practical applications in a wide range of fields. For example, in robotics, pose forecasting models enable robots to infer human intentions and predict future movements, facilitating safer, more intuitive collaboration in environments such as manufacturing floors, healthcare, and assistive robotics [1,2,3,4,5,6]. In sports analytics, forecasting player trajectories and body orientations several moments ahead supports tactical decision-making, performance evaluation, and even automated highlight generation. In autonomous driving, the accurate prediction of pedestrian motion improves vehicle navigation and enhances safety in complex urban settings. Intelligent surveillance systems use pose forecasting to proactively detect abnormal group behaviors, such as crowd surges or physical altercations, by identifying deviations from expected motion patterns. In virtual and augmented reality, forecasting full-body motion enables latency compensation and smoother avatar rendering during real-time collaborative experiences or immersive gameplay. These applications often rely on sensor-based motion capture systems, including vision-based sensors, inertial measurement units (IMUs), and depth cameras, to collect high-precision human movement data for training and inference [7,8,9].

One way to conceptualize pose forecasting is to divide it into two main categories: single-person [3,10,11,12,13,14] and multi-person [15,16,17,18,19,20] pose forecasting. In single-person pose forecasting, the task focuses on predicting the future poses of an individual based solely on their previous poses. This scenario is typically less complex, as it involves modeling the movement patterns of a single entity. On the other hand, multi-person pose forecasting extends the task by simultaneously predicting the future poses of multiple individuals. In this scenario, the forecasting model needs to consider each person’s previous poses and extract social dependencies and interactions among them. These interactions could include factors such as proximity, response to a movement, and body language, which significantly influence the future movements of individuals within a scene.

Various deep learning methods have been employed to tackle the task of pose forecasting. Fully connected networks directly map input pose sequences to future predictions, which is suitable for straightforward temporal dependencies [10,11,17]. Recurrent neural networks (RNNs) capture long-range dependencies by maintaining hidden states across time steps [12]. Graph Convolutional Networks (GCNs) excel in modeling spatial dependencies and interactions in multi-person scenarios [3,13,20,21]. Attention mechanisms and Transformer architectures focus on the relevant parts of input sequences, handling long-range dependencies effectively for precise predictions [15,16,18,19].

This paper presents a novel model, GCN-Transformer, designed to address the challenges of multi-person pose forecasting. Our model integrates key features from various deep learning architectures to capture complex spatiotemporal dependencies and social interactions among multiple individuals in a scene. GCN-Transformer consists of two main modules: the Scene Module and the Spatiotemporal Attention Forecasting Module. The Scene Module leverages Graph Convolutional Networks (GCNs) to extract social features and dependencies from the scene context, while the Spatiotemporal Attention Forecasting Module utilizes a combination of Temporal Graph Convolutional Networks (T-GCNs) and Transformer decoder modules to predict future poses. By combining these components, GCN-Transformer achieves state-of-the-art performance in multi-person pose forecasting tasks, demonstrating its effectiveness in capturing intricate motion dynamics and social interactions. GCN-Transformer is trained and evaluated on the sensor-based datasets CMU-Mocap, MuPoTS-3D, SoMoF Benchmark, and ExPI, which include motion capture data collected through real-world sensing systems. To improve the movement dynamics of predicted sequences while also capturing interaction dependencies, we introduce two new loss terms during the training phase: the multi-person joint distance loss and the velocity loss. The multi-person joint distance loss focuses on maintaining realistic spatial relationships between the joints of different individuals, while the velocity loss promotes the accurate modeling of movement dynamics.
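The two loss terms are only named at this point, without formulas. As a rough illustration of the quantities involved, the NumPy sketch below shows one plausible reading: a velocity term that compares frame-to-frame displacements, and a distance term that compares distances between joints of different people. The function names, the `(P, T, J, 3)` array layout, and the exact formulas are assumptions made for this example and should not be read as the definitions used to train GCN-Transformer.

```python
import numpy as np

def velocity_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean L2 gap between predicted and true frame-to-frame displacements.

    pred, target: (P, T, J, 3) = persons, frames, joints, xyz (assumed layout).
    """
    pred_vel = np.diff(pred, axis=1)      # (P, T-1, J, 3)
    target_vel = np.diff(target, axis=1)  # (P, T-1, J, 3)
    return float(np.linalg.norm(pred_vel - target_vel, axis=-1).mean())

def cross_person_distances(poses: np.ndarray) -> np.ndarray:
    """Distances between every joint of person a and every joint of person b.

    Returns an array of shape (P, P, T, J, J).
    """
    diff = poses[:, None, :, :, None, :] - poses[None, :, :, None, :, :]
    return np.linalg.norm(diff, axis=-1)

def joint_distance_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean absolute gap in inter-person joint distances (needs P >= 2)."""
    gap = np.abs(cross_person_distances(pred) - cross_person_distances(target))
    different_people = ~np.eye(pred.shape[0], dtype=bool)  # drop a == b pairs
    return float(gap[different_people].mean())

rng = np.random.default_rng(0)
target = rng.normal(size=(2, 6, 4, 3))

# Shifting the whole scene keeps velocities and inter-person distances intact.
scene_shift = target + np.array([1.0, 0.0, 0.0])
# Shifting only person 0 keeps velocities but changes inter-person distances.
person_shift = target.copy()
person_shift[0] += np.array([1.0, 0.0, 0.0])

print(velocity_loss(scene_shift, target), joint_distance_loss(scene_shift, target))
print(velocity_loss(person_shift, target), joint_distance_loss(person_shift, target))
```

In this reading, the distance term reacts only when people move relative to one another, which is the kind of interaction signal the text attributes to it.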

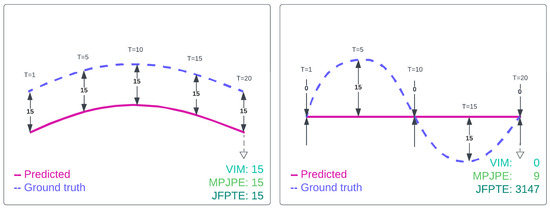

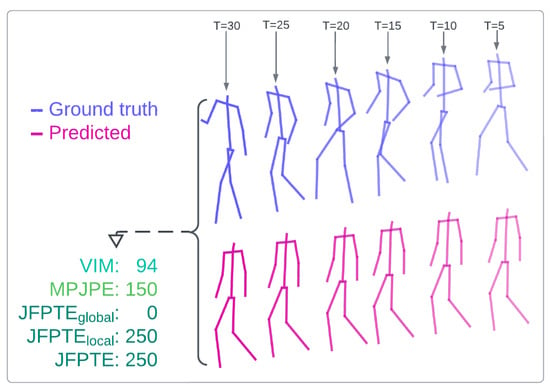

Additionally, in this paper, we introduce a novel evaluation metric, Final Joint Position and Trajectory Error (FJPTE), designed to comprehensively assess pose forecasting performance. While several attempts have been made to develop evaluation metrics specifically for pose forecasting [17,19,22], these have predominantly been variations of well-known metrics such as MPJPE and VIM, both of which originate from the pose estimation domain. However, pose forecasting requires a more holistic approach that considers not only the final position of each joint but also the trajectory leading to that position. FJPTE addresses this need by evaluating both the final position and the movement dynamics throughout the trajectory, providing a more thorough assessment of how well a model captures the complexities of human motion over time.

Our contributions are as follows:

- We propose a new architecture and model that combines Graph Convolutional Networks (GCNs) and Transformer modules for multi-person pose forecasting; it is designed to handle complex interactions in dynamic scenes and consistently outperforms state-of-the-art models on standard evaluation metrics.

- We design two loss terms, the multi-person joint distance (MPJD) loss and the velocity loss (VL), to encourage the model to generate pose sequences that respect spatial interactions and remain temporally coherent in dynamic and interaction-rich scenes.

- We propose FJPTE, a new evaluation metric for pose forecasting that evaluates both movement trajectories and the final position error, to better assess the realism and coherency of predicted pose sequences in dynamic and interaction-rich scenes.

In this work, we aim to address the challenge of forecasting future 3D poses in dynamic multi-person scenarios by designing a model that combines scene-level social context encoding with individual-specific forecasting using query token fusion. The architecture jointly models spatial dependencies within each individual and temporal motion patterns using both Transformer and GCN-based components. We evaluate the model across four datasets, CMU-Mocap, MuPoTS-3D, SoMoF Benchmark, and ExPI, which feature varying numbers of individuals and different levels of interaction complexity. This setup allows us to assess the robustness and generalization ability of the model across diverse motion conditions.

The remainder of this paper is organized as follows. We begin with a review of the related work by discussing existing models and their limitations. Next, we define the problem formulation for multi-person forecasting, detailing the task’s objectives and the necessary input and output representations. Following this, we introduce our proposed model, GCN-Transformer, which is elaborated through several subsections: the Spatiotemporal Fully Connected module for projecting sequences into a higher-dimensional embedding space; the Scene Module for capturing social interactions; the Spatiotemporal Attention Forecasting Module for predicting future poses; the data preprocessing and augmentation techniques used to enhance model performance; and the training procedures employed. The Experimental Results Section follows, where we describe the metrics used for evaluation, the datasets involved, and the model’s performance on the CMU-Mocap, MuPoTS-3D, SoMoF Benchmark, and ExPI datasets. We then present an ablation study to analyze the impact of different model components. Additionally, we introduce a novel evaluation metric, FJPTE, which assesses both local movement dynamics and global movement errors. Finally, we conclude the paper by summarizing the key findings and discussing future research directions.

2. Related Work

In the domain of pose forecasting, establishing a baseline is crucial, with the Zero-Velocity model serving as a simple yet effective benchmark. This model predicts future poses by duplicating the last observed pose. Remarkably, this baseline has emerged as a strong contender, outperforming numerous proposed models and thus providing a fundamental comparison point. Consequently, this paper exclusively discusses models that surpass this baseline performance.
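The Zero-Velocity baseline described above is simple enough to state in a few lines. The sketch below is a minimal NumPy version; the function name and the `(T, J, 3)` array layout (frames, joints, xyz) are choices made for this example.

```python
import numpy as np

def zero_velocity_forecast(history: np.ndarray, horizon: int) -> np.ndarray:
    """Repeat the last observed pose for every future frame.

    history: (T_in, J, 3) observed poses (assumed layout).
    returns: (horizon, J, 3) forecast in which every frame equals history[-1].
    """
    last_pose = history[-1]  # (J, 3)
    return np.repeat(last_pose[None], horizon, axis=0)

history = np.arange(2 * 3 * 3, dtype=float).reshape(2, 3, 3)
forecast = zero_velocity_forecast(history, horizon=4)
print(forecast.shape)  # (4, 3, 3)
```

Because the forecast contains no motion at all, any error it incurs comes purely from how far the person actually moves, which is why it serves as a useful reference point.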

2.1. Single-Person Pose Forecasting

Early explorations [3,10,11,12,13,23,24] focused predominantly on single-person pose forecasting. However, when applied to multi-person scenarios, these models independently conduct pose forecasting for each individual.

The LTD model introduced by Mao et al. in [3] uses a Graph Convolutional Network (GCN) with 12 blocks and residual connections, along with two additional graph convolutional layers placed at the beginning and end of the model to encode temporal information and decode features for pose prediction. The Future Motion model was proposed in [13] for single-person pose forecasting on a similar backbone architecture of 12 GCN blocks and also includes data augmentation, curriculum learning, and the use of Online Hard Keypoints Mining (OHKM) loss.

Parsaeifard et al. in [12] proposed a DViTA model that uses a Long Short-Term Memory (LSTM) encoder–decoder network for trajectory forecasting and a Variational LSTM AutoEncoder (VAE) for local pose dynamic forecasting in order to extract two distinct components of human movement: global trajectory and local pose dynamics.

MotionMixer, introduced by Bouazizi et al. in [11], uses multi-layer perceptrons (MLPs) for pose forecasting. It captures spatiotemporal dependencies through spatial mixing across body joints and temporal mixing across time steps, and it incorporates squeeze-and-excitation (SE) blocks to adjust the significance of different time steps. Guo et al. in [10] proposed siMLPe, a lightweight MLP-based model for pose forecasting that combines fully connected layers, layer normalization, and transpose operations with a Discrete Cosine Transform (DCT) to encode temporal information, and that predicts motion as residual displacements.

Incorporating additional constraints into the problem’s formulation, such as modeling human–scene interactions using per-joint contact maps to capture the distance between human joints and scene points, can enhance pose forecasting performance, as demonstrated by Mao et al. in [23]. This approach resolves issues such as “ghost motion”, conditioning future human poses on predicted contact points.

Zhong et al. in [24] introduced a model called GAGCN that addresses the complex spatiotemporal dependencies in human motion data. The authors use a gating network to dynamically blend multiple adaptive adjacency matrices that capture joint dependencies (spatial) and temporal correlations.

2.2. Multi-Person Pose Forecasting

Recent advancements in multi-person pose forecasting have emphasized the integration of social interactions and dependencies among individuals within a scene, aiming to enhance model performance [15,16,17,18,19,20,25,26,27].

Wang et al. in [15] proposed a transformer-based architecture called the Multi-Range Transformer (MRT) that captures both local individual motion and global social interactions among multiple individuals. The MRT decoder predicts future poses for each person by attending to both local- and global-range encoder features. Additionally, a motion discriminator is incorporated into the training process to ensure the generated motions maintain natural characteristics.

The Transformer Encoder was used in the SoMoFormer model, introduced by Vendrow et al. in [16], which treats each input as a Discrete Cosine Transform (DCT)-encoded, padded trajectory of one joint. The SoMoFormer model simultaneously predicts pose trajectories for multiple individuals and uses attention mechanisms to model human body dynamics and the grid position of individuals for its spatial understanding.

In [17], Šajina and Ivasic-Kos proposed the MPFSIR model, which focuses on spatial and temporal pose information using fully connected layers with skip connections. Despite its relatively small number of parameters, MPFSIR achieves state-of-the-art performance. Moreover, the model includes an auxiliary output to recognize social interactions between individuals, contributing to its overall performance improvement.

Xu et al. in [18] introduced JRTransformer, a joint-relation transformer model that takes the temporal differentiation of joints and explicit joint relations as inputs and models future relations between joints along with future joint positions.

TBIFormer, proposed by Peng et al. in [19], breaks down human poses into five body parts and models their interactions separately. It employs a Temporal Body Partition Module to transform sequences into a Multi-Person Body-Part sequence, retaining spatial and temporal information. The subsequent module, Social Body Interaction Self-Attention, aims to learn body part dynamics for both inter-individual and intra-individual interactions. Finally, a Transformer Decoder forecasts future movements based on the extracted features and Global Body Query Tokens.

In [20], Peng et al. proposed SocialTGCN, a convolution-based model comprising a Pose Refine Module (PSM) consisting of Graph Convolutional Network (GCN) layers, a Social Temporal GCN (SocialTGCN) encoder with GCN and Temporal Convolutional Network (TCN) layers, and a TCN decoder. Additionally, the SocialTGCN Module is fed a Spatial Adjacency Matrix constructed based on the Euclidean distance between the body root trajectories of individuals.
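To make the idea of a distance-based spatial adjacency matrix concrete, the sketch below builds one from root-joint trajectories. It is an illustration only: the text states that the matrix is based on Euclidean distances between body root trajectories, but the time-averaging, the exponential kernel, and the `sigma` parameter used here are assumptions for this example, not the construction used in [20].

```python
import numpy as np

def root_distance_adjacency(roots: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Turn pairwise root-trajectory distances into (P, P) edge weights.

    roots: (P, T, 3) root-joint position of each person per frame.
    sigma: hypothetical length scale; larger values give farther people more weight.
    """
    diff = roots[:, None] - roots[None, :]              # (P, P, T, 3)
    dist = np.linalg.norm(diff, axis=-1).mean(axis=-1)  # mean distance over time
    return np.exp(-dist / sigma)                        # weights in (0, 1]

# Three people standing still at x = 0, 1, and 5 for four frames.
positions = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [5.0, 0.0, 0.0]])
roots = np.repeat(positions[:, None, :], 4, axis=1)     # (3, 4, 3)
adjacency = root_distance_adjacency(roots)
print(np.round(adjacency, 3))
```

People who stay close to each other receive larger edge weights, so a graph layer using this matrix would mix their features more strongly.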

In recent years, several innovative approaches have emerged for creating multi-person forecasting models that diverge significantly from traditional approaches, offering new ways to handle the complexities of social interactions and motion dynamics. In the following, we discuss a few notable examples of these alternative approaches.

Jeong et al. in [25] have integrated pose forecasting with trajectory forecasting in their Trajectory2Pose model. This interaction-aware, trajectory-conditioned model first predicts multi-modal global trajectories and then refines local pose predictions based on these trajectories. It utilizes a graph-based person-wise interaction module to model inter-person dynamics and reciprocal forecasting of both global trajectories and local poses for improved prediction performance in multi-person scenarios.

In [26], Tanke et al. proposed a framework for predicting the poses of multiple mutually interacting individuals, in which the prediction of future movements is based on past behaviors. They also proposed a function that aggregates movement features across individuals, either by averaging or by using multi-head attention, to provide contextually plausible interactions for groups of different sizes. By leveraging causal temporal convolutional networks, the model processes the relationships between participants and generates realistic, socially consistent motions over extended time horizons.

Xu et al. in [27] proposed a framework (DuMMF) for stochastic multi-person pose forecasting that incorporates generative modeling and latent codes to model individual movements at the local level and social interactions at the global level. The model generates multiple different predictions for individual poses and social interactions, covering a range of possible outcomes. The approach is generalizable to various generative models, including GANs and diffusion models.

A prevalent technique in data preprocessing for pose forecasting involves the application of the Discrete Cosine Transform (DCT), which encodes human motion into the frequency domain represented by a set of coefficients. This transformation aids in noise reduction, thus improving the robustness of the data. Conversely, the Inverse DCT (IDCT) decodes predictions back to Cartesian coordinates, facilitating interpretation and application [3,10,13,15,16,19,20,23,25].
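The DCT/IDCT round trip can be sketched with an explicit orthonormal DCT-II basis applied along the time axis. This is a minimal NumPy illustration; the function names, the `(T, D)` layout (frames by flattened joint coordinates), and the choice to zero out high-frequency coefficients in the usage example are assumptions, and individual papers differ in how many coefficients they keep.

```python
import numpy as np

def dct_matrix(n_frames: int) -> np.ndarray:
    """Orthonormal DCT-II basis of shape (n_frames, n_frames)."""
    k = np.arange(n_frames)[:, None]  # frequency index
    n = np.arange(n_frames)[None, :]  # time index
    basis = np.sqrt(2.0 / n_frames) * np.cos(np.pi * (2 * n + 1) * k / (2 * n_frames))
    basis[0] /= np.sqrt(2.0)          # rescale the constant row to unit norm
    return basis

def dct_encode(sequence: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Map a (T, D) motion sequence to (T, D) frequency coefficients."""
    return basis @ sequence

def dct_decode(coeffs: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Inverse transform; the basis is orthogonal, so its transpose inverts it."""
    return basis.T @ coeffs

rng = np.random.default_rng(0)
sequence = rng.normal(size=(16, 39))  # 16 frames, 13 joints * 3 coordinates
basis = dct_matrix(16)

coeffs = dct_encode(sequence, basis)
restored = dct_decode(coeffs, basis)

# Dropping high-frequency coefficients yields a smoothed version of the motion.
low_pass = coeffs.copy()
low_pass[8:] = 0.0
smoothed = dct_decode(low_pass, basis)
```

Keeping only the leading coefficients is what gives the noise-reduction effect mentioned above, since frame-to-frame jitter lives mostly in the high-frequency terms.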

To further enhance the performance of pose forecasting models, a strategy often employed is dividing the task into short-term and long-term prediction models, also known as short-term and long-term optimization. In this approach, the final prediction is derived from a combination of outputs from both short-term and long-term models [13,16,18]. Additionally, another effective technique to improve transformer-based models is deep supervision. Here, the output of each block within the model is passed through the decoder model, thereby mitigating issues related to overfitting and enhancing model generalization [16,18].

Despite the substantial advancements in pose forecasting driven by GCN and Transformer architectures, several limitations persist that challenge the field. Current models often produce structurally invalid poses, where predicted poses do not reflect anatomically feasible configurations, rendering them unrealistic or impossible in real-world settings. Additionally, many models struggle to capture natural movement dynamics, leading to “ghosting” effects where poses appear frozen or drift unrealistically, lacking the fluidity and continuity expected in human motion. A further important issue is generalizability: certain models achieve strong performance on specific datasets but frequently underperform when tested on different datasets, indicating an over-reliance on dataset-specific characteristics. To address these challenges, our proposed model is designed to improve the structural validity of predicted poses, enhance the realism of movement dynamics, and achieve more consistent performance across diverse datasets.

2.3. Pose Forecasting Evaluation Metrics

The evaluation of pose forecasting models involves adopting various metrics borrowed from related tasks, such as pose estimation [28,29]. Initially, the Mean Per Joint Position Error (MPJPE) metric, borrowed from pose estimation, was widely used. However, it calculates the mean Euclidean distance (L2 norm) across all joints in the predicted sequence, providing an overall assessment of the model’s performance without specifically focusing on human movement dynamics. To address this limitation, Adeli et al. in [22] introduced the Visibility-Ignored Metric (VIM). Unlike MPJPE, VIM evaluates the pose error solely at the last predicted frame, overlooking the trajectory of joints in preceding frames. MPJPE and VIM have since become standard evaluation metrics for pose forecasting due to their simplicity, interpretability, and broad adoption in recent works.
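The contrast between a sequence-wide error and a last-frame error can be shown with a small NumPy sketch. `mpjpe` follows the usual definition of the mean per-joint Euclidean error over all frames. `final_frame_error` is only a simplified stand-in for a VIM-style measurement: it averages per-joint errors at the last frame, whereas the exact aggregation used by the published VIM may differ. Both function names and the `(T, J, 3)` layout are assumptions for this example.

```python
import numpy as np

def mpjpe(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean Euclidean joint error over all frames and joints; inputs are (T, J, 3)."""
    return float(np.linalg.norm(pred - target, axis=-1).mean())

def final_frame_error(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean Euclidean joint error at the last frame only (simplified VIM-style)."""
    return float(np.linalg.norm(pred[-1] - target[-1], axis=-1).mean())

# Two frames, two joints: the first frame is perfect, the last is off by (3, 4, 0).
target = np.zeros((2, 2, 3))
pred = target.copy()
pred[1] += np.array([3.0, 4.0, 0.0])

print(mpjpe(pred, target))              # 2.5
print(final_frame_error(pred, target))  # 5.0
```

The same prediction scores differently under the two measures, which illustrates why neither one alone describes both the trajectory and the end position.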

Building upon the MPJPE metric, Šajina and Ivasic-Kos in [17] proposed the Movement-Weighted Mean Per Joint Position Error (MW-MPJPE). This metric enhances MPJPE by incorporating a weighting factor based on the overall movement exhibited by the individual throughout the target pose sequence. This weighting factor provides a more nuanced evaluation by considering the varying degrees of movement across different poses.

Peng et al. in [19] employed various evaluation metrics to assess multi-person pose forecasting models. These included the Joint Position Error (JPE), which resembles MPJPE but reports errors for all individuals in the scene; the Aligned Mean Per Joint Position Error (APE), which is akin to Root-MPJPE, focusing on pose position errors by removing global movement; and the Final Displacement Error (FDE), measuring the trajectory prediction error by considering only the final global position (e.g., pelvis) of each person.
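The relationship between these three metrics can be sketched as follows. This is an illustrative NumPy version based only on the verbal descriptions above; the function names, the `(P, T, J, 3)` layout, and the use of joint index 0 as the root (pelvis) are assumptions, and the reference implementations may aggregate differently.

```python
import numpy as np

def jpe(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean joint error over all persons, frames, and joints; inputs are (P, T, J, 3)."""
    return float(np.linalg.norm(pred - target, axis=-1).mean())

def ape(pred: np.ndarray, target: np.ndarray, root: int = 0) -> float:
    """Joint error after subtracting each pose's root joint (removes global motion)."""
    pred_local = pred - pred[:, :, root:root + 1]
    target_local = target - target[:, :, root:root + 1]
    return float(np.linalg.norm(pred_local - target_local, axis=-1).mean())

def fde(pred: np.ndarray, target: np.ndarray, root: int = 0) -> float:
    """Root-joint error at the final frame, averaged over persons."""
    return float(np.linalg.norm(pred[:, -1, root] - target[:, -1, root], axis=-1).mean())

rng = np.random.default_rng(0)
target = rng.normal(size=(2, 5, 4, 3))
# A prediction with the right body pose but a constant 1-unit global offset.
pred = target + np.array([1.0, 0.0, 0.0])

print(jpe(pred, target), ape(pred, target), fde(pred, target))
```

In this example the global offset shows up in JPE and FDE but vanishes in APE, which is the separation of local pose error from global movement that the three metrics are meant to provide.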

Despite the introduction of several evaluation metrics, most existing metrics either focus solely on joint-wise positional errors or isolate specific aspects of motion, such as the final displacement. As a result, they often fail to provide a comprehensive view of both local movement dynamics and global motion trajectories over time. This highlights the need for a more complete pose forecasting metric that can jointly assess the error of predicted joint movements, as well as the overall realism and coherence of predicted human motion.

2.4. GCN and Transformer Hybrid Architectures in Related Fields

While significant progress has been made with Graph Convolutional Networks (GCNs) and Transformers individually, to the best of our knowledge, no prior work has successfully integrated these two architectures into a unified model specifically for the task of multi-person pose forecasting. This gap represents an opportunity for advancement, as combining the strengths of GCNs in capturing spatial dependencies and Transformers in modeling long-range temporal dynamics could lead to more robust and accurate predictions in complex, interaction-heavy scenarios. In this paper, we aim to bridge this gap by proposing GCN-Transformer, a novel model that leverages both GCN and Transformer architectures for multi-person pose forecasting, potentially setting a new standard in the field.

Although no previous work has applied a GCN-Transformer hybrid directly to multi-person pose forecasting, this combination has demonstrated considerable success across several related fields. These studies provide valuable insights into the benefits of integrating structured relational modeling with dynamic sequence modeling. In the following, we briefly review selected examples where GCN-Transformer hybrids have been effectively applied to tasks such as trajectory prediction [30,31], time series forecasting [32,33], and pose estimation [34,35]. For example, Li et al. in [30] proposed a Graph-Based Spatial Transformer for predicting multiple plausible future pedestrian trajectories, which models both human-to-human and human-to-scene interactions by integrating attention mechanisms within a graph structure. Additionally, they present a Memory Replay algorithm to improve the temporal consistency of predicted trajectories by smoothing the temporal dynamics. Similarly, Aydemir et al. in [31] proposed a novel approach for predicting trajectories in complex traffic scenes. By utilizing a dynamic-weight learning mechanism, the model adapts to each person’s state while maintaining a scene-centric representation to ensure efficient and accurate trajectory prediction for all individuals. The model leverages GCNs to capture spatial interactions between individuals and employs Transformer-based attention to model temporal dependencies.

GCN and Transformer architectures have also been successfully applied to time series forecasting, a task of predicting future time intervals based on historical data. For instance, Hu et al. in [32] introduced a GCN-Transformer model designed to handle complex spatiotemporal dependencies in EV-battery-swapping-station load forecasting. The model integrates Graph Convolutional Networks (GCNs) to capture spatial relationships between stations and a Transformer to model temporal dynamics, allowing it to manage both spatial and temporal information simultaneously. Similarly, Xiong et al. in [33] introduced a model for chaotic multivariate time series forecasting. The model utilizes a Dynamic Adaptive Graph Convolutional Network (DAGCN) to model spatial correlations across variables and applies multi-head attention from the Transformer to capture temporal relationships. This hybrid approach demonstrates the effective application of GCNs and Transformers in tasks that require managing complex nonlinear data, such as chaotic systems, showing strong interpretability and performance across benchmark datasets.

GCN and Transformer architectures have also been successfully applied to pose estimation, the task of detecting human joint positions from an image. For example, Zhai et al. in [34] proposed the Hop-wise GraphFormer (HGF) module, which groups joints by k-hop neighbors and applies a transformer-like attention mechanism to model joint synergies. Additionally, the Intragroup Joint Refinement (IJR) module refines joint features, particularly for peripheral joints, using prior limb information. Furthermore, Cheng et al. in [35] presented GTPose, a novel model combining Graph Convolutional Networks (GCNs) and Transformers to enhance 2D human pose estimation. The model uses multi-scale convolutional layers for initial feature extraction, followed by Transformers to model the spatial relationships between keypoints and image regions. To further refine predictions, a Graph Convolutional Network models the topological structure between keypoints, capturing the relationships between joints.

While prior works have combined GCNs and Transformers in tasks such as trajectory forecasting, time series prediction, and pose estimation, these models typically apply GCNs for spatial encoding followed by Transformers for temporal modeling in a sequential or stacked manner. In contrast, our architecture is structured as a modular pipeline that first models social contexts using a Spatial-GCN applied across all individuals in the scene. This shared context is then injected into per-person forecasting branches using query token fusion, allowing each branch to access global scene information alongside individual motion patterns. Additionally, our forecasting module jointly incorporates both Transformer-based attention mechanisms and Temporal GCNs, enabling the complementary modeling of long-range temporal dependencies and local graph-based dynamics. To our knowledge, no prior GCN-Transformer hybrid applies this architecture to multi-person pose forecasting with such explicit scene-person disentanglement and fusion.

3. Background of Graph Convolutional Networks and Transformers

In recent years, two of the most prominent architectures for tasks like pose forecasting have been Graph Convolutional Networks (GCNs) and Transformer architectures. To better understand their foundations and effectiveness, we provide a formalized overview of these architectures. Note that the following descriptions cover GCN and Transformer architectures in general and do not delve into their specific application to multi-person pose forecasting, as this has already been addressed in the Related Work Section.

3.1. Graph Convolutional Networks

Conventional Convolutional Neural Networks (CNNs) operate on grid-like data structures like images, while GCNs are designed to work with non-Euclidean data, such as graphs, which consist of nodes (vertices) and edges representing relationships between the nodes. A graph is formally defined as $G = (V, E)$, where $V$ is the set of nodes and $E$ is the set of edges. The key challenge in GCNs is to propagate information between nodes to capture the spatial structure of the graph.

GCNs can be broadly categorized into spatial and spectral graph convolutions [36]. Spatial-GCNs aggregate information from neighboring nodes based on their local structure. This aggregation can be extended to k-hop neighbors, where the neighborhood expands to include nodes within k steps of the target node, as in [37]. Spectral GCNs, on the other hand, transform graph data into the spectral domain, using the graph’s Laplacian to perform convolutions, but these often encounter computational challenges due to the size of the graph kernel. A simplified version of spectral convolutions, proposed by Kipf and Welling in [38], utilizes a first-order approximation, which is widely adopted due to its computational efficiency.
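The notion of a k-hop neighborhood can be illustrated by repeatedly stepping along the edges of a binary adjacency matrix. The NumPy sketch below is a generic illustration of that idea, not the specific formulation of [37]; the function name and the choice to include each node in its own neighborhood are assumptions for this example.

```python
import numpy as np

def k_hop_adjacency(adj: np.ndarray, k: int) -> np.ndarray:
    """Binary (N, N) matrix marking nodes reachable within k steps (self included)."""
    n_nodes = adj.shape[0]
    reach = np.eye(n_nodes, dtype=int)     # 0 hops: every node reaches itself
    frontier = np.eye(n_nodes, dtype=int)  # nodes reached by walks of the current length
    for _ in range(k):
        frontier = (frontier @ adj > 0).astype(int)    # take one more step
        reach = ((reach + frontier) > 0).astype(int)   # accumulate reachable nodes
    return reach

# A 4-node chain 0 - 1 - 2 - 3, e.g. a simplified limb.
chain = np.array([
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
])
print(k_hop_adjacency(chain, 1)[0])  # [1 1 0 0]
print(k_hop_adjacency(chain, 2)[0])  # [1 1 1 0]
```

Using the k-hop matrix in place of the 1-hop adjacency lets a single layer aggregate features from more distant joints.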

The general form of a GCN layer can be represented as follows:

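$$H^{(l+1)} = \sigma\left(\hat{A} H^{(l)} W^{(l)}\right)$$

where $H^{(l)}$ represents the feature matrix at layer $l$, $\hat{A}$ is the normalized adjacency matrix, $W^{(l)}$ is the learnable weight matrix at layer $l$, and $\sigma$ is an activation function like ReLU.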
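The layer-wise propagation described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the model's implementation: the function names, the toy three-node graph, and the channel sizes are assumptions, and the normalization shown is the symmetric form with self-loops from Kipf and Welling [38].

```python
import numpy as np

def normalize_adjacency(adj: np.ndarray) -> np.ndarray:
    """Symmetric normalization D^{-1/2} (A + I) D^{-1/2} with self-loops."""
    a_tilde = adj + np.eye(adj.shape[0])   # add self-loops
    deg = a_tilde.sum(axis=1)              # degrees are >= 1 thanks to self-loops
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return a_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcn_layer(h: np.ndarray, a_hat: np.ndarray, w: np.ndarray) -> np.ndarray:
    """One GCN layer: ReLU(A_hat @ H @ W)."""
    return np.maximum(a_hat @ h @ w, 0.0)

# Toy example (hypothetical sizes): 3-node chain graph, 4 input and 2 output channels.
adj = np.array([[0, 1, 0],
                [1, 0, 1],
                [0, 1, 0]], dtype=float)
rng = np.random.default_rng(0)
h0 = rng.normal(size=(3, 4))   # node features H^(0)
w0 = rng.normal(size=(4, 2))   # weight matrix W^(0)
a_hat = normalize_adjacency(adj)
h1 = gcn_layer(h0, a_hat, w0)  # node features H^(1), shape (3, 2)
```

Stacking several such calls with the same `a_hat` gives the multi-layer structure; treating `a_hat` as a trainable array instead of a fixed one corresponds to the learnable-adjacency variant.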

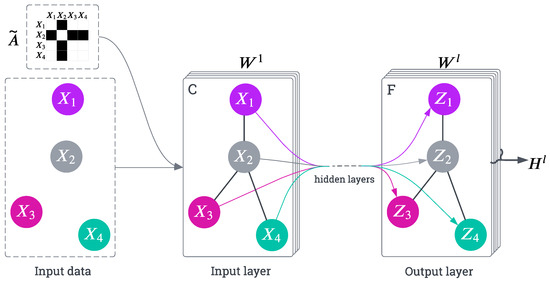

Figure 1 illustrates the multi-layer GCN architecture, highlighting how the input features are progressively transformed through successive layers using the shared graph structure defined by the normalized adjacency matrix $\hat{A}$. Traditionally, the adjacency matrix is predefined based on the structure of the graph (e.g., a human skeleton with fixed joint connections). However, in more advanced applications, especially in tasks like pose forecasting, the adjacency matrix can be treated as a learnable parameter [24,39], allowing the model to dynamically adapt the relationships between nodes (e.g., joints) based on the data. By making the adjacency matrix learnable, the network can adjust the strength or presence of connections between nodes, capturing more complex and data-driven relationships that may not be explicitly defined in the original graph. This is particularly useful for tasks involving non-static or flexible relationships, such as multi-person interactions or joint dynamics that change over time.

Figure 1.

The figure depicts a multi-layer Graph Convolutional Network (GCN) architecture. The graph’s structure, defined by the normalized adjacency matrix $\hat{A}$, is shared across all layers (edges shown as black lines). The input data (with C channels) are iteratively transformed at each layer $l$ using $\hat{A}$ and a learnable weight matrix $W^{(l)}$. The final layer outputs feature maps, F, capturing node relationships and properties through stacked graph convolutions.

3.2. Transformer Architecture

The Transformer model, introduced by Vaswani et al. [40], has revolutionized the field of sequence modeling due to its effectiveness in capturing long-range dependencies and its parallel computation capabilities. Initially developed for natural language processing (NLP), where understanding contextual relationships between words across long sequences is essential, the Transformer architecture quickly surpassed traditional recurrent models such as LSTMs and GRUs. This success sparked widespread adoption across numerous domains, including computer vision, time-series forecasting, reinforcement learning, and human motion modeling.

Transformers rely on the attention mechanism that allows each element of the input sequence to interact with every other element. During processing, the attention mechanism assigns higher importance, or attention weights, to parts of the sequence that are most relevant for a given prediction or representation. This dynamic weighting enables the model to selectively focus on crucial inputs while diminishing the influence of less relevant ones, enhancing the ability to capture complex, long-range relationships without relying on sequential processing steps.

Because Transformers do not inherently model sequential order, they incorporate positional encodings into the input embeddings to preserve information about the position of each element within a sequence. These positional encodings can be predefined, typically using sine and cosine functions at varying frequencies [15,19,41,42], or learned as trainable parameters during model optimization [16,18]. By embedding positional information alongside content information, Transformers maintain the ability to reason about both the identity and the temporal order of elements, allowing them to capture complex sequential dependencies in various tasks.
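As an illustration of the predefined variant, the sine and cosine encoding of Vaswani et al. [40] can be sketched as follows. The function name and the example sizes are assumptions chosen for the sketch; a learned encoding would instead be a trainable array of the same shape.

```python
import numpy as np

def sinusoidal_positional_encoding(length: int, d_model: int) -> np.ndarray:
    """PE[pos, 2i] = sin(pos / 10000^(2i/d_model)); PE[pos, 2i+1] = cos(same angle)."""
    if d_model % 2 != 0:
        raise ValueError("d_model must be even for this sketch")
    pos = np.arange(length)[:, None]          # (length, 1)
    i = np.arange(d_model // 2)[None, :]      # (1, d_model / 2)
    angles = pos / np.power(10000.0, 2.0 * i / d_model)
    pe = np.zeros((length, d_model))
    pe[:, 0::2] = np.sin(angles)              # even channels
    pe[:, 1::2] = np.cos(angles)              # odd channels
    return pe

# Hypothetical sizes: 30 time steps, 16 embedding channels.
pe = sinusoidal_positional_encoding(length=30, d_model=16)
```

The encoding is added to the input embeddings, so every time step receives a distinct, deterministic offset.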

Moreover, Transformers are inherently well suited for scenarios involving complex relational dynamics, a defining characteristic of sensor-based human motion data. Their global attention mechanism enables the model to dynamically prioritize the most relevant joints or individuals at each time step, allowing it to capture nuanced dependencies across space and time. This capability is particularly valuable in crowded or interaction-rich environments, where individual movements are not independent but influenced by the collective behavior of others in the scene.

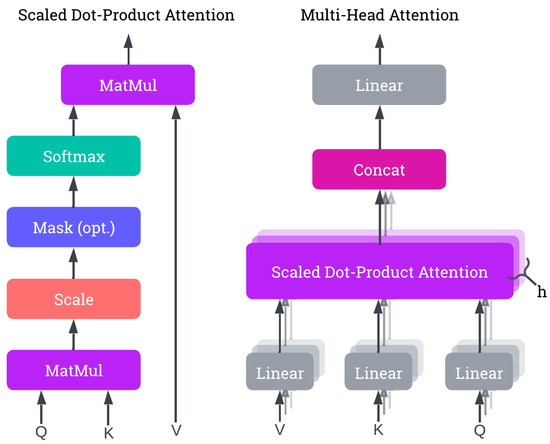

At the core of the Transformer is the scaled dot-product attention, which computes the attention score as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where $Q$, $K$, and $V$ are the query, key, and value matrices derived from the input sequence, and $d_k$ is the dimensionality of the key vectors. The softmax function ensures that the attention weights sum to one, enabling the model to focus on relevant parts of the sequence. The scaling factor $1/\sqrt{d_k}$ prevents the dot-product values from growing too large, which could saturate the softmax and cause vanishing gradients during backpropagation [40].
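A minimal NumPy sketch of scaled dot-product attention is given below. The function names and the toy shapes (5 queries, 6 keys) are assumptions; masking and batching are omitted for brevity.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    """Returns (output, weights) for softmax(Q K^T / sqrt(d_k)) V."""
    d_k = k.shape[-1]
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)   # each row sums to one
    return weights @ v, weights

# Hypothetical shapes: 5 queries, 6 key/value pairs, d_k = 8, value width 4.
rng = np.random.default_rng(0)
q = rng.normal(size=(5, 8))
k = rng.normal(size=(6, 8))
v = rng.normal(size=(6, 4))
out, weights = scaled_dot_product_attention(q, k, v)
```

Each row of `weights` is a distribution over the six keys, and the corresponding row of `out` is the weighted average of the value vectors.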

To enhance the model’s expressiveness, the Transformer uses multi-head attention, where multiple attention mechanisms run in parallel, and their outputs are concatenated:

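$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\,W^{O}$$

where $\mathrm{head}_i = \mathrm{Attention}(QW_i^{Q}, KW_i^{K}, VW_i^{V})$, and $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ are learnable weight matrices for the queries, keys, and values, respectively. The outputs are then transformed by a final weight matrix $W^{O}$ [40]. Figure 2 illustrates the calculations involved in the attention mechanisms of Transformers, including Scaled Dot-Product Attention and Multi-Head Attention, which aggregate multiple attention layers in parallel.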
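The multi-head computation can be sketched as self-attention over a single sequence. In this sketch the per-head projection matrices are stored as column blocks of one matrix per role, which is a common implementation choice and an assumption here; all names and sizes are illustrative.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Self-attention over x of shape (seq_len, d_model) with num_heads heads."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split_heads(m):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return m.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)   # (heads, seq, seq)
    heads = softmax(scores, axis=-1) @ v                  # (heads, seq, d_head)
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o                                   # final projection W^O

# Hypothetical sizes: 5 time steps, d_model = 8, 2 heads.
rng = np.random.default_rng(0)
x = rng.normal(size=(5, 8))
w_q, w_k, w_v, w_o = (rng.normal(size=(8, 8)) for _ in range(4))
y = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads=2)
```

With `num_heads=1` the function reduces to a single scaled dot-product attention followed by the output projection.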

Figure 2.

The figure illustrates the attention mechanism used in Transformer architecture. The left side depicts Scaled Dot-Product Attention, where the attention scores are computed using queries (Q), keys (K), and values (V), followed by scaling and a softmax operation. The right side shows Multi-Head Attention, consisting of multiple parallel Scaled Dot-Product Attention layers. The outputs of these parallel layers are concatenated and linearly transformed to produce the final attention output.

4. Problem Formulation for Multi-Person Forecasting

In the multi-person pose forecasting task, the aim is to forecast the forthcoming movements of multiple individuals within a given scene. Each individual in the scene is characterized by anatomical joints, typically including key areas such as elbows, knees, and shoulders. The task involves predicting the trajectories of these joints over a specified duration into the future, usually denoted by $T$ time steps. To accomplish this predictive task, the model is provided with a sequence of historical poses for each individual. These historical poses encapsulate the positional information of each joint in three-dimensional Cartesian coordinates framed within a global coordinate system. This representation is standard in the field, as it reflects the native output of motion capture systems and 3D pose estimation models, and it allows for the straightforward computation of spatial relationships such as distances and velocities. For any given individual $n$, each historical pose is represented by a vector of $J$ dimensions, where $J$ signifies the number of tracked joints. Consequently, the entire historical sequence for individual $n$ is represented as $X_{1:t}^{n}$, capturing the temporal evolution of poses up to the present moment. The length of the input pose sequence, denoted as $t$, dictates the number of historical poses the model uses for prediction. The index $n$ ranges from 1 to $N$, where $N$ corresponds to the total number of individuals observed within the scene. At its core, the model’s primary objective is to generate future pose sequences for each individual, denoted as $X_{t+1:t+T}^{n}$. Here, $T$ reflects the future number of time steps that the model is tasked with forecasting. The problem’s formulation is graphically shown in Figure 3.

Figure 3.

The figure illustrates the problem formulation for predicting the future movements of multiple individuals in a scene. Each individual is represented by joints (e.g., elbows, knees, shoulders), and the task is to forecast their trajectories over $T$ time steps. The model receives historical pose sequences $X_{1:t}^{n}$ for each individual $n$, containing the positional data of joints in three-dimensional Cartesian coordinates. The objective is to predict future pose sequences $X_{t+1:t+T}^{n}$, extending $T$ time steps into the future.

5. Proposed Architecture and Model

This paper proposes GCN-Transformer, a novel model for multi-person pose forecasting that emphasizes capturing complex interactions and dependencies between individuals within a scene. GCN-Transformer takes sequences of poses from all individuals in the scene as inputs, which are first preprocessed to enrich the data. These sequences are then processed through the Scene Module, which is designed to capture the interactions and dependencies between individuals within the scene. Following this, the Spatiotemporal Attention Forecasting Module combines this contextual information with each individual’s sequence to predict future poses. The following sections provide a detailed description of each component in the model’s architecture.

The architecture of GCN-Transformer is guided by complementary theoretical principles from graph-based and attention-based modeling. Graph Convolutional Networks (GCNs) are well suited for capturing structured spatial relationships, such as the physical dependencies among joints and the social connections between individuals in a shared scene. These structures act as relational inductive biases that help the model reason over pose and proximity with minimal supervision. In contrast, Transformers are powerful tools for modeling long-range temporal dependencies and contextual interactions. Their self-attention mechanism allows for the dynamic weighting of information across time and between individuals, without requiring sequential computation. By combining GCNs and Transformers, GCN-Transformer is able to model both local and global dynamics, capturing individuals’ joint relationships and interactions with temporal dependencies in multi-person scenes.

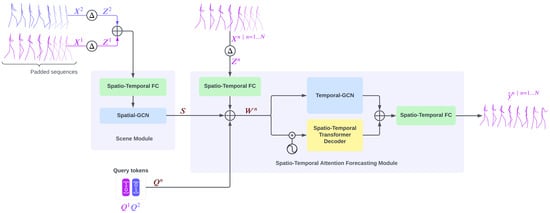

GCN-Transformer comprises two main modules: the Scene Module and the Spatiotemporal Attention Forecasting Module. Initially, the input sequences, $X_{1:t}^{n}$, are padded by repeating the last known pose $T$ times and augmented by incorporating their temporal differentiation, resulting in enriched sequences denoted as $\tilde{X}^{n}$. Temporal differentiation refers to the process of computing the difference between joint positions across consecutive time steps to obtain motion velocity or first-order dynamics. Formally, for each person $n$, we compute $\Delta X_{i}^{n} = X_{i}^{n} - X_{i-1}^{n}$, and we concatenate this velocity signal with the original sequence along the joint feature dimension. A zero-initialized frame is prepended to maintain temporal alignment. This results in a richer representation capturing both position and motion. These enriched sequences are concatenated and fed into the Scene Module. Within the Scene Module, a Spatiotemporal Fully Connected module encodes the poses into an embedding space. Subsequently, the output undergoes processing through the Spatial-GCN network designed to extract social features and dependencies. The resulting output $S$ from the Scene Module is then forwarded into the Spatiotemporal Attention Forecasting Module for each n-th sequence $\tilde{X}^{n}$, along with a query token generated through one-hot encoding based on the position of the n-th sequence within the scene.
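The input enrichment step (padding followed by temporal differentiation) can be sketched as below. This is a sketch under stated assumptions: the array layout `(t, J, 3)`, the function name, the choice to differentiate after padding, and concatenation along the last (coordinate) axis are illustrative readings of the description, not the authors' code.

```python
import numpy as np

def enrich_sequence(x_hist: np.ndarray, horizon: int) -> np.ndarray:
    """Pad a history of shape (t, J, 3) and append velocities.

    Returns an array of shape (t + horizon, J, 6): positions in the first
    three channels, frame-to-frame differences in the last three.
    """
    # Repeat the last known pose `horizon` times.
    pad = np.repeat(x_hist[-1:], horizon, axis=0)
    padded = np.concatenate([x_hist, pad], axis=0)
    # Temporal differentiation with a zero first frame for alignment.
    velocity = np.zeros_like(padded)
    velocity[1:] = padded[1:] - padded[:-1]
    return np.concatenate([padded, velocity], axis=-1)

# Hypothetical sizes: 16 observed frames, 14 future frames, 13 joints.
rng = np.random.default_rng(0)
x_hist = rng.normal(size=(16, 13, 3))
enriched = enrich_sequence(x_hist, horizon=14)
```

Under these assumptions the velocity channels are zero over the padded frames, because the repeated last pose does not move.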

In the Spatiotemporal Attention Forecasting Module, the sequence $\tilde{X}^{n}$ is encoded into the embedding space using a Spatiotemporal Fully Connected (STFC) module. The resulting output is then concatenated with the extracted features $S$ from the Scene Module and the query token to create $Z^{n}$. This fusion combines individual motion, scene-level context, and an identity-specific signal: $Z^{n} = \mathrm{Concat}(E^{n}, S, q^{n})$, where $E^{n}$ is the embedded sequence of the n-th individual, $S$ is the scene context, and $q^{n}$ is the query token (broadcast across $T$). Subsequently, $Z^{n}$ is simultaneously passed into the Spatiotemporal Transformer Decoder and Temporal-GCN modules. The outputs from both modules are concatenated and processed through a Spatiotemporal Fully Connected module to generate the final prediction $\hat{X}_{t+1:t+T}^{n}$.

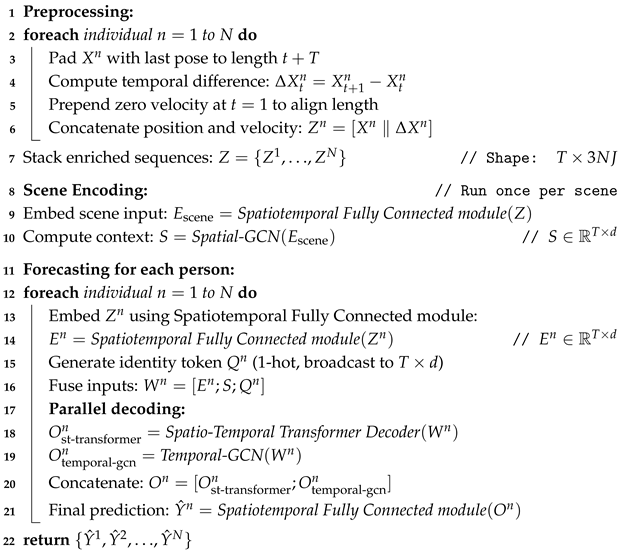

The architecture of GCN-Transformer is shown in Figure 4, and the full forward pass of GCN-Transformer is outlined in Algorithm 1.

Algorithm 1: Pseudocode outlining the end-to-end forward pass of GCN-Transformer. The model first applies temporal differentiation to augment pose sequences for all individuals in the scene. These enriched sequences are embedded and passed through a Spatial GCN to extract scene-level context. Each individual’s sequence is then fused with the scene context and an identity-specific query token before being processed in parallel by a Spatiotemporal Transformer Decoder and a Temporal GCN. The outputs are concatenated and passed through a final Spatiotemporal Fully Connected module to produce future pose predictions.

Input: Pose sequences for N individuals, each with J joints in 3D space

Output: Predicted future pose sequences

Figure 4.

The figure depicts the architecture of the GCN-Transformer model. In the preprocessing step, input sequences $X_{1:t}^{1}$ and $X_{1:t}^{2}$ are padded with the last pose to match the full length of the sequence, and they are enriched with their temporal differentiation $\Delta X$, resulting in sequences $\tilde{X}^{1}$ and $\tilde{X}^{2}$. These sequences are then jointly processed by the Scene Module to extract social features and dependencies, producing the output $S$. Finally, to produce the final predictions, the output $S$ is fed into the Spatiotemporal Attention Forecasting Module for each n-th sequence $\tilde{X}^{n}$, along with a query token generated via one-hot encoding based on the position of the n-th sequence within the scene.

5.1. Spatiotemporal Fully Connected Module

The Spatiotemporal Fully Connected module is a lightweight component that projects pose sequences into a higher-dimensional embedding space, making them suitable for processing by downstream modules. It consists of two fully connected layers that independently process the spatial and temporal dimensions of the input. Given an input sequence $X \in \mathbb{R}^{T \times N \times J \times 3}$, where $T$ is the number of time steps, $N$ is the number of individuals, $J$ is the number of joints, and each joint is represented in 3D Cartesian space, the first fully connected layer operates along the spatial dimension and maps each frame-level pose vector of dimension $J \times 3$ to a higher-dimensional representation, resulting in an intermediate output of shape $T \times N \times D$, where $D$ is the embedding dimension. Subsequently, a second fully connected layer is applied across the temporal dimension, allowing the model to capture short-term temporal patterns and refine the sequence-level encoding. The final output remains in $\mathbb{R}^{T \times N \times D}$ and serves as the input to both the Scene Module and Spatiotemporal Attention Forecasting Module, where it is further processed by GCN and Transformer components.
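The two-step projection can be sketched with plain matrix products. The function name, parameter names, and sizes below are hypothetical, and the sketch omits any activation or normalization, since none is specified in the description.

```python
import numpy as np

def stfc(x, w_spatial, b_spatial, w_temporal, b_temporal):
    """Spatial then temporal fully connected layers.

    x:          (T, N, J, 3) pose sequences
    w_spatial:  (J * 3, D),  b_spatial:  (D,)
    w_temporal: (T, T),      b_temporal: (T,)
    returns:    (T, N, D)
    """
    t, n, j, c = x.shape
    flat = x.reshape(t, n, j * c)               # flatten each pose to a vector
    spatial = flat @ w_spatial + b_spatial      # (T, N, D): per-frame projection
    # Mix information across the time axis for every person and channel.
    temporal = np.einsum("st,tnd->snd", w_temporal, spatial)
    return temporal + b_temporal[:, None, None]

# Hypothetical sizes: T = 30 frames, N = 2 people, J = 13 joints, D = 64.
rng = np.random.default_rng(0)
T, N, J, D = 30, 2, 13, 64
x = rng.normal(size=(T, N, J, 3))
emb = stfc(x,
           rng.normal(size=(J * 3, D)), np.zeros(D),
           rng.normal(size=(T, T)), np.zeros(T))
```

The first layer sees one frame at a time, while the second sees one channel across all frames, which is why the output keeps the `(T, N, D)` shape.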

5.2. Scene Module

The Scene Module is designed to enhance input data representation by leveraging temporal and spatial information. It comprises two key elements: a Spatiotemporal Fully Connected module and the Spatial-GCN. The Spatiotemporal Fully Connected module serves as an initial processing unit, transforming the enriched input sequence into a higher-dimensional embedding space, refining the input data and preparing them for subsequent modules through spatial and temporal transformations. In conjunction with the Spatiotemporal Fully Connected module, the Spatial-GCN module serves to uncover intricate patterns embedded within the data, specifically focusing on extracting interaction dependencies and dynamics among individuals within the scene. Comprising eight GCN blocks with learnable adjacency matrices, this module employs various techniques, including batch normalization, dropout, and Tanh activation functions, to enhance feature extraction and maintain the integrity of the structural information present in the input data. To further enhance the model’s ability to capture social dependencies and maintain realistic spatial relationships between joints of the people in the scene, we compute the inter-individual joint distance loss on the output S.

5.3. Spatiotemporal Attention Forecasting Module

The Spatiotemporal Attention Forecasting Module predicts future poses by synthesizing information from various sources, including the input sequence $\tilde{X}^{n}$, scene context $S$, and positional query token $q^{n}$ associated with sequence $\tilde{X}^{n}$. Initially, the input sequence undergoes encoding via the Spatiotemporal Fully Connected module, which transforms it into an embedding space. Subsequently, this encoded sequence is concatenated with the scene context $S$ and the positional query token to form $Z^{n}$. This composite representation undergoes parallel processing through two key components: the Spatiotemporal Transformer Decoder and the Temporal-GCN modules.

The Spatiotemporal Transformer Decoder comprises two attention blocks positioned after the learnable positional encoding of $Z^{n}$. The first attention block is followed by fully connected layers that operate on the spatial dimension, facilitating the extraction of spatial features. Conversely, the second attention block is followed by Temporal Convolutional Network (TCN) layers, which specialize in capturing long-term temporal dependencies and temporal patterns within the data. Concurrently, the Temporal-GCN module, composed of eight GCN blocks with learnable adjacency matrices, operates on $Z^{n}$ to extract and refine temporal dependencies, thereby enhancing the temporal representation separately from the Spatiotemporal Transformer Decoder.

Finally, the Spatiotemporal Attention Forecasting Module integrates the extracted features using a Spatiotemporal Fully Connected module, resulting in the generation of the final pose sequence prediction $\hat{X}_{t+1:t+T}^{n}$. This fusion process ensures that the module leverages the diverse information captured across spatial, temporal, and contextual dimensions to produce accurate and reliable predictions for future poses.

5.4. Data Preprocessing

We opted against employing any data preprocessing techniques for our model; instead, we utilized raw data from the datasets. This approach was chosen to compel the model to learn the intricate structure of the human skeleton and the dynamic nature of movement. Conventional preprocessing methods, such as employing Discrete Cosine Transform (DCT) to encode Cartesian coordinates into frequencies, often yield poses that appear ghost-like and lack the nuanced dynamics of human movement, like in [13,15,16,17]. Moreover, techniques like predicting temporal differentiation that is subsequently added to the last known pose to generate the final result can produce invalid poses over the long term due to the model’s lack of awareness regarding human structural information, like in [12,15,18,19,20].

5.5. Data Augmentation

Data augmentation is used for enhancing the robustness and generalization capability of pose forecasting models. Building upon methods utilized in [17], we extended the augmentation strategy with new methods to introduce further variations in the training data. Inspired by [17], we adopted several effective methods: sequence reversal, which reverses the temporal order of input sequences to expose the model to diverse temporal patterns; random person permutation, which shuffles the order of individuals within a scene to accommodate different person arrangements and interactions; random scaling, which introduces variations in pose scale to simulate varying heights of the people; random orientation, where poses are randomly rotated to simulate different camera viewpoints or human orientations; and random positioning, which shifts the positions of individuals within the scene to introduce spatial variability.

Expanding upon these methods, we introduced new techniques to enrich the dataset further. One method involved randomizing the joint order of individuals in a scene, encouraging the model to learn complex skeleton representations and adapt to different joint configurations. Additionally, we used a method to randomize the XYZ axes of individuals, enhancing pose variation by altering the orientation and positioning of poses in 3D space. Lastly, we varied the dataset’s sampling frequency, using frequencies 1–4 to capture slower and faster sequences, though this type of sampling is performed during the preprocessing step.

All augmentations, except for sampling frequencies, are applied dynamically to each sampled batch of scene sequences during training. Each augmentation method is applied with a specific probability, introducing controlled variability into the training data. For instance, sequence reversal, random person permutation, random scaling, and random positioning each have a 50% probability of being applied, while random orientation, random joint order, and random XYZ-axis order are applied with a 25% probability. Furthermore, there is a 25% probability that no augmentation will be applied to a given sequence, ensuring that the model is exposed to both augmented and unaugmented data. These augmented datasets enable the model to learn robust features and adapt effectively to diverse scenarios, improving its performance and generalization capability in pose forecasting tasks.

We progressively introduced each method during development and empirically observed consistent reductions in training loss, indicating improved learning dynamics. All augmentation strategies were designed to preserve structural validity, and none produced implausible or invalid pose sequences. Importantly, all augmentations in our pipeline are applied consistently across the entire scene, meaning that the same transformation is applied to all individuals’ pose sequences within a given scene to ensure that augmented motions remain coherent and socially consistent. Furthermore, since each augmentation process is applied with controlled probability and independently of others, we found no clear evidence of conflicting interactions or degradation in data quality. In practice, the combined use of all proposed augmentations led to the most effective training results across all datasets, as we also show in the ablation study (Section 7).
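The dynamic, scene-consistent augmentation described above might be sketched as follows. The probabilities follow the text; everything else is an assumption made for illustration: the array layout `(N, T, J, 3)`, the scale and shift ranges, rotation about the third axis only, and the reading that a 25% gate skips all augmentation before the individual draws are made.

```python
import numpy as np

def augment_scene(scene: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Return an augmented copy of a scene of shape (N, T, J, 3).

    Every transformation is applied identically to all individuals so that
    interactions stay coherent.
    """
    if rng.random() < 0.25:                      # keep the sample unaugmented
        return scene.copy()
    out = scene.copy()
    if rng.random() < 0.5:                       # sequence reversal
        out = out[:, ::-1]
    if rng.random() < 0.5:                       # random person permutation
        out = out[rng.permutation(out.shape[0])]
    if rng.random() < 0.5:                       # random scaling (assumed range)
        out = out * rng.uniform(0.8, 1.2)
    if rng.random() < 0.5:                       # random positioning (assumed range)
        out = out + rng.uniform(-1.0, 1.0, size=(1, 1, 1, 3))
    if rng.random() < 0.25:                      # random orientation (assumed axis)
        theta = rng.uniform(0.0, 2.0 * np.pi)
        c, s = np.cos(theta), np.sin(theta)
        rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
        out = out @ rot.T
    if rng.random() < 0.25:                      # random joint order
        out = out[:, :, rng.permutation(out.shape[2])]
    if rng.random() < 0.25:                      # random XYZ-axis order
        out = out[..., rng.permutation(3)]
    return np.ascontiguousarray(out)

# Hypothetical scene: 2 people, 30 frames, 13 joints.
rng = np.random.default_rng(0)
scene = rng.normal(size=(2, 30, 13, 3))
augmented = [augment_scene(scene, rng) for _ in range(8)]
```

Because the function works on a copy and draws fresh random numbers per call, it can be applied to every sampled batch without altering the stored dataset.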

5.6. Training

Our model optimizes its parameters by minimizing the error between the predicted and ground truth poses, using a loss commonly referred to as reconstruction loss (REC). This is a standard approach in pose forecasting and is widely adopted in prior work due to its simplicity and direct correlation with spatial prediction accuracy. REC is typically computed as the L2 distance between corresponding joints in the predicted and ground truth sequences, ensuring that the forecasted poses remain close to the true positions frame by frame.

However, while REC provides a useful baseline for learning pose positions, it has several limitations, particularly in the context of multi-person and dynamic motion forecasting. REC measures pose similarity on a per-joint, per-frame basis, and as such, it does not account for the temporal continuity of movements or the relational dynamics between individuals. This can lead to predicted sequences that are spatially accurate in isolated frames but lack smoothness over time or consistency in movement dynamics. For instance, a model trained with REC alone may generate plausible individual poses that result in jittery motion or unrealistic group behavior, such as individuals moving without regard for nearby participants.

To address these shortcomings, we introduce two additional loss terms that target complementary aspects of human motion. First, the multi-person joint distance (MPJD) loss enhances the model’s ability to capture social and spatial interactions by penalizing discrepancies in joint distances between individuals across time. This encourages the Scene Module to better model interaction dependencies and produce socially coherent pose sequences. Second, we incorporate a Velocity Loss (VL), which prioritizes the learning of consistent temporal dynamics. By penalizing deviations in joint velocities between predicted and ground truth sequences, the VL term helps the model generate smoother and more realistic motion trajectories, reducing jitter and improving the fluidity of movement. The effectiveness of both additional losses is demonstrated in the ablation study (Section 7).

The final loss function is determined by combining the standard reconstruction loss with an additional multi-person joint distance loss (MPJD), scaled by a factor denoted as $\lambda$, used to adjust the effect of the MPJD loss on the overall loss. Both the output and scene predictions are subjected to Velocity Loss (VL), with Velocity Loss for the output from the Scene Module also scaled by the $\lambda$ factor. To measure the error between the predicted and ground truth coordinates, we employ the $L_2$-norm loss, aiming to minimize this error during training.

The final loss is calculated as follows:

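$$\mathcal{L} = \frac{1}{N}\sum_{n=1}^{N}\left(\left\|\hat{X}^{n} - X^{n}\right\|_2 + \left\|\Delta\hat{X}^{n} - \Delta X^{n}\right\|_2\right) + \lambda\,\frac{1}{N}\sum_{n=1}^{N}\frac{1}{N}\sum_{p=1}^{N}\left(\Big\|\left\|\hat{X}^{n} - \hat{X}^{p}\right\|_2 - \left\|X^{n} - X^{p}\right\|_2\Big\|_2 + \Big\|\Delta\left\|\hat{X}^{n} - \hat{X}^{p}\right\|_2 - \Delta\left\|X^{n} - X^{p}\right\|_2\Big\|_2\right)$$

where $N$ represents the number of people in the scene; $\hat{X}^{n}$ and $\hat{X}^{p}$ represent the predicted pose sequence of the n-th and p-th person in the scene, while $X^{n}$ and $X^{p}$ represent the corresponding ground truth pose sequence of the n-th and p-th person in the scene. $\|\cdot\|_2$ denotes the Euclidean distance (L2 norm), $\frac{1}{N}\sum$ represents the mean distance across all people in the scene, and $\lambda$ is the factor that scales the MPJD terms. $\Delta$ represents temporal differentiation, where $\Delta X_{i} = X_{i} - X_{i-1}$ for $i > 1$ and $\Delta X_{i} = 0$ for $i = 1$. The predicted velocities of joint distances between individuals are represented with $\Delta\|\hat{X}^{n} - \hat{X}^{p}\|_2$, while $\Delta\|X^{n} - X^{p}\|_2$ represents the ground truth velocities of joint distances between individuals.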
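One way the loss terms described above might be combined is sketched below in NumPy. Several details are assumptions made for illustration: the array layout `(N, T, J, 3)`, the scene prediction having the same shape as the poses, distances being taken between same-index joints of two people, and plain means being used for normalization. The function names are hypothetical.

```python
import numpy as np

def temporal_diff(x: np.ndarray, axis: int) -> np.ndarray:
    """Frame-to-frame difference along `axis`, with a zero first frame."""
    first = np.take(x, [0], axis=axis)
    return np.diff(x, axis=axis, prepend=first)

def pairwise_joint_distances(x: np.ndarray) -> np.ndarray:
    """(N, T, J, 3) -> (N, N, T, J): distance between joint j of persons n and p."""
    return np.linalg.norm(x[:, None] - x[None, :], axis=-1)

def total_loss(pred, scene_pred, target, lam=0.1):
    """REC + VL on the output, plus lam * (MPJD + VL of distances) on the scene output."""
    rec = np.linalg.norm(pred - target, axis=-1).mean()
    vl = np.linalg.norm(temporal_diff(pred, 1) - temporal_diff(target, 1), axis=-1).mean()
    d_pred = pairwise_joint_distances(scene_pred)
    d_true = pairwise_joint_distances(target)
    mpjd = np.abs(d_pred - d_true).mean()
    # The time axis of the distance tensors is axis 2.
    vl_scene = np.abs(temporal_diff(d_pred, 2) - temporal_diff(d_true, 2)).mean()
    return rec + vl + lam * (mpjd + vl_scene)

# Hypothetical scene: 2 people, 14 future frames, 13 joints.
rng = np.random.default_rng(0)
target = rng.normal(size=(2, 14, 13, 3))
noisy = target + 0.05 * rng.normal(size=target.shape)
loss_perfect = total_loss(target, target, target)
loss_noisy = total_loss(noisy, noisy, target)
```

A uniform translation of all people changes the reconstruction term but leaves the MPJD and velocity terms at zero, which shows how the added terms isolate interaction and motion errors from absolute position errors.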

Including MPJD and VL losses in the training process significantly enhances the practical applicability of multi-person pose forecasting models in real-world scenarios. The MPJD loss encourages the model to learn interaction dynamics between individuals in a scene, helping it capture how one individual’s movements influence others. This is particularly useful in scenarios such as crowd monitoring, group behavioral analysis, and human–robot collaboration, where understanding interpersonal interactions is essential. On the other hand, the VL loss emphasizes temporal velocities between subsequent poses, promoting the generation of fluid and natural motion sequences. This is crucial in applications like animation, virtual reality, and autonomous systems, where smooth and realistic motion transitions are essential. Together, these losses address the challenges of producing rigid or disconnected poses, ensuring that the model generates dynamic, context-aware predictions.

We trained our model for 512 epochs with a batch size of 256, which was the largest manageable size given our hardware constraints. The extended training duration was chosen to accommodate the strong and dynamic augmentation strategy, which introduced extensive variability to the data, necessitating longer training for the model to effectively learn from these variations. Observing that the performance improvements plateaued at around 512 epochs, we determined that this duration was sufficient for optimal convergence. The Adam optimizer, a standard choice in pose forecasting, was chosen due to its adaptability and efficiency in handling complex, dynamic loss landscapes, especially with the strong augmentations applied. After testing multiple learning rates, we set an initial learning rate of 0.001, finding that it balanced effective learning with stability. A higher learning rate caused the loss to oscillate heavily, likely due to abrupt shifts in the solution space introduced by the strong augmentation, and in some cases, gradients would explode. To guide the model closer to the optimal solution, we reduced the learning rate to 0.0001 after 256 epochs, ensuring smoother convergence in the later stages of training. We also carefully tuned the $\lambda$ parameter, which scales the MPJD loss, by analyzing values from 0 to 1. A value of 0.1 was selected, as it provided the best balance in guiding the model to capture both spatial dependencies and movement dynamics effectively.
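The step schedule described above can be written as a small function. The zero-based epoch index and the names are assumptions; in a training framework the same effect would typically be achieved with a built-in step scheduler.

```python
TOTAL_EPOCHS = 512      # training length stated in the text
BATCH_SIZE = 256        # batch size stated in the text
MPJD_WEIGHT = 0.1       # selected value of the MPJD scaling factor

def learning_rate(epoch: int) -> float:
    """1e-3 for the first 256 epochs (indices 0..255), then 1e-4."""
    return 1e-3 if epoch < 256 else 1e-4

# Learning rate for every epoch of the run.
schedule = [learning_rate(e) for e in range(TOTAL_EPOCHS)]
```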

6. Experimental Results

In our experimental evaluation of the GCN-Transformer, we employed four distinct datasets: CMU-Mocap, MuPoTS-3D, SoMoF, and ExPI. To assess the model’s performance, we define evaluation metrics that quantify the error between predicted poses and ground truth. Through comprehensive analysis, we evaluated our model’s performance on all datasets and conducted a comparative study against state-of-the-art models in the domain of multi-person pose forecasting. All models used for the experimental results were retrained from scratch using their official implementations, with the exception of Future Motion, which we re-implemented based on the details provided in the original paper. We followed the reported training protocols and hyperparameters wherever available and performed validation-based tuning only for Future Motion due to missing implementation details. All models were trained and evaluated under a consistent experimental setup to ensure a fair and meaningful comparison with our proposed method.

6.1. Metrics

The MPJPE (Mean Per Joint Position Error) is a commonly used metric for evaluating the performance of pose forecasting methods [15,16,17,18,43]. It measures the average Euclidean distance between the predicted joint positions and the corresponding ground truth positions across all joints. The lower the MPJPE value, the closer the predicted poses align with the ground truth. This metric provides a joint-level assessment of pose forecasting performance. The MPJPE metric is calculated as follows:

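$$E_{\mathrm{MPJPE}}(f, \mathcal{S}) = \frac{1}{N_{\mathcal{S}}}\sum_{j=1}^{N_{\mathcal{S}}}\left\|\hat{P}_{f,\mathcal{S}}(j) - P_{f,\mathcal{S}}(j)\right\|_2$$

where $f$ denotes a time step, and $\mathcal{S}$ denotes the corresponding skeleton. $\hat{P}_{f,\mathcal{S}}(j)$ is the estimated position of joint $j$, and $P_{f,\mathcal{S}}(j)$ is the corresponding ground truth position. $N_{\mathcal{S}}$ represents the number of joints. $\|\cdot\|_2$ denotes the Euclidean distance (L2 norm), and $\frac{1}{N_{\mathcal{S}}}\sum$ represents the mean distance across all joints.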
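A minimal NumPy sketch of MPJPE follows. The function name and the array layout `(..., J, 3)` are assumptions; leading axes such as people or frames are averaged together with the joints in this sketch.

```python
import numpy as np

def mpjpe(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean Euclidean distance between predicted and ground truth joints.

    pred, target: arrays of shape (..., J, 3).
    """
    per_joint_error = np.linalg.norm(pred - target, axis=-1)  # (..., J)
    return float(per_joint_error.mean())

# Toy check: every joint is displaced by the vector (3, 4, 0), whose length is 5.
target = np.zeros((2, 13, 3))
pred = target + np.array([3.0, 4.0, 0.0])
error = mpjpe(pred, target)
```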

Another commonly employed metric in pose forecasting evaluation is the Visibility-Ignored Metric (VIM), initially proposed by Adeli et al. in [22]. The VIM is computed by assessing the mean distance between the predicted and ground truth joint positions at the last pose $T$. This calculation involves flattening the joint positions and coordinates dimensions into a unified vector representation, resulting in a vector dimensionality of $3J$, where $J$ denotes the number of joints. Subsequently, the Euclidean distance (L2 norm) is computed between the corresponding ground truth and predicted joint positions. The average distance across all joints yields the final VIM score. The SoMoF Benchmark adopts this metric for its evaluation framework. The VIM metric computation can be expressed as follows:

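$$\mathrm{VIM} = \frac{1}{J}\sum_{i=1}^{J}\left\|X_{i} - \hat{X}_{i}\right\|_2$$

where $J$ represents the number of joints, $X_{i}$ is the ground truth position of the i-th joint (flattened), $\hat{X}_{i}$ is the predicted position of the i-th joint (flattened), $\|\cdot\|_2$ denotes the Euclidean distance (L2 norm), and $\frac{1}{J}\sum$ represents the mean distance across all joints.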

6.2. Datasets

We employed distinct datasets for both training and evaluation, aligning with the methodology of previous models such as SoMoFormer [16], MRT [15], MPFSIR [17], and JRTransformer [18]. For training, we utilized the 3D Poses in the Wild (3DPW) [44] and Archive of Motion Capture As Surface Shapes (AMASS) [45] datasets. The 3DPW dataset contains over 60 video sequences of scenes with two individuals, capturing human motion in real-world scenarios, including accurate reference 3D poses in natural scenes, such as people shopping in the city, having coffee, or playing sports, recorded with a moving hand-held camera. The dataset was collected using a combination of vision-based sensors and inertial measurement units (IMUs), which provided high-fidelity motion tracking in unconstrained environments. To adhere to the evaluation protocol of the SoMoF benchmark [22], we employed a specific split of the 3DPW dataset, where the train and test sets are inverted. Thus, we trained all models on the 3DPW test set and subsequently evaluated them on the 3DPW train set. This inversion was originally introduced by the authors of the SoMoF benchmark [22] because the preprocessing of the 3DPW dataset created a larger number of sequences in the test set than in the training set; inverting the splits therefore provides a larger and more robust training set. By following this protocol, we ensure that our results are directly comparable with other multi-person pose forecasting models evaluated under the same conditions. Specifically, for the SoMoF test set, data from the original 3DPW training set were sampled without overlap, producing distinct pose sequences. In contrast, the SoMoF training set was generated by sampling the original 3DPW testing set with overlap, employing a sliding window with a stride of 1 to capture a broader range of pose variations. The validation set remained consistent with the original 3DPW dataset, which was sampled without overlap.
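The difference between sampling with and without overlap can be illustrated with window start indices. The function name and the toy numbers are assumptions; the sketch only shows how the stride controls overlap, not the benchmark's actual preprocessing code.

```python
def window_starts(num_frames: int, window: int, stride: int) -> list:
    """Start indices of all full windows of length `window` taken every `stride` frames."""
    return list(range(0, num_frames - window + 1, stride))

# Toy recording of 10 frames and windows of 4 frames.
overlapping = window_starts(num_frames=10, window=4, stride=1)   # training-style sampling
disjoint = window_starts(num_frames=10, window=4, stride=4)      # test-style sampling
```

With a stride of 1 the same recording yields seven overlapping windows, while a stride equal to the window length yields only two disjoint ones, which is why overlapping sampling produces a much larger training set.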

The AMASS dataset, in turn, provides an extensive collection of human motion capture sequences, totaling over 40 h of motion data and 11,000 motions represented as SMPL mesh models. AMASS unifies multiple optical marker-based motion capture datasets within a common framework, where motion data were originally collected using high-precision marker-based tracking systems. During training, we utilized the CMU, BMLMovi, and BMLRub subsets of AMASS, which together provide diverse, large-scale training data. Given that many sequences within this dataset are single-person, we synthesized additional multi-person training data by combining sampled single-person sequences.
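The text does not specify how the sampled sequences are combined, so the following is only one plausible sketch and not the authors' procedure. It assumes each sequence is an array of shape `(T, J, 3)`, and it places the second person at a random offset along the first two coordinate axes, which are assumed here to span the ground plane. The function name `combine_into_scene` and the parameter `max_offset` are hypothetical.

```python
import numpy as np


def combine_into_scene(seq_a, seq_b, rng, max_offset=1.0):
    """Stack two single-person sequences (T, J, 3) into one (2, T, J, 3) scene.

    Assumption: the first two coordinates span the ground plane, so the
    second person is shifted only along those axes.
    """
    frames = min(seq_a.shape[0], seq_b.shape[0])  # trim to the shorter clip
    offset = np.zeros(3)
    offset[:2] = rng.uniform(-max_offset, max_offset, size=2)
    return np.stack([seq_a[:frames], seq_b[:frames] + offset])


rng = np.random.default_rng(0)
person_a = rng.normal(size=(30, 13, 3))  # placeholder motion data
person_b = rng.normal(size=(40, 13, 3))
scene = combine_into_scene(person_a, person_b, rng)
print(scene.shape)  # (2, 30, 13, 3)
```

Scenes synthesized this way contain no genuine interaction between the two people, so they mainly add pose and trajectory variety rather than interaction examples.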

In contrast to recent works [15,16,17,18,19] that utilize the SoMoF Benchmark [22] alongside the Carnegie Mellon University Motion Capture Database (CMU-Mocap) [46] and the Multi-person Pose Estimation Test Set in 3D (MuPoTS-3D) [47] for model evaluation, our study additionally presents results on the Extreme Pose Interaction (ExPI) [48] dataset.

The CMU-Mocap and MuPoTS-3D datasets contain scenes with three individuals, with approximately 8000 annotated frames of poses across 20 real-world scenes. However, the movements captured are primarily simplistic, with limited interactions, often resulting in sequences where individuals maintain largely static poses or perform minimal motions. While we include evaluations on CMU-Mocap and MuPoTS-3D to ensure completeness and facilitate comparison with prior works, we emphasize that models trained or evaluated on these datasets may struggle to demonstrate their full capabilities in forecasting socially coherent, dynamic multi-person motion.

Therefore, after presenting initial results on CMU-Mocap and MuPoTS-3D, we focus our full analysis on the SoMoF Benchmark and the Extreme Pose Interaction (ExPI) dataset, both of which feature two-person scenes but offer significantly more challenging and realistic multi-person motion scenarios. In particular, ExPI contains dynamic sequences involving two couples engaged in physically demanding and interaction-heavy activities. The dataset was collected using a multi-sensor motion capture system comprising 68 synchronized and calibrated RGB cameras, along with a high-resolution infrared-based motion capture setup featuring 20 infrared mocap cameras. This comprehensive setup makes ExPI particularly well suited for evaluating complex, coordinated multi-person interactions in controlled yet naturalistic settings.

6.3. Results on CMU-Mocap and MuPoTS-3D

We first evaluate the GCN-Transformer against several state-of-the-art (SOTA) multi-person pose forecasting models, including MRT [15], Future Motion [13], SoMoFormer [16], JRTransformer [18], LTD [3], and MPFSIR [17]. Following established protocols, we trained all models using a synthesized dataset created by combining sampled motions from the CMU-Mocap database to simulate three-person interaction scenes. Evaluations were conducted on both test sets from the CMU-Mocap and MuPoTS-3D datasets.

For the Carnegie Mellon University Motion Capture Database (CMU-Mocap) [46], we adopt the training and testing splits provided by Wang et al. in [15]. Specifically, the dataset’s construction involves combining two-person motion sequences with an additional randomly sampled third individual, introducing a degree of randomness into the generated scenes. To ensure fairness, the same generated datasets are used across all evaluated models.

Each input sequence consists of 15 historical frames (corresponding to 1000 ms), and the models are tasked with forecasting the subsequent 45 frames (3000 ms into the future). Each individual’s pose is annotated with 15 joints, provided both as inputs and as ground truth for evaluation. We assess performance using the Mean Per Joint Position Error (MPJPE) metric, reported at 1, 2, and 3 s into the future to align with the evaluation in [15]. All models are retrained and evaluated under identical conditions using the official code and data released by [15].
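Since 15 frames span 1000 ms, the data run at 15 frames per second, so the 1, 2, and 3 s horizons correspond to the first 15, 30, and 45 forecast frames. The sketch below reports the error at those horizons. It assumes that the error for a horizon is averaged over all frames up to that horizon; the text does not state whether the protocol of [15] does this or reads the error at the horizon frame only, so this choice is an assumption, as is the function name `mpjpe_at_horizons`.

```python
import numpy as np

FPS = 15  # 15 input frames correspond to 1000 ms


def mpjpe_at_horizons(pred, gt, horizons_s=(1, 2, 3), fps=FPS):
    """Return {horizon in seconds: MPJPE over the first horizon * fps frames}.

    pred, gt: forecasts of shape (T, J, 3). Averaging over all frames up to
    the horizon is an assumption of this sketch.
    """
    errors = np.linalg.norm(pred - gt, axis=-1)  # (T, J) per-joint distances
    result = {}
    for horizon in horizons_s:
        n_frames = int(round(horizon * fps))
        if n_frames > errors.shape[0]:
            raise ValueError(f"forecast is shorter than {horizon} s")
        result[horizon] = float(errors[:n_frames].mean())
    return result


# Synthetic forecast whose error grows by 1 m in each successive second.
gt = np.zeros((45, 15, 3))
pred = np.zeros((45, 15, 3))
pred[:15, :, 0] = 1.0
pred[15:30, :, 0] = 2.0
pred[30:, :, 0] = 3.0
print(mpjpe_at_horizons(pred, gt))  # {1: 1.0, 2: 1.5, 3: 2.0}
```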

As summarized in Table 1, the GCN-Transformer consistently outperforms all competing methods on both CMU-Mocap and MuPoTS-3D datasets, achieving new state-of-the-art performance in these settings.

Table 1.

Performance comparison on the test sets of the CMU-Mocap and MuPoTS-3D datasets, featuring three-person scenes. Results are reported using the MPJPE metric (in meters), where lower values indicate better joint position prediction accuracy. Our proposed GCN-Transformer consistently achieves state-of-the-art results, outperforming all competing models on both datasets.

GCN-Transformer achieves the lowest error on both the CMU-Mocap and MuPoTS-3D test sets at every forecasting horizon, from short-term to long-term, indicating that its prediction quality holds up as the forecast extends further into the future. Among the baselines, MPFSIR, JRTransformer, and LTD are relatively competitive but still lag behind GCN-Transformer at all evaluation points. Interestingly, LTD, which was designed for single-person forecasting, performs relatively well despite lacking explicit multi-person modeling capabilities. In contrast, MRT, SoMoFormer, and Future Motion show substantially higher errors, particularly as the forecast horizon increases, suggesting weaker mechanisms for modeling long-term temporal dependencies in multi-person settings. It is also noteworthy that the ranking of the models shifts between the CMU-Mocap and MuPoTS-3D datasets. This variability indicates that many models are sensitive to dataset-specific characteristics and do not generalize consistently across different multi-person forecasting environments.

The strong results achieved by the GCN-Transformer highlight its ability to forecast complex multi-person motion accurately over both short and long time horizons. Its consistent improvements across different datasets demonstrate robustness and generalization. These findings validate the importance of combining spatial and temporal reasoning for multi-person forecasting tasks. In the following sections, we further evaluate GCN-Transformer on more socially complex datasets (SoMoF and ExPI) to assess its performance in even more dynamic and challenging scenarios.

6.4. Results on SoMoF Benchmark

The SoMoF Benchmark, introduced by Adeli et al. in [22], serves as a standardized platform for evaluating multi-person pose forecasting models. It is derived from the 3DPW dataset by sampling every other frame, which lowers the frame rate from 30 to 15 frames per second (FPS). The task is to predict the subsequent 14 frames (approximately 930 milliseconds) from 16 frames (approximately 1070 milliseconds) of preceding input data, which contain joint positions for multiple individuals. The evaluation uses the Visibility-Ignored Metric (VIM), measuring performance at several future time steps. Similarly to [13,16,17,18], all evaluated models in this paper were trained on data from the 3DPW [44] and AMASS [45] datasets. During training, only the 13 joints evaluated within the SoMoF framework were used. To ensure fair comparisons, we follow the practice of studies such as [18,19,20] and report final results from the epoch with the lowest average VIM score on the test dataset. Furthermore, the problem formulation is the same for all evaluated models: predict the next 14 frames from 16 input frames. This differs from [13,16,18], which split the task into two separate formulations optimized for short-term and long-term forecasting, a practice that inherently improves reported performance.
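The frame handling described above can be sketched as follows. The helper names and the array layout `(T, persons, joints, 3)` are assumptions for illustration and are not taken from the benchmark code.

```python
import numpy as np


def downsample(sequence, factor=2):
    """Keep every `factor`-th frame along the time axis (30 FPS -> 15 FPS for factor 2)."""
    return sequence[::factor]


def split_history_future(window, n_in=16, n_out=14):
    """Split a window of n_in + n_out frames into observed and target frames."""
    if window.shape[0] != n_in + n_out:
        raise ValueError(f"expected {n_in + n_out} frames, got {window.shape[0]}")
    return window[:n_in], window[n_in:]


def frames_to_ms(n_frames, fps):
    """Duration covered by `n_frames` frames at `fps` frames per second."""
    return 1000.0 * n_frames / fps


clip_30fps = np.zeros((60, 2, 13, 3))  # 2 s of placeholder data, 2 people, 13 joints
clip_15fps = downsample(clip_30fps)
history, future = split_history_future(clip_15fps)

print(history.shape, future.shape)  # (16, 2, 13, 3) (14, 2, 13, 3)
print(round(frames_to_ms(16, 15)), round(frames_to_ms(14, 15)))  # 1067 933
```

The computed durations of about 1067 ms and 933 ms match the rounded figures of 1070 and 930 milliseconds quoted for the benchmark.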

We conducted a comparative analysis of evaluated methods on the SoMoF Benchmark test set, as presented in Table 2, demonstrating that our model consistently achieves state-of-the-art results compared to competing models.

Table 2.

Performance comparison on the SoMoF Benchmark test set featuring two-person scenes, using the VIM and MPJPE metrics, where lower values indicate better performance. Our proposed model, GCN-Transformer, achieves state-of-the-art results. The model marked with an asterisk (*) incorporated the validation dataset during training and currently leads the official SoMoF Benchmark leaderboard at https://somof.stanford.edu.

The results demonstrate the superior performance of the proposed GCN-Transformer on both the VIM and MPJPE metrics, establishing it as a state-of-the-art solution in multi-person pose forecasting. While SoMoFormer is a strong competitor, particularly in long-term forecasting, GCN-Transformer consistently outperforms all models, most clearly on the overall metric, which aggregates performance across all evaluated time intervals. Interestingly, although its reported performance is similar to that of SoMoFormer, JRTransformer fails to achieve competitive results in this evaluation. Conversely, the Future Motion model, introduced in 2021, performs commendably, rivaling even the most recent state-of-the-art models. MPFSIR is not far behind either, and it achieves this with only a fraction of the parameters of the other models. Finally, GCN-Transformer* shows significantly better results owing to the inclusion of the validation dataset in its training. This variant currently leads the official SoMoF Benchmark leaderboard at https://somof.stanford.edu.

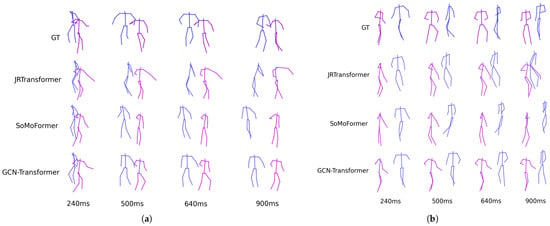

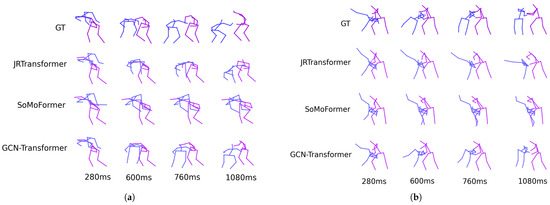

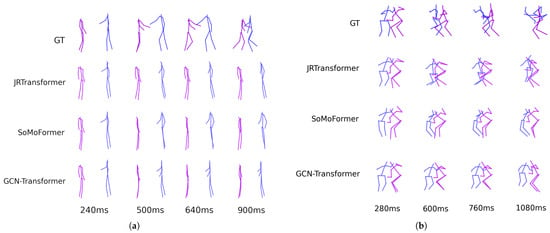

Figure 5 shows the predicted poses for two sequences from the SoMoF Benchmark test set, comparing the performance of the best-performing models, JRTransformer, SoMoFormer, and GCN-Transformer, with the ground truth (GT) also displayed for comparison. The figures reveal that both JRTransformer and SoMoFormer encounter difficulties in generating valid poses, often producing unrealistic joint configurations and movements. In contrast, the GCN-Transformer model demonstrates a clear advantage, consistently generating valid poses and realistic movements.

Figure 5.

The figure displays predicted poses on two example sequences from the SoMoF Benchmark test set for the best-performing models: JRTransformer, SoMoFormer, and GCN-Transformer, with GT representing the ground truth. Sequence (a) shows two people rotating around each other, while sequence (b) shows two people meeting and then walking together in the same direction. The visual comparison reveals that while JRTransformer and SoMoFormer struggle to create valid poses, the GCN-Transformer generates both valid poses and realistic movement.

6.5. Results on ExPI Dataset

The Extreme Pose Interaction (ExPI) dataset, described in [48], features two pairs of dancers engaging in 16 distinct extreme actions. These actions include aerial maneuvers, with the first seven being performed by both dancer couples. Subsequently, six additional aerials are executed by Couple 1, while the remaining three are carried out by Couple 2. Each action is repeated five times to capture variability, resulting in a collection of 115 sequences recorded at 25 frames per second (FPS) and 60,000 annotated 3D body poses.

Taking inspiration from the data partitioning outlined in [48], we designate all actions executed by Couple 2 as the training set and those performed by Couple 1 as the test set. This approach deviates slightly from the dataset’s division presented by Guo et al. in [48], as we incorporate common actions performed by both couples and actions performed exclusively by one couple into the training set. This dataset split emulates both the Common action split and Unseen action split described in [48], consolidating them into a single split.

We employ a sliding-window technique with overlapping sequences to sample the training data, whereas the testing data are sampled sequentially without overlap. Additionally, we downsample each sequence by selecting every other frame, reducing the frame rate from 25 to 12.5 frames per second (FPS). Following the precedent set by the SoMoF Benchmark, we use 16 frames (equivalent to 1280 milliseconds) to predict the subsequent 14 frames (equivalent to 1120 milliseconds at 80 milliseconds per frame). Moreover, we apply a scaling factor of 0.39 to keep the person scale consistent with the SoMoF Benchmark, the dataset on which the models were developed.
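A minimal sketch of the downsampling and rescaling steps is given below. The function name `preprocess_expi`, the array layout `(T, persons, joints, 3)`, and the joint count in the example are placeholders chosen for illustration; only the every-other-frame sampling and the 0.39 scaling factor come from the text.

```python
import numpy as np


def preprocess_expi(sequence, keep_every=2, scale=0.39):
    """Downsample along the time axis and rescale joint coordinates.

    sequence: array of shape (T, persons, joints, 3). Keeping every second
    frame halves the frame rate (25 FPS -> 12.5 FPS); `scale` multiplies
    all coordinates uniformly.
    """
    return sequence[::keep_every] * scale


raw = np.ones((50, 2, 4, 3))  # 2 s of placeholder data at 25 FPS, 4 dummy joints
processed = preprocess_expi(raw)
print(processed.shape)  # (25, 2, 4, 3)
```

A uniform scale changes limb lengths and inter-person distances by the same factor, so relative poses and interactions are preserved while the overall person size changes.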

We conducted a comparative analysis of evaluated methods on the ExPI test set, as presented in Table 3, demonstrating that our model consistently achieves state-of-the-art results compared to competing models. The results on the ExPI dataset differ significantly from those on the SoMoF Benchmark dataset, revealing notable performance degradation in some of the previously strong models. SoMoFormer, a close competitor on the SoMoF Benchmark, performs substantially worse on the ExPI dataset, surpassed by JRTransformer and MPFSIR. This drop in performance highlights the model’s sensitivity to different dataset characteristics. Similarly, the Future Motion model, which had proven to be a strong contender on the SoMoF Benchmark, is now outperformed by almost all other models. This indicates that the Future Motion model’s performance is heavily influenced by the dataset’s characteristics, showcasing its lack of robustness across diverse data scenarios. Interestingly, JRTransformer, which was not as competitive on the SoMoF Benchmark, emerges as a close competitor to GCN-Transformer on the ExPI dataset. Despite this, the proposed GCN-Transformer remains the clear winner across all time intervals, reaffirming its superior performance and generalizability.

Table 3.

Performance comparison on the ExPI test set featuring two-person scenes using the VIM and MPJPE metrics, where lower values indicate better performance. Our proposed model, GCN-Transformer, achieves state-of-the-art results on both metrics.