1. Introduction

State estimation and filtering for dynamic systems are of great practical significance in signal processing, communication systems, control engineering, and related fields [1]. State estimation techniques have been widely used in numerous practical applications, such as navigation [2], target tracking [3], and intelligent vehicles [4]. The classical Kalman filter (KF) provides an optimal state estimate for linear Gaussian systems under the minimum mean squared error (MMSE) criterion [5]. However, practical systems often exhibit incomplete information and non-Gaussian features, which can cause the Kalman filter to fail.

Constrained by limited communication resources, the event-triggered scheduling mechanism has emerged as a promising transmission paradigm for optimizing resource utilization while maintaining estimation and control performance by avoiding unnecessary system activations [6,7]. However, incomplete information resulting from event-triggered scheduling schemes complicates the design of state estimators. Consequently, the design of event-triggered state estimators has received much attention recently, including the deterministic event-triggered scheme [8] and the stochastic event-triggered scheme [9]. Most of the literature on event-triggered state estimation assumes that the system noise is Gaussian with known statistical information. Under this assumption, the resulting filters are not robust to outliers. As a common type of non-Gaussian phenomenon, outliers are of enormous practical significance inasmuch as they occur relatively often [10]. Noise corrupted by outliers has heavy-tailed features, which cannot be captured by a Gaussian distribution [11]. Owing to its advantages in dealing with intractable posterior probability density functions (PDFs), variational Bayesian inference (VBI) has become an effective approximation method for deriving robust estimators.

Motivated by the above discussion, in this paper, we study the robust state estimation with the heavy-tailed process and measurement noise for the stochastic event-triggered scheduling scheme. An adaptive robust event-triggered filtering algorithm is presented based on the VBI approach and the fixed-point iteration. The contributions of this paper are summarized as follows:

1. In the stochastic event-triggered state estimation problem, heavy-tailed process and measurement noise with inaccurate nominal noise covariances are considered. Student’s t-distribution and the inverse Wishart distribution are adopted to model the one-step prediction and measurement likelihood PDFs and the unknown covariance matrices, respectively.

2. A robust event-triggered variational Bayesian filtering method is proposed to jointly estimate the system state together with the prediction error covariance and the measurement noise covariance. In the filtering algorithm, the event-triggered probabilistic information and the transmitted measurement are used to adaptively update the system states in the cases of non-transmission and transmission, respectively.

3. The simulation results verify that the proposed filtering method has a promising estimation performance in the presence of intermittent measurements and outliers. The comparison with several other variational filtering algorithms confirms that the proposed filter saves communication resources and achieves a comparable estimation performance without knowing the accurate noise covariances.

The remainder of this paper is organized as follows. Section 2 reviews the literature on event-triggered robust state estimation. Section 3 formulates the stochastic event-triggered robust state estimation problem with heavy-tailed process and measurement noise, and it presents the hierarchical Gaussian model based on Student’s t-distribution. Section 4 proposes the robust event-triggered variational Bayesian filtering method for the cases of non-transmission and transmission, respectively. Section 5 presents the simulation experiments and results to show the effectiveness of the proposed filtering. A conclusion is given in Section 6.

Notations: and represent the sets of real symmetric positive semi-definite and positive definite matrices, respectively. is the -dimensional identity matrix. denotes the Gaussian distribution with the mean and covariance matrix P. stands for the Gaussian PDF of random variable x with the mean and covariance matrix P. is the probability of a random event.

2. Related Work

Robust state estimation and filtering for dynamic systems have been under development for decades. Robust state estimation has been extensively studied, involving cases of model parameter uncertainty [12], non-Gaussian noise [13], and imperfect sensor information [14,15]. To overcome the failure of conventional Kalman filtering in such problems, various types of filtering methods have been proposed, such as extended Kalman filtering, unscented Kalman filtering, cubature Kalman filtering, and particle filtering.

To date, studies on event-triggered state estimation have produced many exciting results. For the innovation-based deterministic event-triggered scheduling schemes, the authors in [8,16] proposed a Kalman-like filter and a distributed KF by using the Gaussian approximation method, respectively. To obtain the MMSE estimator, a stochastic event-triggered scheduling scheme was proposed in [9] with Gaussian-like triggering functions, and the optimal event-triggered KFs were then derived for both the open-loop and closed-loop schemes. Recently, robust event-triggered state estimators based on risk-sensitive functions have been proposed for hidden Markov models [17], linear Gaussian systems [18], and nonlinear systems [19]. In [20], an event-triggered model was designed in the presence of packet drops and Gaussian correlated noise. For nonlinear systems, cubature Kalman filtering and particle filtering methods are used in [21,22], respectively, to design event-triggered state estimators.

Most of the literature on event-triggered state estimation assumes that the system noise has a Gaussian distribution with known statistical information. However, in practical engineering applications, many hidden factors and anomalies produce outliers, such as unanticipated environmental disturbances and temporary sensor failure. When the noise is corrupted by outliers, Kalman-based filters usually have poor robustness because the Gaussian distribution cannot capture heavy-tailed features. In recent years, the filtering problem with non-Gaussian heavy-tailed noise has attracted much attention. Variational Bayesian inference (VBI) has been proposed as an effective approximation method to tackle complicated PDFs and derive robust filters [23,24,25,26,27]. Based on Student’s t-distribution, a novel variational filtering algorithm with heavy-tailed noise was developed in [23]. In [24], non-stationary heavy-tailed noise is modeled as Gaussian mixture distributions. Lately, the Gaussian-Gamma mixture filter [25], the Gaussian-Student’s t mixture filter [26], and Student’s t mixture filter [27] were proposed in the presence of outliers. In [28], a multi-sensor variational Bayesian Student’s t-based cubature information fusion algorithm was developed to solve the state estimation of nonlinear systems with heavy-tailed measurement noise. The authors in [29] derived a robust cubature Kalman filter by using a partial variational Bayesian method to deal with non-stationary heavy-tailed noise. For systems with inaccurate Gaussian process noise covariance over binary sensor networks, the authors in [30] developed a distributed sequential estimator via inverse Wishart distributions and fixed-point iterations. In [31], an event-triggered variational Bayesian filter was proposed for Gaussian system noise with unknown and time-varying noise covariances. In the case of heavy-tailed process noise and Gaussian measurement noise, an event-triggered robust unscented Kalman filter was proposed in [32] to jointly estimate the states and the unmanned surface vehicle parameters. The authors in [33] introduced a sampled memory event-triggered mechanism and discarded the measurement outliers based on an error upper bound.

4. Robust Event-Triggered Variational Bayesian Filtering

In this section, a robust filtering algorithm is proposed based on the VBI approach under the event-triggered scheduling scheme (3).

VBI is usually applied to solve the optimization problem for systems with both unknown parameters and latent variables. Denote the set of all latent variables and parameters by $\Theta$ and the set of all observed variables by $Z$. The joint posterior PDF $p(\Theta \mid Z)$ is not analytically tractable, which hinders the estimation of the unknown parameters and latent variables. The VBI approach is used to search for an approximate PDF in a factorized form [34,38]:

$$p(\Theta \mid Z) \approx q(\Theta) = \prod_{\theta \in \Theta} q(\theta), \tag{18}$$

where $q(\theta)$ is the approximate posterior PDF of $\theta$. The approximation based on VBI is formed by minimizing the Kullback–Leibler (KL) divergence between the separable approximation and the true posterior distribution. Thus, a general expression for the optimal solution is given by [34,38]

$$\log q(\theta) = \mathrm{E}_{\Theta^{(-\theta)}}\left[\log p(\Theta, Z)\right] + c_{\theta}, \tag{19}$$

where $\Theta^{(-\theta)}$ is the set of all elements in $\Theta$ except $\theta$, and $c_{\theta}$ is a constant independent of $\theta$. When the optimal variational parameters are coupled with each other, the fixed-point iteration method is adopted to solve (19), i.e.,

$$\log q^{(i+1)}(\theta) = \mathrm{E}^{(i)}_{\Theta^{(-\theta)}}\left[\log p(\Theta, Z)\right] + c_{\theta}, \tag{20}$$

where $i$ denotes the $i$-th iteration, and the iterations converge to a local optimum of (19).
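The coupled updates solved by fixed-point iteration can be illustrated on a scalar toy problem. The sketch below is not the filter derived in this paper: it assumes a single measurement z = x + v with a Gaussian prior on x and Student's t noise v written as a Gaussian scale mixture with a Gamma-distributed auxiliary variable. The function names, the variable `e_lam`, and the fixed iteration count are all hypothetical choices made for illustration.

```python
def vb_student_t_update(z, m_prior, p_prior, r, nu, n_iter=20):
    """Scalar variational update for z = x + v with Student's t noise v.

    Assumed hierarchical model (illustration only):
      x ~ N(m_prior, p_prior), v | lam ~ N(0, r / lam),
      lam ~ Gamma(shape=nu / 2, rate=nu / 2).
    """
    e_lam = 1.0  # initial guess for E[lam]
    m_post, p_post = m_prior, p_prior
    for _ in range(n_iter):
        # q(x): Gaussian, i.e. a Kalman update with noise variance r / E[lam]
        r_eff = r / e_lam
        gain = p_prior / (p_prior + r_eff)
        m_post = m_prior + gain * (z - m_prior)
        p_post = (1.0 - gain) * p_prior
        # q(lam): Gamma with shape (nu + 1) / 2 and rate (nu + E[(z - x)^2] / r) / 2
        e_sq = (z - m_post) ** 2 + p_post
        shape = 0.5 * (nu + 1.0)
        rate = 0.5 * (nu + e_sq / r)
        e_lam = shape / rate  # mean of a Gamma PDF is shape / rate
    return m_post, p_post, e_lam


def kalman_update(z, m_prior, p_prior, r):
    """Standard scalar Kalman measurement update, for comparison."""
    gain = p_prior / (p_prior + r)
    return m_prior + gain * (z - m_prior), (1.0 - gain) * p_prior
```

For an outlying measurement such as z = 50 with a prior N(0, 1), the iteration drives E[lam] toward a small value, which inflates the effective noise variance and keeps the posterior mean close to the prior, whereas the plain Kalman update moves the mean halfway to the outlier.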

For Student’s t-based hierarchical Gaussian state-space model in

Section 3.2, the posterior estimates will be given based on the VBI method, which is illustrated by the graphical model in

Figure 1. Depending on whether the measurement data have been received by the remote estimator, the discussion is divided into non-transmission, i.e.,

, and transmission cases, i.e.,

. As shown in

Figure 1, the measurement

can be directly used to infer

in the case of

. In the case of

, the probabilistic information of the event-triggered scheme (

3) is used to infer

and

jointly.

Remark 2. Compared with most of the previous studies [23,31], a more practical robust state estimation problem is considered, with both the event-triggered transmission scheme and heavy-tailed noise. How to estimate the system states in the presence of non-transmissions and outliers is a critical problem. The proposed filtering is designed to achieve robust estimation performance while saving communication resources.

4.1. Variational Filtering in the Case of Non-Transmission

When the measurement

is not transmitted by the sensor at time

k, i.e.,

,

is unavailable for the remote estimator. In this case, the set of the unknown variables is denoted as

In the VBI framework, the joint posterior PDF

is approximated as

The approximate posterior PDF for every element in

will be calculated in the following.

(1) The logarithm of joint PDF

: The joint PDF

is factorized as

where

,

,

, and

are given by (

16), (

17), (

10) and (

11), respectively, and the event-triggered scheme (

3) yields

It has been shown in [

9] that

and

are jointly Gaussian given

. We define

,

, and

. In view of (

12) and (

13),

is given as

It follows that

Substituting the above distributions into (

23), we have

Then,

is computed as

where

is a constant independent of

.
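The role of the triggering probability in step (1) can be illustrated with a scalar sketch. It assumes a Gaussian-like triggering function of the kind attributed to [9], where the probability of holding the measurement decays with the squared innovation. The parameter name `y_param`, the scalar setting, and the helper names are hypothetical and are not taken from scheme (3).

```python
import math
import random


def no_transmission_probability(innovation, y_param):
    """Pr(gamma = 0 | innovation) for an assumed Gaussian-like trigger."""
    return math.exp(-0.5 * y_param * innovation ** 2)


def trigger(innovation, y_param, rng):
    """Return gamma: 1 means transmit, 0 means hold the measurement."""
    zeta = rng.random()  # uniform on [0, 1)
    return 0 if zeta <= no_transmission_probability(innovation, y_param) else 1


def empirical_rate(innovation_std, y_param, n_samples, seed=0):
    """Fraction of transmissions for zero-mean Gaussian innovations."""
    rng = random.Random(seed)
    sent = sum(trigger(rng.gauss(0.0, innovation_std), y_param, rng)
               for _ in range(n_samples))
    return sent / n_samples
```

Under these assumptions, a zero-mean Gaussian innovation with standard deviation s gives an average transmission rate of 1 - 1/sqrt(1 + y_param * s^2), so a larger `y_param` yields more frequent transmissions. Even when nothing is sent, the decision itself tells the estimator that the innovation was probably small.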

(2) Decoupling of

: We use the method proposed in [31] to decouple

and

from

and

. First,

is factorized as

Thus, the determinant and the inverse of

are, respectively, computed as

Define

, then

where

is computed by

and

and

are given as

Substituting (

26) and (

28) into (

25) yields

(3) The update of

: Let

, and using (

31) in (

20), we have

where

is given by

According to (

32) and Definition 3,

can be updated as the following Gamma PDF:

where the shape parameter

and rate parameter

are given by

(4) The update of

: Let

, and using (

31) in (

20), we have

where

is given by

According to (

36) and Definition 3,

can be updated as the following Gamma PDF:

where the shape parameter

and rate parameter

are given by

(5) The update of

: Let

, and using (

31) in (

20), we have

Based on (

40) and Definition 2,

is updated by the following inverse Wishart PDF:

where the dof parameter

and inverse scale matrix

are given by

(6) The update of

: Let

and using (

31) in (

20), we have

Based on (

43) and Definition 2,

can be updated as the following inverse Wishart PDF:

where the dof parameter

and inverse scale matrix

are given by

(7) The update of : To update , i.e., and , the following two lemmas will be used.

Lemma 1 ([39]). For matrices A, B, C, and D with appropriate dimensions, if A and are nonsingular, then

If D is also nonsingular, then .

Lemma 2 ([40]). For matrices A, B, U, and V with appropriate dimensions, if A and B are nonsingular, then .

Theorem 1. Considering the event-triggered state estimation system with heavy-tailed process and measurement noise (1)–(3), based on Student’s t-based hierarchical Gaussian model (14)–(17) and the variational Bayesian approximation (22), the variational updates and in the case of are given as follows:

where

Proof. Let

, and using (31) in (20), we have

Defining the modified measurement noise and prediction error covariance matrices

and

, respectively, then

where

is given as

and

is given as

Based on Lemma 1, the estimation error covariance of

is computed as

where the last two equalities hold because of Lemma 2.

The estimation error covariance of

is computed as

where the third equality holds due to Lemma 2, and the last two equalities are obtained by conventional matrix operations.

In addition, the cross-covariance between

and

is derived as follows:

where the third equality holds due to Lemma 2.

Therefore, is updated as the Gaussian PDF , where the mean and the covariance matrix are given in (48). Similarly, is updated as the Gaussian PDF , where the mean and the covariance matrix are given in (48). □

(8) The computation of expectations: According to Definition 2 and Definition 3, the required expectations

,

,

and

are calculated as follows

In view of (

30), the required expectations

and

are computed as

where

,

, and

are given by (48). In the case of

, it follows from

and

.

4.2. Variational Bayesian Filtering in the Case of Transmission

When the measurement

is transmitted by the sensor at time

k, i.e.,

, the set of the unknown variables is denoted as

The joint PDF

is factorized as

The recursions of

,

,

, and

are the same as those in the case of

. Let

, so that

where

and

are given by (

49). Hence

is updated by the following Gaussian PDF:

where the mean

and covariance matrix

are given by

The expectations

and

are given as follows.

where

is given by (

64).

Remark 3. In the derivation of variational Bayesian filtering, event-triggered structure information is used to calculate the logarithm of joint PDF in the case of non-transmission. Despite the absence of the measurement, the unknown covariances are adaptively estimated by taking advantage of the conditional probabilities of the triggering decision (24). In the case of transmission, the measurement received by the remote estimator can be directly used to update the system states, as seen in (64). To sum up, we propose the robust event-triggered Student’s t-based variational Bayesian filtering, as summarized in Algorithm 1.

| Algorithm 1 Event-triggered Student’s t-based robust variational Bayesian filtering |

- Input: , , , , , , ; m, n, , , , , N
- 1: Time update:
- 2:
- 3:
- 4: Variational measurement update:
- 5: Initialization: , , , , , , ,
- 6: for to do
- 7: Calculate and :
- 8: if then
- 9: , is calculated by (59)
- 10: else
- 11: and are calculated by (65) and (66)
- 12: end if
- 13: Update by (35),
- 14: Update by (39),
- 15: Update by (42),
- 16: Update by (45),
- 17: Update : ,
- 18: if then
- 19: , , , are calculated as (48)
- 20: else
- 21: and are updated as (64)
- 22: end if
- 23: end for
- 24: , , , , ,
- Output: , , ,

The parameters in Algorithm 1 are discussed as follows: (1) and are the dofs of Student’s t-distributions (6) and (7), respectively. Student’s t-distribution will reduce to the Cauchy distribution and the Gaussian distribution when the dof is 1 and infinite, respectively. The selection of the dofs is related to the degree of heavy tail of the target distribution. (2) is a tuning parameter used to reflect the influence of the prior information . For a large , the measurement updates will introduce more prior uncertainties caused by the heavy-tailed process noise. For a small , a large amount of information about the system process will be lost. Generally, the tuning parameter is suggested to be selected as . (3) is the forgetting factor used to update the prior scale matrix in the inverse Wishart prior distribution of in (11), which indicates the extent of the time fluctuations. The smaller the forgetting factor , the more the information from the previous estimation is forgotten; otherwise, the more the information from the previous estimation is used. Generally, the forgetting factor is suggested to be selected as for a better performance.
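The two limiting cases of Student's t-distribution mentioned in item (1) can be checked numerically. The following is a minimal sketch for the scalar case using only the standard library; the function names are illustrative and not part of Algorithm 1.

```python
import math


def student_t_pdf(x, nu, mu=0.0, sigma=1.0):
    """Scalar Student's t PDF with dof nu, location mu, and scale sigma."""
    z = (x - mu) / sigma
    log_norm = (math.lgamma(0.5 * (nu + 1.0)) - math.lgamma(0.5 * nu)
                - 0.5 * math.log(nu * math.pi) - math.log(sigma))
    return math.exp(log_norm - 0.5 * (nu + 1.0) * math.log1p(z * z / nu))


def cauchy_pdf(x):
    """Standard Cauchy PDF (Student's t with one degree of freedom)."""
    return 1.0 / (math.pi * (1.0 + x * x))


def gaussian_pdf(x):
    """Standard Gaussian PDF (limit of Student's t as the dof grows)."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
```

With a small dof such as 3, the density at x = 5 is several orders of magnitude larger than the Gaussian density at the same point, which is the heavy-tail behavior that the dof parameters control.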

Remark 4. The computational complexity of Algorithm 1 is analyzed. In the time update step, the computational complexity of calculating and is . In the variational measurement update for each iteration i, the complexity of calculating and is and , respectively. The complexity of updating , , , and is , , , and , respectively. Then, the computational complexity of obtaining is , where n and m are the dimensions of the system state and the measurement output, respectively.

5. Simulation Results

In this section, the performance of the proposed robust event-triggered variational Bayesian filtering method is illustrated by the problem of target tracking. The target moves according to a constant velocity model in two-dimensional space, and its position is measured. The system matrices are given as

where the parameter

is the sampling interval. The state dimension and the measurement dimension are

and

, respectively. The nominal process and measurement noise covariances are set as

where

and

. The heavy-tailed process and measurement noise are generated by
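The simulation setup described above can be sketched in code. The numerical values and the exact generating distributions are not reproduced here, so everything specific in the sketch is an assumption made for illustration: the state ordering [px, py, vx, vy], independent noise on each component, and a two-component Gaussian mixture in which a small fraction of samples is drawn with an inflated standard deviation. The names `cv_model`, `contaminated_gauss`, and `simulate` and all default values are hypothetical.

```python
import random


def cv_model(dt):
    """Constant velocity model in 2D with state [px, py, vx, vy] (assumed order)."""
    F = [[1.0, 0.0, dt, 0.0],
         [0.0, 1.0, 0.0, dt],
         [0.0, 0.0, 1.0, 0.0],
         [0.0, 0.0, 0.0, 1.0]]
    H = [[1.0, 0.0, 0.0, 0.0],   # only the position is measured
         [0.0, 1.0, 0.0, 0.0]]
    return F, H


def matvec(a, x):
    """Matrix-vector product for nested lists."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def contaminated_gauss(std, rng, p_outlier=0.05, inflation=10.0):
    """N(0, std^2) with prob. 1 - p_outlier, else N(0, (inflation * std)^2)."""
    scale = inflation if rng.random() < p_outlier else 1.0
    return rng.gauss(0.0, scale * std)


def simulate(n_steps, dt=1.0, q_std=0.1, r_std=1.0, seed=0):
    """Generate states and position measurements with outlier-contaminated noise."""
    rng = random.Random(seed)
    F, H = cv_model(dt)
    x = [0.0, 0.0, 1.0, 1.0]
    states, measurements = [], []
    for _ in range(n_steps):
        x = [xi + contaminated_gauss(q_std, rng) for xi in matvec(F, x)]
        z = [zi + contaminated_gauss(r_std, rng) for zi in matvec(H, x)]
        states.append(x)
        measurements.append(z)
    return states, measurements
```

A mixture of this kind is one common way to produce heavy-tailed samples: most draws follow the nominal covariance, while occasional draws with a much larger spread act as outliers.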

In this simulation, we compare the proposed filtering algorithm, Algorithm 1, with the following estimators.

1. RST-KF (the robust Student’s t-based Kalman filter [23]): RST-KF is proposed for heavy-tailed process and measurement noise, where the accurate noise covariances are known and the measurement is sent at each time k.

2. ETVBF (the event-triggered variational Bayesian filter [31]): ETVBF is proposed for unknown Gaussian noise covariances, where the unknown Gaussian process noise covariance is formulated as a multiple of the nominal process noise covariance.

3. KFNCM (the Kalman filter with nominal noise covariance matrices): The measurement is sent at each time k.

4. CLSET-KF (the closed-loop stochastic event-triggered Kalman filter [9]): The stochastic event-triggered scheme is adopted, where the process and measurement noise have Gaussian distributions with known covariance matrices.

The inaccurate nominal initial process and measurement noise covariances are set as

and

, respectively. The triggering parameter takes the form of

. The mean and covariance of

are chosen as

and

. The dof parameters are set as

. The tuning parameter and the forgetting factor are set as

and

, respectively. To compare the estimation performance, the root mean square error (RMSE) and the averaged RMSE (ARMSE) are used as the performance metrics, which are defined as

where

10,000,

, and

n represent the total Monte Carlo experiment number, the iterative step of one Monte Carlo run, and the dimension of the state, respectively.

and

represent the

l-th components of system state

and the estimate

at time

k in the

j-th Monte Carlo run, respectively. To evaluate the estimation performance under different transmission frequencies, the communication rate is defined as

which is numerically calculated by

where

denotes

in the

j-th Monte Carlo run.
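The performance metrics can be computed from stored Monte Carlo results along the following lines. This is a sketch under assumptions: the squared error is averaged over runs and state components at each time step, the ARMSE is taken as the root of the time-averaged squared RMSE, and the communication rate is the fraction of transmitted measurements. The function names and the nested-list data layout are hypothetical.

```python
import math


def rmse_per_step(truth, estimate):
    """RMSE at each time step; inputs are indexed as [run j][time k][component l]."""
    n_runs, n_steps, n_dim = len(truth), len(truth[0]), len(truth[0][0])
    out = []
    for k in range(n_steps):
        sq = sum((truth[j][k][l] - estimate[j][k][l]) ** 2
                 for j in range(n_runs) for l in range(n_dim))
        out.append(math.sqrt(sq / (n_runs * n_dim)))
    return out


def armse(truth, estimate):
    """Root of the squared error averaged over runs, time steps, and components."""
    per_step = rmse_per_step(truth, estimate)
    return math.sqrt(sum(r * r for r in per_step) / len(per_step))


def communication_rate(gammas):
    """Fraction of transmissions; gammas[j][k] is 0 or 1 at time k of run j."""
    total = sum(len(run) for run in gammas)
    return sum(sum(run) for run in gammas) / total
```

Storing the triggering decisions of every run alongside the estimates makes it possible to report the estimation error and the communication rate from the same set of Monte Carlo experiments.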

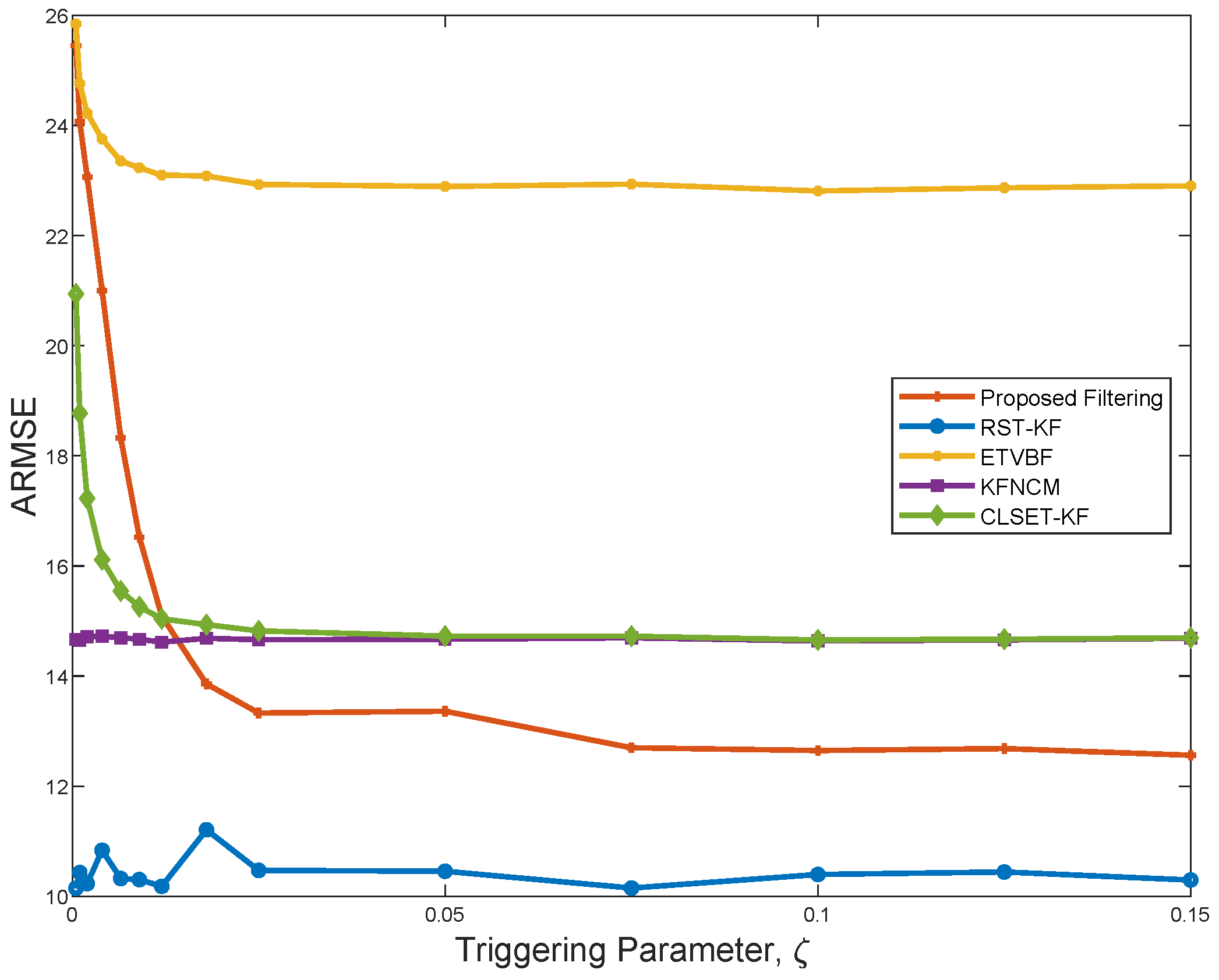

Figure 2 depicts the ARMSEs of the five filtering algorithms under

. It is seen that the proposed filter performs better than ETVBF, KFNCM, and CLSET-KF, and it gradually stabilizes as

increases. Compared with RST-KF, the proposed filter does not know the exact nominal noise covariance. Hence, the performance of the proposed filter is worse than that of RST-KF because of the information loss. ETVBF is developed for unknown Gaussian noise covariances, and it has poor estimation performance in the presence of outliers. KFNCM and CLSET-KF are the optimal estimators for linear Gaussian systems without and with the closed-loop event-triggered scheme, respectively, and their performance tends to be the same as

increases. However, the two filters are ineffective under heavy-tailed noise.

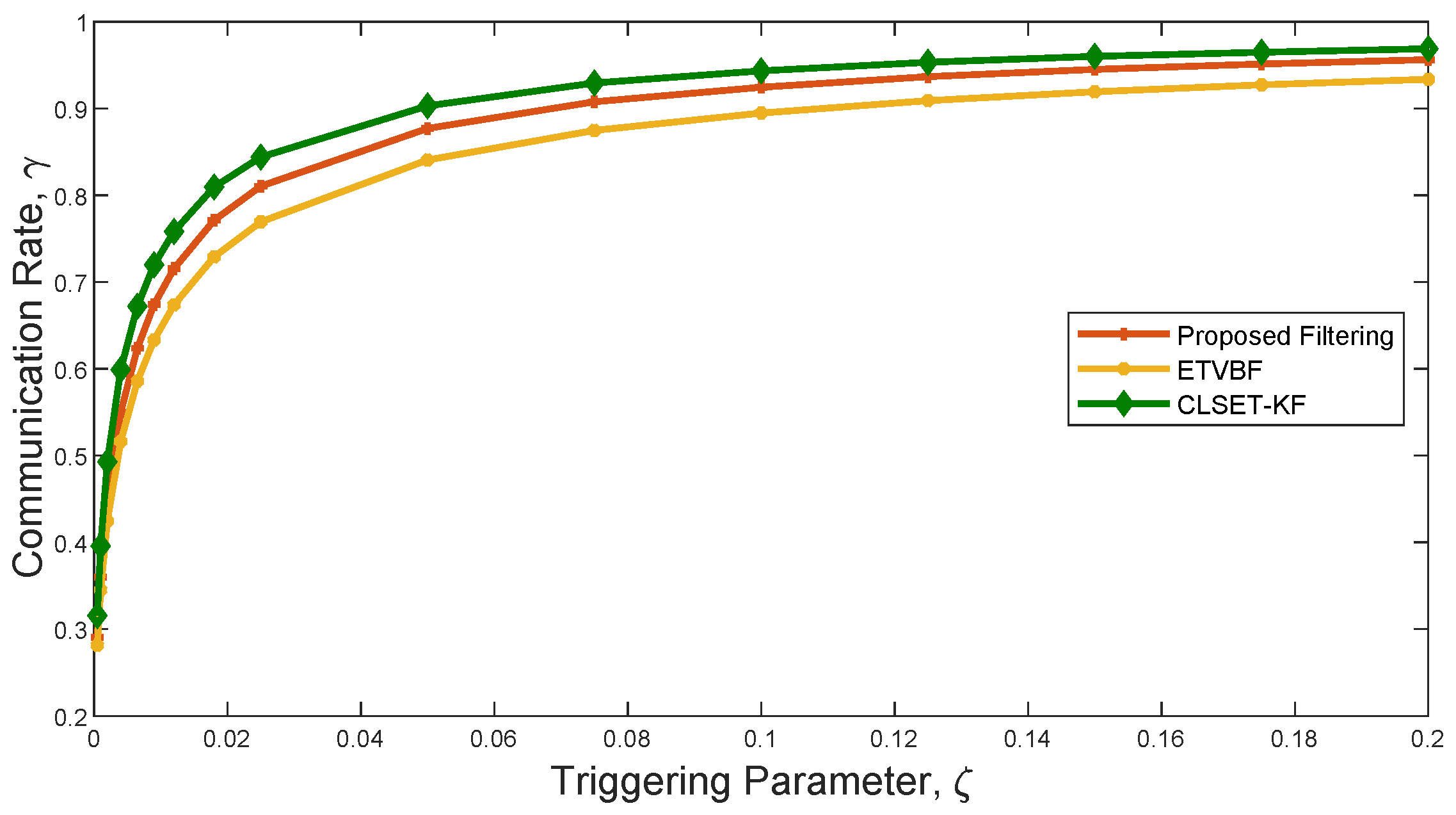

Figure 3 shows the communication rate of the three filters using a closed-loop stochastic event-triggered scheme. As seen in

Figure 2 and

Figure 3, ETVBF has the lowest communication rate but the largest ARMSE. Compared with CLSET-KF, the proposed filter has both a smaller communication rate and better performance. Therefore, it can be concluded that the proposed filtering achieves a promising robust estimation performance with acceptable communication overhead under heavy-tailed noise.

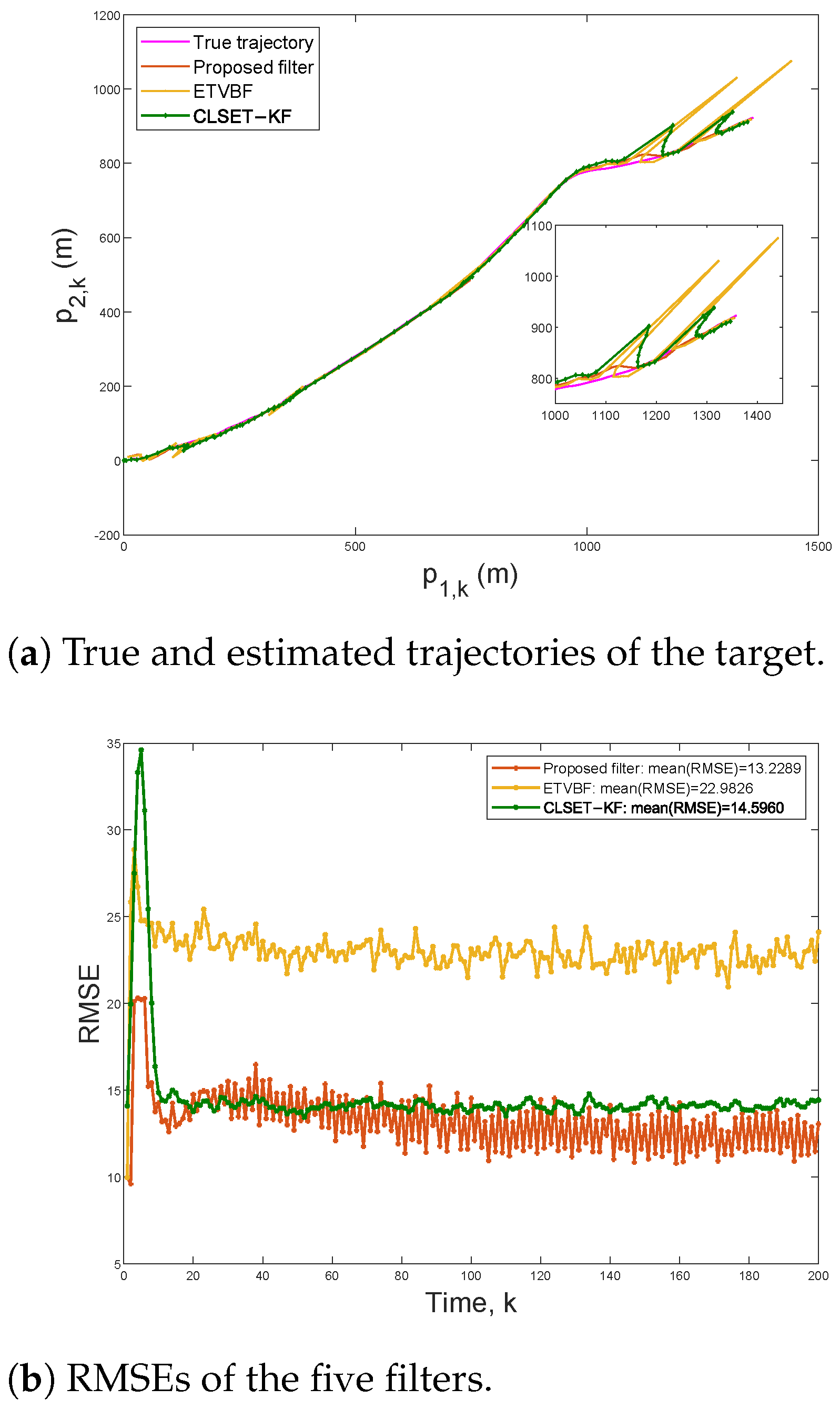

To compare the robust estimation performance under the stochastic event-triggered scheme,

Figure 4 plots the target trajectories and the RMSEs within 200 steps under

and

. In this case, the communication rates of the proposed filter, ETVBF, and CLSET-KF are

,

, and

, respectively. As shown in

Figure 4a, ETVBF and CLSET-KF fail in tracking outliers, while the proposed filtering can still track the target position well. It can be seen in

Figure 4b that the mean RMSEs of the proposed filtering and CLSET-KF are

and

, respectively. As time step

k increases, the RMSE of the proposed filtering becomes significantly smaller than that of CLSET-KF and ETVBF.

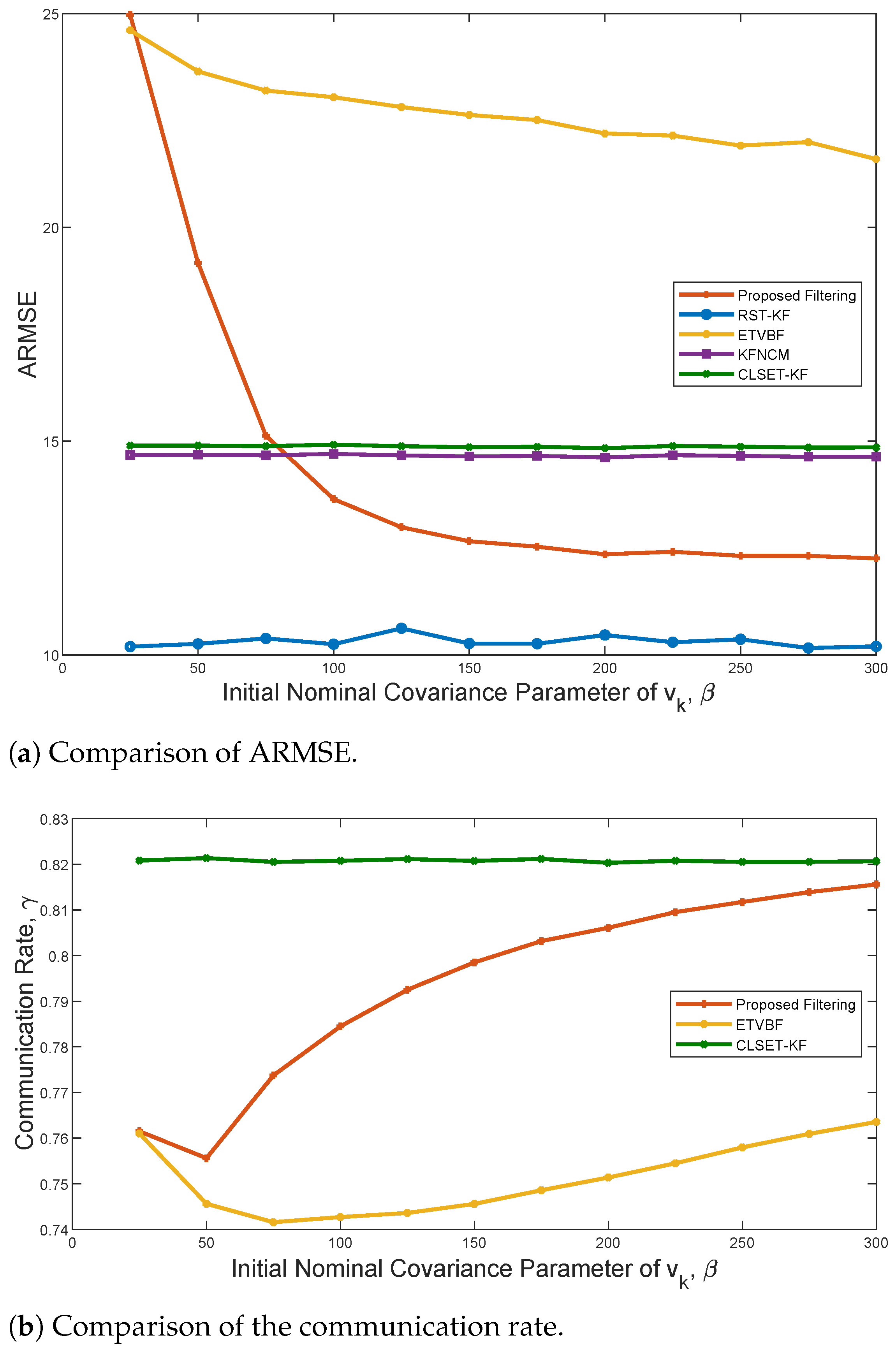

To illustrate the influence of the nominal measurement noise covariance

, the comparisons of ARMSE and the communication rate are depicted in

Figure 5. Only ETVBF and the proposed filtering are affected by

, and both have better performance with increased

. It is also noted that the ARMSE of the proposed filtering tends to be stable when

is about 200. However, as

becomes large, the communication rate of both ETVBF and the proposed filtering decreases and then increases. Hence, a trade-off between the estimation performance and the communication rate can be achieved by adjusting parameter

.

6. Conclusions

In this paper, a robust variational Bayesian filtering method is proposed for the stochastic event-triggered state estimation system with heavy-tailed process and measurement noise. Heavy-tailed noise is approximated as Student’s t-distributions, where the prior distributions of the scale matrices are chosen as the inverse Wishart distributions. Based on a Student’s t-based hierarchical Gaussian state-space model, the variational Bayesian inference approach and the fixed-point iteration method are utilized to jointly estimate the system states and the unknown covariances in the cases of non-transmission and transmission, respectively. The simulation results demonstrate the effectiveness of the proposed stochastic event-triggered filtering method for heavy-tailed process and measurement noise with inaccurate covariance matrices.

This work addresses the practical constraints of limited communication resources and outliers. Further work can involve the design of event-triggered robust state estimators for other non-ideal conditions, such as communication delays and model uncertainties, and the extension of the proposed estimation method to real application scenarios.