2.1. Calculation of Image Dynamic Segmentation Line

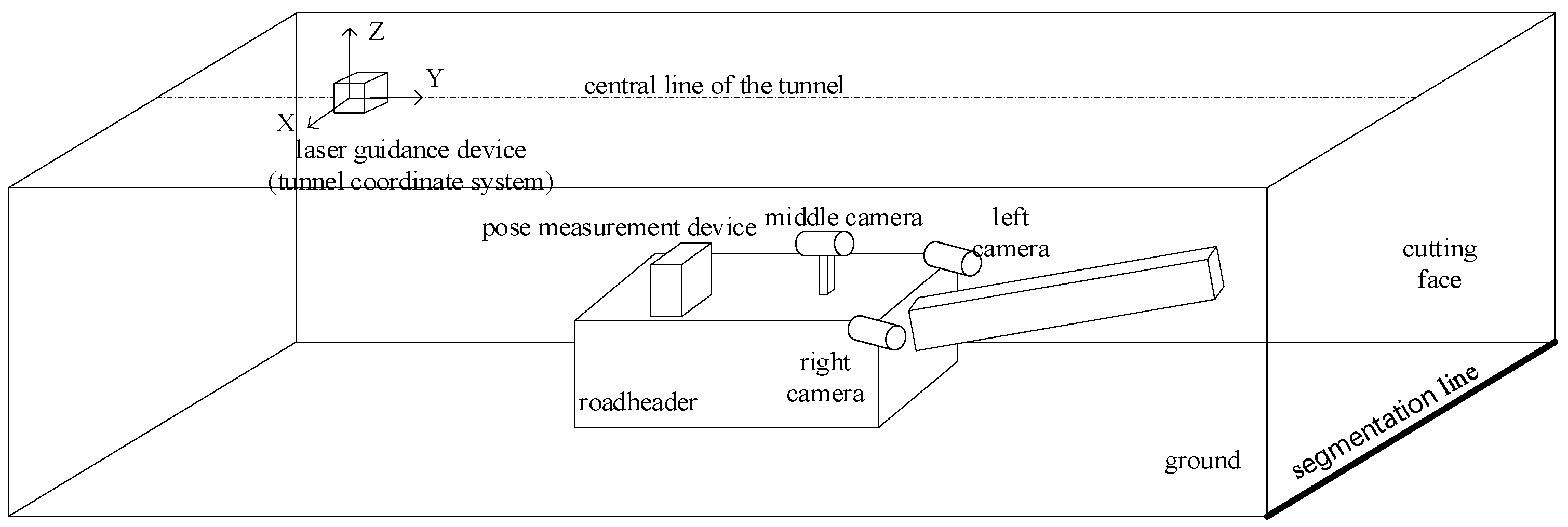

The navigation and positioning device for the roadheader consists of two parts: a laser guidance device suspended from the tunnel roof behind the roadheader and a pose measurement device installed on the roadheader. The origin $O_L$ of the tunnel coordinate system is located on the laser guidance device, with the X, Y, and Z directions of the coordinate system representing the lateral, tunneling, and height directions of the tunnel, respectively. The entire navigation and positioning device provides the heading angle, pitch angle, and roll angle of the roadheader body, as well as the coordinates of the roadheader in the tunnel coordinate system [12].

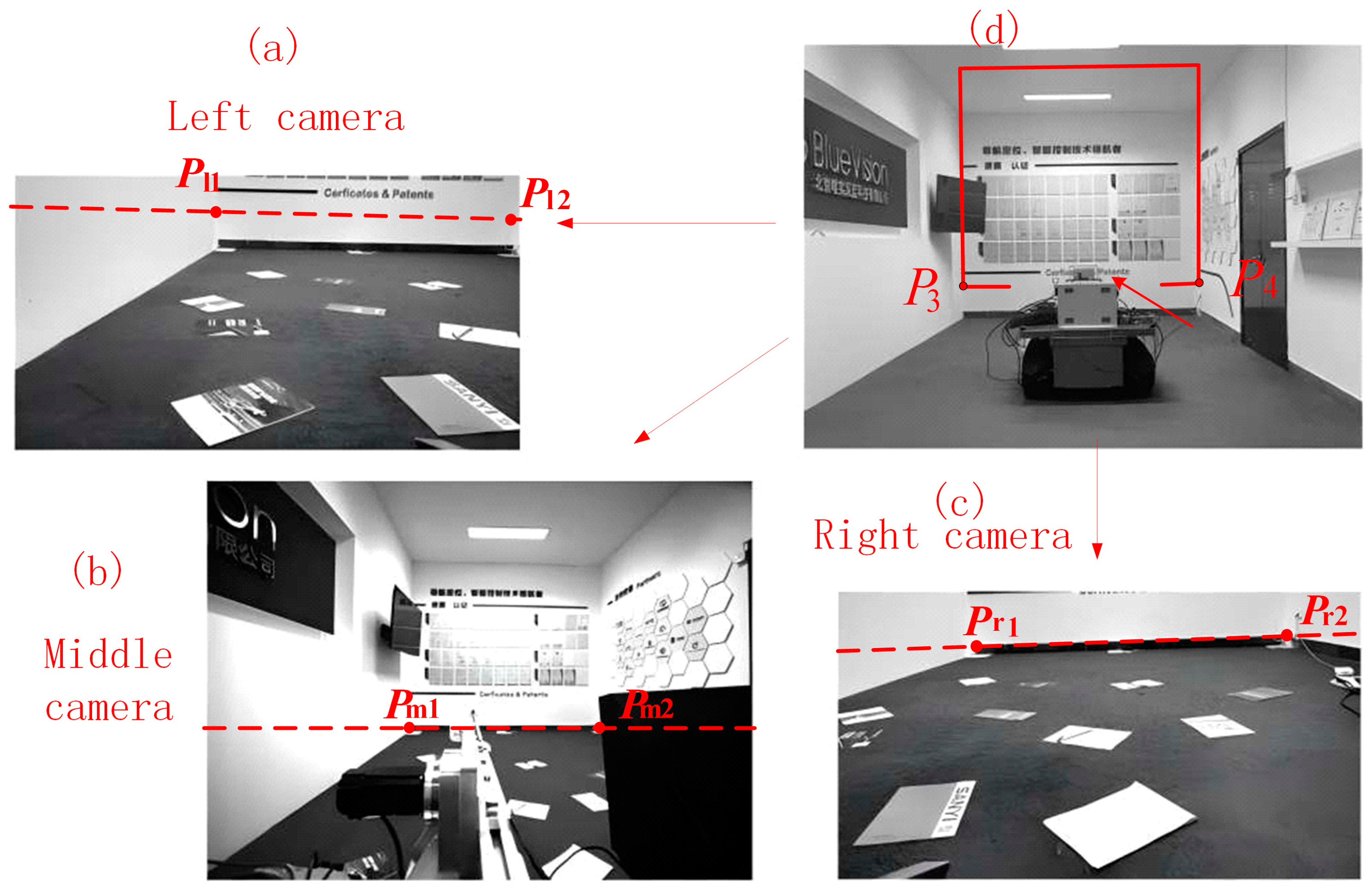

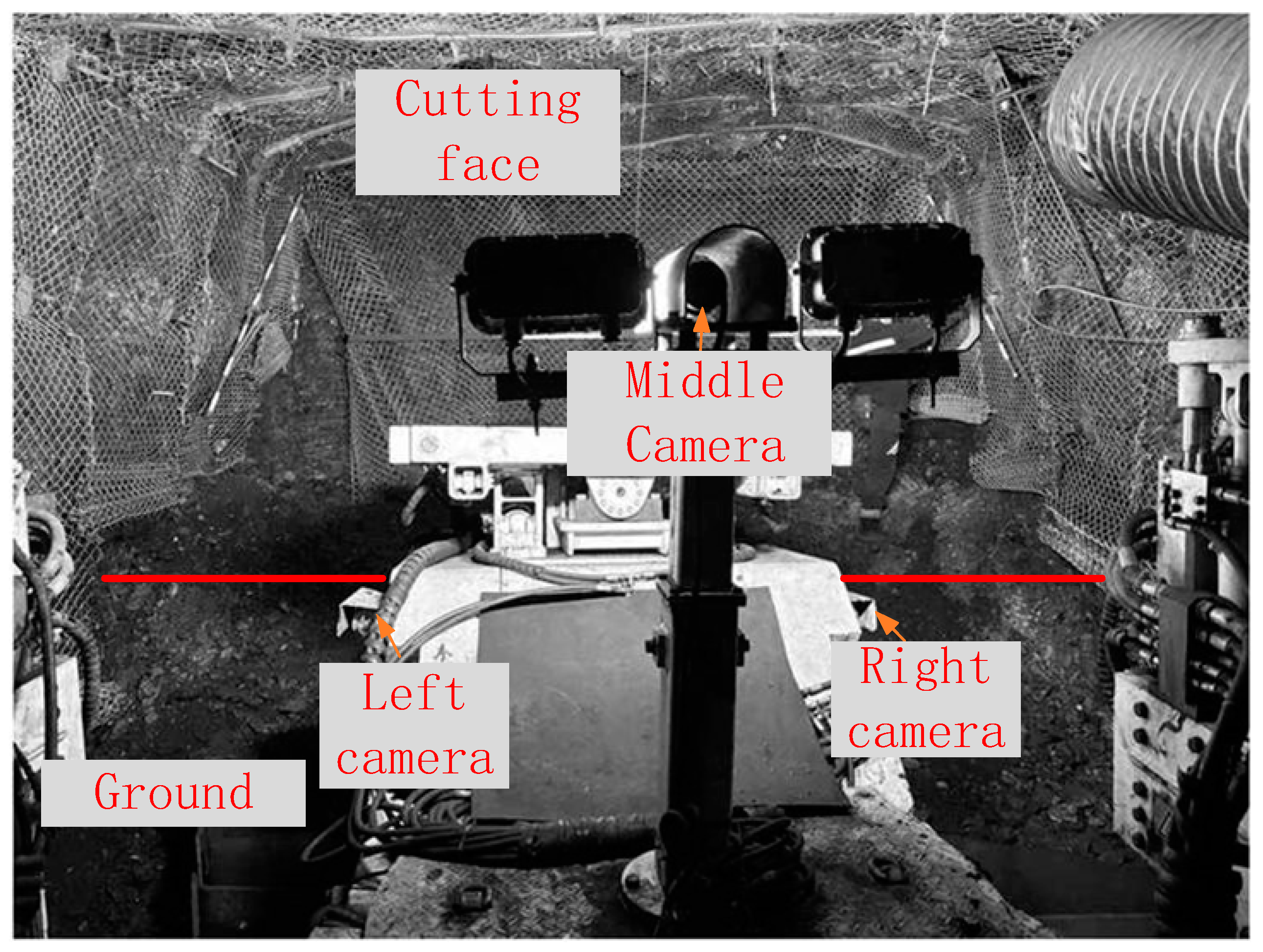

To obtain surveillance images of the tunneling face, three cameras are installed: one on each of the left and right sides at the front end of the roadheader, and one in the middle near the driver’s position. As shown in Figure 1, the multi-camera system covers the entire cutting face and ground area through its spatially distributed fields of view.

In an actual tunnel, the boundary between the ground and the cutting face approximates a straight line, and the coordinates of the endpoints $P_1$ and $P_2$ of this segmentation line are known in the tunnel coordinate system $O_L$. If the pixels at which $P_1$ and $P_2$ are imaged by each camera are calculated, the line connecting these pixels can serve as the segmentation line between the cutting face and the ground in that camera’s image.

As shown in Figure 2, taking the middle camera as an example, a calibration board is installed at the tunnel heading, and the coordinates of each corner point on the calibration board in the tunnel coordinate system are measured in advance. Let the coordinate of any corner point in the tunnel coordinate system be $P_L$. Meanwhile, based on the camera’s intrinsic parameters, the geometric parameters of the calibration board itself, and the pixel coordinates of this corner point in the image captured by the middle camera, its coordinate in the middle camera coordinate system can be calculated as $P_{Cm}$. The two coordinates are related by the chain of rigid transformations

$$P_L = R_t^L \left( R_{Cm}^t P_{Cm} + T_{Cm}^t \right) + T_t^L \tag{1}$$

where $R_t^L$ and $T_t^L$ represent the rotation matrix and translation vector from the pose measurement device coordinate system $O_t$ to the tunnel coordinate system $O_L$, which can be obtained by inverse calculation using the heading angle, roll angle, pitch angle, and spatial position coordinates provided by the roadheader navigation and positioning system. $R_{Cm}^t$ and $T_{Cm}^t$ represent the rotation matrix and translation vector from the middle camera coordinate system $O_{Cm}$ to the pose measurement device coordinate system $O_t$, which can be calibrated by combining multiple corner point coordinates with Equation (1).
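The calibration described above amounts to finding the rigid transformation that best maps corner points expressed in the camera frame onto the same points expressed in the pose measurement device frame. The source does not state which solver is used; the sketch below assumes the SVD-based Kabsch (orthogonal Procrustes) solution. The function name `fit_rigid_transform` and the sample data are hypothetical.

```python
import numpy as np

def fit_rigid_transform(src, dst):
    """Least-squares R, T such that dst ≈ R @ src + T (Kabsch algorithm).

    src, dst: (N, 3) arrays of corresponding points, N >= 3, not collinear.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection (determinant -1) in the SVD solution.
    sign = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, sign]) @ U.T
    T = dst_c - R @ src_c
    return R, T

# Hypothetical check: recover a known transform from noise-free corner points.
rng = np.random.default_rng(0)
P_cm = rng.uniform(-1.0, 1.0, size=(6, 3))      # corners in the camera frame
angle = np.deg2rad(20.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
T_true = np.array([0.3, -0.1, 1.2])
P_t = P_cm @ R_true.T + T_true                  # corners in the device frame
R_est, T_est = fit_rigid_transform(P_cm, P_t)
```

In practice the device-frame coordinates would first be obtained from the measured tunnel coordinates by removing the device pose, and measurement noise would make the fit approximate rather than exact.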

For the endpoints $P_1$ and $P_2$ of the segmentation line between the cutting face and the ground, their coordinates in the tunnel coordinate system are $P_{L\_1}$ and $P_{L\_2}$, respectively, while their coordinates $P_{Cm\_1}$ and $P_{Cm\_2}$ in the middle camera coordinate system can be obtained by inverting Equation (1), using the same rotation matrices and translation vectors:

$$P_{Cm\_i} = \left(R_{Cm}^t\right)^{-1}\left[\left(R_t^L\right)^{-1}\left(P_{L\_i} - T_t^L\right) - T_{Cm}^t\right], \quad i = 1, 2 \tag{2}$$

By combining the camera’s intrinsic parameters, we can further obtain the corresponding pixel coordinates $p_{m1}$ and $p_{m2}$ of these two points in the image captured by the middle camera:

$$Z_{Cm\_i}\begin{bmatrix} p_{mi} \\ 1 \end{bmatrix} = K_m P_{Cm\_i}, \quad i = 1, 2 \tag{3}$$

where $K_m$ is the intrinsic matrix of the middle camera, while $Z_{Cm\_1}$ and $Z_{Cm\_2}$ represent the distances of points $P_1$ and $P_2$, respectively, along the Z-axis direction of the middle camera. The line connecting the pixel points $p_{m1}$ and $p_{m2}$ serves as the segmentation line between the cutting face and the ground in the tunneling heading image.
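The projection chain above can be illustrated with a short sketch. It assumes a distortion-free pinhole camera and orthonormal rotation matrices (so the inverse is the transpose). All names and numeric values are hypothetical: `R_tL`, `T_tL` stand for the device-to-tunnel pose, and `R_ct`, `T_ct` for the camera-to-device pose.

```python
import numpy as np

def tunnel_to_camera(P_L, R_tL, T_tL, R_ct, T_ct):
    """Invert P_L = R_tL @ (R_ct @ P_c + T_ct) + T_tL for P_c."""
    P_t = R_tL.T @ (P_L - T_tL)      # tunnel frame -> device frame
    return R_ct.T @ (P_t - T_ct)     # device frame -> camera frame

def project(K, P_c):
    """Pinhole projection: returns pixel (u, v) and depth Z along the optical axis."""
    uvw = K @ P_c
    return uvw[:2] / uvw[2], uvw[2]

# Hypothetical setup: device axes aligned with the tunnel axes; camera looks
# along the tunneling direction (camera z = tunnel Y, camera y = tunnel -Z).
R_tL = np.eye(3)
T_tL = np.array([0.0, 20.0, -2.0])
R_ct = np.array([[1.0, 0.0, 0.0],
                 [0.0, 0.0, 1.0],
                 [0.0, -1.0, 0.0]])
T_ct = np.array([0.0, 1.0, 0.5])
K_m = np.array([[800.0, 0.0, 640.0],
                [0.0, 800.0, 360.0],
                [0.0, 0.0, 1.0]])

# Endpoints of the floor/face boundary in the tunnel frame (hypothetical).
P_L1 = np.array([-2.5, 25.0, -3.5])
P_L2 = np.array([2.5, 25.0, -3.5])

p_m1, Z1 = project(K_m, tunnel_to_camera(P_L1, R_tL, T_tL, R_ct, T_ct))
p_m2, Z2 = project(K_m, tunnel_to_camera(P_L2, R_tL, T_tL, R_ct, T_ct))
```

Because only the pose changes from frame to frame, the two projected endpoints can be recomputed for every image at negligible cost.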

This method is also applicable to the images captured by the left and right cameras. On the one hand, it yields the rotation matrix $R_{Lm}^t$ and translation vector $T_{Lm}^t$ from the left camera coordinate system $O_{Lm}$ to the pose measurement device coordinate system $O_t$, as well as the rotation matrix $R_{Rm}^t$ and translation vector $T_{Rm}^t$ from the right camera coordinate system $O_{Rm}$ to the pose measurement device coordinate system $O_t$. On the other hand, it can also calculate the segmentation lines between the cutting face and the ground in the left and right camera images, as shown in Figure 2. $p_{r1}$, $p_{r2}$ and $p_{l1}$, $p_{l2}$ are the pixel points corresponding to $P_1$ and $P_2$ in the right and left camera images, respectively, and their connecting lines represent the segmentation lines between the cutting face and the ground in the corresponding images.
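Once the two endpoint pixels are known, each image can be split into a cutting-face region and a ground region by testing on which side of the line a pixel falls. The following is a minimal sketch, assuming the cutting face appears above the line in the image and that the first endpoint lies to the left of the second; the function name `cutting_face_mask` is hypothetical.

```python
import numpy as np

def cutting_face_mask(shape, p1, p2):
    """Boolean mask that is True for pixels above the line p1 -> p2.

    shape: (height, width); p1, p2: (u, v) pixel endpoints, p1 left of p2.
    Image rows grow downward, so "above the line" means smaller v.
    """
    h, w = shape
    v, u = np.mgrid[0:h, 0:w]
    (u1, v1), (u2, v2) = p1, p2
    # The sign of the 2D cross product tells which side of the line a pixel is on.
    side = (u2 - u1) * (v - v1) - (v2 - v1) * (u - u1)
    return side < 0

# Hypothetical 10 x 10 image with a horizontal boundary at row 5.
mask = cutting_face_mask((10, 10), (0, 5), (9, 5))
```

Pixels exactly on the line are assigned to the ground region here; that tie-breaking choice is arbitrary.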

Compared to other methods that utilize image feature points to divide the front and back planes, this method directly calculates and generates the segmentation lines between the cutting face and the ground based on the coordinates of the segmentation line endpoints, thereby avoiding reliance on image quality and significantly improving the reliability of the segmentation of the tunneling heading image.

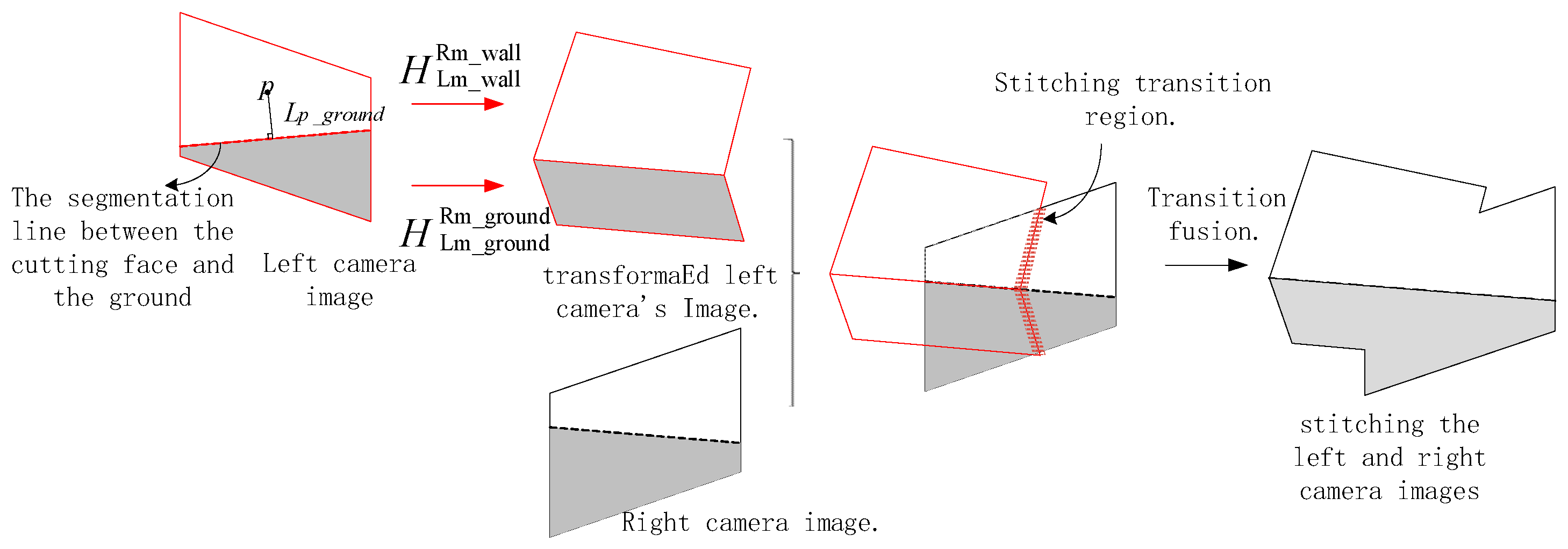

2.2. Stitching of Segmented Images

After the cutting face and the ground have been segmented in the front-facing images, the dual-homography warping (DHW) method is applied to these two planes. The images captured by the left and middle cameras are each transformed into the perspective of the right camera through matrix transformation and then stitched with the image captured by the right camera.

2.2.1. Stitching of Ground Plane

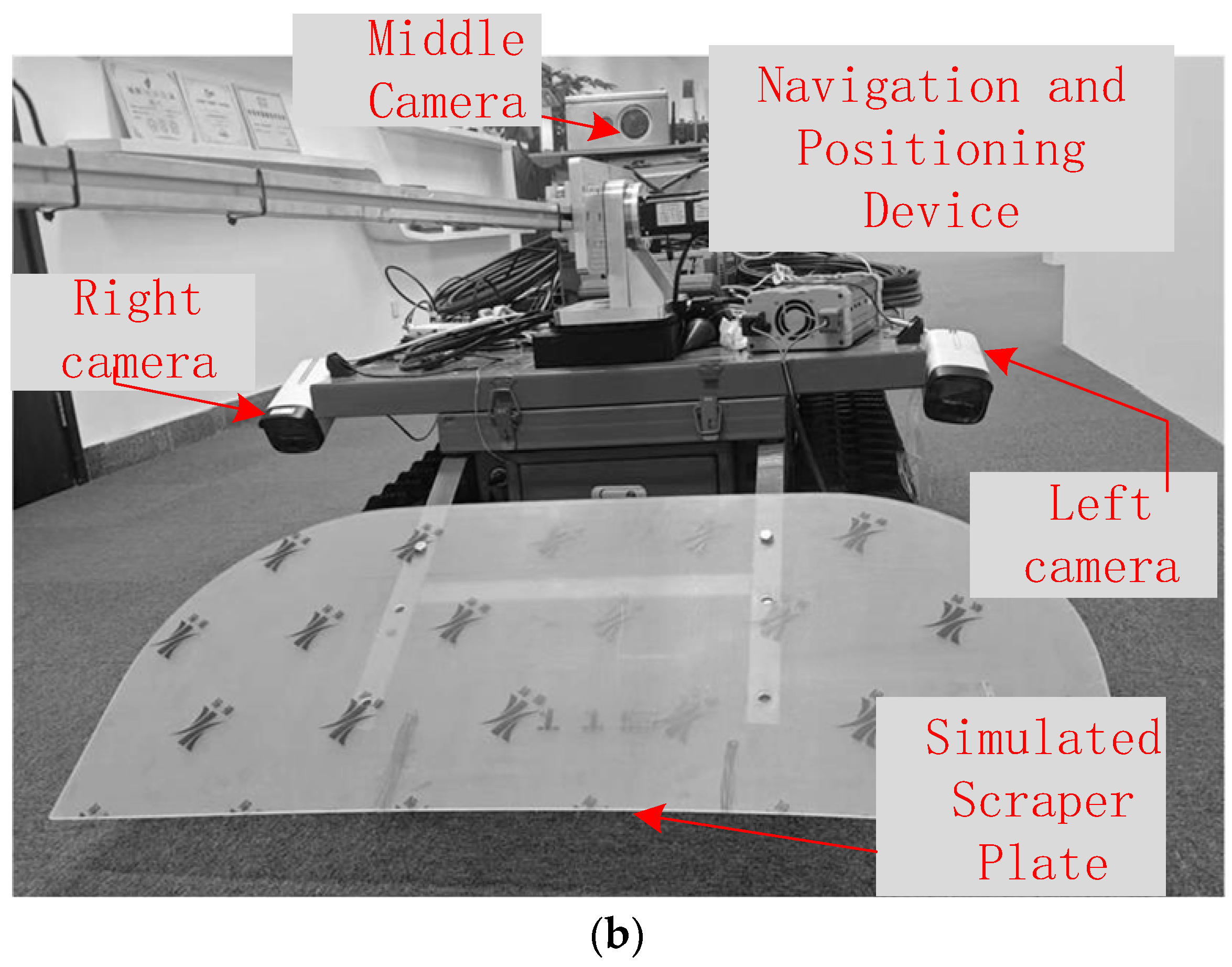

As shown in Figure 1, the heights of the left, middle, and right cameras above the roadway ground remain essentially unchanged. Therefore, once the camera installation positions are fixed, the ground-plane homography matrices $H_{lr}^{g}$ and $H_{mr}^{g}$ for perspective transformation from the left and middle cameras to the right camera are also fixed. These matrices can be pre-calibrated by placing multiple feature points in the ground area shared by the cameras and matching them across the images.
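The pre-calibration of a fixed ground homography from matched feature points can be sketched with the direct linear transform (DLT). The source does not specify the estimator, so this is an assumed choice; it omits coordinate normalization and outlier rejection, which a practical calibration would likely add. The function name and the sample correspondences are hypothetical.

```python
import numpy as np

def estimate_homography(src, dst):
    """Direct linear transform: H such that dst ~ H @ src in homogeneous form.

    src, dst: (N, 2) arrays of matched pixel coordinates, N >= 4.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector of the smallest singular value.
    _, _, Vt = np.linalg.svd(np.asarray(rows))
    H = Vt[-1].reshape(3, 3)
    return H / H[2, 2]

# Hypothetical noise-free correspondences generated from a known homography.
H_true = np.array([[1.2, 0.1, 0.3],
                   [-0.05, 0.9, 0.1],
                   [0.1, 0.05, 1.0]])
src_pts = np.array([[0, 0], [1, 0], [1, 1], [0, 1], [0.5, 0.2], [0.3, 0.8]], float)
homog = np.hstack([src_pts, np.ones((6, 1))]) @ H_true.T
dst_pts = homog[:, :2] / homog[:, 2:3]
H_est = estimate_homography(src_pts, dst_pts)
```

Since the ground homographies are fixed after installation, this estimation runs once offline and does not affect the real-time stitching loop.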

2.2.2. Stitching of Cutting Face

Unlike the ground, the distance between the cutting face and the cameras varies as the roadheader moves, so the transformation matrices between the images captured by different cameras must be adjusted dynamically according to this distance. To handle these dynamically changing transformation matrices, this paper adopts a plane-induced homography model, which can be computed quickly from the intrinsic and extrinsic parameters of the cameras, the pose transformation matrices between the camera coordinate systems, the normal vector of the cutting face in the camera coordinate system, and the distance from the camera to the cutting face [13].

Taking the left camera as an example, the homography matrix $H_{lr}^{f}$ of the cutting face portion within its image relative to the cutting face portion in the right camera’s image can be calculated by Equation (4).

where $K_l$ and $K_r$ are the intrinsic matrices of the left and right cameras, respectively; $R_l^r$ and $T_l^r$ are the rotation matrix and translation vector from the left camera to the right camera, respectively; $I$ is the identity matrix; $n$ is the normal vector of the cutting face in the right camera coordinate system; and $d$ is the distance from the cutting face to the origin of the right camera coordinate system.
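To make the plane-induced homography concrete, the sketch below derives one consistent form from the quantities just listed. It assumes the conventions $X_r = R X_l + T$ for the left-to-right camera pose and $n^{\top} X_r = d$ (unit normal, $d > 0$) for the cutting face in the right camera frame, which give $H = K_r \left(I - T n^{\top}/d\right)^{-1} R\, K_l^{-1}$. The source's own equation may be written in a different but equivalent form or with different sign conventions; the function name and test values are hypothetical.

```python
import numpy as np

def plane_induced_homography(K_l, K_r, R, T, n, d):
    """Homography mapping left-image pixels to right-image pixels for points
    on the plane n . X_r = d, with the plane given in the right camera frame.

    Assumed conventions (not confirmed by the source):
      X_r = R @ X_l + T, n is a unit normal, d > 0.
    """
    T = np.asarray(T, dtype=float).reshape(3, 1)
    n = np.asarray(n, dtype=float).reshape(1, 3)
    # On the plane, T = T (n . X_r) / d, hence R @ X_l = (I - T n^T / d) @ X_r.
    A = np.eye(3) - (T @ n) / d
    H = K_r @ np.linalg.solve(A, R) @ np.linalg.inv(K_l)
    return H / H[2, 2]

# Hypothetical check with one 3D point lying on the plane z = 5 (right frame).
K = np.array([[800.0, 0.0, 640.0], [0.0, 800.0, 360.0], [0.0, 0.0, 1.0]])
th = np.deg2rad(5.0)
R = np.array([[np.cos(th), 0.0, np.sin(th)],
              [0.0, 1.0, 0.0],
              [-np.sin(th), 0.0, np.cos(th)]])
T = np.array([-0.8, 0.0, 0.05])
n, d = np.array([0.0, 0.0, 1.0]), 5.0

X_r = np.array([0.4, -0.3, 5.0])        # point on the plane, right frame
X_l = R.T @ (X_r - T)                   # same point, left frame
p_l = (K @ X_l) / X_l[2]                # homogeneous pixel in the left image
p_r = (K @ X_r) / X_r[2]                # homogeneous pixel in the right image

H = plane_induced_homography(K, K, R, T, n, d)
q = H @ p_l
p_r_from_H = q / q[2]
```

Only $n$ and $d$ change as the roadheader advances, so the matrix can be rebuilt per frame from a handful of 3 × 3 operations without any feature matching.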

Based on the coordinate system transformation relationship, the rotation matrix $R_l^r$ and translation vector $T_l^r$ from the left camera to the right camera can be obtained from the positional relationships between the left and right camera coordinate systems and the pose measurement device coordinate system given in Section 2.1. The normal vector $n$ and distance $d$ of the cutting face can be determined from several feature points (such as $P_1$ and $P_2$) on the cutting face and the coordinates of the right camera in the tunnel coordinate system $O_L$ [13,14]. The specific calculation steps are as follows. As shown in Figure 2, four feature points $P_1$, $P_2$, $P_3$, and $P_4$ are selected on the cutting face ($P_1$ and $P_2$ are the endpoints of the intersection line between the cutting face and the ground, and $P_3$ and $P_4$ are two feature points on the cutting face directly above $P_1$ and $P_2$ at a height $h$). Their coordinates in the tunnel coordinate system $O_L$ are known, denoted as $P_{L\_1}$, $P_{L\_2}$, $P_{L\_3}$, and $P_{L\_4}$, respectively. By combining the rotation matrix $R_{Rm}^t$ and translation vector $T_{Rm}^t$ between the pose measurement device and the right camera, obtained through calibration in Section 2.1, with the pose parameters provided by the navigation and positioning system, their coordinates in the right camera coordinate system can be calculated.
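The left-to-right camera pose follows from each camera's calibrated pose relative to the pose measurement device. A minimal sketch, assuming each calibration has the form $X_t = R X_c + T$ (camera frame to device frame) and that rotation matrices are orthonormal; the names `R_lt`, `T_lt`, `R_rt`, `T_rt` and all numbers are hypothetical.

```python
import numpy as np

def relative_pose(R_lt, T_lt, R_rt, T_rt):
    """Left-to-right camera pose (X_r = R @ X_l + T) from each camera's
    pose in the device frame (X_t = R_ct @ X_c + T_ct)."""
    R = R_rt.T @ R_lt
    T = R_rt.T @ (T_lt - T_rt)
    return R, T

def rot_z(deg):
    """Rotation about the z-axis, used only to build the example poses."""
    a = np.deg2rad(deg)
    return np.array([[np.cos(a), -np.sin(a), 0.0],
                     [np.sin(a),  np.cos(a), 0.0],
                     [0.0, 0.0, 1.0]])

# Hypothetical camera-to-device calibrations.
R_lt, T_lt = rot_z(10.0), np.array([-1.0, 0.2, 0.0])
R_rt, T_rt = rot_z(-8.0), np.array([1.0, 0.2, 0.0])

X_l = np.array([0.3, -0.2, 4.0])            # a point in the left camera frame
X_t = R_lt @ X_l + T_lt                     # the same point in the device frame
X_r_direct = R_rt.T @ (X_t - T_rt)          # the same point in the right frame

R_lr, T_lr = relative_pose(R_lt, T_lt, R_rt, T_rt)
X_r_via_pose = R_lr @ X_l + T_lr
```

Because both cameras are rigidly mounted on the roadheader, this relative pose is constant and needs to be computed only once after calibration.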

Subsequently, the normal vector $n$ of the cutting face formed by the four points $P_1$, $P_2$, $P_3$, and $P_4$ in the right camera coordinate system and the distance $d$ from the cutting face to the origin of the right camera coordinate system can be obtained.
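One simple way to obtain a plane normal and distance from such points is a cross product of two in-plane edge vectors. The sketch below uses three of the four points and keeps the fourth as a consistency check; this is an illustrative choice rather than the source's stated procedure, and the coordinates are hypothetical values already expressed in the right camera frame.

```python
import numpy as np

def plane_from_points(P1, P2, P3):
    """Unit normal n and distance d >= 0 such that n . X = d on the plane
    through three non-collinear points given in the camera frame."""
    n = np.cross(P2 - P1, P3 - P1)
    n = n / np.linalg.norm(n)
    d = float(n @ P1)
    if d < 0:                       # orient the normal so that d is non-negative
        n, d = -n, -d
    return n, d

# Hypothetical cutting-face points, 5 m in front of the right camera
# (camera y points downward, so the floor points have the larger y).
P1 = np.array([-2.5, 2.0, 5.0])
P2 = np.array([2.5, 2.0, 5.0])
P3 = np.array([-2.5, 0.5, 5.0])
P4 = np.array([2.5, 0.5, 5.0])

n, d = plane_from_points(P1, P2, P3)
residual_P4 = abs(float(n @ P4) - d)   # how far the fourth point is off the plane
```

A large residual for the fourth point would indicate that the face is not planar enough for a single homography or that the pose data are inconsistent.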

By substituting the results from Equations (5)–(8) into Equation (4), the dynamic homography matrix of the left camera relative to the right camera can be calculated. Similarly, the homography matrix of the cutting face portion within the middle camera image relative to the right camera can also be determined.

The process above shows that, given the camera parameters, the proposed method can quickly solve for the transformation matrix of the cutting face by combining the coordinates of the cutting face provided by the navigation and positioning system with the distance from the camera to the cutting face. This avoids the more involved procedure of traditional algorithms, which first select feature points, perform registration, and then calculate the homography matrix through singular value decomposition (SVD), and thereby improves real-time performance.

2.2.3. Stitching of the Overall Image

After image segmentation and the calculation of the transformation matrices, the final stitching with the DHW method requires calculating the weight of each pixel based on its position in the image and performing overlay fusion. The process is illustrated in Figure 3.

Assuming there exists a pixel point $p$ in the left image, the transformation matrix $H_p$ used to convert it to the right camera’s perspective is calculated according to Equation (9), where $w$ is the weight corresponding to the position of point $p$ in the image. The weight is calculated from two distances: $d_f$, the distance from pixel point $p$ to the nearest feature point on the cutting face, and $d_g$, the distance from pixel point $p$ to the nearest feature point on the ground. Since the segmentation line between the cutting face and the ground has been explicitly determined in this paper, when point $p$ is on the cutting face, $d_f = 0$, and $H_p$ reduces to the cutting-face homography matrix; similarly, when point $p$ is on the ground, $d_g = 0$, and $H_p$ reduces to the ground homography matrix.
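The per-pixel weighting can be sketched as follows. The exact form of the source's equations is not reproduced here; the sketch assumes the weighting commonly used in dual-homography warping, with the cutting-face weight equal to the ground distance divided by the sum of both distances, and a linear combination of the two homographies. Function and parameter names are hypothetical.

```python
import numpy as np

def blended_homography(H_face, H_ground, d_face, d_ground):
    """Per-pixel homography H_p = w * H_face + (1 - w) * H_ground.

    d_face / d_ground: distance from the pixel to the nearest feature point
    on the cutting face / ground.
    Assumed weight (not confirmed by the source): w = d_ground / (d_face + d_ground).
    """
    total = d_face + d_ground
    if total == 0:
        raise ValueError("pixel cannot be at zero distance from both planes")
    w = d_ground / total
    return w * H_face + (1.0 - w) * H_ground

def warp_pixel(H, p):
    """Apply a homography to a pixel (u, v)."""
    q = H @ np.array([p[0], p[1], 1.0])
    return q[:2] / q[2]

# Hypothetical homographies: a 2-pixel horizontal shift and the identity.
H_face = np.array([[1.0, 0.0, 2.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
H_ground = np.eye(3)

H_on_face = blended_homography(H_face, H_ground, 0.0, 3.0)    # pixel on the face
H_on_ground = blended_homography(H_face, H_ground, 2.0, 0.0)  # pixel on the ground
H_between = blended_homography(H_face, H_ground, 1.0, 1.0)    # equidistant pixel
p_warped = warp_pixel(H_between, (10.0, 20.0))
```

With an explicit segmentation line, every pixel falls into one of the first two cases, so the blend effectively selects the homography of the plane the pixel belongs to.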

After the left camera image has been perspective-transformed using Equation (9), it is stitched and fused with the right camera image. At the stitching seam (the annotated stitching transition region in the figure), a smooth transition is achieved through linear weight blending [15].
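Linear weight blending across a seam can be illustrated with a simple column-wise ramp. This sketch assumes the two images are already aligned in the same frame and that the transition region is a vertical band between two column indices; the function name and the band limits are hypothetical.

```python
import numpy as np

def linear_blend(img_a, img_b, start, end):
    """Blend two aligned images across the columns from `start` to `end`.

    Left of `start` the result equals img_a, from `end` onward it equals
    img_b, and in between the weight of img_b rises linearly from 0 to 1.
    """
    a = np.asarray(img_a, dtype=float)
    b = np.asarray(img_b, dtype=float)
    cols = np.arange(a.shape[1])
    alpha = np.clip((cols - start) / float(end - start), 0.0, 1.0)
    # Reshape so the per-column weight broadcasts over rows (and channels).
    alpha = alpha.reshape((1, -1) + (1,) * (a.ndim - 2))
    return (1.0 - alpha) * a + alpha * b

# Hypothetical 2 x 6 grayscale images with constant intensities 0 and 10.
left_img = np.zeros((2, 6))
right_img = np.full((2, 6), 10.0)
blended = linear_blend(left_img, right_img, start=1, end=5)
```

The ramp removes the visible intensity step at the seam; a wider band gives a softer transition at the cost of more ghosting if the two images are slightly misaligned.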

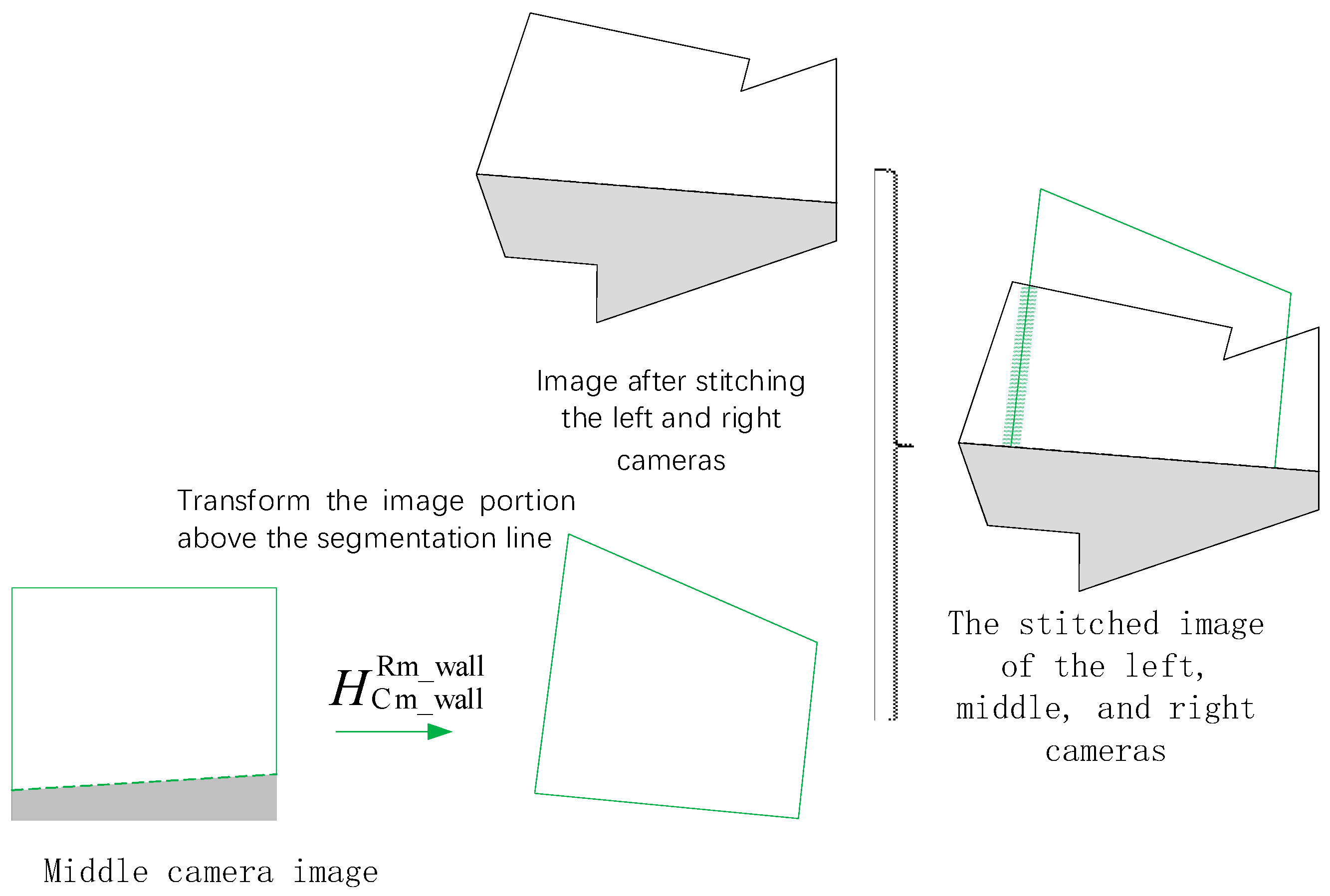

After the left and right camera images have been stitched, the stitched image is fused with the middle camera image. In practice, the middle camera is usually installed higher above the ground than the left and right cameras, so the cutting face occupies a larger proportion of its image. Therefore, only the cutting face portion (above the dynamic segmentation line) of the middle camera image is selected, perspective-transformed using the dynamic homography matrix $H_{mr}^{f}$ of the middle camera relative to the right camera, and then fused and stitched a second time with the previously stitched image of the left and right cameras, yielding a complete stitched image of the three cameras, as shown in Figure 4 below.