Vision-Language Model-Based Local Interpretable Model-Agnostic Explanations Analysis for Explainable In-Vehicle Controller Area Network Intrusion Detection

Abstract

1. Introduction

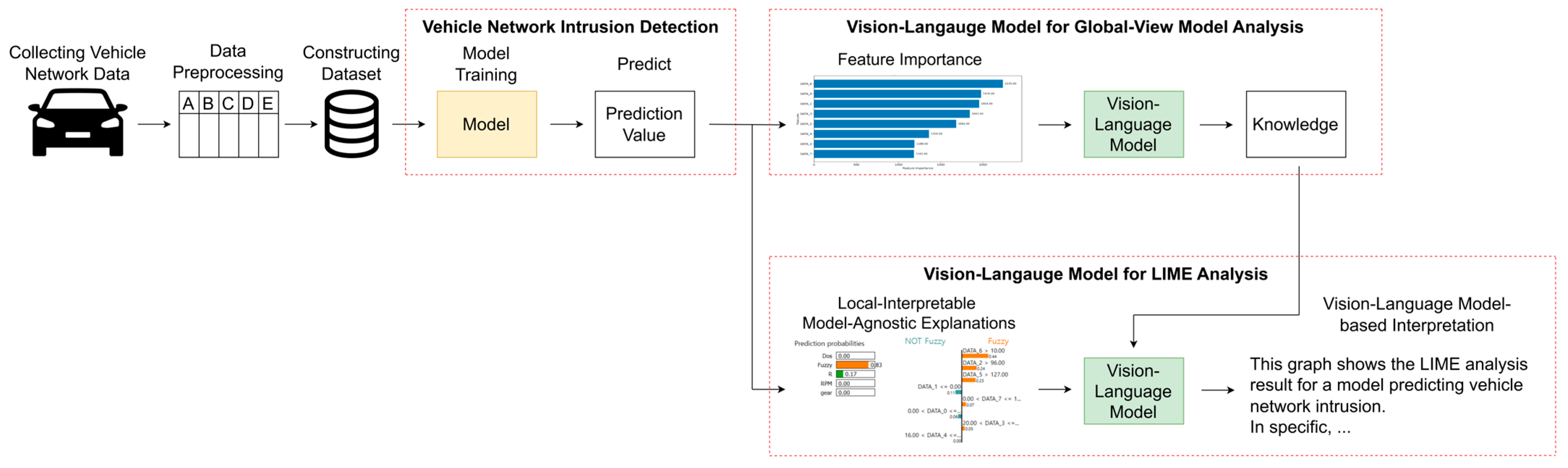

- We propose a novel explainable intrusion detection method for in-vehicle CAN that integrates LIME analysis with a VLM, enabling both global and local interpretability.

- We develop a structured prompt template for guiding a VLM in producing informative textual explanations, enabling more effective interpretation of intrusion detection outcomes.

- The proposed method is validated using a publicly available CAN intrusion detection dataset, and its effectiveness is demonstrated through prompt-based experiments evaluating interpretability and explanation quality.

2. Related Works

2.1. Intusion Detection for In-Vehicle CAN Based on Statistical Method

2.2. Intusion Detection for In-Vehicle CAN Based on Machine Learning Model

2.3. Application of Explainable Artificial Intelligence in Intrusion Detection for In-Vehicle CAN

2.4. Vision-Language Model

3. Proposed Method

3.1. In-Vehicle CAN Data Collection and Preprocessing

3.2. In-Vehicle CAN Intrusion Detection Using Machine Learning

3.3. Vision-Language Model-Based Global Feature Importance Analysis for In-Vehicle CAN Intrusion Detection Model

3.4. Vision-Language Model-Based LIME Analysis for Enhancing Explainability of In-Vehicle CAN Intrusion Detection Model

4. Experimental Design

4.1. Dataset

4.2. Experimental Settings

4.3. Evaluation Metrics

| . | (1) |

| . | (2) |

| . | (3) |

| . | (4) |

5. Experimental Results

5.1. Intrusion Detection Performance

5.2. Vision-Language Model-Based Global Feature Importance Analysis for In-Vehicle CAN Intrusion Detection Model

5.3. Vision-Lanaguage Model-Based LIME Analysis for In-Vehicle CAN Intrusion Detection Model

5.4. Ablation Study

5.5. Qualitative Evaluation

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bari, B.S.; Yelamarthi, K.; Ghafoor, S. Intrusion Detection in Vehicle Controller Area Network (CAN) Bus Using Machine Learning: A Comparative Performance Study. Sensors 2023, 23, 3610. [Google Scholar] [CrossRef] [PubMed]

- Lee, Y.; Kim, S.; Choi, S. Anomaly Detection in Vehicles Using Predictive Models Based on CAN Data. J. Korea Inf. Technol. Soc. 2024, 22, 257–265. [Google Scholar] [CrossRef]

- Hanxing, C.; Jun, T. Research on the Controller Area Network. Int. Conf. Netw. Digit. Soc. 2009, 2, 251–254. [Google Scholar] [CrossRef]

- Hossain, M.D.; Inoue, H.; Ochiai, H.; Fall, D.; Kadobayashi, Y. An Effective In-Vehicle CAN Bus Intrusion Detection System Using CNN Deep Learning Approach. In Proceedings of the IEEE Global Communications Conference, GLOBECOM 2020, Taipei, Taiwan, 7–11 December 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Khan, J.; Lim, D.; Kim, Y. Intrusion Detection System CAN-Bus In-Vehicle Networks Based on the Statistical Characteristics of Attacks. Sensors 2023, 23, 3554. [Google Scholar] [CrossRef]

- Rajapaksha, S.; Kalutarage, H.; Al-Kadri, M.O.; Petrovski, A.; Madzudzo, G.; Cheah, M. AI-Based Intrusion Detection Systems for In-Vehicle Networks: A Survey. ACM Comput. Surv. 2023, 55, 1–40. [Google Scholar] [CrossRef]

- Hoppe, T.C.; Hoppe, T.; Kiltz, S.; Dittmann, J. Applying Intrusion Detection to Automotive IT-Early Insights and Remaining Challenges. J. Inf. Assur. Secur. (JIAS) 2009, 4, 226–235. [Google Scholar]

- Miller, C.; Valasek, C. Remote Exploitation of an Unaltered Passenger Vehicle. Black Hat USA 2015, S91, 1–91. [Google Scholar]

- Taylor, A.; Leblanc, S.; Japkowicz, N. Anomaly Detection in Automobile Control Network Data with Long Short-Term Memory Networks. In Proceedings of the 3rd IEEE International Conference on Data Science and Advanced Analytics, DSAA 2016, Montreal, QC, Canada, 17–19 October 2016; pp. 130–139. [Google Scholar] [CrossRef]

- Wang, Y.; Liu, Z.; Zheng, W.; Wang, J.; Shi, H.; Gu, M. A Combined Multi-Classification Network Intrusion Detection System Based on Feature Selection and Neural Network Improvement. Appl. Sci. 2023, 13, 8307. [Google Scholar] [CrossRef]

- Karim, M.R.; Shajalal, M.; Gras, A.; Dohmen, T.; Chala, S.A.; Boden, A.; Beecks, C.; Decker, S. Interpreting Black-Box Machine Learning Models for High Dimensional Datasets. In Proceedings of the 2023 IEEE 10th International Conference on Data Science and Advanced Analytics, Thessaloniki, Greece, 9–13 October 2023; pp. 1–10. [Google Scholar] [CrossRef]

- Hassija, V.; Chamola, V.; Mahapatra, A.; Singal, A.; Goel, D.; Huang, K.; Scardapane, S.; Spinelli, I.; Mahmud, M.; Hussain, A. Interpreting Black-Box Models: A Review on Explainable Artificial Intelligence. Cogn. Comput. 2024, 16, 45–74. [Google Scholar] [CrossRef]

- Mersha, M.; Lam, K.; Wood, J.; AlShami, A.K.; Kalita, J. Explainable Artificial Intelligence: A Survey of Needs, Techniques, Applications, and Future Direction. Neurocomputing 2024, 599, 128111. [Google Scholar] [CrossRef]

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why Should I Trust You?” Explaining the Predictions of Any Classifier. In Proceedings of the NAACL-HLT 2016—2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Proceedings of the Demonstrations Session, San Diego, CA, USA, 12–17 June 2016; pp. 97–101. [Google Scholar] [CrossRef]

- Lundberg, S.M.; Lee, S.I. A Unified Approach to Interpreting Model Predictions. In Proceedings of the Thirty-First Annual Conference on Neural Information Processing Systems, San Diego, CA, USA, 4–9 December 2017; pp. 4766–4775. [Google Scholar]

- Muschalik, M.; Fumagalli, F.; Jagtani, R.; Hammer, B.; Hüllermeier, E. IPDP: On Partial Dependence Plots in Dynamic Modeling Scenarios. Commun. Comput. Inf. Sci. 2023, 1901, 177–194. [Google Scholar] [CrossRef]

- O’brien Quinn, H.; Sedky, M.; Francis, J.; Streeton, M. Literature Review of Explainable Tabular Data Analysis. Electronics 2024, 13, 3806. [Google Scholar] [CrossRef]

- Poli, J.P.; Ouerdane, W.; Pierrard, R. Generation of Textual Explanations in XAI: The Case of Semantic Annotation. In Proceedings of the IEEE International Conference on Fuzzy Systems 2021, Luxembourg, 11–14 July 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Lundberg, S.M.; Erion, G.; Chen, H.; DeGrave, A.; Prutkin, J.M.; Nair, B.; Katz, R.; Himmelfarb, J.; Bansal, N.; Lee, S.I. From Local Explanations to Global Understanding with Explainable AI for Trees. Nat. Mach. Intell. 2020, 2, 56–67. [Google Scholar] [CrossRef] [PubMed]

- Ghosh, A.; Acharya, A.; Saha, S.; Jain, V.; Chadha, A. Exploring the Frontier of Vision-Language Models: A Survey of Current Methodologies and Future Directions. arXiv 2024, arXiv:2404.07214. [Google Scholar] [CrossRef]

- Zhang, J.; Huang, J.; Jin, S.; Lu, S. Vision-Language Models for Vision Tasks: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5625–5644. [Google Scholar] [CrossRef]

- Li, T.; Wang, S.; Shang, M.; Stern, R. Can Cyberattacks on Adaptive Cruise Control Vehicles Be Effectively Detected? In Proceedings of the 2024 IEEE Intelligent Vehicles Symposium (IV), Jeju Island, Republic of Korea, 2–5 June 2024; pp. 323–328. [Google Scholar] [CrossRef]

- Li, T.; Shang, M.; Wang, S.; Stern, R. Detecting Subtle Cyberattacks on Adaptive Cruise Control Vehicles: A Machine Learning Approach. IEEE Open J. Intell. Transp. Syst. 2024, 6, 11–23. [Google Scholar] [CrossRef]

- Machado, M.R.; Karray, S.; De Sousa, I.T. LightGBM: An Effective Decision Tree Gradient Boosting Method to Predict Customer Loyalty in the Finance Industry. In Proceedings of the 14th International Conference on Computer Science and Education, ICCSE 2019, Toronto, ON, Canada, 19–21 August 2019; pp. 1111–1116. [Google Scholar] [CrossRef]

- Müter, M.; Asaj, N. Entropy-Based Anomaly Detection for in-Vehicle Networks. In Proceedings of the 2011 IEEE Intelligent Vehicles Symposium, Baden-Baden, Germany, 5–9 June 2011; pp. 1110–1115. [Google Scholar] [CrossRef]

- Zhang, C.; Wang, W.; Liu, L.; Ren, J.; Wang, L. Three-Branch Random Forest Intrusion Detection Model. Mathematics 2022, 10, 4460. [Google Scholar] [CrossRef]

- Lundberg, H.; Mowla, N.I.; Abedin, S.F.; Thar, K.; Mahmood, A.; Gidlund, M.; Raza, S. Experimental Analysis of Trustworthy In-Vehicle Intrusion Detection System Using EXplainable Artificial Intelligence (XAI). IEEE Access 2022, 10, 102831–102841. [Google Scholar] [CrossRef]

- Ahmed, U.; Jiangbin, Z.; Almogren, A.; Sadiq, M.; Rehman, A.U.; Sadiq, M.T.; Choi, J. Hybrid Bagging and Boosting with SHAP Based Feature Selection for Enhanced Predictive Modeling in Intrusion Detection Systems. Sci. Rep. 2024, 14, 30532. [Google Scholar] [CrossRef]

- Hassan, F.; Yu, J.; Syed, Z.S.; Ahmed, N.; Reshan, M.S.A.; Shaikh, A. Achieving Model Explainability for Intrusion Detection in VANETs with LIME. PeerJ Comput. Sci. 2023, 9, e1440. [Google Scholar] [CrossRef]

- Li, Y.; Qian, Y.; Yu, Y.; Qin, X.; Zhang, C.; Liu, Y.; Yao, K.; Han, J.; Liu, J.; DIng, E. StrucTexT: Structured Text Understanding with Multi-Modal Transformers. In Proceedings of the MM ’21: ACM Multimedia Conference, Virtual Event, China, 20–24 October 2021; Association for Computing Machinery: New York, NY, USA, 2021; Volume 1, ISBN 9781450386517. [Google Scholar]

- Luo, C.; Tang, G.; Zheng, Q.; Yao, C.; Jin, L.; Li, C.; Xue, Y.; Si, L. Bi-VLDoc: Bidirectional Vision-Language Modeling for Visually-Rich Document Understanding. Int. J. Doc. Anal. Recognit. 2022, 14, 1–10. [Google Scholar] [CrossRef]

- Li, X.; Wu, Y.; Jiang, X.; Guo, Z.; Gong, M.; Cao, H.; Liu, Y.; Jiang, D.; Sun, X. Enhancing Visual Document Understanding with Contrastive Learning in Large Visual-Language Models. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 15546–15555. [Google Scholar] [CrossRef]

- Song, H.M.; Woo, J.; Kim, H.K. In-Vehicle Network Intrusion Detection Using Deep Convolutional Neural Network. Veh. Commun. 2020, 21, 100198. [Google Scholar] [CrossRef]

- Korium, M.S.; Saber, M.; Beattie, A.; Narayanan, A.; Sahoo, S.; Nardelli, P.H.J. Intrusion Detection System for Cyberattacks in the Internet of Vehicles Environment. Ad Hoc Netw. 2024, 153, 103330. [Google Scholar] [CrossRef]

- Guerra, L.; Xu, L.; Bellavista, P.; Chapuis, T.; Duc, G.; Mozharovskyi, P.; Nguyen, V.T. AI-Driven Intrusion Detection Systems (IDS) on the ROAD Dataset: A Comparative Analysis for Automotive Controller Area Network (CAN). In Proceedings of the CCS ’24: ACM SIGSAC Conference on Computer and Communications Security, Salt Lake City, UT, USA, 14–18 October 2024; pp. 39–49. [Google Scholar] [CrossRef]

- He, Z.; Lin, D.; Lau, T.; Wu, M. Gradient Boosting Machine: A Survey. arXiv 2019, arXiv:1908.06951. [Google Scholar] [CrossRef]

- Saarela, M.; Jauhiainen, S. Comparison of Feature Importance Measures as Explanations for Classification Models. SN Appl. Sci. 2021, 3, 272. [Google Scholar] [CrossRef]

- Rengasamy, D.; Mase, J.M.; Kumar, A.; Rothwell, B.; Torres, M.T.; Alexander, M.R.; Winkler, D.A.; Figueredo, G.P. Feature Importance in Machine Learning Models: A Fuzzy Information Fusion Approach. Neurocomputing 2022, 511, 163–174. [Google Scholar] [CrossRef]

- Lee, Y.; Baruzzo, G.; Kim, J.; Seo, J.; Di Camillo, B. Validity of Feature Importance in Low-Performing Machine Learning for Tabular Biomedical Data. arXiv 2024, arXiv:2409.13342. [Google Scholar] [CrossRef]

- Gu, J.; Han, Z.; Chen, S.; Beirami, A.; He, B.; Zhang, G.; Liao, R.; Qin, Y.; Tresp, V.; Torr, P. A Systematic Survey of Prompt Engineering on Vision-Language Foundation Models. arXiv 2023, arXiv:2307.12980. [Google Scholar] [CrossRef]

- Sahoo, P.; Singh, A.K.; Saha, S.; Jain, V.; Mondal, S.; Chadha, A. A Systematic Survey of Prompt Engineering in Large Language Models: Techniques and Applications. arXiv 2024, arXiv:2402.07927. [Google Scholar] [CrossRef]

- Alfeo, A.L.; Zippo, A.G.; Catrambone, V.; Cimino, M.G.C.A.; Toschi, N.; Valenza, G. From Local Counterfactuals to Global Feature Importance: Efficient, Robust, and Model-Agnostic Explanations for Brain Connectivity Networks. Comput. Methods Programs Biomed. 2023, 236, 107550. [Google Scholar] [CrossRef]

- Mohammed, R.; Rawashdeh, J.; Abdullah, M. Machine Learning with Oversampling and Undersampling Techniques: Overview Study and Experimental Results. In Proceedings of the 2020 11th International Conference on Information and Communication Systems (ICICS), Irbid, Jordan, 7–9 April 2020; pp. 243–248. [Google Scholar] [CrossRef]

- Luo, J.; Yuan, Y.; Xu, S. Improving GBDT Performance on Imbalanced Datasets: An Empirical Study of Class-Balanced Loss Functions. Neurocomputing 2025, 634, 129896. [Google Scholar] [CrossRef]

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O. SMOTE: Synthetic Minority Over-Sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357. [Google Scholar] [CrossRef]

- Rao, S.; Kondaiah, K.; Chandra, G.; Kumar, K. A Survey on Machine Learning: Concept, Algorithms and Applications. Int. J. Innov. Res. Comput. Commun. Eng. 2017, 5, 8198–8205. [Google Scholar]

- Peng, C.Y.J.; Lee, K.L.; Ingersoll, G.M. An Introduction to Logistic Regression Analysis and Reporting. J. Educ. Res. 2002, 96, 3–14. [Google Scholar] [CrossRef]

- Izza, Y.; Ignatiev, A.; Marques-Silva, J. On Explaining Decision Trees. arXiv 2020, arXiv:2010.11034. [Google Scholar] [CrossRef]

- Louppe, G. Understanding Random Forests: From Theory to Practice. arXiv 2014, arXiv:1407.7502. [Google Scholar] [CrossRef]

- Marius-Constantin, P.; Balas, V.E.; Perescu-Popescu, L.; Mastorakis, N. Multilayer Perceptron and Neural Networks. WSEAS Trans. Circuits Syst. 2009, 8, 579–588. [Google Scholar] [CrossRef]

- Tharwat, A.; Gaber, T.; Ibrahim, A.; Hassanien, A.E. Linear Discriminant Analysis: A Detailed Tutorial. AI Commun. 2017, 30, 169–190. [Google Scholar] [CrossRef]

- Beja-Battais, P. Overview of AdaBoost: Reconciling Its Views to Better Understand Its Dynamics. arXiv 2023, arXiv:2310.18323. [Google Scholar] [CrossRef]

- Geurts, P.; Ernst, D.; Wehenkel, L. Extremely Randomized Trees. Mach. Learn. 2006, 63, 3–42. [Google Scholar] [CrossRef]

- Cunningham, P.; Delany, S.J. K-Nearest Neighbour Classifiers: 2nd Edition (with Python Examples). arXiv 2020, arXiv:2004.04523. [Google Scholar] [CrossRef]

- Peretz, O.; Koren, M.; Koren, O. Naive Bayes Classifier—An Ensemble Procedure for Recall and Precision Enrichment. Eng. Appl. Artif. Intell. 2024, 136, 108972. [Google Scholar] [CrossRef]

- Prokhorenkova, L.; Gusev, G.; Vorobev, A.; Dorogush, A.V.; Gulin, A. Catboost: Unbiased Boosting with Categorical Features. In Proceedings of the Advances in Neural Information Processing Systems 2018, Montreal, QC, Canada, 3–8 December 2018; pp. 6638–6648. [Google Scholar]

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 2016, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794. [Google Scholar] [CrossRef]

- OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774. [Google Scholar] [CrossRef]

- Kalyan, K.S. A Survey of GPT-3 Family Large Language Models Including ChatGPT and GPT-4. Nat. Lang. Process. J. 2024, 6, 100048. [Google Scholar] [CrossRef]

- Vujović, Ž. Classification Model Evaluation Metrics. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 599–606. [Google Scholar] [CrossRef]

- Joshi, A.; Kale, S.; Chandel, S.; Pal, D.K. Likert Scale: Explored and Explained. Curr. J. Appl. Sci. Technol. 2015, 7, 396–403. [Google Scholar] [CrossRef]

- Fang, Z.; Wang, J.; Hu, X.; Wang, L.; Yang, Y.; Liu, Z. Compressing Visual-Linguistic Model via Knowledge Distillation. In Proceedings of the Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 10–17 October 2021; pp. 1408–1418. [Google Scholar] [CrossRef]

- Zhu, D.; Chen, J.; Shen, X.; Li, X.; Elhoseiny, M. Minigpt-4: Enhancing Vision-Language Understanding With Advanced Large Language Models. In Proceedings of the 12th International Conference on Learning Representations, ICLR 2024, Vienna, Austria, 7 May 2024; pp. 1–15. [Google Scholar]

- Hu, Y.; Chu, X.; Qiao, L.; Lin, X.; Xu, S.; Yang, Y. MobileVLM: A Fast, Strong and Open Vision Language Assistant for Mobile Devices. arXiv 2023, arXiv:2312.16886. [Google Scholar] [CrossRef]

| Type | Template |

|---|---|

| Role description | You are a domain expert in machine learning and XAI. Your objective is to analyze the global feature importance of a trained machine learning model and produce precise and insightful textual explanations suitable for the report. |

| Task specification | <Task> - The image shows the global feature importance graph of {Prediction model} model used for intrusion detection in a vehicle CAN. - This is a multi-class classification task that classifies CAN messages into five categories: Normal, DoS, Fuzzy, Gear, and RPM.- Input features, DATA_0 to DATA_7, represent byte-level values from CAN messages. - In the graph, the Y-axis represents the input variables (DATA_0 to DATA_7), and the X-axis represents their corresponding importance scores. - The numerical values displayed at the end of each bar indicate the feature importance score calculated by the model, reflecting each feature’s contribution to the overall prediction performance. </Task> |

| Interpretation guideline | <Interpretation guideline> - Please provide a structured explanation that includes: 1. A summary of the top three most important features, including their relative importance scores. 2. A discussion on the contribution of these features to detecting specific intrusion types. 3. An interpretation of lower-ranked features and potential reasons for their limited impact. 4. A logical analysis of how the model prioritizes input variables in the context of CAN message classification. - Since the provided graph represents global feature importance across all classes, do not associate specific input features with particular intrusion types. - Focus on the overall contribution of each feature to the model’s general classification performance. - The explanation should be clear, analytical, and suitable for inclusion in a research paper. - Use technical language appropriate for a machine learning and cybersecurity audience. </Interpretation guideline> |

| Type | Template |

|---|---|

| Role description | You are a domain expert in machine learning and explainable artificial intelligence. Your objective is to analyze the local interpretable LIME visualization for a specific data instance classified by a vehicle CAN intrusion detection model, and to produce a precise and insightful textual explanation suitable for inclusion in a report. |

| Task Specification | <Task> - The image shows the LIME explanation for a specific instance classified by {Prediction model} model trained to detect intrusions in a vehicle CAN. - This is a multi-class classification task that classifies CAN messages into five categories: Normal, DoS, Fuzzy, Gear, and RPM. - Input features, DATA_0 to DATA_7, represent byte-level values from CAN messages. -The LIME visualization consists of:

{LIME feature conditions and contribution values} </Task> |

| Interpretation guideline | <Interpretation guideline> - Please provide a structured explanation that includes: 1. A summary of the most influential features in the local explanation, including both positively and negatively contributing features, and their corresponding contribution values. 2. A discussion on how the model locally prioritizes features to predict the target class for this instance. 3. An interpretation of features with minimal or near-zero contribution and possible reasons for their limited relevance. 4. A logical analysis of the local decision-making process as revealed by LIME in the context of byte-level classification of CAN messages. 5. A reflection on whether the local feature importance observed in the LIME explanation aligns with or diverges from the global feature importance patterns. An analysis of what this reveals about the model’s instance-specific decision behavior. - Focus on the local behavior of the model for this instance only. Do not generalize feature relevance across the entire dataset. - The explanation should be clear, analytical, and suitable for inclusion in a research paper. - Use technical language appropriate for a machine learning and cybersecurity audience. </Interpretation guideline> |

| Contextual reference | <Contextual reference> - Use the following result of global feature importance analysis to complement your interpretation: {Result of global feature importance analysis of vehicle CAN intrusion detection model} - In your explanation, you may reflect on whether the local importance shown in the LIME result aligns with or diverges from the global importance patterns, and what this reveals about the model’s behavior on this specific instance. </Contextual reference> |

| Input Variable | Variable Type | Description |

|---|---|---|

| DATA_0 | Continuous | Byte-level payload values (0–255) of CAN message |

| DATA_1 | ||

| DATA_2 | ||

| DATA_3 | ||

| DATA_4 | ||

| DATA_5 | ||

| DATA_6 | ||

| DATA_7 |

| Output Variable | Variable Type | No. of Class | Description | Class | Samples |

|---|---|---|---|---|---|

| Vehicle intrusion | Categorical | 5 | Type of the vehicle intrusion | Normal | 701,832 |

| DoS | 29,501 | ||||

| Fuzzy | 24,624 | ||||

| Gear | 29,944 | ||||

| RPM | 32,539 |

| Model | Precision | Recall | F1 Score | Accuracy |

|---|---|---|---|---|

| Logistic Regression | 0.7893 | 0.7548 | 0.7692 | 0.9571 |

| Decision Tree | 0.9014 | 0.9913 | 0.9302 | 0.9652 |

| Random Forest | 0.9015 | 0.9919 | 0.9305 | 0.9652 |

| MLP Classifier | 0.9013 | 0.9914 | 0.9302 | 0.9652 |

| Linear Discriminant Analysis | 0.7106 | 0.7333 | 0.7117 | 0.9370 |

| AdaBoost | 0.3993 | 0.5404 | 0.3961 | 0.6702 |

| ExtraTree | 0.9015 | 0.9919 | 0.9306 | 0.9653 |

| K-Nearest Neighbor | 0.7919 | 0.7990 | 0.7953 | 0.9640 |

| Naïve Bayes | 0.8992 | 0.9421 | 0.9011 | 0.9575 |

| Gradient Boosting | 0.9015 | 0.9912 | 0.9302 | 0.9652 |

| CatBoost | 0.9015 | 0.9918 | 0.9305 | 0.9653 |

| XGBoost | 0.9015 | 0.9919 | 0.9305 | 0.9653 |

| LightGBM | 0.9015 | 0.9919 | 0.9306 | 0.9653 |

| Result |

| Global Feature Importance Analysis of LightGBM for CAN Intrusion Detection 1. Summary of Top Three Most Important Features The global feature importance plot of the LightGBM model, trained to classify CAN messages into five categories (Normal, DoS, Fuzzy, Gear, and RPM), reveals the following top three features based on their contribution to the overall model performance:

2. Contribution of Top Features to Intrusion Detection While this analysis considers global feature importance and does not attribute features to specific attack types, the high importance of DATA_6, DATA_0, and DATA_1 implies that these fields capture generalizable patterns that differentiate both benign and malicious behaviors. These bytes may encode information sensitive to protocol misuse or anomalous behaviors common across various attack categories such as DoS or fuzzing. Their dominance suggests that the model detects deviations or patterns in these byte positions that are statistically distinct from normal traffic across multiple classes. 3. Interpretation of Lower-Ranked Features The remaining features—DATA_3 (1843), DATA_5 (1682), DATA_4 (1359), DATA_2 (1188), and DATA_7 (1182)—though still contributing to model performance, exhibit decreasing importance. This suggests several possible interpretations:

The LightGBM model’s feature prioritization reflects an information-theoretic optimization where byte positions that consistently help reduce entropy (i.e., uncertainty in class prediction) are ranked higher. In the context of CAN message classification, certain byte positions (e.g., DATA_6, DATA_0) likely align with payload segments that encapsulate control-critical or attacker-manipulable fields. These positions may correlate with identifiers or command values that are disproportionately affected during attacks, making them statistically salient. Conversely, lower-ranked features may correspond to non-critical bytes or fields that remain invariant across traffic conditions, thus offering limited utility for classification. The distribution of importance suggests that the model effectively distinguishes signal-carrying bytes from noise, optimizing the feature space to prioritize high-signal content in the multi-class setting. Conclusion The global feature importance analysis confirms that the LightGBM model effectively identifies and exploits specific byte-level fields (particularly DATA_6, DATA_0, and DATA_1) that contribute most significantly to intrusion detection across all CAN message classes. The declining importance of other features reflects the model’s ability to focus on the most predictive information, supporting efficient and interpretable decision-making in a complex multi-class classification task. This prioritization is critical for deploying lightweight and explainable intrusion detection systems in automotive cyber-physical environments. |

| Result |

| Local Explanation of LIME for CAN Intrusion Detection—Class “Fuzzy” 1. Summary of the Most Influential Features The LIME explanation reveals that the instance was classified as “Fuzzy” with the highest predicted probability of 0.83. The following features had the most significant contributions:

2. Local Feature Prioritization Logic The model prioritized features that exhibited high predictive relevance under the given input conditions. Specifically:

3. Interpretation of Low-Contribution Features

4. Byte-Level Local Decision Logic The model’s decision reflects how specific byte-level thresholds are used to distinguish among attack classes. In this case:

5. Alignment with Global Feature Importance The local importance mostly aligns with global importance rankings:

Conclusion The LIME-based analysis of this CAN message classified as “Fuzzy” demonstrates how a small subset of byte-level features, particularly DATA_6, DATA_2, and DATA_5, drove the local prediction. While the result is partially consistent with global importance rankings, discrepancies like the limited local impact of DATA_0 emphasize the need for instance-level interpretability. This kind of hybrid analysis is essential for developing trustworthy, explainable, and safety-critical intrusion detection models in vehicular environments. |

| Result |

| Local Explanation of LIME for CAN Intrusion Detection—Class “Fuzzy” 1. Summary of the Most Influential Features In this case, the instance was classified as Fuzzy with the highest prediction probability of 0.83, indicating high model confidence. The LIME explanation reveals the local decision process by attributing contributions of feature conditions to the predicted class (Fuzzy). The most influential features, ranked by absolute contribution magnitude, are as follows:

The local model prioritization clearly emphasizes high values of DATA_6, DATA_2, and DATA_5 as indicative of Fuzzy intrusions. These byte-level features may capture payload anomalies or entropy patterns associated with injected random/fuzzy CAN frames. Notably, the model’s reliance on DATA_6 > 10.00 as the dominant rule (contribution: +0.4373) suggests that this byte is particularly sensitive to Fuzzy behavior in the training data distribution. The use of threshold-based binning (e.g., DATA_2 > 96.00, DATA_5 > 127.00) reflects the model’s learned boundaries that separate Fuzzy instances from other types. This is consistent with decision tree behavior within LightGBM, where split thresholds are optimized during training to maximize information gain. 3. Interpretation of Low-Contribution Features

4. Byte-Level Local Decision Logic This instance’s classification as Fuzzy results from the model identifying a distinctive combination of high byte values in critical features (DATA_6, DATA_2, DATA_5) that align with fuzzing attack signatures. These patterns may represent payload anomalies generated by random data injection common in Fuzzy attacks. The model effectively discounts certain conditions (e.g., DATA_1 <= 0.00, DATA_0 low values) that may be more associated with normal or Gear messages. The presence of both positive and negative contributions illustrates the model’s nuanced decision boundary, balancing evidence for and against the Fuzzy class within the local context. Conclusion Overall, this LIME explanation offers interpretability into the LightGBM classifier’s internal logic, highlighting how specific byte-level thresholds guide localized decisions. This is especially valuable in cybersecurity settings where transparency and trust in intrusion detection systems are critical. |

| Metric | Very Dissatisfied | Dissatisfied | Neutral | Satisfied | Very Satisfied |

|---|---|---|---|---|---|

| Usability | 0.0% | 0.0% | 20.0% | 60.0% | 20.0% |

| Relevance | 0.0% | 10.0% | 20.0% | 60.0% | 10.0% |

| Fluency | 0.0% | 0.0% | 30.0% | 60.0% | 10.0% |

| Accuracy | 0.0% | 10.0% | 30.0% | 50.0% | 10.0% |

| Overall satisfaction | 0.0% | 0.0% | 20.0% | 60.0% | 20.0% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, J.; Rew, J. Vision-Language Model-Based Local Interpretable Model-Agnostic Explanations Analysis for Explainable In-Vehicle Controller Area Network Intrusion Detection. Sensors 2025, 25, 3020. https://doi.org/10.3390/s25103020

Lee J, Rew J. Vision-Language Model-Based Local Interpretable Model-Agnostic Explanations Analysis for Explainable In-Vehicle Controller Area Network Intrusion Detection. Sensors. 2025; 25(10):3020. https://doi.org/10.3390/s25103020

Chicago/Turabian StyleLee, Jaeseung, and Jehyeok Rew. 2025. "Vision-Language Model-Based Local Interpretable Model-Agnostic Explanations Analysis for Explainable In-Vehicle Controller Area Network Intrusion Detection" Sensors 25, no. 10: 3020. https://doi.org/10.3390/s25103020

APA StyleLee, J., & Rew, J. (2025). Vision-Language Model-Based Local Interpretable Model-Agnostic Explanations Analysis for Explainable In-Vehicle Controller Area Network Intrusion Detection. Sensors, 25(10), 3020. https://doi.org/10.3390/s25103020