Revolutionizing RIS Networks: LiDAR-Based Data-Driven Approach to Enhance RIS Beamforming

Abstract

1. Introduction

2. Related Work

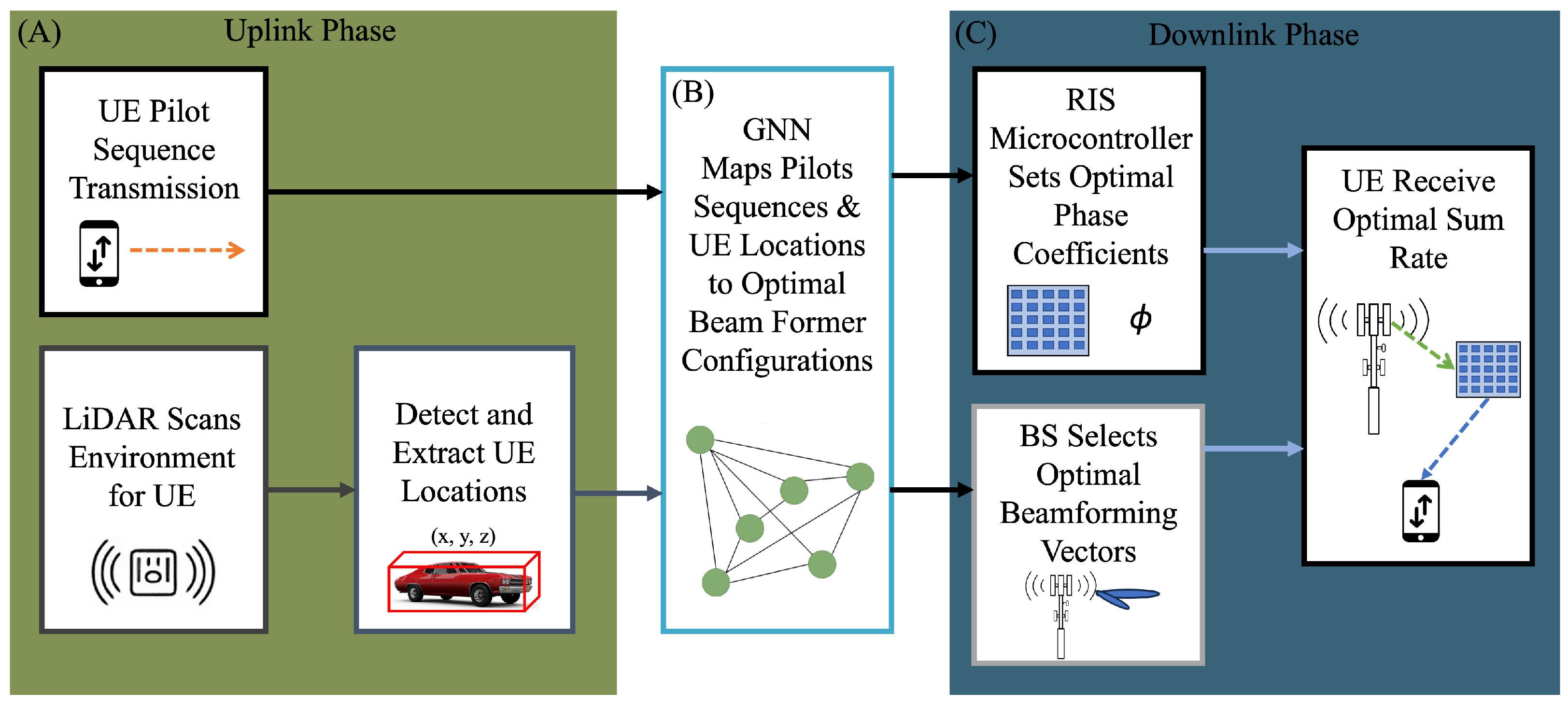

3. Materials and Methods

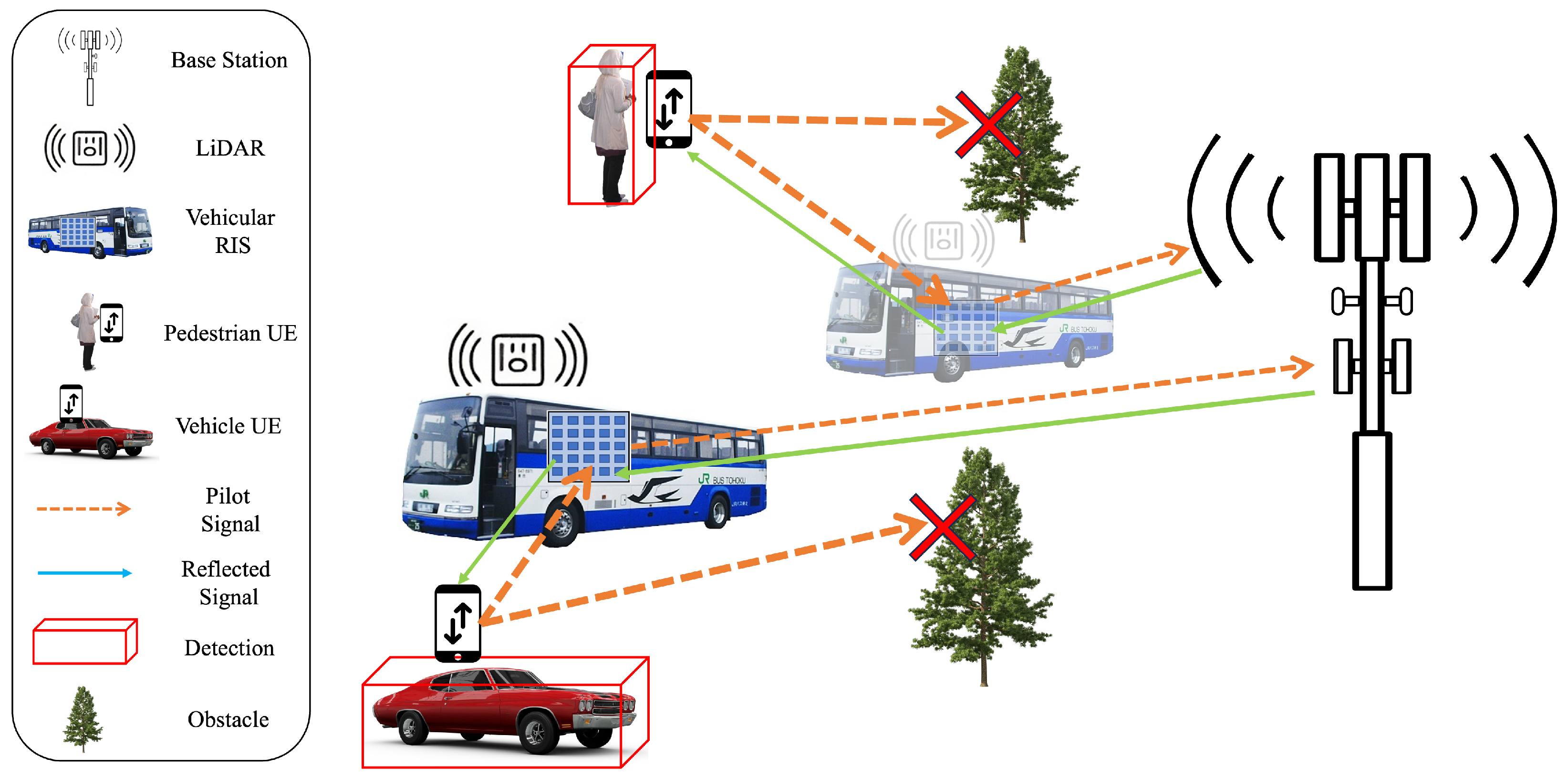

3.1. Scenario

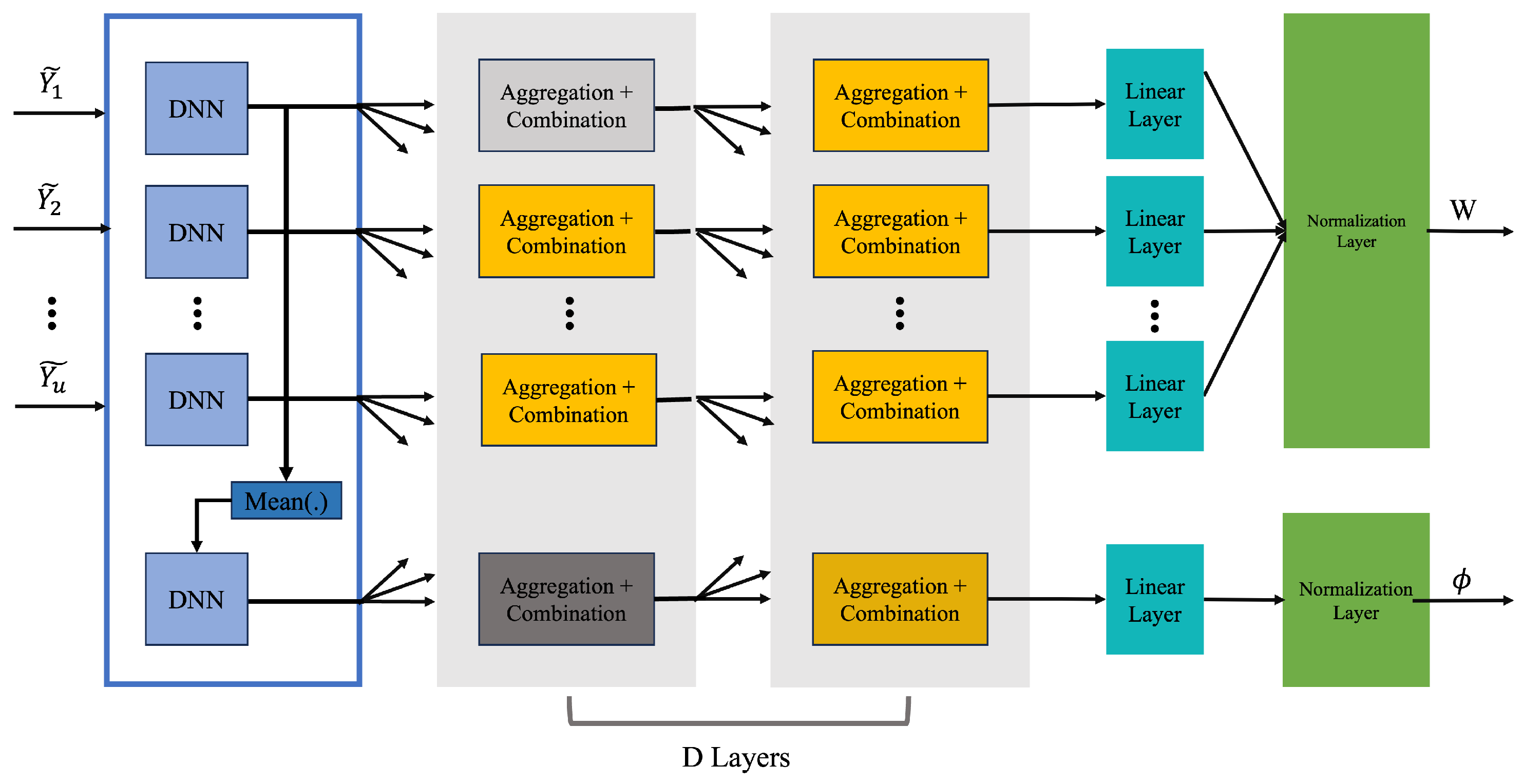

3.2. Beamforming and RIS Phase Coefficient Scheme

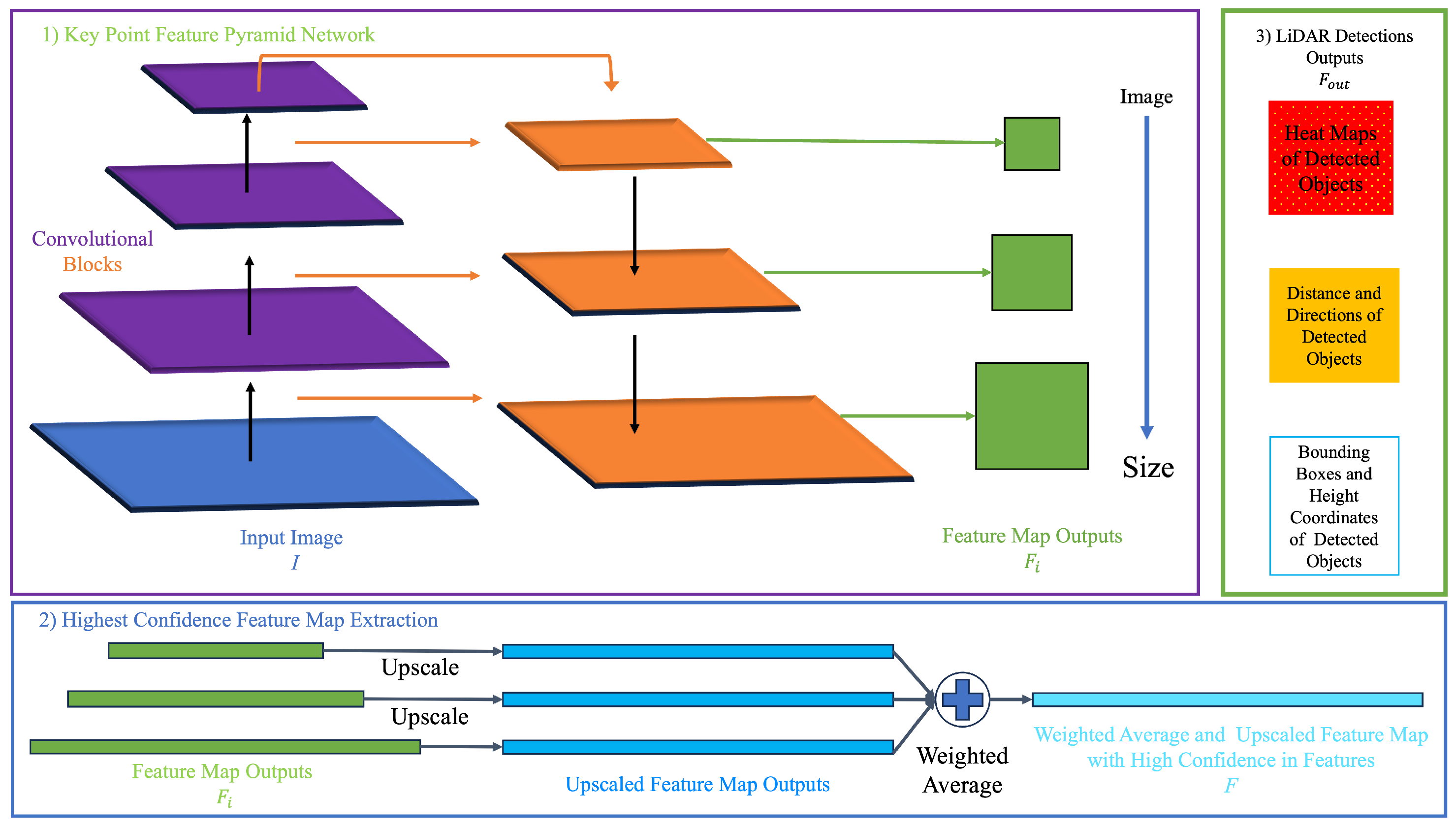

3.3. Super Fast and Accurate 3D Object Detection Based on 3D LiDAR Point Clouds (SFA3D)

4. Results

4.1. Experiment 1: Varying Downlink Transmission Power

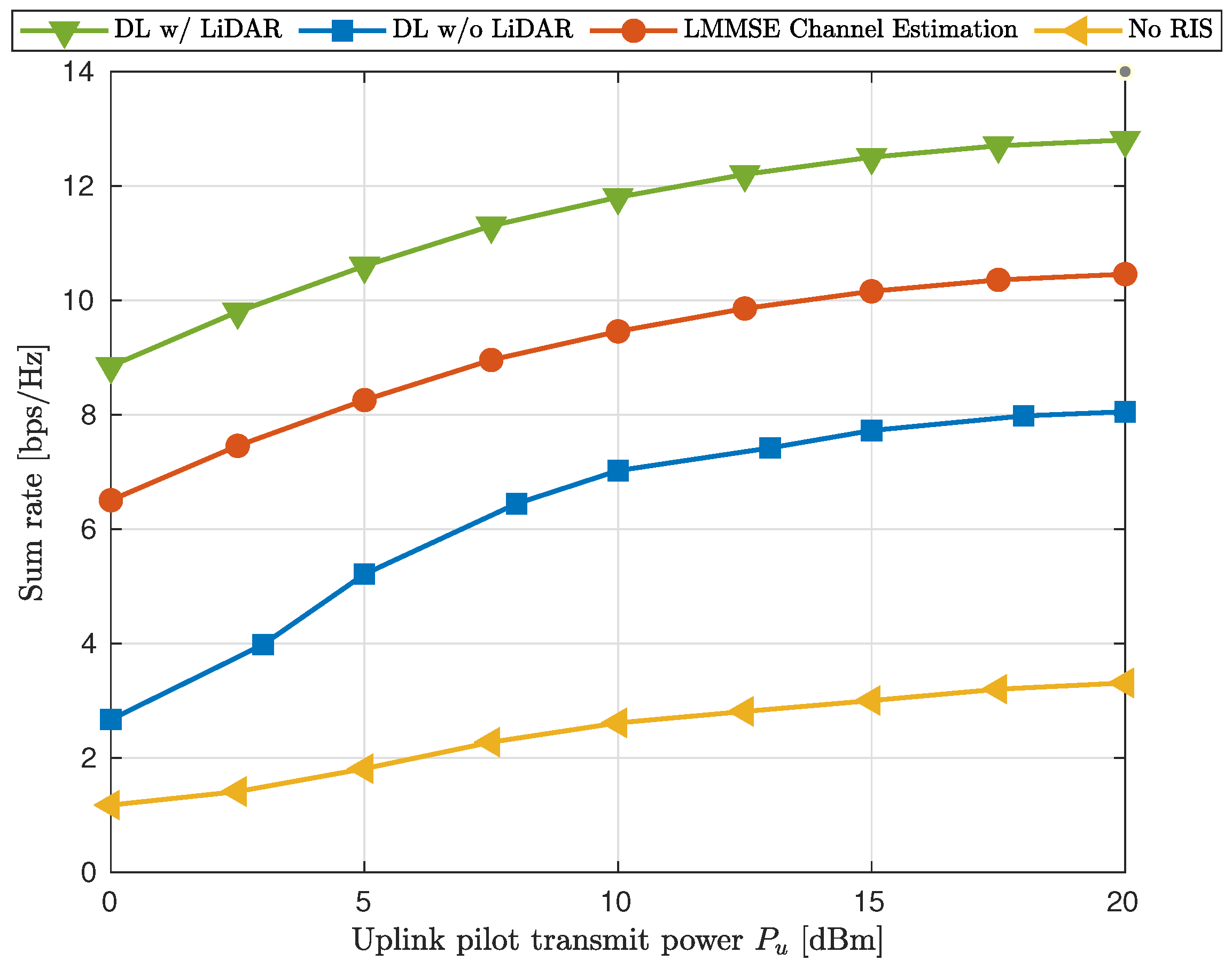

4.2. Experiment 2: Varying Uplink Transmission Power

4.3. Experiment 3: Varying User Pilot Sequence Lengths

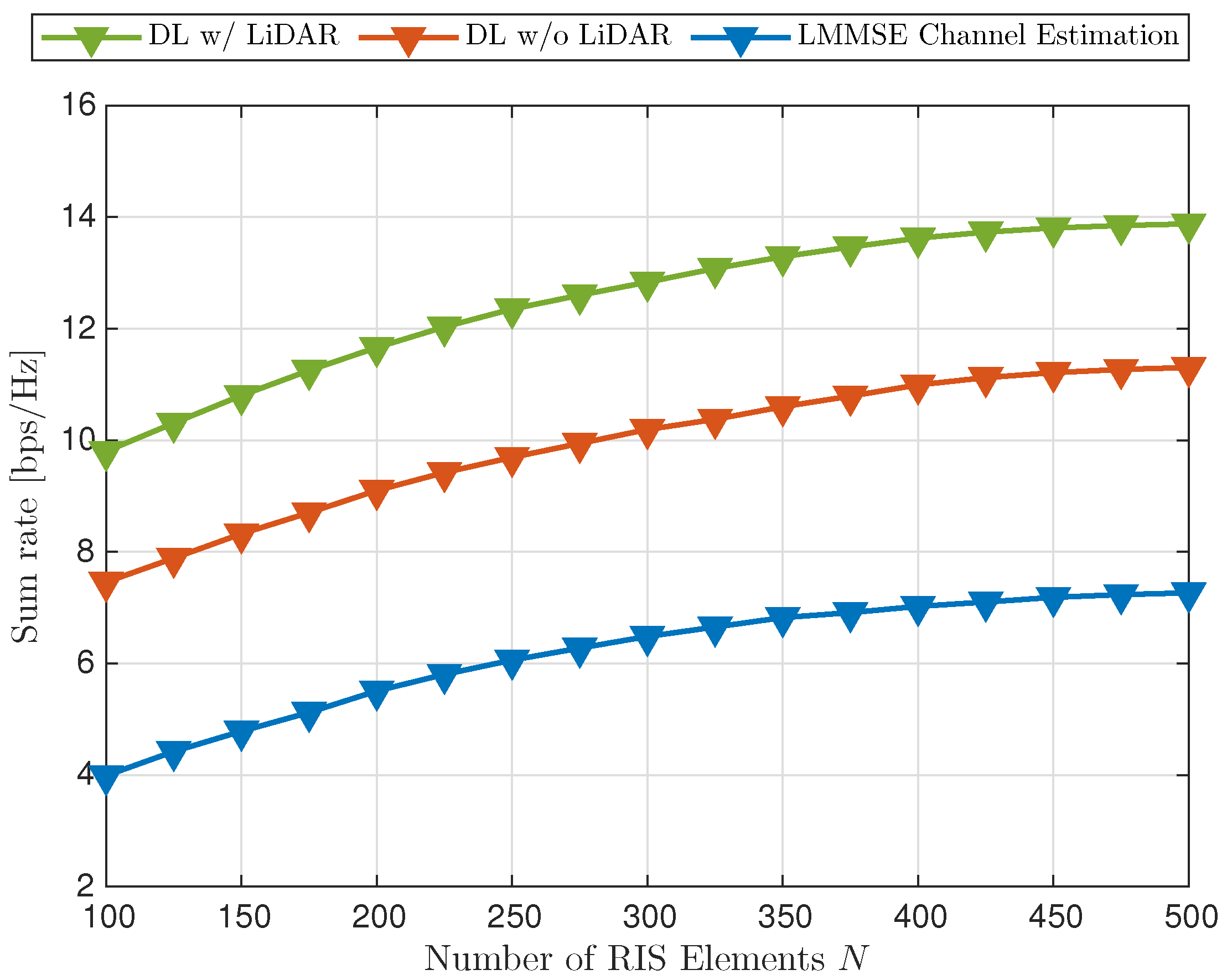

4.4. Experiment 4: Varying Number of RIS Elements

5. Discussion

5.1. Key Findings and Implications

5.2. Advantages of LiDAR Integration

5.3. Computational Complexity and Trade-Offs

5.4. Broader Implications

6. Future Work

6.1. Optimization of Computational Efficiency

- Model pruning and quantization: reducing the size and complexity of the GNN through techniques such as pruning redundant parameters and quantizing weights.

- Lightweight neural architectures: designing simplified GNN models tailored for RIS optimization without compromising performance.

- Hardware acceleration: exploring the use of GPUs, TPUs, or FPGAs to speed up LiDAR processing and GNN inference, enabling real-time deployment in resource-constrained environments.
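As an illustration of the first direction, the sketch below applies magnitude pruning and symmetric 8-bit quantization to a flat list of weights. It is a minimal, framework-free example under stated assumptions, not the procedure used in this work: the function names (`magnitude_prune`, `quantize_int8`, `dequantize`) and the example weights are hypothetical, and a real GNN would be compressed layer by layer with a deep learning framework's own tooling.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    num_to_drop = int(len(weights) * sparsity)
    # Indices sorted by |w|; the first `num_to_drop` of them are pruned.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    dropped = set(order[:num_to_drop])
    return [0.0 if i in dropped else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric uniform quantization to integers in [-127, 127].

    Returns the integer codes and the scale needed to map them back.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    # Hypothetical weights standing in for one layer of a trained model.
    layer = [0.5, -0.02, 0.9, 0.01, -0.3, 0.04]
    pruned = magnitude_prune(layer, sparsity=0.5)
    codes, scale = quantize_int8(pruned)
    print(pruned)
    print(codes, scale)
    print(dequantize(codes, scale))
```

Pruning first and quantizing second keeps the quantization scale tied to the largest surviving weight; the reconstruction error of each retained weight is bounded by half the scale.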

6.2. Adaptive Beamforming and Phase Shift Optimization

- Reinforcement learning: integrating reinforcement learning techniques to enable adaptive beamforming and phase shift updates based on real-time feedback from the environment.

- Online learning: developing online learning algorithms that update the GNN model incrementally as new LiDAR data and communication metrics become available.

- Mobility-aware optimization: enhancing the framework to account for rapid user mobility, such as in vehicular or drone-assisted networks, ensuring consistent performance across dynamic scenarios.
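One simple way to picture feedback-driven adaptation is an epsilon-greedy bandit that chooses among a finite set of candidate RIS phase configurations and refines its estimate of each one from observed rewards. The sketch below is an assumption-laden illustration rather than the method proposed here: the class `EpsilonGreedySelector`, the idea of a fixed configuration codebook, and the reward values in the demo are all hypothetical, and a full reinforcement learning agent would also condition on state such as LiDAR-derived user positions.

```python
import random


class EpsilonGreedySelector:
    """Epsilon-greedy bandit over a finite set of candidate configurations."""

    def __init__(self, num_configs, epsilon=0.1, seed=0):
        if num_configs < 1:
            raise ValueError("num_configs must be at least 1")
        if not 0.0 <= epsilon <= 1.0:
            raise ValueError("epsilon must be in [0, 1]")
        self.epsilon = epsilon
        self.counts = [0] * num_configs    # times each configuration was used
        self.values = [0.0] * num_configs  # running mean reward per configuration
        self.rng = random.Random(seed)

    def best(self):
        """Index of the configuration with the highest estimated reward."""
        return max(range(len(self.values)), key=lambda i: self.values[i])

    def select(self):
        """Explore with probability epsilon, otherwise exploit the best estimate."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.values))
        return self.best()

    def update(self, config, reward):
        """Online (incremental) update of the mean reward for `config`."""
        self.counts[config] += 1
        self.values[config] += (reward - self.values[config]) / self.counts[config]


if __name__ == "__main__":
    # Hypothetical mean rewards (e.g., achieved rate) of three configurations.
    true_means = [1.0, 2.5, 1.8]
    noise = random.Random(1)
    selector = EpsilonGreedySelector(num_configs=3, epsilon=0.1, seed=2)
    for _ in range(2000):
        choice = selector.select()
        selector.update(choice, true_means[choice] + noise.uniform(-0.2, 0.2))
    print(selector.best(), selector.values)
```

The incremental mean in `update` is the same mechanism an online learning scheme relies on: each new measurement adjusts the estimate without storing or reprocessing past data.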

6.3. Integration of Multi-Modal Sensing

- Fusion with other sensors: combining LiDAR data with camera or radar data to create a multi-modal sensing framework that provides complementary perspectives and improves localization accuracy in complex environments.

- Data fusion algorithms: developing algorithms to effectively combine data from multiple sensors while managing uncertainties and measurement conflicts.

- Robustness in diverse scenarios: testing the framework in varied environments, such as urban areas, rural landscapes, and indoor settings, to evaluate its adaptability across different contexts.
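A basic building block for uncertainty-aware fusion is inverse-variance weighting, which combines independent estimates of the same quantity so that more certain sensors count for more. The sketch below is illustrative only and rests on assumptions not made in this work: each sensor is taken to report an unbiased position estimate with a known, independent error variance per axis, and the function names and numbers are hypothetical.

```python
def fuse_estimates(estimates):
    """Fuse independent scalar estimates given as (value, variance) pairs.

    Returns the inverse-variance weighted mean and the variance of that mean.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    if any(var <= 0 for _, var in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total


def fuse_positions(positions):
    """Fuse 3D positions axis by axis.

    `positions` is a list of (xyz, variances) pairs, where both entries are
    3-element sequences. Axes are treated as independent (an assumption).
    """
    fused = []
    for axis in range(3):
        per_axis = [(xyz[axis], var[axis]) for xyz, var in positions]
        fused.append(fuse_estimates(per_axis))
    return [value for value, _ in fused], [var for _, var in fused]


if __name__ == "__main__":
    # Hypothetical readings: LiDAR is assumed more precise than radar here.
    lidar = ((10.0, 4.0, 1.5), (0.04, 0.04, 0.04))
    radar = ((10.6, 4.4, 1.1), (0.16, 0.16, 0.64))
    print(fuse_positions([lidar, radar]))
```

The fused variance is never larger than the smallest input variance, which is the formal sense in which adding a second sensor cannot hurt under these assumptions; conflicting or correlated measurements would need a richer model, such as a Kalman filter with outlier gating.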

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BEV | Bird’s-Eye View |
| BS | Base Station |
| CSI | Channel State Information |
| FPN | Feature Pyramid Network |
| GNN | Graph Neural Network |
| IoT | Internet of Things |
| KFPN | Keypoint Feature Pyramid Network |
| LiDAR | Light Detection and Ranging |
| LMMSE | Linear Minimum Mean-Squared Error |
| LoS | Line of Sight |
| MIMO | Multiple-Input–Multiple-Output |
| MISO | Multiple-Input–Single-Output |
| MMSE | Minimum Mean-Squared Error |
| NLoS | Non-Line of Sight |
| QoS | Quality of Service |
| RIS | Reconfigurable Intelligent Surface |
| SFA3D | Super Fast and Accurate 3D Object Detection Framework |
| UAV | Unmanned Aerial Vehicle |
| UE | User Equipment |
| WPCN | Wireless-Powered Communication Network |


| Parameter | Value |
|---|---|
| BS position | |
| GNN training epochs | 500 |
| LiDAR-RIS vehicle position | |
| Noise power () | −100 dB |
| Number of BS antennas (M) | 8 |
| Number of samples | 1000 |
| Number of users (U) | 5 |
| Rician factor () | 10 |
| Static downlink power () | 20 dBm |
| Static number of RIS elements (N) | 100 |
| Static pilot length (L) | 15 |
| Varying pilot length () | |
| Static uplink power () | 5 dBm |
| Varying downlink power () | dBm |
| Varying number of RIS elements () | |
| Varying uplink power () | dBm |

| Experiment | LiDAR-GNN vs. Location-Exclusive GNN (%) | LiDAR-GNN vs. LMMSE (%) | LiDAR-GNN vs. No RIS (%) |
|---|---|---|---|
| Varying | 16.0 | 85.0 | 190.0 |
| Varying | 25.4 | 73.1 | 349.0 |
| Varying | 22.0 | 98.0 | 239.0 |
| Varying | 25.8 | 101.0 | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nazar, A.M.; Selim, M.Y.; Qiao, D. Revolutionizing RIS Networks: LiDAR-Based Data-Driven Approach to Enhance RIS Beamforming. Sensors 2025, 25, 75. https://doi.org/10.3390/s25010075