Abstract

Electroencephalogram (EEG) signals are important bioelectrical signals widely used in brain activity studies, cognitive mechanism research, and the diagnosis and treatment of neurological disorders. However, EEG signals are often influenced by various physiological artifacts, which can significantly affect data analysis and diagnosis. Recently, deep learning-based EEG denoising methods have exhibited unique advantages over traditional methods. Most existing methods mainly focus on identifying the characteristics of clean EEG signals to facilitate artifact removal; however, the potential to integrate cross-disciplinary knowledge, such as insights from artifact research, remains an area that requires further exploration. In this study, we developed DHCT-GAN, a new EEG denoising model, using a dual-branch hybrid network architecture. This model independently learns features from both clean EEG signals and artifact signals, then fuses this information through an adaptive gating network to generate denoised EEG signals that accurately preserve EEG signal features while effectively removing artifacts. We evaluated DHCT-GAN’s performance through waveform analysis, power spectral density (PSD) analysis, and six performance metrics. The results demonstrate that DHCT-GAN significantly outperforms recent state-of-the-art networks in removing various artifacts. Furthermore, ablation experiments revealed that the hybrid model surpasses single-branch models in artifact removal, underscoring the crucial role of artifact knowledge constraints in improving denoising effectiveness.

1. Introduction

Electroencephalography (EEG) is a non-invasive neurophysiological technique that measures and records the electrical activity of the brain [1]. It is widely used in clinical diagnosis [2] and research on neuroscience, cognitive neuroscience [3,4,5,6], and brain–computer interface (BCI) [7,8,9]. However, EEG signals are easily contaminated by various artifacts [10,11,12,13]. Artifacts in EEG signals can introduce significant biases, which may lead to the misinterpretation of essential EEG characteristics [14,15], potentially impact clinical diagnoses of Alzheimer’s disease [16], and affect the performance of BCI systems [17]. Artifacts stemming from non-physiological (e.g., faulty electrodes, line noise, and high electrode impedance) and non-brain physiological (e.g., electrocardiography (ECG), electrooculography (EOG), and electromyography (EMG)) sources can be minimized through the use of precise recording systems and strict recording protocols [18]. However, in practical applications, such ideal conditions are often not achievable. Additionally, physiological artifacts like ECG, EMG, and EOG closely resemble pure EEG signals in characteristics such as amplitude and frequency range [19,20,21], making their removal particularly challenging. Therefore, there is a critical need to develop effective artifact removal techniques to suppress these interferences and preserve the authenticity of EEG signals.

Several traditional approaches for EEG denoising have been developed and demonstrate promising results. These include regression [22], filtering [23,24], the wavelet transform algorithm (WVT), empirical-mode decomposition (EMD) [25,26], and blind source separation (BSS) [27,28,29,30,31,32,33,34,35]. To leverage the strengths of each method, strategies such as EMD-BSS [36,37,38] and WVT-BSS [39,40,41] have been proposed to improve accuracy. However, these approaches have certain drawbacks in practical applications. For example, the absence of external reference channels in regression algorithms [22] limits their effectiveness in removing EOG and ECG artifacts [42]. Filtering may inadvertently discard valuable information [43,44]. EMD algorithms, though effective, are complex and time-consuming. BSS algorithms are vulnerable to rank-deficient data, a common issue arising from practices such as re-referencing and channel interpolation, which limits their effectiveness [45]. In general, most traditional methods have difficulty in effectively eliminating noise from EEG signals, particularly in the absence of reference channels or when manual inspection is required.

Researchers are increasingly turning to deep learning methods to remove artifacts from EEG signals. Neural network architectures based on denoising autoencoders (DAEs), long short-term memory networks (LSTMs), and convolutional neural networks (CNNs) are widely used [46,47,48]. For instance, Leite et al. [49] demonstrated the efficacy of DAEs in removing artifacts such as eye blinks and jaw clenching. Xiong et al. [50] proposed a dual-pathway autoencoder (DPAE) framework that captures EEG features at multiple scales for improved denoising. Sun et al. [51] developed a one-dimensional residual convolutional neural network (1D-ResCNN) that effectively removes artifacts while preserving signal clarity. Xiong et al. [52] introduced DWINet, an algorithm that uses the image-dehazing capability of DRHNet to enhance EEG denoising performance. Gao et al. [53] introduced DuoCL, which combines CNN and LSTM models for deep artifact removal. Wu et al. [54] proposed a neural architecture search with large kernels to enhance denoising performance. Huang et al. [55] developed LTDNet-EEG, a lightweight network for real-time EEG denoising that optimizes both model structure and computational efficiency. Pei et al. [56] proposed DTP-Net, which reconstructs EEG signals in the time–frequency domain by reusing multi-scale features. Wang et al. [57] applied the Retentive Network architecture to EEG denoising (EEGDiR), exploiting its strong feature extraction and comprehensive modeling capabilities.

Additionally, generative adversarial networks (GANs), the most representative form of adversarial training, have demonstrated excellent performance in EEG signal denoising [58,59]. For instance, Brophy et al. [60] employed a GAN to remove EMG and EOG artifacts, while Gandhi et al. [61] designed an asymmetric GAN to denoise EEG time series. Sumiya et al. [62] used GANs to denoise mouse EEG signals. Sawangjai et al. [17] proposed EEGANet, a GAN-based framework for removing ocular artifacts from multi-channel EEG data. Dong et al. [63] developed an EEG denoising method based on the Wasserstein GAN (WGAN) that decomposes, detects, and removes artifacts from EEG signals.

Recently, Transformer architectures have shown powerful capabilities in EEG denoising [64,65,66]. For example, Pu et al. [67] proposed EEGDNet, a Transformer-based network that removes EOG and EMG artifacts. Furthermore, Yin et al. [68] developed GCTNet, a network that combines GAN, parallel CNN, and Transformer architectures and outperforms many advanced networks in various artifact removal tasks. Huang et al. [69] introduced EEGDfus, a conditional diffusion model for fine-grained EEG denoising that performs well in practical applications.

Although deep learning models have shown significant improvements in EEG denoising, there are still some limitations. First, EEG signals are often contaminated by diverse artifacts with varying characteristics, and most previous denoising approaches focus solely on noise removal without learning artifact features, leading to unstable performance and poor handling of varied artifact types. In addition, previous deep learning methods have been limited in their ability to model spatiotemporal features effectively. For instance, CNNs capture local spatial features well but fail to account for long-term temporal dependencies, while Transformer architectures excel at modeling long-range dependencies but underperform in leveraging local features, limiting their effectiveness in fine-grained artifact removal. Moreover, GAN-based structures, while promising, suffer from issues like mode collapse and unstable output during training, particularly with complex artifacts. The reliance on a single discriminator further exacerbates instability, affecting overall denoising performance.

Motivated by the aforementioned challenges, this research introduces DHCT-GAN, a dual-branch hybrid CNN–Transformer network for EEG denoising. The main innovations of DHCT-GAN are as follows:

- Dual-branch architecture for artifact-specific learning: This network features two branches, one for learning clean EEG features and the other for artifact features. This design allows the model to distinguish EEG signals and artifacts, leveraging cross-domain knowledge for more effective denoising. A gated network module controls the information exchange between branches, adapting to diverse data characteristics.

- Multi-scale feature extraction and dependency capture: By combining CNNs and Transformers, DHCT-GAN captures both spatial and temporal characteristics, enhancing adaptability to complex signal dynamics. The integration of global and local Transformers enables the model to capture long-term and short-term dependencies, boosting feature robustness.

- Multi-discriminator GAN framework for stability: DHCT-GAN employs a multi-discriminator approach to mitigate issues such as mode collapse, ensuring stable and reliable denoising across diverse artifacts.

In this study, the denoising capabilities of DHCT-GAN were evaluated for four types of artifacts—EMG, EOG, ECG, and mixed (EMG + EOG)—using three publicly available datasets. A comparative analysis was conducted with several recent state-of-the-art EEG denoising methods. Quantitative evaluation using six performance metrics demonstrated that DHCT-GAN outperforms the current leading methods, showcasing its superior effectiveness and robustness.

The main contributions of this paper are summarized as follows:

- We introduce a dual-branch hybrid CNN–Transformer network specifically designed for EEG denoising. This network explicitly models the noise and incorporates it as conditional information, effectively extracting both local and global features from EEG signals, enabling a more accurate and flexible denoising process. Additionally, the use of a multi-discriminator approach enhances the stability of model training.

- The design of DHCT-GAN explicitly accounts for the diversity and variability of artifacts, making it highly effective in practical scenarios, especially BCI and clinical EEG analysis.

- The effectiveness and robustness of DHCT-GAN were validated on three public datasets. Experimental results demonstrate its ability to effectively remove four types of artifacts and reconstruct more accurate waveforms. Quantitative evaluation further highlights its superior performance compared to recent state-of-the-art models.

The structure of this article is as follows: Section 2 provides a comprehensive introduction to the proposed network, including the dataset and evaluation metrics used in this study. Section 3 demonstrates the denoising results of the proposed network and compares them with other methods. Section 4 discusses these results. Finally, Section 5 summarizes the conclusions of this study.

2. Materials and Methods

2.1. Datasets

In this study, we used three public datasets, namely the EEGdenoiseNet dataset [70], the MIT-BIH Arrhythmia Database [71], and a semi-simulated EEG/EOG dataset [72], to evaluate the performance of the proposed network, following previous research [63,65,67,68,69,73].

The EEGdenoiseNet dataset is a publicly available, structured dataset designed for training and testing deep learning-based denoising models, and it also facilitates performance comparisons among different models. It comprises 4514 clean EEG segments, 3400 ocular artifact (EOG) segments, and 5598 muscular artifact (EMG) segments. The clean EEG, pure EOG, and pure EMG segments were sourced from several publicly available data repositories [74,75,76,77,78,79,80,81]. The raw EEG data were collected from 52 participants performing both real and imagined left- and right-hand movement tasks, with 64-channel EEG recorded at a sampling frequency of 512 Hz. Clean EEG signals were obtained by resampling to 256 Hz and processing with ICLabel [82]. For EOG segments, multiple open-access EEG datasets with additional EOG channels were used [75,76,77,78,79,81], and the horizontal and vertical raw EOG signals were resampled to 256 Hz. For EMG segments, a facial EMG dataset was used [80], and the raw EMG signals were resampled to 512 Hz. All three categories of signals (EEG, EOG, and EMG) were segmented into standardized one-dimensional 2 s segments, following prior practice. Finally, each segment was visually checked by experts to ensure that it was clean and usable.

The MIT-BIH Arrhythmia Database consists of data from 47 subjects monitored by the BIH Arrhythmia Laboratory from 1975 to 1979. It includes 48 half-hour excerpts of two-channel ambulatory ECG recordings with a sampling rate of 360 Hz. Of these, 23 recordings were randomly selected from a set of 4000 24 h ambulatory ECG recordings collected from a mixed population of inpatients (about 60%) and outpatients (about 40%) at Boston’s Beth Israel Hospital. The remaining 25 recordings were deliberately chosen from the same set to capture less common but clinically significant arrhythmias that might not be well represented in a small random sample. These recordings were digitized at 360 samples per second per channel with an 11-bit resolution over a 10 mV range. Two or more cardiologists independently annotated each record, and any disagreements were resolved to create computer-readable reference annotations for each beat, resulting in approximately 110,000 annotations included in the database.

The semi-simulated EEG/EOG dataset includes 54 recordings, each lasting 30 s, and provides the pure EEG signals, the contaminated EEG signals, and the EOG artifacts. EEG data were collected from 27 healthy subjects during an eyes-closed session at a sampling frequency of 200 Hz. The signals were band-pass filtered at 0.5–40 Hz and notch filtered at 50 Hz. EOG signals were recorded from the same subjects during an eyes-open session using four electrodes: two placed above and below the left eye and two on the outer canthi of each eye. These EOG signals were band-pass filtered at 0.5–5 Hz. The artifact-free EEG signals were then artificially contaminated with EOG artifacts according to the model proposed by Elbert et al. [83], creating the semi-simulated EEG/EOG dataset.

In our study, pure EMG signals are sourced from the EEGdenoiseNet dataset, pure EOG signals are from both the EEGdenoiseNet and the semi-simulated EEG/EOG dataset, and pure ECG signals are taken from the MIT-BIH Arrhythmia Database. Clean EEG signals are derived from all three datasets. These clean EEG signals serve as the ground truth, while four types of artifacts (EMG, EOG, ECG, and mixed (EOG + EMG)) are linearly combined with the clean EEG signal to produce contaminated EEG recordings.

For consistency, all datasets were resampled to 512 Hz and segmented into one-dimensional 2 s segments. Specifically, 3400 pairs of EEG and EOG segments, 5600 pairs of EEG and EMG segments, 3600 pairs of EEG and ECG segments, and 3400 pairs of EEG and mixed artifact (EOG + EMG) segments were used to generate contaminated EEG signals according to Equation (1).

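$$y = x + \lambda \cdot n \tag{1}$$

$$\mathrm{SNR} = 10 \log_{10} \frac{\mathrm{RMS}(x)}{\mathrm{RMS}(\lambda \cdot n)} \tag{2}$$

$$\mathrm{RMS}(g) = \sqrt{\frac{1}{N} \sum_{i=1}^{N} g_i^{2}} \tag{3}$$

where $x$ represents the pure EEG signal used as the ground truth (EEGP), $y$ represents the contaminated EEG (EEGC), and $n$ denotes the artifacts (e.g., EMG, ECG, EOG, or mixed). The parameter $\lambda$ regulates the signal-to-noise ratio (SNR) of the contaminated EEG signal, so that $\lambda \cdot n$ is the noise component of EEGC. $\mathrm{RMS}(\cdot)$ denotes the root-mean-square value, $N$ is the number of temporal samples in a segment $g$, and $g_i$ is the $i$-th sample of $g$.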
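As a minimal NumPy sketch of the mixing step (the function names are illustrative, not taken from the authors' code), the contamination model y = x + λ·n can be applied at a target SNR by solving SNR = 10·log10(RMS(x)/RMS(λ·n)) for λ, which gives λ = RMS(x) / (RMS(n)·10^(SNR/10)). It assumes that x and n are equal-length 1-D arrays already resampled to 512 Hz; any normalization applied after mixing is outside this sketch.

```python
import numpy as np


def rms(g: np.ndarray) -> float:
    """Root-mean-square value of a 1-D segment."""
    return float(np.sqrt(np.mean(g ** 2)))


def contaminate(x: np.ndarray, n: np.ndarray, snr_db: float) -> np.ndarray:
    """Return y = x + lam * n, with lam chosen so that
    10 * log10(RMS(x) / RMS(lam * n)) equals snr_db."""
    lam = rms(x) / (rms(n) * 10 ** (snr_db / 10))
    return x + lam * n
```

For example, `contaminate(x, n, -3.0)` yields a segment whose scaled noise component is 3 dB stronger than the clean EEG under this SNR definition.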

For the training set, 80% of the segments were randomly selected from each EEG and artifact pair. Another 10% of the segments from each pair were selected randomly for the test set, while the remaining 10% formed the validation set. Within the training set, pure EEG segments and artifact segments were randomly paired at varying SNR levels ranging from −7 to 2 dB. For both the test and the validation sets, each artifact segment was paired with a pure EEG segment, with mixing conducted once at each of the ten SNR levels (−7, −6, −5, −4, −3, −2, −1, 0, 1, and 2 dB).
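The split and pairing protocol can be sketched as follows. This is an illustrative reading, not the authors' code: it assumes that the EEG segment and artifact segment sharing an index form a pair, that training SNRs are drawn uniformly from the continuous range [−7, 2] dB, and that test and validation pairs keep their original index pairing.

```python
import numpy as np

SNR_LEVELS_DB = np.arange(-7, 3)  # ten evaluation levels: -7, -6, ..., 2 dB


def split_pairs(num_pairs, rng, train_frac=0.8, val_frac=0.1):
    """Randomly split pair indices into train/val/test (80/10/10)."""
    perm = rng.permutation(num_pairs)
    n_train = int(round(train_frac * num_pairs))
    n_val = int(round(val_frac * num_pairs))
    return {
        "train": perm[:n_train],
        "val": perm[n_train:n_train + n_val],
        "test": perm[n_train + n_val:],
    }


def training_pairs(idx, rng, snr_range=(-7.0, 2.0)):
    """Assumption: each artifact segment gets a randomly chosen training EEG
    segment (random re-pairing) and an SNR drawn uniformly from snr_range."""
    eeg_idx = rng.permutation(idx)
    snrs = rng.uniform(*snr_range, size=len(idx))
    return [(int(e), int(a), float(s)) for e, a, s in zip(eeg_idx, idx, snrs)]


def evaluation_pairs(idx):
    """Each artifact segment is paired with one EEG segment (same index here)
    and mixed once at every SNR level in SNR_LEVELS_DB."""
    return [(int(i), int(i), float(s)) for i in idx for s in SNR_LEVELS_DB]
```

With 3400 EEG/EOG pairs, this gives 2720 training, 340 validation, and 340 test pairs, and the test split expands to 3400 contaminated segments (340 pairs × 10 SNR levels).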

2.2. Methods

In this study, we introduce DHCT-GAN, a dual-branch hybrid CNN–Transformer network for EEG denoising.

2.2.1. Pseudo Code

See Algorithm 1.

| Algorithm 1 EEG Denoising |
|---|
| Input: training data (x, y), number of adversarial training iterations T, number of training iterations for the denoising network K, mini-batch size M, and early-stopping criterion C |
| 1: Randomly initialize the generator parameters θ and the parameters of the three discriminators, Φ1, Φ2, and Φ3 |
| 2: for t ← 1 to T do |
| 3: for k ← 1 to K do |
| 4: Sample M instances {x(m), y(m)} from the training data // G is the overall generator network; G1 generates clean EEG signals and G2 generates noise signals |
| 5: Compute the loss and gradients of discriminator D1 |
| 6: Update the parameters of discriminator D1 |
| 7: Compute the loss and gradients of discriminator D2 |
| 8: Update the parameters of discriminator D2 |
| 9: Compute the loss and gradients of discriminator D3 |
| 10: Update the parameters of discriminator D3 |
| 11: Compute the loss and gradients of generator G |
| 12: Update the parameters of generator G |
| end for |
| 13: Compute the loss of generator G on the validation set |
| 14: If the validation loss increases consecutively more than C times, stop training; otherwise, continue training |
| end for |
| Output: generator network G(x; θ) |
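A compact PyTorch sketch of one inner iteration of Algorithm 1 (steps 4 to 12) illustrates the alternating schedule. Everything here is a stand-in: `ToyGenerator` and `toy_discriminator` are hypothetical minimal modules rather than the CNN–LGTB branches, the fusion rule and the least-squares discriminator objective are assumptions, and the feature-loss term is omitted to keep the example short.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ToyGenerator(nn.Module):
    """Hypothetical two-branch generator; the real branches are CNN-LGTB networks."""

    def __init__(self, length: int):
        super().__init__()
        self.branch_clean = nn.Conv1d(1, 1, kernel_size=3, padding=1)  # G1
        self.branch_noise = nn.Conv1d(1, 1, kernel_size=3, padding=1)  # G2
        self.gate = nn.Sequential(nn.Linear(2 * length, length), nn.Tanh())

    def forward(self, x):  # x: (batch, 1, length)
        clean1 = self.branch_clean(x)
        noise2 = self.branch_noise(x)
        via_noise = x - noise2  # clean estimate implied by branch 2
        w = self.gate(torch.cat([clean1, via_noise], dim=-1).flatten(1)).unsqueeze(1)
        fused = w * clean1 + (1 - w) * via_noise  # assumed fusion rule
        return clean1, noise2, fused


def toy_discriminator(length: int) -> nn.Module:
    return nn.Sequential(
        nn.Conv1d(1, 8, kernel_size=3, stride=2, padding=1), nn.LeakyReLU(0.2),
        nn.Flatten(), nn.Linear(8 * (length // 2), 1),
    )


def train_step(G, Ds, opt_g, opt_ds, x, y_clean, beta=0.1):
    """One inner iteration: update D1, D2, D3 with G fixed, then update G."""
    targets = (y_clean, x - y_clean, y_clean)  # real inputs for D1, D2, D3
    with torch.no_grad():
        fakes = G(x)  # generator is frozen while the discriminators train
    for D, opt, fake, real in zip(Ds, opt_ds, fakes, targets):
        opt.zero_grad()
        s_real, s_fake = D(real), D(fake)
        d_loss = (F.mse_loss(s_real, torch.ones_like(s_real))
                  + F.mse_loss(s_fake, torch.zeros_like(s_fake)))
        d_loss.backward()
        opt.step()
    opt_g.zero_grad()
    g_loss = torch.zeros(())
    for D, fake, real in zip(Ds, G(x), targets):
        score = D(fake)
        g_loss = g_loss + F.mse_loss(fake, real) + beta * F.mse_loss(score, torch.ones_like(score))
    g_loss.backward()  # D gradients also accumulate but are cleared before their next step
    opt_g.step()
    return g_loss.item()
```

The outer loop over T and the validation check of steps 13 and 14 would wrap repeated calls to `train_step`.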

2.2.2. DHCT-GAN Structure

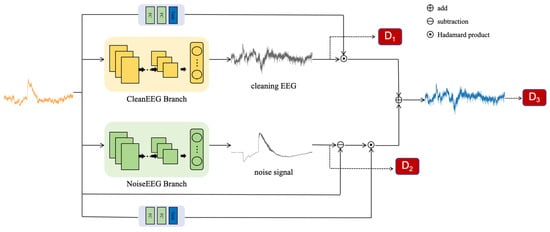

In Figure 1, we present DHCT-GAN, a dual-branch network designed for EEG signal denoising. The network architecture comprises a generator (G) and three discriminators (D1, D2, D3). The generator G has two branches and two gating networks: the first branch learns the distribution of clean EEG signals, while the second branch learns the distribution of noise signals. Given a sample pair, the generator processes the input X and produces three outputs: an initial prediction of the clean EEG signal, a prediction of the noise signal, and a clean EEG signal obtained by fusing the two. Discriminators D1, D2, and D3 then take these outputs as input and guide the training of the generator through feature loss and adversarial loss.

Figure 1.

The structure of the proposed network—DHCT-GAN.

Gating networks are particularly useful in scenarios involving complex or heterogeneous data. In this study, the gating networks consist of two fully connected layers and use a tanh activation function to learn a set of weight coefficients that adaptively fuse the outputs of two branches, generating the constrained clean EEG signal. The reconstructed EEG can be calculated using the following formula:

The formula involves the gating networks, the final predicted clean EEG signal, the outputs of the first and second branches, the masks applied to the first and second branches, and the original EEG signal.
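The gating idea can be sketched as a small PyTorch module. It follows the description of two fully connected layers with tanh activations, but several details are assumptions made for illustration: the gate sees the original signal and both branch outputs, the hidden width is arbitrary, and the fusion rule weights the branch-1 estimate and the "input minus predicted noise" estimate by two learned masks.

```python
import torch
import torch.nn as nn


class GatedFusion(nn.Module):
    """Hypothetical gating network: two fully connected layers with tanh that
    output two per-sample masks used to fuse the branch estimates."""

    def __init__(self, length: int, hidden: int = 256):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(3 * length, hidden), nn.Tanh(),
            nn.Linear(hidden, 2 * length), nn.Tanh(),
        )

    def forward(self, x, clean_est, noise_est):
        # x, clean_est, noise_est: (batch, length)
        w = self.net(torch.cat([x, clean_est, noise_est], dim=-1))
        m1, m2 = w.chunk(2, dim=-1)  # masks for branch 1 and branch 2
        # Assumed fusion: masked branch-1 estimate plus masked
        # "input minus predicted noise" estimate from branch 2.
        return m1 * clean_est + m2 * (x - noise_est)
```

Because the masks come from tanh, each weight lies in [−1, 1]; whether the original model constrains or normalizes them further is not stated here.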

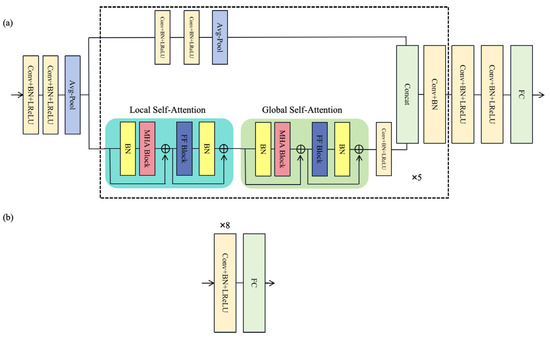

Each branch generator follows a consistent structure (Figure 2), consisting of three main parts:

Figure 2.

The structure of (a) the generator and (b) the discriminator.

- Preprocessing module: This module converts the one-dimensional signal into high-dimensional (32 dimensions) features by employing two 1-D convolution layers and an average pooling layer to enhance information extraction capabilities.

- Encoding module: This module consists of 5 CNN-LGTB encoding blocks, which include parallel CNN and Local–Global Transformer Block (LGTB), with information dimensions starting from 64 and doubling incrementally (64, 128, 256, 512, and 1024). The output information of the last encoding block is combined through a feature fusion layer, and after passing through a convolutional layer and batch normalization (BN) layer, it is output as extracted features. The CNN block consists of two convolutional layers with a kernel size of 3, a batch normalization layer, and a Leaky Rectified Linear Unit (LReLU) layer. The LGTB includes a local self-attention module (LSA), a global self-attention module (GSA), and a feedforward module. The local attention module divides the input data into multiple parts (set to 8 blocks in this study), calculates local attention for each part, and then concatenates them to form local attention features. Local self-attention focuses on a limited context, which enables the model to effectively capture local dependencies within the input sequence. In contrast, global self-attention considers the entire sequence, allowing the model to capture long-range dependencies and overall contextual relationships. By integrating both local and global self-attention mechanisms, the model effectively captures a wide range of dependencies, enhancing its ability to understand complex patterns within the data. This combination facilitates a more comprehensive representation of the input, improving performance across various tasks. The output is then passed through a feedforward network to the global attention module, where global attention features are computed. Subsequently, the output undergoes further processing through a feedforward network, a convolutional layer, a batch normalization layer, and an LReLU layer.

- Decoding module: This module reverts the intermediate features back to the original signal dimension by utilizing two convolutional layers and one fully connected layer to produce denoised signals.
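A minimal PyTorch sketch of a local–global Transformer block, following the order described in the encoding module: local self-attention over 8 contiguous chunks, a feedforward layer, global self-attention over the whole sequence, and a second feedforward layer. Layer normalization, residual connections, the head count, and the feedforward width are assumptions, and the trailing convolution, batch normalization, and LReLU layers are left out.

```python
import torch
import torch.nn as nn


def feed_forward(dim: int) -> nn.Module:
    return nn.Sequential(nn.LayerNorm(dim), nn.Linear(dim, 2 * dim), nn.GELU(), nn.Linear(2 * dim, dim))


class LocalGlobalBlock(nn.Module):
    """Sketch of an LGTB-style block with pre-norm residual connections (assumed)."""

    def __init__(self, dim: int, heads: int = 4, num_local_blocks: int = 8):
        super().__init__()
        self.k = num_local_blocks
        self.norm_local = nn.LayerNorm(dim)
        self.local_attn = nn.MultiheadAttention(dim, heads, batch_first=True)
        self.ff1 = feed_forward(dim)
        self.norm_global = nn.LayerNorm(dim)
        self.global_attn = nn.MultiheadAttention(dim, heads, batch_first=True)
        self.ff2 = feed_forward(dim)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        b, c, t = x.shape  # (batch, channels, time)
        if t % self.k:
            raise ValueError("sequence length must be divisible by num_local_blocks")
        h = x.transpose(1, 2)  # (batch, time, channels)
        # Local self-attention: fold the k chunks into the batch dimension so
        # each chunk attends only to its own time steps.
        chunks = self.norm_local(h).reshape(b * self.k, t // self.k, c)
        local, _ = self.local_attn(chunks, chunks, chunks, need_weights=False)
        h = h + local.reshape(b, t, c)
        h = h + self.ff1(h)
        # Global self-attention: every time step attends to the whole sequence.
        g = self.norm_global(h)
        glob, _ = self.global_attn(g, g, g, need_weights=False)
        h = h + glob
        h = h + self.ff2(h)
        return h.transpose(1, 2)  # back to (batch, channels, time)
```

Folding chunks into the batch dimension is one simple way to restrict attention to a local window without building an explicit attention mask.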

The three discriminators, denoted D1, D2, and D3, share the same structure, shown in Figure 2b. The input signals pass through M convolutional layers, each followed by a batch normalization layer and an activation function layer; in this research, M = 8. The output channels of the convolutional layers are 64, 64, 128, 128, 256, 256, 512, and 512, respectively. Each one-dimensional convolutional layer has a kernel size of 3, a stride of 2, and padding of 1. D1 discriminates between real clean signals and the clean signals predicted by the first branch, D2 distinguishes between real noise signals and the noise signals predicted by the second branch, and D3 differentiates real clean signals from the clean signals ultimately predicted by the generator. Together, these three discriminators provide additional loss terms for the generator, enhancing the quality of the generated signals.
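The discriminator layout (eight 1-D convolutions with 64, 64, 128, 128, 256, 256, 512, and 512 output channels, kernel size 3, stride 2, padding 1, each followed by batch normalization and an activation) can be written as below. The LeakyReLU slope, the linear output head, and returning the last convolutional feature map for the feature loss are assumptions; which intermediate layer feeds the feature loss is not specified.

```python
import torch
import torch.nn as nn

CHANNELS = (64, 64, 128, 128, 256, 256, 512, 512)


class Discriminator(nn.Module):
    def __init__(self, length: int = 1024):
        super().__init__()
        layers, in_ch = [], 1
        for out_ch in CHANNELS:
            layers += [
                nn.Conv1d(in_ch, out_ch, kernel_size=3, stride=2, padding=1),
                nn.BatchNorm1d(out_ch),
                nn.LeakyReLU(0.2),  # activation choice assumed
            ]
            in_ch = out_ch
        self.features = nn.Sequential(*layers)
        out_len = length
        for _ in CHANNELS:
            out_len = (out_len - 1) // 2 + 1  # Conv1d output length for k=3, s=2, p=1
        self.head = nn.Linear(CHANNELS[-1] * out_len, 1)  # assumed scalar output head

    def forward(self, x: torch.Tensor):
        feats = self.features(x)  # (batch, 512, out_len)
        return self.head(feats.flatten(1)), feats
```

With 2 s segments at 512 Hz (1024 samples), each stride-2 layer halves the length, so the final feature map has shape (batch, 512, 4).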

2.2.3. Loss Function

The loss function employed in this study comprises the mean squared error (MSE) loss for the output of the generator, in addition to the feature loss and adversarial loss for the output of the discriminator.

There are three losses in the generator ($\mathcal{L}_{G_1}$, $\mathcal{L}_{G_2}$, and $\mathcal{L}_{G_3}$), one for each generator output. Each is composed of an MSE loss ($\mathcal{L}_{\mathrm{MSE}}$), a feature loss ($\mathcal{L}_{\mathrm{feat}}$), and an adversarial loss ($\mathcal{L}_{\mathrm{adv}}$), combined with constraint coefficients:

$$\mathcal{L}_{G_k} = \mathcal{L}_{\mathrm{MSE}} + \alpha\,\mathcal{L}_{\mathrm{feat}} + \beta\,\mathcal{L}_{\mathrm{adv}}, \qquad k = 1, 2, 3$$

where $\alpha$ and $\beta$ are the weights of the feature loss and the adversarial loss, respectively.

The total loss is described in Equation (9), where $\mathcal{L}_G$ is the total loss of the generator, $\mathcal{L}_{G_1}$ and $\mathcal{L}_{G_2}$ are the total losses of the first and second branches, respectively, and $\mathcal{L}_{G_3}$ is the loss associated with the final output of the generator.

The MSE loss, the feature loss, and the adversarial loss are expressed as follows:

$$\mathcal{L}_{\mathrm{MSE}} = \frac{1}{N} \sum_{i=1}^{N} \left( Y_i - \hat{Y}_i \right)^2 \tag{10}$$

$$\mathcal{L}_{\mathrm{feat}} = \frac{1}{N} \sum_{i=1}^{N} \left( D_f(Y_i) - D_f(\hat{Y}_i) \right)^2 \tag{11}$$

$$\mathcal{L}_{\mathrm{adv}} = \frac{1}{N} \sum_{i=1}^{N} \left( D(\hat{Y}_i) - 1 \right)^2 \tag{12}$$

where $Y$ represents the ground truth used during model training, $\hat{Y}$ denotes the model's output (the predicted clean signal or the predicted noise signal), the subscript $i$ indexes the $i$-th of $N$ samples, $D(\cdot)$ is the discriminator output, and $D_f(\cdot)$ denotes the feature from an intermediate convolutional layer of the discriminator.

In Equation (10), given the input data $X$, its denoised prediction $\hat{Y}$, and the corresponding ground truth clean data $Y$, the squared error between $\hat{Y}$ and $Y$ is computed for each sample. Averaging these values across all samples yields a scalar that quantifies the discrepancy between the predicted and ground truth data.

In Equation (11), the feature representations of both the predictions $\hat{Y}$ and the ground truth $Y$ are extracted using the discriminator. The feature loss is the MSE between the intermediate features $D_f(\hat{Y})$ and $D_f(Y)$, a scalar that quantifies the difference between the predictions and the ground truth in feature space.

In Equation (12), the predictions $\hat{Y}$ are passed through the discriminator to obtain an output score representing the likelihood that the input is real. To quantify the gap between the predictions and real samples as judged by the discriminator, the squared difference between this score and 1 is computed and averaged, yielding a scalar that reflects how far the discriminator is from classifying the predictions as real.

The discriminators were trained with the discriminator loss ($\mathcal{L}_D$) to improve their accuracy in distinguishing real signals $Y$ from generated signals $\hat{Y}$.
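The loss terms described in Equations (10) to (12) map onto a few PyTorch functions. This is a sketch under stated assumptions: the names `alpha` and `beta` for the feature and adversarial weights are illustrative, the plain sum used for the total generator loss is an assumption, and so is the least-squares form of the discriminator loss, whose exact formula is not given in this section.

```python
import torch
import torch.nn.functional as F


def mse_loss(pred, target):
    """Eq. (10): mean squared error between prediction and ground truth."""
    return F.mse_loss(pred, target)


def feature_loss(feat_pred, feat_real):
    """Eq. (11): MSE between discriminator features of prediction and ground truth."""
    return F.mse_loss(feat_pred, feat_real.detach())


def adversarial_loss(score_pred):
    """Eq. (12): mean of (D(prediction) - 1)^2."""
    return torch.mean((score_pred - 1.0) ** 2)


def branch_loss(pred, target, score_pred, feat_pred, feat_real, alpha, beta):
    """Per-output generator loss: MSE + alpha * feature + beta * adversarial."""
    return (mse_loss(pred, target)
            + alpha * feature_loss(feat_pred, feat_real)
            + beta * adversarial_loss(score_pred))


def generator_total(branch_losses):
    """Assumption: total generator loss is the plain sum of the three output losses."""
    return sum(branch_losses)


def discriminator_loss(score_real, score_fake):
    """Assumption: least-squares GAN objective (real -> 1, generated -> 0)."""
    return torch.mean((score_real - 1.0) ** 2) + torch.mean(score_fake ** 2)
```

Detaching the real-signal features keeps the feature-matching gradient flowing only through the generator's prediction.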

2.2.4. Model Training and Implementation

During training, the generator (G) and the discriminators (D1, D2, D3) are trained alternately. First, the parameters of G are kept fixed while D1, D2, and D3 are trained individually. Each discriminator receives a different input: D1 receives the clean EEG signal generated by the first branch of the generator, D2 receives the noise signal generated by the second branch, and D3 receives the denoised EEG signal produced by the full generator. The parameters of each discriminator are then updated independently based on its own loss function. Next, the discriminator parameters are fixed, the original signal (X) is fed into G, and the parameters of G are updated according to the total generator loss. Early stopping is applied during training to prevent overfitting: the MSE on the validation set is monitored after each epoch, and training stops if no significant improvement is observed over a certain number of epochs. The best-performing model is then selected for deployment. Notably, using three discriminators improves the stability of the model because the loss is computed not only for the overall output but also for each branch separately. During testing, the original signal (X) is fed into G, and the denoised EEG signal predicted by the generator is used as the final output.
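The stopping rule can be sketched as a small helper class (hypothetical, pure Python). It counts consecutive epochs in which the validation MSE fails to improve on the best value so far and signals a stop once that count exceeds a patience C, which is one reading of both the "more than C times" condition in Algorithm 1 and the "no significant improvement" description; `min_delta` stands in for "significant".

```python
class EarlyStopping:
    """Stop when validation loss has not improved by more than min_delta
    for more than `patience` consecutive epochs; track the best epoch."""

    def __init__(self, patience: int, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, val_loss: float, epoch: int) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs > self.patience
```

In a training loop, one would save a checkpoint whenever `best_epoch` changes and reload it after `step` returns True, so that the best-performing model is the one deployed.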

The training process of GANs is often unstable and prone to mode collapse. To mitigate this, we adjust the learning rate to 0.001. The Adam optimizer is employed for model optimization, with the generator's Adam parameters set to β1 = 0.5 and β2 = 0.9, and the discriminators' Adam parameters set to β1 = 0.9 and β2 = 0.999. The learning rate is fixed at 0.0001, and the batch size is set to 40. The maximum number of training epochs is limited to 1000. All experiments are conducted using Python 3.7.13 and the PyTorch 2.1.0 framework on an NVIDIA RTX 4090 GPU, manufactured by NVIDIA Corporation in Santa Clara, CA, USA.
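The optimizer settings translate to PyTorch roughly as follows. The section lists both 0.001 and 0.0001 as learning rates; this sketch defaults to 0.0001, the value stated as fixed, and exposes `lr` so the other value can be substituted. The helper name `make_optimizers` is hypothetical.

```python
import torch
import torch.nn as nn

BATCH_SIZE = 40
MAX_EPOCHS = 1000


def make_optimizers(generator: nn.Module, discriminators, lr: float = 1e-4):
    """Adam for the generator (betas 0.5, 0.9) and one Adam per discriminator (betas 0.9, 0.999)."""
    opt_g = torch.optim.Adam(generator.parameters(), lr=lr, betas=(0.5, 0.9))
    opt_ds = [torch.optim.Adam(d.parameters(), lr=lr, betas=(0.9, 0.999)) for d in discriminators]
    return opt_g, opt_ds
```

Keeping a separate optimizer per discriminator matches the independent updates of D1, D2, and D3 in Algorithm 1.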

2.2.5. Evaluation Metrics

Several objective measures are used for the quantitative performance analysis: the relative root mean square error (RRMSE) in the temporal domain (RRMSEt), the RRMSE in the spectral domain (RRMSEf), the average correlation coefficient (CC), the temporal percentage reduction in artifacts from the EEGC, the structural similarity index measure (SSIM), and mutual information (MI). RRMSEt and RRMSEf quantify the energy error of the signal in the temporal and spectral domains, respectively; smaller values indicate better artifact removal. CC measures the similarity between the predicted EEG and the ground truth EEG; a higher CC indicates greater similarity, while a lower value indicates less agreement. To assess the statistical significance of the CC, a p-value is calculated; typically, a small p-value (<0.05) suggests that the observed correlation is statistically significant. A higher percentage reduction in artifacts indicates that more artifacts have been removed from the contaminated EEG signal, resulting in a cleaner and more accurate EEG signal. SSIM is a measure of signal similarity; a higher SSIM indicates that the predicted EEG is more similar to the ground truth EEG. MI measures the dependency between two signals, with larger MI values indicating stronger mutual dependence between the predicted EEG and the ground truth. RRMSEt, RRMSEf, CC, the percentage reduction in artifacts, SSIM, and MI are defined as follows:

Here, the denoised EEG signals are denoted EEGD, PSD(·) is the power spectral density computed using the periodogram method, and Cov(·,·) and Var(·) denote the covariance and variance of a signal, respectively. A separate term denotes the time-domain correlation coefficient between the EEGC and EEGP segments. The SSIM uses the mean values of the pure EEG signals (EEGP) and the denoised EEG signals (EEGD), together with two small constants that keep the denominator from being zero. MI uses the joint probability density function of EEGP and EEGD and their respective marginal probability density functions.
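For reference, a NumPy sketch of five of these metrics, written from their standard definitions rather than taken from the authors' code: RRMSEt, RRMSEf (using a plain one-sided periodogram), CC as Cov/sqrt(Var·Var), a single-window SSIM, and a histogram estimate of MI. The SSIM constants and the MI bin count are placeholder choices, and the percentage reduction in artifacts is not implemented because its formula is not spelled out in the text.

```python
import numpy as np


def periodogram(sig, fs=512.0):
    """One-sided periodogram (boxcar window, density scaling; even length assumed)."""
    spec = np.abs(np.fft.rfft(sig)) ** 2 / (fs * len(sig))
    spec[1:-1] *= 2  # fold negative-frequency power into positive bins
    return spec


def rms(g):
    return np.sqrt(np.mean(g ** 2))


def rrmse_t(denoised, pure):
    return rms(denoised - pure) / rms(pure)


def rrmse_f(denoised, pure, fs=512.0):
    p_d, p_p = periodogram(denoised, fs), periodogram(pure, fs)
    return rms(p_d - p_p) / rms(p_p)


def cc(a, b):
    return np.cov(a, b)[0, 1] / np.sqrt(np.var(a, ddof=1) * np.var(b, ddof=1))


def ssim_1d(a, b, c1=1e-4, c2=9e-4):
    """Global (single-window) SSIM; c1 and c2 are placeholder constants."""
    mu_a, mu_b = a.mean(), b.mean()
    var_a, var_b = a.var(), b.var()
    cov_ab = np.mean((a - mu_a) * (b - mu_b))
    num = (2 * mu_a * mu_b + c1) * (2 * cov_ab + c2)
    den = (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    return num / den


def mutual_information(a, b, bins=32):
    """Histogram-based MI estimate in nats."""
    joint, _, _ = np.histogram2d(a, b, bins=bins)
    p_ab = joint / joint.sum()
    p_a = p_ab.sum(axis=1, keepdims=True)
    p_b = p_ab.sum(axis=0, keepdims=True)
    outer = p_a * p_b  # product of marginals, shape (bins, bins)
    nz = p_ab > 0
    return float(np.sum(p_ab[nz] * np.log(p_ab[nz] / outer[nz])))
```

If a p-value for CC is needed, `scipy.stats.pearsonr(a, b)` returns the correlation coefficient together with a two-sided p-value.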

3. Results

3.1. Performance Evaluation

To assess the efficacy of DHCT-GAN, we compared it with five state-of-the-art deep learning-based EEG denoising techniques: a simple convolutional neural network (SimpleCNN), a long short-term memory network (LSTM) [84], the dual-scale CNN-LSTM model (DuoCL) [53], the GAN-guided parallel CNN and Transformer network (GCTNet) [68], and EEGDiR [57]. All comparison experiments used the same training and testing settings, e.g., the same data split and the same batch size.

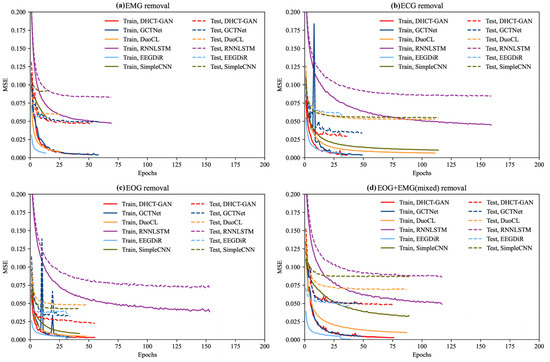

Figure 3 shows the MSE as a function of the number of training iterations (epochs) during training and testing. Overall, the training and testing MSEs of each method decrease with increasing iterations and eventually stabilize. In the EMG artifact removal task, DHCT-GAN, DuoCL, GCTNet, and EEGDiR perform similarly on the training set, achieving the lowest MSE values. Among these, EEGDiR converges fastest, while DHCT-GAN achieves the lowest MSE on the testing set. Notably, DHCT-GAN shows the best generalization among these four models, with the smallest MSE difference between the training and testing sets. This highlights the robustness and efficiency of DHCT-GAN for EMG artifact removal. In the ECG artifact removal task, the training MSE values of DHCT-GAN, DuoCL, GCTNet, and EEGDiR are similar. Among these, EEGDiR achieves the lowest training MSE and converges fastest (at around 25 epochs). However, DHCT-GAN achieves the lowest MSE on the testing set, indicating good generalization. In the EOG artifact removal task, DHCT-GAN, EEGDiR, and GCTNet perform best on the training set, achieving the lowest MSE values. DHCT-GAN achieves the lowest MSE on the testing set, outperforming the other methods in terms of accuracy and generalization. GCTNet and EEGDiR converge fastest, but GCTNet's convergence curve fluctuates more, indicating reduced stability. In the EMG, ECG, and EOG artifact removal tasks, EEGDiR fits the training set well but performs poorly on the testing set. In the mixed (EOG + EMG) artifact removal task, EEGDiR performs best on both the training and testing sets. DHCT-GAN converges at approximately 75 epochs, while GCTNet converges at around 50 epochs, and the two achieve comparable performance.

Figure 3.

The MSE loss curve of DHCT-GAN, GCTNet, DuoCL, EEGDiR, RNNLSTM, and SimpleCNN in the training (solid lines) and testing (dashed lines) process in (a) EMG removal, (b) ECG removal, (c) EOG removal, and (d) EOG + EMG (mixed) removal tasks.

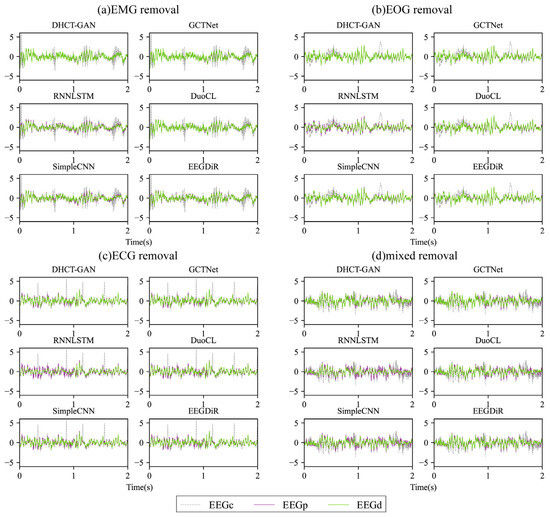

Figure 4 displays exemplary waveforms obtained after removing the four types of artifacts. Compared with EEGC, the EEGD waveforms produced by the various networks change considerably. DHCT-GAN, GCTNet, DuoCL, and EEGDiR are notably effective at mitigating EMG and EOG artifacts in the contaminated signals: although the EEGD waveform deviates slightly from the EEGP waveform, the two are highly similar and largely overlap, indicating successful reconstruction of the EEG signal. In the ECG and mixed artifact removal tasks, DHCT-GAN and GCTNet perform better than DuoCL and EEGDiR. In contrast, the signals reconstructed by RNNLSTM and SimpleCNN are substantially distorted after artifact removal. Moreover, the waveforms reconstructed by GCTNet, DuoCL, and EEGDiR show instances of peak-amplitude overshoot and local waveform offset, indicating a loss of specific waveform details. These findings show that DHCT-GAN can efficiently remove ECG, EOG, EMG, and mixed (EOG + EMG) artifacts and reconstruct the underlying EEG signals.

Figure 4.

The exemplary waveform obtained after removing (a) EMG, (b) ECG, (c) EOG, and (d) EOG + EMG (mixed) artifacts using various networks from contaminated EEG signals with an SNR of 0 dB. EEGP represents the pure EEG signal, used as the ground truth; EEGC represents the contaminated EEG signal; and EEGD represents the denoised EEG signal.
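The contaminated segments in this section are specified by their SNR (e.g., 0 dB in Figure 4). As a minimal sketch, assuming the EEGdenoiseNet-style mixing convention y = x + λ·n with SNR = 10·log10(RMS(x)/RMS(λ·n)) (whether these experiments used exactly this convention is an assumption), a clean segment x can be mixed with an artifact n at a target SNR as follows. The helper names, the 256 Hz sampling rate, and the toy sine and noise signals are hypothetical.

```python
import numpy as np

def rms(signal: np.ndarray) -> float:
    """Root mean square of a 1-D signal."""
    return float(np.sqrt(np.mean(signal ** 2)))

def mix_at_snr(clean: np.ndarray, artifact: np.ndarray, target_snr_db: float) -> np.ndarray:
    """Return clean + lam * artifact, with lam chosen so that
    10 * log10(RMS(clean) / RMS(lam * artifact)) equals target_snr_db (assumed convention)."""
    lam = rms(clean) / (rms(artifact) * 10 ** (target_snr_db / 10))
    return clean + lam * artifact

def compute_snr_db(clean: np.ndarray, contaminated: np.ndarray) -> float:
    """Measured SNR of a contaminated segment under the same RMS-ratio convention."""
    return 10 * np.log10(rms(clean) / rms(contaminated - clean))

rng = np.random.default_rng(0)
fs = 256                                    # assumed sampling rate (Hz)
t = np.arange(2 * fs) / fs                  # one 2-s segment
eeg_p = np.sin(2 * np.pi * 10 * t)          # toy "pure EEG": 10 Hz sine
emg = rng.standard_normal(t.size)           # toy broadband "EMG" artifact
eeg_c = mix_at_snr(eeg_p, emg, target_snr_db=0.0)  # contaminated segment at 0 dB
print(f"SNR = {compute_snr_db(eeg_p, eeg_c):.2f} dB")
```

Some works instead define SNR as 20·log10 of the amplitude ratio; under that convention the exponent in `mix_at_snr` would use `/ 20`.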

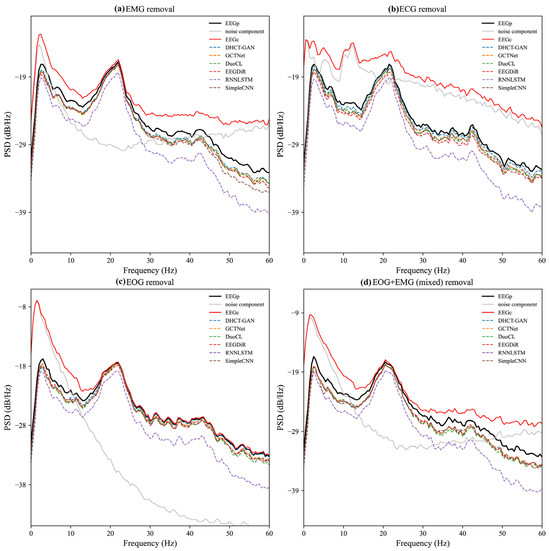

Figure 5 shows the PSD of the noise component (EEGC-EEGP). In the EMG artifact removal task, the noise component has substantial power at all frequencies, indicating that the energy of EMG artifacts is distributed relatively uniformly across the spectrum. In the mixed artifact removal task, the PSD of the noise component closely resembles that of EMG artifacts, suggesting that mixed (EOG + EMG) artifacts are dominated by EMG. In contrast, the PSD of ECG and EOG artifacts is concentrated in the low-frequency range (0–20 Hz) and decays rapidly at higher frequencies (Figure 5b,c).
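The contrast between low-frequency EOG/ECG power and broadband EMG power can be checked with a Welch PSD estimate. The sketch below is illustrative only and is not the authors' PSD pipeline: it assumes a 256 Hz sampling rate and 1-s Hann windows, and uses synthetic stand-ins for the artifacts (a 1 Hz sine as an EOG-like artifact and white noise as an EMG-like one).

```python
import numpy as np
from scipy.signal import welch

fs = 256  # assumed sampling rate (Hz)
rng = np.random.default_rng(1)
t = np.arange(2 * fs) / fs

eeg_p = np.sin(2 * np.pi * 10 * t)          # toy pure EEG
eog_like = 3 * np.sin(2 * np.pi * 1 * t)    # toy slow, high-amplitude ocular artifact
emg_like = rng.standard_normal(t.size)      # toy broadband muscle artifact

def psd(x):
    """Welch PSD with 1-s segments (SciPy's default Hann window, 50% overlap)."""
    return welch(x, fs=fs, nperseg=fs)

def low_freq_fraction(x, cutoff_hz=20.0):
    """Fraction of total PSD power at or below cutoff_hz."""
    f, pxx = psd(x)
    return pxx[f <= cutoff_hz].sum() / pxx.sum()

for name, artifact in [("EOG-like", eog_like), ("EMG-like", emg_like)]:
    eeg_c = eeg_p + artifact
    noise = eeg_c - eeg_p                   # noise component (EEGC - EEGP)
    print(f"{name}: {low_freq_fraction(noise):.2f} of power at or below 20 Hz")
```

Nearly all of the EOG-like power falls below 20 Hz, whereas white noise spreads its power evenly up to the Nyquist frequency, mirroring the qualitative pattern described for Figure 5.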

Figure 5.

PSD results of the pure EEG signal (EEGP, black solid line), the contaminated EEG signal (EEGC, red solid line), and the noise component (EEGC-EEGP, gray solid line). PSD results obtained after removing (a) EMG, (b) ECG, (c) EOG, and (d) EOG + EMG (mixed) artifacts from the contaminated EEG data by various networks (dashed lines).

Figure 5 also presents the PSDs of the signals denoised by each network alongside those of the pure EEG signal (EEGP) and the contaminated EEG signal (EEGC). The further a network's PSD line departs from the red line (EEGC), the more artifact power has been removed in the frequency domain; conversely, the closer it lies to the black line (EEGP), the less genuine EEG information has been lost. In the EMG and mixed artifact removal tasks, the PSD lines of DHCT-GAN, DuoCL, EEGDiR, GCTNet, and SimpleCNN align closely with the black line in certain frequency bands (e.g., 0–5 Hz and 20–25 Hz), demonstrating strong EMG denoising performance. In the ECG and EOG artifact removal tasks, the PSD lines of DHCT-GAN, GCTNet, DuoCL, EEGDiR, and SimpleCNN also align closely with EEGP across all frequencies, indicating effective denoising. In contrast, the PSD lines of RNNLSTM largely overlap with EEGC for all artifact types, indicating that RNNLSTM removes little artifact power in the frequency domain and performs comparatively poorly.

To measure the artifact removal effectiveness of these models more precisely, Table 1 reports six performance metrics (RRMSEf, RRMSEt, CC, η, SSIM, and MI), each averaged across all SNR levels. Lower RRMSEt and RRMSEf values indicate smaller deviations of the denoised EEG signals in the time and frequency domains, respectively. Likewise, higher CC, η, SSIM, and MI values indicate that more authentic EEG information is retained while artifacts are effectively eliminated. To assess the statistical significance of the CC, a p-value is calculated; a small p-value (<0.05) indicates that the observed correlation is statistically significant.
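For reference, RRMSEt, RRMSEf, and CC are commonly defined as in EEGdenoiseNet: the RMS of the error divided by the RMS of the ground truth, computed on the time series (RRMSEt) or on the PSDs (RRMSEf), and the Pearson correlation coefficient. The sketch below assumes those definitions; the PSD settings (a Welch estimate at an assumed 256 Hz), the function names, and the toy signals are hypothetical, and η, SSIM, and MI are omitted because their exact definitions are not given in this section.

```python
import numpy as np
from scipy.signal import welch
from scipy.stats import pearsonr

FS = 256  # assumed sampling rate (Hz)

def rms(x):
    return np.sqrt(np.mean(np.square(x)))

def rrmse_t(denoised, clean):
    """Temporal relative RMSE: RMS(denoised - clean) / RMS(clean)."""
    return rms(denoised - clean) / rms(clean)

def rrmse_f(denoised, clean, fs=FS):
    """Spectral relative RMSE: the same ratio computed on Welch PSDs."""
    _, p_d = welch(denoised, fs=fs, nperseg=fs)
    _, p_c = welch(clean, fs=fs, nperseg=fs)
    return rms(p_d - p_c) / rms(p_c)

def cc_with_p(denoised, clean):
    """Pearson correlation coefficient and its two-sided p-value."""
    r, p = pearsonr(denoised, clean)
    return float(r), float(p)

rng = np.random.default_rng(2)
t = np.arange(2 * FS) / FS
clean = np.sin(2 * np.pi * 10 * t)                   # toy ground-truth EEG
denoised = clean + 0.1 * rng.standard_normal(t.size)  # toy output with residual error
print(rrmse_t(denoised, clean), rrmse_f(denoised, clean), cc_with_p(denoised, clean))
```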

Table 1.

Average performance of artifact removal for EMG, EOG, ECG, and mixed (EOG + EMG) signals by all networks.

Compared with the other five models, DHCT-GAN achieves the best results on all metrics. In the EMG artifact removal task, DHCT-GAN achieves the lowest RRMSEt (0.3918) and RRMSEf (0.2837) and the highest CC (0.9201), η (82.35%), SSIM (0.7167), and MI (1.0031) among the six models. In the EOG artifact removal task, it achieves the lowest RRMSEt (0.2734) and RRMSEf (0.2024) and the highest CC (0.9624), η (91.80%), SSIM (0.8402), and MI (1.4290). For ECG artifacts, it again outperforms all other models, with the lowest RRMSEt (0.3111) and RRMSEf (0.2285) and the highest CC (0.9504), η (88.97%), SSIM (0.7796), and MI (1.2309). Finally, DHCT-GAN also surpasses the comparison methods in removing mixed artifacts, with the lowest RRMSEt (0.3975) and RRMSEf (0.2904) and the highest CC (0.9184), η (82.07%), SSIM (0.6996), and MI (1.0159).

Across the four artifact types, our network performs best at removing EOG artifacts, followed by ECG artifacts, while its performance on EMG and mixed artifacts is comparable. Notably, in terms of RRMSEf, RRMSEt, CC, η, SSIM, and MI, GCTNet comes close to our network in removing ECG and mixed artifacts, and EEGDiR comes close in removing mixed artifacts. In addition, the p-values corresponding to the correlation coefficients are all below 0.05, indicating that the correlation between the denoised signals and the ground-truth signals is statistically significant.

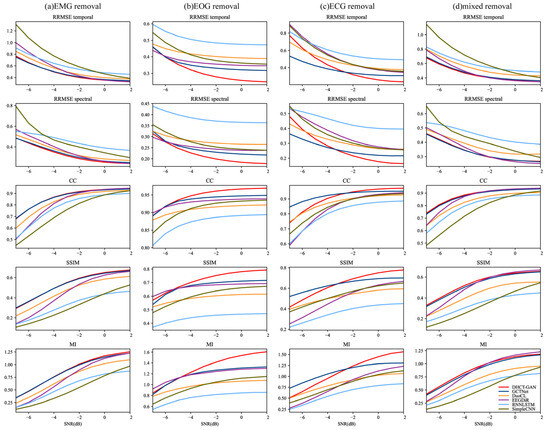

To compare these networks more comprehensively, we evaluated the performance metrics at each SNR level and assessed statistical significance with the Kruskal–Wallis H test. As Figure 6 shows, artifact removal performance generally improves as the SNR increases. For EOG artifact removal, our network achieves the highest CC, SSIM, and MI values and the lowest RRMSEf and RRMSEt values at SNR levels from −6 to 2 dB (p < 0.05 for all metrics). For EMG and mixed artifacts, our network and GCTNet perform similarly across all SNR levels, with a slight advantage for our network (p < 0.05 for all metrics), consistent with the results in Table 1. For ECG artifact removal, GCTNet outperforms our network at SNR levels from −7 to −3 dB (p < 0.05 for all metrics), whereas our network performs better from −2 to 2 dB (p < 0.05 for all metrics).
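The Kruskal–Wallis H test is a rank-based, nonparametric alternative to one-way ANOVA that tests whether several independent groups come from the same distribution. A sketch of how it could be applied to per-segment metric values from several networks at one SNR level, using `scipy.stats.kruskal`, is shown below; the network names and metric values are synthetic placeholders, not data from this study.

```python
import numpy as np
from scipy.stats import kruskal

def kruskal_across_networks(metric_by_network):
    """Kruskal-Wallis H test across networks for one metric at one SNR level.

    metric_by_network maps a network name to a 1-D array of per-segment values.
    """
    result = kruskal(*metric_by_network.values())
    return float(result.statistic), float(result.pvalue)

rng = np.random.default_rng(3)
# Synthetic per-segment CC values for three hypothetical networks (NOT study results).
cc_by_network = {
    "net_A": rng.normal(0.95, 0.02, size=100),
    "net_B": rng.normal(0.93, 0.02, size=100),
    "net_C": rng.normal(0.85, 0.05, size=100),
}
h_stat, p_value = kruskal_across_networks(cc_by_network)
print(f"H = {h_stat:.2f}, p = {p_value:.3g}")
```

A significant H only indicates that at least one network differs from the others; identifying which pairs differ requires a post hoc procedure such as Dunn's test.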

Figure 6.

Performance metric estimates (RRMSEf, RRMSEt, CC, SSIM, MI) at various SNR levels (from −7 to 2 dB) in (a) EMG, (b) ECG, (c) EOG, and (d) EOG + EMG (mixed) artifact removal tasks.

DHCT-GAN's performance varies more across SNR levels for ECG artifacts than for EOG, EMG, and mixed artifacts. This suggests that the degree of ECG contamination affects the network's performance more strongly than the degree of contamination by the other three artifact types.

3.2. Ablation Study

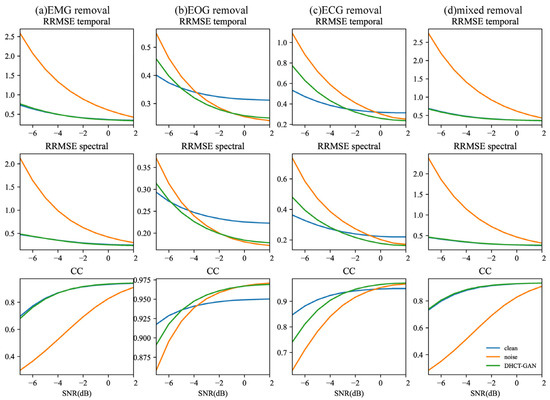

In DHCT-GAN, the first branch (the clean-learning branch) directly learns the distribution of clean EEG signals, while the second branch (the noise-learning branch) learns the characteristics of noise signals. To examine whether the dual-branch structure is necessary, we conducted the following ablation studies: (1) using only the clean-learning branch (clean); (2) using only the noise-learning branch (noise); and (3) using the proposed network, which combines the noise-learning and clean-learning branches (DHCT-GAN).
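How two such branch outputs might be combined can be illustrated with a toy gated fusion. The sketch below is hypothetical and is not the DHCT-GAN gating network: it assumes the clean-learning branch outputs an EEG estimate directly, the noise-learning branch outputs an artifact estimate that is subtracted from the contaminated input, and a sigmoid gate with fixed toy parameters (standing in for learned ones) blends the two EEG estimates sample by sample. Driving the gate to 1 or 0 recovers the clean-only and noise-only ablation variants.

```python
import numpy as np

def sigmoid(z):
    """Logistic function mapping any real input to (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def gated_fusion(contaminated, clean_est, noise_est, w_clean, w_noise, bias):
    """Hypothetical sample-wise gated fusion of two branch outputs.

    clean_est: EEG estimate from a clean-learning branch (predicts EEG directly).
    noise_est: artifact estimate from a noise-learning branch; subtracting it
               from the contaminated input gives a second EEG estimate.
    w_clean, w_noise, bias: toy stand-ins for learned gate parameters.
    Returns the fused EEG estimate and the gate values in (0, 1).
    """
    eeg_from_noise = contaminated - noise_est
    gate = sigmoid(w_clean * clean_est + w_noise * eeg_from_noise + bias)
    fused = gate * clean_est + (1.0 - gate) * eeg_from_noise
    return fused, gate

# Toy usage: each branch makes a small error of opposite sign.
t = np.linspace(0.0, 1.0, 256)
eeg = np.sin(2 * np.pi * 10 * t)             # toy ground-truth EEG
artifact = 0.8 * np.sin(2 * np.pi * 1 * t)   # toy low-frequency artifact
contaminated = eeg + artifact
clean_est = eeg + 0.05                       # clean branch overestimates by 0.05
noise_est = artifact + 0.05                  # noise branch overestimates the artifact
fused, gate = gated_fusion(contaminated, clean_est, noise_est, 0.0, 0.0, 0.0)
# With gate = 0.5 everywhere, the +0.05 and -0.05 errors cancel in this toy case.
print(np.abs(fused - eeg).max())
```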

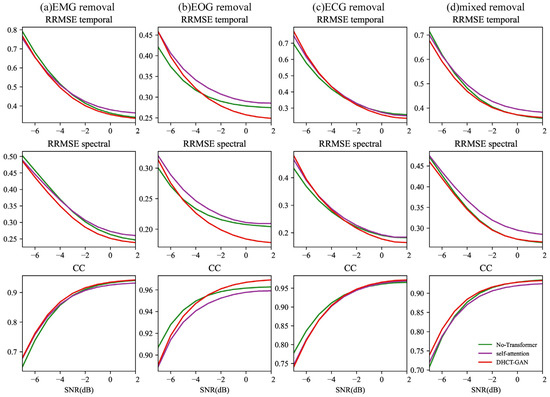

In this study, Transformers are integral to the DHCT-GAN architecture, but their specific contribution to hybrid EEG artifact removal has not yet been examined. To clarify the contribution of the Transformer component, we designed the following ablation experiments: (1) removing the Transformer module from the proposed network (No-Transformer); (2) replacing the Transformer module with a simple self-attention module (self-attention); and (3) the proposed network with hybrid CNN–Transformer modules (DHCT-GAN).
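For concreteness, the self-attention variant can be pictured as a single-head scaled dot-product self-attention layer (Vaswani et al. [59]) without the position-wise feed-forward sublayer, residual connections, and layer normalization that a full Transformer block adds around it. The NumPy sketch below assumes that reading; the exact module used in the ablation is not specified here, and the projection sizes and the optional `window` argument (which restricts each time step to a local neighborhood, one common way to model short-range dependencies) are illustrative assumptions.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v, window=None):
    """Single-head scaled dot-product self-attention over a sequence.

    x: (T, d_model) sequence of feature vectors (e.g., CNN features over time).
    w_q, w_k, w_v: (d_model, d_k) projection matrices.
    window: if given, step i attends only to steps j with |i - j| <= window
            (local attention); None gives global attention.
    Returns the attended features (T, d_k) and the attention weights (T, T).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    if window is not None:
        idx = np.arange(x.shape[0])
        outside = np.abs(idx[:, None] - idx[None, :]) > window
        scores = np.where(outside, -np.inf, scores)  # masked pairs get zero weight
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

rng = np.random.default_rng(4)
seq_len, d_model, d_k = 16, 8, 4
x = rng.standard_normal((seq_len, d_model))
w_q, w_k, w_v = (rng.standard_normal((d_model, d_k)) for _ in range(3))
out_global, attn_global = self_attention(x, w_q, w_k, w_v)
out_local, attn_local = self_attention(x, w_q, w_k, w_v, window=2)
print(out_global.shape, attn_local[0, :5].round(3))
```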

The results of the ablation experiments are presented in Table 2.

Table 2.

Performance metrics for ablation experiments.

DHCT-GAN integrates a noise-learning branch and a clean-learning branch. Compared with using either branch alone, the proposed network removes artifacts more effectively across the different artifact types. Used alone, the clean-learning branch performs well in removing ECG, EMG, and mixed artifacts, whereas the noise-learning branch removes EOG artifacts more effectively than the clean-learning branch and the other networks in Table 2. However, when dealing with EMG and mixed artifacts, the noise-learning branch alone yields markedly poorer denoising performance. This may be because the noise-learning branch tends to learn the distribution of low-frequency noise, whereas EMG power is spread across a broad frequency range.

For all four artifact removal tasks, the network without the Transformer module (No-Transformer) performs better than the network with hybrid CNN and self-attention modules. The proposed network, in turn, outperforms both the No-Transformer and the self-attention variants. These results highlight the contribution of the Transformer component to our network.

We also examined the performance metrics (RRMSEf, RRMSEt, and CC) of the ablation experiments at various SNR levels, as shown in Figure 7 and Figure 8. The Kruskal–Wallis H test yielded p < 0.05 for all metrics. In Figure 7, for EMG and mixed artifact removal, DHCT-GAN and the clean-learning branch alone perform similarly at equivalent SNR levels, and both outperform the noise-learning branch alone at all SNR levels. For EOG removal, the clean-learning branch alone performs best between −7 and −5 dB, whereas DHCT-GAN performs best between −5 and −1 dB; from 0 to 2 dB, however, DHCT-GAN is slightly inferior to the noise-learning branch alone. For ECG removal, the clean-learning branch alone performs best between −7 and −4 dB. The noise-learning branch alone removes ECG artifacts better than the clean-learning branch alone in the 0 to 2 dB range, and DHCT-GAN achieves the best performance between −2 and 2 dB.

Figure 7.

The results of the ablation experiment (using clean branch only or noise branch only) conducted at varying SNR levels (from −7 to 2 dB) in (a) EMG, (b) ECG, (c) EOG, and (d) EOG + EMG (mixed) artifact removal tasks.

Figure 8.

The results of the ablation experiment (remove the Transformer module or replace the Transformer module with a self-attention mechanism module) conducted at varying SNR levels (from −7 to 2 dB) in (a) EMG, (b) ECG, (c) EOG, and (d) EOG + EMG (mixed) artifact removal tasks.

In Figure 8, for EMG, ECG, and mixed artifact removal, the network without the Transformer module (CNN modules only) and the network with hybrid CNN and self-attention modules perform similarly at equivalent SNR levels. In the EMG and mixed artifact removal tasks, DHCT-GAN performs slightly better than both of these variants at all SNR levels. For EOG removal, DHCT-GAN performs best between −3 and 2 dB, and the CNN-only network outperforms the network with hybrid CNN and self-attention modules between −7 and −5 dB. For ECG artifact removal, the network without the Transformer module performs best between −7 and −4 dB.

4. Discussion

Artifact removal is crucial for the analysis and application of EEG data. In this study, we propose DHCT-GAN, a dual-branch network that integrates cross-domain knowledge for EEG denoising. To improve denoising performance, we introduced several key architectural improvements. First, the dual-branch architecture and the gated network module incorporate cross-domain knowledge of both EEG signals and artifacts, overcoming the limitations of traditional methods that learn only from clean signals. Second, the network combines the complementary strengths of CNNs and Transformers to capture both spatial and temporal characteristics of EEG signals and artifacts, enhancing the model’s adaptability to complex signal dynamics. Third, by integrating both global and local Transformers, the model captures long-term and short-term dependencies in the data, improving feature robustness and interpretability. Finally, the use of multiple discriminators in the GAN framework improves model stability.

Waveform analysis, power spectral density examination, and quantitative evaluation with six performance metrics show that DHCT-GAN preserves intrinsic brain activity after artifact removal. Compared with five state-of-the-art deep learning-based EEG denoising methods (SimpleCNN, LSTM, DuoCL, GCTNet, and EEGDiR), DHCT-GAN better preserves the physiological features of EEG while effectively mitigating multiple types of artifacts. Ablation experiments further show that the overall denoising performance of DHCT-GAN surpasses that of methods focusing solely on learning clean EEG signals. Overall, DHCT-GAN preserves essential neurophysiological characteristics while substantially reducing interference from different artifacts. This improvement could enhance the diagnostic accuracy of EEG in clinical settings and increase the robustness and reliability of BCI systems in decoding user intent.

However, this study has some limitations. First, although DHCT-GAN performs well on several public datasets, its performance on real-world recordings was not evaluated in this study, so its ability to generalize remains to be established. Second, all EEG and artifact signals were segmented into one-dimensional segments, so correlations among channels were not considered. Third, owing to its dual-branch architecture and GAN structure, DHCT-GAN has relatively slow inference and requires more computational power and memory than simpler networks.

Future work will therefore focus on enhancing the generalization capability of DHCT-GAN. Because this study primarily operates in the time domain, incorporating frequency-domain information could further improve the performance of DHCT-GAN. Future work could also explore how to integrate the denoising model with existing EEG devices and systems, for example by developing APIs or using standard interfaces such as OpenEEG or BIDS to facilitate data transmission and interaction between the devices and the model. Finally, given the potential of graph neural networks for denoising tasks, their application in this context is also worth exploring.

5. Conclusions

This study proposes DHCT-GAN, a dual-branch network that integrates cross-domain knowledge to enhance EEG denoising. Waveform analysis, power spectral density examination, and quantitative evaluation with performance metrics show that DHCT-GAN preserves intrinsic brain activity after removing various types of artifacts better than recent state-of-the-art methods. Furthermore, ablation experiments show that the hybrid model outperforms single-branch models in artifact removal, underscoring the crucial role of artifact knowledge constraints in improving denoising effectiveness. In conclusion, this study establishes DHCT-GAN as a robust method for artifact removal in EEG signals that effectively leverages cross-domain knowledge to achieve state-of-the-art results.

Author Contributions

Conceptualization, Y.C., Z.M. and D.H.; methodology, Y.C. and Z.M.; software, Y.C. and Z.M.; validation, Y.C. and Z.M.; formal analysis, Y.C. and Z.M.; investigation, Y.C. and Z.M.; resources, D.H.; data curation, Y.C. and Z.M.; writing—original draft preparation, Y.C. and Z.M.; writing—review and editing, Y.C., Z.M. and D.H.; visualization, Y.C. and Z.M.; supervision, Y.C.; project administration, Y.C.; funding acquisition, D.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program of China (Grant No. 2023YFB3002404), and the Guangdong High Level Innovation Research Institute (Grant No. 2021B0909050004).

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data presented in the study are openly available. EEGdenoiseNet dataset: https://github.com/ncclabsustech/EEGdenoiseNet, accessed on 11 December 2023; MIT-BIH Arrhythmia Database: https://physionet.org/content/mitdb/1.0.0/, accessed on 1 January 2024; the semi-simulated EEG/EOG dataset: https://data.mendeley.com/datasets/wb6yvr725d/1, accessed on 20 January 2024.

Conflicts of Interest

The authors declare no conflicts of interest.

Glossary

Transformer: A deep learning architecture widely used in natural language processing and computer vision tasks. It relies on a mechanism called self-attention to process sequential data efficiently and capture long-range dependencies. Transformers have revolutionized fields such as machine translation and image recognition.

GAN (Generative Adversarial Network): A type of machine learning model composed of two neural networks, a generator and a discriminator, that compete against each other. The generator aims to create data that mimic real samples, while the discriminator evaluates the authenticity of the generated data. This adversarial process improves the generator’s ability to produce realistic outputs.

Adversarial Training: A training strategy where a model is exposed to adversarial examples, i.e., inputs designed to challenge the model’s predictions. This approach improves robustness by teaching the model to handle such challenging scenarios, often used in conjunction with GANs or to defend against attacks in security-sensitive applications.

References

- Casson, A.J.; Yates, D.C.; Smith, S.J.; Duncan, J.S.; Rodriguez-Villegas, E. Wearable electroencephalography. IEEE Eng. Med. Biol. Mag. 2010, 29, 44–56. [Google Scholar] [CrossRef]

- Henry, J.C. Electroencephalography: Basic Principles, Clinical Applications, and Related Fields, Fifth Edition. Neurology 2006, 67, 2092-a. [Google Scholar] [CrossRef]

- Alarcao, S.M.; Fonseca, M.J. Emotions recognition using EEG signals: A survey. IEEE Trans. Affect. Comput. 2017, 10, 374–393. [Google Scholar] [CrossRef]

- Zhang, J.; Yin, Z.; Chen, P.; Nichele, S. Emotion recognition using multi-modal data and machine learning techniques: A tutorial and review. Inf. Fusion 2020, 59, 103–126. [Google Scholar] [CrossRef]

- Sikander, G.; Anwar, S. Driver Fatigue Detection Systems: A Review. IEEE Trans. Intell. Transp. Syst. 2019, 20, 2339–2352. [Google Scholar] [CrossRef]

- Lal, S.K.L.; Craig, A.; Boord, P.; Kirkup, L.; Nguyen, H. Development of an algorithm for an EEG-based driver fatigue countermeasure. J. Saf. Res. 2003, 34, 321–328. [Google Scholar] [CrossRef] [PubMed]

- Abiri, R.; Borhani, S.; Sellers, E.W.; Jiang, Y.; Zhao, X. A comprehensive review of EEG-based brain–computer interface paradigms. J. Neural Eng. 2019, 16, 011001. [Google Scholar] [CrossRef]

- Shih, J.J.; Krusienski, D.J.; Wolpaw, J.R. Brain-Computer Interfaces in Medicine. Mayo Clin. Proc. 2012, 87, 268–279. [Google Scholar] [CrossRef]

- Lebedev, M.A.; Nicolelis, M.A. Brain-machine interfaces: From basic science to neuroprostheses and neurorehabilitation. Physiol. Rev. 2017, 97, 767–837. [Google Scholar] [CrossRef]

- Britton, J.W.; Frey, L.C.; Hopp, J.L.; Korb, P.; Koubeissi, M.Z.; Lievens, W.E.; Pestana-Knight, E.M.; St Louis, E.K. Electroencephalography (EEG): An Introductory Text and Atlas of Normal and Abnormal Findings in Adults, Children, and Infants; American Epilepsy Society: Chicago, IL, USA, 2016. [Google Scholar]

- Hartmann, M.M.; Schindler, K.; Gebbink, T.A.; Gritsch, G.; Kluge, T. PureEEG: Automatic EEG artifact removal for epilepsy monitoring. Neurophysiol. Clin. Clin. Neurophysiol. 2014, 44, 479–490. [Google Scholar] [CrossRef]

- Jiang, X.; Bian, G.-B.; Tian, Z. Removal of artifacts from EEG signals: A review. Sensors 2019, 19, 987. [Google Scholar] [CrossRef]

- Radüntz, T.; Scouten, J.; Hochmuth, O.; Meffert, B. Automated EEG artifact elimination by applying machine learning algorithms to ICA-based features. J. Neural Eng. 2017, 14, 046004. [Google Scholar] [CrossRef] [PubMed]

- Sazgar, M.; Young, M.G. Absolute Epilepsy and EEG Rotation Review: Essentials for Trainees; Springer: Berlin/Heidelberg, Germany, 2019; ISBN 978-3-030-03511-2. [Google Scholar]

- Liu, A.; Liu, Q.; Zhang, X.; Chen, X.; Chen, X. Muscle artifact removal toward mobile SSVEP-based BCI: A comparative study. IEEE Trans. Instrum. Meas. 2021, 70, 1–12. [Google Scholar] [CrossRef]

- Labate, D.; La Foresta, F.; Mammone, N.; Morabito, F.C. Effects of artifacts rejection on EEG complexity in Alzheimer’s disease. In Advances in Neural Networks: Computational and Theoretical Issues; Springer: Berlin/Heidelberg, Germany, 2015; pp. 129–136. [Google Scholar]

- Sawangjai, P.; Trakulruangroj, M.; Boonnag, C.; Piriyajitakonkij, M.; Tripathy, R.K.; Sudhawiyangkul, T.; Wilaiprasitporn, T. EEGANet: Removal of ocular artifacts from the EEG signal using generative adversarial networks. IEEE J. Biomed. Health Inform. 2021, 26, 4913–4924. [Google Scholar] [CrossRef] [PubMed]

- Ivaldi, M.; Giacometti, L.; Conversi, D. Quantitative Electroencephalography: Cortical Responses under Different Postural Conditions. Signals 2023, 4, 708–724. [Google Scholar] [CrossRef]

- Gratton, G. Dealing with artifacts: The EOG contamination of the event-related brain potential. Behav. Res. Methods Instrum. Comput. 1998, 30, 44–53. [Google Scholar] [CrossRef]

- Croft, R.J.; Barry, R.J. Removal of ocular artifact from the EEG: A review. Neurophysiol. Clin. Clin. Neurophysiol. 2000, 30, 5–19. [Google Scholar] [CrossRef]

- Chen, X.; Liu, A.; Chiang, J.; Wang, Z.J.; McKeown, M.J.; Ward, R.K. Removing muscle artifacts from EEG data: Multichannel or single-channel techniques? IEEE Sens. J. 2015, 16, 1986–1997. [Google Scholar] [CrossRef]

- Klados, M.A.; Papadelis, C.; Braun, C.; Bamidis, P.D. REG-ICA: A hybrid methodology combining blind source separation and regression techniques for the rejection of ocular artifacts. Biomed. Signal Process. Control. 2011, 6, 291–300. [Google Scholar] [CrossRef]

- Marque, C.; Bisch, C.; Dantas, R.; Elayoubi, S.; Brosse, V.; Perot, C. Adaptive filtering for ECG rejection from surface EMG recordings. J. Electromyogr. Kinesiol. 2005, 15, 310–315. [Google Scholar] [CrossRef]

- Somers, B.; Bertrand, A. Removal of eye blink artifacts in wireless EEG sensor networks using reduced-bandwidth canonical correlation analysis. J. Neural Eng. 2016, 13, 066008. [Google Scholar] [CrossRef] [PubMed]

- Kumar, P.S.; Arumuganathan, R.; Sivakumar, K.; Vimal, C. Removal of ocular artifacts in the EEG through wavelet transform without using an EOG reference channel. Int. J. Open Probl. Compt. Math 2008, 1, 188–200. [Google Scholar]

- Safieddine, D.; Kachenoura, A.; Albera, L.; Birot, G.; Karfoul, A.; Pasnicu, A.; Biraben, A.; Wendling, F.; Senhadji, L.; Merlet, I. Removal of muscle artifact from EEG data: Comparison between stochastic (ICA and CCA) and deterministic (EMD and wavelet-based) approaches. EURASIP J. Adv. Signal Process. 2012, 2012, 127. [Google Scholar] [CrossRef]

- Choi, S.; Cichocki, A.; Park, H.-M.; Lee, S.-Y. Blind source separation and independent component analysis: A review. Neural Inf. Process.-Lett. Rev. 2005, 6, 1–57. [Google Scholar]

- Berg, P.; Scherg, M. Dipole modelling of eye activity and its application to the removal of eye artefacts from the EEG and MEG. Clin. Phys. Physiol. Meas. 1991, 12, 49. [Google Scholar] [CrossRef] [PubMed]

- Casarotto, S.; Bianchi, A.M.; Cerutti, S.; Chiarenza, G.A. Principal component analysis for reduction of ocular artefacts in event-related potentials of normal and dyslexic children. Clin. Neurophysiol. 2004, 115, 609–619. [Google Scholar] [CrossRef]

- Flexer, A.; Bauer, H.; Pripfl, J.; Dorffner, G. Using ICA for removal of ocular artifacts in EEG recorded from blind subjects. Neural Netw. 2005, 18, 998–1005. [Google Scholar] [CrossRef] [PubMed]

- James, C.J.; Hesse, C.W. Independent component analysis for biomedical signals. Physiol. Meas. 2004, 26, R15–R39. [Google Scholar] [CrossRef]

- Zhou, W.; Gotman, J. Automatic removal of eye movement artifacts from the EEG using ICA and the dipole model. Prog. Nat. Sci. 2009, 19, 1165–1170. [Google Scholar] [CrossRef]

- De Clercq, W.; Vergult, A.; Vanrumste, B.; Van Paesschen, W.; Van Huffel, S. Canonical correlation analysis applied to remove muscle artifacts from the electroencephalogram. IEEE Trans. Biomed. Eng. 2006, 53, 2583–2587. [Google Scholar] [CrossRef] [PubMed]

- Borga, M.; Friman, O.; Lundberg, P.; Knutsson, H. A canonical correlation approach to exploratory data analysis in fMRI. In Proceedings of the ISMRM Annual Meeting, Honolulu, HI, USA, 18–24 May 2002. [Google Scholar]

- De Vos, M.; Riès, S.; Vanderperren, K.; Vanrumste, B.; Alario, F.-X.; Van Huffel, S.; Burle, B. Removal of Muscle Artifacts from EEG Recordings of Spoken Language Production. Neuroinformatics 2010, 8, 135–150. [Google Scholar] [CrossRef] [PubMed]

- Chen, X.; Chen, Q.; Zhang, Y.; Wang, Z.J. A novel EEMD-CCA approach to removing muscle artifacts for pervasive EEG. IEEE Sens. J. 2018, 19, 8420–8431. [Google Scholar] [CrossRef]

- Wang, G.; Teng, C.; Li, K.; Zhang, Z.; Yan, X. The removal of EOG artifacts from EEG signals using independent component analysis and multivariate empirical mode decomposition. IEEE J. Biomed. Health Inform. 2015, 20, 1301–1308. [Google Scholar] [CrossRef] [PubMed]

- Sweeney, K.T.; McLoone, S.F.; Ward, T.E. The use of ensemble empirical mode decomposition with canonical correlation analysis as a novel artifact removal technique. IEEE Trans. Biomed. Eng. 2012, 60, 97–105. [Google Scholar] [CrossRef] [PubMed]

- Mahajan, R.; Morshed, B.I. Unsupervised eye blink artifact denoising of EEG data with modified multiscale sample entropy, kurtosis, and wavelet-ICA. IEEE J. Biomed. Health Inform. 2014, 19, 158–165. [Google Scholar] [CrossRef]

- Mammone, N.; Morabito, F.C. Enhanced automatic wavelet independent component analysis for electroencephalographic artifact removal. Entropy 2014, 16, 6553–6572. [Google Scholar] [CrossRef]

- Hamaneh, M.B.; Chitravas, N.; Kaiboriboon, K.; Lhatoo, S.D.; Loparo, K.A. Automated removal of EKG artifact from EEG data using independent component analysis and continuous wavelet transformation. IEEE Trans. Biomed. Eng. 2013, 61, 1634–1641. [Google Scholar] [CrossRef]

- Urigüen, J.A.; Garcia-Zapirain, B. EEG artifact removal—State-of-the-art and guidelines. J. Neural Eng. 2015, 12, 031001. [Google Scholar] [CrossRef]

- Motamedi-Fakhr, S.; Moshrefi-Torbati, M.; Hill, M.; Hill, C.M.; White, P.R. Signal processing techniques applied to human sleep EEG signals—A review. Biomed. Signal Process. Control. 2014, 10, 21–33. [Google Scholar] [CrossRef]

- Tanner, D.; Morgan-Short, K.; Luck, S.J. How inappropriate high-pass filters can produce artifactual effects and incorrect conclusions in ERP studies of language and cognition. Psychophysiology 2015, 52, 997–1009. [Google Scholar] [CrossRef]

- Kim, H.; Luo, J.; Chu, S.; Cannard, C.; Hoffmann, S.; Miyakoshi, M. ICA’s bug: How ghost ICs emerge from effective rank deficiency caused by EEG electrode interpolation and incorrect re-referencing. Front. Signal Process. 2023, 3, 1064138. [Google Scholar] [CrossRef]

- Manjunath, N.K.; Paneliya, H.; Hosseini, M.; Hairston, W.D.; Mohsenin, T. A low-power LSTM processor for multi-channel brain EEG artifact detection. In Proceedings of the 2020 21st International Symposium on Quality Electronic Design (ISQED), Santa Clara, CA, USA, 25–26 March 2020; pp. 105–110. [Google Scholar]

- Nejedly, P.; Cimbalnik, J.; Klimes, P.; Plesinger, F.; Halamek, J.; Kremen, V.; Viscor, I.; Brinkmann, B.H.; Pail, M.; Brazdil, M. Intracerebral EEG artifact identification using convolutional neural networks. Neuroinformatics 2019, 17, 225–234. [Google Scholar] [CrossRef] [PubMed]

- Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.-A.; Bottou, L. Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408. [Google Scholar]

- Leite, N.M.N.; Pereira, E.T.; Gurjao, E.C.; Veloso, L.R. Deep convolutional autoencoder for EEG noise filtering. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 2605–2612. [Google Scholar]

- Xiong, W.; Ma, L.; Li, H. A general dual-pathway network for EEG denoising. Front. Neurosci. 2024, 17, 1258024. [Google Scholar] [CrossRef]

- Sun, W.; Su, Y.; Wu, X.; Wu, X. A novel end-to-end 1D-ResCNN model to remove artifact from EEG signals. Neurocomputing 2020, 404, 108–121. [Google Scholar] [CrossRef]

- Xiong, J.; Meng, X.-L.; Chen, Z.-Q.; Wang, C.-S.; Zhang, F.-Q.; Grau, A.; Chen, Y. One-Dimensional EEG Artifact Removal Network Based on Convolutional Neural Networks. J. Netw. Intell. 2024, 9, 142–159. [Google Scholar]

- Gao, T.; Chen, D.; Tang, Y.; Ming, Z.; Li, X. EEG Reconstruction With a Dual-Scale CNN-LSTM Model for Deep Artifact Removal. IEEE J. Biomed. Health Inform. 2023, 27, 1283–1294. [Google Scholar] [CrossRef]

- Wu, L.; Liu, A.; Li, C.; Chen, X. Enhancing EEG artifact removal through neural architecture search with large kernels. Adv. Eng. Inform. 2024, 62, 102831. [Google Scholar] [CrossRef]

- Huang, J.; Wang, C.; Zhao, W.; Grau, A.; Xue, X.; Zhang, F. LTDNet-EEG: A Lightweight Network of Portable/Wearable Devices for Real-Time EEG Signal Denoising. IEEE Trans. Consum. Electron. 2024, 70, 5561–5575. [Google Scholar] [CrossRef]

- Pei, Y.; Xu, J.; Chen, Q.; Wang, C.; Yu, F.; Zhang, L.; Luo, W. DTP-Net: Learning to Reconstruct EEG Signals in Time-Frequency Domain by Multi-scale Feature Reuse. IEEE J. Biomed. Health Inform. 2024, 28, 2662–2673. [Google Scholar] [CrossRef]

- Wang, B.; Deng, F.; Jiang, P. EEGDiR: Electroencephalogram denoising network for temporal information storage and global modeling through Retentive Network. Comput. Biol. Med. 2024, 177, 108626. [Google Scholar] [CrossRef]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Brophy, E.; Redmond, P.; Fleury, A.; De Vos, M.; Boylan, G.; Ward, T. Denoising EEG signals for Real-World BCI Applications using GANs. Front. Neuroergonomics 2022, 2, 805573.

- Gandhi, S.; Oates, T.; Mohsenin, T.; Hairston, D. Denoising Time Series Data Using Asymmetric Generative Adversarial Networks. In Advances in Knowledge Discovery and Data Mining; Phung, D., Tseng, V.S., Webb, G.I., Ho, B., Ganji, M., Rashidi, L., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2018; Volume 10939, pp. 285–296. ISBN 978-3-319-93039-8.

- Sumiya, Y.; Horie, K.; Shiokawa, H.; Kitagawa, H. NR-GAN: Noise Reduction GAN for Mice Electroencephalogram Signals. In Proceedings of the 2019 4th International Conference on Biomedical Imaging, Signal Processing, Nagoya, Japan, 17 October 2019; pp. 94–101.

- Dong, Y.; Tang, X.; Li, Q.; Wang, Y.; Jiang, N.; Tian, L.; Zheng, Y.; Li, X.; Zhao, S.; Li, G. An Approach for EEG Denoising Based on Wasserstein Generative Adversarial Network. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 3524–3534.

- Lin, T.; Wang, Y.; Liu, X.; Qiu, X. A survey of transformers. AI Open 2022, 3, 111–132.

- Chen, J.; Pi, D.; Jiang, X.; Xu, Y.; Chen, Y.; Wang, X. Denosieformer: A Transformer based Approach for Single-Channel EEG Artifact Removal. IEEE Trans. Instrum. Meas. 2023, 73, 2501116.

- Pfeffer, M.A.; Ling, S.S.H.; Wong, J.K.W. Exploring the Frontier: Transformer-Based Models in EEG Signal Analysis for Brain-Computer Interfaces. Comput. Biol. Med. 2024, 178, 108705.

- Pu, X.; Yi, P.; Chen, K.; Ma, Z.; Zhao, D.; Ren, Y. EEGDnet: Fusing non-local and local self-similarity for EEG signal denoising with transformer. Comput. Biol. Med. 2022, 151, 106248.

- Yin, J.; Liu, A.; Li, C.; Qian, R.; Chen, X. A GAN Guided Parallel CNN and Transformer Network for EEG Denoising. IEEE J. Biomed. Health Inform. 2023, 27, 1–12.

- Huang, X.; Li, C.; Liu, A.; Qian, R.; Chen, X. EEGDfus: A Conditional Diffusion Model for Fine-Grained EEG Denoising. IEEE J. Biomed. Health Inform. 2024, 1–13.

- Zhang, H.; Zhao, M.; Wei, C.; Mantini, D.; Li, Z.; Liu, Q. EEGdenoiseNet: A benchmark dataset for deep learning solutions of EEG denoising. J. Neural Eng. 2021, 18, 056057.

- Moody, G.B.; Mark, R.G. The impact of the MIT-BIH arrhythmia database. IEEE Eng. Med. Biol. Mag. 2001, 20, 45–50.

- Klados, M.A.; Bamidis, P.D. A semi-simulated EEG/EOG dataset for the comparison of EOG artifact rejection techniques. Data Brief 2016, 8, 1004–1006.

- Dora, M.; Holcman, D. Adaptive single-channel EEG artifact removal with applications to clinical monitoring. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 286–295.

- Cho, H.; Ahn, M.; Ahn, S.; Kwon, M.; Jun, S.C. EEG datasets for motor imagery brain–computer interface. GigaScience 2017, 6, gix034.

- Kanoga, S.; Nakanishi, M.; Mitsukura, Y. Assessing the effects of voluntary and involuntary eyeblinks in independent components of electroencephalogram. Neurocomputing 2016, 193, 20–32.

- Fatourechi, M.; Bashashati, A.; Ward, R.K.; Birch, G.E. EMG and EOG artifacts in brain computer interface systems: A survey. Clin. Neurophysiol. 2007, 118, 480–494.

- Naeem, M.; Brunner, C.; Leeb, R.; Graimann, B.; Pfurtscheller, G. Seperability of four-class motor imagery data using independent components analysis. J. Neural Eng. 2006, 3, 208.

- Schlögl, A.; Keinrath, C.; Zimmermann, D.; Scherer, R.; Leeb, R.; Pfurtscheller, G. A fully automated correction method of EOG artifacts in EEG recordings. Clin. Neurophysiol. 2007, 118, 98–104.

- Schlögl, A.; Kronegg, J.; Huggins, J.E.; Mason, S.G. Evaluation criteria for BCI research. In Toward Brain-Computer Interfacing; MIT Press: Cambridge, MA, USA, 2007.

- Rantanen, V.; Ilves, M.; Vehkaoja, A.; Kontunen, A.; Lylykangas, J.; Mäkelä, E.; Rautiainen, M.; Surakka, V.; Lekkala, J. A survey on the feasibility of surface EMG in facial pacing. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 1688–1691.

- Brunner, C.; Naeem, M.; Leeb, R.; Graimann, B.; Pfurtscheller, G. Spatial filtering and selection of optimized components in four class motor imagery EEG data using independent components analysis. Pattern Recognit. Lett. 2007, 28, 957–964.

- Pion-Tonachini, L.; Kreutz-Delgado, K.; Makeig, S. ICLabel: An automated electroencephalographic independent component classifier, dataset, and website. NeuroImage 2019, 198, 181–197.

- Elbert, T.; Lutzenberger, W.; Rockstroh, B.; Birbaumer, N. Removal of ocular artifacts from the EEG—A biophysical approach to the EOG. Electroencephalogr. Clin. Neurophysiol. 1985, 60, 455–463.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).