Abstract

In the field of intelligent connected vehicles, the precise and real-time identification of speed bumps is critically important for the safety of autonomous driving. To address the issue that existing visual perception algorithms struggle to simultaneously maintain identification accuracy and real-time performance amidst image distortion and complex environmental conditions, this study proposes an enhanced lightweight neural network framework, YOLOv5-FPNet. This framework strengthens perception capabilities in two key phases: feature extraction and loss constraint. Firstly, FPNet, based on FasterNet and Dynamic Snake Convolution, is developed to adaptively extract structural features of distorted speed bumps with accuracy. Subsequently, the C3-SFC module is proposed to augment the adaptability of the neck and head components to distorted features. Furthermore, the SimAM attention mechanism is embedded within the backbone to enhance the ability of key feature extraction. Finally, an adaptive loss function, Inner–WiseIoU, based on a dynamic non-monotonic focusing mechanism, is designed to improve the generalization and fitting ability of bounding boxes. Experimental evaluations on a custom speed bumps dataset demonstrate the superior performance of FPNet, with significant improvements in key metrics such as the mAP, mAP50_95, and FPS by 38.76%, 143.15%, and 51.23%, respectively, compared to conventional lightweight neural networks. Ablation studies confirm the effectiveness of the proposed improvements. This research provides a fast and accurate speed bump detection solution for autonomous vehicles, offering theoretical insights for obstacle recognition in intelligent vehicle systems.

1. Introduction

In modern transportation systems, speed bumps are used for traffic management to slow down vehicles and improve pedestrian safety [1]. However, the target identification algorithms of autonomous driving systems often overlook speed bumps, so the vehicle drives over them at high speed. This presents a risk of rollover and collision, endangering vehicle occupants and pedestrians [2], and it also reduces occupant comfort [3]. Therefore, it is crucial for autonomous vehicles to identify speed bumps accurately and promptly.

Conventional speed bump detection typically relies on mathematical models of vehicle dynamics or on complex image processing algorithms and costly sensors [4,5]. These methods either lack look-ahead capability, because they detect only the road surface currently under the vehicle, or require substantial computational resources, making it difficult to meet the real-time performance requirements of autonomous driving systems. In recent years, deep learning techniques have developed rapidly and provided new solutions for speed bump identification. Specifically, methods based on convolutional neural networks (CNNs) have demonstrated good detection performance in a variety of traffic scenarios. Deep learning-based target identification algorithms can be further divided into two-stage methods and one-stage methods [6,7].

Representative two-stage object detection algorithms include the R-CNN series [8,9,10,11,12], which first extract candidate regions from an image and then classify those regions. This category of algorithms achieves high accuracy in target identification tasks, reaching 85.6% on the VOC2007 dataset, but because inference is performed in two passes, processing speed suffers, reaching only 15 frames per second (FPS). In contrast, single-stage object detection algorithms, such as You Only Look Once (YOLO) [13] and Single Shot Multibox Detector (SSD) [14], adopt a more direct strategy: they predict target boxes and their corresponding categories at multiple locations in the image in a single inference pass. This improves computational efficiency and dramatically increases processing speed, achieving up to 100 FPS. However, detection accuracy falls slightly short of the two-stage methods, at only 75.1% on the VOC2007 dataset.

However, deep learning-based models often have a large number of parameters and high computational complexity, which is a significant constraint for autonomous driving systems with limited computational capability. Existing pavement identification algorithms commonly use semantic segmentation, which accurately identifies the boundaries between obstacles and drivable areas on the road. However, their FPS is limited to 40, resulting in poor real-time performance. To balance real-time performance and accuracy in target identification, lightweight models for autonomous driving systems have become an important research area, and scholars have proposed solutions such as PVANet [15] and SSDRNet [16]. These lightweight models aim to balance computational resources with speed and accuracy in identification tasks. Although such studies address limited computational resources, maintaining a balance between detection speed and accuracy remains challenging. Therefore, designing a new high-precision lightweight neural network model that can be deployed on edge devices holds significant value, both in research and in practice.

Due to its computational efficiency and ease of use, the YOLO framework has gained widespread attention in academia and industry as a modeling tool for target identification. The framework is composed of three parts: the backbone, neck, and head, each containing different modules. An attention mechanism is employed to mimic the way humans focus on important features while ignoring distracting information. The loss function is a method in deep learning that is used to evaluate the differences between the predictions of a model and the actual results. Through the loss function, the parameters of the model can be constantly adjusted, so that the model’s predictions are closer to the real label.

To address the deficiencies of neural network models in detecting speed bumps, this study proposes an enhanced lightweight object detection network model, YOLOv5–Faster&Preciser Net (YOLOv5-FPNet). The development of the model is based on the following: Firstly, the architecture integrates FasterNet with Dynamic Snake Convolution, effectively reducing the parameter count and floating-point operations of the backbone network while significantly enhancing detection accuracy. Subsequently, an innovative modification to the C3 module in the head is proposed as the C3–SerpentFlowConv (C3-SFC) module. The aim is to enhance the ability of the neck and head components to adapt to distorted features. Furthermore, a parameter-free attention mechanism SimAM is embedded in the backbone network and the large target detection head, with the aim of enhancing the feature extraction capability of the backbone and the detection head. Finally, the fitting and generalization capabilities of the model for predicted targets are bolstered through the introduction of Inner–WIoU. Through ablation and comparison experiments, FPNet is verified to have better performance on speed bump detection tasks, demonstrating its significant advantages in the field of target detection.

In summary, the principal contributions of this study are as follows:

- i. A deformable lightweight neural network model, FPNet, is introduced, which is capable of more effectively addressing detection failures caused by image distortions.

- ii. The C3-SFC module is proposed to enhance the feature expression capability of the C3 module. Furthermore, the SimAM attention mechanism is embedded within both the backbone network and the large-object detection head to improve the feature extraction capability of the model and suppress the interference caused by background noise.

- iii. Inner–WiseIoU is proposed to optimize the fitting and generalization capabilities of the anchor box.

2. Related Works

Given that the FPNet proposed in this study is a lightweight neural network model tailored for real-time speed bump detection, this section provides a review of speed bump detection methodologies and existing lightweight neural network models.

2.1. Speed Bump Detection Methods

Currently, speed bump identification methods fall into two groups: those based on mathematical models of vehicle dynamics and those based on deep learning. Methods that utilize mathematical models of vehicle dynamics achieve speed bump identification by analyzing the dynamic response of vehicles under various road conditions, including acceleration, vibration, and other relevant characteristics. These methods include an artificial intelligence-based pavement identification method proposed by Qin et al. [17,18]; a logic model for detecting speed bumps using genetic algorithms proposed by Celaya-Padilla et al. [19]; a method for estimating pavement elevation using fuzzy logic proposed by Qi et al. [20]; and a method for applying a short-time Fourier transform to estimate pavement elevation proposed by Domínguez et al. [21]. The methods mentioned above can achieve an accuracy of over 97%. However, they can only identify speed bumps in a quasi-real-time manner: a speed bump can only be identified accurately after the vehicle has passed over it, so there is a time delay. As speed bumps are an unconventional road condition, quasi-real-time identification cannot satisfy the needs of autonomous vehicles for sensing and decision-making ahead of time and for timely execution.

Compared to the methods mentioned above, deep learning-based speed bump identification methods can learn the feature representation of speed bumps automatically, which gives them better generalization ability. There are two main categories for identifying speed bumps using CNNs: semantic segmentation-based and anchor box-based. Semantic segmentation-based methods include SegNet, first proposed by Vijay et al. [22] and applied to speed bump identification by Arunpriyan et al. [23]; a method based on CNNs, Long Short-Term Memory Networks (LSTMs), and Reservoir Computing Models proposed by Varona et al. [24]; a speed bump identification algorithm for Microsoft Kinect sensors proposed by Lion et al. [25]; and a Gaussian filtering-based speed bump identification method proposed by Devapriya et al. [26]. Anchor box-based methods include a speed bump detection method combining LiDAR and a camera proposed by Yun et al. [27]; a GPU and ZED stereo camera-based speed bump identification method proposed by Varma et al. [28]; and a model for speed bump detection based on deep learning and computer vision proposed by Dewangan et al. [29]. All of these methods can achieve approximately 85% accuracy.

It should be noted that while the semantic segmentation-based approach achieves a maximum accuracy of 89%, the computationally intensive nature of pixel-level segmentation restricts processing speed to a maximum of only 30 FPS. The anchor box-based speed bump identification algorithms perform slightly better in terms of speed, reaching 30–60 FPS, but suffer from poor anchor box convergence and limited detection distance.

In advanced autonomous driving systems, a processing speed of 100 FPS is considered standard to effectively capture dynamic changes [30]. This ensures that an autonomous driving vehicle can make decisions and react at the millisecond level, significantly improving safety. However, CNN-based methods do not meet the stringent requirements for millisecond-level decision making in autonomous driving systems due to their low processing speeds.

2.2. Lightweight Neural Network Model

In 2016, Iandola et al. [31] proposed SqueezeNet, which introduces the Fire module to perform Squeeze and Expand operations. The Squeeze operation reduces the number of channels using a 1 × 1 convolution kernel. The Expand operation enhances the model’s expressive power by combining a 1 × 1 convolution kernel with a 3 × 3 convolution kernel to increase the number of channels.

In 2017, Google proposed the MobileNet [32] model, which introduced the concept of a lightweight neural network model. Model computation is significantly reduced by using depthwise separable convolution, which decomposes the traditional convolution operation into a depthwise convolution and a pointwise convolution. After MobileNet, Google released MobileNetV2 [33] in 2018, which introduced a linear bottleneck structure and optimized the activation function, making the model more efficient in computationally resource-constrained environments. MobileNetV3 [34], proposed in 2019, further optimized the series by adding SE modules to the backbone and optimizing the activation function and output structure, thus significantly enhancing performance.
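The saving obtained by splitting a convolution into a per-channel (depthwise) step and a 1 × 1 (pointwise) step can be checked with simple arithmetic. The following is a minimal sketch; the layer sizes (64 input channels, 128 output channels, 3 × 3 kernel) are hypothetical values chosen for illustration and are not taken from the paper.

```python
def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a depthwise k x k convolution followed by a pointwise 1 x 1 convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes the channels
    return depthwise + pointwise


if __name__ == "__main__":
    c_in, c_out, k = 64, 128, 3  # hypothetical layer sizes
    standard = conv_params(c_in, c_out, k)
    separable = depthwise_separable_params(c_in, c_out, k)
    # The ratio equals 1 / c_out + 1 / k**2 for any layer size.
    print(standard, separable, round(separable / standard, 4))
```

For a 3 × 3 kernel the ratio is dominated by the 1/k² term, so the separable form needs roughly one ninth of the weights once the output channel count is large.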

In 2017, Chollet [35] proposed Xception, which utilizes depthwise separable convolution. Xception has a modular design that enhances model flexibility and introduces residual connections to address the issue of gradient vanishing during model training. This improves the stability and efficiency of the training process.

In 2018, Zhang et al., from MEGVII Technology, proposed ShuffleNet [36], which achieves more efficient feature delivery, lower computational complexity, and higher computational efficiency by introducing pointwise group convolution and a channel shuffling mechanism. After ShuffleNet, Ma et al., also from MEGVII Technology, introduced ShuffleNetV2 [37]. This model proposed ShuffleUnit, which combines residual connectivity and channel-by-channel convolution to reduce the fragmentation of the model and achieve a significant improvement in performance.

In 2020, GhostNet was proposed by Han et al. [38] from the University of Sydney. This model utilizes simple linear operations on feature maps to generate additional similar feature maps, effectively reducing the parameter count. GhostNet innovates by replacing traditional convolutions with the Ghost Module, using standard 1 × 1 convolutions for channel count compression of the input image, followed by depthwise convolutions for additional feature map extraction and, finally, concatenating various feature maps to form a new output.

Notwithstanding the outstanding contribution of the above models to lightweighting neural networks, which reduces the number of parameters and computational complexity of the models, they also face some challenges such as the degradation of recognition accuracy, limited feature expressiveness, and difficulties in the training process.

3. Methodology

The YOLO algorithm, proposed by Joseph Redmon et al. [13] in 2016, has been optimized over the years to enhance its detection accuracy and computational efficiency. Although the YOLO series [39,40,41] has maintained a leading position in the real-time target detection field and demonstrated excellent performance in several application scenarios, limitations remain. Firstly, YOLOv5 still has considerable room for improvement in balancing real-time performance and detection accuracy. Secondly, YOLOv5 is not sufficiently robust when dealing with targets that have significant aspect ratio variations, image distortions, or occlusion, which leads to missed detections. Finally, the model has limited adaptability to complex scenarios and is susceptible to background noise and complex lighting conditions, leading to unstable detection results. The YOLOv5 detection results are shown in Figure 1.

Figure 1.

Detection results of YOLOv5.

To address the above issues, the following strategies are proposed in this study.

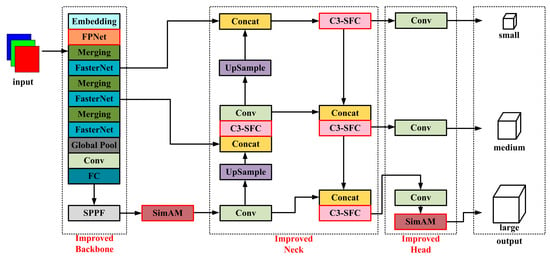

Firstly, to address the difficulty YOLOv5 has in balancing real-time performance and detection accuracy, as well as its missed detections of targets with significant aspect ratio variations and image distortions, FPNet is proposed. FPNet integrates FasterNet [42] with Dynamic Snake Convolution (DSConv) and replaces the original backbone network. This preserves the detection accuracy of the model while reducing the number of model parameters and floating-point operations, and the adaptive deformation of DSConv allows the structural features of targets with significant aspect ratio variations or image distortions to be extracted accurately. Subsequently, to address the insufficient robustness for occluded targets and the limited adaptability to complex scenarios, the SimAM [43] attention mechanism is embedded in the backbone network and the large target detection head, and the C3-SFC module is proposed to enhance the feature extraction capability. Finally, an adaptive loss function based on a dynamic non-monotonic focusing mechanism, Inner–WiseIoU, is proposed to enhance the fitting and generalization ability of the anchor box. The proposed YOLOv5-FPNet is shown in Figure 2.

Figure 2.

Schematic diagram of YOLOv5-FPNet network structure. Different colors represent different modules.

3.1. Design of FPNet

Chen et al. [42] highlighted a major problem with lightweight neural networks: While numerous lightweight models have successfully reduced the number of parameters, most of them still rely on frequent memory accesses, which lengthens the execution time of the model. Comparatively, models that have reduced the frequency of memory accesses, such as MicroNet [44], exhibit the problem of relying on inefficient fragmented computation, which contradicts the intended purpose of lightweight designs.

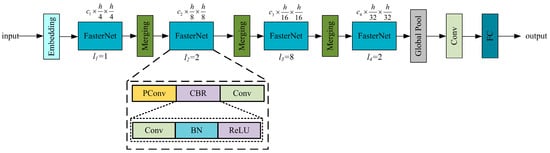

To address this problem, Chen et al. [42] proposed FasterNet, which avoids unnecessary redundant operations, achieves a better balance between accuracy and real-time performance, and demonstrates great potential in edge device applications. A schematic diagram of the FasterNet structure is shown in Figure 3.

Figure 3.

Schematic diagram of FasterNet structure.

However, when FasterNet was applied as a backbone for the experiment, the problem of missed detection of significant targets was observed. Missed targets are often caused by image distortion, resulting from the lens effect of the camera. This means that straight objects at the edge of the image may appear curved. Image distortion is a common occurrence during image acquisition, as shown in Figure 4.

Figure 4.

Example of image distortion. The speed bumps in the red box show the image distortion clearly.

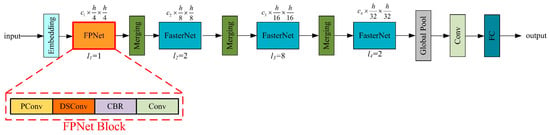

To address the above issues, this study adds deformable convolution kernels [45,46] to FasterNet. The Dynamic Snake Convolution (DSConv) proposed by Qi et al. [47] is used to improve the model’s ability to handle object shapes and details.

DSConv has demonstrated excellent performance in recognizing tubular objects because the flexibility of its convolutional kernel allows it to “wander” around the target object, efficiently adapting to and accurately capturing the features of the tubular structure. Thus, the model’s performance on complex scenes and deformed objects is improved.

The structure of the proposed FPNet network after the addition of DSConv to FasterNet is shown in Figure 5.

Figure 5.

Schematic diagram of FPNet network structure.

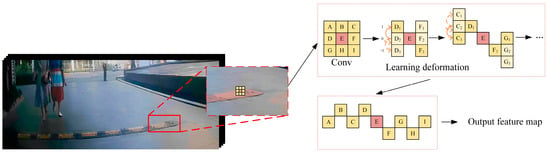

The deformation mechanism of DSConv is shown in Figure 6.

Figure 6.

Deformation mechanism of DSConv.

The deformation rule of DSConv along the x-axis is as follows:

The deformation rule along the y-axis is as follows:

The offset Δ is typically a fraction, and the implementation of bilinear interpolation is as follows:

where K denotes a fractional position from Equations (1) and (2), K’ enumerates all integral spatial positions, and B denotes the bilinear interpolation kernel. The bilinear interpolation kernel separates into two one-dimensional kernels:
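The interpolation step can be illustrated with a short sketch. It assumes the one-dimensional kernel has the form b(a, a') = max(0, 1 − |a − a'|), which is the form commonly used for deformable convolutions; the kernel itself is not reproduced in this text, so this form, the function names, and the zero padding at the border are assumptions made for illustration.

```python
import math


def b(a: float, a_prime: float) -> float:
    """One-dimensional linear interpolation kernel (assumed form)."""
    return max(0.0, 1.0 - abs(a - a_prime))


def bilinear_sample(feature, x: float, y: float) -> float:
    """Sample feature[row][col] at a fractional position (x, y) = (col, row).

    Only the four integer neighbours K' receive a non-zero weight
    B(K', K) = b(x, x') * b(y, y'), so the sum over all integer
    positions reduces to at most four terms.
    """
    height, width = len(feature), len(feature[0])
    x0, y0 = math.floor(x), math.floor(y)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < height and 0 <= xx < width:  # zero padding outside the map
                value += b(x, xx) * b(y, yy) * feature[yy][xx]
    return value


if __name__ == "__main__":
    fmap = [[0.0, 1.0],
            [2.0, 3.0]]
    print(bilinear_sample(fmap, 0.5, 0.5))  # centre of the four pixels
```

Because the two-dimensional weight is a product of two one-dimensional weights, the sample at an integer position reduces to the stored pixel value, and a fractional offset blends neighbouring pixels linearly.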

The deformation of the x-axis and y-axis coordinates is illustrated in a schematic diagram shown in Figure 7.

Figure 7.

Schematic diagram of x- and y-axis coordinate deformation.

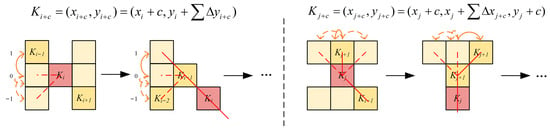

Under the assumption that the range of values on the x-axis is [i − 4, i + 4] and the range of values on the y-axis is [j − 4, j + 4], the receptive field of DSConv in this range of values is shown in Figure 8.

Figure 8.

Receptive field of DSConv.

Figure 8 shows that after coordinate deformation on the x- and y-axis, DSConv is able to obtain a receptive field that can cover an area of 9 × 9 for better perception of key features.
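The 9 × 9 bound can be made concrete with a small sketch of a kernel unrolled along the x-axis. It assumes, following the general description of DSConv by Qi et al. [47], that the vertical offset at each step is limited to [−1, 1] and accumulated outward from the kernel center; the function name and the offset values are hypothetical.

```python
def snake_coords_x(center, delta_y, half_width=4):
    """Sampling positions of a 9-point kernel unrolled along the x-axis.

    center:  (x_i, y_i), the grid position of the kernel center.
    delta_y: dict mapping a step c (c != 0) to the learned vertical offset
             at that step; each value is clamped to [-1, 1] (assumption) so
             the kernel bends gradually instead of jumping.
    """
    x_i, y_i = center
    coords = {0: (float(x_i), float(y_i))}
    for sign in (1, -1):
        acc = 0.0  # offsets accumulate outward from the center
        for step in range(1, half_width + 1):
            c = sign * step
            acc += max(-1.0, min(1.0, delta_y.get(c, 0.0)))
            coords[c] = (float(x_i + c), y_i + acc)
    return [coords[c] for c in range(-half_width, half_width + 1)]


if __name__ == "__main__":
    # Hypothetical offsets that bend the kernel upward on both sides.
    offsets = {c: 0.5 for c in range(-4, 5) if c != 0}
    for point in snake_coords_x((10, 10), offsets):
        print(point)
```

With four steps on each side and at most one unit of drift per step, every sampling point stays within four rows of the center, which is why the deformed kernel remains inside a 9 × 9 window.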

To evaluate the performance of DSConv, this study carried out a convolutional kernel performance comparison experiment. The experiment involved constructing a simple neural network model to which DSConv was applied and comparing it with the currently dominant convolutional kernel. The detailed results of the experiment are presented in Table 1, and these data provide a quantitative basis for evaluating the performance of DSConv across different aspects.

Table 1.

Results of convolutional kernel performance comparison experiment.

According to the data given in Table 1, DSConv has fewer parameters than the traditional Conv2d convolution kernel and almost the same FPS. Thus, DSConv achieves a good balance between accuracy and computation.

In summary, FPNet successfully solves the target loss problem due to image radial distortion and significantly improves the overall performance of the backbone in speed bump detection by introducing the deformation mechanism of DSConv into FasterNet.

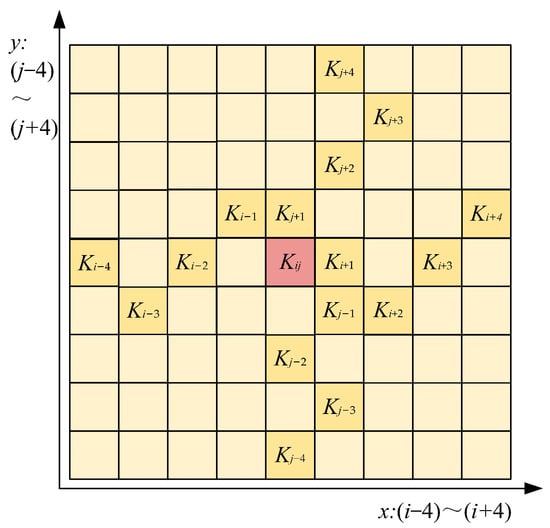

3.2. Design of C3-SFC Module

In the neck section of YOLOv5, the CSP_3 (C3) module is used to increase the depth and receptive field of the neural network model. This module’s design greatly enhances the network’s ability to extract features, allowing it to process and recognize complex image content more efficiently, resulting in improved accuracy and robustness of detection. However, the operation of the C3 module generates a large number of parameters, which can significantly drain memory resources and lead to problems such as vanishing gradients and information bottlenecks. These issues can limit the overall performance of the neural network model.

To tackle these challenges, this study has improved the C3 module by drawing on the Efficient Layer Aggregation Network (ELAN) concept proposed by Wang et al. [50]. The study introduced the DSConv operator to the BottleNeck section of the C3 module, resulting in the creation of the SFCBottleNeck. Based on this enhancement, the study further introduces the C3-SFC module, which aims to improve the model’s efficiency and performance through structural optimization. Figure 9 shows the detailed architecture of the enhanced C3-SFC module.

Figure 9.

Schematic diagram of C3-SFC module.

Compared to the traditional Conv2D convolution operator, DSConv offers significant advantages in terms of parameter and floating-point operation reduction. Table 1 shows a 62.59% reduction in parameters and an 87.01% reduction in floating-point operations. Based on these data, it can be concluded that SFCBottleNeck outperforms the traditional BottleNeck structure in terms of parameters and operations. These improvements enable SFCBottleNeck to effectively increase the computational speed and accuracy of the original C3 module.

3.3. Attention Mechanism

This study introduces the simple, parameter-free attention module (SimAM) proposed by Yang et al. [43] to address the problem of lighting conditions and background noise affecting model detection accuracy in road target detection tasks. The purpose of SimAM is to enhance the ability of neural network models to concentrate on crucial areas in an image. This attention mechanism enables the network to more accurately identify and process important features of the target, thereby improving the accuracy and reliability of detection under complex environmental conditions.

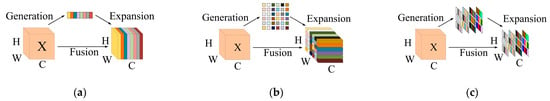

SimAM achieves higher efficiency and smaller attention weights by utilizing the linear separability between neurons, without introducing additional parameters. The mechanism identifies key neurons and prioritizes them for attention, resulting in efficient feature map extraction. The principle of operating SimAM is illustrated in Figure 10.

Figure 10.

SimAM schematic diagram: (a) one-dimensional channel attention, (b) two-dimensional spatial attention, and (c) three-dimensional spatial weighted attention.

In this study, SimAM is embedded in the backbone network and the large target detection head in YOLOv5-FPNet to enhance target detection performance.

The theoretical basis of SimAM is derived from neuroscience and uses an energy function to define the linear separability between a neuron t and every other neuron in the same channel except t. This approach successfully differentiates the relative importance of neurons and implements an effective attention mechanism. Equation (5) determines the energy function for each neuron:

where $t$ represents the target neuron in a single channel of the input feature, and $x_i$ represents the other neurons in the same channel. $\hat{t}$ and $\hat{x}_i$ denote the results after $t$ and $x_i$ undergo a linear transformation, where $i$ is the index over the spatial dimension. The weight and bias of the linear transformation for the target neuron $t$ are denoted by $w_t$ and $b_t$, respectively. $\lambda$ is a hyperparameter, and $M$ is the total number of neurons in a single channel.

$\hat{t}$ and $\hat{x}_i$ can be obtained from the following equation:

Minimizing Equation (5) is equivalent to finding the linear separability between the target neuron $t$ and the other neurons in the same channel. To simplify Equation (5), binary labels are assigned to $y_t$ and $y_o$, and a regularization term is added to Equation (5), resulting in the final energy function, as shown in Equation (8):

The linear transformation weight $w_t$ and the linear transformation bias $b_t$ for neuron $t$ are determined using Equations (6) and (7), respectively.

where $\mu_t$ represents the mean of all neurons excluding neuron $t$, and $\sigma_t^2$ represents the variance of all neurons excluding neuron $t$.

The mean $\mu_t$ and the variance $\sigma_t^2$ can be determined using Equations (8) and (9), respectively.

Assuming all pixels in a single channel follow the same distribution, the mean and variance of all neurons are calculated based on this assumption. These values are then reused across all neurons in that channel, significantly reducing the floating-point operation volume. The minimum energy et* is determined using the following formula:

where $\hat{\sigma}^2$ represents the variance including neuron $t$, and $\hat{\mu}$ represents the mean including neuron $t$; these values can be determined using the subsequent equations:

Utilizing Equation (13), it is understood that the value of the energy function is inversely correlated with the linear separability between neuron t and other neurons. This indicates that linear separability increases as the energy function value decreases. The design of the SimAM attention mechanism is guided by the energy function et*, thus effectively avoiding unnecessary heuristics and adjustments. By computing for individual neurons and integrating the concept of linear separability into the entire model, a significant enhancement in the model’s learning capability is achieved.
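The reweighting described in this subsection can be sketched for a single channel in a few lines. The sketch assumes the closed-form minimal energy from the SimAM paper [43], e_t* = 4(σ̂² + λ) / ((t − μ̂)² + 2σ̂² + 2λ), and assumes that each neuron is scaled by sigmoid(1 / e_t*). The function names and the value of λ are illustrative, and the code is not the implementation used in this study.

```python
import math


def simam_weights(channel, lam=1e-4):
    """Per-neuron attention weights for one channel (a list of rows of floats)."""
    values = [v for row in channel for v in row]
    m = len(values)
    mean = sum(values) / m                           # mean including neuron t
    var = sum((v - mean) ** 2 for v in values) / m   # variance including neuron t

    def weight(t):
        # 1 / e_t*: a low energy (distinctive neuron) gives a large value.
        inv_energy = (t - mean) ** 2 / (4.0 * (var + lam)) + 0.5
        return 1.0 / (1.0 + math.exp(-inv_energy))   # sigmoid keeps weights in (0, 1)

    return [[weight(v) for v in row] for row in channel]


def simam_apply(channel, lam=1e-4):
    """Scale every neuron by its attention weight."""
    weights = simam_weights(channel, lam)
    return [[v * w for v, w in zip(row, w_row)]
            for row, w_row in zip(channel, weights)]


if __name__ == "__main__":
    # Hypothetical 3 x 3 channel with one distinctive neuron in the middle.
    channel = [[1.0, 1.0, 1.0],
               [1.0, 5.0, 1.0],
               [1.0, 1.0, 1.0]]
    for row in simam_weights(channel):
        print([round(w, 3) for w in row])
```

Because the mean and variance are computed once per channel and reused for every neuron, the cost is a handful of elementwise operations and no learnable parameters are introduced.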

3.4. Inner–WiseIoU

Intersection over Union (IoU) is used to measure the similarity between predicted bounding boxes and actual annotations, with a primary focus on the amount of region overlap between predictions and actual conditions.
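As a point of reference for the loss functions discussed below, the basic IoU of two axis-aligned boxes can be computed as follows. This is a minimal sketch; the corner-format box representation (x1, y1, x2, y2) and the function name are choices made for illustration.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # partial overlap
    print(iou((0, 0, 1, 1), (2, 2, 3, 3)))  # no overlap
```

The IoU loss is then 1 − IoU. For non-overlapping boxes the IoU is zero regardless of how far apart the boxes are, which is one motivation for the distance-aware variants that follow.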

Most existing works, such as GIoU [51], CIoU [52], DIoU [52], EIoU [53], and SIoU [54], assume that instances in training data are of high quality and focus on enhancing the fitting ability of Bounding Box Regression (BBR) loss. However, indiscriminately strengthening BBR on low-quality instances can lead to a decline in localization performance, as some researchers have discovered. In 2023, Tong et al. [55] built upon the static focusing mechanism (FM) proposed in Focal-EIoU [53] and introduced a loss function with a dynamic non-monotonic focusing mechanism called Wise–IoU (WIoU).

Training data often include low-quality samples, and geometric factors such as distance and aspect ratio impose a higher penalty on such samples, which can harm the model’s ability to generalize. A well-designed loss function should weaken the penalty from geometric factors when the anchor box and the target box overlap well, because intervening less in training at that point improves the model’s generalization ability.

To achieve this, a distance attention mechanism is introduced, and WIoUv1 is designed with a dual-layer attention mechanism, as shown in Equation (16):

where $x$ and $y$ represent the center coordinates of the bounding box, $x_{gt}$ and $y_{gt}$ denote the center coordinates of the target box, and $W_g$ and $H_g$ refer to the width and height of the smallest enclosing box.

To prevent RWIoUv1 from generating gradients that impede convergence, $W_g$ and $H_g$ are detached from the computational graph in WIoU, as indicated by the superscript * operation. This effectively eliminates factors that hinder convergence without introducing new metrics. The approach is particularly effective when handling non-overlapping bounding boxes.

Drawing on the monotonic focusing mechanism of focal loss, Tong et al. [55] further define a monotonic focusing coefficient for WIoU, which is given by the following equation:

Additionally, Equation (16) is supplemented with the coefficients of the monotonic focusing mechanism to obtain the propagation gradient of the WIoU, as shown in Equation (18):

It is worth noting that $(L_{IoU}^{*})^{\gamma} = r \in [0, 1]$, where $r$ represents the gradient gain. As $L_{IoU}$ decreases, the gradient gain $r$ gradually decreases, resulting in slower convergence of the anchor box in the later stages of training. For this reason, a normalization factor for $L_{IoU}$ is introduced, as shown in Equation (19):

where $\overline{L_{IoU}}$ represents the exponential running average with momentum $m$. The purpose of the normalization factor is to keep the gradient gain $r$ at a high level, thus solving the problem of slow anchor box convergence in the later stages of training.

Furthermore, because the WIoU variants described above all use a monotonic focusing mechanism, anchor boxes of anomalous quality can distort training. During training, it is preferable for bounding box regression to concentrate on anchor boxes of ordinary quality. Therefore, anchor boxes that are significant outliers should be assigned a smaller gradient gain, which prevents low-quality samples from having a substantial impact on the gradient. Thus, the researchers developed the non-monotonic focusing coefficient τ and used it in Equation (16) to derive Equation (20):

where r = 1 if τ = δ. The anchor box obtains the maximum gradient gain when its anomaly degree τ equals a fixed constant. Because the running average $\overline{L_{IoU}}$ is dynamic, the quality classification criterion for anchor boxes also changes dynamically. This enables WIoUv3 to adopt the most appropriate gradient gain allocation strategy for the current context at any moment, effectively optimizing the performance of the neural network model.
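The behaviour of the non-monotonic gain can be explored numerically. The sketch below assumes the forms given in the Wise–IoU paper [55]: a distance attention term R = exp(((x − x_gt)² + (y − y_gt)²) / (W_g² + H_g²)) and a gain r = τ / (δ · α^(τ − δ)), with τ taken as the ratio of the current IoU loss to its running average. The default values α = 1.9 and δ = 3 and all function names are assumptions for illustration, not settings confirmed by this study.

```python
import math


def distance_attention(cx, cy, cx_gt, cy_gt, w_g, h_g):
    """Distance attention term; w_g and h_g are treated as constants (no gradient)."""
    return math.exp(((cx - cx_gt) ** 2 + (cy - cy_gt) ** 2) / (w_g ** 2 + h_g ** 2))


def gradient_gain(tau, alpha=1.9, delta=3.0):
    """Non-monotonic focusing gain r = tau / (delta * alpha ** (tau - delta))."""
    return tau / (delta * alpha ** (tau - delta))


def wiou_v3(l_iou, l_iou_mean, cx, cy, cx_gt, cy_gt, w_g, h_g, alpha=1.9, delta=3.0):
    """Loss value r * R * L_IoU for one anchor box (assumed WIoU v3 form)."""
    tau = l_iou / l_iou_mean  # anomaly (outlier) degree of the anchor box
    r = gradient_gain(tau, alpha, delta)
    return r * distance_attention(cx, cy, cx_gt, cy_gt, w_g, h_g) * l_iou


if __name__ == "__main__":
    for tau in (0.5, 1.0, 1.0 / math.log(1.9), 3.0, 6.0):
        print(round(tau, 3), round(gradient_gain(tau), 3))
```

Under these assumptions the gain equals 1 at τ = δ and peaks at τ = 1 / ln α, so both very well fitted boxes (small τ) and strong outliers (large τ) receive a reduced gain, which is the non-monotonic behaviour described above.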

It is important to note that WIoU remains an IoU-based bounding box regression (BBR) method. While IoU-based BBR methods aim to facilitate iterative convergence of the model by introducing new loss terms, they may overlook the inherent limitations of the IoU loss function itself. Although the IoU loss can effectively characterize the state of bounding box regression in theory, it does not adaptively adjust to different detectors and detection tasks, and its generalization ability is relatively limited in practice. To address these issues, Zhang et al. [56] proposed Inner–IoU in 2023.

Inner–IoU is designed to compensate for the weak convergence and slow convergence rates prevalent in loss functions widely utilized in various detection tasks. It introduces a scaling factor to control the proportional size of auxiliary bounding boxes, thus addressing the problem of weak generalization capabilities inherent in existing methods.

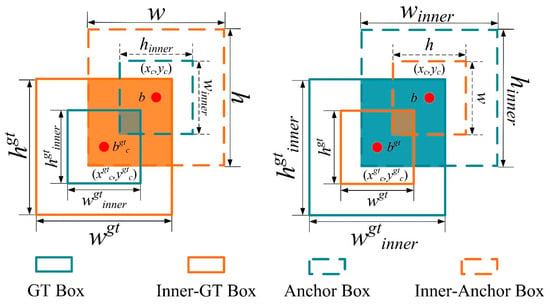

The operating mechanism of Inner–IoU is illustrated in a schematic diagram shown in Figure 11.

Figure 11.

Schematic diagram of Inner–IoU mechanism.

In Figure 11, $b^{gt}$ represents the center point of the annotated data box, and $b$ represents the center point of the anchor box. The coordinates of the center points for the annotated data box and the Inner annotated data box are $(x_c^{gt}, y_c^{gt})$, and the coordinates for the anchor box and Inner anchor box are $(x_c, y_c)$. $w^{gt}$ and $h^{gt}$ represent the width and height of the annotated data box, respectively, while $w$ and $h$ represent the width and height of the anchor box.

The calculation formula for Inner–IoU is presented in Equations (21)–(27):

where the variable ratio represents the scaling factor, with ratio ∈ [0.5, 1.5]; “inter” refers to the intersection area between the Inner anchor box and the Inner annotated data box; and “union” denotes the sum of the areas of the Inner anchor box and the Inner annotated data box minus “inter”.
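The Inner–IoU computation can be sketched in a few lines, following the definition in [56]: both boxes are scaled about their own centers by ratio, and the IoU of the scaled (Inner) boxes is returned. The function name and the (xc, yc, w, h) tuple layout are assumptions made for illustration.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (xc, yc, w, h), an assumed layout


def inner_iou(anchor: Box, target: Box, ratio: float = 1.0) -> float:
    """IoU between the auxiliary (Inner) boxes obtained by scaling each box by ratio."""
    xc, yc, w, h = anchor
    xc_gt, yc_gt, w_gt, h_gt = target

    # Edges of the Inner anchor box.
    b_l, b_r = xc - w * ratio / 2, xc + w * ratio / 2
    b_t, b_b = yc - h * ratio / 2, yc + h * ratio / 2

    # Edges of the Inner annotated data box.
    b_l_gt, b_r_gt = xc_gt - w_gt * ratio / 2, xc_gt + w_gt * ratio / 2
    b_t_gt, b_b_gt = yc_gt - h_gt * ratio / 2, yc_gt + h_gt * ratio / 2

    # Overlap of the two Inner boxes, clamped at zero when they do not intersect.
    inter_w = max(0.0, min(b_r_gt, b_r) - max(b_l_gt, b_l))
    inter_h = max(0.0, min(b_b_gt, b_b) - max(b_t_gt, b_t))
    inter = inter_w * inter_h

    # Sum of the two Inner box areas minus their overlap.
    union = w_gt * h_gt * ratio ** 2 + w * h * ratio ** 2 - inter
    return inter / union


if __name__ == "__main__":
    anchor, target = (0.0, 0.0, 2.0, 2.0), (1.0, 0.0, 2.0, 2.0)
    for ratio in (0.5, 1.0, 1.5):
        print(ratio, round(inner_iou(anchor, target, ratio), 4))
```

For this pair of boxes, a ratio below 1 shrinks the auxiliary boxes until they no longer overlap, while a ratio above 1 enlarges them and raises the Inner–IoU, which is how the scaling factor changes the regression signal.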

It is important to note that, although Inner–IoU addresses the limitations in generalization capabilities of traditional IoU and can accelerate the convergence speed of high-quality instance anchor boxes, the issue of slowed convergence speed for anchor boxes due to low-quality instances still arises in the later stages of training. Furthermore, it has been observed through experimentation that the strengths and weaknesses of Inner–IoU and WIoU are complementary. Consequently, Inner–IoU and WIoU are combined in this study to form Inner–WIoU, which integrates the advantages of both to further enhance the fitting capability of anchor boxes in neural network models.

The calculation formula for Inner–WIoU is presented in the following equation:
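As a rough illustration only, the combination can be sketched under the assumption that it follows the pattern [56] uses for other Inner variants, in which the ordinary IoU term of the base loss is swapped for the Inner–IoU term (base loss + IoU − Inner–IoU). The function name and the scalar interface are hypothetical.

```python
def inner_wiou_loss(wiou_loss: float, iou: float, inner_iou: float) -> float:
    """Assumed combination: replace the IoU contribution in WIoU with Inner-IoU.

    wiou_loss : WIoU loss value for one anchor box
    iou       : ordinary IoU between anchor box and annotated data box
    inner_iou : IoU between the scaled auxiliary (Inner) boxes
    """
    return wiou_loss + iou - inner_iou


if __name__ == "__main__":
    # With ratio = 1 the Inner boxes equal the original boxes, so the loss is unchanged.
    print(inner_wiou_loss(wiou_loss=0.40, iou=0.60, inner_iou=0.60))
    # A larger Inner-IoU (for example, ratio > 1) lowers the loss for the same anchor box.
    print(inner_wiou_loss(wiou_loss=0.40, iou=0.60, inner_iou=0.70))
```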

The experimental results presented in Section 4.4 show that Inner–WIoU exhibits better generalization and faster convergence than the recently proposed WIoU.

4. Dataset, Experimental Results, and Discussion

In this section, a series of experiments is conducted to investigate the impact of the network improvements described in Section 3 on the performance of speed bump detection tasks. The contents include the creation of the dataset, the experimental environment, the evaluation metrics, and the analysis of the experimental results.

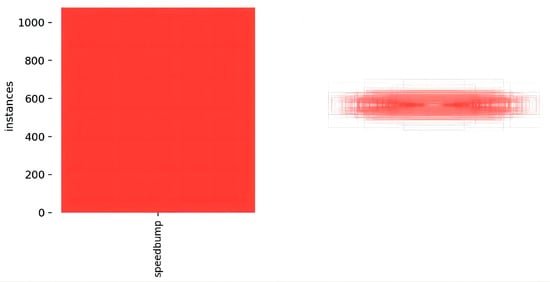

4.1. Dataset Production

Due to the absence of a public dataset specifically for road speed bumps, a self-built dataset was created. The dataset comprises photos taken in underground parking lots, public parking lots, and campus streets. The data sources include the onboard camera of the experimental data collection vehicle and Baidu Street View, with a data source ratio of 1:9. The images were captured between 2012 and 2022. Figure 12 depicts the experimental data collection vehicle. In this study, we collected 1000 images of speed bumps, which were preprocessed and stored in JPG format with a resolution of 1280 × 720. These images include speed bumps in different wear conditions (heavily worn, lightly worn, and new) to ensure diverse data. All labels were manually reviewed to ensure data accuracy. To help the model learn the features of the target more effectively, as many images as possible were assigned to the training set: the dataset was divided into training, validation, and testing sets in an 8:1:1 ratio, which still provides independent subsets of data for model training and evaluation. Figure 13 displays the distribution of label numbers and box shapes in the dataset, while Figure 14 shows representative images from various scenarios.
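An 8:1:1 split of this kind can be sketched as follows. The file names, the fixed random seed, and the function name are hypothetical; the actual split procedure used in this study is not specified beyond the ratio.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(
    items: Sequence[str], ratios: Tuple[int, int, int] = (8, 1, 1), seed: int = 0
) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle items reproducibly and split them into train/val/test subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)

    total = sum(ratios)
    n_train = len(shuffled) * ratios[0] // total
    n_val = len(shuffled) * ratios[1] // total

    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


if __name__ == "__main__":
    # Hypothetical file names standing in for the 1000 collected images.
    images = [f"speed_bump_{i:04d}.jpg" for i in range(1000)]
    train, val, test = split_dataset(images)
    print(len(train), len(val), len(test))
```

Shuffling before slicing keeps the three subsets disjoint while mixing the two data sources across them.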

Figure 12.

Experimental data collection vehicle.

Figure 13.

Distribution of label numbers and box shapes in the dataset.

Figure 14.

Representative images from various scenarios.

4.2. Experimental Environment

The experimental platform used in this study is Ubuntu 20.04, with an Intel(R) Xeon(R) Platinum 8255C CPU @ 2.50 GHz as the central processing unit, an NVIDIA RTX 3080 (10 GB) as the graphics processing unit, CUDA version 11.3, PyTorch version 1.10.0, Python version 3.8, and 40 GB of memory. The platform specifics are presented in Table 2.

Table 2.

Test environment.

The training parameter settings are consistent across models. The number of training epochs was capped at 800, based on the convergence observed in multiple training sessions with the self-built dataset. To balance GPU memory efficiency with computation speed, the batch size was set to 16. No pre-trained weights were used during the training process to ensure the model was trained specifically for road speed bumps. The remaining hyperparameters were kept at their default settings.

4.3. Evaluation Metrics

The evaluation metrics used in this study include precision, recall, F1 score, average precision (AP), and mean average precision (mAP). When evaluating the algorithms in this study, speed bumps are considered as positive instances, and non-speed bumps as negative instances. The formulas for each of these evaluation metrics are presented in Equations (30)–(34).

where True Positive (TP) is the number of accurately detected annotated speed bump bounding boxes, False Positive (FP) is the number of bounding boxes incorrectly marked as speed bumps, and False Negative (FN) is the number of speed bump bounding boxes that were not detected.

Precision represents the proportion of accurately located speed bumps among all detection results, while recall indicates the proportion of accurately located speed bumps among all annotated speed bump bounding boxes in the test set. Precision is therefore the complement of the false detection rate: a low precision means that many of the samples judged as positive by the model are actually negative. Recall, on the other hand, is the complement of the miss rate: a low recall means that many actual positive samples were not detected. AP is the area under the precision–recall curve and measures the average precision of the test results. mAP is the mean of the AP values over all classes and is used to comprehensively evaluate the overall performance of the algorithm across the entire dataset. These evaluation metrics are commonly used performance indicators in target detection algorithms.
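The metrics described above can be written out as a short sketch. The function names are illustrative, and AP is computed here with a plain trapezoidal rule over the precision–recall points, which is a simplification of the interpolated AP that detection toolkits usually report.

```python
from typing import Sequence


def precision(tp: int, fp: int) -> float:
    """Share of detections that are correct: TP / (TP + FP)."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of annotated speed bumps that were detected: TP / (TP + FN)."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """Area under the precision-recall curve (trapezoidal rule, recalls ascending)."""
    area = 0.0
    for i in range(1, len(recalls)):
        width = recalls[i] - recalls[i - 1]
        area += width * (precisions[i] + precisions[i - 1]) / 2
    return area


def mean_average_precision(ap_per_class: Sequence[float]) -> float:
    """Mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


if __name__ == "__main__":
    # Illustrative counts only, not results from this study.
    p, r = precision(tp=8, fp=2), recall(tp=8, fn=2)
    print(p, r, f1_score(p, r))
    print(average_precision([0.0, 0.5, 1.0], [1.0, 1.0, 0.0]))
```

With a single class such as speed bumps, mAP coincides with the AP of that class.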

Since both mAP and mAP50_95 evaluate the precision and recall of the model and assess its performance at different IoU thresholds, they provide a comprehensive reflection of the model’s performance [57,58,59]. Additionally, evaluating the model under a range of higher IoU thresholds ensures that it not only recognizes objects well but also has high localization accuracy. Therefore, this paper uses mAP and mAP50_95 as evaluation metrics in the following comparative experiment and ablation study.
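To make the difference between the two metrics concrete, the sketch below assumes the common convention that mAP50_95 averages the mAP obtained at IoU thresholds from 0.50 to 0.95 in steps of 0.05, whereas mAP uses a single threshold. The function names and the example values are hypothetical.

```python
from typing import Dict, List


def iou_thresholds(start: float = 0.50, stop: float = 0.95, step: float = 0.05) -> List[float]:
    """IoU thresholds 0.50, 0.55, ..., 0.95 (rounded to avoid float drift)."""
    count = round((stop - start) / step) + 1
    return [round(start + i * step, 2) for i in range(count)]


def map50_95(map_by_threshold: Dict[float, float]) -> float:
    """Average the mAP values measured at each IoU threshold."""
    thresholds = iou_thresholds()
    return sum(map_by_threshold[t] for t in thresholds) / len(thresholds)


if __name__ == "__main__":
    # Hypothetical values: mAP usually falls as the IoU threshold becomes stricter.
    example = {t: max(0.0, 0.9 - 1.5 * (t - 0.5)) for t in iou_thresholds()}
    print(iou_thresholds())
    print(round(map50_95(example), 4))
```

Because the stricter thresholds only count tightly fitting boxes, a model can score well on mAP and still score poorly on mAP50_95 if its localization is loose.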

4.4. Experimental Results and Discussion

4.4.1. Comparative Experimental Results of Self-Built Datasets

Compared to YOLOv3-tiny and YOLOv7-tiny, YOLOv5s-FPNet demonstrates significant advantages in terms of performance metrics. Specifically, YOLOv5s-FPNet improves mAP by 9.51% and 73.14%, respectively. Additionally, its performance in mAP50_95 is impressive, with improvements of 28.42% and 92.76%, respectively. The results are given in Table 3.

Table 3.

YOLOv3-tiny, YOLOv7-tiny, YOLOv5s and YOLOv5s-FPNet comparison experimental results. ↑ denotes the improvement of the comparison algorithm, ↓ denotes the degradation of the comparison algorithm.

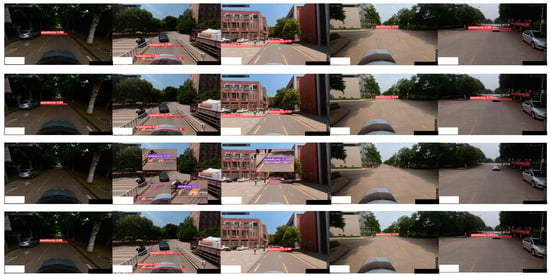

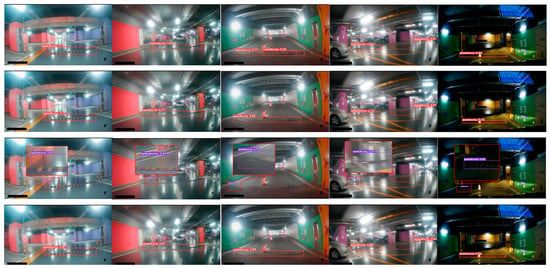

The visualization of the test results under normal lighting conditions is shown in Figure 15, and the results under dark lighting conditions are shown in Figure 16.

Figure 15.

YOLOv5s, YOLOv3-tiny, YOLOv7-tiny, and YOLOv5-FPNet speed bump detection results under normal lighting conditions. The first row illustrates the speed bump detection results of YOLOv5s. The second row illustrates the speed bump detection results of YOLOv3-tiny, the third row illustrates the speed bump detection results of YOLOv7-tiny, and the fourth row illustrates the speed bump detection results of YOLOv5-FPNet.

Figure 16.

YOLOv5s, YOLOv3-tiny, YOLOv7-tiny, and YOLOv5-FPNet speed bump detection results under dark lighting conditions. The first row illustrates the speed bump detection results of YOLOv5s. The second row illustrates the speed bump detection results of YOLOv3-tiny, the third row illustrates the speed bump detection results of YOLOv7-tiny, and the fourth row illustrates the speed bump detection results of YOLOv5-FPNet.

The experiments mentioned above demonstrate that YOLOv5 and the YOLOv5-FPNet proposed in this paper perform better than YOLOv3-tiny and YOLOv7-tiny.

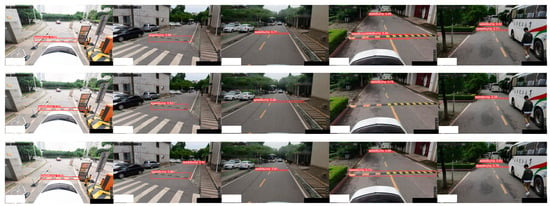

As shown in Figure 17, speed bumps can be detected under normal lighting conditions. The performance evaluation metrics include mAP, mAP50_95, and frames per second (FPS). Table 4 presents the comparative experimental results of the proposed YOLOv5-FPNet against current mainstream lightweight networks.

Figure 17.

YOLOv5, YOLOv5–FasterNet, and YOLOv5-FPNet speed bump detection results under normal lighting conditions. The first row illustrates the speed bump detection results of YOLOv5. The second row illustrates the speed bump detection results of YOLOv5–FasterNet, and the third row illustrates the speed bump detection results of YOLOv5-FPNet.

Table 4.

Lightweight network comparison experimental results. ↑ denotes the enhancement of the proposed algorithm, ↓ denotes the degradation of the proposed algorithm.

Based on the data given in Table 4, the proposed YOLOv5-FPNet demonstrates significant superiority in terms of performance metrics compared to lightweight object detection models that utilize FasterNet, MobileNet, GhostNet, EfficientNet, and ShuffleNet as their backbone networks. Specifically, YOLOv5-FPNet achieved improvements in mAP of 12.85%, 38.76%, 23.66%, 13.90%, and 12.55%, respectively. In terms of mAP50_95, it showed increases of 15.98%, 143.15%, 40.06%, 39.24%, and 27.05%. In terms of FPS, the changes were −7.07%, 51.23%, 44.13%, 50.61%, and 17.20%, respectively; that is, YOLOv5-FPNet is slightly slower than the FasterNet-based model but faster than the other four.
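For reading these percentages, the sketch below assumes each figure is a relative change of the proposed model with respect to the compared model, (proposed − compared) / compared × 100. The function name and the sample values are hypothetical and are not taken from Table 4.

```python
def relative_change(proposed: float, compared: float) -> float:
    """Relative change in percent of the proposed model versus a compared model."""
    return (proposed - compared) / compared * 100.0


if __name__ == "__main__":
    # Hypothetical metric values used only to show the sign convention.
    print(round(relative_change(proposed=0.90, compared=0.60), 2))    # positive: improvement
    print(round(relative_change(proposed=92.93, compared=100.0), 2))  # negative: degradation
```

Under this reading, a value above 100% such as the 143.15% reported for mAP50_95 means the proposed model's score is more than double that of the compared model.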

Drawing from the data presented, it can be concluded that YOLOv5-FPNet demonstrates a significant enhancement in performance on speed bump detection tasks compared to the existing lightweight networks, and it has also made substantial advancements in the real-time aspects of speed bump detection tasks.

As illustrated in Figure 17, this study compares the visualization results of speed bump detection using YOLOv5, YOLOv5–FasterNet, and YOLOv5-FPNet.

From Figure 17, it can be observed that, under normal lighting conditions, the proposed network exhibits higher confidence levels, more accurate convergence of bounding boxes, and significantly lower false positive and miss rates. Table 5 shows a comparison of confidence and detection accuracy for the five examples.

Table 5.

Comparison of sample image detection results under normal illumination.

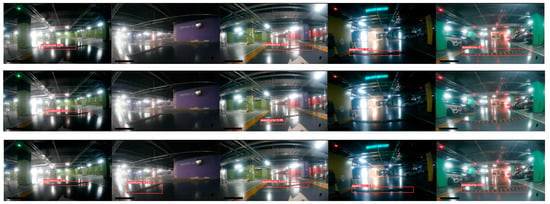

Furthermore, the visualization effects of speed bump detection under poor lighting conditions, such as in underground parking lots, are compared among the aforementioned three networks, as depicted in Figure 18.

Figure 18.

YOLOv5, YOLOv5–FasterNet, and YOLOv5-FPNet speed bump detection results under dark lighting conditions. The first row illustrates the speed bump detection results of YOLOv5. The second row illustrates the speed bump detection results of YOLOv5–FasterNet, and the third row illustrates the speed bump detection results of YOLOv5-FPNet.

It is evident from the comparison that the proposed network demonstrates superior detection performance under low-light conditions, in contrast to both YOLOv5 and YOLOv5–FasterNet. Table 6 shows a comparison between the confidence and detection accuracy in the five examples.

Table 6.

Comparison of sample image detection results under dark illumination.

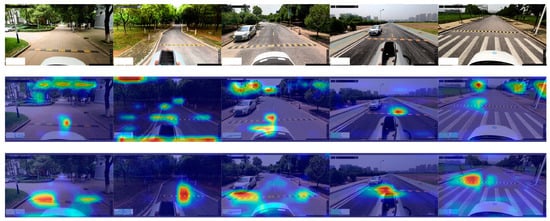

This study delved deeper by comparing the heatmap outputs of YOLOv5-FPNet integrated with and without the SimAM attention mechanism. This comparison was conducted using Gradient-weighted Class Activation Mapping (GradCAM). The visualization of the experimental results is presented in Figure 19.

Figure 19.

Heatmap comparison of YOLOv5-FPNet with and without SimAM. The first row illustrates the original images from the dataset. The second row illustrates YOLOv5-FPNet without SimAM. The third row illustrates YOLOv5-FPNet with SimAM. The darker the color, the higher the impact on the confidence of the result.

As Figure 19 shows, integrating SimAM helps the model concentrate on and extract speed bump features more effectively than YOLOv5-FPNet without SimAM. The SimAM attention mechanism substantially improves the ability of YOLOv5-FPNet to delineate speed bump features and reduces the interference caused by irrelevant road elements.

To further examine the impact of the ratio parameter in Inner–WIoU, a series of experiments was conducted, the results of which are given in Table 7.

Table 7.

Experimental effect of ratio on model performance.

Table 7 shows that Inner–WIoU improves the convergence and generalization of anchor boxes relative to WIoU. Furthermore, adjusting the ratio parameter significantly affects the model’s convergence and generalization. A ratio of 1.4 was ultimately chosen for this paper because it was found to provide the best convergence.

From the comparative analyses above, it can be inferred that the proposed YOLOv5-FPNet performs well in speed bump detection and outperforms the prevailing lightweight network architectures.

4.4.2. Ablation Study

To quantify the contribution of each component of the described neural network architecture, a series of ablation experiments was conducted. The objective was to verify that the newly integrated modules improve the detection accuracy of speed bumps. The results of these ablation experiments are listed in Table 8.

Table 8.

Ablation experimental results. √ represents that the module is used. × represents that the module is not used.

In the ablation study, the baseline model selected was the original YOLOv5s. As indicated in Table 8, the introduction of optimization modules resulted in varying degrees of performance enhancement in the neural network model for speed bump detection tasks. Replacing the backbone with FPNet notably reduced the model’s computational load, with a 28.93% decrease in floating-point operations and a 20.43% reduction in the parameter count. However, this architectural adjustment also reduced model precision, as evidenced by a 2.86% reduction in mAP and an 8.44% decrease in mAP50_95.

The C3-SFC module improved precision in speed bump detection tasks. It increased the number of parameters by only 3.09% while leaving the floating-point computational load unchanged, and it raised mAP and mAP50_95 by 4.09% and 2.84%, respectively.

Incorporating the SimAM attention mechanism between the backbone and SPPF, and integrating SimAM into the large object detection head, substantially strengthened the model’s capacity to learn and discern speed bump features. With increases of 0.88% in floating-point operations and 0.59% in parameters, mAP improved by 1.35% and mAP50_95 by 3.91%.

The optimization of the loss function, by substituting the default IoU with Inner–WIoU, facilitated respective enhancements of 3.27% and 5.97% in mAP and mAP50_95, significantly augmenting the convergence and generalization capabilities of the bounding boxes.

Taken together, the ablation experiments show that, compared to the baseline model, the refined YOLOv5-FPNet reduces the parameter count by 17.77% and floating-point operations by 28.30%, while increasing mAP and mAP50_95 by 5.83% and 3.68%, respectively.

The conducted ablation experiments demonstrate that the proposed combination can assist autonomous vehicles in achieving faster and more precise speed bump detection, thereby providing passengers and drivers with a safer and more comfortable autonomous driving experience.

5. Conclusions

In this study, an enhanced algorithm based on the YOLOv5 model, termed YOLOv5-FPNet, is introduced to address the challenges that the current YOLOv5 object detection algorithm faces in speed bump detection, namely image distortion, feature loss, and varying lighting conditions. To address inadequate feature extraction under image distortion, the algorithm integrates the Dynamic Snake Convolution operator (DSConv) into FasterNet. Furthermore, refining the C3 module and the loss function and integrating the parameter-free attention mechanism SimAM improve the model’s ability to extract features when features are worn away or illumination is poor. These enhancements substantially improve the robustness of the model. The experimental results show that, compared with the lightweight neural network backbones commonly used today, the proposed YOLOv5-FPNet offers higher precision and better real-time performance in speed bump detection tasks. Relative to the baseline YOLOv5 architecture, the proposed YOLOv5-FPNet achieves a better balance between accuracy and real-time performance. Furthermore, the model has the potential to be applied to a broader range of road-based object detection tasks.

Despite the effectiveness of the proposed YOLOv5-FPNet model in speed bump detection, certain limitations persist. Specifically, for distant speed bumps (akin to small object detection), performance remains suboptimal despite a notable improvement over the baseline YOLOv5. To further improve the dependability of speed bump detection and thereby raise the safety and comfort of the autonomous driving experience, future research should focus on the following areas:

- i. Dataset Enhancement: Pursuing the systematic aggregation of an extensive and varied dataset, with a focus on augmenting the representation of small targets, thereby facilitating increases in the precision of the target detection algorithm.

- ii. Multimodal Perception Fusion: Employing a multimodal perception framework, encompassing the synthesis of sensory data from optical imaging devices, Light Detection and Ranging (LiDAR) apparatus, and millimeter-wave radar systems to enhance the detection robustness of small-scale targets across diverse environments.

- iii. Algorithm Enhancement: Pruning and distillation algorithms could be adopted to further improve the real-time performance and accuracy of the model.

Author Contributions

Conceptualization, R.W. and X.L.; data curation, X.L.; methodology, X.L. and W.L.; resources, Q.Y.; software, X.L. and Y.J.; validation, X.L.; writing—original draft, W.L. and X.L.; writing—review and editing, Q.Y. and Y.J.; supervision, R.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China (51975253, U20A20331), the Young Scientist Fund of Natural Science Foundation of China (52102459), the Natural Science Foundation of Jiangsu Province (BK20210765), and the Science and Technology Program of Zhenjiang City (CQ2022004).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Antić, B.; Pešić, D.; Vujanić, M.; Lipovac, K. The influence of speed bumps heights to the decrease of the vehicle speed–Belgrade experience. Saf. Sci. 2013, 57, 303–312. [Google Scholar] [CrossRef]

- Lav, A.H.; Bilgin, E.; Lav, A.H. A fundamental experimental approach for optimal design of speed bumps. Accid. Anal. Prev. 2018, 116, 53–68. [Google Scholar] [CrossRef] [PubMed]

- Szurgott, P.; Kwasniewski, L.; Wekezer, J.W. Dynamic Interaction Between Heavy Vehicles And Speed Bumps. In Proceedings of the ECMS, Madrid, Spain, 9–12 June 2009; pp. 585–591. [Google Scholar]

- Rateke, T.; Justen, K.A.; Chiarella, V.F.; Sobieranski, A.C.; Comunello, E.; Wangenheim, A.V. Passive vision region-based road detection: A literature review. ACM Comput. Surv. (CSUR) 2019, 52, 1–34. [Google Scholar] [CrossRef]

- Žuraulis, V.; Surblys, V.; Šabanovič, E. Technological measures of forefront road identification for vehicle comfort and safety improvement. Transport 2019, 34, 363–372. [Google Scholar] [CrossRef]

- Du, L.; Zhang, R.; Wang, X. Overview of two-stage object detection algorithms. J. Phys. Conf. Ser. 2020, 1544, 012033. [Google Scholar] [CrossRef]

- Deng, J.; Xuan, X.; Wang, W.; Li, Z.; Yao, H.; Wang, Z. A review of research on object detection based on deep learning. J. Phys. Conf. Ser. 2020, 1684, 012028. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 27th IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Ren, S.Q.; He, K.M.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the 29th Annual Conference on Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 7–12 December 2015. [Google Scholar]

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 1440–1448. [Google Scholar]

- He, K.M.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 16th IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988. [Google Scholar]

- Lu, X.; Li, B.Y.; Yue, Y.X.; Li, Q.Q.; Yan, J.J.; Soc, I.C. Grid R-CNN. In Proceedings of the 32nd IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 7355–7364. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016, Proceedings, Part I 14; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37. [Google Scholar]

- Kim, K.-H.; Hong, S.; Roh, B.; Cheon, Y.; Park, M. Pvanet: Deep but lightweight neural networks for real-time object detection. arXiv 2016, arXiv:1608.08021. [Google Scholar]

- Anisimov, D.; Khanova, T. Towards lightweight convolutional neural networks for object detection. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–8. [Google Scholar]

- Qin, Y.C.; Dong, M.M.; Langari, R.; Gu, L.; Guan, J.F. Adaptive Hybrid Control of Vehicle Semiactive Suspension Based on Road Profile Estimation. Shock Vib. 2015, 2015, 13. [Google Scholar] [CrossRef]

- Qin, Y.C.; Dong, M.M.; Zhao, F.; Langari, R.Z.; Gu, L. Road Profile Classification for Vehicle Semi-active Suspension System Based on Adaptive Neuro-Fuzzy Inference System. In Proceedings of the 54th IEEE Conference on Decision and Control (CDC), Osaka, Japan, 15–18 December 2015; pp. 1533–1538. [Google Scholar]

- Celaya-Padilla, J.M.; Galvan-Tejada, C.E.; Lopez-Monteagudo, F.E.; Alonso-Gonzalez, O.; Moreno-Baez, A.; Martinez-Torteya, A.; Galvan-Tejada, J.I.; Arceo-Olague, J.G.; Luna-Garcia, H.; Gamboa-Rosales, H. Speed Bump Detection Using Accelerometric Features: A Genetic Algorithm Approach. Sensors 2018, 18, 443. [Google Scholar] [CrossRef]

- Qi, G.; Fan, X.; Zhu, S.; Chen, X.; Wang, P.; Li, H. Research on Robust Control of Automobile Anti-lock Braking System Based on Road Recognition. Jordan J. Mech. Ind. Eng. 2022, 16, 343–352. [Google Scholar]

- Domínguez, J.M.L.; Sanguino, T.d.J.M.; Véliz, D.M.; de Viana González, I.J.F. Multi-Objective Decision Support System for Intelligent Road Signaling. In Proceedings of the 2020 15th Iberian Conference on Information Systems and Technologies (CISTI), Sevilla, Spain, 24–27 June 2020; pp. 1–6. [Google Scholar]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495. [Google Scholar] [CrossRef] [PubMed]

- Arunpriyan, J.; Variyar, V.V.S.; Soman, K.P.; Adarsh, S. Real-Time Speed Bump Detection Using Image Segmentation for Autonomous Vehicles. In Intelligent Computing, Information and Control Systems: ICICCS 2019; Springer International Publishing: Cham, Switzerland, 2020; pp. 308–315. [Google Scholar]

- Varona, B.; Monteserin, A.; Teyseyre, A. A deep learning approach to automatic road surface monitoring and pothole detection. Pers. Ubiquitous Comput. 2020, 24, 519–534. [Google Scholar] [CrossRef]

- Lion, K.M.; Kwong, K.H.; Lai, W.K. Smart Speed Bump Detection and Estimation with Kinect. In Proceedings of the 4th International Conference on Control, Automation and Robotics (ICCAR), Auckland, New Zealand, 20–23 April 2018; pp. 465–469. [Google Scholar]

- Devapriya, W.; Babu, C.N.K.; Srihari, T. Real time speed bump detection using Gaussian filtering and connected component approach. In Proceedings of the 2016 World Conference On futuristic Trends in Research and Innovation for Social Welfare (Startup Conclave), Coimbatore, India, 29 February 2016–1 March 2016; pp. 1–5. [Google Scholar]

- Yun, H.S.; Kim, T.H.; Park, T.H. Speed-Bump Detection for Autonomous Vehicles by Lidar and Camera. J. Electr. Eng. Technol. 2019, 14, 2155–2162. [Google Scholar] [CrossRef]

- Varma, V.; Adarsh, S.; Ramachandran, K.; Nair, B.B. Real time detection of speed hump/bump and distance estimation with deep learning using GPU and ZED stereo camera. Procedia Comput. Sci. 2018, 143, 988–997. [Google Scholar] [CrossRef]

- Dewangan, D.K.; Sahu, S.P. Deep learning-based speed bump detection model for intelligent vehicle system using raspberry Pi. IEEE Sens. J. 2020, 21, 3570–3578. [Google Scholar] [CrossRef]

- Moujahid, A.; Tantaoui, M.E.; Hina, M.D.; Soukane, A.; Ortalda, A.; ElKhadimi, A.; Ramdane-Cherif, A. Machine learning techniques in ADAS: A review. In Proceedings of the 2018 International Conference on Advances in Computing and Communication Engineering (ICACCE), Paris, France, 22–23 June 2018; pp. 235–242. [Google Scholar]

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and< 0.5 MB model size. arXiv 2016, arXiv:1602.07360. [Google Scholar]

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861. [Google Scholar]

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520. [Google Scholar]

- Howard, A.; Sandler, M.; Chen, B.; Wang, W.; Chen, L.C.; Tan, M.; Chu, G.; Vasudevan, V.; Zhu, Y.; Pang, R.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324. [Google Scholar]

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258. [Google Scholar]

- Zhang, X.; Zhou, X.Y.; Lin, M.X.; Sun, R. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856. [Google Scholar]

- Ma, N.N.; Zhang, X.Y.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 122–138. [Google Scholar]

- Han, K.; Wang, Y.H.; Tian, Q.; Guo, J.Y.; Xu, C.J.; Xu, C. GhostNet: More Features from Cheap Operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Electr Network, Seattle, WA, USA, 14–19 June 2020; pp. 1577–1586. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 20–26 July 2017; pp. 6517–6525. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Chen, J.; Kao, S.h.; He, H.; Zhuo, W.; Wen, S.; Lee, C.H.; Chan, S.H.G. Run, Don’t Walk: Chasing Higher FLOPS for Faster Neural Networks. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 12021–12031. [Google Scholar]

- Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. SimAM: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 11863–11874.

- Li, Y.; Chen, Y.; Dai, X.; Chen, D.; Liu, M.; Yuan, L.; Liu, Z.; Zhang, L.; Vasconcelos, N. MicroNet: Improving image recognition with extremely low FLOPs. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 468–477.

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.

- Zhu, X.; Hu, H.; Lin, S.; Dai, J. Deformable convnets v2: More deformable, better results. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9308–9316.

- Qi, Y.; He, Y.; Qi, X.; Zhang, Y.; Yang, G. Dynamic snake convolution based on topological geometric constraints for tubular structure segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 4–6 October 2023; pp. 6070–6079.

- Long, Q.; Jin, Y.; Song, G.; Li, Y.; Lin, W. Graph structural-topic neural network. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Virtual Event, 6–10 July 2020; pp. 1065–1073.

- Wang, W.; Dai, J.; Chen, Z.; Huang, Z.; Li, Z.; Zhu, X.; Hu, X.; Lu, T.; Lu, L.; Li, H. InternImage: Exploring large-scale vision foundation models with deformable convolutions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14408–14419.

- Yu, F.; Wang, D.; Shelhamer, E.; Darrell, T. Deep layer aggregation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2403–2412.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12993–13000.

- Zhang, Y.-F.; Ren, W.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and efficient IOU loss for accurate bounding box regression. Neurocomputing 2022, 506, 146–157.

- Gevorgyan, Z. SIoU loss: More powerful learning for bounding box regression. arXiv 2022, arXiv:2205.12740.

- Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding Box Regression Loss with Dynamic Focusing Mechanism. arXiv 2023, arXiv:2301.10051.

- Zhang, H.; Xu, C.; Zhang, S. Inner-IoU: More Effective Intersection over Union Loss with Auxiliary Bounding Box. arXiv 2023, arXiv:2311.02877.

- Sui, L.; Zhang, C.-L.; Wu, J. Salvage of supervision in weakly supervised object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14227–14236.

- Le, H.-B.; Kim, T.D.; Ha, M.-H.; Tran, A.L.Q.; Nguyen, D.-T.; Dinh, X.-M. Robust Surgical Tool Detection in Laparoscopic Surgery using YOLOv8 Model. In Proceedings of the 2023 International Conference on System Science and Engineering (ICSSE), Ho Chi Minh, Vietnam, 27–28 July 2023; pp. 537–542.

- Li, Y.; Wang, Y.; Zhao, H. CB-YOLOv5 Algorithm for Small Target Detection in Aerial Images. In Proceedings of the 2023 4th International Conference for Emerging Technology (INCET), Belgaum, India, 26–28 May 2023; pp. 1–7.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).