Abstract

The flexibility and versatility associated with autonomous mobile robots (AMR) have facilitated their integration into different types of industries and tasks. However, as the main objective of their implementation on the factory floor is to optimize processes and, consequently, the time associated with them, it is necessary to take into account the environment and congestion to which they are subjected. Localization, on the shop floor and in real time, is an important requirement to optimize the AMRs’ trajectory management, thus avoiding livelocks and deadlocks during their movements in partnership with manual forklift operators and logistic trains. Three of the most commonly used localization techniques in indoor environments (time of flight, angle of arrival, and time difference of arrival), as well as two of the most commonly used indoor localization methods in industry (ultra-wideband and ultrasound), are presented and compared in this paper. Furthermore, the paper identifies and compares three industrial indoor localization solutions, Qorvo, Eliko Kio, and Marvelmind, implemented on an industrial mobile platform, which is its main contribution. These solutions can be applied to both AMRs and other mobile platforms, such as forklifts and logistic trains. In terms of results, the Marvelmind system, which uses an ultrasound method, was the best solution.

1. Introduction

To become more competitive, flexible, and productive, many companies are remodeling and investing in their factory floors. Industry is experiencing a new era of high technological development, and the integration of mobile platforms into industrial processes and tasks is increasingly common. Many companies are currently focused on developing industrial systems that are fully automated and more flexible [1]. This flexibility makes such systems suitable for use in different industrial stages or environments.

Autonomous guided vehicles (AGVs) are mobile platforms typically used to transport materials between workstations or warehouses without physical guides such as magnetic lines. Their increasing use on shop floors is related to their robustness and flexibility [2], and they contribute to increasing the efficiency and effectiveness of the production process.

In modern industries, AMR systems are a very attractive solution for increasing the level of automation in factory logistics [3], and their use has become widespread over the last few decades.

The most modern industrial facilities have integrated a hybrid fleet of mobile platforms on their factory floors. Manual platforms, such as logistic trains and forklift trucks, continue to perform their tasks, now alongside autonomous mobile platforms, commonly known as mobile robots. For both to interact well, especially in path management, all these platforms must know their position and orientation so that their movement is safe and smooth [4]; providing this information is the main goal of localization systems.

Indoor localization is a key technology for mobile platforms (MP) [5], such as AMRs, as it enables a platform to determine its position and orientation within an indoor environment. There are several approaches to indoor localization, each with its own advantages and disadvantages.

In addition, the characteristics of each industrial environment must be taken into consideration to obtain an efficient robot localization system. A common solution is to combine more than one localization technology and apply a sensor fusion algorithm.

Exchanging data between localization systems in real time, and consequently exchanging maps and trajectories, allows the robot to estimate its pose more stably and to move more smoothly on the factory floor. The AMRs’ pose data are also very important for the robot fleet management algorithm.

This study is part of a project that aims to integrate autonomous mobile robots (AMRs) with logistic trains. To plan the AMRs’ routes effectively, accurate information about the positions of the logistic trains is essential.

Considering the previous assumptions, this paper discusses the integration and comparison of different indoor localization systems for mobile platforms in an industrial environment. The main goal is to select a real-time location system to be implemented in all mobile platforms of an industrial production line. Knowing the real location of each vehicle will allow the AMR fleet management software to plan the AMRs’ paths more efficiently and accurately, reducing conflicts between the different mobile platforms on the shop floor (AMRs, forklifts, logistic trains, and others).

In Section 2, the state of the art in indoor localization systems, technologies, and techniques is presented. Section 3 describes the localization technologies and techniques most commonly used in indoor environments to locate objects and/or persons. Section 4 presents the systematic framework employed to address the research objectives. Section 5 presents the comparison results of the different indoor localization systems, obtained in an industrial scenario with an AMR, and analyzes the results of each industrial localization system against the ground truth. Finally, Section 6 presents the conclusions and the contribution of this research.

This paper stands out from most of the literature on indoor localization, as it quantitatively compares three industrial systems available on the market, with tests carried out in a real environment, making it easier to choose a system for future integration.

2. Related Work

Indoor localization refers to the process of determining the location of a device or a person inside a building or an enclosed space [6]. It is an important technology that has numerous applications in various industries and sectors, including retail, healthcare [7], transportation [8], industrial automation [9], public safety [10], and entertainment [11].

The importance of indoor localization lies in the fact that it enables businesses and organizations to better understand and optimize the movement and behavior of people and assets within their premises [12]. For example, indoor localization can help a retailer track customer movement and engagement in its store, a healthcare facility monitor the location and status of its medical equipment and staff, a transportation company optimize the delivery of packages and goods [13], and a factory automate [14] and monitor the production process [15].

There are different types of indoor localization systems, each using various technologies and methods to determine the location of a device or a person. Common technologies used in indoor localization include radio frequency (RF) signals, wireless technologies (e.g., WiFi, Bluetooth), ultrasonic (US) signals, and infrared (IR) signals. Geometric methods [16], such as trilateration and triangulation, are often used to calculate the position of a device from its distances or angles to multiple reference points [17]. Probabilistic methods, such as Kalman filters and particle filters, are also used to estimate the location from statistical models and sensor data [14,18].
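The probabilistic approach mentioned above can be illustrated with its simplest case: a scalar Kalman filter that smooths noisy position readings along one axis. This is a minimal sketch, not the implementation of any cited system; it assumes a stationary (random-walk) position model and known measurement and process noise variances, whose values here are hypothetical.

```python
import random


def kalman_1d(measurements, meas_var, process_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter with a random-walk (constant-position) motion model.

    measurements: noisy position readings (m)
    meas_var:     measurement noise variance (m^2), assumed known
    process_var:  process noise variance added per step (m^2), assumed known
    x0, p0:       initial estimate and its variance
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the position is assumed unchanged, but its uncertainty grows.
        p = p + process_var
        # Update: weight the new measurement by the Kalman gain k in [0, 1].
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


# Usage: hypothetical readings around 2 m with 10 cm standard deviation.
random.seed(0)
readings = [2.0 + random.gauss(0.0, 0.1) for _ in range(200)]
filtered = kalman_1d(readings, meas_var=0.01, process_var=1e-5)
print(f"last raw reading: {readings[-1]:.3f} m, filtered: {filtered[-1]:.3f} m")
```

A small `process_var` tells the filter to trust its motion model, which smooths more strongly but reacts more slowly when the platform actually moves.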

Regarding AMRs, several technologies are currently used in industry to localize autonomous mobile platforms, such as the indoor/outdoor Global Positioning System (GPS) [19,20], 2D/3D sensors, vision systems, radio frequency identification (RFID) tags [21,22], and barcodes [23,24,25]. These technologies differ in characteristics such as accuracy; therefore, the purpose of each robot must be taken into account when choosing among them.

In recent years, machine learning (ML) techniques [26,27,28,29] have also been applied to indoor localization to improve the accuracy and adaptability of the systems.

Concerning the transportation and logistics sectors, indoor localization systems can be used in warehouses, distribution centers, and other transportation and logistics environments to track the movement and status of packages [30], vehicles, and personnel. This can improve the efficiency and accuracy of package delivery [31] and inventory management [32], as well as reducing the risk of accidents and errors. For example, an indoor localization system can help a warehouse worker locate a specific package or pallet more quickly, or alert a driver when they are approaching a restricted area.

In industrial automation and smart factories, this type of localization system can be used in factories and other industrial environments to automate and monitor the production process. This can improve the efficiency, quality, and safety of manufacturing operations [33], as well as enable the use of advanced technologies such as robotics [34] and the Internet of Things (IoT) [35]. For example, an indoor localization system can be used to track the location and status of manufacturing equipment and materials, or to guide autonomous vehicles and robots through the factory [18].

One common approach is to use a fixed infrastructure, such as a network of stationary beacons or sensors [36,37], to determine the platform’s position. The platform can estimate its position from the strength of the signals it receives from each beacon [17]. This approach is relatively simple and accurate, but it requires installing and maintaining a fixed infrastructure, which may not be practical in all situations [36].
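To turn signal strength into a distance, a widely used textbook model is the log-distance path-loss model. The sketch below inverts it; the reference strength at 1 m and the path-loss exponent are hypothetical defaults that, in practice, must be calibrated for each site, and none of the cited systems is claimed to use exactly this model.

```python
def rssi_to_distance(rssi_dbm, rssi_at_1m_dbm=-59.0, path_loss_exponent=2.0):
    """Invert the log-distance path-loss model.

    RSSI(d) = RSSI(1 m) - 10 * n * log10(d)
    =>  d = 10 ** ((RSSI(1 m) - RSSI) / (10 * n))

    Both default parameters are hypothetical placeholders (free-space n = 2).
    """
    return 10.0 ** ((rssi_at_1m_dbm - rssi_dbm) / (10.0 * path_loss_exponent))


# Usage with the hypothetical defaults:
print(rssi_to_distance(-79.0))  # 10.0
print(rssi_to_distance(-82.0))  # about 14.1 m for a 3 dB weaker reading
```

The second call shows why signal-strength ranging is sensitive to fading and reflections: a 3 dB fluctuation at 10 m shifts the estimate by roughly 4 m.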

Another approach is to use computer vision techniques [38] to localize the robot or other platform. This can involve using visual features such as roofs [39], corners [40], edges, textures, or fiducial markers [41] in the environment to determine the robot’s position and orientation. This approach is generally more flexible and can work in a variety of environments, but it may be less accurate than other methods, particularly in cluttered or poorly lit environments.

Another option is to use Inertial Measurement Units (IMUs) to determine the platform’s position and orientation [42]. IMUs are sensors that measure acceleration and angular velocity and can be used in mobile robotics to track platform movement over time when integrated with other robot localization systems. This approach is relatively simple and can work in a variety of environments, but it may be prone to drift over time when used standalone, leading to errors in the platform’s position estimates [43].
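The drift mentioned above follows directly from double integration: a constant accelerometer bias b produces a position error of roughly b·t²/2, which grows quadratically in time. The sketch below dead-reckons a platform that is actually standing still; the 100 Hz rate and the 0.01 m/s² bias are hypothetical values chosen for illustration.

```python
def dead_reckon_1d(accels, dt):
    """Integrate acceleration twice (semi-implicit Euler) to get 1-D positions."""
    v = 0.0
    x = 0.0
    positions = []
    for a in accels:
        v += a * dt  # velocity from acceleration
        x += v * dt  # position from velocity
        positions.append(x)
    return positions


# Usage: a stationary platform whose accelerometer has a small constant bias.
dt = 0.01               # hypothetical 100 Hz IMU
bias = 0.01             # hypothetical bias in m/s^2
samples = round(60 / dt)  # one minute of data
drift = dead_reckon_1d([bias] * samples, dt)
print(f"position error after 60 s: {drift[-1]:.2f} m")  # close to 0.5 * bias * 60**2 = 18 m
```

This is why IMUs are normally fused with an absolute reference, such as beacons or a laser map, rather than used standalone.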

Indoor Global Positioning System (GPS) systems are specialized versions of the GPS and are designed to work in indoor environments, where traditional GPS signals may be weak or unavailable [44]. These systems use a combination of technologies, such as Wi-Fi, Bluetooth, or ultra-wideband (UWB), to determine the location of a device or platform within an indoor space [45]. These systems are generally more accurate than a traditional GPS when used in indoor environments [46], but their accuracy can vary depending on the specific technologies and infrastructure used.

Finally, some indoor localization systems combine these approaches, exploiting the strengths of different methods to achieve the best possible accuracy and flexibility [45]. For example, a robot may use a fusion algorithm to improve its accuracy and robustness in different environments [47]. However, all these localization systems, techniques, and methods are subject to signal propagation problems, such as reflections of the signal itself, which delay its detection and cause errors in locating the object or person. Section 5, dedicated to the data analysis, validates these phenomena for the different indoor localization systems tested.

To the authors’ knowledge, no previous article has presented a practical, implemented comparison of localization systems such as the one in this article.

3. Localization Techniques and Methods

This section is divided into two subsections. The first discusses some of the most commonly used methodologies for obtaining the position of a given object or person, in different environments and in real time. The second subsection lists, describes, and characterizes some of the technologies that can be found in localization systems.

3.1. Localization Techniques

Several different methods can be used for indoor localization, each with its strengths and limitations. These methods can be broadly classified into three categories: trilateration, fingerprinting, and dead reckoning. This subsection presents three methods of the first, geometric category: time of flight (ToF), angle of arrival (AoA), and time difference of arrival (TDoA). Strictly speaking, AoA measures angles rather than distances and therefore relies on triangulation.

3.1.1. Time of Flight (ToF)

ToF, or time of arrival (ToA), is a method for measuring the distance between two radio transceivers [48]. It uses the signal propagation time ($t_p$) between the transmitter ($T_x$) and the receiver ($R_x$) to determine the distance between them. The ToF value multiplied by the signal velocity ($v$) provides the physical distance ($d_{ij}$) between $T_x$ and $R_x$ (see Equation (1)).

$$d_{ij} = v\,(t_j - t_i) = v\,t_p \qquad (1)$$

where $t_i$ is the time when $T_x$, in pose $i$, sends a message to $R_x$, in pose $j$. The latter receives the signal at $t_j$, where $t_j = t_i + t_p$ ($t_p$ is the time taken by the signal to travel between $T_x$ and $R_x$). The distance between $i$ and $j$, $d_{ij}$, is therefore given by Equation (1), where $v$ is the speed of the signal.

The principal requirement of the ToF method is synchronization between transmitters and receivers. The signal bandwidth and the sampling rate affect the system accuracy: a low sampling rate (in time) reduces the ToF resolution. In industrial indoor environments, this method may produce significant localization errors caused by obstacles that deflect the signals emitted from the transmitter to the receiver.
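The ToF relation, distance = v × (t_receive − t_send), and the resolution argument above can be made concrete with a few lines of arithmetic. The sketch below is illustrative only; the speed of sound is an approximate value for air at about 20 °C, and the timing values are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, RF signals such as UWB
SPEED_OF_SOUND = 343.0          # m/s, approximate value in air at about 20 deg C


def tof_distance(t_send, t_receive, speed):
    """distance = v * (t_receive - t_send); transmitter and receiver clocks assumed synchronized."""
    tof = t_receive - t_send
    if tof < 0:
        raise ValueError("receive time precedes send time; check clock synchronization")
    return speed * tof


def range_resolution(sample_rate_hz, speed):
    """Distance travelled by the signal during one timing sample (1 / sample rate)."""
    return speed / sample_rate_hz


# Usage
print(tof_distance(0.0, 10e-9, SPEED_OF_LIGHT))  # about 3.0 m for a 10 ns RF flight
print(tof_distance(0.0, 10e-3, SPEED_OF_SOUND))  # about 3.43 m for a 10 ms ultrasound flight
print(range_resolution(1e9, SPEED_OF_LIGHT))     # about 0.30 m per 1 ns sample
```

The comparison explains why RF ToF demands very fine timing and tight synchronization (every nanosecond of error is about 30 cm), whereas ultrasound, which is roughly a million times slower, tolerates much coarser clocks.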

3.1.2. Angle of Arrival (AoA)

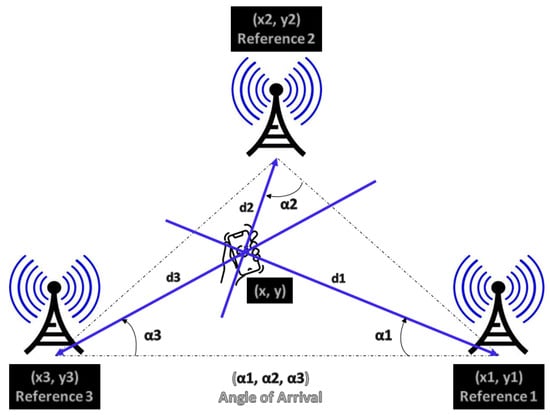

Using multiple receiver antennas arranged in an antenna array [49], it is possible to estimate the angle at which the transmitted signal impinges on the receivers; see Figure 1.

Figure 1.

Angle of arrival method, adapted.

The AoA approach uses these angles, $\theta_i$, and the antenna positions, $(x_i, y_i)$, which are known in advance, to determine the two-dimensional (2D) or three-dimensional (3D) position of a transmitter. These data can be used for tracking or navigation purposes. Equation (2) represents the generic principle for obtaining the object position $(x, y)$ with the AoA method in 2D:

$$\tan\theta_i = \frac{y - y_i}{x - x_i} \qquad (2)$$

where $\theta_i$ is the angle of arrival measured at antenna $i$, and $i$ is the antenna number 1, 2, or 3.

The two best-known drawbacks of AoA are that the accuracy of the transmitter’s estimated position deteriorates as the distance between transmitter and receiver increases, and that the hardware is much more expensive and complex than in other techniques.
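In 2D, each receiver at a known position (x_i, y_i) measures a bearing θ_i satisfying tan θ_i = (y − y_i)/(x − x_i), so two non-parallel bearings already fix the transmitter at the intersection of two lines. The sketch below assumes ideal bearings measured from the map X axis and hypothetical receiver positions; it also quantifies the first drawback above, since a fixed angular error produces a position error proportional to the distance.

```python
import math


def aoa_position(a1, theta1, a2, theta2):
    """Intersect two bearing lines p = a_k + s_k * (cos(theta_k), sin(theta_k)).

    a1, a2: known receiver positions (x, y); theta1, theta2: bearings in radians.
    """
    (x1, y1), (x2, y2) = a1, a2
    det = math.sin(theta1 - theta2)  # determinant of the 2x2 linear system
    if abs(det) < 1e-9:
        raise ValueError("bearings are (nearly) parallel; position is undetermined")
    # Cramer's rule for the distance s1 along the first bearing line.
    s1 = (-(x2 - x1) * math.sin(theta2) + (y2 - y1) * math.cos(theta2)) / det
    return x1 + s1 * math.cos(theta1), y1 + s1 * math.sin(theta1)


def lateral_error(distance_m, angle_error_deg):
    """Approximate position error caused by a small bearing error at a given range."""
    return distance_m * math.radians(angle_error_deg)


# Usage: receivers at (0, 0) and (4, 0) observe a transmitter at (2, 2).
print(aoa_position((0, 0), math.radians(45), (4, 0), math.radians(135)))  # close to (2, 2)
print(lateral_error(10.0, 1.0))  # about 0.17 m for a 1 degree error at 10 m
```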

3.1.3. Time Difference of Arrival (TDoA)

This method measures the difference in ToA at two or more sensors; in other words, it estimates the position of a mobile transmitter from the differences in signal propagation time between the transmitter and multiple receivers. To calculate the location of a transmitter, at least three receivers and strict synchronization between them are required [50]. Unlike ToF techniques, where synchronization is needed between the transmitter and the receiver, TDoA only requires synchronization between the receivers. The signal bandwidth, the sampling rate, and non-line-of-sight conditions between the transmitter and the receivers affect the accuracy of the system.
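Each TDoA value constrains the transmitter to a hyperbola, and the position is where the hyperbolas agree. The sketch below solves this by brute-force grid search, chosen for clarity rather than speed; practical systems typically use closed-form or iterative solvers instead. The receiver layout, the 5 cm grid step, and the RF propagation speed are assumptions for illustration.

```python
import numpy as np

SPEED = 299_792_458.0  # m/s, assuming an RF (e.g., UWB) signal


def tdoa_from_position(tx, receivers, speed=SPEED):
    """Arrival-time differences t_k - t_0 observed by synchronized receivers."""
    d = np.linalg.norm(receivers - tx, axis=1)
    return (d[1:] - d[0]) / speed


def tdoa_grid_search(tdoas, receivers, x_lim, y_lim, step=0.05, speed=SPEED):
    """Return the grid point whose predicted range differences best match the measured ones."""
    xs = np.arange(x_lim[0], x_lim[1] + step / 2, step)
    ys = np.arange(y_lim[0], y_lim[1] + step / 2, step)
    gx, gy = np.meshgrid(xs, ys)
    candidates = np.column_stack([gx.ravel(), gy.ravel()])  # (M, 2)
    dists = np.linalg.norm(candidates[:, None, :] - receivers[None, :, :], axis=2)  # (M, R)
    predicted = dists[:, 1:] - dists[:, :1]  # range differences relative to receiver 0 (m)
    measured = np.asarray(tdoas) * speed     # convert time differences to metres
    cost = np.sum((predicted - measured) ** 2, axis=1)
    return candidates[np.argmin(cost)]


# Usage: four hypothetical receivers at the corners of a 10 m x 10 m area.
receivers = np.array([[0.0, 0.0], [10.0, 0.0], [10.0, 10.0], [0.0, 10.0]])
true_tx = np.array([3.2, 6.7])
print(tdoa_grid_search(tdoa_from_position(true_tx, receivers), receivers, (0, 10), (0, 10)))
```

Note that only the receivers appear in the model: the transmitter's own clock never enters the computation, which is the synchronization advantage described above.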

3.2. Localization Methods

Indoor localization refers to the use of methods to determine the location of a device or person inside a building or other enclosed structure, and several different technologies can be used for it. This subsection presents two technologies used in industrial systems: ultra-wideband (UWB) and ultrasound (US).

3.2.1. Ultra-Wideband (UWB)

UWB radio signals can penetrate a variety of materials, although metals and liquids can interfere with them. This penetrating capability, together with UWB’s robustness to interference from other signals, makes ultra-wideband very attractive for indoor localization [51]. This radio technology enables very accurate ToF measurements, leading to centimeter-level distance and location accuracy.

UWB positioning systems can operate in two modes: passive and active. The passive mode does not use a UWB tag and relies only on signal reflections to obtain the position of the object or person. In this case, the positions of the system’s transmitters and receivers must be known in advance, so that the object or person can then be located from how it intercepts the signals exchanged between transmitters and receivers. An active UWB-based positioning system, on the other hand, uses a battery-powered UWB tag. The system locates and tracks the tag in indoor environments through ultra-short UWB pulses transmitted from the tag to the fixed UWB sensors. The sensors send the collected data, via a wireless network, to the software platform, which analyzes, computes, and displays the position of the UWB tag in real time.

Furthermore, UWB offers several advantages in indoor environments: long battery life for UWB tags, robust flexibility, high data rates, high penetrating power, low power consumption and transmission power, good positioning accuracy and performance, and low susceptibility to interference and multipath effects. However, UWB is expensive to scale, because more UWB sensors must be deployed to cover a wide area with good performance.
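Accurate UWB ToF measurement does not necessarily require synchronized clocks. One common scheme in IEEE 802.15.4 UWB ranging is single-sided two-way ranging (SS-TWR), in which each device times an interval on its own clock and ToF = (T_round − T_reply)/2. The paper does not state which ranging scheme the Qorvo or Eliko devices use, so the sketch below is only an illustration; the 10 m distance, 200 µs reply delay, and 10 ppm clock error are hypothetical.

```python
C = 299_792_458.0  # speed of light (m/s)


def ss_twr_distance(t_round, t_reply):
    """Single-sided two-way ranging: ToF = (T_round - T_reply) / 2.

    t_round: poll sent -> response received, timed on the initiator's clock (s)
    t_reply: poll received -> response sent, timed on the responder's clock (s)
    """
    return C * (t_round - t_reply) / 2.0


# Usage: a tag 10 m away that replies after 200 us.
tof = 10.0 / C
t_reply = 200e-6
t_round = 2 * tof + t_reply
print(ss_twr_distance(t_round, t_reply))                # about 10.0 m
# If the responder clock runs 10 ppm fast, its reported T_reply is slightly too long:
print(ss_twr_distance(t_round, t_reply * (1 + 10e-6)))  # about 9.70 m, a ~0.3 m error
```

The second call shows why clock drift matters even without synchronization: it scales with the reply delay, which is the motivation for double-sided variants of two-way ranging.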

3.2.2. Ultrasound (US)

Mostly based on the ToF technique, US localization technology [52] calculates the distance between tags and nodes using ultrasound signals and the speed of sound. However, the speed of sound varies with atmospheric conditions, since factors such as temperature and humidity affect its propagation. Specific filtering algorithms, based on complex signal processing, can reduce environmental noise and consequently increase localization accuracy. To synchronize the system, the ultrasound signal is usually supplemented by radio frequency (RF) pulses.
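The temperature sensitivity can be quantified with the common linear approximation for the speed of sound in dry air, v ≈ 331.3 + 0.606·T m/s (T in °C). The sketch below ignores humidity and uses a hypothetical 10 m path measured at 30 °C by a system that assumes 20 °C.

```python
def speed_of_sound(temp_c):
    """Linear approximation for dry air: v = 331.3 + 0.606 * T (m/s, T in deg C)."""
    return 331.3 + 0.606 * temp_c


def ultrasound_range(tof_s, assumed_temp_c):
    """ToF ranging with the speed of sound evaluated at an assumed temperature."""
    return speed_of_sound(assumed_temp_c) * tof_s


# Usage: the true flight happens at 30 deg C, but the system assumes 20 deg C.
true_distance = 10.0
tof = true_distance / speed_of_sound(30.0)
estimated = ultrasound_range(tof, 20.0)
print(f"range error: {true_distance - estimated:.3f} m")  # about 0.17 m
```

A 10 °C mismatch thus costs roughly 1.7 % of the range, which is why ultrasound systems benefit from temperature compensation.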

4. Methodology

This section serves as a comprehensive guide to the research design, data collection methods, and analytical techniques used to ensure the validity, reliability, and robustness of the findings. By transparently outlining the steps taken to gather and analyze data, it provides a clear understanding of the research process, allowing for a critical assessment of the study’s methodology and its implications for the interpretation of results. Section 4.1, Testing Scenario and Indoor Localization Systems, presents the industrial indoor scenario where all the tests were carried out and the three indoor localization systems used. Section 4.2, Data Acquisition, presents the original data acquired from each localization system, and Section 4.3, Data Transformation, addresses the conversion of the points obtained by the various indoor systems to the robot’s referential.

4.1. Testing Scenario and Indoor Localization Systems

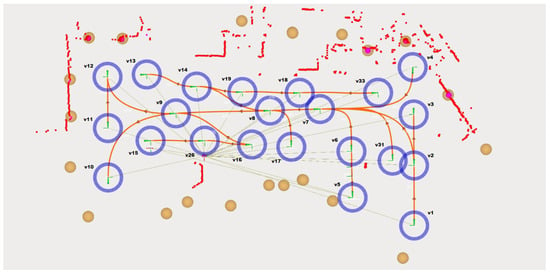

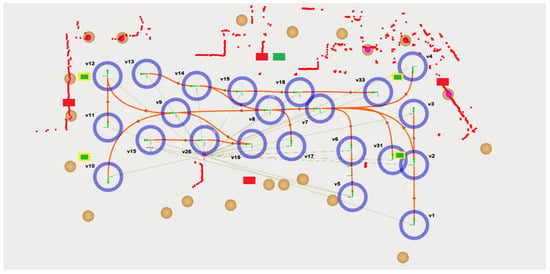

In mobile robotics, the map is nowadays usually built from natural landmarks and contours of the environment; however, for these tests, highly reflective beacons were used, represented by the brown circles in Figure 2. Because these beacons always keep the same position on the factory floor, the mobile platform’s localization system can compare, in real time, the stored 2D beacon locations, saved in a file, with the live positions given by the reflections of the safety laser scanner’s beams.

Figure 2.

Test scene. Image exported from Robot Operating System Visualization (RVIZ).

In this case, the robot localization system only uses the beacon positions and odometry to estimate the robot’s position and orientation. It must detect at least two beacons, represented by the red circles inside the beacon circles, to determine the exact robot location; otherwise, it relies only on odometry.
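Two beacons suffice because two point correspondences fix both the position and the heading of a planar robot: the angle between the mapped and the observed beacon-to-beacon vectors gives the heading, and the translation follows. The sketch below assumes noise-free observations with known beacon identities; the actual robot localization system fuses such observations with odometry in an EKF, which this sketch does not model.

```python
import math


def pose_from_two_beacons(m1, m2, r1, r2):
    """Robot pose (x, y, theta) in the map frame from two beacon correspondences.

    m1, m2: beacon positions stored in the map (x, y)
    r1, r2: the same beacons as observed by the laser, in the robot frame (x, y)
    """
    # Heading: rotation that turns the observed beacon-to-beacon vector into the mapped one.
    theta = (math.atan2(m2[1] - m1[1], m2[0] - m1[0])
             - math.atan2(r2[1] - r1[1], r2[0] - r1[0]))
    theta = math.atan2(math.sin(theta), math.cos(theta))  # wrap to [-pi, pi]
    c, s = math.cos(theta), math.sin(theta)
    # Translation: mapped beacon minus the rotated observation of the same beacon.
    x = m1[0] - (c * r1[0] - s * r1[1])
    y = m1[1] - (s * r1[0] + c * r1[1])
    return x, y, theta
```

With a single beacon, the robot could lie anywhere on a circle around it with any compatible heading, which is consistent with the two-beacon requirement stated above.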

The trajectory is composed of waypoints/vertices (blue circles) and edges (orange splines), the connecting paths between vertices along which the autonomous mobile robot moves. All edges are bidirectional, so the AMR can traverse them in both directions. Each vertex and each edge is associated with an ID number, randomly assigned by the trajectory editor module; the only requirement is that each ID is unique.
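The trajectory structure described above maps naturally onto an undirected graph keyed by unique IDs. The class below is a hypothetical illustration, not the trajectory editor’s actual data format; its `route` method uses breadth-first search, which minimizes the number of edges rather than the travelled length.

```python
from collections import deque


class TrajectoryGraph:
    """Hypothetical graph of waypoints (vertices) and bidirectional edges with unique IDs."""

    def __init__(self):
        self.vertices = {}   # vertex ID -> (x, y) in the map referential
        self.edges = {}      # edge ID -> (vertex ID, vertex ID)
        self._adjacent = {}  # vertex ID -> list of (neighbour vertex ID, edge ID)

    def add_vertex(self, vertex_id, x, y):
        if vertex_id in self.vertices:
            raise ValueError(f"duplicate vertex ID {vertex_id}")
        self.vertices[vertex_id] = (x, y)
        self._adjacent[vertex_id] = []

    def add_edge(self, edge_id, a, b):
        if edge_id in self.edges:
            raise ValueError(f"duplicate edge ID {edge_id}")
        if a not in self.vertices or b not in self.vertices:
            raise ValueError("both end vertices must exist before adding an edge")
        self.edges[edge_id] = (a, b)
        # Bidirectional: the AMR may traverse the edge from a to b or from b to a.
        self._adjacent[a].append((b, edge_id))
        self._adjacent[b].append((a, edge_id))

    def route(self, start, goal):
        """Edge IDs of a route with the fewest edges (breadth-first search), or None."""
        previous = {start: None}
        queue = deque([start])
        while queue:
            v = queue.popleft()
            if v == goal:
                break
            for neighbour, edge_id in self._adjacent[v]:
                if neighbour not in previous:
                    previous[neighbour] = (v, edge_id)
                    queue.append(neighbour)
        if goal not in previous:
            return None
        route = []
        v = goal
        while previous[v] is not None:
            v, edge_id = previous[v]
            route.append(edge_id)
        return route[::-1]
```

A fleet manager would normally weight edges by length or expected traversal time; the unweighted search keeps the sketch short.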

Indoor localization systems can be used for a variety of purposes, such as improving navigation, providing location-based services, and tracking the movements of people or objects within a building.

Several factors can affect the accuracy and reliability of indoor localization systems, including the type of technology used, the layout and environment of the building, and the accuracy of the underlying maps or reference points. To achieve reliable and accurate indoor localization, it is often necessary to use a combination of different technologies and techniques and to carefully calibrate and maintain the system.

Overall, indoor localization systems are an important tool for improving the efficiency, safety, and experience of people inside buildings, and have many potential applications in a wide range of industries.

The next subsections introduce the three industrial localization systems (Qorvo, Eliko Kio, and Marvelmind) compared in this paper. A final indoor localization system is also presented, the robot’s beacon-based extended Kalman filter (EKF) localization, which is considered the ground truth of the tests.

4.1.1. Qorvo

Qorvo’s ultra-wideband technology [53], based on Decawave’s impulse radio, allows tags to be located in indoor environments (Figure 3) with high precision and at a very low cost compared to other solutions on the market, such as Pozyx [54]. Other main features of this system are secure, low-power, and low-latency data communication.

Figure 3.

Qorvo tag.

4.1.2. Eliko Kio

The KIO system, developed by Eliko [55], is intended for 2D/3D indoor positioning of mobile UWB tags (Figure 4) relative to UWB anchors at fixed positions. The system is based on ToF measurements of radio pulses traveling between tags and anchors; a 2D setup requires at least three anchors and one mobile tag, and a 3D setup requires one more anchor. Due to the low intensity of the emitted radio signals, KIO devices can be used for human tracking; however, the positioning frequency decreases as the number of active tags increases.

Figure 4.

Eliko KIO tag.
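The three-anchor requirement for 2D positioning follows from trilateration: each ToF range defines a circle around an anchor, and subtracting the first circle equation from the others leaves a linear system in (x, y). The sketch below is a generic textbook least-squares formulation, not Eliko’s algorithm, and the anchor layout is hypothetical.

```python
import numpy as np


def trilaterate_2d(anchors, ranges):
    """Least-squares 2D position from ranges to >= 3 anchors at known positions.

    Subtracting the first range equation from the others gives, for each i >= 1:
    2(xi - x0) x + 2(yi - y0) y = r0^2 - ri^2 + xi^2 + yi^2 - x0^2 - y0^2
    """
    anchors = np.asarray(anchors, dtype=float)
    ranges = np.asarray(ranges, dtype=float)
    if len(anchors) < 3:
        raise ValueError("2D trilateration needs at least three anchors")
    x0, y0 = anchors[0]
    A = 2.0 * (anchors[1:] - anchors[0])
    b = (ranges[0] ** 2 - ranges[1:] ** 2
         + np.sum(anchors[1:] ** 2, axis=1) - (x0 ** 2 + y0 ** 2))
    solution, *_ = np.linalg.lstsq(A, b, rcond=None)
    return solution


# Usage: four hypothetical anchors around a 10 m x 8 m area, tag at (3, 4).
anchors = [(0.0, 0.0), (10.0, 0.0), (10.0, 8.0), (0.0, 8.0)]
tag = np.array([3.0, 4.0])
ranges = [np.hypot(*(tag - a)) for a in np.asarray(anchors)]
print(trilaterate_2d(anchors, ranges))  # approximately [3. 4.]
```

With noisy ranges, extra anchors make the least-squares solution over-determined and more robust; collinear anchors make the system degenerate and should be avoided in the layout.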

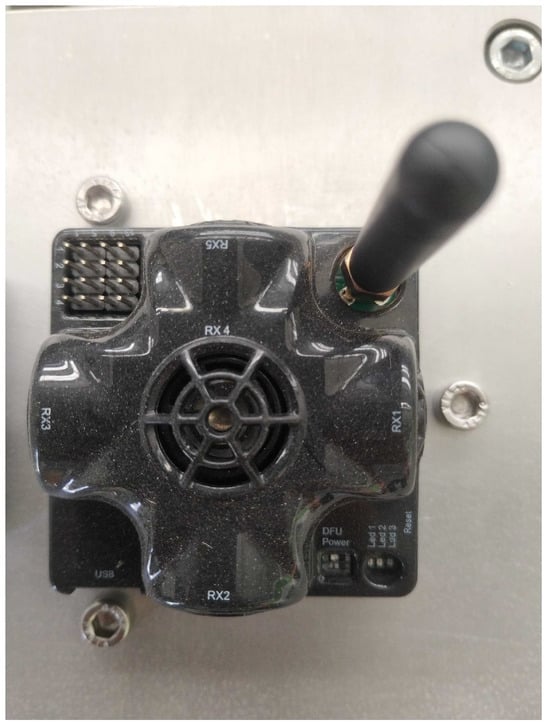

4.1.3. Marvelmind

The indoor positioning system by Marvelmind Robotics [56] (Figure 5) uses ultrasound ranging to find the position of one or more mobile sensor modules, also known as hedgehogs. The beacons (the transmitters) also use ultrasound ranging to determine their relative positions, which makes the Marvelmind system self-calibrating. The sensor modules have built-in rechargeable batteries. Through the application programming interface (API), each module can be configured as a beacon or a hedgehog, which gives the system greater flexibility. The maximum update rate for tracking a single hedgehog is 16 Hz. In addition to ultrasound, Marvelmind may also use other communication technologies for data transmission between beacons and tracked objects; Bluetooth and radio frequency communication are commonly used alongside ultrasound to enhance the capabilities of indoor positioning systems.

Figure 5.

Marvelmind tag.

4.1.4. EKF Beacons—Ground Truth

Table 1 presents a brief comparison of some features of the different systems. All data were taken from the manufacturers’ datasheets.

Table 1.

Device comparison—some features.

The high reflectivity of these beacons (Figure 6) and the large number of samples present on the factory floor give the robot localization system excellent accuracy and repeatability, making it the ground truth of these tests. Table 2 compares the robot position given by its localization system at each vertex with the vertex position stored in the trajectory data file. All these values are expressed in the robot map referential, i.e., the coordinate frame the robot uses to represent and navigate its environment. This referential is crucial for the robot to determine its position, orientation, and movement relative to the surrounding space.

Figure 6.

Beacon example.

Table 2.

Ground truth selection process.

The integration of indoor localization systems in industry has the potential to improve productivity, efficiency, and safety, as well as to create new opportunities for innovation and value creation. Therefore, the next subsections describe the implementation of the systems listed above, both on the AMR and in the industrial scenario.

4.1.5. Industrial Scenario—Systems Integration

To cover the whole robot map area with the different indoor localization systems, preliminary tests were carried out to determine the minimum number of tags required by each system, summarized in Table 3.

Table 3.

Minimum number of tags per localization system.

Figure 7, together with Figure 2, shows the distribution of the different localization systems across the plant floor (the colored rectangles), as well as the robot trajectories and the beacon map. To evaluate the systems as thoroughly as possible, the trajectory was designed to span the entire coverage area of the different indoor localization systems, because the greater the distance between the robot and the fixed tags, the greater the error associated with the robot’s position.

Figure 7.

Sensors distribution in the industrial environment.

In Figure 7, the Eliko Kio system is represented by the four red rectangles, two at the center of the image and one on each side. The Marvelmind system covered the whole scene with only four tags, represented by the yellow rectangles. The only system that required an additional tag was Qorvo, represented by five green rectangles, four of which are at positions similar to those of the Marvelmind tags. All these tags, regardless of their localization system, are expressed in the same referential.

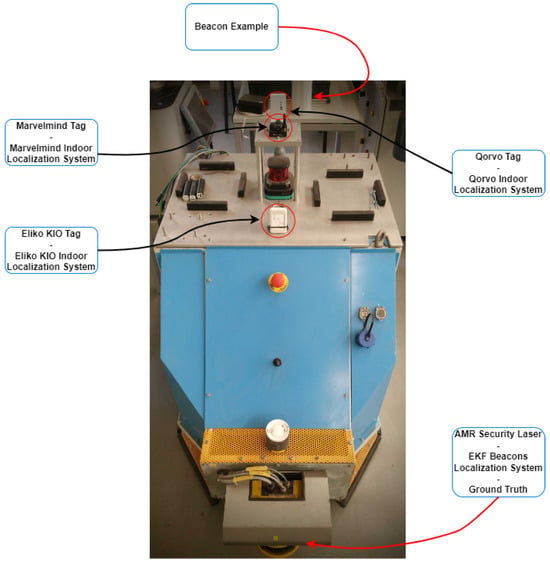

4.1.6. Autonomous Mobile Robot—Systems Integration

As shown in Figure 8, each tag has a specific position on the mobile platform, and all tags were powered by a portable power bank.

Figure 8.

Mobile platform sensors integration—system’s architecture.

To compare the positions obtained by the ground truth with those of the different indoor localization systems, it was necessary to relate the position of the SICK laser scanner to each of the onboard tags. Table 4 therefore presents the beacon data at each vertex (the robot row of Table 2) converted to the tag position of each of the three localization systems. These conversions were based on Equations (3) and (4).

$$x_n = x_o + d\cos\theta \qquad (3)$$

where $x_n$ corresponds to the X value of the new point, $x_o$ represents the X value of the original point, and $d$ is the modulus of the difference between the laser and tag positions along the robot’s X axis. The last parameter of the equation, $\theta$, is the angle, in radians, between the robot referential and the map referential at the original point.

$$y_n = y_o + d\sin\theta \qquad (4)$$

Here, $y_n$ corresponds to the Y value of the new point, and $y_o$ represents the Y value of the original point. The last two parameters, $d$ and $\theta$, are the same as in Equation (3), because the sensors were aligned along the robot’s X axis.

Table 4.

Beacons data conversion.
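Assuming Equations (3) and (4) take the form x_n = x_o + d·cos θ and y_n = y_o + d·sin θ, with the laser and the tag aligned along the robot’s X axis as stated above, the conversion is a single offset along the robot heading. The sketch below is illustrative; the offset and pose values in the usage line are hypothetical, not those of Table 4.

```python
import math


def laser_to_tag(x_o, y_o, theta, d):
    """Move a point from the laser position to a tag mounted on the robot's X axis.

    x_o, y_o: laser position in the map referential (m)
    theta:    robot heading in the map referential (rad)
    d:        laser-to-tag offset along the robot X axis (m); assumed positive when
              the tag is ahead of the laser (use a negative value if it is behind)
    """
    return x_o + d * math.cos(theta), y_o + d * math.sin(theta)


# Usage: robot heading along +Y, tag 0.5 m ahead of the laser.
print(laser_to_tag(1.0, 2.0, math.pi / 2, 0.5))  # approximately (1.0, 2.5)
```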

4.2. Data Acquisition

This subsection presents the robot pose reported by each localization system at each vertex of the robot’s map, and its correspondence to the beacon data.

4.2.1. Beacons Data

Table 5 shows the average, the standard deviation, and the maximum and minimum values reported by the AMR localization system at each vertex.

Table 5.

AMR localization system—Beacons data.
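The per-vertex summaries reported in Tables 5 to 8 (average, standard deviation, maximum, and minimum) can be computed as in the sketch below. Whether the paper uses the sample or the population standard deviation is not stated; the sketch assumes the sample form (`ddof=1`), which needs at least two readings per vertex.

```python
import numpy as np


def vertex_statistics(readings):
    """Per-vertex mean, standard deviation, maximum and minimum of (x, y) readings.

    readings: dict mapping a vertex ID to a sequence of (x, y) samples.
    The sample standard deviation (ddof=1) is an assumption, not stated in the paper.
    """
    stats = {}
    for vertex_id, samples in readings.items():
        pts = np.asarray(samples, dtype=float)
        stats[vertex_id] = {
            "mean": pts.mean(axis=0),
            "std": pts.std(axis=0, ddof=1),
            "max": pts.max(axis=0),
            "min": pts.min(axis=0),
        }
    return stats
```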

4.2.2. Marvelmind

Table 6 shows the average, the standard deviation, and the maximum and minimum values reported by the Marvelmind localization system at each vertex.

Table 6.

Indoor localization system—Marvelmind data.

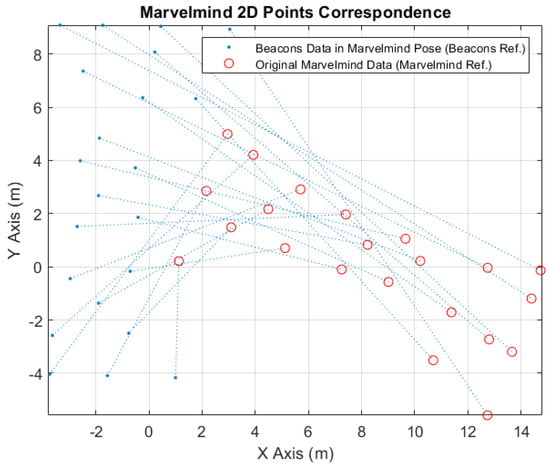

Figure 9 shows the correspondence between the beacon data converted to the Marvelmind robot tag position (blue points) and the original data received from the Marvelmind system (red circles).

Figure 9.

Marvelmind 2D Points Correspondence.

4.2.3. Eliko Kio

Table 7 shows the average, the standard deviation, and the maximum and minimum values reported by the Eliko Kio localization system at each vertex.

Table 7.

Indoor localization system—Eliko Kio data.

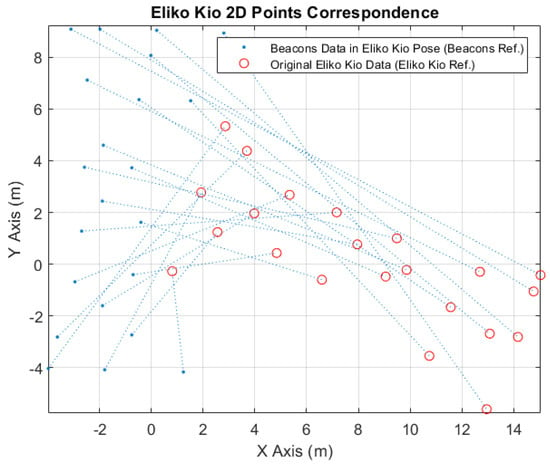

Figure 10 shows the correspondence between the beacon data converted to the Eliko Kio robot tag position (blue points) and the original data received from the Eliko Kio system (red circles).

Figure 10.

Eliko Kio 2D Points Correspondence.

4.2.4. Qorvo

Table 8 shows the average, the standard deviation, and the maximum and minimum values reported by the Qorvo localization system at each vertex.

Table 8.

Indoor localization system—Qorvo Data.

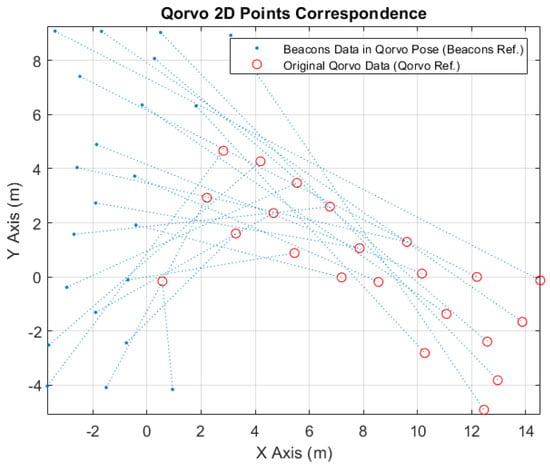

Figure 11 shows the correspondence between the beacon data converted to the Qorvo robot tag position (blue points) and the original data received from the Qorvo system (red circles).

Figure 11.

Qorvo 2D points correspondence.

4.3. Data Transformation

This subsection presents the transformation matrices that convert the points from each localization system’s referential to the robot’s map referential. The figures also show how closely the transformed points of each localization system match the corresponding ground-truth values.

After acquiring the sets of points in the different referentials, the next step was to calculate the transform between each pair (Marvelmind to ground truth, Eliko Kio to ground truth, and Qorvo to ground truth). Using a least squares (LS) approximation in MATLAB, the following transformation matrices and the respective errors in each coordinate were obtained.
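The paper’s exact LS formulation in MATLAB is not given here, and its matrices may include terms that a rigid fit lacks (for example, scale or shear). One common least-squares formulation for aligning corresponding 2D point sets is the rigid rotation-plus-translation fit via SVD (the Kabsch method), sketched below in Python/NumPy under the assumption that correspondences are known (the same vertex order in both sets).

```python
import numpy as np


def fit_rigid_2d(src, dst):
    """Least-squares rotation + translation mapping src points onto dst (Kabsch/SVD).

    Returns a 3x3 homogeneous matrix T such that dst ~= T @ [x, y, 1].
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)  # 2x2 cross-covariance of centered points
    U, _, Vt = np.linalg.svd(H)
    # Guard against returning a reflection (det = -1) instead of a rotation.
    D = np.diag([1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    T = np.eye(3)
    T[:2, :2] = R
    T[:2, 2] = t
    return T


def apply_transform(T, pts):
    """Apply a 3x3 homogeneous transform to an (N, 2) array of points."""
    pts = np.asarray(pts, dtype=float)
    return pts @ T[:2, :2].T + T[:2, 2]
```

Restricting the fit to a rigid transform means it cannot absorb a scale mismatch between referentials; any such mismatch then shows up in the residual errors rather than being hidden by the transform.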

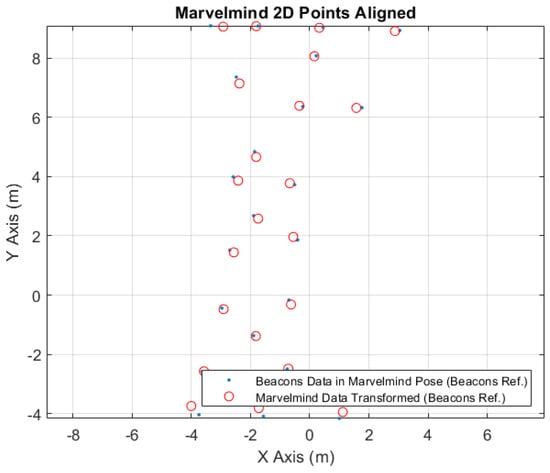

4.3.1. Marvelmind

Equations (5)–(7) represent the transformation matrix from the Marvelmind referential to the Beacons referential, the ground truth referential.

Figure 12 shows the aligned points for the Marvelmind localization system. The blue points represent the robot positions acquired from the robot localization system and converted to the Marvelmind robot tag position. The red circles are the original robot positions acquired from the Marvelmind system and transformed to the robot localization system referential; see Table 9. Comparing these two types of points after the conversion yields the coordinate errors shown in Table 10.

Figure 12.

Marvelmind 2D points aligned.

Table 9.

Indoor localization system—Marvelmind new points.

Table 10.

Marvelmind localization system—errors.
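Once both point sets share the ground-truth referential, the errors can be computed point by point. The sketch below computes per-coordinate errors, taken here as absolute values (an assumption), and adds Euclidean-distance summaries (mean, RMSE, maximum) that are illustrative and not metrics reported in the paper. The same function applies unchanged to the Eliko Kio and Qorvo point sets.

```python
import numpy as np


def alignment_errors(reference, aligned):
    """Per-point coordinate and Euclidean errors between ground truth and aligned points."""
    ref = np.asarray(reference, dtype=float)
    est = np.asarray(aligned, dtype=float)
    diff = est - ref
    euclidean = np.linalg.norm(diff, axis=1)
    return {
        "abs_x": np.abs(diff[:, 0]),
        "abs_y": np.abs(diff[:, 1]),
        "euclidean": euclidean,
        "mean": float(euclidean.mean()),
        "rmse": float(np.sqrt(np.mean(euclidean ** 2))),
        "max": float(euclidean.max()),
    }
```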

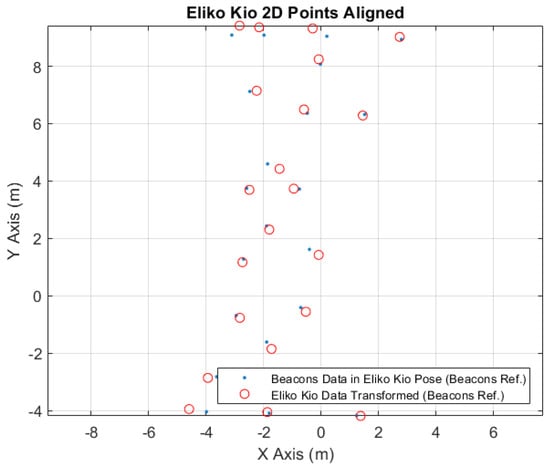

4.3.2. Eliko Kio

Equations (8)–(10) represent the transformation matrix from the Eliko Kio referential to the Beacons referential, the ground truth referential.

Figure 13 shows the aligned points for the Eliko Kio localization system. The blue points represent the robot positions acquired from the robot localization system and converted to the Eliko Kio robot tag position. The red circles are the original robot positions acquired from the Eliko Kio system and transformed to the robot localization system referential; see Table 11. Comparing these two types of points after the conversion yields the coordinate errors shown in Table 12.

Figure 13.

Eliko Kio 2D points aligned.

Table 11.

Indoor localization system—Eliko Kio new points.

Table 12.

Eliko Kio localization system—errors.

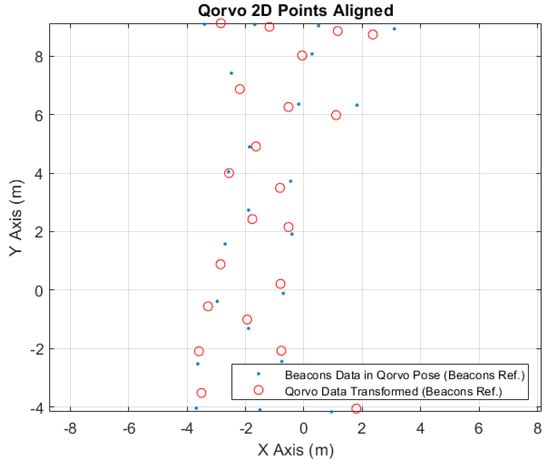

4.3.3. Qorvo

Equations (11)–(13) give the transformation matrix from the Qorvo reference frame to the Beacons reference frame, which is the ground-truth reference frame.

Figure 14 shows the aligned points for the Qorvo localization system. The blue points are the robot positions acquired from the robot localization system and converted to the position of the Qorvo tag on the robot. The red circles are the original robot positions acquired from the Qorvo system and transformed to the reference frame of the robot localization system (see Table 13). Comparing these two types of points after the conversion yields the coordinate errors listed in Table 14.
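The per-coordinate errors in Tables 10, 12, and 14 come from comparing matched pairs of converted points. A minimal sketch, assuming the errors are absolute differences along x and y plus the resulting Euclidean distance (the tables may report them differently); the function name `coordinate_errors` is hypothetical.

```python
from __future__ import annotations

import numpy as np


def coordinate_errors(reference: np.ndarray, estimate: np.ndarray) -> dict[str, np.ndarray]:
    """Per-point errors between two (N, 2) arrays of matched 2D points."""
    diff = np.asarray(estimate, dtype=float) - np.asarray(reference, dtype=float)
    return {
        "abs_x": np.abs(diff[:, 0]),  # absolute error along x
        "abs_y": np.abs(diff[:, 1]),  # absolute error along y
        "euclidean": np.hypot(diff[:, 0], diff[:, 1]),  # planar distance
    }
```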

Figure 14.

Qorvo 2D points aligned.

Table 13.

Indoor localization system—Qorvo new points.

Table 14.

Qorvo localization system—errors.

5. Results

This section presents the outcomes of the study: the errors of the three indoor localization systems are compared with one another and with the ground truth, and the key findings are related to the research questions and objectives outlined earlier.

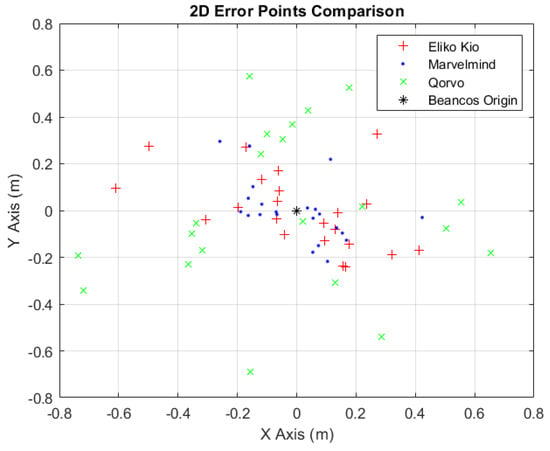

Comparing the error values of each indoor localization system at each map point against the ground truth (see Table 15) shows that the Marvelmind system has lower errors than the Eliko Kio and Qorvo systems and is therefore, in this environment and test scenario, the most accurate and precise module.
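Summarizing each system with a few statistics makes a comparison like the one in Table 15 easy to reproduce. The sketch below assumes that each system's errors are available as Euclidean distances to ground truth, one per map point, and summarizes them by mean, root-mean-square error (RMSE), and maximum. The choice of statistics and the function name `summarise_errors` are assumptions, not necessarily what Table 15 reports.

```python
from __future__ import annotations

import numpy as np


def summarise_errors(errors_by_system: dict[str, np.ndarray]) -> list[tuple[str, float, float, float]]:
    """Return (system, mean, rmse, max) tuples sorted by ascending RMSE."""
    rows = []
    for name, errors in errors_by_system.items():
        e = np.asarray(errors, dtype=float)
        rmse = float(np.sqrt(np.mean(e ** 2)))
        rows.append((name, float(e.mean()), rmse, float(e.max())))
    return sorted(rows, key=lambda row: row[2])


# Placeholder values for illustration only, not the measured errors.
example = {"System A": np.array([0.10, 0.20]), "System B": np.array([0.30, 0.25])}
for name, mean, rmse, worst in summarise_errors(example):
    print(f"{name}: mean={mean:.3f} m, RMSE={rmse:.3f} m, max={worst:.3f} m")
```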

Table 15.

Indoor localization systems—error points comparison.

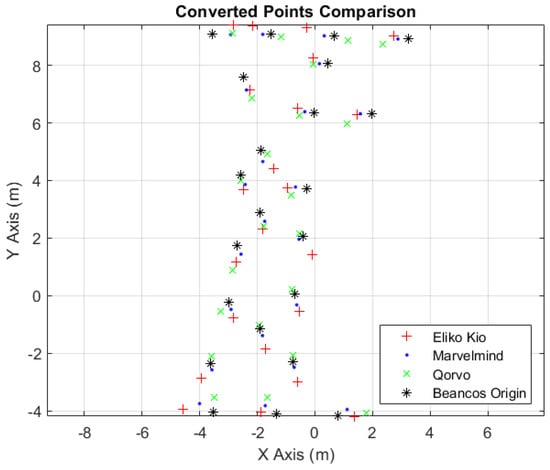

In Figure 15, the blue dots correspond to the Marvelmind indoor localization system. They lie closest to the origin, marked with the symbol ∗, which represents the ground-truth values, indicating that Marvelmind is the best of the tested indoor localization systems. The red + symbols correspond to the Eliko Kio localization system, and the x symbols correspond to the Qorvo localization system.

Figure 15.

Two-dimensional error points comparison.

Figure 16 presents a more detailed analysis, showing more intuitively the distance between each of the three systems and the ground-truth values at each vertex.

Figure 16.

Two-dimensional converted points comparison.

Table 16 identifies the best indoor localization system at each vertex, highlighted with the corresponding color from the legend of Figure 16. Blue, representing Marvelmind, appears most often in the table, confirming it as the best of the three selected systems.
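The per-vertex selection in Table 16 amounts to an argmin over systems at each vertex. Assuming the Euclidean distances are arranged as a matrix with one row per vertex and one column per system (a hypothetical layout), the best system at each vertex and the number of vertices each system wins can be computed as follows. The function name is hypothetical, and ties go to the first system listed.

```python
from __future__ import annotations

from collections import Counter

import numpy as np


def best_system_per_vertex(distances, systems: list[str]) -> tuple[list[str], Counter]:
    """distances[i][j] is the Euclidean distance of system j to ground truth at vertex i."""
    distances = np.asarray(distances, dtype=float)
    if distances.ndim != 2 or distances.shape[1] != len(systems):
        raise ValueError("expected one row per vertex and one column per system")
    # argmin returns the first index on ties.
    winners = [systems[j] for j in np.argmin(distances, axis=1)]
    return winners, Counter(winners)
```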

Table 16.

Euclidean distances to ground truth, per system.

However, there are always areas of the map where the systems struggle to locate the AMR precisely, or even accurately, which produces outliers. These critical points are related to the placement and distribution of the modules of each localization system, as well as to the proximity of the AMR to industrial machinery and the surrounding structures of the scenario, which are mostly made of iron and can affect signal propagation and cause radio wave reflections.

In summary, there are several approaches to indoor localization for autonomous mobile robots and other mobile platforms, each with its own advantages and disadvantages. The best approach for a given application depends on the specific requirements and constraints of the environment, and the achievable accuracy varies with the technologies and infrastructure used. This analysis shows that the Marvelmind system is the most accurate and the one that can cover the largest working area with the fewest tags. However, it is also the most expensive of the systems presented. For our case study, that is, real-time detection of mobile platforms such as AMRs, forklifts, or logistics trains in industrial environments, any of the three systems is suitable, because all of them achieved satisfactory results, with errors below half a meter, which is sufficient for safe, accurate, and optimized path planning for all AMRs.
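The half-meter criterion used in this argument can be checked directly, and listing the points that violate it can also help flag the outliers discussed above. A minimal sketch, assuming per-point Euclidean errors in meters; the function names are hypothetical, and the 0.5 m threshold is the only value taken from the text.

```python
import numpy as np


def points_over_threshold(errors, threshold_m: float = 0.5) -> np.ndarray:
    """Indices of points whose error is not below threshold_m (meters)."""
    errors = np.asarray(errors, dtype=float)
    return np.flatnonzero(errors >= threshold_m)


def meets_criterion(errors, threshold_m: float = 0.5) -> bool:
    """True if every error is strictly below threshold_m."""
    return points_over_threshold(errors, threshold_m).size == 0
```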

6. Conclusions

This article tests three industrial indoor localization solutions, Qorvo, Eliko Kio, and Marvelmind, based on two indoor localization methods: ultra-wideband (Qorvo and Eliko Kio) and ultrasound (Marvelmind). A comparison of these three indoor localization systems against a robot localization system (ground truth) was proposed. To align the data with each system, the data acquired by the AMR localization system were first transformed to the position of each of the tags mounted on top of the AMR. Then, a least squares approximation in MATLAB was used to fit the points obtained by each localization system to the respective ground-truth points. It was concluded that the Marvelmind system is the most accurate, but, for our purpose, any of the other systems could also be used, because they all have errors below half a meter. This is sufficient for safe, accurate, and optimized path planning for all AMRs, taking into account the positions of the mobile platforms on the factory floor that are not managed by the robot fleet manager (forklifts and logistics trains).

The main contribution of this work is a real-time comparison of three industrial indoor localization systems implemented on an industrial mobile platform, together with an in-depth analysis of their errors. By systematically comparing these systems, this study brings out their respective strengths and limitations and their practical implications for industrial use, providing a basis for informed decision making. Various quantitative and qualitative results were presented for the different systems, which could help readers choose an indoor localization system available on the market.

As future work, it would be interesting to integrate one of these indoor localization systems into forklifts or logistic trains and to validate its interaction with the TEA* algorithm, the path planning algorithm used by the AMRs, in real time and in a real environment.

Author Contributions

The authors contributed to this work as follows: conceptualization: P.M.R. and J.L.; methodology: P.M.R., J.L. and H.S.; software: P.M.R.; validation: P.M.R., J.L., H.S. and P.C.; writing—review and editing: P.M.R., J.L., S.P.S., P.M.O., H.S. and P.C.; supervision: P.M.R., J.L., S.P.S., P.M.O. and P.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work is co-financed by Component 5-Capitalization and Business Innovation, integrated in the Resilience Dimension of the Recovery and Resilience Plan within the scope of the Recovery and Resilience Mechanism (MRR) of the European Union (EU), framed in the Next Generation EU, for the period 2021–2026, within project Produtech_R3, with reference 60.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

This study did not report any new data.

Acknowledgments

The authors of this work would like to thank the members of INESC TEC for all the support rendered to this project.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AGV | Autonomous Guided Vehicle |
| AMR | Autonomous Mobile Robots |
| AoA | Angle of Arrival |
| API | Application Programming Interface |
| BLE | Bluetooth Low Energy |
| EKF | Extended Kalman Filter |
| EU | European Union |
| GPS | Global Positioning System |
| ID | Identification Number |
| IMUs | Inertial Measurement Units |
| IoT | Internet of Things |
| IR | Infrared |
| LS | Least Squares |
| MDPI | Multidisciplinary Digital Publishing Institute |
| ML | Machine Learning |
| MP | Mobile Platform |
| RF | Radio Frequency |
| RFID | Radio Frequency IDentification |
| RSS | Received Signal Strength |
| RSSI | Received Signal Strength Indicator |
| RVIZ | Robot Operating System Visualization |
| TDoA | Time Difference of Arrival |
| TEA* | Time Enhanced A* |
| 3D | Three-Dimensional |
| ToA | Time of Arrival |
| ToF | Time of Flight |
| 2D | Two-Dimensional |
| UHF | Ultra High Frequency |
| US | Ultrasound |
| UWB | Ultra-Wideband |
| WLAN | Wireless Local Area Network |

References

- Moura, P.; Costa, P.; Lima, J.; Costa, P. A temporal optimization applied to time enhanced A*. In AIP Conference Proceedings; AIP Publishing LLC: College Park, MD, USA, 2019; Volume 2116, p. 220007.

- Santos, J.; Costa, P.; Rocha, L.F.; Moreira, A.P.; Veiga, G. Time enhanced A*: Towards the development of a new approach for Multi-Robot Coordination. In Proceedings of the 2015 IEEE International Conference on Industrial Technology (ICIT), Seville, Spain, 17–19 March 2015; pp. 3314–3319.

- Cardarelli, E.; Digani, V.; Sabattini, L.; Secchi, C.; Fantuzzi, C. Cooperative cloud robotics architecture for the coordination of multi-AGV systems in industrial warehouses. Mechatronics 2017, 45, 1–13.

- Butdee, S.; Suebsomran, A. Localization based on matching location of AGV. In Proceedings of the 24th International Manufacturing Conference, IMC24. Waterford Institute of Technology, Waterford, Ireland, 20–30 August 2007; pp. 1121–1128.

- Roy, P.; Chowdhury, C. A survey of machine learning techniques for indoor localization and navigation systems. J. Intell. Robot. Syst. 2021, 101, 63.

- Zafari, F.; Gkelias, A.; Leung, K.K. A survey of indoor localization systems and technologies. IEEE Commun. Surv. Tutor. 2019, 21, 2568–2599.

- Bradley, C.; El-Tawab, S.; Heydari, M.H. Security analysis of an IoT system used for indoor localization in healthcare facilities. In Proceedings of the 2018 Systems and Information Engineering Design Symposium (SIEDS), Charlottesville, VA, USA, 27 April 2018; pp. 147–152.

- Shit, R.C.; Sharma, S.; Yelamarthi, K.; Puthal, D. AI-enabled fingerprinting and crowdsource-based vehicle localization for resilient and safe transportation systems. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4660–4669.

- Obeidat, H.; Shuaieb, W.; Obeidat, O.; Abd-Alhameed, R. A review of indoor localization techniques and wireless technologies. Wirel. Pers. Commun. 2021, 119, 289–327.

- Pilati, F.; Sbaragli, A.; Nardello, M.; Santoro, L.; Fontanelli, D.; Brunelli, D. Indoor positioning systems to prevent the COVID19 transmission in manufacturing environments. Procedia Cirp 2022, 107, 1588–1593.

- Xiong, R.; van Waasen, S.; Rheinländer, C.; Wehn, N. Development of a Novel Indoor Positioning System With mm-Range Precision Based on RF Sensors Network. IEEE Sens. Lett. 2017, 1, 5500504.

- Li, N.; Becerik-Gerber, B. An infrastructure-free indoor localization framework to support building emergency response operations. In Proceedings of the 19th EG-ICE International Workshop on Intelligent Computing in Engineering, Munich, Germany, 4–6 July 2012.

- Wang, S.; Zhao, L. Optimization of Goods Location Numbering and Storage and Retrieval Sequence in Automated Warehouse. In Proceedings of the 2009 International Joint Conference on Computational Sciences and Optimization, Sanya, China, 24–26 April 2009; Volume 2, pp. 883–886.

- Lipka, M.; Sippel, E.; Hehn, M.; Adametz, J.; Vossiek, M.; Dobrev, Y.; Gulden, P. Wireless 3D Localization Concept for Industrial Automation Based on a Bearings Only Extended Kalman Filter. In Proceedings of the 2018 Asia-Pacific Microwave Conference (APMC), Kyoto, Japan, 6–9 November 2018; pp. 821–823.

- Hesslein, N.; Wesselhöft, M.; Hinckeldeyn, J.; Kreutzfeldt, J. Industrial indoor localization: Improvement of logistics processes using location based services. In Advances in Automotive Production Technology–Theory and Application: Stuttgart Conference on Automotive Production (SCAP2020); Springer: Berlin/Heidelberg, Germany, 2021; pp. 460–467.

- Xu, L.; Shen, X.; Han, T.X.; Du, R.; Shen, Y. An Efficient Relative Localization Method via Geometry-based Coordinate System Selection. In Proceedings of the ICC 2022-IEEE International Conference on Communications, Seoul, Republic of Korea, 16–20 May 2022; pp. 4522–4527.

- Luo, Q.; Yang, K.; Yan, X.; Li, J.; Wang, C.; Zhou, Z. An Improved Trilateration Positioning Algorithm with Anchor Node Combination and K-Means Clustering. Sensors 2022, 22, 6085.

- Thrun, S.; Burgard, W.; Fox, D. Probabilistic Robotics (Intelligent Robotics and Autonomous Agents); The MIT Press: Cambridge, MA, USA, 2005.

- Kim, S.H.; Roh, C.W.; Kang, S.C.; Park, M.Y. Outdoor navigation of a mobile robot using differential GPS and curb detection. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007; pp. 3414–3419.

- Gonzalez, J.; Blanco, J.; Galindo, C.; Ortiz-de Galisteo, A.; Fernández-Madrigal, J.; Moreno, F.; Martinez, J. Combination of UWB and GPS for indoor-outdoor vehicle localization. In Proceedings of the 2007 IEEE International Symposium on Intelligent Signal Processing, Alcala de Henares, Spain, 3–5 October 2007; pp. 1–6.

- Hahnel, D.; Burgard, W.; Fox, D.; Fishkin, K.; Philipose, M. Mapping and localization with RFID technology. In Proceedings of the IEEE International Conference on Robotics and Automation, 2004. Proceedings. ICRA’04, New Orleans, LA, USA, 26 April–1 May 2004; Volume 1, pp. 1015–1020.

- Choi, B.S.; Lee, J.W.; Lee, J.J.; Park, K.T. A hierarchical algorithm for indoor mobile robot localization using RFID sensor fusion. IEEE Trans. Ind. Electron. 2011, 58, 2226–2235.

- Huh, J.; Chung, W.S.; Nam, S.Y.; Chung, W.K. Mobile robot exploration in indoor environment using topological structure with invisible barcodes. ETRI J. 2007, 29, 189–200.

- Lin, G.; Chen, X. A Robot Indoor Position and Orientation Method based on 2D Barcode Landmark. J. Comput. 2011, 6, 1191–1197.

- Kobayashi, H. A new proposal for self-localization of mobile robot by self-contained 2d barcode landmark. In Proceedings of the 2012 Proceedings of SICE annual conference (SICE), Akita, Japan, 20–23 August 2012; pp. 2080–2083.

- Atanasyan, A.; Roßmann, J. Improving Self-Localization Using CNN-based Monocular Landmark Detection and Distance Estimation in Virtual Testbeds. In Tagungsband des 4. Kongresses Montage Handhabung Industrieroboter; Springer: Berlin/Heidelberg, Germany, 2019; pp. 249–258.

- Kendall, A.; Grimes, M.; Cipolla, R. Posenet: A convolutional network for real-time 6-dof camera relocalization. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2938–2946.

- Sadeghi Esfahlani, S.; Sanaei, A.; Ghorabian, M.; Shirvani, H. The Deep Convolutional Neural Network Role in the Autonomous Navigation of Mobile Robots (SROBO). Remote Sens. 2022, 14, 3324.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Lima, J.; Rocha, C.; Rocha, L.; Costa, P. Data Matrix Based Low Cost Autonomous Detection of Medicine Packages. Appl. Sci. 2022, 12, 9866.

- Sharma, P.; Saucan, A.A.; Bucci, D.J.; Varshney, P.K. On Self-Localization and Tracking with an Unknown Number of Targets. In Proceedings of the 2018 52nd Asilomar Conference on Signals, Systems, and Computers, Pacific Grove, CA, USA, 28–31 October 2018; pp. 1735–1739.

- Ahmad, U.; Poon, K.; Altayyari, A.M.; Almazrouei, M.R. A Low-cost Localization System for Warehouse Inventory Management. In Proceedings of the 2019 International Conference on Electrical and Computing Technologies and Applications (ICECTA), Ras Al Khaimah, United Arab Emirates, 19–21 November 2019; pp. 1–5.

- Halawa, F.; Dauod, H.; Lee, I.G.; Li, Y.; Yoon, S.W.; Chung, S. Introduction of a real time location system to enhance the warehouse safety and operational efficiency. Int. J. Prod. Econ. 2020, 224, 107541.

- Coronado, E.; Kiyokawa, T.; Ricardez, G.A.G.; Ramirez-Alpizar, I.G.; Venture, G.; Yamanobe, N. Evaluating quality in human-robot interaction: A systematic search and classification of performance and human-centered factors, measures and metrics towards an industry 5.0. J. Manuf. Syst. 2022, 63, 392–410.

- Martinho, R.; Lopes, J.; Jorge, D.; de Oliveira, L.C.; Henriques, C.; Peças, P. IoT Based Automatic Diagnosis for Continuous Improvement. Sustainability 2022, 14, 9687.

- Le, D.V.; Havinga, P.J. SoLoc: Self-organizing indoor localization for unstructured and dynamic environments. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation (IPIN), Sapporo, Japan, 18–21 September 2017; pp. 1–8.

- Flögel, D.; Bhatt, N.P.; Hashemi, E. Infrastructure-Aided Localization and State Estimation for Autonomous Mobile Robots. Robotics 2022, 11, 82.

- Alkendi, Y.; Seneviratne, L.; Zweiri, Y. State of the Art in Vision-Based Localization Techniques for Autonomous Navigation Systems. IEEE Access 2021, 9, 76847–76874.

- Dias, F.; Schafer, H.; Natal, L.; Cardeira, C. Mobile Robot Localisation for Indoor Environments Based on Ceiling Pattern Recognition. In Proceedings of the 2015 IEEE International Conference on Autonomous Robot Systems and Competitions, Vila Real, Portugal, 8–10 April 2015; pp. 65–70.

- Sudin, M.; Abdullah, S.; Nasudin, M. Humanoid Localization on Robocup Field using Corner Intersection and Geometric Distance Estimation. IJIMAI 2019, 5, 50–56.

- Kalaitzakis, M.; Cain, B.; Carroll, S.; Ambrosi, A.; Whitehead, C.; Vitzilaios, N. Fiducial markers for pose estimation. J. Intell. Robot. Syst. 2021, 101, 71.

- Grilo, A.; Costa, R.; Figueiras, P.; Gonçalves, R.J. Analysis of AGV indoor tracking supported by IMU sensors in intra-logistics process in automotive industry. In Proceedings of the 2021 IEEE International Conference on Engineering, Technology and Innovation (ICE/ITMC), Cardiff, UK, 21–23 June 2021; pp. 1–7.

- Malyavej, V.; Kumkeaw, W.; Aorpimai, M. Indoor robot localization by RSSI/IMU sensor fusion. In Proceedings of the 2013 10th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology, Krabi, Thailand, 15–17 May 2013; pp. 1–6.

- Xia, Z.; Chen, C. A Localization Scheme with Mobile Beacon for Wireless Sensor Networks. In Proceedings of the 2006 6th International Conference on ITS Telecommunications, Chengdu, China, 21–23 June 2006; pp. 1017–1020.

- Zhao, C.; Wang, B. A UWB/Bluetooth Fusion Algorithm for Indoor Localization. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019; pp. 4142–4146.

- Álvarez Merino, C.S.; Luo-Chen, H.Q.; Khatib, E.J.; Barco, R. WiFi FTM, UWB and Cellular-Based Radio Fusion for Indoor Positioning. Sensors 2021, 21, 7020.

- Zhang, L.; Wu, X.; Gao, R.; Pan, L.; Zhang, Q. A multi-sensor fusion positioning approach for indoor mobile robot using factor graph. Measurement 2023, 216, 112926.

- Dargie, W.; Poellabauer, C. Fundamentals of Wireless Sensor Networks: Theory and Practice; John Wiley & Sons: Hoboken, NJ, USA, 2010.

- Xiong, J.; Jamieson, K. ArrayTrack: A Fine-Grained indoor location system. In Proceedings of the 10th USENIX Symposium on Networked Systems Design and Implementation (NSDI 13), Lombard, IL, USA, 2–5 April 2013; pp. 71–84.

- Liu, H.; Darabi, H.; Banerjee, P.; Liu, J. Survey of wireless indoor positioning techniques and systems. IEEE Trans. Syst. Man Cybern. Part C 2007, 37, 1067–1080.

- Oppermann, I.; Hämäläinen, M.; Iinatti, J. UWB: Theory and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2004.

- Ijaz, F.; Yang, H.K.; Ahmad, A.W.; Lee, C. Indoor positioning: A review of indoor ultrasonic positioning systems. In Proceedings of the 2013 15th International Conference on Advanced Communications Technology (ICACT), Pyeong Chang, Republic of Korea, 27–30 January 2013; pp. 1146–1150.

- Qorvo. Qorvo All Around You. 2024. Available online: https://www.qorvo.com/ (accessed on 14 November 2022).

- Pozyx. Pozyx. 2024. Available online: https://www.pozyx.io/ (accessed on 8 February 2024).

- Eliko. Next-Generation Location Tracking. 2024. Available online: https://eliko.tech/ (accessed on 10 December 2022).

- Marvelmind. Marvelmind Robotics. 2023. Available online: https://marvelmind.com/ (accessed on 23 November 2022).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).