Abstract

This paper introduces a model-free optimization method based on reinforcement learning (RL) aimed at resolving the issues of active power and frequency oscillations present in a traditional virtual synchronous generator (VSG). The RL agent utilizes the active power and frequency response of the VSG as state information inputs and generates actions to adjust the virtual inertia and damping coefficients for an optimal response. Distinctively, this study incorporates a setting-time term into the reward function design, alongside power and frequency deviations, to avoid prolonged system transients due to over-optimization. The soft actor critic (SAC) algorithm is utilized to determine the optimal strategy. SAC, being model-free with fast convergence, avoids policy overestimation bias, thus achieving superior convergence results. Finally, the proposed method is validated through MATLAB/Simulink simulation. Compared to other approaches, this method more effectively suppresses oscillations in active power and frequency and significantly reduces the setting time.

1. Introduction

As environmental pollution and energy shortages have become increasingly serious, renewable energy power generation technologies represented by wind power and photovoltaics have garnered significant attention [1]. Renewable energy sources are generally connected to the power grid through power electronic converters, which lack the inertia and damping characteristics inherent in a traditional synchronous generator (SG). Consequently, the substantial integration of renewable energy poses significant challenges to the operational, security, and stability aspects of the power grid [2,3,4]. To address this problem, some researchers have introduced virtual synchronous generator (VSG) control technology. The VSG emulates the rotor motion equation of an SG, providing the inverter with inertia characteristics that can enhance the stability of the power grid [5]. Nevertheless, despite the VSG introducing inertia, it also inherits the low-frequency oscillation issue observed in SG [6].

It is worth noting that the virtual synchronous generator is a control algorithm executed by software, and its parameters are flexible and adjustable. Consequently, some researchers enhance the dynamic response of the VSG by adaptively adjusting parameters such as virtual inertia or the damping coefficient. In [7], an adaptive virtual inertia control strategy based on improved bang-bang control is proposed, which effectively suppresses frequency oscillation, but it allows for altering the control signal only within a constrained range of discrete values, resulting in significant jitter when it encounters interference. Ref. [8] analyzes the impact of virtual inertia on the dynamic response of VSG and designed a continuous adaptive function of virtual inertia to make the control signal smoother, but it does not consider the damping coefficient. In order to achieve better optimization results, ref. [9] designs double adaptive functions for the virtual inertia and damping coefficient. However, linear functions cannot accurately express the changing rules of parameters. Ref. [10] uses a fuzzy controller to make up for the shortcomings of linear functions. Ref. [11] also adds the adaptive control of droop gain, which provides better state of charge (SOC) maintenance capabilities for the energy storage system and avoids additional oscillations under low power consumption. The above methods are all designed based on expert experience and therefore lack objectivity and universal applicability. As the external environment and technology change rapidly, the experience of experts may quickly become outdated. Updating the knowledge and rules in expert systems is a complex and time-consuming process.

To avoid relying too much on expert knowledge, some researchers use heuristic algorithms to optimize the parameters of VSG. For example, in [12], the particle Swarm Optimization (PSO) is used to optimize the parameters and virtual impedance of VSG, which improves the stability of the microgrid and reduces the current overshoot. Additionally, ref. [13] employs a multi-objective genetic algorithm to select optimal parameters of VSG, leading to reduced maximum frequency deviation and shortened setting time. In the work of [14], a control in the virtual inertia control loop based on a proportionl-integral (PI) controller is designed, and the authors use the Manta Ray Foraging Optimization (MRFO) algorithm to optimize the PI controller. Moreover, ref. [15] takes into account the uncertainty of VSG dynamics and system inertia and uses the whale optimization algorithm to optimize and improve the virtual inertia loop. In general, heuristic algorithms can not only achieve different optimization effects according to the objective functions, but they also flexibly handle various constraints. Compared with methods based on expert experience, they have wider applicability. Nonetheless, heuristics still have their limitations. The iterative process of heuristic algorithms requires a systematic mathematical model, but power systems usually have complex topological structures and many uncertain factors, so the establishment of accurate power system models is a very arduous task. Furthermore, heuristic algorithms are resource-intensive, requiring significant time and memory, necessitating advanced hardware capabilities, and presenting challenges in satisfying the real-time demands of power system control.

Reinforcement learning (RL) is a machine learning paradigm where an agent iteratively interacts with its environment, making decisions based on feedback from past actions, with the ultimate goal of maximizing its cumulative reward over time [16,17,18]. In [19], the work employs a Q-learning algorithm to fine-tune the controller parameters of the VSG during the frequency control process. However, as a method characterized by discrete states and actions, Q-learning relies on a Q-table to archive the Q-value for every possible state–action combination. Consequently, with the expansion in the number of state and action pairs, the efficiency of Q-learning is prone to diminishing. With the evolution of deep learning, the fusion of RL with neural networks, known as deep reinforcement learning (DRL), has delivered outstanding outcomes. For example, Deep Q-Network (DQN) adopts neural networks to approximate the Q-value, enabling it to manage complex, high-dimensional environments and significantly lower the memory demands associated with Q-learning [20]. Proximal Policy Optimization (PPO) is a policy-based reinforcement learning algorithm that optimizes the policy itself and can output a continuous distribution of action probabilities, making it particularly suitable for addressing problems with continuous action spaces [21]. As for VSG, ref. [22] introduced an adaptive tuning approach for VSG’s parameters using the DQN algorithm. However, the discrete action set of the DQN algorithm poses a limitation for adjusting VSG parameters within a continuous value range. In [23], the authors use the Deep Deterministic Policy Gradient (DDPG) algorithm to adjust the virtual inertia and damping coefficient of the islanded VSG. DDPG, being a reinforcement learning algorithm based on the actor–critic framework, operates in continuous state and action spaces [24,25,26]. Nevertheless, it is well-documented that DDPG is susceptible to overestimation bias, potentially resulting in suboptimal control policies. Twin Delayed Deep Deterministic Policy Gradient (TD3) is an extension of DDPG, that can reduce overestimation bias and enhance the stability and efficiency of learning by using twin Q-networks and delayed policy updates [27].

Based on the above inspiration, this paper investigates the optimal control issue of VSG in a model-free context and employs the Soft Actor Critic (SAC) algorithm to identify the optimal strategy. SAC incorporates entropy regularization, which endows it with superior exploration capabilities, enhanced sample efficiency, and a more stable and accelerated training process compared to DDPG. Considering the over-optimization issue in traditional VSG optimization methods, a setting time penalty has been incorporated into the reward function to prevent excessive damping of frequency fluctuations, which could result in prolonged adjustment periods and compromise system stability. The main contributions of this work are as follows:

- The optimal adaptive control problem of VSG is transformed into an RL task, thereby obviating the necessity for intricate mathematical models and expert knowledge. Subsequently, the state-of-the-art SAC algorithm is employed to train the agent, enabling it to discover the optimal strategy.

- The traditional optimization objective of VSG focuses solely on mitigating active power and frequency fluctuations, overlooking the optimization of the system’s transient response time. In the reward function design, this paper introduces an adjustment time component, motivating the agent to refine the strategy further for enhanced system performance.

The rest of this paper is organized as follows. Section 2 delves into the fundamental principles of VSG, formulates the optimization issue as a multi-objective function, and subsequently elucidates the principles behind parameter selection. Section 3 converts the optimization problem of the VSG into an RL task, detailing the design of the reward function and the configuration of the agent. Section 4 carries out simulation experiments in MATLAB/Simulink to validate the effectiveness of the proposed method. Finally, Section 5 concludes this paper, outlining prospects for future work.

2. System Model and Problem Formulation

2.1. VSG Control Principle

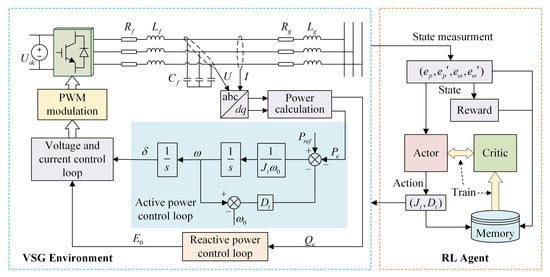

Figure 1 shows the schematic diagram of VSG control with RL, where , and represent the resistance, inductance and capacitance of the filter circuit; and represent the resistance and inductance of the output line; is the DC terminal voltage; U and I are the inverter output voltage and current. As shown in Figure 1, VSG operates as an outer loop control, primarily comprising an active power control loop (APL) and a reactive power control loop (RPL). The APL achieves control of active power and frequency by simulating the primary frequency regulation characteristics of synchronous generators; the RPL employs droop control or a PI controller to regulate reactive power and voltage; and the voltage and current control loop employs a dual closed-loop PI controller to achieve zero steady-state error tracking of the inverter’s output voltage and current. The core function of VSG lies in the APL, which provides inertia and damping to the inverter by simulating the rotor motion equation of SG [28,29]. Therefore, the purpose of this paper is to improve the APL. The rotor motion equation is described as follows:

where and represent the reference active power and output active power, respectively; and represent the rotor angular velocity and rated angular velocity, respectively; and J and D are the virtual inertia and damping coefficient.

Figure 1.

VSG control framework based on reinforcement learning.

When the circuit impedance exhibits inductive behavior (or a virtual impedance is employed otherwise), the APL and RPL are approximately decoupled. In accordance with the power flow calculation equation, the active power computation formula under these conditions is delineated as follows:

where and represent the effective value of inverter and grid phase voltages, respectively; Z is the total effective reactance of the line; and is the power angle of VSG, which can be given by

where is the grid frequency. Typically, the value of power angle is small. For analytical simplification, it is approximated by setting .

To conduct a more in-depth analysis of the response characteristics of the VSG and examine the impact of virtual inertia and damping coefficients on system stability, a small signal model can be constructed based on Equations (1)–(3):

where , , , represent the change in VSG angular frequency, reference active power, output active power and power angle, respectively. According to Equation (4), the transfer function of the active power outer loop can be expressed as follows:

where . and represent the transfer function of frequency response and active power response, respectively.

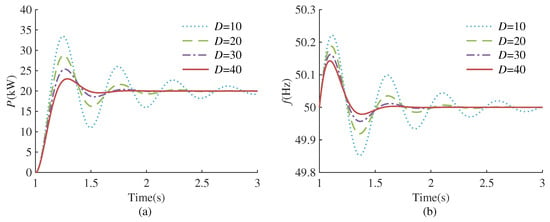

According to the transfer function (5) and (6), it can be seen that the dynamic response of VSG is affected by virtual inertia and the damping coefficient. Figure 2 and Figure 3 plot the response curves of VSG frequency and output active power under different virtual inertia and damping coefficients, respectively.

Figure 2.

Dynamic response of VSG under different virtual inertia. (a) Active power response. (b) Frequency response.

Figure 3.

Dynamic response of VSG under different damping coefficients. (a) Active power response. (b) Frequency response.

As depicted in Figure 2, with a fixed damping coefficient, the reduction in virtual inertia leads to a decrease in active power overshoot and a shorter setting time. However, this reduction is accompanied by an increase in frequency deviation. According to Figure 3, when the virtual inertia is fixed, as the damping coefficient increases, the active power overshoot decreases, the setting time is shortened, and the frequency deviation also becomes smaller. Nevertheless, a progressive increment in the damping coefficient may transition the system from an under-damped to an over-damped state, thereby prolonging the setting time and negatively impacting the system’s stability. The analysis indicates that the virtual inertia governs the oscillation frequency during the dynamic response of VSG, while the damping coefficient determines the attenuation rate of the oscillation process. A solitary adjustment of either the virtual inertia or damping coefficient fails to attain a comprehensive optimization of active power, frequency, and the overall system response speed. Consequently, this paper employs RL algorithms to enhance VSG control and pursue the optimal control strategy. The entire control framework is depicted in Figure 1. In each control cycle, the RL agent receives the state information and reward signals from the environment, subsequently generating actions via the actor network. The actions are set to the virtual inertia and damping coefficient at the current instance. During training, the agent continuously interacts with the environment and improves the strategy through the rewards obtained, thereby finding the optimal strategy.

2.2. Objective Function

This paper describes the oscillation suppression process of VSG as a multi-objective optimization problem. According to the optimization objective, the objective function can be described as follows:

where N is the total steps; , and represent active power deviation cost, frequency deviation cost and setting time cost, and , and are the corresponding weight coefficients, respectively; and represent the setting time. and contribute to the suppression of active power and frequency oscillations; however, excessive oscillation suppression might induce sluggish changes in power and frequency, consequently resulting in longer settling times and impacting system stability. To address this concern, we introduce a setting time cost into the objective function.

2.3. Constraint Conditions

The active power loop of the VSG, as described by Equation (5), conforms to the characteristics of a typical second-order system. The natural oscillation angular frequency, denoted as , and the damping ratio, denoted as , can be calculated as follows:

To guarantee the stability of the system, it is essential to restrict the damping ratio of the second-order system. In the case of underdamping in a second-order system, the relationship between overshoot and damping ratio is expressed as follows:

Derived from Equation (9), an excessively small damping ratio can induce substantial power overshoot, potentially causing damage to power electronic devices. Conversely, an excessively large damping ratio may result in an extended system settling time, which is detrimental to system stability. Therefore, the selection of damping ratio should not be too large or too small. In this paper, the chosen range for the damping ratio is . In accordance with the damping ratio range, the constraints for J and D can be determined as follows:

The relationship between the damping coefficient, frequency deviation and power deviation is give by

In adherence with the EN50438 standard [30] for renewable energy grid connection, a 1 Hz frequency change in the grid corresponds to a change in the output active power of the inverter in the range of 40% to 100% of the rated capacity [31]. This study establishes the rated capacity of the inverter at 100 kW and with a stipulated maximum allowable frequency deviation of 1 Hz. According to Equation (11), the range of values for the damping coefficient D can be obtained as follows:

In order to meet the stability margin of the system, it is crucial that the characteristic roots of the system do not approach the imaginary axis too closely. Therefore, the real part of the system characteristic roots must adhere to the following constraints:

In consideration of the grid capacity connected to the inverter, the range of values for is specified as . This study adopts . According to Equations (10), (12) and (13), the range of values for J and D is graphically depicted in a two-dimensional plane, as shown in Figure 4. The shaded area within the plot indicates the parameter range.

Figure 4.

The value range of virtual inertia and damping coefficient.

3. Transformation and Solution

3.1. Markov Decision Model

The parameter adjustment process of VSG can be regarded as a sequential decision-making problem. Furthermore, it satisfies the Markov property, that is, the state of the system at the next moment is only related to the state of the current moment and has nothing to do with the past state. Consequently, the adjustment of virtual inertia and damping coefficient can be reformulated as a Markov Decision Process (MDP) and addressed through a reinforcement learning algorithm. An MDP can be succinctly described by a quintuple . Where is the set of state space, is the set of action space, p is the transition matrix, indicating the probability of taking action in state and transitioning to the subsequent state , is the discount factor denoting the significance of future rewards in relation to current rewards (typically ranging from 0 to 1), and R stands for the reward function representing the feedback provided by the environment after taking action in state [32,33].

3.1.1. State Space

The primary features of the dynamic response in the VSG active power loop are manifested in the responses of active power and frequency. To comprehensively depict this response process, the selection of state space is as follows:

where , , and represent the normalized active power deviation, normalized frequency deviation, active power normalization coefficient and frequency normalization coefficient, respectively; and represent the change rates of and , respectively.

3.1.2. Action Space

In this study, the control variables are the virtual inertia and damping coefficient of the VSG. Therefore, the action space is defined as the change in virtual inertia and damping coefficient and can be expressed as follows:

where and represent the changes in virtual inertia and damping coefficient, respectively. Thus, the real virtual inertia and damping coefficient can be obtained by

where and represent the initial values of virtual inertia and damping coefficient, respectively.

3.1.3. Reward Function

The objective of RL is to maximize the cumulative discounted reward, rendering the reward function a crucial element in steering the agent towards learning the optimal strategy. Existing adaptive control approaches for VSG typically focus on optimizing the oscillations in active power and frequency. However, suppressing oscillations might prolong the setting time and negatively influence the stability of the system. Consequently, this paper incorporates active power, frequency and setting time into the reward function design to address these considerations. The design methodology is as follows:

Active power deviation reward function : In the post-disturbance recovery phase, the active power fluctuates around a reference value. The objective of this article is to minimize the oscillation amplitude and duration as much as possible, hence the reward function for active power is designed as follows:

where represents the weight coefficient.

Frequency deviation reward function : In practical scenarios, the power system necessitates that the grid frequency operates around the rated frequency, with minimal deviation being ideal. Consequently, the frequency reward function designed in this paper is structured as follows:

where represents the weight coefficient.

Setting time reward function : To prevent an excessive setting time, we incorporate the setting time as part of the reward function. Since the system can only ascertain the total settling time upon reaching a steady state, with only the last step receiving the settling time reward, this approach is deemed unreasonable. This paper adopts the following method to design a settling time reward function: for each non-steady state, a constant term penalty is applied as follows:

where represents the penalty coefficient.

Terminal reward function . When the active power and frequency reach a steady state, further adjustments to the virtual inertia and damping coefficient will not alter the system state. Consequently, the sampling data in the steady state hold no value in enhancing the strategy and are considered to be an invalid sample. To improve sample efficiency, when a steady state is reached, the training for that episode is promptly terminated, and a new episode begins. To encourage the agent to approach the steady state, a positive constant reward is given when the system state reaches stability:

where M is a state-independent constant, and represents the stable state. By summing Equations (17)–(20), the final reward function can be expressed as follows:

The roles of and is to suppress the oscillation of active power and frequency, and the function of is to reduce the setting time. When the agent is close to the steady state, the difference in rewards brought by different actions is very small. As a terminal reward, can prevent the agent from fluctuating near the steady state and improve sample efficiency. Incorporating allows the optimal strategy for oscillation suppression to be adjustable, effectively circumventing the issue of excessively prolonged adjustment times. Nonetheless, the value of must be judiciously selected; if is too large, the frequency deviation will become larger, resulting in poorer oscillation suppression effect. Conversely, should the value be excessively minimal, its efficacy in application will be compromised.

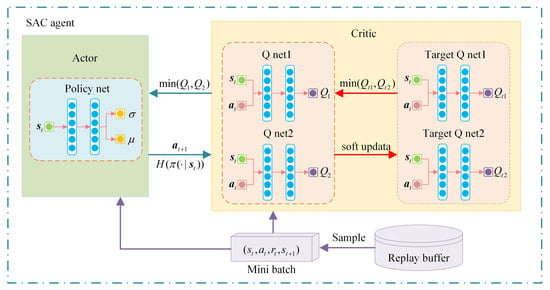

3.2. Principle of Soft Actor Critic

This paper employs the soft actor critic(SAC) algorithm to address the MDP associated with the optimization of the VSG dynamic response. SAC is a model-free and offline RL algorithm. By incorporating the concept of maximum entropy, it not only enhances the algorithm’s robustness but also improves the agent’s exploration capability, thereby accelerating the learning speed [34,35]. Figure 5 shows the framework of the SAC algorithm.

Figure 5.

The framework of the SAC algorithm.

The learning objective of SAC extends beyond maximizing the cumulative rewards, it also aims to maximize the entropy of each state [36,37,38]. Therefore, the objective function of SAC is as follows:

where represents the state transition equation; represents the policy function; is the temperature coefficient, delineating the relative significance of entropy terms in comparison to reward terms. It plays a crucial role in modulating the level of randomness in the policy; H represents the entropy of policy in state and is given by

RL algorithms use the Q-value to represent the expected cumulative reward that can be obtained by taking action in state . Different from general value-based RL algorithms, the Q-value in SAC contains both the reward value and action entropy. According to the Bellman equation, the soft Q-value can be calculated as follows:

where

is the soft state value function.

The SAC algorithm comprises a total of five neural networks, including a policy network, two critic networks and two target critic networks. The role of the critic network is to approximate the soft Q-value. Employing dual critic networks helps mitigate the issue of overestimating the Q-value. The parameters of the soft Q-function can be trained by minimizing the soft Bellman residual, which is expressed as follows:

where is the parameter of critic network; represents the experience replay buffer.

The policy network outputs the probability distribution of the action. This paper uses Gaussian probability distribution, so the policy network outputs the mean and standard deviation of the Gaussian probability distribution. The loss function for the policy network is as follows:

where represents the parameter of the policy network.

During training, SAC maintains a balance between exploration and exploitation by adjusting the size of temperature coefficient . Larger temperature coefficients correspond to increased exploration, while smaller coefficients correspond to heightened exploitation. However, determining the appropriate temperature coefficient can be challenging. In order to solve this problem, the SAC algorithm sets a target entropy for each task and automatically updates the temperature coefficient during the training process. The loss function of temperature coefficient is designed as follows:

The parameters of each neural network are updated through the gradient descent method:

where , and represent the gradients of critic network, policy network and temperature coefficient, and , and represent the corresponding learning rate, respectively.

4. Simulation Results

To assess the effectiveness and feasibility of the VSG parameter adaptive control strategy based on SAC proposed in this paper, a single grid-connected VSG simulation system shown in Figure 1 is constructed using Simulink. The key parameters of the simulation system and RL agent are detailed in Table 1.

Table 1.

Key parameters of simulation model.

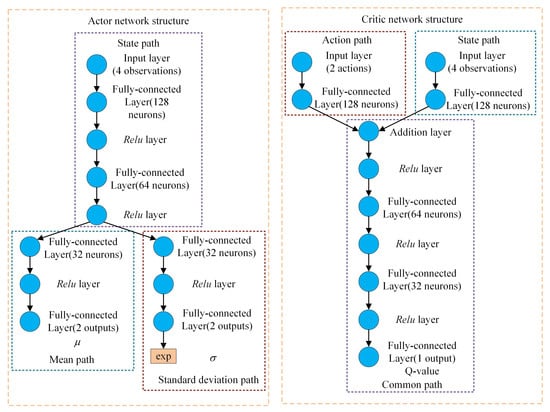

The RL agent is developed utilizing the RL toolbox in MATLAB, with the architecture of the actor network and the critic network illustrated in Figure 6. In this article, a Gaussian distribution is utilized as the action probability distribution. As depicted in Figure 6, the state information initially traverses through the state pathway, subsequently splitting into two branches. One branch is responsible for outputting the mean of the Gaussian distribution, while the other branch outputs the standard deviation . The role of the exponential function (exp) here is to ensure that the output value of the standard deviation remains positive. The action is derived by randomly sampling from the Gaussian distribution . Given that the sampling process is non-differentiable, to facilitate the actor in executing the neural network’s backpropagation process, the following formula is employed in practical applications to derive the action:

where is random noise obeying the standard Gaussian distribution. The value range of the tanh function is from −1 to 1, serving to transform Gaussian distribution sampling, which possesses an infinite value range, into a finite action value. The critic network is composed of three segments: the action path, the state path and the common path. The action path receives action information as its input, while the state path is fed with state information. These two paths converge on the common path, which subsequently processes the combined information to output the Q-value. The activation functions utilized in the neural networks are all Relu functions, as represented by the following formula:

Figure 6.

Structures of the actor and critic network.

To enhance the generalization ability of reinforcement learning agents, random disturbances are introduced to the reference active power and load power during the training process. The range of variation for the reference active power is 0 kW to 50 kW, and for load power, it is 5 kW to 30 kW.

4.1. Comparison of Different Reward Functions

The optimal strategy that the agent ultimately secures is determined by the structure of the reward function. To assess the impact of the setting time penalty term introduced in this article, we compare the training outcomes derived from various reward functions. The RL algorithm utilized in all cases is the SAC algorithm. There are three types of reward functions: The reward function of Case 1 includes only the oscillation suppression rewards , and the terminal reward , excluding the setting time penalty term . This reward function is designed to suppress power and frequency oscillations to the greatest extent possible, but it may result in excessively prolonged setting times. The reward function for Case 2 encompasses all components, yet the setting time penalty term is prioritized with a larger proportion. This reward function expects a rapid system response rate while overlooking the optimization of the process. In Case 3, the reward function also comprises all elements, but it maintains a balanced proportion of , and , aiming to achieve equilibrium between process optimization and adjustment time.

Figure 7a–c shows the training results of different reward functions. Under a range of reward functions, the cumulative rewards demonstrate convergence, suggesting that the agents have successfully identified their respective optimal strategies. Next, we conduct time-domain simulations for the trained agents with various reward functions in Simulink. The simulation scenario involves applying a step disturbance that changes the active power reference value from 0 kW to 20 kW at 1.0 s. The simulation results are depicted in Figure 8.

Figure 7.

Training results with different reward functions and RL algorithms. (a–c) are training results of SAC with different reward functions, and (d) is training results of DDPG with Case 3. (a) Case 1. (b) Case 2. (c) Case 3. (d) DDPG with Case 3.

Figure 8.

Simulation results with different reward functions. (a) Active power response. (b) Frequency response.

As observed in Figure 8, compared with the traditional VSG with fixed parameters (Fixed-VSG), there are no secondary oscillations in the active power and frequency in all three cases. This outcome is attributed to the inclusion of and in the reward function. The introduction of the setting time component alters the agent’s optimal strategy. An increase in the proportion of the setting time penalty term results in a gradual reduction in the setting time of the system. Further analysis reveals that since the reward function of Case 1 lacks the term, it more effectively suppresses the maximum frequency deviation, yet the optimization of the setting time is not pronounced. The reward function of Case 2 incorporates with a substantial weighting, while this significantly reduces the setting time, it leads to an increased frequency deviation and results in a deterioration of the frequency response. Case 3 establishes a balanced ratio among the various rewards, effectively suppressing the maximum frequency deviation and considerably reducing the setting time, aligning closely with the desired optimization objectives. This proves the effectiveness and flexibility of the method proposed in this paper.

4.2. Comparison of Different RL Algorithms

Furthermore, to ascertain the superiority of the SAC algorithm, this article employs the DDPG algorithm, which also operates under the Actor–Critic framework, training the agent using the reward function specified in Case 3. To maintain fairness in the comparison, the shared structures and parameters across each agent are initialized with identical values. For instance, the critic networks of SAC and DDPG are configured with the same architecture, and the initial values of the neural networks are also aligned. Considering that SAC employs a stochastic policy, while DDPG utilizes deterministic policies, the pathway from input to mean output in the SAC’s actor network is identical to the actor networks in DDPG. Moreover, the different algorithms also share the same learning rate and soft update coefficient. The reward curve of DDPG is shown in Figure 7d.

Comparing Figure 7c,d, it is apparent that SAC converges to the optimal strategy in just 200 rounds, whereas DDPG takes up to 750 rounds to converge, signifying a faster learning pace for SAC. The reward curve of SAC remains smooth throughout the learning process, contrasting with the large fluctuations observed in DDPG’s reward curve, which suggests a more stable learning trajectory for SAC. SAC’s cumulative reward ultimately settles at −67.4, while DDPG’s reward converges at −71.6, further evidencing the superior learning effectiveness of SAC. This conclusively demonstrates the superiority of the SAC algorithm.

4.3. Case Studies

In this subsection, our purpose is to verify the effectiveness of the well-trained VSG controller and compare its control effect with other methods in different scenarios. The proposed method (SAC-VSG) is trained using the balanced reward function specified in Case 3. The simulation scenarios are primarily executed under three distinct disturbance conditions: active power reference disturbance, load power disturbance and grid frequency disturbance. The methods included in the comparison are traditional fixed parameter control (Fixed-VSG), linear adaptive control (Adaptive-VSG) as mentioned in [9], fuzzy adaptive control (Fuzzy-VSG) from [10], and DDPG adaptive control (DDPG-VSG) utilizing the reward function from Case 3.

4.3.1. Active Power Reference Disturbance

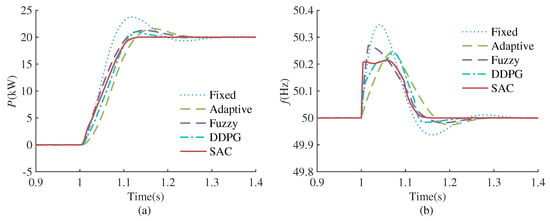

This case study compares the control effects of different methods in response to a step disturbance in the reference active power. The simulation test conditions are as follows: Initially, the system is in a stable state, and the reference active power by VSG is set to 0 kW. At 1.0 s, there is a step change for the reference active power from 0 kw to 20 kW. The simulation results are shown in Figure 9. We evaluate different control methods based on active power overshoot (the steady-state range is set to ), maximum frequency deviation and setting time presented in Table 2.

Figure 9.

Performance comparison of different VSG controllers under reference active power change. (a) Active power response. (b) Frequency response.

Table 2.

Performance indicators under reference active power disturbance.

It can be seen that when the reference active power changes, the control effect of Fixed-VSG is not ideal, and there is a large oscillation in both active power and frequency response. Adaptive-VSG and Fuzzy-VSG can improve the small signal oscillation problem of the traditional VSG control to a certain extent, but the suppression of frequency fluctuations and the shortening of setting time are not obvious, and while the DDPG algorithm can further optimize overshoot, maximum frequency deviation, and setting time, there remains scope for enhancement. However, SAC-VSG has almost no active power overshoot, the maximum frequency deviation is also very well suppressed, and the setting time is greatly shortened. It can be concluded that the optimization effect of the proposed method is better than for the other methods in all aspects under the reference active power change.

4.3.2. Load Disturbance

This case study compares the control effects of different methods under load disturbances. The simulation test conditions are as follows: At the initial moment, the system is in a stable state, with the active power of the load set at 10 kW. At 1.0 s, a load with active power of 20 kW is connected. The simulation results are shown in Figure 10, and the various performance indicators of different control methods are presented in Table 3.

Figure 10.

Performance comparison of different VSG controllers under load change. (a) Active power response. (b) Frequency response.

Table 3.

Performance indicators under load power disturbance.

Figure 10 shows that when the load active power changes suddenly, in order to satisfy the power balance, the inverter output power first changes with the load power and then gradually returns to the reference. Compared to the other four methods, SAC-VSG can restore to the equilibrium state more quickly and stably during power restoration. It can be concluded from Table 3 that SAC-VSG is also better than the other four methods in terms of active power overshoot, maximum frequency deviation and setting time under load power disturbance.

4.3.3. Grid Frequency Disturbance

In order to further verify the robustness of the proposed method, this case simulates and compares the control effects of different methods when small disturbances occur in the grid frequency. The simulation test conditions are as follows: The system is in a stable state at the initial moment, with the reference active power is 20 kW. At 1.0 s, the grid frequency changes from 50 Hz to 50.1 Hz. The comparison of simulation results is shown in Figure 11.

Figure 11.

Performance comparison of different VSG controllers under grid frequency change. (a) Active power response. (b) Frequency response.

As shown in Figure 11, when the grid frequency undergoes changes, there exists a static deviation between the output active power and reference active power, quantified as , where denotes the steady-state damping coefficient, set to 20 in this case. Consequently, a 0.1 Hz increase in grid frequency results in a 4 kW decrease in reference active power, and vice versa, an increase of 4 kW occurs. Figure 11 shows that the suppression effect of frequency oscillation and active power oscillation of SAC-VSG is more obvious than the other four methods, indicating that the proposed method is also suitable for disturbances in the power grid frequency.

5. Conclusions

Based on VSG control, this paper proposes an adaptive control strategy for virtual inertia and damping coefficient based on SAC to address the oscillation problem existing in the dynamic process of grid-connected VSG control. In order to better achieve the expected control performance, the oscillation suppression problem of VSG is described as a multi-objective optimization problem, and the optimization of frequency, active power and setting time is comprehensively considered. The problem is then transformed into a RL task and solved using the SAC algorithm. RL algorithms learn strategies through interaction with the environment and can achieve optimization in different scenarios. Compared with existing VSG adaptive control methods, the proposed method has better optimization effects in various application scenarios. Moreover, the proposed method does not require expert knowledge and system mathematical models and is a fully automatic optimization algorithm.

The RL parameter adaptive adjustment algorithm in this paper currently only considers the VSG control of a single inverter and can conduct research on multi-machine parallel systems in the future.

Author Contributions

Conceptualization, C.L. and X.Z.; methodology, C.L. and X.Z.; software, C.L.; writing—original draft preparation, C.L.; writing—review and editing, X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China, grant number U22B20100.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kryonidis, G.C.; Kontis, E.O.; Chrysochos, A.I.; Oureilidis, K.O.; Demoulias, C.S.; Papagiannis, G.K. Power flow of islanded AC microgrids: Revisited. IEEE Trans. Smart Grid 2018, 9, 3903–3905. [Google Scholar] [CrossRef]

- Fang, J.; Li, H.; Tang, Y.; Blaabjerg, F. Distributed power system virtual inertia implemented by grid-connected power converters. IEEE Trans. Power Electron. 2017, 33, 8488–8499. [Google Scholar] [CrossRef]

- She, B.; Li, F.; Cui, H.; Wang, J.; Min, L.; Oboreh-Snapps, O.; Bo, R. Decentralized and Coordinated Vf Control for Islanded Microgrids Considering DER Inadequacy and Demand Control. IEEE Trans. Energy Convers. 2023, 38, 1868–1880. [Google Scholar] [CrossRef]

- Rehman, W.U.; Moeini, A.; Oboreh-Snapps, O.; Bo, R.; Kimball, J. Deadband voltage control and power buffering for extreme fast charging station. In Proceedings of the 2021 IEEE Madrid PowerTech, Madrid, Spain, 28 June–2 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6. [Google Scholar]

- Chen, S.; Sun, Y.; Hou, X.; Han, H.; Fu, S.; Su, M. Quantitative Parameters Design of VSG Oriented to Transient Synchronization Stability. IEEE Trans. Power Syst. 2023, 38, 4978–4981. [Google Scholar] [CrossRef]

- Chen, M.; Zhou, D.; Blaabjerg, F. High penetration of inverter-based power sources with VSG control impact on electromechanical oscillation of power system. Int. J. Electr. Power Energy Syst. 2022, 142, 108370. [Google Scholar] [CrossRef]

- Li, J.; Wen, B.; Wang, H. Adaptive virtual inertia control strategy of VSG for micro-grid based on improved bang-bang control strategy. IEEE Access 2019, 7, 39509–39514. [Google Scholar] [CrossRef]

- Hou, X.; Sun, Y.; Zhang, X.; Lu, J.; Wang, P.; Guerrero, J.M. Improvement of frequency regulation in VSG-based AC microgrid via adaptive virtual inertia. IEEE Trans. Power Electron. 2019, 35, 1589–1602. [Google Scholar] [CrossRef]

- Shi, T.; Sun, J.; Han, X.; Tang, C. Research on adaptive optimal control strategy of virtual synchronous generator inertia and damping parameters. IET Power Electron. 2023, 17, 121–133. [Google Scholar] [CrossRef]

- Yin, Q.; Ma, H.; Shan, Y. VSG Fuzzy Control Strategy of Energy Storage in Grid-connected Mode. In Proceedings of the 2022 Asia Power and Electrical Technology Conference (APET), Shanghai, China, 11–13 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 191–197. [Google Scholar]

- He, P.; Li, Z.; Jin, H.; Zhao, C.; Fan, J.; Wu, X. An adaptive VSG control strategy of battery energy storage system for power system frequency stability enhancement. Int. J. Electr. Power Energy Syst. 2023, 149, 109039. [Google Scholar] [CrossRef]

- Pournazarian, B.; Sangrody, R.; Lehtonen, M.; Gharehpetian, G.B.; Pouresmaeil, E. Simultaneous optimization of virtual synchronous generators parameters and virtual impedances in islanded microgrids. IEEE Trans. Smart Grid 2022, 13, 4202–4217. [Google Scholar] [CrossRef]

- Wu, Y.S.; Liao, J.T.; Yang, H.T. Parameter Optimization of Virtual Synchronous Generator Control Applied in Energy Storage and Photovoltaic Systems for an Island Microgrid. In Proceedings of the 2023 IEEE Power & Energy Society General Meeting (PESGM), Orlando, FL, USA, 16–20 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5. [Google Scholar]

- Saleh, A.; Omran, W.A.; Hasanien, H.M.; Tostado-Véliz, M.; Alkuhayli, A.; Jurado, F. Manta ray foraging optimization for the virtual inertia control of islanded microgrids including renewable energy sources. Sustainability 2022, 14, 4189. [Google Scholar] [CrossRef]

- Faragalla, A.; Abdel-Rahim, O.; Orabi, M.; Abdelhameed, E.H. Enhanced virtual inertia control for microgrids with high-penetration renewables based on whale optimization. Energies 2022, 15, 9254. [Google Scholar] [CrossRef]

- Wang, H.-N.; Liu, N.; Zhang, Y.-Y.; Feng, D.-W.; Huang, F.; Li, D.-S.; Zhang, Y.-M. Deep reinforcement learning: A survey. Front. Inf. Technol. Electron. Eng. 2020, 21, 1726–1744. [Google Scholar] [CrossRef]

- Shakya, A.K.; Pillai, G.; Chakrabarty, S. Reinforcement Learning Algorithms: A brief survey. Expert Syst. Appl. 2023, 231, 120495. [Google Scholar] [CrossRef]

- Annaswamy, A.M. Adaptive Control and Intersections with Reinforcement Learning. Annu. Rev. Control Robot. Auton. Syst. 2023, 6, 65–93. [Google Scholar] [CrossRef]

- Zhang, K.; Zhang, C.; Xu, Z.; Ye, S.; Liu, Q.; Lu, Z. A Virtual Synchronous Generator Control Strategy with Q-Learning to Damp Low Frequency Oscillation. In Proceedings of the 2020 Asia Energy and Electrical Engineering Symposium (AEEES), Chengdu, China, 29–31 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 111–115. [Google Scholar]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533. [Google Scholar] [CrossRef] [PubMed]

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347. [Google Scholar]

- Wu, W.; Guo, F.; Ni, Q.; Liu, X.; Qiu, L.; Fang, Y. Deep Q-Network based Adaptive Robustness Parameters for Virtual Synchronous Generator. In Proceedings of the 2022 IEEE Transportation Electrification Conference and Expo, Asia-Pacific (ITEC Asia-Pacific), Haining, China, 28–31 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–4. [Google Scholar]

- Li, Y.; Gao, W.; Huang, S.; Wang, R.; Yan, W.; Gevorgian, V.; Gao, D.W. Data-driven optimal control strategy for virtual synchronous generator via deep reinforcement learning approach. J. Mod. Power Syst. Clean Energy 2021, 9, 919–929. [Google Scholar] [CrossRef]

- Park, M.; Lee, S.Y.; Hong, J.S.; Kwon, N.K. Deep Deterministic Policy Gradient-Based Autonomous Driving for Mobile Robots in Sparse Reward Environments. Sensors 2022, 22, 9574. [Google Scholar] [CrossRef]

- Tsai, J.; Chang, C.C.; Li, T. Autonomous Driving Control Based on the Technique of Semantic Segmentation. Sensors 2023, 23, 895. [Google Scholar] [CrossRef]

- Zheng, K.; Jia, X.; Chi, K.; Liu, X. DDPG-based joint time and energy management in ambient backscatter-assisted hybrid underlay CRNs. IEEE Trans. Commun. 2022, 71, 441–456. [Google Scholar] [CrossRef]

- Fujimoto, S.; Hoof, H.; Meger, D. Addressing function approximation error in actor-critic methods. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; PMLR: Stockholm, Sweden, 2018; pp. 1587–1596. [Google Scholar]

- Li, M.; Shu, S.; Wang, Y.; Yu, P.; Liu, Y.; Zhang, Z.; Hu, W.; Blaabjerg, F. Analysis and improvement of large-disturbance stability for grid-connected VSG based on output impedance optimization. IEEE Trans. Power Electron. 2022, 37, 9807–9826. [Google Scholar] [CrossRef]

- Chen, S.; Sun, Y.; Han, H.; Fu, S.; Luo, S.; Shi, G. A modified VSG control scheme with virtual resistance to enhance both small-signal stability and transient synchronization stability. IEEE Trans. Power Electron. 2023, 38, 6005–6014. [Google Scholar] [CrossRef]

- BS EN 50438:2007; Requirements for the Connection of Micro-Generators in Parallel with Public Low-Voltage Distribution Networks. European Committee for Electrotechnical Standardization: Brussels, Belgium, 2007.

- Yao, F.; Zhao, J.; Li, X.; Mao, L.; Qu, K. RBF neural network based virtual synchronous generator control with improved frequency stability. IEEE Trans. Ind. Informatics 2020, 17, 4014–4024. [Google Scholar] [CrossRef]

- Wu, D.; Lei, Y.; He, M.; Zhang, C.; Ji, L. Deep reinforcement learning-based path control and optimization for unmanned ships. Wirel. Commun. Mob. Comput. 2022, 2022, 7135043. [Google Scholar] [CrossRef]

- Pérez-Gil, Ó.; Barea, R.; López-Guillén, E.; Bergasa, L.M.; Gomez-Huelamo, C.; Gutiérrez, R.; Diaz-Diaz, A. Deep reinforcement learning based control for Autonomous Vehicles in CARLA. Multimed. Tools Appl. 2022, 81, 3553–3576. [Google Scholar] [CrossRef]

- Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; PMLR: Stockholm, Sweden, 2018; pp. 1861–1870. [Google Scholar]

- Haarnoja, T.; Zhou, A.; Hartikainen, K.; Tucker, G.; Ha, S.; Tan, J.; Kumar, V.; Zhu, H.; Gupta, A.; Abbeel, P.; et al. Soft actor-critic algorithms and applications. arXiv 2018, arXiv:1812.05905. [Google Scholar]

- Chen, P.; Pei, J.; Lu, W.; Li, M. A deep reinforcement learning based method for real-time path planning and dynamic obstacle avoidance. Neurocomputing 2022, 497, 64–75. [Google Scholar] [CrossRef]

- Pan, J.; Huang, J.; Cheng, G.; Zeng, Y. Reinforcement learning for automatic quadrilateral mesh generation: A soft actor–critic approach. Neural Netw. 2023, 157, 288–304. [Google Scholar] [CrossRef]

- Wu, J.; Wei, Z.; Li, W.; Wang, Y.; Li, Y.; Sauer, D.U. Battery thermal-and health-constrained energy management for hybrid electric bus based on soft actor-critic DRL algorithm. IEEE Trans. Ind. Inform. 2020, 17, 3751–3761. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).