Comparison of Machine Learning Algorithms for Heartbeat Detection Based on Accelerometric Signals Produced by a Smart Bed

Abstract

1. Introduction

2. Materials and Methods

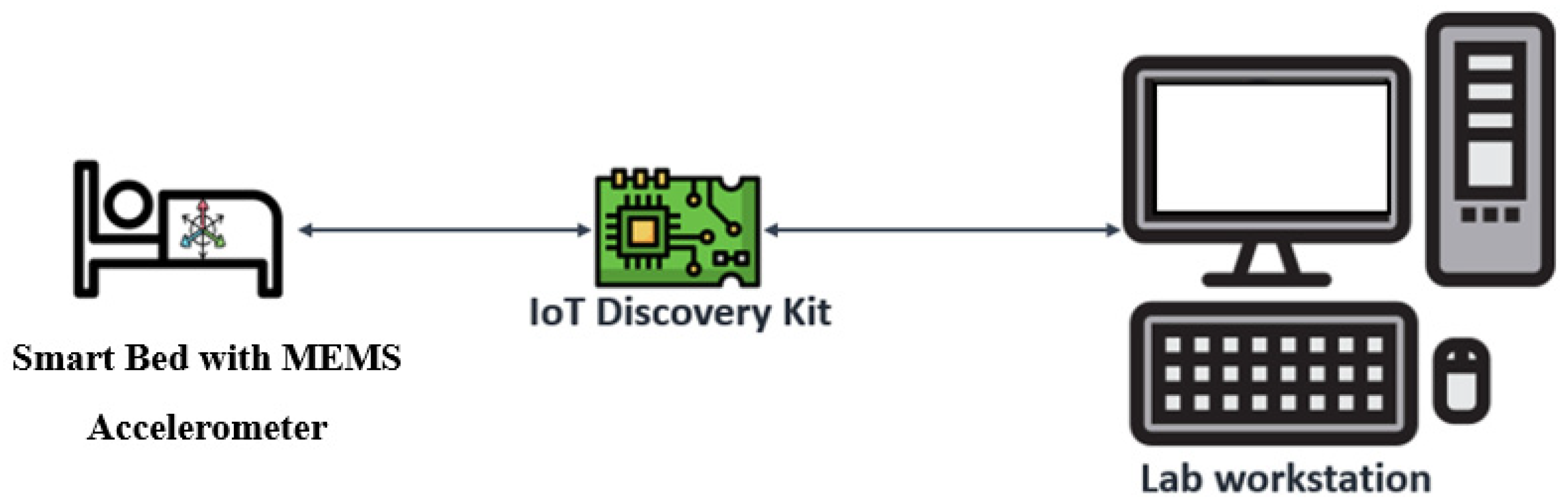

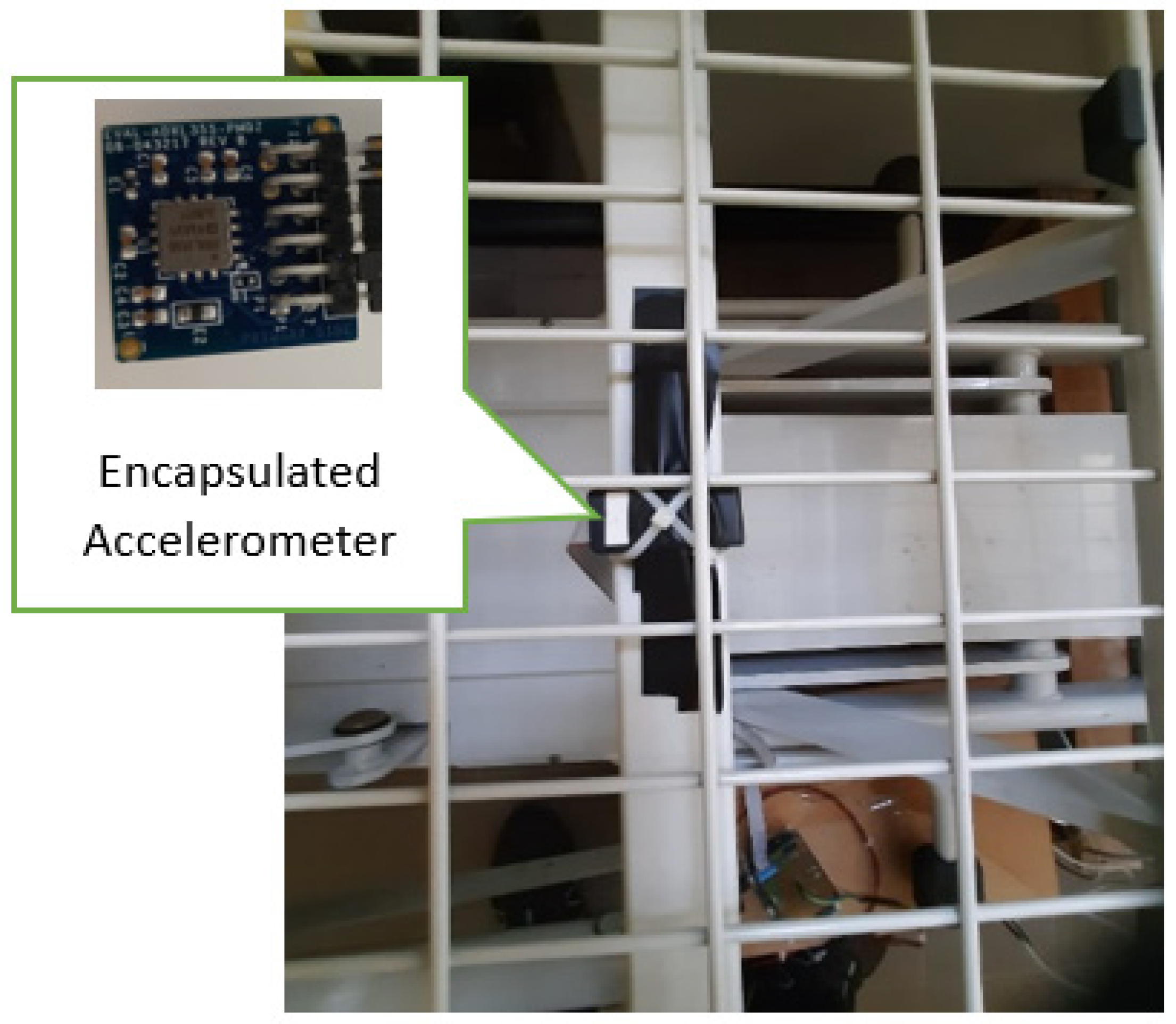

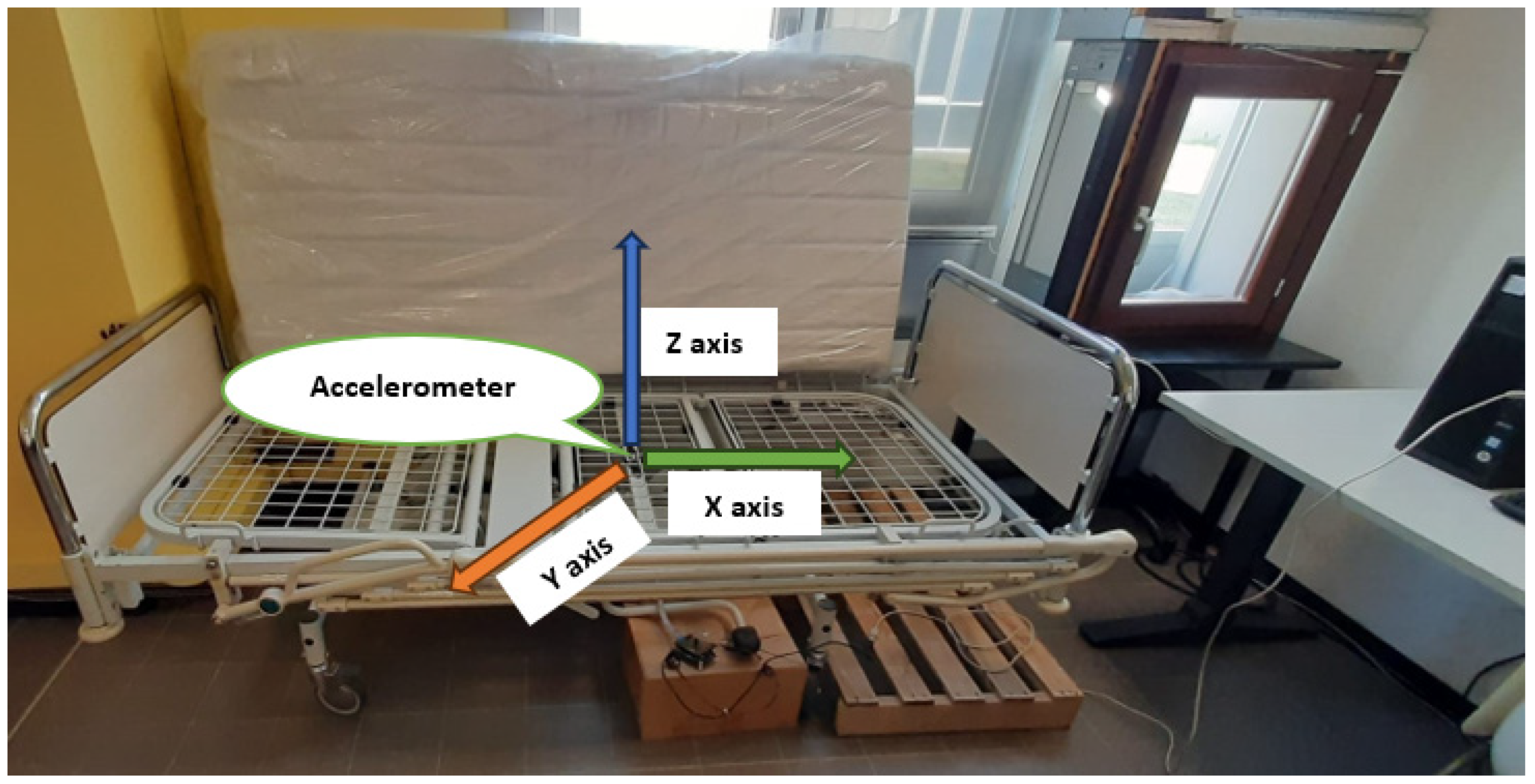

2.1. Setup and Devices

2.2. Data Processing and ML Features

- Xsum, Ysum, Zsum are the sum of acceleration along X, Y, and Z-axes for each window.

- Xstd, Ystd, Zstd are the standard deviation of acceleration along X, Y, and Z-axes for each window.

- Xmax, Ymax, Zmax are the maximum value of acceleration along X, Y, Z-axes for each window.

- ΔXsum is the sum of Δacc along X-axis for each window.

- ΔXstd is the standard deviation of Δacc along X-axis for each window.

- ΔXmax is the maximum value of Δacc along X-axis for each window.

- 0: No heartbeat detected

- 1: Heartbeat detected
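The twelve window features listed above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' pipeline. It assumes that Δacc is the difference between consecutive X-axis samples and that the standard deviation is the population form; neither is confirmed by the text. The function name `window_features` is hypothetical.

```python
import statistics


def window_features(x, y, z):
    """Return the 12 features of one window, ordered Xsum (0) ... Zmax (11).

    x, y, z are equal-length lists of acceleration samples (at least 2 each).
    """
    # Assumption: delta-acc is the first difference of consecutive X samples.
    dx = [b - a for a, b in zip(x, x[1:])]

    def stats(values):
        # Sum, standard deviation (population form, an assumption), maximum.
        return [sum(values), statistics.pstdev(values), max(values)]

    # Order follows the feature table: X, delta-X, Y, Z.
    return stats(x) + stats(dx) + stats(y) + stats(z)


# Toy window with four samples per axis (values are illustrative only).
x = [0.0, 1.0, 3.0, 2.0]
y = [1.0, 1.0, 1.0, 1.0]
z = [0.0, 0.0, 1.0, 1.0]
features = window_features(x, y, z)
```

Each feature vector is then paired with a label of 0 or 1 to form one training sample.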

2.3. ML Algorithms

2.3.1. Logistic Regression

- P(Y = 1) is the probability that the dependent variable Y is equal to 1 (positive class).

- e is the base of the natural logarithm.

- β0, β1, …, βn are the coefficients to be learned from the training data.

- X1, …, Xn are the input features.
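The symbols above are those of the standard logistic model, P(Y = 1) = 1 / (1 + e^−(β0 + β1X1 + … + βnXn)). The sketch below evaluates that expression for one feature vector. The function name `predict_proba` and the example coefficients are hypothetical; in practice the coefficients are learned from the training data.

```python
import math


def predict_proba(features, intercept, coefficients):
    """Return P(Y = 1) for one sample under a logistic model."""
    # Linear part: beta_0 + beta_1 * X_1 + ... + beta_n * X_n
    linear = intercept + sum(b * x for b, x in zip(coefficients, features))
    # Two algebraically equal forms, chosen to avoid overflow in math.exp.
    if linear >= 0:
        return 1.0 / (1.0 + math.exp(-linear))
    e = math.exp(linear)
    return e / (1.0 + e)


# Illustrative coefficients only; a sample is assigned class 1 when p >= 0.5.
p = predict_proba([0.2, -1.0], intercept=0.1, coefficients=[1.5, 0.4])
label = 1 if p >= 0.5 else 0
```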

2.3.2. Linear Discriminant Analysis

- c is the number of classes.

- Ni is the number of instances in class i.

- xij is the j-th instance of class i.

- μi is the mean vector of class i.

- μ is the overall mean vector.

- If ≥ 0.5, classify as Class 1.

- If < 0.5, classify as Class 2.
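For reference, the quantities c, Ni, xij, μi, and μ defined above are the ones that appear in the textbook LDA scatter matrices. The block below gives that standard form as a sketch. The names S_W, S_B, w, and J are assumptions introduced here, not symbols taken from the text.

```latex
% Within-class and between-class scatter (standard textbook form)
S_W = \sum_{i=1}^{c} \sum_{j=1}^{N_i} (x_{ij} - \mu_i)(x_{ij} - \mu_i)^{T},
\qquad
S_B = \sum_{i=1}^{c} N_i \, (\mu_i - \mu)(\mu_i - \mu)^{T}

% LDA seeks the projection w that maximizes the Fisher criterion
J(w) = \frac{w^{T} S_B \, w}{w^{T} S_W \, w}
```

Maximizing J(w) spreads the class means apart along w while keeping each class compact around its own mean.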

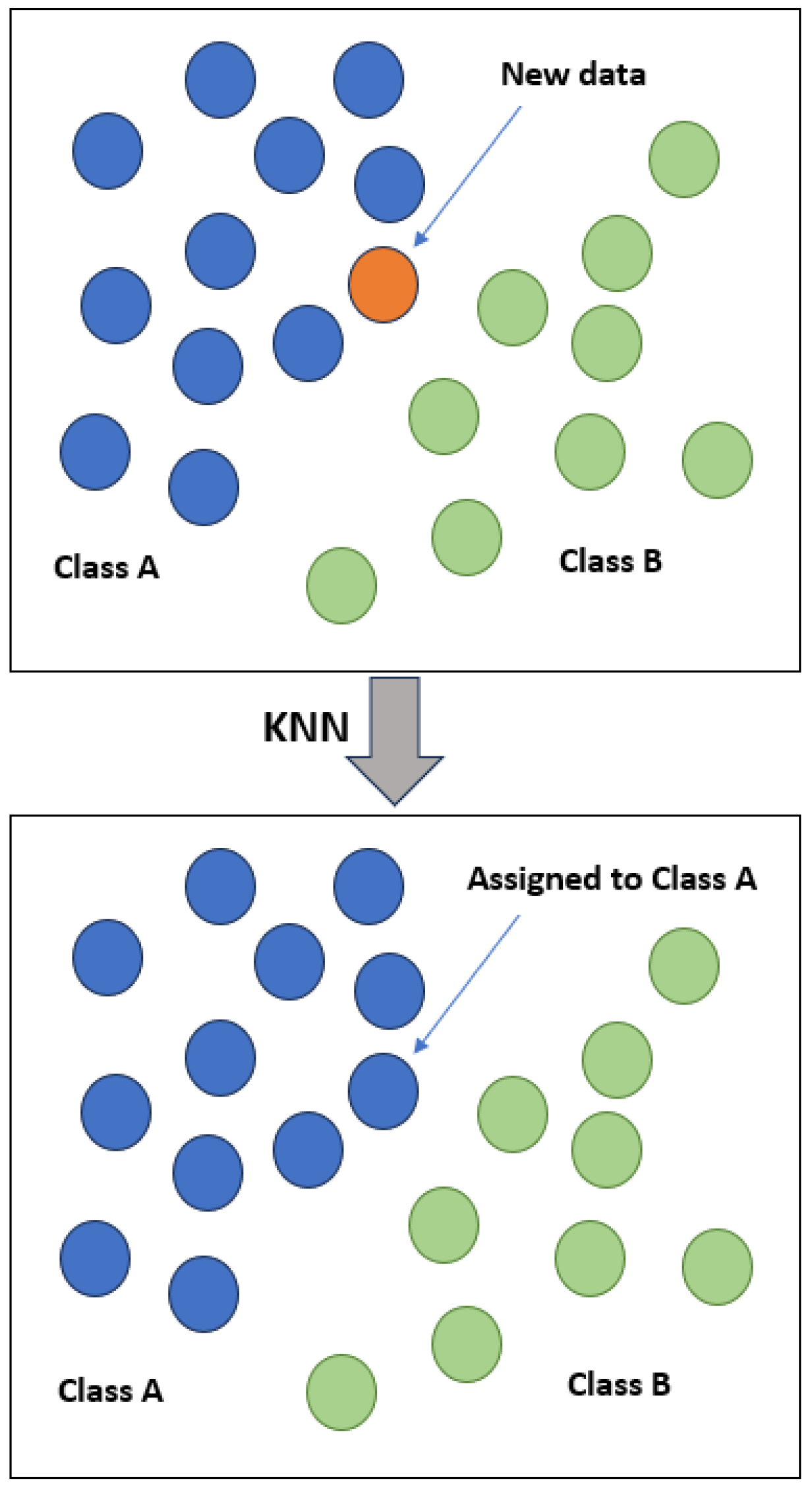

2.3.3. K Nearest Neighbours

- The choice of K is a hyperparameter that needs to be specified. It determines how many neighbours influence the classification decision.

- A smaller K (e.g., 1 or 3) makes the algorithm more sensitive to noise but can capture local patterns.

- A larger K (e.g., 10 or 20) provides a smoother decision boundary but may miss local variations.

- For binary classification, the decision rule is a majority vote among the K nearest neighbours.

- If K is odd, there will be a clear majority.

- If K is even, a tie-breaking rule may be needed.
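The majority vote and the tie case described above can be sketched as follows. This is a minimal illustration using Euclidean distance. Both the distance metric and the tie-breaking rule (take the label of the closest neighbour among the tied classes) are assumptions, and the function name `knn_predict` is hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k):
    """Classify `query` by majority vote among its k nearest training points."""
    # Indices of the training points, sorted by distance to the query.
    order = sorted(range(len(train_points)),
                   key=lambda i: math.dist(train_points[i], query))
    nearest = order[:k]
    votes = Counter(train_labels[i] for i in nearest)
    top = max(votes.values())
    tied = {label for label, count in votes.items() if count == top}
    # With a clear majority, `tied` has one label. On a tie (possible when
    # k is even), fall back to the closest neighbour among the tied classes.
    for i in nearest:
        if train_labels[i] in tied:
            return train_labels[i]


points = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (6.0, 5.0)]
labels = [0, 0, 1, 1]
clear_majority = knn_predict(points, labels, (0.2, 0.2), k=3)
tie_case = knn_predict(points, labels, (2.6, 2.6), k=2)
```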

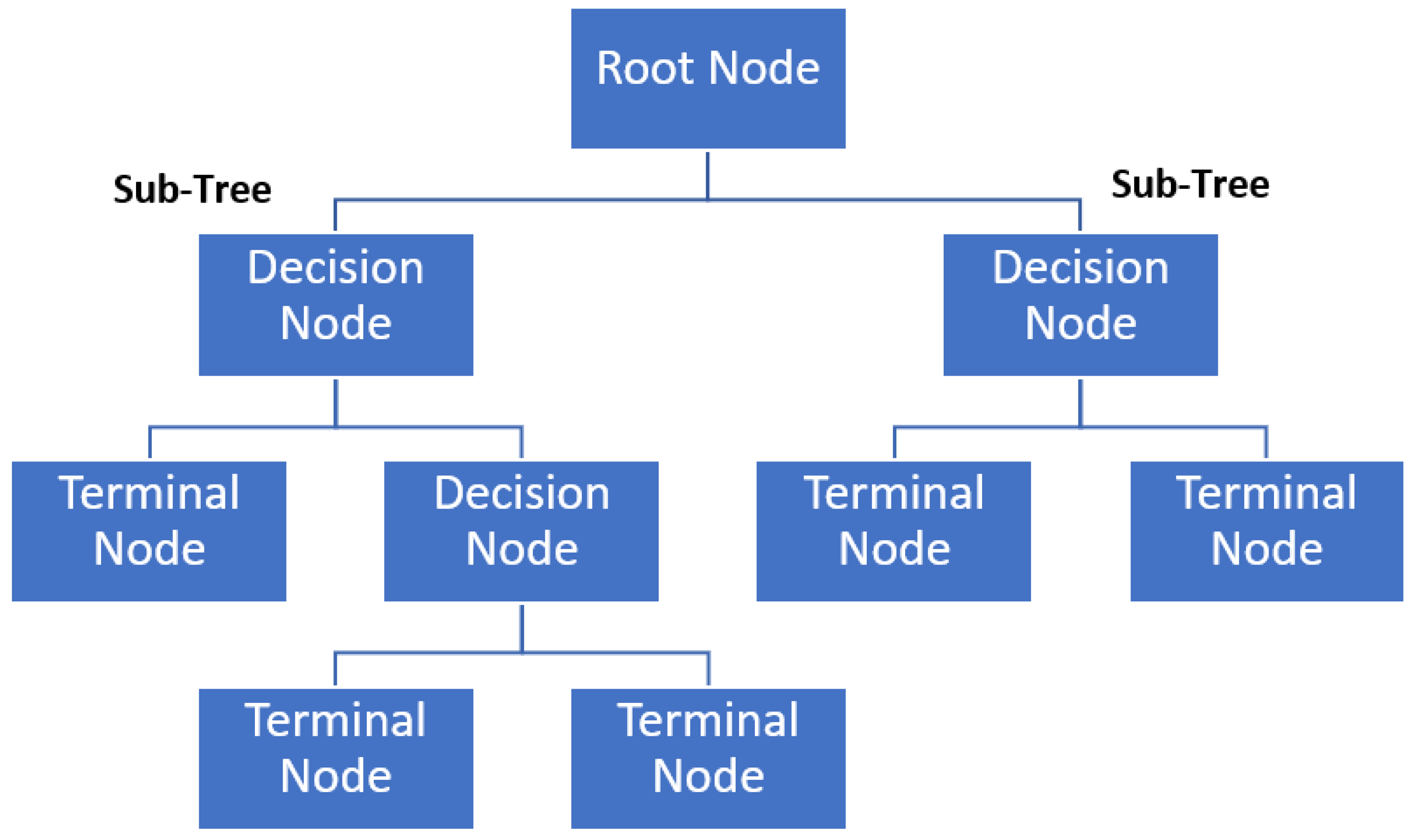

2.3.4. Classification and Regression Trees

2.3.5. Naive Bayes

- Y is the class variable (e.g., 0 or 1).

- X is the vector of feature variables.
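With Y and X defined as above, the Naive Bayes classifier follows from Bayes' theorem plus the "naive" assumption that the features are conditionally independent given the class. The block below states the standard form. The component notation X_1, …, X_n and the predicted label \hat{y} are introduced here for illustration.

```latex
% Bayes' theorem for the class posterior
P(Y \mid X) = \frac{P(Y)\, P(X \mid Y)}{P(X)}

% Conditional independence of the features X_1, ..., X_n given Y
P(Y \mid X_1, \dots, X_n) \propto P(Y) \prod_{i=1}^{n} P(X_i \mid Y)

% Predicted class: the one with the largest posterior
\hat{y} = \arg\max_{y \in \{0, 1\}} \; P(Y = y) \prod_{i=1}^{n} P(X_i \mid Y = y)
```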

2.3.6. Support Vector Machines

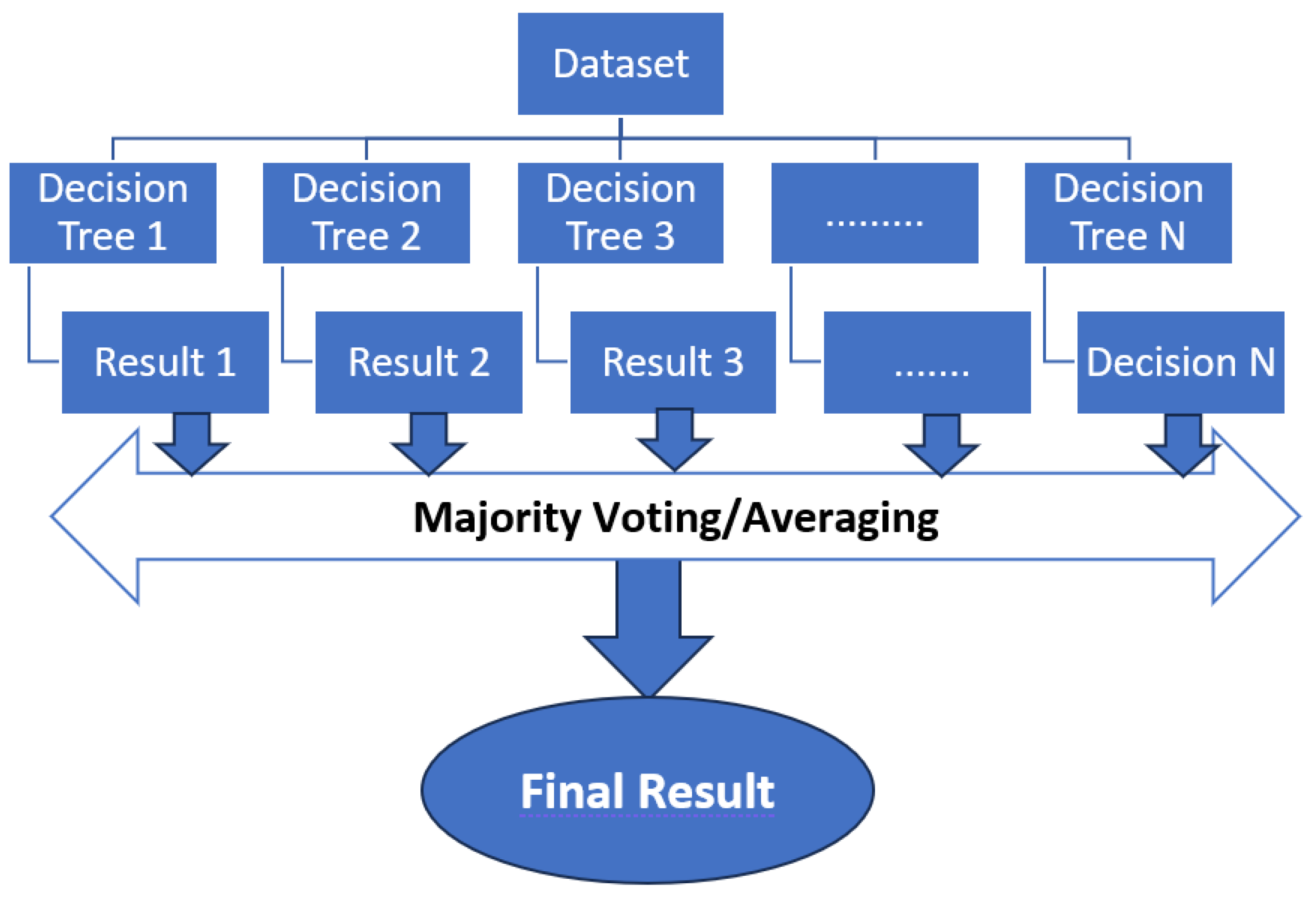

2.3.7. Random Forest

2.3.8. Deep Learning

- Input Layer is the first layer in the network, where input data is fed into the model. Each node in the input layer represents a feature of the input data.

- Hidden layers come after the input layer but before the output layer. Each node in a hidden layer performs a weighted sum of its inputs, applies an activation function to the result, and passes the output to the next layer. Multiple hidden layers allow the network to learn complex and hierarchical representations of the input data.

- Output Layer is the final layer that produces the network’s output. The number of nodes in the output layer depends on the type of task the network is designed for. For binary classification, there is typically one node with a sigmoid activation function, while for multi-class classification, there might be multiple nodes with softmax activation.

- Weights and Biases: Each connection between nodes has an associated weight learned during training. A bias is a term added to the weighted sum of inputs before the activation function is applied. It allows a node to produce a non-zero output even when all the input values are zero. The weights and biases are adjusted during training to minimize the difference between the predicted output and the target values.

- Activation Functions: Nodes in the hidden layers and the output layer typically apply an activation function to introduce non-linearity into the model. Common choices are the rectified linear unit (ReLU) for hidden layers and the sigmoid for the output layer in binary classification. The ReLU function returns its input for positive values and 0 for negative values, so its output lies in [0, +∞). It is computationally efficient and helps mitigate the vanishing gradient problem. The sigmoid function squashes its input to the range (0, 1), making it suitable for binary classification problems where the output represents a probability.

- Stochastic Gradient Descent (SGD) is used for optimization during backpropagation. Instead of updating weights after processing the entire dataset (batch), weights are updated after processing a subset (mini-batch) of the data, which reduces the computational load and helps escape local minima.

- Backpropagation: The algorithm compares the predicted output of the network with the actual output (ground truth) and calculates the error. The error is then propagated backward through the network to update the weights and reduce the error in subsequent iterations.

- DL training: The network is trained using a supervised learning approach, where it learns from a labeled dataset. Backpropagation is performed automatically during training, together with the optimization algorithm (in this case, SGD). It computes the gradient of the loss with respect to the weights and biases in the network. Moving the weights and biases in the direction opposite to this gradient decreases the loss. During each training epoch, the model processes batches of training data, computes the loss, and updates its parameters through backpropagation. This iterative process continues for the specified number of epochs, and the model gradually improves its ability to predict the given task.

- Weights are updated using the gradient of the error with respect to the weights. The learning rate controls the step size of each weight update. After the training process is completed, the final weights are used for DL prediction.
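The components listed above (layers, weights and biases, ReLU and sigmoid activations, backpropagation, mini-batch SGD) can be tied together in a small standard-library sketch. It is not the network used in the study. The single hidden layer, the binary cross-entropy loss, the initialization range, and all function names are assumptions made for illustration.

```python
import math
import random


def relu(v):
    return max(0.0, v)


def sigmoid(v):
    # Two equal forms, chosen to avoid overflow in math.exp.
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def init_network(n_inputs, n_hidden, rng):
    """One hidden ReLU layer and one sigmoid output node."""
    return {
        "w1": [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
               for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "w2": [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(net, x):
    # Weighted sum plus bias for each hidden node, then the activation.
    pre = [sum(w * xi for w, xi in zip(row, x)) + b
           for row, b in zip(net["w1"], net["b1"])]
    hidden = [relu(p) for p in pre]
    out = sigmoid(sum(w * h for w, h in zip(net["w2"], hidden)) + net["b2"])
    return pre, hidden, out


def sgd_step(net, batch, lr):
    """Backpropagate over one mini-batch, then apply one weight update."""
    n = len(batch)
    g_w1 = [[0.0] * len(row) for row in net["w1"]]
    g_b1 = [0.0] * len(net["b1"])
    g_w2 = [0.0] * len(net["w2"])
    g_b2 = 0.0
    for x, target in batch:
        pre, hidden, out = forward(net, x)
        # For sigmoid output with cross-entropy loss, the output error
        # term reduces to (prediction - target).
        delta_out = out - target
        g_b2 += delta_out
        for j, h in enumerate(hidden):
            g_w2[j] += delta_out * h
            # Propagate the error backward through the ReLU node.
            delta_h = delta_out * net["w2"][j] * (1.0 if pre[j] > 0 else 0.0)
            g_b1[j] += delta_h
            for i, xi in enumerate(x):
                g_w1[j][i] += delta_h * xi
    # Step against the mean gradient; lr is the learning rate.
    for j in range(len(net["w2"])):
        net["w2"][j] -= lr * g_w2[j] / n
        net["b1"][j] -= lr * g_b1[j] / n
        for i in range(len(net["w1"][j])):
            net["w1"][j][i] -= lr * g_w1[j][i] / n
    net["b2"] -= lr * g_b2 / n


def train(net, data, epochs, batch_size, lr, rng):
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)  # new mini-batch composition every epoch
        for start in range(0, len(data), batch_size):
            sgd_step(net, data[start:start + batch_size], lr)


rng = random.Random(0)
net = init_network(n_inputs=2, n_hidden=4, rng=rng)
toy_data = [([0.0, 0.1], 0), ([0.2, 0.0], 0), ([1.0, 0.9], 1), ([0.8, 1.0], 1)]
train(net, toy_data, epochs=50, batch_size=2, lr=0.1, rng=rng)
probability = forward(net, [0.9, 0.9])[2]
```

The final value of `probability` is the sigmoid output for one new sample and would be thresholded at 0.5 to obtain a class label.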

2.4. Metric Evaluation

- True Positive (TP): The number of instances that are actually positive (belong to the positive class) and are correctly predicted as positive by the model.

- False Positive (FP): The number of instances that are actually negative (belong to the negative class) but are incorrectly predicted as positive by the model.

- True Negative (TN): The number of instances that are actually negative and are correctly predicted as negative by the model.

- False Negative (FN): The number of instances that are actually positive but are incorrectly predicted as negative by the model.

- Metric_negative and Metric_positive are each class’s performance metrics (e.g., precision, recall, F1 score).

- Weight_negative and Weight_positive are the number of samples belonging to the negative class and to the positive class, respectively.

- Total Samples is the total number of samples in the dataset.
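The definitions above are enough to compute the usual per-class metrics and their averages from the four confusion-matrix counts. The sketch below uses the standard formulas for precision, recall, F1 score, and accuracy. The weighted average is each class metric multiplied by the number of samples in that class, summed, and divided by the total number of samples. The macro average is the plain mean of the two class metrics. Function names and the example counts are hypothetical.

```python
def class_metrics(hits, false_alarms, misses):
    """Precision, recall, and F1 for one class."""
    precision = hits / (hits + false_alarms) if hits + false_alarms else 0.0
    recall = hits / (hits + misses) if hits + misses else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def report(tp, fp, tn, fn):
    positive = class_metrics(tp, fp, fn)
    # For the negative class the roles swap: TN are its hits,
    # FN its false alarms, and FP its misses.
    negative = class_metrics(tn, fn, fp)
    n_positive = tp + fn  # samples that are actually positive
    n_negative = tn + fp  # samples that are actually negative
    total = n_positive + n_negative
    macro = {k: (negative[k] + positive[k]) / 2 for k in positive}
    weighted = {k: (negative[k] * n_negative + positive[k] * n_positive) / total
                for k in positive}
    return {
        "negative": negative,
        "positive": positive,
        "macro": macro,
        "weighted": weighted,
        "accuracy": (tp + tn) / total,
    }


# Illustrative counts only, not results from the study.
result = report(tp=40, fp=10, tn=45, fn=5)
```

For binary classification, the weighted-average recall always equals the accuracy, which is a quick sanity check on any report of this kind.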

3. Results and Discussion

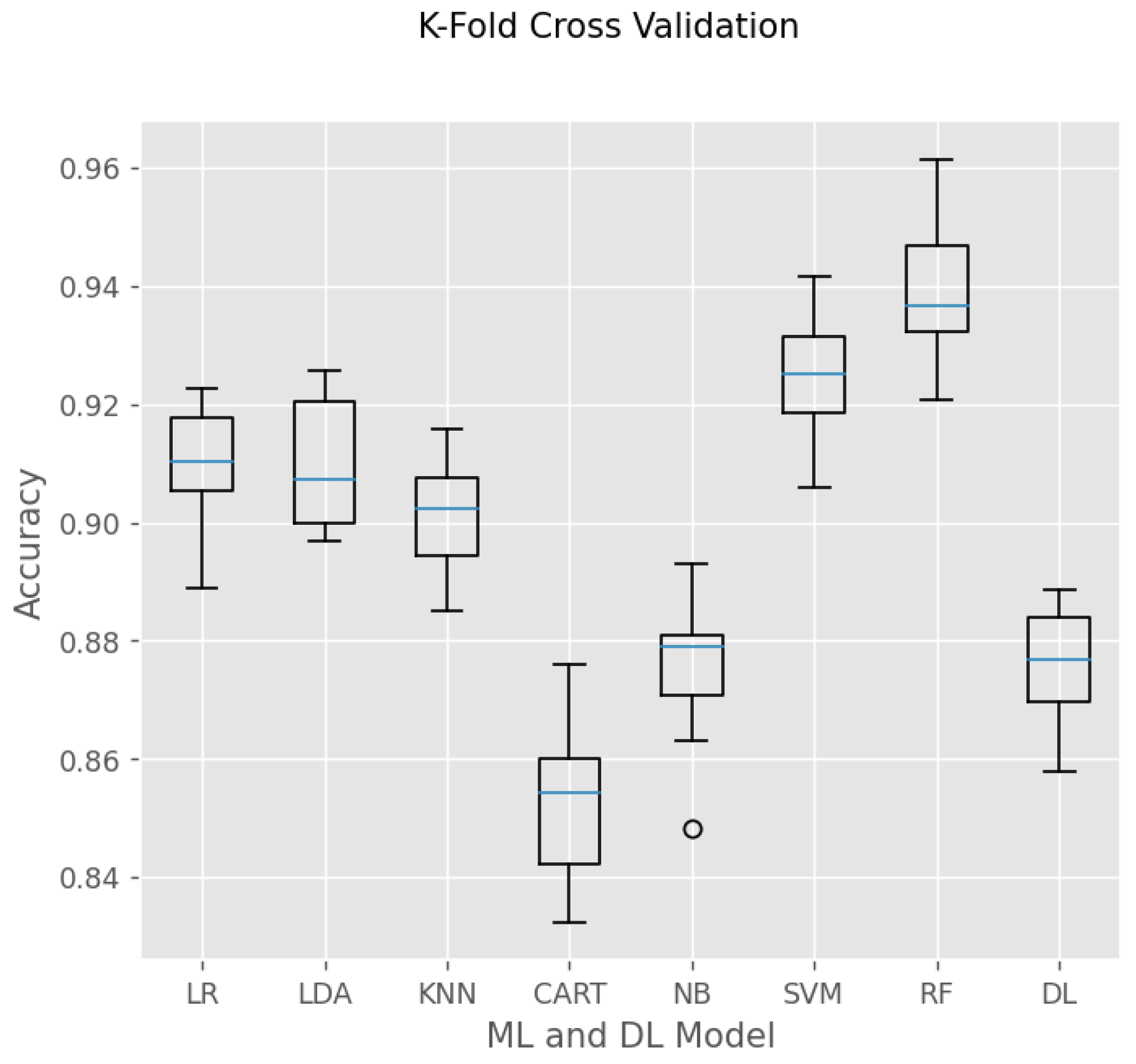

3.1. Model Comparison and Selection

3.2. Test on Each Person

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| ML Features | | | | | | | | | | | | Heart Beat Class |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Xsum (0) | Xstd (1) | Xmax (2) | ΔXsum (3) | ΔXstd (4) | ΔXmax (5) | Ysum (6) | Ystd (7) | Ymax (8) | Zsum (9) | Zstd (10) | Zmax (11) | 0 or 1 |

| Models | Mean Accuracy | Std | Training and Test Time for 1-Fold (s) |
|---|---|---|---|
| LR | 0.908 | 0.09 | 0.179 |
| LDA | 0.902 | 0.013 | 0.048 |
| KNN | 0.907 | 0.011 | 0.069 |
| CART | 0.854 | 0.015 | 0.2385 |
| NB | 0.846 | 0.012 | 0.006 |
| SVM | 0.925 | 0.014 | 3.246 |
| RF | 0.935 | 0.012 | 2.256 |
| DL | 0.876 | 0.010 | 109.105 |

| Person Index | Random Forest | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| | Precision (No Beating) | Precision (Heart Beating) | Recall (No Beating) | Recall (Heart Beating) | F1-Score (No Beating) | F1-Score (Heart Beating) | Macro Average | Weighted Average | Accuracy |
| P1 | 0.90 | 0.95 | 0.95 | 0.89 | 0.93 | 0.92 | 0.92 | 0.92 | 0.92 |
| P2 | 0.91 | 0.94 | 0.89 | 0.95 | 0.90 | 0.95 | 0.92 | 0.93 | 0.93 |
| P3 | 0.91 | 0.99 | 0.99 | 0.82 | 0.95 | 0.89 | 0.92 | 0.93 | 0.93 |
| P4 | 0.91 | 0.93 | 0.93 | 0.90 | 0.92 | 0.92 | 0.92 | 0.92 | 0.92 |
| P5 | 0.96 | 0.95 | 0.96 | 0.95 | 0.96 | 0.95 | 0.96 | 0.96 | 0.96 |
| P6 | 0.93 | 0.93 | 0.94 | 0.92 | 0.94 | 0.92 | 0.95 | 0.93 | 0.93 |
| P7 | 0.88 | 0.91 | 0.86 | 0.93 | 0.87 | 0.92 | 0.90 | 0.90 | 0.90 |
| P8 | 0.96 | 0.93 | 0.94 | 0.96 | 0.95 | 0.95 | 0.95 | 0.95 | 0.95 |
| P9 | 0.87 | 0.96 | 0.95 | 0.90 | 0.91 | 0.93 | 0.91 | 0.92 | 0.92 |
| P10 | 0.93 | 0.93 | 0.90 | 0.95 | 0.92 | 0.94 | 0.93 | 0.93 | 0.93 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hoang, M.L.; Matrella, G.; Ciampolini, P. Comparison of Machine Learning Algorithms for Heartbeat Detection Based on Accelerometric Signals Produced by a Smart Bed. Sensors 2024, 24, 1900. https://doi.org/10.3390/s24061900
