A Crowd Movement Analysis Method Based on Radar Particle Flow

Abstract

1. Introduction

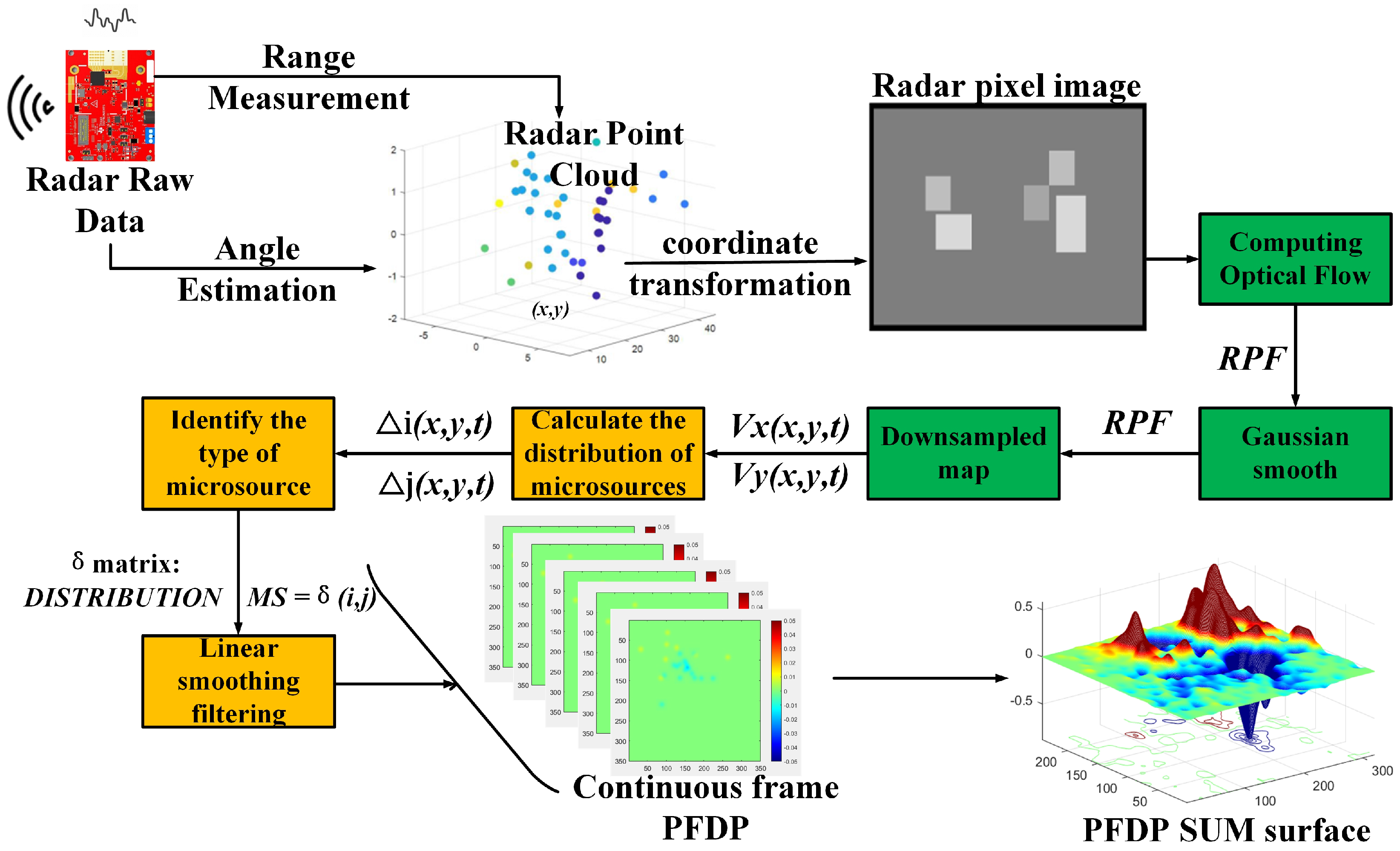

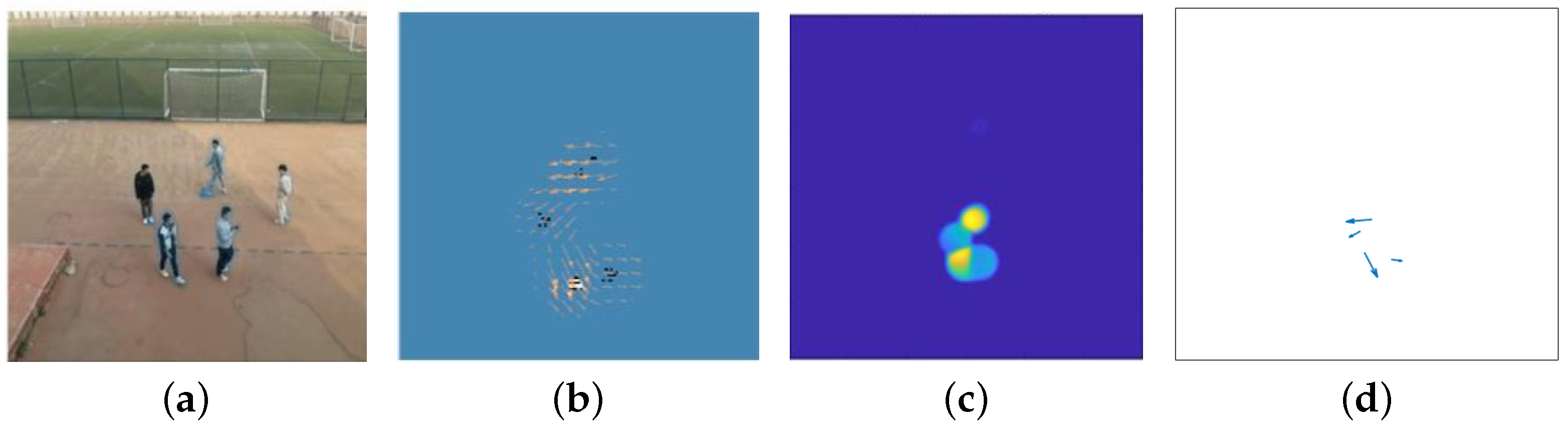

- mm-Wave radar was employed to gather crowd movement information, introducing the concept of RPF. Spatial consistency constraints were enhanced through the fusion of multiple frames, followed by the transformation of optical flow to a binary image by utilizing neighborhood-based Gaussian smoothing.

- Based on particle flow information, we derived the virtual motion field of target movement and obtained the particle flow diffusion potential (PFDP). Crowd movement diffusion and aggregation were determined by analyzing extremum values and the spatial distribution of the PFDP.

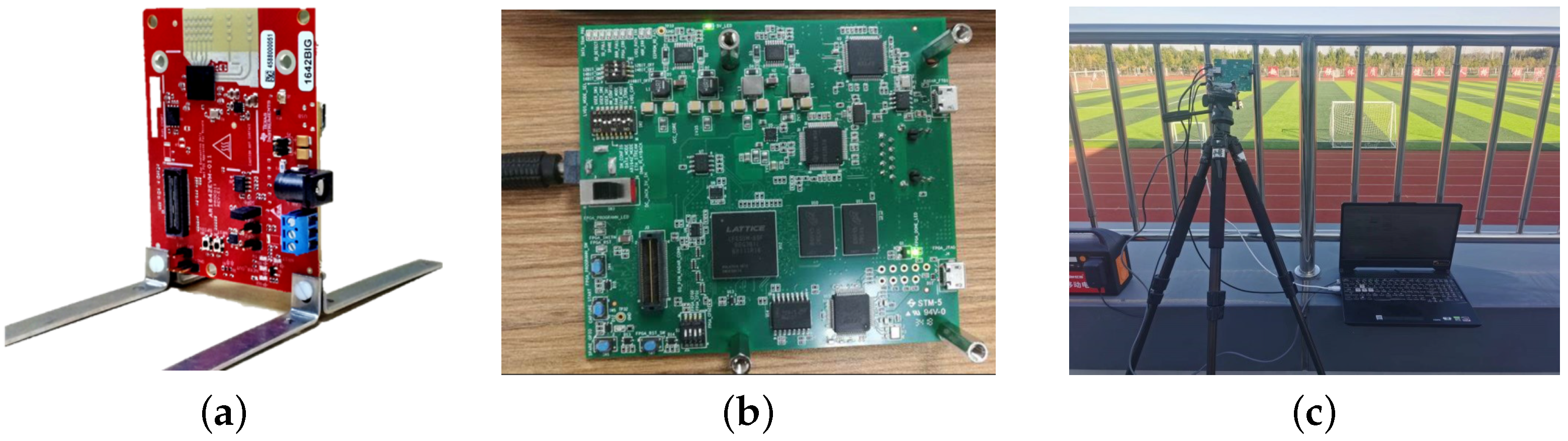

- A testing platform was established by using a Texas Instruments (TI) mm-Wave radar to collect continuous frame data under various motion patterns, such as free movement, aggregation, and dispersion. By processing over 20,000 frames of radar raw data, the functionality of the proposed algorithm in crowd behavior discrimination was validated, and the algorithm’s performance was assessed.

2. Preliminary Knowledge

2.1. Optical Flow

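- Brightness constancy assumption: This assumption asserts that the brightness of an object remains constant as it moves between frames. It is the most fundamental assumption of optical flow, and various derivative algorithms in optical flow are built upon this premise. The mathematical expression of this assumption is as follows:

  $$I(x, y, t) = I(x + dx,\ y + dy,\ t + dt) \tag{1}$$

  where $I$ represents the grayscale values at various points in a frame, and it can be viewed as a function of the pixel coordinates $(x, y)$ as well as time $t$. In most application scenarios, RGB images are typically preconverted to grayscale. This preprocessing step is undertaken to eliminate redundant information, thereby enhancing computational efficiency. Perform a Taylor expansion of the right-hand side of Equation (1) at $(x, y, t)$ to obtain

  $$I(x + dx,\ y + dy,\ t + dt) = I(x, y, t) + \frac{\partial I}{\partial x}\,dx + \frac{\partial I}{\partial y}\,dy + \frac{\partial I}{\partial t}\,dt + \varepsilon \tag{2}$$

  where $\varepsilon$ is a second- and higher-order term that includes $dx$, $dy$, and $dt$. Subtract $I(x, y, t)$ from both sides, so that the left-hand side vanishes by Equation (1); then neglect $\varepsilon$ and divide by $dt$ to obtain

  $$\frac{\partial I}{\partial x}\frac{dx}{dt} + \frac{\partial I}{\partial y}\frac{dy}{dt} + \frac{\partial I}{\partial t} = 0 \tag{3}$$

  Bring $u = dx/dt$, $v = dy/dt$ into Equation (3):

  $$I_x u + I_y v + I_t = 0 \tag{4}$$

  where $I_x$, $I_y$, and $I_t$ denote the partial derivatives of $I$ with respect to $x$, $y$, and $t$. Equation (4) is called the optical flow constraint equation, which reflects the corresponding relationship between grayscale and velocity.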

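- The slow motion assumption: It is assumed that time is continuous and that the motion is slow, so the position of the target does not undergo drastic changes over time. It should be noted that the notion of drastic displacement is not easily quantifiable. The trajectory of the target between adjacent frames can be "approximated" as continuous in pixels. When the video frame rate is higher or the object's motion is sufficiently slow, this assumption is easily satisfied. As reflected in Equation (4), the changes in $u$ and $v$ as pixels move are slow, and the variation within a local region is small. Especially when the target undergoes nondeformable rigid body motion, the spatial change rate of the velocity in the local region is zero. Therefore, a smoothing term is introduced:

  $$E_s = \iint \left[ \left(\frac{\partial u}{\partial x}\right)^2 + \left(\frac{\partial u}{\partial y}\right)^2 + \left(\frac{\partial v}{\partial x}\right)^2 + \left(\frac{\partial v}{\partial y}\right)^2 \right] dx\,dy \tag{5}$$

  For all pixels, the requirement is to solve for $u$ and $v$ when $E_s$ is at its minimum. Combining the optical flow constraint, Equation (4), with the velocity smoothing constraint, Equation (5), gives the following energy function:

  $$E = \iint \left[ \left(I_x u + I_y v + I_t\right)^2 + \lambda \left( \left(\frac{\partial u}{\partial x}\right)^2 + \left(\frac{\partial u}{\partial y}\right)^2 + \left(\frac{\partial v}{\partial x}\right)^2 + \left(\frac{\partial v}{\partial y}\right)^2 \right) \right] dx\,dy \tag{6}$$

  where $\lambda$ is the smoothing weight coefficient, which represents the weight of the velocity smoothing term. This is a functional extreme value problem, which can be solved using the Euler–Lagrange equations. The Euler–Lagrange equations for the first-order partial derivatives of the bivariate function corresponding to Equation (6) are

  $$I_x \left(I_x u + I_y v + I_t\right) - \lambda\,\Delta u = 0, \qquad I_y \left(I_x u + I_y v + I_t\right) - \lambda\,\Delta v = 0$$

  where

  $$\Delta = \frac{\partial^2}{\partial x^2} + \frac{\partial^2}{\partial y^2}$$

  In [27], the Laplacian operator is approximated by taking the difference between the velocity of a point and the average velocity of its surroundings. That is,

  $$\Delta u \approx \bar{u} - u, \qquad \Delta v \approx \bar{v} - v$$

  where $\bar{u}$ and $\bar{v}$ are the average velocities over the surrounding pixels.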
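
The two assumptions above lead to the classical iterative scheme of [27]. The following is a minimal NumPy sketch of that iteration, not the authors' implementation. The function names `neighbor_mean` and `horn_schunck`, the parameter `lam` (the smoothing weight), the finite-difference gradients, and the 4-neighbor average with replicated borders are all illustrative assumptions.

```python
import numpy as np

def neighbor_mean(f):
    """Average of the 4 nearest neighbors, with replicated borders (assumed stencil)."""
    p = np.pad(f, 1, mode="edge")
    return 0.25 * (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:])

def horn_schunck(I1, I2, lam=1.0, n_iter=100):
    """Estimate the flow (u, v) between two grayscale frames.

    Each pass replaces the Laplacian by (neighbor average - value) and solves
    the two Euler-Lagrange equations pointwise for u and v.
    """
    I1 = np.asarray(I1, dtype=float)
    I2 = np.asarray(I2, dtype=float)
    # np.gradient returns derivatives along axis 0 (rows, y) then axis 1 (columns, x).
    Iy, Ix = np.gradient(0.5 * (I1 + I2))
    It = I2 - I1
    u = np.zeros_like(I1)
    v = np.zeros_like(I1)
    for _ in range(n_iter):
        u_bar, v_bar = neighbor_mean(u), neighbor_mean(v)
        # Residual of the optical flow constraint evaluated at the local averages.
        resid = (Ix * u_bar + Iy * v_bar + It) / (lam + Ix**2 + Iy**2)
        u = u_bar - Ix * resid
        v = v_bar - Iy * resid
    return u, v
```

A larger `lam` yields a smoother field at the cost of slower propagation of motion into textureless regions; the value has to be tuned to the intensity scale of the input.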

2.2. Lucas–Kanade (LK) Method and Farneback Method

2.3. The Computational Complexity of the Optical Flow Method

3. Methodology

- (1)

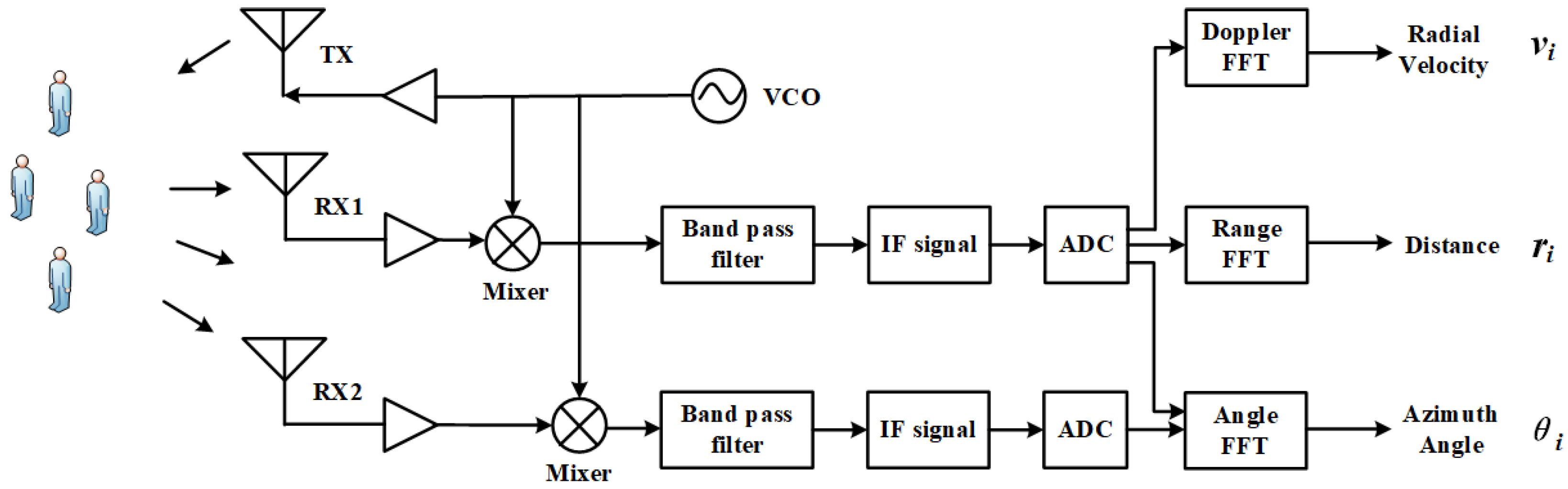

- The raw Analog-to-Digital Converter (ADC) data used to capture crowd echoes can be transformed into 3D Fast Fourier Transform (FFT) to obtain distance and angle information of measurement points, thereby exporting a mm-Wave radar point set.

- (2)

- Coordinate transformation is applied to project all measurement points onto pixel coordinates, forming a pixel map.

- (3)

- The optical flow field is computed between adjacent frames, and RPF is obtained through Gaussian smoothing and local maxima sampling.

- (4)

- Utilizing the direction and position information of vectors at various points in the flow field, the virtual distribution of sources at diverse locations in the plane is inferred, and a new concept of micro-source is defined.

- (5)

- The micro-sources are integrated within a closed rectangular window, iterating over the entire RPF plane to generate a matrix, which describes the potential distribution at various locations in the plane.

- (6)

- Over a certain period, these potential matrices are superimposed to form a potential surface, delineating aggregation and divergence points while effectively characterizing the flow direction of the crowd.
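
The coordinate transformation in the second step can be sketched as a simple rasterization. This is only an illustration under stated assumptions: the function name `points_to_pixel_map`, the rectangular field of view given by `x_range` and `y_range` (in meters), and the binary 0/255 encoding are hypothetical choices, not taken from the paper.

```python
import numpy as np

def points_to_pixel_map(points_xy, x_range, y_range, shape):
    """Rasterize radar points (x, y in meters) into a binary pixel map.

    x_range, y_range: (min, max) extent of the monitored area (assumed known).
    shape: (rows, cols) of the output image.
    """
    h, w = shape
    pts = np.asarray(points_xy, dtype=float).reshape(-1, 2)
    cols = np.floor((pts[:, 0] - x_range[0]) / (x_range[1] - x_range[0]) * w).astype(int)
    rows = np.floor((pts[:, 1] - y_range[0]) / (y_range[1] - y_range[0]) * h).astype(int)
    # Discard points that fall outside the monitored area.
    keep = (cols >= 0) & (cols < w) & (rows >= 0) & (rows < h)
    img = np.zeros((h, w), dtype=np.uint8)
    img[rows[keep], cols[keep]] = 255
    return img
```

Consecutive pixel maps produced this way are the inputs between which the optical flow field is computed.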

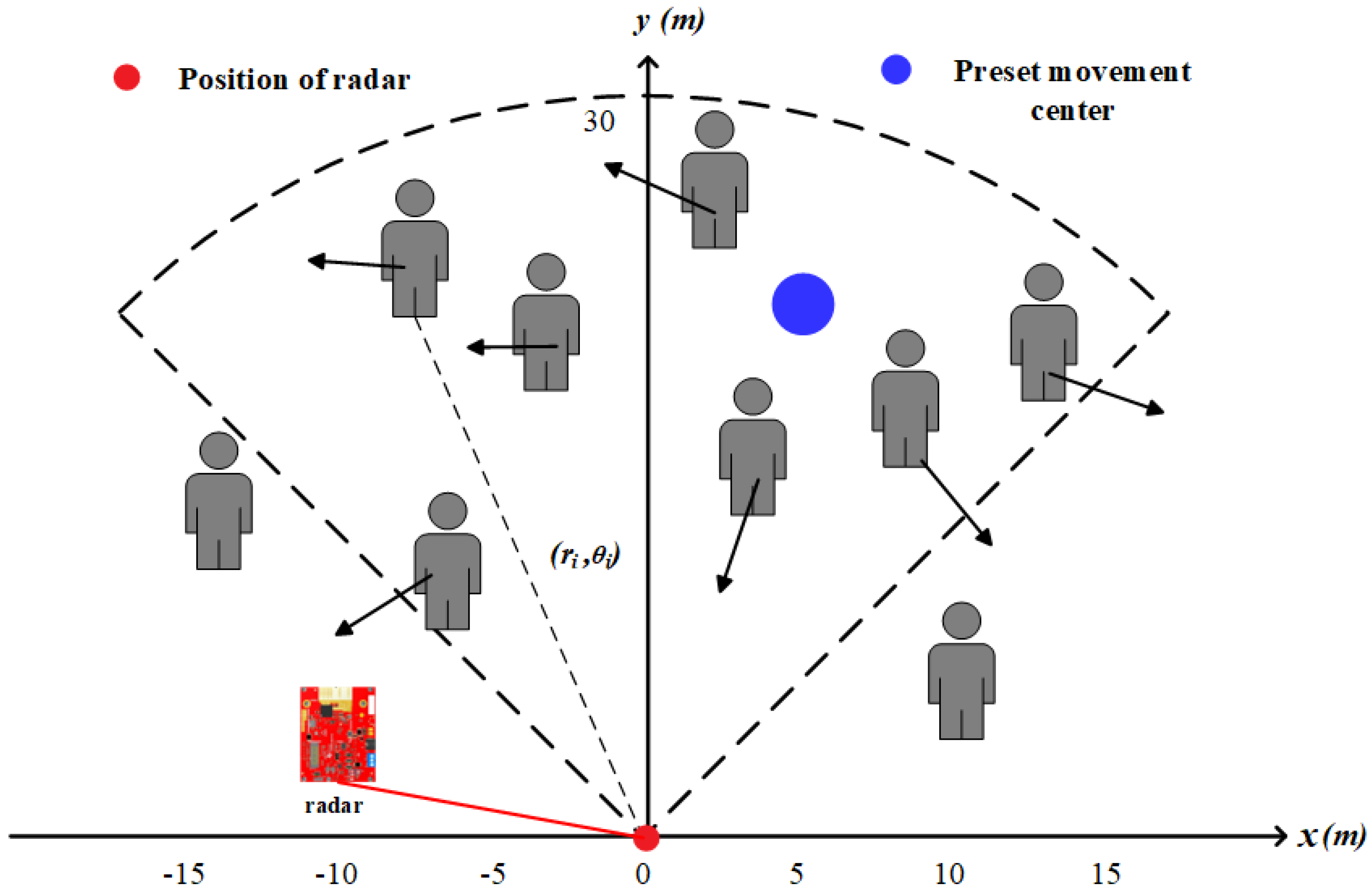

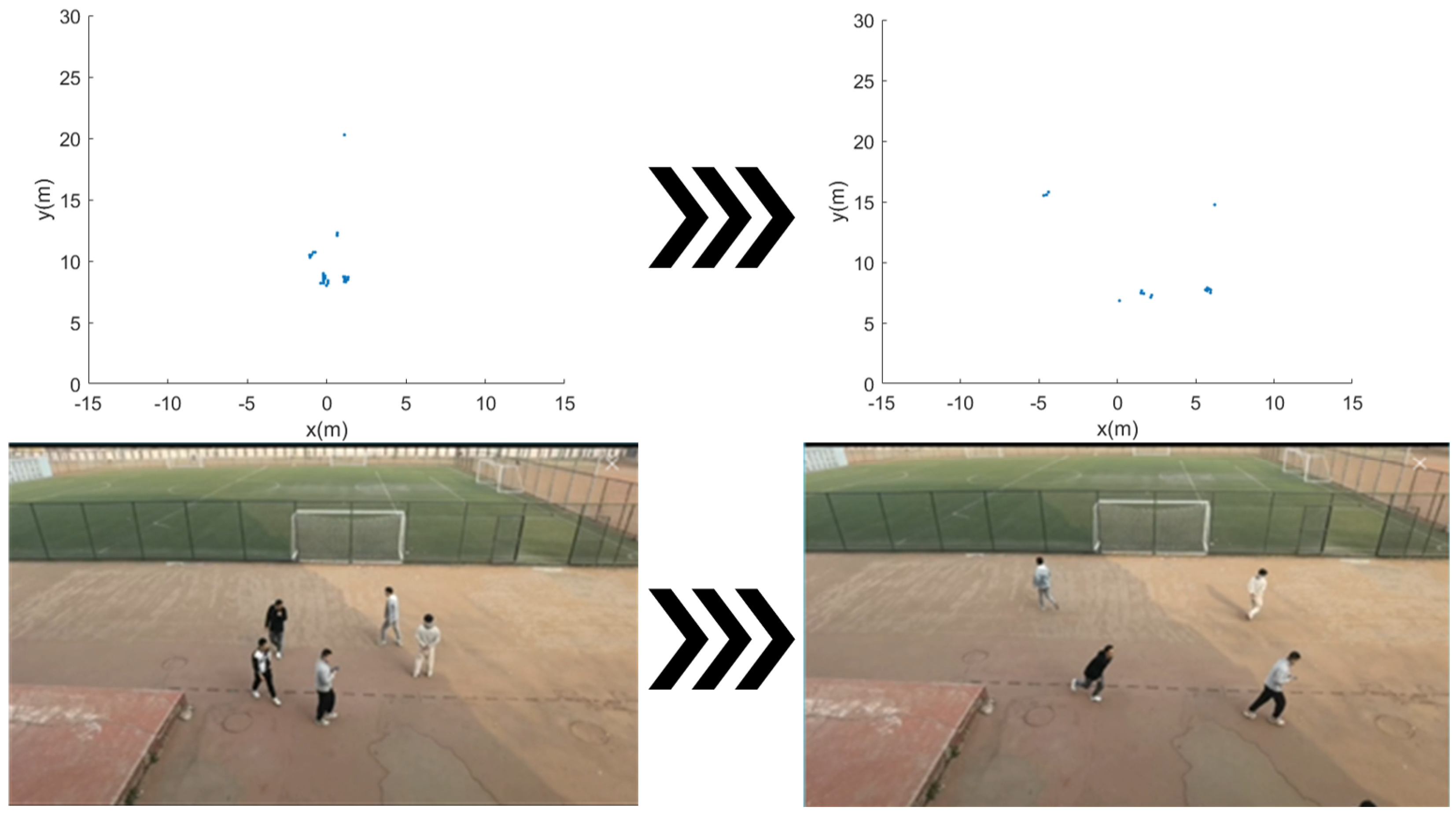

3.1. Acquisition and Preprocessing of Point Set Pixel Images

3.2. Radar Particle Flow

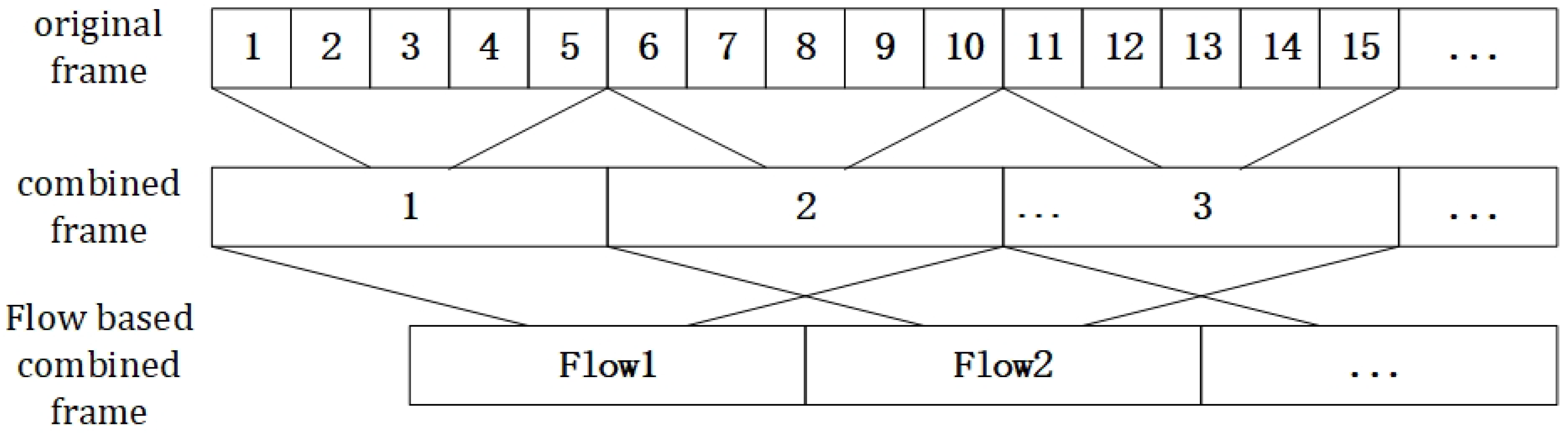

- Merging data across multiple frames can help suppress target disappearance. For example, every 5 original frames can be merged into 1 combined frame, and the RPF can then be calculated from adjacent pairs of these combined frames, as shown in Figure 5.

- To enhance the constraint of spatial consistency, it was necessary to expand the neighborhood of optical flow calculation. In this study, since the objects were mm-Wave radar point clouds with no fixed shape, an adaptive method was needed to determine the neighborhood size (NS). The process in this method is as follows:

- Input a set of combined-frame mm-Wave point set data, $P = \{p_1, p_2, \dots, p_N\}$. Since the point set of the targets within the combined frame exhibits a certain degree of extension in the direction of travel, similar to an ellipse, we chose the adaptive ellipse distance density peak fuzzy (AEDDPF) [30] method to cluster and obtain $K$ clusters.

- For each cluster $C_k$, calculate the geometric center:

  $$c_k = \frac{1}{N_k} \sum_{p_i \in C_k} p_i$$

  where $N_k$ represents the number of points belonging to the $k$th cluster.

- Take the geometric center of each cluster as the center to construct a circle whose radius $r_k$ is determined by the distances $\|p_i - c_k\|$ between the points of the cluster and its center. Here $\|\cdot\|$ represents distance.

- Finally, calculate the average diameter of all clusters as NS:

  $$\mathrm{NS} = \frac{1}{K} \sum_{k=1}^{K} 2 r_k$$
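
The frame merging and the adaptive neighborhood size can be sketched as follows. This is a hedged illustration: the cluster labels are assumed to come from an external clustering step (the AEDDPF method itself is not implemented here), the names `merge_frames` and `neighborhood_size` are hypothetical, and taking the circle radius as the largest point-to-center distance is an assumption made for this sketch.

```python
import numpy as np

def merge_frames(frames, group=5):
    """Stack every `group` consecutive point sets into one combined frame."""
    return [np.vstack(frames[i:i + group])
            for i in range(0, len(frames) - group + 1, group)]

def neighborhood_size(points, labels):
    """Average cluster diameter, used as the neighborhood size (NS).

    points: (N, 2) array of pixel coordinates in one combined frame.
    labels: (N,) cluster index per point, assumed to be provided by a clusterer.
    """
    points = np.asarray(points, dtype=float)
    labels = np.asarray(labels)
    diameters = []
    for k in np.unique(labels):
        cluster = points[labels == k]
        center = cluster.mean(axis=0)              # geometric center
        # Assumption: radius = largest distance from a cluster point to the center.
        radius = np.linalg.norm(cluster - center, axis=1).max()
        diameters.append(2.0 * radius)
    return float(np.mean(diameters))
```

In practice NS would typically be rounded to an odd integer before being used as a window size.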

Next, run the Farneback algorithm on consecutive frames to compute the RPF based on mm-Wave point set images.

- In order to obtain the overall velocity direction of targets, it is necessary to downsample the RPF of the target and its neighboring pixels. Since the shape of the radar point set for a target is unstable, unlike in optical imaging, RPF cannot utilize feature point tracking [31,32] to obtain the target's velocity. Considering that the velocity magnitudes of the target point and the various pixel points in its neighborhood follow a Gaussian distribution, the direction corresponding to the maximum velocity magnitude within that neighborhood can be taken as the overall velocity direction of the target. That is,

  $$\mathbf{v}_{\mathrm{RPF}}(x_0, y_0) = \mathbf{v}(x^*, y^*), \qquad (x^*, y^*) = \arg\max_{(x, y) \in \Omega(x_0, y_0)} \|\mathbf{v}(x, y)\|$$

  where $\Omega(x_0, y_0)$ is the neighborhood centered at point $(x_0, y_0)$, and its size is generally about NS.
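
The local-maximum sampling can be illustrated with a short NumPy sketch. It assumes a dense flow array of shape (H, W, 2) holding the x and y components per pixel (the layout returned, for example, by OpenCV's `cv2.calcOpticalFlowFarneback`). The function name `sample_rpf`, the use of non-overlapping tiles instead of neighborhoods centered on each target, and the `min_mag` threshold are simplifying assumptions.

```python
import numpy as np

def sample_rpf(flow, ns=9, min_mag=1e-3):
    """Keep one flow vector per ns x ns tile: the one with the largest magnitude.

    Returns an (M, 4) array of rows (row, col, vx, vy).
    """
    h, w, _ = flow.shape
    mag = np.linalg.norm(flow, axis=2)
    particles = []
    for r0 in range(0, h, ns):
        for c0 in range(0, w, ns):
            block = mag[r0:r0 + ns, c0:c0 + ns]
            i, j = np.unravel_index(np.argmax(block), block.shape)
            if block[i, j] > min_mag:          # skip tiles without motion
                r, c = r0 + i, c0 + j
                particles.append((r, c, flow[r, c, 0], flow[r, c, 1]))
    return np.array(particles, dtype=float).reshape(-1, 4)
```

Each returned row plays the role of one particle: a position together with the dominant velocity vector of its neighborhood.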

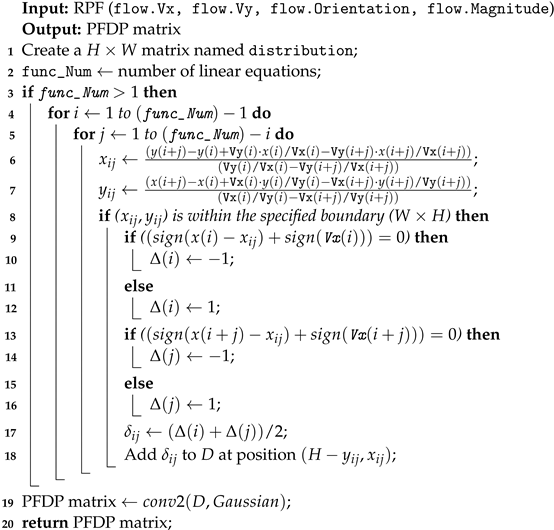

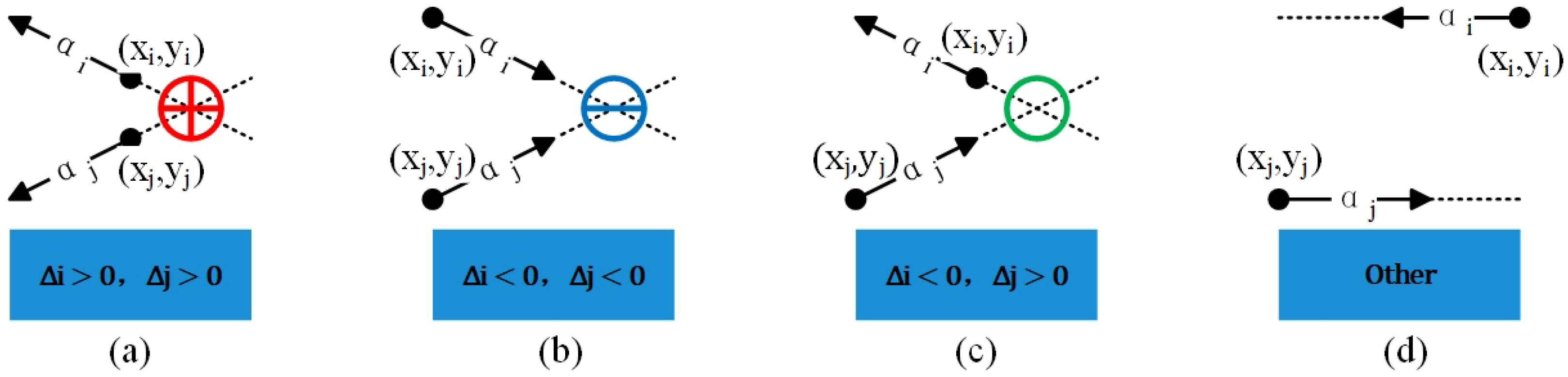

3.3. Micro-Source (MS) and PFDP

- (a) The two vectors originate from the intersection point, and, at that point, the MS value is 1.

- (b) Both vectors point toward the intersection point, and, at that point, the MS value is −1.

- (c) One vector points to the intersection point and the other points away from it; at that point, the MS value is 0.

- (d) In other cases, the MS value is undefined.
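
The four cases can be sketched for a single pair of flow vectors. This is an illustrative reading of the rules, not the authors' code: the function name `micro_source`, the representation of each particle as a position and a velocity, and the tolerance `eps` are assumptions. The lines through the two vectors are intersected, and the sign of each line parameter tells whether the vector points toward or away from the intersection.

```python
import numpy as np

def micro_source(p1, v1, p2, v2, eps=1e-9):
    """Classify the intersection of two flow vectors.

    Returns (intersection, value) with value +1 (both vectors leave the point),
    -1 (both point toward it) or 0 (mixed), or None when it is undefined.
    """
    p1, v1, p2, v2 = (np.asarray(a, dtype=float) for a in (p1, v1, p2, v2))
    # Solve p1 + t1 * v1 = p2 + t2 * v2 for the line parameters (t1, t2).
    A = np.column_stack((v1, -v2))
    if abs(np.linalg.det(A)) < eps:
        return None                      # parallel or zero vectors
    t1, t2 = np.linalg.solve(A, p2 - p1)
    x = p1 + t1 * v1
    if t1 < 0 and t2 < 0:
        return x, 1                      # intersection lies behind both vectors
    if t1 > 0 and t2 > 0:
        return x, -1                     # intersection lies ahead of both vectors
    if t1 * t2 < 0:
        return x, 0                      # one toward, one away
    return None                          # a vector starts exactly at the intersection
```

For example, `micro_source((1, 0), (1, 0), (0, 1), (0, 1))` describes two particles moving away from the origin and is classified as +1 at the intersection (0, 0).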

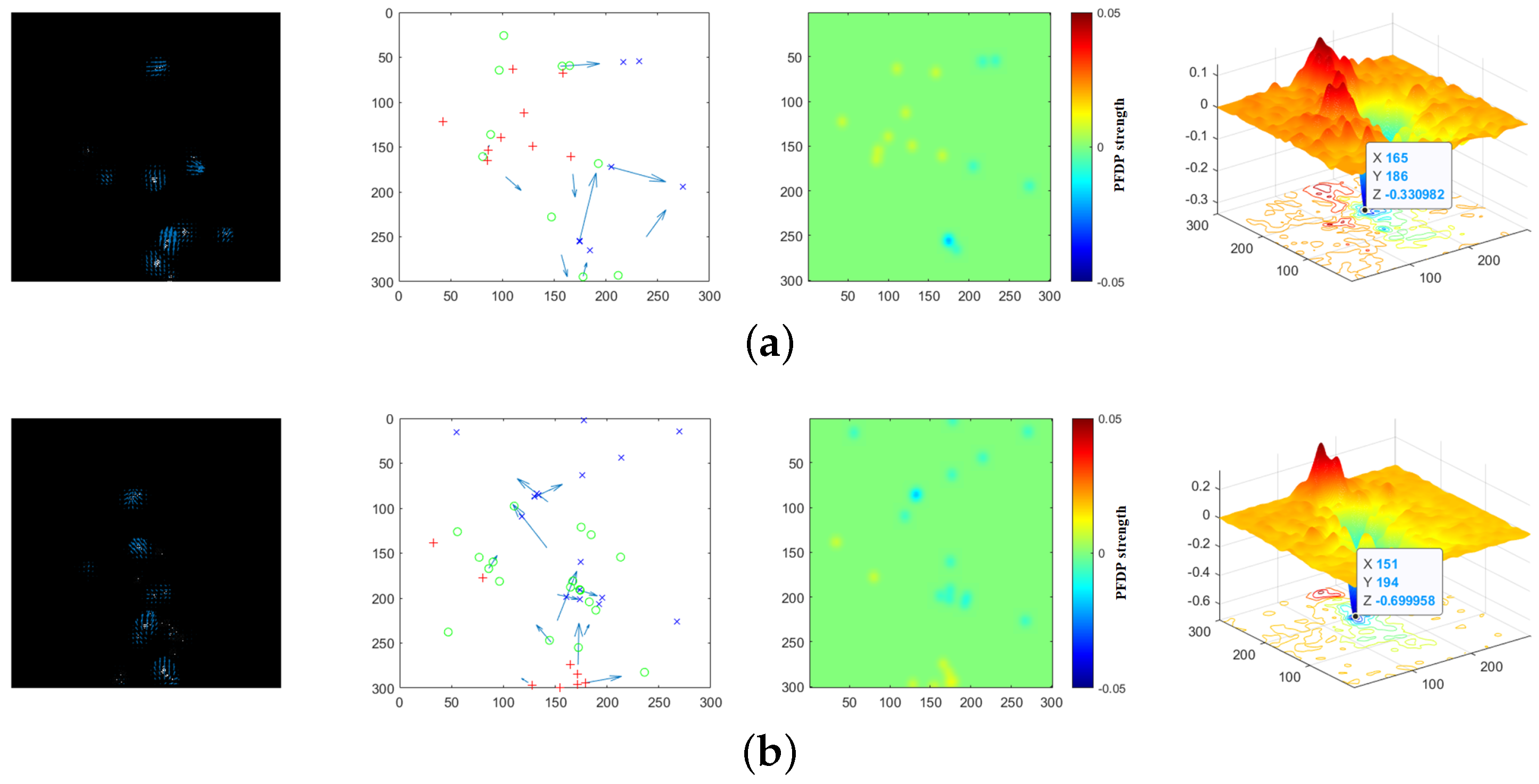

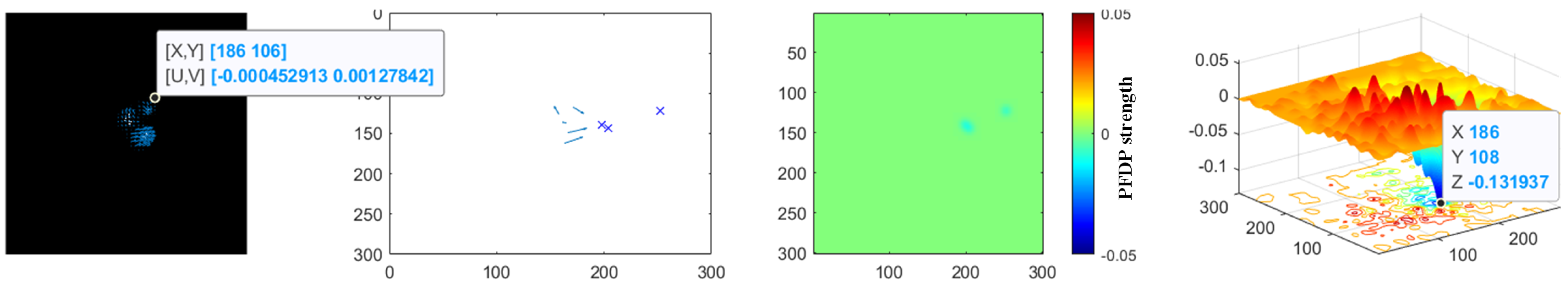

- PFDP Matrix: Obtained from Equation (38), it describes the distribution of PFDP intensity within a single frame. The feature energy map generated using the PFDP matrix intuitively reflects the strength of the PFDP at various pixel coordinates at the current moment.

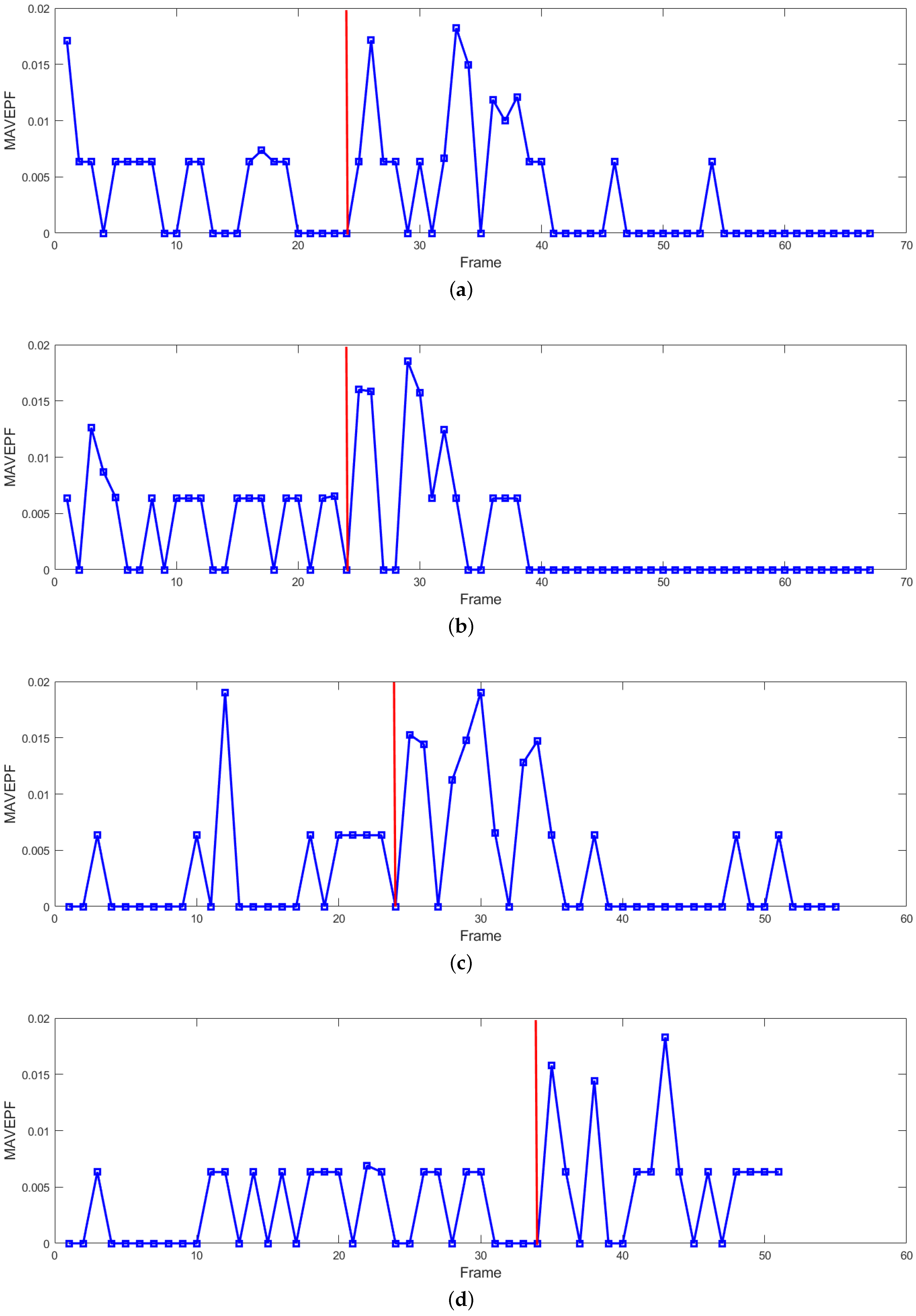

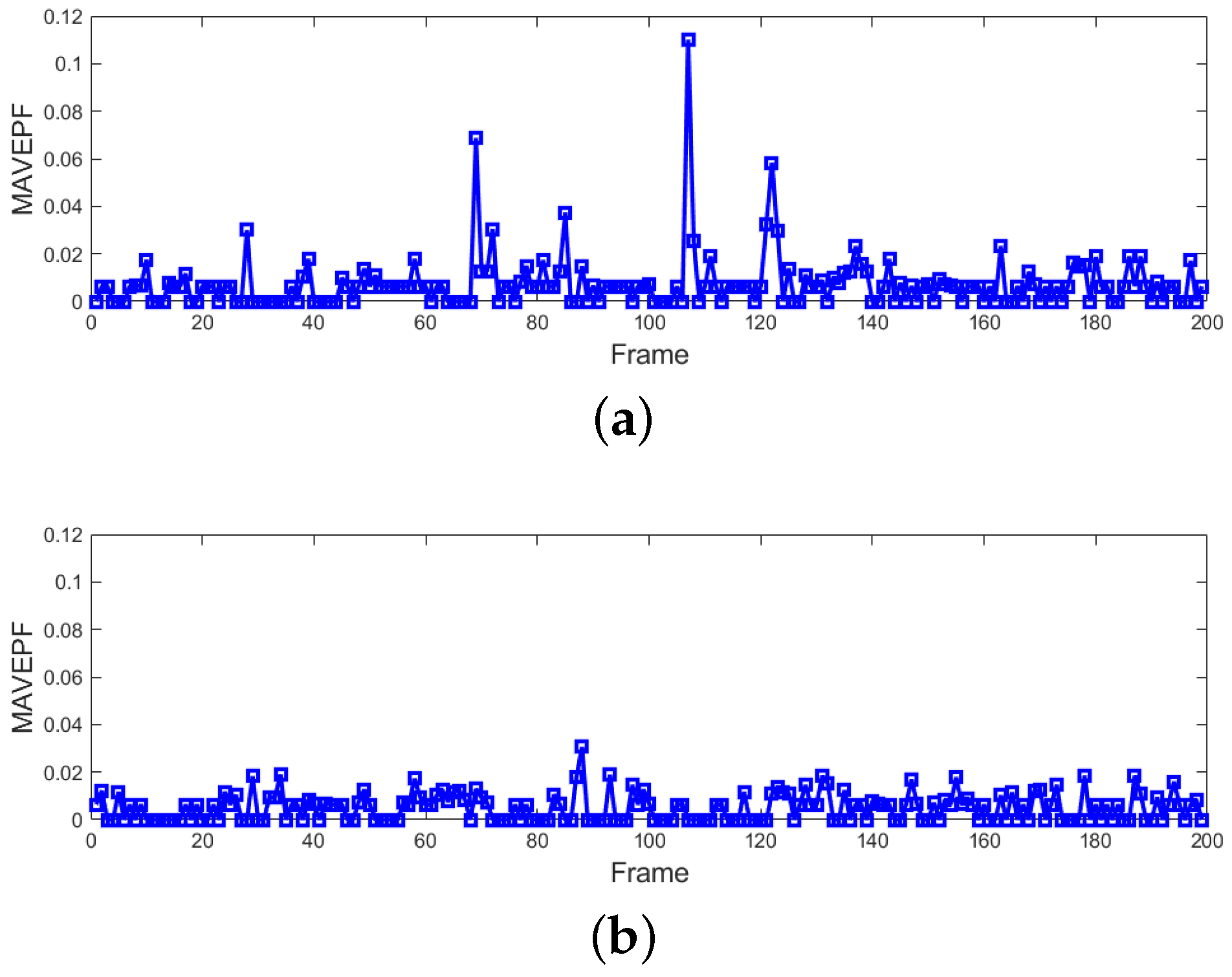

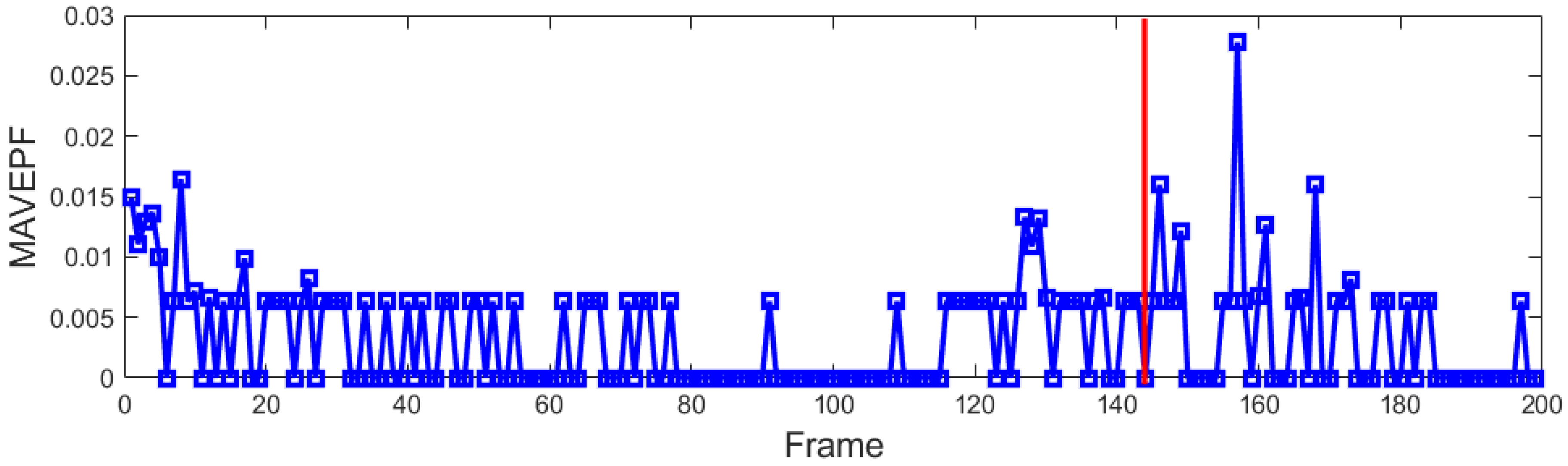

- The maximum absolute value of elements in PFDP per frame (MAVEPF): The MAVEPF curve effectively characterizes the variation in the state of crowd movement. It is important to note that due to the influence of different data sources, the extracted amount of RPF varies. Therefore, we do not define a fixed threshold for distinguishing anomalies in the MAVEPF curve. The MAVEPF curve can be used to observe the relative differences between frames in the same data source.

- Accumulated PFDP: It is obtained by accumulating continuous frames of the PFDP and can be presented in the form of a three-dimensional surface. The accumulation of the PFDP helps suppress the influence of random noise and balances the bias in event localization. The highest point on the PFDP surface represents the location with the strongest dispersion capacity in the current environment, while the lowest point indicates the location with the strongest absorption capacity. These points are considered the central locations most likely to experience diffusion or aggregation phenomena.
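
The three quantities above can be sketched in a few lines. The sketch assumes that a per-frame micro-source map (values +1, −1, or 0 per pixel) is already available; the function names `pfdp_matrix` and `analyze`, the odd window size `win`, and the zero padding at the borders are hypothetical choices rather than details from the paper.

```python
import numpy as np

def pfdp_matrix(ms_map, win=5):
    """Sum micro-source values in a win x win window centered on each pixel (win odd)."""
    pad = win // 2
    padded = np.pad(np.asarray(ms_map, dtype=float), pad, mode="constant")
    # Integral image with a leading zero row and column for O(1) window sums.
    ii = np.pad(padded, ((1, 0), (1, 0)), mode="constant").cumsum(0).cumsum(1)
    h, w = np.shape(ms_map)
    return (ii[win:win + h, win:win + w] - ii[:h, win:win + w]
            - ii[win:win + h, :w] + ii[:h, :w])

def analyze(pfdp_frames):
    """Return the MAVEPF curve, the accumulated PFDP, and its highest and lowest points."""
    stack = np.stack(pfdp_frames)
    mavepf = np.abs(stack).max(axis=(1, 2))      # one value per frame
    acc = stack.sum(axis=0)                      # accumulated PFDP surface
    source = np.unravel_index(np.argmax(acc), acc.shape)   # strongest dispersion
    sink = np.unravel_index(np.argmin(acc), acc.shape)     # strongest absorption
    return mavepf, acc, source, sink
```

Because the MAVEPF depends on how many particles each data source yields, the curve is best read relative to other frames of the same recording rather than against a fixed threshold.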

Algorithm 1: RPF to PFDP based on MS

4. Experiments and Analysis

4.1. Experimental Setup

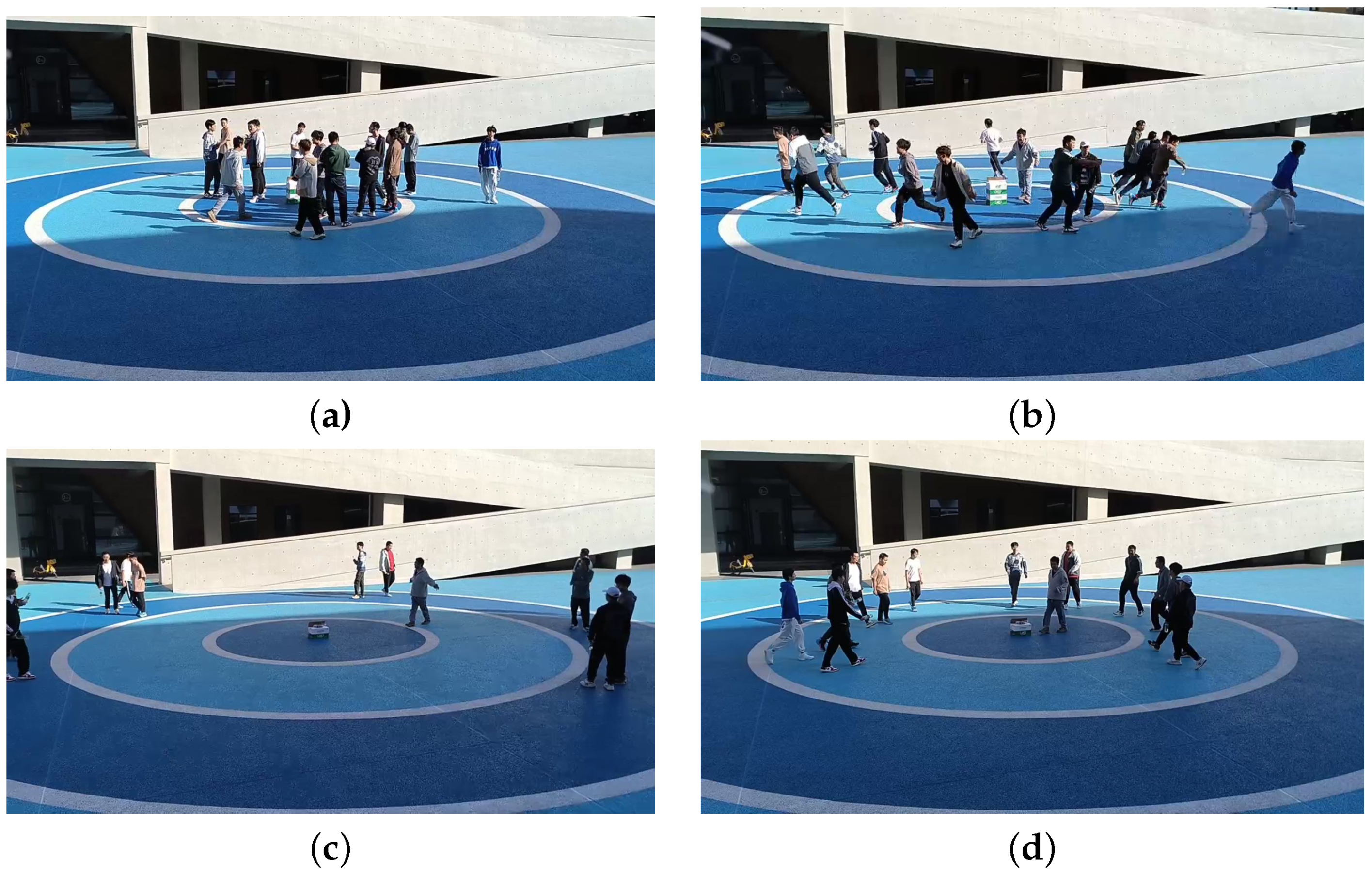

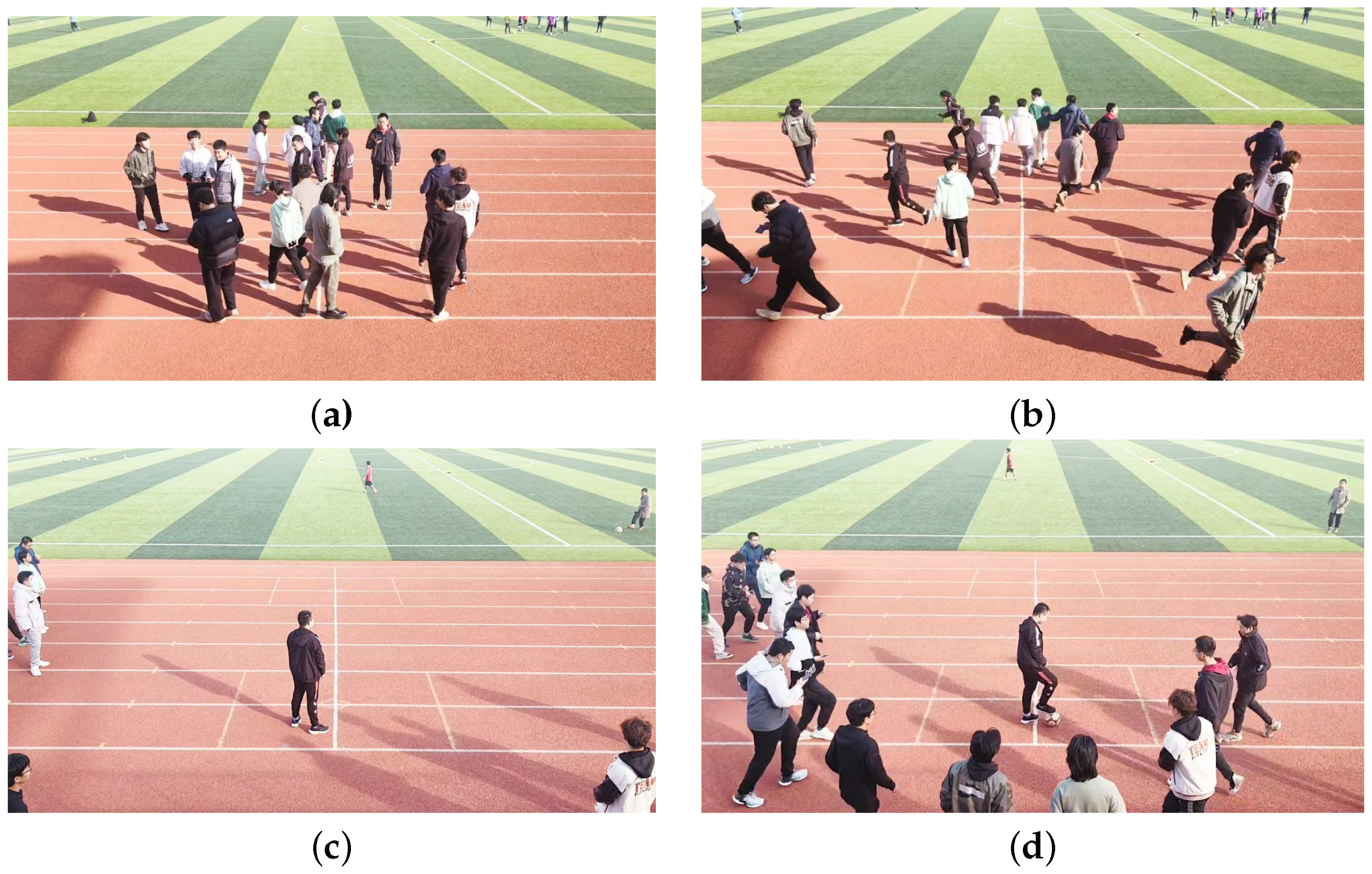

4.2. Sudden Diffusion and Aggregation

4.3. Normal Pedestrian Flow

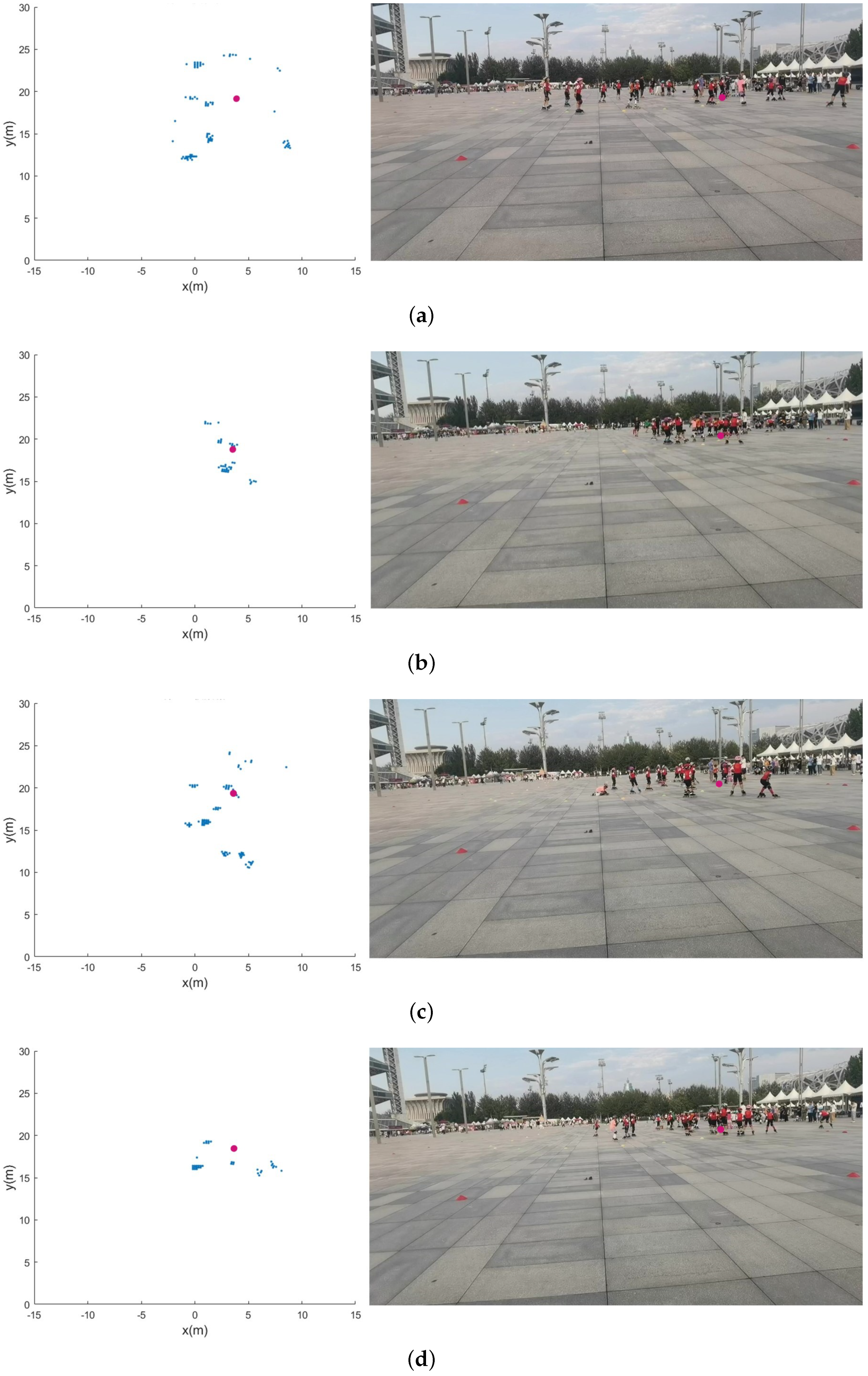

4.4. A Random Scene of Roller Skating Training Class

4.5. Algorithm Performance Analysis

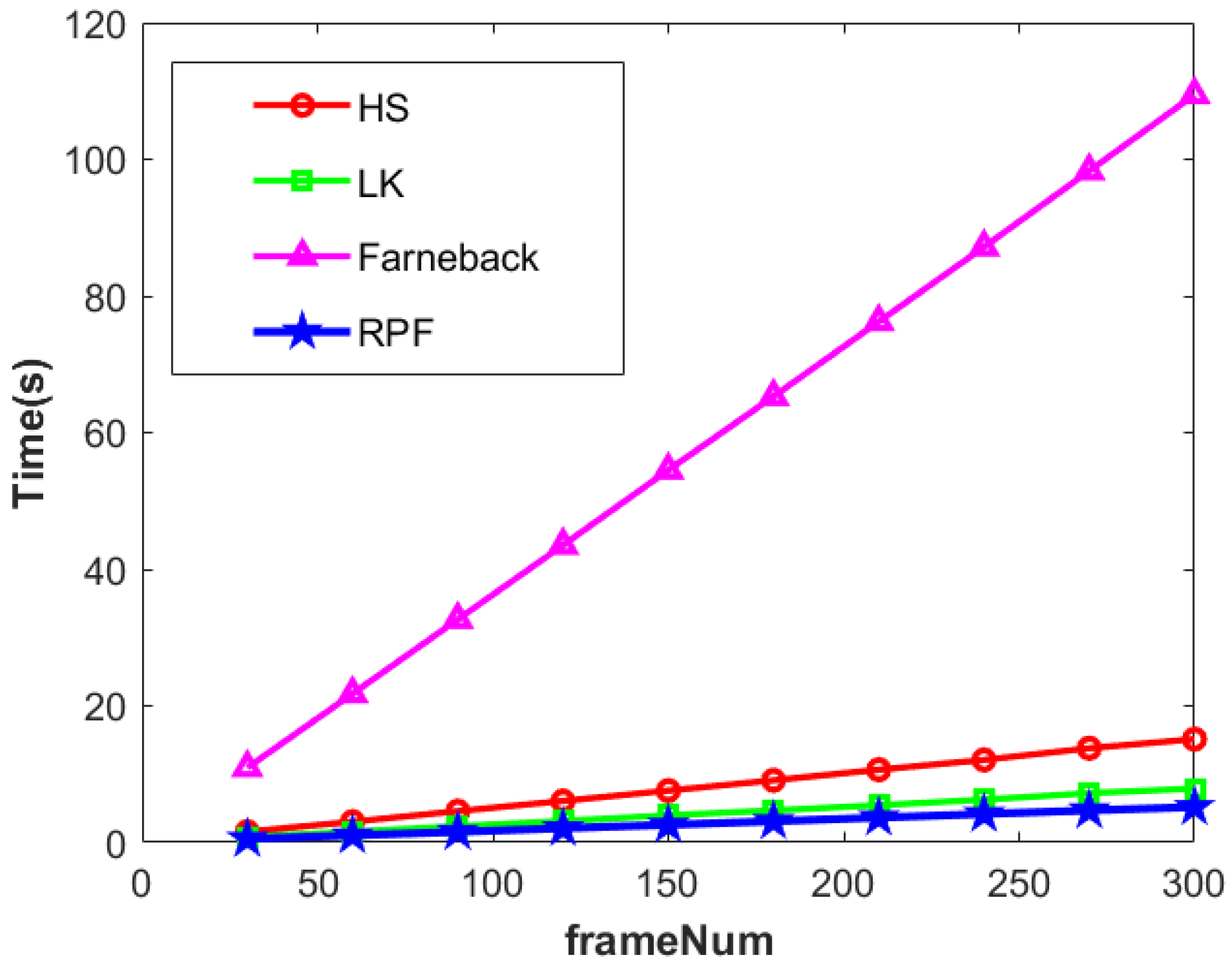

4.5.1. Performance Comparison of Extracting Crowd Movement Information

4.5.2. Performance Comparison in Detecting Crowd Anomalies

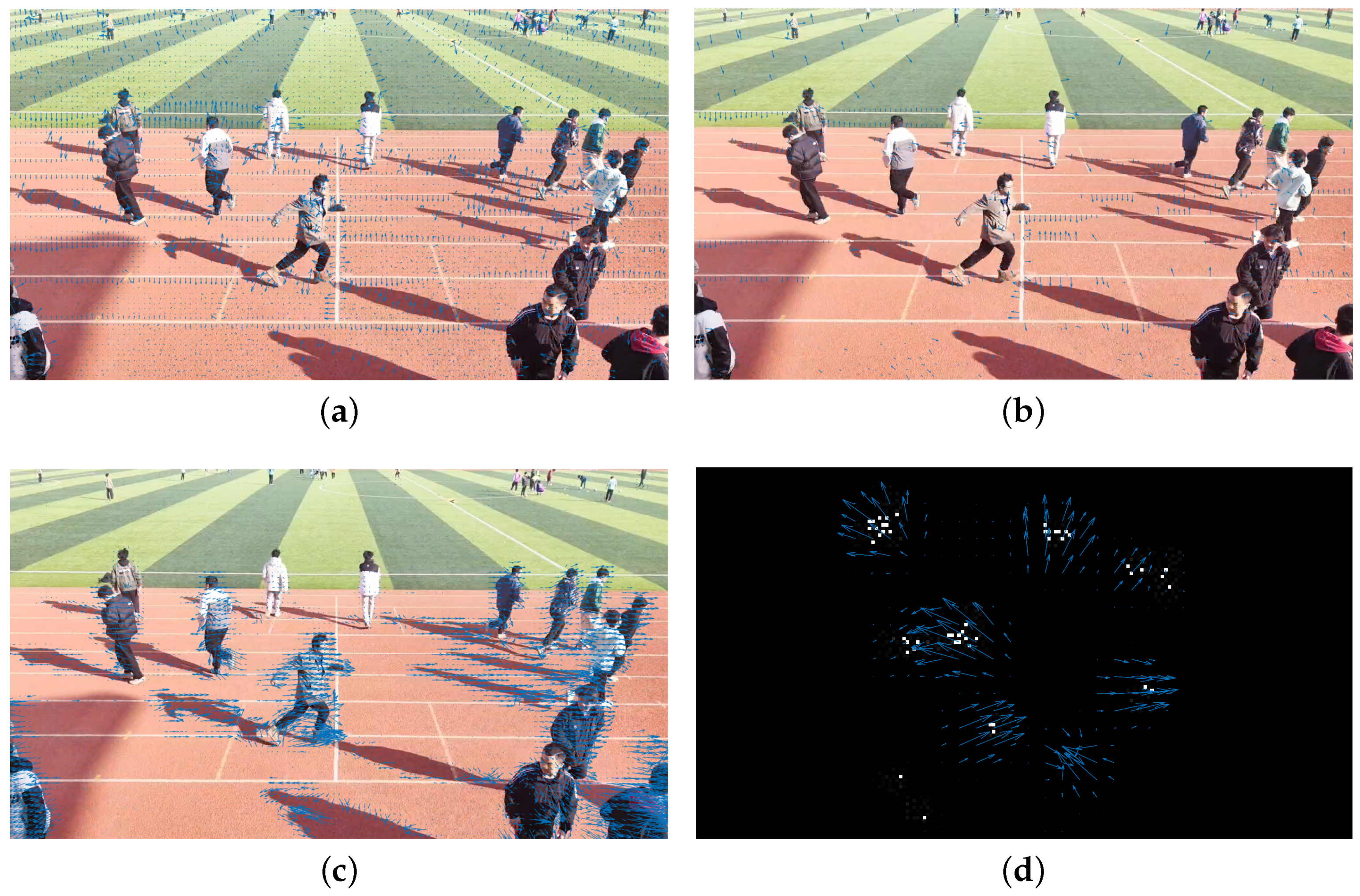

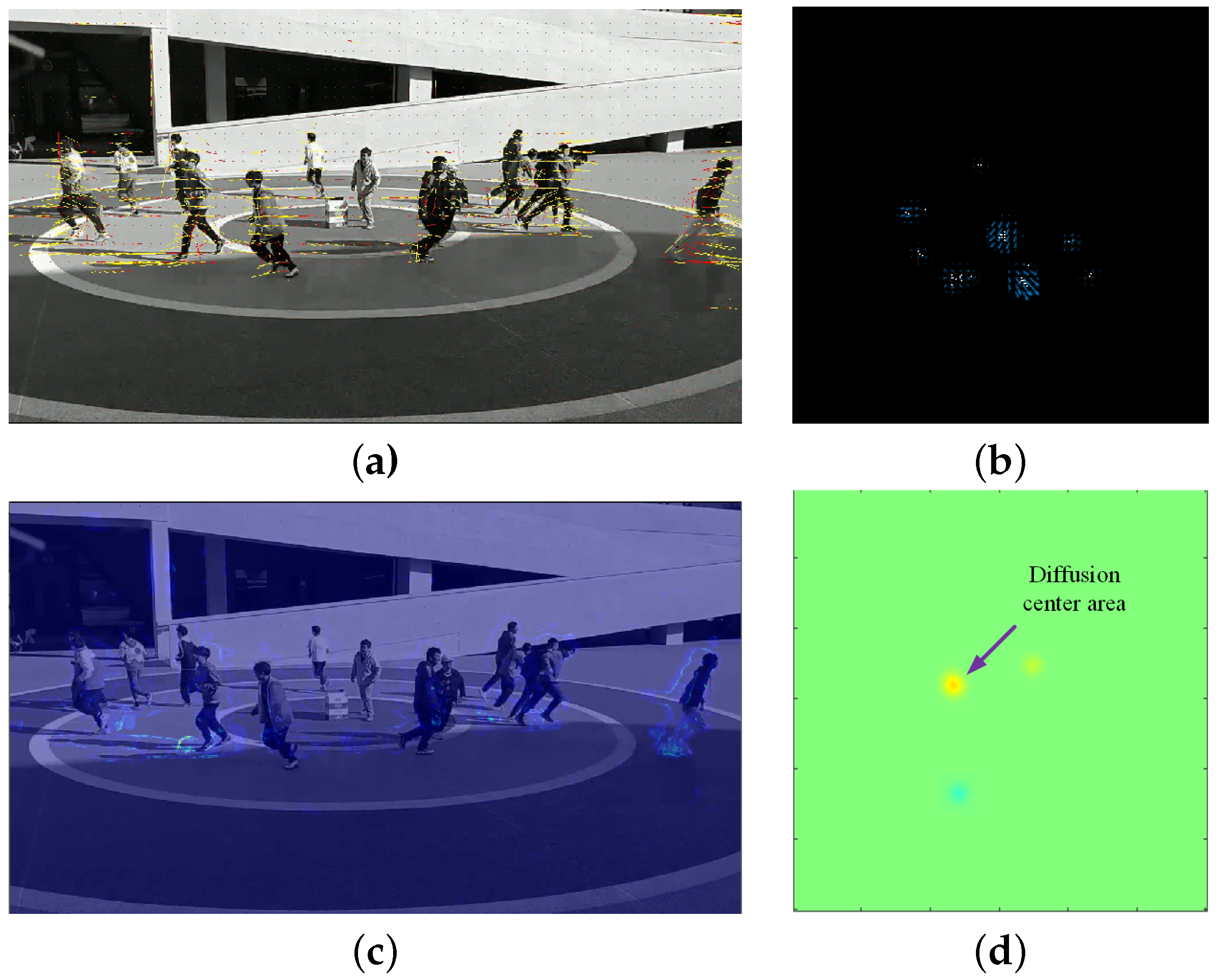

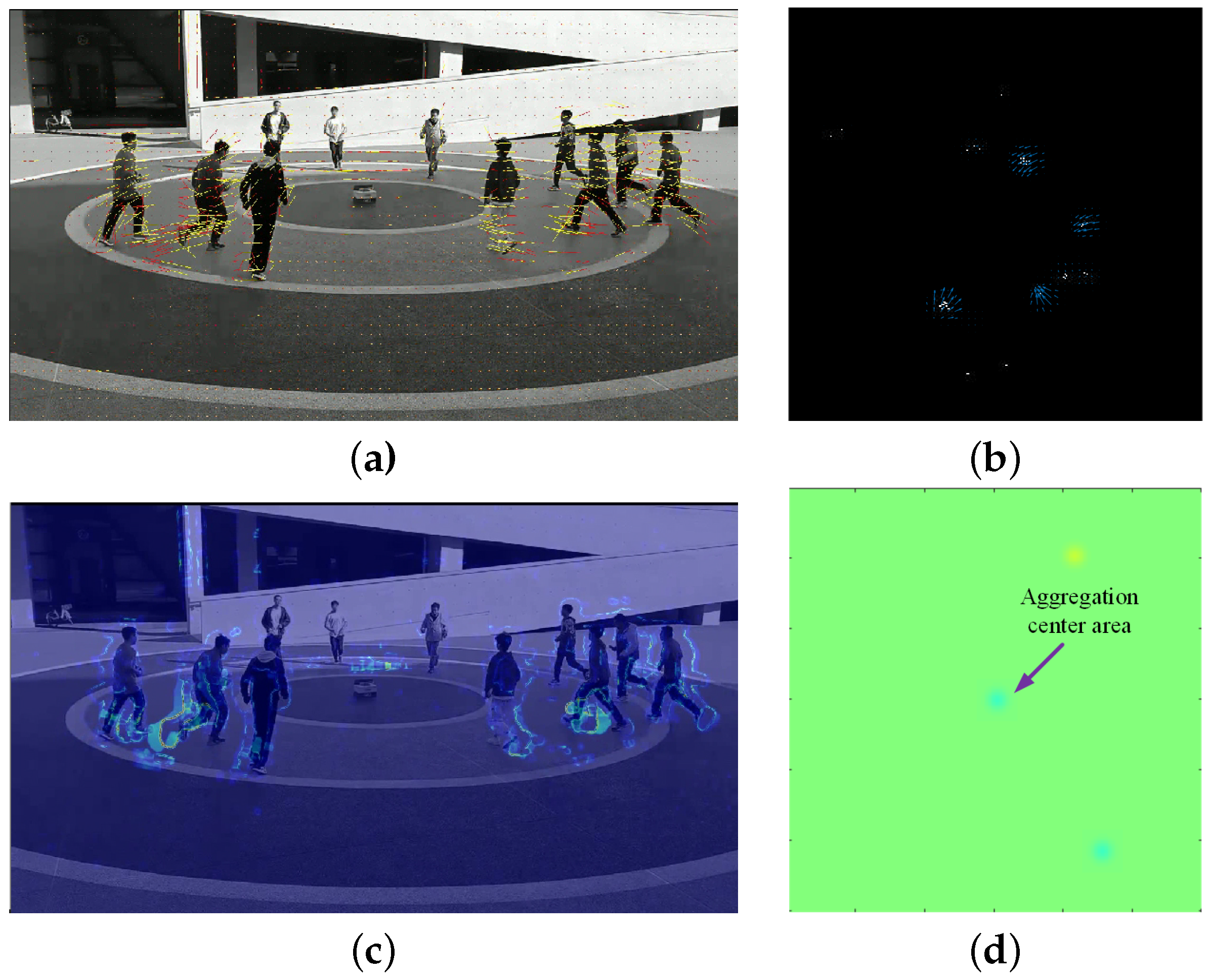

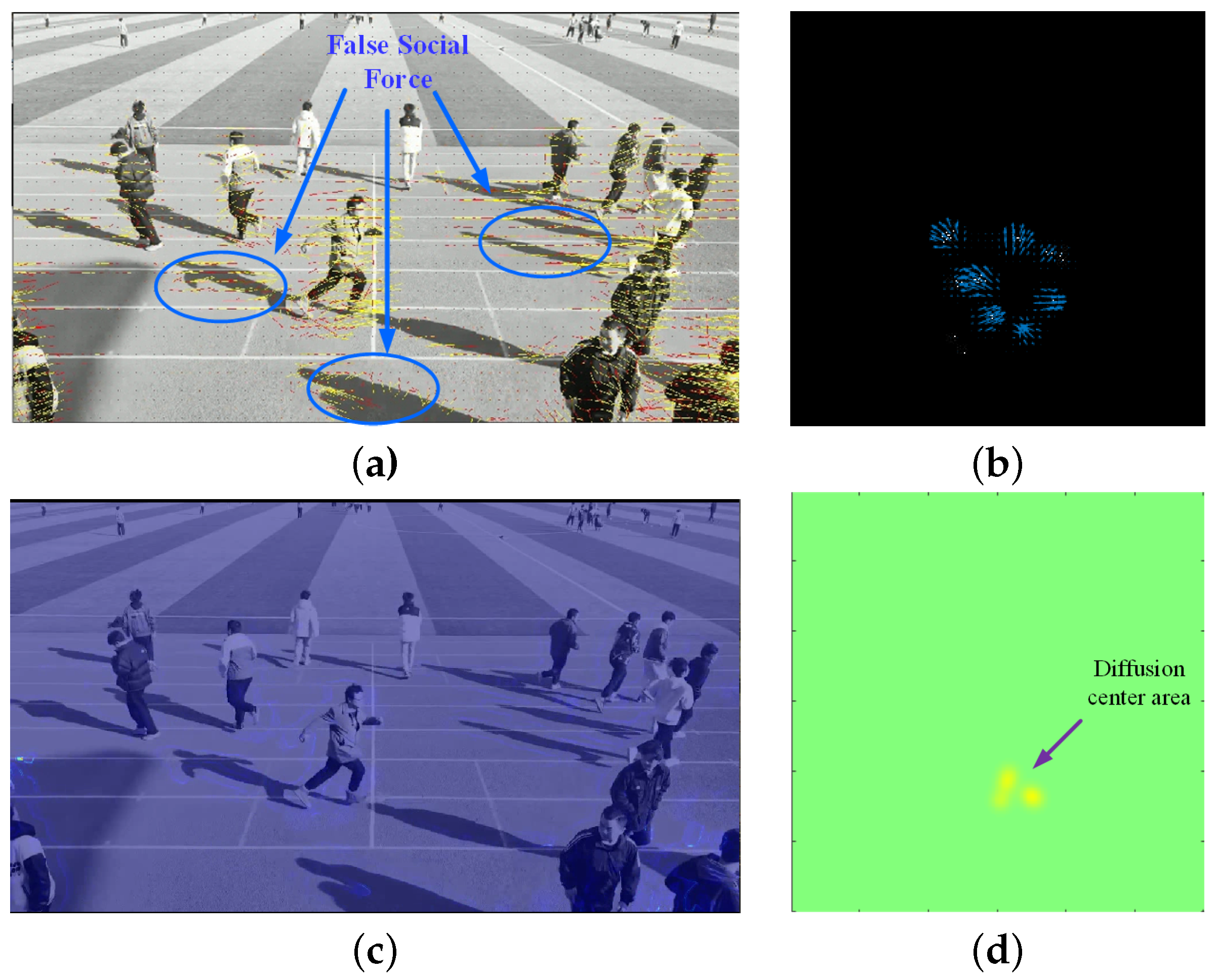

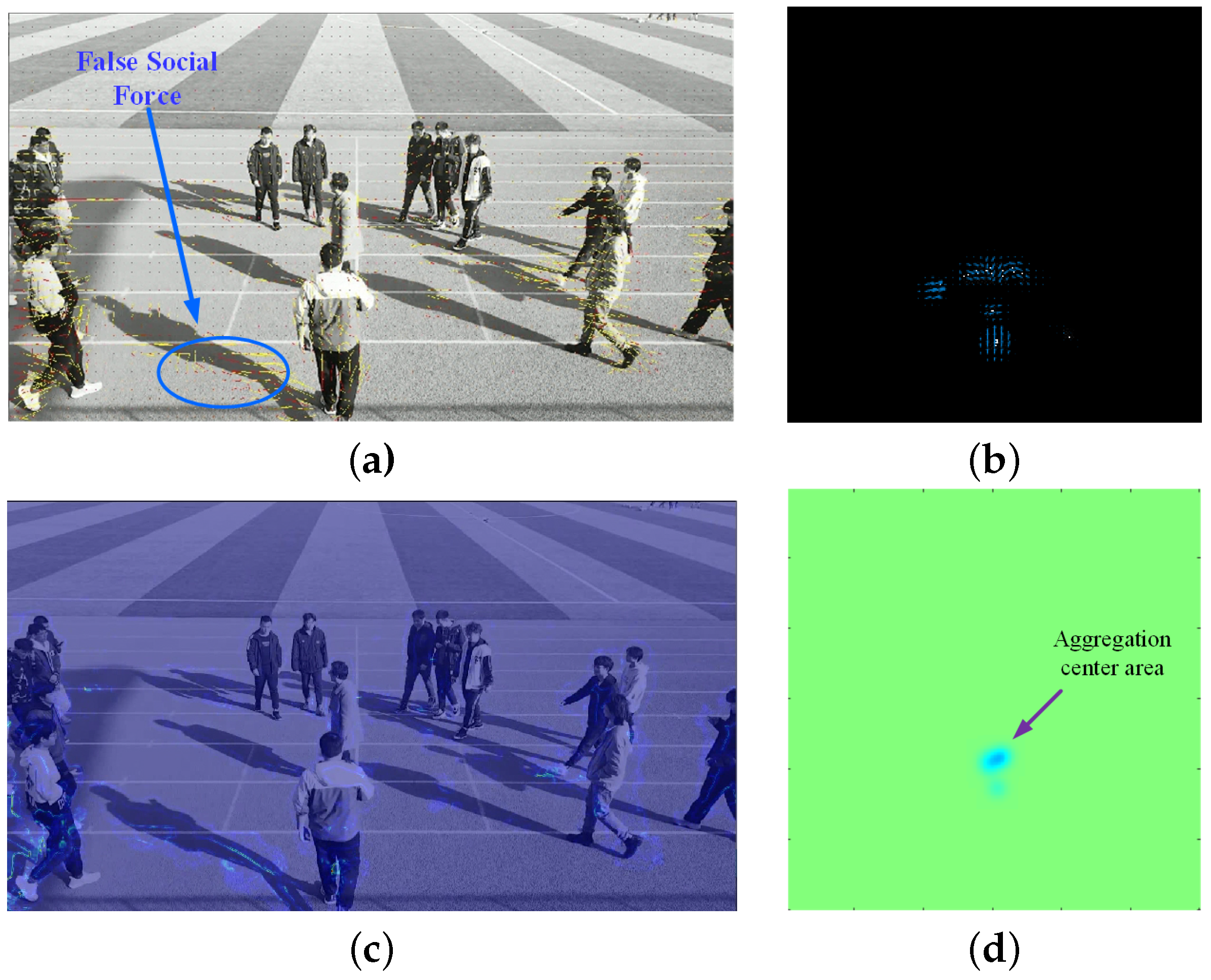

- Panel (a) of Figures 23–26 demonstrates the effectiveness of extracting optical flow from video data using the SFM method: the yellow lines represent the flow direction of the targets in the video. Panel (b) shows that the proposed method also detected the flow direction of the targets from radar data. Compared to the video method, RPF also provided positional and directional information on a two-dimensional plane, thereby offering a more realistic representation of the target's flow direction.

- Panel (c) of Figures 23–26 shows that the SFM focused on the intersection area of dense flows. In contrast, the proposed method was more inclined toward detecting the source of crowd movement or the center of arrival. For instance, in Figure 23d and Figure 25d, the yellow region represents the center position from which the crowd disperses during diffusion, while the blue region in Figure 24d and Figure 26d indicates the center position where the crowd gathers during aggregation.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Li, T.; Chang, H.; Wang, M.; Ni, B.; Hong, R.; Yan, S. Crowded Scene Analysis: A Survey. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 367–386.

2. Sinha, A.; Padhi, S.; Shikalgar, S. A Survey and Analysis of Crowd Anomaly Detection Techniques. In Proceedings of the 2021 Third International Conference on Intelligent Communication Technologies and Virtual Mobile Networks (ICICV), Tirunelveli, India, 4–6 February 2021; pp. 1500–1504.

3. Madan, N.; Ristea, N.C.; Ionescu, R.T.; Nasrollahi, K.; Khan, F.S.; Moeslund, T.B.; Shah, M. Self-Supervised Masked Convolutional Transformer Block for Anomaly Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 525–542.

4. Falcon-Caro, A.; Sanei, S. Diffusion Adaptation for Crowd Analysis. In Proceedings of the 2021 International Conference on e-Health and Bioengineering (EHB), Iasi, Romania, 18–19 November 2021; pp. 1–4.

5. Tomar, A.; Kumar, S.; Pant, B. Crowd Analysis in Video Surveillance: A Review. In Proceedings of the 2022 International Conference on Decision Aid Sciences and Applications (DASA), Chiangrai, Thailand, 23–25 March 2022; pp. 162–168.

6. Priya, S.; Minu, R. Abnormal Activity Detection Techniques in Intelligent Video Surveillance: A Survey. In Proceedings of the 2023 7th International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 11–13 April 2023; pp. 1608–1613.

7. Sun, J.; Li, Y.; Chai, L.; Lu, C. Modality Exploration, Retrieval and Adaptation for Trajectory Prediction. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 15051–15064.

8. Altowairqi, S.; Luo, S.; Greer, P. A Review of the Recent Progress on Crowd Anomaly Detection. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 659–669.

9. Tripathi, G.; Singh, K.; Vishwakarma, D.K. Convolutional neural networks for crowd behaviour analysis: A survey. Vis. Comput. 2019, 35, 753–776.

10. Zhang, X.; Yu, Q.; Yu, H. Physics inspired methods for crowd video surveillance and analysis: A survey. IEEE Access 2018, 6, 66816–66830.

11. Direkoglu, C. Abnormal Crowd Behavior Detection Using Motion Information Images and Convolutional Neural Networks. IEEE Access 2020, 8, 80408–80416.

12. Cai, R.; Zhang, H.; Liu, W.; Gao, S.; Hao, Z. Appearance-Motion Memory Consistency Network for Video Anomaly Detection. Proc. AAAI Conf. Artif. Intell. 2021, 35, 938–946.

13. Ganokratanaa, T.; Aramvith, S.; Sebe, N. Unsupervised Anomaly Detection and Localization Based on Deep Spatiotemporal Translation Network. IEEE Access 2020, 8, 50312–50329.

14. Colque, R.V.H.M.; Júnior, C.A.C.; Schwartz, W.R. Histograms of Optical Flow Orientation and Magnitude to Detect Anomalous Events in Videos. In Proceedings of the 2015 28th SIBGRAPI Conference on Graphics, Patterns and Images, Salvador, Brazil, 26–29 August 2015; pp. 126–133.

15. Zhang, L.; Han, J. Recognition of Abnormal Behavior of Crowd based on Spatial Location Feature. In Proceedings of the 2020 IEEE 9th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 11–13 December 2020; Volume 9, pp. 736–741.

16. Chondro, P.; Liu, C.Y.; Chen, C.Y.; Ruan, S.J. Detecting Abnormal Massive Crowd Flows: Characterizing Fleeing En Masse by Analyzing the Acceleration of Object Vectors. IEEE Consum. Electron. Mag. 2019, 8, 32–37.

17. Wang, Q.; Gao, J.; Lin, W.; Li, X. NWPU-Crowd: A Large-Scale Benchmark for Crowd Counting and Localization. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 2141–2149.

18. Solmaz, B.; Moore, B.E.; Shah, M. Identifying behaviors in crowd scenes using stability analysis for dynamical systems. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2064–2070.

19. Wu, S.; Yang, H.; Zheng, S.; Su, H.; Fan, Y.; Yang, M.H. Crowd behavior analysis via curl and divergence of motion trajectories. Int. J. Comput. Vis. 2017, 123, 499–519.

20. Xu, M.; Li, C.; Lv, P.; Lin, N.; Hou, R.; Zhou, B. An efficient method of crowd aggregation computation in public areas. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 2814–2825.

21. Afonso, M.; Nascimento, J. Predictive multiple motion fields for trajectory completion: Application to surveillance systems. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 2547–2551.

22. Guendel, R.G.; Ullmann, I.; Fioranelli, F.; Yarovoy, A. Continuous People Crowd Monitoring defined as a Regression Problem using Radar Networks. In Proceedings of the 2023 20th European Radar Conference (EuRAD), Berlin, Germany, 20–22 September 2023; pp. 294–297.

23. Lobanova, V.; Bezdetnyy, D.; Anishchenko, L. Human Activity Recognition Based on Radar and Video Surveillance Sensor Fusion. In Proceedings of the 2023 IEEE Ural-Siberian Conference on Biomedical Engineering, Radioelectronics and Information Technology (USBEREIT), Yekaterinburg, Russia, 15–17 May 2023; pp. 25–28.

24. Zhang, R.; Cao, S. Real-time human motion behavior detection via CNN using mmWave radar. IEEE Sens. Lett. 2018, 3, 3500104.

25. Liu, Y.; Chang, S.; Wei, Z.; Zhang, K.; Feng, Z. Fusing mmWave Radar With Camera for 3-D Detection in Autonomous Driving. IEEE Internet Things J. 2022, 9, 20408–20421.

26. Kim, Y.; Choi, J.W.; Kum, D. GRIF Net: Gated Region of Interest Fusion Network for Robust 3D Object Detection from Radar Point Cloud and Monocular Image. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 10857–10864.

27. Horn, B.K.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203.

28. Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the IJCAI’81: 7th International Joint Conference on Artificial Intelligence, Vancouver, BC, Canada, 24–28 August 1981; Volume 2, pp. 674–679.

29. Farnebäck, G. Two-frame motion estimation based on polynomial expansion. In Proceedings of the Image Analysis: 13th Scandinavian Conference, SCIA 2003, Halmstad, Sweden, 29 June–2 July 2003; pp. 363–370.

30. Cao, L.; Zhang, X.; Wang, T.; Du, K.; Fu, C. An adaptive ellipse distance density peak fuzzy clustering algorithm based on the multi-target traffic radar. Sensors 2020, 20, 4920.

31. Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; Volume 15, pp. 10–5244.

32. Siegemund, J.; Schugk, D. Vehicule Based Method of Object Tracking Using Kanade Lucas Tomasi (KLT) Methodology. US11321851B2, 3 May 2022.

33. Wagner, G.; Choset, H. Gaussian reconstruction of swarm behavior from partial data. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 5864–5870.

34. Nemade, N.; Gohokar, V. Comparative performance analysis of optical flow algorithms for anomaly detection. In Proceedings of the International Conference on Communication and Information Processing (ICCIP), Chongqing, China, 15–17 November 2019.

35. Mehran, R.; Oyama, A.; Shah, M. Abnormal crowd behavior detection using social force model. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 935–942.

| Radar Parameter | Value |
|---|---|
| Transmit antennas | 2 |
| Receive antennas | 4 |
| Starting frequency | 77 GHz |
| Stop frequency | 80.2 GHz |
| Bandwidth | 3.2 GHz |
| Frequency slope | 100 MHz/µs |
| Frame periodicity | 100 ms |
| Chirps per frame | 64 |
| Sampling rate | 6 Msps |
| Samples per chirp | 192 |
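
As a consistency check on the radar parameters, the sampled bandwidth and the resulting range resolution can be worked out from the slope, sampling rate, and samples per chirp. The sketch below uses the standard FMCW relation (range resolution = c / 2B); the variable names are illustrative, and the resolution is derived here rather than quoted from the paper.

```python
C = 299_792_458.0                  # speed of light, m/s

slope_hz_per_s = 100e6 / 1e-6      # frequency slope: 100 MHz per microsecond
sample_rate = 6e6                  # 6 Msps
samples_per_chirp = 192
frame_period_s = 0.100             # 100 ms

sampling_time = samples_per_chirp / sample_rate        # 32 microseconds
sampled_bandwidth = slope_hz_per_s * sampling_time     # about 3.2 GHz, matching the table
range_resolution = C / (2 * sampled_bandwidth)         # about 0.047 m
frame_rate = 1 / frame_period_s                        # 10 frames per second

print(f"bandwidth = {sampled_bandwidth / 1e9:.2f} GHz")
print(f"range resolution = {range_resolution * 100:.2f} cm")
print(f"frame rate = {frame_rate:.0f} Hz")
```

The sampled bandwidth agrees with the 77 GHz to 80.2 GHz sweep listed in the table, which suggests the ADC samples essentially the whole chirp.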

| Scene/Reference Point | Number of Experiments | Number of Correct PFDP Main Peaks | Accuracy of PFDP Main Peak | Mean Coordinates of Localization | MAE (m) |
|---|---|---|---|---|---|
| Diffusion 1/(150, 160) | 50 | 48 | 96% | (150.96, 150.94) | 1.7 |
| Aggregation 1/(150, 160) | 50 | 44 | 88% | (144.1, 161.78) | 1.9 |
| Diffusion 2/(150, 210) | 50 | 45 | 90% | (151.84, 208.84) | 1.42 |
| Aggregation 2/(150, 210) | 50 | 47 | 94% | (149.8, 214.14) | 1.34 |

| Parameter | Explanation | Value |
|---|---|---|
| NPL | Number of pyramid layers | 4 |
| NI | Number of iterations | 30 |
| NS | Neighborhood size | 9 |
| FS | Size of smoothing filter window | 25 |

| Method | Diffusion in Scene 1 | Aggregation in Scene 1 | Diffusion in Scene 2 | Aggregation in Scene 2 |
|---|---|---|---|---|
| SFM | 2875.6 s | 2850.3 s | 2905.8 s | 2864.2 s |
| Ours | 3.05 s | 3.02 s | 3.06 s | 3.02 s |
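
The gap between the two methods is easier to read as a ratio. The short sketch below only divides the SFM times by the times of the proposed method, using the values in the table; it assumes that each pair of entries was measured on the same data, and the dictionary names are illustrative.

```python
sfm_seconds = {
    "Diffusion, Scene 1": 2875.6,
    "Aggregation, Scene 1": 2850.3,
    "Diffusion, Scene 2": 2905.8,
    "Aggregation, Scene 2": 2864.2,
}
ours_seconds = {
    "Diffusion, Scene 1": 3.05,
    "Aggregation, Scene 1": 3.02,
    "Diffusion, Scene 2": 3.06,
    "Aggregation, Scene 2": 3.02,
}

# Ratio of SFM time to the time of the proposed method, per scene.
speedup = {k: sfm_seconds[k] / ours_seconds[k] for k in sfm_seconds}
for scene, ratio in speedup.items():
    print(f"{scene}: {ratio:.0f}x")
```

In all four scenes the ratio is between 940 and 950, that is, close to three orders of magnitude.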

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, L.; Cao, L.; Zhao, Z.; Wang, D.; Fu, C. A Crowd Movement Analysis Method Based on Radar Particle Flow. Sensors 2024, 24, 1899. https://doi.org/10.3390/s24061899
