STNet: A Time-Frequency Analysis-Based Intrusion Detection Network for Distributed Optical Fiber Acoustic Sensing Systems

Abstract

1. Introduction

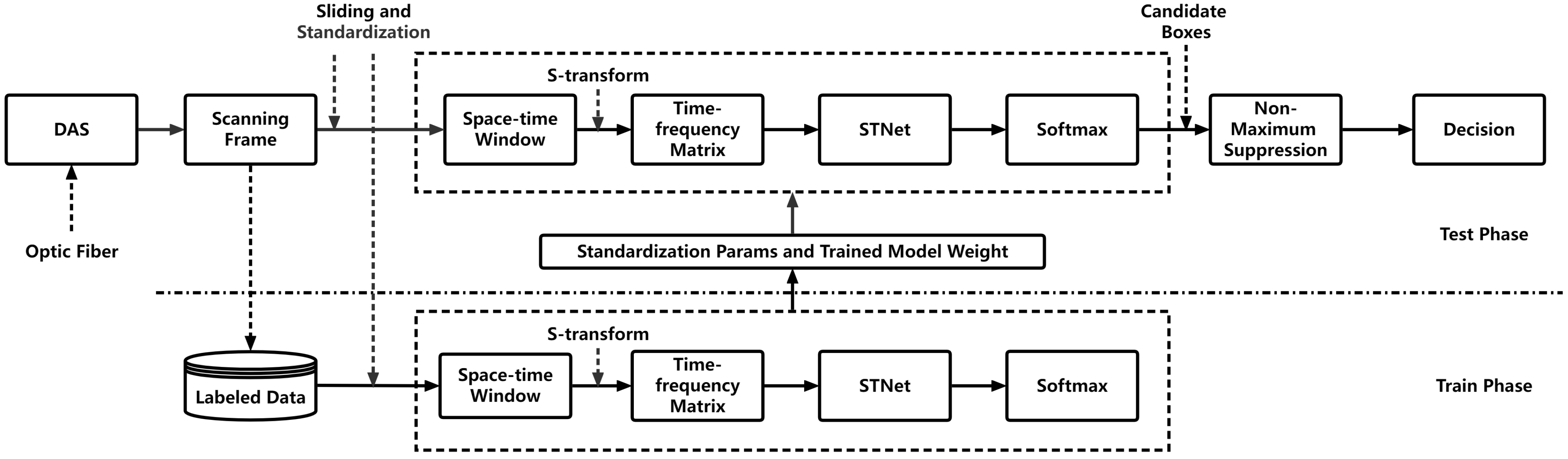

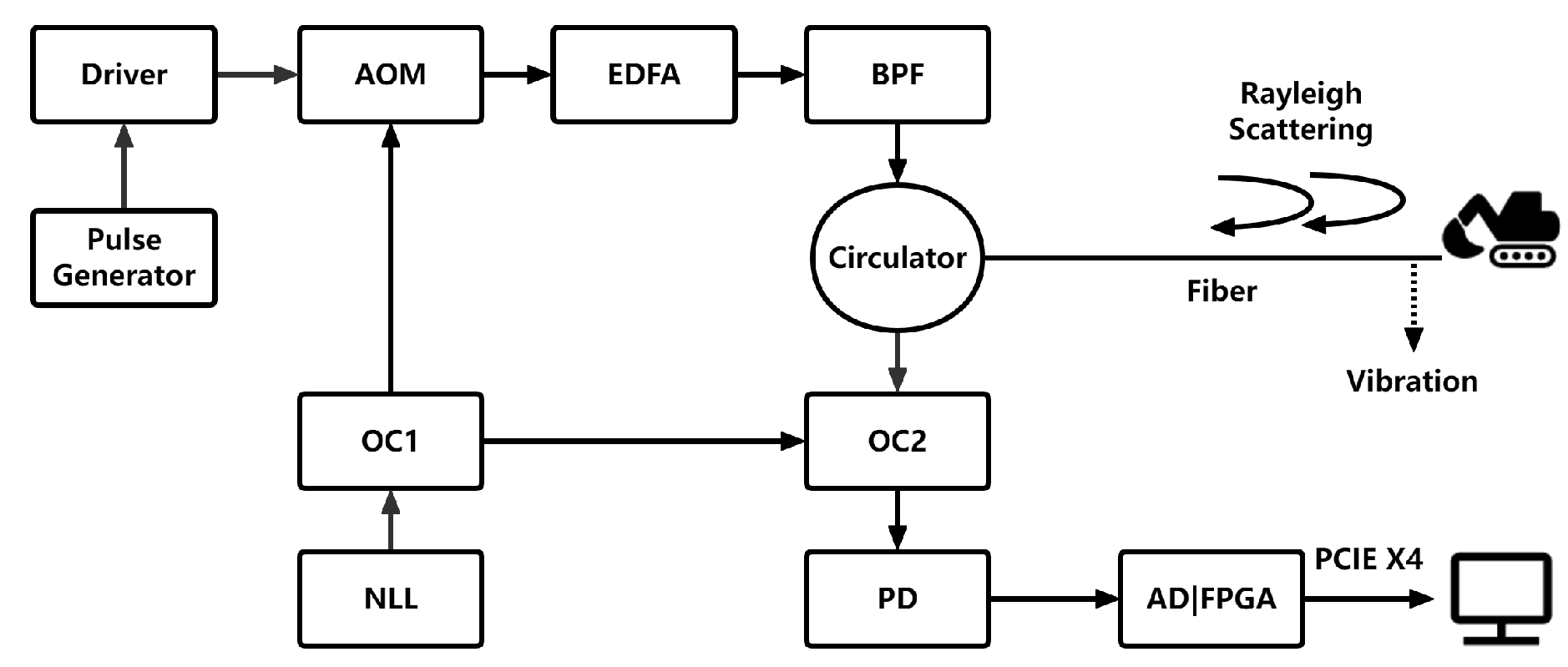

2. Principle of Intrusion Detection

2.1. Intrusion Detection System

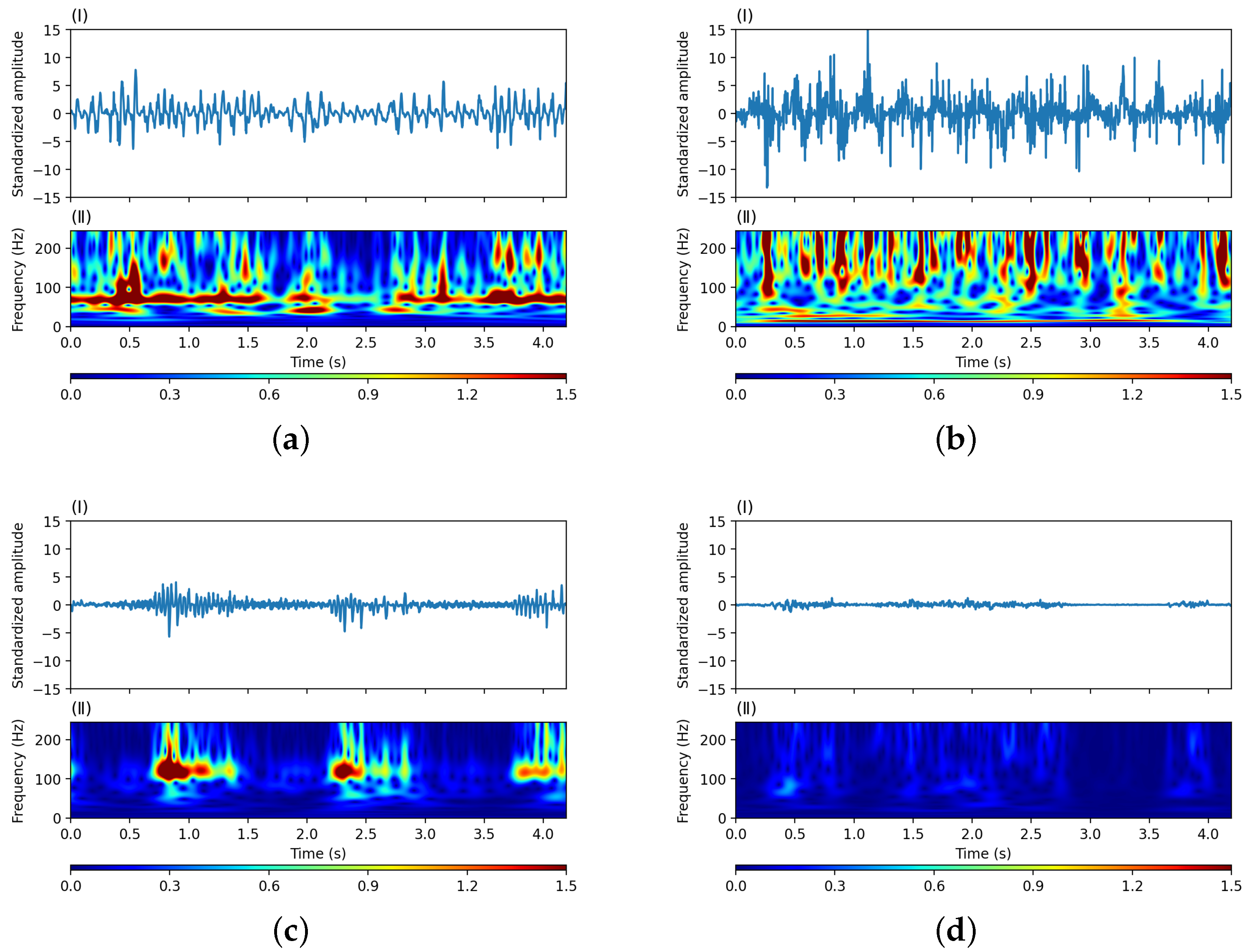

2.2. Time-Frequency Analysis and the Stockwell Transform

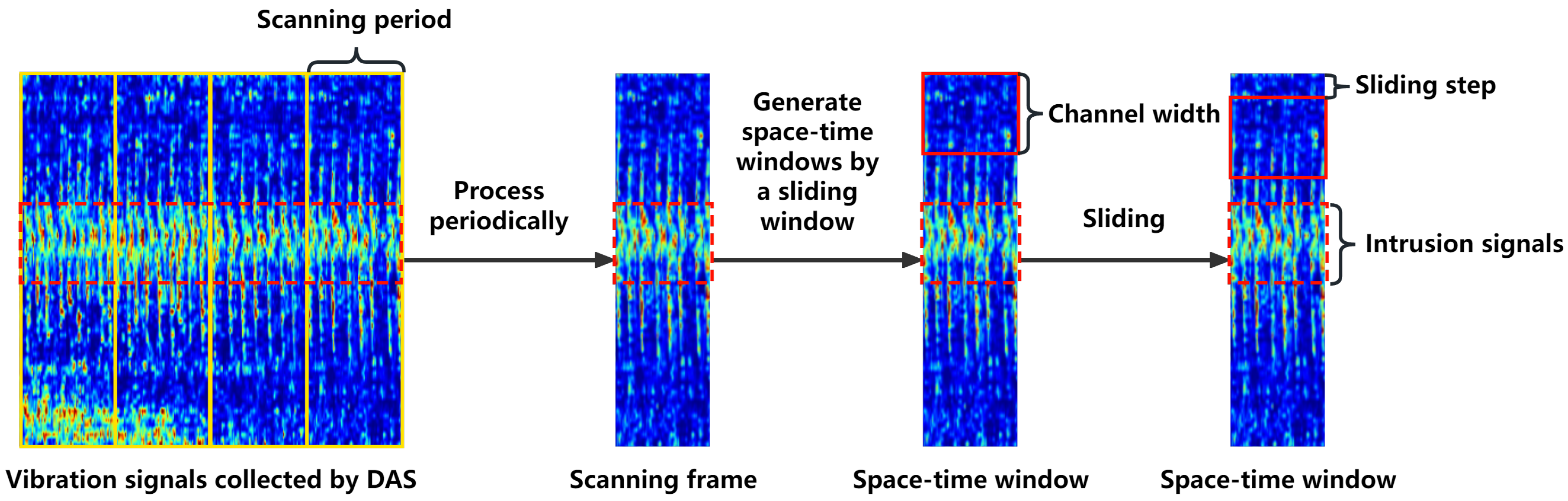
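As a hedged illustration of the Stockwell transform this section is built around, the sketch below computes the standard discrete S-transform in the frequency domain, S[n, j] = Σ_m H[m + n] · exp(−2π²m²/n²) · exp(i2πmj/N), where H is the DFT of the signal, n is the frequency index and j the time index. It is the textbook formulation, not necessarily the exact variant or normalization used by STNet. The function name `stockwell_transform` and the single-channel input are assumptions for illustration.

```python
import numpy as np


def stockwell_transform(x: np.ndarray) -> np.ndarray:
    """Discrete S-transform of a 1-D signal (textbook formulation, assumed here).

    Returns a complex array of shape (N // 2 + 1, N): rows are frequency bins
    0..N//2, columns are time samples.
    """
    x = np.asarray(x, dtype=float)
    n_samples = x.size
    spectrum = np.fft.fft(x)
    # Duplicate the spectrum so spectrum[m + n] wraps around without modular indexing.
    spectrum2 = np.concatenate([spectrum, spectrum])
    # Signed frequency offsets (0, 1, ..., -1) in FFT bin order, so the Gaussian is symmetric.
    offsets = np.fft.fftfreq(n_samples) * n_samples
    n_freqs = n_samples // 2 + 1
    s = np.empty((n_freqs, n_samples), dtype=complex)
    s[0, :] = x.mean()  # the zero-frequency row is defined as the signal mean
    for n in range(1, n_freqs):
        # Frequency-domain Gaussian whose width grows with n,
        # i.e. a time window that narrows at higher frequencies.
        gauss = np.exp(-2.0 * np.pi**2 * offsets**2 / n**2)
        s[n, :] = np.fft.ifft(spectrum2[n:n + n_samples] * gauss)
    return s


if __name__ == "__main__":
    t = np.arange(128)
    x = np.cos(2 * np.pi * 8 * t / 128)  # pure tone in frequency bin 8
    s = stockwell_transform(x)
    print("dominant frequency bin:", int(np.argmax(np.abs(s).mean(axis=1))))
```

Summing each row of S over time recovers the corresponding Fourier coefficient, which is what makes the S-transform losslessly invertible; the magnitude |S| is the kind of time-frequency map a 2-D CNN can consume.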

2.3. Data Preprocessing

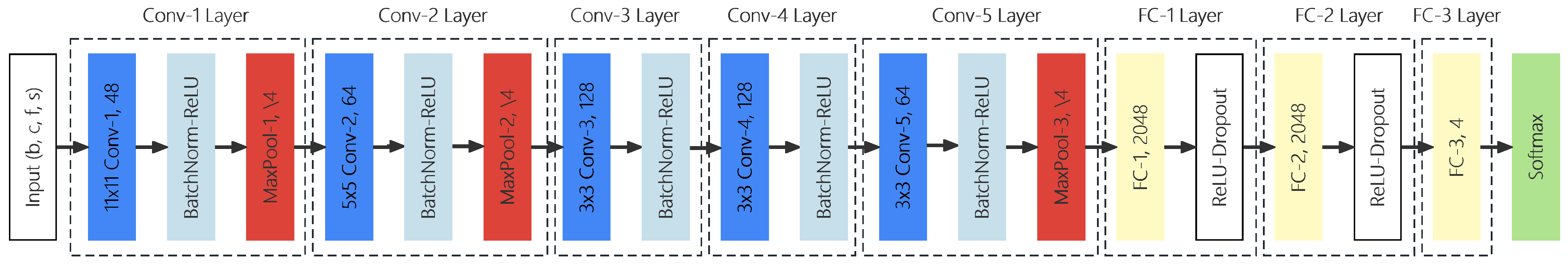

2.4. STNet

2.5. Non-Maximum Suppression

Algorithm 1. NMSA for intrusion detection

Input: …, …, …
1: …
2: repeat
3–6: …
7: for … in B do
8: if … then
9–10: …
11: end if
12: end for
13: until …
Output: R, C
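The loop structure of Algorithm 1 (a repeat loop over a candidate set B that returns retained boxes R and their classes C) resembles standard greedy non-maximum suppression [29]. A minimal sketch of that standard technique follows, assuming axis-aligned boxes in channel-time coordinates, one confidence score per box, class-agnostic suppression, and an IoU threshold of 0.5; none of these choices, nor the names `iou` and `greedy_nms`, come from the paper.

```python
from typing import List, Sequence, Tuple

# Hypothetical box format: (channel_start, channel_end, time_start, time_end)
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes in channel-time space."""
    ch_overlap = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    t_overlap = max(0.0, min(a[3], b[3]) - max(a[2], b[2]))
    inter = ch_overlap * t_overlap
    area_a = (a[1] - a[0]) * (a[3] - a[2])
    area_b = (b[1] - b[0]) * (b[3] - b[2])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: Sequence[Box], scores: Sequence[float], classes: Sequence[int],
               iou_threshold: float = 0.5) -> Tuple[List[Box], List[int]]:
    """Class-agnostic greedy NMS: returns the kept boxes R and their classes C."""
    remaining = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept_boxes: List[Box] = []
    kept_classes: List[int] = []
    while remaining:
        best = remaining.pop(0)  # highest-scoring candidate still in the set
        kept_boxes.append(boxes[best])
        kept_classes.append(classes[best])
        # Discard every remaining candidate that overlaps the selected box too strongly.
        remaining = [i for i in remaining if iou(boxes[best], boxes[i]) <= iou_threshold]
    return kept_boxes, kept_classes


if __name__ == "__main__":
    boxes = [(0, 10, 0, 100), (1, 11, 5, 105), (50, 60, 0, 100)]
    R, C = greedy_nms(boxes, scores=[0.9, 0.8, 0.7], classes=[1, 1, 2])
    print(R, C)
```

In the usage example the second box overlaps the first with IoU ≈ 0.75 and is suppressed, while the third box, on distant channels, is kept. A per-class variant would simply run the same loop separately for each class label.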

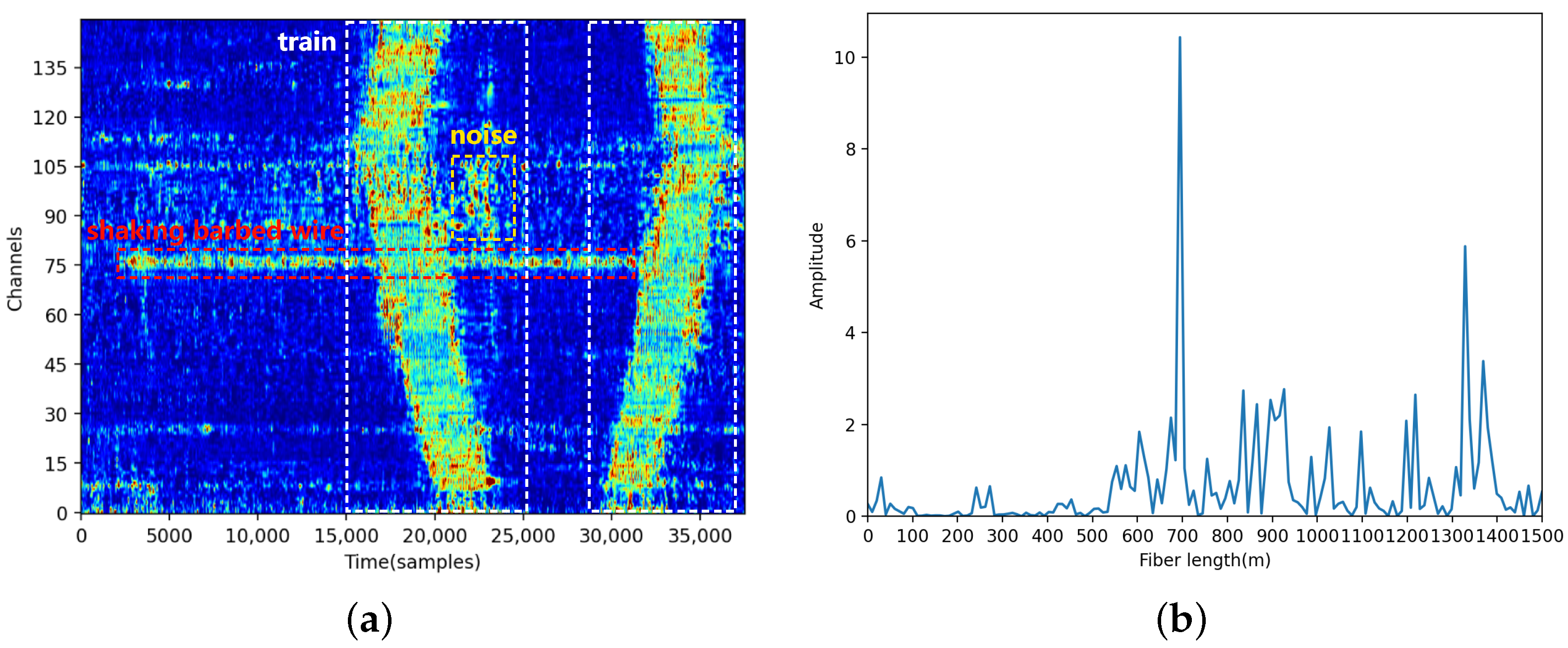

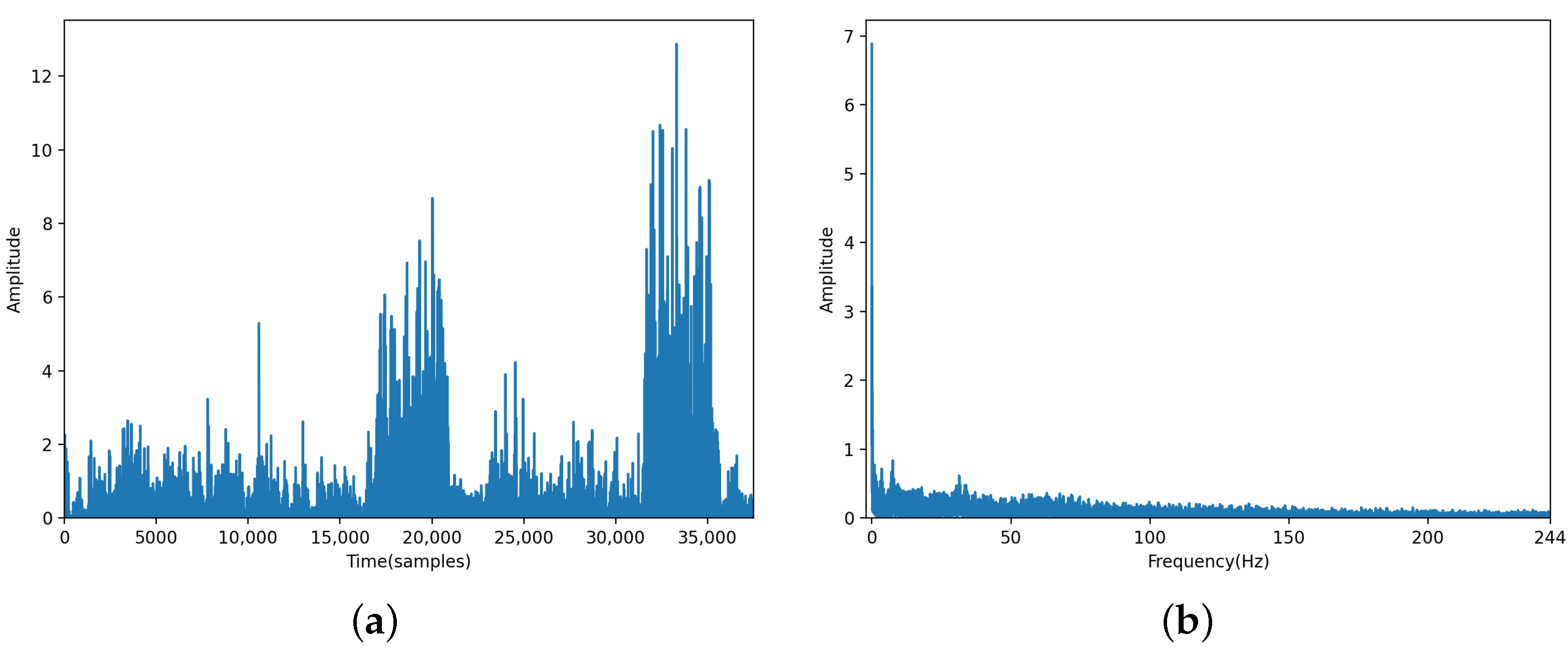

3. Experimental Procedure

3.1. Principle of the DAS System

3.2. Experimental Setup

- (1) Climbing the fence: A person climbed over the fence on the separation wall. This action lasted approximately 24 s on average.

- (2) Shaking the barbed wire: A person shook the barbed wire atop the isolation wall. This action lasted approximately 40 s on average.

- (3) Breaking the wall: A person hit the isolation wall with a hammer approximately once per second. This action lasted approximately 35 s on average.

- (4) Background: Background signals were captured in a relatively sunny environment near the sensing fiber and consisted of noise generated by passing trains and heavy trucks, as well as by other noise sources.

3.3. Dataset and Network Training

3.4. Evaluation Metrics

- TP corresponded to the situation in which the candidate box correctly overlapped the ground-truth box. (In the case of multiple overlapping candidate boxes, only one TP was counted.)

- FN corresponded to the case in which a ground-truth box existed in the scanning frame, but none of the candidate boxes hit it.

- FP corresponded to the case in which the candidate box did not intersect with any ground-truth box in the scanning frame.
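The three counting rules above can be sketched for a single scanning frame as follows. This is a minimal illustration, assuming boxes are given as (channel_start, channel_end, time_start, time_end) and that any positive-area intersection counts as a hit; the paper's word "correctly" may additionally require a class match, which this sketch does not check. The names `intersects` and `count_outcomes` are hypothetical.

```python
from typing import Sequence, Tuple

# Hypothetical box format: (channel_start, channel_end, time_start, time_end)
Box = Tuple[float, float, float, float]


def intersects(a: Box, b: Box) -> bool:
    """True if the two boxes share a region of positive area."""
    return a[0] < b[1] and b[0] < a[1] and a[2] < b[3] and b[2] < a[3]


def count_outcomes(candidates: Sequence[Box],
                   ground_truth: Sequence[Box]) -> Tuple[int, int, int]:
    """Return (TP, FN, FP) for one scanning frame under the rules above."""
    # TP: a ground-truth box hit by at least one candidate, counted once
    # no matter how many candidates overlap it.
    tp = sum(1 for g in ground_truth if any(intersects(c, g) for c in candidates))
    # FN: a ground-truth box that no candidate hits.
    fn = len(ground_truth) - tp
    # FP: a candidate that intersects no ground-truth box.
    fp = sum(1 for c in candidates if not any(intersects(c, g) for g in ground_truth))
    return tp, fn, fp


if __name__ == "__main__":
    gt = [(10, 20, 0, 100)]
    cands = [(12, 18, 10, 50), (15, 25, 40, 90), (40, 50, 0, 100)]
    print(count_outcomes(cands, gt))
```

Here two candidates overlap the single ground-truth box but contribute only one TP, and the third candidate, on non-overlapping channels, is one FP.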

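- (1) Intrusion detection rate (IDR): the fraction of ground-truth intrusion events that were detected, equivalent to recall:

$$\mathrm{IDR} = \frac{TP}{TP + FN}$$

- (2) False alarm rate (FAR): the ratio of the number of false alarms to the number of all triggered alarms, corresponding to 1 − precision:

$$\mathrm{FAR} = \frac{FP}{TP + FP}$$

- (3) F1 score: the harmonic mean of the IDR and 1 − FAR, for measuring the overall performance of a method:

$$F1 = \frac{2 \cdot \mathrm{IDR} \cdot (1 - \mathrm{FAR})}{\mathrm{IDR} + (1 - \mathrm{FAR})}$$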
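The three metrics reduce to a few lines of arithmetic. The sketch below, with hypothetical function names and the assumption that an empty denominator yields 0, can be used to check table entries: plugging in the reported STNet detection figures (IDR = 95.1%, FAR = 12.4%) gives F1 ≈ 0.912, consistent with the reported 91.2%.

```python
def intrusion_detection_rate(tp: int, fn: int) -> float:
    """IDR = TP / (TP + FN), i.e. recall (0.0 if there are no ground-truth events)."""
    return tp / (tp + fn) if tp + fn else 0.0


def false_alarm_rate(tp: int, fp: int) -> float:
    """FAR = FP / (TP + FP), i.e. 1 - precision (0.0 if no alarms were raised)."""
    return fp / (tp + fp) if tp + fp else 0.0


def f1_score(idr: float, far: float) -> float:
    """Harmonic mean of IDR and 1 - FAR."""
    precision = 1.0 - far
    denom = idr + precision
    return 2.0 * idr * precision / denom if denom else 0.0


if __name__ == "__main__":
    # Reported STNet intrusion-detection figures: IDR 95.1 %, FAR 12.4 %
    print(round(f1_score(0.951, 0.124), 3))
```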

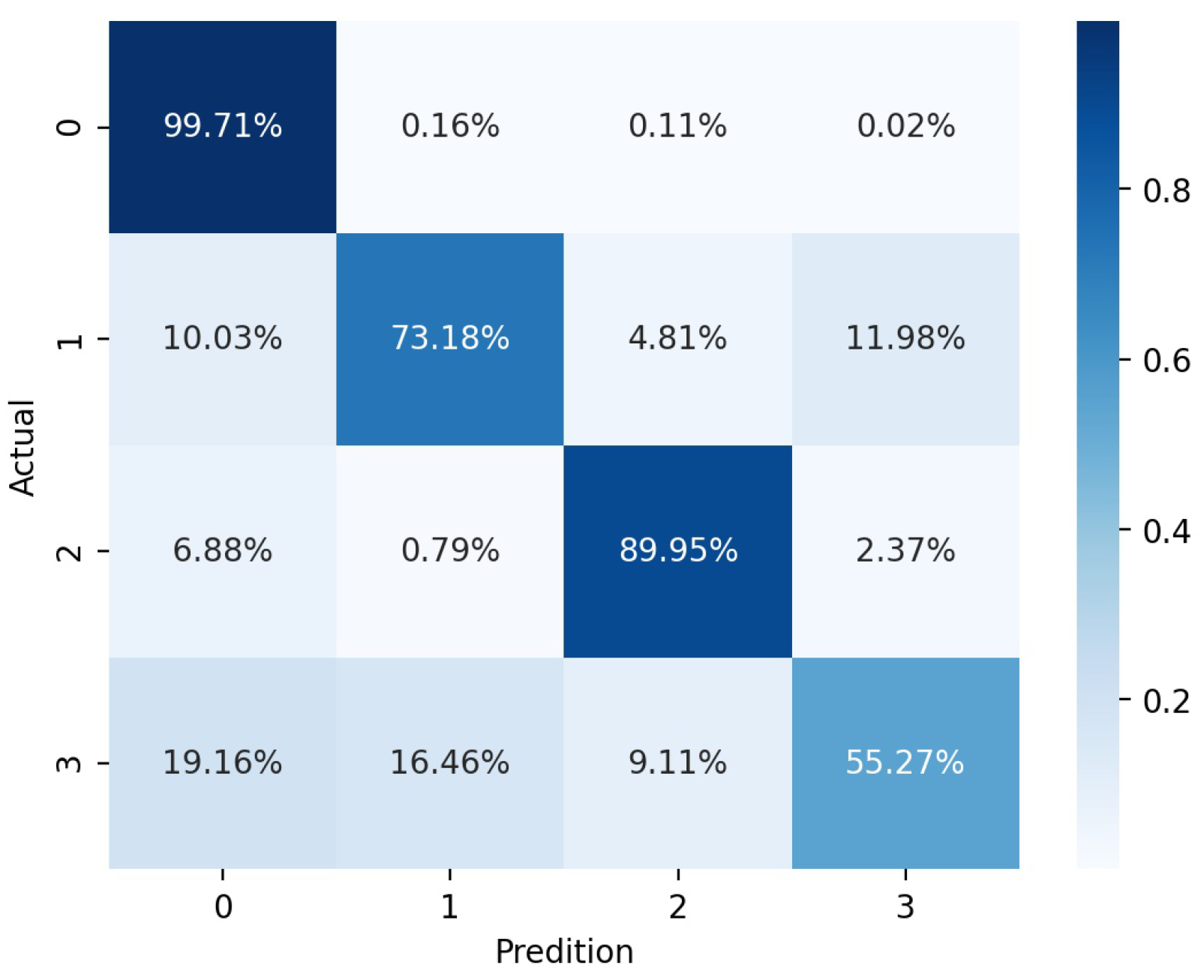

4. Experimental Results

4.1. Effects of the Scanning Period and Channel Width

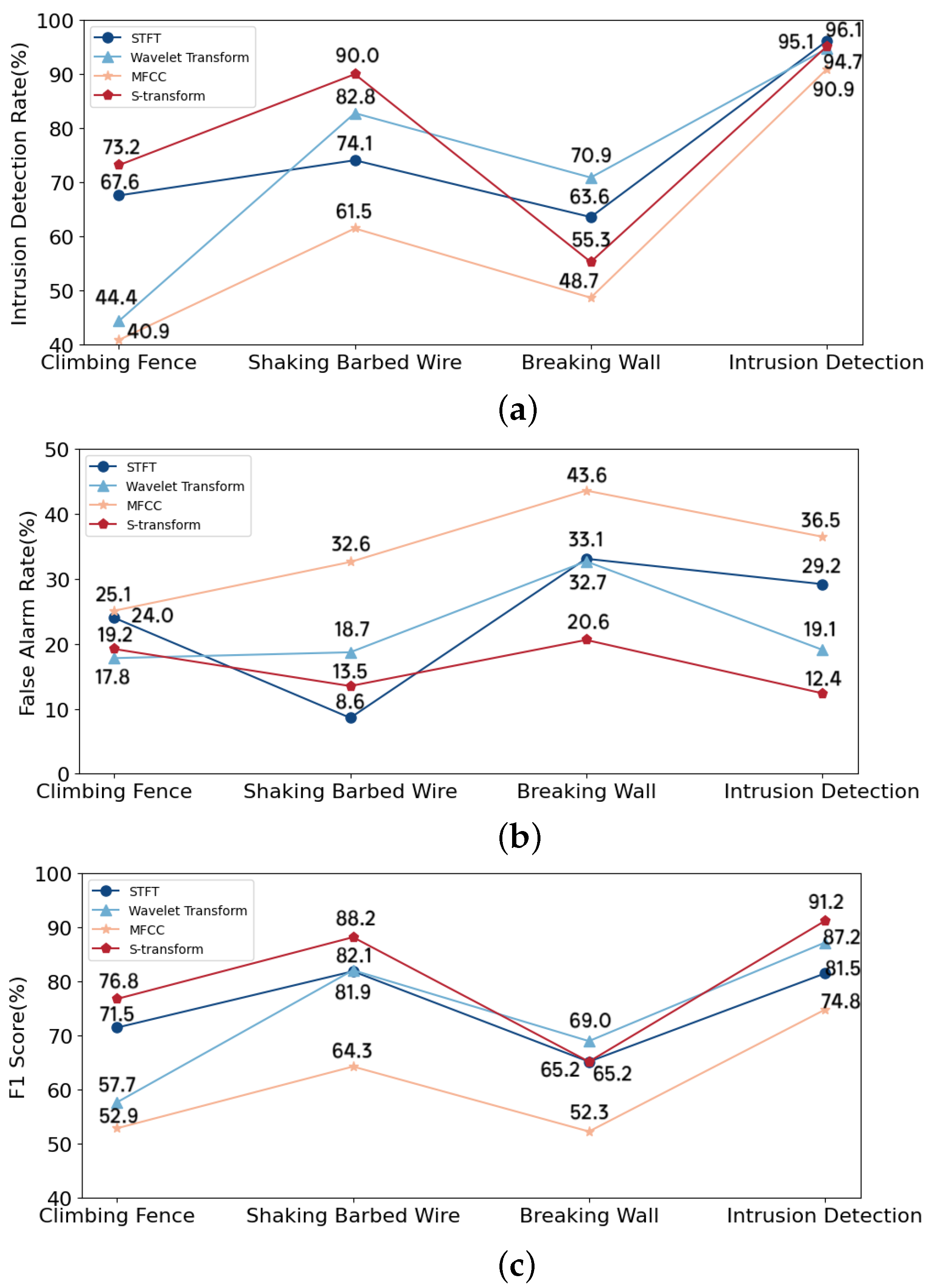

4.2. Comparison of Time-Frequency Analysis Methods

4.3. Comparison of Model Performances

5. Conclusions and Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Shiloh, L.; Eyal, A.; Giryes, R. Efficient Processing of Distributed Acoustic Sensing Data Using a Deep Learning Approach. J. Light. Technol. 2019, 37, 4755–4762.

- Timofeev, A.V.; Groznov, D.I. Classification of Seismoacoustic Emission Sources in Fiber Optic Systems for Monitoring Extended Objects. Optoelectron. Instrum. Data Process. 2020, 56, 50–60.

- Che, Q.; Wen, H.; Li, X.; Peng, Z.; Chen, K.P. Partial Discharge Recognition Based on Optical Fiber Distributed Acoustic Sensing and a Convolutional Neural Network. IEEE Access 2019, 7, 101758–101764.

- Wiesmeyr, C.; Litzenberger, M.; Waser, M.; Papp, A.; Garn, H.; Neunteufel, G.; Döller, H. Real-time train tracking from distributed acoustic sensing data. Appl. Sci. 2020, 10, 448.

- Liu, H.; Ma, J.; Xu, T.; Yan, W.; Ma, L.; Zhang, X. Vehicle Detection and Classification Using Distributed Fiber Optic Acoustic Sensing. IEEE Trans. Veh. Technol. 2020, 69, 1363–1374.

- Wiesmeyr, C.; Coronel, C.; Litzenberger, M.; Doller, H.J.; Schweiger, H.B.; Calbris, G. Distributed Acoustic Sensing for Vehicle Speed and Traffic Flow Estimation. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 2596–2601.

- George, J.; Mary, L.; Riyas, K.S. Vehicle detection and classification from acoustic signal using ANN and KNN. In Proceedings of the 2013 International Conference on Control Communication and Computing (ICCC), Thiruvananthapuram, India, 13–15 December 2013; pp. 436–439.

- Liu, H.; Ma, J.; Yan, W.; Liu, W.; Zhang, X.; Li, C. Traffic Flow Detection Using Distributed Fiber Optic Acoustic Sensing. IEEE Access 2018, 6, 68968–68980.

- Meng, H.; Wang, S.; Gao, C.; Liu, F. Research on Recognition Method of Railway Perimeter Intrusions Based on Φ-OTDR Optical Fiber Sensing Technology. IEEE Sens. J. 2021, 21, 9852–9859.

- Li, Z.; Zhang, J.; Wang, M.; Zhong, Y.; Peng, F. Fiber distributed acoustic sensing using convolutional long short-term memory network: A field test on high-speed railway intrusion detection. Opt. Express 2020, 28, 2925.

- Xie, Y.; Wang, M.; Zhong, Y.; Deng, L.; Zhang, J. Label-Free Anomaly Detection Using Distributed Optical Fiber Acoustic Sensing. Sensors 2023, 23, 4094.

- Wu, H.; Chen, J.; Liu, X.; Xiao, Y.; Wang, M.; Zheng, Y.; Rao, Y. One-Dimensional CNN-Based Intelligent Recognition of Vibrations in Pipeline Monitoring With DAS. J. Light. Technol. 2019, 37, 4359–4366.

- Wu, H.; Liu, X.; Xiao, Y.; Rao, Y. A Dynamic Time Sequence Recognition and Knowledge Mining Method Based on the Hidden Markov Models (HMMs) for Pipeline Safety Monitoring with ϕ-OTDR. J. Light. Technol. 2019, 37, 4991–5000.

- Tejedor, J.; Macias-Guarasa, J.; Martins, H.F.; Pastor-Graells, J.; Corredera, P.; Martin-Lopez, S. Machine learning methods for pipeline surveillance systems based on distributed acoustic sensing: A review. Appl. Sci. 2017, 7, 841.

- Min, R.; Liu, Z.; Pereira, L.; Yang, C.; Sui, Q.; Marques, C. Optical fiber sensing for marine environment and marine structural health monitoring: A review. Opt. Laser Technol. 2021, 140, 107082.

- Martins, H.F.; Piote, D.; Tejedor, J.; Macias-Guarasa, J.; Pastor-Graells, J.; Martin-Lopez, S.; Corredera, P.; Smet, F.D.; Postvoll, W.; Ahlen, C.H.; et al. Early detection of pipeline integrity threats using a smart fiber optic surveillance system: The PIT-STOP project. In Proceedings of the 24th International Conference on Optical Fibre Sensors, Curitiba, Brazil, 28 September–2 October 2015; Volume 9634, p. 96347X.

- Jia, H.; Liang, S.; Lou, S.; Sheng, X. A k-Nearest Neighbor Algorithm-Based Near Category Support Vector Machine Method for Event Identification of φ-OTDR. IEEE Sens. J. 2019, 19, 3683–3689.

- Fedorov, A.K.; Anufriev, M.N.; Zhirnov, A.A.; Stepanov, K.V.; Nesterov, E.T.; Namiot, D.E.; Karasik, V.E.; Pnev, A.B. Note: Gaussian mixture model for event recognition in optical time-domain reflectometry based sensing systems. Rev. Sci. Instrum. 2016, 87, 036107.

- Xu, C.; Guan, J.; Bao, M.; Lu, J.; Ye, W. Pattern recognition based on time-frequency analysis and convolutional neural networks for vibrational events in φ-OTDR. Opt. Eng. 2018, 57, 1.

- Shi, Y.; Liu, X.; Wei, C. An event recognition method based on MFCC, superposition algorithm and deep learning for buried distributed optical fiber sensors. Opt. Commun. 2022, 522, 128647.

- Tian, M.; Dong, H.; Yu, K. Attention based Temporal convolutional network for Φ-OTDR event classification. In Proceedings of the 2021 19th International Conference on Optical Communications and Networks (ICOCN), Qufu, China, 23–27 August 2021.

- Hu, Y.; Wang, S.; Wang, Z.; Zhang, Y. The Research on Information Representation of Φ-OTDR Distributed Vibration Signals. J. Sens. 2017, 2017, 6020645.

- Abdoush, Y.; Pojani, G.; Corazza, G.E.; Garcia-Molina, J.A. Controlled-coverage discrete S-transform (CC-DST): Theory and applications. Digit. Signal Process. 2019, 88, 207–222.

- Hariharan, M.; Vijean, V.; Sindhu, R.; Divakar, P.; Saidatul, A.; Yaacob, S. Classification of mental tasks using stockwell transform. Comput. Electr. Eng. 2014, 40, 1741–1749.

- Geng, M.; Zhou, W.; Liu, G.; Li, C.; Zhang, Y. Epileptic Seizure Detection Based on Stockwell Transform and Bidirectional Long Short-Term Memory. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 573–580.

- Singh, M.; Shaik, A.G. Faulty bearing detection, classification and location in a three-phase induction motor based on Stockwell transform and support vector machine. Meas. J. Int. Meas. Confed. 2019, 131, 524–533.

- Mishra, S.; Bhende, C.N.; Panigrahi, B.K. Detection and classification of power quality disturbances using S-transform and probabilistic neural network. IEEE Trans. Power Deliv. 2008, 23, 280–287.

- Cui, X.Z.; Hong, H.P. Use of discrete orthonormal s-transform to simulate earthquake ground motions. Bull. Seismol. Soc. Am. 2020, 110, 565–575.

- Gong, M.; Wang, D.; Zhao, X.; Guo, H.; Luo, D.; Song, M. A review of non-maximum suppression algorithms for deep learning target detection. In Proceedings of the Seventh Symposium on Novel Photoelectronic Detection Technology and Application 2020, Kunming, China, 5–7 November 2020.

- Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet Classification with Deep Convolutional Neural Networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

| Layer | Kernel Size | Stride | Padding | Output Shape |
|---|---|---|---|---|
| Conv-1 | 11 | 1 | 1 | (48, 235, 502) |
| MaxPool-1 | 4 | 3 | 0 | (48, 78, 167) |
| Conv-2 | 5 | 1 | 2 | (64, 78, 167) |
| MaxPool-2 | 4 | 3 | 0 | (64, 25, 55) |
| Conv-3 | 3 | 1 | 1 | (128, 25, 55) |
| Conv-4 | 3 | 1 | 1 | (128, 25, 55) |
| Conv-5 | 3 | 1 | 1 | (64, 25, 55) |
| MaxPool-3 | 4 | 3 | 0 | (64, 8, 18) |
| FC-1 | - | - | - | (2048) |
| FC-2 | - | - | - | (2048) |
| FC-3 | - | - | - | (4) |
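The output shapes in the layer table follow from the usual convolution/pooling size rule, out = ⌊(n + 2p − k)/s⌋ + 1. The sketch below replays that arithmetic layer by layer. The input size of 243 × 510 is not given in the table; it is inferred here by inverting Conv-1 (kernel 11 with padding 1 shrinks each axis by 8), so treat it, and the helper names, as assumptions. Which axis corresponds to time and which to frequency is likewise not specified.

```python
from typing import List, Optional, Tuple

# (name, kernel, stride, padding, out_channels or None for pooling), copied from the table
LAYERS: List[Tuple[str, int, int, int, Optional[int]]] = [
    ("Conv-1", 11, 1, 1, 48),
    ("MaxPool-1", 4, 3, 0, None),
    ("Conv-2", 5, 1, 2, 64),
    ("MaxPool-2", 4, 3, 0, None),
    ("Conv-3", 3, 1, 1, 128),
    ("Conv-4", 3, 1, 1, 128),
    ("Conv-5", 3, 1, 1, 64),
    ("MaxPool-3", 4, 3, 0, None),
]


def out_size(n: int, kernel: int, stride: int, padding: int) -> int:
    """Output length along one axis for conv/pool layers (floor mode, dilation 1)."""
    return (n + 2 * padding - kernel) // stride + 1


def trace_shapes(height: int, width: int) -> List[Tuple[str, Tuple[int, int, int]]]:
    """Propagate a (channels, height, width) shape through LAYERS."""
    c, h, w = 0, height, width  # channel count is set by the first convolution
    shapes = []
    for name, k, s, p, out_c in LAYERS:
        h, w = out_size(h, k, s, p), out_size(w, k, s, p)
        if out_c is not None:  # pooling layers keep the channel count
            c = out_c
        shapes.append((name, (c, h, w)))
    return shapes


if __name__ == "__main__":
    shapes = trace_shapes(243, 510)  # hypothetical input size inferred from Conv-1
    for name, shape in shapes:
        print(name, shape)
    c, h, w = shapes[-1][1]
    print("FC-1 input features:", c * h * w)
```

Under this assumption every convolutional and pooling row of the table is reproduced, and FC-1 receives 64 × 8 × 18 = 9216 features. The four outputs of FC-3 are consistent with the four event types described in Section 3.2.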

| Information | Climbing Fence | Shaking Barbed Wire | Breaking Wall |
|---|---|---|---|
| Number of samples | 53 | 52 | 47 |
| Minimum number of sampling points | 7500 | 10,000 | 4500 |
| Maximum number of sampling points | 40,500 | 40,500 | 37,000 |
| Average number of sampling points | 15,755 | 23,337 | 20,798 |
| Minimum duration of intrusion | 2683 | 4820 | 4316 |
| Maximum duration of intrusion | 28,269 | 40,439 | 31,598 |
| Average duration of intrusion | 11,503 | 19,386 | 16,849 |
| Minimum number of channels | 70 | 70 | 70 |
| Maximum number of channels | 150 | 150 | 150 |
| Average number of channels | 127 | 125 | 122 |
| Minimum number of invaded channels | 8 | 4 | 7 |
| Maximum number of invaded channels | 27 | 15 | 19 |
| Average number of invaded channels | 17 | 7 | 13 |

| Channel Width | Training Set | Testing Set |
|---|---|---|
| 10 | 199,440 | 159,295 |
| 15 | 128,246 | 102,428 |
| 20 | 92,855 | 73,976 |
| 25 | 70,131 | 55,819 |
| 30 | 56,131 | 44,680 |

Intrusion classification (per class) and intrusion detection (overall) results for different channel widths:

| Channel Width | Climbing Fence IDR | Climbing Fence FAR | Climbing Fence F1 | Shaking Barbed Wire IDR | Shaking Barbed Wire FAR | Shaking Barbed Wire F1 | Breaking Wall IDR | Breaking Wall FAR | Breaking Wall F1 | Detection IDR | Detection FAR | Detection F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 10 | 68.5% | 16.2% | 75.4% | 80.4% | 11.0% | 84.5% | 77.0% | 27.2% | 74.8% | 96.9% | 23.9% | 85.2% |
| 15 | 73.2% | 19.2% | 76.8% | 90.0% | 13.5% | 88.2% | 55.3% | 20.6% | 65.2% | 95.1% | 12.4% | 91.2% |
| 20 | 66.6% | 13.4% | 75.3% | 83.1% | 14.5% | 84.3% | 71.2% | 23.8% | 73.6% | 96.0% | 15.3% | 90.0% |
| 25 | 71.9% | 14.7% | 78.1% | 73.4% | 9.9% | 80.9% | 74.2% | 22.7% | 75.7% | 95.4% | 12.2% | 91.4% |
| 30 | 71.4% | 8.8% | 80.1% | 86.0% | 13.0% | 86.5% | 75.4% | 19.1% | 78.1% | 96.6% | 11.6% | 92.3% |

Intrusion classification (per class) and intrusion detection (overall) results for different scanning periods:

| Scanning Period | Climbing Fence IDR | Climbing Fence FAR | Climbing Fence F1 | Shaking Barbed Wire IDR | Shaking Barbed Wire FAR | Shaking Barbed Wire F1 | Breaking Wall IDR | Breaking Wall FAR | Breaking Wall F1 | Detection IDR | Detection FAR | Detection F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 256 | 58.5% | 18.1% | 68.3% | 77.1% | 16.8% | 80.0% | 68.7% | 31.3% | 68.7% | 76.9% | 4.3% | 85.3% |
| 512 | 73.2% | 19.2% | 76.8% | 90.0% | 13.5% | 88.2% | 55.3% | 20.6% | 65.2% | 95.1% | 12.4% | 91.2% |
| 1024 | 68.0% | 15.5% | 75.6% | 72.9% | 17.6% | 77.4% | 71.0% | 26.5% | 72.2% | 94.7% | 21.9% | 85.6% |
| 2048 | 60.2% | 26.0% | 66.4% | 71.6% | 32.6% | 69.5% | 48.9% | 35.2% | 54.4% | 96.6% | 54.1% | 62.3% |

Intrusion classification (per class) and intrusion detection (overall) results for different models:

| Domain | Model | Climbing Fence IDR | Climbing Fence FAR | Climbing Fence F1 | Shaking Barbed Wire IDR | Shaking Barbed Wire FAR | Shaking Barbed Wire F1 | Breaking Wall IDR | Breaking Wall FAR | Breaking Wall F1 | Detection IDR | Detection FAR | Detection F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Time | ATCN-BiLSTM [21] | 23.5% | 55.1% | 30.8% | 12.8% | 74.1% | 17.2% | 15.9% | 61.5% | 22.5% | 35.8% | 23.6% | 48.7% |
| Space-Time | AlexNet [30] | 54.6% | 35.3% | 59.2% | 27.5% | 18.9% | 41.1% | 65.3% | 49.2% | 57.1% | 97.6% | 54.3% | 62.3% |
| Space-Time | VGG16 [31] | 71.7% | 34.0% | 68.7% | 50.2% | 15.5% | 63.0% | 51.5% | 40.9% | 55.1% | 96.8% | 47.6% | 68.0% |
| Space-Time | Inception-v3 [32] | 41.1% | 21.2% | 54.0% | 40.7% | 17.8% | 54.4% | 84.0% | 43.1% | 67.9% | 96.4% | 48.3% | 67.3% |
| Space-Time | ResNet [33] | 36.2% | 28.0% | 48.2% | 57.6% | 24.3% | 65.4% | 71.5% | 42.2% | 63.9% | 95.1% | 46.2% | 68.7% |
| Space-Time | DenseNet [34] | 62.6% | 31.6% | 65.4% | 45.6% | 10.9% | 60.3% | 72.1% | 36.3% | 67.6% | 96.2% | 36.0% | 76.9% |
| Space-Time | ConvLSTM [10] | 54.6% | 29.0% | 61.7% | 70.6% | 28.6% | 71.0% | 45.7% | 38.5% | 52.5% | 93.6% | 30.4% | 80.0% |
| Time-Frequency | Xu [19] | 44.6% | 56.8% | 43.9% | 45.6% | 55.5% | 45.1% | 54.4% | 38.2% | 57.9% | 67.4% | 30.2% | 68.6% |
| Time-Frequency | Shi [20] | 43.8% | 52.1% | 45.7% | 64.3% | 74.2% | 36.8% | 61.7% | 45.0% | 58.2% | 71.9% | 37.8% | 66.7% |
| Time-Frequency | STNet | 73.2% | 19.2% | 76.8% | 90.0% | 13.5% | 88.2% | 55.3% | 20.6% | 65.2% | 95.1% | 12.4% | 91.2% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zeng, Y.; Zhang, J.; Zhong, Y.; Deng, L.; Wang, M. STNet: A Time-Frequency Analysis-Based Intrusion Detection Network for Distributed Optical Fiber Acoustic Sensing Systems. Sensors 2024, 24, 1570. https://doi.org/10.3390/s24051570


Chicago/Turabian Style: Zeng, Yiming, Jianwei Zhang, Yuzhong Zhong, Lin Deng, and Maoning Wang. 2024. "STNet: A Time-Frequency Analysis-Based Intrusion Detection Network for Distributed Optical Fiber Acoustic Sensing Systems" Sensors 24, no. 5: 1570. https://doi.org/10.3390/s24051570
