Monitoring Flow-Forming Processes Using Design of Experiments and a Machine Learning Approach Based on Randomized-Supervised Time Series Forest and Recursive Feature Elimination

Abstract

1. Introduction

- Demonstration of the feasibility of utilizing machine learning and sensor data to monitor flow-forming processes.

- Development of a practical approach to monitor flow-forming processes. The approach includes an experimental design capable of providing the necessary data, as well as a procedure for preprocessing the data and extracting features that capture the information needed by the machine learning models to detect defects in the blank and the machine.

- Development of a feature extraction algorithm for multivariate time series classification, derived from the state-of-the-art algorithm r-STSF (see [8]), incorporating extensions for multivariate time series and metric target variables.

2. Related Work

2.1. Condition-Based Maintenance

2.2. Data Acquisition

2.3. Sensors

2.4. Monitoring Flow-Forming Processes

3. Materials and Methods

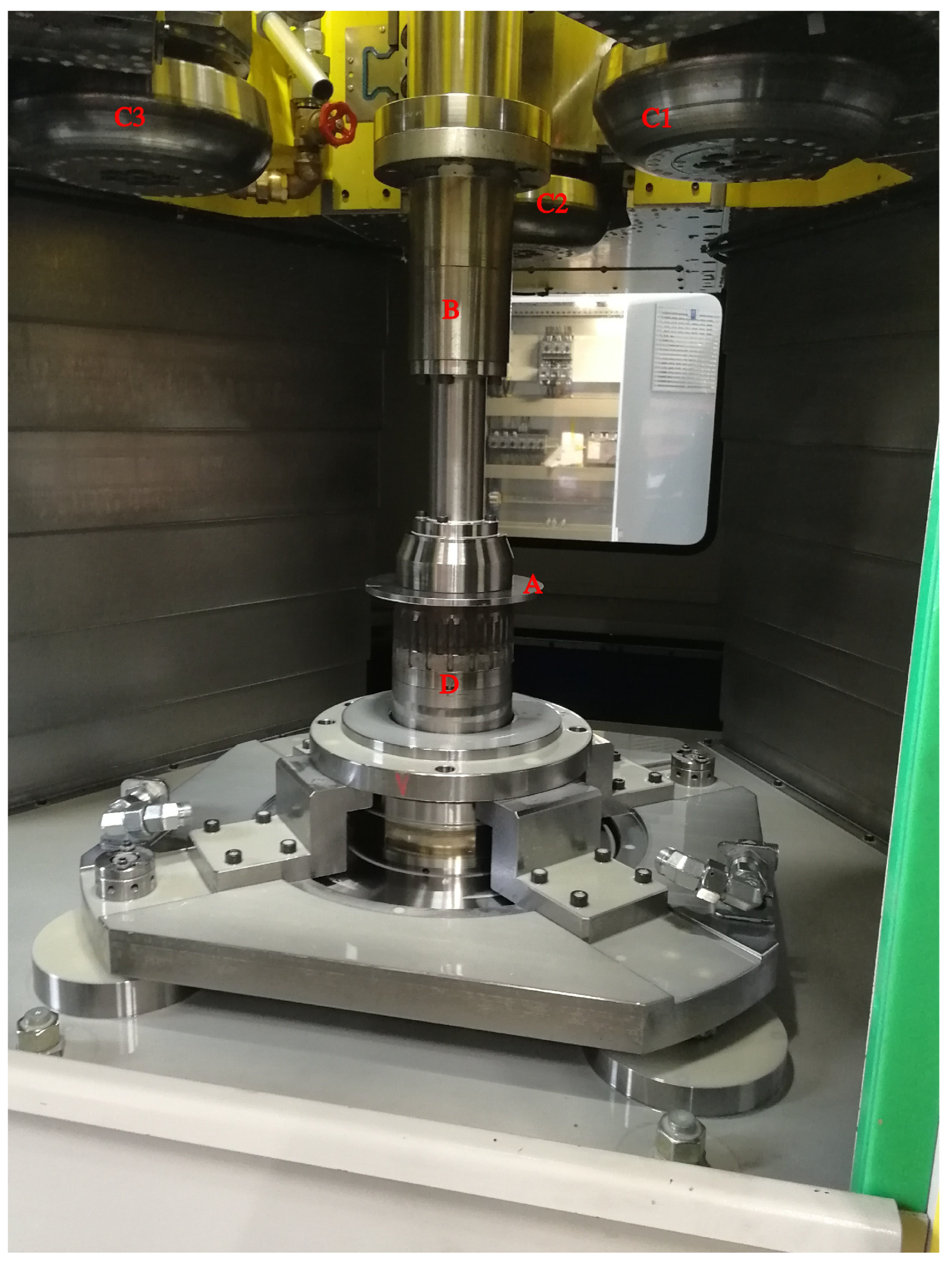

3.1. Experimental Setup

3.2. Experiments

1. Diameter of the blank;
2. Thickness of the blank;
3. Irregular diameter reduction in the blank by grinding (see Figure 8);
4. Local thickness reduction in the blank by grinding (see Figure 9);
5. Alloy from which the blank is made;
6. Deviation of the center of the hole from the center of the blank (see Figure 10);
7. A defined deformation of the blank, so that the blank is not completely flat (see Figure 11);
8. Straight piece milled from the blank (see Figure 12);
9. Flow rate of the cooling liquid;
10. Damage to one of the processing rollers by grinding (see Figure 13).

3.3. Experimental Design I

3.4. Experimental Design II

3.5. Training Pipeline and Test Pipeline

3.5.1. Data Cleaning and Transformations of Time Series Variables

- Extraction of in-process data: The raw data set of each experimental series consists of the continuous time series of all the time series variables over the complete course of the experimental series, including the time between experiments. Using the position data of the rollers, the data recorded during the actual forming processes are separated from the data recorded while the machine is idle. The latter are discarded.

- Removal of empty runs: If the CNC program is executed without a blank being inserted (which is occasionally necessary for various reasons), the corresponding data are discarded.

- Removal of redundant time series variables: Some of the time series variables supplied by the PLC are redundant. These are discarded.

- One-hot encoding: Some of the time series variables supplied by the PLC are nominally scaled. These are transformed using one-hot encoding.

- Imputation of missing data points: Missing data points are imputed using LASSO (Least Absolute Shrinkage and Selection Operator, see [37]) regression.

- Merging the data from the PLC with the DoE data: The time series data supplied by the PLC are merged with the corresponding influencing variables from the experimental design. For this purpose, a consecutive process counter from the PLC data and the experiment number from the experimental design are used. The assignment between the process counter and the associated experiment number is documented while the experiments are carried out.
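Three of the steps above (extraction of in-process data, one-hot encoding, and merging with the DoE data) can be illustrated with a minimal sketch. It is not the pipeline used in this work: the function names, the toy recording, the rule "roller position greater than zero means forming", and the factor name `blank_diameter_mm` are all assumptions made for illustration, and empty runs are modeled simply as process counters without a documented experiment number.

```python
from itertools import groupby


def extract_in_process(samples, is_forming):
    """Return the contiguous runs of samples for which is_forming(sample) is true."""
    runs = []
    for forming, group in groupby(samples, key=is_forming):
        if forming:  # idle stretches between experiments are discarded
            runs.append(list(group))
    return runs


def one_hot(values):
    """One-hot encode a nominal variable; returns (categories, encoded rows)."""
    categories = sorted(set(values))
    return categories, [[int(v == c) for c in categories] for v in values]


def merge_with_doe(runs_by_counter, counter_to_experiment, doe_factors):
    """Attach the DoE factor settings to each run via the documented assignment."""
    merged = {}
    for counter, run in runs_by_counter.items():
        experiment = counter_to_experiment.get(counter)
        if experiment is None:  # assumption: empty runs have no experiment number
            continue
        merged[experiment] = {"series": run, "factors": doe_factors[experiment]}
    return merged


# Hypothetical toy recording: (roller position in mm, spindle current in A, tool state)
recording = [
    (0.0, 1.0, "retracted"), (5.0, 7.5, "engaged"), (9.0, 8.1, "engaged"),
    (0.0, 1.1, "retracted"), (4.0, 7.9, "engaged"), (0.0, 1.0, "retracted"),
]
runs = extract_in_process(recording, is_forming=lambda s: s[0] > 0.0)
categories, encoded = one_hot([s[2] for s in recording])
merged = merge_with_doe(
    runs_by_counter={101: runs[0], 102: runs[1]},
    counter_to_experiment={101: 1},  # counter 102 is treated as an empty run
    doe_factors={1: {"blank_diameter_mm": 120.0}},  # hypothetical factor setting
)
```

Keeping the counter-to-experiment assignment as an explicit lookup mirrors the documented manual assignment and makes unassigned runs easy to drop.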

3.5.2. Engineering of Additional Time Series Variables

3.5.3. Feature Extraction Using the Methodology of the Randomized-Supervised Time Series Forest

- A feature selection can be incorporated between the feature extraction and the training of models on the generated features (see Figure 14).

- The Extra Tree algorithm is an integral part of the r-STSF as defined by [8]. By detaching the feature extraction functionality from the rest of r-STSF, it becomes possible to train arbitrary other machine learning algorithms on the generated features.

- In addition to the choice of learning algorithm, the choice of libraries and implementations is also open (Cabello et al. [8] use the Python library scikit-learn). In the present work, the machine learning platform H2O is used to train the models.

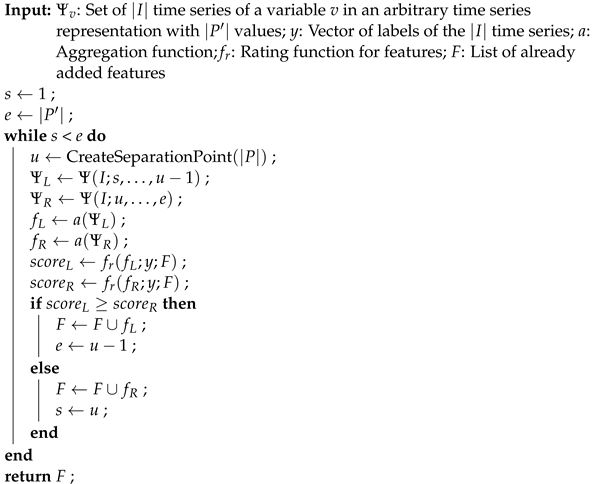

| Algorithm 1: Supervised Time Series Feature Generation: Repeated iteration over all time series variables and time series representations. |

| Input: X: Set of time series with measuring points and variables; y: Vector of labels of the time series; A: Set of aggregation functions; : Rating function for features; : Number of repetitions (d in [8]) |

| Algorithm 2: IntervalSearch: Generation of interval features on a time series variable in a time series representation. |
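The general idea behind the two algorithms can be sketched as follows. This is a simplified illustration and not a reproduction of Algorithms 1 and 2 or of r-STSF [8]: it works on the raw time series only (no further time series representations), it assumes a recursive split at a random cut point that keeps the better-rated half, and it uses the absolute Pearson correlation as a stand-in rating function for a metric target. All function and variable names are hypothetical.

```python
import random
import statistics


def pearson_abs(xs, ys):
    """Absolute Pearson correlation; stand-in rating function for a metric target."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0  # a constant feature carries no information
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return abs(sxy) / (sxx * syy) ** 0.5


def interval_search(series, y, agg, rate, rng, start=0, end=None, min_len=2):
    """Split [start, end) at a random cut point, keep the better-rated half, recurse."""
    if end is None:
        end = len(series[0])
    if end - start < 2 * min_len:
        return []
    cut = rng.randint(start + min_len, end - min_len)
    candidates = []
    for lo, hi in ((start, cut), (cut, end)):
        feature = [agg(s[lo:hi]) for s in series]  # one value per observation
        candidates.append((rate(feature, y), lo, hi))
    score, lo, hi = max(candidates)
    return [(lo, hi, score)] + interval_search(series, y, agg, rate, rng, lo, hi, min_len)


def generate_features(X, y, aggs, rate, repetitions, seed=0):
    """X[i][v] is the series of variable v in observation i; returns feature -> rating."""
    rng = random.Random(seed)
    found = {}
    for _ in range(repetitions):
        for v in range(len(X[0])):
            series = [obs[v] for obs in X]
            for name, agg in aggs.items():
                for lo, hi, score in interval_search(series, y, agg, rate, rng):
                    found[(v, name, lo, hi)] = score
    return found


# Toy data: variable 0 encodes the target in its second half, variable 1 is constant.
y = [1.0, 2.0, 3.0, 4.0]
X = [[[0.0] * 4 + [t] * 4, [1.0] * 8] for t in y]
features = generate_features(
    X, y, aggs={"mean": statistics.fmean, "max": max}, rate=pearson_abs, repetitions=5
)
best_feature, best_score = max(features.items(), key=lambda kv: kv[1])
```

Each feature is identified by variable index, aggregation function, and interval bounds, so a later feature selection step can trace retained features back to individual sensors.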


3.5.4. Feature Selection Using Recursive Feature Elimination

- Improvement of the predictive ability of machine learning models trained on the resulting feature set. The experiments of [7] suggest that this is also possible for random forests, which are generally considered to be robust against irrelevant features.

- Minimization of the required sensors.

- Simplification of the models and thus improvement of their interpretability.

- Reduction in the time required to train the machine learning models.

- Reduction in the time required for the evaluation of new observations. This is particularly relevant for a possible live monitoring of the forming processes, where the time available for an evaluation of new data is limited.
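The elimination loop behind these goals can be sketched generically. The sketch below is an assumption-laden illustration, not the RFE procedure used in this work: the importance and evaluation functions are passed in as callables (in practice they would come from a trained model such as a random forest), the `drop_fraction` of 0.25 is an arbitrary example value, and the toy relevance scores and feature names are invented.

```python
import math


def recursive_feature_elimination(features, importance, evaluate,
                                  drop_fraction=0.25, min_features=1):
    """Repeatedly drop the least important features; return the best subset seen.

    importance(subset) -> dict mapping feature name to importance score
    evaluate(subset)   -> error estimate of a model trained on subset (lower is better)
    """
    current = list(features)
    best_subset, best_error = list(current), evaluate(current)
    while len(current) > min_features:
        scores = importance(current)  # re-rank on every iteration
        ranked = sorted(current, key=lambda f: scores[f], reverse=True)
        n_drop = max(1, math.floor(drop_fraction * len(ranked)))
        current = ranked[:max(min_features, len(ranked) - n_drop)]
        error = evaluate(current)
        if error <= best_error:  # prefer the smaller subset on ties
            best_subset, best_error = list(current), error
    return best_subset, best_error


# Hypothetical stand-ins for a model-based importance and a validation error.
RELEVANCE = {"force_mean": 0.9, "torque_slope": 0.7,
             "noise_a": 0.05, "noise_b": 0.01, "noise_c": 0.02}


def toy_importance(subset):
    return {f: RELEVANCE[f] for f in subset}


def toy_error(subset):
    irrelevant_kept = sum(0.1 for f in subset if RELEVANCE[f] < 0.5)
    relevant_lost = sum(1.0 for f in RELEVANCE if RELEVANCE[f] >= 0.5 and f not in subset)
    return irrelevant_kept + relevant_lost


selected, error = recursive_feature_elimination(list(RELEVANCE), toy_importance, toy_error)
```

Re-computing the ranking in every iteration, rather than once at the start, is what distinguishes recursive elimination from a single-pass importance filter.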

3.5.5. Model Generation and Selection

- Random forests usually have a good predictive ability that depends only slightly on the hyperparameter settings.

- Due to the small size of the training data set and the high number of dimensions, there is a high risk of generating overfitted models. Random forests are comparatively robust against overfitting.

- The RFE algorithm used also employs random forests. Thus, the selected feature set is to some extent tuned for random forests.

4. Results

4.1. Evaluation Method

4.2. Evaluation of Regression Models

4.3. Validation of Classification Models

4.4. Dimensionality Reduction

5. Discussion

6. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| CBM | Condition-based Maintenance |

| CM | Condition Monitoring |

| CNC | Computerized Numerical Control |

| DoE | Design of Experiments |

| FEA | Finite Element Analysis |

| HIVE-COTE | Hierarchical Vote Collective of Transformation-Based Ensembles |

| LASSO | Least Absolute Shrinkage and Selection Operator |

| MAE | Mean Absolute Error |

| MCC | Matthews Correlation Coefficient |

| PLC | Programmable Logic Controller |

| r-STSF | Randomized-Supervised Time Series Forest |

| RFE | Recursive Feature Elimination |

| RMS | Root Mean Square |

| RMSE | Root Mean Square Error |

| RUL | Remaining Useful Life |

| STSF | Supervised Time Series Forest |

| STSFG | Supervised Time Series Feature Generation |

| SVM | Support Vector Machine |

| TCM | Tool Condition Monitoring |

| TSF | Time Series Forest |

Appendix A

| Component | Measured Variable |
|---|---|
| spindle | revolutions per minute |
| | active current |
| | active torque |
| | active motor temperature |
| | vibration |
| feed of spindle | position |
| | position error |
| | pressure side A |
| | pressure side B |
| feed of roller 1 | position |
| | position error |
| | pressure side A |
| | pressure side B |
| | force side A |
| | force side B |
| | bearing temperature |
| feed of roller 2 | position |
| | position error |
| | pressure side A |
| | pressure side B |
| | force side A |
| | force side B |
| | bearing temperature |
| feed of roller 3 | position |
| | position error |
| | pressure side A |
| | pressure side B |
| | force side A |
| | force side B |
| | bearing temperature |
| tailstock traverse | pressure |
| | temperature traverse bearing |
| | force |
| | vibration |
| ejector | pressure |
| | force |
| hydraulic power unit | fill level |
| | temperature |
| | pressure |
| bearing lubrication spindle | fill level |
| | temperature |
| bearing lubrication tailstock traverse | fill level |
| | temperature |

References

1. Jahazi, M.; Ebrahimi, G. The influence of flow forming parameters and microstructure on the quality of a D6ac steel. J. Mater. Process. Technol. 2000, 103, 362–366.
2. Appleby, A.; Conway, A.; Ion, W. A novel methodology for in-process monitoring of flow forming. In Proceedings of the International Conference of Global Network for Innovative Technology and AWAM International Conference in Civil Engineering (IGNITE-AICCE’17): Sustainable Technology And Practice For Infrastructure and Community Resilience, 2017, AIP Conference Proceedings, Dublin, Ireland, 26–28 April 2017.
3. Srinivasulu, M.; Komaraiah, M.; Rao, C.K.P. Application of Response Surface Methodology to Predict Ovality of AA6082 Flow Formed Tubes. Int. J. Manuf. Mater. Mech. Eng. 2013, 3, 52–65.
4. Appleby, A. Monitoring of Incremental Rotary Forming. Ph.D. Thesis, University of Strathclyde (Department of Design, Manufacturing and Engineering Management), Glasgow, Scotland, 2019.
5. Kononenko, I.; Kukar, M. Machine Learning and Data Mining, reprint of the 2007 ed.; Woodhead Publishing: Chichester, UK, 2013.
6. Bishop, C.M. Pattern Recognition and Machine Learning, 9 (corrected at 8th printing), ed.; Information science and statistics; Springer: Berlin/Heidelberg, Germany, 2009.
7. Kuhn, M.; Johnson, K. Applied Predictive Modeling, 1st ed.; Springer: New York, NY, USA, 2013.
8. Cabello, N.; Naghizade, E.; Qi, J.; Kulik, L. Fast, accurate and explainable time series classification through randomization. Data Min. Knowl. Discov. 2023, 38, 748–811.
9. Goyal, D.; Pabla, B.S.; Dhami, S.S.; Lachhwani, K. Optimization of condition-based maintenance using soft computing. Neural Comput. Appl. 2017, 28, 829–844.
10. Sobie, C.; Freitas, C.; Nicolai, M. Simulation-driven machine learning: Bearing fault classification. Mech. Syst. Signal Process. 2018, 99, 403–419.
11. Kurukuru, V.S.B.; Haque, A.; Khan, M.A.; Tripathy, A.K. Fault classification for Photovoltaic Modules Using Thermography and Machine Learning Techniques. In Proceedings of the 2019 International Conference on Computer and Information Sciences (ICCIS), Sakaka, Saudi Arabia, 3–4 April 2019; pp. 1–6.
12. Gong, C.S.A.; Su, C.H.S.; Tseng, K.H. Implementation of Machine Learning for Fault Classification on Vehicle Power Transmission System. IEEE Sens. J. 2020, 20, 15163–15176.
13. Nazir, Q.; Shao, C. Online tool condition monitoring for ultrasonic metal welding via sensor fusion and machine learning. J. Manuf. Process. 2021, 62, 806–816.
14. Kanawaday, A.; Sane, A. Machine Learning for Predictive Maintenance of Industrial Machines using IoT Sensor Data. In Proceedings of the 2017 8th IEEE International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 24–26 November 2017; pp. 87–90.
15. Costa, M.; Wullt, B.; Norrlöf, M.; Gunnarsson, S. Failure detection in robotic arms using statistical modeling, machine learning and hybrid gradient boosting. Measurement 2019, 146, 425–436.
16. Tian, Z.; Wong, L.; Safaei, N. A neural network approach for remaining useful life prediction utilizing both failure and suspension histories. Mech. Syst. Signal Process. 2010, 24, 1542–1555.
17. Abellan-Nebot, J.V.; Romero Subirón, F. A review of machining monitoring systems based on artificial intelligence process models. Int. J. Adv. Manuf. Technol. 2010, 47, 237–257.
18. Breckweg, A. Automatisiertes und prozessüberwachtes Radialclinchen höherfester Blechwerkstoffe. Ph.D. Thesis, Universität Stuttgart (Fakultät für Maschinenbau), Stuttgart, Germany, 2007.
19. Choi, Y.J.; Park, M.S.; Chu, C.N. Prediction of drill failure using features extraction in time and frequency domains of feed motor current. Int. J. Mach. Tools Manuf. 2008, 48, 29–39.
20. Cheng, F.; Dong, J. Monitoring tip-based nanomachining process by time series analysis using support vector machine. J. Manuf. Process. 2019, 38, 158–166.
21. Lei, Y.; Li, N.; Gontarz, S.; Lin, J.; Radkowski, S.; Dybala, J. A Model-Based Method for Remaining Useful Life Prediction of Machinery. IEEE Trans. Reliab. 2016, 65, 1314–1326.
22. Ren, L.; Cui, J.; Sun, Y.; Cheng, X. Multi-bearing remaining useful life collaborative prediction: A deep learning approach. J. Manuf. Syst. 2017, 43, 248–256.
23. Drouillet, C.; Karandikar, J.; Nath, C.; Journeaux, A.C.; El Mansori, M.; Kurfess, T. Tool life predictions in milling using spindle power with the neural network technique. J. Manuf. Process. 2016, 22, 161–168.
24. Bhuiyan, M.S.; Choudhury, I. Review of Sensor Applications in Tool Condition Monitoring in Machining. Compr. Mater. Process. 2014, 13, 539–569.
25. Banerjee, P.; Hui, N.B.; Dikshit, M.K.; Laha, R.; Das, S. Modeling and optimization of mean thickness of backward flow formed tubes using regression analysis, particle swarm optimization and neural network. SN Appl. Sci. 2020, 2, 1353.
26. Podder, B.; Banerjee, P.; Kumar, K.R.; Hui, N.B. Forward and reverse modeling of flow forming of solution annealed H30 aluminium tubes. Neural Comput. Appl. 2020, 32, 2081–2093.
27. Riepold, M.; Arian, B.; Rozo Vasquez, J.; Homberg, W.; Walther, F.; Trächtler, A. Model approaches for closed-loop property control for flow forming. Adv. Ind. Manuf. Eng. 2021, 3, 100057.
28. Bhatt, R.J.; Raval, H.K. An Investigation of forces during Flow-Forming Process. Int. J. Adv. Manuf. Technol. 2016, 3, 12–17.
29. Bhatt, R.J.; Raval, H.K. Optimization of process parameters during flow-forming process and its verification. Mechanika 2017, 23, 581.
30. Bhatt, R.J.; Raval, H.K. In situ investigations on forces and power consumption during flow forming process. J. Mech. Sci. Technol. 2018, 32, 1307–1315, PII: 235.
31. Abouelatta, O.B.; Madl, J. Surface roughness prediction based on cutting parameters and tool vibrations in turning operations. J. Mater. Process. Technol. 2001, 118, 269–277.
32. Hoppe, F.; Hohmann, J.; Knoll, M.; Kubik, C.; Groche, P. Feature-based Supervision of Shear Cutting Processes on the Basis of Force Measurements: Evaluation of Feature Engineering and Feature Extraction. Procedia Manuf. 2019, 34, 847–856.
33. Huang, P.T.; Chen, J. Neural network-based tool breakage monitoring system for end milling operations. J. Ind. Technol. 2000, 16, 2–7.
34. Akima, H. A New Method of Interpolation and Smooth Curve Fitting Based on Local Procedures. J. ACM 1970, 17, 589–602.
35. Siebertz, K.; van Bebber, D.; Hochkirchen, T. Statistische Versuchsplanung, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2017.
36. Montgomery, D.C.; Runger, G.C. Foldovers of $2^{k-p}$ Resolution IV Experimental Designs. J. Qual. Technol. 1996, 28, 446–450.
37. Tibshirani, R. Regression Shrinkage and Selection via the Lasso. J. R. Stat. Soc. Ser. B (Methodol.) 1996, 58, 267–288.
38. Heaton, J. An empirical analysis of feature engineering for predictive modeling. In Proceedings of the SoutheastCon 2016, Norfolk, VA, USA, 30 March–3 April 2016; pp. 1–6.
39. Deng, H.; Runger, G.; Tuv, E.; Vladimir, M. A time series forest for classification and feature extraction. Inf. Sci. 2013, 239, 142–153.
40. Cabello, N.; Naghizade, E.; Qi, J.; Kulik, L. Fast and Accurate Time Series Classification Through Supervised Interval Search. In Proceedings of the 2020 IEEE International Conference on Data Mining (ICDM), Sorrento, Italy, 17–20 November 2020; pp. 948–953.
41. Lines, J.; Taylor, S.; Bagnall, A. Time Series Classification with HIVE-COTE. ACM Trans. Knowl. Discov. Data 2018, 12, 1–35.
42. Duda, R.O.; Hart, P.E.; Stork, D.G. Pattern Classification, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2000.
43. Croux, C.; Dehon, C. Influence Functions of the Spearman and Kendall Correlation Measures. SSRN Electron. J. 2010, 19, 497–515.
44. Díaz-Uriarte, R.; Alvarez de Andrés, S. Gene selection and classification of microarray data using random forest. BMC Bioinform. 2006, 7, 3.
45. Darst, B.F.; Malecki, K.C.; Engelman, C.D. Using recursive feature elimination in random forest to account for correlated variables in high dimensional data. BMC Genet. 2018, 19, 65.
46. Svetnik, V.; Liaw, A.; Tong, C.; Wang, T. Application of Breiman’s Random Forest to Modeling Structure-Activity Relationships of Pharmaceutical Molecules. In Multiple Classifier Systems; Roli, F., Kittler, J., Windeatt, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2004; pp. 334–343.
47. Guyon, I.; Weston, J.; Barnhill, S.; Vapnik, V. Gene Selection for Cancer Classification using Support Vector Machines. Mach. Learn. 2002, 46, 389–422.
48. Kohavi, R. A Study of Cross-Validation and Bootstrap for Accuracy Estimation and Model Selection. In Proceedings of the 14th International Joint Conference on Artificial Intelligence (IJCAI’95), Volume 2, Montréal, QC, Canada, 20–25 August 1995; Morgan Kaufmann Publishers Inc.: Stanford, CA, USA, 1995; pp. 1137–1143.
49. Schüürmann, G.; Ebert, R.U.; Chen, J.; Wang, B.; Kühne, R. External Validation and Prediction Employing the Predictive Squared Correlation Coefficient Test Set Activity Mean vs. Training Set Activity Mean. J. Chem. Inf. Model. 2008, 48, 2140–2145.
50. Chicco, D.; Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 2020, 21, 6.
51. Ribeiro, R.P.; Pereira, P.; Gama, J. Sequential anomalies: A study in the Railway Industry. Mach. Learn. 2016, 105, 127–153.

| Parameter | Description | Value |
|---|---|---|
| | Repetitions of the STSFG | 5 |
| | Proportion of features removed in each RFE iteration | |
| | Number of trees of the random forests trained in the RFE | 1000 |
| | Number of randomly selected features at each node of the random forests built in the RFE | |
| | Number of trees of the random forests | 2000 |

| Target Variable | Ø RMSE | Ø MAE | Ø | Standard Deviation |
|---|---|---|---|---|
| influencing factor 1 (diameter of the blank) | mm | mm | mm | |
| influencing factor 2 (thickness of the blank) | mm | mm | mm | |
| influencing factor 6 (displaced hole in the blank) | mm | mm | mm | |

| Target Variable | Ø MCC | Ø F1 | Ø Precision | Ø Recall | Ø Accuracy |
|---|---|---|---|---|---|
| influencing factor 7 (defined deformation) | | | | | |
| influencing factor 10 (damaged roller) | | | | | |
| influencing factor 9 (flow rate of cooling liquid) | 0.45 | 0.72 | 0.66 | 0.82 | 0.72 |
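For reference, the error and agreement measures named in the result tables (RMSE, MAE, and MCC) can be computed as in the following minimal sketch. It uses the standard textbook definitions; the toy labels and predictions are invented and unrelated to the reported results, and returning 0.0 for an undefined MCC is a common convention assumed here.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error of a regression prediction."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error of a regression prediction."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mcc(y_true, y_pred):
    """Matthews correlation coefficient for binary labels coded as 0 and 1."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denominator == 0:
        return 0.0  # convention when a row or column of the confusion matrix is empty
    return (tp * tn - fp * fn) / denominator


# Invented toy values for illustration only.
regression_rmse = rmse([2.0, 4.0], [2.0, 6.0])
regression_mae = mae([2.0, 4.0], [2.0, 6.0])
classification_mcc = mcc([1, 1, 1, 0, 0, 0, 1, 0], [1, 1, 0, 0, 0, 1, 1, 0])
```

Unlike accuracy, the MCC takes all four cells of the confusion matrix into account, which is why it can stay moderate even when recall is high.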

| Target Variable | Ø Feature Count after STSFG | Ø Feature Count after RFE | Reduction |
|---|---|---|---|
| Influencing factor 1 (diameter of the blank) | | | |
| Influencing factor 2 (thickness of the blank) | | | |
| Influencing factor 6 (displaced hole in the blank) | | | |
| Influencing factor 7 (defined deformation) | | | |
| Influencing factor 9 (flow rate of cooling liquid) | | | |
| Influencing factor 10 (damaged roller) | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Anozie, L.; Fink, B.; Friedrich, C.M.; Engels, C. Monitoring Flow-Forming Processes Using Design of Experiments and a Machine Learning Approach Based on Randomized-Supervised Time Series Forest and Recursive Feature Elimination. Sensors 2024, 24, 1527. https://doi.org/10.3390/s24051527
