INSANet: INtra-INter Spectral Attention Network for Effective Feature Fusion of Multispectral Pedestrian Detection

Abstract

1. Introduction

2. Related Work

2.1. Multispectral Pedestrian Detection

2.2. Attention-Based Fusion Strategies

2.3. Data Augmentations in Pedestrian Detection

3. Materials and Methods

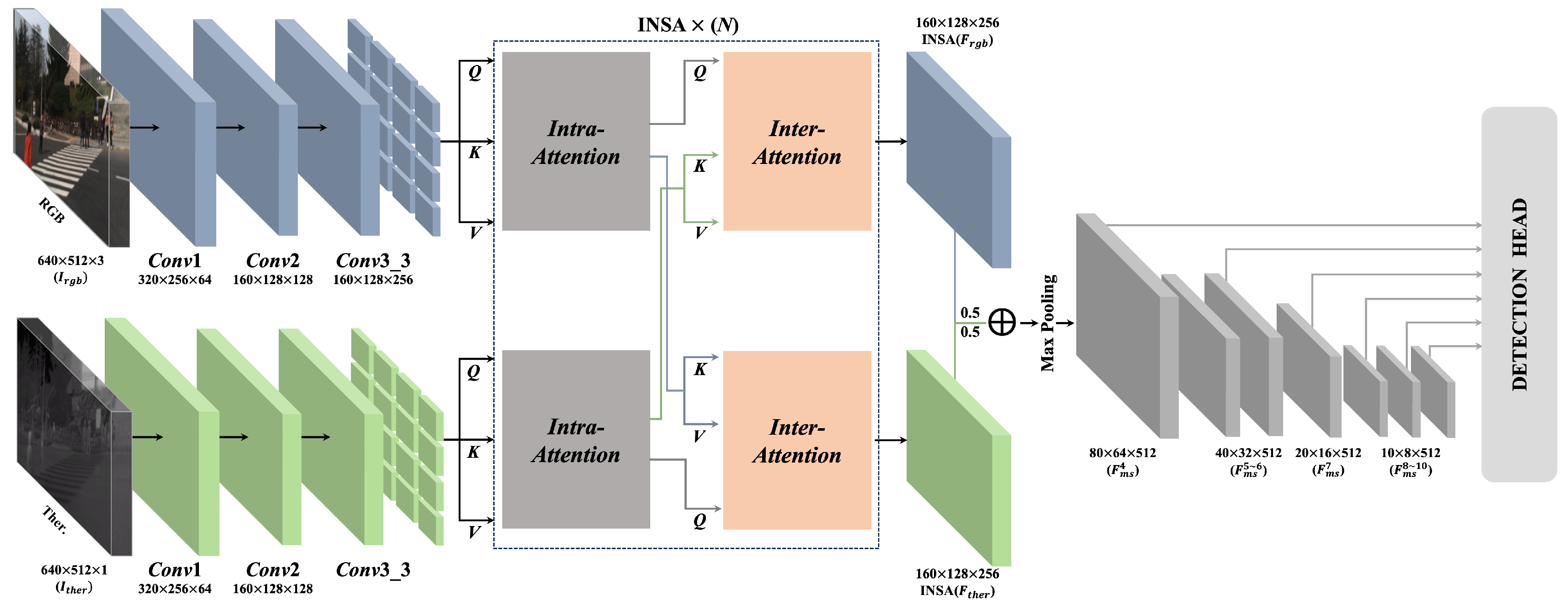

3.1. Model Architecture

3.1.1. Overall Framework

3.1.2. Attention-Based Fusion

Preliminary Transformer-Based Fusion

3.1.3. INtra-INter Spectral Attention

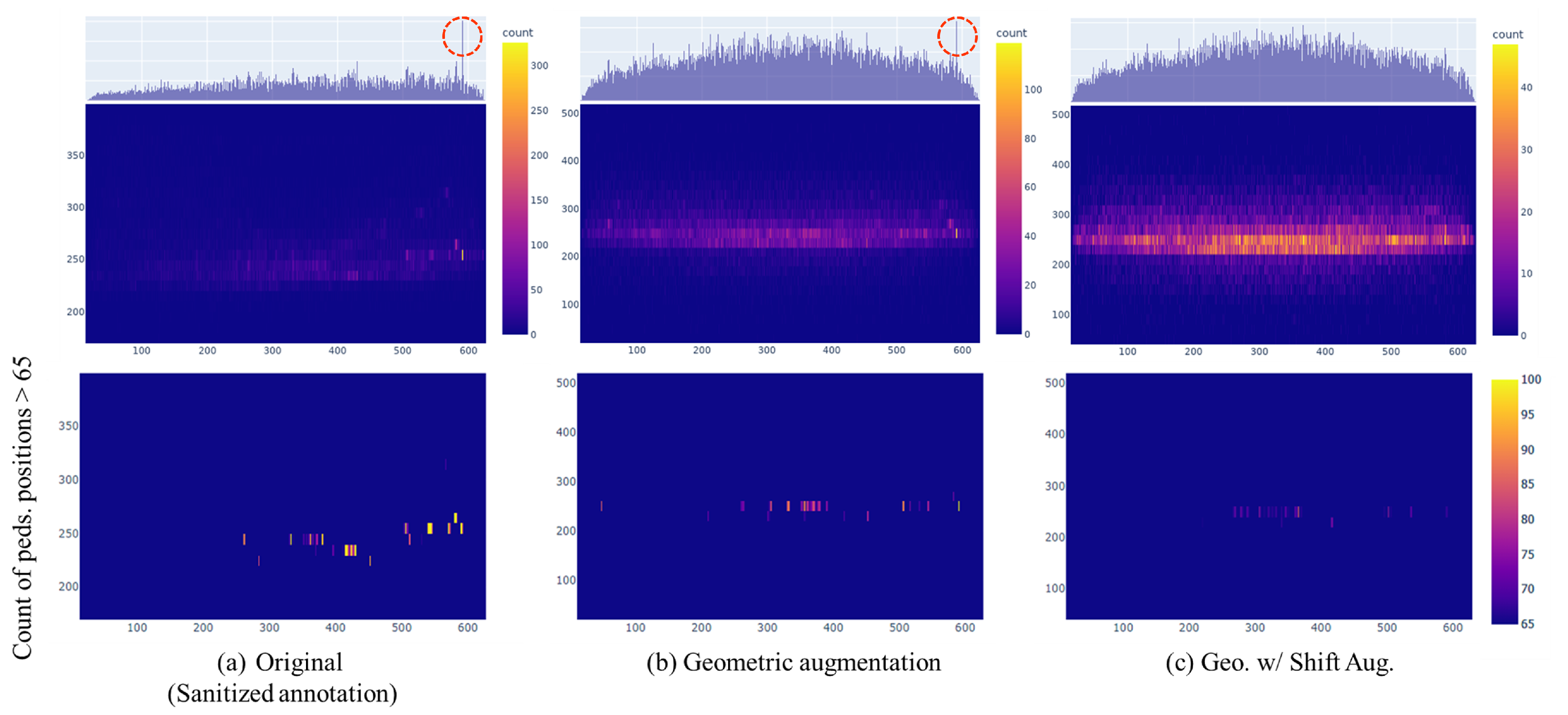
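The attention ablation in Section 4.5.1 compares intra-spectral (self) attention, inter-spectral (cross) attention, and both together. To illustrate the difference, the sketch below applies scaled dot-product attention [29] to two token sequences, one per spectrum. Intra attention lets each spectrum attend to its own tokens. Inter attention takes queries from one spectrum and keys/values from the other. This is a generic NumPy sketch under stated assumptions, not the INSANet module. The name `intra_inter_block`, the projection matrices `w_q`, `w_k`, `w_v` shared across spectra, the residual additions, and the single-head layout are all illustrative choices.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Single-head scaled dot-product attention: q is (Nq, d), k and v are (Nk, d)."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v


def intra_inter_block(rgb, thermal, w_q, w_k, w_v):
    """Hypothetical intra-then-inter spectral attention on (N, d) token sequences.

    Not the INSANet implementation. The (d, d) projections w_q, w_k, w_v are
    shared by both spectra here, which is an assumption made for brevity.
    """
    # Intra-spectral (self) attention: each spectrum attends only to its own tokens.
    rgb = rgb + attention(rgb @ w_q, rgb @ w_k, rgb @ w_v)
    thermal = thermal + attention(thermal @ w_q, thermal @ w_k, thermal @ w_v)
    # Inter-spectral (cross) attention: queries come from one spectrum,
    # keys and values from the other.
    rgb_out = rgb + attention(rgb @ w_q, thermal @ w_k, thermal @ w_v)
    thermal_out = thermal + attention(thermal @ w_q, rgb @ w_k, rgb @ w_v)
    return rgb_out, thermal_out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_tokens, dim = 16, 32
    rgb = rng.standard_normal((n_tokens, dim))
    thermal = rng.standard_normal((n_tokens, dim))
    w_q, w_k, w_v = (rng.standard_normal((dim, dim)) / np.sqrt(dim) for _ in range(3))
    rgb_out, thermal_out = intra_inter_block(rgb, thermal, w_q, w_k, w_v)
    print(rgb_out.shape, thermal_out.shape)  # (16, 32) (16, 32)
```

Stacking such blocks several times is one way to picture the "Iterations of Modules N" setting in Section 4.5.2. This sketch does not reproduce whether INSANet shares weights across iterations or how it merges the two output streams into one fused feature.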

3.2. Analysis of Geometric Data Augmentation
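Geometric augmentation of a multispectral pair has to transform both spectra and the annotations consistently. Otherwise the RGB and thermal images stop being registered. The sketch below shows one such transform, a paired random horizontal flip. It is an assumed example, since this text does not list the specific geometric augmentations the paper uses. The function name `paired_hflip`, the `[x1, y1, x2, y2]` pixel box format, and the probability argument `p` are illustrative choices.

```python
import numpy as np


def paired_hflip(rgb, thermal, boxes, p, rng):
    """Hypothetical paired horizontal flip for an aligned RGB-thermal pair.

    rgb, thermal: (H, W) or (H, W, C) arrays with the same width W.
    boxes: (N, 4) float array of [x1, y1, x2, y2] pixel coordinates.
    p: probability of flipping; rng: a numpy.random.Generator.
    """
    if rng.random() >= p:
        return rgb, thermal, boxes
    width = rgb.shape[1]
    # Flip both spectra with the same transform so they stay registered.
    rgb = rgb[:, ::-1].copy()
    thermal = thermal[:, ::-1].copy()
    # Mirror the x-coordinates: new x1 = W - old x2, new x2 = W - old x1.
    boxes = boxes.copy()
    boxes[:, [0, 2]] = width - boxes[:, [2, 0]]
    return rgb, thermal, boxes


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    rgb = np.arange(12).reshape(3, 4)
    thermal = rgb * 10
    boxes = np.array([[0.0, 0.0, 1.0, 2.0]])
    _, _, flipped = paired_hflip(rgb, thermal, boxes, p=1.0, rng=rng)
    print(flipped)  # [[3. 0. 4. 2.]]
```

A single random draw decides the flip for the whole pair. Drawing separately per spectrum would mirror one image without the other and break the pixel correspondence between them.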

4. Experiments

4.1. Experimental Setup

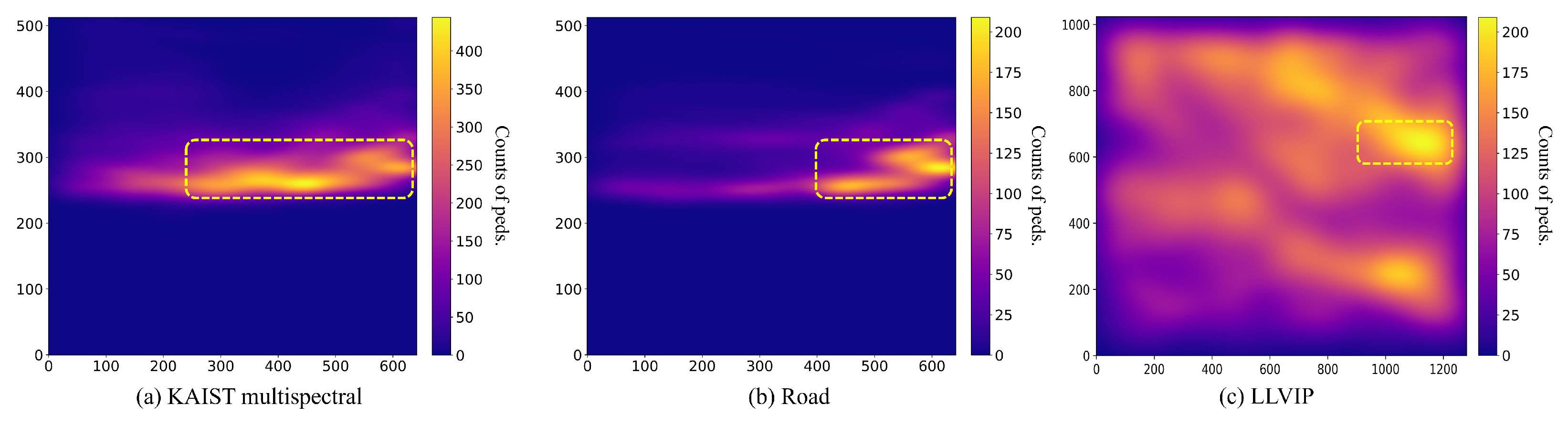

4.1.1. KAIST Dataset

4.1.2. LLVIP Dataset

4.2. Implementation Details

4.3. Evaluation Metric
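The result tables report miss rate (%). On KAIST, this is conventionally the log-average miss rate of Dollár et al. [44]. The miss rate is sampled at nine false-positives-per-image (FPPI) values spaced evenly in log space over [10^-2, 10^0], and those samples are averaged geometrically. The sketch below assumes that convention. It also assumes detections have already been matched to ground truth, with each detection marked as a true or false positive. The function names are hypothetical, and this is not the official evaluation script.

```python
import numpy as np


def mr_fppi_curve(scores, is_tp, num_gt, num_images):
    """Miss rate vs. FPPI as the score threshold is lowered.

    scores: detection confidences; is_tp: True where the detection matched a
    ground-truth pedestrian (matching is assumed to be done beforehand).
    """
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=bool)[order]
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(~tp)
    fppi = cum_fp / num_images
    miss_rate = 1.0 - cum_tp / num_gt
    return fppi, miss_rate


def log_average_miss_rate(fppi, miss_rate, num_points=9):
    """Geometric mean of the miss rate at FPPI references spaced in [1e-2, 1e0]."""
    fppi = np.asarray(fppi, dtype=float)
    miss_rate = np.asarray(miss_rate, dtype=float)
    refs = np.logspace(-2.0, 0.0, num_points)
    # If no operating point has FPPI at or below a reference, count a miss rate of 1.
    sampled = np.ones(num_points)
    for i, ref in enumerate(refs):
        below = np.flatnonzero(fppi <= ref)
        if below.size:
            # Operating point with the largest FPPI not exceeding the reference.
            sampled[i] = miss_rate[below[-1]]
    # Clamp to avoid log(0) when every pedestrian is found.
    return float(np.exp(np.mean(np.log(np.maximum(sampled, 1e-10)))))
```

Multiplying the returned value by 100 gives a percentage in the form used by the tables. Averaging in log space keeps the low-FPPI operating points, where the miss rate is highest, from being swamped by the high-FPPI end of the curve.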

4.4. Comparison with State-of-the-Art Multispectral Pedestrian Detection Methods

4.4.1. KAIST Dataset

4.4.2. LLVIP Dataset

4.5. Ablation Study

4.5.1. Effects of INtra-INter Spectral Attention

4.5.2. Hyperparameters in INSA

4.5.3. Impact of Geometric and Shift Augmentation on Performance

4.5.4. Hyperparameters in Shift Augmentation
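The shift-augmentation study varies a probability p. Setting p = 0 (no shifting) reproduces the 6.12% result obtained with geometric augmentation alone. The sketch below assumes that shift augmentation translates one spectrum (here, the thermal image) relative to the other by a random integer pixel offset, applied with probability p. The translation range `max_shift`, the zero-filling of the exposed border, and the function names are hypothetical choices, not details taken from the paper.

```python
import numpy as np


def shift_image(img, dy, dx):
    """Translate img by (dy, dx) pixels, filling the exposed border with zeros."""
    h, w = img.shape[:2]
    assert abs(dy) < h and abs(dx) < w, "shift must be smaller than the image"
    out = np.zeros_like(img)
    # Source/destination row ranges: positive dy moves content down.
    src_y = slice(0, h - dy) if dy >= 0 else slice(-dy, h)
    dst_y = slice(dy, h) if dy >= 0 else slice(0, h + dy)
    # Source/destination column ranges: positive dx moves content right.
    src_x = slice(0, w - dx) if dx >= 0 else slice(-dx, w)
    dst_x = slice(dx, w) if dx >= 0 else slice(0, w + dx)
    out[dst_y, dst_x] = img[src_y, src_x]
    return out


def random_thermal_shift(thermal, p, max_shift, rng):
    """With probability p, shift the thermal image by a random offset in [-max_shift, max_shift]."""
    if rng.random() >= p:
        return thermal, (0, 0)
    dy, dx = (int(v) for v in rng.integers(-max_shift, max_shift + 1, size=2))
    return shift_image(thermal, dy, dx), (dy, dx)
```

This sketch leaves the RGB image and the annotations untouched. If labels are defined per spectrum, a caller can use the returned offset to move the thermal-side boxes.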

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2020; pp. 11621–11631.

- Wang, X.; Wang, M.; Li, W. Scene-specific pedestrian detection for static video surveillance. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 361–374.

- Du, D.; Zhu, P.; Wen, L.; Bian, X.; Lin, H.; Hu, Q.; Peng, T.; Zheng, J.; Wang, X.; Zhang, Y.; et al. VisDrone-DET2019: The vision meets drone object detection in image challenge results. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Seoul, Republic of Korea, 27–28 October 2019.

- Hwang, S.; Park, J.; Kim, N.; Choi, Y.; So Kweon, I. Multispectral pedestrian detection: Benchmark dataset and baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1037–1045.

- Xu, D.; Ouyang, W.; Ricci, E.; Wang, X.; Sebe, N. Learning cross-modal deep representations for robust pedestrian detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5363–5371.

- Devaguptapu, C.; Akolekar, N.; M Sharma, M.; N Balasubramanian, V. Borrow from anywhere: Pseudo multi-modal object detection in thermal imagery. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 15–20 June 2019.

- Kieu, M.; Bagdanov, A.D.; Bertini, M.; Del Bimbo, A. Task-conditioned domain adaptation for pedestrian detection in thermal imagery. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 546–562.

- González, A.; Fang, Z.; Socarras, Y.; Serrat, J.; Vázquez, D.; Xu, J.; López, A.M. Pedestrian detection at day/night time with visible and FIR cameras: A comparison. Sensors 2016, 16, 820.

- Jia, X.; Zhu, C.; Li, M.; Tang, W.; Zhou, W. LLVIP: A visible-infrared paired dataset for low-light vision. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Virtual, 10–17 October 2021; pp. 3496–3504.

- Zhang, L.; Zhu, X.; Chen, X.; Yang, X.; Lei, Z.; Liu, Z. Weakly aligned cross-modal learning for multispectral pedestrian detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5127–5137.

- Zhang, L.; Liu, Z.; Zhang, S.; Yang, X.; Qiao, H.; Huang, K.; Hussain, A. Cross-modality interactive attention network for multispectral pedestrian detection. Inf. Fusion 2019, 50, 20–29.

- Zhang, H.; Fromont, E.; Lefèvre, S.; Avignon, B. Guided attentive feature fusion for multispectral pedestrian detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Virtual, 5–9 January 2021; pp. 72–80.

- Zheng, Y.; Izzat, I.H.; Ziaee, S. GFD-SSD: Gated fusion double SSD for multispectral pedestrian detection. arXiv 2019, arXiv:1903.06999.

- Kim, J.; Kim, H.; Kim, T.; Kim, N.; Choi, Y. MLPD: Multi-Label Pedestrian Detector in Multispectral Domain. IEEE Robot. Autom. Lett. 2021, 6, 7846–7853.

- Li, C.; Song, D.; Tong, R.; Tang, M. Multispectral pedestrian detection via simultaneous detection and segmentation. In Proceedings of the British Machine Vision Conference (BMVC), Newcastle, UK, 3–6 September 2018; pp. 225.1–225.12.

- Zhang, H.; Fromont, E.; Lefevre, S.; Avignon, B. Multispectral fusion for object detection with cyclic fuse-and-refine blocks. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Virtual, 25–28 October 2020; pp. 276–280.

- Zhou, K.; Chen, L.; Cao, X. Improving multispectral pedestrian detection by addressing modality imbalance problems. In Proceedings of the European Conference on Computer Vision (ECCV), Springer, Glasgow, UK, 23–28 August 2020; pp. 787–803.

- Qingyun, F.; Dapeng, H.; Zhaokui, W. Cross-modality fusion transformer for multispectral object detection. arXiv 2021, arXiv:2111.00273.

- Shen, J.; Chen, Y.; Liu, Y.; Zuo, X.; Fan, H.; Yang, W. ICAFusion: Iterative cross-attention guided feature fusion for multispectral object detection. Pattern Recognit. 2024, 145, 109913.

- Xu, Z.; Zhuang, J.; Liu, Q.; Zhou, J.; Peng, S. Benchmarking a large-scale FIR dataset for on-road pedestrian detection. Infrared Phys. Technol. 2019, 96, 199–208.

- Tumas, P.; Nowosielski, A.; Serackis, A. Pedestrian detection in severe weather conditions. IEEE Access 2020, 8, 62775–62784.

- Liu, J.; Zhang, S.; Wang, S.; Metaxas, D.N. Multispectral deep neural networks for pedestrian detection. arXiv 2016, arXiv:1611.02644.

- Yang, X.; Qian, Y.; Zhu, H.; Wang, C.; Yang, M. BAANet: Learning bi-directional adaptive attention gates for multispectral pedestrian detection. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 2920–2926.

- Li, C.; Song, D.; Tong, R.; Tang, M. Illumination-aware faster R-CNN for robust multispectral pedestrian detection. Pattern Recognit. 2019, 85, 161–171.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Li, X.; Wang, W.; Hu, X.; Yang, J. Selective kernel networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 510–519.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Zhu, Y.; Sun, X.; Wang, M.; Huang, H. Multi-Modal Feature Pyramid Transformer for RGB-Infrared Object Detection. IEEE Trans. Intell. Transp. Syst. 2023, 24, 9984–9995.

- Yun, S.; Han, D.; Oh, S.J.; Chun, S.; Choe, J.; Yoo, Y. CutMix: Regularization strategy to train strong classifiers with localizable features. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6023–6032.

- Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond empirical risk minimization. arXiv 2017, arXiv:1710.09412.

- Cygert, S.; Czyżewski, A. Toward robust pedestrian detection with data augmentation. IEEE Access 2020, 8, 136674–136683.

- Chen, Z.; Ouyang, W.; Liu, T.; Tao, D. A shape transformation-based dataset augmentation framework for pedestrian detection. Int. J. Comput. Vis. 2021, 129, 1121–1138.

- Chi, C.; Zhang, S.; Xing, J.; Lei, Z.; Li, S.Z.; Zou, X. PedHunter: Occlusion robust pedestrian detector in crowded scenes. Proc. AAAI Conf. Artif. Intell. 2020, 34, 10639–10646.

- Tang, Y.; Li, B.; Liu, M.; Chen, B.; Wang, Y.; Ouyang, W. AutoPedestrian: An automatic data augmentation and loss function search scheme for pedestrian detection. IEEE Trans. Image Process. 2021, 30, 8483–8496.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Khan, A.H.; Nawaz, M.S.; Dengel, A. Localized Semantic Feature Mixers for Efficient Pedestrian Detection in Autonomous Driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 5476–5485.

- Tumas, P.; Serackis, A.; Nowosielski, A. Augmentation of severe weather impact to far-infrared sensor images to improve pedestrian detection system. Electronics 2021, 10, 934.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot MultiBox detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

- Dollar, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian detection: An evaluation of the state of the art. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 743–761.

- Dollár, P.; Appel, R.; Belongie, S.; Perona, P. Fast feature pyramids for object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1532–1545.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Yao, H.; Zhang, Y.; Jian, H.; Zhang, L.; Cheng, R. Nighttime pedestrian detection based on Fore-Background contrast learning. Knowl.-Based Syst. 2023, 275, 110719.

Miss rate (%) on the KAIST dataset.

| Method | ALL | DAY | NIGHT | Campus | Road | Downtown |
|---|---|---|---|---|---|---|
| ACF [45] | 47.32 | 42.57 | 56.17 | 16.50 | 6.68 | 18.45 |
| Halfway Fusion [23] | 25.75 | 24.88 | 26.59 | - | - | - |
| MSDS-RCNN [16] | 11.34 | 10.53 | 12.94 | 11.26 | 3.60 | 14.80 |
| AR-CNN [11] | 9.34 | 9.94 | 8.38 | 11.73 | 3.38 | 11.73 |
| | 8.31 | 8.36 | 8.27 | 10.80 | 3.74 | 11.00 |
| MBNet [18] | 8.13 | 8.28 | 7.86 | 10.65 | 4.25 | 9.18 |
| MLPD [15] | 7.58 | 7.95 | 6.95 | 9.21 | 5.04 | 9.32 |
| ICAFusion [20] | 7.17 | 6.82 | 7.85 | - | - | - |
| CFT [19] | 6.75 | 7.76 | 4.59 | 9.45 | 3.47 | 8.72 |
| GAFF [13] | 6.48 | 8.35 | 3.46 | 7.95 | 3.70 | 8.35 |
| CFR [17] | 5.96 | 8.35 | 3.46 | 7.45 | 4.10 | 7.25 |
| INSANet (Ours) | 6.12 | 7.19 | 4.37 | 9.05 | 3.24 | 7.25 |
| INSANet (Ours) + Shift | 5.50 | 6.29 | 4.20 | 7.64 | 3.06 | 6.72 |

Miss rate (%) on the LLVIP dataset.

| Method | Spectral | Miss Rate (%) |
|---|---|---|
| Yolov3 [46] | visible | 37.70 |
| | infrared | 19.73 |
| Yolov5 [10] | visible | 22.59 |
| | infrared | 10.66 |
| FBCNet [47] | visible | 19.78 |
| | infrared | 7.98 |
| MLPD [15] | multi | 6.01 |
| CFT [19] | multi | 5.40 |
| | multi | 5.64 |
| | multi | 4.43 |

| Intra (Self) Attention | Inter (Cross) Attention | Miss Rate (%), ALL |
|---|---|---|
| - | - | 7.50 |
| √ | - | 6.81 |
| - | √ | 6.66 |
| √ | √ | 6.12 |

| Iterations of Modules N | MR (%) |
|---|---|
| 1 | 6.16 |
| 2 | 6.12 |
| 4 | 6.20 |

| Number of Patches | MR (%) |
|---|---|
| 8 | 6.61 |
| 16 | 6.12 |
| 32 | 6.88 |

Miss rate (%) with and without geometric (Geo) and shift augmentation.

| Method | Geo | Shift | ALL | DAY | NIGHT | Campus | Road | Downtown |
|---|---|---|---|---|---|---|---|---|
| Baseline | - | - | 11.50 | 13.98 | 6.83 | 14.82 | 8.22 | 13.73 |
| | - | √ | 10.58 | 12.29 | 7.11 | 13.67 | 3.17 | 13.59 |
| | √ | - | 7.50 | 8.84 | 4.70 | 11.06 | 1.93 | 9.18 |
| | √ | √ | 7.03 | 7.85 | 5.38 | 9.40 | 3.29 | 8.81 |
| INSA (Ours) | - | - | 10.11 | 11.18 | 7.86 | 12.74 | 6.04 | 12.36 |
| | - | √ | 9.30 | 10.42 | 7.35 | 11.89 | 2.92 | 12.24 |
| | √ | - | 6.12 | 7.19 | 4.37 | 9.05 | 3.24 | 7.25 |
| | √ | √ | 5.50 | 6.29 | 4.20 | 7.64 | 3.06 | 6.72 |

Miss rate (%) in the shift augmentation hyperparameter study.

| p | | | | 0 | | | |
|---|---|---|---|---|---|---|---|
| 1.0 | 7.26 | 6.96 | 6.87 | 6.12 (p = 0) | 7.18 | 7.49 | 7.57 |
| 0.7 | 6.71 | 6.07 | 6.63 | | 6.80 | 6.70 | 7.61 |
| 0.5 | 6.76 | 5.85 | 5.93 | | 7.00 | 7.21 | 7.16 |
| 0.3 | 6.33 | 5.50 | 5.95 | | 6.27 | 6.22 | 6.93 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, S.; Kim, T.; Shin, J.; Kim, N.; Choi, Y. INSANet: INtra-INter Spectral Attention Network for Effective Feature Fusion of Multispectral Pedestrian Detection. Sensors 2024, 24, 1168. https://doi.org/10.3390/s24041168

Lee S, Kim T, Shin J, Kim N, Choi Y. INSANet: INtra-INter Spectral Attention Network for Effective Feature Fusion of Multispectral Pedestrian Detection. Sensors. 2024; 24(4):1168. https://doi.org/10.3390/s24041168

Chicago/Turabian Style: Lee, Sangin, Taejoo Kim, Jeongmin Shin, Namil Kim, and Yukyung Choi. 2024. "INSANet: INtra-INter Spectral Attention Network for Effective Feature Fusion of Multispectral Pedestrian Detection" Sensors 24, no. 4: 1168. https://doi.org/10.3390/s24041168

APA Style: Lee, S., Kim, T., Shin, J., Kim, N., & Choi, Y. (2024). INSANet: INtra-INter Spectral Attention Network for Effective Feature Fusion of Multispectral Pedestrian Detection. Sensors, 24(4), 1168. https://doi.org/10.3390/s24041168