Thermal Cameras for Continuous and Contactless Respiration Monitoring

Abstract

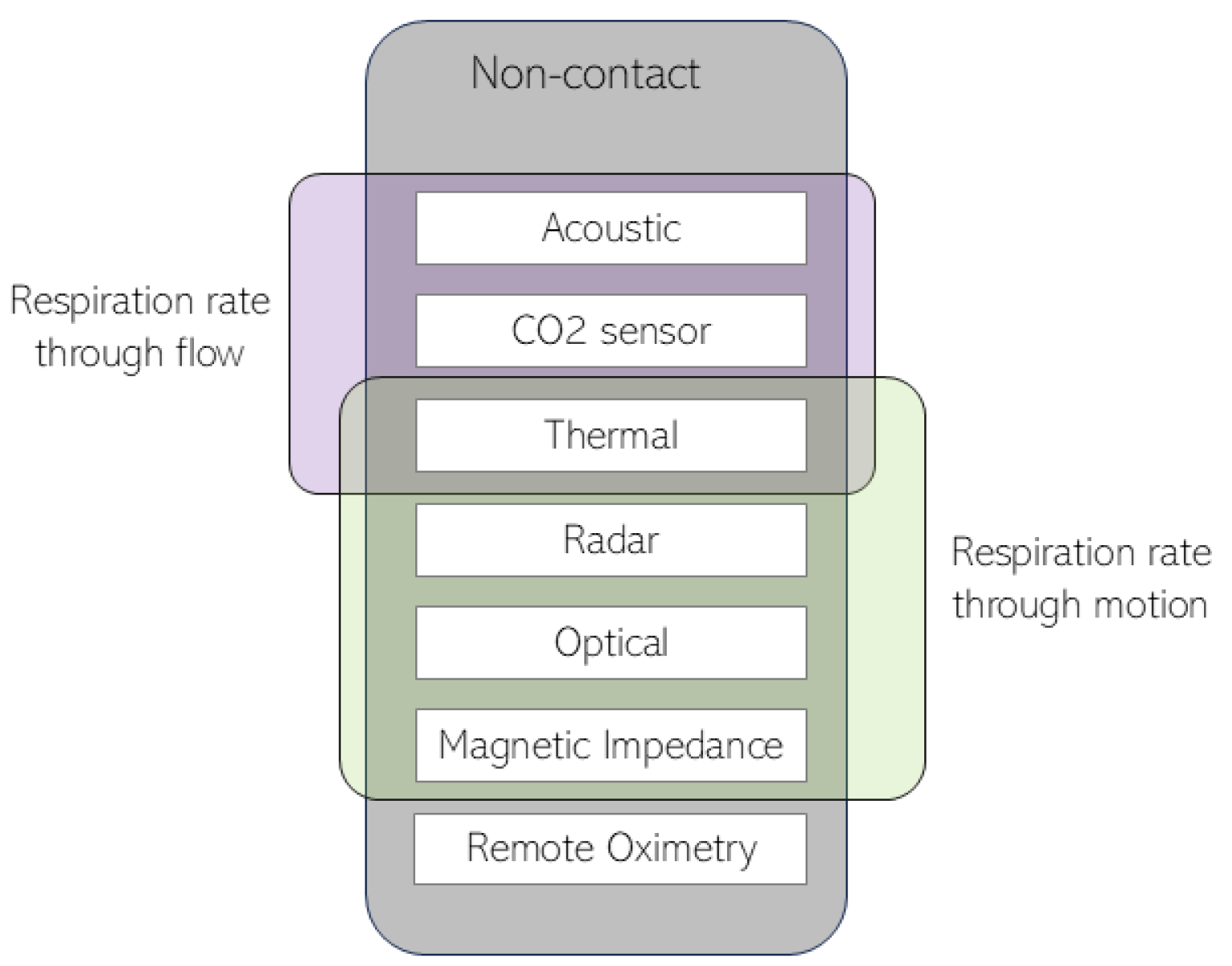

1. Introduction

2. Remote Thermography

2.1. Data Acquisition

| Study | Participants | Environment | Camera(s) (Type, Resolution) | Reference | Outcome | Flow/Motion Separation | Performance |

|---|---|---|---|---|---|---|---|

| Murthy et al. (2005/2006) [39,47] | 3 healthy adults | Lab | MWIR, (640 × 512) | Respiration belt | RR | No | accuracy = 96.43% |

| Murthy et al. (2009) [40] | 14 healthy adults 13 adults with OSA | Lab | MWIR, (640 × 512) | Polysomnography | Airflow abnormalities | No | kappa = 0.80–0.92 |

| Fei and Pavlidis et al. (2010) [41] | 20 healthy adults | Lab | MWIR, (640 × 512) | Respiration belt | RR | No | CAND = 98.27% |

| Al-Khalidi et al. (2010) [42] | - | Lab | LWIR, (320 × 240) | - | ROI for RR | No | - |

| Abbas et al. (2011) [43] | 7 infants | NICU | LWIR, (1024 × 768) | Chest impedance ECG monitor | RR | No | - |

| Lewis et al. (2011) [44] | 12 healthy adults 7 healthy adults | Lab | LWIR, (320 × 240) MWIR, (640 × 512) | Plethysmography | RR, IBI, rTV | No | correlation = 0.90–0.98 correlation = 0.90–0.95 |

| Goldman et al. (2012) [45] | 17 children | PEDS | LWIR, (320 × 240) | Nasal pressure Manual annotations | RR, breathing synchronicity | Yes | alpha = 0.976 |

| Chauvin et al. (2014) [46] | 15 healthy adults | Lab | LWIR, (640 × 480) | Respiration belt | RR | No | tp2 = 37–100% |

| Pereira et al. (2015) [48] | 11 healthy adults | Lab | LWIR, (1024 × 768) | Piezo-plethysmography | RR/IBI | No | correlation = 0.940–0.974 MAE = 0.33–0.96 bpm |

| Ruminski et al. (2016) [49,50] | 16 healthy adults 12 healthy adults | Lab | LWIR, (320 × 240) | Respiration belt | RR, apneas | No | MAE = 0.415–1.291 bpm correlation = 0.912–0.953 |

| Pereira et al. (2017) [51] | 12 healthy adults | Lab | LWIR, (1024 × 768) | Piezo-plethysmography | RR | Yes | correlation = 0.95–0.98 RMSE = 0.28–3.45 bpm |

| Ruminski et al. (2017) [52] | 10 healthy adults | Lab | LWIR, (60 × 80) | Respiration belt | RR | No | MAE = 0.236–0.350 bpm |

| Pereira et al. (2018) [53] | 20 healthy adults | Lab | MWIR, (1024 × 768) | Piezo-plethysmography | RR (and HR) | No | RMSE = 0.71 ± 0.30 bpm |

| Pereira et al. (2018) [54] | 12 healthy adults 9 newborns | Lab NICU | LWIR, (1024 × 768) | Piezo-plethysmography ECG | RR | No | RMSE = 0.31–3.27 bpm RMSE = 4.15 bpm |

| Cho et al. (2017) [55] | 23 healthy adults | Lab/Outdoors | LWIR, (160 × 120) | Respiration belt | RR/IBI | No | correlation = 0.9987 RMSE = 0.459 bpm |

| Cho et al. (2017) [56] | 8 healthy adults | Lab | LWIR, (120 × 120) | Instructed protocol | stress level based on RR | No | accuracy = 84.59%/56.52% |

| Hochhausen et al. (2018) [57] | 28 adults | PACU | LWIR, (1024 × 768) | Chest impedance ECG monitor | RR | No | correlation = 0.607–0.849 |

| Chan et al. (2019) [58] | 27 adults | ICU | LWIR, (382 × 288) | Chest impedance Manual annotations | RR | No | mean bias = −0.667/−1.000 bpm correlation = 0.796–0.943 |

| Jakkaew et al. (2020) [59] | 16 healthy adults | Lab | LWIR, (640 × 480) | Respiration belt | RR | Yes | RMSE = 1.82 ± 0.75 bpm |

| Jagadev et al. (2020/2022) [60,61] | 50 healthy adults | Lab | LWIR, (320 × 240) | Manual annotations | RR | No | [60] precision = 98.76% sensitivity = 99.07% [61] accuracy = 98.83–99.5% |

| Lorato et al. (2020) [62] | 7 premature newborns | NICU | LWIR, (60 × 80) | Chest impedance | RR | No | MAE = 2.07 bpm |

| Lorato et al. (2021) [63] | 9 premature newborns | NICU | LWIR, (60 × 80) | Chest impedance | Apneas | Yes | accuracy = 83.20–94.35% |

| Kwon et al. (2021) [64] | 101 adults | PACU | LWIR, (320 × 240) | Manual annotations Chest impedance | RR | No | correlation = 0.95 |

| Lyra et al. (2021) [65] | 26 adults | ICU | LWIR, (382 × 288) | Chest impedance | RR | No | MAE = 2.69 bpm |

| Takahashi et al. (2021) [66] | 7 adults | Lab | LWIR, (320 × 256) | Instructed protocol | RR | No | MAE = 0.66 bpm |

| Shu et al. (2022) [67] | 8 healthy adults | Lab | LWIR, (320 × 240) | PPG | RR | No | error < 2% |
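
The performance column above mixes several agreement measures (MAE, RMSE, mean bias, correlation) between the camera-based RR and the reference RR. As a reading aid, the following minimal Python sketch shows how these four measures are commonly computed from paired values in breaths per minute. The function name `agreement_metrics` and the sample values are hypothetical and are not taken from any of the cited studies; individual studies may define their metrics differently (for example, per window or per subject).

```python
import math


def agreement_metrics(estimated, reference):
    """Return MAE, RMSE, mean bias and Pearson correlation for paired RR values (bpm).

    Hypothetical helper for illustration only; not the evaluation code of any cited study.
    """
    if len(estimated) != len(reference) or len(estimated) < 2:
        raise ValueError("need two equally long sequences with at least 2 samples")
    n = len(estimated)
    errors = [e - r for e, r in zip(estimated, reference)]

    mae = sum(abs(d) for d in errors) / n               # mean absolute error
    rmse = math.sqrt(sum(d * d for d in errors) / n)    # root-mean-square error
    bias = sum(errors) / n                              # mean bias (estimated minus reference)

    mean_e = sum(estimated) / n
    mean_r = sum(reference) / n
    cov = sum((e - mean_e) * (r - mean_r) for e, r in zip(estimated, reference))
    var_e = sum((e - mean_e) ** 2 for e in estimated)
    var_r = sum((r - mean_r) ** 2 for r in reference)
    denom = math.sqrt(var_e * var_r)
    # Pearson correlation is undefined when either series is constant.
    corr = cov / denom if denom > 0 else float("nan")

    return {"mae": mae, "rmse": rmse, "bias": bias, "correlation": corr}


# Made-up example values (bpm), not measured data.
metrics = agreement_metrics([12, 15, 18, 21], [13, 15, 17, 22])
```

Because MAE and RMSE are in bpm while correlation is unitless, a high correlation does not by itself imply a small error, which is one reason the rows above are not directly comparable.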

2.2. Defining and Tracking the Region of Interest (ROI)

| Study | ROI Definition | Body Area | Tracking | Method |

|---|---|---|---|---|

| Murthy et al. (2006) [39] | Manual | Nostrils/mouth | Yes | ROI adjusted manually; Tracking assumes the relative position towards the tip of the nose |

| Murthy et al. (2009) [40] | Automatic | Nostrils | Yes | ROI segmentation based on integral projections and an edge detector; Coalitional tracking [71] |

| Fei and Pavlidis et al. (2010) [41] | Automatic | Nostrils | Yes | ROI detection based on vertical and horizontal gradients; Coalitional tracking [71] |

| Al-Khalidi et al. (2010) [42] | Automatic | n.a. * | Yes | Two methods for ROI detection based on low pixel intensity; Tracking the circle around the ROI center |

| Abbas et al. (2011) [43] | Manual | Nostrils | No | - |

| Lewis et al. (2011) [44] | Manual | Nostrils | Yes | Manual selection of first ROI; PBVD tracking algorithm [72] |

| Goldman et al. (2012) [45] | Manual | Nostrils, thorax, and abdomen | No | Manual selection of ROIs; Frames differencing |

| Chauvin et al. (2014) [46] | Manual | Nose/mouth | Yes | TLD algorithm: Tracking based on Lucas–Kanade algorithm [73]; Detector (if needed to reinitialize the tracker); Look at Pose to adjust pan–tilt unit |

| Pereira et al. (2015/2018) [48,53] | Automatic | Nose | Yes | ROI obtained through a sequence of thresholding, temperature projections, and edge detections; Tracking using the least-squares approach [74] |

| Ruminski et al. (2016) [49,50] | Manual | Nostrils/nose | No | |

| Pereira et al. (2017) [51] | Automatic | Nose, mouth and shoulders | Yes | |

| Ruminski et al. (2017) [52] | Manual | Nostrils/mouth | No | ROI selected should be big enough to account for small movements |

| Pereira et al. (2018) [54] | Automatic | n.a. * | No | “Black box” approach: a grid is laid over the video and each grid cell is an ROI |

| Cho et al. (2017) [55,56] | Automatic | Nostrils | Yes | Pre-processing: optimal quantization; Thermal gradient map and gradient through Kalal et al.’s algorithm [75]; Lucas-Kanade’s disparity-based tracker [73]; ROI update |

| Hochhausen et al. (2018) [57] | Manual | Nose | Yes | Tracking using Mei et al.’s algorithm [74] |

| Chan et al. (2019) [58] | Manual | Nostrils | Yes | Tracking using Kanade–Lucas–Tomasi tracker [73,76] |

| Jakkaew et al. (2020) [59] | Automatic | n.a. * | No | Noise removal with a Gaussian filter; ROI defined either as the square around the highest-intensity pixel or as the largest area above a certain threshold |

| Jagadev et al. (2020) [60] | Manual | Nostrils | Yes | Tracking using the algorithm proposed by Kazemi et al. [77] |

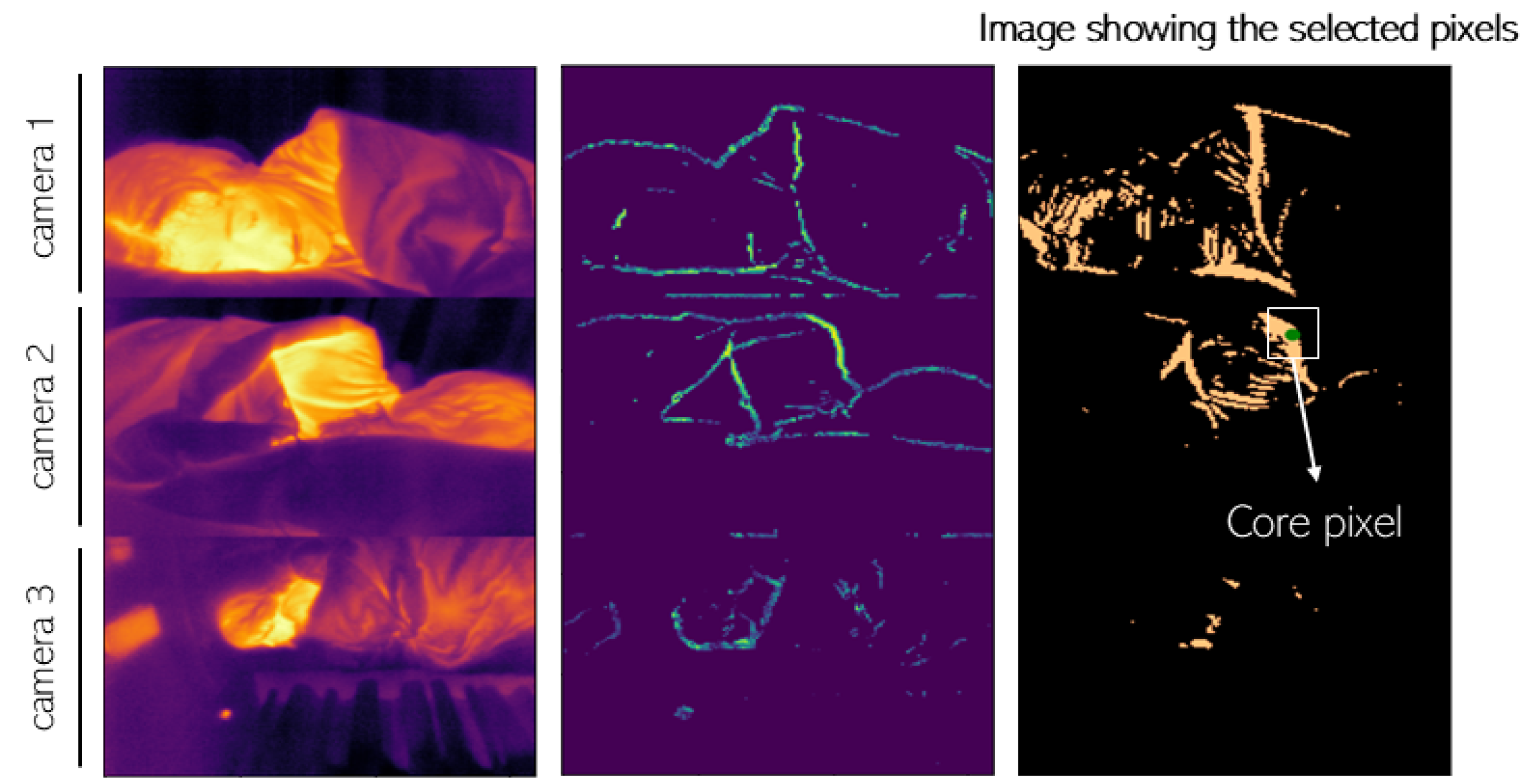

| Lorato et al. (2020) [62] | Automatic | n.a. * | No | Combination of three features (pseudo-periodicity, RRclusters, and gradient); Core pixel defined as the highest value in the combined matrix; ROI defined as a region with high correlation to the core pixel |

| Lorato et al. (2021) [63] | Automatic | n.a. * | No | Same method as in [62] with two more features (covariance and flow map) used to separate the motion from flow ROI |

| Kwon et al. (2021) [64] | Manual | Nose | No | - |

| Lyra et al. (2021) [65] | Automatic | Head and chest | Yes | Deep learning method: YOLOv4-Tiny object detector to extract the ROI continuously [78] |

| Takahashi et al. (2021) [66] | Automatic | Face | No | Deep learning method: YOLOv3 to detect the ROI; The ROI is divided into subregions [79] |

| Jagadev et al. (2022) [61] | Automatic | Nostrils | Yes | Deep learning method (ResNet50) for face detection; Tracking using the algorithm proposed by Kazemi et al. [77] |

| Shu et al. (2022) [67] | Automatic | Nostrils | Yes | Deep learning method: YOLOv3 to detect and track the ROI |
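
One of the simplest automatic ROI definitions listed above places a square around the highest-intensity pixel of a frame. The sketch below illustrates only that idea in plain Python, under several assumptions: the frame is a list of rows of temperature or intensity values, the Gaussian noise-removal step mentioned in the table is omitted, and the names `square_roi_around_peak`, `roi_mean`, and `half_size` are hypothetical. It is not the implementation of any cited study.

```python
def square_roi_around_peak(frame, half_size):
    """Return (top, left, bottom, right) of a square ROI centred on the hottest pixel.

    Bounds are half-open (bottom and right are exclusive) and clipped to the frame.
    Assumes `frame` is a non-empty list of equally long rows; no smoothing is applied.
    """
    rows, cols = len(frame), len(frame[0])
    # Locate the pixel with the highest intensity (first one found in case of ties).
    peak_r, peak_c = max(
        ((r, c) for r in range(rows) for c in range(cols)),
        key=lambda rc: frame[rc[0]][rc[1]],
    )
    top = max(0, peak_r - half_size)
    left = max(0, peak_c - half_size)
    bottom = min(rows, peak_r + half_size + 1)
    right = min(cols, peak_c + half_size + 1)
    return top, left, bottom, right


def roi_mean(frame, bounds):
    """Average intensity inside the ROI, i.e. one sample of a breathing signal."""
    top, left, bottom, right = bounds
    values = [frame[r][c] for r in range(top, bottom) for c in range(left, right)]
    return sum(values) / len(values)


# Toy 5 x 5 frame with a single warm spot; values are made up.
frame = [
    [0, 0, 0, 0, 0],
    [0, 1, 2, 3, 2],
    [0, 1, 3, 9, 3],
    [0, 1, 2, 3, 2],
    [0, 0, 0, 0, 0],
]
bounds = square_roi_around_peak(frame, half_size=1)
sample = roi_mean(frame, bounds)
```

A fixed ROI of this kind has no tracking, so, as noted for the manual approaches in the table, it needs to be large enough to tolerate small movements.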

2.3. Breathing Signal Extraction and Respiration Rate Estimation

| Study | Breathing Signal Extraction and RR Estimation Methods |

|---|---|

| Murthy et al. (2006) [39] | - Breathing waveform as the number of pixels and their temperature |

| Murthy et al. (2009) [40] | - Respiration signal as the averaged intensity of ROI - Wavelet analysis CWT |

| Fei and Pavlidis et al. (2010) [41] | - Respiration signal as the averaged intensity of ROI - Wavelet analysis CWT |

| Al-Khalidi et al. (2010) [42] | - Respiration signal as the averaged intensity of ROI |

| Abbas et al. (2011) [43] | - Respiration signal as the averaged intensity of ROI - Wavelet analysis CWT (Daubechies wavelet) |

| Lewis et al. (2011) [44] | - Thermal signal as the averaged intensity of each nostril - Respiration rate measured through the spectral density distribution - Tidal volume measured through thermal signal integration - Dynamic filtering |

| Goldman et al. (2012) [45] | - Respiration signal as the difference between positive and negative areas - Phase correction and filtering (Chebyshev) - Fourier transform to obtain the RR |

| Chauvin et al. (2014) [46] | - Gradient to mask the ROI - Breathing waveform as the average intensity within the mask - Hanning window and Fourier transform to obtain the RR |

| Pereira et al. (2015/2018) [48,53] Chan et al. (2019) [58] Hochhausen et al. (2018) [57] Kwon et al. (2021) [64] | - Respiration signal as the average intensity of the ROI - Filtering: Butterworth - IBI computed with the Brüser et al. algorithm [85]: three estimators combined with a Bayesian function |

| Ruminski et al. (2016) [49,50] | - Respiration signal as the averaged intensity of the ROI - Signal normalized and filtered (moving average and Butterworth filters) - RR extracted using four different estimators |

| Pereira et al. (2017) [51] | - Respiration signal of the nose and mouth ROIs as the average intensity - Respiration signal of the shoulders as the vertical movement - Fourier transform to extract RR - SQI computation based on four features of the power spectrum - Fusion algorithm to combine all regions |

| Ruminski et al. (2017) [52] | - Respiration signal computed using a skewness operator - Filtering: Butterworth - RR extracted using three different estimators |

| Cho et al. (2017) [55] | - Respiration signal computed through a thermal voxel-based method - RR determined through short-time power spectral density: Fourier transform of the short-time autocorrelation function |

| Cho et al. (2017) [56] | - Computation of the 2D spectrogram - Data augmentation - CNN to classify different stress levels |

| Pereira et al. (2018) [54] | - For each grid cell: - Hamming window, Fourier transform, normalization, and filtering - SQI computation based on four features of the power spectrum - Selection of cells with SQI > 0.75 - RR defined using three different fusion techniques |

| Jakkaew et al. (2020) [59] | - Respiration signal as the averaged intensity of the ROI - Filtering: Butterworth, Savitzky–Golay, and moving average - RR computed through the number of peaks in the signal |

| Jagadev et al. (2020) [60] | - Respiration signal as the averaged intensity of the ROI - Testing and comparing different filters - Breath detection algorithm to extract the RR |

| Lorato et al. (2020/2021) [62,63] | - Respiration signal as the averaged intensity of the ROI - Filtering: Butterworth - RR as the predominant frequency |

| Lyra et al. (2021) [65] | - Optical flow algorithm [86] to detect pixel intensity changes - RR as the frequency of the changes |

| Takahashi et al. (2021) [66] | - For each subregion: - Frequency analysis: PSD - Respiratory likelihood index as a weighted score of the PSD - RR as the frequency with the highest index |

| Jagadev et al. (2022) [61] | - Machine learning algorithm (BSCA) to automatically obtain the RR |

| Shu et al. (2022) [67] | - Respiration signal as the average intensity of the ROI - Filtering: Butterworth - RR as the predominant frequency |
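
Several rows above share the same outline: average the ROI intensity per frame, filter the resulting signal, and take the predominant frequency as the RR. The sketch below illustrates the last step in plain Python under stated assumptions. The function name `respiration_rate_bpm` and the band limits `f_min` = 0.1 Hz and `f_max` = 1.0 Hz are hypothetical choices, not values from the cited studies, and the Butterworth filter is replaced here by simply restricting the search to that frequency band of a direct discrete Fourier transform. In practice a numerical library would typically be used instead of the explicit loops.

```python
import math


def respiration_rate_bpm(signal, fs, f_min=0.1, f_max=1.0):
    """Estimate RR (breaths per minute) as the predominant frequency of a breathing signal.

    `signal` holds one ROI-average value per frame and `fs` is the frame rate in Hz.
    The band limits are illustrative assumptions; band selection stands in for filtering.
    """
    n = len(signal)
    if n < 2:
        raise ValueError("signal too short")
    mean = sum(signal) / n
    centered = [s - mean for s in signal]  # remove the constant temperature offset

    best_freq, best_power = None, -1.0
    for k in range(1, n // 2 + 1):
        freq = k * fs / n  # frequency of DFT bin k in Hz
        if freq < f_min or freq > f_max:
            continue
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power

    if best_freq is None:
        raise ValueError("no DFT bin falls inside the requested band")
    return best_freq * 60.0


# Synthetic 60 s signal at 10 frames/s: 0.25 Hz breathing plus a small 2 Hz disturbance.
fs = 10.0
synthetic = [
    30.0 + 0.5 * math.sin(2 * math.pi * 0.25 * i / fs) + 0.1 * math.sin(2 * math.pi * 2.0 * i / fs)
    for i in range(600)
]
rr = respiration_rate_bpm(synthetic, fs)
```

The frequency resolution of this estimate is `fs / n` Hz, so a 60 s window resolves the RR in steps of 1 bpm; shorter windows react faster but resolve the rate more coarsely.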

3. Applications

Apnea Detection

4. Limitations and Challenges

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Di Fiore, J.M. Neonatal cardiorespiratory monitoring techniques. In Seminars in Neonatology; Elsevier: Amsterdam, The Netherlands, 2004; Volume 9, pp. 195–203.

2. Grossman, P. Respiration, stress, and cardiovascular function. Psychophysiology 1983, 20, 284–300.

3. West, J.B. Respiratory Physiology: The Essentials; Lippincott Williams & Wilkins: Philadelphia, PA, USA, 2012.

4. Cereda, M.; Neligan, P.J. Ventilation and Pulmonary Function. In Monitoring in Neurocritical Care; Elsevier: Amsterdam, The Netherlands, 2013; pp. 189–199.

5. Costanzo, I.; Sen, D.; Rhein, L.; Guler, U. Respiratory monitoring: Current state of the art and future roads. IEEE Rev. Biomed. Eng. 2020, 15, 103–121.

6. Ortega, R.; Connor, C.; Kim, S.; Djang, R.; Patel, K. Monitoring ventilation with capnography. N. Engl. J. Med. 2012, 367, e27.

7. Tobias, J.D. Transcutaneous carbon dioxide monitoring in infants and children. Pediatr. Anesth. 2009, 19, 434–444.

8. Lochner, C.M.; Khan, Y.; Pierre, A.; Arias, A.C. All-organic optoelectronic sensor for pulse oximetry. Nat. Commun. 2014, 5, 5745.

9. Tavakoli, M.; Turicchia, L.; Sarpeshkar, R. An ultra-low-power pulse oximeter implemented with an energy-efficient transimpedance amplifier. IEEE Trans. Biomed. Circuits Syst. 2009, 4, 27–38.

10. Jubran, A. Pulse oximetry. Crit. Care 1999, 3, R11.

11. Torp, K.D.; Modi, P.; Simon, L.V. Pulse Oximetry; StatPearls Publishing: Treasure Island, FL, USA, 2017.

12. AL-Khalidi, F.Q.; Saatchi, R.; Burke, D.; Elphick, H.; Tan, S. Respiration rate monitoring methods: A review. Pediatr. Pulmonol. 2011, 46, 523–529.

13. Hsu, C.H.; Chow, J.C. Design and clinic monitoring of a newly developed non-attached infant apnea monitor. Biomed. Eng. Appl. Basis Commun. 2005, 17, 126–134.

14. Wang, T.; Zhang, D.; Wang, L.; Zheng, Y.; Gu, T.; Dorizzi, B.; Zhou, X. Contactless respiration monitoring using ultrasound signal with off-the-shelf audio devices. IEEE Internet Things J. 2018, 6, 2959–2973.

15. Doheny, E.P.; O’Callaghan, B.P.; Fahed, V.S.; Liegey, J.; Goulding, C.; Ryan, S.; Lowery, M.M. Estimation of respiratory rate and exhale duration using audio signals recorded by smartphone microphones. Biomed. Signal Process. Control 2023, 80, 104318.

16. Stratton, H.; Saatchi, R.; Evans, R.; Elphick, H. Noncontact Respiration Rate Monitoring: An Evaluation of Four Methods; The British Institute of Non-Destructive Testing: Northampton, UK, 2021.

17. Fei, J.; Zhu, Z.; Pavlidis, I. Imaging breathing rate in the CO2 absorption band. In Proceedings of the 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference, Shanghai, China, 17–18 January 2006; pp. 700–705.

18. Lin, J.C. Noninvasive microwave measurement of respiration. Proc. IEEE 1975, 63, 1530.

19. Gu, C.; Li, C. From tumor targeting to speech monitoring: Accurate respiratory monitoring using medical continuous-wave radar sensors. IEEE Microw. Mag. 2014, 15, 66–76.

20. Yang, X.; Sun, G.; Ishibashi, K. Non-contact acquisition of respiration and heart rates using Doppler radar with time domain peak-detection algorithm. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Republic of Korea, 11–15 July 2017; pp. 2847–2850.

21. Capraro, G.; Etebari, C.; Luchette, K.; Mercurio, L.; Merck, D.; Kirenko, I.; van Zon, K.; Bartula, M.; Rocque, M.; Kobayashi, L. ‘No touch’ vitals: A pilot study of non-contact vital signs acquisition in exercising volunteers. In Proceedings of the 2018 IEEE Biomedical Circuits and Systems Conference (BioCAS), Cleveland, OH, USA, 17–19 October 2018; pp. 1–4.

22. Addison, P.S.; Jacquel, D.; Foo, D.M.; Antunes, A.; Borg, U.R. Video-based physiologic monitoring during an acute hypoxic challenge: Heart rate, respiratory rate, and oxygen saturation. Anesth. Analg. 2017, 125, 860–873.

23. Aoki, H.; Takemura, Y.; Mimura, K.; Nakajima, M. Development of non-restrictive sensing system for sleeping person using fiber grating vision sensor. In Proceedings of the MHS2001. Proceedings of 2001 International Symposium on Micromechatronics and Human Science (Cat. No. 01TH8583), Nagoya, Japan, 10–11 September 2001; pp. 155–160.

24. Teichmann, D.; Foussier, J.; Jia, J.; Leonhardt, S.; Walter, M. Noncontact monitoring of cardiorespiratory activity by electromagnetic coupling. IEEE Trans. Biomed. Eng. 2013, 60, 2142–2152.

25. Teichmann, D.; Teichmann, M.; Weitz, P.; Wolfart, S.; Leonhardt, S.; Walter, M. SensInDenT—Noncontact sensors integrated into dental treatment units. IEEE Trans. Biomed. Circuits Syst. 2016, 11, 225–233.

26. Radomski, A.; Teichmann, D. On-Road Evaluation of Unobtrusive In-Car Respiration Monitoring. Sensors 2024, 24, 4500.

27. Shao, D.; Liu, C.; Tsow, F.; Yang, Y.; Du, Z.; Iriya, R.; Yu, H.; Tao, N. Noncontact monitoring of blood oxygen saturation using camera and dual-wavelength imaging system. IEEE Trans. Biomed. Eng. 2015, 63, 1091–1098.

28. Stubán, N.; Masatsugu, N. Non-invasive calibration method for pulse oximeters. Period. Polytech. Electr. Eng. Arch. 2008, 52, 91–94.

29. Van Gastel, M.; Verkruysse, W. Contactless SpO2 with an RGB camera: Experimental proof of calibrated SpO2. Biomed. Opt. Express 2022, 13, 6791–6802.

30. Moço, A.; Verkruysse, W. Pulse oximetry based on photoplethysmography imaging with red and green light: Calibratability and challenges. J. Clin. Monit. Comput. 2021, 35, 123–133.

31. Wei, B.; Wu, X.; Zhang, C.; Lv, Z. Analysis and improvement of non-contact SpO2 extraction using an RGB webcam. Biomed. Opt. Express 2021, 12, 5227–5245.

32. Hu, M.H.; Zhai, G.T.; Li, D.; Fan, Y.Z.; Chen, X.H.; Yang, X.K. Synergetic use of thermal and visible imaging techniques for contactless and unobtrusive breathing measurement. J. Biomed. Opt. 2017, 22, 036006.

33. Kunczik, J.; Hubbermann, K.; Mösch, L.; Follmann, A.; Czaplik, M.; Barbosa Pereira, C. Breathing pattern monitoring by using remote sensors. Sensors 2022, 22, 8854.

34. Maurya, L.; Zwiggelaar, R.; Chawla, D.; Mahapatra, P. Non-contact respiratory rate monitoring using thermal and visible imaging: A pilot study on neonates. J. Clin. Monit. Comput. 2023, 37, 815–828.

35. Scebba, G.; Da Poian, G.; Karlen, W. Multispectral video fusion for non-contact monitoring of respiratory rate and apnea. IEEE Trans. Biomed. Eng. 2020, 68, 350–359.

36. Pavlidis, I.; Levine, J.; Baukol, P. Thermal imaging for anxiety detection. In Proceedings of the IEEE Workshop on Computer Vision Beyond the Visible Spectrum: Methods and Applications (Cat. No. PR00640), Hilton Head, SC, USA, 16 June 2000; pp. 104–109.

37. Pavlidis, I.; Levine, J. Thermal image analysis for polygraph testing. IEEE Eng. Med. Biol. Mag. 2002, 21, 56–64.

38. Pavlidis, I. Continuous physiological monitoring. In Proceedings of the 25th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (IEEE Cat. No. 03CH37439), Cancun, Mexico, 17–21 September 2003; Volume 2, pp. 1084–1087.

39. Murthy, R.; Pavlidis, I. Noncontact measurement of breathing function. IEEE Eng. Med. Biol. Mag. 2006, 25, 57–67.

40. Murthy, J.N.; Van Jaarsveld, J.; Fei, J.; Pavlidis, I.; Harrykissoon, R.I.; Lucke, J.F.; Faiz, S.; Castriotta, R.J. Thermal infrared imaging: A novel method to monitor airflow during polysomnography. Sleep 2009, 32, 1521–1527.

41. Fei, J.; Pavlidis, I. Thermistor at a distance: Unobtrusive measurement of breathing. IEEE Trans. Biomed. Eng. 2009, 57, 988–998.

42. Al-Khalidi, F.Q.; Saatchi, R.; Burke, D.; Elphick, H. Tracking human face features in thermal images for respiration monitoring. In Proceedings of the ACS/IEEE International Conference on Computer Systems and Applications—AICCSA 2010, Hammamet, Tunisia, 16–19 May 2010; pp. 1–6.

43. Abbas, A.K.; Heimann, K.; Jergus, K.; Orlikowsky, T.; Leonhardt, S. Neonatal non-contact respiratory monitoring based on real-time infrared thermography. Biomed. Eng. Online 2011, 10, 1–17.

44. Lewis, G.F.; Gatto, R.G.; Porges, S.W. A novel method for extracting respiration rate and relative tidal volume from infrared thermography. Psychophysiology 2011, 48, 877–887.

45. Goldman, L.J. Nasal airflow and thoracoabdominal motion in children using infrared thermographic video processing. Pediatr. Pulmonol. 2012, 47, 476–486.

46. Chauvin, R.; Hamel, M.; Brière, S.; Ferland, F.; Grondin, F.; Létourneau, D.; Tousignant, M.; Michaud, F. Contact-free respiration rate monitoring using a pan–tilt thermal camera for stationary bike telerehabilitation sessions. IEEE Syst. J. 2014, 10, 1046–1055.

47. Murthy, R.; Pavlidis, I. Non-Contact Monitoring of Breathing Function Using Infrared Imaging; Technical Report Number UH-CS-05-09; Department of Computer Science, University of Houston: Houston, TX, USA, 2005.

48. Pereira, C.B.; Yu, X.; Czaplik, M.; Rossaint, R.; Blazek, V.; Leonhardt, S. Remote monitoring of breathing dynamics using infrared thermography. Biomed. Opt. Express 2015, 6, 4378–4394.

49. Ruminski, J. Analysis of the parameters of respiration patterns extracted from thermal image sequences. Biocybern. Biomed. Eng. 2016, 36, 731–741.

50. Rumiński, J. Evaluation of the respiration rate and pattern using a portable thermal camera. In Proceedings of the 13th Quantitative Infrared Thermography Conference, Gdansk, Poland, 4 July–8 July 2016.

51. Barbosa Pereira, C.; Yu, X.; Czaplik, M.; Blazek, V.; Venema, B.; Leonhardt, S. Estimation of breathing rate in thermal imaging videos: A pilot study on healthy human subjects. J. Clin. Monit. Comput. 2017, 31, 1241–1254.

52. Ruminski, J.; Kwasniewska, A. Evaluation of respiration rate using thermal imaging in mobile conditions. In Application of Infrared to Biomedical Sciences; Series in BioEngineering; Ng, E., Etehadtavakol, M., Eds.; Springer: Singapore, 2017; pp. 311–346.

53. Barbosa Pereira, C.; Czaplik, M.; Blazek, V.; Leonhardt, S.; Teichmann, D. Monitoring of cardiorespiratory signals using thermal imaging: A pilot study on healthy human subjects. Sensors 2018, 18, 1541.

54. Pereira, C.B.; Yu, X.; Goos, T.; Reiss, I.; Orlikowsky, T.; Heimann, K.; Venema, B.; Blazek, V.; Leonhardt, S.; Teichmann, D. Noncontact monitoring of respiratory rate in newborn infants using thermal imaging. IEEE Trans. Biomed. Eng. 2018, 66, 1105–1114.

55. Cho, Y.; Julier, S.J.; Marquardt, N.; Bianchi-Berthouze, N. Robust tracking of respiratory rate in high-dynamic range scenes using mobile thermal imaging. Biomed. Opt. Express 2017, 8, 4480–4503.

56. Cho, Y.; Bianchi-Berthouze, N.; Julier, S.J. DeepBreath: Deep learning of breathing patterns for automatic stress recognition using low-cost thermal imaging in unconstrained settings. In Proceedings of the 2017 Seventh International Conference on Affective Computing and Intelligent Interaction (ACII), San Antonio, TX, USA, 23–26 October 2017; pp. 456–463.

57. Hochhausen, N.; Barbosa Pereira, C.; Leonhardt, S.; Rossaint, R.; Czaplik, M. Estimating respiratory rate in post-anesthesia care unit patients using infrared thermography: An observational study. Sensors 2018, 18, 1618.

58. Chan, P.; Wong, G.; Dinh Nguyen, T.; Nguyen, T.; McNeil, J.; Hopper, I. Estimation of respiratory rate using infrared video in an inpatient population: An observational study. J. Clin. Monit. Comput. 2020, 34, 1275–1284.

59. Jakkaew, P.; Onoye, T. Non-contact respiration monitoring and body movements detection for sleep using thermal imaging. Sensors 2020, 20, 6307.

60. Jagadev, P.; Giri, L.I. Non-contact monitoring of human respiration using infrared thermography and machine learning. Infrared Phys. Technol. 2020, 104, 103117.

61. Jagadev, P.; Naik, S.; Giri, L.I. Contactless monitoring of human respiration using infrared thermography and deep learning. Physiol. Meas. 2022, 43, 025006.

62. Lorato, I.; Stuijk, S.; Meftah, M.; Kommers, D.; Andriessen, P.; van Pul, C.; de Haan, G. Multi-camera infrared thermography for infant respiration monitoring. Biomed. Opt. Express 2020, 11, 4848–4861.

63. Lorato, I.; Stuijk, S.; Meftah, M.; Kommers, D.; Andriessen, P.; van Pul, C.; de Haan, G. Automatic separation of respiratory flow from motion in thermal videos for infant apnea detection. Sensors 2021, 21, 6306.

64. Kwon, H.M.; Ikeda, K.; Kim, S.H.; Thiele, R.H. Non-contact thermography-based respiratory rate monitoring in a post-anesthetic care unit. J. Clin. Monit. Comput. 2021, 35, 1291–1297.

65. Lyra, S.; Mayer, L.; Ou, L.; Chen, D.; Timms, P.; Tay, A.; Chan, P.Y.; Ganse, B.; Leonhardt, S.; Hoog Antink, C. A deep learning-based camera approach for vital sign monitoring using thermography images for ICU patients. Sensors 2021, 21, 1495.

66. Takahashi, Y.; Gu, Y.; Nakada, T.; Abe, R.; Nakaguchi, T. Estimation of respiratory rate from thermography using respiratory likelihood index. Sensors 2021, 21, 4406.

67. Shu, S.; Liang, H.; Zhang, Y.; Zhang, Y.; Yang, Z. Non-contact measurement of human respiration using an infrared thermal camera and the deep learning method. Meas. Sci. Technol. 2022, 33, 075202.

68. Lorato, I.; Stuijk, S.; Meftah, M.; Kommers, D.; Andriessen, P.; van Pul, C.; de Haan, G. Towards continuous camera-based respiration monitoring in infants. Sensors 2021, 21, 2268.

69. Alves, R.; Van Meulen, F.; Van Gastel, M.; Verkruijsse, W.; Overeem, S.; Zinger, S.; Stuijk, S. Thermal Imaging for Respiration Monitoring in Sleeping Positions: A Single Camera is Enough. In Proceedings of the 2023 IEEE 13th International Conference on Consumer Electronics-Berlin (ICCE—Berlin), Berlin, Germany, 3–5 September 2023; pp. 220–225.

70. Sobel, I. An Isotropic 3 × 3 Image Gradient Operator; Presentation at Stanford A.I. Project 1968; Universitetet Linkoping: Linkoping, Sweden, 2014.

71. Dowdall, J.; Pavlidis, I.T.; Tsiamyrtzis, P. Coalitional tracking. Comput. Vis. Image Underst. 2007, 106, 205–219.

72. Tao, H.; Huang, T.S. A piecewise Bézier volume deformation model and its applications in facial motion capture. In Advances in Image Processing and Understanding: A Festschrift for Thomas S Huang; World Scientific: Singapore, 2002; pp. 39–56.

73. Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the IJCAI’81: 7th International Joint Conference on Artificial Intelligence, Vancouver, BC, Canada, 24–28 August 1981; Volume 2, pp. 674–679.

74. Mei, X.; Ling, H. Robust visual tracking and vehicle classification via sparse representation. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2259–2272.

75. Kalal, Z.; Mikolajczyk, K.; Matas, J. Forward-backward error: Automatic detection of tracking failures. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2756–2759.

76. Tomasi, C.; Kanade, T. Detection and tracking of point. Int. J. Comput. Vis. 1991, 9, 137–154.

77. Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.

78. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

79. Redmon, J. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

80. Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

81. FLIR Software, Teledyne FLIR. 2024. Available online: https://www.flir.in/browse/professional-tools/thermography-software/ (accessed on 3 October 2024).

82. Huang, Z.; Wang, W.; De Haan, G. Nose breathing or mouth breathing? A thermography-based new measurement for sleep monitoring. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 3882–3888.

83. Koroteeva, E.; Shagiyanova, A. Infrared-based visualization of exhalation flows while wearing protective face masks. Phys. Fluids 2022, 34, 011705.

84. Telson, Y.C.; Furlan, R.M.M.M.; Ferreira, R.A.M.; Porto, M.P.; Motta, A.R. Breathing Mode Assessment with Thermography: A Pilot Study. CoDAS SciELO: Sao Paulo, Brasil, 2024; Volume 36, p. e20220323.

85. Brüser, C.; Winter, S.; Leonhardt, S. Robust inter-beat interval estimation in cardiac vibration signals. Physiol. Meas. 2013, 34, 123.

86. Farnebäck, G. Two-frame motion estimation based on polynomial expansion. In Proceedings of the Image Analysis: 13th Scandinavian Conference, SCIA 2003, Halmstad, Sweden, 29 June–2 July 2003; pp. 363–370.

87. Akay, M.; Mello, C. Wavelets for biomedical signal processing. In Proceedings of the 19th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, ‘Magnificent Milestones and Emerging Opportunities in Medical Engineering’ (Cat. No. 97CH36136), Chicago, IL, USA, 30 October–2 November 1997; Volume 6, pp. 2688–2691.

88. Brüser, C.; Winter, S.; Leonhardt, S. How speech processing can help with beat-to-beat heart rate estimation in ballistocardiograms. In Proceedings of the 2013 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Gatineau, QC, Canada, 4–5 May 2013; pp. 12–16.

89. Akbarian, S.; Ghahjaverestan, N.M.; Yadollahi, A.; Taati, B. Distinguishing obstructive versus central apneas in infrared video of sleep using deep learning: Validation study. J. Med. Internet Res. 2020, 22, e17252.

90. Watson, H.; Sackner, M.A.; Belsito, A.S. Method and Apparatus for Distinguishing Central Obstructive and Mixed Apneas by External Monitoring Devices Which Measure Rib Cage and Abdominal Compartmental Excursions During Respiration. US Patent 4,777,962, 18 October 1988.

91. Lorato, I.; Stuijk, S.; Meftah, M.; Verkruijsse, W.; De Haan, G. Camera-based on-line short cessation of breathing detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alves, R.; van Meulen, F.; Overeem, S.; Zinger, S.; Stuijk, S. Thermal Cameras for Continuous and Contactless Respiration Monitoring. Sensors 2024, 24, 8118. https://doi.org/10.3390/s24248118
