Development of a Grape Cut Point Detection System Using Multi-Cameras for a Grape-Harvesting Robot

Abstract

1. Introduction

2. Materials and Methods

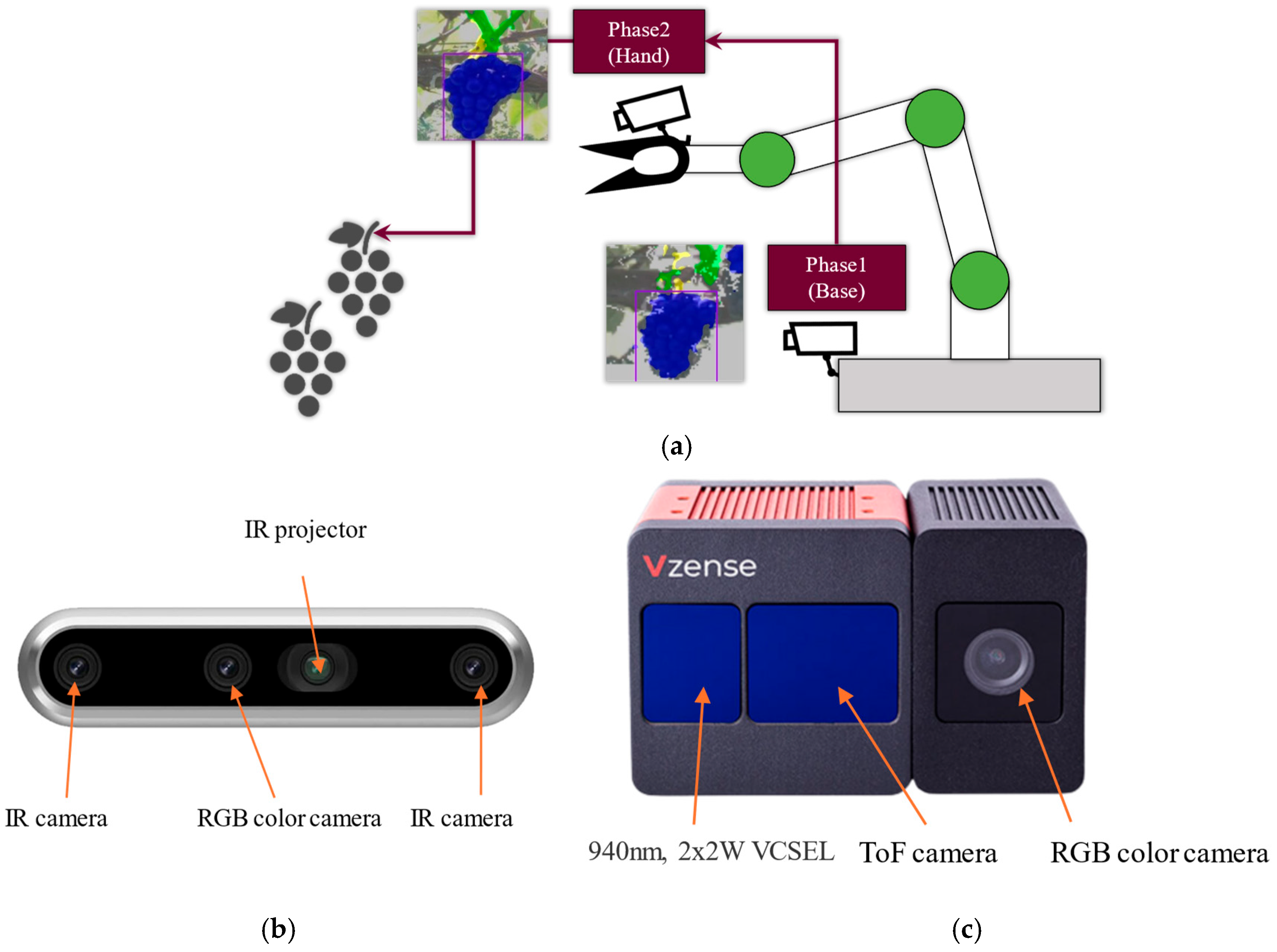

2.1. The Grape-Harvesting Robot System

2.2. Grape Cluster and Cut Point Detection Method

2.2.1. The Process Steps of Cut Point Detection and Harvesting Robot

2.2.2. Data Collection for Training the AI Detection Model

2.2.3. Detection of the Grape Cluster Using the Base Camera

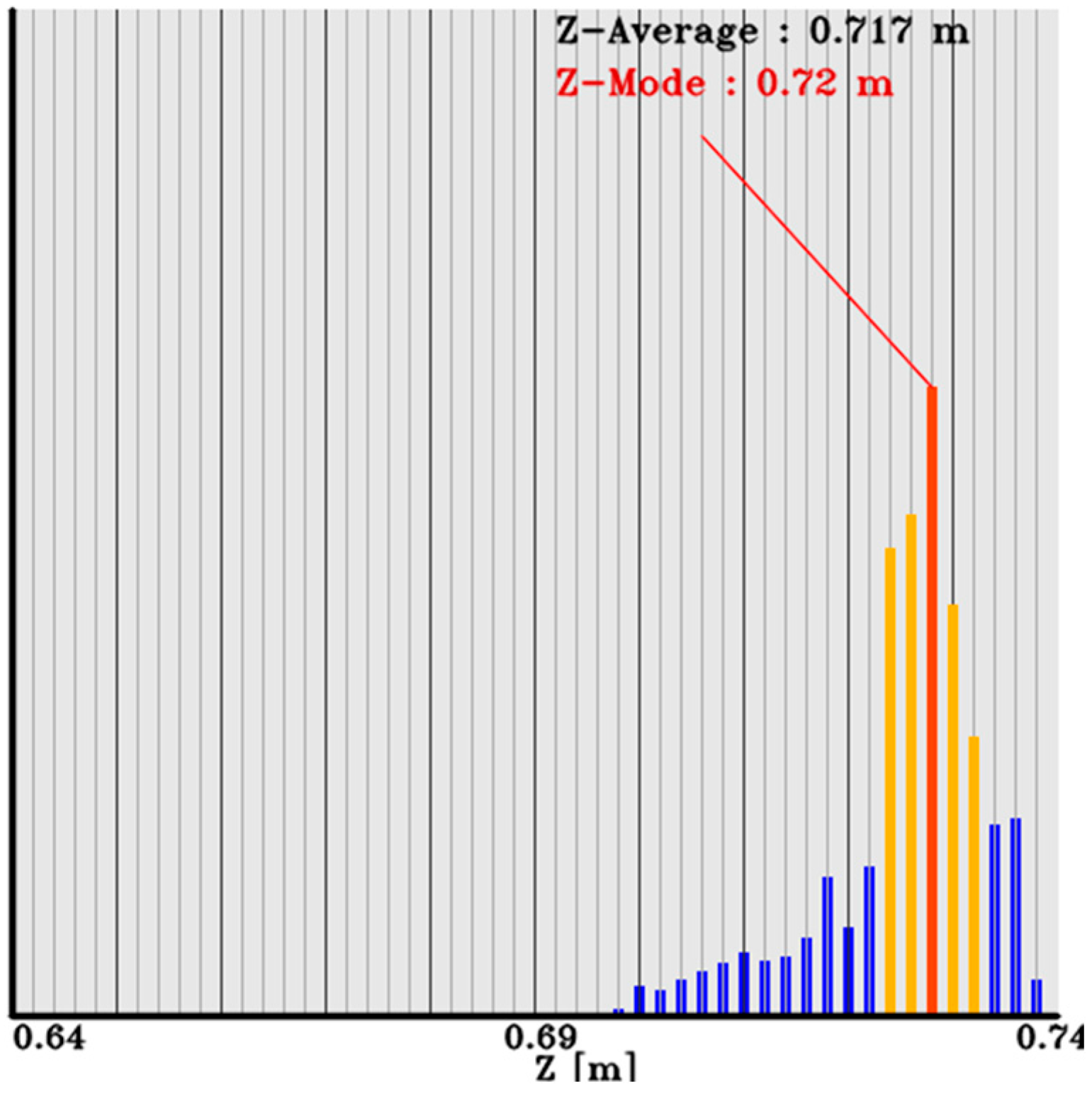

2.2.4. Cut Point Detection and 3D Position Estimation from the Hand Camera

3. Experimental Results and Discussion

3.1. Indoor Experimental Results and Discussion

3.2. Outdoor Field Experimental Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Item | Base Camera | Hand Camera |
|---|---|---|
| Model | RealSense D457 | DS77C pro |
| Depth type | Stereo depth | ToF (time-of-flight) depth |
| 3D camera resolution (pixel) | 640 × 480 | 640 × 480 |
| 3D FOV | Horizontal: 72°, Vertical: 62° | Horizontal: 70°, Vertical: 50° |
| Depth accuracy | ±2% | <1% |
| Color camera resolution (pixel) | 1280 × 800 | 1600 × 1200 |
| Color camera FOV | Horizontal: 90°, Vertical: 65° | Horizontal: 77°, Vertical: 55° |
| Data type | Depth, IR (left + right), RGB | Depth, IR, RGB |
| Working distance (m) | 0.32~8 | 0.15~5 |
| Data interface | GigE | GigE |
| Size (mm), weight (g) | 124.5 × 29.5 × 36.5, 145 | 105 × 65 × 72.65, 581 |
| IR wavelength | 940 nm | 940 nm, 2 × 2 W VCSEL |
| Operating temperature | 0~50 °C | −20~50 °C |
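The table gives nominal fields of view and relative depth accuracies but no camera intrinsics. As a rough illustration of how a detected pixel could be turned into a 3D position, the sketch below assumes an ideal pinhole camera with the principal point at the image centre and no lens distortion, derives focal lengths from the nominal depth FOV as f = (W/2)/tan(FOV/2), back-projects a pixel with known depth, and treats the quoted accuracy as a fraction of the measured distance. These are assumptions for illustration, not the authors' method; a real system would read calibrated intrinsics from the camera SDK. `PinholeIntrinsics` and `depth_error_bound_mm` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PinholeIntrinsics:
    """Ideal pinhole model built from nominal FOV (assumption: no distortion)."""
    width: int
    height: int
    fx: float
    fy: float
    cx: float
    cy: float

    @classmethod
    def from_fov(cls, width: int, height: int, hfov_deg: float, vfov_deg: float):
        # f = (size / 2) / tan(FOV / 2); principal point assumed at the image centre
        fx = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
        fy = (height / 2) / math.tan(math.radians(vfov_deg) / 2)
        return cls(width, height, fx, fy, width / 2, height / 2)

    def deproject(self, u: float, v: float, depth_m: float):
        """Back-project pixel (u, v) with metric depth Z to camera coordinates (m)."""
        x = (u - self.cx) * depth_m / self.fx
        y = (v - self.cy) * depth_m / self.fy
        return (x, y, depth_m)

    def pixel_footprint_mm(self, depth_m: float) -> float:
        """Approximate horizontal width covered by one pixel at depth Z, in mm."""
        return depth_m / self.fx * 1000.0


def depth_error_bound_mm(depth_m: float, relative_accuracy: float) -> float:
    """Error bound if accuracy is read as a fraction of distance (assumption)."""
    return depth_m * relative_accuracy * 1000.0


# Nominal depth-stream values from the camera table (hypothetical usage)
base_depth = PinholeIntrinsics.from_fov(640, 480, 72.0, 62.0)  # RealSense D457
hand_depth = PinholeIntrinsics.from_fov(640, 480, 70.0, 50.0)  # DS77C pro

if __name__ == "__main__":
    z = 0.5  # example working distance in metres
    print(f"hand camera: {hand_depth.pixel_footprint_mm(z):.2f} mm/pixel at {z} m")
    print(f"base camera depth error bound: {depth_error_bound_mm(z, 0.02):.0f} mm")
    print(f"hand camera depth error bound: {depth_error_bound_mm(z, 0.01):.0f} mm")
    print("3D point for pixel (400, 200):", hand_depth.deproject(400, 200, z))
```

Under these assumptions, at 0.5 m each hand-camera depth pixel covers roughly 1.1 mm horizontally, and the distance-proportional error bound is about 10 mm for the base camera versus under 5 mm for the hand camera.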

| Model | valAP50 | valAP95 | testAP50 | testAP95 | Training Time (s) | Prediction Time (ms) |
|---|---|---|---|---|---|---|
| YOLOv5n | 0.782 | 0.403 | 0.761 | 0.418 | 2266 | 10.08 |
| YOLOv5s | 0.778 | 0.427 | 0.762 | 0.424 | 3848 | 10.15 |
| YOLOv5m | 0.766 | 0.442 | 0.770 | 0.454 | 9856 | 14.72 |
| YOLOv5l | 0.765 | 0.436 | 0.776 | 0.459 | 15,202 | 24.48 |
| YOLOv5x | 0.766 | 0.441 | 0.794 | 0.498 | 19,597 | 42.72 |
| YOLOv6 | 0.789 | 0.466 | 0.770 | 0.469 | 3689 | 12.82 |
| YOLOv7-tiny | 0.758 | 0.378 | 0.769 | 0.400 | 4049 | 9.01 |
| YOLOv7 | 0.764 | 0.418 | 0.764 | 0.439 | 4047 | 25.37 |
| YOLOv7x | 0.765 | 0.428 | 0.796 | 0.460 | 193,683 | 44.11 |
| YOLOv8n | 0.792 | 0.483 | 0.789 | 0.478 | 844 | 10.57 |
| YOLOv8s | 0.807 | 0.518 | 0.814 | 0.553 | 1293 | 10.94 |
| YOLOv8m | 0.825 | 0.541 | 0.802 | 0.529 | 6758 | 21.16 |
| YOLOv8l | 0.792 | 0.516 | 0.764 | 0.518 | 28,923 | 33.89 |
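One way to read the model comparison is as a speed/accuracy trade-off. The sketch below uses only the testAP50 and prediction-time columns copied from the table; it lists the models that no other model beats on both criteria at once (a Pareto front) and picks the most accurate model under a latency budget. The selection rule and the budget values are illustrative assumptions; the table alone does not state how the authors chose their detector.

```python
from typing import List, NamedTuple, Optional


class Result(NamedTuple):
    name: str
    test_ap50: float   # testAP50 column
    predict_ms: float  # prediction time per image, ms


# Values copied from the model comparison table
RESULTS: List[Result] = [
    Result("YOLOv5n", 0.761, 10.08),
    Result("YOLOv5s", 0.762, 10.15),
    Result("YOLOv5m", 0.770, 14.72),
    Result("YOLOv5l", 0.776, 24.48),
    Result("YOLOv5x", 0.794, 42.72),
    Result("YOLOv6", 0.770, 12.82),
    Result("YOLOv7-tiny", 0.769, 9.01),
    Result("YOLOv7", 0.764, 25.37),
    Result("YOLOv7x", 0.796, 44.11),
    Result("YOLOv8n", 0.789, 10.57),
    Result("YOLOv8s", 0.814, 10.94),
    Result("YOLOv8m", 0.802, 21.16),
    Result("YOLOv8l", 0.764, 33.89),
]


def dominates(a: Result, b: Result) -> bool:
    """True if a is no worse than b on both criteria and strictly better on one."""
    no_worse = a.test_ap50 >= b.test_ap50 and a.predict_ms <= b.predict_ms
    better = a.test_ap50 > b.test_ap50 or a.predict_ms < b.predict_ms
    return no_worse and better


def pareto_front(results: List[Result]) -> List[Result]:
    """Models that no other model dominates, ordered from fastest to slowest."""
    front = [r for r in results if not any(dominates(o, r) for o in results)]
    return sorted(front, key=lambda r: r.predict_ms)


def best_within_budget(results: List[Result], budget_ms: float) -> Optional[Result]:
    """Highest-testAP50 model whose prediction time fits a (hypothetical) budget."""
    candidates = [r for r in results if r.predict_ms <= budget_ms]
    return max(candidates, key=lambda r: r.test_ap50, default=None)


if __name__ == "__main__":
    for r in pareto_front(RESULTS):
        print(f"{r.name:12s} testAP50={r.test_ap50:.3f}  {r.predict_ms:.2f} ms")
    print(best_within_budget(RESULTS, budget_ms=10.0))
```

With these numbers the front contains YOLOv7-tiny, YOLOv8n and YOLOv8s; every other model is both slower and less accurate on the test set than at least one of them.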

| Stem Length | <20 mm | 20~30 mm | 30~40 mm | 40~50 mm | 50~60 mm |
|---|---|---|---|---|---|
| Total number | 20 | 20 | 20 | 20 | 20 |
| Correctly detected | 16 | 16 | 20 | 19 | 20 |
| Success rate (%) | 80 | 80 | 100 | 95 | 100 |
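With only 20 samples per stem-length bin, the per-bin success rates carry considerable sampling uncertainty. The sketch below recomputes the rates from the counts in the stem-length table and adds a 95% Wilson score interval for each bin. The interval is an illustrative addition, not an analysis reported alongside the table; `wilson_interval` and `summarize` are hypothetical helper names.

```python
import math

# Counts copied from the stem-length table (20 samples per bin)
BINS = ["<20 mm", "20~30 mm", "30~40 mm", "40~50 mm", "50~60 mm"]
TOTAL = [20, 20, 20, 20, 20]
CORRECT = [16, 16, 20, 19, 20]


def wilson_interval(successes: int, n: int, z: float = 1.96):
    """Wilson score interval for a binomial proportion (about 95% for z = 1.96)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    # Clamp to [0, 1] to absorb floating-point rounding at p = 0 or p = 1
    return max(0.0, centre - half), min(1.0, centre + half)


def summarize(bins, totals, correct):
    """Return (label, rate %, lower %, upper %) for each stem-length bin."""
    rows = []
    for label, n, k in zip(bins, totals, correct):
        lo, hi = wilson_interval(k, n)
        rows.append((label, 100.0 * k / n, 100.0 * lo, 100.0 * hi))
    return rows


if __name__ == "__main__":
    for label, rate, lo, hi in summarize(BINS, TOTAL, CORRECT):
        print(f"{label:>9}: {rate:5.1f}%  (95% CI {lo:5.1f}-{hi:5.1f}%)")
    overall = 100.0 * sum(CORRECT) / sum(TOTAL)
    print(f"overall: {overall:.1f}%")
```

For the two 80% bins the interval spans roughly 58% to 92%, so differences of a few samples between adjacent bins should be read cautiously. Across all bins, 91 of the 100 samples are correctly detected.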


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, L.; Noguchi, T.; Hoshino, Y. Development of a Grape Cut Point Detection System Using Multi-Cameras for a Grape-Harvesting Robot. Sensors 2024, 24, 8035. https://doi.org/10.3390/s24248035
