Validity Analysis of Monocular Human Pose Estimation Models Interfaced with a Mobile Application for Assessing Upper Limb Range of Motion

Abstract

1. Introduction

2. Materials and Methods

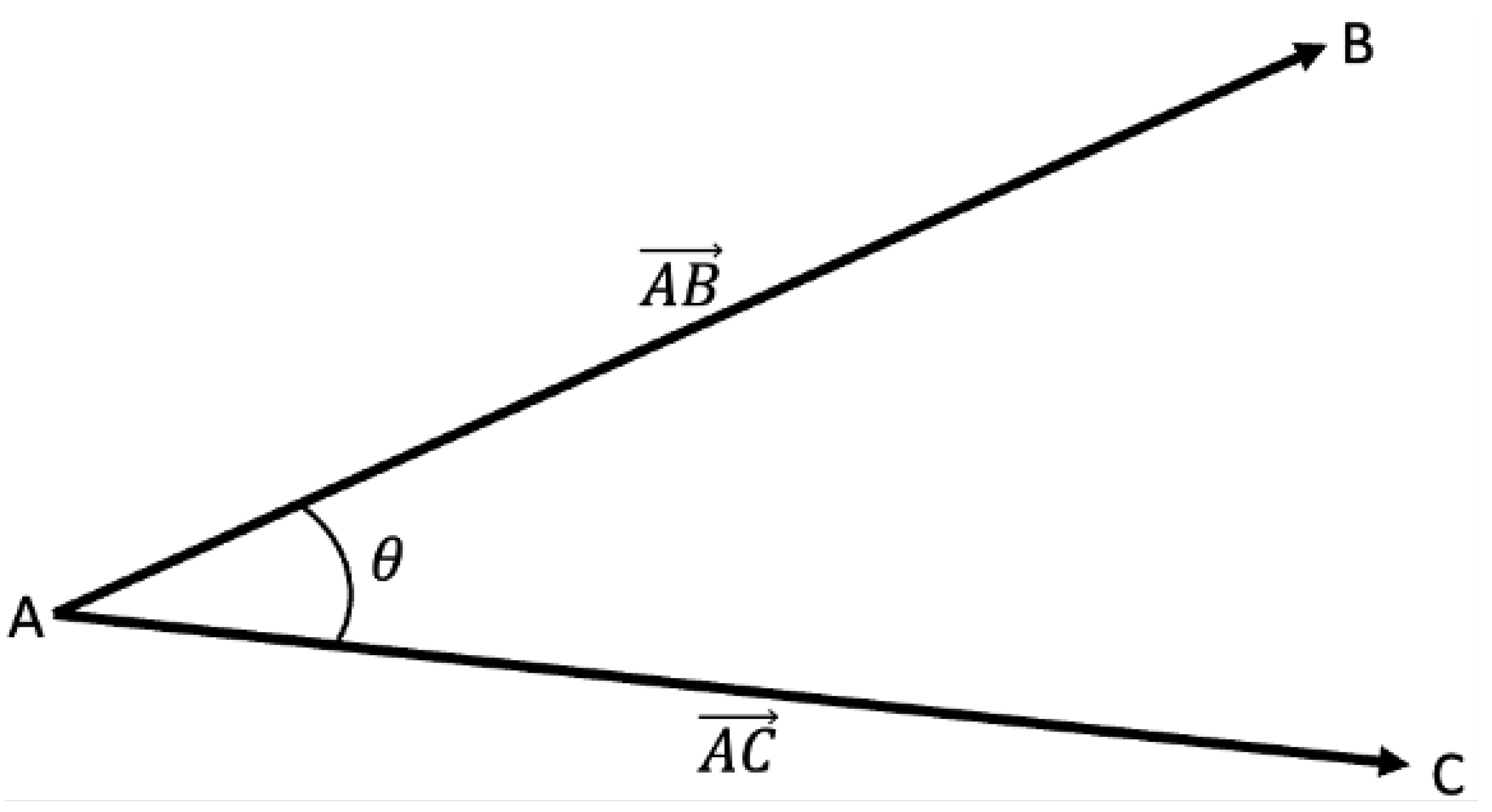

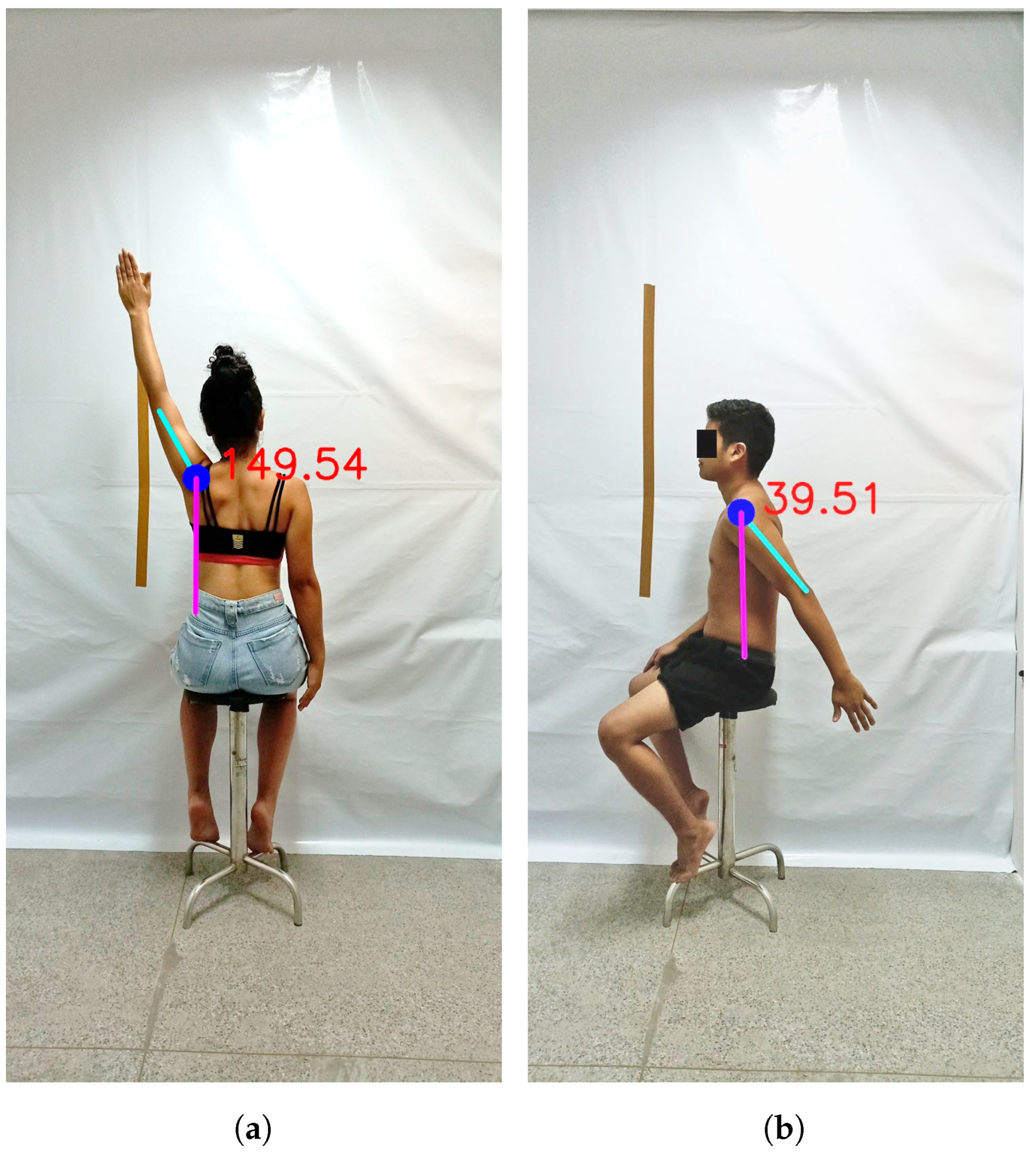

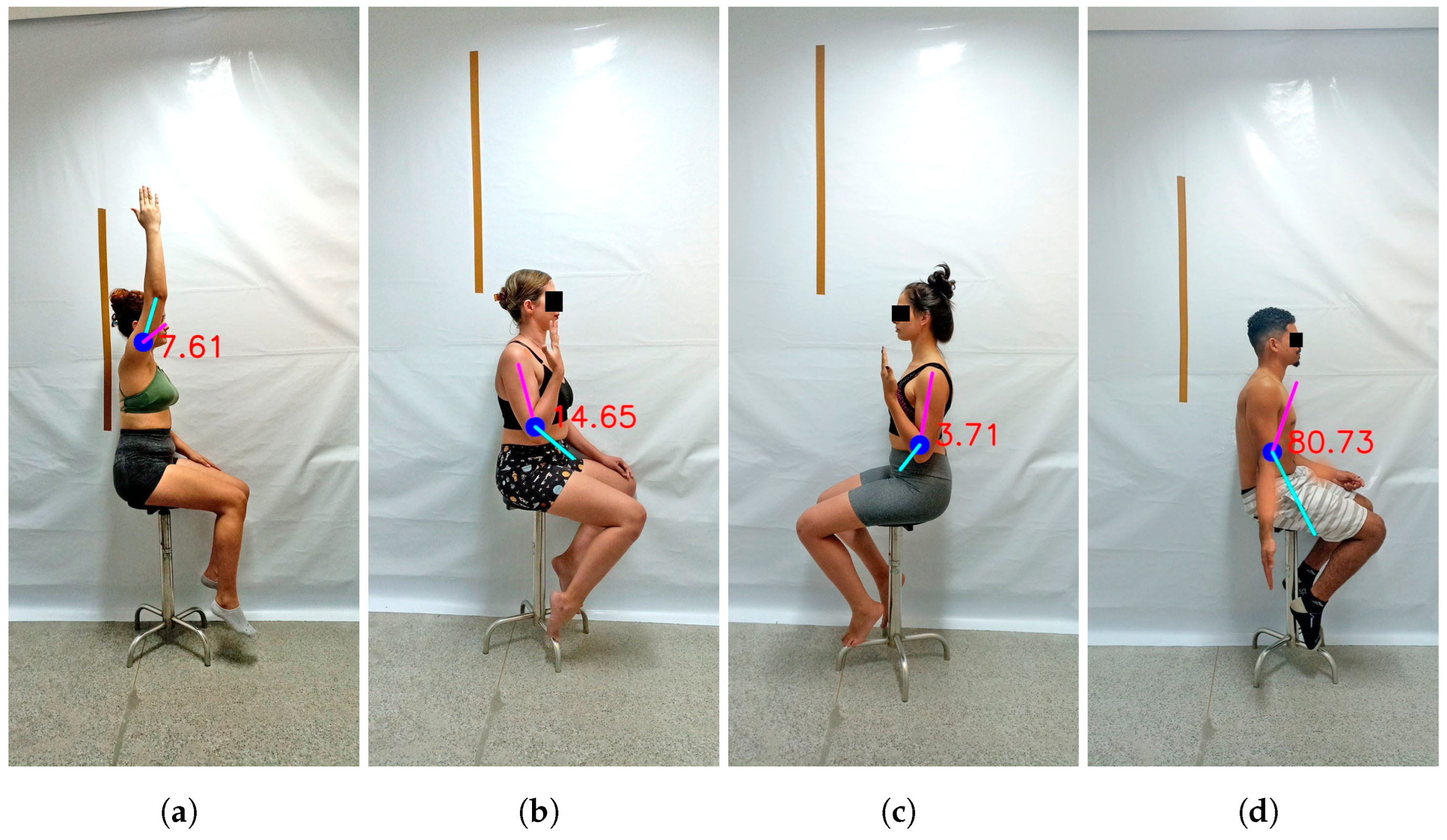

2.1. Human Pose Estimation for ROM Assessment
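
With monocular HPE, a virtual goniometer (VG) can derive a joint angle from the 2D coordinates of three key joint points (KJP): the angle at the middle keypoint between the two body segments that meet there. The Python sketch below illustrates only that geometry, assuming (x, y) image coordinates. The helper `joint_angle` and the example pixel values are hypothetical; they do not reproduce the authors' application or the keypoints it uses for each movement.

```python
import math


def joint_angle(a, vertex, b):
    """Included angle in degrees at `vertex` between segments vertex->a and vertex->b.

    Points are (x, y) tuples in image coordinates. Hypothetical helper,
    not the authors' implementation.
    """
    v1 = (a[0] - vertex[0], a[1] - vertex[1])
    v2 = (b[0] - vertex[0], b[1] - vertex[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("keypoints must be distinct from the vertex")
    # Clamp the cosine to [-1, 1] to guard against floating-point drift.
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


# Hypothetical shoulder, elbow, and wrist keypoints (pixels).
shoulder, elbow, wrist = (100.0, 100.0), (100.0, 200.0), (200.0, 200.0)
print(round(joint_angle(shoulder, elbow, wrist), 1))  # 90.0
```

The function returns the included angle between 0° and 180°. Converting it to a clinical ROM value depends on the goniometric convention for each movement (for example, elbow flexion is commonly read as 180° minus the included shoulder-elbow-wrist angle), which this sketch leaves to the caller.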

2.2. Sample

2.3. Data Acquisition Procedures

2.4. Statistical Analysis Procedures

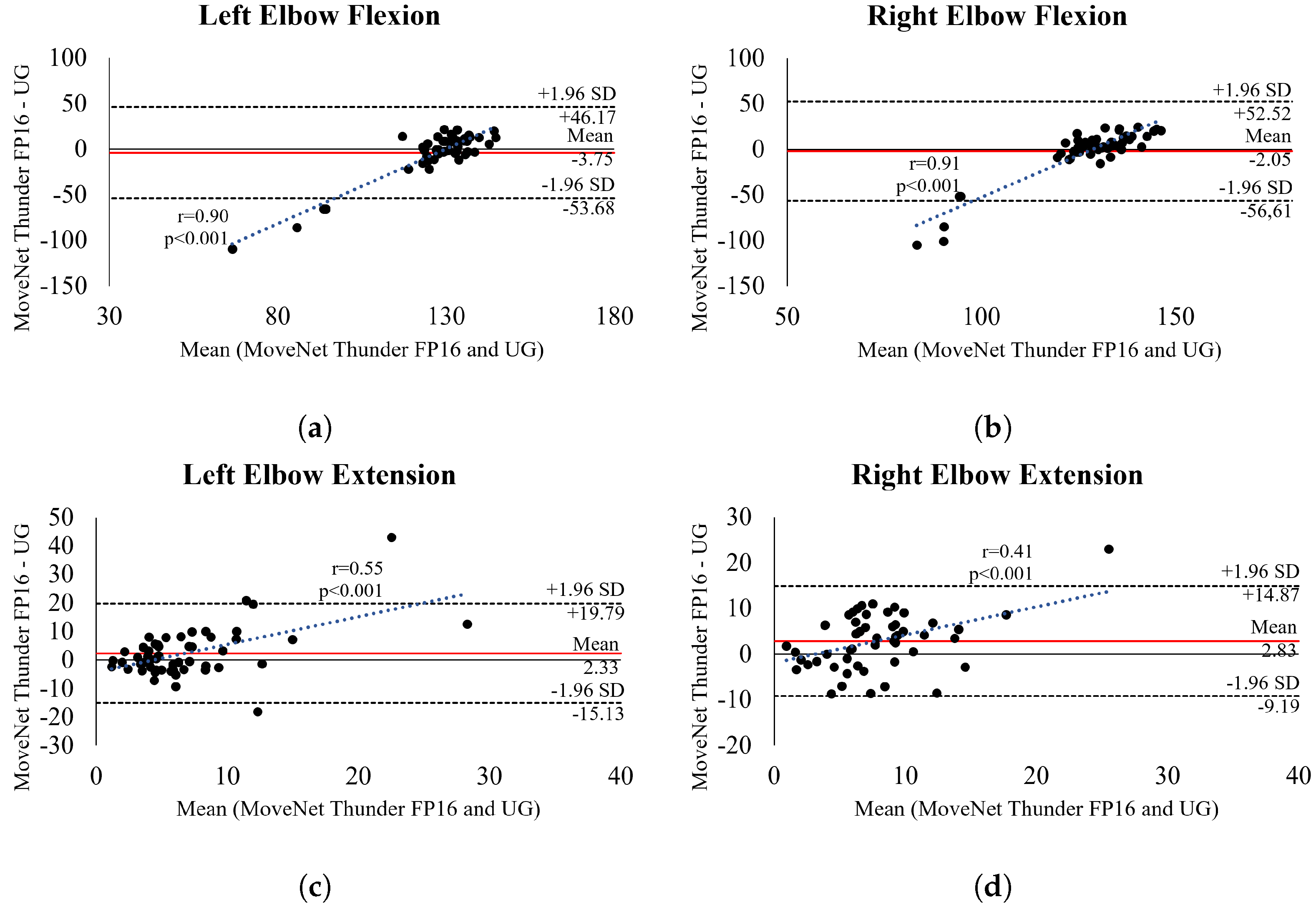

3. Results

4. Discussion

4.1. Principal Findings

4.2. Comparison with Prior Works

4.3. Practical Implications

4.4. Limitations and Future Work

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CV | Computer Vision |
| HPE | Human Pose Estimation |
| KJP | Key Joint Points |
| IMUs | Inertial Measurement Units |
| LOA | Limits of Agreement |
| PT-BR | Brazilian Portuguese |
| RMSE | Root Mean Square Error |
| ROM | Range of Motion |
| UG | Universal Goniometer |
| VG | Virtual Goniometer |


| HPE model | Side | Method | Flexion Mean ± SD (°) | Flexion t | Flexion p | Extension Mean ± SD (°) | Extension t | Extension p | Abduction Mean ± SD (°) | Abduction t | Abduction p |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MNL8Q | Right | UG | 159.54 ± 7.32 | −0.037 | 0.98 * | 38.19 ± 3.32 | 0.964 | 0.34 * | 166.04 ± 7.50 | −1.362 | 0.19 * |
| MNL8Q | Right | Model | 159.38 ± 30.44 | | | 36.57 ± 6.93 | | | 159.25 ± 24.59 | | |
| MNL8Q | Left | UG | 159.28 ± 7.12 | 0.053 | 0.96 * | 38.70 ± 3.69 | 1.202 | 0.24 * | 164.66 ± 7.96 | −3.596 | <0.001 |
| MNL8Q | Left | Model | 159.38 ± 11.28 | | | 34.35 ± 6.08 | | | 156.55 ± 37.34 | | |
| MNL16Q | Right | UG | 159.54 ± 7.32 | −1.918 | 0.08 * | 38.19 ± 3.32 | −4.331 | <0.001 | 166.04 ± 7.50 | −3.646 | <0.001 |
| MNL16Q | Right | Model | 162.54 ± 22.61 | | | 34.61 ± 6.09 | | | 157.42 ± 29.38 | | |
| MNL16Q | Left | UG | 159.28 ± 7.12 | −4.731 | <0.001 | 38.70 ± 3.69 | −5.734 | <0.001 | 164.66 ± 7.96 | −1.779 | 0.07 * |
| MNL16Q | Left | Model | 161.26 ± 9.16 | | | 33.01 ± 6.25 | | | 156.79 ± 34.54 | | |
| MNT8Q | Right | UG | 159.54 ± 7.32 | −1.960 | 0.26 * | 38.19 ± 3.32 | −2.088 | 0.14 * | 166.04 ± 7.50 | −1.932 | 0.07 * |
| MNT8Q | Right | Model | 157.76 ± 7.99 | | | 35.64 ± 5.18 | | | 164.03 ± 7.92 | | |
| MNT8Q | Left | UG | 159.28 ± 7.12 | −1.570 | 0.16 * | 38.70 ± 3.69 | −1.648 | 0.15 * | 164.66 ± 7.96 | 0.347 | 0.73 * |
| MNT8Q | Left | Model | 154.22 ± 9.66 | | | 37.17 ± 5.80 | | | 165.18 ± 10.12 | | |
| MNT16Q | Right | UG | 159.54 ± 7.25 | −3.165 | <0.001 | 38.19 ± 3.29 | −4.036 | <0.001 | 166.04 ± 7.42 | 0.206 | 0.83 * |
| MNT16Q | Right | Model | 159.89 ± 6.90 | | | 36.03 ± 4.82 | | | 163.36 ± 8.10 | | |
| MNT16Q | Left | UG | 159.28 ± 7.05 | −3.362 | <0.001 | 38.70 ± 3.66 | −3.669 | <0.001 | 164.66 ± 7.88 | −0.416 | 0.69 * |
| MNT16Q | Left | Model | 156.63 ± 8.02 | | | 37.50 ± 7.22 | | | 166.31 ± 7.04 | | |
| PoseNet | Right | UG | 159.54 ± 7.32 | 3.188 | <0.02 | 38.19 ± 3.32 | 0.209 | 0.83 * | 166.04 ± 7.50 | 2.253 | 0.08 * |
| PoseNet | Right | Model | 152.43 ± 28.40 | | | 40.04 ± 14.33 | | | 132.60 ± 59.35 | | |
| PoseNet | Left | UG | 159.28 ± 7.12 | 2.962 | <0.001 | 38.70 ± 3.69 | 0.943 | 0.38 * | 164.66 ± 7.96 | 3.375 | <0.001 |
| PoseNet | Left | Model | 148.09 ± 23.72 | | | 39.24 ± 20.49 | | | 120.96 ± 62.76 | | |
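
The t and p columns compare UG and model readings taken on the same participants. Assuming a paired design (the exact test variant is not stated in this section), the paired t statistic is the mean of the per-participant differences divided by its standard error. The Python sketch below computes only the statistic and its degrees of freedom with the standard library; a p-value would additionally require the Student t distribution (for example, `scipy.stats.ttest_rel`). The function name `paired_t` and the readings are hypothetical, and the sign of t depends on the order of subtraction.

```python
import math
import statistics


def paired_t(ug, model):
    """Paired t statistic for model - UG differences (assumed design).

    Returns (t, degrees_of_freedom). Requires at least two pairs and
    non-constant differences.
    """
    if len(ug) != len(model):
        raise ValueError("ug and model must have the same length")
    diffs = [m - u for u, m in zip(ug, model)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two paired measurements")
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    if sd_d == 0:
        raise ValueError("differences are constant; t is undefined")
    t = mean_d / (sd_d / math.sqrt(n))  # mean difference over its standard error
    return t, n - 1


# Hypothetical flexion readings (degrees) for five participants.
ug = [158.0, 162.0, 155.0, 160.0, 165.0]
model = [156.0, 163.0, 150.0, 158.0, 161.0]
t, df = paired_t(ug, model)
print(f"t = {t:.3f}, df = {df}")
```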

| HPE model | Side | Method | Flexion Mean ± SD (°) | Flexion t | Flexion p | Extension Mean ± SD (°) | Extension t | Extension p |
|---|---|---|---|---|---|---|---|---|
| MNL8Q | Right | UG | 128.48 ± 5.78 | 0.282 | 0.76 * | 6.35 ± 4.07 | −1.745 | 0.13 |
| MNL8Q | Right | Model | 108.25 ± 46.39 | | | 11.70 ± 11.58 | | |
| MNL8Q | Left | UG | 129.30 ± 5.92 | −2.150 | 0.04 | 5.72 ± 4.47 | −3.408 | 0.03 |
| MNL8Q | Left | Model | 111.46 ± 37.49 | | | 8.40 ± 7.08 | | |
| MNL16Q | Right | UG | 128.48 ± 5.78 | −3.201 | <0.001 | 6.35 ± 4.07 | 0.900 | 0.41 |
| MNL16Q | Right | Model | 103.39 ± 43.91 | | | 6.56 ± 4.07 | | |
| MNL16Q | Left | UG | 129.30 ± 5.92 | −1.129 | 0.27 * | 5.72 ± 4.47 | 0.178 | 0.87 |
| MNL16Q | Left | Model | 110.36 ± 36.56 | | | 6.55 ± 4.37 | | |
| MNT8Q | Right | UG | 128.48 ± 5.78 | −2.589 | 0.02 | 6.35 ± 4.07 | −4.113 | <0.001 |
| MNT8Q | Right | Model | 129.21 ± 25.87 | | | 10.98 ± 13.60 | | |
| MNT8Q | Left | UG | 129.30 ± 5.92 | 1.429 | 0.16 | 5.72 ± 4.47 | −5.036 | <0.001 |
| MNT8Q | Left | Model | 127.80 ± 26.83 | | | 9.66 ± 8.61 | | |
| MNT16Q | Right | UG | 128.48 ± 5.72 | −0.541 | 0.59 * | 6.35 ± 4.02 | 15.182 | <0.001 |
| MNT16Q | Right | Model | 126.43 ± 27.84 | | | 9.18 ± 6.19 | | |
| MNT16Q | Left | UG | 129.30 ± 5.86 | −1.083 | 0.30 * | 5.72 ± 4.43 | 9.639 | <0.001 |
| MNT16Q | Left | Model | 125.55 ± 25.89 | | | 7.98 ± 7.91 | | |
| PoseNet | Right | UG | 128.48 ± 5.78 | 3.394 | <0.001 | 6.35 ± 4.07 | 0.540 | 0.59 |
| PoseNet | Right | Model | 143.81 ± 6.63 | | | 6.78 ± 5.12 | | |
| PoseNet | Left | UG | 129.30 ± 5.92 | 1.941 | 0.07 * | 5.72 ± 4.47 | 3.264 | 0.02 |
| PoseNet | Left | Model | 140.29 ± 7.27 | | | 21.89 ± 38.10 | | |

| HPE model | Metric | Flexion, right (°) | Flexion, left (°) | Extension, right (°) | Extension, left (°) | Abduction, right (°) | Abduction, left (°) |
|---|---|---|---|---|---|---|---|
| MNL8Q | Bias ± SD | −0.16 ± 30.96 | 0.10 ± 13.65 | −1.63 ± 6.12 | −4.35 ± 6.64 | −6.78 ± 24.96 | −8.11 ± 37.26 |
| MNL8Q | LOA | −9.75 to 7.65 | −3.62 to 4.63 | −3.33 to 0.01 | −6.16 to −2.57 | −15.49 to 0.95 | −19.35 to 1.32 |
| MNL8Q | RMSE | 30.67 | 13.52 | 6.28 | 7.88 | 25.64 | 37.79 |
| MNL16Q | Bias ± SD | 2.99 ± 22.40 | 1.98 ± 11.92 | −3.58 ± 5.96 | −5.69 ± 7.15 | −8.62 ± 29.77 | −7.87 ± 34.44 |
| MNL16Q | LOA | −5.35 to 8.28 | −1.45 to 5.58 | −5.17 to −1.90 | −7.73 to −3.66 | −18.61 to 1.71 | −20.72 to 1.63 |
| MNL16Q | RMSE | 22.39 | 11.97 | 6.90 | 9.08 | 30.72 | 35.01 |
| MNT8Q | Bias ± SD | −1.78 ± 9.42 | −5.05 ± 10.13 | −2.56 ± 5.06 | −1.53 ± 6.21 | −2.01 ± 7.51 | 0.52 ± 10.79 |
| MNT8Q | LOA | −4.36 to 1.14 | −7.75 to −2.16 | −4.05 to −1.16 | −3.11 to 0.04 | −4.28 to 0.22 | −2.40 to 3.46 |
| MNT8Q | RMSE | 9.49 | 11.24 | 5.63 | 6.34 | 7.70 | 10.70 |
| MNT16Q | Bias ± SD | 0.35 ± 9.16 | −2.62 ± 8.93 | −2.16 ± 4.89 | −1.20 ± 7.74 | −2.68 ± 7.55 | 1.65 ± 8.38 |
| MNT16Q | LOA | −2.12 to 2.67 | −4.97 to −0.13 | −3.54 to −0.70 | −3.41 to 1.15 | −4.96 to −0.43 | −0.27 to 3.59 |
| MNT16Q | RMSE | 8.99 | 9.28 | 5.33 | 7.77 | 7.94 | 8.48 |
| PoseNet | Bias ± SD | −7.11 ± 29.38 | −11.18 ± 23.67 | 1.84 ± 14.79 | 0.53 ± 21.54 | −33.44 ± 58.64 | −43.70 ± 62.58 |
| PoseNet | LOA | −17.77 to 0.01 | −19.49 to −5.10 | −1.73 to 6.33 | −4.76 to 6.25 | −49.76 to −17.96 | −61.83 to −25.16 |
| PoseNet | RMSE | 29.96 | 25.98 | 14.76 | 21.34 | 67.01 | 75.84 |
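
The bias, SD, LOA, and RMSE rows summarize per-participant differences between each model and the UG. The Python sketch below computes these quantities, assuming bias = mean(model − UG), which matches the means reported above (for MNL8Q right flexion, 159.38 − 159.54 = −0.16). It uses the classic Bland-Altman limits, bias ± 1.96 SD. The reported LOA intervals are much narrower than that (for MNL8Q right flexion, −0.16 ± 1.96 × 30.96 spans roughly −60.8 to 60.5, against the reported −9.75 to 7.65), so they appear closer to a confidence interval for the mean bias. The sketch therefore also includes a percentile bootstrap interval for the bias as one technique of that kind. Which procedure produced the reported intervals is not stated in this section; the function names and readings are hypothetical.

```python
import math
import random
import statistics


def differences(ug, model):
    """Per-participant differences, taken as model - UG (assumed sign)."""
    if len(ug) != len(model) or len(ug) < 2:
        raise ValueError("need two equal-length sequences with at least two pairs")
    return [m - u for u, m in zip(ug, model)]


def agreement_summary(ug, model):
    """Bias, sample SD, Bland-Altman 95% limits of agreement, and RMSE."""
    diffs = differences(ug, model)
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    loa = (bias - 1.96 * sd, bias + 1.96 * sd)  # classic Bland-Altman limits
    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return {"bias": bias, "sd": sd, "loa": loa, "rmse": rmse}


def bootstrap_bias_ci(ug, model, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean difference."""
    diffs = differences(ug, model)
    rng = random.Random(seed)  # fixed seed so results are reproducible
    means = sorted(
        statistics.mean(rng.choices(diffs, k=len(diffs))) for _ in range(n_boot)
    )
    k = int(round(alpha / 2 * n_boot))  # resamples trimmed from each tail
    return means[k], means[n_boot - 1 - k]


# Hypothetical readings (degrees) for five participants.
ug = [158.0, 162.0, 155.0, 160.0, 165.0]
model = [156.0, 163.0, 150.0, 158.0, 161.0]
print(agreement_summary(ug, model))
print(bootstrap_bias_ci(ug, model))
```

Applied to one movement and side at a time, `agreement_summary` mirrors the bias, SD, and RMSE rows. The two intervals answer different questions (the spread of individual differences versus the uncertainty in the mean bias), so comparing both against the LOA row is one way to check which kind of interval a table reports.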

| HPE model | Metric | Flexion, right (°) | Flexion, left (°) | Extension, right (°) | Extension, left (°) |
|---|---|---|---|---|---|
| MNL8Q | Bias ± SD | −20.23 ± 46.09 | −17.84 ± 38.27 | 5.34 ± 12.09 | 2.68 ± 6.52 |
| MNL8Q | LOA | −33.47 to −7.06 | −31.34 to −7.26 | 2.48 to 8.66 | 0.84 to 4.55 |
| MNL8Q | RMSE | 49.94 | 41.89 | 13.12 | 6.99 |
| MNL16Q | Bias ± SD | −25.08 ± 44.81 | −18.94 ± 37.22 | 0.21 ± 7.17 | 0.83 ± 6.32 |
| MNL16Q | LOA | −38.52 to −13.29 | −28.43 to −10.67 | −1.52 to 2.08 | −1.01 to 2.49 |
| MNL16Q | RMSE | 50.98 | 41.45 | 7.11 | 6.32 |
| MNT8Q | Bias ± SD | 0.73 ± 25.68 | −1.49 ± 25.99 | 4.63 ± 14.83 | 3.93 ± 8.41 |
| MNT8Q | LOA | −8.34 to 7.81 | −10.34 to 4.78 | 1.52 to 8.24 | 2.00 to 5.98 |
| MNT8Q | RMSE | 25.44 | 25.78 | 15.40 | 9.21 |
| MNT16Q | Bias ± SD | −2.04 ± 27.57 | −3.75 ± 25.23 | 2.79 ± 6.01 | 2.26 ± 8.47 |
| MNT16Q | LOA | −9.99 to 4.64 | −11.85 to 3.07 | 1.24 to 4.40 | −0.17 to 4.76 |
| MNT16Q | RMSE | 27.39 | 25.27 | 6.66 | 8.74 |
| PoseNet | Bias ± SD | 15.33 ± 7.28 | 10.99 ± 8.22 | 0.42 ± 5.60 | 16.17 ± 36.72 |
| PoseNet | LOA | 13.07 to 17.32 | 8.60 to 13.55 | −1.12 to 1.98 | 8.21 to 24.07 |
| PoseNet | RMSE | 16.94 | 13.68 | 5.89 | 39.91 |
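
RMSE combines systematic error (the bias) and random error (the SD of the differences), so the three rows of the table above are linked. Writing $d_i$ for the model − UG difference of participant $i$, $\bar d$ for the bias, and $s_d$ for the sample SD over $n$ participants, the following identity holds (the sign convention does not affect it):

$$
\mathrm{RMSE}^{2} = \frac{1}{n}\sum_{i=1}^{n} d_i^{2} = \bar d^{\,2} + \frac{n-1}{n}\, s_d^{2},
\qquad
s_d^{2} = \frac{1}{n-1}\sum_{i=1}^{n}\left(d_i - \bar d\right)^{2}.
$$

As a rough check that ignores the $(n-1)/n$ factor, the PoseNet right flexion row gives

$$
\sqrt{15.33^{2} + 7.28^{2}} = \sqrt{235.01 + 53.00} \approx 16.97,
$$

slightly above the reported RMSE of 16.94. The small gap is consistent with the $(n-1)/n$ factor if the reported SD is a sample SD, although the sample size is not stated in this section.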

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Moreira, R.; Teixeira, S.; Fialho, R.; Miranda, A.; Lima, L.D.B.; Carvalho, M.B.; Alves, A.B.; Bastos, V.H.V.; Teles, A.S. Validity Analysis of Monocular Human Pose Estimation Models Interfaced with a Mobile Application for Assessing Upper Limb Range of Motion. Sensors 2024, 24, 7983. https://doi.org/10.3390/s24247983
