Abstract

The epidural injection is a medical intervention that delivers therapeutics directly into the vicinity of the spinal cord for pain management. Because of this proximity, imprecise insertion of the needle may result in irreversible damage to the nerves or spinal cord. This study explores enhancing procedural accuracy by integrating a telerobotic system and augmented reality (AR) assistance. Tele-kinesthesia is achieved using a leader–follower integrated system, and stable force feedback is provided using a novel impedance-matching force rendering approach. The AR component employs a magnetic-tracker-based approach for real-time 3D model projection onto the patient’s body, aiming to augment the physician’s visual field and improve needle insertion accuracy. Preliminary results indicate that our AR-enhanced robotic system may reduce the cognitive load and improve the accuracy of epidural needle insertion (ENI), highlighting the promise of AR technologies in complex medical procedures. However, further studies with larger sample sizes and more diverse clinical settings are needed to comprehensively validate these findings. This work lays the groundwork for future research into integrating AR into medical robotics, potentially transforming clinical practices by enhancing procedural safety and efficiency.

1. Introduction

1.1. Background

Epidural needle insertion (ENI) is a percutaneous clinical technique performed frequently in clinics for a variety of purposes, e.g., pain management and regional/neuraxial blockage. This technique involves inserting and maneuvering a fine, flexible needle through the skin and subcutaneous soft tissue so that the tip of the flexible needle enters the epidural space (ES). The ENI technique is a “blind” minimally invasive procedure [] in the sense that there is no direct visual feedback from the trajectory of the needle tip through the tissues []. Traditionally, once the epidural needle is positioned, the syringe is removed to check for the presence of spinal fluid or blood at the needle hub, indicating potential misplacement into the subarachnoid space or a blood vessel, respectively []. This is followed by an aspiration test with a small syringe to ensure that neither cerebrospinal fluid (CSF) nor blood can be aspirated, confirming the needle’s correct placement within the epidural space, which is 3 to 5 cm deep from the skin, with a height of 8 to 10 mm. While ultrasound (US) guidance can enhance needle trajectory visualization, its use in ENI is not common due to the bimanual nature of the procedure and the additional costs involved, making traditional observational and manual confirmation methods the standard practice because of their reliability and simplicity [,].

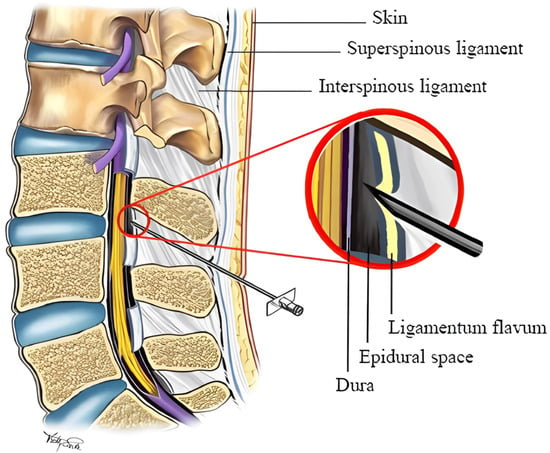

The current standard of care for determining the needle passage through tissue layers and its penetration into the ES is the loss-of-resistance (LOR) technique []. For LOR, the physician connects the needle to a syringe filled with saline. The physician then inserts the needle through the soft tissue while pressing the syringe’s plunger. While the needle tip passes through different soft tissues, the physician feels different resistance levels against the saline injection. Eventually, when the needle tip enters the ES, the resistance against the saline injection drops drastically. The physician may then confirm the needle placement in the ES by US. Figure 1 shows a schematic of the lumbar anatomy and the ENI procedure. LOR can also be performed using air. Both air and saline are used to detect the passage of the needle from the denser ligamentum flavum into the relatively less dense epidural space, with the change in resistance felt by the practitioner serving as a critical indicator. While saline provides a more tactile sensation due to its fluid nature, some clinicians favour air due to factors such as a potentially lower incidence of complications like dural puncture with cerebrospinal fluid leakage, known as a wet tap. The choice between air and saline often depends on the clinician’s training and experience, as well as the specific clinical scenario.

Figure 1.

A schematic of the lumbar spine anatomy during an epidural needle insertion procedure [].

Because of the deep location of the ES, its small size (7 mm wide, 10 mm high, and 5 mm deep), and its proximity to the dura (and spinal cord), the risks of cord puncture and geometrical miss are high in this procedure []. Studies have shown that the most common complications of ENI are positional overshoot and accidental puncture of the dura mater (up to 15% []). In addition, an epidural injection session typically takes up to 45 min, most of which is spent on the manual insertion of the needle by trial and error [].

1.2. Alternative State-of-the-Art Approaches

Teleoperated robot-assisted needle insertion (RNI) systems have been successfully adopted for needle insertion into other deep organs, e.g., the prostate [] and breast nodules []. RNI systems have precise and repeatable needle trajectory control advantages and enable physicians to perform teleoperated RNI procedures []. Also, studies have shown that RNI systems can perform semi-autonomous needle insertions with a surgeon-in-the-loop control framework. Specific RNI systems for ENI procedures have recently been proposed that adopt similar system architectures [,]. Some studies have also proposed custom-designed needles equipped with tip sensors to detect epidural penetration. However, using sensor-equipped needles is not a clinically favourable option due to cost considerations and availability limitations. Also, most of the proposed RNI systems for ENI procedures rely on an imaging modality as a source of intraoperative guidance. The proposed RNI systems typically require preoperative trajectory planning and intraoperative model-based needle control with trajectory error correction.

Clinically, the major limitations of the proposed RNI systems are the following:

- They rely on preoperative 3D imaging (MRI) for planning;

- They involve image-based tissue penetration detection, which is prone to error due to large deformations of the soft tissue [];

- An imaging device needs to be integrated with the robot controller.

Studies have proposed various designs for needle insertion systems []. Robotic systems have been used for needle insertion into soft tissue, e.g., the prostate [,,,], breast [,,], lung [], and brain []. The proposed robotic platforms in the literature have mainly adopted two design approaches: strap-secured [] and robotic arm-mounted []. While strap-secured needle insertion robots allow superior mobility and a faster setup, they suffer from insertion point instability []. Thus, in this study, a robotic arm-mounted design approach was adopted.

Using haptic technology in robotic surgery has become integral in medical procedures, allowing clinicians to interact with digital simulations through tactile feedback. The authors have previously developed medical technologies for force-sensitive interventions, such as laparoscopic, cardiac intraluminal, and percutaneous interventions, e.g., [,,,,,,,,,,]. They have also shown that haptic technology enhances surgery precision by enabling the feeling of textures and resistance as if interacting with real tissues, thus improving spatial awareness and decision-making during operations where visual cues are limited [,]. These systems provide real-time tactile sensations that significantly enhance procedural accuracy and safety, particularly in scenarios that demand high tactile feedback []. Such advancements in haptic feedback not only improve the execution of complex medical tasks but also reduce the likelihood of complications, marking a substantial progression in medical practice.

Recent advancements in medical technology have brought augmented reality (AR) to the forefront, particularly in its application to spinal surgery [,]. AR-based methods have shown significant promise in reducing the need for intraoperative X-ray imaging, a standard yet often risky practice in these procedures []. The essence of AR navigation lies in its ability to display procedural imagery directly onto wearable devices or screens. This innovative approach allows surgeons to visualize surgical instruments in conjunction with the patient’s anatomy in real time, greatly enhancing precision and safety. Notably, integrating AR with robotic precision has marked a new era in spinal surgery and related procedures. This combination offers unparalleled accuracy, particularly in spinal injections, drawing parallels to the advancements witnessed in other areas of spinal surgery. The precision of robotic systems, known for their ability to execute movements with meticulous accuracy, complements the real-time visual guidance provided by AR. This synergy enhances the surgeon’s ability to perform complex procedures with greater confidence and reduced risk.

Several studies have underscored the efficacy of AR navigation systems, especially when compared to traditional freehand methods [,]. Research has consistently shown that the use of AR in spinal procedures, such as the placement of pedicle screws, results in a significantly higher level of accuracy. More importantly, this increase in accuracy is achieved without the need for intraoperative fluoroscopy, a technique that, while useful, exposes patients to radiation. The integration of AR has been linked to a notable decrease in radiation exposure, in line with the goal of minimizing patient risk during surgical procedures [,].

Furthermore, using AR in spinal surgery enhances procedural accuracy and contributes to overall improvements in surgical outcomes []. By providing surgeons with a detailed, three-dimensional view of the surgical site, AR navigation systems facilitate more precise incisions, reduced tissue damage, and potentially quicker patient recovery times. These benefits are of particular importance in spinal surgeries, where precision is paramount to avoiding complications and ensuring successful outcomes [].

In conclusion, the fusion of AR technology with robotic precision represents a significant leap forward in spinal surgery and related interventions. As these technologies continue to evolve and become more integrated into surgical practices, they hold promise for revolutionizing spinal care, offering safer, more efficient, and highly accurate procedures for patients worldwide [,,].

1.3. Objectives and Contributions

To address the limitations of RNI systems for ENI procedures, this study aimed to design, test, and validate a hardware–software integrated needle driver capable of robustly rendering the total needle insertion force to the operator. In addition, it was hypothesized that adapting the authors’ previously proposed impedance-matching method as an alternative to direct force rendering would increase the safety of teleoperation through enhanced stability. More specifically, the contributions of this study are as follows:

- A novel needle driver system compatible with a commercial medical robot was designed and prototyped, and its performance was validated for ENI tasks;

- A novel hardware–software integrated system utilizing the proposed needle driver system was developed and integrated with the commercial robot;

- The authors’ impedance-matching method was extended to be usable for ENI procedures, and its accuracy in force rendering was studied;

- The integrated system was tested for usability and performance in a multi-user study.

2. Methodology

2.1. Proposed System Architecture

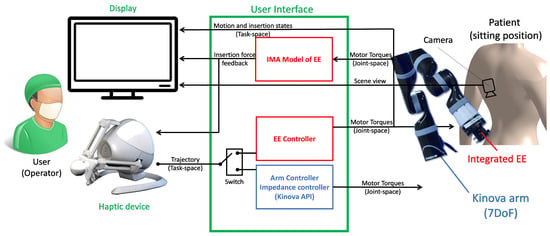

As discussed in Section 1.2, the proposed system was selected to be an arm-mounted system for better stability. Also, leveraging the pre-built control modes of a commercial robot, a 7-degree-of-freedom (DoF) serial manipulator (Kinova Gen2 Spherical, Kinova, Boisbriand, QC, Canada) was used. Figure 2 shows a schematic of the proposed system architecture.

Figure 2.

The system architecture of the proposed hardware–software integrated robotic ENI system.

As shown in Figure 2, the system has two operational (control) modes: (a) the robotic arm controller (positioning of the arm) and (b) the end-effector controller (control of needle insertion depth). For the arm positioning mode, two controllers are implemented, i.e., an admittance controller to allow the local aide, e.g., a nurse, to pose the robotic arm in an aiming position on the patient’s skin and a position follower controller for full telepositioning of the arm. A direct insertion force controller (push thrust) was developed for the needle insertion mode. The force controller regulates the thrust on the epidural needle proportionally to the deviation of the haptic device from its zero position. Meanwhile, a force sensor measures the needle insertion force and relays it back to the impedance-matching (IM) force renderer that regulates the force command on the haptic user interface. The system uses a 3-DoF haptic input device (Falcon, Force Dimensions, Nyon, Switzerland) as the operator interface. The haptic device is capable of rendering up to a 15 N insertion force. In addition, the serial manipulator is equipped with a 6-DoF force–torque sensor (Gamma, ATI Automation Industries, Apex, NC, USA). The nominal force resolution of the Gamma sensor is 0.01 N.

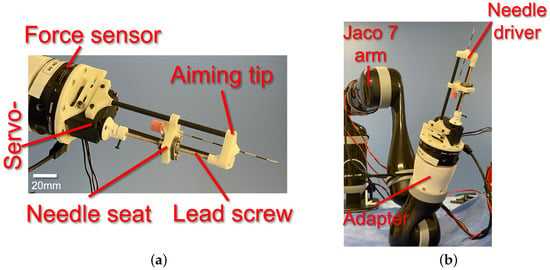

2.2. Needle Driver Design

Figure 3 depicts this study’s prototyped needle driver end-effector. The needle driver provides a total stroke range of 90 mm for an epidural needle with 16–25 G size. The needle driver utilizes a 1 mm pitch lead-screw-and-nut mechanism to drive the needle seat forward and backward (Figure 3a). The lead screw is coupled with a 3D-printed spring coupler to a servo motor (XL-430, Dynamixel, Lake Forest, CA, USA). The servo motor provides an encoder reading of its shaft position with 1024 counts per revolution, resulting in a 1/1024 mm accuracy for the insertion depth measurement. The servo motor is powered and controlled with an OpenCR 1.0 board (Robotis, Seoul, Republic of Korea). An aiming piece (tip) with a central hole was designed and prototyped to provide a line of sight (aiming) to the needle. Also, the aiming tip is connected to the servo motor using two straight fibre-reinforced carbon tubes. In addition, the lead screw serves as the third support, constraining the aiming tip from rotational deviation with respect to the needle driver base.

Figure 3.

(a) Prototyped needle driver end-effector with components and (b) integrated needle driver with Kinova Gen2 serial manipulator (Jaco 7 arm) [].

The needle driver was installed on the Gamma ATI force–torque sensor using a customized 3D-printed adapter. In turn, the sensor was installed on the manipulator’s end-effector using a second customized 3D-printed adapter (Figure 3b).
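The relationship between the encoder reading and the insertion depth described in this section can be sketched in a few lines. This is a minimal illustration rather than the authors' firmware: it takes the 1024 counts per revolution, the 1 mm pitch, and the 90 mm stroke from the text, and it assumes a single-start lead screw (lead equal to pitch) and a multi-turn count referenced to a hypothetical `zero_counts` home position.

```python
COUNTS_PER_REV = 1024    # encoder resolution as stated in the text
LEAD_MM_PER_REV = 1.0    # lead-screw pitch as stated in the text (single-start assumed)
STROKE_MM = 90.0         # total stroke of the needle driver


def counts_to_depth_mm(counts, zero_counts=0):
    """Convert a multi-turn encoder reading to needle travel in millimetres."""
    depth = (counts - zero_counts) * LEAD_MM_PER_REV / COUNTS_PER_REV
    if not 0.0 <= depth <= STROKE_MM:
        raise ValueError(f"depth {depth:.3f} mm is outside the {STROKE_MM} mm stroke")
    return depth


def depth_to_counts(depth_mm, zero_counts=0):
    """Inverse mapping, rounded to the nearest encoder count."""
    return zero_counts + round(depth_mm * COUNTS_PER_REV / LEAD_MM_PER_REV)


if __name__ == "__main__":
    print(counts_to_depth_mm(1024))   # one full revolution of the lead screw
    print(depth_to_counts(45.0))      # mid-stroke target
```

Under these assumptions, one encoder count corresponds to 1/1024 mm of needle travel, which is the depth resolution quoted above.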

2.3. Cartesian Admittance Controller

A Cartesian admittance controller was developed and implemented to allow the local aide, e.g., the nurse, to position the robotic arm in an aiming position relative to the patient’s spine. The controllers are implemented using C++ object-oriented programming and communicate with the manipulator’s low-level controller (Kinova API in C++), haptic device controller, and force sensor portal using a parallel shared memory protocol.

2.4. Arm Telepositioning Controller

For the arm telepositioning controller, first, a Cartesian admittance controller was designed. The manipulator has a native Cartesian velocity controller, which was leveraged to achieve the admittance behaviour. The Gamma force sensor provides real-time external manipulation forces in the end-effector’s coordinate system of the form

$$\mathbf{f}^{e} = \begin{bmatrix} f_x & f_y & f_z \end{bmatrix}^{\top}. \tag{1}$$

Given that the Gamma force sensor is installed on the end-effector, it measures external forces applied on the arm’s end-effector in its local coordinate system. To map the measured forces to global coordinates, the end-effector’s local-to-global rotation matrix $\mathbf{R}$ is used:

$$\mathbf{f}^{g} = \mathbf{R}\,\mathbf{f}^{e}. \tag{2}$$

In real time, the rotation matrix is computed every time the end-effector’s Euler angles change (event-based update). The Kinova API provides Euler angles based on a rotated coordinate system with the convention, i.e., the Tait–Bryan convention. In the end, a proportional regulation controller was designed for admittance control with the following form:

$$\mathbf{v}_{a} = \mathbf{K}\,\mathbf{f}^{g}, \tag{3}$$

where $\mathbf{K}$ is the diagonal gain matrix. For simplicity, $\mathbf{K} = k\,\mathbf{I}_{3}$ is adopted, with $k$ as the proportional gain selected by the user and $\mathbf{I}_{3}$ as the 3-by-3 identity matrix. The constant $k$ also determines the sensitivity of the end-effector to external force. In this study, exhibited stable admittance behaviour and corresponded to a total linear velocity of .

In addition, the desired Cartesian velocity of the end-effector is augmented by the remote operator’s input on the haptic device. The augmented term to the desired end-effector velocity is of the form

$$\mathbf{v}_{h} = k_{h}\,\mathbf{R}_{h}\,\boldsymbol{\delta}, \tag{4}$$

with $\boldsymbol{\delta}$ being the displacement of the haptic device from its original (resting) position along its x-, y-, and z-axes caused by the remote operator’s hand. Also, the empirical gain $k_{h}$ and rotation matrix $\mathbf{R}_{h}$ map the haptic device’s coordinate system to the end-effector coordinate system. Thus, the total desired velocity of the end-effector is

$$\mathbf{v}_{d} = \mathbf{v}_{a} + \mathbf{v}_{h} = \mathbf{K}\,\mathbf{f}^{g} + k_{h}\,\mathbf{R}_{h}\,\boldsymbol{\delta}, \tag{5}$$

where is determined empirically as

Equation (5) shows that the end-effector’s velocity in positioning mode was determined by both the remote operator’s command and the local external forces on the end-effector. If the local external force is exerted due to the collision of the end-effector with an obstacle in the task space, e.g., the patient’s body or the environment, a negative contact force will oppose (override) the remote operator’s command. Thus, this could lead to a safer remote positioning of the end-effector. Also, should the external force be additive to the remote operator’s command (e.g., if a local aide assists in correctly positioning the needle driver), assistive co-manipulation, e.g., for positioning correction or overdrive protection, could be possible.
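The positioning-mode behaviour described in this section, in which a locally measured force and the remote operator's haptic displacement both contribute to the commanded end-effector velocity, can be sketched as follows. This is an illustrative sketch only: the Z-Y-X (yaw, pitch, roll) rotation order, the function names, and all gain values are assumptions made for the example and are not taken from the Kinova API or from the study.

```python
import math


def rotation_zyx(yaw, pitch, roll):
    """Local-to-global rotation R = Rz(yaw) * Ry(pitch) * Rx(roll); angles in radians.
    The Z-Y-X order is an assumption for this sketch."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def desired_velocity(f_local, euler_zyx, k, delta_haptic, k_h, r_haptic):
    """Sum of the admittance term (gain k times the force in global coordinates)
    and the haptic term (gain k_h times the rotated haptic displacement)."""
    f_global = mat_vec(rotation_zyx(*euler_zyx), f_local)
    v_admittance = [k * f for f in f_global]
    v_haptic = [k_h * d for d in mat_vec(r_haptic, delta_haptic)]
    return [a + h for a, h in zip(v_admittance, v_haptic)]


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    # Hypothetical values: operator pushes forward, a contact force pushes back.
    v = desired_velocity([0.0, 0.0, -2.0], (0.0, 0.0, 0.0), 0.01,
                         [0.0, 0.0, 0.05], 0.4, identity)
    print(v)
```

In the usage example, the opposing contact force cancels the operator's command, which is the overriding behaviour discussed above for collisions in the task space.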

2.5. Needle Insertion Force Controller

After the needle driver is positioned for insertion, with the needle not yet extended, the remote operator is assumed to switch control to the needle insertion force controller upon initial contact with the patient’s skin. In this use case, the desired thrust force on the needle along its longitudinal axis (local z-axis, Figure 4) is regulated through the torque control of the servo motor. The utilized servo controller, OpenCR, provides an internal low-level torque controller interface. The desired needle insertion force command is defined as proportional to the displacement of the haptic device from its original position in the local forward axis (local x). Also, given the lead-screw mechanism utilized in the design, a linear relationship between the insertion force and the servo motor’s torque is assumed. Because friction in the lubricated lead screw and lead nut is considered negligible, frictional losses are neglected. Therefore, the desired servo motor torque is defined as

where is the cross-product operator, and is a heuristic selected by the user on the graphical user interface as the traction coefficient converting the insertion force to servo motor torque. The command is calculated in real time and sent to the servo motor driver as the desired set torque.

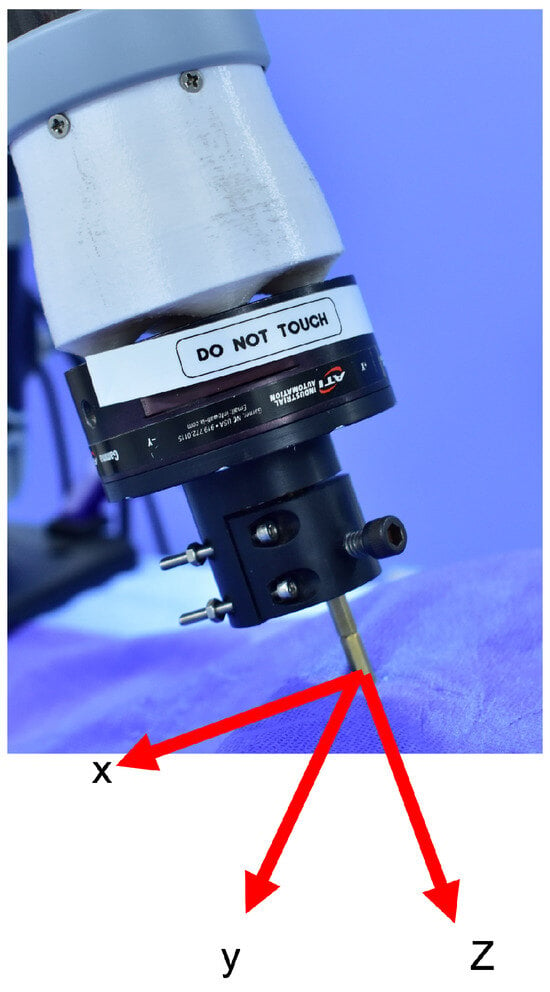

Figure 4.

End-effector’s local coordinate system.

Meanwhile, to avoid needle breakage if the patient moves perpendicularly to the needle, the end-effector remains under admittance control on its local x-y plane. This combination of force control along the local z-axis and admittance control on the x-y plane also hypothetically increases the safety of remote needle insertion.
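To make the assumed linear force-to-torque relationship concrete, the sketch below computes a torque command from the haptic displacement using a user-selected traction coefficient, as described above. As a point of comparison, it also includes the textbook relation for an ideal lead screw, torque = force × lead / (2π × efficiency), which is not stated in the text and is offered only as one way to choose a starting value for the coefficient. All names and numeric values are hypothetical.

```python
import math


def ideal_leadscrew_torque(force_n, lead_m=0.001, efficiency=1.0):
    """Torque (N*m) that produces an axial thrust on an ideal lead screw.
    Textbook relation, used here as an assumption; 1 mm lead is from the text."""
    return force_n * lead_m / (2.0 * math.pi * efficiency)


def torque_command(haptic_dx_m, force_gain_n_per_m, traction_coeff):
    """Desired servo torque from the haptic displacement along its forward axis.
    force_gain_n_per_m and traction_coeff are hypothetical tuning parameters."""
    desired_force = force_gain_n_per_m * haptic_dx_m   # force proportional to displacement
    return traction_coeff * desired_force              # linear force-to-torque mapping


if __name__ == "__main__":
    coeff = 0.001 / (2.0 * math.pi)   # ideal, frictionless coefficient for a 1 mm lead
    print(torque_command(0.02, 500.0, coeff))   # 20 mm displacement, 500 N/m gain
    print(ideal_leadscrew_torque(10.0))         # same 10 N thrust via the ideal relation
```

In practice the coefficient would be tuned on the device, since the real mechanism has friction and coupler compliance that the ideal relation ignores.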

2.6. IM Force Estimation and Rendering

To render the needle insertion force at the remote operator’s hand, studies have mainly used the direct force reflection (DFR) approach [,,]. In DFR, the end-effector forces are measured using a force sensor or estimated using observers. We have previously shown that, for remote surgical interventions, DFR may suffer from instability caused by a large delay or interruption in communication between the remote haptic device and the robot []. If a DFR force rendering were to be adopted in this study, the desired haptic force would be of the form

Defining T as a one-directional time delay between the leader and follower, the error of force feedback would be of the form

This would depend on T and could potentially surpass the recoverability threshold of the input gradient to the haptic device.
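The dependence of the force-feedback error on the delay T can be illustrated numerically. The sketch below assumes that, under DFR, the haptic device renders the force sample that was measured T seconds earlier, so the error is the difference between the current and the delayed force; this interpretation, the force profile, and all values are assumptions for illustration and are not data from the study.

```python
def force_profile(t):
    """Hypothetical insertion force (N): linear build-up, then a sudden drop at t = 1 s."""
    return 5.0 * t if t < 1.0 else 1.0


def mean_reflection_error(delay_s, dt=0.001, duration_s=2.0):
    """Mean |f(t) - f(t - T)| over the trace when the rendered force lags by T."""
    n = int(round(duration_s / dt)) + 1
    lag = int(round(delay_s / dt))                      # delay expressed in samples
    forces = [force_profile(i * dt) for i in range(n)]
    errors = [abs(forces[i] - forces[max(i - lag, 0)]) for i in range(n)]
    return sum(errors) / n


if __name__ == "__main__":
    for delay in (0.0, 0.01, 0.05, 0.2):
        print(delay, mean_reflection_error(delay))
```

With this profile, the mean error grows with the delay, and the largest discrepancies occur right after the sudden force drop, which is the event the operator most needs to feel accurately.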

To alleviate this problem, in this study, we adopted the proposed impedance-matching (IM) method of [] for the robust force rendering of the insertion force. The insertion force feedback at the haptic device is calculated as follows:

where is the IM-based estimated insertion force, calculated as

where is calculated using the method in [] at the local machine of the remote operator based on received force reading from the Gamma force sensor in the past 50 ms.

As suggested, the passage of the needle tip through layers of soft tissue would hypothetically be identifiable by the user through sudden drops in the insertion force, perceived through haptic feedback []. Thus, one of the end-points of the validation studies in this work was to determine the needle tip penetration depth based on pure insertion force feedback in the absence of any imaging modality.
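The idea that layer passages appear as sudden drops in the insertion force can also be expressed as a simple detector on a sampled force trace. The following is a minimal sketch under stated assumptions: the window length, the drop threshold, and the synthetic trace are hypothetical, and in the study the drops were perceived by the operator through haptic feedback rather than detected automatically.

```python
def detect_force_drops(forces, window=20, min_drop=0.5):
    """Return sample indices where the force fell by at least `min_drop` newtons
    relative to the maximum of the preceding `window` samples."""
    events = []
    i = window
    while i < len(forces):
        drop = max(forces[i - window:i]) - forces[i]
        if drop >= min_drop:
            events.append(i)
            i += window          # skip ahead so one puncture is reported once
        else:
            i += 1
    return events


if __name__ == "__main__":
    # Synthetic trace: two force build-ups, each ending in a sudden drop.
    trace = ([0.03 * i for i in range(100)]
             + [1.5 + 0.025 * i for i in range(100)]
             + [1.0] * 50)
    print(detect_force_drops(trace))
```

A detector of this kind could, for example, be used offline to label the two layer passages in recorded traces; the thresholds would need tuning for a given needle gauge and phantom.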

2.7. Augmented Reality

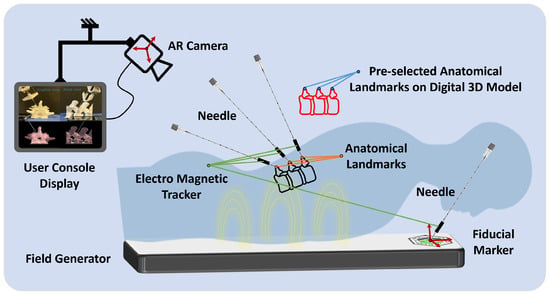

For the projection of the 3D model onto a 3D-printed counterpart, utilization of a fiducial marker is paramount within the augmented reality framework. This marker is integral to the AR system, providing a reference point for accurately determining the position and orientation of the AR camera (see Figure 5). This alignment is crucial for the projection’s fidelity, enhancing the simulation’s utility and realism in medical training and planning. The calibration framework for the AR system primarily involves using a fiducial marker, specifically a Vuforia tag.

Figure 5.

A representative illustration of the registration process using the magnetic tracking system. This diagram demonstrates the calibration and mapping of anatomical landmarks from the patient’s body to the corresponding points on a digital 3D model, enabling a precise augmented reality overlay for surgical planning and guidance.

The calibration process begins with a calibration set in which the relative position of the Vuforia fiducial marker is pre-determined. The position of this marker is ascertained using a 3D measurement system. The primary focus is ensuring the accurate rendering of augmented reality objects in the scene by identifying the AR camera’s pose (position and orientation). Pose estimation is crucial for stable and accurate projections onto the 3D-printed model. In this setup, the Vuforia software development kit (version 10.5) is employed for real-time identification of the camera’s pose relative to the fiducial tag.

The initial step involves generating the anatomical model to create patient-specific 3D holograms for display to the physician. This is achieved by converting a patient’s lumbar CT scan into a detailed 3D digital twin representation using the Mimics Innovation Suite 2021 (Materialise, Belgium). This process allows for the precise and tailored visualization of a patient’s anatomy, significantly enhancing the physician’s ability to plan and execute medical procedures with increased accuracy and confidence.

2.8. Digital Twin Registration

The AR application begins with the registration of the fiducial marker’s origin, a critical step for determining the AR camera’s spatial location. The marker can be positioned on the patient’s lower back, typically close to the L3–L4 region, to provide a stable reference for AR registration. This placement ensures alignment with anatomical landmarks and falls within the calibrated field of view of the AR camera. A needle with a magnetic tracker (Aurora, Northern Digital Inc., Waterloo, ON, Canada) is used to register the marker’s position. Subsequently, essential anatomical landmarks are identified on the patient’s anatomy. These landmarks act as reference points that establish a direct correspondence between the actual patient anatomy and the digital 3D model derived from medical imaging data. Using the magnetic tracking system, the selected anatomical landmarks are aligned with their digital counterparts on the 3D model. In this study, three regions of the 3D-printed spinal model (crests of the spinous processes of L4, L5, and L6) were localized using an electromagnetic probe. They were registered to their corresponding regions on the 3D model through static 3D registration. This mapping is achieved through a calibration process in which the magnetic tracker locates the predefined points on the patient’s body in the physical space (Figure 5). The system then aligns these points with the corresponding landmarks of the digital 3D model, expressed in the fiducial tag coordinate system, to overlay the model onto the patient’s anatomy. This alignment is crucial for ensuring that the AR projection accurately represents the patient’s internal structures, providing a real-time, augmented view that enhances the surgeon’s ability to navigate and plan surgical interventions.
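The landmark-based alignment described in this section amounts to finding a rigid transform (rotation and translation) that maps model landmarks onto their tracked counterparts. The text does not specify the algorithm behind the static 3D registration, so the sketch below substitutes a simple technique: it builds an orthonormal frame from three non-collinear landmarks in each space and composes the two frames. This is exact only for noise-free points; with measurement noise, a least-squares method such as the SVD-based Kabsch algorithm is a common alternative. All coordinates are hypothetical.

```python
import math


def sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def unit(a):
    n = math.sqrt(sum(x * x for x in a))
    return [x / n for x in a]


def frame(p0, p1, p2):
    """Orthonormal axes (as rows) built from three non-collinear landmarks."""
    x = unit(sub(p1, p0))
    z = unit(cross(x, sub(p2, p0)))
    y = cross(z, x)
    return [x, y, z]


def register(model_pts, tracked_pts):
    """Return (R, t) such that tracked = R * model + t for the three landmarks."""
    fm = frame(*model_pts)      # axes expressed in model coordinates
    ft = frame(*tracked_pts)    # the same axes expressed in tracker coordinates
    # R = Ft^T * Fm maps each model-frame axis onto its tracker-frame counterpart.
    r = [[sum(ft[k][i] * fm[k][j] for k in range(3)) for j in range(3)]
         for i in range(3)]
    t = sub(tracked_pts[0], apply(r, [0.0, 0.0, 0.0], model_pts[0]))
    return r, t


def apply(r, t, p):
    """Apply the rigid transform to a single point."""
    return [sum(r[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]


if __name__ == "__main__":
    model = [[0.0, 0.0, 0.0], [30.0, 0.0, 0.0], [0.0, 40.0, 5.0]]
    tracked = [[10.0, -5.0, 2.0], [10.0, 25.0, 2.0], [-30.0, -5.0, 7.0]]
    r, t = register(model, tracked)
    print(apply(r, t, [5.0, 5.0, 5.0]))   # a model point mapped into tracker space
```

Once the transform is known, any vertex of the digital model can be mapped into the tracker (and hence fiducial) coordinate system for the overlay.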

3. Validation Studies

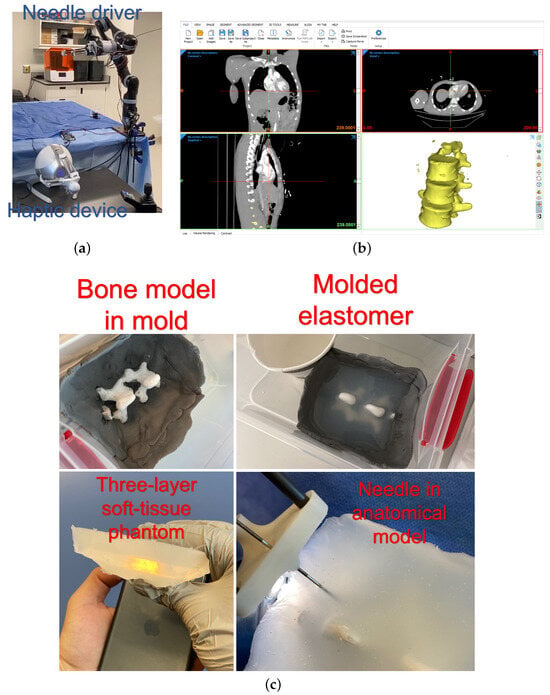

3.1. Setup

For validation studies, the setup shown in Figure 6a, comprising the needle driver installed on the serial manipulator and the haptic device, was used. Also, an anatomical 3D model based on a real patient CT scan was fabricated using 3D printing. Three layers of soft-tissue-mimicking material (Ecoflex 00-20, Ecoflex 00-50, and Ecoflex 00-20, respectively) were sequentially moulded on the 3D-printed spinal bone model. Figure 6b,c show the 3D-reconstructed CT scan and fabricated anatomical model. While the AR model is based on preoperative CT images, intraoperative consistency is ensured using a magnetic tracking system. Fiducial markers serve as landmarks that allow the system to match the model to real-time tracking information. The allowable needle path deviation is 1.5 mm. The deviation is checked continuously, and the operator is alerted if it exceeds this threshold. This check is intended to maintain the accuracy of the needle trajectory and of the AR preview.
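The continuous deviation check described above can be sketched as a point-to-line distance test against the 1.5 mm limit. This is a minimal illustration under stated assumptions: the planned path is taken to be a straight line from an entry point to a target point, the tip position is assumed to come from the magnetic tracker in the same coordinate system, and the function names and coordinates are hypothetical.

```python
import math

DEVIATION_LIMIT_MM = 1.5   # allowable needle path deviation stated in the text


def path_deviation_mm(tip, entry, target):
    """Perpendicular distance from the tracked tip to the planned entry-to-target line."""
    d = [target[i] - entry[i] for i in range(3)]
    w = [tip[i] - entry[i] for i in range(3)]
    length_sq = sum(x * x for x in d)
    s = sum(w[i] * d[i] for i in range(3)) / length_sq   # projection parameter along the path
    closest = [entry[i] + s * d[i] for i in range(3)]
    return math.dist(tip, closest)


def deviation_alarm(tip, entry, target, limit_mm=DEVIATION_LIMIT_MM):
    """True when the tip has strayed further from the planned path than the limit."""
    return path_deviation_mm(tip, entry, target) > limit_mm


if __name__ == "__main__":
    entry, target = [0.0, 0.0, 0.0], [0.0, 0.0, 50.0]
    print(deviation_alarm([1.0, 0.0, 20.0], entry, target))
    print(deviation_alarm([1.2, 1.2, 20.0], entry, target))
```

Calling `deviation_alarm` on every tracker update would give the continuous threshold check; how the alert is presented to the operator is left open here.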

Figure 6.

(a) The experimental setup, (b) CT reconstruction software, and (c) the process of moulding the anatomical model with a soft tissue phantom [].

3.2. Protocol

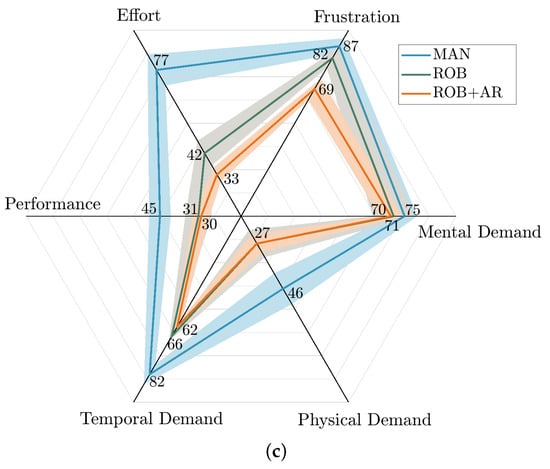

The validation study protocol was based on the NASA Task Load Index (TLX) to statistically compare the mental demand, physical demand, temporal demand, performance, effort, and frustration in using the robotic system (ROB) versus the robotic system with augmented reality assistance (ROB + AR) versus manual (MAN). Each NASA TLX metric was explicitly defined for participants before the task to ensure consistent interpretation. In addition, the accuracy of force rendering was assessed by evaluating the IM force feedback versus the ground truth (SENSOR). Moreover, the depth of needle penetration into the epidural space was compared between manual and robotic groups.

To perform the analyses, five individuals without prior familiarity with robotic and manual procedures were given 15 min of familiarization with using the robotic system with and without augmented reality assistance and manual procedures before the beginning of the test. During participant tests, both admittance control (x-y plane) and force control (z-axis) were active. This ensured safe and precise needle insertion, simulating realistic clinical conditions. Each individual was tasked to perform five manual, five robotic without AR assistance, and five robotic with AR assistance procedures. The participants were randomly assigned to start with either the manual or the robotic task to mitigate any potential learning or fatigue effects that could bias the results. The participants were asked to stop the intervention when they were convinced that the tip of the needle had penetrated the epidural space.

4. Results and Discussion

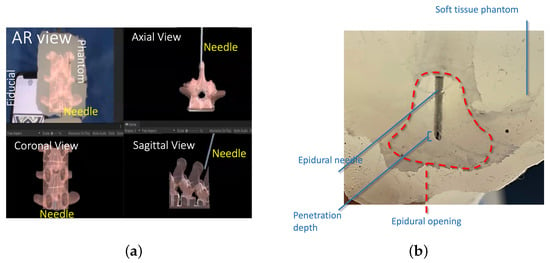

4.1. Needle Penetration Depth

During the procedure, the surgeon observes the augmented model projected onto the patient’s body. Figure 7a illustrates the surgeon console display with sagittal and axial views of the needle in the spine model with a virtual, augmented model. Figure 7b depicts the needle tip after a participant stopped insertion. Table 1 shows the comparative statistics of the needle penetration depth. The results of the ROB and MAN groups were tested for statistical differences using an independent two-sample t-test at a 95% confidence level (statistically significant differences are denoted by ★). The t-test was chosen for statistical analysis due to its robustness in comparing small sample sizes and its common use in similar surgical studies. The test was used to validate the differences in depth and success rate between groups. The comparative metrics were time of completion (TOC), needle penetration depth, repeatability (measured by the standard deviation of penetration depth), and success (measured as the percentage of the population successfully inserting the needle into the epidural space without retracting the needle). The statistical analysis showed that needle penetration depth, repeatability, and success were statistically different between manual and robotic groups. In all three metrics, ROB (AR) exhibited improved results. While traditional methods rely heavily on the practitioner’s expertise and anatomical landmarks, the AR system offers real-time, 3D visual guidance, directly overlaying critical anatomical structures onto the patient’s body. This comparison highlighted an average decrease of 56% in procedural time and an enhanced accuracy rate, suggesting that AR technology not only streamlines the process but also mitigates the risks associated with blind or guided needle insertions.

Figure 7.

(a) A comparison of the sagittal and axial views of the needle in the spine model with a virtual model. (b) Penetration depth and epidural cavity representation in a representative post-study image. (c) A comparison of TLX results in different procedures [].

Table 1.

Statistical comparison of ROB versus MAN groups for needle penetration test.
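For readers who wish to reproduce this kind of comparison, the sketch below computes the statistic of an independent two-sample t-test. It assumes the pooled-variance (Student) form, since the text does not state whether equal variances were assumed, and the depth values are hypothetical placeholders rather than data from this study.

```python
import math
import statistics


def pooled_t_statistic(a, b):
    """Student's two-sample t statistic and degrees of freedom (equal variances assumed)."""
    na, nb = len(a), len(b)
    va, vb = statistics.variance(a), statistics.variance(b)   # sample variances
    pooled_var = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled_var * (1 / na + 1 / nb))
    return t, na + nb - 2


if __name__ == "__main__":
    # Hypothetical penetration depths in millimetres for two groups.
    group_rob = [1.0, 2.0, 3.0, 4.0, 5.0]
    group_man = [3.0, 4.0, 5.0, 6.0, 7.0]
    t, dof = pooled_t_statistic(group_rob, group_man)
    critical = 2.306   # two-sided 5% critical value of Student's t for 8 degrees of freedom
    print(t, dof, abs(t) > critical)
```

The statistic is then compared with the critical value for the corresponding degrees of freedom, or converted to a p-value with a statistics package.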

4.2. Force Rendering

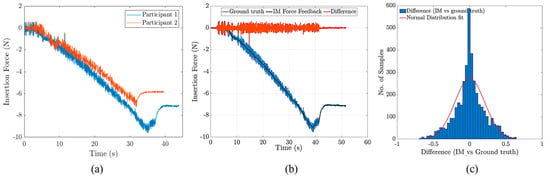

Figure 8a depicts the insertion forces recorded for two representative individuals in the study. The experiments were conducted with a sampling frequency of 1 kHz, a needle insertion speed of 2 mm/s, and an 18 G needle to ensure consistency and reliability in the data collection. Two noticeable force drops were observed during needle insertion in all the experiments, which may have cued the participants to soft tissue layer passages. It was also observed that, in most cases, the second force drop (corresponding to the passage through the last layer and entrance to the epidural space) was larger than the first force drop. Also, Figure 8b compares the IM rendered force with the measured insertion force (ground truth) for a representative test. The mean absolute error between the IM force (rendered) and ground truth was N. For the calculation of the mean absolute errors, the first plateau (before insertion) and the forces after the peak (reversal) were excluded so that only the active “insertion” part of the experiments was included. Figure 8c shows the distribution of accumulated error among all the participants and in all repetitions. The nominal two-way communication delay between the robot and user interface was within the millisecond range. Our empirical testing showed a two-way communication delay of less than 10 ms with Ethernet communication.

Figure 8.

(a) Needle insertion forces for two representative participants in the needle insertion study, (b) a comparison of the ground truth with IM rendered force, and (c) the distribution of the differences between the ground truth and IM force.
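The error calculation described above, which excludes the pre-insertion plateau and the post-peak reversal, can be sketched as follows. The onset threshold, the rule that the window ends at the peak measured force, the function names, and the force traces are all assumptions for illustration; the source does not specify how the window boundaries were detected.

```python
def insertion_window(measured, onset_threshold):
    """Return (start, end) sample indices of the active insertion phase.

    start: first sample whose measured force exceeds onset_threshold
           (assumed to mark the end of the initial plateau).
    end:   index of the peak measured force (later samples are treated
           as the reversal phase and excluded).
    """
    start = next(i for i, f in enumerate(measured) if f > onset_threshold)
    end = max(range(len(measured)), key=lambda i: measured[i])
    return start, end


def mean_absolute_error(measured, rendered, onset_threshold=0.05):
    """Mean absolute error between two force traces over the insertion window."""
    start, end = insertion_window(measured, onset_threshold)
    pairs = list(zip(measured[start:end + 1], rendered[start:end + 1]))
    return sum(abs(m - r) for m, r in pairs) / len(pairs)


# Hypothetical force traces in newtons (NOT recorded data from the study).
measured = [0.0, 0.0, 0.01, 0.5, 1.0, 1.5, 1.0, 2.0, 2.5, 1.0, 0.5]
rendered = [0.0, 0.1, 0.0, 0.6, 0.9, 1.5, 1.2, 2.0, 2.4, 1.5, 0.0]

window = insertion_window(measured, 0.05)
mae = mean_absolute_error(measured, rendered)
print(f"window = {window}, MAE = {mae:.4f} N")
```

In this toy trace the large rendering errors before onset and after the peak do not contribute to the result, which mirrors the intent of restricting the metric to the active insertion phase.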

4.3. User Study

After each participant had completed five repetitions of MAN and ROB (with and without AR assistance), they were given the standard NASA TLX questionnaire to rate their experience on its metrics (i.e., mental, physical, and temporal demand; performance; effort; and frustration) on a scale of 0 to 100 with increments of 5. The NASA TLX framework uses different full-score indicators for each metric to reflect their relative importance to the overall workload in task-specific contexts. In this study, higher weights were assigned to metrics such as performance, frustration, and mental demand, as these are critical for assessing the cognitive load and usability of the robotic and AR-assisted systems. This weighting approach is intended to make the Task Load Index representative of the specific demands of the procedure. Therefore, given the high-risk nature of the epidural insertion task, the weights in Table 2 were selected to assess the users’ experience. Table 3 shows the results for all five participants.

Table 2.

NASA TLX metric weights (0−10).

Table 3.

Results of NASA TLX user study survey.
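To make the weighting scheme concrete, the sketch below computes a weighted Task Load Index as a weight-normalized average of the six ratings. The weights and ratings shown are hypothetical placeholders (they are not the values in Table 2 or Table 3), and normalizing by the sum of the weights is an assumption about how the overall score was aggregated.

```python
METRICS = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def validate_ratings(ratings):
    """Check that each rating lies on the 0 to 100 scale in increments of 5."""
    for metric in METRICS:
        value = ratings[metric]
        if not (0 <= value <= 100 and value % 5 == 0):
            raise ValueError(f"invalid rating for {metric}: {value}")


def weighted_tlx(ratings, weights):
    """Weight-normalized Task Load Index (assumed aggregation rule)."""
    validate_ratings(ratings)
    total_weight = sum(weights[m] for m in METRICS)
    return sum(ratings[m] * weights[m] for m in METRICS) / total_weight


# Hypothetical weights on the 0 to 10 scale (NOT the weights in Table 2).
weights = {"mental": 8, "physical": 4, "temporal": 4,
           "performance": 10, "effort": 6, "frustration": 8}

# Hypothetical ratings for one participant (NOT the results in Table 3).
man_ratings = {"mental": 60, "physical": 50, "temporal": 40,
               "performance": 55, "effort": 70, "frustration": 45}
rob_ar_ratings = {"mental": 55, "physical": 20, "temporal": 30,
                  "performance": 30, "effort": 25, "frustration": 20}

man_tlx = weighted_tlx(man_ratings, weights)
rob_ar_tlx = weighted_tlx(rob_ar_ratings, weights)
print(f"MAN = {man_tlx:.2f}, ROB + AR = {rob_ar_tlx:.2f}, "
      f"difference = {man_tlx - rob_ar_tlx:.2f}")
```

Because the score is normalized by the total weight, it stays on the same 0 to 100 scale as the individual ratings, so differences between conditions can be read directly in points.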

The results of the TLX analysis showed that the participants’ perceived task load was 18.60 points lower with the proposed robotic technology with AR assistance than with manual intervention. The smallest difference among the task load metrics was in mental load, with a 5-point improvement for the robotic system with AR assistance, while the largest difference was in the effort metric, which showed a 44-point improvement on average among the participants. A contributing factor that might not have been captured in this test is the association among task load metrics, which may alter participants’ perceptions of task loads. Further studies are required to clarify this speculation. The physical demand, performance, and frustration task load metrics also improved in ROB and ROB + AR compared to MAN.

In summary, the findings of the needle penetration depth and TLX analyses showed that the proposed robot-assisted system could improve the accuracy, repeatability, and success rate of needle insertion for epidural injection tasks. At the same time, it decreased the Task Load Index of this intervention for the participants. Feedback from medical professionals who used the AR system during procedures was decidedly positive. Physicians reported enhanced confidence in needle placement, attributing this to the precise visual cues provided by the AR interface. However, some practitioners noted a learning curve in adapting to the AR visualizations, suggesting the need for targeted training programs to maximize the system’s effectiveness and user comfort.

This study was a proof of concept and the first step toward system development for telerobotic epidural needle insertion interventions. It therefore has limitations that need to be addressed in subsequent studies. First, the sample size of the user study was small, so the statistical conclusions are tentative and hard to generalize. Second, the user studies focused only on overall system usability; the individual modules of the proposed system, e.g., the effects of positioning control parameters, the effect of the presence/absence of force feedback, latency in AR visualization, marker recognition in varying surgical environments, and the sampling time window of the IM force rendering system, were not studied separately. In future studies, using optical tracking systems may increase the accuracy of the registered hologram, potentially leading to sub-millimetre accuracy. Another extension of this study could be to test the usability and performance of the system in other procedures and anatomical regions, e.g., kidney stone ablation and deep brain stimulation.

5. Conclusions

In this study, a robot-assisted system for epidural needle insertion was designed, prototyped, controlled, and tested. Performance tests at the system level were performed in a user study with a patient-specific 3D-printed anatomical model. In addition, an augmented reality-guided system was designed and tested for robotic epidural needle injection. Electromagnetic tracker-based static registration was used to register the hologram on the patient-specific 3D-printed model. The preliminary results from our user study demonstrate the system’s potential to enhance usability and improve procedure outcomes. Notably, using AR and robotic assistance increased the accuracy, success rate, and repeatability of needle insertions while reducing both the cognitive load on practitioners and the overall procedural time. These promising outcomes suggest that the system could significantly benefit clinical practice by making epidural insertions more efficient and effective. Given these initial findings, there is a clear need for extended research involving larger-scale clinical trials. Such studies would comprehensively evaluate the system’s performance in varied clinical settings and establish its reliability and effectiveness in real-world medical applications.

Author Contributions

Conceptualization, A.S. and A.H.; Software, A.S.; Validation, A.S.; Formal analysis, A.S.; Writing—original draft, A.S.; Supervision, R.C., J.B., L.S.F. and A.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Montreal General Hospital Foundation Research Award and the Mitacs Accelerate fund.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Beigi, P. Image-Based Enhancement and Tracking of An Epidural Needle in Ultrasound Using Time-Series Analysis. Ph.D. Thesis, University of British Columbia, Kelowna, BC, Canada, 2017.

- Sprigge, J.; Harper, S. Accidental dural puncture and post dural puncture headache in obstetric anaesthesia: Presentation and management: A 23-year survey in a district general hospital. Anaesthesia 2008, 63, 36–43.

- Capogna, G. Epidural Technique in Obstetric Anesthesia; Springer: Berlin/Heidelberg, Germany, 2020.

- Li, G.; Patel, N.A.; Hagemeister, J.; Yan, J.; Wu, D.; Sharma, K.; Cleary, K.; Iordachita, I. Body-mounted robotic assistant for MRI-guided low back pain injection. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 321–331.

- Eappen, S.; Blinn, A.; Segal, S. Incidence of epidural catheter replacement in parturients: A retrospective chart review. Int. J. Obstet. Anesth. 1998, 7, 220–225.

- Kim, S.; Adler, D.K. Ultrasound-assisted lumbar puncture in pediatric emergency medicine. J. Emerg. Med. 2014, 47, 59–64.

- Khadem, M.; Rossa, C.; Usmani, N.; Sloboda, R.S.; Tavakoli, M. Geometric control of 3D needle steering in soft-tissue. Automatica 2019, 101, 36–43.

- Liu, W.; Carriere, J.; Meyer, T.; Sloboda, R.; Husain, S.; Usmani, N.; Yang, Z.; Tavakoli, M. Intraoperative optimization of seed implantation plan in breast brachytherapy. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 1027–1035.

- Yang, C.; Xie, Y.; Liu, S.; Sun, D. Force modeling, identification, and feedback control of robot-assisted needle insertion: A survey of the literature. Sensors 2018, 18, 561.

- Siepel, F.J.; Maris, B.; Welleweerd, M.K.; Groenhuis, V.; Fiorini, P.; Stramigioli, S. Needle and biopsy robots: A review. Curr. Robot. Rep. 2021, 2, 73–84.

- Asgar-Deen, D.; Carriere, J.; Wiebe, E.; Peiris, L.; Duha, A.; Tavakoli, M. Augmented Reality Guided Needle Biopsy of Soft Tissue: A Pilot Study. Front. Robot. AI 2020, 7, 72.

- Carriere, J.; Khadem, M.; Rossa, C.; Usmani, N.; Sloboda, R.; Tavakoli, M. Surgeon-in-the-loop 3-d needle steering through ultrasound-guided feedback control. IEEE Robot. Autom. Lett. 2017, 3, 469–476.

- Carriere, J.; Khadem, M.; Rossa, C.; Usmani, N.; Sloboda, R.S.; Tavakoli, M. Event-triggered 3d needle control using a reduced-order computationally efficient bicycle model in a constrained optimization framework. J. Med. Robot. Res. 2019, 4, 1842004.

- Groenhuis, V.; Siepel, F.J.; Veltman, J.; van Zandwijk, J.K.; Stramigioli, S. Stormram 4: An MR safe robotic system for breast biopsy. Ann. Biomed. Eng. 2018, 46, 1686–1696.

- Groenhuis, V.; Siepel, F.J.; Stramigioli, S. Sunram 5: A magnetic resonance-safe robotic system for breast biopsy, driven by pneumatic stepper motors. In Handbook of Robotic and Image-Guided Surgery; Elsevier: Amsterdam, The Netherlands, 2020; pp. 375–396.

- Nikolaev, A.; Hansen, H.H.; De Jong, L.; Mann, R.; Tagliabue, E.; Maris, B.; Groenhuis, V.; Siepel, F.; Caballo, M.; Sechopoulos, I.; et al. Ultrasound-guided breast biopsy of ultrasound occult lesions using multimodality image co-registration and tissue displacement tracking. In Proceedings of the Medical Imaging 2019: Ultrasonic Imaging and Tomography, San Diego, CA, USA, 17–18 February 2019; Volume 10955, pp. 205–213.

- Hoelscher, J.; Fu, M.; Fried, I.; Emerson, M.; Ertop, T.E.; Rox, M.; Kuntz, A.; Akulian, J.A.; Webster, R.J., III; Alterovitz, R. Backward planning for a multi-stage steerable needle lung robot. IEEE Robot. Autom. Lett. 2021, 6, 3987–3994.

- Rubino, F.; Eichberg, D.G.; Cordeiro, J.G.; Di, L.; Eliahu, K.; Shah, A.H.; Luther, E.M.; Lu, V.M.; Komotar, R.J.; Ivan, M.E. Robotic guidance platform for laser interstitial thermal ablation and stereotactic needle biopsies: A single center experience. J. Robot. Surg. 2022, 16, 549–557.

- Ben-David, E.; Shochat, M.; Roth, I.; Nissenbaum, I.; Sosna, J.; Goldberg, S.N. Evaluation of a CT-guided robotic system for precise percutaneous needle insertion. J. Vasc. Interv. Radiol. 2018, 29, 1440–1446.

- Li, H.; Wang, Y.; Li, Y.; Zhang, J. A novel manipulator with needle insertion forces feedback for robot-assisted lumbar puncture. Int. J. Med. Robot. Comput. Assist. Surg. 2021, 17, e2226.

- Jolaei, M.; Hooshiar, A.; Dargahi, J. Displacement-based model for estimation of contact force between rfa catheter and atrial tissue with ex-vivo validation. In Proceedings of the 2019 IEEE International Symposium on Robotic and Sensors Environments (ROSE), Ottawa, ON, Canada, 17–18 June 2019; pp. 1–7.

- Hooshiar, A.; Sayadi, A.; Jolaei, M.; Dargahi, J. Analytical tip force estimation on tendon-driven catheters through inverse solution of cosserat rod model. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 1829–1834.

- Bandari, N.M.; Hooshiar, A.; Packirisamy, M.; Dargahi, J. Optical fiber array sensor for lateral and circumferential force measurement suitable for minimally invasive surgery: Design, modeling and analysis. In Proceedings of the Specialty Optical Fibers; Optica Publishing Group: Washington, DC, USA, 2016; p. JTu4A.44.

- Torkaman, T.; Roshanfar, M.; Dargahi, J.; Hooshiar, A. Embedded six-dof force–torque sensor for soft robots with learning-based calibration. IEEE Sensors J. 2023, 23, 4204–4215.

- Mehrjouyan, A.; Menhaj, M.B.; Hooshiar, A. Safety-enhanced observer-based adaptive fuzzy synchronization control framework for teleoperation systems. Eur. J. Control 2023, 73, 100885.

- Mehrjouyan, A.; Menhaj, M.B.; Hooshiar, A. Adaptive-neural command filtered synchronization control of tele-robotic systems using disturbance observer with safety enhancement. J. Frankl. Inst. 2024, 361, 107036.

- Masoumi, N.; Ramos, A.C.; Torkaman, T.; Feldman, L.S.; Barralet, J.; Dargahi, J.; Hooshiar, A. Embedded Force Sensor for Soft Robots With Deep Transformation Calibration. IEEE Trans. Med. Robot. Bionics 2024, 6, 1363–1374.

- Torkaman, T.; Roshanfar, M.; Dargahi, J.; Hooshiar, A. Analytical modeling and experimental validation of a gelatin-based shape sensor for soft robots. In Proceedings of the 2022 International Symposium on Medical Robotics (ISMR), Atlanta, GA, USA, 13–15 April 2022; pp. 1–7.

- Roshanfar, M.; Dargahi, J.; Hooshiar, A. Toward semi-autonomous stiffness adaptation of pneumatic soft robots: Modeling and validation. In Proceedings of the 2021 IEEE International Conference on Autonomous Systems (ICAS), Montréal, QC, Canada, 11–13 August 2021; pp. 1–5.

- Roshanfar, M.; Sayadi, A.; Dargahi, J.; Hooshiar, A. Stiffness adaptation of a hybrid soft surgical robot for improved safety in interventional surgery. In Proceedings of the 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, UK, 11–15 July 2022; pp. 4853–4859.

- Roshanfar, M.; Taki, S.; Sayadi, A.; Cecere, R.; Dargahi, J.; Hooshiar, A. Hyperelastic modeling and validation of hybrid-actuated soft robot with pressure-stiffening. Micromachines 2023, 14, 900.

- Hooshiar, A.; Najarian, S.; Dargahi, J. Haptic telerobotic cardiovascular intervention: A review of approaches, methods, and future perspectives. IEEE Rev. Biomed. Eng. 2019, 13, 32–50.

- Kazemipour, N.; Sayadi, A.; Cecere, R.; Barralet, J.; Hooshiar, A. Augmented Reality-Assisted Epidural Needle Insertion: User Experience and Performance. In Proceedings of the 15th Hamlyn Symposium on Medical Robotics, London, UK, 26–29 June 2023; pp. 25–26.

- Bergholz, M.; Ferle, M.; Weber, B.M. The benefits of haptic feedback in robot-assisted surgery and their moderators: A meta-analysis. Sci. Rep. 2023, 13, 19215.

- Kazemipour, N.; Hooshiar, A.; Kersten-Oertel, M. A usability analysis of augmented reality and haptics for surgical planning. Int. J. Comput. Assist. Radiol. Surg. 2024, 19, 2069–2078.

- Vadalà, G.; De Salvatore, S.; Ambrosio, L.; Russo, F.; Papalia, R.; Denaro, V. Robotic spine surgery and augmented reality systems: A state of the art. Neurospine 2020, 17, 88.

- Morimoto, T.; Kobayashi, T.; Hirata, H.; Otani, K.; Sugimoto, M.; Tsukamoto, M.; Yoshihara, T.; Ueno, M.; Mawatari, M. XR (extended reality: Virtual reality, augmented reality, mixed reality) technology in spine medicine: Status quo and quo vadis. J. Clin. Med. 2022, 11, 470.

- Malhotra, S.; Halabi, O.; Dakua, S.P.; Padhan, J.; Paul, S.; Palliyali, W. Augmented reality in surgical navigation: A review of evaluation and validation metrics. Appl. Sci. 2023, 13, 1629.

- Gibby, J.; Cvetko, S.; Javan, R.; Parr, R.; Gibby, W. Use of augmented reality for image-guided spine procedures. Eur. Spine J. 2020, 29, 1823–1832.

- Elmi-Terander, A.; Skulason, H.; Söderman, M.; Racadio, J.; Homan, R.; Babic, D.; van der Vaart, N.; Nachabe, R. Surgical navigation technology based on augmented reality and integrated 3D intraoperative imaging: A spine cadaveric feasibility and accuracy study. Spine 2016, 41, E1303.

- Azad, T.D.; Warman, A.; Tracz, J.A.; Hughes, L.P.; Judy, B.F.; Witham, T.F. Augmented reality in spine surgery–past, present, and future. Spine J. 2024, 24, 1–13.

- Edström, E.; Burström, G.; Omar, A.; Nachabe, R.; Söderman, M.; Persson, O.; Gerdhem, P.; Elmi-Terander, A. Augmented reality surgical navigation in spine surgery to minimize staff radiation exposure. Spine 2020, 45, E45–E53.

- Guo, S.; Song, Y.; Yin, X.; Zhang, L.; Tamiya, T.; Hirata, H.; Ishihara, H. A novel robot-assisted endovascular catheterization system with haptic force feedback. IEEE Trans. Robot. 2019, 35, 685–696.

- Ravali, G.; Manivannan, M. Haptic feedback in needle insertion modeling and simulation. IEEE Rev. Biomed. Eng. 2017, 10, 63–77.

- Meli, L.; Pacchierotti, C.; Prattichizzo, D. Experimental evaluation of magnified haptic feedback for robot-assisted needle insertion and palpation. Int. J. Med. Robot. Comput. Assist. Surg. 2017, 13, e1809.

- Sayadi, A.; Hooshiar, A.; Dargahi, J. Impedance matching approach for robust force feedback rendering with application in robot-assisted interventions. In Proceedings of the 2020 8th International Conference on Control, Mechatronics and Automation (ICCMA), Moscow, Russia, 6–8 November 2020; pp. 18–22.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).