Lightweight Two-Layer Control Architecture for Human-Following Robot

Abstract

1. Introduction

2. Materials and Methods

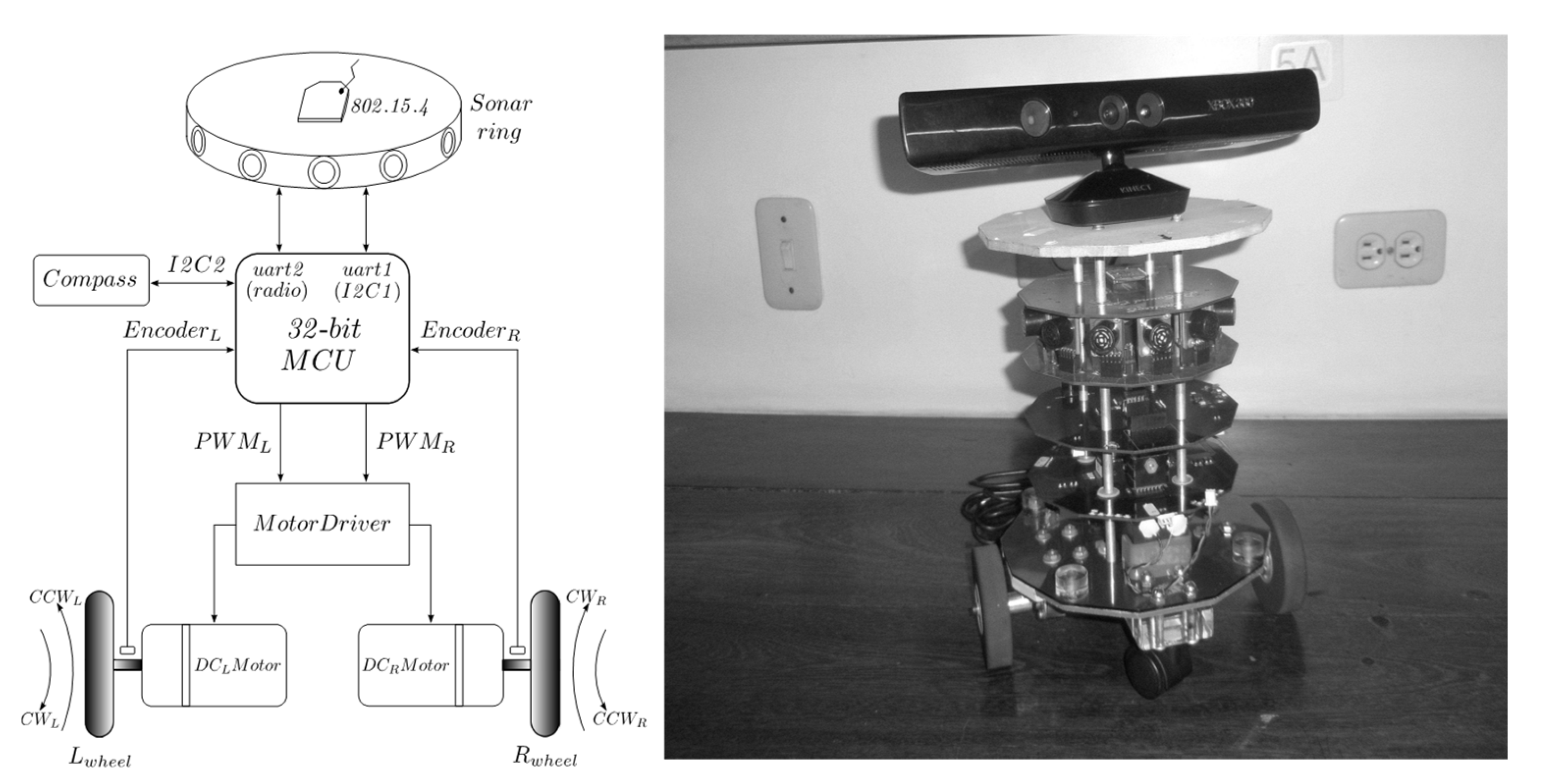

2.1. Mobile Robotic Platform

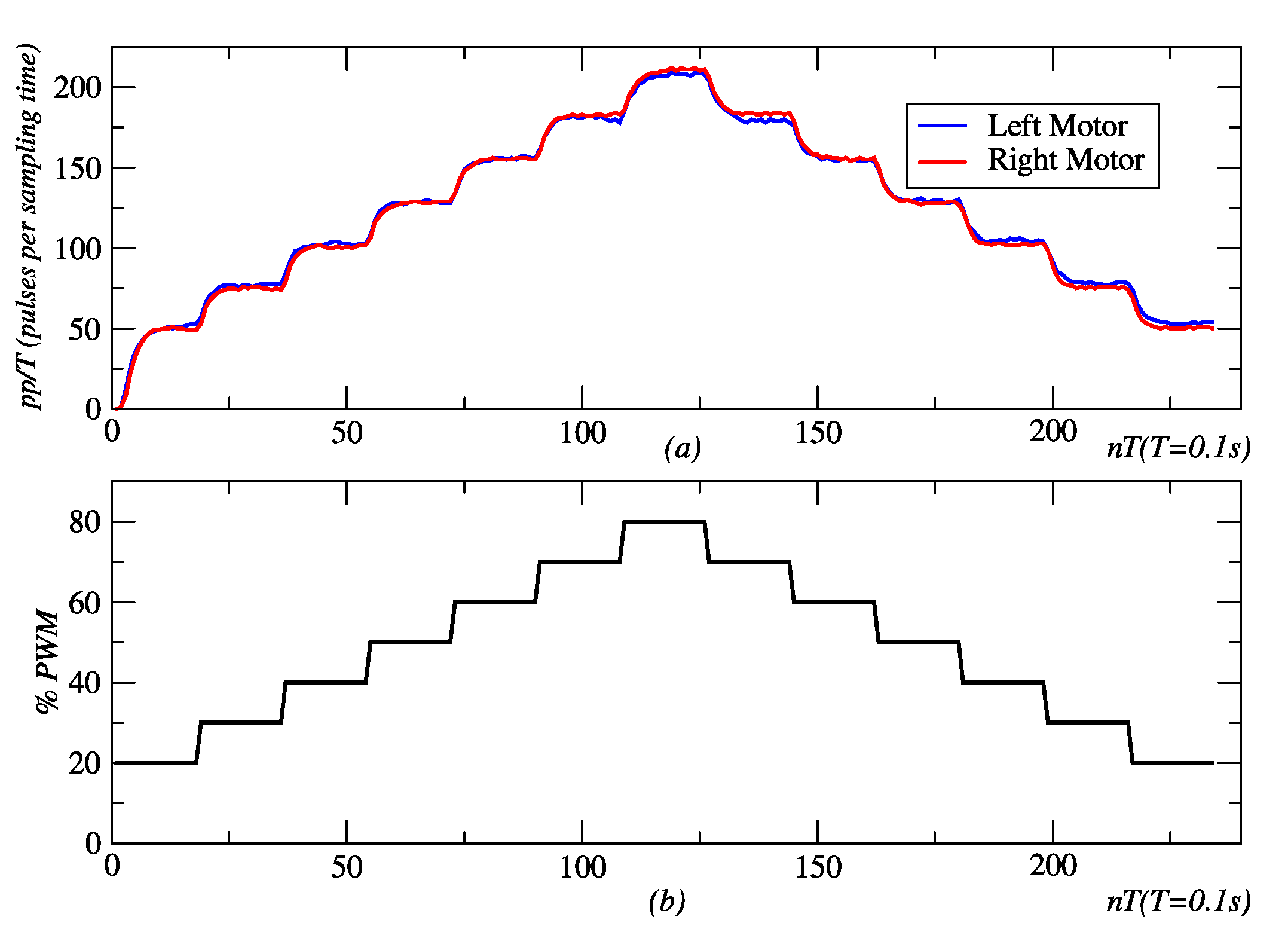

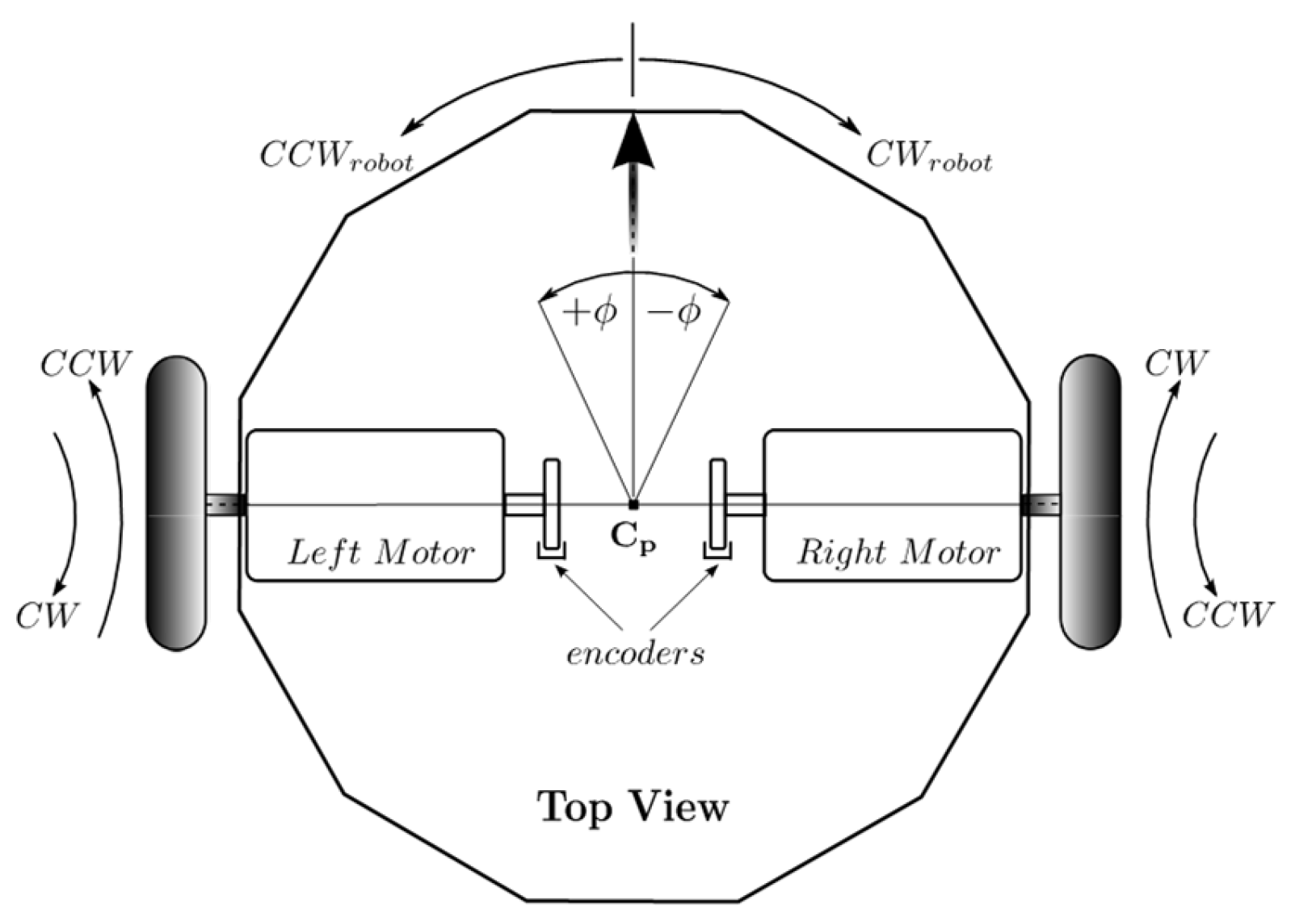

2.2. Effector Modeling and Control

| Algorithm 1: Application of time-variant PWM signal to robot’s effectors. Source: Authors. |
|---|
| Input: Start command from host PC through an 802.15.4 link |
| Output: Pulse counts from encoders sent to the host PC through an 802.15.4 link |
| Initialize internal hardware of microcontroller: UART at 9600,8,N,1 for 802.15.4 communications; timer TPM3 for 1 kHz PWM signal generation; TPM1, TPM2 for counting encoder pulses; internal RTC interrupts for a 0.1 s sampling interval |
| FlagUpDown = 0; DCLeftmotor = 0; DCRightmotor = 0; CountPulsesRightmotor = 0; CountPulsesLeftmotor = 0; |
| repeat |
|   while UART reception register empty do wait; end |
|   RcvCommand = UART reception register; |
| until RcvCommand = “s”; |
| repeat |
|   if FlagUpDown = 0 then DCLeftmotor += PWMUpDown; DCRightmotor += PWMUpDown; |
|   else DCLeftmotor −= PWMUpDown; DCRightmotor −= PWMUpDown; end |
|   PWMtimeinterval = 15; |
|   repeat |
|     while no RTC interruption do wait; end |
|     CountPulsesLeftmotor = internal count register TPM1; |
|     CountPulsesRightmotor = internal count register TPM2; |
|     while UART busy do wait; end |
|     UART transmission register = CountPulsesLeftmotor; |
|     while UART busy do wait; end |
|     UART transmission register = CountPulsesRightmotor; |
|     CountPulsesLeftmotor = 0; CountPulsesRightmotor = 0; |
|     PWMtimeinterval −−; |
|   until PWMtimeinterval = 0; |
|   if DCLeftmotor = 80 and DCRightmotor = 80 then FlagUpDown = 1; end |
| until DCLeftmotor < 20 and DCRightmotor < 20; |
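The duty-cycle schedule that Algorithm 1 applies to both motors can be sketched in Python as a generator that yields one value per 0.1 s sample. This is only an illustration of the staircase shape, not the firmware itself: the step `pwm_step` stands in for `PWMUpDown`, whose value the algorithm does not state, and 20 is an assumed figure. The peak test uses `>=` where the algorithm tests equality, so that a step that does not divide the peak still terminates.

```python
def pwm_staircase(pwm_step=20, peak=80, floor=20, hold_samples=15):
    """Yield the duty cycle (%) for each 0.1 s sample of the Algorithm 1 ramp.

    pwm_step is an assumed value for PWMUpDown; the source does not give it.
    Each duty-cycle level is held for hold_samples sampling intervals.
    """
    duty = 0
    going_down = False  # plays the role of FlagUpDown
    while True:
        duty += -pwm_step if going_down else pwm_step
        for _ in range(hold_samples):
            yield duty
        if duty >= peak:  # the algorithm tests duty = 80
            going_down = True
        if duty < floor:
            break


if __name__ == "__main__":
    samples = list(pwm_staircase())
    # One entry per level: take every 15th sample.
    print(samples[::15])
```

With the assumed step of 20, each level lasts 15 samples (1.5 s) and the whole excitation takes 120 samples (12 s).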

2.2.1. Pole Placement Method

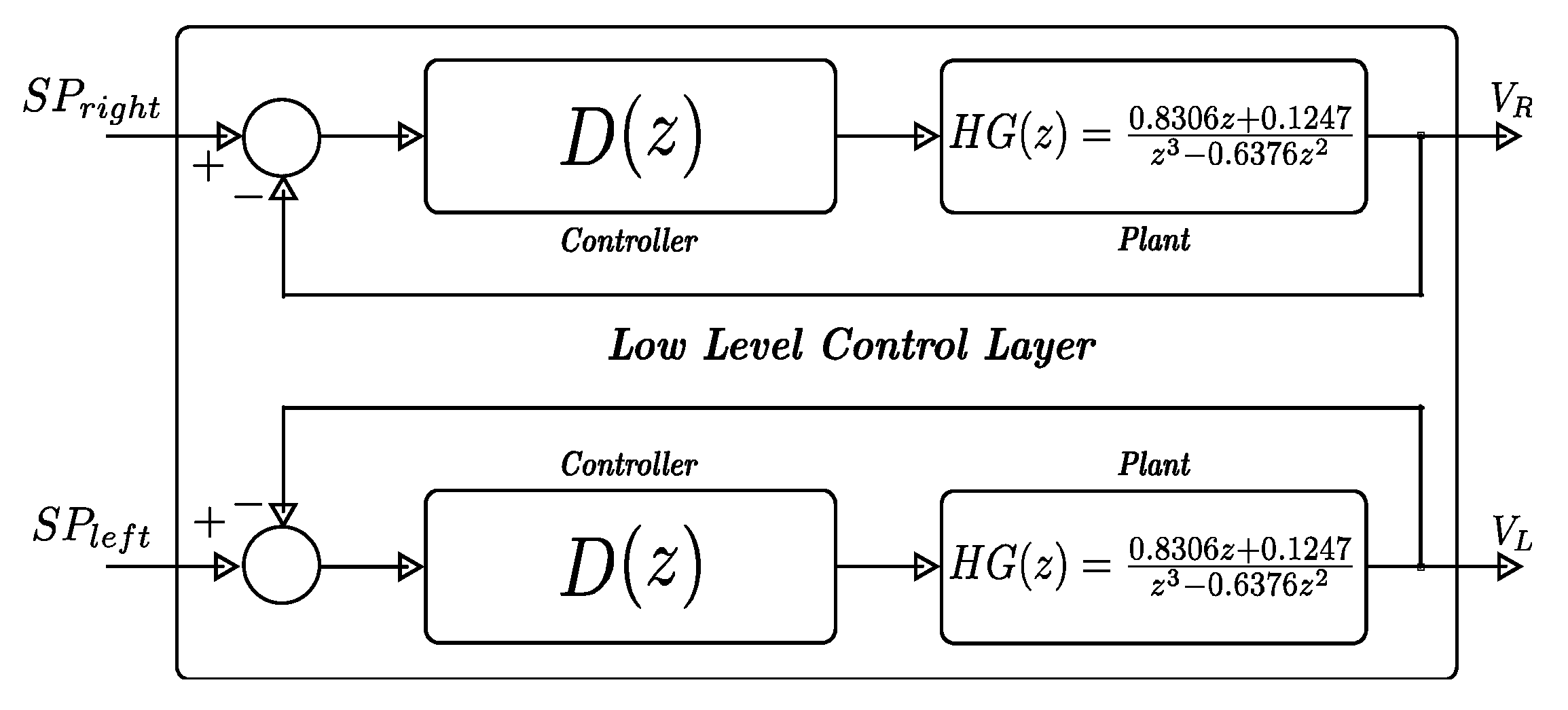

2.2.2. Internal Model

2.2.3. Low-Level Control Architecture

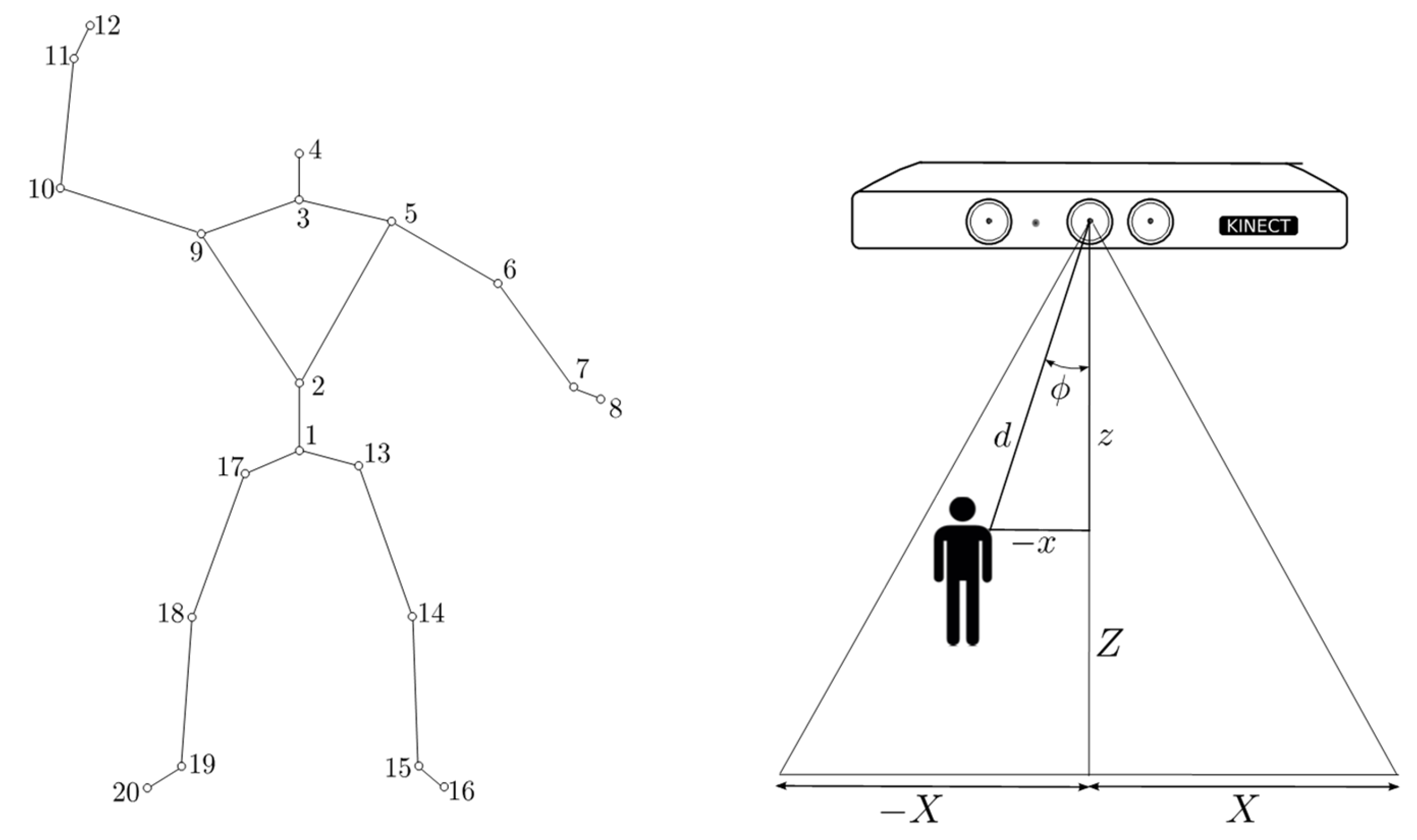

2.3. Linear and Angular Displacements

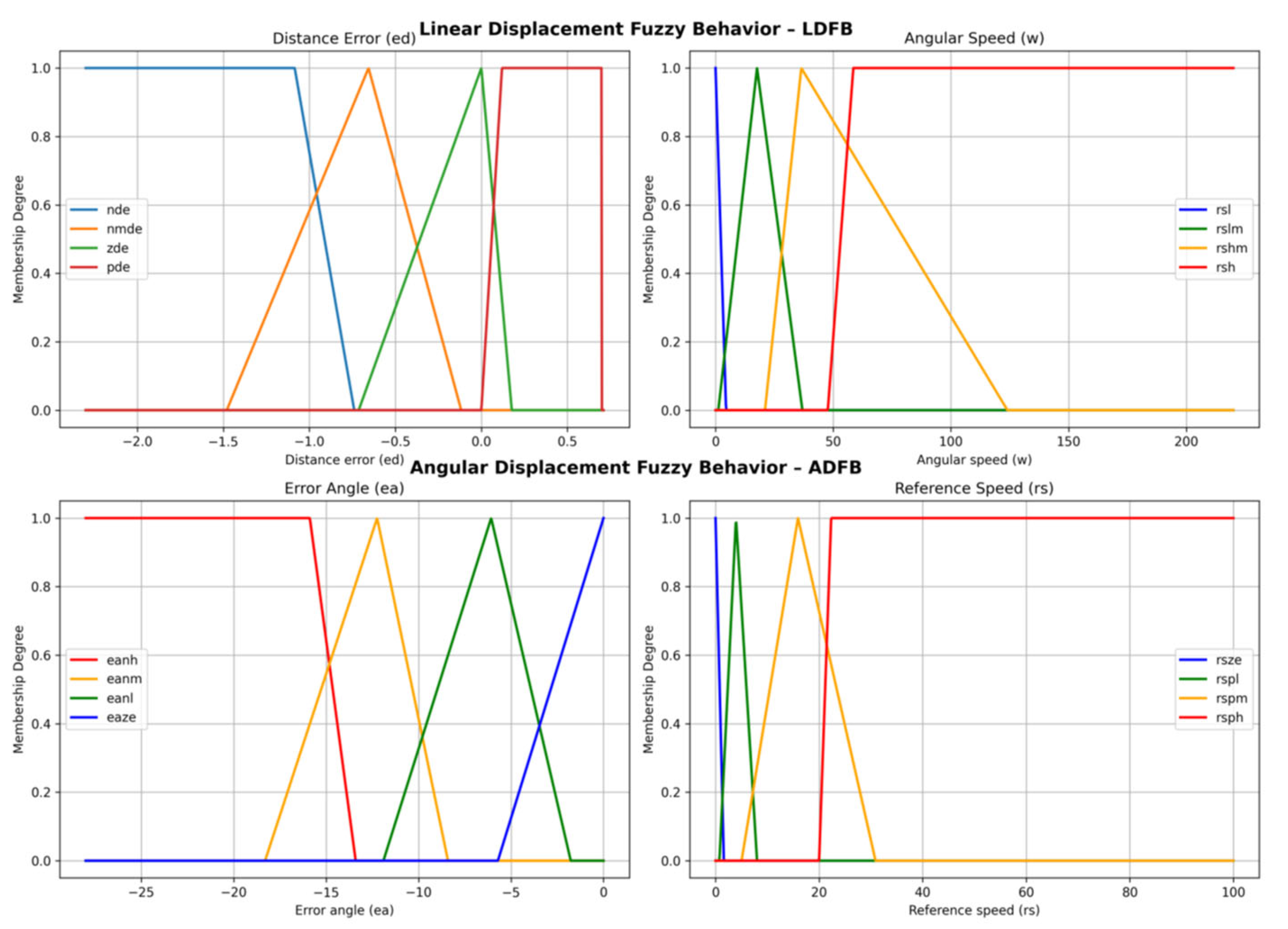

2.3.1. Linear Displacement Fuzzy Behavior (LDFB)

2.3.2. Angular Displacement Fuzzy Behavior (ADFB)

2.3.3. Control Architecture

| Algorithm 2: Behavior-based Control Layer. Source: Authors. |
|---|
| Input: Time t_f during which the person is followed by the robot |
| Output: Velocity set-point Vr for the embedded PI controllers, distance d between the person and the robot, angular position ϕ of the person with respect to the robot, flag of active behavior behave_flag, and text file for data logging data.txt |
| // behave_flag = 1: robot angular displacement in CW |
| // behave_flag = 2: robot angular displacement in CCW |
| // behave_flag = 3: linear forward displacement |
| Vr = 0; t = 0; d = 0; ϕ = 0; behave_flag = 0; x = 0; z = 0; |
| Initialize the PC UART at 9600,8,N,1 for 802.15.4 communications; |
| Open(“data.txt”, Write); /* create and open a data logging text file for write */ |
| Run the Kinect skeletal tracking functionality; |
| repeat |
|   z ← the z coordinate of the joint spine 1; |
|   x ← the x coordinate of the joint spine 1; |
|   d = sqrt(x² + z²); |
|   ϕ = tan⁻¹(x/z); |
|   if −25 ≤ ϕ ≤ −8 or 8 ≤ ϕ ≤ 25 then |
|     Deactivate fuzzy linear displacement behavior; |
|     Vr = fuzzy_angular_behavior(ϕ); |
|     if −25 ≤ ϕ ≤ −8 then behave_flag = 2; else behave_flag = 1; end |
|   else |
|     Deactivate fuzzy angular displacement behavior; |
|     Vr = fuzzy_linear_behavior(d); |
|     behave_flag = 3; |
|   end |
|   UART_transmission(behave_flag, Vr); |
|   Write(“data.txt”, Vr, d, ϕ, behave_flag); |
| until t > t_f; |
| behave_flag = 0; UART_transmission(behave_flag); UART_close(); Close(“data.txt”); |
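The arbitration step of Algorithm 2 can be illustrated with a short Python sketch. It computes the distance and bearing from the tracked joint's x and z coordinates and picks the active behavior. Two points are assumptions rather than statements from the algorithm: the angle thresholds are treated as degrees, and `math.atan2(x, z)` replaces tan⁻¹(x/z), which gives the same value whenever the person is in front of the sensor (z > 0). The function name `select_behavior` is hypothetical.

```python
import math


def select_behavior(x, z, dead_band_deg=8.0, max_angle_deg=25.0):
    """Return (d, phi_deg, behave_flag) for a tracked joint at (x, z).

    behave_flag follows Algorithm 2: 1 = rotate CW, 2 = rotate CCW,
    3 = linear forward displacement. Thresholds are assumed to be degrees.
    """
    d = math.hypot(x, z)                   # distance person-robot
    phi = math.degrees(math.atan2(x, z))   # bearing of the person
    if dead_band_deg <= abs(phi) <= max_angle_deg:
        flag = 2 if phi < 0 else 1         # angular behavior is active
    else:
        flag = 3                           # linear behavior is active
    return d, phi, flag


if __name__ == "__main__":
    for point in [(0.0, 2.0), (0.5, 2.0), (-0.5, 2.0)]:
        print(point, select_behavior(*point))
```

Only one behavior is active per cycle, so a single set-point Vr is enough for the embedded layer; the flag tells it how to turn that set-point into wheel directions.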

| Algorithm 3: Embedded Control Layer. Source: Authors. |
|---|
| Input: Active behavior flag behave_flag, velocity set-point Vr, and stop_character to stop the robot |
| Output: DC_left and DC_right for setting the duty cycle of the PWM control signals for the left and right motors |
| Initialize internal UART of microcontroller at 9600,8,N,1 for 802.15.4 communications; |
| Enable interrupts for data reception in the UART; |
| Configure the internal timer module TPM1 to count pulses of the left motor encoder; |
| Configure the internal timer module TPM2 to count pulses of the right motor encoder; |
| Configure the internal timer module TPM3 to generate 1 kHz PWM control signals; |
| Configure the internal RTC module to generate periodic interrupts every T = 0.1 s; |
| Vr = 0; DC_left = 0; DC_right = 0; stop_character = 0; behave_flag = 0; LW_pulses = 0; RW_pulses = 0; |
| repeat wait; until t > t_f; |
| // Interrupt Service Routine for internal RTC time out |
| ISR RTC_time_out { |
|   LW_pulses ← (TPM1 counting register) // pulse counting left motor |
|   RW_pulses ← (TPM2 counting register) // pulse counting right motor |
|   switch behave_flag do |
|     case 1 do left motor rotation ← CCW; right motor rotation ← CCW; // robot rotates in CW |
|     case 2 do left motor rotation ← CW; right motor rotation ← CW; // robot rotates in CCW |
|     case 3 do left motor rotation ← CCW; right motor rotation ← CW; // robot moves forward |
|     otherwise do Vr = 0; |
|   end |
|   DC_left = left_motor_PI_controller(LW_pulses, Vr); |
|   DC_right = right_motor_PI_controller(RW_pulses, Vr); |
|   TPM3 duty cycle register (left motor channel) = DC_left; |
|   TPM3 duty cycle register (right motor channel) = DC_right; |
| } |
| // Interrupt Service Routine for UART reception |
| ISR UART_reception { |
|   behave_flag ← (UART_reception_register); |
|   Vr ← (UART_reception_register); |
| } |
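Algorithm 3 calls `left_motor_PI_controller` and `right_motor_PI_controller` once per RTC interrupt but does not list their internals. A minimal discrete PI update, written in Python for readability, might look like the sketch below. The class name `DiscretePI`, the gains `kp` and `ki`, the 0 to 100 % output range, and the conditional-integration anti-windup are all assumptions made for illustration; the gains obtained by pole placement or internal model design are not reproduced here.

```python
class DiscretePI:
    """Positional discrete PI controller with output clamping.

    Hypothetical sketch: gains, limits and anti-windup are assumptions.
    """

    def __init__(self, kp, ki, ts=0.1, out_min=0.0, out_max=100.0):
        self.kp = kp
        self.ki = ki
        self.ts = ts            # sampling interval, 0.1 s as in Algorithm 3
        self.out_min = out_min
        self.out_max = out_max
        self.integral = 0.0

    def update(self, setpoint, measured):
        """Return the duty cycle for one sampling interval."""
        error = setpoint - measured
        candidate = self.integral + self.ki * self.ts * error
        output = self.kp * error + candidate
        # Keep the new integral only while the output is not saturated.
        if self.out_min <= output <= self.out_max:
            self.integral = candidate
        return min(max(output, self.out_min), self.out_max)


if __name__ == "__main__":
    left = DiscretePI(kp=0.5, ki=2.0)   # illustrative gains only
    print(left.update(setpoint=50, measured=0))
    print(left.update(setpoint=50, measured=50))
```

One controller instance per motor mirrors the two calls in the interrupt routine; `measured` corresponds to the pulse count read from the encoder timer in that interval.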

2.4. Experimental Designs

2.4.1. Experimental Design for Embedded Controllers

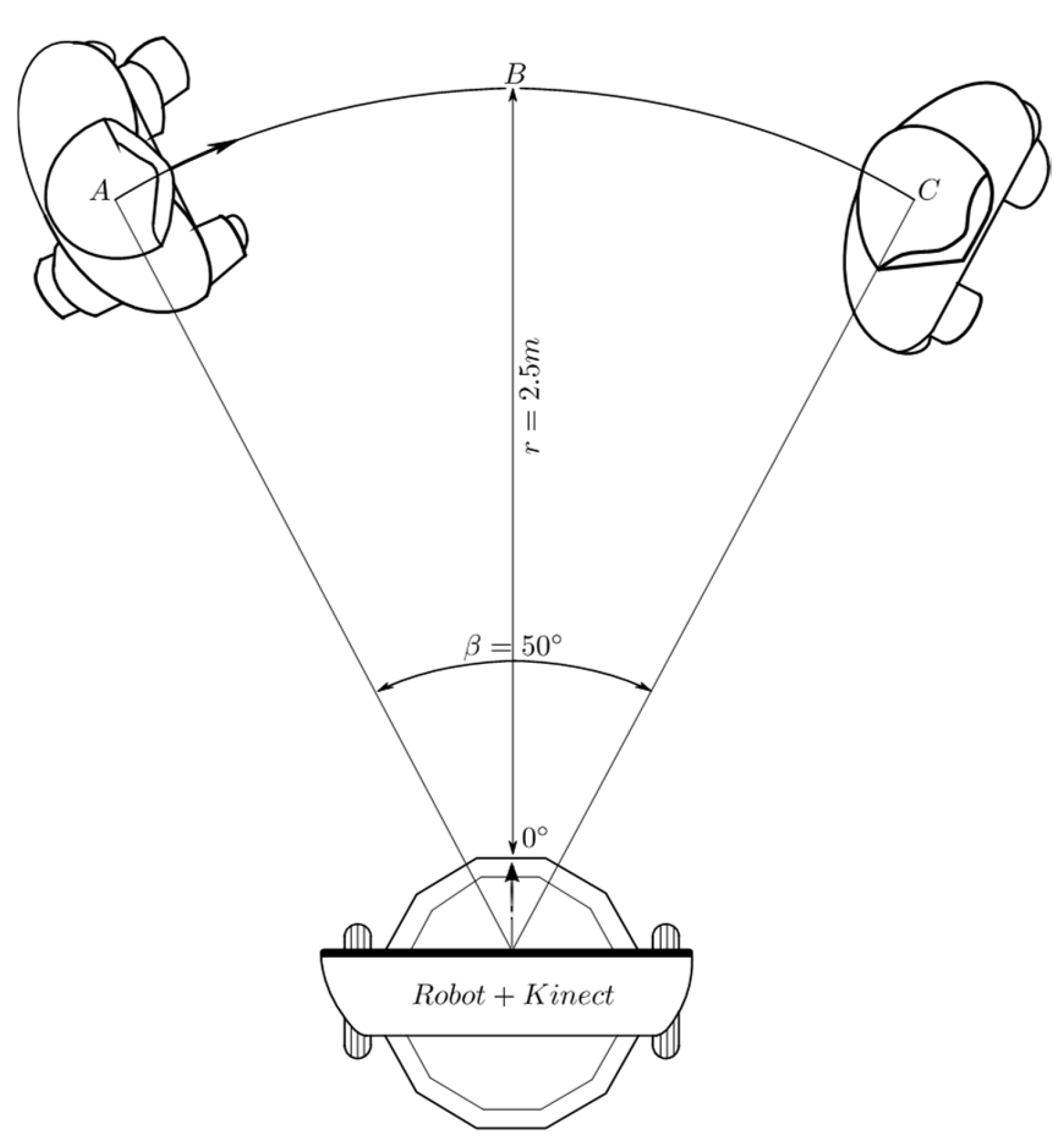

2.4.2. Experimental Design for Fuzzy Behaviors

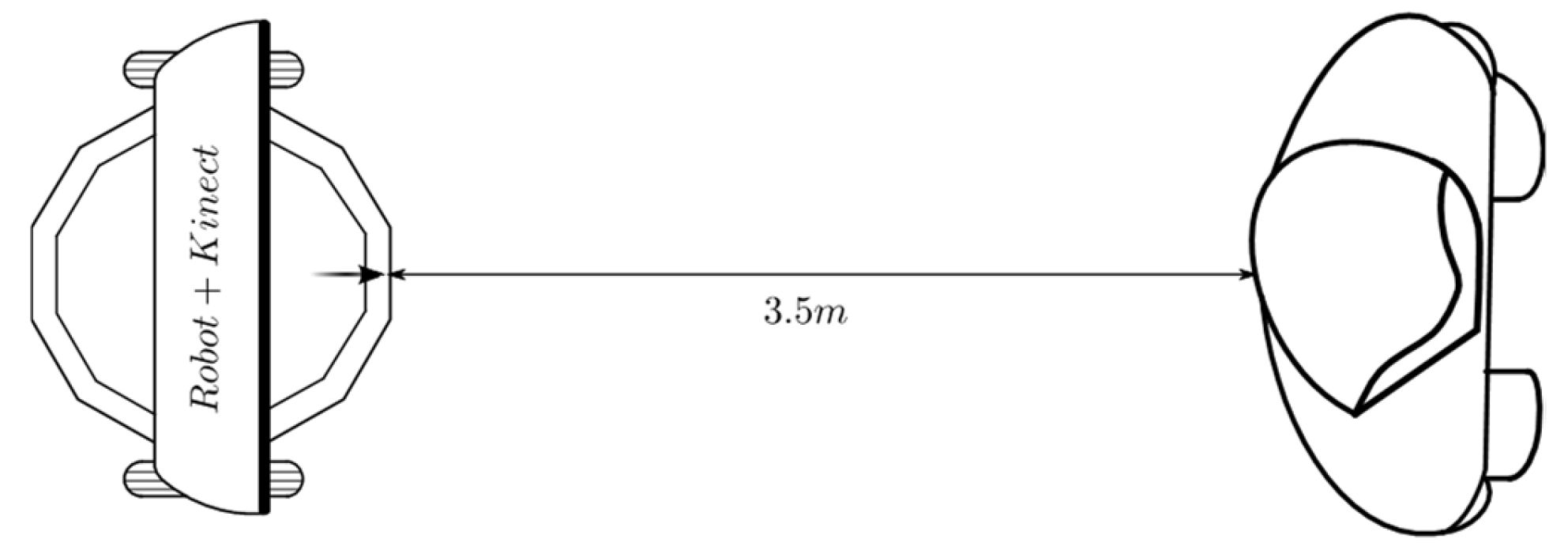

2.4.3. Experimental Design for Linear Tracking

2.4.4. Experimental Design for Angular Tracking

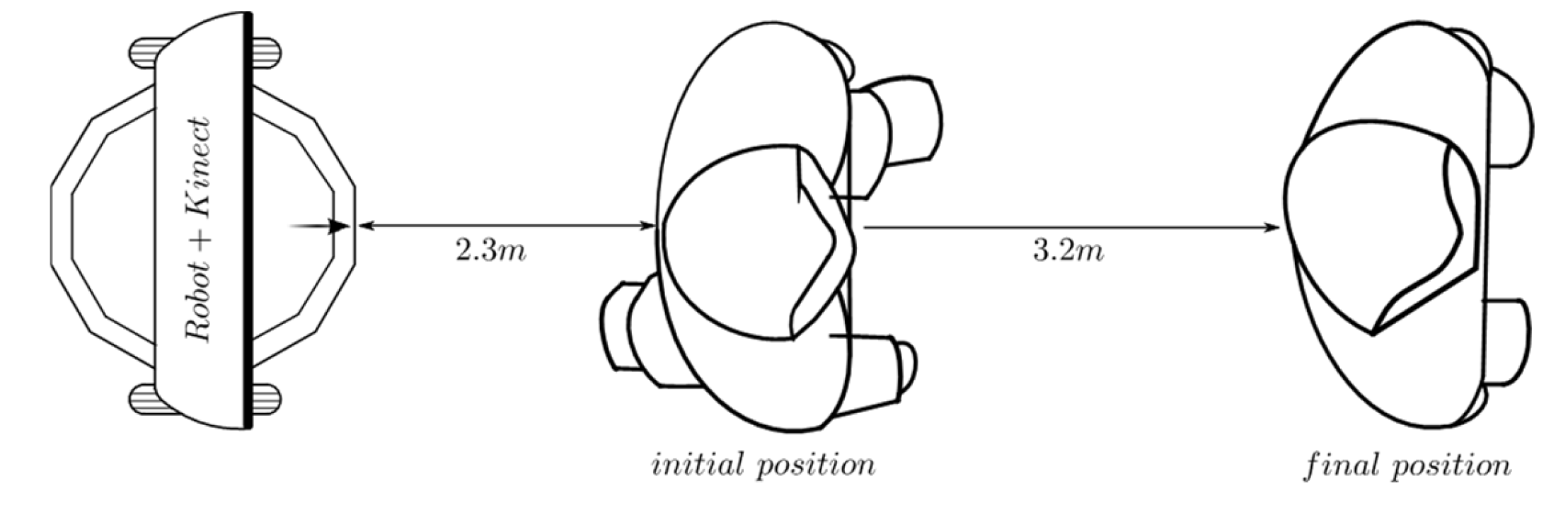

2.4.5. Experimental Design for Behavior Coordination

3. Results and Discussion

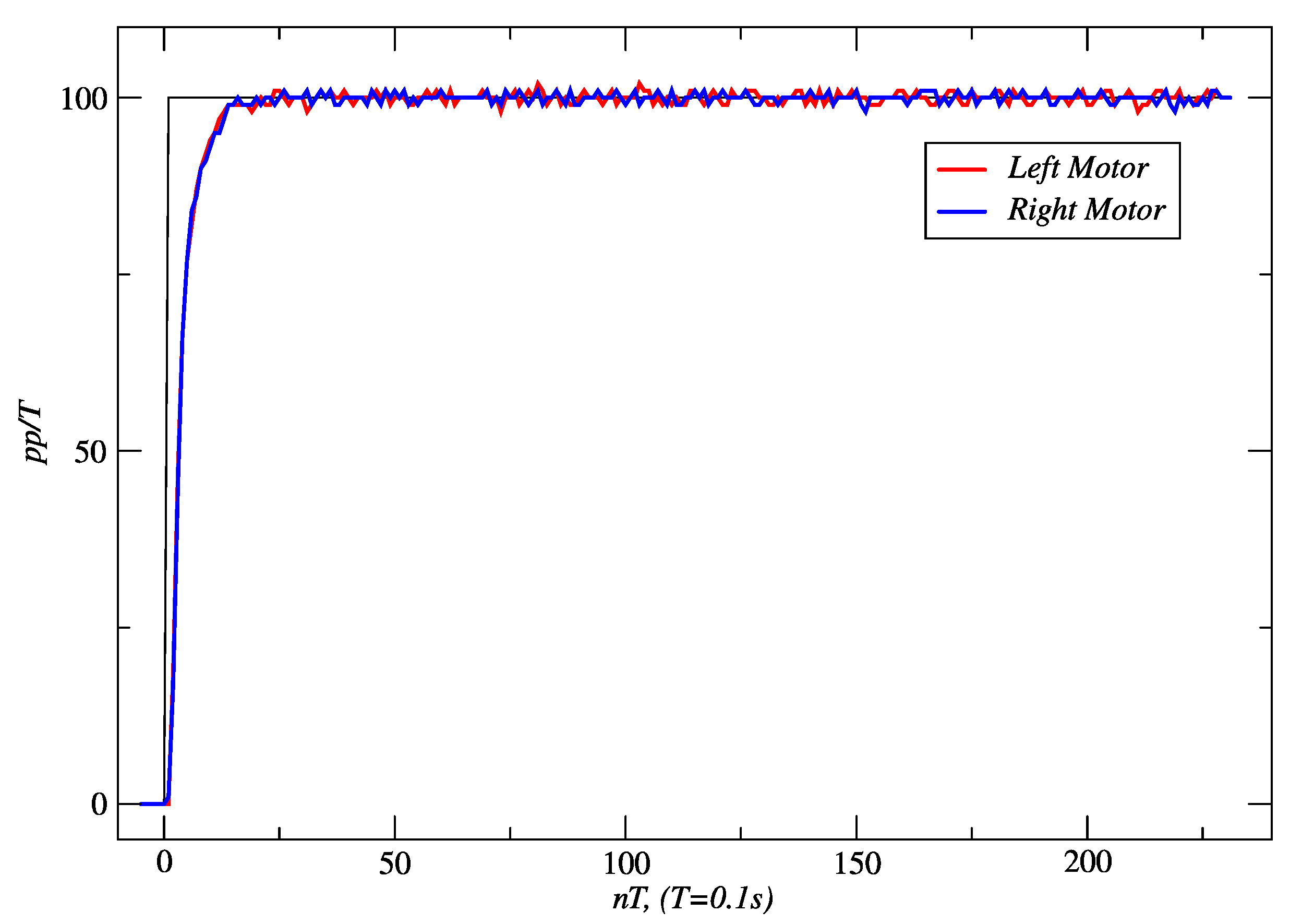

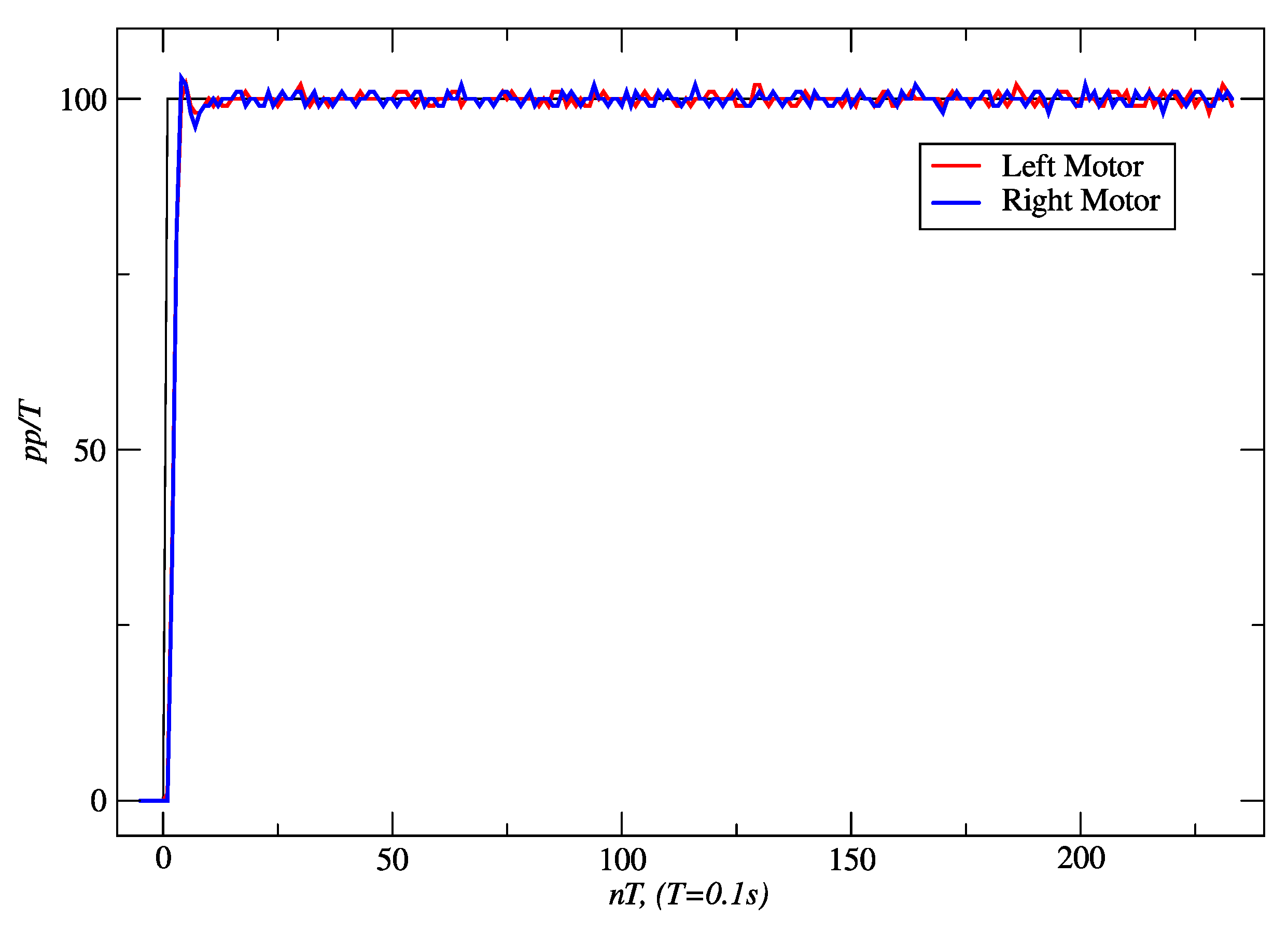

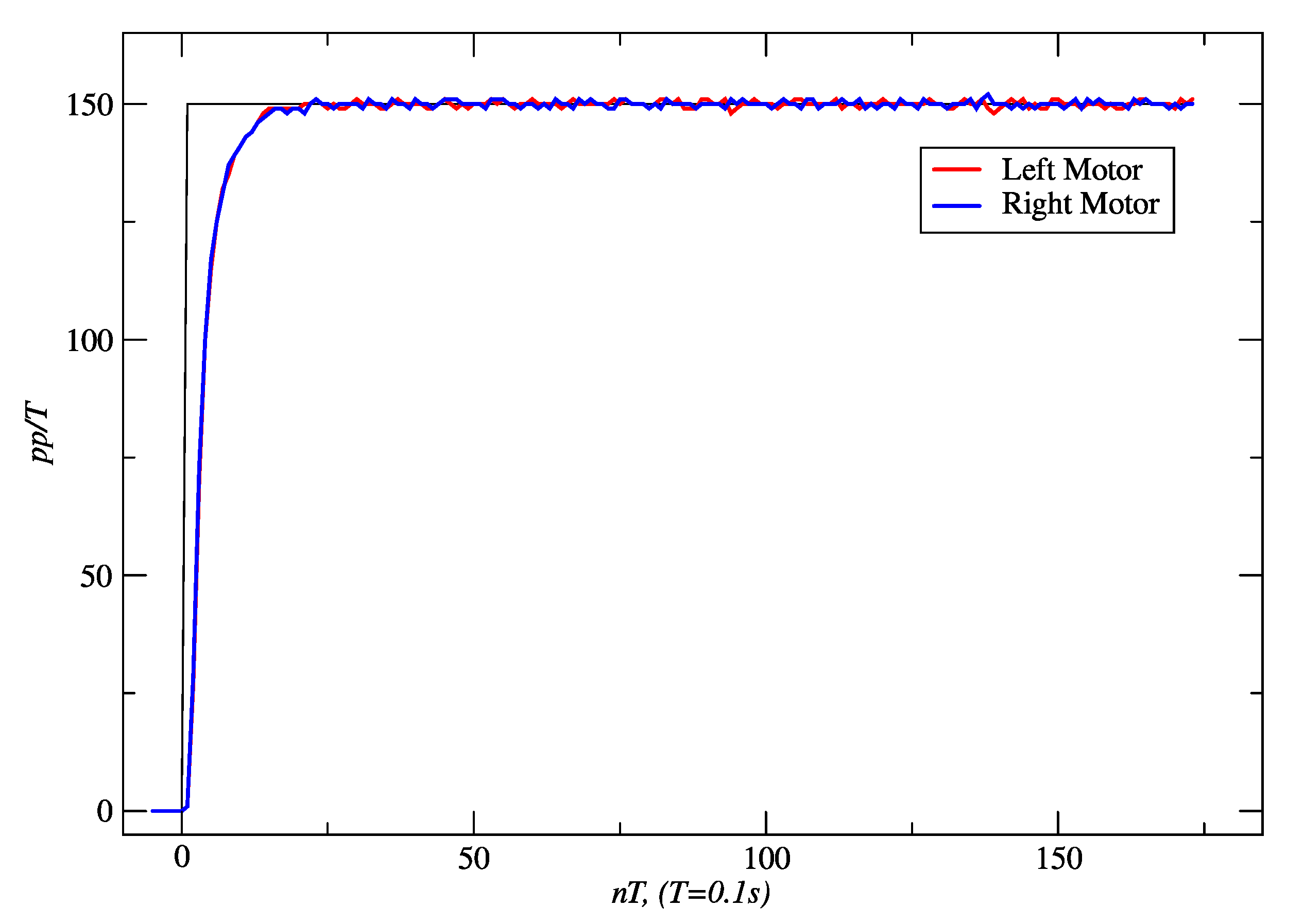

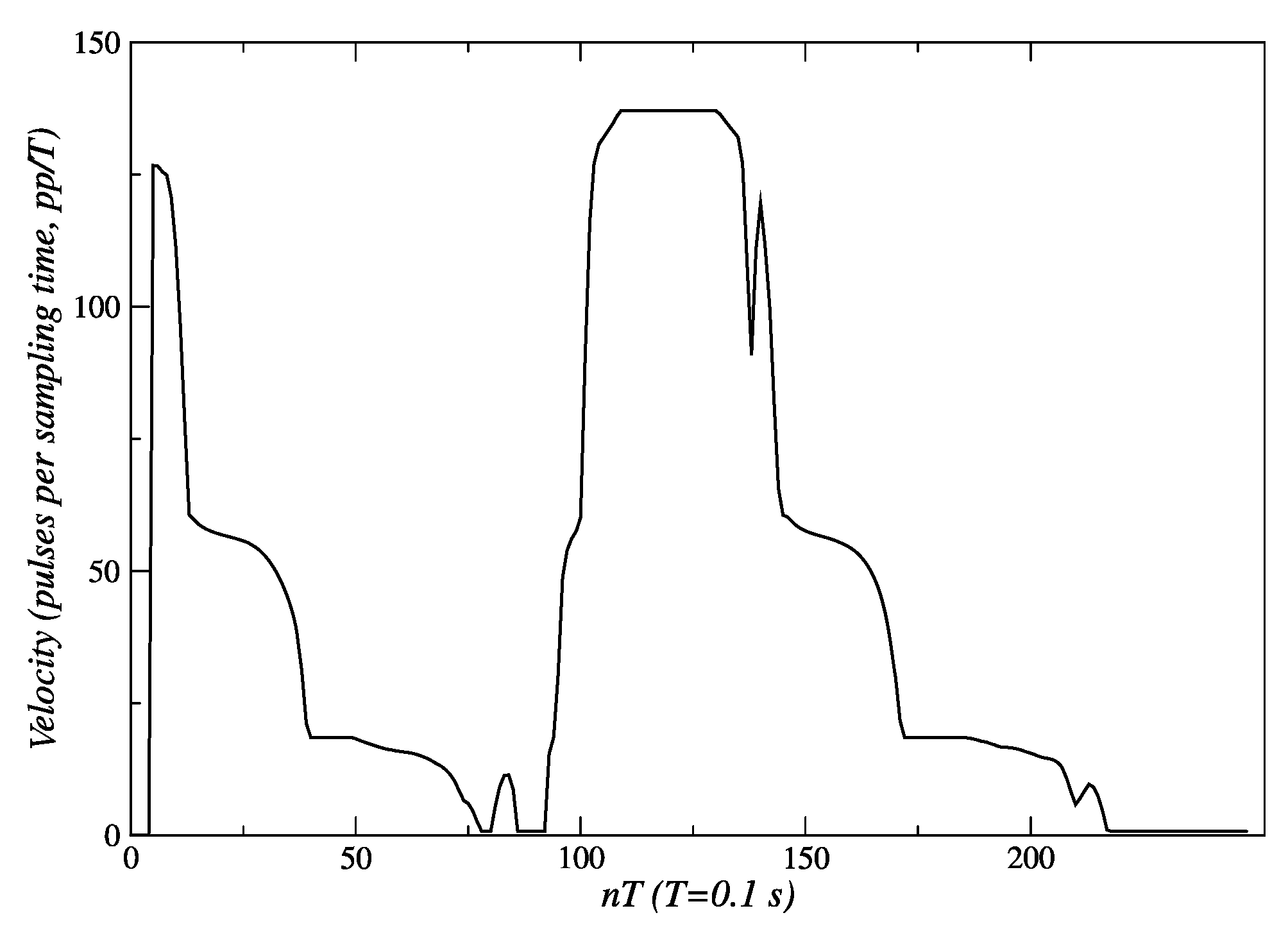

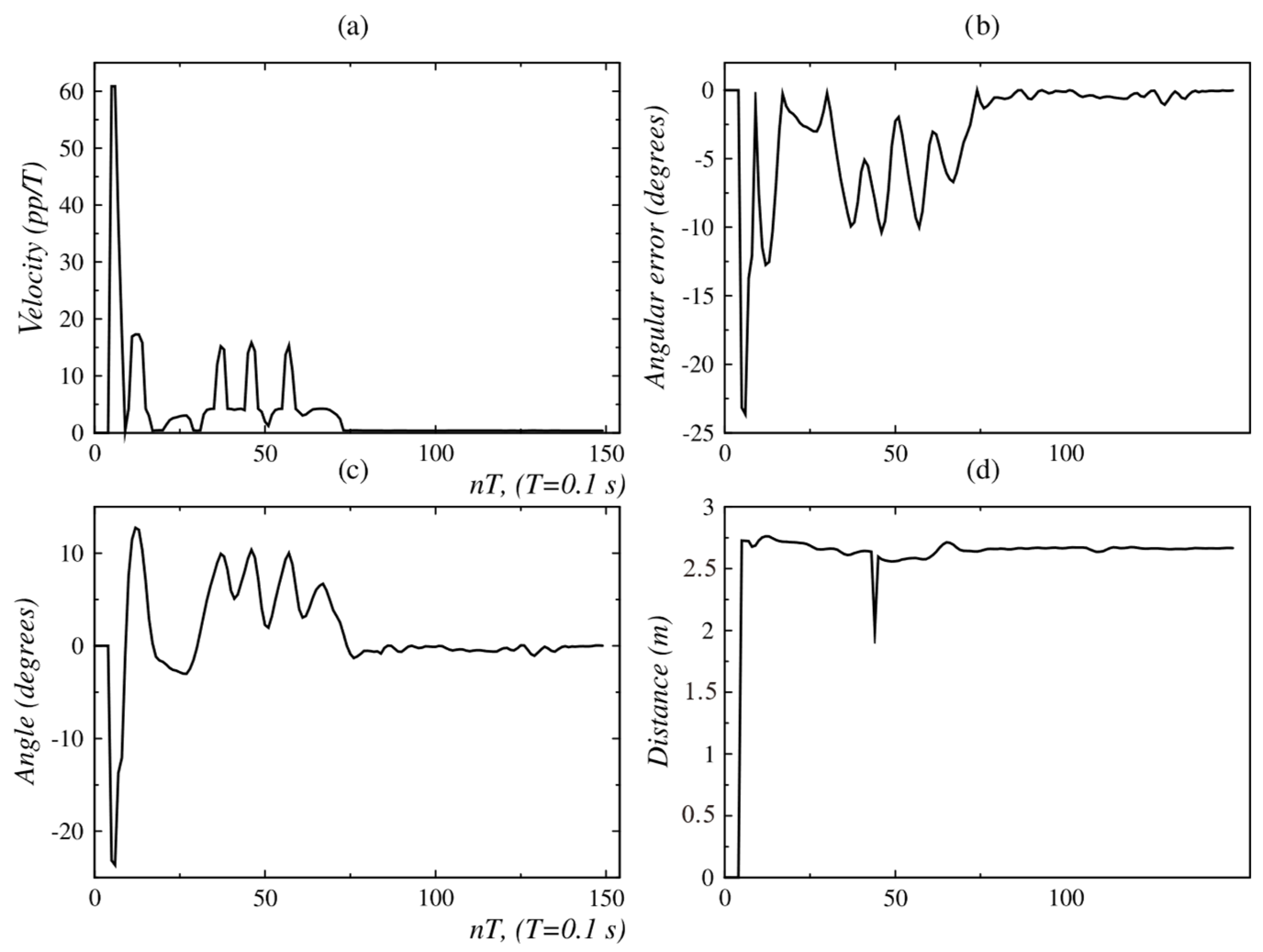

3.1. Results for Embedded Controllers

3.2. Results for Fuzzy Behaviors

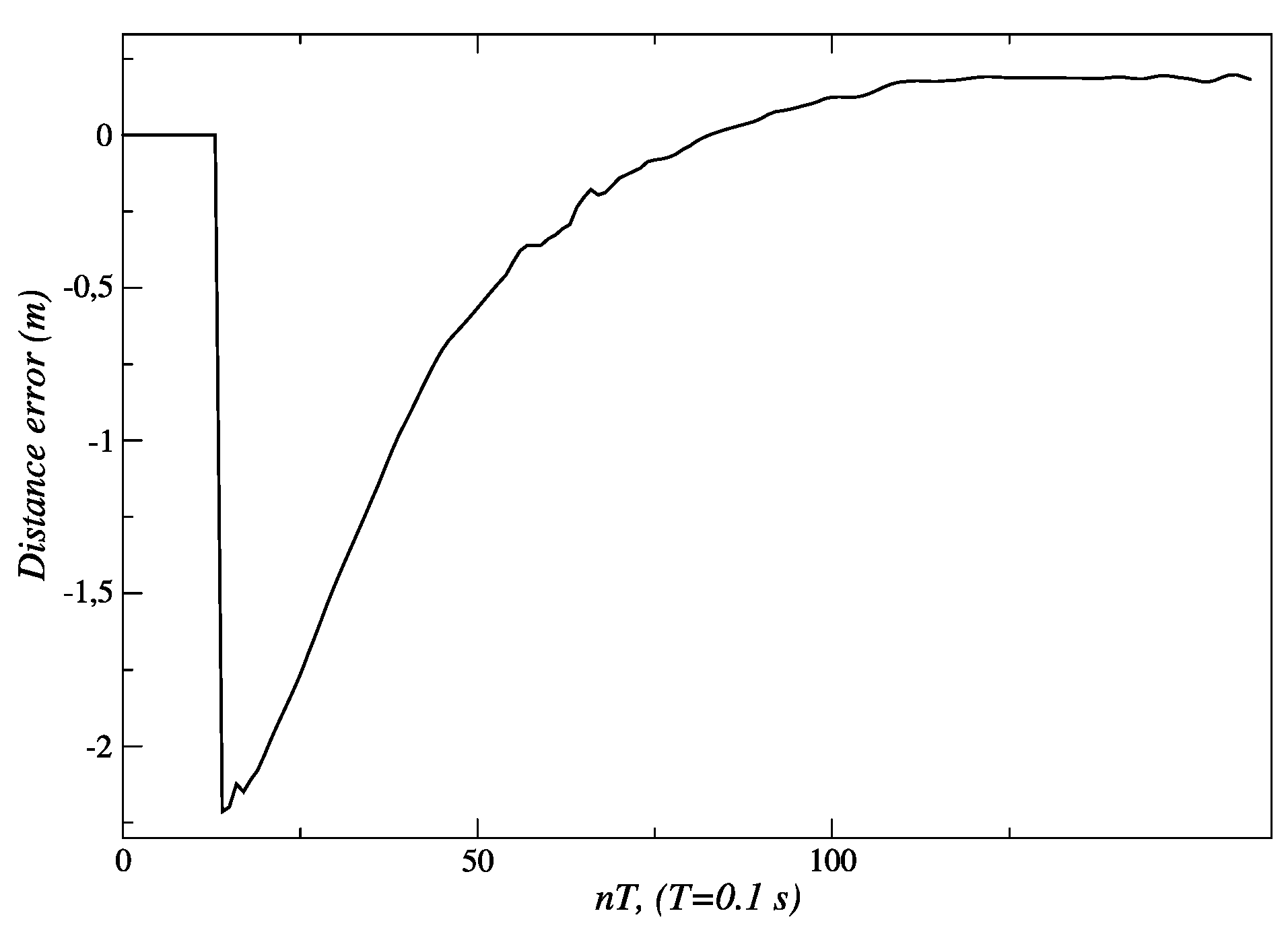

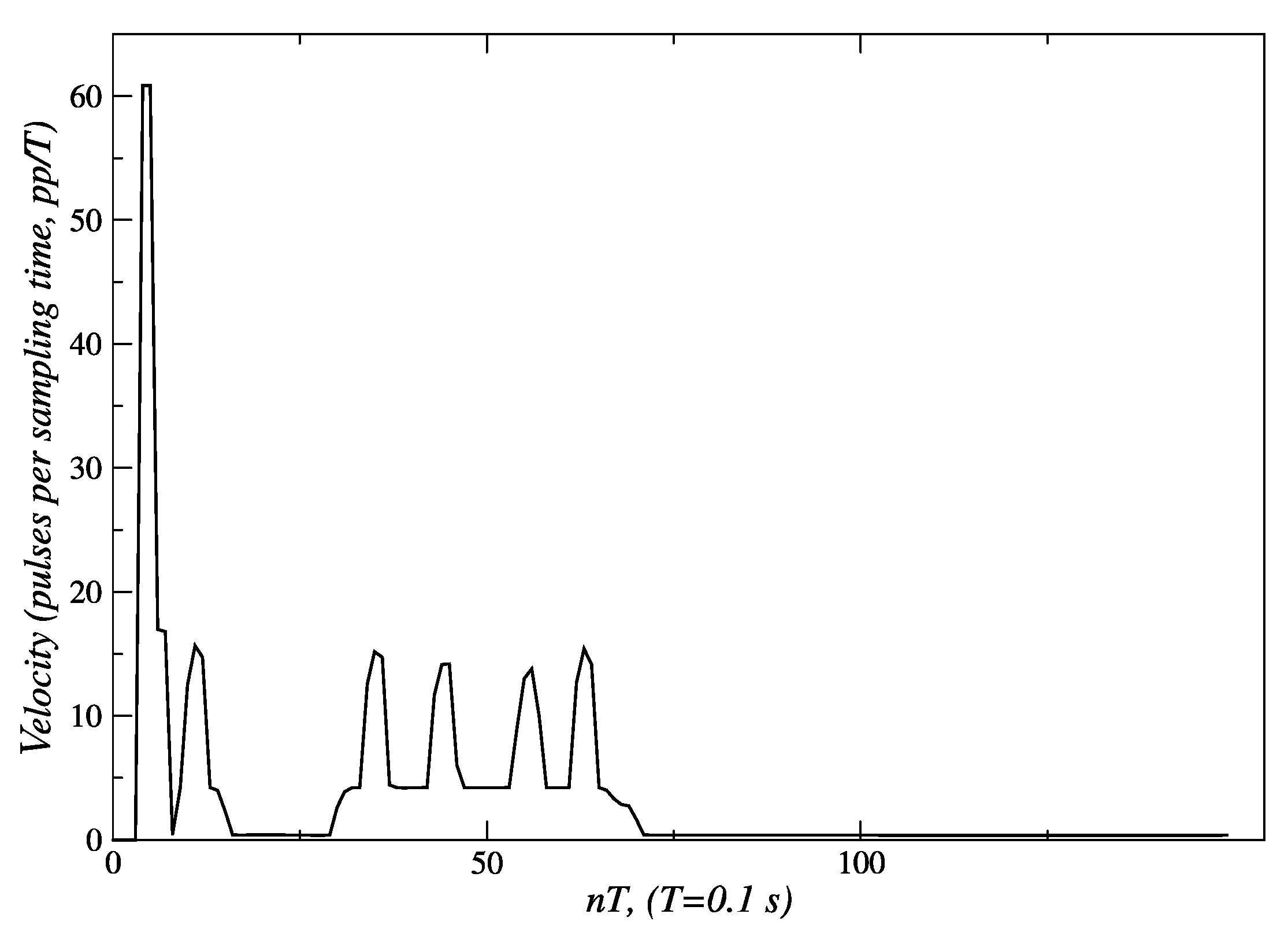

3.2.1. Results for Linear Tracking

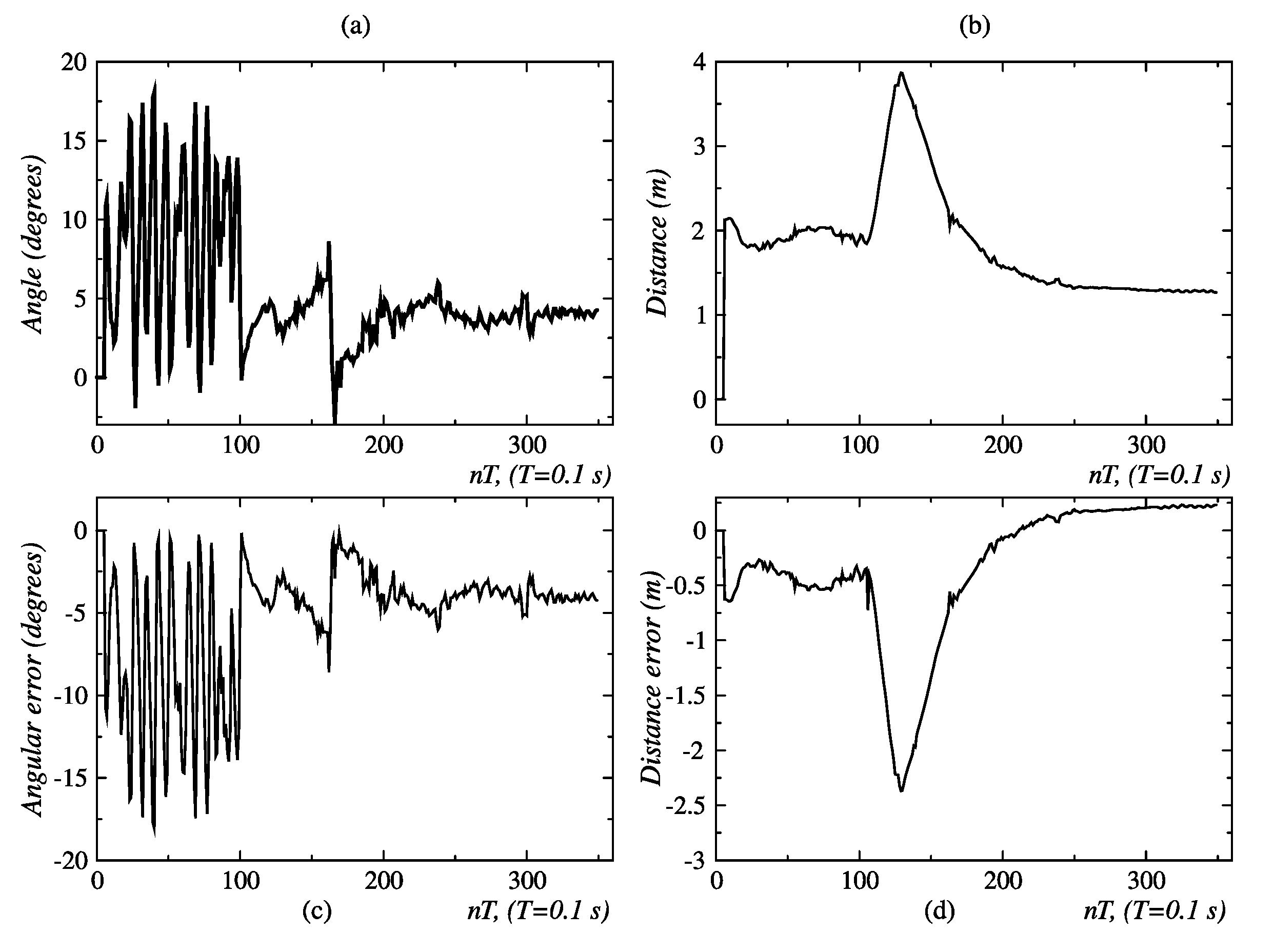

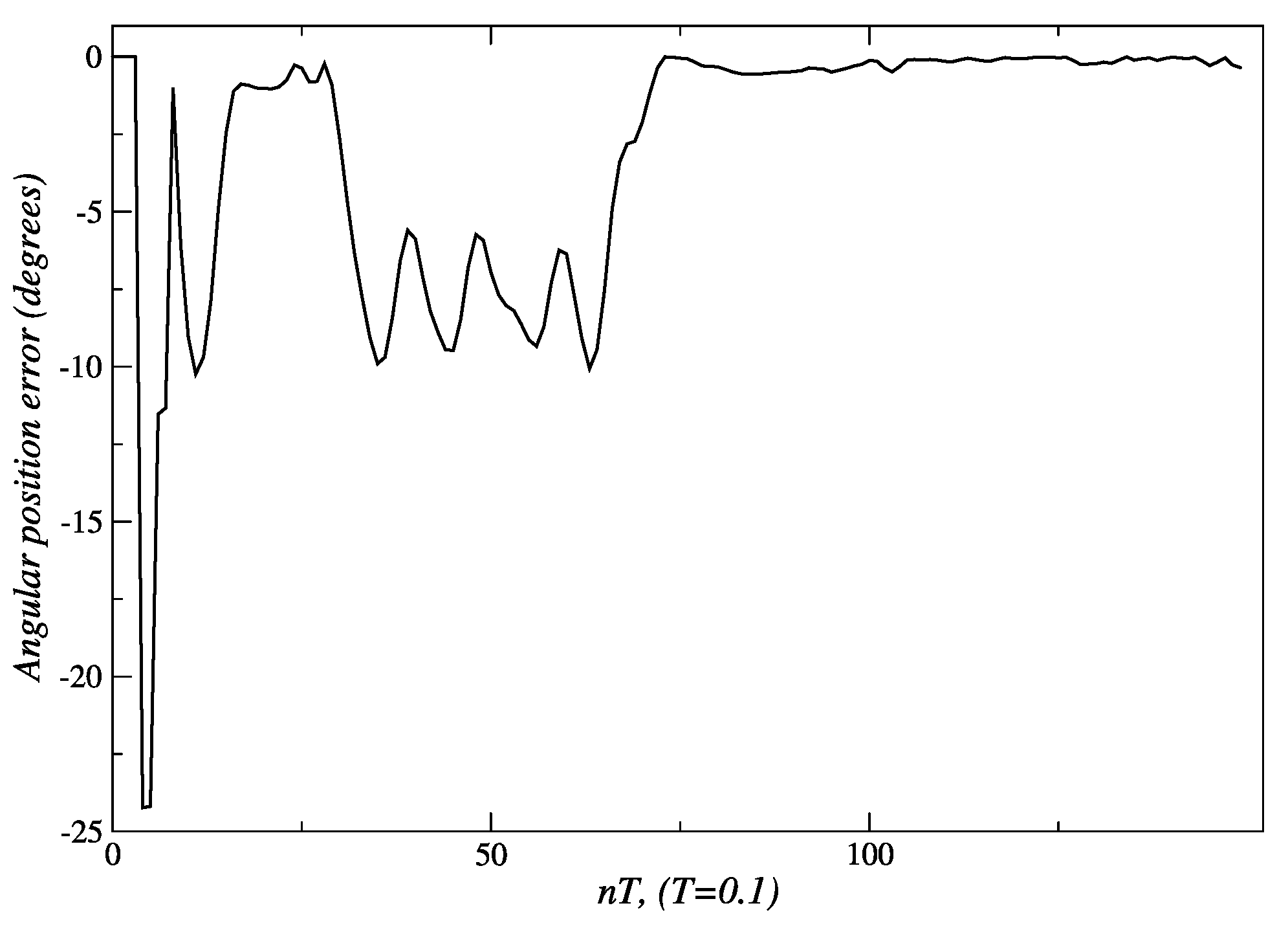

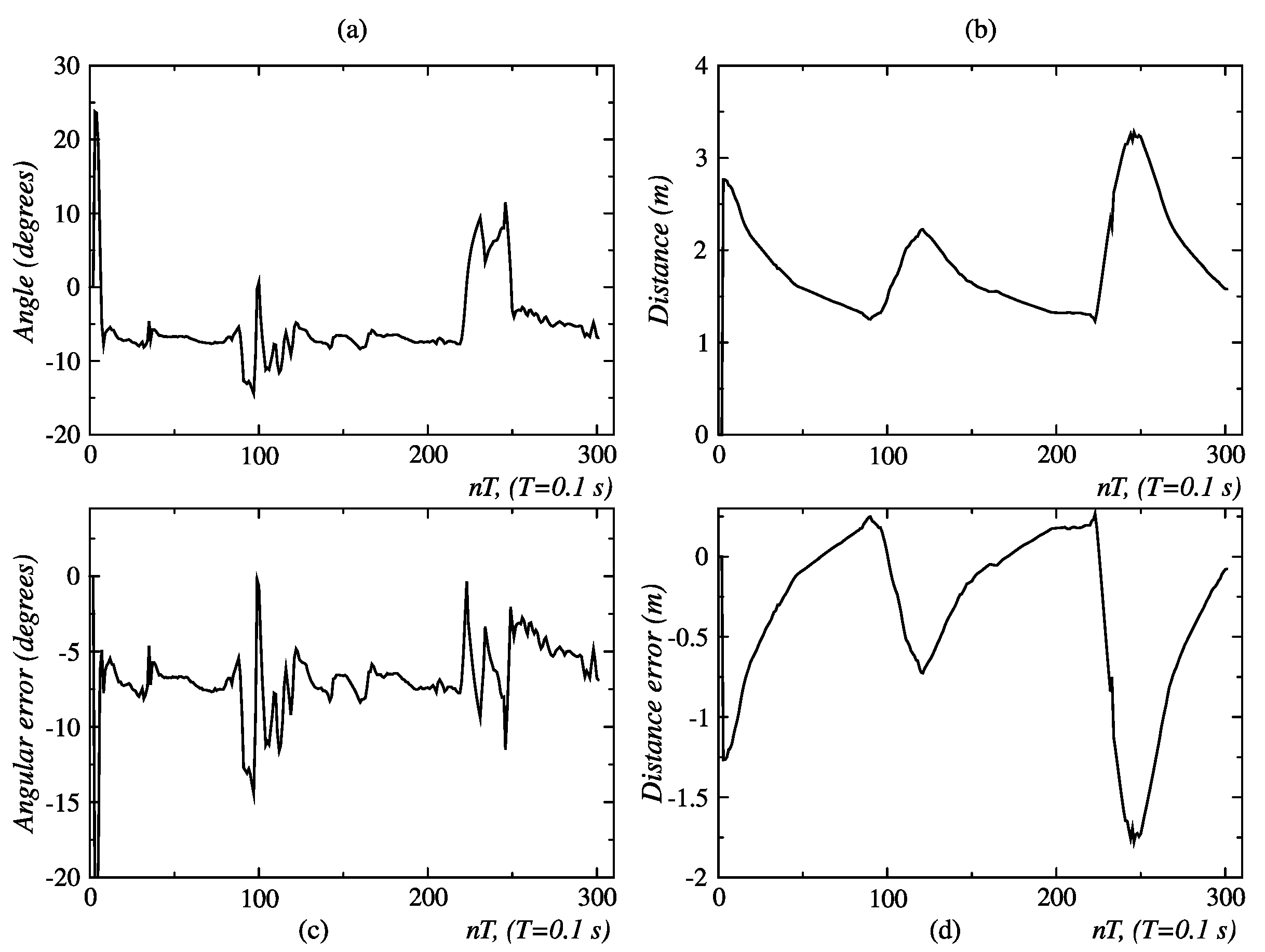

3.2.2. Results for Angular Tracking

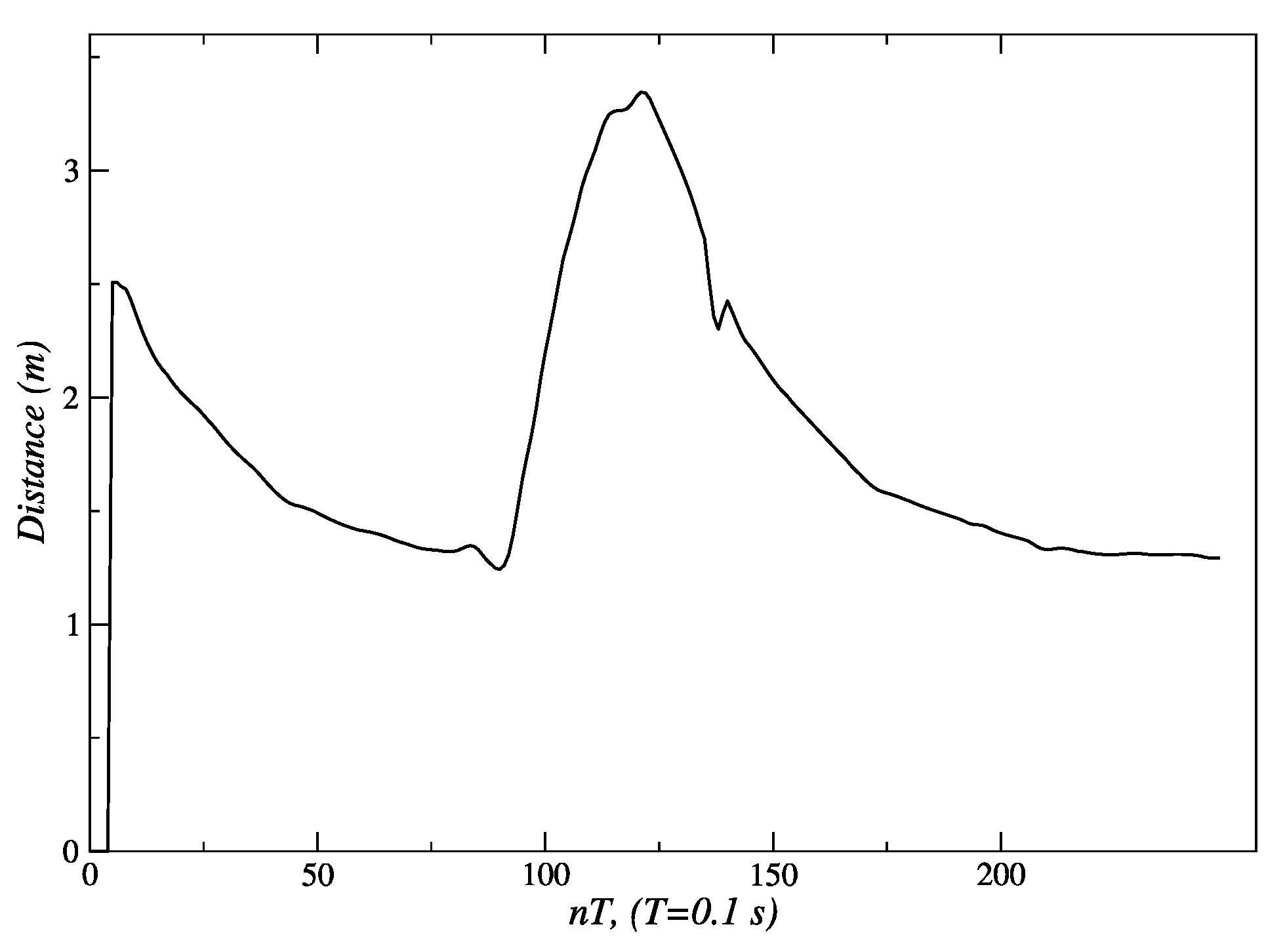

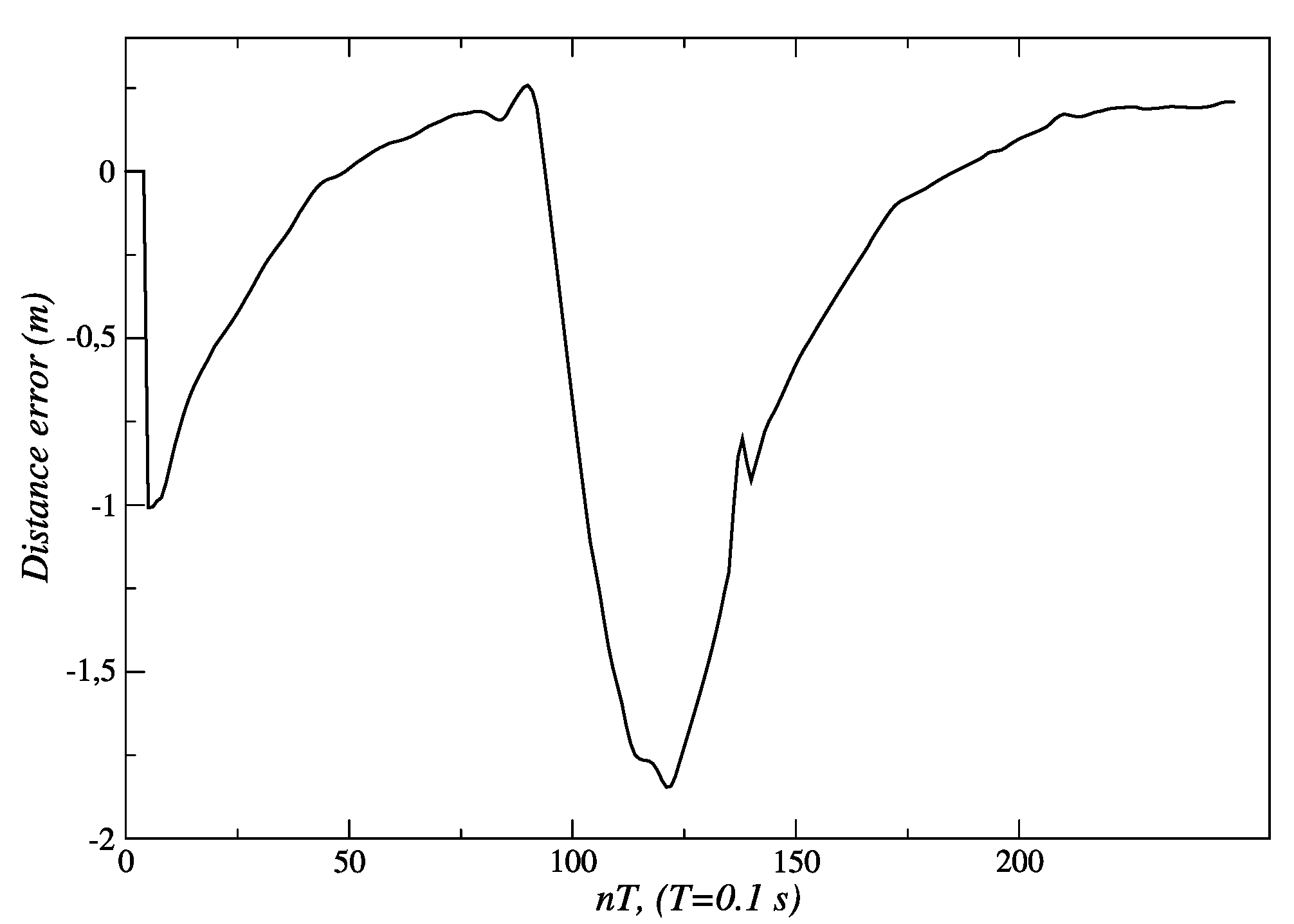

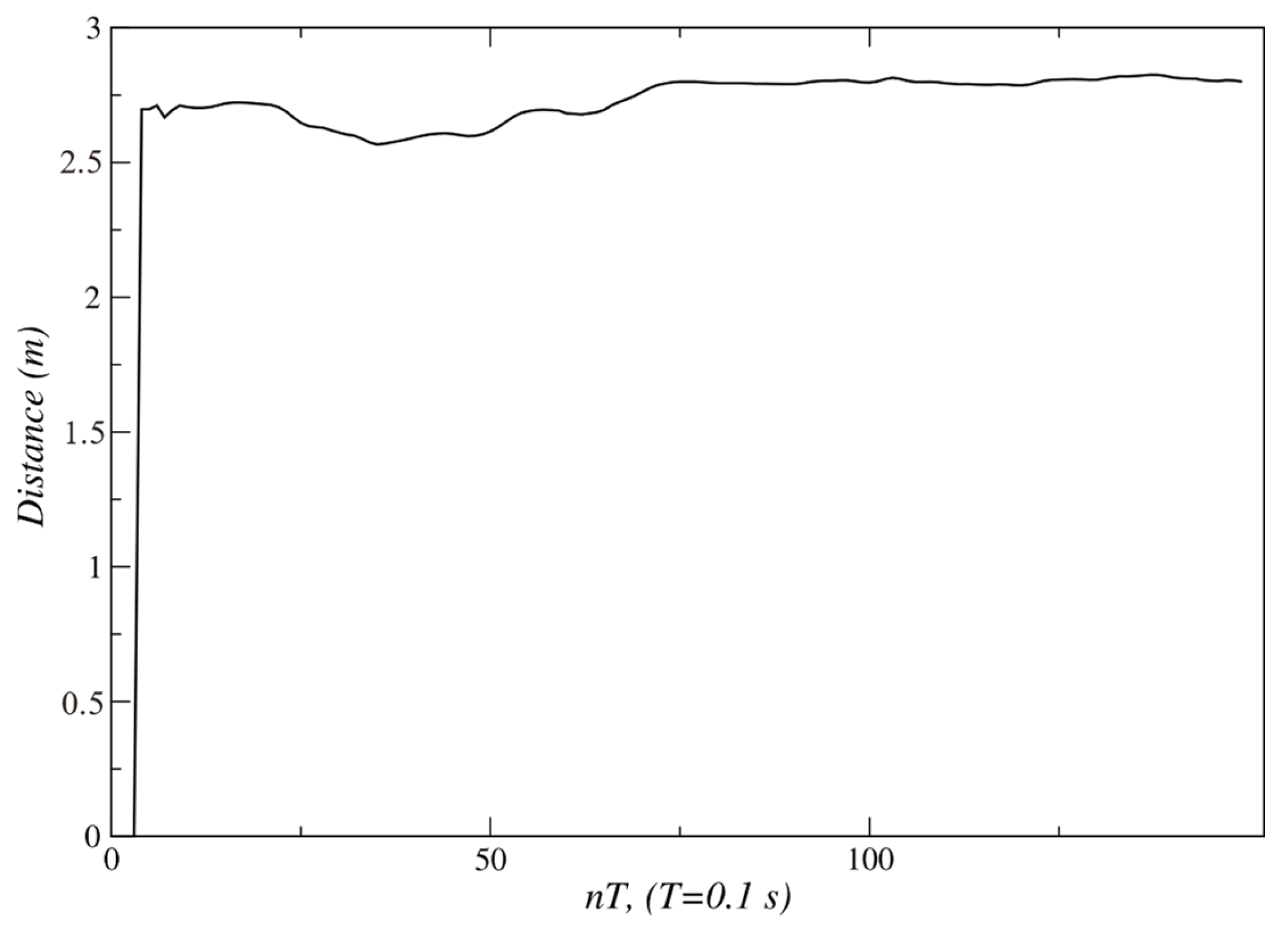

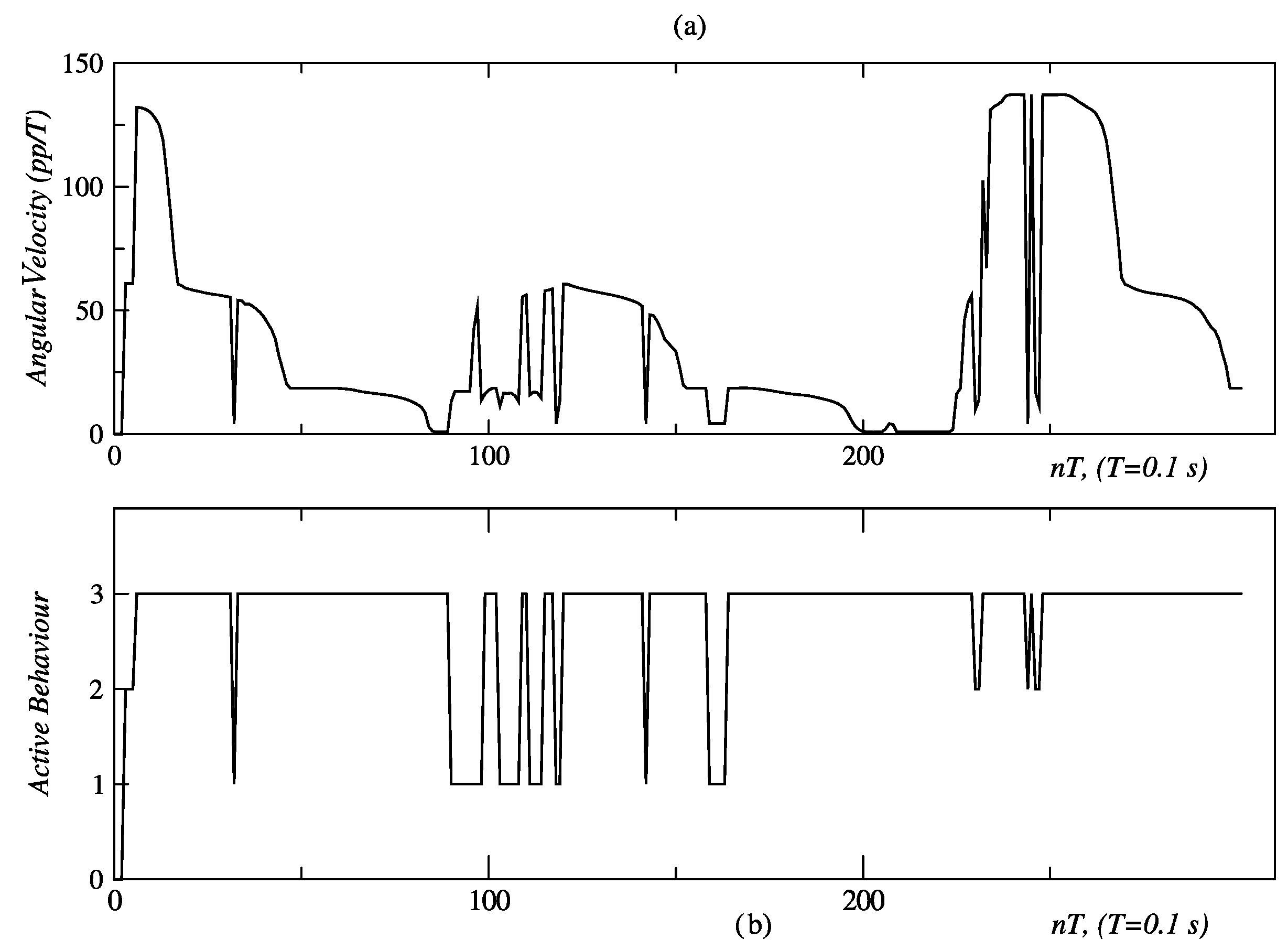

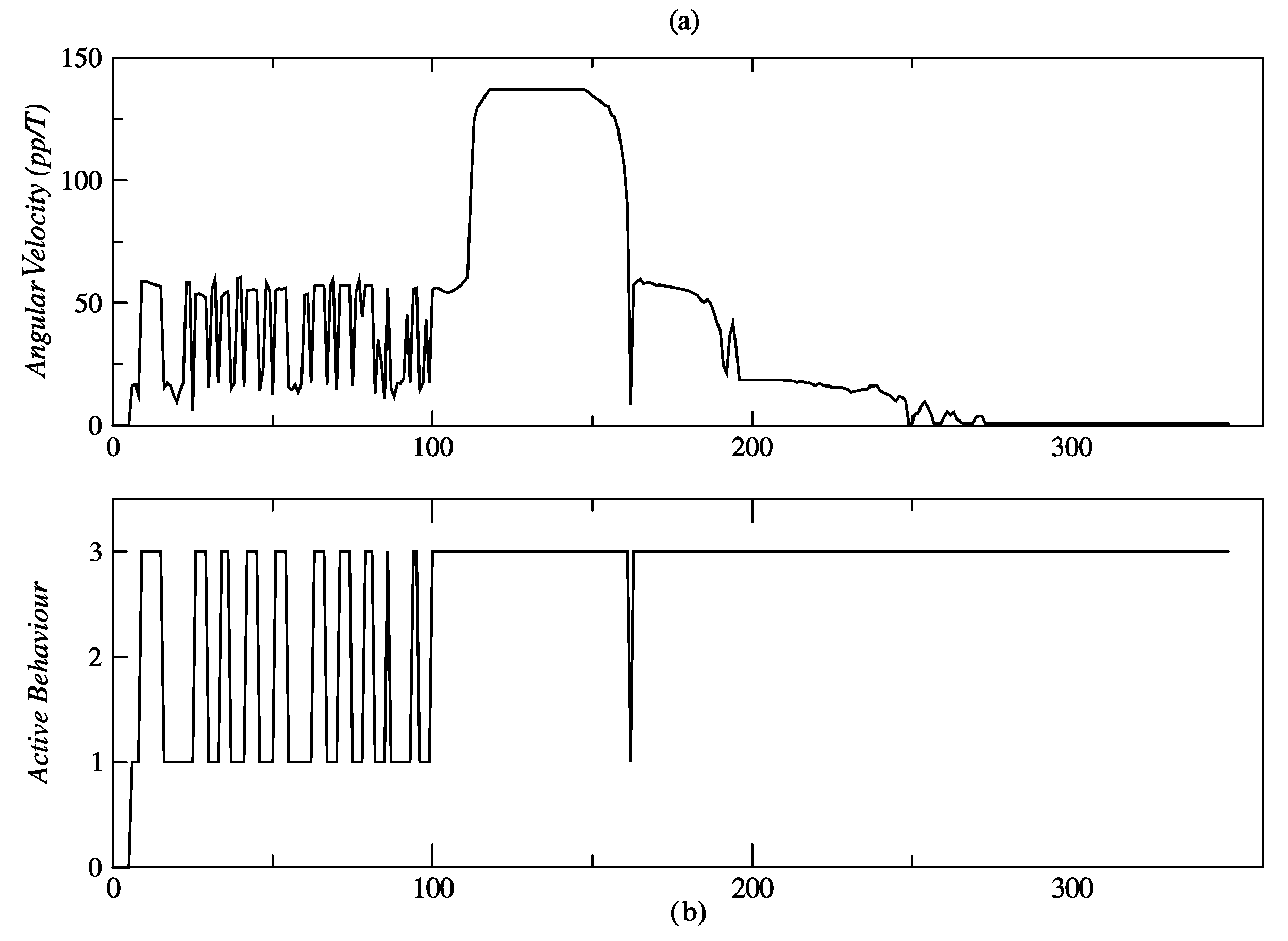

3.3. Results for Behavior Coordination

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Behavior | Input Variable | Output Variable |
|---|---|---|
| Linear displacement | ed: distance error between person and robot | Speed references for IMC |
| Angular displacement | ea: angular error between person and robot | Speed references for IMC |

| Input: Distance Error (ed) | Output: Angular Speed of Wheels (ω) |
|---|---|
| U = {ed ∈ ℝ : −2.3 ≤ ed ≤ 0.7} | V = {ω ∈ ℝ : 0 ≤ ω ≤ 220} |
| T(ed) = {nde, nmde, zde, pde} | T(ω) = {rsl, rslm, rshm, rsh} |
| G: Prefixes: n: negative, m: medium, z: zero, p: positive. Suffixes: de: distance error | G: Prefixes: rs: references of speed. Suffixes: l: low, m: medium, h: high |
| M: nde → trapmf [−2.3, −2.3, −1.085, −0.739]; nmde → trimf [−1.48, −0.6558, −0.117]; zde → trimf [−0.713, 0, 0.177]; pde → trapmf [0, 0.12, 0.7, 0.7] | M: rsl → trimf [0, 0, 4.47]; rslm → trimf [1.18, 17.63, 36.9]; rshm → trimf [21, 36.43, 124]; rsh → trapmf [47.7, 58.53, 220, 220] |
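The membership functions listed for the distance error can be evaluated with a few lines of Python. The sketch below re-implements triangular and trapezoidal membership functions with the usual `trimf`/`trapmf` parameter order (feet and peak, or feet and shoulders) and fuzzifies a distance error with the parameters from the table. The helper `fuzzify` and the dictionary name are hypothetical; only the numeric parameters come from the table.

```python
def trapmf(x, a, b, c, d):
    """Trapezoidal membership: feet at a and d, shoulders at b and c."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


def trimf(x, a, b, c):
    """Triangular membership: feet at a and c, peak at b."""
    return trapmf(x, a, b, b, c)


# Parameters copied from the distance-error column of the table.
DISTANCE_ERROR_SETS = {
    "nde": (trapmf, (-2.3, -2.3, -1.085, -0.739)),
    "nmde": (trimf, (-1.48, -0.6558, -0.117)),
    "zde": (trimf, (-0.713, 0.0, 0.177)),
    "pde": (trapmf, (0.0, 0.12, 0.7, 0.7)),
}


def fuzzify(x, sets):
    """Return the membership degree of x in every linguistic term."""
    return {name: fn(x, *params) for name, (fn, params) in sets.items()}


if __name__ == "__main__":
    for ed in (-2.0, -1.0, 0.0):
        print(ed, fuzzify(ed, DISTANCE_ERROR_SETS))
```

A distance error of −1.0 activates both `nde` and `nmde` partially, which is the overlap that lets the fuzzy behavior blend neighbouring speed references instead of switching abruptly.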

| Left Motor | Right Motor | Robot Displacement |
|---|---|---|
| CCW | CW | Linear forward |
| CW | CCW | Linear back |
| CW | CW | CCW |
| CCW | CCW | CW |

| Input: Error Angle (ea) | Output: Reference Velocity for Angular Displacement (vra) |
|---|---|
| U = {ea ∈ ℝ : −28 ≤ ea ≤ 0} | V = {vra ∈ ℝ : 0 ≤ vra ≤ 100} |
| T(ea) = {eanh, eanm, eanl, eaze} | T(vra) = {rsze, rspl, rspm, rsph} |
| G: Prefixes: ea: error angle. Suffixes: n: negative, m: medium, ze: zero, h: high, l: low | G: Prefixes: rs: references of speed. Suffixes: l: low, m: medium, h: high, p: positive, ze: zero |
| M: eanh → trapmf [−28, −28, −15.88, −13.4]; eanm → trimf [−18.3, −12.24, −8.41]; eanl → trimf [−11.9, −6.073, −1.77]; eaze → trapmf [−5.71, 0.0299, 0, 0] | M: rsze → trimf [0, 0.534, 1.603]; rspl → trimf [0.748, 3.95, 8.013]; rspm → trimf [5.02, 15.92, 30.9]; rsph → trapmf [20, 22.33, 100, 100] |
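To show how the angular sets could produce a speed reference, the following Python sketch runs a Mamdani-style inference with min implication, max aggregation, and centroid defuzzification over the sets in the table. The rule base is not given in this section, so the one-to-one pairing in `RULES` (larger angle error maps to higher speed) is purely an assumption, as are the function names and the sampling resolution. Membership parameters are copied verbatim from the table, including the `eaze` row, whose second parameter is larger than its third.

```python
def trapmf(x, a, b, c, d):
    """Trapezoidal membership: feet at a and d, shoulders at b and c."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


def trimf(x, a, b, c):
    """Triangular membership: feet at a and c, peak at b."""
    return trapmf(x, a, b, b, c)


ANGLE_SETS = {
    "eanh": (trapmf, (-28.0, -28.0, -15.88, -13.4)),
    "eanm": (trimf, (-18.3, -12.24, -8.41)),
    "eanl": (trimf, (-11.9, -6.073, -1.77)),
    "eaze": (trapmf, (-5.71, 0.0299, 0.0, 0.0)),  # verbatim from the table
}
SPEED_SETS = {
    "rsze": (trimf, (0.0, 0.534, 1.603)),
    "rspl": (trimf, (0.748, 3.95, 8.013)),
    "rspm": (trimf, (5.02, 15.92, 30.9)),
    "rsph": (trapmf, (20.0, 22.33, 100.0, 100.0)),
}
# Assumed rule base: one rule per input term. Not taken from the source.
RULES = {"eanh": "rsph", "eanm": "rspm", "eanl": "rspl", "eaze": "rsze"}


def membership(x, spec):
    fn, params = spec
    return fn(x, *params)


def angular_speed_reference(ea, samples=1001):
    """Centroid of the aggregated output set for an angle error ea in [-28, 0]."""
    firing = {name: membership(ea, spec) for name, spec in ANGLE_SETS.items()}
    num = den = 0.0
    for i in range(samples):
        v = 100.0 * i / (samples - 1)  # sample the output universe [0, 100]
        mu = max(
            min(firing[term_in], membership(v, SPEED_SETS[term_out]))
            for term_in, term_out in RULES.items()
        )
        num += v * mu
        den += mu
    return num / den if den else 0.0


if __name__ == "__main__":
    for ea in (-25.0, -10.0, -1.0):
        print(ea, round(angular_speed_reference(ea), 2))
```

Because the input universe only covers negative errors, a caller would presumably pass the negated magnitude of the angle and let `behave_flag` carry the turning direction; that reading is also an assumption.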

| Controller | Left Motor IAE | Left Motor ISE | Right Motor IAE | Right Motor ISE |
|---|---|---|---|---|
| Pole Placement | 553 | 48,955 | 549 | 47,855 |
| Internal Model | 303 | 31,561 | 301 | 31,443 |
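IAE and ISE are the integrals of the absolute and squared tracking error, respectively. The Python sketch below shows a rectangular approximation over sampled errors and computes the relative reduction between the two controllers from the table values. Whether the reported figures were scaled by the 0.1 s sampling interval is not stated, so the `ts` factor is an assumption, and the helper names are hypothetical.

```python
def iae(errors, ts=0.1):
    """Integral of absolute error, rectangular approximation."""
    return sum(abs(e) for e in errors) * ts


def ise(errors, ts=0.1):
    """Integral of squared error, rectangular approximation."""
    return sum(e * e for e in errors) * ts


def reduction_percent(baseline, improved):
    """Relative reduction of an index with respect to the baseline."""
    return 100.0 * (baseline - improved) / baseline


if __name__ == "__main__":
    # Table values: pole placement vs. internal model, left motor.
    print(round(reduction_percent(553, 303), 1))       # IAE
    print(round(reduction_percent(48955, 31561), 1))   # ISE
```

For the left motor, the table values correspond to roughly a 45% lower IAE and a 36% lower ISE for the internal model controller compared with pole placement.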


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Acosta-Amaya, G.A.; Miranda-Montoya, D.A.; Jimenez-Builes, J.A. Lightweight Two-Layer Control Architecture for Human-Following Robot. Sensors 2024, 24, 7796. https://doi.org/10.3390/s24237796
