Abstract

The hand–eye calibration of laser profilers and industrial robots is a critical component of the laser vision system in welding applications. To improve calibration accuracy and efficiency, this study proposes a position-constrained calibration compensation algorithm aimed at optimizing the hand–eye transformation matrix. Initially, the laser profiler is mounted on the robot and used to scan a standard sphere from various poses to obtain the theoretical center coordinates of the sphere, which are then utilized to compute the hand–eye transformation matrix. Subsequently, the positional data of the standard sphere’s surface are collected at different poses using the welding gun tip mounted on the robot, allowing for the fitting of the sphere’s center coordinates as calibration values. Finally, by minimizing the error between the theoretical and calibrated sphere center coordinates, the optimal hand–eye transformation matrix is derived. Experimental results demonstrate that, following error compensation, the average distance error in hand–eye calibration decreased from 4.5731 mm to 0.7069 mm, indicating that the proposed calibration method is both reliable and effective.

1. Introduction

Robotic welding technology is essential in modern manufacturing, particularly in the automotive, shipbuilding, and pressure vessel industries, where automated welding is in high demand [1,2,3]. The line-structured light sensor, valued for its non-contact measurement, high speed, accuracy, and stability, has emerged as the preferred tool for automated robotic welding [4,5]. A prevalent measurement technique involves affixing a line-structured laser sensor to the end effector of a robot. This method capitalizes on the robot’s inherent flexibility, allowing for a wider range of measurements than a sensor that is stationary [6].

Upon the initial installation of the sensor on the robot flange, it is essential to ascertain the relative pose relationship between the sensor and the robot’s end effector, a process referred to as hand–eye calibration [7]. The accuracy of hand–eye calibration directly impacts the precision of the sensor’s measurements in the robot’s coordinate system, which is crucial for high-precision tasks such as weld seam detection and tracking. The standard approach to hand–eye calibration is to formulate a matrix equation derived from the robot’s kinematic model, employing calibration objects as constraints to determine the transformation matrix that relates the sensor coordinate system to the end effector [8,9,10,11,12,13]. However, during hand–eye calibration, errors accumulate from sensor calibration errors, geometric and motion errors of the robotic arm, and deformation errors caused by load gravity. Therefore, the calibration scheme requires urgent optimization.

The accuracy of hand–eye calibration directly impacts the precision of the entire robotic vision system. This challenge has led many experts and scholars to explore algorithms and models to effectively improve the accuracy of robot hand–eye calibration. Shiu and Ahmad [14] first converted hand–eye calibration into the problem of solving for the rotation matrix and, more generally, the nonlinear homogeneous equation AX = XB. However, since the line-structured light sensor outputs two-dimensional point cloud data, Huang et al. [15] and Yang et al. [16] used the center of a stationary standard sphere as a constraint to establish and solve a matrix equation of the form AX = B instead of AX = XB. An et al. [17] proposed a combined optimization method to address the impact of random errors during hand–eye calibration and applied it to the hand–eye calibration process. However, this method did not consider the motion and geometric errors of the robotic arm, nor did it include the installation of an end effector. Cao et al. [18] proposed a simultaneous calibration method for the robot hand–eye relationship and kinematics using a line-structured light sensor. They utilized two standard spheres with known center distances for calibration, reducing the average distance error from 3.967 mm to 1.001 mm. Still, this method did not account for non-kinematic errors caused by load, and the calibration model and process were complex and inefficient. Furthermore, existing hand–eye calibration algorithms do not account for cumulative errors, rendering them inadequate for weld seam detection requirements.

To address these challenges, this paper proposes a compensation algorithm based on position constraints to optimize the calibration model parameters. An experimental platform is constructed to verify the correctness and effectiveness of the proposed methods. This paper is organized as follows: Section 2 introduces the principles of hand–eye calibration and error compensation techniques. Section 3 describes the industrial robot measurement system and presents the experimental results, including a comprehensive calibration process, error compensation procedures, and verification of the optimized hand–eye transformation matrix. Finally, Section 4 provides a summary of the paper and presents the concluding remarks.

2. Hand–Eye Calibration of the Line-Structured Light Sensor

Using the 3D measurement data from the line-structured light sensor, the trajectory of the robotic arm equipped with a welding torch can be planned to achieve automated welding. The key to this technology is accurately calibrating the rigid body transformation from the sensor coordinate system to the robotic arm tool coordinate system, i.e., calibrating the hand–eye matrices for the sensor used in industrial robots.

2.1. Principle of the Hand–Eye Calibration Algorithm Based on Spherical Constraints

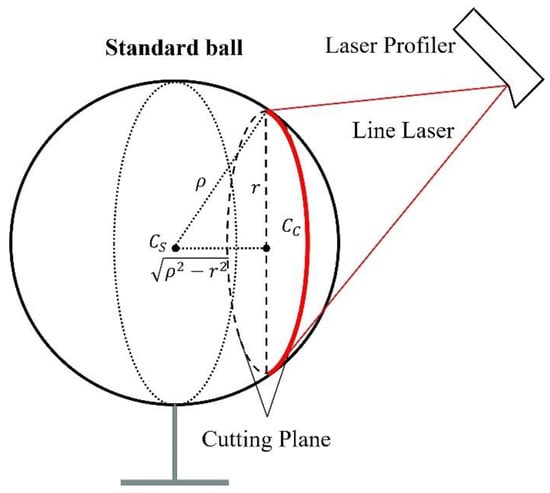

For an automated welding system, hand–eye calibration is the process of calibrating the relative coordinate transformation between the welding torch tip and the camera. A standard sphere fixed in the robot’s workspace is used as the calibration target. When the laser line emitted by the sensor projects onto the surface of the standard sphere, the intersection forms a circle, and the laser line creates a circular arc, as shown in Figure 1.

Figure 1.

Calibration sphere model.

Since the circle’s center lies in the light plane, its position in the sensor coordinate system is described by its two in-plane coordinates. By extracting the arc points and fitting the circle, these center coordinates and the radius r can be obtained. Using the Pythagorean theorem and considering the geometric properties of the sphere, the coordinates of the sphere’s center in the sensor coordinate system can be expressed as follows [17]:

Here, the remaining quantity is the radius of the sphere, and the sign of the out-of-plane coordinate is determined by the relative position of the line-structured light sensor and the standard sphere.
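The circle fit and the sphere-center relation described above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: it assumes the light plane is the x–z plane of the sensor frame (so the out-of-plane axis is y), it uses an algebraic least-squares circle fit, and the function names `fit_circle` and `sphere_center_in_sensor` are hypothetical.

```python
import numpy as np

def fit_circle(xz):
    """Algebraic least-squares circle fit to in-plane points of shape (N, 2)."""
    x, z = xz[:, 0], xz[:, 1]
    # (x - cx)^2 + (z - cz)^2 = r^2  ->  x^2 + z^2 = 2*cx*x + 2*cz*z + c
    A = np.column_stack([2 * x, 2 * z, np.ones_like(x)])
    b = x**2 + z**2
    (cx, cz, c), *_ = np.linalg.lstsq(A, b, rcond=None)
    r = np.sqrt(c + cx**2 + cz**2)
    return cx, cz, r

def sphere_center_in_sensor(xz, sphere_radius, sign=1.0):
    """Sphere center in the sensor frame from one laser arc.

    Assumption: the light plane is y = 0 in the sensor frame, so the center
    is offset from the plane by sqrt(R^2 - r^2) along y (Pythagorean theorem).
    `sign` selects the side of the light plane on which the center lies.
    """
    cx, cz, r = fit_circle(np.asarray(xz, dtype=float))
    offset = np.sqrt(max(sphere_radius**2 - r**2, 0.0))
    return np.array([cx, sign * offset, cz])
```

The `sign` argument has to be chosen by the user from the known placement of the sensor relative to the sphere, since a single arc cannot distinguish the two sides of the light plane.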

By keeping the sphere’s center fixed and changing the sensor’s position and orientation multiple times, the coordinates of the sphere center in the sensor coordinate system are obtained for each pose, where k denotes the k-th measurement state of the robotic arm at different positions and orientations. According to the rigid body transformation, the relationship between the sphere center’s position in the robotic arm base coordinate system and its position in the sensor coordinate system is given as follows:

Here, the rotation matrix (3 × 3) and translation vector (3 × 1) of the tool coordinate system relative to the base coordinate system are determined by the robotic arm’s calibration algorithm and are considered known. The hand–eye rotation matrix (3 × 3) and translation vector (3 × 1) are the quantities to be solved.
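Under notation assumed here purely for illustration (the symbols are not taken from the source), let $P_b$ be the fixed sphere center in the base frame, $P_s^{k}$ the sphere center measured in the sensor frame at pose $k$, $(R_k, T_k)$ the known pose of the tool frame in the base frame, and $(R_x, T_x)$ the hand–eye rotation and translation. The rigid-body relation described above can then be written as:

```latex
P_b = R_k \left( R_x P_s^{k} + T_x \right) + T_k
    = R_k R_x P_s^{k} + R_k T_x + T_k
```

The expanded form shows why the two unknowns can be handled separately: when $R_k$ is held constant, the term $R_k T_x$ cancels in the difference of two measurements, leaving an equation in $R_x$ alone.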

First, solve for the hand–eye rotation matrix. Since the robotic arm only undergoes translational motion and does not change its orientation between two positions, the rotation matrix of the tool relative to the base remains constant [17]. Based on this, the following equation can be derived:

Here, the inverse and transpose of this constant rotation matrix appear. Since a rotation matrix is orthogonal, its inverse equals its transpose.

After moving the robotic arm through n translations and measuring the sphere center at each position, n − 1 equations can be established from Equation (3):

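To obtain the optimal solution for the hand–eye rotation matrix, following the idea of the Iterative Closest Point (ICP) registration algorithm [19], a cross-covariance matrix is formed from the stacked measurement differences. Performing singular value decomposition (SVD) [20] on this matrix yields its left and right singular vectors. The optimal rotation matrix based on the measurement results is then the following: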
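The SVD step can be sketched as below. The sketch assumes the model P_b = R_0 (R_x p_k + T_x) + t_k with a constant tool rotation R_0 during the translations, so that R_x (p_k − p_1) = R_0ᵀ (t_1 − t_k). The function name, the argument layout, and the determinant correction that guards against a reflection are assumptions of this example rather than details given in the text.

```python
import numpy as np

def estimate_hand_eye_rotation(p_sensor, t_tool, R0):
    """Estimate the hand-eye rotation from translation-only robot motions.

    p_sensor : (n, 3) sphere centers measured in the sensor frame
    t_tool   : (n, 3) tool positions in the base frame
    R0       : (3, 3) constant tool orientation in the base frame
    """
    p_sensor = np.asarray(p_sensor, dtype=float)
    t_tool = np.asarray(t_tool, dtype=float)
    # Differences relative to the first measurement: R_x a_k = b_k.
    A = (p_sensor[1:] - p_sensor[0]).T          # 3 x (n-1)
    B = R0.T @ (t_tool[0] - t_tool[1:]).T       # 3 x (n-1)
    H = A @ B.T                                 # cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Force det(R) = +1 so the result is a proper rotation.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    return Vt.T @ D @ U.T
```

The translations need to span all three base axes; otherwise the cross-covariance matrix is rank deficient and the rotation is not uniquely determined.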

The translation vector can be obtained by changing the position and orientation of the robotic arm through arbitrary rotations and translations. From Equation (2), the following can be derived:

By changing the robotic arm’s position and orientation m times and measuring the sphere center at each pose, the following can be obtained:

Since the coefficient matrix is known, the optimal translation vector can be obtained using the least squares method:
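A least-squares solve for the translation can be sketched as follows, assuming the same model P_b = R_k (R_x p_k + T_x) + t_k. Subtracting consecutive poses eliminates the unknown sphere center and gives (R_k − R_{k−1}) T_x = q_{k−1} − q_k with q_k = R_k R_x p_k + t_k. The consecutive-pair differencing and the function name are choices made for this example; the source does not state which pairs are used.

```python
import numpy as np

def estimate_hand_eye_translation(R_tool, t_tool, p_sensor, R_X):
    """Estimate the hand-eye translation from arbitrary robot poses.

    R_tool   : (m, 3, 3) tool orientations in the base frame
    t_tool   : (m, 3)    tool positions in the base frame
    p_sensor : (m, 3)    sphere centers measured in the sensor frame
    R_X      : (3, 3)    hand-eye rotation obtained beforehand
    """
    R_tool = np.asarray(R_tool, dtype=float)
    t_tool = np.asarray(t_tool, dtype=float)
    p_sensor = np.asarray(p_sensor, dtype=float)
    # q_k = R_k R_X p_k + t_k for every pose.
    q = np.einsum('kij,kj->ki', R_tool, p_sensor @ R_X.T) + t_tool
    # Stack (R_k - R_{k-1}) T_X = q_{k-1} - q_k over consecutive poses.
    C = (R_tool[1:] - R_tool[:-1]).reshape(-1, 3)
    d = (q[:-1] - q[1:]).reshape(-1)
    T_X, *_ = np.linalg.lstsq(C, d, rcond=None)
    return T_X
```

The poses must differ in orientation, because a pair of poses with identical rotation contributes only zero rows to the coefficient matrix.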

2.2. Position-Constrained Calibration Compensation Algorithm

The aforementioned hand–eye calibration algorithm inevitably encounters errors due to several factors: (a) When solving for the hand–eye parameters, the calibration equations are established based on motion constraints, assuming that the sensor detection data are unbiased. However, errors exist in the sensor’s intrinsic calibration. (b) The robotic arm itself has geometric and motion errors, and relying solely on theoretical matrix decomposition has limitations. (c) The robotic arm, equipped with a welding torch and the sensor, undergoes deformation due to gravity, resulting in deformation errors. These errors contribute to inaccuracies in the theoretical matrix decomposition process, making it challenging to ensure the precision of experimental calibration. To address this issue, a hand–eye calibration error compensation algorithm is proposed:

Step 1: Use the hand–eye rotation matrix and translation vector obtained from the hand–eye calibration algorithm described in Section 2.1 as the initial solution.

Step 2: Control the robotic arm, equipped with the sensor and welding torch, to collect positional information on the standard sphere’s surface points from various orientations at multiple poses. Fit the coordinates of the sphere center in the robot base coordinate system from these points; the fitted center is taken as the true position of the sphere center in the base coordinate system.

Step 3: Substitute the sphere center position measured by the sensor at the k-th pose, together with the corresponding rotation matrix and translation vector of the tool relative to the base coordinate system, into Equation (2) to obtain the theoretical position of the sphere center in the base coordinate system.

Step 4: Based on the principle of minimizing the error between the theoretical sphere center coordinates and the true sphere center coordinates, establish the objective function of Equation (9). Use the Levenberg–Marquardt (LM) algorithm to iteratively solve Equation (9), optimizing the hand–eye rotation matrix and translation vector of the robot vision-guided system until the results converge.

Here, the sum in Equation (9) runs over all sensor measurements of the sphere center used to obtain the initial hand–eye parameters, that is, the number of translations plus the number of arbitrary pose changes of the robotic arm.
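Steps 1 to 4 can be sketched with SciPy's `least_squares` solver using `method="lm"`. The sketch minimizes the sum over k of ‖R_k (R_x p_k + T_x) + t_k − P_true‖², which is the objective as described in the text under the model assumed here. Parameterizing the rotation as a rotation vector, the function name `refine_hand_eye`, and the argument layout are assumptions of this example, not details from the source.

```python
import numpy as np
from scipy.optimize import least_squares
from scipy.spatial.transform import Rotation

def refine_hand_eye(R_X0, T_X0, R_tool, t_tool, p_sensor, center_true):
    """Refine hand-eye parameters against a reference sphere center.

    R_X0, T_X0  : initial hand-eye rotation (3, 3) and translation (3,)
    R_tool      : (N, 3, 3) tool orientations in the base frame
    t_tool      : (N, 3)    tool positions in the base frame
    p_sensor    : (N, 3)    sphere centers measured in the sensor frame
    center_true : (3,)      sphere center fitted from touch-probe points
    """
    R_tool = np.asarray(R_tool, dtype=float)
    t_tool = np.asarray(t_tool, dtype=float)
    p_sensor = np.asarray(p_sensor, dtype=float)
    center_true = np.asarray(center_true, dtype=float)

    def residuals(params):
        # params = [rotation vector (3), translation (3)]
        R_X = Rotation.from_rotvec(params[:3]).as_matrix()
        in_tool = p_sensor @ R_X.T + params[3:]
        in_base = np.einsum('kij,kj->ki', R_tool, in_tool) + t_tool
        return (in_base - center_true).ravel()

    x0 = np.concatenate([Rotation.from_matrix(R_X0).as_rotvec(), T_X0])
    sol = least_squares(residuals, x0, method="lm")
    return Rotation.from_rotvec(sol.x[:3]).as_matrix(), sol.x[3:]
```

Optimizing a rotation vector rather than the nine matrix entries keeps the estimate a valid rotation at every iteration without adding orthogonality constraints.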

3. Experimental Verification and Results Analysis

3.1. Experimental Setup

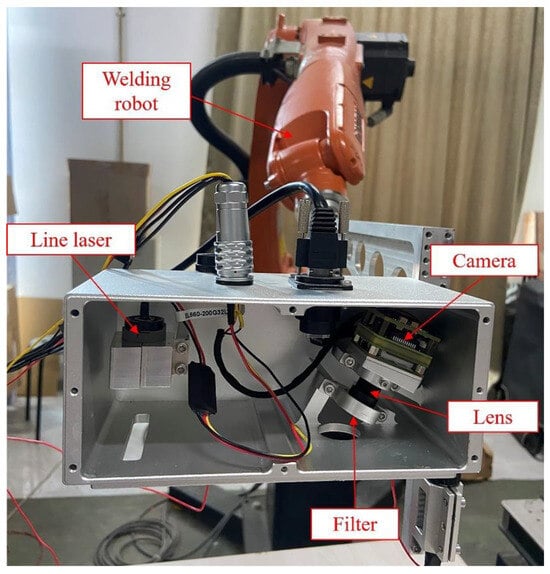

The line-structured light sensor and the robotic arm used in this system are shown in Figure 2. The camera is a board-level CMOS camera, model MV-CB016-10GM-C from Hikvision (Hangzhou, China), with a resolution of 1440 × 1080 and a pixel size of 3.45 μm × 3.45 μm. It is equipped with a fixed-focus lens with a focal length of 12 mm. Considering the ambient light conditions in the application environment, a laser emitter with a wavelength of 658 ± 5 nm was selected, and a narrow-band filter matching this wavelength range was installed in front of the lens. The robotic arm used is a KUKA KR5 R1400 (Augsburg, Bavaria, Germany), a six-axis industrial robot.

Figure 2.

Line-structured light sensor and robot system.

3.2. Hand–Eye Calibration Experiment

In the hand–eye calibration experiment, a calibration tool, hereinafter referred to as the “simulated welding torch”, was employed to replicate the operation of an actual welding torch. A standard stainless-steel ball, with a diameter of 40.00 mm ± 0.01 mm, was securely positioned within the workspace of the robotic arm’s vision guidance system. According to the calibration procedure detailed in Section 2.1, the robotic arm was first controlled to measure the standard ball 10 times using the line-structured light sensor, restricting movement to translation only. Subsequently, the robotic arm measured the standard ball another 10 times using the line-structured light sensor from arbitrary poses [17]. During these measurements, to prevent singularities in the calibration algorithm, variations along the X, Y, and Z axes of the base coordinate system were ensured when translating the robotic arm. When the robotic arm adopted arbitrary poses, all six joints exhibited variation. Finally, the hand–eye rotation matrix and translation vector were determined:

After completing the above steps, the robotic arm’s pose was adjusted to measure the positions of three points using the line-structured light sensor from ten different viewpoints. The deviation between the sensor’s measured positions and the actual positions was calculated. The average deviations were 4.3132 mm for point 1, 4.5731 mm for point 2, and 4.0331 mm for point 3, as shown in Table 1. These values are significantly higher than the requirement for industrial robot vision guidance systems for weld tracking, which is within 1.5 mm, indicating the need for further optimization.

Table 1.

Average deviation of feature points.

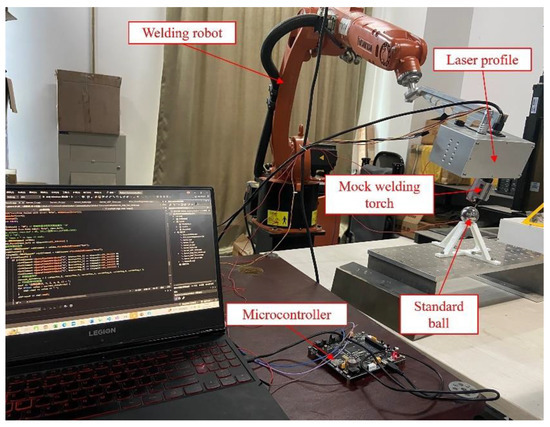

To address the insufficient accuracy, 60 sets of standard sphere surface points were randomly collected within the robot’s workspace. A microcontroller connected the robotic arm and the standard sphere to ensure accuracy. Contact between the robotic arm and the standard sphere served as a trigger signal to record the real-time position of the simulated welding torch tip at the end of the robotic arm, as shown in Figure 3. The collected surface points were used to fit the sphere equation, thereby identifying the accurate position of the sphere center.
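Fitting a sphere to the touched surface points can be sketched with a linear least-squares formulation. This is one common way to fit a sphere and is not necessarily the method used in the experiment; it also assumes that each recorded tip position lies on the sphere surface. The function name `fit_sphere` is hypothetical.

```python
import numpy as np

def fit_sphere(points):
    """Linear least-squares sphere fit to surface points of shape (N, 3).

    Uses |p - c|^2 = R^2  ->  |p|^2 = 2 c.p + (R^2 - |c|^2), which is
    linear in the center c and in the scalar d = R^2 - |c|^2.
    """
    P = np.asarray(points, dtype=float)
    mean = P.mean(axis=0)
    Q = P - mean                      # centering improves conditioning
    A = np.column_stack([2 * Q, np.ones(len(Q))])
    b = np.sum(Q**2, axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    center_local = sol[:3]
    radius = np.sqrt(sol[3] + center_local @ center_local)
    return center_local + mean, radius
```

Because the standard ball has a nominal diameter of 40.00 mm, comparing the fitted radius with 20 mm gives a quick plausibility check on the collected points.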

Figure 3.

Calibration error compensation system.

Following the hand–eye calibration compensation algorithm based on position constraints described in Section 2.2, the optimized hand–eye rotation matrix and translation vector were obtained. The positions of the three points were subsequently remeasured using the line-structured light sensor from ten consistent viewpoints during the calibration process. Table 1 presents the average errors of the feature points before and after error compensation. The results revealed that the average deviation was 0.8183 mm for point 1, 0.7069 mm for point 2, and 0.6371 mm for point 3. These deviations are well below 1.5 mm, meeting the requirements for weld seam tracking.

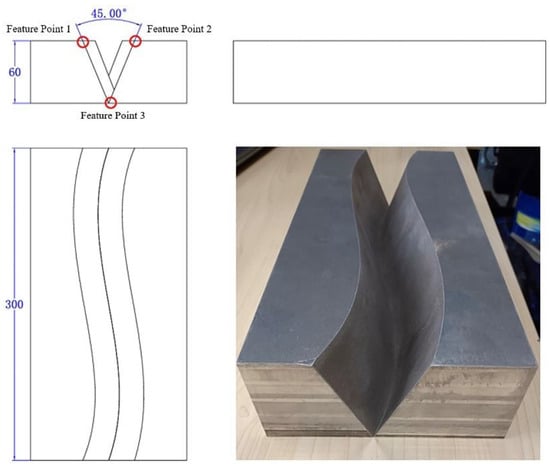

To verify the accuracy of the proposed algorithm for detecting weld seams and guiding welding in thick-plate applications, a V-groove welding piece with an S-shaped trajectory was designed and manufactured, as shown in Figure 4. This V-groove welding piece had a thickness of 60 mm, a length of 300 mm, and a groove angle of 45°.

Figure 4.

V-groove weld of S-shaped trajectory.

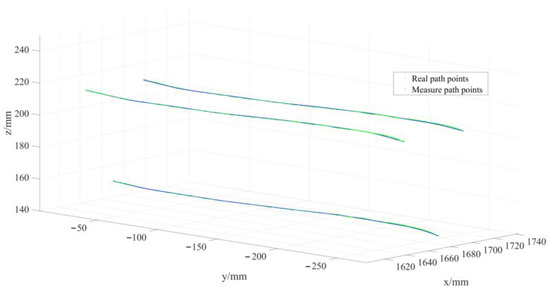

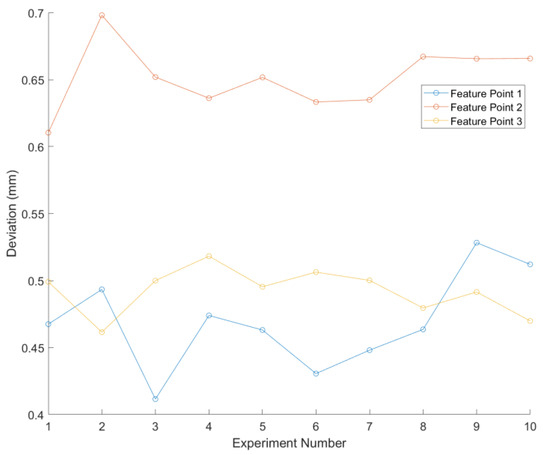

By comparing all V-groove weld seam feature points detected by the sensor during tracking with the actual weld seam trajectory, as illustrated in Figure 5, the average absolute trajectory error was determined. Figure 6 presents the deviations between the measured and actual values of the trajectories for the three feature points across ten measurement sessions. The average errors for the weld seam trajectory were 0.4567 mm for feature point 1, 0.6374 mm for feature point 2, and 0.4856 mm for feature point 3, as detailed in Table 2. These errors meet the application requirements for effective weld seam tracking.

Figure 5.

Comparison of weld detection trajectory and actual trajectory.

Figure 6.

Average deviation results of feature points.

Table 2.

Average deviation of weld seam feature points.

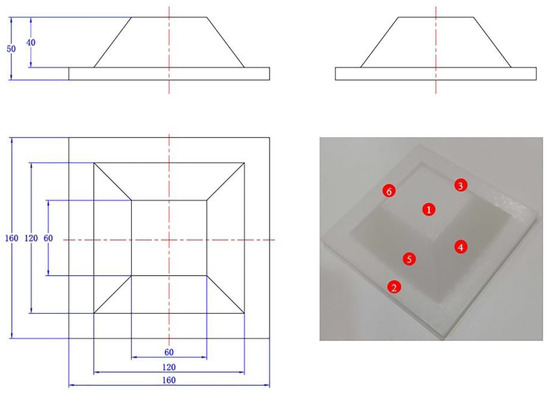

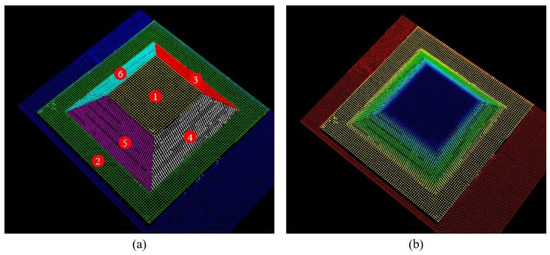

3.3. Three-Dimensional Reconstruction Accuracy Verification

To further validate the generalizability of the algorithm, a regular boss with a dimensional accuracy of 0.01 mm was designed as the evaluation object. The calibrated robot and the laser vision sensor were then employed to perform the 3D reconstruction. Different numerical labels were assigned to each plane of the boss, as depicted in Figure 7. The results of scanning and reconstructing the boss using the line-structured light sensor are shown in Figure 8a. The point cloud quality is high and meets the evaluation requirements. Additionally, the point cloud of the boss was segmented into planes, each assigned different colors to distinguish the planes corresponding to the numerical labels, as shown in Figure 8b.

Figure 7.

Boss for evaluating 3D reconstruction accuracy. The numbers 1 to 6 correspond to the six planes of the boss, respectively.

Figure 8.

3D reconstruction results of the boss: (a) boss point cloud; (b) point cloud segmentation. The numbers 1 to 6 correspond to the six planes of the boss, respectively.

To quantitatively evaluate the reconstruction accuracy, both length and angle measurements were considered. The four slanted edges of the boss were used as the length evaluation standard. According to the design dimensions in Figure 7, the theoretical length of each of the four slanted edges is 50 mm. The dihedral angles between plane 2 and the four slanted surfaces were used as the angle evaluation standard, with a theoretical value of 53.13° for each of the four angles. After segmenting the reconstructed point cloud of the boss into planes and performing geometric calculations, the deviations between the point cloud data and the theoretical data were obtained, as shown in Table 3. The average length deviation was 0.5071 mm, and the average angle deviation was 0.2145°, both of which meet the application requirements for weld seam tracking.
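The angle evaluation can be sketched by fitting a plane to each segmented point set and measuring the angle between the plane normals. The source does not describe how the planes were fitted, so the SVD-based fit and the function names below are assumptions made for illustration. The function returns the acute angle between the two planes.

```python
import numpy as np

def plane_normal(points):
    """Unit normal of the least-squares plane through points of shape (N, 3)."""
    P = np.asarray(points, dtype=float)
    # The right singular vector with the smallest singular value is the
    # direction of least variance, i.e. the plane normal.
    _, _, Vt = np.linalg.svd(P - P.mean(axis=0), full_matrices=False)
    return Vt[-1]

def angle_between_planes_deg(points_a, points_b):
    """Acute angle in degrees between the planes fitted to two point sets."""
    cos_angle = abs(plane_normal(points_a) @ plane_normal(points_b))
    return np.degrees(np.arccos(np.clip(cos_angle, 0.0, 1.0)))
```

As a synthetic check chosen only for illustration, a flat plane and a plane with slope 4/3 enclose an angle of arctan(4/3), about 53.13°, which matches the theoretical value quoted for the boss.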

Table 3.

Deviations between point cloud data and theoretical data.

4. Conclusions

A comprehensive and effective calibration scheme was proposed for a line-structured light sensor based on a constant-focus optical path. By introducing a two-dimensional tilt angle, a more suitable inclined camera imaging model for the line-structured light sensor was established. The detailed process of obtaining initial values and performing nonlinear optimization of the model parameters was thoroughly described. Using a dual-step target as a marker, numerous non-collinear points on the light plane were quickly and conveniently obtained, and the light plane equation in the ideal camera coordinate system was accurately fitted. An integrated automatic calibration device was designed to efficiently complete the calibration experiments of the vision sensor while adhering to imaging constraints.

The experimental results demonstrate that the average distance error of the hand–eye calibration after error compensation decreased from 4.5731 mm to 0.7069 mm. This indicates that the proposed calibration method ensures high detection accuracy and repeatability for the line-structured light sensor. Additionally, the effectiveness of the calibration method in reducing the impact of hand–eye calibration errors for industrial robots was validated.

Author Contributions

J.L.: Conceptualization, Methodology, Formal analysis, Software, Writing—Review and Editing, Supervision, Project administration. W.R.: Methodology, Investigation, Writing—original draft. Y.F.: Conceptualization, Investigation, Validation. J.F.: Resources. J.Z.: Resources, Supervision, Funding acquisition, Project administration. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Tsinghua University (Department of Mechanical Engineering), Shenzhen Chuangxin Laser Co., Ltd. Laser Advanced Manufacturing Joint Research Center Fund (20232910019).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Pietraszkiewicz, W.; Konopińska, V. Junctions in shell structures: A review. Thin-Walled Struct. 2015, 95, 310–334.

2. Wu, Q.; Li, Z.; Gao, C.; Biao, W.; Shen, G. Research on welding guidance system of intelligent perception for steel weldment. IEEE Sens. J. 2023, 23, 5220–5231.

3. Wang, X.; Zhou, X.; Xia, Z.; Gu, X. A survey of welding robot intelligent path optimization. J. Manuf. Process. 2021, 63, 14–23.

4. Guan, T. Research on the application of robot welding technology in modern architecture. Int. J. Syst. Assur. Eng. Manag. 2023, 14, 681–690.

5. Tran, C.C.; Lin, C.Y. An intelligent path planning of welding robot based on multisensor interaction. IEEE Sens. J. 2023, 23, 8591–8604.

6. Li, M.; Du, Z.; Ma, X.; Dong, W.; Gao, Y. A robot hand-eye calibration method of line laser sensor based on 3D reconstruction. Robot. Comput.-Integr. Manuf. 2021, 71, 102136.

7. Zhang, L.; Zhang, J.; Jiang, X.; Liang, B. Error correctable hand–eye Calibration for stripe-laser vision-guided robotics. IEEE Trans. Instrum. Meas. 2020, 69, 10.

8. Wu, Q.; Qiu, J.; Li, Z.; Liu, J.; Wang, B. Hand-Eye Calibration Method of Line Structured Light Vision Sensor Robot Based on Planar Target. Laser Optoelectron. Prog. 2023, 60, 1015002.

9. Pavlovčič, U.; Arko, P.; Jezeršek, M. Simultaneous Hand–Eye and Intrinsic Calibration of a Laser Profilometer Mounted on a Robot Arm. Sensors 2021, 21, 1037.

10. Xiao, R.; Xu, Y.; Hou, Z.; Chen, C.; Chen, S. An automatic calibration algorithm for laser vision sensor in robotic autonomous welding system. J. Intell. Manuf. 2021, 33, 1419–1432.

11. Xu, J.; Li, Q.; White, B. A novel hand-eye calibration method for industrial robot and line laser vision sensor. Sens. Rev. 2023, 43, 259–265.

12. Zhong, K.; Lin, J.; Gong, T.; Zhang, X.; Wang, N. Hand-eye calibration method for a line structured light robot vision system based on a single planar constraint. Robot. Comput.-Integr. Manuf. 2025, 91, 102825.

13. Xu, J.; Hoo, J.L.; Dritsas, S.; Fernandez, J.G. Hand-eye calibration for 2D laser profile scanners using straight edges of common objects. Robot. Comput.-Integr. Manuf. 2022, 73, 102221.

14. Shiu, Y.C.; Ahmad, S. Calibration of wrist-mounted robotic sensors by solving homogeneous transform equations of the form AX=XB. IEEE Trans. Robot. Autom. 1989, 5, 16–29.

15. Jia, H.; Zhu, J.; Yi, W. Calibration for 3d profile measurement robot with laser line-scan sensor. Chin. J. Sens. Actuators 2012, 25, 62–66.

16. Yang, S.; Yin, S.; Ren, Y.; Zhu, J.; Ye, S. Improvement of calibration method for robotic flexible visual measurement systems. Opt. Precis. Eng. 2014, 22, 3239–3246.

17. An, Y.; Wang, X.; Zhu, X.; Jiang, S.; Ma, X.; Cui, J.; Qu, Z. Application of combinatorial optimization algorithm in industrial robot hand eye calibration. Measurement 2022, 202, 111815.

18. Cao, D.; Liu, W.; Liu, S.; Chen, J.; Liu, W.; Ge, J.; Deng, Z. Simultaneous calibration of hand-eye and kinematics for industrial robot using line-structured light sensor. Measurement 2023, 221, 113508.

19. Yang, J.; Li, H.; Campbell, D.; Jia, Y. Go-ICP: A globally optimal solution to 3D ICP point-set registration. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 2241–2254.

20. Liu, Z.; Li, X.; Li, F.; Zhang, G. Calibration method for line-structured light vision sensor based on a single ball target. Opt. Laser Eng. 2015, 69, 20–28.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).