1. Introduction

Temperature measurement is central to thermal analysis and condition monitoring in industries such as electronics, pipelines, and aerospace [1,2,3]. By monitoring temperature and related information within thermal fields, safety incidents can be effectively mitigated, thereby improving the safety and reliability of industrial equipment. Furthermore, analyzing temperature data enables a deeper understanding of thermal characteristics, an assessment of heat dissipation under varying operating conditions, and the prediction of potential thermal issues, which helps ensure stable and reliable operation throughout the equipment’s service life. Additionally, temperature measurement helps engineers optimize the selection of heat dissipation structures and materials, enhancing efficiency and performance while reducing energy consumption and costs.

In recent studies, thermal field measurement methods are primarily classified into invasive and non-invasive measurements [4]. Invasive measurements mainly rely on thermocouple technology, while non-invasive measurements primarily use spectroscopic techniques. In recent years, advances in sensor array technology have made it possible to obtain low-resolution (LR) temperature field data at relatively low cost. Nonetheless, electrical signal interference among adjacent sensors limits the acquisition of accurate, high-resolution (HR) thermal field information. Spectral intensity and environmental factors also make it difficult to obtain high-resolution data at low cost with spectroscopic techniques [5,6]. Therefore, there is an urgent need for effective, low-cost measurement approaches that deliver high-resolution temperature data across various thermal fields.

To address this challenge, some researchers have proposed combining LR data with deep learning techniques to develop super-resolution methods. These methods use machine learning models to learn physical field distributions and capture physical characteristics. Deep learning has been widely recognized for its powerful learning and computational capabilities, enabling many innovative applications across diverse fields [7]. Super-resolution (SR) is a technique for enhancing image resolution. Deep learning-based SR methods were first introduced by Dong et al. [8], who proposed the super-resolution convolutional neural network (SRCNN), which achieves significantly better SR reconstruction than traditional methods such as bicubic interpolation [9]. As one of the earliest deep learning approaches to super-resolution, SRCNN has been extensively validated in fields such as medicine [10], fluid mechanics, and beyond. Related research in thermodynamics [11] has also grown year by year, and deep learning-based SR has become a reliable choice for measuring and studying thermal phenomena. SRCNN can reconstruct fine details and complex structures in temperature fields, which is essential for accurate analysis in thermodynamic applications. This capability arises from the ability of deep learning models to capture complex patterns that traditional methods often overlook.
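For reference, the three-layer SRCNN of Dong et al. [8] can be summarized as follows. The notation follows the original SRCNN formulation and describes that baseline network, not the model proposed in this paper:

$$
\begin{aligned}
F_1(\mathbf{Y}) &= \max\left(0,\; W_1 * \mathbf{Y} + B_1\right) && \text{(patch extraction)}\\
F_2(\mathbf{Y}) &= \max\left(0,\; W_2 * F_1(\mathbf{Y}) + B_2\right) && \text{(non-linear mapping)}\\
F(\mathbf{Y}) &= W_3 * F_2(\mathbf{Y}) + B_3 && \text{(reconstruction)}
\end{aligned}
$$

Here $\mathbf{Y}$ is the LR image after bicubic upscaling to the target size, $*$ denotes convolution, and $W_i$, $B_i$ are learned filters and biases; the network is trained by minimizing the mean squared error between $F(\mathbf{Y})$ and the HR image. In its basic configuration, SRCNN uses 9 × 9, 1 × 1, and 5 × 5 filters, with 64 and 32 feature maps in the first two layers.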

Building on SRCNN, researchers have proposed increasingly complex models for diverse applications. Kong et al. [12] explored deep learning-based approaches in heat flow fields, proposing a multiple-path super-resolution convolutional neural network (MPSRC) for temperature measurement in combustion chambers. Heuristically, we observe that flow fields and temperature fields exhibit similar structural and spatial dependencies in the SR task, so in this paper we draw on mainstream studies of flow field super-resolution to inform our approach. Fukami et al. [13] applied SR techniques to fluid dynamics, proposing a hybrid downsampled skip-connection/multi-scale (DSC/MS) model for reconstructing two-dimensional decaying isotropic turbulence. Deng et al. [14] introduced two generative adversarial network (GAN)-based models, the super-resolution generative adversarial network (SRGAN) and the enhanced super-resolution generative adversarial network (ESRGAN), for boosting the spatial resolution of complex wake flows behind cylinders.

As SR algorithms have advanced, approaches relying solely on SRCNN have come to be regarded as limited in interpretability. To address this, some researchers have incorporated physics-informed neural networks (PINNs) [15,16,17] and developed methods that integrate physical information during training, enhancing both interpretability and accuracy [18,19]. Rajat et al. [20] introduced specific physical losses during training to enhance discontinuity detection in applications such as Burgers’ equation, methane combustion, and fouling in industrial heat exchangers. Building on the widely used U-Net architecture for image segmentation, they developed PI-UNet, a deep learning model tailored to these tasks. Our research likewise uses three different scenarios to validate the effectiveness of the proposed model, and PI-UNet, which has demonstrated strong performance across various scenarios, serves as the benchmark for comparison.

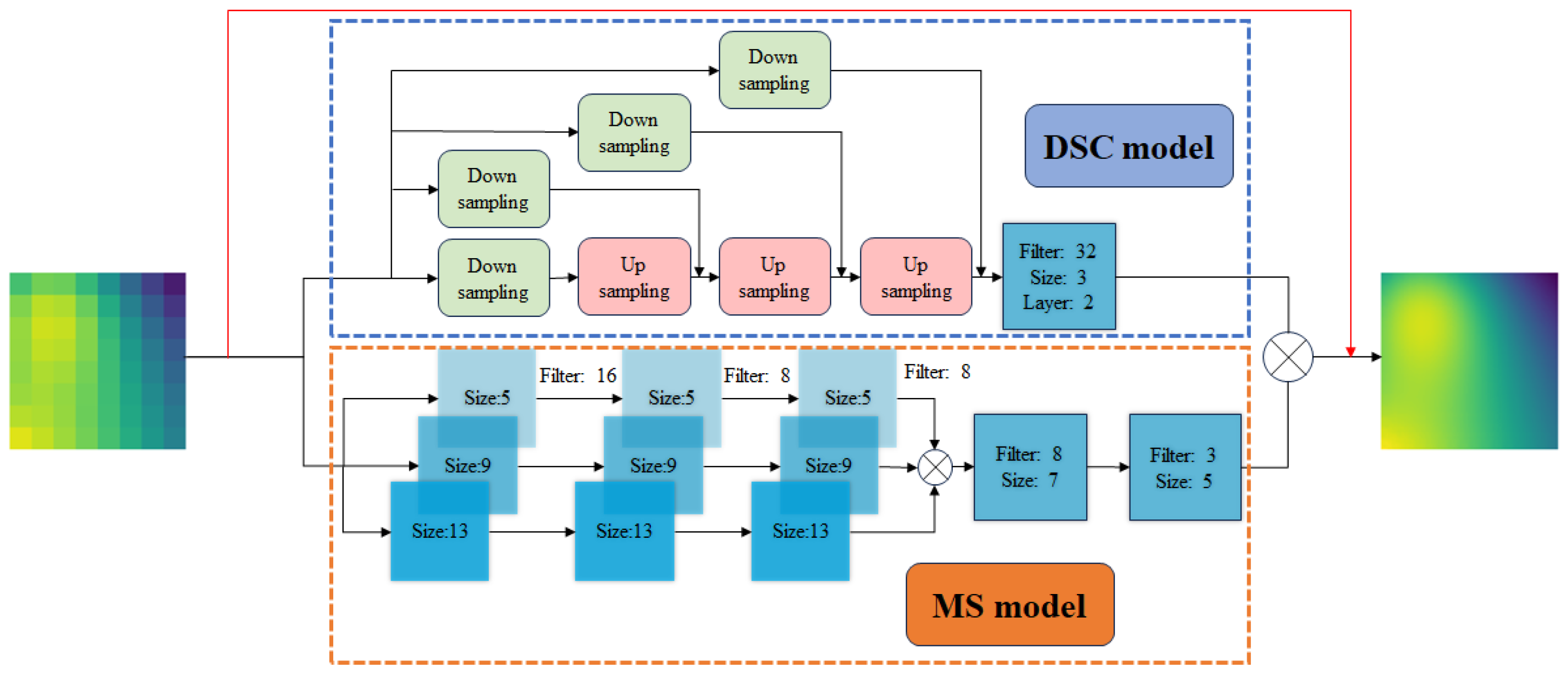

Drawing on existing studies and incorporating physical information, we propose a modified model based on the DSC/MS framework mentioned above, further optimized for temperature field applications. The key rationale for choosing DSC/MS lies in its dual-path architecture, which allows it to learn the characteristics of the temperature field independently at different scales. This design facilitates the precise capture of both the smooth gradients and the sparse abrupt changes within the temperature field, enhancing the model’s expressiveness and overall applicability. Inspired by PI-UNet, we introduce a simplified loss function that integrates a physical information loss, further improving the model’s accuracy. Additionally, the simplified design lowers the overhead that the physical information loss incurs through automatic differentiation during backpropagation and the preservation of differential matrices, resulting in reduced computational cost. We conduct simulations across three distinct two-dimensional thermal scenarios to evaluate the effectiveness of our approach: laser heating dynamics within silicon chips, the thermodynamic interaction of merging hot and cold water, and convective heat transfer during the cooling of metal sheets exposed to airflow. The main contributions of this research are as follows:

Propose a modified DSC/MS model that effectively addresses the SR task in temperature fields.

Introduce a simplified physical loss, which incorporates physical information to guide training while reducing the computational cost of the full physical information loss.

The remainder of this paper is organized as follows: Section 2 introduces the datasets used in this study. Section 3 describes the architecture of the super-resolution model and the specific physical loss function. In Section 4, we present the SR reconstruction performance of the proposed model in three scenarios and highlight the positive effect of physical information on training. Finally, Section 5 provides a summary.

2. Dataset

The primary objective of this study is to design a general supervised model that effectively captures the nonlinear relationship between LR and HR data in a heat flow field, where the LR data are defined on a coarser grid than the HR data. We apply this model to the following three thermal fields:
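Written as a supervised regression problem, and using symbols introduced here only for illustration ($\mathbf{T}_{LR}^{(m)}$ and $\mathbf{T}_{HR}^{(m)}$ for the $m$-th of $M$ paired LR/HR snapshots, $\mathcal{F}$ for the SR model with trainable weights $\mathbf{w}$), the training goal can be sketched as

$$
\mathbf{w}^{*} = \arg\min_{\mathbf{w}} \; \frac{1}{M}\sum_{m=1}^{M} \left\lVert \mathbf{T}_{HR}^{(m)} - \mathcal{F}\left(\mathbf{T}_{LR}^{(m)};\mathbf{w}\right) \right\rVert_2^2 .
$$

The squared-error data term is a common choice and is assumed here for illustration; the loss actually used in this work, including its physical term, is defined in Section 3.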

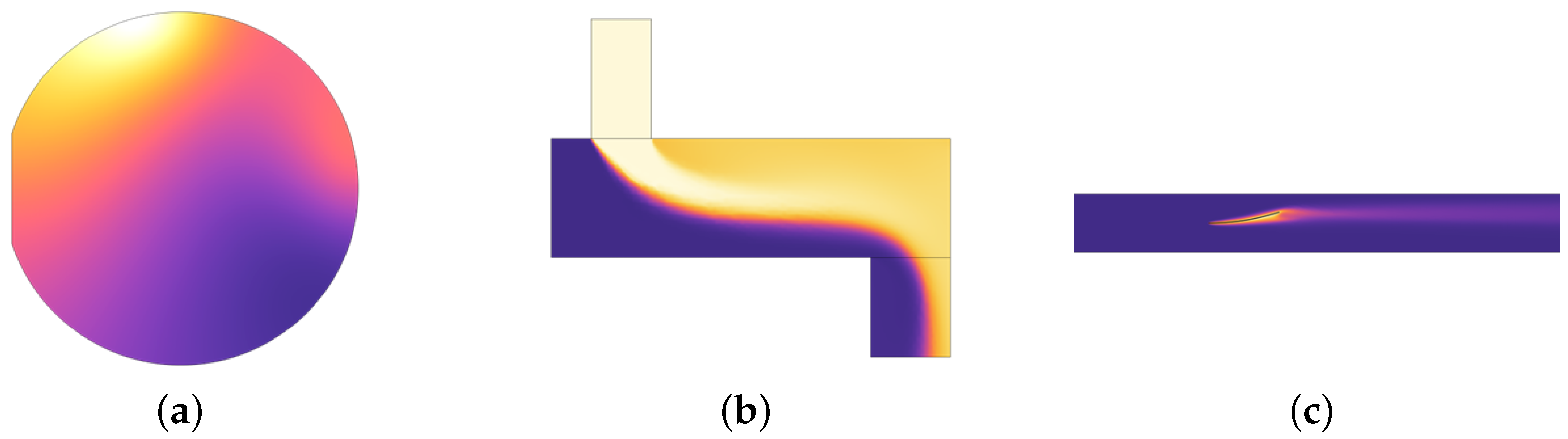

Laser Heating: A silicon wafer 2 inches in diameter, with a notch on its left side, is fixed on a thermally insulated workbench inside a chamber. The workbench rotates at 10 revolutions per minute. The wafer is heated by two laser beams, each with a radius of 0.01 m and a power of 10 W, which move along the x and y axes of the wafer. The heating process lasts one minute. The environment is assumed to be well insulated, and the chamber walls are held at a constant 20 °C. Under these conditions, the only heat loss is thermal radiation from the top surface of the wafer to the chamber walls.
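Because radiation from the top surface is assumed to be the only loss mechanism, the local heat flux leaving the wafer follows the Stefan-Boltzmann law for a gray surface facing walls at ambient temperature, $q = \varepsilon\sigma(T^4 - T_{\mathrm{wall}}^4)$. The sketch below evaluates this flux; the emissivity is a hypothetical parameter (the source does not state it), and the default of 1.0 gives the blackbody upper bound.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W/(m^2 K^4)


def celsius_to_kelvin(t_c: float) -> float:
    return t_c + 273.15


def radiative_flux(t_surface_c: float, t_wall_c: float = 20.0,
                   emissivity: float = 1.0) -> float:
    """Net radiative heat flux (W/m^2) from a gray surface to surrounding walls.

    `emissivity` is a hypothetical value; 1.0 is the blackbody upper bound.
    Temperatures are given in degrees Celsius and converted to kelvin,
    because the Stefan-Boltzmann law requires absolute temperature.
    """
    if not 0.0 < emissivity <= 1.0:
        raise ValueError("emissivity must lie in (0, 1]")
    t_s = celsius_to_kelvin(t_surface_c)
    t_w = celsius_to_kelvin(t_wall_c)
    return emissivity * STEFAN_BOLTZMANN * (t_s ** 4 - t_w ** 4)


if __name__ == "__main__":
    for t in (20.0, 100.0, 300.0):
        print(f"{t:6.1f} C -> {radiative_flux(t):9.1f} W/m^2 (blackbody bound)")
```

The flux scales linearly with the emissivity, while the quartic dependence on temperature means that hot spots under the laser paths dominate the radiative loss.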

Water Mixing: A 0.03 m × 0.1 m container is filled with cold water at ambient temperature, and hot water at 90 °C is injected through an upper channel with a diameter of 30 mm at a velocity of 0.2 m/s. The mixed water flows out from the bottom, forming a temperature field. The effect of gravity-driven (natural) convection on the flow is neglected in the simulation.

Heat Dissipating: A bimetallic strip, fixed on the left side inside an air duct, is heated by a constant 50 W heat source, which induces bending. Airflow is introduced to cool the metal plates. The strip consists of two plates, each measuring 0.01 m × 0.05 m; the lower plate is made of copper and the upper plate of iron. Air flows through the channel from left to right at 0.2 m/s.

These three simulation cases, referred to as laser heating [21], water mixing [22], and heat dissipating [23], are taken from the official COMSOL Multiphysics case library. The simulation difficulty increases from the first scenario to the third, as the number of partial differential equations to be considered grows. We simulate these thermal fields using COMSOL Multiphysics 6.2 and obtain HR data at a series of time steps. Schematic diagrams of the simulation scenarios are shown in Figure 1, where lighter shades indicate regions of lower temperature and warmer tones represent areas of higher temperature.
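For orientation, the heat transfer shared by the three cases can be written in the standard form of the energy equation. This is general background, not a statement of the exact COMSOL model settings:

$$
\rho c_p\left(\frac{\partial T}{\partial t} + \mathbf{u}\cdot\nabla T\right) = \nabla\cdot\left(k\nabla T\right) + Q ,
$$

where $\rho$ is the density, $c_p$ the specific heat capacity, $k$ the thermal conductivity, $\mathbf{u}$ the velocity field, and $Q$ a volumetric heat source. In the laser heating case, the equation describes heat transfer in the rotating wafer, with the lasers as heat input and radiation as a boundary loss; the water mixing case additionally requires the flow equations to supply $\mathbf{u}$; and the heat dissipating case further couples airflow and heat transfer with the thermally induced bending of the strip, which is consistent with the growing number of PDEs noted above.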

The simulation data can be regarded as accurate high-resolution data, which are post-processed into temperature field images of size $N_x \times N_y$. To align with the sampling principles of spectral and sensor array technologies for low-resolution data, we apply average pooling to the high-resolution images to obtain LR data. Average pooling compresses input feature maps by reducing their dimensions. To transform a high-resolution image of size $N_x \times N_y$ into a low-resolution grid of size $n_x \times n_y$, with pooling factor $r = N_x/n_x = N_y/n_y$, we represent the average pooling operation as

$$
T^{LR}_{i,j} = \frac{1}{r^{2}} \sum_{(k,l)\in\Omega_{i,j}} T^{HR}_{k,l}, \qquad \Omega_{i,j} = \left\{(k,l) : (i-1)r < k \le ir,\ (j-1)r < l \le jr\right\},
$$

where $T^{LR}_{i,j}$ denotes the values in the coarse mesh, $T^{HR}_{k,l}$ denotes the values in the fine mesh, and $\Omega_{i,j}$ denotes the range over which the HR values are averaged to obtain the corresponding $T^{LR}_{i,j}$. Since physical measurement sensors typically report LR data as the average temperature within a region, applying average pooling to process the data is reasonable. Additionally, Fukami et al. [13] found in their work on super-resolution for fluid dynamics that average pooling yields better super-resolution predictions than max pooling.
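The average pooling described in this paragraph can be sketched in dependency-free Python. Square r × r windows and grid dimensions divisible by r are assumptions made for illustration; in PyTorch, `torch.nn.functional.avg_pool2d` with `kernel_size=r` (whose stride defaults to the kernel size) performs the same non-overlapping block averaging.

```python
from typing import List

Grid = List[List[float]]


def average_pool(hr: Grid, r: int) -> Grid:
    """Downsample an HR grid by averaging non-overlapping r x r blocks.

    Each coarse value is the mean of the fine values in its block, mimicking
    a sensor that reports the average temperature over its footprint.
    Assumes both grid dimensions are divisible by the pooling factor r.
    """
    rows, cols = len(hr), len(hr[0])
    if rows % r or cols % r:
        raise ValueError("grid dimensions must be divisible by r")
    lr: Grid = []
    for i in range(rows // r):
        row = []
        for j in range(cols // r):
            block = [hr[k][l]
                     for k in range(i * r, (i + 1) * r)
                     for l in range(j * r, (j + 1) * r)]
            row.append(sum(block) / (r * r))
        lr.append(row)
    return lr


if __name__ == "__main__":
    hr = [[float(4 * k + l) for l in range(4)] for k in range(4)]
    print(average_pool(hr, 2))
```

Because every fine value contributes to exactly one coarse cell with equal weight, the spatial mean of the field is preserved, whereas max pooling would bias the LR data toward hot spots.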

We collected 2100 samples at regular intervals from the beginning of each simulation. The first 2000 samples form the training set and the remaining 100 samples form the test set. The specific simulation and sampling parameters are listed in Table 1.

4. Results and Discussion

In this section, we present and discuss the experimental results, evaluating the performance of the proposed method on multiple datasets. Section 4.1 introduces the training environment and parameter settings. In Section 4.2, two widely used interpolation techniques, bilinear interpolation (BI) and bicubic interpolation (BC), serve as baseline methods, and we compare our modified DSC/MS model with the original DSC/MS and PI-UNet [20] models, both reproduced for a fair comparison. In Section 4.3, we show how the proposed simplified physical loss improves the predictions; we refer to our improved models as modified DSC/MS and modified PI-DSC/MS, where “PI” indicates that the model incorporates physical information in the loss function. Finally, in Section 4.4, we examine the effect of sample size on training effectiveness.

4.1. Model Training

Model training is conducted on a server equipped with an RTX 4090 GPU (NVIDIA Corporation, Santa Clara, CA, USA), 32 GB of memory, and 8 CPU cores, using the open-source library PyTorch 2.1.2. For all three simulations, we fix the number of training iterations at 2000 and the sample size at 2000. We use the Adam optimizer with an initial learning rate of 0.001 and a batch size of 100. To prevent overfitting, L2 regularization (weight decay) is applied with a coefficient of 0.00001, and gradient clipping is used to prevent exploding gradients. The model with the lowest test loss during training is saved as the final model.
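To make these optimizer settings concrete, the sketch below performs Adam updates on a plain list of parameters with a coupled L2 penalty: the weight-decay term is added to the gradient, which is how PyTorch's `torch.optim.Adam` applies its `weight_decay` argument. Gradients are clipped by their global norm first, analogous to calling `torch.nn.utils.clip_grad_norm_` before `optimizer.step()`. Norm-based clipping and the threshold `max_norm=1.0` are hypothetical choices, since the text states neither the clipping type nor the threshold.

```python
import math
from typing import List, Tuple


def clip_by_global_norm(grads: List[float], max_norm: float) -> List[float]:
    """Rescale gradients so that their joint L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


class Adam:
    """Minimal Adam with coupled L2 weight decay (decay added to the gradient)."""

    def __init__(self, n_params: int, lr: float = 1e-3, weight_decay: float = 1e-5,
                 betas: Tuple[float, float] = (0.9, 0.999), eps: float = 1e-8):
        self.lr, self.wd, self.eps = lr, weight_decay, eps
        self.b1, self.b2 = betas
        self.m = [0.0] * n_params  # first-moment (mean) estimates
        self.v = [0.0] * n_params  # second-moment (uncentered variance) estimates
        self.t = 0                 # step counter used for bias correction

    def step(self, params: List[float], grads: List[float]) -> List[float]:
        self.t += 1
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            g = g + self.wd * p  # L2 regularization term
            self.m[i] = self.b1 * self.m[i] + (1 - self.b1) * g
            self.v[i] = self.b2 * self.v[i] + (1 - self.b2) * g * g
            m_hat = self.m[i] / (1 - self.b1 ** self.t)
            v_hat = self.v[i] / (1 - self.b2 ** self.t)
            new_params.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return new_params


if __name__ == "__main__":
    params = [0.5, -0.2]
    raw_grads = [3.0, 4.0]                                # global norm 5.0
    grads = clip_by_global_norm(raw_grads, max_norm=1.0)  # hypothetical threshold
    opt = Adam(len(params))
    print(opt.step(params, grads))
```

On the first step the bias-corrected ratio `m_hat / sqrt(v_hat)` has magnitude close to 1, so each parameter moves by roughly the learning rate (0.001) against the sign of its gradient, regardless of the gradient's scale.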

4.2. Super-Resolution Results

To evaluate model performance, we selected four metrics: mean absolute percentage error (MAPE), L2 Error, peak signal-to-noise ratio (PSNR), and structural similarity index measure (SSIM). They are calculated as follows:

$$
\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{y_i-\hat{y}_i}{y_i}\right|
$$

$$
\text{L2 Error} = \frac{\lVert y-\hat{y}\rVert_2}{\lVert y\rVert_2} = \frac{\sqrt{\sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^2}}{\sqrt{\sum_{i=1}^{n}y_i^2}}
$$

$$
\mathrm{PSNR} = 10\log_{10}\frac{\mathrm{MAX}^2}{\mathrm{MSE}}, \qquad \mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^2
$$

$$
\mathrm{SSIM}(y,\hat{y}) = \frac{\left(2\mu_y\mu_{\hat{y}}+C_1\right)\left(2\sigma_{y\hat{y}}+C_2\right)}{\left(\mu_y^2+\mu_{\hat{y}}^2+C_1\right)\left(\sigma_y^2+\sigma_{\hat{y}}^2+C_2\right)}
$$

In the above formulas, $n$ denotes the total number of pixels, $y$ represents the ground truth, and $\hat{y}$ denotes the predicted value. MAX refers to the maximum predicted value and MSE stands for the mean squared error. $\mu$ represents the local mean, $\sigma^2$ denotes the local variance, and $\sigma_{y\hat{y}}$ represents the local covariance. These local statistics are obtained by convolving a sliding window over the image, with a window kernel size of 11. $C_1$ and $C_2$ are small constants introduced to stabilize the computation and avoid division by zero. MAPE measures the average relative pixel-level difference between two images, but it weights all deviations linearly and therefore does not emphasize large local errors. The L2 Error indicates how close a predicted image is to the original; it is sensitive to noise and may not match human perception of image quality. PSNR represents the ratio of the peak signal power to the noise power in the reconstructed image and thus evaluates reconstruction quality; larger PSNR values indicate less distortion. Generally, a PSNR above 40 dB indicates image quality nearly indistinguishable from the original, a range between 30 and 40 dB indicates distortion within an acceptable range, and a value below 30 dB is considered poor. In the following experiments, we consider an SR method ineffective when its PSNR is below 20 dB. SSIM is a more perceptually meaningful metric for assessing image quality, as it focuses on structural information rather than pixel-level differences alone, and therefore better reflects human visual perception.
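The four metrics can be sketched in plain Python on flattened images (lists of pixel values). Three details are assumptions rather than statements from the text: the L2 Error is taken in its relative form, SSIM is computed over a single global window instead of averaging over 11 × 11 sliding windows, and the constants C1 = 1e-4 and C2 = 9e-4 follow the common choice for data scaled to [0, 1].

```python
import math
from typing import Sequence


def mape(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """Mean absolute percentage error in percent (assumes no zeros in y)."""
    return 100.0 * sum(abs((a - b) / a) for a, b in zip(y, y_hat)) / len(y)


def relative_l2(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """||y - y_hat||_2 / ||y||_2 (relative form assumed)."""
    num = math.sqrt(sum((a - b) ** 2 for a, b in zip(y, y_hat)))
    return num / math.sqrt(sum(a * a for a in y))


def psnr(y: Sequence[float], y_hat: Sequence[float]) -> float:
    """PSNR in dB, with MAX taken as the maximum predicted value as in the text."""
    mse = sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y)
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(max(y_hat) ** 2 / mse)


def ssim_global(x: Sequence[float], y: Sequence[float],
                c1: float = 1e-4, c2: float = 9e-4) -> float:
    """SSIM over one window covering the whole image (simplified, not windowed)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return ((2 * mx * my + c1) * (2 * cxy + c2)) / \
           ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


if __name__ == "__main__":
    truth, pred = [1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0]
    print(mape(truth, pred), relative_l2(truth, pred),
          psnr(truth, pred), ssim_global(truth, pred))
```

In this small example, a single pixel off by 1 yields a MAPE of 6.25% and a PSNR of exactly 20 dB (MAX = 5, MSE = 0.25), which illustrates how strongly the PSNR threshold depends on the chosen MAX.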

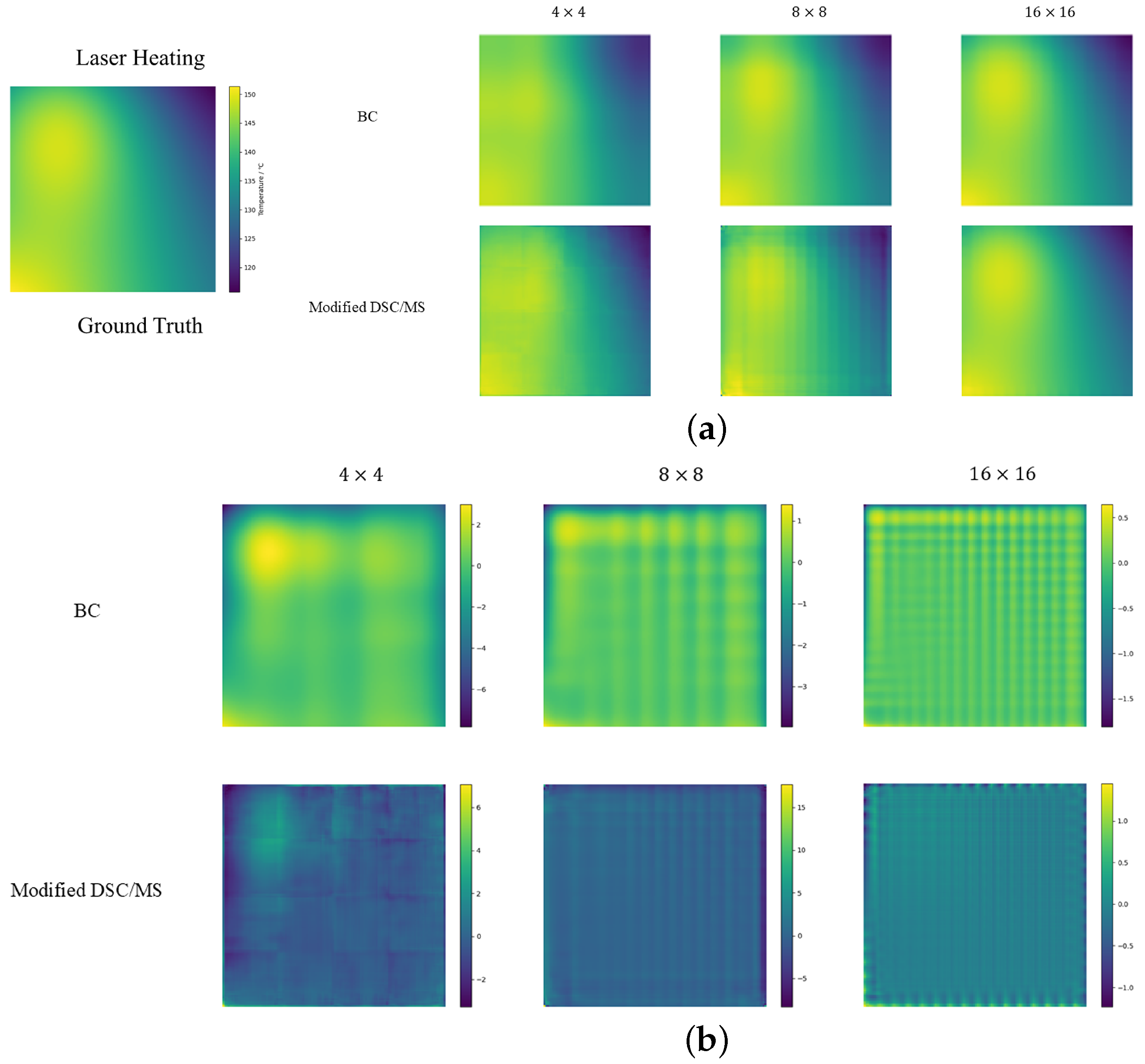

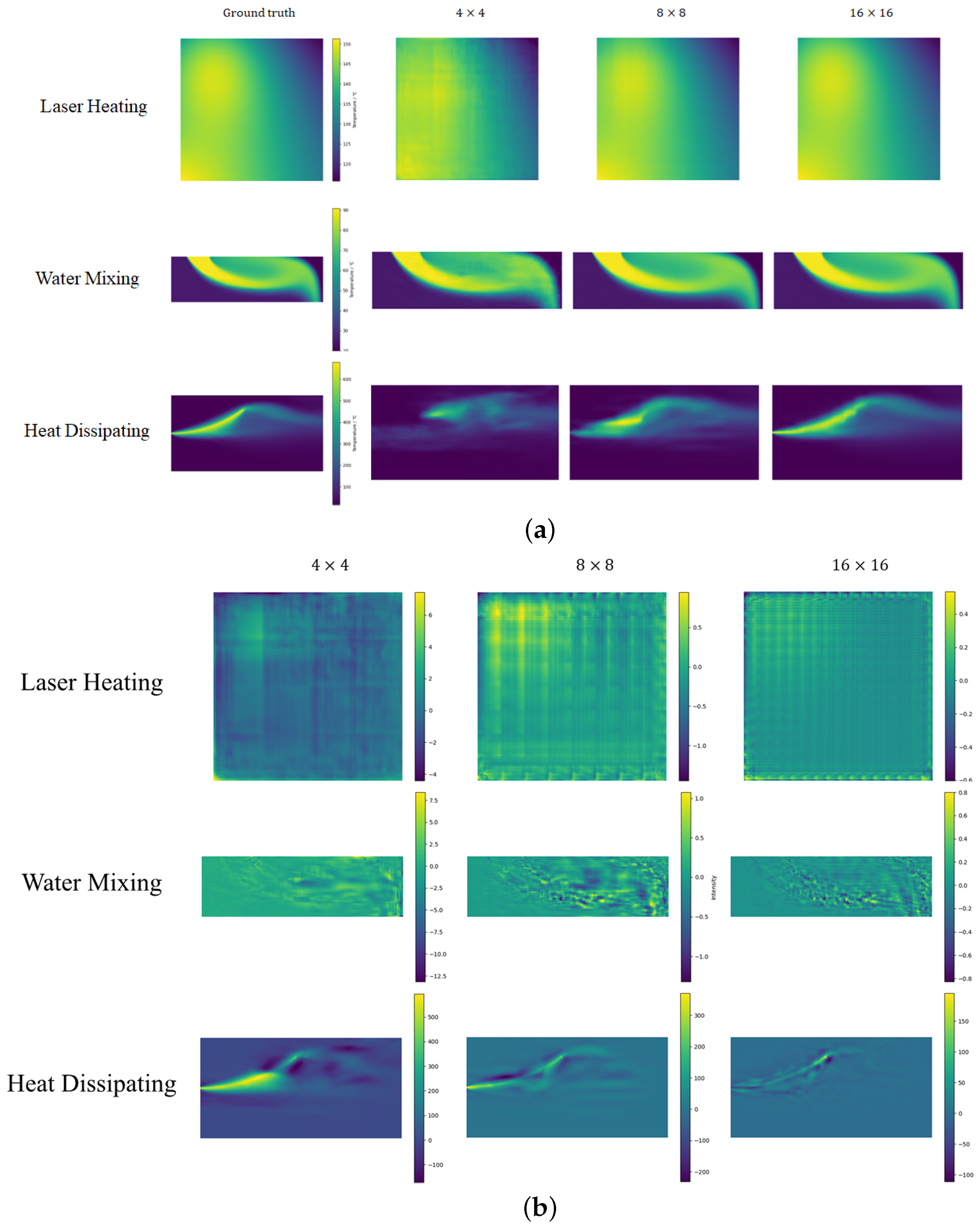

4.2.1. Laser Heating

Table 2 shows that the modified DSC/MS algorithm significantly outperforms the other methods in terms of MAPE and L2 Error. Although our model is accurate, its PSNR is lower than that of the interpolation methods. Super-resolution models typically produce finer details than interpolation methods, but these details may contain high-frequency noise or artifacts; such fluctuations have little impact on the error metrics but can lower the PSNR, which is sensitive to high-frequency noise and small fluctuations. Interpolation methods such as bilinear and bicubic interpolation typically produce smoother images with higher PSNR because they introduce less noise and distribute errors more uniformly. However, this smoothing can sacrifice image detail, resulting in poor accuracy metrics such as MAPE and L2 Error. Even though our method trails the interpolation methods in PSNR, its PSNR remains above 30 dB, which corresponds to acceptable distortion. For SSIM, the modified DSC/MS model performs slightly worse than the BC and BI methods, but its structural similarity remains comparable. The error map in Figure 3b shows that our model’s error range is similar to that of the BC method, but its error distribution is much smoother and mostly close to zero. In summary, the predictions of the modified DSC/MS model retain clear advantages in accuracy. In contrast, the PI-UNet model struggles more in this respect.

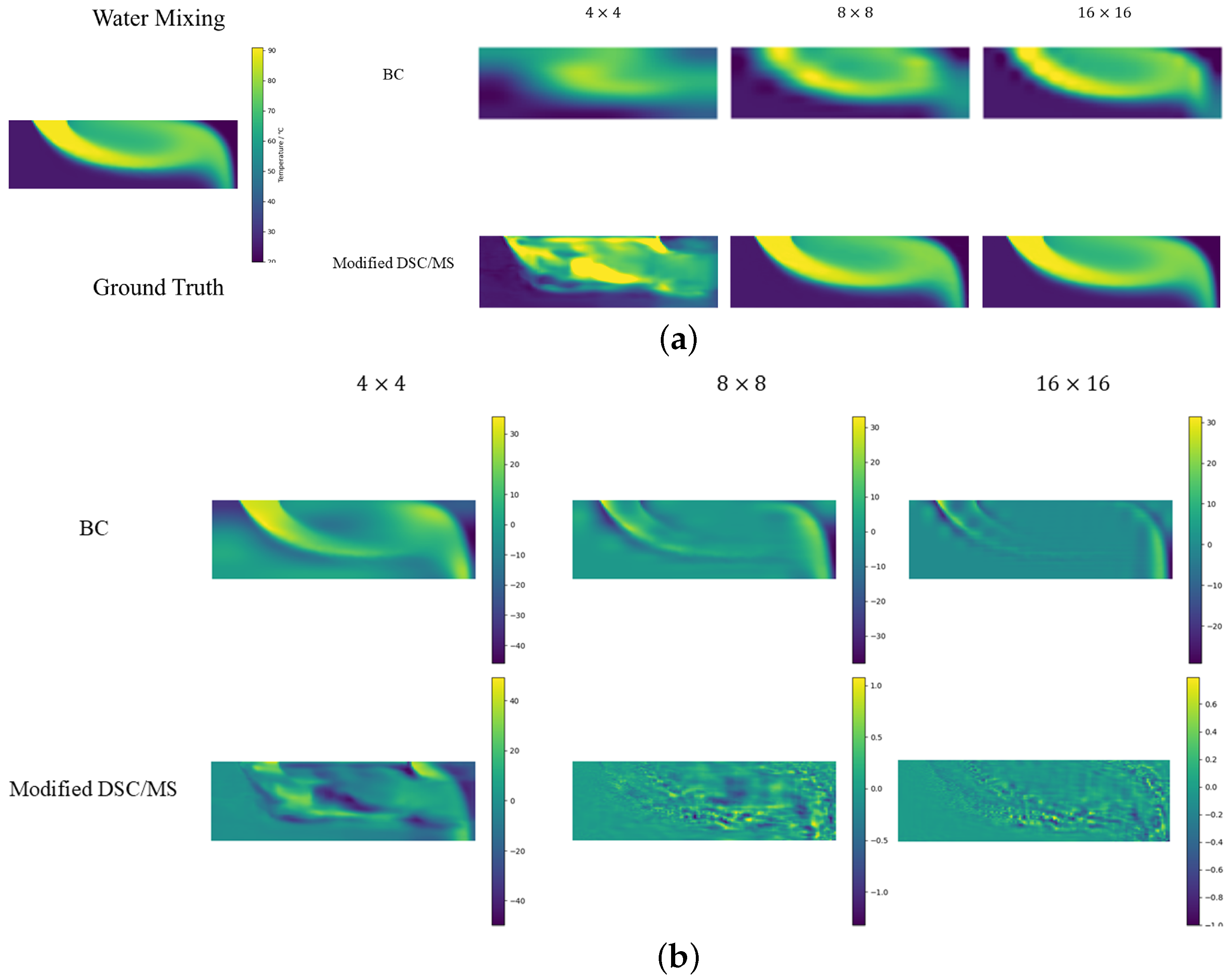

4.2.2. Water Mixing

In the convective environment of hot and cold water, our model outperforms other super-resolution strategies on all metrics except for

LR grids. The modified DSC/MS exhibits significantly lower MAPE and L2 Error than the other methods. In this scenario, the temperature field has distinct features such as abrupt changes, which the modified DSC/MS model captures readily, yielding accurate predictions. For the case of an LR grid size of

, all the methods largely fail to produce effective predictions because the coarse grid provides limited feature information. That PI-UNet performs better than the other models for the

LR grid can largely be attributed to the positive effect of the physical loss on model training, which is discussed in detail in Section 4.3. Notably, even without employing PI-related methods, our model outperforms PI-UNet with LR grids of

and

, further highlighting the strength of the DSC/MS structure.

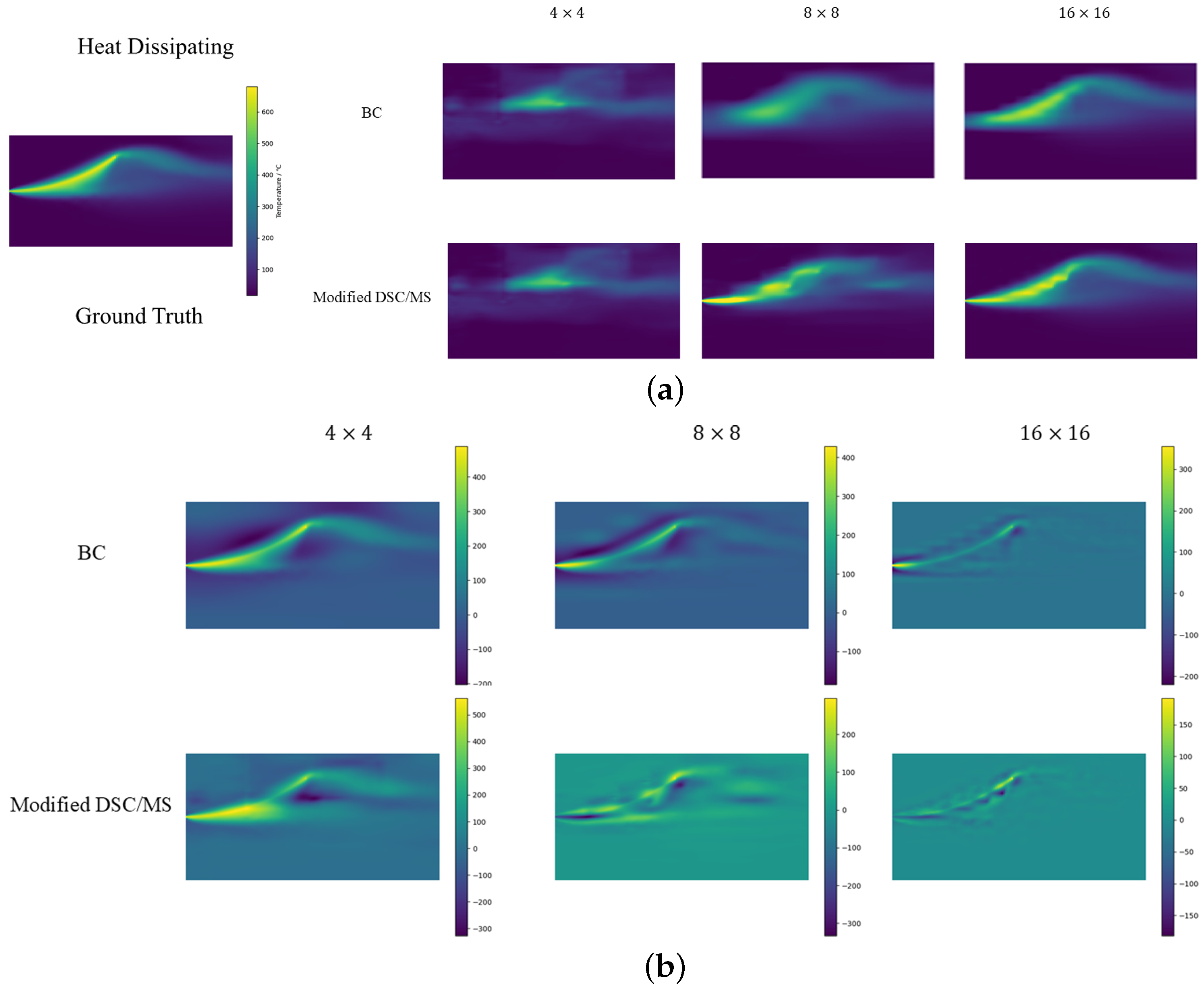

4.2.3. Heat Dissipating

In the heat dissipating scenario, temperature aggregation and large gradient variations concentrate the spatial features, which makes super-resolution challenging. With an LR grid size of

, all the methods produce meaningless predictions. Interpolation performs poorly in this scenario: the error map in Figure 5b shows that the BC error closely follows the pattern of the ground-truth image, which is also reflected in the SSIM results. Because interpolation estimates values from the surrounding known data points, it may struggle to capture rapid temperature changes in regions with large gradients. This can lead to an overall underestimation of the temperatures after super-resolution and, consequently, a higher MAPE. With an LR size of

, the error remains high. However, as shown in Figure 5a, our proposed model remains consistent with the original temperature field in terms of temperature distribution, gradients, and other aspects. With a grid size of

, our model clearly outperforms the other methods, achieving 7% higher accuracy than PI-UNet.
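The underestimation of sharp temperature peaks by interpolation can be illustrated with a one-dimensional analogue, a simplification of the 2D bilinear and bicubic case: a narrow hot spot is average-pooled onto a coarse grid and then linearly interpolated back onto the fine grid. The signal values are hypothetical.

```python
from typing import List


def avg_pool_1d(signal: List[float], r: int) -> List[float]:
    """Average non-overlapping windows of length r (len(signal) divisible by r)."""
    return [sum(signal[i:i + r]) / r for i in range(0, len(signal), r)]


def linear_upsample_1d(coarse: List[float], r: int) -> List[float]:
    """Linearly interpolate coarse cell averages back onto the fine grid.

    Each coarse value is placed at the centre of its window; fine points
    outside the first and last centres are held at the nearest coarse value.
    """
    centres = [i * r + (r - 1) / 2 for i in range(len(coarse))]
    fine = []
    for x in range(len(coarse) * r):
        if x <= centres[0]:
            fine.append(coarse[0])
        elif x >= centres[-1]:
            fine.append(coarse[-1])
        else:
            k = int((x - centres[0]) // r)  # index of the left coarse neighbour
            t = (x - centres[k]) / r         # fractional distance from it
            fine.append((1 - t) * coarse[k] + t * coarse[k + 1])
    return fine


if __name__ == "__main__":
    hr = [20.0] * 16
    hr[6] = hr[7] = 100.0             # narrow hot spot (hypothetical values)
    lr = avg_pool_1d(hr, 4)           # coarse, sensor-like readings
    recon = linear_upsample_1d(lr, 4)
    print(lr, max(hr), max(recon))
```

Both steps lower the peak: pooling spreads the hot spot over its 4-point window (100 to 60), and interpolating between neighbouring coarse values lowers it further (60 to 55), so the reconstructed maximum is about half the true one. A learned SR model can, in principle, recover part of this loss from patterns seen during training, which interpolation cannot.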

The performance across these three scenarios validates the design of our model. Because the model accounts for features at different scales, it can effectively reconstruct temperature field data at certain scaling ratios. Compared with interpolation methods, the general model yields smaller super-resolution errors in scenarios with prominent features and large temperature gradients. Our general model performs significantly better at the two finer LR sizes, whereas at the coarse mesh size it may fail to complete the super-resolution task in some scenarios because the LR data carry too little information. The modifications to DSC/MS also prove effective: the modified DSC/MS shows advantages across the predictable scenarios, and the improvement is significant in some cases. Although our model is slightly inferior to interpolation on some metrics, its error maps show less noise and smaller errors. In contrast, while PI-UNet shows decent predictive performance in specific scenarios, it may exhibit limitations compared with the modified DSC/MS when performing super-resolution of temperature fields.

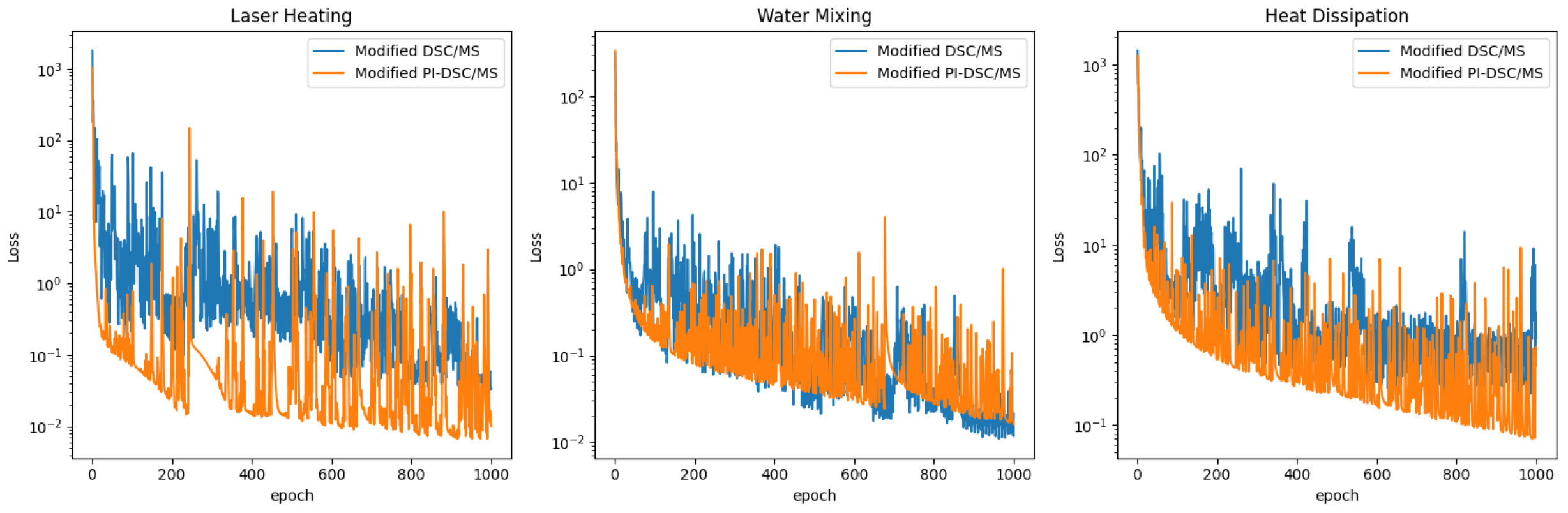

4.3. Modified PI-DSC/MS

In this section, we consider a model that incorporates specific physical loss functions. Figure 6 and Table 5 show the impact of the presence or absence of the physical loss on prediction performance in the three scenarios.

Introducing the physical loss further reduces the predicted MAPE and L2 Error and improves PSNR. However, SSIM does not improve significantly in most cases, suggesting that the modified DSC/MS method is already effective at restoring the temperature field structure. The additional physical residual terms in the loss function act as soft constraints on the model, narrowing the search space during training and accelerating convergence.
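As a generic illustration of a physical residual acting as a soft constraint (not the simplified physical loss of this work, which is defined in Section 3), the sketch below adds a finite-difference residual of the steady, source-free heat conduction equation $\nabla^2 T = 0$ to a mean-squared data term. The Laplace equation, the 5-point stencil with unit grid spacing, and the weight `lam` are all hypothetical choices; the transient and convective scenarios in this paper would require additional terms in the residual.

```python
from typing import List

Grid = List[List[float]]


def data_loss(pred: Grid, target: Grid) -> float:
    """Mean squared error between predicted and reference fields."""
    n = len(pred) * len(pred[0])
    return sum((p - t) ** 2
               for pr, tr in zip(pred, target)
               for p, t in zip(pr, tr)) / n


def laplace_residual_loss(field: Grid) -> float:
    """Mean squared 5-point Laplacian over interior points (unit spacing)."""
    rows, cols = len(field), len(field[0])
    res = []
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            lap = (field[i + 1][j] + field[i - 1][j] + field[i][j + 1]
                   + field[i][j - 1] - 4.0 * field[i][j])
            res.append(lap * lap)
    if not res:
        raise ValueError("field must be at least 3 x 3")
    return sum(res) / len(res)


def composite_loss(pred: Grid, target: Grid, lam: float = 0.1) -> float:
    """Data term plus a weighted physics residual (soft constraint)."""
    return data_loss(pred, target) + lam * laplace_residual_loss(pred)


if __name__ == "__main__":
    linear = [[float(i + j) for j in range(5)] for i in range(5)]  # harmonic field
    bumpy = [row[:] for row in linear]
    bumpy[2][2] += 1.0                                            # local violation
    print(composite_loss(linear, linear), composite_loss(bumpy, linear))
```

A linear field satisfies the Laplace equation exactly, so only the perturbed prediction is penalized by the residual term; the penalty depends on the prediction alone, which is how such a term can steer training even where the data term is small.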

Figure 7 shows the decreasing trend of data loss

in the three scenarios. It can be seen that

decreases faster when training includes the physical loss constraints. This also explains the advantage of PI-UNet in the

low-resolution task within the water mixing scenario, as shown in Table 3. The results show that the modified PI-DSC/MS not only completes this task but also outperforms PI-UNet on all performance metrics, further confirming the strength of its structure. Note, however, that neither of our models can perform SR in the heat dissipating scenario with a coarse

grid. As shown in Table 4, none of the five evaluated methods achieves this, indicating that the task is too complex and the

LR data are insufficient.

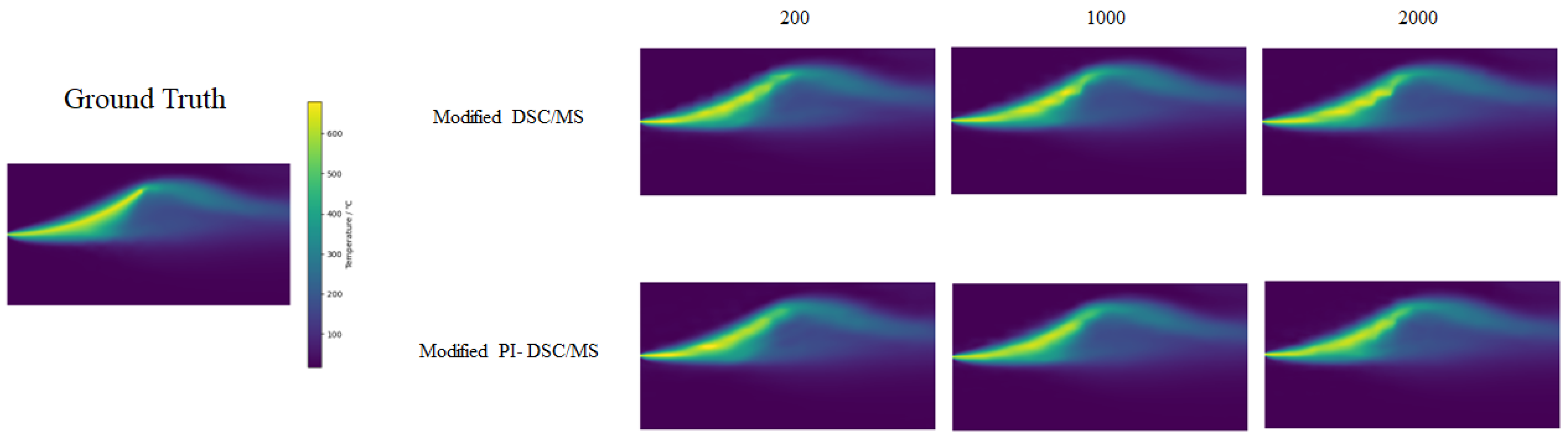

4.4. Sampling Quantity

As a supplement, we investigated the impact of the number of samples on the model’s prediction performance, using the heat dissipating scenario with an LR size of

as an example. The results are shown in Table 6 and Figure 8. Although not listed, the results for the other two scenarios follow the same trend.

In most cases, the number of samples has minimal impact on model training: even with a significant reduction in sample quantity, prediction accuracy does not drop sharply. This implies that initial sampling costs and training time can be reduced. This property also makes the approach promising for scenarios in which long-term sampling by measuring equipment introduces data distortion. Based on these findings, we chose to terminate training after 2000 iterations, because additional iterations would yield diminishing returns in performance; this choice preserves model validity while keeping computation efficient. As shown in Figure 7, the losses continue to decrease beyond the selected stopping point, but the further improvement is negligible.

5. Conclusions

In this paper, we propose a general model for measuring high-resolution temperature fields. We use a modified DSC/MS model, which combines a DSC component that effectively captures large-scale features and underlying information with an MS structure that excels at capturing small features at different scales. Building on the original model, we introduced important modifications such as additional skip connections and specific loss functions. By training and testing the model on simulated temperature fields in three different scenarios, we verify the performance of the modified models. The error of the improved model is consistently below 20% across the scenarios, meeting measurement requirements. Compared with the interpolation methods, our model reduces the error by a factor of approximately 3 to 25, making it well suited to numerical processing in measurement tasks.

In practical applications, this model can provide a reliable solution for monitoring high-resolution temperature fields. Owing to its general design, the model can adapt to the monitoring requirements of various temperature fields. Despite the progress made with the current model design, challenges remain: the present experiments still rely on simple simulation models, and obtaining real experimental data to replace the simulation data will be the focus of future research.