Abstract

Upper and lower limb rehabilitation training is essential for restoring patients’ physical movement ability and enhancing muscle strength and coordination. However, traditional rehabilitation training methods have limitations, such as high costs, low patient participation, and a lack of real-time feedback. To address these problems, this paper proposes and implements an upper and lower limb rehabilitation training evaluation system based on virtual reality technology. The system provides patients with an immersive and interactive training environment, with the aim of improving patient participation and rehabilitation outcomes. Kinect 2.0 and Leap Motion sensors capture patients’ motion data and transmit them to virtual training scenes. Multiple virtual scenes were designed for different upper and lower limb movements, with a focus on hand function training. In these scenes, patients perform various movement exercises, and the system provides real-time feedback based on the accuracy of their movements. The experimental results show that, compared with patients receiving traditional rehabilitation training, patients using the virtual reality system achieved significantly higher movement accuracy and training participation. The system improves the quality of patients’ rehabilitation and provides medical personnel with personalized treatment information through data collection and analysis, promoting the systematization and personalization of rehabilitation training. The system is innovative and has broad application potential in the field of rehabilitation medicine.

1. Introduction

Upper and lower limb rehabilitation training is a vital part of rehabilitation medicine. It is widely used for patients with conditions such as burns, brain injuries, fractures, or trauma [1,2]. The main goal of this type of training is to restore the physical movement capabilities that patients have lost through disease or injury, enhance muscle strength and coordination, and ultimately help patients improve their quality of daily life.

According to research and statistics in recent years, limb injuries in China are caused mainly by traffic accidents and other, non-traffic accidents. According to a national traffic accident survey, more than 2,125,994 traffic accidents occurred in China from 2010 to 2019, and a relatively high proportion of the patients involved sustained upper or lower limb injuries. According to a national survey of Chinese fracture patients (more than 512,187 individuals), upper and lower limb injuries (such as arm, leg, and spine fractures) are among the more common types [3].

With the increase in patients with upper and lower limb injuries caused by accidents, the demand for rehabilitation training has become urgent. At present, rehabilitation training in China relies mainly on the traditional approach, which usually involves one-to-one guidance provided by a rehabilitation trainer. Although this approach allows training to be tailored to each patient’s situation under professional guidance, it is costly, and patients need to travel to the rehabilitation center frequently for treatment, which imposes an economic burden and is highly inconvenient for them.

With the rapid development of virtual reality (VR) technology and intelligent rehabilitation equipment, the field of rehabilitation medicine has seen new breakthroughs. VR technology can create a more immersive and interactive rehabilitation environment for patients. Combined with multi-sensory experience and an instant feedback mechanism, it can effectively improve patients’ participation and motivation in rehabilitation training and, as a result, greatly improve the rehabilitation effect [4]. At the same time, as a new means of assisting rehabilitation, rehabilitation training robots have gradually become a research hotspot in the academic and clinical fields. Through accurate movement assistance and data monitoring, they provide personalized real-time feedback during motor recovery training, which helps rehabilitation therapists deliver more efficient treatment and significantly improves the efficiency and effect of rehabilitation [5].

Although these rehabilitation technologies have made significant progress, their applications still have inherent limitations. First, patients easily lose interest and motivation during long-term rehabilitation training, resulting in insufficient engagement and enthusiasm [6]. Second, traditional rehabilitation training usually involves repeated actions and exercises, and this monotonous training mode may bore patients, reducing their participation and persistence [7]. In addition, the real-time feedback provided by traditional rehabilitation methods is relatively limited, so it is difficult for patients to understand their own progress and the points they need to improve, which affects their confidence and the training effect [8]. Most traditional rehabilitation training also adopts generic training schemes, which are difficult to adjust flexibly to the individual needs of patients and may lead to poor rehabilitation outcomes [9]. Furthermore, traditional rehabilitation training requires a lot of time and resources, including the participation of rehabilitation professionals and the use of equipment and venues, which increases the cost and time pressure of rehabilitation [10]. More importantly, traditional rehabilitation training is usually carried out in a controlled environment, and it is difficult for patients to transfer what they have trained to practical situations in daily life, which limits the actual benefit of rehabilitation [11]. These problems pose many challenges for traditional rehabilitation methods in practice, leading to a slow rehabilitation process and unstable results. Patients’ participation and rehabilitation outcomes are often constrained by circumstances, psychological factors, and external conditions, and it is difficult to achieve the ideal rehabilitation state.

Upper and lower limb rehabilitation training is therefore essential for patients with motor dysfunction caused by trauma or disease. It not only helps to restore patients’ daily activities but also significantly improves their quality of life and helps them reintegrate into normal social life. In the future, new technologies such as virtual reality, robotic rehabilitation equipment, and remote rehabilitation systems will be applied in upper and lower limb rehabilitation training, which will further optimize the rehabilitation effect and promote the wider adoption of rehabilitation training.

2. Related Work

In recent years, research on VR technology in rehabilitation medicine has continued to deepen, and its applications have expanded from traditional motor function rehabilitation to scenarios such as neurorehabilitation, cognitive training, and remote rehabilitation. The main advantage of VR technology is that it can provide patients with a highly immersive and interactive virtual environment and stimulate their initiative and training motivation through sensory feedback. Current research focuses on how to use VR systems to achieve personalized training and real-time monitoring of rehabilitation progress, and on combining them with other assistive technologies to optimize the rehabilitation effect and improve efficiency. In the field of motor function rehabilitation, Laver et al. [12] found that VR technology has a significant advantage in improving upper and lower limb function in stroke patients, especially in providing personalized rehabilitation tasks and real-time feedback mechanisms. By dynamically capturing patient movements and providing visual and tactile feedback, a VR system can effectively help patients correct movement errors and ensure the safety and effectiveness of training. In addition, many studies have further developed rehabilitation platforms for multi-sensory interaction, which use visual, auditory, and tactile stimulation in virtual scenes to increase the patient’s sense of engagement in the task, thereby improving the rehabilitation effect [13,14,15].

In addition, virtual reality technology can help rehabilitation professionals formulate personalized rehabilitation plans so that the rehabilitation process can be dynamically adjusted to the specific needs and recovery progress of each patient. Lange et al. [16] discussed how to design game-based rehabilitation tasks to meet a patient’s personalized needs and rehabilitation goals. Lloréns et al. [17] discussed balance rehabilitation training using the Kinect skeleton tracking system so that patients could receive rehabilitation treatment in the home environment, thereby reducing their dependence on time and venue resources. Merians et al. [7] pointed out that a rehabilitation system enhanced with virtual reality can save a great deal of time and venue resources while significantly improving the accessibility of rehabilitation. Sveistrup et al. [18] further explained the advantages of virtual reality technology in motor rehabilitation and argued that combining virtual training tasks with situations from the patient’s daily life can improve the practical transfer of rehabilitation training.

With the continuous progress of modern rehabilitation technology, the application of virtual reality technology is no longer limited to stroke rehabilitation but has gradually expanded to other nerve injuries, musculoskeletal diseases, and remote rehabilitation training. Khokale et al. [19] examined the application prospects of VR and AR technology in stroke rehabilitation, indicating that these technologies can provide remote rehabilitation services for patients in remote areas. Moreira et al. [20] conducted a systematic review of the application of head-mounted display (HMD) virtual reality in upper limb rehabilitation. They found that it has the potential to improve the recovery of upper limb movement and reduce pain, but large-scale research is still required to confirm its effect. Maggio et al. [21] reviewed the application of the Computer Assisted Rehabilitation Environment (CAREN) system in neurorehabilitation. They pointed out that although CAREN shows potential advantages in the rehabilitation of patients with Parkinson’s disease, traumatic brain injury (TBI), and multiple sclerosis, current study samples are small, and its effectiveness in remote rehabilitation applications needs further verification.

In research combining virtual reality with rehabilitation robot technology, Chheang et al. [4] proposed an upper limb rehabilitation framework that combines VR and robotics and analyzes elbow joint movement through wearable sensors, significantly improving patient participation and outcomes in home rehabilitation. Brady et al. [22] used focus group interviews to explore physical therapists’ views on VR-supported rehabilitation of shoulder musculoskeletal pain. The results showed that VR had the potential to manage pain and improve patient participation, but there were still problems with safety and ease of operation in practical applications.

The broad potential of these technologies has also been demonstrated in upper and lower limb function rehabilitation. Sarajchi et al. [23] studied a rehabilitation exoskeleton system designed for children aged 8 to 12, which uses six active joints (distributed across the hip and knee joints) and an adjustable design to accommodate the growth of different children. Oblak et al. [24] investigated the combination of the Kinect (Microsoft Corporation, Redmond, WA, USA) device and a tactile feedback system in upper limb rehabilitation training, especially for the recovery of arm and hand function. Prochnow et al. [25] further studied the neurological mechanisms involved when Kinect is used in a virtual reality environment and verified its effect in rehabilitation therapy for patients with stroke and nerve injuries. In addition, studies have found that patients who trained with Kinect devices combined with rehabilitation games showed greater improvements in the recovery of motor ability and in overall rehabilitation outcomes [26,27,28].

Although VR and rehabilitation robot technology have shown broad prospects in modern rehabilitation medicine, their applications still face many challenges, such as high equipment costs, complex operation, and a heavy burden on the patient. In addition, most VR rehabilitation systems still require patients to wear bulky physiological sensors, which restrict movement and add physical burden for patients with limited mobility or those recovering in bed. Therefore, future research should focus on how to further optimize the user experience of virtual reality technology and improve its accessibility and effectiveness in rehabilitation training.

The main contributions made in this study are as follows:

- This article proposes a rehabilitation training system based on virtual reality technology. By providing a more engaging and interactive training environment, the system significantly improves patient participation and rehabilitation outcomes. Compared with traditional rehabilitation training, the system can dynamically adjust the training plan to the patient’s specific situation and help patients correct their movements in time through real-time feedback, thereby improving the personalization and accuracy of rehabilitation training.

- This article provides an in-depth analysis and experimental verification of the application of virtual reality in rehabilitation. Through experiments with burn patients, it confirms the effectiveness of the virtual reality rehabilitation training system and shows its advantages in improving patient rehabilitation and the treatment experience. These findings provide an empirical basis for future research on and application of virtual reality technology in rehabilitation training.

- This article also contributes to data collection and analysis. The system collects a large amount of rehabilitation training data and uses these data to provide detailed information for medical staff, helping them optimize and personalize the treatment plan. This data-driven evaluation method makes rehabilitation training more scientific and systematic and provides new research ideas and tools for rehabilitation medicine.

3. System Architecture and Methods

3.1. System Framework Structure

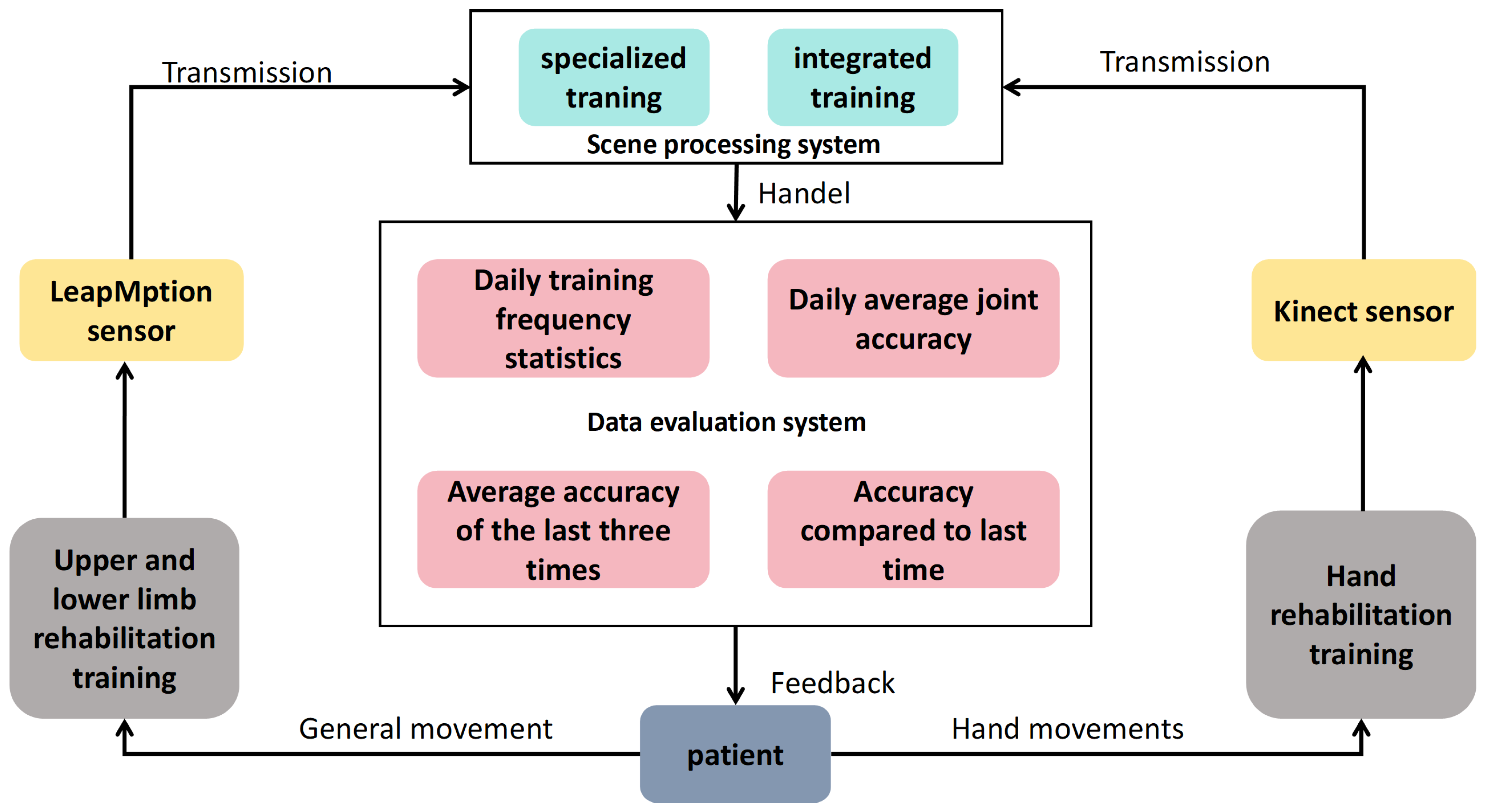

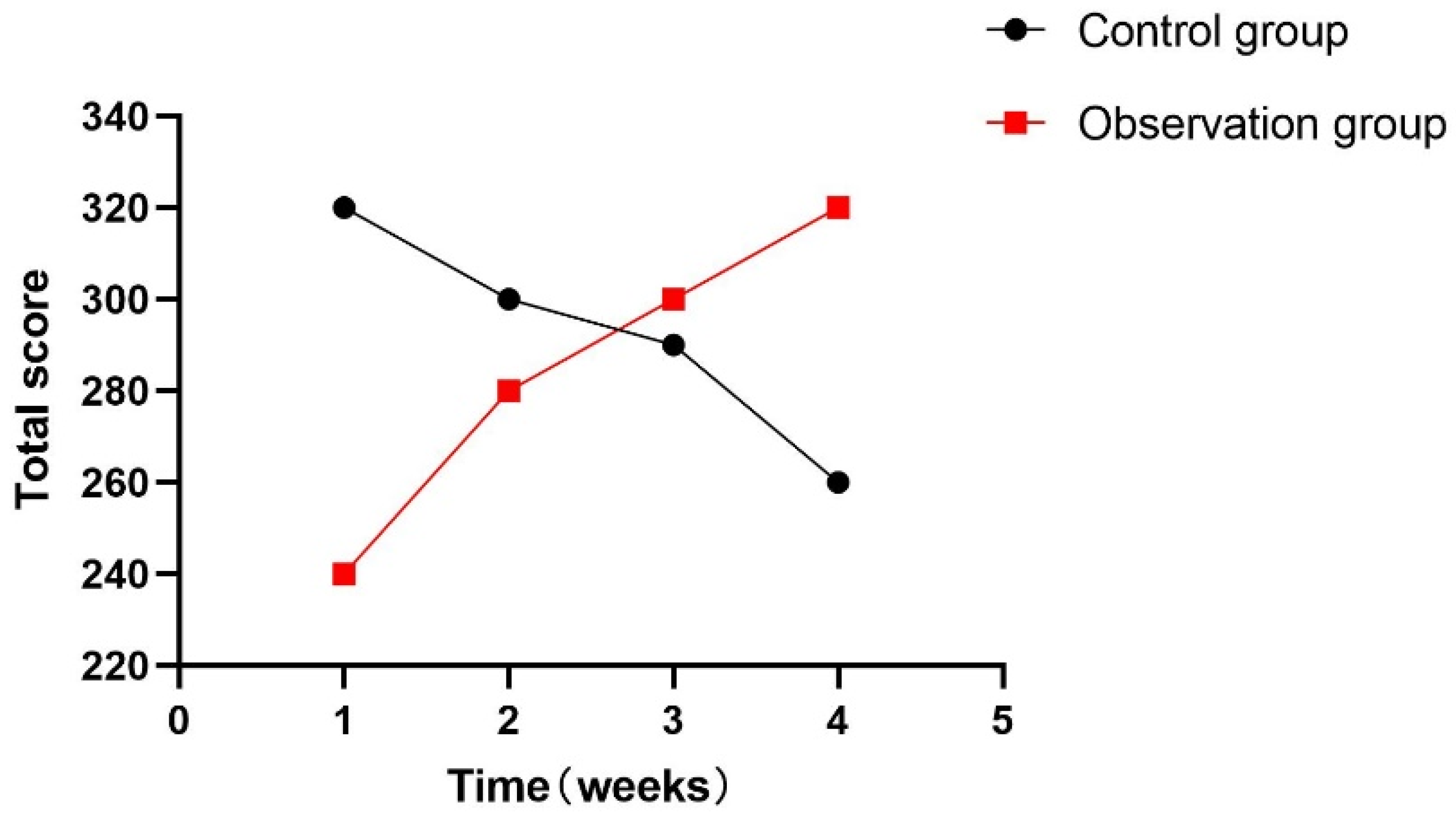

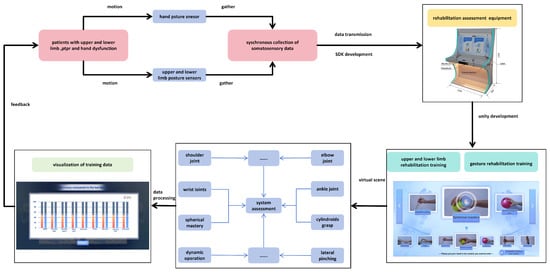

The system is designed for the rehabilitation of patients with upper and lower limb dysfunction. Patients perform the corresponding movements in front of the upper and lower limb rehabilitation assessment and training equipment (hereinafter referred to as the rehabilitation evaluation equipment). For example, when training the large joints of the whole body, the patient faces the equipment at a distance of 1 m to 3 m and performs upper and lower limb rehabilitation movements. The Kinect sensor collects the patient’s movement trajectory and transmits it to the scene processing system, which is built with Unity3D 2022.1, 3ds Max 2021, and other simulation software and consists mainly of two modules: special training and comprehensive training. The scene processing system then processes the rehabilitation training tasks and requirements in the virtual scene and transmits the results to the data evaluation system module. Based on the accuracy of the patient’s posture, the data evaluation system module records the number of daily training sessions, the daily average joint accuracy, the average accuracy of the last three sessions, and the change in accuracy compared with the previous session. Finally, the results are fed back to the patient. Similarly, for hand rehabilitation training, the patient only needs to hold the hand in front of the rehabilitation evaluation equipment and perform the corresponding training exercise. The system framework structure is shown in Figure 1.

Figure 1.

System framework structure diagram.

3.2. Hardware Equipment

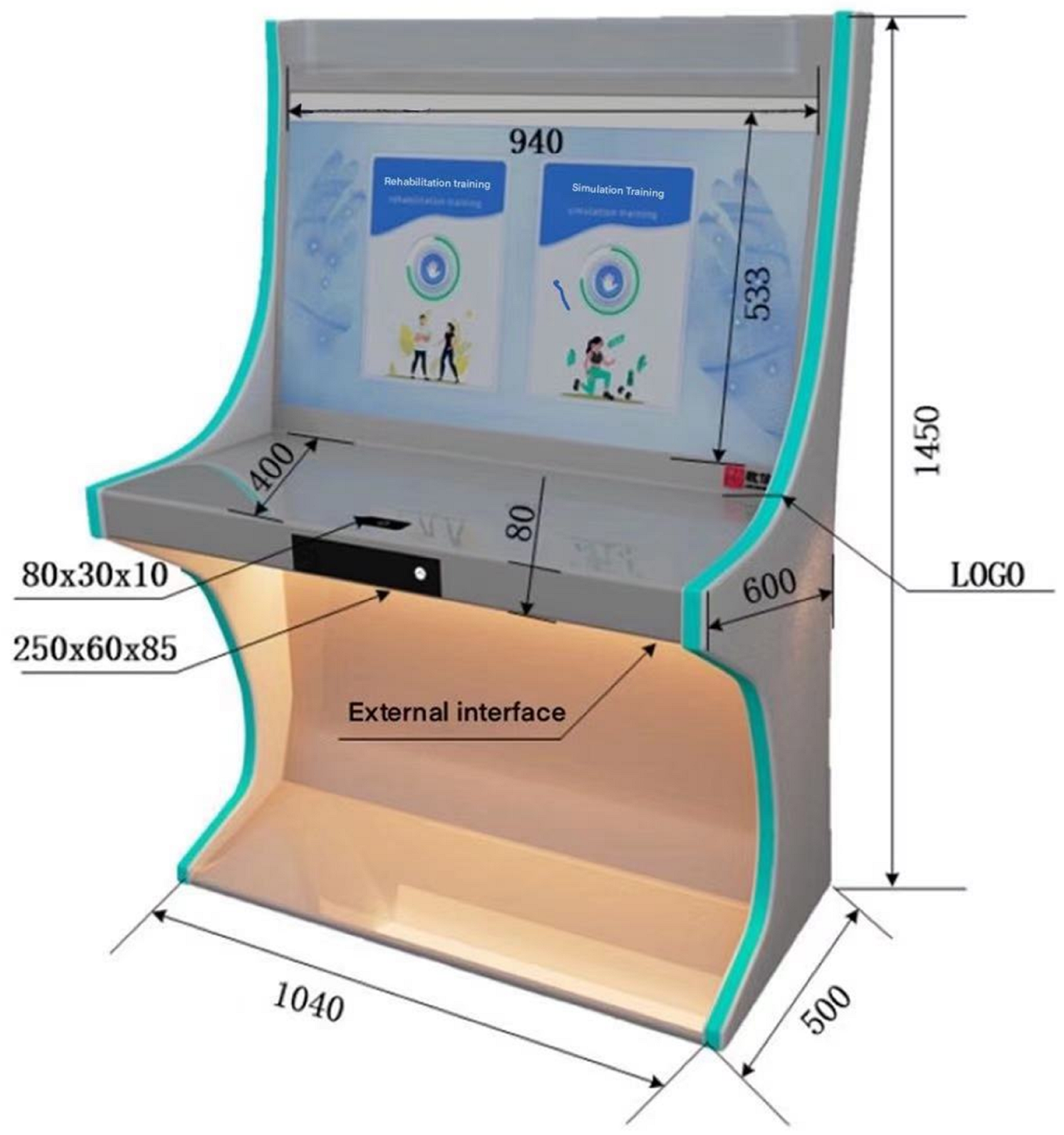

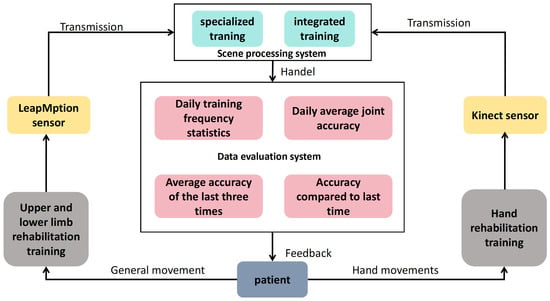

The equipment used in this study was developed jointly by members of our laboratory. It provides an integrated treatment, training, and rehabilitation solution for patients with upper and lower limb movement disorders and hand dysfunction. The external structure is made of metal so that the product is strong, durable, stable, and safe. The product consists of a vertical all-in-one machine, a 43-inch touch screen, and a touch monitor. It contains a Kinect 2.0 somatosensory depth camera, which detects and records patients performing rehabilitation training for motor dysfunction, and a Leap Motion gesture tracker, which detects and records the treatment and rehabilitation status of patients with hand dysfunction. The product was developed in-house throughout and combines software, hardware, and servers. Patient data can be uploaded to the cloud and saved in real time for subsequent use by doctors. The full name of the equipment is the upper, lower limb, and hand rehabilitation training assessment instrument, as shown in Figure 2. It provides entertaining interactive training courses for patients with motor dysfunction. A somatosensory camera captures the patient’s movements, and the actual movements are compared with standard movements to assess their accuracy; the result drives interaction with objects in the game training scene, thereby improving the patient’s initiative in rehabilitation training.

Figure 2.

Upper, lower limb, and hand rehabilitation training assessment instrument.

3.3. Sensor Equipment

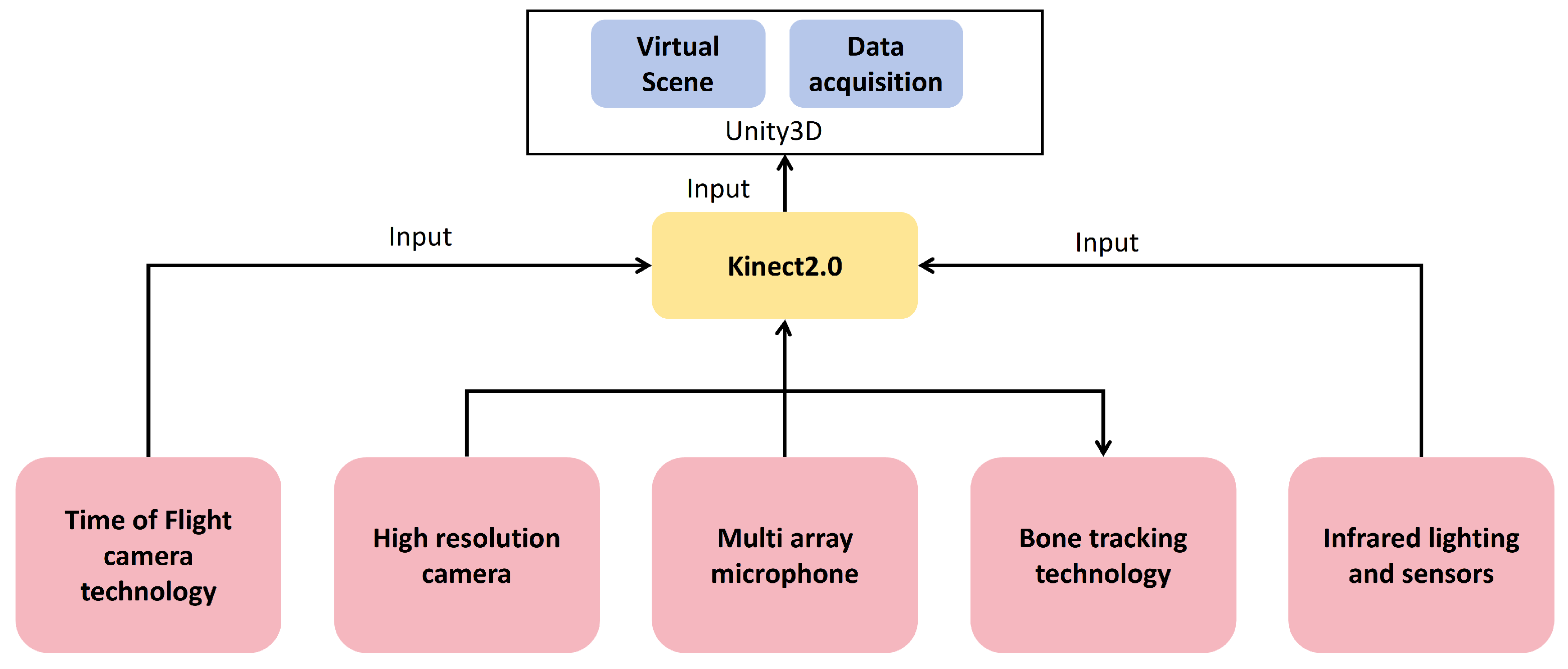

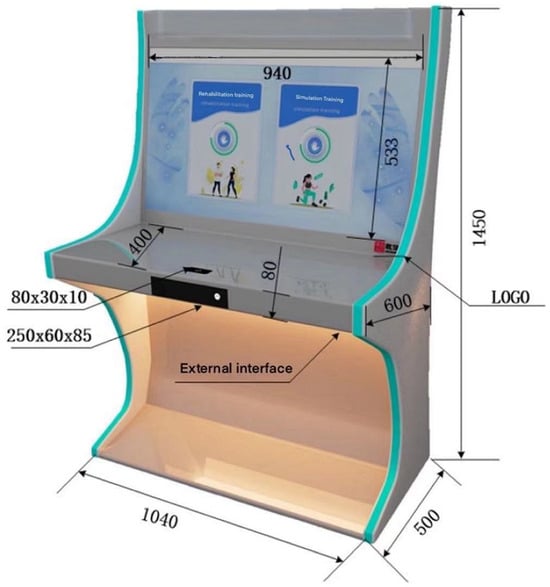

The upper and lower limb gesture recognition sensor used is Kinect 2.0. This device is a depth-sensing camera launched by Microsoft [29]. It uses a series of advanced technologies to realize its functions:

- Time-of-flight camera technology: Kinect 2.0 emits infrared light pulses and measures the time the light takes to travel from the camera to the surface of an object and back. This allows Kinect to accurately measure the depth of an object and create a depth image.

- High-resolution camera: Kinect 2.0 is equipped with a 1080p resolution color camera, capable of capturing clearer and more detailed images. This allows Kinect to more accurately recognize and track human movements and facial expressions.

- Multi-array microphone: Kinect 2.0 integrates multiple microphones to capture and locate spatial sounds. This enables the device to implement voice recognition and control functions.

- Skeleton-tracking technology: Kinect 2.0 uses advanced skeleton tracking algorithms to accurately capture the movement of human joints. This enables Kinect to track the user’s movements in real time, enabling natural user interfaces and motion-sensing gaming.

- Infrared lighting and sensors: In order to work in low-light environments, Kinect 2.0 is equipped with infrared lighting and sensors that can work reliably under different lighting conditions.
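The time-of-flight principle in the first item above reduces to a simple relation: if a light pulse returns after a round-trip time t, the distance to the object is d = c·t/2. The following is a minimal sketch of that idealized relation only; the function name `tof_depth_m` is hypothetical, and the sketch does not reproduce the internal signal processing of the Kinect 2.0.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def tof_depth_m(round_trip_time_s: float) -> float:
    """Idealized time-of-flight depth.

    The light travels to the object and back, so the one-way distance
    is half of the total path length.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A patient standing 2 m from the sensor corresponds to a round trip
# of roughly 13.3 nanoseconds.
round_trip = 2 * 2.0 / SPEED_OF_LIGHT_M_PER_S
depth = tof_depth_m(round_trip)
```

The very short round-trip times involved illustrate why depth cameras need dedicated timing hardware rather than general-purpose clocks.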

The specific technology roadmap is shown in Figure 3.

Figure 3.

Kinect 2.0 technology roadmap.

The hand gesture recognition sensor used is Leap Motion, which uses a technology called “visual touchless gesture control”. The Leap Motion somatosensory controller uses optical hand tracking and has two high-frame-rate grayscale infrared cameras with wide-angle lenses and four infrared LEDs. It works best in lighting that produces clear, high-contrast object outlines. The filter layer on top allows only infrared light to pass and performs preliminary processing on the data collected by the cameras, which reduces the complexity of later computation. The binocular camera extracts the three-dimensional position of the hand using the principle of binocular stereo vision and builds a three-dimensional model of the hand. Using grayscale cameras reduces the amount of data to be processed and increases the speed of the algorithm.

Based on these hardware and algorithm optimizations, Leap Motion can collect 200 frames of hand data per second with an accuracy of up to 0.01 mm. The trackable area of Leap Motion has the shape of an inverted square pyramid, with a horizontal field of view of 140° and a vertical field of view of 120°. The interactive depth is between 10 cm and 60 cm, with a maximum of no more than 80 cm.
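The trackable region described above can be expressed as a simple geometric test. The sketch below checks whether a point lies inside the inverted pyramid using the field-of-view and depth figures from the text. The coordinate convention (sensor at the origin, y pointing away from the sensor, x across the horizontal field of view, z across the vertical field of view) and the function name `in_tracking_volume` are assumptions made for illustration, not part of the described system.

```python
import math

H_FOV_DEG = 140.0     # horizontal field of view (from the text)
V_FOV_DEG = 120.0     # vertical field of view (from the text)
MIN_DEPTH_CM = 10.0   # lower bound of the interactive depth (from the text)
MAX_DEPTH_CM = 60.0   # upper bound of the usual interactive depth (from the text)


def in_tracking_volume(x_cm: float, y_cm: float, z_cm: float,
                       max_depth_cm: float = MAX_DEPTH_CM) -> bool:
    """Return True if the point lies inside the inverted pyramid.

    Assumed axes (not from the paper): the sensor sits at the origin,
    y points away from the sensor, x spans the horizontal field of view,
    and z spans the vertical field of view.
    """
    if not (MIN_DEPTH_CM <= y_cm <= max_depth_cm):
        return False
    # Angle between the point and the sensor's central axis in each plane.
    h_angle = math.degrees(math.atan2(abs(x_cm), y_cm))
    v_angle = math.degrees(math.atan2(abs(z_cm), y_cm))
    return h_angle <= H_FOV_DEG / 2 and v_angle <= V_FOV_DEG / 2
```

Passing `max_depth_cm=80.0` relaxes the check to the stated absolute maximum depth. A test like this could be used to prompt patients to move their hand back into range before a training scene starts.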

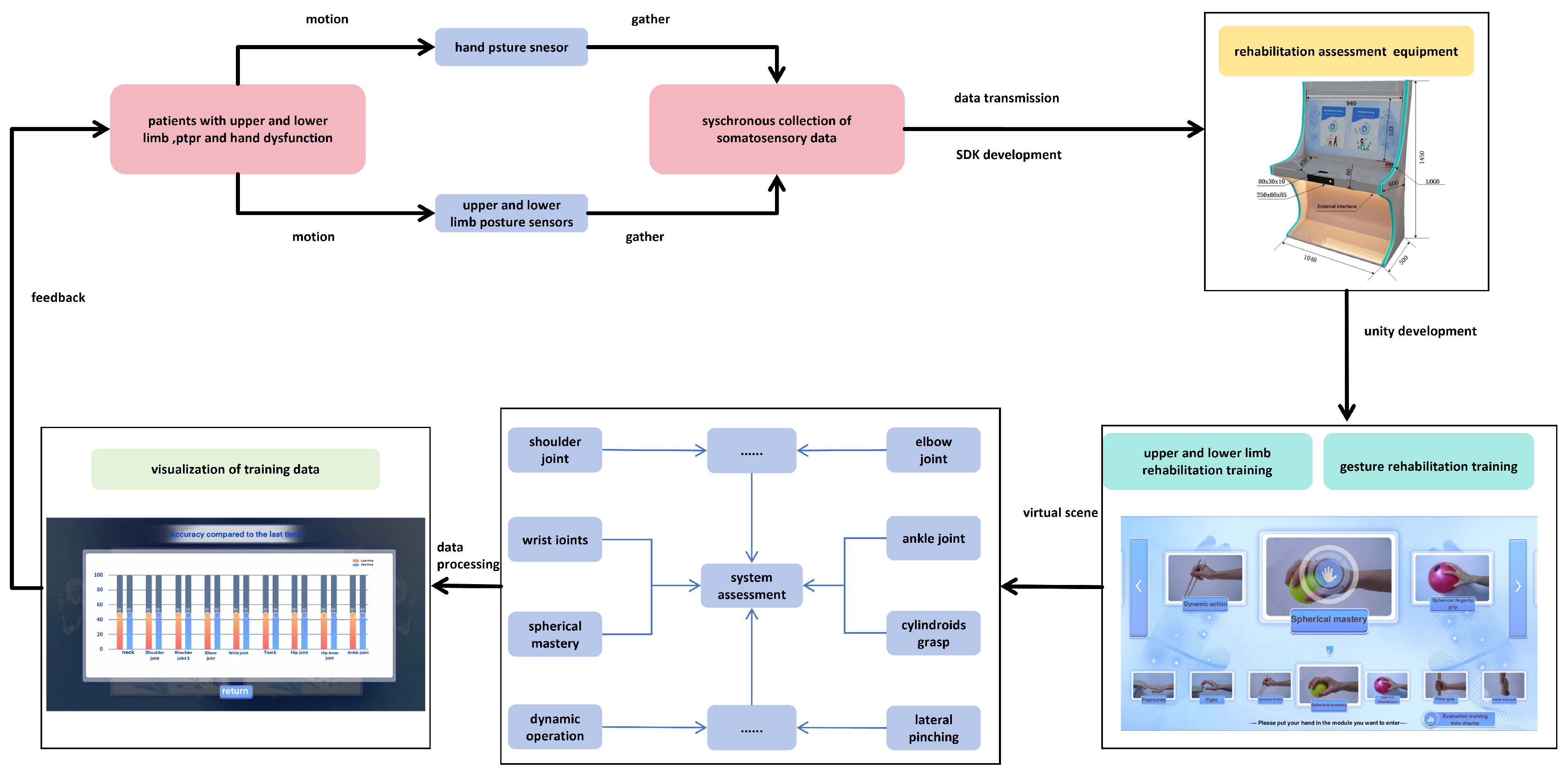

3.4. Collect Data and Interaction with Virtual Scenes

Patients with upper and lower limb or hand dysfunction stand in front of the device and make the corresponding movements. The patient’s motion data are collected by the corresponding sensor and transmitted to the virtual scene developed in Unity. For upper and lower limb rehabilitation training, the system provides nine virtual scenes, dedicated to training the following posture movements: neck, shoulder joint, shoulder joint 2, elbow joint, wrist joint, trunk, hip joint, hip–knee joint, and ankle joint. For hand motor rehabilitation training, there are 13 virtual scenes, dedicated to training the following movements: hanging, lifting, touching, pushing, hitting, dynamic operation, spherical grasp, spherical finger grip, columnar grasp, hook and pull, two-fingertip pinch, multiple-fingertip pinch, and side pinch. As patients complete this special training, the system evaluates their performance and outputs visualized evaluation data, which are fed back to the patient so that they can adjust in real time and improve the accuracy of their rehabilitation training. Figure 4 shows the interaction between the collected data and the virtual scenes.

Figure 4.

Interaction between the collected data and virtual scene.

The interaction between the collected data and the virtual scene is shown in Figure 4.

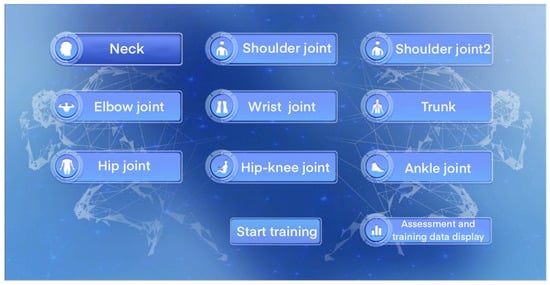

3.5. Virtual Scene Design

Engaging feedback can relieve patient fatigue during training and prevent treatment from becoming too tedious, which is critical for long-term recovery. This article designs a variety of simple and entertaining biofeedback virtual environments to meet the needs of people with different degrees of motor impairment and provides users with training conditions for upper and lower limb rehabilitation and hand disorder rehabilitation. Approachable and easy-to-operate training scenarios make training convenient and concise for users. As shown in Figure 5, during system development, this paper designed nine targeted training scenario selection interfaces for patients with upper and lower limb movement disorders.

Figure 5.

Trunk flexion exercises.

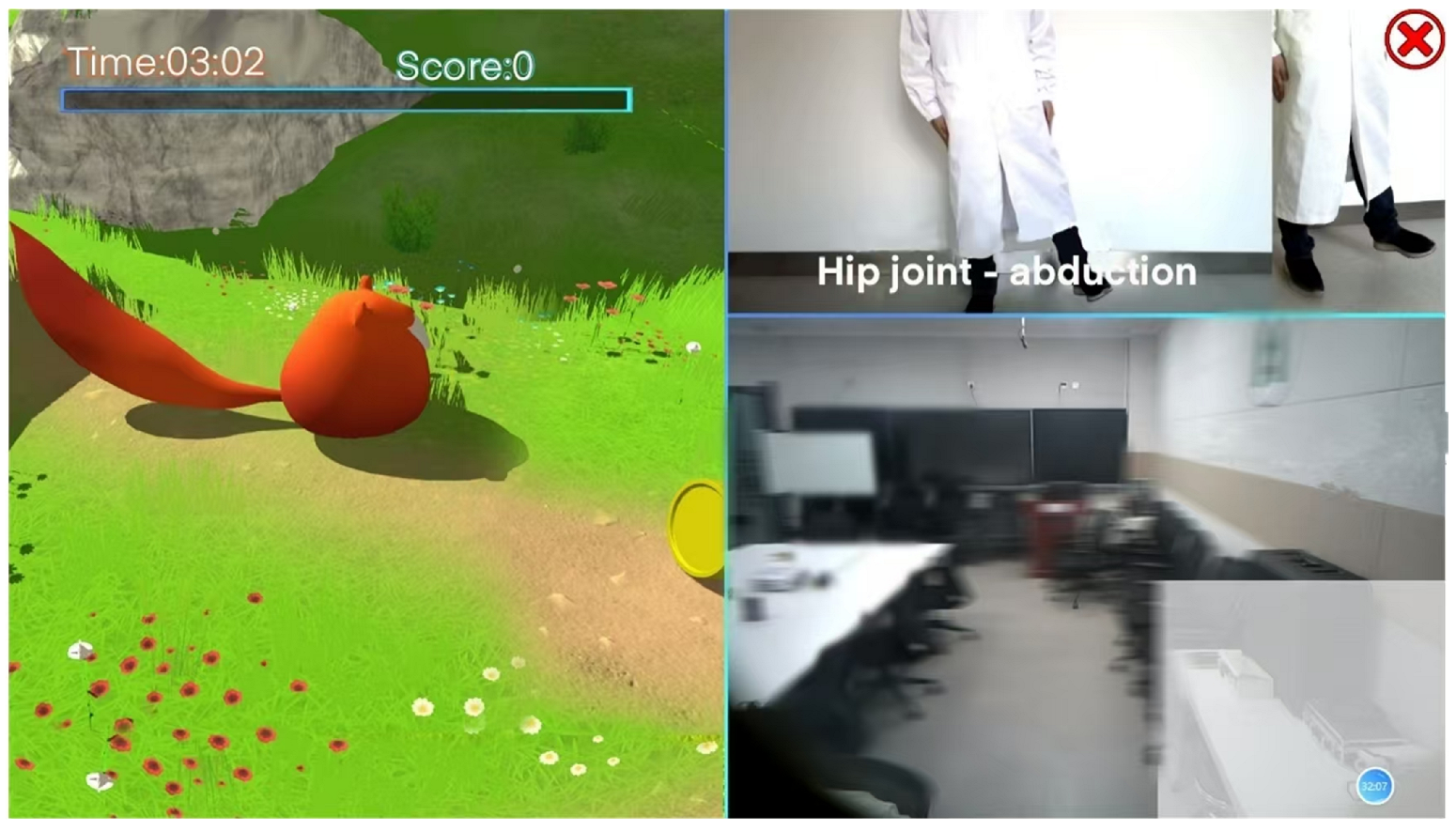

For example, in the trunk-flexion movement in special training, the patient stands in front of the device and adopts the corresponding training posture by following the teaching video shown in the upper-right corner of the screen. The lower-right corner shows the patient’s own movement trajectory, and the left side of the screen shows a virtual scene designed for this system. The small animals in the scene respond according to how closely the patient’s movements match the standard, for example by jumping forward and picking up the gold coins ahead. Prompts such as scores, time, and progress bars make the rehabilitation training more engaging for patients, as shown in Figure 6.

Figure 6.

Interactive virtual rehabilitation training interface.

In addition to the nine special training scenes, the system also includes some entertaining random-action exercises. Patients make the corresponding movements according to the on-screen prompts, which move from far to near as time passes. If the patient’s movement is standard, a score is awarded; if it is not, a corresponding penalty is applied, as shown in Figure 7. The system also has a built-in fruit-cutting game driven by the patient’s movements, as shown in Figure 8.

Figure 7.

Fun training.

Figure 8.

Cutting fruits based on movement.

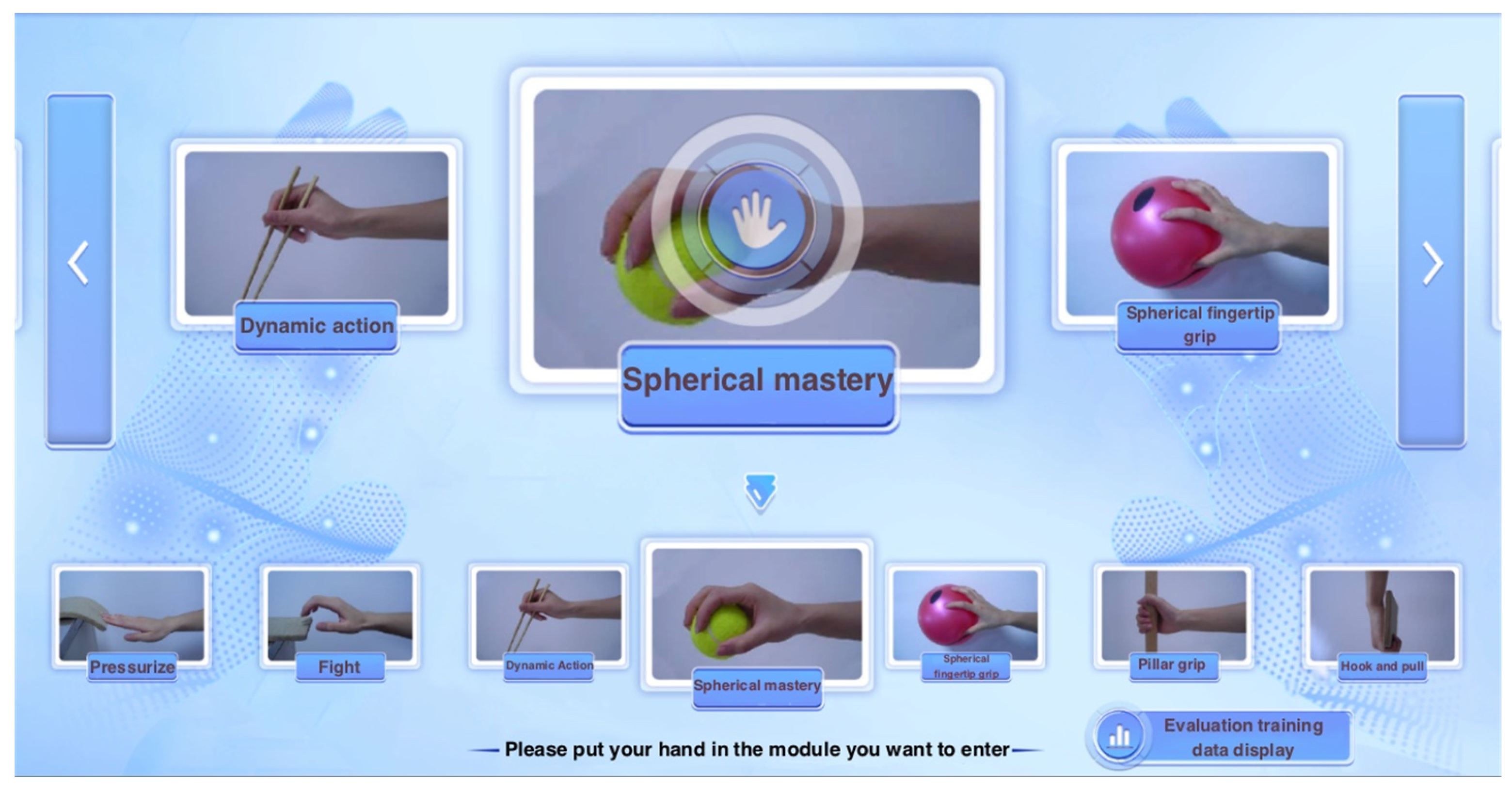

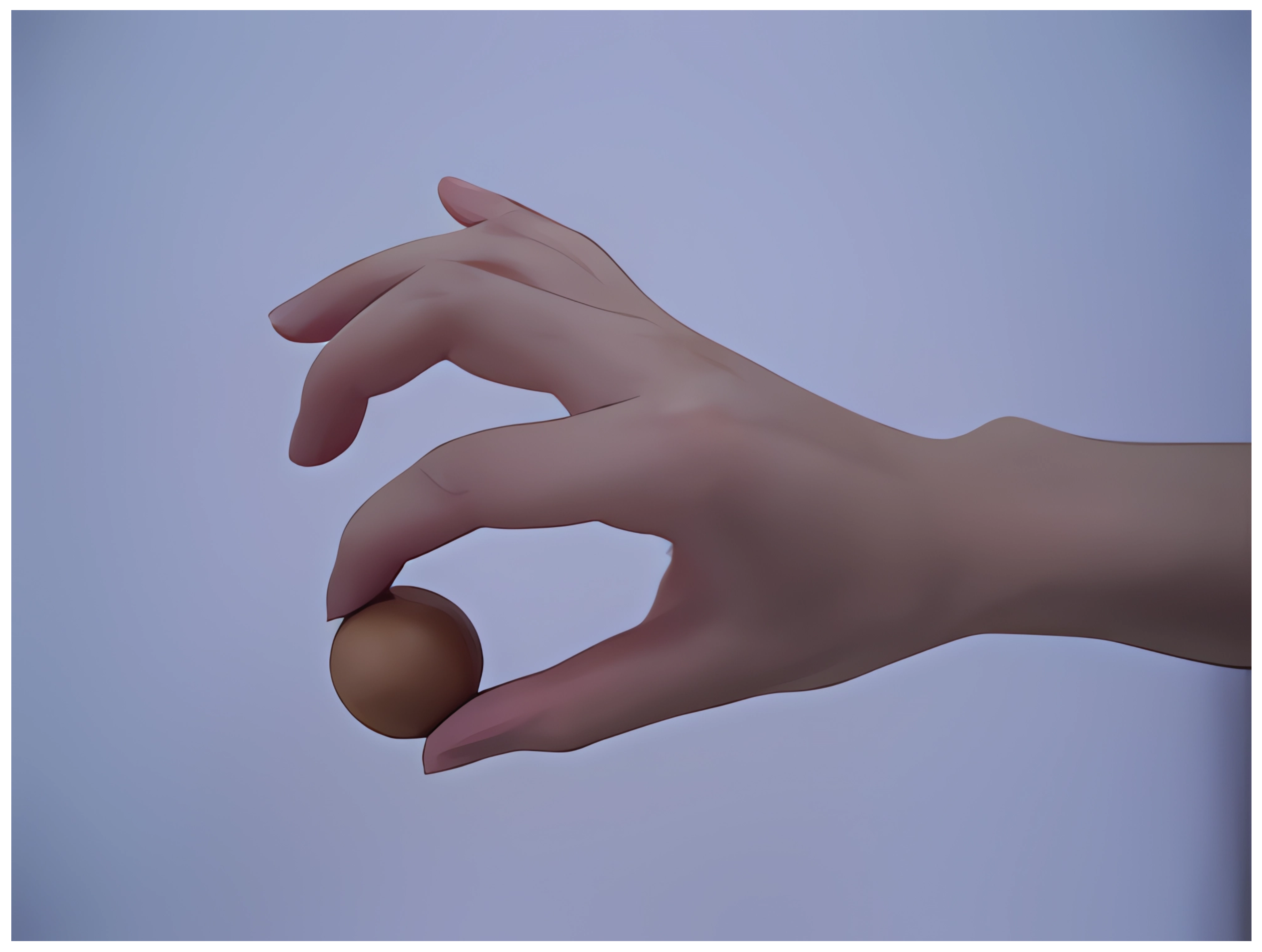

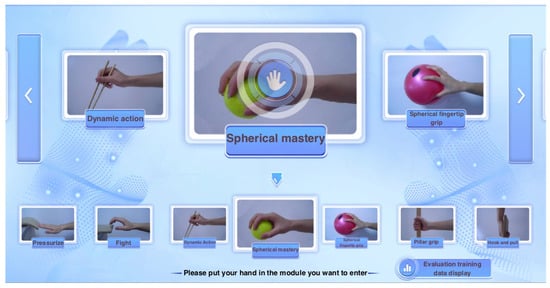

Similarly, for patients with hand functional movement disorders, this article designed 13 targeted training scenarios, as shown in Figure 9.

Figure 9.

Thirteen types of special training.

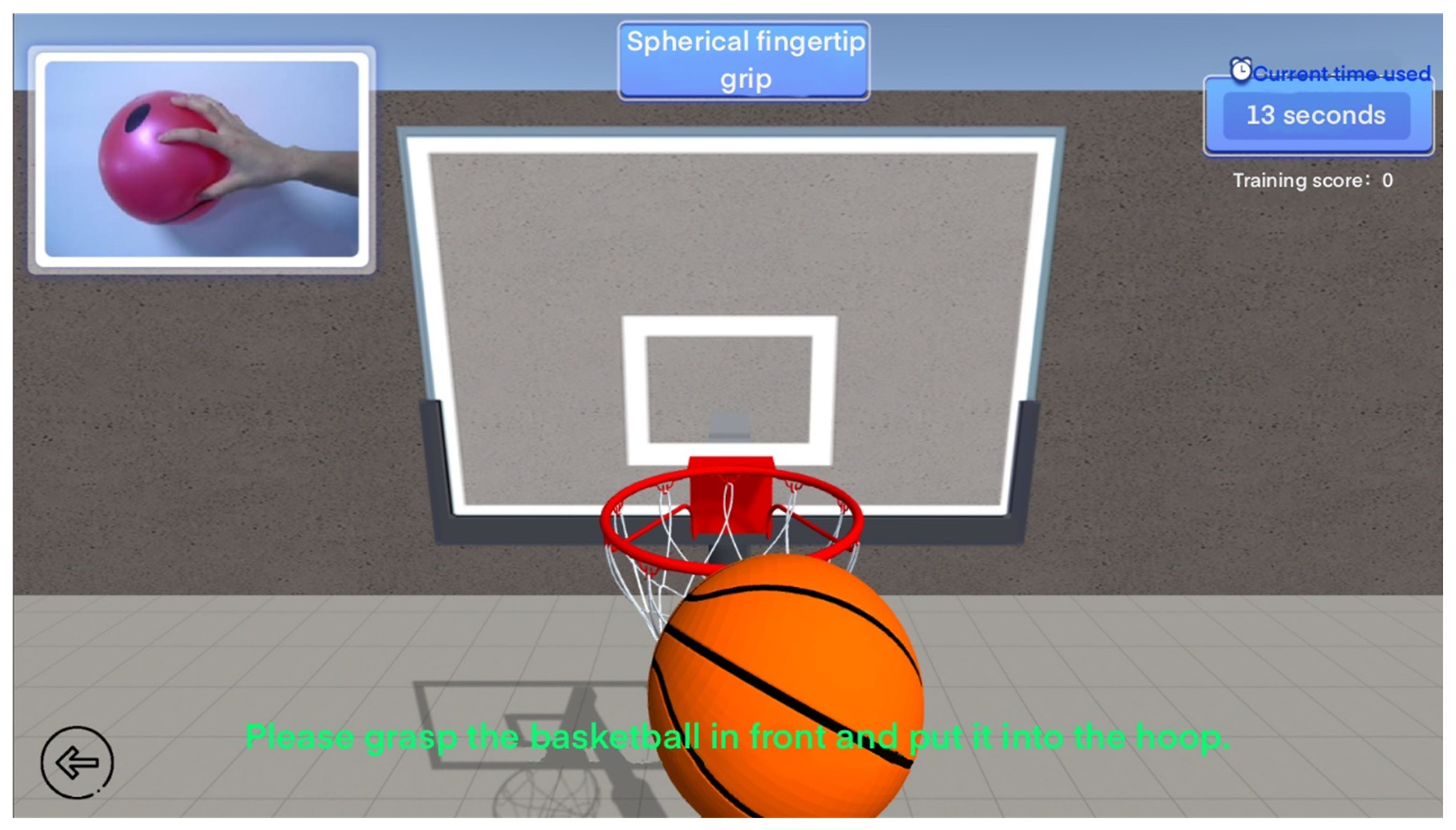

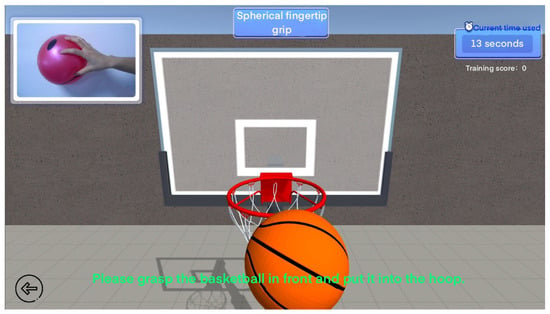

Here, we take the ball-shaped fingertip grip and hitting from the 13 special gesture training scenes as examples. In the ball-shaped fingertip grip scene, the patient makes grasping movements according to the prompts in the scene and puts the basketball into the basket. The more baskets scored within the specified time, the higher the score, as shown in Figure 10. In the special hitting training scene, patients need to perform standard hitting movements and hit the hamsters in the virtual scene with their hands. The more hamsters hit within the specified time, the higher the score, as shown in Figure 11. With this type of entertaining training, patients are less likely to feel bored, and the training data are recorded during the game.

Figure 10.

Ball fingertip grip special training.

Figure 11.

Special hitting training.

3.6. Training Data Collection and Visualization

Upper and Lower Limb Training Data Collection

Kinect can obtain the position coordinates of various joint points of the human body, such as the head, hands, and feet, by processing depth data. Kinect 2.0 can track the skeletons of up to six people simultaneously, with 25 joint points per skeleton.
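One plausible way to turn joint coordinates into a posture measure is to compute the angle at a joint from three tracked points and compare it with a target angle. The sketch below assumes this approach; the accuracy formula used by the system is not specified in the text, so `joint_angle_deg`, `angle_accuracy`, and the tolerance value are hypothetical.

```python
import math
from typing import Tuple

Point3D = Tuple[float, float, float]


def joint_angle_deg(parent: Point3D, joint: Point3D, child: Point3D) -> float:
    """Angle at `joint` (degrees) between segments joint->parent and joint->child."""
    u = tuple(p - j for p, j in zip(parent, joint))
    v = tuple(c - j for c, j in zip(child, joint))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(a * a for a in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("joint positions must be distinct")
    cos_theta = sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def angle_accuracy(measured_deg: float, target_deg: float,
                   tolerance_deg: float = 45.0) -> float:
    """Hypothetical score in [0, 1]: 1 at the target angle, falling
    linearly to 0 once the error reaches the tolerance."""
    error = abs(measured_deg - target_deg)
    return max(0.0, 1.0 - error / tolerance_deg)


# Example: shoulder, elbow, and wrist positions (metres) forming a
# right angle at the elbow.
elbow_angle = joint_angle_deg((0.0, 0.3, 0.0), (0.0, 0.0, 0.0), (0.3, 0.0, 0.0))
score = angle_accuracy(elbow_angle, target_deg=90.0)
```

Working with angles rather than raw positions makes the measure independent of where the patient stands within the 1 m to 3 m range.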

3.7. Hand Training Data Collection

When a hand enters the recognition area of Leap Motion, the device automatically tracks it and outputs a series of data frames that are refreshed in real time. Frame data are the core of Leap Motion. Each frame contains information about the tracked objects, including all hands, fingers, pointables, tools, and gestures, together with their position, velocity, direction, and rotation angle. The hand model generated for each hand includes the thumb, index finger, middle finger, ring finger, little finger, and wrist joint; for each finger, it includes the distal phalanx, middle phalanx, proximal phalanx, and metacarpal. Leap Motion thus simulates the skeletal joints of a real human hand and achieves real-time, fast, and accurate hand tracking by updating this information at every frame.

Here, we take one of the special training scenes in the system, two-fingertip pinching, as an example. We need to judge whether the patient performs the corresponding action and whether it is standard, as shown in Figure 12. To do so, we calculate whether the position of the third knuckle of the patient’s thumb coincides with that of the third knuckle of the other finger. Let the three-dimensional coordinates of the third knuckle of the thumb be A(x1, y1, z1) and those of the third knuckle of the index finger be B(x2, y2, z2). The distance d between points A and B serves as the similarity measure between the thumb and the index finger. Based on the value of d, it can be judged whether the patient’s action is standard.
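The distance d between A and B described above is the Euclidean distance, d = sqrt((x1 − x2)² + (y1 − y2)² + (z1 − z2)²). The sketch below computes it and applies a threshold to decide whether the pinch counts as standard. The text does not state the threshold, so the 20 mm default and the function names are hypothetical.

```python
import math
from typing import Tuple

Point3D = Tuple[float, float, float]


def euclidean_distance(a: Point3D, b: Point3D) -> float:
    """d = sqrt((x1 - x2)^2 + (y1 - y2)^2 + (z1 - z2)^2)."""
    return math.dist(a, b)


def is_standard_pinch(thumb: Point3D, index: Point3D,
                      threshold_mm: float = 20.0) -> bool:
    """Treat the pinch as standard when the two points are no farther
    apart than the threshold. The 20 mm default is a hypothetical
    value, not taken from the paper."""
    return euclidean_distance(thumb, index) <= threshold_mm


# Example with coordinates in millimetres.
thumb_tip = (0.0, 0.0, 0.0)
index_tip = (3.0, 4.0, 0.0)
d = euclidean_distance(thumb_tip, index_tip)
```

In practice the threshold would likely need to be calibrated per patient, since hand size and range of motion vary.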

Figure 12.

Standard movement of pinching with two fingertips.

Visualized Data and Analysis

This system uses a variety of gamification elements to embed rehabilitation training into virtual reality games and presents patients’ performance through game scores, level progress, and similar indicators, thereby stimulating patients’ enthusiasm and participation. It also provides instant feedback, including sound prompts, visual feedback, and vibration feedback, to help patients adjust their movements and maintain correct posture. Finally, the system generates rehabilitation training progress reports so that medical professionals can track the patient’s progress. These reports include graphics, charts, and numerical data.

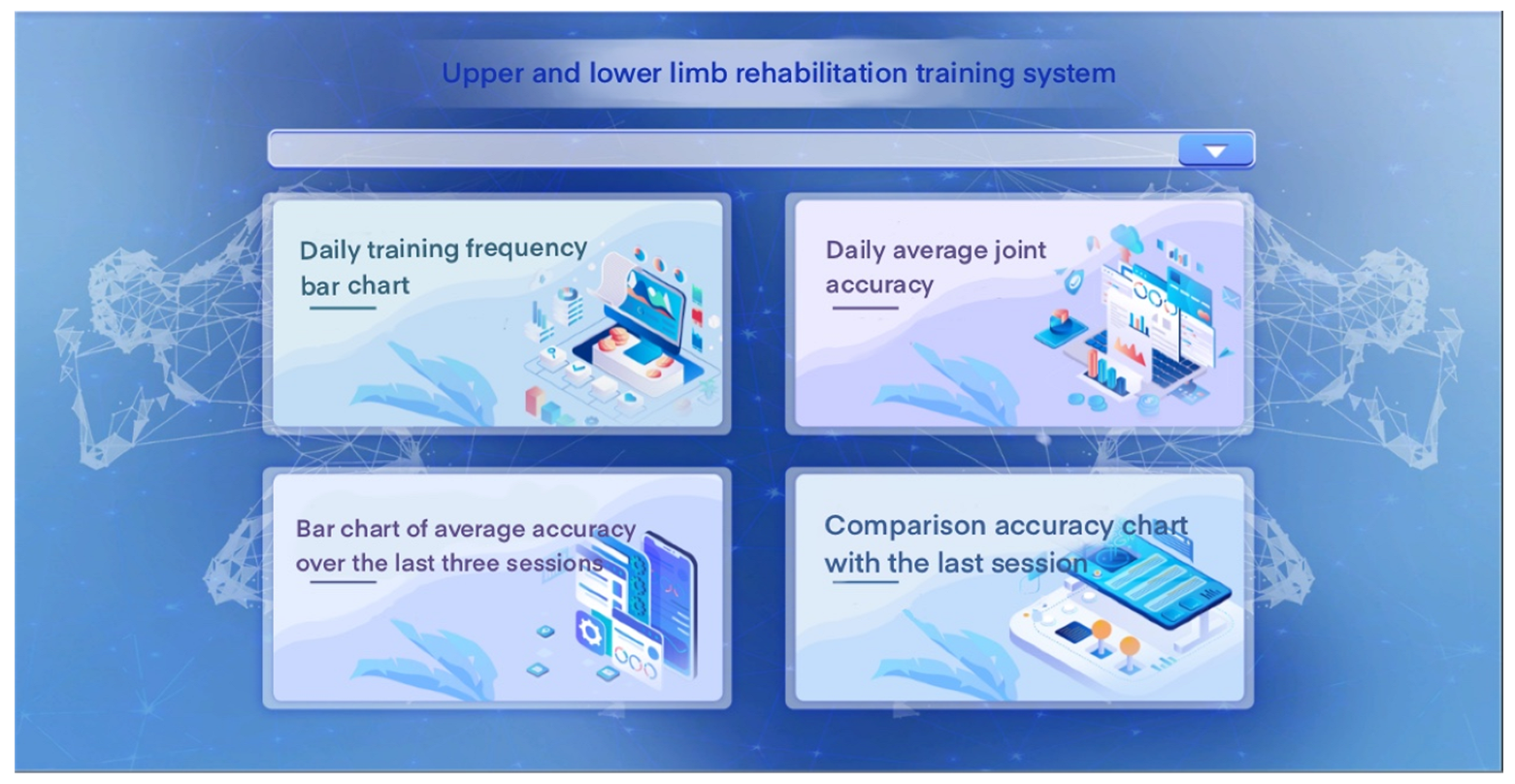

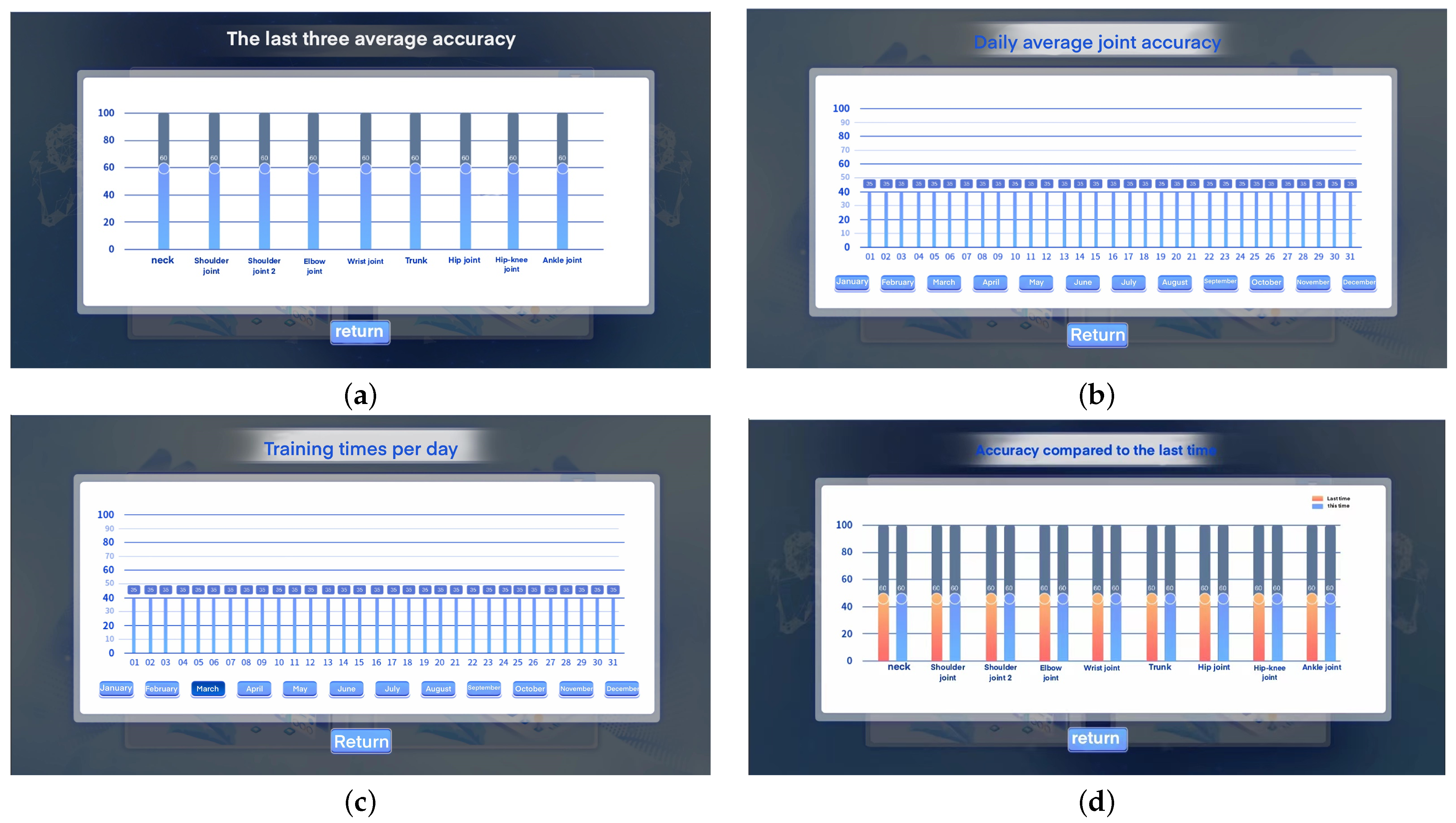

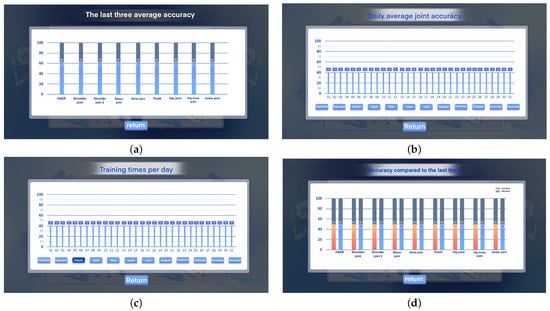

As shown in Figure 13, the system can record the patient’s rehabilitation training data, including a bar graph of the number of daily training sessions, a bar graph of the average joint accuracy of the past three sessions, and a chart comparing the accuracy with the last session. The detailed data display interface is shown in Figure 14. Figure 14a is the interface for recording the average accuracy of the last three rehabilitation training sessions, Figure 14b is the interface for recording the average joint accuracy of daily rehabilitation training, Figure 14c is the interface for recording the number of daily rehabilitation training sessions, and Figure 14d is the interface for comparing the accuracy with the last rehabilitation training session.

Figure 13.

Rehabilitation training visualization data summary.

Figure 14.

Patient rehabilitation training data record: (a) interface for recording the average accuracy of the last three rehabilitation training sessions; (b) interface for recording the average joint accuracy of daily rehabilitation training; (c) interface for recording the number of daily rehabilitation training sessions; (d) interface for comparing the accuracy with the last rehabilitation training session.
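The four statistics presented in these interfaces (number of daily sessions, daily average joint accuracy, average accuracy of the last three sessions, and change from the previous session) could be derived from per-session records along the following lines. This is a minimal sketch under stated assumptions: the record layout, the function names, and the use of a single mean accuracy value per session are all hypothetical, and sessions are assumed to be listed in chronological order.

```python
from collections import defaultdict
from datetime import date
from statistics import mean
from typing import Dict, List, Optional, Tuple

# Each record: (day of the session, mean joint accuracy of that session in [0, 1]).
Session = Tuple[date, float]


def daily_session_counts(sessions: List[Session]) -> Dict[date, int]:
    """Number of training sessions on each day."""
    counts: Dict[date, int] = defaultdict(int)
    for day, _ in sessions:
        counts[day] += 1
    return dict(counts)


def daily_average_accuracy(sessions: List[Session]) -> Dict[date, float]:
    """Mean accuracy over all sessions of each day."""
    by_day: Dict[date, List[float]] = defaultdict(list)
    for day, accuracy in sessions:
        by_day[day].append(accuracy)
    return {day: mean(values) for day, values in by_day.items()}


def average_of_last_three(sessions: List[Session]) -> Optional[float]:
    """Mean accuracy of the most recent three sessions (fewer if not available)."""
    if not sessions:
        return None
    return mean(accuracy for _, accuracy in sessions[-3:])


def change_from_previous(sessions: List[Session]) -> Optional[float]:
    """Accuracy of the latest session minus that of the one before it."""
    if len(sessions) < 2:
        return None
    return sessions[-1][1] - sessions[-2][1]


# Example records in chronological order.
day1, day2 = date(2024, 1, 1), date(2024, 1, 2)
sessions = [(day1, 0.5), (day1, 0.75), (day2, 0.25), (day2, 0.75)]
```

Keeping the raw per-session records and computing the summaries on demand means new statistics can be added later without changing what is stored.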

When the patient’s rehabilitation training data are viewed, the device sends a data request to the cloud service. Here, the daily joint accuracy is taken as an example. See Algorithms 1 and 2.

Algorithm 1. Uploading locally obtained data to the server.

Algorithm 2. Obtaining the daily joint accuracy from the server.

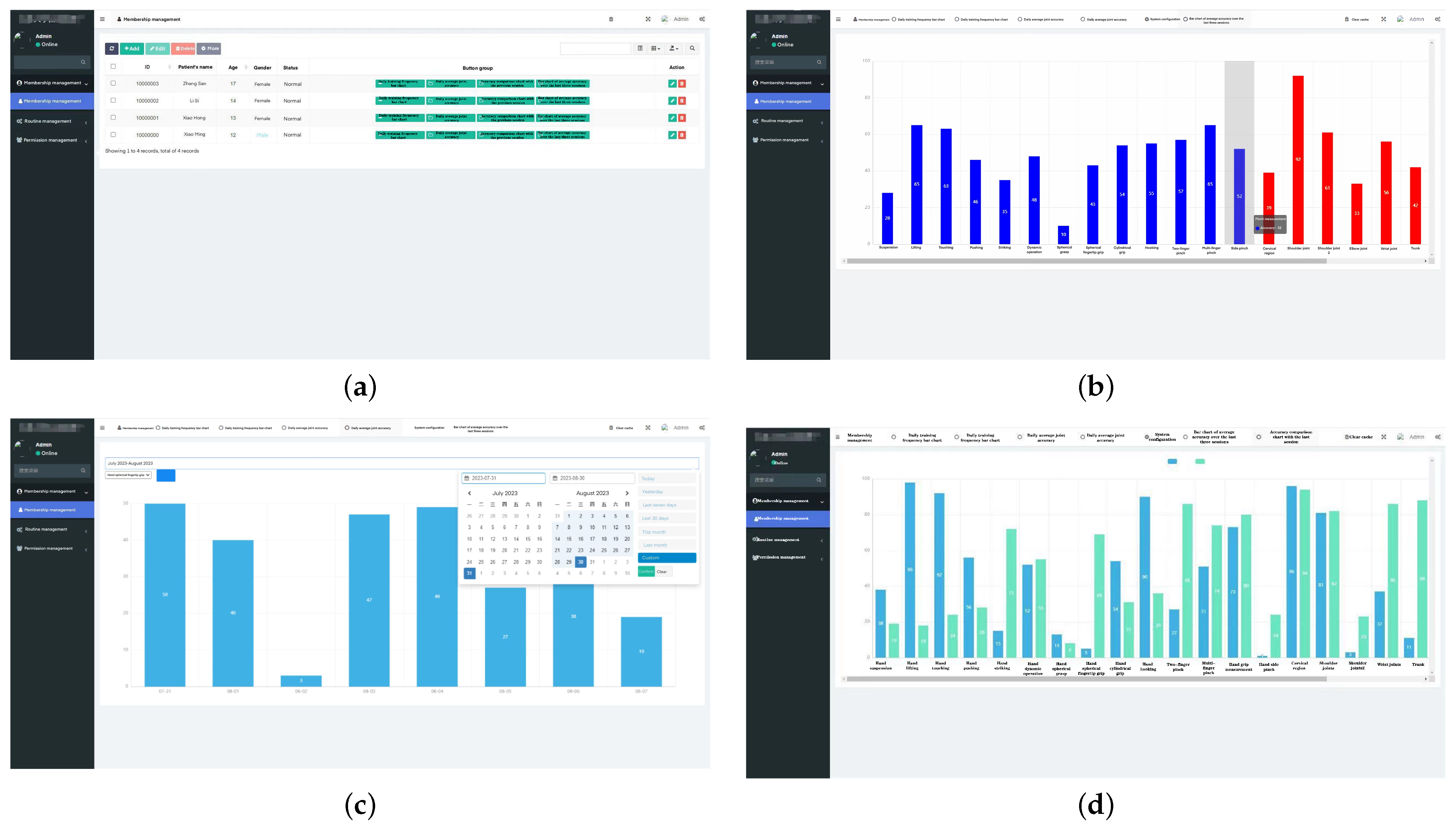

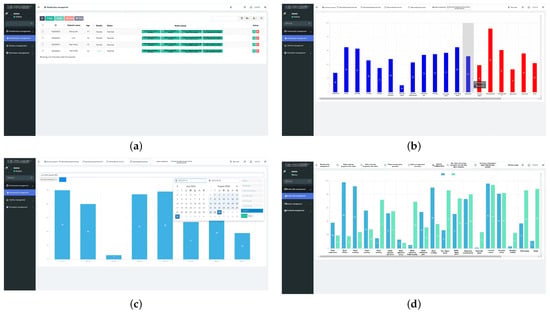

In addition to viewing the patient’s rehabilitation training data on the device, medical staff or patients can view the patient’s detailed rehabilitation data by remotely accessing the server platform, as shown in Figure 15. Figure 15a shows the back-end patient data management interface, Figure 15b shows the back-end patient rehabilitation data interface, Figure 15c shows the back-end detailed patient rehabilitation data interface, and Figure 15d shows the back-end rehabilitation data comparison interface.

Figure 15.

Detailed rehabilitation training data of patients. (a) Back-end patient data management interface. (b) Back-end patient rehabilitation data interface. (c) Back-end detailed patient rehabilitation data interface. (d) Back-end rehabilitation data comparison interface.

4. Experiments

4.1. Participants

To verify the effectiveness of the system, this study recruited 10 patients with moderate burns to participate in the test. Before the test, we explained the research purpose, procedure, and possible risks and benefits to all participants in detail, and each participant signed an informed consent form and agreed to participate in this study. To ensure patient safety and prevent discomfort, we adopted a variety of measures, including gradual training, real-time monitoring, a user-friendly interface, comfort adjustment, safety protocols, and data security protection. Two professional staff members were assigned to guide the participants in using the rehabilitation training system correctly and to provide the necessary training. Participants were divided into two groups. The first was the control group, in which five participants received routine traditional rehabilitation training, upper and lower limb rehabilitation training, and hand rehabilitation training. The second was the observation group, in which the remaining five participants performed rehabilitation training in the virtual scenes designed in this article.

At present, burns are clinically divided into four levels: mild, moderate, severe, and extremely severe. Mild burns refer to a second-degree burn area of less than 10% with no third-degree burn. Moderate burns refer to a second-degree burn area between 10% and 29% or a third-degree burn area of less than 10%. Severe burns refer to a second-degree burn area between 30% and 49%, or a third-degree burn area between 10% and 19%, or a burn area below these thresholds that is accompanied by serious complications (such as shock, respiratory burns, or other organ damage). Extremely severe burns refer to a second-degree burn area greater than 50%, or a third-degree burn area greater than 20%, or a burn that does not meet these area standards but is accompanied by serious complications [30].
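The four-level classification can be written as a small decision function, shown here only as an illustrative sketch. The function name `classify_burn` and its parameters are hypothetical. Two choices are assumptions rather than part of the cited standard: the boundary values of exactly 50% (second-degree) and 20% (third-degree), which the text leaves unassigned, are treated as extremely severe, and a burn below the area thresholds with serious complications is mapped to severe.

```python
def classify_burn(second_degree_pct: float,
                  third_degree_pct: float,
                  serious_complications: bool = False) -> str:
    """Classify a burn by second- and third-degree burn area (percent).

    Assumptions: >= 50% second-degree or >= 20% third-degree counts as
    extremely severe; complications below the area thresholds count as severe.
    """
    if second_degree_pct < 0 or third_degree_pct < 0:
        raise ValueError("burn areas must be non-negative percentages")
    if second_degree_pct >= 50 or third_degree_pct >= 20:
        return "extremely severe"
    if second_degree_pct >= 30 or third_degree_pct >= 10 or serious_complications:
        return "severe"
    if second_degree_pct >= 10 or third_degree_pct > 0:
        return "moderate"
    # Less than 10% second-degree area and no third-degree burn.
    return "mild"


# Example: a 15% second-degree burn without third-degree area or complications.
level = classify_burn(15, 0)
```

Checking the most severe level first keeps each branch a single comparison, because any case that reaches a later branch is already known to be below the earlier thresholds.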

To ensure the validity of the assessment, patients with other lung function problems were excluded, and all participants met the following criteria:

- Meet the standards for moderate burns;

- Cooperate with training and show no resistant behavior during rehabilitation training;

- Good compliance during rehabilitation training and tolerance during training.

Table 1 shows the participant information. Participants were screened from patients recovering from moderate burns, mainly young and middle-aged people between 25 and 45 years old. To eliminate interference from other health conditions, only participants who had no other health conditions and were able to perform rehabilitation training on their own were selected for the experiment.

Table 1.

Participants’ information.

4.2. Experimental Process

After each training session, each participant filled out a questionnaire about their training experience. This scale examines participants’ feelings about training through the following questions [31]:

- Do you feel bored when training?

- During training, are you inattentive or distracted?

- During training, can you keep up with the training rhythm?

Each item in this survey uses a five-point Likert scale [32], with options scored from 1 to 5 and ranging from strongly agree to strongly disagree. The score for each item is multiplied by the corresponding weight to obtain the final score, as shown in Table 2.

Table 2.

Experimental training feeling scale.
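The weighted scoring can be sketched as follows. The item names and the weights are hypothetical placeholders, since the actual weights are those listed in Table 2; the weekly summation is likewise an assumption about how per-session questionnaire scores could be aggregated into weekly totals per question.

```python
from collections import defaultdict

# Hypothetical item names for the three questionnaire questions.
ITEMS = ("bored", "inattentive", "keeps_up")

# Hypothetical weights; the real values are the ones listed in Table 2.
WEIGHTS = {"bored": 2, "inattentive": 1, "keeps_up": 1}


def weighted_item_scores(responses, weights=WEIGHTS):
    """Multiply each 1-5 Likert response by its item weight."""
    scores = {}
    for item in ITEMS:
        value = responses[item]
        if value not in (1, 2, 3, 4, 5):
            raise ValueError(f"{item}: Likert response must be 1-5, got {value}")
        scores[item] = value * weights[item]
    return scores


def weekly_totals(sessions, weights=WEIGHTS):
    """Sum the weighted item scores over all sessions in one week."""
    totals = defaultdict(int)
    for responses in sessions:
        for item, score in weighted_item_scores(responses, weights).items():
            totals[item] += score
    return dict(totals)


# Two questionnaires from one hypothetical participant in the same week.
week = [
    {"bored": 2, "inattentive": 4, "keeps_up": 5},
    {"bored": 3, "inattentive": 4, "keeps_up": 4},
]
session_score = sum(weighted_item_scores(week[0]).values())
totals = weekly_totals(week)
```

Validating the response range before weighting means a mistyped answer raises an error instead of silently distorting the weekly totals.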

5. Results and Analysis

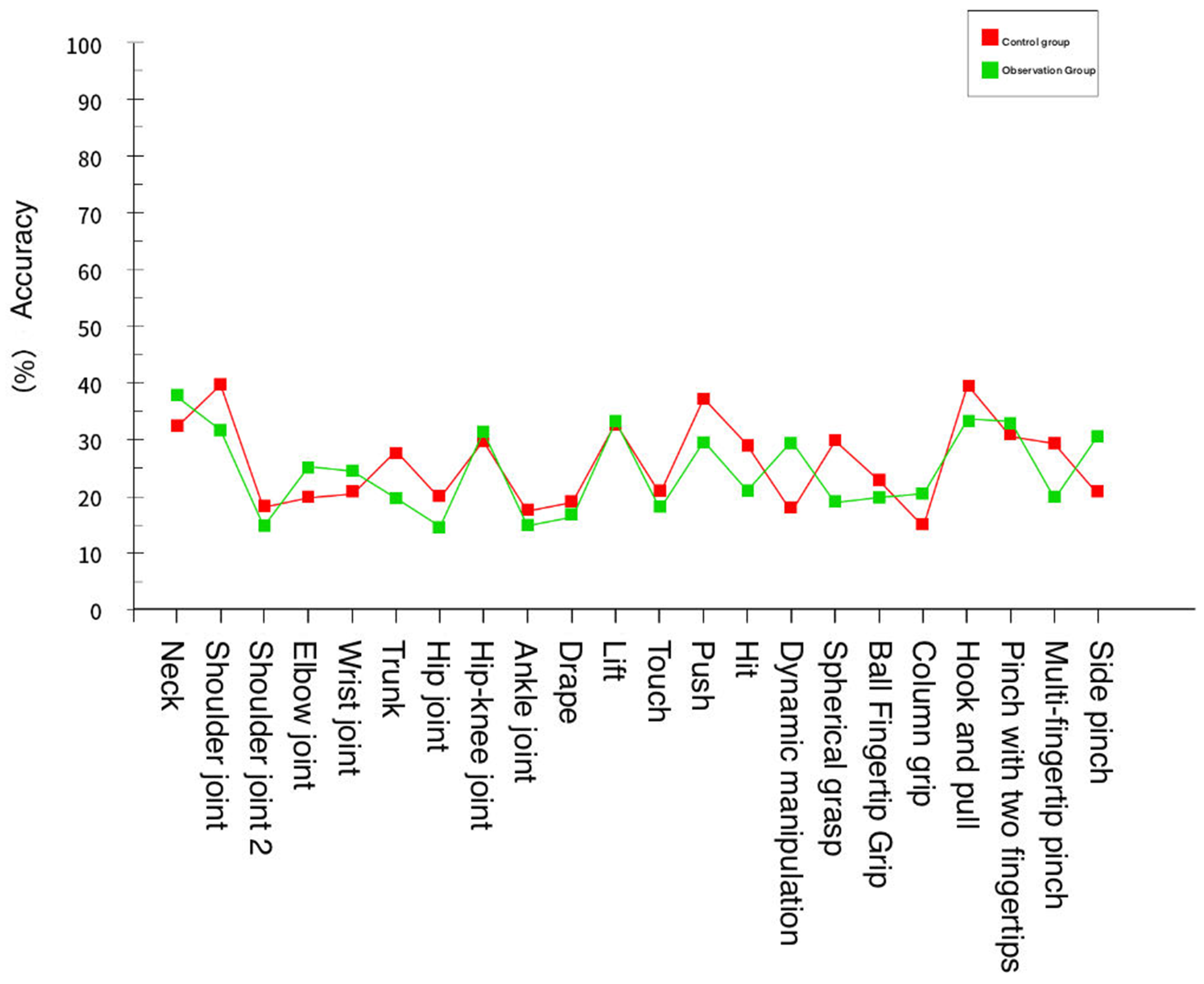

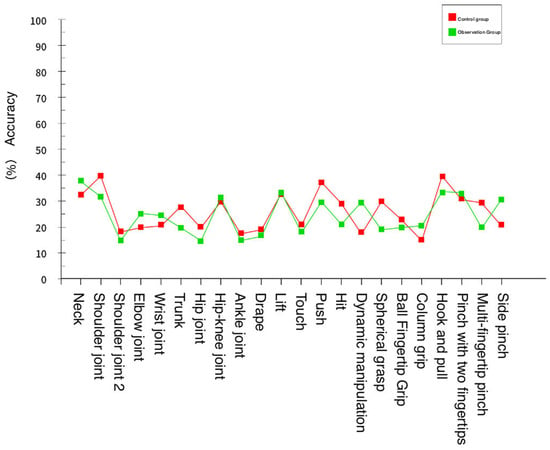

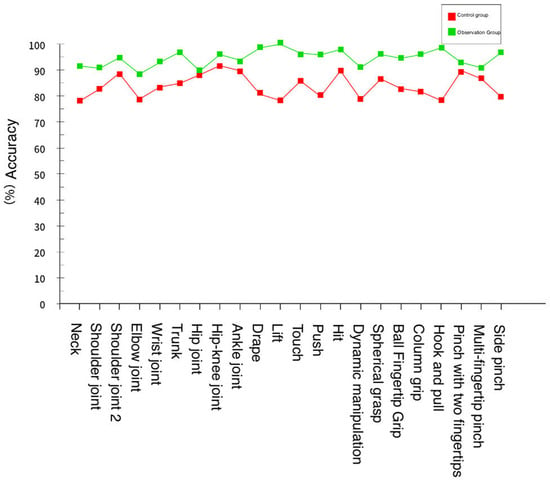

During rehabilitation training, the system recorded the changes in each participant’s rehabilitation data parameters before and after training to measure the effectiveness of the training system. The experiment found that the rehabilitation training effect improved for every participant. Taking the data in Tables 3 and 4 as an example, before either traditional or virtual reality rehabilitation training, the movement accuracy rates of the control group and observation group members were similar, as shown in Figure 16.

Table 3.

Accuracy rate of members in the control group before rehabilitation training.

Table 4.

Accuracy rate of members of the observation group before rehabilitation training.

Figure 16.

Movement standard of the control group and observation group before rehabilitation training.

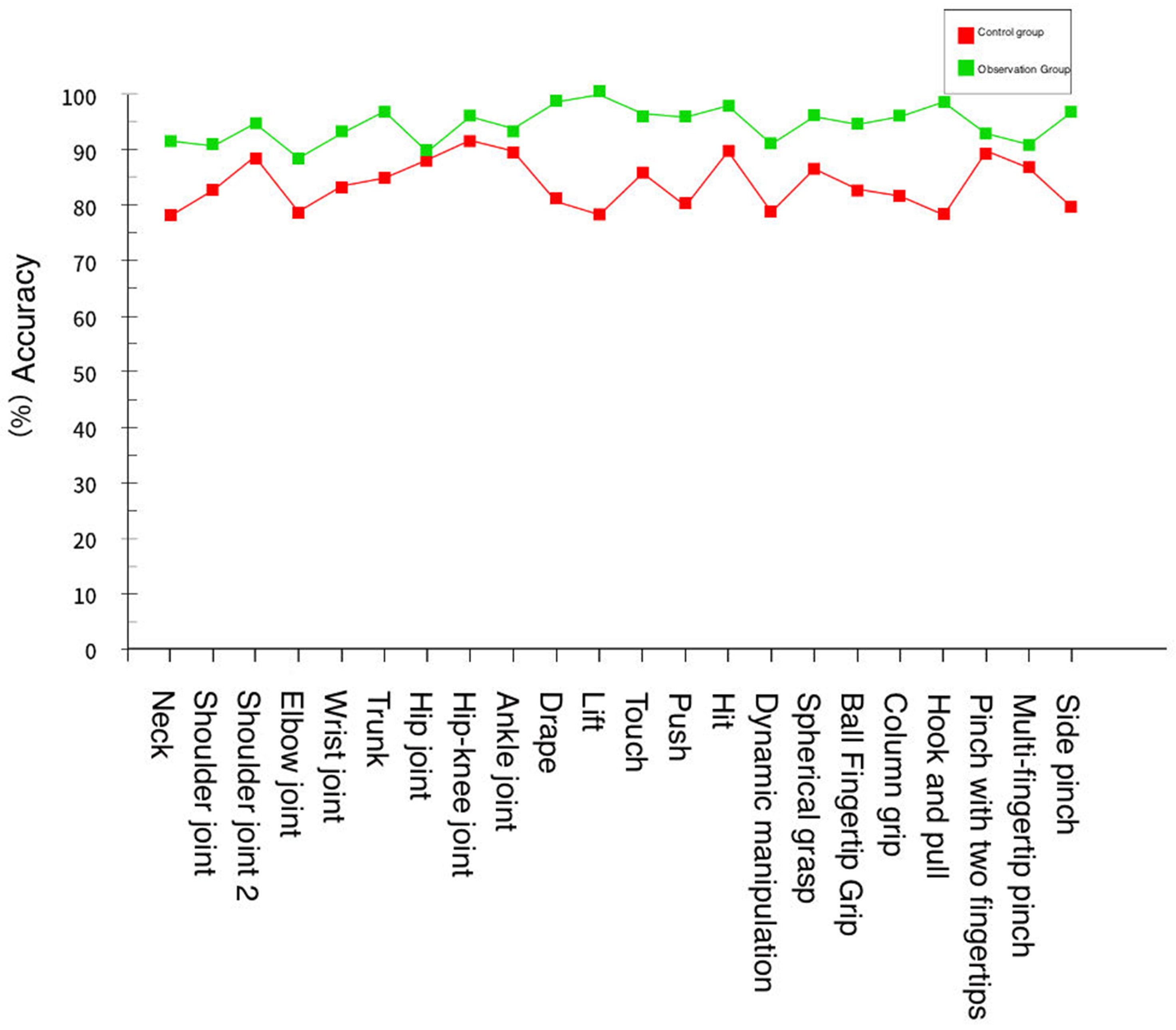

The members of the control group performed traditional one-on-one rehabilitation training under the guidance of a rehabilitation trainer, while the members of the observation group used the virtual reality environment of this system for rehabilitation training. Both groups then took a movement standardization test. The results are shown in Tables 5 and 6.

Table 5.

Accuracy rate of members of the control group after rehabilitation training.

Table 6.

Accuracy rate of members of the observation group after rehabilitation training.

The experimental data show that, after training, the movement accuracy of the observation group members was higher overall than that of the control group members. The comparison of the two groups’ rehabilitation training is shown in Figure 17.

Figure 17.

Movement standard of the control group and observation group after rehabilitation training.

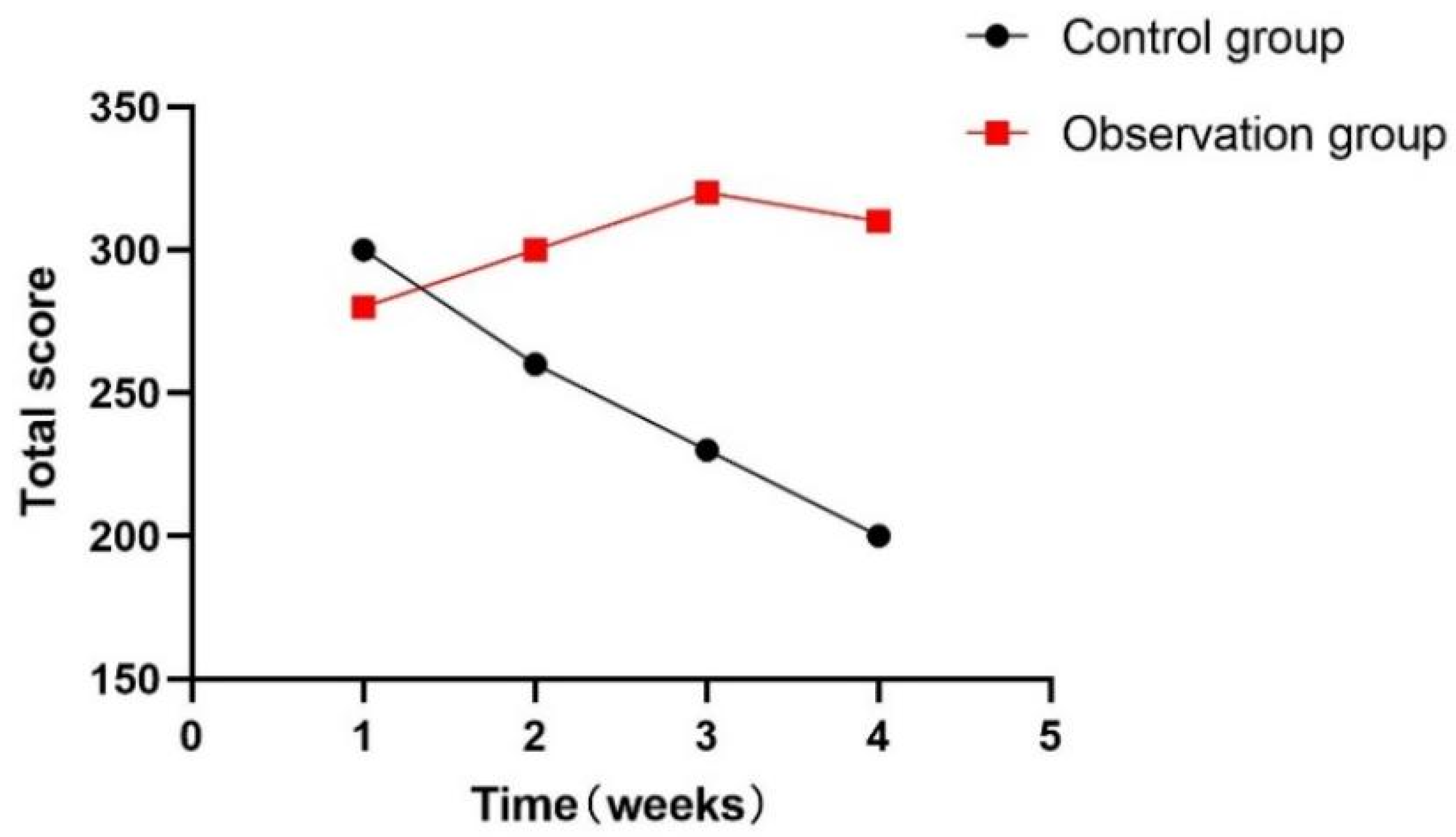

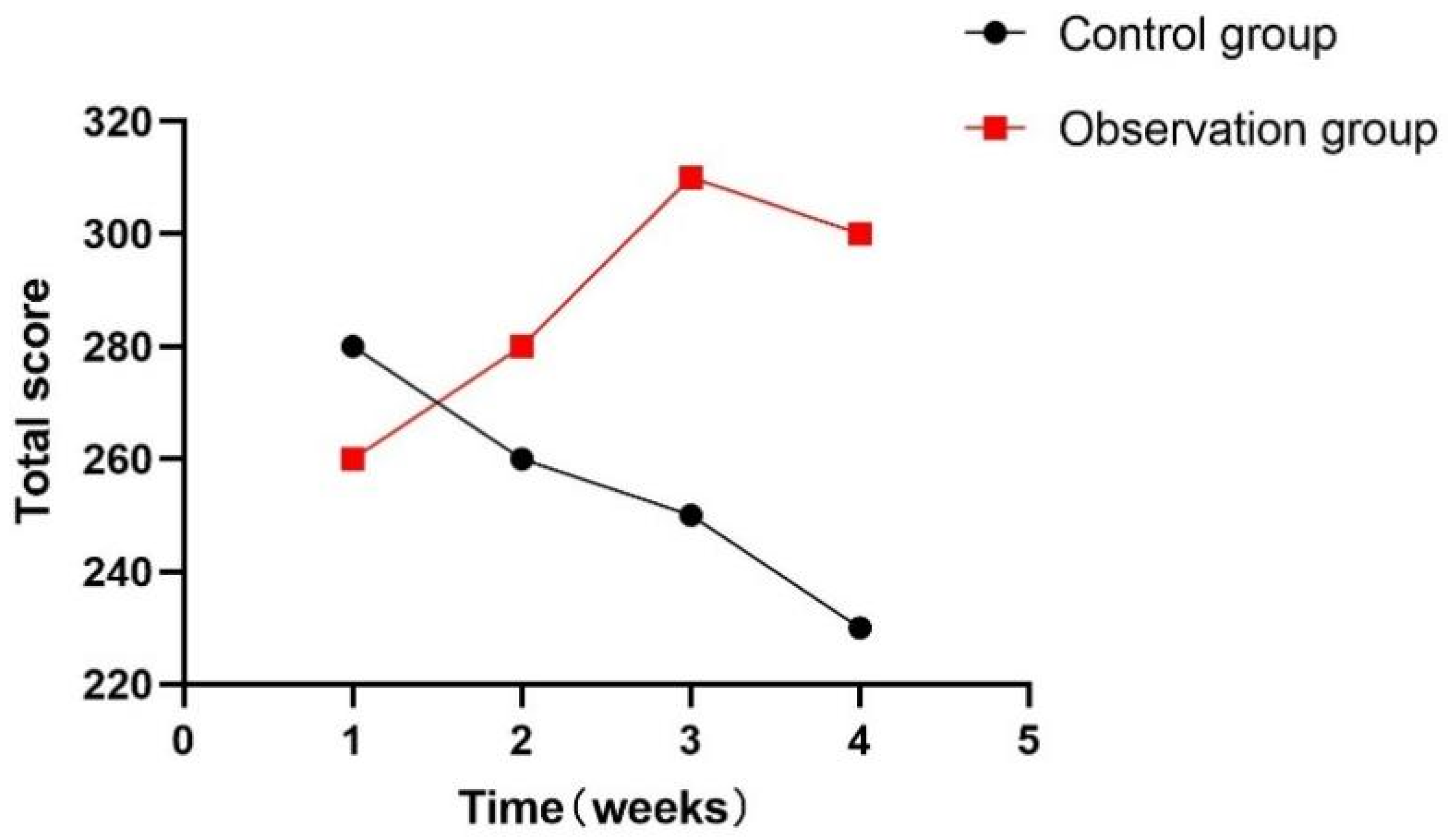

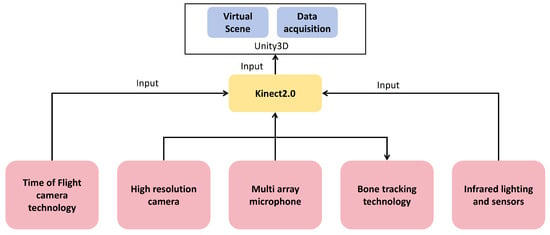

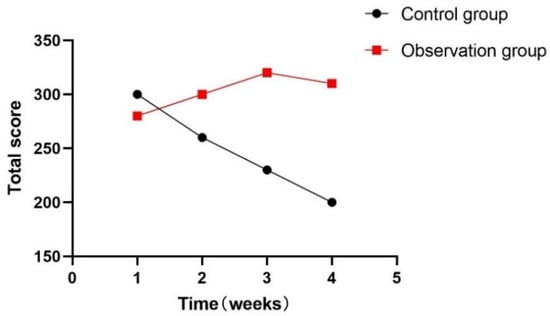

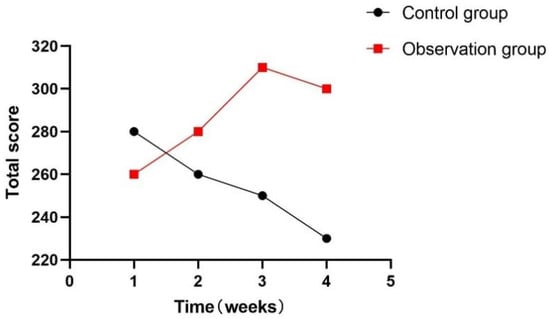

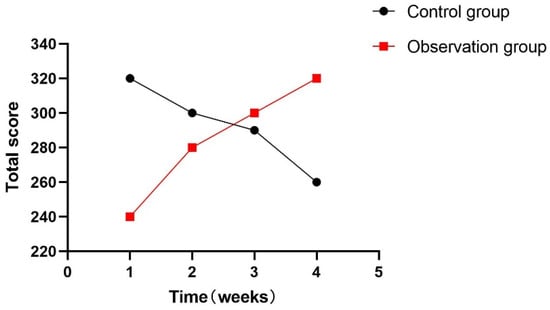

At the same time, this experiment summarized the feelings of each participant during the training process, as shown in Figures 18–20. Over the one-month experiment, patients in the control group showed more problems than patients in the observation group, such as inattention, boredom, and an inability to keep up with the rhythm.

Figure 18.

Total weekly boredom score.

Figure 19.

Total weekly inattention score.

Figure 20.

Total weekly score for not keeping up with the rhythm.

6. Conclusions

Traditional upper and lower limb or hand rehabilitation training requires a rehabilitation trainer to provide one-on-one guidance to the patient on rehabilitation movements. This training is expensive and inconvenient for the patient. In contrast, this article provides an evaluation system for upper and lower limb and hand rehabilitation training based on virtual reality technology. The system combines virtual reality technology with rehabilitation training and makes rehabilitation training data visible and traceable, providing real-time feedback that helps patients better understand and adjust their movements. This timely feedback helps to improve the quality of rehabilitation training.

The purpose of this system is to improve the quality of rehabilitation training for patients with upper and lower limb motor disorders and hand dysfunction, and to realize a more convenient, practical, and engaging self-directed training method. Using the experimental equipment together with the Kinect and Leap Motion sensors, we studied methods for combining human upper and lower limb movements and hand movements with virtual scenes and interacting with them in real time. We analyze the data collected from human movement and use these data to drive changes in the virtual scene, realizing interaction between human movement data and virtual scenes. We use data visualization algorithms to quantify and visualize movement data in order to evaluate the effectiveness of patient rehabilitation training. In the virtual rehabilitation training scenes, engaging elements such as tasks, scores, and plots guide patients to continue rehabilitation training comfortably and thereby complete specific rehabilitation training tasks and goals. The system was evaluated from two aspects: training effect and user experience. A comparison of the experimental results and experiences of the 10 participants shows that, compared with traditional one-on-one guidance by rehabilitation trainers, this system achieves better training effects and experiences, and patients engage in rehabilitation training more actively.

The experimental results show that virtual reality technology can significantly improve the rehabilitation effect in upper and lower limb and hand rehabilitation training. Patients’ rehabilitation training in the virtual simulation environment shows greater participation and enthusiasm. Compared with traditional methods, the rehabilitation effect is more significant. Virtual reality technology injects more entertainment and interactivity into rehabilitation training, thereby significantly enhancing patients’ motivation. Patients are more willing to invest in the virtual simulation environment, making the recovery process more pleasant, and improving treatment compliance. The experimental results support the effectiveness of personalized rehabilitation programs based on virtual reality technology. The system can dynamically adjust rehabilitation training according to the patient’s specific needs and progress, making the treatment closer to the patient’s individual differences and improving the pertinence and effectiveness of the treatment. The real-time feedback provided by the virtual simulation system is crucial to the patient’s rehabilitation process. Through real-time movement monitoring and feedback, this system makes it easier for patients to correct improper movements and improve motor skills, thus accelerating the rehabilitation process.

According to the experimental results, the upper and lower limb rehabilitation training evaluation system based on virtual reality technology has shown clear advantages in many respects, introducing innovative treatment methods into the field of rehabilitation. However, further long-term studies and large-scale applications are still needed to evaluate more fully the long-term effects of the system and long-term patient acceptance.

7. Limitations and Challenges

Although virtual reality technology has shown great potential in rehabilitation training, its high cost and technical complexity may indeed hinder the widespread application of this technology, especially in resource-limited environments.

Doctors suggest that future research should focus more on individual differences and develop personalized adaptive training programs to improve patient acceptance and compliance. Additionally, doctors emphasize the importance of multidisciplinary collaboration, including cooperation among physicians, physical therapists, engineers, and patients, to ensure that the system’s development meets actual clinical needs and the personal preferences of patients. They also recommend conducting long-term follow-up studies to evaluate the system’s impact on patients’ long-term rehabilitation and whether it can continuously improve patients’ functional status.

In response to the challenges posed by high costs, technical complexity, and patient adaptability in virtual reality technology for rehabilitation training, we are committed to reducing costs and simplifying technical operations to enhance the system’s accessibility. Based on doctors’ recommendations, we will develop personalized rehabilitation plans to meet the specific needs of different patients, thereby improving their acceptance and compliance. We will also pay close attention to data security and privacy protection issues, ensuring that effective measures are taken to safeguard patients’ personal information. With the continuous advancement of technology, we will continue to conduct research to assess the long-term effects of virtual reality technology in the field of rehabilitation, address existing shortcomings, and ensure that our system can provide long-term and effective rehabilitation support for patients.

We believe that by addressing these limitations and undertaking future work, the virtual reality-based upper and lower limb training assessment system will bring greater value to the field of rehabilitation medicine and provide higher-quality rehabilitation services for patients.

Author Contributions

Conceptualization, H.G.; methodology, H.G.; software, C.Y.; validation, H.G., M.L. and C.Y.; formal analysis, H.G.; investigation, Z.D.; resources, J.Z.; data curation, L.S.; writing—original draft preparation, H.G.; writing—review and editing, J.Z. and Z.K.; visualization, H.G.; supervision, J.Z. and Z.K.; project administration, J.Z.; funding acquisition, J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Jilin Provincial Department of Science and Technology (Grant/Award Number: Nos. YDZJ202301ZYTS496, 20230401092YY) and The Education Department of Jilin Province (Nos. JJKH20230673KJ, JKH20230680KJ).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Beretta, E.; Cesareo, A.; Biffi, E.; Schafer, C.; Galbiati, S.; Strazzer, S. Rehabilitation of upper limb in children with acquired brain injury: A preliminary comparative study. J. Healthc. Eng. 2018, 2018, 4208492.

- Lannin, N.A.; McCluskey, A. A systematic review of upper limb rehabilitation for adults with traumatic brain injury. Brain Impair. 2008, 9, 237–246.

- Qi, M.; Hu, X.; Li, X.; Wang, X.; Shi, X. Analysis of road traffic injuries and casualties in China: A ten-year nationwide longitudinal study. PeerJ 2022, 10, e14046.

- Chheang, V.; Lokesh, R.; Chaudhari, A.; Wang, Q.; Baron, L.; Kiafar, B.; Doshi, S.; Thostenson, E.; Cashaback, J.; Barmaki, R.L. Immersive Virtual Reality and Robotics for Upper Extremity Rehabilitation. arXiv 2023, arXiv:2304.11110.

- Taveggia, G.; Borboni, A.; Salvi, L.; Mulé, C.; Fogliaresi, S.; Villafañe, J.H.; Casale, R. Efficacy of robot-assisted rehabilitation for the functional recovery of the upper limb in post-stroke patients: A randomized controlled study. Eur. J. Phys. Rehabil. Med. 2016, 52, 767–773.

- Levac, D.; Colquhoun, H.; O’Brien, K.K. Scoping studies: Advancing the methodology. Implement. Sci. 2010, 5, 1–9.

- Merians, A.S.; Jack, D.; Boian, R.; Tremaine, M.; Burdea, G.C.; Adamovich, S.V.; Recce, M.; Poizner, H. Virtual reality–Augmented rehabilitation for patients following stroke. Phys. Ther. 2002, 82, 898–915.

- Lohse, K.R.; Lang, C.E.; Boyd, L.A. Is more better? Using metadata to explore dose–response relationships in stroke rehabilitation. Stroke 2014, 45, 2053–2058.

- Crotty, M.; Laver, K.; Schoene, D.; George, S.; Lannin, N.; Sherrington, C.; Na, L. Telerehabilitation services for stroke. Cochrane Database Syst. Rev. 2013, 12, 12.

- Guitar, N.A.; Connelly, D.M.; Nagamatsu, L.S.; Orange, J.B.; Muir-Hunter, S.W. The effects of physical exercise on executive function in community-dwelling older adults living with Alzheimer’s-type dementia: A systematic review. Ageing Res. Rev. 2018, 47, 159–167.

- Laver, K. Virtual reality for stroke rehabilitation. In Virtual Reality in Health and Rehabilitation; CRC Press: Boca Raton, FL, USA, 2020; pp. 19–28.

- Holden, M.K. Virtual environments for motor rehabilitation. Cyberpsychology Behav. 2005, 8, 187–211.

- Deutsch, J.E.; Borbely, M.; Filler, J.; Huhn, K.; Guarrera-Bowlby, P. Use of a low-cost, commercially available gaming console (Wii) for rehabilitation of an adolescent with cerebral palsy. Phys. Ther. 2008, 88, 1196–1207.

- Cameirão, M.S.; Badia, S.B.I.; Oller, E.D.; Verschure, P.F. Neurorehabilitation using the virtual reality based Rehabilitation Gaming System: Methodology, design, psychometrics, usability and validation. J. Neuroeng. Rehabil. 2010, 7, 48.

- da Silva Cameirão, M.; Bermudez i Badia, S.; Duarte, E.; Verschure, P.F. Virtual reality based rehabilitation speeds up functional recovery of the upper extremities after stroke: A randomized controlled pilot study in the acute phase of stroke using the rehabilitation gaming system. Restor. Neurol. Neurosci. 2011, 29, 287–298.

- Lange, B.; Koenig, S.; Chang, C.Y.; McConnell, E.; Suma, E.; Bolas, M.; Rizzo, A. Designing informed game-based rehabilitation tasks leveraging advances in virtual reality. Disabil. Rehabil. 2012, 34, 1863–1870.

- Lloréns, R.; Alcañiz, M.; Colomer, C.; Navarro, M.D. Balance recovery through virtual stepping exercises using Kinect skeleton tracking: A follow-up study with chronic stroke patients. In Annual Review of Cybertherapy and Telemedicine 2012; IOS Press: Amsterdam, The Netherlands, 2012; pp. 108–112.

- Sveistrup, H. Motor rehabilitation using virtual reality. J. Neuroeng. Rehabil. 2004, 1, 10.

- Khokale, R.; Mathew, G.S.; Ahmed, S.; Maheen, S.; Fawad, M.; Bandaru, P.; Zerin, A.; Nazir, Z.; Khawaja, I.; Sharif, I.; et al. Virtual and augmented reality in post-stroke rehabilitation: A narrative review. Cureus 2023, 15, e37559.

- Moreira, M.; Marques, A.; Vilaça, J.L.; Carvalho, V.; Duque, D. A Review on Full Immersive Head-Mounted Virtual Reality Assisted Physiotherapy in Upper Limbs Rehabilitation. In Proceedings of the 2023 IEEE 11th International Conference on Serious Games and Applications for Health (SeGAH), Athens, Greece, 28–30 August 2023; pp. 1–7.

- Maggio, M.G.; Cezar, R.P.; Milardi, D.; Borzelli, D.; De Marchis, C.; D’avella, A.; Quartarone, A.; Calabrò, R.S. Do patients with neurological disorders benefit from immersive virtual reality? A scoping review on the emerging use of the computer-assisted rehabilitation environment. Eur. J. Phys. Rehabil. Med. 2024, 60, 37.

- Brady, N.; Dejaco, B.; Lewis, J.; McCreesh, K.; McVeigh, J.G. Physiotherapist beliefs and perspectives on virtual reality supported rehabilitation for the management of musculoskeletal shoulder pain: A focus group study. PLoS ONE 2023, 18, e0284445.

- Sarajchi, M.; Sirlantzis, K. Pediatric Robotic Lower-Limb Exoskeleton: An Innovative Design and Kinematic Analysis. IEEE Access 2023, 11, 115219–115230.

- Oblak, J.; Cikajlo, I.; Matjačić, Z. Universal haptic drive: A robot for arm and wrist rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 2009, 18, 293–302.

- Prochnow, D.; Bermúdez i Badia, S.; Schmidt, J.; Duff, A.; Brunheim, S.; Kleiser, R.; Seitz, R.; Verschure, P. A functional magnetic resonance imaging study of visuomotor processing in a virtual reality-based paradigm: Rehabilitation Gaming System. Eur. J. Neurosci. 2013, 37, 1441–1447.

- Edwards, D.; Kumar, S.; Brinkman, L.; Ferreira, I.C.; Esquenazi, A.; Nguyen, T.; Su, M.; Stein, S.; May, J.; Hendrix, A.; et al. Telerehabilitation initiated early in post-stroke recovery: A feasibility study. Neurorehabilit. Neural Repair 2023, 37, 131–141.

- Diener, J.; Rayling, S.; Bezold, J.; Krell-Roesch, J.; Woll, A.; Wunsch, K. Effectiveness and acceptability of e-and m-health interventions to promote physical activity and prevent falls in nursing homes—A systematic review. Front. Physiol. 2022, 13, 894397.

- Simkins, M.; Fedulow, I.; Kim, H.; Abrams, G.; Byl, N.; Rosen, J. Robotic rehabilitation game design for chronic stroke. Games Heal. Res. Dev. Clin. Appl. 2012, 1, 422–430.

- Shotton, J.; Fitzgibbon, A.; Cook, M.; Sharp, T.; Finocchio, M.; Moore, R.; Kipman, A.; Blake, A. Real-time human pose recognition in parts from single depth images. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1297–1304.

- Żwierełło, W.; Piorun, K.; Skórka-Majewicz, M.; Maruszewska, A.; Antoniewski, J.; Gutowska, I. Burns: Classification, pathophysiology, and treatment: A review. Int. J. Mol. Sci. 2023, 24, 3749.

- Shi, L.; Liu, F.; Liu, Y.; Wang, R.; Zhang, J.; Zhao, Z.; Zhao, J. Biofeedback Respiratory Rehabilitation Training System Based on Virtual Reality Technology. Sensors 2023, 23, 9025.

- DeVellis, R.F.; Thorpe, C.T. Scale Development: Theory and Applications; Sage Publications: Washington DC, USA, 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).