Abstract

The choice of training target is an important consideration when training deep neural networks for speech enhancement. Different masks have been shown to exhibit different performance characteristics depending on the application and the conditions. This paper presents a comprehensive comparison of several masks for noise reduction in cochlear implants. The study incorporated three well-known masks, namely the Ideal Binary Mask (IBM), Ideal Ratio Mask (IRM), and Fast Fourier Transform Mask (FFTM), as well as two newly proposed masks derived from existing ones, called the Quantized Mask (QM) and the Phase-Sensitive plus Ideal Ratio Mask (PSM+). These five masks are used as targets to train networks that estimate masks for separating speech from noisy mixtures. A vocoder was used to simulate the behavior of a cochlear implant. Short-Time Objective Intelligibility (STOI) and Perceptual Evaluation of Speech Quality (PESQ) scores indicate that the two new masks (QM and PSM+) perform best for the intelligibility and quality of normal (unvocoded) speech in the presence of stationary and non-stationary noise over a range of signal-to-noise ratios (SNRs). The Normalized Covariance Measure (NCM) and similarity scores indicate that they also perform best in terms of the intelligibility and similarity of vocoded speech. The QM performs better than the IBM because its finer resolution more closely approximates the Wiener Gain Function. The PSM+ performs better than the three existing benchmark masks (IBM, IRM, and FFTM) because it incorporates both magnitude and phase information.

1. Introduction

Typically, in a mask-based supervised speech separation system, acoustic features form the input to a learning machine and a mask is its output, with a suitable target mask used during training. Such a system can perform speech enhancement (otherwise known as noise reduction) in a range of applications. The application of interest in this paper is the cochlear implant, a prosthesis used to restore hearing to people with hearing loss caused by damage to the hair cells in the cochlea. In quiet environments, cochlear implant users can understand most of what a speaker says; that is, their speech intelligibility is high. However, they struggle to understand what is being said when there is interfering background noise. Previous studies have applied neural networks [1,2] and recurrent neural networks [3] to improve speech intelligibility in noise for users of cochlear implants.

The works of Chen and Wang [4,5] provide a good overview of DNN-based mask estimation for supervised speech separation. They introduce masks such as the Ideal Binary Mask (IBM), Target Binary Mask (TBM), Ideal Ratio Mask (IRM), Fast Fourier Transform Magnitude (FFT-MAG), Gammatone Frequency Power Spectrum (GF-POW), Phase-Sensitive Ideal Ratio Mask (PSIRM), and Complex Ideal Ratio Mask (cIRM). Michelsanti et al. [6] also provide an overview of deep-learning-based audiovisual speech enhancement and separation. When dealing with training targets, they discuss direct mapping, mask approximation, and indirect mapping. Wang [7] proposed a deep neural network for time-domain signal reconstruction, and in that study, both the IBM and the IRM were utilized. Nossier et al. [8] performed a comparison of mapping and masking targets (including some of the aforementioned masks) for various deep-learning-based speech enhancement architectures. Samui et al. [9] employed the IRM, PSM (Phase-Sensitive Mask), and cIRM when performing speech enhancement using a fuzzy deep belief network.

While previous work has evaluated a wide variety of masks based on both magnitude and phase information, there is scope for further improvement. This paper extends that work by proposing two new masks: the first is an adaptation of the IBM/IRM and therefore relies primarily on magnitude information, while the second is an adaptation of the PSM that also makes use of phase information. The paper also reports an experimental study that compares these new masks with benchmark masks from the literature.

This paper is organized as follows. The literature pertaining to previous work in the field of binary, ratio, and phase mask estimation and speech enhancement is summarized in Section 2. Section 3 outlines the mathematical definitions of existing masks and new masks proposed in this study. Section 4 covers the methodology for this study, including the dataset used; the masks under evaluation, including the newly proposed masks; the machine learning architecture used; the cochlear implant simulation; and the metrics used for predicting intelligibility, quality, and similarity. The experimental results are contained in Section 5. Section 6 discusses the ensuing results and Section 7 makes some concluding remarks.

The key contributions of this paper are as follows:

- It proposes the use of a new Quantized Mask (QM), which is an approximation of the Wiener Gain Function (WGF), for the application of speech enhancement in cochlear implants;

- It also proposes another new mask called the PSM+, which is a hybrid of the PSM and the IRM for the same purpose;

- It compares the performance of these masks to other well-established masks (e.g., IBM, IRM, and FFTM) in terms of speech intelligibility and quality for normal hearing as well as speech intelligibility and similarity for hearing impairment.

2. Background

A mask is essentially a two-dimensional array of values indexed by time and frequency: one dimension spans the overlapping time frames of a signal and the other spans the frequency components of each frame. This is referred to as the time–frequency representation of a signal, and the entries in the array are referred to as time–frequency units. According to Lee et al. [10], there are two main learning schemes for time–frequency masks, namely mask approximation (MA) and spectra approximation (SA). The former minimizes the error between the estimated and target masks, while the latter minimizes the distortion between the masked spectrum and the clean spectrum.
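As a minimal sketch of how such a time–frequency array can be obtained, the NumPy code below splits a signal into overlapping Hanning-windowed frames and takes the FFT of each one, so rows are time frames and columns are frequency bins. The 20 ms window with 50% overlap at 16 kHz mirrors the analysis settings given in Section 4.3; the function name `stft_tf_units` is hypothetical, and a practical system would normally use a library STFT.

```python
import numpy as np

def stft_tf_units(x, fs=16000, frame_ms=20.0, overlap=0.5):
    """Complex time-frequency array of shape (num_frames, num_bins).

    Rows index overlapping Hanning-windowed frames; columns index FFT bins.
    """
    x = np.asarray(x, dtype=float)
    frame_len = int(round(fs * frame_ms / 1000.0))   # 320 samples at 16 kHz
    hop = int(round(frame_len * (1.0 - overlap)))    # 160 samples for 50% overlap
    if len(x) < frame_len:
        raise ValueError("signal shorter than one frame")
    window = np.hanning(frame_len)
    num_frames = 1 + (len(x) - frame_len) // hop
    frames = np.stack([x[m * hop:m * hop + frame_len] * window
                       for m in range(num_frames)])
    return np.fft.rfft(frames, axis=1)               # frame_len // 2 + 1 bins

rng = np.random.default_rng(0)
Y = stft_tf_units(rng.standard_normal(16000))        # one second of noise
print(Y.shape)                                       # (99, 161)
```

Each entry `Y[m, k]` is one time–frequency unit; the masks in Section 3 are computed per entry from arrays of this shape.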

A widely used mask in speech enhancement is the Ideal Binary Mask (IBM). Heymann et al. [11] performed neural-network-based spectral mask estimation for beamforming using this mask. In their work, they defined both an ideal binary mask for noise (IBMN) as well as an ideal binary mask for the target signal (IBMX). Kjems et al. [12] investigated the role of the mask pattern in the intelligibility of ideal binary-masked speech. They also defined the target binary mask (TBM) as that obtained by comparing the target energy to that of a speech-shaped noise (SSN) reference signal matching the long-term spectrum of the target speaker in each time–frequency (T-F) unit.

Others have focused on ratio masks and adaptations thereof. Abdullah et al. [13] employed a Quantized Correlation Mask to improve the efficiency of a DNN-based speech enhancement system. This is a correlation-based learning target where the IRM is optimized by adjusting inter-channel correlation factors. Bao and Abulla [14] also exploited normalized cross correlation (NCCC) when developing a noise masking method based on estimating a ratio mask using channels based on the Gammatone frequency scale. Previous work in the area of masking and correlation can be found in the work of Bao et al. [15,16]. More recently, Lang and Yang [17] trained a Corrected Ratio Mask (CRM) with deep neural networks for echo cancellation in laser monitoring signals.

Much of the work using deep learning networks for speech enhancement has focused on estimating the magnitude spectrum while reusing the phase of the noisy speech for resynthesis. However, some studies have also included phase-related information when generating masks. Choi et al. [18] used phase information and proposed a polar-coordinate-wise complex-valued masking method to implement the Complex Ideal Ratio Mask. The phase-sensitive filter (mask) was employed by Erdogan et al. [19] to perform speech separation using deep recurrent neural networks. Hasannezhad et al. [20] performed speech enhancement by estimating a phase-sensitive mask using a novel hybrid neural network. Lee et al. [10] proposed a bounded approximation of the Phase-Sensitive Mask (PSM). They then used a joint learning algorithm that trains the approximated value through its parameterized variables of speech magnitude spectra, noise magnitude spectra, and the phase difference between clean and noisy spectra. Finally, they used a warping function to control the dynamic range of the magnitude spectra. Li et al. [21] compared the PSM to the IRM and obtained improved speech enhancement performance by using a relative loss that focuses on smaller values.

Mayer et al. [22] also considered the impact of phase estimation when using time–frequency masking to perform single-channel speech separation. Ouyang et al. [23] used a fully convolutional neural network to demonstrate that complex spectrogram processing is effective for phase estimation, resulting in improved reconstruction of clean female speech. Tan and Wang [24] proposed a convolutional recurrent network (CRN) for complex spectral mapping in speech enhancement. Complex spectral mapping estimates the real and imaginary spectrograms of clean speech from those of noisy speech, with the aim of enhancing both the magnitude and phase responses of the noisy speech. Routray and Mao [25] proposed an architecture combining the PSM with a conditional generative adversarial network (cGAN) and showed that it performed better than other baseline networks in terms of speech quality and intelligibility. Wang and Bao [26] also incorporated phase information when estimating masks for speech enhancement.

Zhang et al. [27] proposed a time–frequency attention (TTF) module and used a residual temporal convolutional network (resTCN) to perform monaural speech enhancement with IRM and PSM training targets. Zheng et al. [28] also proposed phase-aware speech enhancement using a deep neural network. During training, they transformed the unstructured phase spectrogram into its derivative with respect to time, known as the instantaneous frequency deviation (IFD). In testing, a post-processing method was used to recover the phase spectrogram from the estimated IFD. Sivapatham et al. [29,30] combined phase and correlation information in a mask to improve speech intelligibility with a deep neural network.

In this paper, perfect masks that are generated to investigate their oracle performance will be referred to as ideal masks. These masks are not estimated and are generated with perfect knowledge of the clean speech signal and noise. Masks that are generated using a neural network will be referred to as estimated masks.

3. Masks

A number of masks feature prominently in the existing literature. Five such masks are defined in Section 3.1, namely the IBM, IRM, FFTM, PSM (ORM), and cIRM. The two masks proposed in this study, the QM and PSM+, are defined in Section 3.2. Some visual examples of the masks are provided in Section 3.3.

3.1. Existing Masks

The first mask considered is the well-established Ideal Binary Mask (IBM) [31], which is defined in Equation (1) as follows:

$$\mathrm{IBM}(t,f) = \begin{cases} 1, & \text{if } \mathrm{SNR}(t,f) > \mathrm{LC} \\ 0, & \text{otherwise} \end{cases} \tag{1}$$

Here, SNR(t,f) is the local signal-to-noise ratio within a time–frequency unit. To preserve sufficient intelligibility, the local criterion (LC) is usually chosen to be 5 dB lower than the SNR of the mixture [32]. The IBM is referred to as a hard mask because it takes only the values 0 and 1.
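A per-unit sketch of the IBM decision is given below. It assumes the local SNR is computed from the clean-speech and noise STFT values of the unit, and it uses the LC convention quoted above (5 dB below the mixture SNR) as the default; the helper names are hypothetical.

```python
import math

def local_snr_db(s: complex, n: complex, eps: float = 1e-12) -> float:
    """Local SNR (dB) of one T-F unit from its clean-speech and noise STFT values."""
    return 10.0 * math.log10((abs(s) ** 2 + eps) / (abs(n) ** 2 + eps))

def ideal_binary_mask(snr_tf_db: float, mixture_snr_db: float,
                      lc_offset_db: float = -5.0) -> int:
    """IBM for one T-F unit: 1 if the local SNR exceeds the local criterion, else 0.

    The local criterion (LC) defaults to 5 dB below the mixture SNR.
    """
    lc_db = mixture_snr_db + lc_offset_db
    return 1 if snr_tf_db > lc_db else 0

# Mixture at -5 dB SNR, so LC = -10 dB.
print(ideal_binary_mask(local_snr_db(1.0, 2.0), -5.0))   # local SNR about -6 dB -> 1
print(ideal_binary_mask(local_snr_db(1.0, 4.0), -5.0))   # local SNR about -12 dB -> 0
```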

Another mask widely used in speech separation is the Ideal Ratio Mask (IRM) [33]. It is defined in Equation (2) as follows:

Here, $|S(t,f)|^2$ and $|N(t,f)|^2$ are the speech energy and noise energy within a time–frequency unit. This mask is referred to as a soft mask and can take any value in the range [0, 1].
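The sketch below computes a ratio mask for a single time–frequency unit from the speech and noise energies. The exponent `beta` is an assumption: `beta = 0.5` (a square-root compression) is a common choice in the literature, while `beta = 1` gives the plain energy ratio.

```python
def ideal_ratio_mask(speech_energy: float, noise_energy: float,
                     beta: float = 0.5, eps: float = 1e-12) -> float:
    """IRM for one T-F unit; beta = 0.5 is an assumed, commonly used exponent."""
    return (speech_energy / (speech_energy + noise_energy + eps)) ** beta

print(ideal_ratio_mask(1.0, 1.0))   # about 0.707 for equal speech and noise energy
print(ideal_ratio_mask(1.0, 0.0))   # about 1.0 when there is no noise
```

Because the ratio of a non-negative energy to a larger (or equal) non-negative energy cannot exceed 1, the IRM stays in [0, 1] for any non-negative `beta`.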

The Spectral Magnitude Mask [32], referred to here as the FFT-MASK (FFTM), is defined in Equation (3) as follows:

$$\mathrm{FFTM}(t,f) = \frac{|S(t,f)|}{|Y(t,f)|} \tag{3}$$

This is the ratio of the spectral magnitudes of the clean and noisy speech. Unlike the previous two masks, it is unbounded in the positive direction, i.e., its values lie in the range [0, ∞).
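The FFTM becomes large when the noise partly cancels the speech in a time–frequency unit, so that |Y| is much smaller than |S|. The per-unit sketch below illustrates this and adds an optional upper clip; the clip value of 1.5 used in the example is the one reported in Section 4.2, and the function name is hypothetical.

```python
from typing import Optional

def fft_mask(s: complex, y: complex, clip: Optional[float] = None,
             eps: float = 1e-12) -> float:
    """FFTM for one T-F unit: |S| / |Y|, optionally clipped from above."""
    value = abs(s) / max(abs(y), eps)
    return min(value, clip) if clip is not None else value

s, n = 1.0 + 0j, -0.9 + 0j        # noise nearly cancels the speech
y = s + n                          # |Y| is about 0.1
print(fft_mask(s, y))              # about 10, far above 1
print(fft_mask(s, y, clip=1.5))    # 1.5
```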

While the majority of masks in the literature are based solely on magnitude information, several researchers have also proposed masks that incorporate phase information. For example, the Phase-Sensitive Mask (PSM) [19] is defined in Equation (4) as follows:

$$\mathrm{PSM}(t,f) = \frac{|S(t,f)|}{|Y(t,f)|}\cos\theta \tag{4}$$

Here, S(t,f) and Y(t,f) are the clean and noisy spectra, respectively, while θ represents the phase difference between the clean and the noisy speech within a time–frequency unit. The PSM is unbounded in both the negative and positive directions, i.e., its values lie in the range (−∞, ∞). It was also defined by Wang et al. [34] using Equation (5), where, again, S denotes the clean speech signal, Y is the noisy speech signal, Re denotes the real part, θS is the phase of the clean speech, θY is the phase of the noisy speech, and the (t,f) notation has been omitted for simplicity.
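The following per-unit sketch evaluates the PSM from the magnitudes and phase difference, and shows how it turns negative when the noise is stronger than the speech and in antiphase with it. It also confirms that the same value is obtained as the real part of S/Y; the function name is hypothetical.

```python
import cmath
import math

def phase_sensitive_mask(s: complex, y: complex) -> float:
    """PSM for one T-F unit: |S|/|Y| * cos(theta_S - theta_Y)."""
    theta = cmath.phase(s) - cmath.phase(y)
    return abs(s) / abs(y) * math.cos(theta)

s, n = 1.0 + 0j, -2.0 + 0j   # noise in antiphase and stronger than the speech
y = s + n                    # Y = -1, so the phase difference is pi
print(phase_sensitive_mask(s, y))   # -1.0 (a negative PSM value)
print((s / y).real)                 # the same value, computed as Re{S/Y}
```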

The spectrum of the clean speech can be represented in complex rectangular form in Equation (6), where subscript r denotes the real part, subscript i denotes the imaginary part, and $j = \sqrt{-1}$.

$$S = S_r + jS_i \tag{6}$$

Similarly, the spectrum of the noise N can be represented using Equation (7).

$$N = N_r + jN_i \tag{7}$$

Assuming that the noise is additive, the spectrum of the noisy speech can be written in complex rectangular form as in Equation (8).

$$Y = S + N = (S_r + N_r) + j(S_i + N_i) \tag{8}$$

Combining Equations (5) and (8) results in Equation (9).

This can be expanded to produce Equation (10).

Finally, using * to represent the complex conjugate, this equation can be further simplified to Equation (11), which demonstrates, analytically, that the PSM is equivalent to the Optimal Ratio Mask (ORM). The ORM was derived by Liang et al. [35] to maximize the signal-to-noise ratio and was further used in speech separation in [36,37].
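For reference, one way to carry out the steps leading to Equation (11) is sketched below, assuming additive noise as in Equation (8) and omitting the (t,f) indices:

```latex
\begin{aligned}
\mathrm{PSM} &= \frac{|S|}{|Y|}\cos(\theta_S - \theta_Y)
  = \operatorname{Re}\left\{\frac{S}{Y}\right\}
  = \frac{\operatorname{Re}\{S Y^{*}\}}{|Y|^{2}} \\
\operatorname{Re}\{S Y^{*}\} &= S_r(S_r + N_r) + S_i(S_i + N_i)
  = |S|^{2} + \operatorname{Re}\{S N^{*}\} \\
|Y|^{2} &= (S_r + N_r)^{2} + (S_i + N_i)^{2}
  = |S|^{2} + |N|^{2} + 2\operatorname{Re}\{S N^{*}\} \\
\Rightarrow \mathrm{PSM} &= \frac{|S|^{2} + \operatorname{Re}\{S N^{*}\}}
  {|S|^{2} + |N|^{2} + 2\operatorname{Re}\{S N^{*}\}} = \mathrm{ORM}
\end{aligned}
```

Here Re{SN*} = SrNr + SiNi, and the final ratio has the form usually quoted for the ORM.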

The Complex Ideal Ratio Mask (cIRM) is well described in [38,39] and is defined mathematically in Equation (12) as follows:

$$\mathrm{cIRM} = M_r + jM_i \tag{12}$$

Here, Mr and Mi are the real and imaginary components of the mask and are defined in Equations (13) and (14):

$$M_r = \frac{Y_r S_r + Y_i S_i}{Y_r^2 + Y_i^2} \tag{13}$$

$$M_i = \frac{Y_r S_i - Y_i S_r}{Y_r^2 + Y_i^2} \tag{14}$$

Here, Yr and Yi are the real and imaginary components of the spectrum of the noisy speech while Sr and Si are the real and imaginary components of the spectrum of the clean speech. As stated in [38], Mr is theoretically identical to the PSM. Mi is unbounded in both the negative and positive directions, i.e., its values lie in the range (−∞, ∞).
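The equivalences stated in this section (the PSM equals the ORM, and the real part of the cIRM equals the PSM) can be spot-checked numerically. The sketch below draws random complex clean-speech and noise values for single time–frequency units, forms Y = S + N as in Equation (8), and compares the expressions; it also checks that multiplying the cIRM by Y recovers S. The helper names and tolerances are assumptions for illustration.

```python
import cmath
import math
import random

def cirm(s: complex, y: complex):
    """Real and imaginary cIRM components (Equations (13) and (14)) for one T-F unit."""
    denom = y.real ** 2 + y.imag ** 2
    m_r = (y.real * s.real + y.imag * s.imag) / denom
    m_i = (y.real * s.imag - y.imag * s.real) / denom
    return m_r, m_i

def psm(s: complex, y: complex) -> float:
    """PSM from Equation (4)."""
    return abs(s) / abs(y) * math.cos(cmath.phase(s) - cmath.phase(y))

def orm(s: complex, n: complex) -> float:
    """Optimal ratio mask written in terms of S and N."""
    cross = (s * n.conjugate()).real          # Re{S N*}
    return (abs(s) ** 2 + cross) / (abs(s) ** 2 + abs(n) ** 2 + 2 * cross)

def check_equivalences(trials: int = 1000, seed: int = 1) -> bool:
    rng = random.Random(seed)
    for _ in range(trials):
        s = complex(rng.gauss(0, 1), rng.gauss(0, 1))
        n = complex(rng.gauss(0, 1), rng.gauss(0, 1))
        y = s + n                              # additive noise, Equation (8)
        m_r, m_i = cirm(s, y)
        if not math.isclose(m_r, psm(s, y), rel_tol=1e-6, abs_tol=1e-9):
            return False
        if not math.isclose(m_r, orm(s, n), rel_tol=1e-6, abs_tol=1e-9):
            return False
        if not cmath.isclose(complex(m_r, m_i) * y, s, rel_tol=1e-6, abs_tol=1e-9):
            return False
    return True

print(check_equivalences())   # True
```

The last check also explains why the cIRM gives perfect oracle reconstruction: by construction it is S/Y.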

3.2. Proposed Masks

Two new masks are proposed in this paper. The first mask is inspired by the IBM but has multiple local SNR thresholds rather than just one. In essence, it is a quantized approximation to the Wiener Gain Function (WGF) [40,41] and hence is referred to here as the Quantized Mask (QM). The number of local criteria and their respective values have been derived empirically to optimize the STOI score, and the mask is defined in Equation (15) as follows:

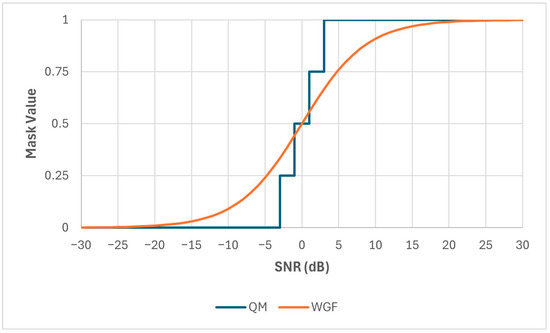

If SNR is the signal-to-noise ratio of the mixture, then LC1 = SNR-8, LC2 = SNR-6, LC3 = SNR-4, and LC4 = SNR-2. For example, the QM for a mixture SNR of 5 dB is illustrated in Figure 1. The motivation for using this mask is to provide more granular magnitude information than the IBM and hence maximize speech intelligibility.
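The sketch below shows how a time–frequency unit could be assigned to one of the five QM quantization levels using the four local criteria above, alongside the Wiener gain SNR/(1 + SNR) that the QM approximates. Two parts are assumptions: the boundary convention (a criterion counts as exceeded only when the local SNR is strictly greater, as for the IBM), and the gain attached to each level. `HYPOTHETICAL_LEVEL_GAINS` are illustrative placeholders, not the values of Equation (15).

```python
import bisect

LC_OFFSETS_DB = (-8.0, -6.0, -4.0, -2.0)   # LC1..LC4 relative to the mixture SNR
# Hypothetical gain for each quantization level 1..5 (NOT the values of Equation (15)).
HYPOTHETICAL_LEVEL_GAINS = (0.0, 0.25, 0.5, 0.75, 1.0)

def wiener_gain(local_snr_db: float) -> float:
    """Wiener gain SNR / (1 + SNR), with the local SNR converted from dB."""
    snr = 10.0 ** (local_snr_db / 10.0)
    return snr / (1.0 + snr)

def qm_level(local_snr_db: float, mixture_snr_db: float) -> int:
    """Quantization level 1..5: one plus the number of local criteria strictly exceeded."""
    thresholds = [mixture_snr_db + off for off in LC_OFFSETS_DB]
    return 1 + bisect.bisect_left(thresholds, local_snr_db)

def quantized_mask(local_snr_db, mixture_snr_db, gains=HYPOTHETICAL_LEVEL_GAINS):
    return gains[qm_level(local_snr_db, mixture_snr_db) - 1]

# Mixture SNR of 5 dB: LC1..LC4 = -3, -1, 1, 3 dB.
for snr_tf in (-10.0, -2.0, 0.0, 2.0, 10.0):
    print(snr_tf, qm_level(snr_tf, 5.0), round(wiener_gain(snr_tf), 3))
```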

Figure 1.

Quantized Mask (QM) and Wiener Gain Function (WGF) at mixture SNR of 5 dB.

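The second new mask proposed in this paper is a hybrid mask, defined in Equation (16) and referred to as the PSM+. It uses Equation (4) to calculate modified PSM values:

$$\mathrm{PSM^{+}}(t,f) = \begin{cases} \mathrm{IRM}(t,f), & \text{if } \mathrm{PSM}(t,f) < 0 \\ 2, & \text{if } \mathrm{PSM}(t,f) > 2 \\ \mathrm{PSM}(t,f), & \text{otherwise} \end{cases} \tag{16}$$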

As can be seen in Equation (16), if the PSM values are negative, they are replaced by their corresponding IRM values from Equation (2). Based on the empirical results of this study, values exceeding 2 are clipped at 2. This approach is motivated by the work of Zhou et al. [42], who employed mask fusion with multi-target learning for speech enhancement. Because the end goal of this research is to optimize speech intelligibility, it is beneficial to retain as much magnitude and phase information as possible. When the PSM+ is used, approximately half of the mask values (i.e., those for which the PSM is positive) exploit both magnitude and phase. Rather than discarding the remaining negative PSM values by setting them to zero, their corresponding IRM values are used instead, thus retaining additional magnitude information.
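The PSM+ rule can be sketched per time–frequency unit as follows. It keeps non-negative PSM values (clipped at 2) and falls back to the IRM where the PSM is negative. The IRM exponent `beta = 0.5` is an assumption, the function name is hypothetical, and the sketch assumes Y = S + N is non-zero.

```python
def psm_plus(s: complex, n: complex, upper: float = 2.0, beta: float = 0.5) -> float:
    """PSM+ for one T-F unit, following the rule of Equation (16).

    Assumes Y = S + N is non-zero; beta = 0.5 for the IRM is an assumption.
    """
    s, n = complex(s), complex(n)
    y = s + n
    psm = (s / y).real                      # |S|/|Y| cos(theta_S - theta_Y)
    if psm < 0.0:
        s_energy, n_energy = abs(s) ** 2, abs(n) ** 2
        return (s_energy / (s_energy + n_energy)) ** beta   # IRM fallback
    return min(psm, upper)

print(psm_plus(1, -2))    # PSM = -1, replaced by the IRM (about 0.447)
print(psm_plus(1, -0.9))  # PSM is about 10, clipped to 2.0
print(psm_plus(1, 1))     # PSM = 0.5, kept as is
```

Falling back to the IRM rather than to zero keeps a magnitude-based estimate in exactly those units where the phase-sensitive value would otherwise be discarded.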

3.3. Examples

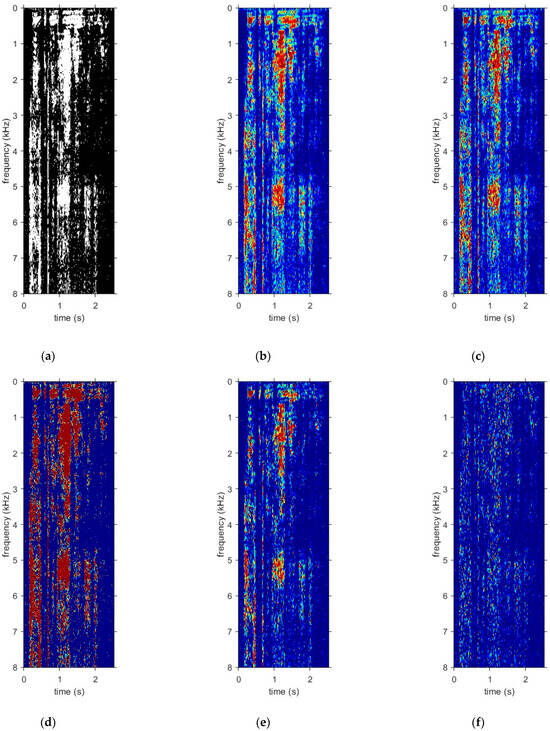

By way of example, Figure 2 shows images of the ideal masks for a particular utterance mixed with babble noise at −5 dB SNR. The utterance was taken from the IEEE-Harvard corpus [43] and sampled at 16 kHz. A grayscale is used in the figure, with white pixels denoting a value of 1 and black pixels denoting a value of 0. Although the masks labelled (a) to (e) are not identical, broadly the same pattern is evident in each of them. However, for the mask labelled (f), which is the imaginary part of the cIRM, the pixels are predominantly black, suggesting that most of its values are close to 0.

Figure 2.

Ideal masks for the utterance “choose between the high road and the low” mixed with babble noise at −5 dB SNR: (a) IBM; (b) IRM; (c) FFTM; (d) QM; (e) cIRMr; (f) cIRMi.

4. Materials and Methods

This section initially outlines the dataset used for the experimental work in this study. It then proceeds to discuss how the masks presented in Section 3 are used as training targets, including both well-established masks as well as two newly proposed masks. Following this, it provides an overview of the machine learning network used to estimate the masks. It then discusses how a vocoder is used to simulate the operation of a cochlear implant. Then, the objective metrics are explained, and these are used to determine the performance of the estimated masks in terms of speech intelligibility, quality, and similarity. Finally, all software sources are documented.

4.1. The Dataset

The IEEE-Harvard corpus [43] was selected as the source of clean speech utterances for this study. All 720 sentences were produced by a male speaker; 600 were used for training (including generating ideal masks) and 120 for testing. This dataset was chosen because it has been widely used in related studies such as [1,44], which makes it useful for comparison. The sentences are phonetically balanced, and the phonemes have been specifically selected for their frequency content. Three noise sources were used in the initial part of this study, i.e., to evaluate the oracle performance: babble, factory noise, and speech-shaped noise (SSN). The babble was produced by recording 100 people speaking in a canteen, while the factory noise was recorded in a car production hall. These first two noise sources were taken from the Signal Processing Information Base (SPIB) [43], while the third was generated by shaping white noise from SPIB with the long-term speech envelope of the IEEE-Harvard speech corpus. The factory noise was useful when comparing initial results to those in previous work such as [5,32]. Only babble and SSN were used with the neural networks to generate estimated masks, as these are representative of non-stationary and stationary noise, respectively. The training and test mixtures were generated in accordance with the work described in [32]. Random cuts from the first 2 min of the babble and SSN were mixed with the training utterances at −5 dB and 0 dB SNR. Similarly, random cuts from the last 2 min of both noises were mixed with the test utterances at −5 dB, 0 dB, and 5 dB SNR.
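The mixing procedure can be sketched as follows: a random cut of the noise, taken only from a designated region of the recording, is scaled so that the utterance-level power ratio equals the target SNR and is then added to the clean utterance. The power-based SNR definition, the uniform choice of offset, and the helper name `mix_at_snr` are assumptions. In the example, the first and last halves of a synthetic 60 s noise stand in for the first and last 2 min of a real recording.

```python
import numpy as np

def mix_at_snr(clean, noise, snr_db, rng, region=None):
    """Mix `clean` with a random cut of `noise` at the requested SNR (dB).

    region: optional (start, stop) sample range of `noise` to cut from.
    """
    start, stop = region if region is not None else (0, len(noise))
    if stop - start < len(clean):
        raise ValueError("noise region is shorter than the utterance")
    offset = int(rng.integers(start, stop - len(clean) + 1))
    cut = noise[offset:offset + len(clean)].astype(float)
    # Scale the noise so that 10*log10(P_speech / P_noise) equals snr_db.
    p_speech = np.mean(clean ** 2)
    p_noise = np.mean(cut ** 2)
    cut *= np.sqrt(p_speech / (p_noise * 10 ** (snr_db / 10)))
    return clean + cut, cut

fs = 16000
rng = np.random.default_rng(0)
clean = rng.standard_normal(2 * fs)      # stand-in for an utterance
noise = rng.standard_normal(60 * fs)     # stand-in for a noise recording
half = len(noise) // 2
train_mix, train_noise = mix_at_snr(clean, noise, -5.0, rng, region=(0, half))
test_mix, test_noise = mix_at_snr(clean, noise, 5.0, rng, region=(half, len(noise)))
```

Keeping the training and test cuts in disjoint regions of the recording ensures that the network is never tested on noise samples it saw during training.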

4.2. Mask Generation

An FFT of length 1024 was used to compute the ideal masks for testing the oracle performance, while an FFT of length 320 was used for the estimated masks. Five different estimated masks were evaluated in this study. Three of these were from the existing literature, namely the IBM, IRM, and FFTM. The other two were the masks proposed in Section 3.2, namely the QM and PSM+.

As previously stated, the estimated masks were generated by the neural network. Although the ideal IBM in Equation (1) is a hard mask, its estimated version is a soft mask. The IRM is calculated using Equation (2) and the FFTM using Equation (3). Because the FFTM is unbounded, all of its values above 1.5 were clipped at 1.5, a threshold chosen through empirical testing. This was used instead of the log compression suggested in [32] or the hyperbolic tangent compression suggested in [38,45]. The proposed QM was calculated using Equation (15), and the second newly proposed mask (PSM+) was calculated using Equation (16). Due to its unbounded nature, the cIRM was not estimated in this study; however, as previously stated, its real part is equivalent to the PSM.

In each case, the estimated speech spectrum is calculated using Equation (17) as follows, where Mest is the estimated mask, Y is the noisy spectrum, and θY is the phase of the noisy speech:

$$\hat{S}(t,f) = M_{est}(t,f)\,|Y(t,f)|\,e^{j\theta_Y(t,f)} \tag{17}$$

The one exception is the cIRM, for which the estimated spectrum is obtained by complex multiplication (denoted here by *), as in Equation (18):

$$\hat{S}(t,f) = \mathrm{cIRM}(t,f) * Y(t,f) \tag{18}$$

However, this is only used in the oracle performance experiments.
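Per time–frequency unit, the two reconstruction rules can be sketched as below (the function names are hypothetical). For a real-valued mask, scaling |Y| and reusing the noisy phase is the same as multiplying Y by the mask; the cIRM additionally rotates the phase. Converting the estimated spectrum back to a waveform (inverse STFT with overlap-add) is not shown.

```python
import cmath

def apply_real_mask(m_est: float, y: complex) -> complex:
    """Equation (17): scale the noisy magnitude and reuse the noisy phase."""
    return m_est * abs(y) * cmath.exp(1j * cmath.phase(y))

def apply_complex_mask(m: complex, y: complex) -> complex:
    """Equation (18): complex multiplication of the cIRM with the noisy spectrum."""
    return m * y

y = 3 + 4j
print(apply_real_mask(0.5, y))        # approximately (1.5+2j), i.e. 0.5 * Y
s = 1 - 2j
print(apply_complex_mask(s / y, y))   # recovers s up to rounding
```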

4.3. The Neural Network

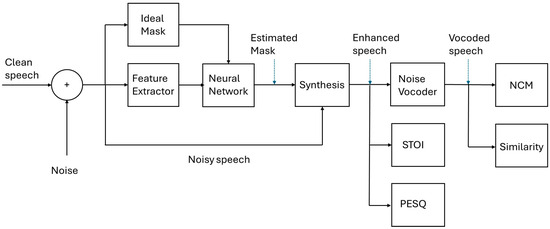

The neural network is an integral part of the test system in Figure 3 and is based on the multilayer perceptron (MLP) architecture. Although the MLP is a basic architecture, it is a well-known and accepted benchmark in previous research in the field. Following the work of [46], 24 Mel spectrogram acoustic features were extracted at the frame level and used as the inputs. The sampling frequency was 16 kHz and the analysis window was 20 ms with 50% overlap. The features were concatenated with delta features and then smoothed using an auto-regressive moving average (ARMA) filter. Three hidden layers were used, each containing 1024 nodes. The sigmoid activation function was used for the hidden layers. It was also used for the output layer when the target values were in the range [0, 1]; otherwise, a linear activation function was used. The standard backpropagation algorithm was used without dropout. Adaptive gradient descent was used as the optimization algorithm with a scaling factor of 0.0015. The network was trained for 20 epochs. Momentum was initially set to 0.5 and changed to 0.9 after epoch 5. The mean squared error (MSE) between the predicted and target output values was used as the cost function. The outputs were the mask values, with a dimension of 320 corresponding to the number of frequency bins. The outputs were spliced into a 5-frame window according to [32]. Ideal masks were used as the training targets and the estimated masks were used during testing. No pretraining was used during these experiments. This neural network can be used to estimate masks as in the study of Healy et al. [47], and these masks can be used in a speech segregation system.
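The input pipeline (features, then deltas, then ARMA smoothing) can be sketched as follows. Two details are assumptions: deltas are taken as the simple difference from the previous frame, and the ARMA filter uses order 2, averaging the two previous smoothed frames with the current and two following raw frames (edge frames are left unchanged). Random values stand in for the 24 Mel features per frame.

```python
import numpy as np

def add_deltas(features: np.ndarray) -> np.ndarray:
    """Append first-order deltas (difference from the previous frame) to each frame.

    features has shape (num_frames, num_features); the first frame's delta is zero.
    """
    delta = np.diff(features, axis=0, prepend=features[:1])
    return np.concatenate([features, delta], axis=1)

def arma_smooth(features: np.ndarray, order: int = 2) -> np.ndarray:
    """ARMA smoothing along time.

    Each interior output frame is the mean of the `order` previous *smoothed* frames,
    the current raw frame and the `order` next raw frames; edge frames are unchanged.
    """
    raw = np.asarray(features, dtype=float)
    out = raw.copy()
    for t in range(order, raw.shape[0] - order):
        past = out[t - order:t].sum(axis=0)          # already smoothed
        future = raw[t:t + order + 1].sum(axis=0)    # current and upcoming raw frames
        out[t] = (past + future) / (2 * order + 1)
    return out

rng = np.random.default_rng(0)
mel = rng.standard_normal((100, 24))   # stand-in for 24 Mel features per frame
inputs = arma_smooth(add_deltas(mel))
print(inputs.shape)                    # (100, 48)
```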

Figure 3.

Block diagram of the test system.

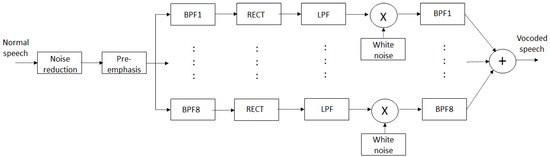

4.4. Vocoder Simulation

Channel vocoders have been widely used to simulate speech processing in cochlear implants, as described by Cychosz et al. [48]. For the purposes of this study, an eight-channel noise vocoder (see Figure 4) was used for CI simulation. The pre-emphasis filter had a cutoff frequency of 2 kHz and a roll-off of 3 dB/octave. The band pass filters (BPFs) in the eight channels had center frequencies of 366, 526, 757, 1089, 1566, 2252, 3241 and 4662 Hz. The low pass filters (LPFs), which follow the rectification stage (RECT), had a cutoff frequency of 120 Hz. The noise vocoder was used to vocode the normal speech so that it mimicked the sound heard by the wearer of a cochlear implant.
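The signal flow of an eight-channel noise vocoder (band-pass filter, rectification, envelope low-pass filter, then modulation of band-limited noise) can be sketched in NumPy as below, using the centre frequencies and the 120 Hz envelope cutoff listed above. This is an illustration, not the vocoder implementation used in this study. Several choices are assumptions: zero-phase FFT "brick-wall" filters stand in for the BPFs and LPFs, band edges are placed at geometric means of neighbouring centre frequencies, and the pre-emphasis stage and any per-band level normalization are omitted.

```python
import numpy as np

CENTRE_FREQS_HZ = (366, 526, 757, 1089, 1566, 2252, 3241, 4662)

def fft_bandlimit(x, fs, lo_hz, hi_hz):
    """Zero-phase brick-wall filter keeping components with lo_hz <= f < hi_hz."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[(freqs < lo_hz) | (freqs >= hi_hz)] = 0.0
    return np.fft.irfft(spectrum, n=len(x))

def band_edges(centres):
    """Assumed band edges: geometric means of neighbouring centres, with the outer
    edges mirrored (on a log scale) around the first and last centres."""
    c = np.asarray(centres, dtype=float)
    inner = np.sqrt(c[:-1] * c[1:])
    edges = np.concatenate([[c[0] ** 2 / inner[0]], inner, [c[-1] ** 2 / inner[-1]]])
    return list(zip(edges[:-1], edges[1:]))

def noise_vocode(x, fs=16000, centres=CENTRE_FREQS_HZ, env_cutoff_hz=120.0, seed=0):
    """Sketch of an N-channel noise vocoder: BPF -> rectify -> LPF -> modulate noise."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    out = np.zeros_like(x)
    for lo, hi in band_edges(centres):
        band = fft_bandlimit(x, fs, lo, hi)                               # analysis BPF
        envelope = fft_bandlimit(np.abs(band), fs, 0.0, env_cutoff_hz)    # RECT + LPF
        envelope = np.maximum(envelope, 0.0)                              # drop negative ripple
        carrier = fft_bandlimit(rng.standard_normal(len(x)), fs, lo, hi)  # band-limited noise
        out += envelope * carrier
    return out

fs = 16000
t = np.arange(fs // 2) / fs
speech_like = np.sin(2 * np.pi * 500 * t) * (1 + np.sin(2 * np.pi * 4 * t))
vocoded = noise_vocode(speech_like, fs)
```

Because only the slowly varying envelope of each band survives, the fine spectral structure (such as harmonics) is replaced by noise, which is the loss of detail a cochlear implant simulation is meant to convey.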

Figure 4.

Block diagram of 8-channel noise vocoder.

4.5. Metrics

Objective speech quality and intelligibility metrics can be divided into two classes, namely intrusive metrics, which use a reference signal, and nonintrusive metrics, which do not use a reference signal. Falk et al. [49] investigated twelve such metrics for predicting objective quality and intelligibility for users of hearing aids and cochlear implants. This followed on from the work of Cosentino et al. [50], Santos et al. [51], Kokkinakis and Loizou [52], and Hu and Loizou [53].

Short-Time Objective Intelligibility (STOI) [54] is used here as a speech intelligibility metric for normal hearing, i.e., for speech signals that have not been vocoded. It measures the correlation between the short-time temporal envelopes of a clean utterance and an estimated utterance, which in this study is the output of noise reduction applied to a noisy signal. STOI varies from 0 to 1 and can be interpreted as 0% to 100% intelligibility. It is defined in Equation (19), where dj(m) is an intermediate intelligibility measure defined in [55], M is the total number of frames, and J is the number of one-third octave bands used for analysis.

$$\mathrm{STOI} = \frac{1}{JM}\sum_{j=1}^{J}\sum_{m=1}^{M} d_j(m) \tag{19}$$

Perceptual Evaluation of Speech Quality (PESQ) [56] is used here as a speech quality metric for normal hearing. It uses an auditory transform to generate loudness spectra; the loudness spectra of clean utterances are compared with those of estimated utterances to predict the mean opinion score (MOS), yielding a raw score in the range −0.5 to 4.5. In this paper, the MOS-mapped score according to ITU-T P.862.2 is used [57]. PESQ can be calculated using Equation (20), where Dind is the disturbance value, Aind is the asymmetrical disturbance value, a0 = 4.5, a1 = −0.1, and a2 = −0.0309.

$$\mathrm{PESQ_r} = a_0 + a_1 D_{ind} + a_2 A_{ind} \tag{20}$$

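If the raw PESQ model output is PESQr, the output mapping function used in the wideband extension is defined in Equation (21).

$$\mathrm{MOS_{LQO}} = 0.999 + \frac{4.999 - 0.999}{1 + e^{-1.3669\,\mathrm{PESQ_r} + 3.8224}} \tag{21}$$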
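The two final steps of PESQ (combining the disturbance values into a raw score, then mapping that score to the wideband MOS scale) are sketched below. The coefficients are those of ITU-T P.862 and the logistic constants those of the P.862.2 wideband mapping. The disturbance values in the example are hypothetical: computing D_ind and A_ind requires the full perceptual model, so a validated PESQ implementation is still needed to obtain real scores.

```python
import math

def pesq_raw(d_ind: float, a_ind: float,
             a0: float = 4.5, a1: float = -0.1, a2: float = -0.0309) -> float:
    """Raw PESQ score from the symmetric and asymmetric disturbance values."""
    return a0 + a1 * d_ind + a2 * a_ind

def pesq_wideband_mos(raw: float) -> float:
    """P.862.2 wideband mapping from a raw PESQ score to MOS-LQO."""
    return 0.999 + (4.999 - 0.999) / (1.0 + math.exp(-1.3669 * raw + 3.8224))

raw = pesq_raw(d_ind=5.0, a_ind=10.0)   # hypothetical disturbance values
print(raw, pesq_wideband_mos(raw))
```

The logistic mapping is monotonic, so it changes the scale of the scores but not the ranking of the masks.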

The Normalized Covariance Measure (NCM) [58] is used to predict speech intelligibility for hearing-impaired listeners such as those with cochlear implants. Based on the work of Lai et al. [59], it is used to predict the intelligibility of vocoded and wideband speech. It is a Speech Transmission Index (STI)-based measure that uses the covariance between the envelopes of clean vocoded speech and estimated vocoded speech. NCM scores range from 0 (0%) to 1 (100%) intelligibility. It is defined in Equation (22), where Wi is the band importance weight applied to each of the k bands and TIi is the transmission index in band i.

$$\mathrm{NCM} = \frac{\sum_{i=1}^{k} W_i\,\mathrm{TI}_i}{\sum_{i=1}^{k} W_i} \tag{22}$$
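Given the per-band envelope correlations r_i between clean and estimated vocoded speech, the NCM can be sketched as below. The mapping from r_i to TI_i is an assumption based on the commonly used formulation: an apparent SNR of 10 log10(r²/(1 − r²)) dB, limited to ±15 dB and rescaled to [0, 1]. The uniform band weights in the example are hypothetical.

```python
import math
from typing import Sequence

def transmission_index(r: float) -> float:
    """Map an envelope correlation r to a transmission index in [0, 1]."""
    r2 = min(max(r * r, 1e-12), 1.0 - 1e-12)     # keep the logarithm finite
    snr_db = 10.0 * math.log10(r2 / (1.0 - r2))  # apparent SNR of the band
    snr_db = min(max(snr_db, -15.0), 15.0)       # limit to [-15, 15] dB
    return (snr_db + 15.0) / 30.0

def ncm(correlations: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted average of the band transmission indices."""
    tis = [transmission_index(r) for r in correlations]
    return sum(w * ti for w, ti in zip(weights, tis)) / sum(weights)

# Hypothetical correlations for 8 bands with uniform weights.
print(ncm([0.9, 0.85, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3], [1.0] * 8))
```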

Euclidean distance has been used in various audio applications such as in measuring the similarity of a speaker’s voice characteristics [60], performing speech recognition [61], and measuring the similarity between two songs [62]. Therefore, it was decided to use a final group of measures to estimate the similarity between clean vocoded speech and estimated vocoded speech. Histograms were generated (using Scott’s Rule for the optimum number of bins) for clean, mixture (i.e., noisy), and estimated vocoded signals. These were normalized to represent probability distribution functions (pdfs) and the distance/similarity between various pdfs was measured. All metrics were defined and categorized by Cha in [63]. For this study, only distances were used, where similarity (s) was related to distance (d) in Equation (23) as follows:
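A minimal NumPy sketch of the histogram step and two Minkowski-family distances follows. NumPy's `np.histogram_bin_edges` accepts `bins="scott"` to apply Scott's rule. Computing a single set of bin edges from the pooled samples, so that both histograms share the same bins, is an assumption, and the conversion from distance to similarity in Equation (23) is not reproduced. Random signals stand in for clean and estimated vocoded speech.

```python
import numpy as np

def shared_pmfs(a, b):
    """Histogram two signals on common Scott's-rule bin edges (from the pooled
    samples) and normalize each histogram so that it sums to 1."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    edges = np.histogram_bin_edges(np.concatenate([a, b]), bins="scott")
    p, _ = np.histogram(a, bins=edges)
    q, _ = np.histogram(b, bins=edges)
    return p / p.sum(), q / q.sum()

def euclidean_distance(p, q) -> float:
    """Euclidean (L2) distance between two discrete distributions."""
    return float(np.sqrt(np.sum((p - q) ** 2)))

def chebyshev_distance(p, q) -> float:
    """Chebyshev (L-infinity) distance: the largest per-bin difference."""
    return float(np.max(np.abs(p - q)))

rng = np.random.default_rng(0)
clean = rng.standard_normal(16000)                    # stand-in for clean vocoded speech
estimate = clean + 0.3 * rng.standard_normal(16000)   # stand-in for estimated vocoded speech
p, q = shared_pmfs(clean, estimate)
print(euclidean_distance(p, q), chebyshev_distance(p, q))
```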

As outlined in [63], eight different families were used to measure similarity. Euclidean L2 and Chebyshev L∞ similarities were calculated for the Lp Minkowski family. Sorensen, Gower, and Soergel similarities were measured for the L1 family. Intersection, Wave Hedges, Czekanowski, Motyka, Ruzicka, and Tanimoto similarities were calculated for the Intersection family. Inner Product, Cosine, Kumar–Hassebrook, and Dice similarities were calculated for the Inner Product family. Fidelity, Bhattacharyya, Matusita, and Squared Chord similarities were calculated for the Fidelity family. Squared Euclidean similarity was calculated for the Squared L2 family. Taneja and Average similarities were calculated for the Combinations family. Kullback–Leibler Divergence was calculated for the Shannon’s Entropy family, using Equation (24) as follows:

$$D_{KL}(P\,\|\,Q) = \sum_{i=1}^{n_{bins}} P_i \ln\frac{P_i}{Q_i} \tag{24}$$

Here, $P_i$ and $Q_i$ are the pdf values in histogram bin i, and $n_{bins}$ is the number of bins. Care was taken to avoid evaluating 0 ln 0.
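One common way to handle empty bins when evaluating the Kullback–Leibler divergence is sketched below and is assumed here: bins with P_i = 0 contribute nothing (the 0 ln 0 = 0 convention), and empty Q bins are floored at a small `eps` so the logarithm stays finite. The function name and `eps` are illustrative.

```python
import math
from typing import Sequence

def kl_divergence(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """D_KL(P || Q) = sum_i P_i ln(P_i / Q_i) over histogram bins.

    Bins with P_i = 0 contribute 0; empty Q bins are floored at eps (an assumption).
    """
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0.0:
            total += pi * math.log(pi / max(qi, eps))
    return total

print(kl_divergence([0.5, 0.5], [0.5, 0.5]))   # 0.0 for identical distributions
print(kl_divergence([1.0, 0.0], [0.5, 0.5]))   # ln 2, about 0.693
```

Note that the divergence is not symmetric: swapping P and Q in the second example places mass where the other distribution has none, and the result is then dominated by the `eps` floor.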

Finally, a correlation coefficient was calculated to measure the correlation between pdfs. Its value can range from −1 (perfect negative correlation) to 1 (perfect positive correlation), with 0 indicating no correlation.

4.6. Software

MATLAB R2023b was used for all experiments. Software for the neural network architecture and for calculating STOI scores was sourced at http://web.cse.ohio-state.edu/pnl/DNN_toolbox/ [32] (accessed on 5 April 2021) and adapted according to the needs of specific experiments. When conducting the oracle performance evaluations, software for computing the IBM was sourced at https://ch.mathworks.com/matlabcentral/fileexchange/33199-ideal-binary-mask (accessed on 3 March 2023). It was then modified to calculate the other masks used in this study. To simulate the behavior of a cochlear implant, the vocoder implementation at https://github.com/vmontazeri/cochlear-implant-simulation (accessed on 10 December 2020) was used. The software for calculating PESQ and NCM scores was sourced from the appendix of [44]. Software for calculating probability distribution functions and similarity was sourced at https://uk.mathworks.com/matlabcentral/fileexchange/97142-probability-density-functions-distance-and-similarity (accessed on 17 June 2024).

5. Results

A feasibility study was conducted initially with ideal masks (i.e., “oracle” masks) in order to establish an upper limit on performance. Here, the 600 training utterances were used for measuring the oracle performance of the masks. Following on from this, the 120 test utterances were used for measuring how effective the neural networks were at estimating the masks and hence performing noise reduction on the mixtures. In all cases, the best (most effective) scores are shown here in bold text while the worst (least effective) scores are in italics. ‘Best’ means the highest mean/median and lowest standard deviation.

5.1. Oracle Performance

Oracle STOI scores for normal speech for each of the ideal masks are given in Table 1 in the form of mean, median, and standard deviation values. Three different noise types at −5 dB SNR were used. For STOI, a higher mean/median and a lower standard deviation indicate better intelligibility. In all cases in Table 1, the median is slightly greater than the mean. Regardless of the noise type, the cIRM achieved a perfect mean score of 1 with a standard deviation of 0, since it recovers the clean speech exactly from the noisy mixture [34]; it has therefore been excluded from Table 1. Of the other masks, the FFTM was the most effective, with the highest mean and lowest standard deviation. The IBM was the least effective, with the lowest mean and highest standard deviation.

Table 1.

STOI scores for normal speech using oracle masks at −5 dB SNR for three noise types. ‘Mix’ indicates the unprocessed mixture (most and least effective scores are in bold and italics, respectively).

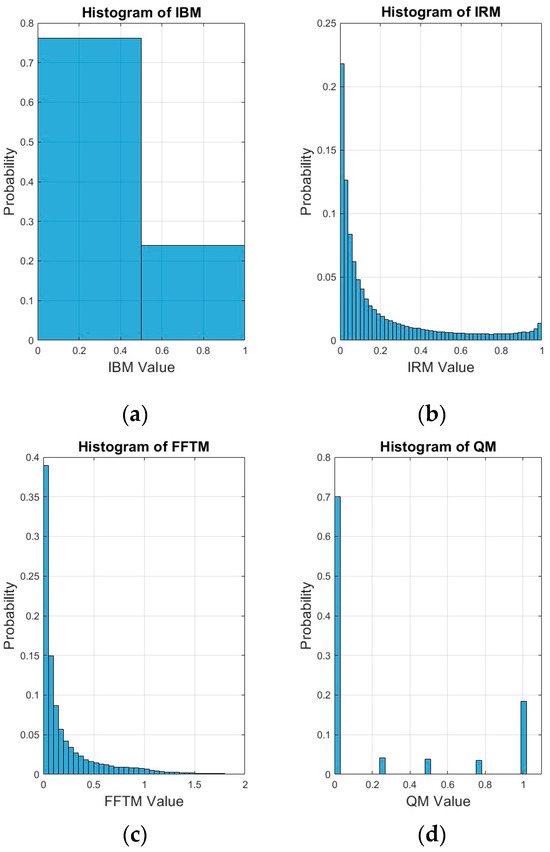

According to [38], targets/masks that take values in the range [0, 1] can be more conducive to supervised learning with deep neural networks. To better visualize the distribution of mask values, histograms were generated for each of the ideal masks for the same sample utterance as in Figure 2. These histograms are plotted in Figure 5 for babble noise at −5 dB SNR. This information is useful for deciding where to clip the unbounded masks. It also shows what proportion of the PSM+ values are taken from the IRM (where the PSM is negative) and what proportion are taken from the PSM (where it is positive). The experimental results in this study demonstrate that clipping a small percentage of mask values is acceptable, as speech intelligibility is still preserved. However, discarding a large percentage of mask values simply because they are negative has a detrimental effect on speech intelligibility, as these values carry a significant amount of useful information. The IBM has only two values, 0 and 1. For babble noise, 24% of the values were equal to 1 (speech-dominant) while 76% were 0 (noise-dominant). For brevity, histograms for factory noise and SSN are not plotted in Figure 5. However, for comparison, 15% of the values were equal to 1 and 85% were equal to 0 for SSN, and 11% were equal to 1 and 89% were equal to 0 for factory noise. For the IRM, the number of values decayed approximately exponentially between 0 and 1, but rose slightly again as the IRM value approached 1. For the FFTM, the number of values also decayed exponentially. The maximum value was 74.6, with 94.5% of the values in the range [0, 1] (only values from 0 to 2 are plotted in Figure 5c). A total of 97.9% of the values lay in the range [0, 1.5].
For the QM, 70.05% of the values fell at quantization level 1, 4.13% at level 2, 3.86% at level 3, 3.55% at level 4, and 18.41% at level 5. For the cIRMr, the minimum and maximum values were −72.7869 and 74.5591, respectively; 56.1% of the values were in the range [0, 1], 58.5% were in the range [0, 2], and 41.1% were negative. The mean and standard deviation were 0.0918 and 0.5871, respectively. For the cIRMi, the minimum and maximum values were −45.8876 and 35.1535, respectively; 49.3% of the values were in the range [0, 1], 50% were in the range [0, 2], and 49.7% were negative. The mean and standard deviation were −0.0005 and 0.4747, respectively. Both the cIRMr and cIRMi distributions appeared reasonably symmetric around 0, although the cIRMr distribution was skewed slightly to the right.
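The masks whose distributions are described above can be sketched from the STFTs of the clean speech S and the noise N. This is a minimal illustration assuming the standard definitions in the literature (IBM, IRM and FFTM as in [32], PSM as in [19], cIRM as in [38]), with a hypothetical local criterion of 0 dB for the IBM and β = 0.5 for the IRM; the exact parameters used in this study may differ. The PSM+ rule shown (PSM where non-negative, IRM elsewhere) is inferred from the histogram discussion and is an assumption, and the QM is omitted because its quantization levels are not restated here. The function name `ideal_masks` is hypothetical.

```python
import numpy as np

EPS = 1e-12  # guards against division by zero in silent T-F bins

def ideal_masks(S, N, lc_db=0.0, beta=0.5):
    """Ideal masks from clean-speech STFT S and noise STFT N (same shape)."""
    Y = S + N                                         # mixture STFT
    s_pow, n_pow = np.abs(S) ** 2, np.abs(N) ** 2
    local_snr_db = 10 * np.log10((s_pow + EPS) / (n_pow + EPS))
    ibm = (local_snr_db > lc_db).astype(float)        # 1 = speech-dominant bin
    irm = (s_pow / (s_pow + n_pow + EPS)) ** beta     # bounded in [0, 1]
    fftm = np.abs(S) / (np.abs(Y) + EPS)              # unbounded above
    psm = fftm * np.cos(np.angle(S) - np.angle(Y))    # may be negative
    psm_plus = np.where(psm >= 0, psm, irm)           # assumed PSM+ rule
    cirm = S * np.conj(Y) / (np.abs(Y) ** 2 + EPS)    # S / Y
    return {"IBM": ibm, "IRM": irm, "FFTM": fftm, "PSM": psm,
            "PSM+": psm_plus, "cIRMr": cirm.real, "cIRMi": cirm.imag}
```

Because Re(S/Y) = (|S|/|Y|) cos(θ_S − θ_Y), the cIRMr coincides with the PSM under these definitions, so with the assumed rule the share of negative cIRMr values equals the fraction of bins in which the PSM+ falls back to the IRM.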

Figure 5.

Histograms for the ideal masks for the utterance “choose between the high road and the low” mixed with babble noise at −5 dB SNR: (a) IBM; (b) IRM; (c) FFTM; (d) QM; (e) cIRMr; (f) cIRMi.

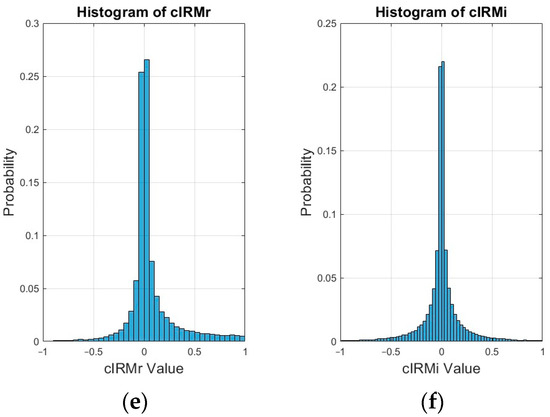
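The summary statistics quoted for Figure 5 (extremes, share of values in [0, 1] and [0, 2], share of negative values, mean and standard deviation) can be computed for any mask array with a short helper such as the hypothetical `mask_summary` below, together with a percentage histogram restricted to a plotting range as in Figure 5c. This is a sketch, not the analysis code used in this study; it uses NumPy's population standard deviation (`ddof=0`), whereas MATLAB's `std` normalizes by N − 1 by default, so small arrays may give slightly different values.

```python
import numpy as np

def mask_summary(mask):
    """Distribution statistics of the kind reported for the ideal masks."""
    m = np.ravel(mask).astype(float)

    def pct_in(lo, hi):
        # Percentage of all values lying in the closed interval [lo, hi]
        return 100.0 * float(np.mean((m >= lo) & (m <= hi)))

    return {
        "min": float(m.min()),
        "max": float(m.max()),
        "pct_in_0_1": pct_in(0.0, 1.0),
        "pct_in_0_2": pct_in(0.0, 2.0),
        "pct_negative": 100.0 * float(np.mean(m < 0)),
        "mean": float(m.mean()),
        "std": float(m.std()),  # population std (ddof=0)
    }

def histogram_pct(mask, lo=0.0, hi=2.0, n_bins=40):
    """Percentage of ALL mask values in each bin of [lo, hi]; values outside are not binned."""
    m = np.ravel(mask)
    counts, edges = np.histogram(m, bins=n_bins, range=(lo, hi))
    return 100.0 * counts / m.size, edges
```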

5.2. Normal Hearing Speech Intelligibility

As an example of speech estimation, Figure 6 shows spectrograms from the experiments in this study for a sample clean normal-speech utterance, a mixture of that utterance with babble noise at −5 dB SNR, and the speech signal synthesized using the estimated IBM. The estimated speech (bottom of Figure 6) bears a reasonable resemblance to the original clean speech (top of Figure 6); in particular, both transient events and harmonics are reasonably well preserved.

Figure 6.

Spectrograms of normal clean speech, normal clean speech mixed with babble noise at −5 dB SNR, and IBM-estimated normal speech for the utterance “A plea for funds seems to come again”. Spectrograms were computed using a Hanning window with 20 ms frames and 50% overlap.
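The spectrogram settings in the caption (Hanning window, 20 ms frames, 50% overlap) can be reproduced with a short NumPy sketch. It assumes a 16 kHz sampling rate (the rate mentioned in Section 6) and uses NumPy's symmetric `np.hanning` window; the function name `spectrogram_db` and the small floor added before the logarithm are illustrative choices, not the plotting code used for Figure 6.

```python
import numpy as np

def spectrogram_db(x, fs=16000, frame_ms=20.0, overlap=0.5):
    """Power spectrogram in dB, shape (n_freq_bins, n_frames)."""
    n = int(round(fs * frame_ms / 1000))        # 320 samples at 16 kHz
    hop = int(round(n * (1 - overlap)))         # 160 samples for 50% overlap
    win = np.hanning(n)
    n_frames = 1 + (len(x) - n) // hop          # only frames that fit fully
    frames = np.stack([x[i * hop:i * hop + n] * win for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    freqs = np.fft.rfftfreq(n, d=1 / fs)                 # bin centres (Hz)
    times = (np.arange(n_frames) * hop + n / 2) / fs     # frame centres (s)
    return freqs, times, 10 * np.log10(power.T + 1e-12)
```

At 16 kHz, a 20 ms frame is 320 samples with a 160-sample hop, so a 1 s signal yields 99 full frames and 161 frequency bins spaced 50 Hz apart.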

Table 2 shows the STOI scores for the estimated masks for babble and SSN across a range of SNRs. All estimated masks improved STOI relative to the unprocessed normal-speech mixtures. The best-performing mask for normal speech intelligibility was the PSM+, while the least effective was the IRM. Averaged over the two noise types and the three SNRs, the PSM+ showed a 15.1% improvement and the IRM a 14.2% improvement. The FFTM performed best for babble noise, while the PSM+ performed best for SSN.
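The averaged improvement percentages reported in this section can be computed in more than one way, and the definition is not restated here. A minimal sketch of two common definitions follows: relative improvement of the mean score, and improvement in percentage points for scores on a 0–1 scale. The six scores (2 noise types × 3 SNRs) are hypothetical placeholders, not values from Table 2, and the function names are illustrative.

```python
import numpy as np

def relative_improvement_pct(est_scores, mix_scores):
    """Percent change of the mean estimated score relative to the mean mixture score."""
    est, mix = np.mean(est_scores), np.mean(mix_scores)
    return 100.0 * (est - mix) / mix

def point_improvement(est_scores, mix_scores):
    """Improvement in percentage points for scores on a 0-1 scale (e.g., STOI)."""
    return 100.0 * (np.mean(est_scores) - np.mean(mix_scores))

# Hypothetical placeholder scores: babble and SSN at -5, 0 and 5 dB SNR
mix = [0.60, 0.70, 0.80, 0.60, 0.70, 0.80]
est = [0.70, 0.80, 0.90, 0.70, 0.80, 0.90]
print(round(relative_improvement_pct(est, mix), 1))  # 14.3
print(round(point_improvement(est, mix), 1))         # 10.0
```

The two definitions can differ substantially for the same scores, so stating which one is used makes the reported percentages directly comparable across STOI, PESQ and NCM.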

Table 2.

Mean STOI scores for normal speech using estimated masks at three SNRs for two noise types (most and least effective scores are shown in bold and italics, respectively).

5.3. Normal Hearing Speech Quality

Table 3 shows the PESQ scores for the estimated masks for babble and SSN across a range of SNRs. All estimated masks improved PESQ relative to the unprocessed normal-speech mixtures. The best-performing mask for normal speech quality was the PSM+, while the least effective was the FFTM. Averaged over the two noise types and the three SNRs, the PSM+ showed a 27.7% improvement and the FFTM a 20.3% improvement. The PSM+ performed best for both babble noise and SSN and was closely followed by the QM.

Table 3.

Mean PESQ scores for normal speech using estimated masks at three SNRs for two noise types (most and least effective scores are shown in bold and italics, respectively).

5.4. Hearing-Impaired Speech Intelligibility

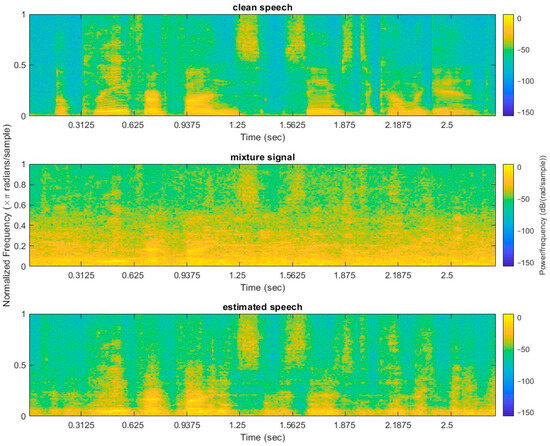

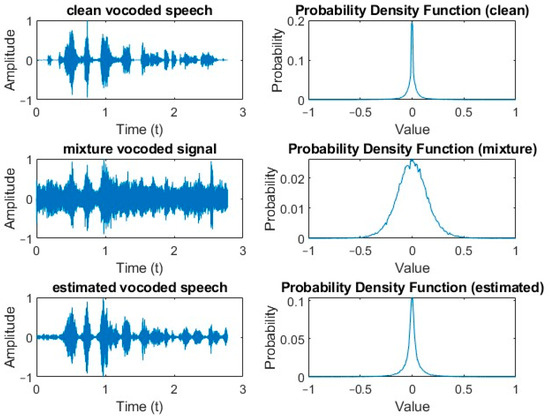

According to Newman and Chatterjee [64], noise-vocoded speech preserves the amplitude structure of speech but has substantially reduced spectral information, which is similar to what happens in cochlear implant signal processing. Figure 7 shows time-domain signals from the experiments in this study for a clean vocoded speech utterance, a mixture of this clean vocoded speech with babble noise at −5 dB SNR, and the resulting vocoded speech estimated using the IBM. This was the same utterance as in Figure 6, but here the clean speech had been vocoded to mimic the behavior of a cochlear implant. The utterance consisted of eight words and lasted just under three seconds. In the mixture, the clean speech was swamped by the babble noise, and a hearing-impaired listener would find it extremely difficult to discern what had been spoken. The estimated (enhanced) speech was not an exact replica of the clean speech but resembled it much more closely, so the utterance was more likely to be understood.
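A noise vocoder of the kind used to simulate cochlear implant processing can be sketched as follows: the signal is split into bands, each band's envelope is extracted, and the envelope modulates band-limited noise, so the amplitude structure is kept while fine spectral detail is replaced by noise. This is a minimal illustration, not the vocoder configuration used in this study; the channel count (8), log-spaced band edges (100–7000 Hz), 160 Hz envelope cut-off, and FFT brick-wall filters are all assumptions chosen for brevity (practical vocoders typically use FIR or IIR filterbanks [48]), and the function names are hypothetical.

```python
import numpy as np

def fft_bandpass(x, fs, lo, hi):
    """Zero-phase brick-wall band-pass via the FFT (keeps lo <= f < hi)."""
    X = np.fft.rfft(x)
    f = np.fft.rfftfreq(len(x), d=1 / fs)
    X[(f < lo) | (f >= hi)] = 0.0
    return np.fft.irfft(X, n=len(x))

def noise_vocode(x, fs=16000, n_channels=8, f_lo=100.0, f_hi=7000.0,
                 env_cutoff=160.0, seed=0):
    """Hypothetical noise vocoder: per-band envelopes modulate band-limited noise."""
    rng = np.random.default_rng(seed)
    edges = np.geomspace(f_lo, f_hi, n_channels + 1)   # log-spaced band edges
    out = np.zeros(len(x))
    for lo, hi in zip(edges[:-1], edges[1:]):
        band = fft_bandpass(x, fs, lo, hi)
        # Envelope: full-wave rectification followed by low-pass filtering
        env = np.maximum(fft_bandpass(np.abs(band), fs, 0.0, env_cutoff), 0.0)
        carrier = fft_bandpass(rng.standard_normal(len(x)), fs, lo, hi)
        # Re-filter so the modulated noise stays within the analysis band
        out += fft_bandpass(env * carrier, fs, lo, hi)
    # Match the overall RMS level of the input
    return out * np.sqrt(np.mean(x ** 2) / (np.mean(out ** 2) + 1e-12))
```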

Figure 7.

Time-domain representation of vocoded signals for clean speech, clean speech mixed with babble noise at −5 dB SNR, and IBM-estimated speech for the utterance “A plea for funds seems to come again”.

Table 4 shows the intelligibility, estimated using the NCM, for the estimated masks for babble and SSN across a range of SNRs. All estimated masks improved the NCM relative to the vocoded mixtures. The best-performing mask for hearing-impaired speech intelligibility was the PSM+, while the least effective was the FFTM. Averaged over the two noise types and the three SNRs, the PSM+ showed a 27.8% improvement and the FFTM a 27.0% improvement. The PSM+ performed best for both babble noise and SSN and was closely followed by the QM.

Table 4.

NCM scores for vocoded speech using estimated masks at three SNRs for two noise types (most and least effective scores are shown in bold and italics, respectively).

5.5. Hearing-Impaired Speech Similarity

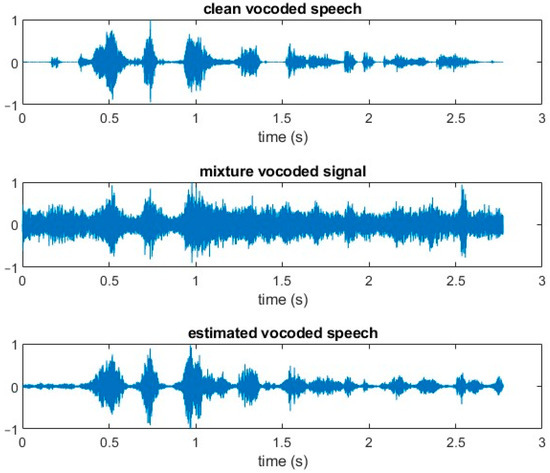

While it is not common practice to measure the quality of vocoded speech, it is useful to compare clean vocoded speech, vocoded mixtures, and estimated vocoded speech. As discussed in Section 4.5, this comparison was motivated by previous work on the use of “similarity” in speech and audio applications. The clean, mixture, and estimated vocoded signals were converted into histograms and then normalized so that they represented probability density functions, as shown in Figure 8. Similarity scores were calculated according to the methodology described in Section 4.5 and grouped by noise type (babble and SSN), with each noise type presented at three SNRs, as before. In general, a higher score indicates greater similarity. The one exception is the Shannon's entropy family, where a smaller Kullback–Leibler divergence (KLD) indicates greater similarity. The results for babble noise at the three SNRs are presented in Tables 5, 6 and 7, and the corresponding results for SSN in Tables 8, 9 and 10.
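The histogram-to-pdf step and a few representative scores can be sketched as below. The exact measures are defined in Section 4.5; the three shown here (the KLD from the Shannon's entropy family, the histogram intersection, and the Pearson correlation between the two pdfs) are common examples, and the bin count (100), the shared bin edges, and the small `eps` added to avoid log(0) are assumptions. Function names are hypothetical.

```python
import numpy as np

def shared_pdfs(ref, test, n_bins=100, eps=1e-10):
    """Normalized histograms of two signals on a common set of bins."""
    lo = min(ref.min(), test.min())
    hi = max(ref.max(), test.max())
    edges = np.linspace(lo, hi, n_bins + 1)
    p = np.histogram(ref, bins=edges)[0] + eps   # eps avoids log(0) in the KLD
    q = np.histogram(test, bins=edges)[0] + eps
    return p / p.sum(), q / q.sum()

def kl_divergence(p, q):
    return float(np.sum(p * np.log(p / q)))      # 0 when p == q; smaller = more similar

def intersection_similarity(p, q):
    return float(np.sum(np.minimum(p, q)))       # 1 when p == q; larger = more similar

def pdf_correlation(p, q):
    return float(np.corrcoef(p, q)[0, 1])        # Pearson correlation between pdfs
```

For each condition, `ref` would be the clean vocoded signal and `test` either the vocoded mixture or the estimated vocoded speech.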

Figure 8.

Time-domain representation and corresponding probability density functions of vocoded signals for clean speech, clean speech mixed with babble noise at −5 dB SNR, and IBM-estimated speech for the utterance “A plea for funds seems to come again”.

Table 5.

Similarity scores for vocoded speech using estimated masks at −5 dB babble (most and least effective scores are shown in bold and italics, respectively).

Table 6.

Similarity scores for vocoded speech using estimated masks at 0 dB babble (most and least effective scores are shown in bold and italics, respectively).

Table 7.

Similarity scores for vocoded speech using estimated masks at 5 dB babble (most and least effective scores are shown in bold and italics, respectively).

Table 8.

Similarity scores for vocoded speech using estimated masks at −5 dB SSN (most and least effective scores are shown in bold and italics, respectively).

Table 9.

Similarity scores for vocoded speech using estimated masks at 0 dB SSN (most and least effective scores are shown in bold and italics, respectively).

Table 10.

Similarity scores for vocoded speech using estimated masks at 5 dB SSN (most and least effective scores are shown in bold and italics, respectively).

5.5.1. Babble Noise

Table 5 shows that the PSM+ and the QM had the highest similarity at −5 dB babble while the FFTM exhibited the lowest similarity. Table 6 shows that the QM had the best similarity at 0 dB babble while the FFTM exhibited the worst similarity. Table 7 shows that the IBM had the best similarity at 5 dB babble while the FFTM exhibited the worst similarity.

5.5.2. Speech-Shaped Noise

Table 8 shows that the PSM+ and the QM had the best similarity at −5 dB SSN while the FFTM exhibited the worst similarity. Table 9 shows that the IBM had the best similarity at 0 dB SSN while the IRM exhibited the worst similarity. Table 10 shows that the PSM+ and the IBM had the best similarity at 5 dB SSN while the FFTM and the QM exhibited the worst similarity.

When the similarity/correlation scores are averaged over both noise types and all three SNRs, the best-performing mask is the QM, with a score of 0.8508, and the least effective is the FFTM, with a score of 0.8265; for reference, the corresponding score for the mixtures is 0.6421. When the KLD scores are averaged in the same way, the best-performing mask is again the QM (0.0423) and the least effective is again the FFTM (0.0648); the corresponding score for the mixtures is 0.4826.

6. Discussion

To place the results of this study in the context of the literature, the speech intelligibility results of Wang et al. [32] for normal speech are used as a basis for comparison. They used a neural network architecture similar to the one used in this paper. However, they used the TIMIT database for speech utterances, the NOISEX database for noise sources, a 64-channel Gammatone filterbank to generate the masks (as opposed to the STFT used here), and a set of complementary features. They also normalized and compressed unbounded mask values. In contrast, [34] recommended that unbounded masks should not be compressed, so the masks were not compressed in this study.

Table 11 compares the results of Wang et al. [32] for noisy speech and for speech estimated using three masks (i.e., the masks common to both [32] and this study) with the results of this study, for two noise types and three SNRs. The Pearson correlation between the two sets of results was 0.992, indicating a strong correlation. For this set of STOI scores, the mean over all mixtures, mask types, noise types, and SNRs was 0.754 for [32] versus 0.764 for this study, and the corresponding standard deviations were 0.098 and 0.104. This may suggest that generating the masks with the STFT yields marginally better STOI scores than the Gammatone filterbank approach, although the two studies also differ in speech corpus, noise sources, and features, so the comparison is only indicative.

Table 11.

STOI scores from Wang et al. [32] vs. this study.

The PESQ scores of [32] were consistently higher than those of this study but followed the same trend. When the speech utterances used in this study were resampled from 16 kHz (wideband) to 8 kHz (narrowband), the PESQ scores were closer to those of [32]. For hearing-impaired listeners, objective speech quality is generally considered less important than intelligibility, since understanding what is said matters more than how it sounds [49]. Nonetheless, speech quality is still considered here, as it is useful to predict how comfortable speech is to listen to, especially in the presence of background noise. PESQ scores are used here to predict quality for normal speech only. Averaged over both noise types and all three SNRs, the PSM+ is the best-performing mask for both STOI and PESQ.

When speech is noise-vocoded to mimic the sound heard by the wearer of a cochlear implant, the two masks newly proposed in this study, the QM and the PSM+, perform best. Averaged over SNRs, the PSM+ performs marginally better than the QM in terms of NCM for both babble and SSN.

The Multilayer Perceptron architecture was used as the baseline in this study. A different architecture might change the absolute performance but would probably not change the relative behavior of the different masks. Future work could verify this using an alternative architecture such as the Deep Complex Convolution Recurrent Network (DCCRN) used in [65], which would allow the complex-valued spectrum to be modelled directly.

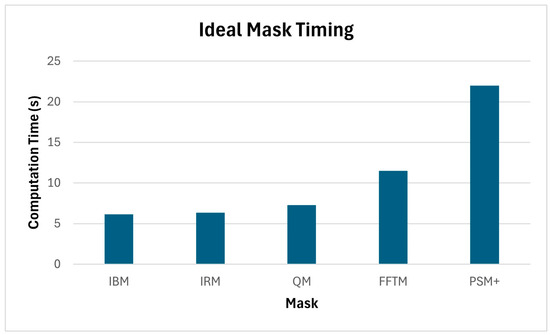

The masks were also compared in terms of computational load on a standard Windows laptop (Intel Core i7 processor) running MATLAB R2023b. The time taken to generate the 600 training mixtures and compute the corresponding ideal masks was measured using babble noise at −5 dB SNR, and the results are illustrated in Figure 9. As expected, the IBM, the simplest mask, took the least time (6.14 s). The IRM took slightly longer (6.33 s), followed by the QM, one of the proposed masks, at 7.27 s, and the FFTM at 11.49 s. The PSM+ took the longest (22.00 s), mainly because two masks, the PSM and the IRM, are effectively computed, and the PSM additionally requires the cosine of a phase difference.

Figure 9.

Time to generate training mixtures and compute ideal masks.

The similarity, correlation, and divergence values were first measured between the vocoded mixtures and the vocoded clean speech utterances as a reference. The same calculations were then repeated for all five estimated masks, this time between the estimated vocoded speech and the clean vocoded utterances. As expected, for both noise types, similarity and correlation increased and divergence decreased as the SNR increased. Figure 8 shows the probability density functions (pdfs) for a particular clean vocoded utterance, the same utterance mixed with babble noise, and the IBM-estimated vocoded speech. The means of the clean vocoded, vocoded mixture, and estimated vocoded signals were all approximately 0, but their standard deviations were 0.0906, 0.1558 and 0.1028, respectively. The standard deviations of the clean and estimated signals were therefore fairly similar, and both differed substantially from that of the vocoded mixture. Likewise, the pdf peaks of the clean vocoded, vocoded mixture, and estimated vocoded signals were 0.2, 0.025 and 0.1, respectively: the clean and estimated peaks were of the same order of magnitude, whereas the mixture peak was much lower, reflecting the flatter shape of its pdf. Overall, the similarity results were consistent with the other objective results, regardless of which similarity family was used.

All testing in this study used objective metrics to measure the intelligibility and quality performance of the masks. Future work could corroborate these results with subjective listening tests; for example, it would be of interest to compare percent-correct word recognition scores and speech reception thresholds with the NCM scores reported in Section 5.

7. Conclusions

The selection of an appropriate training target is important for both learning and generalization in speech separation [5], particularly when designing a speech enhancement system for cochlear implants. In this study, five masks were compared using a feedforward deep neural network with three hidden layers. Three of the masks are well established in the literature, and two were proposed in this work. For normal speech, all masks performed well for both quality and intelligibility, and the proposed masks performed best. For vocoded speech, all masks performed well in terms of intelligibility, similarity, and divergence, and the proposed masks again performed best. Based on the literature and the results of this study, it is beneficial to combine both magnitude and phase information when designing a mask to be used as a training target in a noise reduction system for cochlear implants.

Author Contributions

Conceptualization, F.H., E.J. and A.P.; Methodology, F.H. and E.J.; Software, F.H. and A.P.; Formal analysis, F.H., E.J. and A.P.; Investigation, F.H., E.J. and A.P.; Writing—original draft, F.H.; Writing—review & editing, F.H. and E.J.; Supervision, M.G. and E.J.; Project administration, E.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by Science Foundation Ireland under Grants 13/RC/2094 P2 and 18/SP/5942, co-funded under the European Regional Development Fund through the Southern and Eastern Regional Operational Programme to Lero—The Science Foundation Ireland Research Centre for Software (www.lero.ie) (accessed on 7 July 2021).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset used in this study is publicly available and has been cited in Section 4.1 of this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Bolner, F.; Goehring, T.; Monaghan, J.; van Dijk, B.; Wouters, J.; Bleeck, S. Speech enhancement based on neural networks applied to cochlear implant coding strategies. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 6520–6524.

- Goehring, T.; Bolner, F.; Monaghan, J.J.; van Dijk, B.; Zarowski, A.; Bleeck, S. Speech enhancement based on neural networks improves speech intelligibility in noise for cochlear implant users. Hear. Res. 2017, 344, 183–194.

- Goehring, T.; Keshavarzi, M.; Carlyon, R.P.; Moore, B.C. Using recurrent neural networks to improve the perception of speech in non-stationary noise by people with cochlear implants. J. Acoust. Soc. Am. 2019, 146, 705–718.

- Chen, J.; Wang, D. Dnn based mask estimation for supervised speech separation. In Audio Source Separation. Signals and Communication Technology; Makino, S., Ed.; Springer: Cham, Switzerland, 2018; pp. 207–235.

- Wang, D.; Chen, J. Supervised speech separation based on deep learning: An overview. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 1702–1726.

- Michelsanti, D.; Tan, Z.-H.; Zhang, S.-X.; Xu, Y.; Yu, M.; Yu, D.; Jensen, J. An overview of deep-learning-based audio-visual speech enhancement and separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 1368–1396.

- Wang, Y.; Wang, D. A deep neural network for time-domain signal reconstruction. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, 19–24 April 2015; pp. 4390–4394.

- Nossier, S.A.; Wall, J.; Moniri, M.; Glackin, C.; Cannings, N. Mapping and masking targets comparison using different deep learning based speech enhancement architectures. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

- Samui, S.; Chakrabarti, I.; Ghosh, S.K. Time–frequency masking based supervised speech enhancement framework using fuzzy deep belief network. Appl. Soft Comput. 2019, 74, 583–602.

- Lee, J.; Skoglund, J.; Shabestary, T.; Kang, H.-G. Phase-sensitive joint learning algorithms for deep learning-based speech enhancement. IEEE Signal Process. Lett. 2018, 25, 1276–1280.

- Heymann, J.; Drude, L.; Haeb-Umbach, R. Neural network based spectral mask estimation for acoustic beamforming. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 196–200.

- Kjems, U.; Boldt, J.B.; Pedersen, M.S.; Lunner, T.; Wang, D. Role of mask pattern in intelligibility of ideal binary-masked noisy speech. J. Acoust. Soc. Am. 2009, 126, 1415–1426.

- Abdullah, S.; Zamani, M.; Demosthenous, A. Towards more efficient DNN-based speech enhancement using quantized correlation mask. IEEE Access 2021, 9, 24350–24362.

- Bao, F.; Abdulla, W.H. Noise masking method based on an effective ratio mask estimation in Gammatone channels. APSIPA Trans. Signal Inf. Process. 2018, 7, e5.

- Bao, F.; Dou, H.-j.; Jia, M.-s.; Bao, C.-c. A novel speech enhancement method using power spectra smooth in wiener filtering. In Proceedings of the Signal and Information Processing Association Annual Summit and Conference (APSIPA), 2014 Asia-Pacific, Siem Reap, Cambodia, 9–12 December 2014; pp. 1–4.

- Boldt, J.B.; Ellis, D.P. A simple correlation-based model of intelligibility for nonlinear speech enhancement and separation. In Proceedings of the 2009 17th European Signal Processing Conference, Glasgow, UK, 24–28 August 2009; pp. 1849–1853.

- Lang, H.; Yang, J. Learning Ratio Mask with Cascaded Deep Neural Networks for Echo Cancellation in Laser Monitoring Signals. Electronics 2020, 9, 856.

- Choi, H.-S.; Kim, J.-H.; Huh, J.; Kim, A.; Ha, J.-W.; Lee, K. Phase-aware speech enhancement with deep complex u-net. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Erdogan, H.; Hershey, J.R.; Watanabe, S.; Le Roux, J. Phase-sensitive and recognition-boosted speech separation using deep recurrent neural networks. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, 19–24 April 2015; pp. 708–712.

- Hasannezhad, M.; Ouyang, Z.; Zhu, W.-P.; Champagne, B. Speech enhancement with phase sensitive mask estimation using a novel hybrid neural network. IEEE Open J. Signal Process. 2021, 2, 136–150.

- Li, H.; Xu, Y.; Ke, D.; Su, K. Improving speech enhancement by focusing on smaller values using relative loss. IET Signal Process. 2020, 14, 374–384.

- Mayer, F.; Williamson, D.S.; Mowlaee, P.; Wang, D. Impact of phase estimation on single-channel speech separation based on time-frequency masking. J. Acoust. Soc. Am. 2017, 141, 4668–4679.

- Ouyang, Z.; Yu, H.; Zhu, W.-P.; Champagne, B. A fully convolutional neural network for complex spectrogram processing in speech enhancement. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 5756–5760.

- Tan, K.; Wang, D. Complex spectral mapping with a convolutional recurrent network for monaural speech enhancement. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 6865–6869.

- Routray, S.; Mao, Q. Phase sensitive masking-based single channel speech enhancement using conditional generative adversarial network. Comput. Speech Lang. 2022, 71, 101270.

- Wang, X.; Bao, C. Mask estimation incorporating phase-sensitive information for speech enhancement. Appl. Acoust. 2019, 156, 101–112.

- Zhang, Q.; Song, Q.; Ni, Z.; Nicolson, A.; Li, H. Time-frequency attention for monaural speech enhancement. In Proceedings of the ICASSP 2022–2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 7852–7856.

- Zheng, N.; Zhang, X.-L. Phase-aware speech enhancement based on deep neural networks. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 27, 63–76.

- Sivapatham, S.; Kar, A.; Bodile, R.; Mladenovic, V.; Sooraksa, P. A deep neural network-correlation phase sensitive mask based estimation to improve speech intelligibility. Appl. Acoust. 2023, 212, 109592.

- Sowjanya, D.; Sivapatham, S.; Kar, A.; Mladenovic, V. Mask estimation using phase information and inter-channel correlation for speech enhancement. Circuits Syst. Signal Process. 2022, 41, 4117–4135.

- Wang, D. On ideal binary mask as the computational goal of auditory scene analysis. In Speech Separation by Humans and Machines; Springer: Berlin/Heidelberg, Germany, 2005; pp. 181–197.

- Wang, Y.; Narayanan, A.; Wang, D. On training targets for supervised speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 1849–1858.

- Li, X.; Li, J.; Yan, Y. Ideal Ratio Mask Estimation Using Deep Neural Networks for Monaural Speech Segregation in Noisy Reverberant Conditions. In Proceedings of the Interspeech, Stockholm, Sweden, 20–24 August 2017; pp. 1203–1207.

- Wang, Z.; Wang, X.; Li, X.; Fu, Q.; Yan, Y. Oracle performance investigation of the ideal masks. In Proceedings of the 2016 IEEE International Workshop on Acoustic Signal Enhancement (IWAENC), Xi’an, China, 13–16 September 2016; pp. 1–5.

- Liang, S.; Liu, W.; Jiang, W.; Xue, W. The optimal ratio time-frequency mask for speech separation in terms of the signal-to-noise ratio. J. Acoust. Soc. Am. 2013, 134, EL452–EL458.

- Xia, S.; Li, H.; Zhang, X. Using optimal ratio mask as training target for supervised speech separation. In Proceedings of the 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Kuala Lumpur, Malaysia, 12–15 December 2017; pp. 163–166.

- Issa, R.J.; Al-Irhaym, Y.F. Audio source separation using supervised deep neural network. In Proceedings of the Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2021; p. 022077.

- Williamson, D.S.; Wang, Y.; Wang, D. Complex ratio masking for monaural speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 2015, 24, 483–492.

- Williamson, D.S.; Wang, D. Time-frequency masking in the complex domain for speech dereverberation and denoising. IEEE/ACM Trans. Audio Speech Lang. Process. 2017, 25, 1492–1501.

- Hu, Y.; Loizou, P.C. A new sound coding strategy for suppressing noise in cochlear implants. J. Acoust. Soc. Am. 2008, 124, 498–509.

- Hasan, T.; Hansen, J.H. Acoustic factor analysis for robust speaker verification. IEEE Trans. Audio Speech Lang. Process. 2012, 21, 842–853.

- Zhou, L.; Jiang, W.; Xu, J.; Wen, F.; Liu, P. Masks fusion with multi-target learning for speech enhancement. arXiv 2021, arXiv:2109.11164.

- Rothauser, E. IEEE recommended practice for speech quality measurements. IEEE Trans. Audio Electroacoust. 1969, 17, 225–246.

- Loizou, P.C. Speech Enhancement: Theory and Practice; CRC Press: Boca Raton, FL, USA, 2013.

- Liu, Y.; Zhang, H.; Zhang, X. Using Shifted Real Spectrum Mask as Training Target for Supervised Speech Separation. In Proceedings of the Interspeech, Hyderabad, India, 2–6 September 2018; pp. 1151–1155.

- Henry, F.; Parsi, A.; Glavin, M.; Jones, E. Experimental Investigation of Acoustic Features to Optimize Intelligibility in Cochlear Implants. Sensors 2023, 23, 7553.

- Healy, E.W.; Yoho, S.E.; Wang, Y.; Wang, D. An algorithm to improve speech recognition in noise for hearing-impaired listeners. J. Acoust. Soc. Am. 2013, 134, 3029–3038.

- Cychosz, M.; Winn, M.B.; Goupell, M.J. How to vocode: Using channel vocoders for cochlear-implant research. J. Acoust. Soc. Am. 2024, 155, 2407–2437.

- Falk, T.H.; Parsa, V.; Santos, J.F.; Arehart, K.; Hazrati, O.; Huber, R.; Kates, J.M.; Scollie, S. Objective quality and intelligibility prediction for users of assistive listening devices: Advantages and limitations of existing tools. IEEE Signal Process. Mag. 2015, 32, 114–124.

- Cosentino, S.; Marquardt, T.; McAlpine, D.; Falk, T.H. Towards objective measures of speech intelligibility for cochlear implant users in reverberant environments. In Proceedings of the 2012 11th International Conference on Information Science, Signal Processing and their Applications (ISSPA), Montreal, QC, Canada, 2–5 July 2012; pp. 666–671.

- Santos, J.F.; Cosentino, S.; Hazrati, O.; Loizou, P.C.; Falk, T.H. Performance comparison of intrusive objective speech intelligibility and quality metrics for cochlear implant users. In Proceedings of the Thirteenth Annual Conference of the International Speech Communication Association, Portland, OR, USA, 9–13 September 2012.

- Kokkinakis, K.; Loizou, P.C. Evaluation of objective measures for quality assessment of reverberant speech. In Proceedings of the 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 22–27 May 2011; pp. 2420–2423.

- Hu, Y.; Loizou, P.C. Evaluation of objective quality measures for speech enhancement. IEEE Trans. Audio Speech Lang. Process. 2007, 16, 229–238.

- Taal, C.H.; Hendriks, R.C.; Heusdens, R.; Jensen, J. An algorithm for intelligibility prediction of time–frequency weighted noisy speech. IEEE Trans. Audio Speech Lang. Process. 2011, 19, 2125–2136.

- Taal, C.H.; Hendriks, R.C.; Heusdens, R.; Jensen, J. A short-time objective intelligibility measure for time-frequency weighted noisy speech. In Proceedings of the 2010 IEEE International Conference on Acoustics, Speech and Signal Processing, Dallas, TX, USA, 14–19 March 2010; pp. 4214–4217.

- Rix, A.W.; Beerends, J.G.; Hollier, M.P.; Hekstra, A.P. Perceptual evaluation of speech quality (PESQ)-a new method for speech quality assessment of telephone networks and codecs. In Proceedings of the 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings (Cat. No. 01CH37221), Salt Lake City, UT, USA, 7–11 May 2001; pp. 749–752.

- Kurittu, A.; Samela, J.; Lakaniemi, A.; Mattila, V.-v.; Zacharov, N. Application and Verification of the Objective Quality Assessment Method According to ITU Recommendation Series ITU-T P. 862. J. Audio Eng. Soc. 2006, 54, 1189–1202.

- Holube, I.; Kollmeier, B. Speech intelligibility prediction in hearing-impaired listeners based on a psychoacoustically motivated perception model. J. Acoust. Soc. Am. 1996, 100, 1703–1716.

- Lai, Y.-H.; Chen, F.; Wang, S.-S.; Lu, X.; Tsao, Y.; Lee, C.-H. A deep denoising autoencoder approach to improving the intelligibility of vocoded speech in cochlear implant simulation. IEEE Trans. Biomed. Eng. 2017, 64, 1568–1578.

- Kalaivani, S.; Thakur, R.S. Modified Hidden Markov Model for Speaker Identification System. Int. J. Adv. Comput. Electron. Eng. 2017, 2, 1–7.

- Thakur, A.S.; Sahayam, N. Speech recognition using euclidean distance. Int. J. Emerg. Technol. Adv. Eng. 2013, 3, 587–590.

- Park, M.W.; Lee, E.C. Similarity measurement method between two songs by using the conditional Euclidean distance. Wseas Trans. Inf. Sci. Appl. 2013, 10, 12.

- Cha, S.-H. Comprehensive survey on distance/similarity measures between probability density functions. Int. J. Math. Models Methods Appl. Sci. 2007, 1, 300–307.

- Newman, R.; Chatterjee, M. Toddlers’ recognition of noise-vocoded speech. J. Acoust. Soc. Am. 2013, 133, 483–494.

- Hu, Y.; Liu, Y.; Lv, S.; Xing, M.; Zhang, S.; Fu, Y.; Wu, J.; Zhang, B.; Xie, L. DCCRN: Deep complex convolution recurrent network for phase-aware speech enhancement. arXiv 2020, arXiv:2008.00264.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).