1. Introduction

In recent years, conventional underwater imaging methods based on sonar or stereovision have struggled because of their vulnerability to underwater noise, which makes precise three-dimensional reconstruction of submerged targets increasingly difficult. As a result, interest in underwater optical three-dimensional reconstruction technology has grown [1]. With the advancement of deep learning, single-image 3D reconstruction has made significant progress in underwater imaging. End-to-end deep learning methods, which take a single image as input and output a reconstructed 3D model, have surpassed traditional methods such as stereovision; more efficient feature extraction improves the reconstruction [2,3]. Nevertheless, this approach has drawbacks. First, the available public datasets are small and lack diversity, so training data are scarce. Second, the resolution and accuracy of the reconstructed 3D objects remain limited. Third, acquiring images in low-light underwater environments without an active light source is a significant challenge. Another prevalent technique is sonar imaging, which offers only modest resolution and is limited in capturing intricate details and detecting small objects. Underwater laser scanning uses laser point cloud scanning to capture three-dimensional models of the underwater environment precisely and without physical contact. Because light refracts when it passes between different media, refraction correction is required to improve imaging accuracy. With that correction, the method is well suited to measuring a wide range of underwater scenes and acquiring precise models of targets. Underwater laser scanning imaging serves multiple purposes, including target identification for underwater robots, high-resolution imaging of structures, real-time data assistance for underwater rescue operations, detection of underwater torpedoes, and identification of undersea buildings [4]. In the industrial sector, it is widely applied in product quality inspection, building analysis [5], water conservancy troubleshooting, and related areas [6]. Marine scientific research uses the technology to explore oil and gas resources beneath the seabed, map seabed topography, and search for submerged archaeological structures of historical significance [7]. Underwater imaging technology is also used to detect reefs and beacon structures and is thus employed to some degree in maritime vessel navigation [8]. Additionally, it can be used to identify the contamination source in contaminated waters [9].

As an active optical measurement technology, three-dimensional line-structured light reconstruction enables high-precision reconstruction of measured targets [10]. A laser projects a line onto the target, and a turntable rotates the line across the scene; the distance and angle of each laser point are recorded during scanning, which yields three-dimensional coordinates of the target object [11]. The linear laser scanning system consists of three major components: a camera (CCD), a linear laser, and a scanning turntable. Calibrating the system parameters is a crucial step in achieving accurate three-dimensional reconstruction [12]. The parameters include the camera's intrinsic and extrinsic matrices, the light plane equation, and the equation of the system's rotary axis. The light plane is among the most important: an accurately calibrated light plane equation describes how the laser sheet propagates, which is the basis for accurate refraction compensation in water. Compensating for refraction is essential to the precision of the laser scan data, so precise light plane calibration is essential for acquiring high-quality point cloud data. Camera calibration establishes the transformation between the pixel coordinate system and the camera coordinate system. Using this transformation, the laser stripes on the calibration target can be fitted by least squares to derive the light plane equation in the camera coordinate system [13]. Camera and light plane calibration predominantly rely on the Zhang [14] method. This widely used, dependable, and straightforward method determines the intrinsic and extrinsic parameters of the camera by extracting corner points from checkerboard images captured from multiple angles and fitting a camera model to them. Alternative techniques such as the Dewar method [15], the sawtooth method, and the step measurement method [16] can also yield a set of high-precision calibration points for camera calibration. For the rotary axis, commonly used methods include the cylinder-based approach, the standard ball-based method, and the checkerboard calibration method. These methods observe a target during rotation to obtain a large number of accurate corner points. Each corner point traces a circle as the system rotates, and the centers of the circles traced at different heights lie on the axis of rotation. Fitting a straight line to these circle centers gives the equation of the axis of rotation.

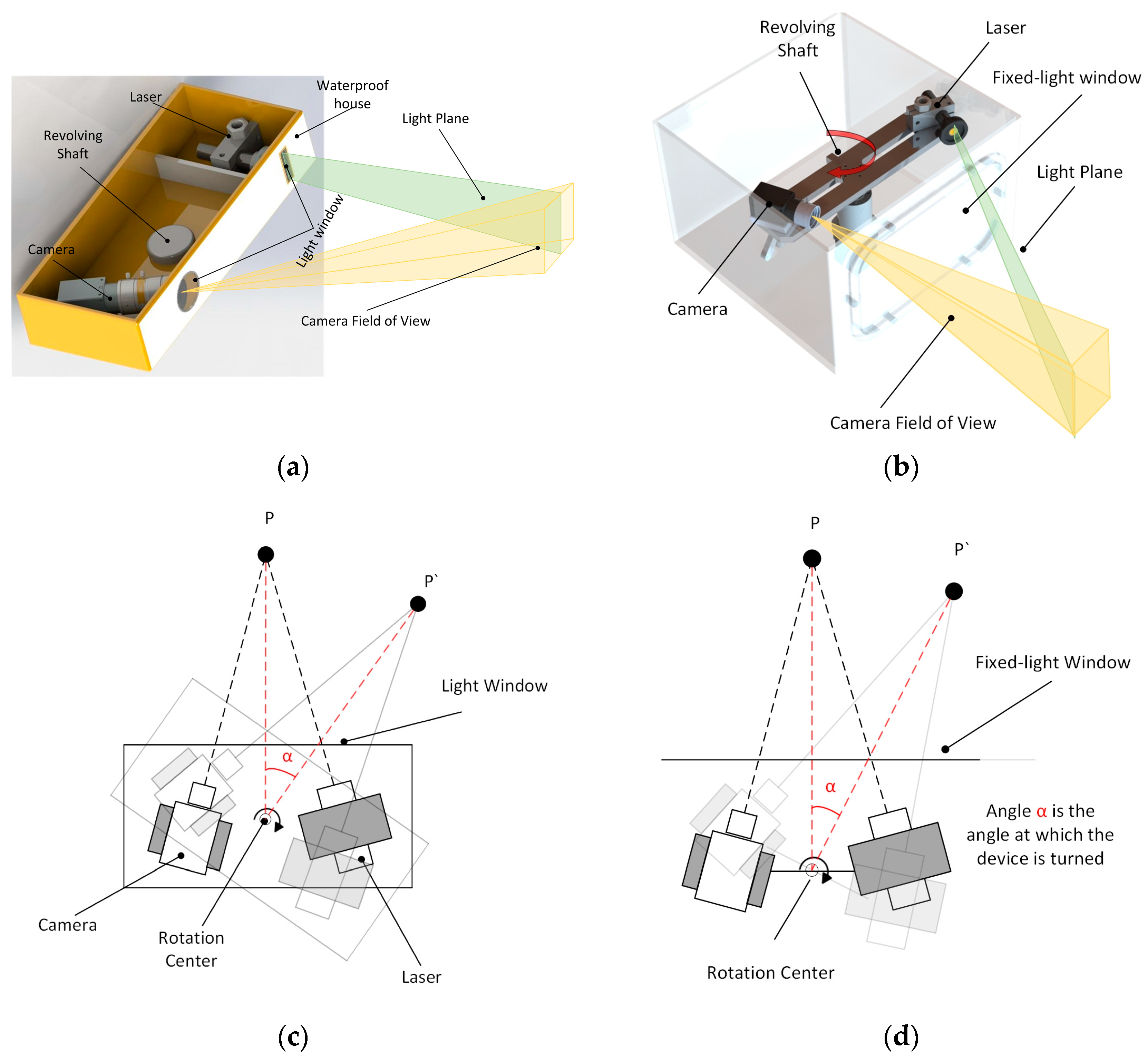

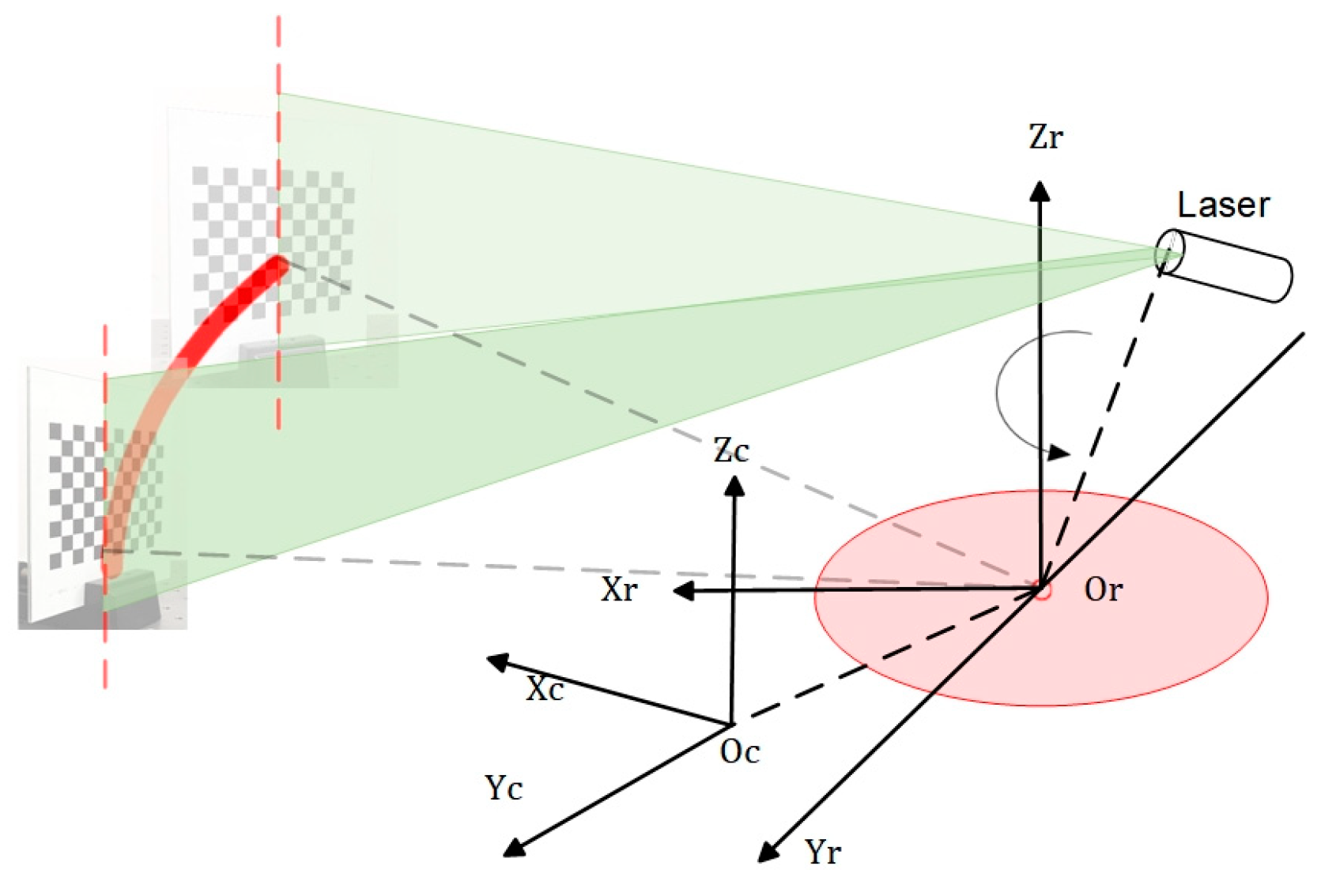

To address refraction error, as seen in Figure 1a,b, it is common practice in underwater 3D reconstruction to fix the relative positions of the light window, the laser scanning imaging system, and the rotary table. With a fixed configuration, the system’s refraction error can be analyzed in a more stable manner [17]. This makes it possible to build a static compensation algorithm that models the refraction process and mitigates its impact on the laser scanning imaging system. However, the analysis lacks specificity and does not comprehensively consider the mechanical parameters of the imaging system [18]. The concept of stable refraction can be extended by examining in detail the two refraction processes across the three media (air, glass, and water) and by taking mechanical parameters of the system into account, such as the distance between the window plane and the optical center. This makes the resulting refraction compensation model [19] more reliable and effective. However, calibrating the distance between the optical center and the window plane often relies heavily on the compensation model itself, and the solution process is complex. Besides analyzing the refraction process to reduce the error, Miguel Castillon et al. [20] presented a novel three-dimensional laser sensor that uses the inherent properties of a two-axis mirror to project a curve that becomes a straight line after refraction in water, which effectively mitigates refraction errors. In low-light underwater conditions, the rotation of a conventional scanning device disturbs the water, which warps the light stripe that the sensor receives from the object’s surface. To solve this problem, this work studies an underwater 3D scanning and imaging system that uses a fixed light window and a spinning laser (FWLS). The refraction compensation algorithm of the FWLS scanning imaging system resembles the method used in the galvanometer–fixed light window imaging system [21]. However, the algorithm in the latter system typically accounts only for the pixel coordinate shift of the return light. As seen in the schematics of Figure 1b,d, this study develops a model of the dynamic process of an FWLS scanning imaging system based on the imaging principle. The refraction compensation technique is derived by solving for the light plane equation and the dynamic pixel coordinate offsets under dynamic refraction.

In previous studies, water currents, attenuation, and refraction made calibration more difficult. To deal with these problems, Wang et al. improved the laser 3D reconstruction method and proposed a deep neural network (DNN)-based detector for augmented reality (AR) markers, together with a training approach that makes the model robust. In their evaluation, automatic laser line ID determination reaches 100 percent accuracy, and the detection rate of underwater AR markers reaches a maximum of 91 percent [22]. Chen et al. proposed an underwater stereo-matching algorithm based on a convolutional neural network (CNN) for underwater 3D reconstruction. It achieves high frame rates at the cost of substantial computing power, and training directly on unprocessed data significantly reduces the complexity of the training process. In underwater fish reconstruction experiments, the error rate remains below 6% [23]. Nocerino et al. introduced a dense image-matching technique together with a thorough evaluation of the chosen method, and validated the accuracy of their algorithm by point cloud analysis on eight distinct objects and scenarios. The approach produces a high-density point cloud, which is used to regulate and fine-tune the reconstruction quality of objects based on the dataset’s features. However, its numerous and ambiguous parameters can affect the final reconstruction [24]. Fabio Menna et al. examined the practicality of using photogrammetry to create 3D models of objects with intricate shapes and optical characteristics. Their experiments used a semi-automated procedure on three objects with varying shapes and optical properties; the procedure keeps costs low and produces satisfactory geometries, textures, and accurate color information. However, the high f-numbers needed for sufficient depth of field lead to excessively long exposure times, and the bulky equipment is unsuitable for intricate underwater environments [25]. Thomas Luhmann reviewed the progression of cameras and calibration techniques, examining several camera models with emphasis on current advances in automatic calibration and photogrammetric precision. Self-calibration is a completely automated procedure used for both targeted and untargeted objects and scenes, and its reliability is enhanced by increasing the redundancy of the observation data. Self-calibration reduces cost; however, calibration errors still affect the calculation of object points and the independent accuracy of calibrated scenes [26]. Hans-Gerd Maas proposed an automated measurement system that uses structured light projection to measure objects with limited surface texture at close range, and tested the method on various examples. It offers the benefits of photogrammetric systems without operator-provided approximations or initial matches. Nevertheless, the technique detects deformation only in the depth coordinate direction, and the point grating must be physically applied to the surface to compute local strain and shear accurately [27]. Michael Bleier introduced a two-part 3D scanning system for shallow water, with one section above the water and one below. It uses satellite navigation and a high-power cross-line laser to extend the detection range. In experimental settings, close-range scanning shows errors at the millimeter level. The high-power laser overcomes water absorption and interference from ambient light, and the cross-line laser pattern allows unrestricted scanning motion. However, the system is limited to shallow water detection within the range of 5–10 m, and its GNSS antenna, located near the lid, provides low localization accuracy, which can result in errors [28]. To compensate for underwater refraction errors, one approach is to modify an existing method to minimize errors [29]; alternatively, a new correction algorithm can be developed specifically to address refraction-related distortions [30]. Hao [31] and Xue developed a refraction error correction algorithm based on their system’s refraction model, which improves the system’s three-dimensional imaging accuracy to approximately 0.6 mm. Ou et al. [32] combined binocular cameras with laser fusion technology to model their system, analyzed the refraction error, performed system calibration, and achieved high-precision imaging in low-light underwater conditions.

However, the scanning modes of the systems examined by Hao, Xue, Ou, et al. disturb the water during scanning. The resulting fluctuations distort the light stripe on the surface of the measured object and introduce reconstruction errors. Therefore, this paper studies the FWLS scanning and imaging system depicted in Figure 1d. Building on the refraction error compensation algorithm of Hao, Xue, et al., it develops a mathematical model of the dynamic refraction process of the FWLS scanning imaging system and proposes a dynamic refraction error compensation algorithm. The aim is to avoid the fluctuation error that rotational scanning causes in the water body under low-light underwater conditions.

2. Description of the Underwater Imaging Device

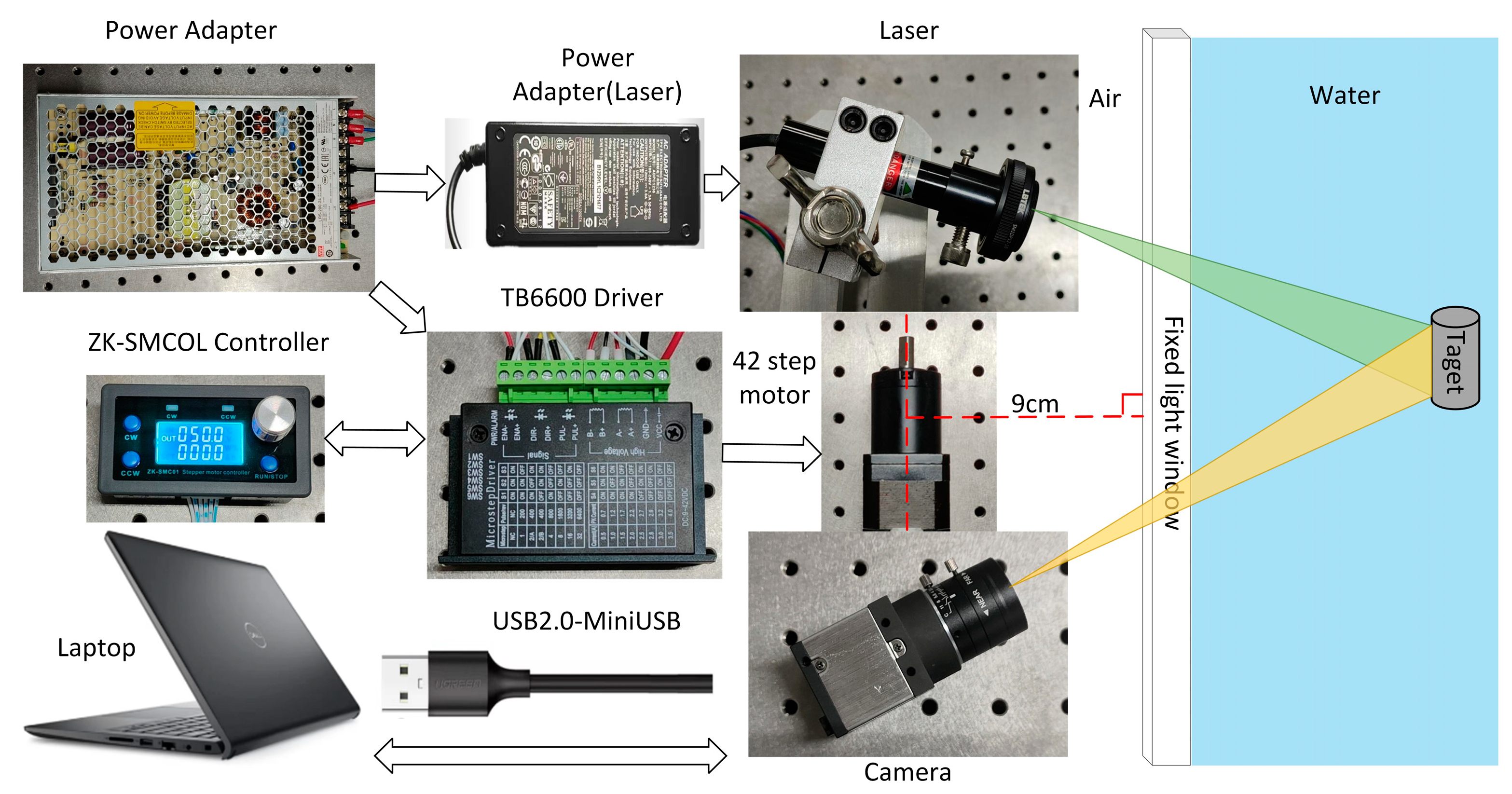

As depicted in Figure 2, the hardware of the underwater imaging equipment comprises a CCD camera, a linear laser, a rotary table, a controller, and a driver. The camera is a Thorlabs DCU224C industrial camera (Optical equipment, Newton, NJ, USA) with a resolution of 1280 × 1024 pixels and a spectral range of 350 nm to 600 nm. It is fitted with an 8 mm focal length lens, model MVCAM-LC0820-5M (Lankeguangdian Co. Ltd., Hangzhou, China), which provides a field of view of 46.8° horizontally, 36° vertically, and 56° diagonally. The linear laser has a wavelength of 520 nm and an output power of 200 mW. The rotary table consists of a 42-step motor with its corresponding controller and driver.

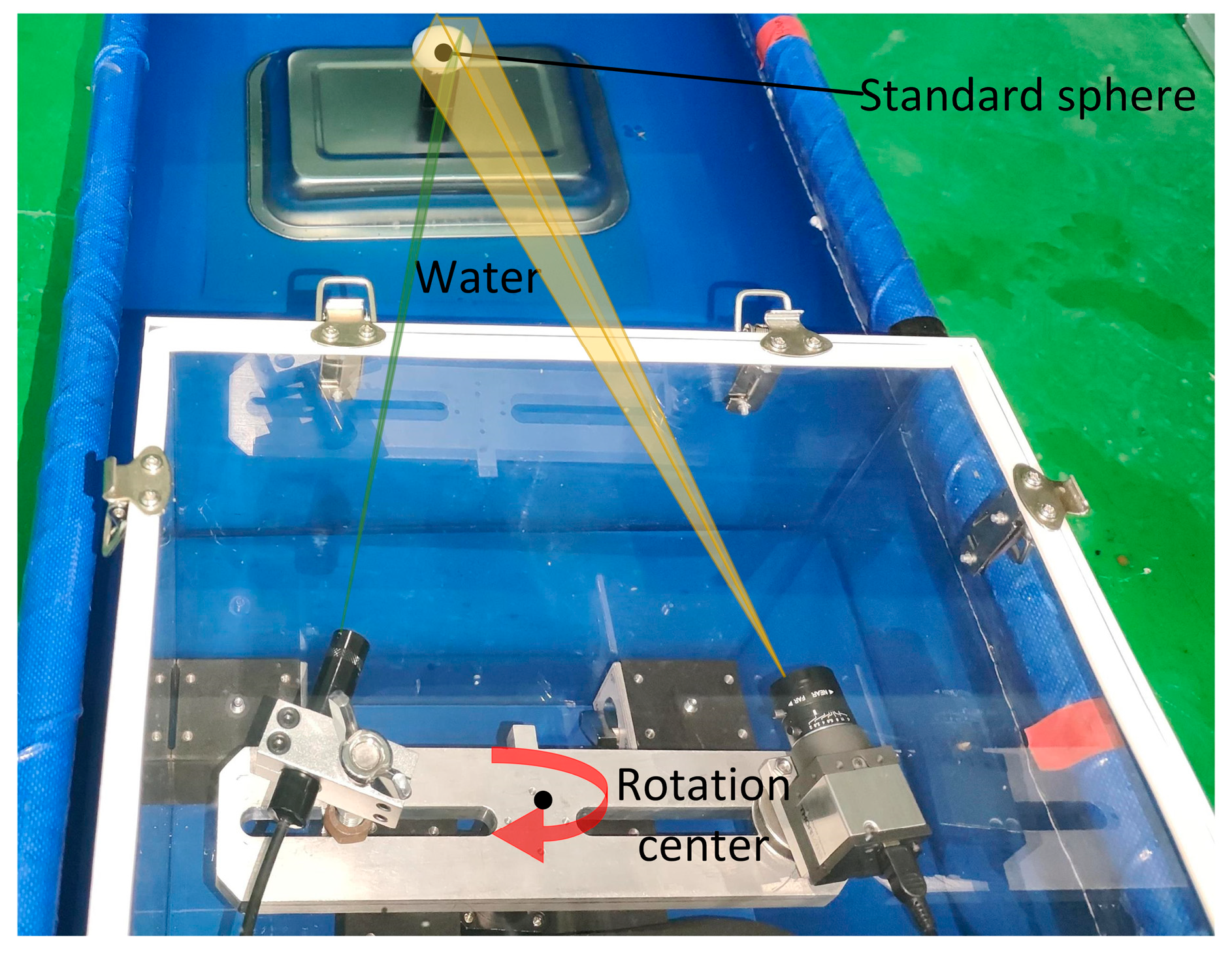

As seen in Figure 3, the camera and laser are fixed to opposite ends of the rotary table beam, so their relative positions remain constant. The motor rotation axis is at a perpendicular distance of 9 cm from the light window. The controller sets the motor’s rotation speed and direction to rotate the beam, which lets the system scan horizontally from a fixed point over a 360° range. The complete device is housed in a waterproof cover made of ultra-clear glass with a transmittance of 90%, dimensions of 30 cm × 30 cm × 40 cm, and a wall thickness of 5 mm. The refractive index of water is taken as 1.3333.

The system workflow is as follows. First, the camera, light plane, and rotation axis are calibrated in air. Then, the underwater refraction error compensation algorithm is integrated into the 3D point cloud-stitching program. During a scan, the controller rotates the rotary table at the preset rotational speed while the camera captures images of the light stripe. After the scan is finished, the captured images are processed on a PC to obtain the image coordinates of the center of the structured-light stripe. These coordinates are transformed into the spatial coordinates of the object’s surface. After dynamic refraction correction, the 3D point cloud model of the object is built by stitching the per-frame points according to the system’s rotational speed.
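The final stitching step of this workflow can be illustrated with a minimal sketch. It assumes that each frame yields an N×3 array of stripe points already expressed in a common coordinate system, and that frame i is rotated about the calibrated axis by i times a constant angular step. The function names, the sign convention of the rotation, and the sample data are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def rotate_about_axis(points, axis_point, axis_dir, angle_rad):
    """Rotate Nx3 points about a 3D axis using Rodrigues' rotation formula."""
    k = np.asarray(axis_dir, dtype=float)
    k = k / np.linalg.norm(k)
    p = np.asarray(points, dtype=float) - axis_point
    cos_a, sin_a = np.cos(angle_rad), np.sin(angle_rad)
    rotated = (p * cos_a
               + np.cross(k, p) * sin_a
               + np.outer(p @ k, k) * (1.0 - cos_a))
    return rotated + axis_point

def stitch_profiles(profiles, axis_point, axis_dir, step_rad):
    """Merge per-frame stripe profiles; frame i is rotated by i * step_rad."""
    clouds = [rotate_about_axis(profile, axis_point, axis_dir, i * step_rad)
              for i, profile in enumerate(profiles)]
    return np.vstack(clouds)

# Hypothetical data: the same 2-point profile seen in 4 frames, 90 degrees apart.
profile = np.array([[1.0, 0.0, 0.0], [1.0, 0.0, 5.0]])
cloud = stitch_profiles([profile] * 4, axis_point=np.zeros(3),
                        axis_dir=[0.0, 0.0, 1.0], step_rad=np.pi / 2)
print(cloud.shape)
```

In a real scan the angular step would follow from the motor speed and the camera frame rate, and the refraction correction would be applied to each profile before stitching.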

2.1. The Calibration of Light Planes and Rotation Axes

2.1.1. Light Plane Fitting Utilizing the Least-Squares Method

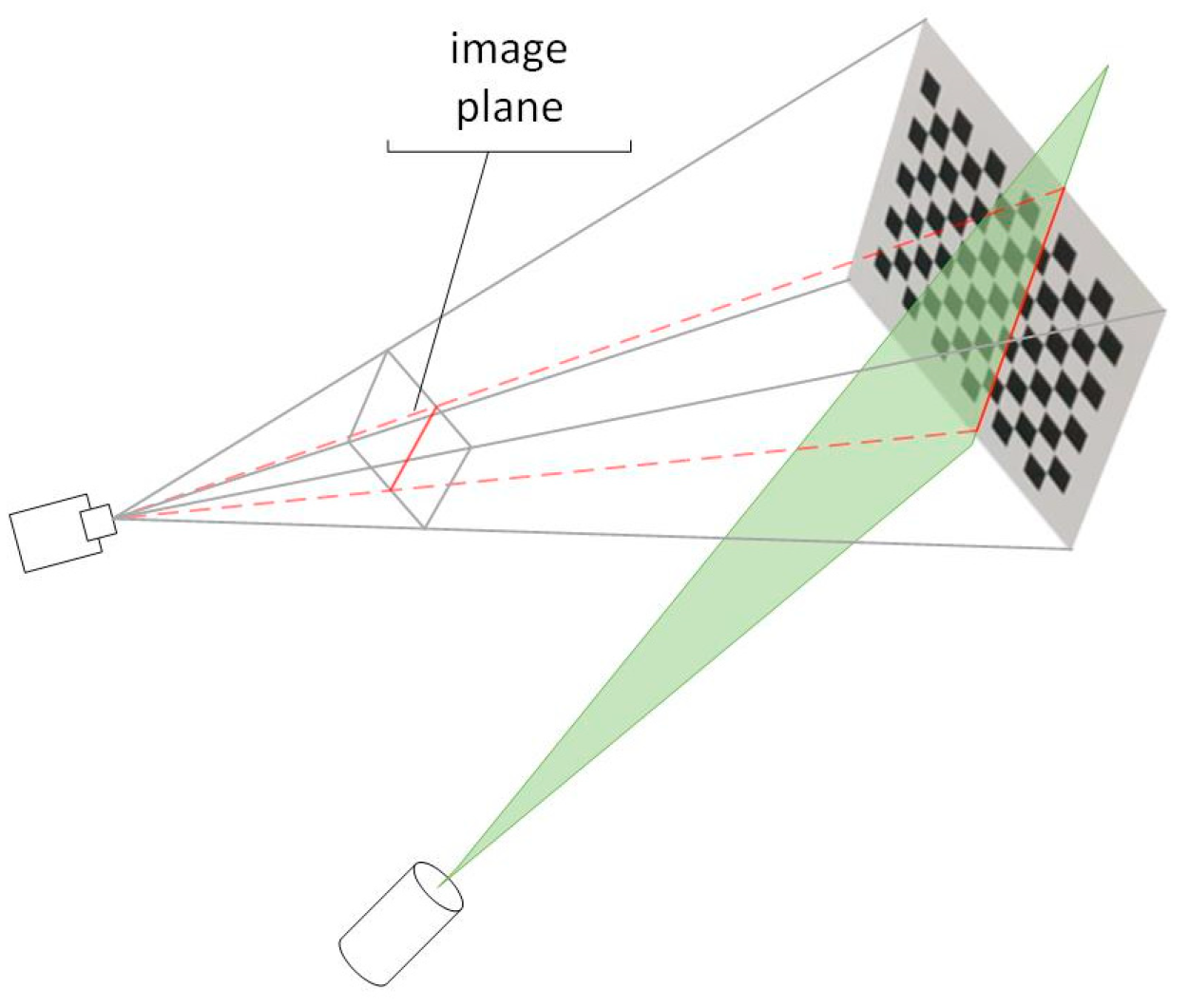

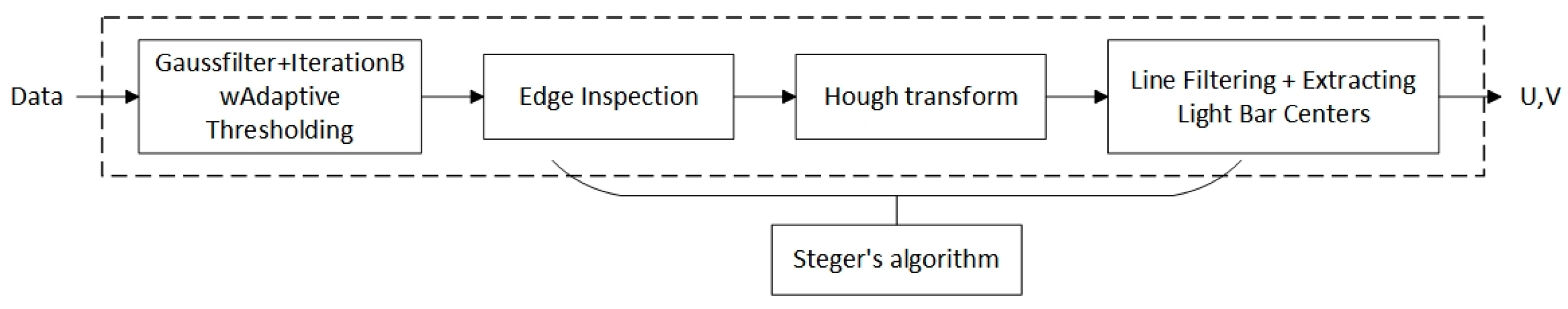

The camera’s intrinsic and extrinsic parameter matrices, as well as the transformation matrix between the camera coordinate system and the checkerboard coordinate system [33], can be readily derived using the Zhang calibration method. As seen in

Figure 4, if

is the light plane equation, then finding the coefficients of this plane equation requires only four points. Using the checkerboard calibration method, first capture two sets of checkerboard calibration images, one with and one without light stripes. Next, use the light stripe extraction method in Section 3 to extract the coordinates of the light stripes on the target. Finally, use the transformation matrix to convert these coordinates to the camera coordinate system. From the two sets of images, the equations of two stripe lines can be extracted, and the light plane equation can be found using least-squares fitting [34,35].
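The least-squares plane fit described above can be sketched as follows. The sketch assumes the plane is parameterized as z = a·x + b·y + c (a common choice, not confirmed by the text), forms the normal equations obtained by setting the partial derivatives of the squared error to zero, and solves the resulting 3×3 linear system. The function name and the synthetic stripe points are hypothetical.

```python
import numpy as np

def fit_plane_normal_equations(points):
    """Fit z = a*x + b*y + c to Nx3 points by solving the normal equations."""
    pts = np.asarray(points, dtype=float)
    x, y, z = pts[:, 0], pts[:, 1], pts[:, 2]
    n = len(pts)
    # Normal equations from d/da, d/db, d/dc of sum((a*x + b*y + c - z)^2) = 0
    m = np.array([[np.sum(x * x), np.sum(x * y), np.sum(x)],
                  [np.sum(x * y), np.sum(y * y), np.sum(y)],
                  [np.sum(x),     np.sum(y),     n]])
    rhs = np.array([np.sum(x * z), np.sum(y * z), np.sum(z)])
    a, b, c = np.linalg.solve(m, rhs)
    return a, b, c

# Synthetic stripe points on two lines of the plane z = 0.5*x - 0.2*y + 400
xs = np.linspace(-50.0, 50.0, 20)
stripe_1 = np.column_stack((xs, np.full_like(xs, 10.0), 0.5 * xs - 2.0 + 400.0))
stripe_2 = np.column_stack((xs, np.full_like(xs, 60.0), 0.5 * xs - 12.0 + 400.0))
a, b, c = fit_plane_normal_equations(np.vstack((stripe_1, stripe_2)))
print(a, b, c)
```

A 3×3 linear system like this one can equally be solved by hand with Cramer's rule; `np.linalg.solve` is used here only for brevity. This parameterization fails for planes parallel to the z axis, in which case an SVD-based fit of the general form would be the safer choice.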

The error equation follows from the plane equation:

Setting the partial derivatives of the error equation to zero and simplifying gives:

Since the system of equations is linear, Cramer’s rule can be used to solve it and obtain the final coefficients of the plane equation as:

In conclusion, the light plane equation obtained from the experimental calibration at a measurement distance of 40 cm is:

2.1.2. The Calibration of Rotary Axis

In rotary scanning measurement, the rotation angle is known from the motor controller. Once the line equation of the rotation axis is obtained, the point cloud of the entire object surface can be computed from the camera’s extrinsic matrix and the light plane equation. As depicted in the theoretical model of the imaging device illustrated in

Figure 5, it is observed that the

Zr axis aligns with the rotational axis of the system. The coordinate system for the rotation axis is denoted as

. The camera coordinate system at the initial scanning position of the device is denoted as

, while the CCD optical center is represented as

Oc. After rotating clockwise by an angle of α around the rotation axis

Zr, the camera coordinate system is denoted as

.

As depicted in Figure 6, which illustrates the rotary axis calibration process, every point on the target must move along a circle centered on the axis of rotation while the device rotates and scans. First, the checkerboard corner points are located at each rotation angle using the Zhang method. The corner coordinates are then converted to the camera coordinate system, and the trajectory of each corner is fitted to a circle. Because corner points at different heights on the checkerboard target rotate about different points along the rotation axis, this yields a series of circle centers. Finally, the circle centers are fitted to a straight line, which gives the equation of the rotary axis in the camera coordinate system as

[36,37].
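The circle-then-line procedure can be sketched as below. It assumes each corner trajectory is available as an N×3 array in camera coordinates. The circle fit (plane fit by SVD followed by an algebraic Kåsa fit in that plane) and the SVD line fit are standard choices swapped in for illustration; the text does not state which fitting routines the authors used, and the synthetic axis is hypothetical.

```python
import numpy as np

def fit_circle_3d(points):
    """Fit a circle to Nx3 points: plane by SVD, then algebraic fit in-plane."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    _, _, vt = np.linalg.svd(pts - centroid)
    u, v = vt[0], vt[1]                       # in-plane basis vectors
    xy = np.column_stack(((pts - centroid) @ u, (pts - centroid) @ v))
    # x^2 + y^2 = 2*cx*x + 2*cy*y + k, with k = r^2 - cx^2 - cy^2
    design = np.column_stack((2.0 * xy[:, 0], 2.0 * xy[:, 1], np.ones(len(xy))))
    target = (xy ** 2).sum(axis=1)
    (cx, cy, k), *_ = np.linalg.lstsq(design, target, rcond=None)
    radius = np.sqrt(k + cx ** 2 + cy ** 2)
    return centroid + cx * u + cy * v, radius

def fit_line_3d(points):
    """Fit a 3D line; returns a point on the line and a unit direction."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    _, _, vt = np.linalg.svd(pts - centroid)
    return centroid, vt[0]

# Synthetic check: corners at three heights rotating about a known axis.
axis_point = np.array([10.0, 0.0, 300.0])
axis_dir = np.array([0.0, 1.0, 0.0])
angles = np.deg2rad(np.linspace(0.0, 60.0, 7))
centers = []
for height, radius in [(0.0, 50.0), (20.0, 60.0), (40.0, 70.0)]:
    track = np.array([axis_point + height * axis_dir
                      + radius * np.array([np.cos(a), 0.0, np.sin(a)])
                      for a in angles])
    center, _ = fit_circle_3d(track)
    centers.append(center)
line_point, line_dir = fit_line_3d(centers)
print(line_point, line_dir)
```

The recovered direction is only defined up to sign, so downstream code should fix the orientation against the known scanning direction.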

The conversion of pixel coordinates to the rotary axis coordinate system is performed in the following manner:

where A is the intrinsic matrix of the camera and R and T are the rotation and translation matrices relating the camera coordinate system and the rotary axis coordinate system. The calibrated matrix A is:

The values 1806.73 and 1806.51 in matrix A are the focal lengths expressed in pixels along the horizontal and vertical directions of the image, that is, the ratio between pixels and physical length in each direction. The values 634.62 and 513.50 are the pixel coordinates of the principal point, which lie close to the center (640, 512) of the 1280 × 1024 image. The remaining entries, 0 and 1, are the fixed entries of the homogeneous intrinsic matrix and carry no distortion information. In conclusion, the equation of the rotary axis obtained from the experimental calibration at a measurement distance of 40 cm is:

The CCD optical center moves on a circle centered on the rotation axis. From the calibration above, the perpendicular distance between the CCD optical center and the rotation axis is R = 109.7855 mm. The physically measured distance between the rotation axis and the camera center is approximately 110 mm, which agrees closely with the calibrated value of R and supports the credibility of the rotary axis calibration.
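As an illustration of how the intrinsic matrix and the light plane combine into a 3D point, the sketch below back-projects a pixel to a camera ray and intersects it with a plane. It assumes the standard pinhole layout of A with 634.62 and 513.50 as the principal point (an interpretation of the quoted values), ignores lens distortion, and uses a hypothetical light plane, since the calibrated plane coefficients are not reproduced in the text.

```python
import numpy as np

# Intrinsic matrix built from the values quoted in the text.
# Assumption: 634.62 and 513.50 are the principal point (cx, cy).
A = np.array([[1806.73, 0.0, 634.62],
              [0.0, 1806.51, 513.50],
              [0.0, 0.0, 1.0]])

def pixel_to_light_plane(u, v, intrinsics, plane):
    """Intersect the camera ray through pixel (u, v) with a*x + b*y + c*z + d = 0."""
    a, b, c, d = plane
    ray = np.linalg.solve(intrinsics, np.array([u, v, 1.0]))  # ray with z = 1
    normal = np.array([a, b, c])
    scale = -d / (normal @ ray)
    return scale * ray

# Hypothetical light plane (camera coordinates, mm): x + 0.3*z - 120 = 0
plane = (1.0, 0.0, 0.3, -120.0)
p_center = pixel_to_light_plane(634.62, 513.50, A, plane)
p_other = pixel_to_light_plane(800.0, 400.0, A, plane)
print(p_center, p_other)
```

The resulting camera-frame point would then be mapped into the rotary axis coordinate system with R and T before stitching.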

4. Refractive Error Compensation in Underwater Light Windows

For underwater measurements, the FWLS scanning imaging device is enclosed in a waterproof cover, so the device and the measured object are in different media. In operation, the laser reaches the object after passing through the light window and the water, and the light reflected from the object passes back through the water and the light window before it is detected by the CCD. Consequently, both the light plane and the CCD image coordinates are shifted by refraction [41,42,43]. To address this, mathematical models of the measurement process are established to determine the equation of the dynamically refracted light plane and the offset of the pixel coordinates on the image plane. The model is illustrated in Figure 9.

As indicated in

Figure 9, where Ro is the rotating axis’s center, angle

represents the inclination between the normal vector of the light plane a and the horizontal direction, while angle

denotes the inclination between the light plane c and the vertical direction.

denotes the angle at which the laser light enters the light window, while

represents the angle at which the laser light exits the light window and enters the water. Similarly,

signifies the angle at which the return light enters the light window from the water, and

denotes the angle at which the return light exits the light window and enters the air. P’ is the coordinate point on the image plane of the theoretical return light of the measured target point Pt, and position P is the coordinate point on the image plane of the actual return light of the measured target point Pt. The angle formed by the laser and the rotating table’s beam is α. When the device is in its original position, the CCD is at position

Oc, and it rotates counterclockwise for t seconds to reach position

Oc’, with a rotation angle of

. The simple geometric connection can be used to calculate the relationship between

and the angle of rotation at moment t:

The variable can be mathematically represented as , where N is the number of frames associated with the image, T represents the camera frame rate, and ω signifies the minimum rotation unit speed of the device.
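The timing relation in the preceding sentence can be sketched as follows, under the assumption that the elapsed time is the frame number N divided by the frame rate T and that the device rotates at a constant angular speed ω. The function name, units, and sample values are hypothetical, and the exact expression used by the authors is not recoverable from the text.

```python
def rotation_angle_deg(frame_index, frame_rate_hz, angular_speed_deg_per_s):
    """Rotation angle reached at a given frame, assuming constant angular speed."""
    elapsed_s = frame_index / frame_rate_hz      # t = N / T
    return angular_speed_deg_per_s * elapsed_s   # angle = omega * t

# Hypothetical values: frame 30 at 15 frames per second, rotating at 1.8 deg/s
angle = rotation_angle_deg(30, 15.0, 1.8)
print(angle)
```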

4.1. Resolving the Light Plane’s Dynamic Refraction Equation

Let us consider a refracting plane with a normal vector D(0,0,1). The incident light plane, with a normal vector

(a,

b,

c) denoted as (a), is refracted through the glass and forms a new light plane, denoted as (b), with a normal vector (

,

,

). Subsequently, the light plane b enters the water through the glass and is refracted again, resulting in a new light plane denoted as (c), with a normal vector (

,

,

). The refractive index ratio between air and water is denoted as

. By Snell’s law:

Normalize the light plane c’s normal vector:

Putting this into Equation (11) results in:

We know that since the normal of the light plane (

a), the refraction plane (

D), and the refracted light plane (

c) are coplanar:

Equations (13) and (14) give:

If we substitute the intersection point of the light plane (

c) with the light window

into the equation for the light plane (

c), where

H is the distance from the CCD optical center to the light window, we obtain:

In conclusion, only the distance between the CCD optical center and the glass is unknown. The CCD optical center rotates in a circular motion with Ro serving as the center and R as the radius from the

Oc position to the

Oc’ position, as seen in

Figure 9’s right panel. The equation for the circle with center

Ro and radius

R, denoted as ⊙

RoOc, can be derived as

. With the device assembled, the distance from the center of the rotation shaft to the water-side surface of the light window is 90 mm, and the equation of the refraction line

D is

z = 90. It is easy to obtain

. Next, Equation (5) is used to express H in the camera coordinate system. The plane equation of the refracted light plane (c) then follows by combining Equations (15) and (16).
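The two-stage refraction in this subsection can be illustrated with the vector form of Snell's law. The sketch below traces a single ray rather than a plane normal, so it is not the authors' derivation: a refracted light plane could be recovered from two such rays. The window normal (0, 0, 1) and the water index 1.3333 come from the text; the glass index of 1.5 and the 30° incidence angle are assumptions.

```python
import numpy as np

def refract(direction, normal, n1, n2):
    """Refract a ray direction at a flat interface (vector form of Snell's law).

    Returns the unit refracted direction, or None for total internal reflection.
    """
    d = np.asarray(direction, dtype=float)
    d = d / np.linalg.norm(d)
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)
    cos_i = -np.dot(n, d)
    if cos_i < 0.0:                 # make the normal oppose the incoming ray
        n, cos_i = -n, -cos_i
    eta = n1 / n2
    sin2_t = eta ** 2 * (1.0 - cos_i ** 2)
    if sin2_t > 1.0:
        return None
    cos_t = np.sqrt(1.0 - sin2_t)
    return eta * d + (eta * cos_i - cos_t) * n

AIR, GLASS, WATER = 1.0, 1.5, 1.3333   # glass index is an assumed value
window_normal = np.array([0.0, 0.0, 1.0])
incidence = np.deg2rad(30.0)
ray_air = np.array([np.sin(incidence), 0.0, np.cos(incidence)])

ray_glass = refract(ray_air, window_normal, AIR, GLASS)
ray_water = refract(ray_glass, window_normal, GLASS, WATER)
sin_water = np.linalg.norm(np.cross(ray_water, window_normal))
print(np.degrees(np.arcsin(sin_water)))
```

For a window with parallel faces, the direction in water depends only on the air-to-water index ratio; the glass shifts the ray sideways without changing its final direction, which is why the glass thickness and the distance H enter the plane offset rather than the plane orientation.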

4.2. Solution for the Pixel Coordinate Offset Coefficient

The schematic picture in

Figure 9 illustrates the mapping of the underwater target point Pt onto the image plane, resulting in the imaging point

P (u, v). If the return light is not subject to refraction by the water body and the light window, it is observed directly at the location of

. Determining the offset coefficient η between the two points makes it possible to apply refraction correction to each measured point [44,45,46,47,48]. The offset η, which represents the difference between the point

on the image plane corresponding to the theoretical return light and the point

P on the image plane corresponding to the actual return light, can be mathematically described as the ratio of the tangent of

to

, as seen in

Figure 9.

also known as:

where

f is the camera’s focal length. By Snell’s law:

.

Substituting Equation (9) into Equation (17) gives:

that is:

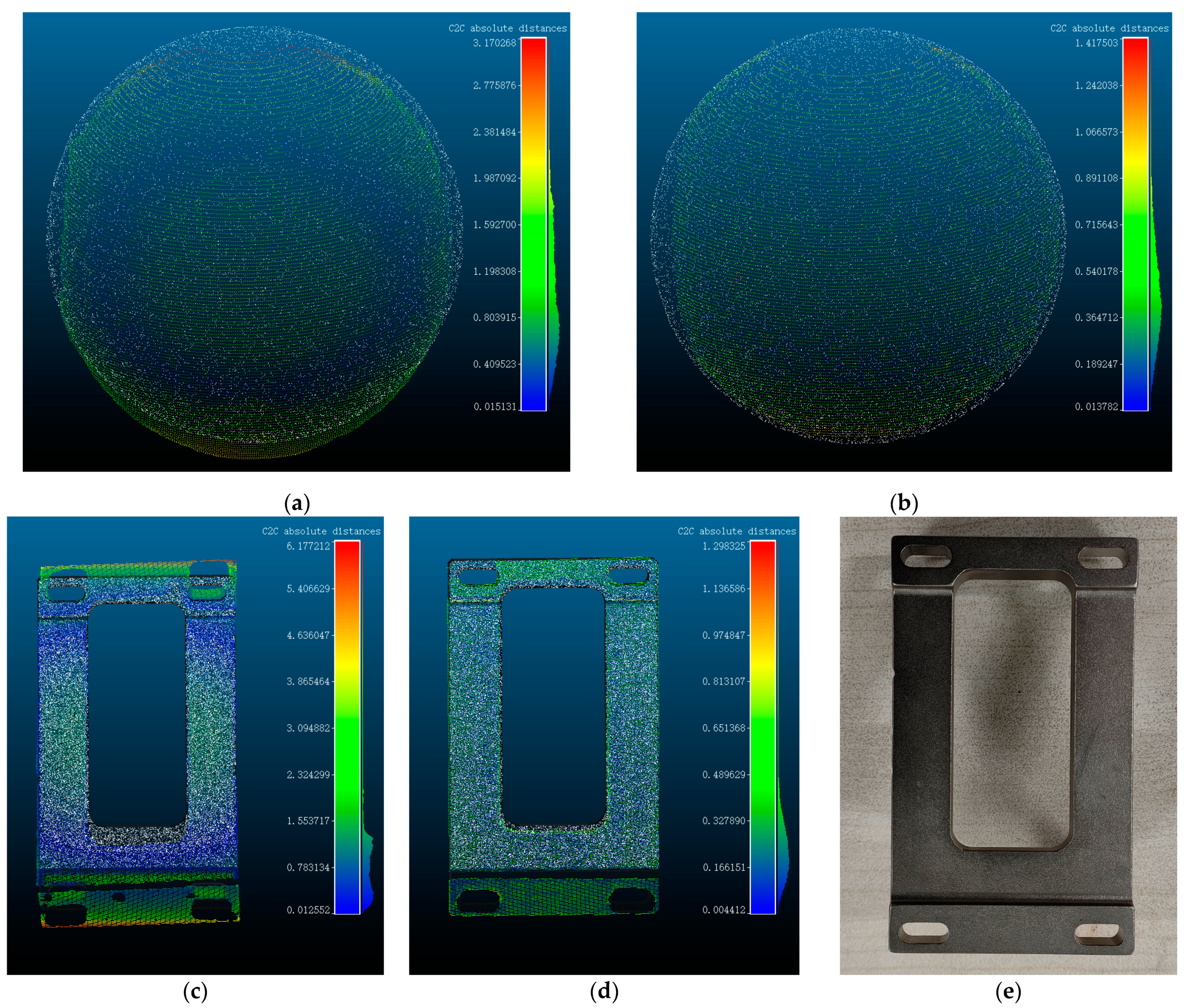
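To give a feel for why the offset coefficient must be evaluated per point, the sketch below computes the ratio of the tangents of the in-water and in-air angles of a return ray for a simplified static case. It uses Snell's law with the water index 1.3333 from the text and ignores the glass thickness, the distance H, and the rotation of the device. Which angle pair and which orientation of the ratio the authors use for η is not recoverable from the text, so this is an assumption-laden illustration only.

```python
import numpy as np

N_WATER = 1.3333  # refractive index of water quoted in the text

def tangent_ratio(theta_air_rad, n_water=N_WATER):
    """tan(theta_water) / tan(theta_air) for a ray crossing a flat air-water port."""
    theta_water = np.arcsin(np.sin(theta_air_rad) / n_water)
    return np.tan(theta_water) / np.tan(theta_air_rad)

for degrees in (1.0, 10.0, 20.0):
    print(degrees, tangent_ratio(np.deg2rad(degrees)))
```

Near the optical axis the ratio approaches 1/n, and it decreases as the ray angle grows, so a single global scale factor cannot correct every pixel.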

5. Error Analysis Experiments

To evaluate the precision of the system, a standard ball with a radius of 20 mm was scanned and reconstructed multiple times at working distances D from 30 cm to 80 cm, at six positions within the imaging field of view at different distances. The radius of the standard ball was computed from the reconstructed point cloud before and after refraction error correction. R denotes the radius measured underwater without the refraction compensation method, and Rw denotes the radius measured after refraction error correction. The measured radii are listed in Table 1.
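One common way to compute a ball radius from a reconstructed point cloud is a linear least-squares sphere fit, sketched below. The text does not say which fitting method was used, so this is an assumed technique, and the synthetic partial-sphere data (a 20 mm ball at roughly 400 mm) is hypothetical.

```python
import numpy as np

def fit_sphere(points):
    """Algebraic least-squares sphere fit; returns (center, radius)."""
    pts = np.asarray(points, dtype=float)
    offset = pts.mean(axis=0)            # centre the data for numerical stability
    q = pts - offset
    # |q|^2 = 2*c.q + k, with k = r^2 - |c|^2
    design = np.column_stack((2.0 * q, np.ones(len(q))))
    target = (q ** 2).sum(axis=1)
    solution, *_ = np.linalg.lstsq(design, target, rcond=None)
    local_center = solution[:3]
    radius = np.sqrt(solution[3] + local_center @ local_center)
    return local_center + offset, radius

# Synthetic camera-facing cap of a 20 mm ball centred at (5, -3, 400) mm
true_center = np.array([5.0, -3.0, 400.0])
theta, phi = np.meshgrid(np.linspace(0.1, np.pi / 2, 8),
                         np.linspace(0.0, 2.0 * np.pi, 16, endpoint=False))
directions = np.stack((np.sin(theta) * np.cos(phi),
                       np.sin(theta) * np.sin(phi),
                       -np.cos(theta)), axis=-1).reshape(-1, 3)
points = true_center + 20.0 * directions
center, radius = fit_sphere(points)
print(center, radius)
```

Because a scanner sees only part of the ball, the fit is sensitive to noise along the viewing direction; a robust or geometric refinement may be preferable on real data.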

As seen in Table 1, without refraction error compensation the measurement error of the standard ball stays within 2.5 mm: the largest measurement error is 2.36 mm and the minimum is −0.67 mm. With the refraction error compensation algorithm, the error of the reconstructed radius ranges from 0.18 mm to 0.9 mm. Refraction error correction therefore improves the system’s reconstruction accuracy. Next, the point clouds obtained before and after refraction correction were compared with the point cloud of the standard ball of radius 20 mm. The distances between the point clouds are illustrated in Figure 10a,b.

The color bars on the right of Figure 10a–d map colors to the deviation between the measured point cloud and the point cloud of the standard workpiece or standard sphere. The reference point cloud is shown in white, and the colored point cloud is the measured data. The gradient from blue to red indicates increasing distance, with blue closest and red farthest. As shown in Figure 10a,b, the point cloud measured with refraction compensation clearly matches the standard sphere model better, and the overall curvature and other details are improved. To further validate the compensation method, the workpiece depicted in Figure 10e was scanned and reconstructed; Figure 10c,d shows a satisfactory compensation result.

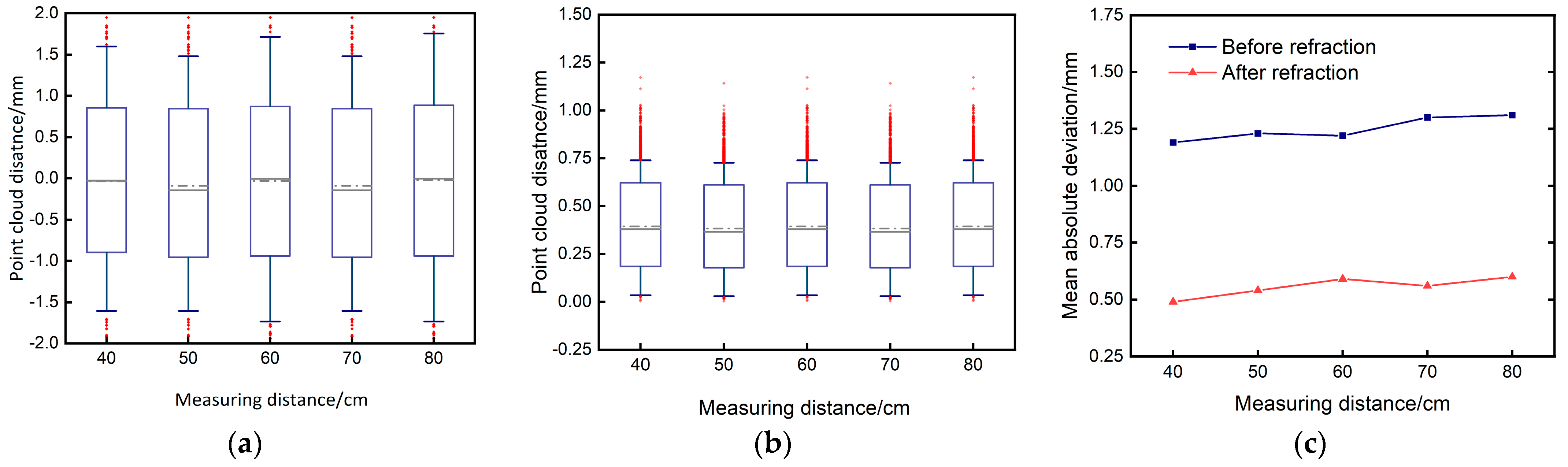

Five sets of point clouds were chosen, both before and after compensation. These point clouds were registered with the standard sphere point cloud, and the distances between the five groups of point clouds and the standard sphere point cloud were obtained. The box plots regarding distance distribution are presented in

Figure 11a–c. The box plots show that, before compensation, the deviation between the measured point cloud and the standard sphere is concentrated mainly within the range of (−1, +1) mm. After compensation, the deviation is distributed predominantly within the range of (0.25, 0.75) mm. The mean absolute deviation (MAD) of the point cloud distances is then computed, as depicted in

Figure 11c. Before compensation, the MAD is approximately 1.25 mm; after the refraction compensation method is applied, it drops to around 0.5 mm. This result supports the validity and efficacy of the compensation algorithm. The analysis also provides a foundation for future research on dynamic refraction compensation methods.
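The distance statistics above can be illustrated with a short sketch. It assumes that each distance is the signed radial distance from a measured point to the reference sphere and that the MAD is the mean of the absolute distances; the source does not spell out either definition. The sample points and function names are hypothetical.

```python
import math
import statistics


def signed_distances(points, center, radius):
    """Point-to-sphere distance: positive outside the sphere, negative inside."""
    return [math.dist(p, center) - radius for p in points]


def summarize(distances):
    """Quartiles (the box of a box plot) and mean absolute distance."""
    q1, median, q3 = statistics.quantiles(distances, n=4)
    mean_abs = statistics.fmean([abs(d) for d in distances])
    return {"q1": q1, "median": median, "q3": q3, "mean_abs": mean_abs}


# Hypothetical measured points (mm) around a 20 mm sphere at the origin.
measured = [
    (21.0, 0.0, 0.0),
    (0.0, 19.5, 0.0),
    (0.0, 0.0, 20.5),
    (-20.0, 0.0, 0.0),
]
distances = signed_distances(measured, (0.0, 0.0, 0.0), 20.0)
stats = summarize(distances)
print(stats)
```

Running the same summary on the point clouds before and after compensation would give the two quantities compared in the text: the interquartile box and the mean absolute distance.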

The first two columns of

Table 2 display the two frequently employed underwater laser scanners, while the last two columns showcase the scanning modes extensively utilized in contemporary academic research. The SXLS-100 product (Lankeguangdian Co. Ltd., Hangzhou, China) [

49] is identical to the scanning imaging device studied by Xue et al., and the ULS-100/200 product (Voyis Imaging Inc. Waterloo, ON, Canada) [

50] is identical to the scanning imaging device studied by Xie et al. Xie’s mode combines galvanometer scanning with a fixed camera and fixed light window, whereas Xue’s mode is illustrated in

Figure 1a. Within a certain measurement distance, the resolution and accuracy of the homemade imaging device in this paper are similar to those of the industrial products. However, a gap remains in working distance and scan line refresh rate. This is because the scanning mode of FWLS is constrained by the mechanical characteristics of the rotation mechanism, which prevents it from achieving a very fast scanning speed. Additionally, when the imaging distance is large, the angle between the optical axis of the camera and the laser line becomes too small, so the laser line covers fewer image pixels and the scanning resolution drops. Increasing the distance between the camera and the laser can extend the working distance of the imaging system. The FWLS scan line refresh rate is lower than that of the galvanometer scanning approach investigated by Xie et al. Nevertheless, the FWLS scanning imaging device attains a satisfactory working distance and imaging accuracy while keeping the cost low. In contrast to the conventional scanning mode depicted in

Figure 1a, investigated by Xue et al., the imaging device examined in this study achieves an equivalent scan line refresh rate together with superior accuracy and working distance. Furthermore, the scanning imaging mode of FWLS effectively prevents the distortion of the acquired light stripe data that would otherwise result from water fluctuations generated by the rotational scanning of the system. In short, compared with current industrial products and the scanning imaging devices reported in academic research, the FWLS system achieves a commendable level of resolution and accuracy, while its working distance and scan line refresh rate are average. The imaging precision of FWLS can be enhanced by selecting a camera with a higher pixel resolution, a line laser with a narrower light stripe, and a rotation mechanism with smaller mechanical errors. Similarly, the imaging range of FWLS can be expanded by increasing the distance between the camera and the laser.
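The link between imaging distance, triangulation angle, and camera-to-laser distance can be made concrete with a textbook triangulation model. The sketch below is not the FWLS geometry itself. It assumes a pinhole camera whose optical axis is parallel to the laser ray, offset by a baseline b, so that a target at depth z images at u = f·b/z. The focal length, pixel size, baselines, and function names are hypothetical.

```python
import math


def triangulation_angle_deg(baseline_mm, distance_mm):
    """Angle at the target between the camera ray and the laser ray."""
    return math.degrees(math.atan2(baseline_mm, distance_mm))


def depth_per_pixel_mm(distance_mm, baseline_mm, focal_mm, pixel_mm):
    """Depth change that shifts the laser spot by one pixel.

    From u = f * b / z it follows that |dz| = z**2 * |du| / (f * b).
    """
    return distance_mm ** 2 * pixel_mm / (focal_mm * baseline_mm)


# Hypothetical optics: 16 mm lens, 5 um pixels.
FOCAL_MM, PIXEL_MM = 16.0, 0.005

for baseline_mm in (200.0, 400.0):
    for distance_mm in (500.0, 1000.0, 1500.0):
        angle = triangulation_angle_deg(baseline_mm, distance_mm)
        step = depth_per_pixel_mm(distance_mm, baseline_mm, FOCAL_MM, PIXEL_MM)
        print(f"b={baseline_mm:.0f} mm  z={distance_mm:.0f} mm  "
              f"angle={angle:5.1f} deg  depth/pixel={step:.3f} mm")
```

Under this model the depth represented by one pixel grows with the square of the distance and shrinks in proportion to the baseline, which is consistent with the observation that a larger camera-to-laser distance extends the usable working distance.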

The mechanical error of the rotary table and other structures must be minimized if we are to achieve a more rapid 3D reconstruction of targets. Additionally, the volume and weight of the rotating hardware, including cameras and lasers, should not be excessively large. Mechanical scanning has inherent limitations, so extremely high scanning speeds remain achievable only with more expensive galvanometer scanning, MEMS, and other imaging modes. Nonetheless, the FWLS scanning imaging scheme investigated in this article is applicable to general underwater low-light scenarios, is inexpensive, and can achieve a respectable scanning speed while preserving a degree of imaging precision. In challenging underwater settings, turbidity, water fluctuations, and the presence of aquatic organisms also have a significant impact on imaging accuracy. Therefore, to approximate real underwater conditions, we examined the impact of physical noise, imaging distance, and partial occlusion of the measured target on the system.

5.1. Underwater Physical Noise’s Effects on the System

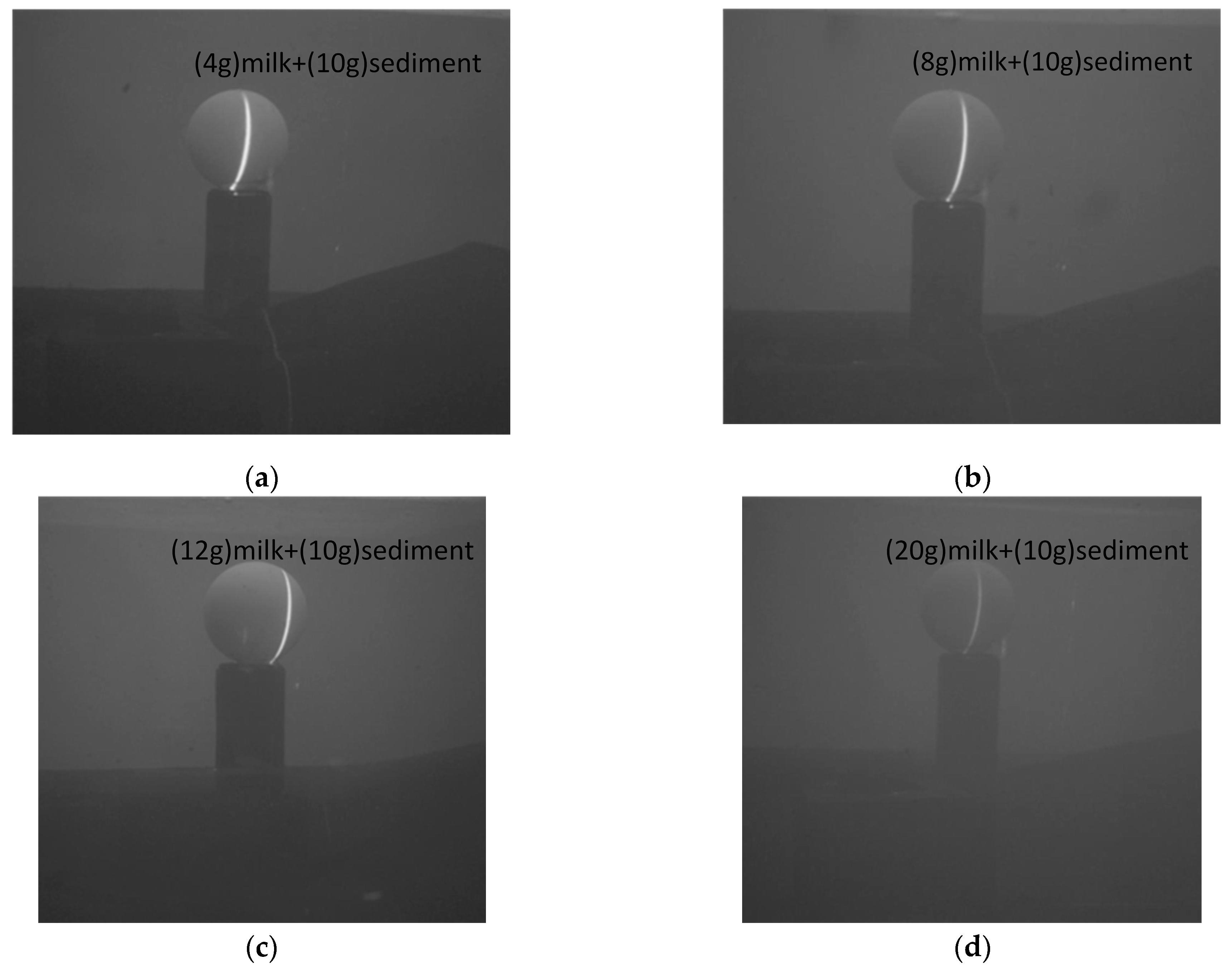

To replicate the effects of actual underwater sediment, suspended solids, and other physical disturbances on the system, we introduced a specific quantity of sediment and milk into 132 L of fresh water. Subsequently, we conducted experiments to assess the influence of varying levels of turbidity in the underwater environment on the imaging system.

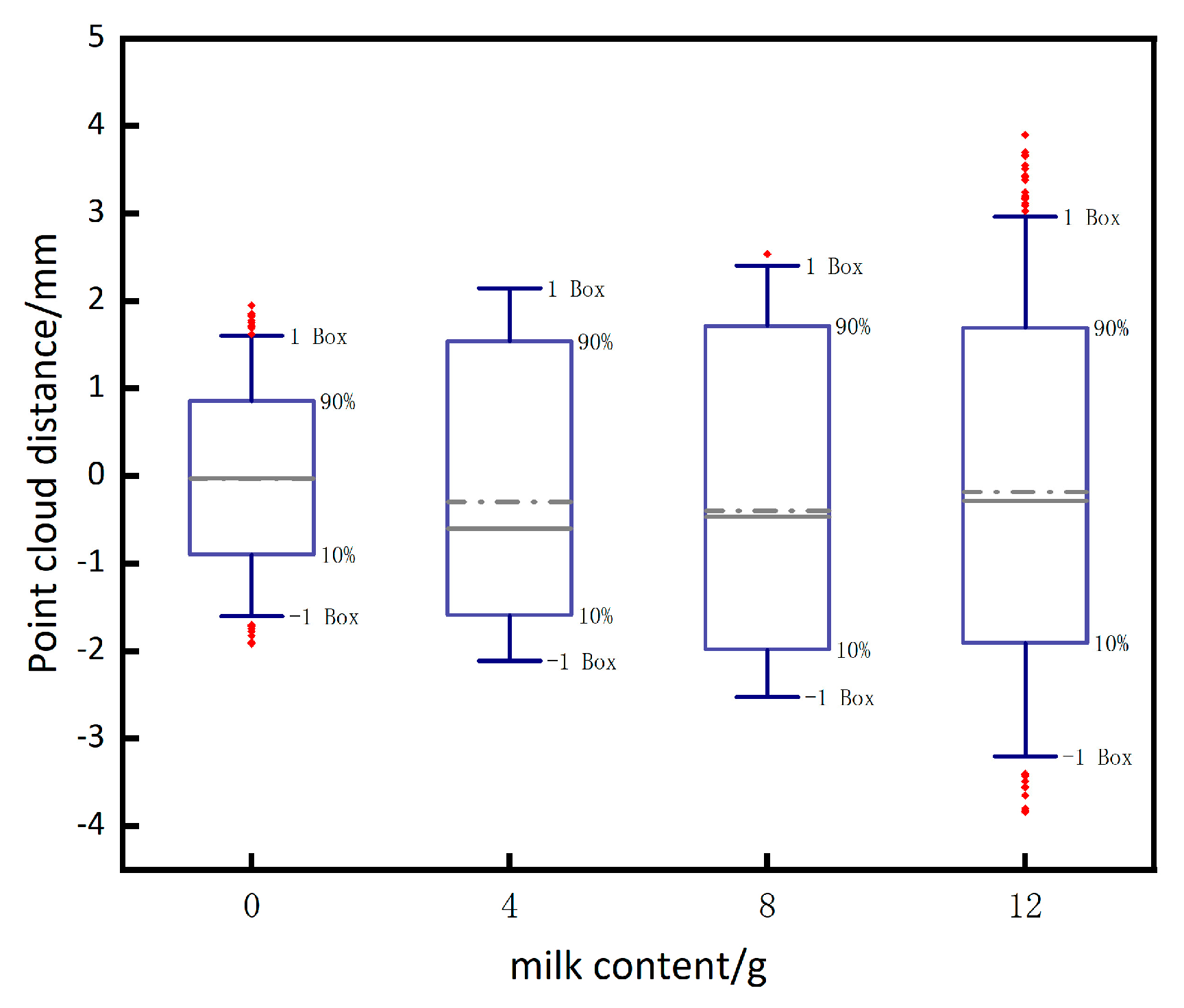

As shown in

Figure 12, different amounts of milk and sediment were added to the experimental pool to create underwater environments with varying turbidity, and measurements were taken on the standard sphere in each of these four environments to obtain the error corresponding to the (a), (b), (c) plots as shown in

Figure 13. When 20 g of milk is added to the water, the turbidity becomes too high and the system can no longer form an image. To summarize, excessive turbidity affects imaging in two main ways. First, it scatters light in both the forward and backward directions, which degrades image quality. Second, suspended particles in the water, such as silt, partially absorb the returning light, which prevents clear images from being captured.

5.2. Impact of Measuring Distance on the System

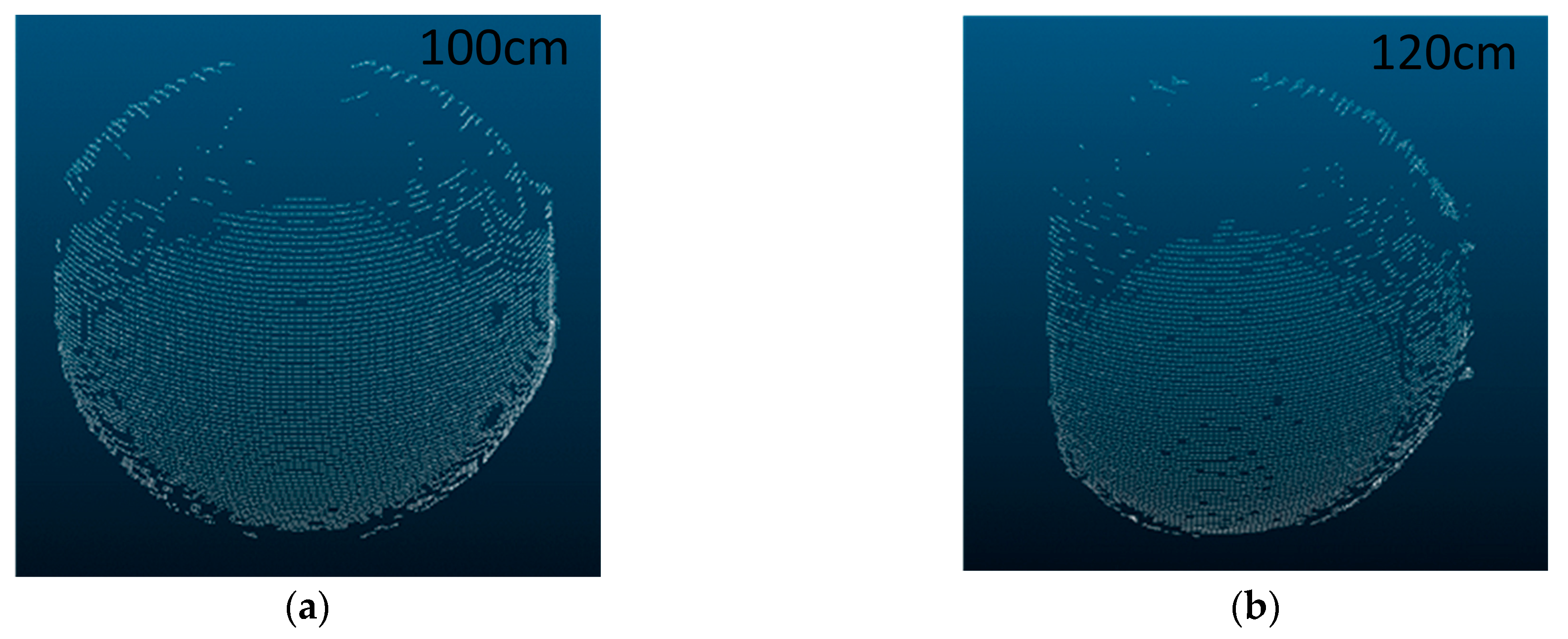

As shown in

Figure 10b and

Figure 14, when the measurement distance is large, the water body absorbs light, so the CCD receives insufficient return light. As a result, the captured images have lower contrast, and part of the measurement data is missing. When the system approaches its maximum measurement distance, the small angle between the system’s optical axis and the light plane means that the laser stripe covers fewer pixels in the image, which lowers the resolution of the scanning results. At this point, the system’s own error becomes the main factor and the impact of refraction compensation is less significant. Finally, based on the data acquired at the 120 cm and 100 cm measurement distances in

Figure 14, it is clear that an excessive measurement distance primarily weakens the return light received by the camera, resulting in missing data. Therefore, to ensure accurate measurement data, it is crucial to keep the measurement distance within the system’s working range.
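The loss of return light with distance can be illustrated with the Beer-Lambert law, which is a standard first-order model and not a result of this study. The sketch assumes a single attenuation coefficient c that lumps absorption and scattering together, and it ignores beam spreading and target reflectance. The coefficient values and the function name are hypothetical.

```python
import math


def round_trip_fraction(distance_m, attenuation_per_m):
    """Fraction of emitted light left after travelling to the target and back."""
    return math.exp(-2.0 * attenuation_per_m * distance_m)


# Hypothetical attenuation coefficients (1/m): clearer vs. more turbid water.
for attenuation_per_m in (0.3, 1.5):
    for distance_m in (0.6, 1.0, 1.2):
        frac = round_trip_fraction(distance_m, attenuation_per_m)
        print(f"c={attenuation_per_m} 1/m  d={distance_m} m  returned={frac:.3f}")
```

Because the light crosses the water twice, the returned fraction falls exponentially with twice the distance. Under this model, a modest increase in distance or turbidity can push the signal below what the camera can detect.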

5.3. Effect of Partial Occlusion of the Measured Target on the System

Figure 15 shows a shade cloth used to imitate an underwater obstacle blocking the measured target. When the underwater target is blocked by an obstacle, the occluded portion cannot be measured, whereas the unobstructed portion is still reconstructed with the refraction compensation algorithm as usual. Cases in which the light plane or the camera’s return light path is occluded are analogous.