Investigating Transfer Learning in Noisy Environments: A Study of Predecessor and Successor Features in Spatial Learning Using a T-Maze

Abstract

1. Introduction

2. Related Works

2.1. Challenges Posed by Noise in RL

2.2. Enhancing RL with Transfer Learning

2.3. Hyperparameter Optimization in RL

3. Materials and Methods

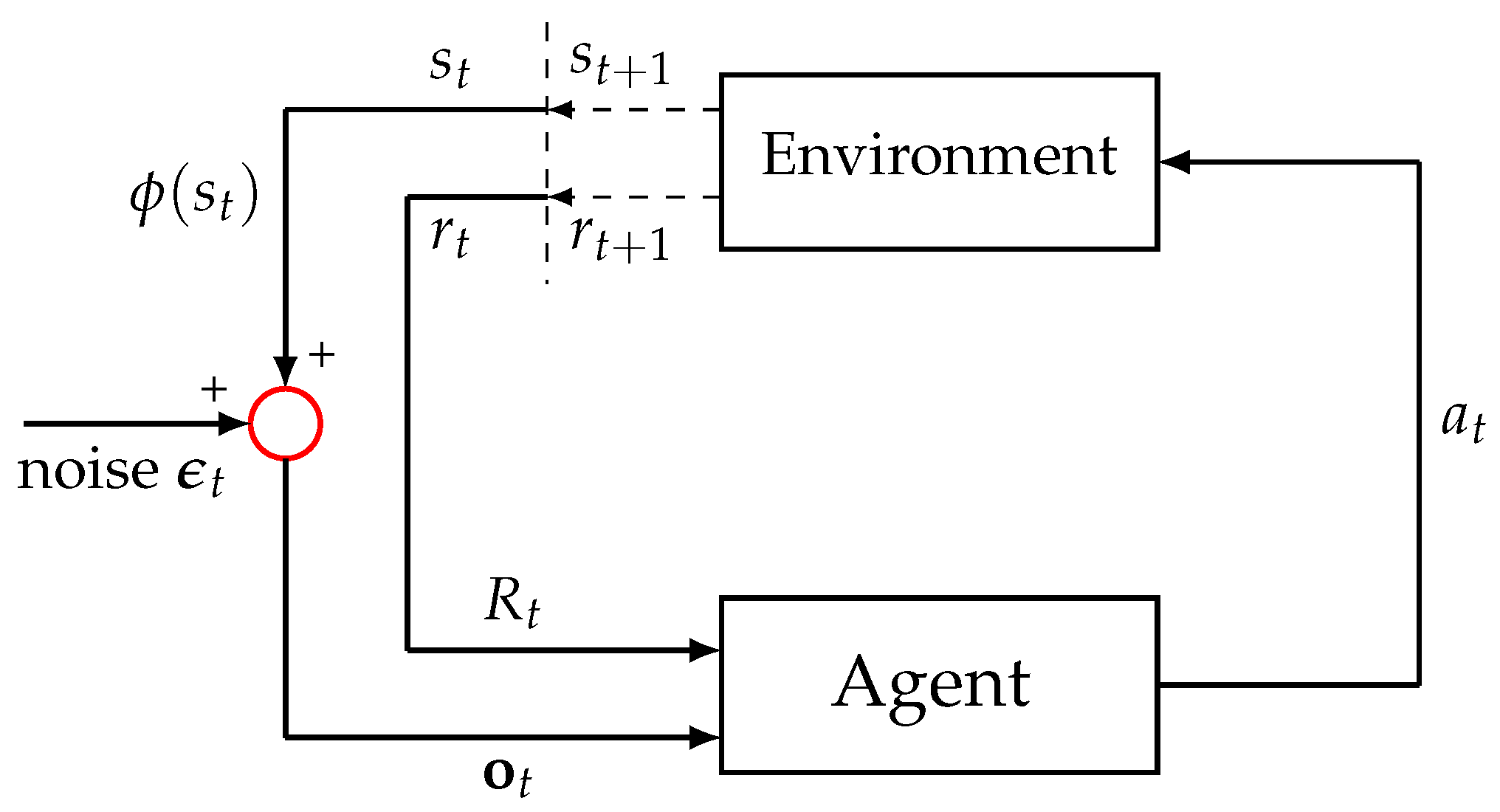

3.1. Markov Decision Processes

3.2. Predecessor Feature Learning

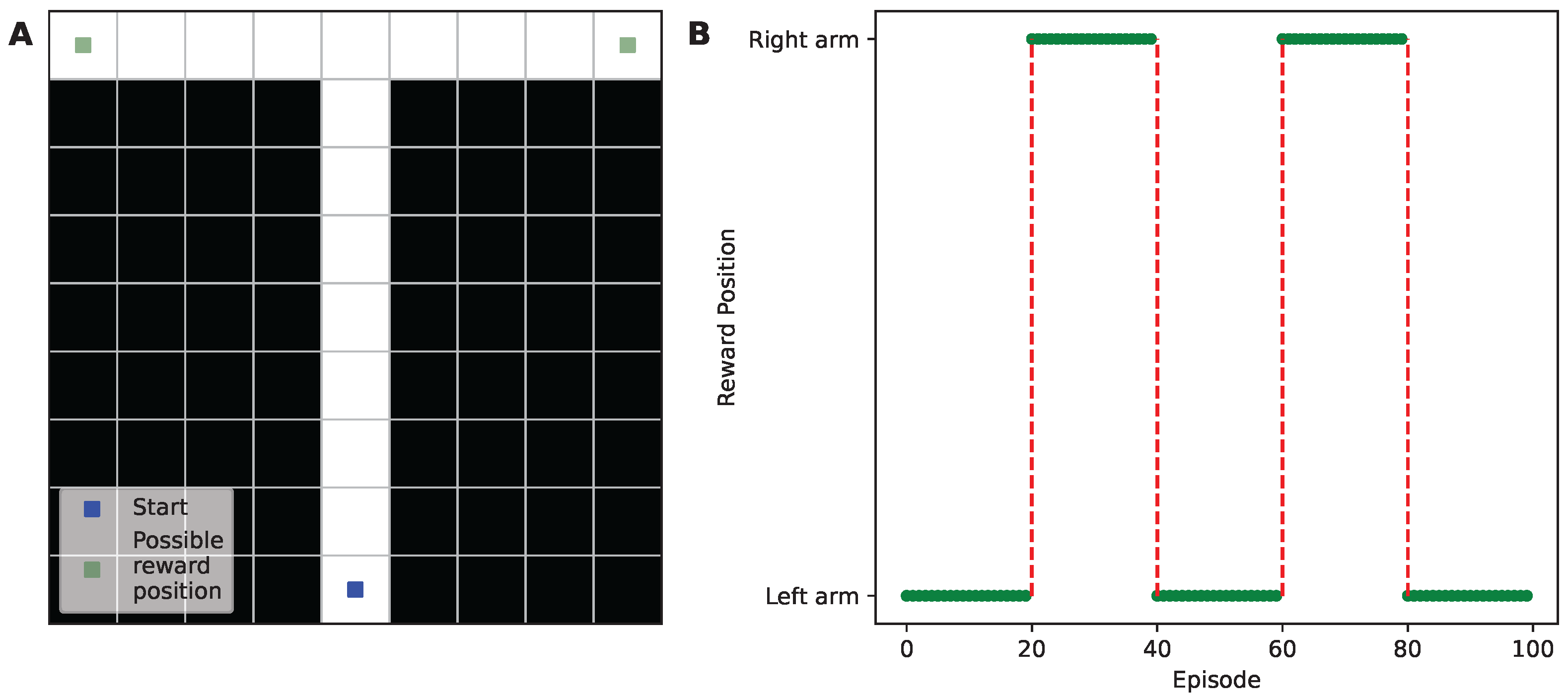

3.3. Experimental Design for Transfer Learning

3.4. Gaussian Noise in State Observations

Algorithm 1. Predecessor feature learning with noisy observations.
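The following is a minimal sketch of predecessor-feature learning with noisy observations. It is not the authors' Algorithm 1. It assumes the formulation of Bailey and Mattar, in which successor features ψ(s) = Wφ(s) are learned by TD(λ) and the eligibility trace serves as a running predecessor representation. It also assumes the noise model is i.i.d. Gaussian noise with standard deviation σ added to a one-hot state vector at every step. The function names (`noisy_observation`, `pf_step`, `run_episodes`), the default values of α, γ and λ, and the five-cell path through a T-maze are hypothetical choices made for illustration.

```python
import numpy as np


def noisy_observation(state, n_states, sigma, rng):
    """One-hot code for `state` plus i.i.d. Gaussian noise N(0, sigma^2) (assumed noise model)."""
    phi = np.zeros(n_states)
    phi[state] = 1.0
    return phi + rng.normal(0.0, sigma, size=n_states)


def pf_step(W, trace, phi_s, phi_next, alpha, gamma, lam, terminal=False):
    """One TD(lambda) update of the successor-feature matrix W, with psi(s) = W @ phi(s).

    The eligibility trace is a decayed sum of past observations (a predecessor
    estimate). With lam = 0 the trace equals phi_s, and the rule reduces to the
    one-step successor-feature TD update.
    """
    trace = gamma * lam * trace + phi_s
    psi_next = np.zeros_like(phi_s) if terminal else W @ phi_next
    delta = phi_s + gamma * psi_next - W @ phi_s  # vector-valued TD error
    W = W + alpha * np.outer(delta, trace)
    return W, trace


def run_episodes(path, n_episodes, sigma, alpha=0.1, gamma=0.95, lam=0.9, seed=0):
    """Replay a fixed state sequence, e.g. stem -> junction -> one arm of a T-maze."""
    rng = np.random.default_rng(seed)
    n = max(path) + 1
    W = np.zeros((n, n))
    for _ in range(n_episodes):
        trace = np.zeros(n)
        phi = noisy_observation(path[0], n, sigma, rng)
        for t in range(len(path)):
            terminal = t == len(path) - 1
            phi_next = np.zeros(n) if terminal else noisy_observation(path[t + 1], n, sigma, rng)
            W, trace = pf_step(W, trace, phi, phi_next, alpha, gamma, lam, terminal)
            phi = phi_next  # the next step reuses the same noisy sample
    return W


if __name__ == "__main__":
    path = [0, 1, 2, 3, 4]  # hypothetical: stem cells 0-2, junction 3, goal cell 4 in one arm
    for sigma in (0.0, 0.3):
        W = run_episodes(path, n_episodes=500, sigma=sigma)
        # Column 0 of W is psi for a noise-free one-hot start cell.
        print(sigma, np.round(W[:, 0], 3))
```

With σ = 0, the start-cell column of W should approach the discounted occupancies γ^k along the path. Raising σ perturbs both the TD target and the trace, which offers one simple way to see how observation noise disturbs the learned features.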

3.5. Hyperparameters of the Algorithm

3.6. Evaluation Metrics for Transfer Learning

- Cumulative reward: The total reward accumulated by the agent over the course of the episodes, which serves as an indicator of the agent’s overall performance in the task.

- Step length to the end of the episode: The number of steps it took the agent to reach the end of an episode, reflecting its ability to efficiently navigate the environment.

- Adaptation rate: The number of episodes an agent needed to adapt after the reward location was switched. It was measured as the number of episodes, counted from the switch, until the agent had reached the new reward location in five consecutive episodes. For example, if the fifth consecutive success occurred in the 10th episode after the reward location shifted, the adaptation rate was 10. Because at least five episodes are needed to record five consecutive successes, the optimal (lowest possible) adaptation rate is 5. A lower adaptation rate implies swifter adaptation of the agent to the new reward location, whereas a higher adaptation rate denotes a more gradual process of adjustment.

- Adaptation step length: The total number of steps the agent took over the episodes counted by its adaptation rate. For instance, if the adaptation rate is 10, the adaptation step length is the sum of the steps taken in the first 10 episodes after the reward location changed. It is computed in the same way as the adaptation rate but counts steps rather than episodes, offering an alternative view of an agent’s ability to adjust to environmental changes.
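The metrics above can be made concrete with a small sketch. It assumes that, for every episode after the reward switch, a success flag (did the agent reach the new reward location?) and a step count are logged. The helper names are hypothetical, and the study's exact bookkeeping may differ.

```python
from typing import Optional, Sequence


def cumulative_reward(episode_rewards: Sequence[float]) -> float:
    """Total reward summed over all episodes of a run."""
    return float(sum(episode_rewards))


def adaptation_rate(successes: Sequence[bool], streak: int = 5) -> Optional[int]:
    """Episodes after the reward switch until `streak` consecutive successes.

    successes[i] is True if the agent reached the new reward location in the
    (i + 1)-th episode after the switch. Returns None if the streak never occurs.
    """
    run = 0
    for i, reached in enumerate(successes):
        run = run + 1 if reached else 0
        if run == streak:
            return i + 1  # 1-based episode count; the minimum possible value is `streak`
    return None


def adaptation_step_length(
    steps_per_episode: Sequence[int], successes: Sequence[bool], streak: int = 5
) -> Optional[int]:
    """Total steps taken over the episodes counted by the adaptation rate."""
    rate = adaptation_rate(successes, streak)
    return None if rate is None else int(sum(steps_per_episode[:rate]))


if __name__ == "__main__":
    # Hypothetical log: five failures, then five successes after the switch.
    successes = [False] * 5 + [True] * 5
    steps = [60, 55, 50, 48, 45, 20, 18, 15, 15, 14]
    print(adaptation_rate(successes))                # 10
    print(adaptation_step_length(steps, successes))  # 340
```

Returning None when five consecutive successes never occur keeps runs that failed to adapt distinguishable from runs that adapted late.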

4. Experimental Results

4.1. Analyzing the Cumulative Reward and Step Length

4.1.1. Cumulative Reward

4.1.2. Step Length

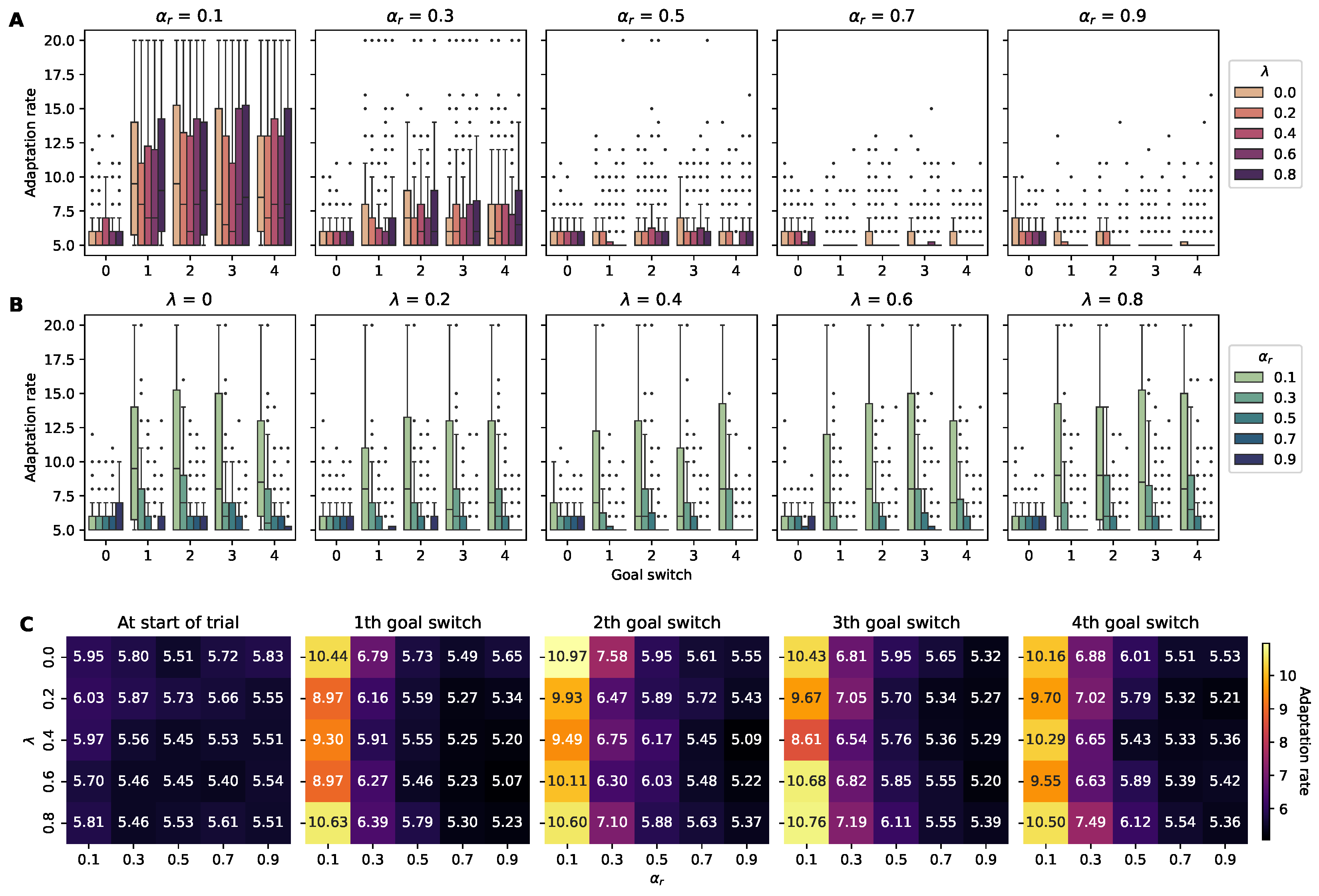

4.2. Analysis of the Adaptation Metrics

4.2.1. Adaptation Rate

4.2.2. Adaptation Step Length

5. Conclusions and Future Work

5.1. Hyperparameters

5.2. Neuroscientific Implications

5.3. Limitations and Future Research Directions

5.4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MDPs | Markov Decision Processes |
| SFs | Successor Features |
| PFs | Predecessor Features |
| AI | Artificial Intelligence |
| RL | Reinforcement Learning |
| SR | Successor Representation |
| TD | Temporal Difference |
| GANs | Generative Adversarial Networks |
| STDP | Spike-Timing-Dependent Plasticity |


| Metric | Hyperparameter | Correlation Coefficient | p-Value |
|---|---|---|---|
| Cumulative reward | | 0.661 | <0.001 |
| | | 0.034 | 0.09 |
| Step length | | −0.750 | <0.001 |
| | | −0.231 | <0.001 |

| Metric | Hyperparameter | Regression Coefficient | 95% Conf. Interval |
|---|---|---|---|
| Cumulative reward | (constant) | 83.825 | 83.159, 84.490 |
| | | 19.434 | 18.483, 20.385 |
| | | −0.077 | −1.028, 0.874 |
| Step length | (constant) | 182.678 | 179.554, 185.801 |
| | | −117.405 | −121.867, −112.942 |
| | | −24.782 | −29.244, −20.320 |
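The two tables above report, for each metric, correlation coefficients with p-values and ordinary least-squares coefficients with 95% confidence intervals for two hyperparameters. The sketch below shows one way to compute statistics of this kind using only numpy. It is not the authors' analysis code. It assumes Pearson's r and uses a large-sample normal approximation for the p-values and intervals. `scipy.stats.pearsonr` (or `scipy.stats.spearmanr` for a rank correlation) and statsmodels' `OLS(...).fit().conf_int()` give exact t-based values. The data are synthetic, and the helper names are hypothetical.

```python
import math

import numpy as np


def pearson_with_p(x, y):
    """Pearson's r with a two-sided p-value from a large-sample normal approximation."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    r = float(np.corrcoef(x, y)[0, 1])
    t = r * math.sqrt((x.size - 2) / max(1.0 - r * r, 1e-12))
    p = math.erfc(abs(t) / math.sqrt(2.0))  # normal approximation to Student's t
    return r, p


def ols_with_ci(predictors, y, z=1.96):
    """OLS with an intercept; returns coefficients and approximate 95% CIs (beta +/- z * SE)."""
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones(y.size), np.asarray(predictors, dtype=float)])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (X.shape[0] - X.shape[1])  # residual variance
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))
    return beta, np.column_stack([beta - z * se, beta + z * se])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    h = rng.uniform(0.0, 1.0, size=(2000, 2))  # two hypothetical hyperparameters in [0, 1]
    # Synthetic metric that depends on the first hyperparameter only (not study data).
    metric = 80.0 + 20.0 * h[:, 0] + rng.normal(0.0, 2.0, size=2000)
    for j in range(2):
        print("r, p:", pearson_with_p(h[:, j], metric))
    beta, ci = ols_with_ci(h, metric)
    print("coefficients:", np.round(beta, 3))
    print("95% CI:", np.round(ci, 3))
```

As in the tables, a hyperparameter whose confidence interval spans zero contributes little once the other hyperparameter is accounted for.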

| Metric | Hyperparameter | Correlation Coefficient | p-Value |
|---|---|---|---|
| Adaptation rate | | −0.363 | <0.001 |
| | | −0.029 | 0.001 |
| Adaptation step length | | −0.428 | <0.001 |
| | | −0.068 | <0.001 |

| Metric | Hyperparameter | Regression Coefficient | 95% Conf. Interval |
|---|---|---|---|
| Adaptation rate | (constant) | 8.643 | 8.520, 8.765 |
| | | −4.312 | −4.488, −4.137 |
| | | −0.059 | −0.234, 0.116 |
| Adaptation step length | (constant) | 2550.657 | 2489.834, 2611.481 |
| | | −2300.500 | −2387.391, −2213.609 |
| | | −70.776 | −157.667, 16.115 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Seo, I.; Lee, H. Investigating Transfer Learning in Noisy Environments: A Study of Predecessor and Successor Features in Spatial Learning Using a T-Maze. Sensors 2024, 24, 6419. https://doi.org/10.3390/s24196419

Seo I, Lee H. Investigating Transfer Learning in Noisy Environments: A Study of Predecessor and Successor Features in Spatial Learning Using a T-Maze. Sensors. 2024; 24(19):6419. https://doi.org/10.3390/s24196419

Chicago/Turabian Style: Seo, Incheol, and Hyunsu Lee. 2024. "Investigating Transfer Learning in Noisy Environments: A Study of Predecessor and Successor Features in Spatial Learning Using a T-Maze" Sensors 24, no. 19: 6419. https://doi.org/10.3390/s24196419

APA Style: Seo, I., & Lee, H. (2024). Investigating Transfer Learning in Noisy Environments: A Study of Predecessor and Successor Features in Spatial Learning Using a T-Maze. Sensors, 24(19), 6419. https://doi.org/10.3390/s24196419