Abstract

We consider a complex control problem: making a monopod accurately reach a target with a single jump. The monopod can jump in any direction at different elevations of the terrain. This is a paradigm for a much larger class of problems, which are extremely challenging and computationally expensive to solve using standard optimization-based techniques. Reinforcement learning (RL) is an interesting alternative, but an end-to-end approach in which the controller must learn everything from scratch can be non-trivial with a sparse-reward task like jumping. Our solution is to guide the learning process within an RL framework leveraging nature-inspired heuristic knowledge. This expedient brings widespread benefits, such as a drastic reduction of learning time, and the ability to learn and compensate for possible errors in the low-level execution of the motion. Our simulation results reveal a clear advantage of our solution against both optimization-based and end-to-end RL approaches.

1. Introduction

Legged robots have become a popular technology for navigating unstructured terrains. Control design for this type of system is far from trivial. Remarkable results have been achieved for standard locomotion tasks with continuous foot contact (e.g., walking and trotting [1]). Other tasks, like jumps, have a long aerial phase without any contact with the ground, and are more challenging because even small deviations from the desired trajectory can have a large impact on the landing location and orientation [2]. Additionally, the high accelerations and the constraints involved make jumping very challenging to manage, especially in the context of a real-time application.

This problem has received some attention in the last few years. A line of research has produced heuristic approaches that rely on physical intuition and/or simplified models to be used in designing controllers or planners [3,4]. However, the hand-crafted motion actions produced by these approaches are not guaranteed to be physically implementable. Another common approach is to use full-body numerical optimization [5] that enables handling the numerous constraints associated with legged robots. A remarkable set of aerial motions (jumps onto and off of platforms, spins, flips, barrel rolls) has been produced by MIT Mini Cheetah in [6,7,8] using a centroidal momentum-based non-linear optimization. However, optimization-based approaches for high-dimensional non-linear problems suffer from high computational costs, making them unsuitable for real-time implementation, particularly for online trajectory replanning. Recent advances [5,9] have made significant improvements in the efficiency of Model Predictive Control (MPC) for jumping tasks. However, the price to pay is to introduce some artificial constraints, such as fixing the contact sequence, the time-of-flight, or optimizing the contact timings offline [5]. One limitation of pre-specifying foot contacts, it that this can cause instability problems in the presence of a large contact mismatch [10].

A third set of approaches is based on Reinforcement Learning (RL). The increased computational power and the availability of sophisticated approaches for function approximation have fueled an increasing interest in robot learning. The seminal work of Lillicrap [11] showed that an actor-critic scheme combined with a deep Q network could be successfully applied to learn end-to-end policies for a continuous action domain. Given these results, several groups have then applied RL to quadrupeds for locomotion tasks [12,13,14,15,16], and to in-place hopping legs [17]. As with most model-free approaches, the use of RL requires a massive number of training steps (in the order of millions) to find good solutions [18]. Other approaches [19,20,21] seek to improve the efficiency and robustness of the learning process by combining Trajectory Optimization (TO) with RL: they use the former to generate initial trajectories to bootstrap the exploration of alternatives made by the latter. As a final remark, the efficiency and robustness of the RL learning process are greatly affected by the choice of the action space [22,23]. Some approaches require that the controller directly generates the torques [24], while others suggest that the controller operates in Cartesian or joint space [13,25]. In [19], the authors combined trajectory optimization with deep RL, employing trajectory optimization to provide demonstrations used as a starting point to facilitate initial exploration, thus addressing the issue of local optimality.

Despite the abundance of research in the field, the application of RL to a jumping task has received limited attention, and with good reasons. Indeed, due to the prolonged airborne phase, and the sparse/discrete nature of the reward (i.e., a positive reward is given at the touchdown moment), the problem of jumping is not easily addressed with a conventional RL [26]. There are a few learning methods applied to jumping that require prolonged training periods and/or over-engineered reward shaping [27]. A straightforward implementation of end-to-end RL methods without any structure can lead to disappointing learning performance [28]. This is primarily due to the non-smooth reward landscape created by abrupt contact changes. Among successful RL approaches to jump tasks, Yang et al. [28] utilize a controller to warm start a policy for efficient training (i.e., they learn the residual action) but consider only fixed jump durations. The authors claim that warm-starting the policy reduces the noise in the reward landscape, and determines a better convergence of the training process. Vezzi et al. [29] propose multi-stage learning phases with different levels of refinement; but their goal is to maximize jump height and distance, with little or no consideration for jump accuracy.

Paper Contribution

We propose an RL framework to produce omnidirectional jump trajectories (from standstill) on elevated terrain. The main features are: 1. a computation time below a few milliseconds that enables the real-time execution of the software at controller rates (i.e., in the order of kHz); 2. a quick learning phase; 3. high levels of accuracy and safety. A faster learning phase has two advantages: (1) lowering the barriers to accessing this technology for users with limited availability of computing power; (2) addressing the environmental concerns connected with the carbon footprint of learning technologies [30].

We compare our approach (that we call Guided Reinforcement Learning (GRL)) with both a baseline TO controller with a fixed jump duration and a “standard” End-to-end Reinforcement Learning (E2E) approach that considers joint references as action space. In the first comparison, GRL achieved better or equal performance while ensuring real-time computation speed. With respect to E2E walking approaches [13,24], we observed a substantial reduction in the number of episodes (without considering parallelization) needed to achieve good learning performance [31]. Instead, an E2E implementation specific for a jump motion (Section 4.2), did not provide satisfactory learning results. This aligns with the outcomes of [28]. To the author’s knowledge, nobody has yet achieved good results learning omni-directional jumps with pure E2E approaches.

2. Guided Reinforcement Learning for Jumping

2.1. Problem Description

Simple notions of bio-mechanics suggest that legged animals execute their jumps in three phases: 1. thrust—an explosive extension of the limbs follows an initial compression to gain sufficient momentum for the lift-off and the phase finishes when the foot leaves the ground; 2. flight—the body, subject uniquely to gravity, reaches an apex where the vertical Center of Mass (CoM) velocity changes its sign and the posture is adjusted to prepare for landing; 3. landing—the body realizes a touch-down, which means that the foot establishes contact with the ground again. For the sake of simplicity, we consider a simplified and yet realistic setting: a monopod robot, whose base link is sustained by passive prismatic joints preventing any change in its orientation (see Section 3.3). In this paper, we focus only on the thrust phase. We assume that a specialized controller manages the landing phase, such as the one proposed by Roscia et al. [2].

The flight phase is governed by the ballistic law. Let be the target location for the CoM of the robot at the end of the jump and let be the CoM state at lift-off. Since the trajectory is ballistic, after lift-off, the trajectory lies on the vertical plane containing and . The set of possible landing CoM positions is a function of , :

where is the flight time. Given these considerations, we can model the problem of generating the thrust phase in the following terms:

Problem 1.

Synthesize a thrust phase that produces a lift-off state (i.e., CoM position and velocity) that: 1. satisfies (1); 2. copes with the potentially adverse conditions posed by the environment (i.e., contact stability, friction constraints); 3. satisfies the physical and actuation constraints.

Non-linear optimization is frequently used for similar problems. However, it has two important limitations that obstruct its application in our specific case: 1. the computation requirements are very high, complicating both the real-time execution and the use of low-cost embedded hardware, 2. the problem is strongly non-convex, which can lead the solvers to be trapped in local minima, 3. local approaches have better performance in terms of computational effort but the quality of the computed solution is usually dependent on a good initialization.

2.2. Overview of the Approach

We use RL to learn optimal trajectories for a jump motion, which a lower-level controller then tracks. Our strategy is based on the following ideas. First, learning is performed in Cartesian space rather than in joint space. This choice allows generalization towards different robot morphologies and configurations [32]. Additionally, it allows for the side execution of a safety filter that discards unfeasible outputs of the RL agent by simple computations (see Section 3.2). Second, while the system is airborne, its final landing position is dictated by simple mechanical laws (ballistic). Therefore, the learning process can focus solely on the thrusting phase. Third, we know from biology [33] that mammals are extremely effective in learning how to walk because of “prior” knowledge of their genetic background. The learning process can thus be guided by an approximate knowledge of what the resulting motion should “look like”. Physical intuition suggests that a jump motion needs a “charging” phase to compress the legs, followed by an extension phase where the CoM is accelerated both upwards and in the jump direction. The “charging” phase allows the exploitation the full range of leg extension for CoM acceleration. Specifically, we parametrize the thrusting trajectory (i.e., from standstill to lift-off) for the CoM with a (3rd order) Bézier curve that is instrumental in obtaining such natural-looking trajectories. The adoption of Bézier curves for learning tasks is not uncommon in the literature [34,35,36]. This heuristic drastically reduces the dimension of the search space and gives a physically meaningful reference for trajectory learning.

Additionally, we will show that the trajectories computed with our approach are very close to the ones obtained with numerical optimization, and the system retains good generalization abilities. By making this choice, we can learn the Bézier parameters using an off-policy Deep Reinforcement Learning (Deep-RL) algorithm, Twin Delayed Deep Deterministic Policy Gradient (TD3), trained to minimize cost functions very similar to those typically used in optimal control.

The Cartesian trajectory generated by our RL agent is translated into joint space via inverse kinematics, and tracked by a low-level joint-space Proportional-Derivative (PD) controller with gravity compensation. This turned out to be more effective than parametrizing the actions in joint space, as in E2E RL (see Section 4.3.3). Indeed, despite E2E approaches demonstrating quite effective learning of walking motions [31], they turn out to struggle to learn tasks with sparse rewards [26], and this is the case with jumping motions.

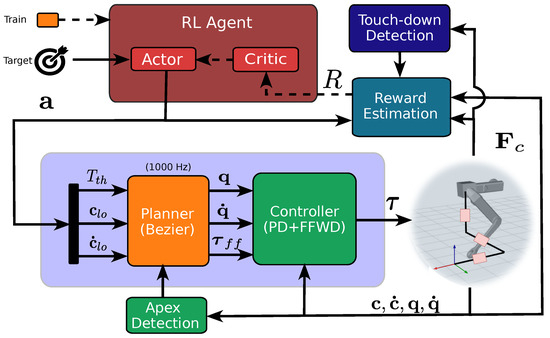

Our RL pipeline is depicted in Figure 1. The agent state can be in training or inference mode; when in training mode, the Critic Neural Network (NN) and the Actor NN are updated (see dashed lines in the figure) at regular intervals after collecting a sufficient number of rewards (cf. Table 1).

Figure 1.

Diagram of the GRL Framework. The framework is split into two levels: the RL agent and the planner. The RL agent produces an action for the planner based on a desired target. This computes a Bézier reference curve that is mapped into joint motion via inverse kinematics and tracked by the PD controller that provides the joint torques to feed the robot. During the training, at the end of each episode, a reward is computed and fed back to the RL agent. Dashed lines are active when the framework is in training mode.

Table 1.

Controller, planner, and simulator parameters.

3. Learning Framework

The thrust phase is characterized by the lift-off CoM position and velocity , and by the thrust duration , which is the time to reach the lift-off configuration from the initial state. For GRL, the state of the environment is defined as (, ), where is the initial CoM position and the CoM at the landing location (target). The objective of the GRL agent is to find the jump parameters (, , ) that minimize the landing position error at touch-down while satisfying the physical constraints. Our jumping scenario can be seen as a high-level planning of a single-step episode where the only action performed leads always to the end state.

3.1. The Action Space

The dimension of the action space has a strong impact on the performance. An action with few parameters reduces the exploration space, this reduces the complexity of the mapping, speeding up the learning process. A first way to reduce the complexity of the action space is by expressing and in spherical coordinates (2). Because of the peculiar nature of a jump task, the trajectory lies in the plane containing the CoM and its desired target location . Hence, we define the yaw angle as the orientation of the jumping plane (where the vector lies) in the frame. Because the jump trajectory is constrained to be in the jumping plane, remains constant (i.e., ) throughout the flight, and we can further restrict the coordinates to a convex bi-dimensional space:

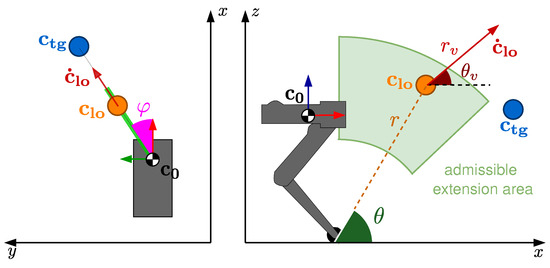

As shown in Figure 2, the lift-off position vector is identified by: the radius r (i.e., the maximum leg extension), the yaw angle , and the pitch angle . Likewise, the lift-off velocity , is described by its magnitude , and the pitch angle with respect to the ground.

Figure 2.

Action parametrization and its bounds. On the left is the top view, and on the right is the side view of the jumping plane.

Therefore, by using this assumption, we have reduced the dimension of the action space from 7 to 5: .

The action space can be further restricted by applying some domain knowledge. The radius r has to be smaller than a value ( m) to prevent boundary singularity due to leg over-extension, and greater than a value ( m) to avoid complete leg retraction. The bounds on the velocity , represented by m/s, and rad, and the bounds on pitch angle are set to rule out jumps that involve excessive foot slippage and useless force effort. Specifically, restricting to be positive ensures a non-negligible vertical component for the velocity, while bounding to the positive quadrant secures that the lift-off velocity will be oriented “toward” the target. Note that the above restrictions, performed at the level of action design, prevent the agent from exploring trajectories that are physically impossible, reducing the search space without any loss in terms of optimality.

Trajectory Parametrization in Cartesian Space

Our strategy to tackle the problem of generating a compression–extension trajectory for the leg to achieve a given lift-off configuration is based on two important choices: 1. restricting the search of the Cartesian space evolution to curves generated by known parametric functions; 2. making the RL agent learn the curve trajectory’s parameters and then finding the joint trajectories through inverse kinematics, with the obvious benefit of reducing the search space and boosting convergence.

The analytical and geometric properties of 3rd order Bézier curves make them a perfect fit for our problem. Bézier curves are a class of parametric functions defined on the same variable (i.e., time) which generate points in the convex hull of the control points. A 3rd order Bézier curve is defined by four control points. In our case, the first and the final points are constrained to be the initial and lift-off CoM positions, respectively. Defining the following Bernstein polynomials:

We can compactly write the Bézier curve as function of its control points:

The curve domain is defined only in the normalized time interval: . The derivative of a 3rd degree Bézier curve is itself a Bézier curve of 2nd degree with 3 control points defined as . This can be easily proved by computing the time derivative of (5) and finding that it simplifies to a Bézier polynomial of the 2nd order whose coefficients turn out to be . Since we are considering an execution time and , then the derivative becomes:

3.2. A Physically Informative Reward Function

In RL, an appropriate choice of the reward function is key to the outcome. Furthermore, we can use the reward function as a means to inject prior knowledge into the learning process. In our case, the reward function was designed to penalize the violations of the physical constraints while giving a positive reward to the executions that make the robot land in the proximity of the target point. The constraints that must be enforced throughout the whole thrust phase are called path constraints. Given the lower and upper limits , for each variable x, their violation is computed as a cost through the usage of a linear activation function :

The output of the activation function is zero if the value is in the allowed range, and is the exceeded violation otherwise.

Physical feasibility check: Before starting each episode, we perform a sanity check on the action proposed by the RL policy: if the given CoM vertical velocity is not sufficient to reach the target height, we abort the simulation, returning a high penalty cost . This can be computed by obtaining the time to reach the apex and substituting it in the ballistic equation:

This results in , which is the apex elevation. If , the episode is aborted. This feasibility check can be employed as an “a posteriori” safety feature in the inference phase, to check if a predicted action will lead to unsafe results. In this case, the action can be aborted early without performing the jump, and high-level strategies could be adopted to relax the jumping requirements (e.g., lower the target height).

Unilaterality constraint: In a legged robot, a leg can only push on the ground and not pull. This is because the component of the force along the contact normal (Z for flat terrains) must be positive.

Friction constraint: To avoid slippage, the tangential component of the contact force is bounded by the foot-terrain friction coefficient , i.e., .

Joint range and torque constraints: The three joint kinematic limits must not be exceeded. Similarly, each of the joint actuator torque limits must be respected.

Singularity constraint: The singularity constraint avoids the leg being completely stretched. During the thrust phase (where we assume that contact is maintained), CoM must stay in the hemisphere of radius equal to the maximum leg extension. This condition prevents the robot from getting close to points with reduced mobility that produce high joint velocities in the inverse kinematic computation. Even though this constraint is enforced by design in the action generation, the actual trajectory might still violate it due to tracking inaccuracies. If a singular configuration is reached, the episode is interrupted and a high cost is returned. The costs caused by the violation of path constraints are evaluated for each time step of the thrust phase and accumulated into the feasibility cost . In addition to these path constraints, we also want to account for the error between the actual and the desired lift-off state. This penalty encourages lift-off configurations that are easier to track for the motion controller. Another penalty is introduced when an episode does not produce a correct touchdown. This is needed to enable in-place jumps and to prevent the robot from staying stationary. The positive component of the reward function is the output of a non-linear landing target reward function, which evaluates how close the CoM arrived to the desired target (an equivalent reward could be defined by expressing landing accuracy at the foot level because the robot is supposed to land with the same joint posture as the initial state. We expressed this at the CoM level for consistency with the trusting trajectory). This reward grows exponentially when this distance approaches zero:

where k is a gain to encourage jumps closer to the target position, and is an adjustable parameter to bound the maximum value of and scale it. An infinitesimal value is added at the denominator to avoid division by zero. Hence, the total reward function is:

with , and where are the previously introduced feasibility costs. We decided to perform reward shaping [37], by clamping the total reward to by mean of an indicator function . This aims to promote the actions that induce constraint satisfaction.

3.3. Implementation Details

The training of the RL agent and the sim-to-sim validation of the learned policy were performed on top of a Gazebo simulator. Because we are considering only translational motions, we modeled a 3 Degrees of Freedom (DoFs) monopod with three passive prismatic joints attached at the base. These prismatic joints constrain the robot base’s movements to planes parallel to the ground. For the sake of simplicity, we also considered that the landing phase was under the responsibility of a different controller (e.g., see [2]). Our interest was simply in the touch-down event, which is checked by verifying that the contact force exceeds a positive threshold . Therefore, the termination of the episode is determined by the occurrence of three possible conditions: execution timeout, singularity, unfeasible jump, or touch-down.

The control policy (default NN) is implemented as a neural network that takes the state as an input, and outputs the actions. The NN is a multi-layer perception with three hidden layers of sizes 256, 512, and 256 with ReLU activations between each layer, and with tanh output activation to map the output between −1 and 1. A low-level PD plus gravity compensation controller generates the torques that are sent to the Gazebo simulator at 1 kHz. The joint reference positions at the lift-off are reset to the initial configuration to enable the natural retraction of the leg and avoid stumbling. Landing locations at different heights are achieved by making a 5 × 5 cm platform appear at the desired landing location only after the apex moment (making the platform appear only at the apex is needed for purely vertical jumps because it avoids impacts with the platform during the trusting phase). The impact of the foot with the platform determines the touch-down event and the consequent termination of the episode. A tight interaction with the simulation environment is key to the efficient training of the RL agent. The communication between the planner component and the Gazebo simulator is managed by the Locosim framework [38]. To interact with the planner, and consequentially with the environment, we developed an ROS node called Jumpleg Agent, where we implemented the RL agent. The code is available at (source code available at https://github.com/mfocchi/rl_pipeline) (accessed on 25 July 2024). During the initial stage of the training process, the action is randomly generated across episodes to allow for an initial broad exploration of the action space.

4. Simulation Results

In this section, we discuss some simulation results that validate the proposed approach and compare it with state-of-the-art alternatives. We used a computer with the following hardware specifications: CPU AMD Ryzen 5 3600 (Santa Clara, CA, USA), GPU Nvidia GTX1650 4 GB (Santa Clara, CA, USA), RAM 16 GB DDR4 3200 MHz. During training, we generated targets inside a predefined training region. These samples are generated randomly inside a cylinder centered on the robot’s initial CoM, with a radius in [0, 0.65] m and a height in [0.25, 0.5] m (see Figure 3). The size was selected to push the system to its performance limits. The parameters of the robot, controller, and simulation are presented in Table 1.

Figure 3.

Three-dimensional view of the calculated feasibility regions (target positions with Relative Percentual Error (RPE) ). In light gray, we depict the training region bounds while in black the test region bounds. The targets are located at different heights all around the robot.

4.1. Non-Linear Trajectory Optimization

The first approach we compare with is a standard optimal control strategy based on Feasible Differential Dynamic Programming (FDDP). FDDP is an efficient algorithm for whole-body control [39] that exploits the sparse structure of the optimal control problem. The FDDP solver is implemented with the optimal control library Crocoddyl [40] and uses the library Pinocchio [41] to enable fast computations of costs, dynamics, and their derivatives.

For the problem at hand, we discretized the trajectory into N successive knots with a timestep . As decision variables, we chose the joint torques. We split the jump into three phases: thrusting, flying, and landed. The constraints in FDDP are encoded as soft penalties in the cost. We encoded friction cones and the tracking of foot locations and velocities at the thrusting/landed stages, respectively. During the flying phase, we added a tracking cost for the CoM reference to encourage the robot to lift off. We regularized control inputs and states throughout the horizon.

4.2. End-to-End RL

At the opposite end of the spectrum, there are the approaches entirely based on Deep Learning, using end-to-end RL without injecting any prior domain knowledge. The RL agent sets joint position references to a low-level PD + gravity compensation controller. The use of a PD controller allows the system to inherit the stability properties of the feedback controller, but at the same time, it allows for explosive torques (by intentionally regulating the references to have a discrepancy w.r.t. the actual positions). Contrary to GRL, E2E RL queries the action at each control loop until touch down, because set points are also needed for the joints when the leg is airborne. To be more specific, instead of directly setting the joint references , the control policy produces as action and joint angle deviations w.r.t. to the nominal joint angle configuration . As suggested by [42,43], to ease the learning, we run the agent at a lower frequency (200 Hz) than the controller (1 kHz). We terminate the episode if: (1) a touchdown is detected; (2) the robot has fallen (i.e., the base link is close to the ground); (3) we reach singularity; or (4) a timeout of 2 s is reached. CoM, joint position, and joint velocities are included in the state. Since, in the E2E implementation, the joint velocity assumes non-zero values, it must be included in the state. Additionally, joint velocity has a temporal relation, which is likely the reason its inclusion in the state space improves learning performance [31]. Since the domain is not changing and the state is Markovian, augmenting the state with the history of some past samples [13,25] was not necessary. Hence, the observation is (, , , , ). Differently from GRL, the initial state of each episode is sampled from a uniform distribution in the neighborhood of the nominal joint pose (). We also encourage smoothness by penalising the quantity . Finally, because of the different units, we scale each state variable against its range in order to have better conditioning in the gradient of the NN. In terms of rewards, we use the same feasibility rewards used for GRL, computed at each loop. To discourage the robot from taking a sequence of rapid and small in-place jumps toward the target, we applied, before the lift-off, a penalty each time that the leg performs a ground contact that is not a unique touchdown. Another penalty is added to force the leg to stay still during the flying phase. The same target function (9) of GRL is used to encourage landing close to the target. Providing this reward at every step also results in a more informative (i.e., dense) reward [44] before the touchdown. Following the curriculum learning idea [45], we gradually increase the difficulty of the jump, enlarging the bounds of the training region (where the targets are sampled) in accordance with the number of episodes and the average reward.

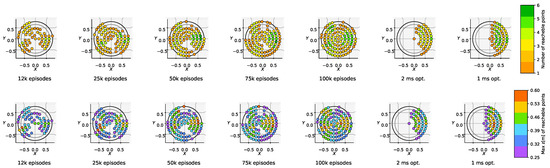

4.3. Policy Performance: The Feasibility Region

We tested the agent in inference mode, for omnidirectional jumps at 6 different heights, for a total of 726 target positions uniformly spaced on a grid (test region) of the same cylindrical shape as the training region. The area of the test region was chosen to be 20% bigger than the training region, to demonstrate the generalization abilities of the system at its feasibility boundary. The policy was periodically evaluated on the test region set to assess the evolution of the models stored during the training phase. To measure the quality of a jump, we used the RPE, which we define as the distance between the touch-down and the desired target point, divided by the jump length. The RPE metric is defined as:

where is the reached landing position. The feasibility region represents the area where the agent can execute an accurate landing, i.e., RPE . A color bar quantifies the jump accuracy.

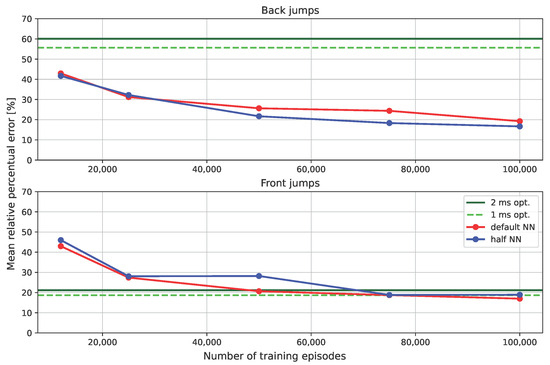

4.3.1. Performance Baseline: Trajectory Optimization

We compared the approach with the baseline FDDP approach, without changing the cost weights and limiting the number of iterations to 500. For optimal control, setting ms, the average computation time was 2.13 s for front jumps and 2.35 s for back jumps, while a single evaluation of the NN requires only ms. Figure 4 (last 2 plots in the row) shows that a reasonable accuracy is obtained for target locations in front of the robot, while FDDP behaves poorly for targets behind the robot. We also tried different timesteps , ranging from 1 to 3 ms. The solver did not converge for the majority of targets when using ms. Decreasing from 2 ms to 1 ms resulted in an increased computation time (from 2.3 to 4.3 s), while the number of feasible points increased from 118 to 168. We can easily explain the different performances for forward and backward jumps: when executing backward jumps FDDP cannot generalize owing to the asymmetry of the leg, and the solver remains trapped in a poor local minimum. We conjecture that this could be related to the leg morphology (with all knees bent backwards), which influences the main axis force ellipsoid, and hence the capability of the leg to push. These issues may be mitigated by duly tuning the weights for each target and/or augmenting the decision variables with the timings of the jump phases (at the price of a significant computational burden). Computing the mean RPE separately for the back region and the front region, we obtained an accuracy of 52 % and 16.5%, respectively.

Figure 4.

Top-view of the feasibility region: (first 5 plots in the row) for different numbers of episodes of the training phase (the number of reachable points is computed for each X,Y pair and for all the 6 different heights) and (last 2 plots in the row) in the case of the baseline FDDP.

4.3.2. Performance of GRL

We repeated the simulations on the test region using GRL with the default NN and with an NN where we halved the number of neurons in each hidden layer (half NN). Figure 5 shows that, in both cases, the RPE decreases (i.e., accuracy increases) monotonically with the number of episodes. For front jumps, the average RPE goes from approximately 40% to 16% in 100 k episodes. A satisfactory level (i.e., RPE 20% for front jumps) is already achieved after 50 k episodes. All the feasibility constraints turned out to be mostly satisfied after 10 k episodes. The number of feasible points (with RPE ) after 100 k is 316 out of 726. The fact the feasible points do not cover the whole test region is an indicator of the maximum capability of the robot. The figure also shows that GRL always outperforms the standard optimization method in terms of accuracy for back jumps, achieving a comparable accuracy for front jumps.

Figure 5.

Plot of the average RPE as a function of the number of training episodes. The red plot is the NN with a default number of neurons, the blue plot is the NN with half neurons, and the green and green dashed plots are the results of optimization with 2 ms and 1 ms discretization, respectively.

Halving the number of neurons, the two models behave with similar accuracy, showing that the RL model could ideally be further simplified. From Figure 3 and Figure 4, we can observe that the feasibility region expands with the number of training epochs. In the same figures, we can see that the test region (black cylinder) is bigger than the region where we trained the NN (gray cylinder), demonstrating its extrapolation capabilities.

GRL is also capable, to some extent, of learning the dynamics and compensating the tracking inaccuracies of the underlying low-level controller. This is shown in the accompanying video (link to the accompanying video (https://youtu.be/ARhoYwIrkU0?si=-dchquJwMFBNd2KN) (accessed on 25 July 2024)) by the grey ball that represents the ideal landing location (i.e., if the CoM lift-off velocity associated to each action was perfectly tracked). The grey ball is different from the desired target (blue ball) because of small tracking inaccuracies but the agent learned to provide a lift-off velocity that compensates for these, managing to accurately reach the desired location (blue ball). In the same video, we show how the quality of the jumps steadily improves with the number of training episodes.

4.3.3. Performance Baseline: E2E RL

The E2E policy was trained over 10M simulation steps, not reaching convergence and, consequently, not achieving satisfactory results. In the test phase, there were only a few targets out of 726 where the algorithm managed to attain an error below 10%. We are aware that E2E is capable of achieving walking tasks. However, for jumping tasks, while additional engineering effort in reward shaping [42] is believed to improve the learning speed and the performance of the E2E policy, our method provides a data-efficient alternative that is robust to the controller’s inaccuracy, generalizes to different target positions, and can be applied to different robot morphologies. More details are reported in the accompanying video.

5. Conclusions

In this work, we proposed a guided RL-based strategy to perform an omni-directional 3D jump on an elevated terrain with a legged robot. Taking inspiration from nature and exploiting a few heuristic assumptions on the the shape of the jump trajectory (i.e., we added some structure in the policy), we have shown that, in a few thousand training episodes, the agent obtains the ability to jump in a big area, maintaining high accuracy while reaching the boundary of its performance (coming from the physical limitations of the machine). The approach learns a robust policy and proficiently compensates for the tracking inaccuracies of the low-level controller. The proposed approach is very efficient (it requires a small number of training episodes to reach a good performance), it achieves a good generalization (e.g., by executing jumps in a region 20% larger than the one used for training), and it outperforms a standard end-to-end RL that was unable to learn the jumping motion. Compared to optimal control, GRL (1) achieved the same level of performance both in front jumps and back jumps (optimal control did not) and (2) required several orders of magnitude lower computation time.

In our future research, we plan to extend the approach to a full quadruped robot, considering also angular motions. The generalization to angular motions could lead us to a comprehensive a framework for the execution of a large class of jumping motions (e.g., twist, somersault, barrel jumps) on flat, elevated, or inclined surfaces. For a successful implementation on the real platform, a landing strategy [2] will be also integrated. We are also seeking ways to improve robustness by including robot non-idealities in the learning phase and to further speed up the training phase by leveraging parallel computation. Additionally, we plan to create a jump reflex that can be triggered multiple times, including when the robot is already in motion (e.g., non-zero initial velocity).

Supplementary Materials

A supplementary video can be found on: https://youtu.be/ARhoYwIrkU0?si=-dchquJwMFBNd2KN (accessed on 25 July 2024)). The code associated with this work can be found on: https://github.com/mfocchi/rl_pipeline (accessed on 25 July 2024).

Author Contributions

Conceptualization, M.F.; Methodology, A.D.P., D.F. and L.P.; Software, R.B. and M.F.; Validation, R.B. and M.F.; Investigation, R.B., M.F. and L.P.; Data curation, R.B. and M.F.; Writing—original draft, R.B. and M.F.; Writing—review & editing, M.F., A.D.P., D.F. and L.P.; Supervision, M.F. and L.P.; Project administration, M.F.; Funding acquisition, L.P. All authors have read and agreed to the published version of the manuscript.

Funding

The publication was created with the co-financing of the European Union FSE-REACT-EU, PON Research and Innovation 2014-2020 DM1062/2021.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is contained within the article and Supplementary Materials.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jenelten, F.; Grandia, R.; Farshidian, F.; Hutter, M. TAMOLS: Terrain-Aware Motion Optimization for Legged Systems. IEEE Trans. Robot. 2022, 38, 3395–3413. [Google Scholar] [CrossRef]

- Roscia, F.; Focchi, M.; Prete, A.D.; Caldwell, D.G.; Semini, C. Reactive Landing Controller for Quadruped Robots. IEEE Robot. Autom. Lett. 2023, 8, 7210–7217. [Google Scholar] [CrossRef]

- Park, H.W.; Wensing, P.M.; Kim, S. High-speed bounding with the MIT Cheetah 2: Control design and experiments. Int. J. Robot. Res. 2017, 36, 167–192. [Google Scholar] [CrossRef]

- Yim, J.K.; Singh, B.R.P.; Wang, E.K.; Featherstone, R.; Fearing, R.S. Precision Robotic Leaping and Landing Using Stance-Phase Balance. IEEE Robot. Autom. Lett. 2020, 5, 3422–3429. [Google Scholar] [CrossRef]

- Nguyen, C.; Nguyen, Q. Contact-timing and Trajectory Optimization for 3D Jumping on Quadruped Robots. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 11994–11999. [Google Scholar] [CrossRef]

- Chignoli, M.; Kim, S. Online Trajectory Optimization for Dynamic Aerial Motions of a Quadruped Robot. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: New York, NY, USA, 2021; pp. 7693–7699. [Google Scholar]

- García, G.; Griffin, R.; Pratt, J. Time-Varying Model Predictive Control for Highly Dynamic Motions of Quadrupedal Robots. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 7344–7349. [Google Scholar] [CrossRef]

- Chignoli, M.; Morozov, S.; Kim, S. Rapid and Reliable Quadruped Motion Planning with Omnidirectional Jumping. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 6621–6627. [Google Scholar] [CrossRef]

- Mastalli, C.; Merkt, W.; Xin, G.; Shim, J.; Mistry, M.; Havoutis, I.; Vijayakumar, S. Agile Maneuvers in Legged Robots:a Predictive Control Approach. arXiv 2022, arXiv:2203.07554v2. [Google Scholar]

- Li, H.; Wensing, P.M. Cafe-Mpc: A Cascaded-Fidelity Model Predictive Control Framework with Tuning-Free Whole-Body Control. arXiv 2024, arXiv:2403.03995. [Google Scholar]

- Lillicrap, T.P.; Hunt, J.J.; Pritzel, A.; Heess, N.M.O.; Erez, T.; Tassa, Y.; Silver, D.; Wierstra, D. Continuous control with deep reinforcement learning. arXiv 2015, arXiv:1509.02971. [Google Scholar]

- Gehring, C.; Coros, S.; Hutler, M.; Dario Bellicoso, C.; Heijnen, H.; Diethelm, R.; Bloesch, M.; Fankhauser, P.; Hwangbo, J.; Hoepflinger, M.; et al. Practice Makes Perfect: An Optimization-Based Approach to Controlling Agile Motions for a Quadruped Robot. IEEE Robot. Autom. Mag. 2016, 23, 34–43. [Google Scholar] [CrossRef]

- Hwangbo, J.; Lee, J.; Dosovitskiy, A.; Bellicoso, D.; Tsounis, V.; Koltun, V.; Hutter, M. Learning agile and dynamic motor skills for legged robots. Sci. Robot. 2019, 4, eaau5872. [Google Scholar] [CrossRef] [PubMed]

- Peng, X.; Coumans, E.; Zhang, T.; Lee, T.W.; Tan, J.; Levine, S. Learning Agile Robotic Locomotion Skills by Imitating Animals. In Proceedings of the Robotics: Science and Systems 2020, Corvalis, OR, USA, 12–16 July 2020. [Google Scholar] [CrossRef]

- Ji, G.; Mun, J.; Kim, H.; Hwangbo, J. Concurrent Training of a Control Policy and a State Estimator for Dynamic and Robust Legged Locomotion. IEEE Robot. Autom. Lett. 2022, 7, 4630–4637. [Google Scholar] [CrossRef]

- Rudin, N.; Hoeller, D.; Reist, P.; Hutter, M. Learning to walk in minutes using massively parallel deep reinforcement learning. In Proceedings of the Conference on Robot Learning. PMLR, Auckland, New Zealand, 14–18 December 2022; pp. 91–100. [Google Scholar]

- Fankhauser, P.; Hutter, M.; Gehring, C.; Bloesch, M.; Hoepflinger, M.A.; Siegwart, R. Reinforcement learning of single legged locomotion. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 188–193. [Google Scholar] [CrossRef]

- OpenAI. Benchmarks for Spinning Up Implementations. 2022. Available online: https://spinningup.openai.com/en/latest/spinningup/bench.html#benchmarks-for-spinning-up-implementations (accessed on 26 February 2023).

- Bogdanovic, M.; Khadiv, M.; Righetti, L. Model-free reinforcement learning for robust locomotion using demonstrations from trajectory optimization. Front. Robot. AI 2022, 9, 854212. [Google Scholar] [CrossRef] [PubMed]

- Bellegarda, G.; Nguyen, C.; Nguyen, Q. Robust Quadruped Jumping via Deep Reinforcement Learning. arXiv 2023, arXiv:cs.RO/2011.07089. [Google Scholar]

- Grandesso, G.; Alboni, E.; Papini, G.P.; Wensing, P.M.; Prete, A.D. CACTO: Continuous Actor-Critic with Trajectory Optimization-Towards Global Optimality. IEEE Robot. Autom. Lett. 2023, 8, 3318–3325. [Google Scholar] [CrossRef]

- Peng, X.B.; van de Panne, M. Learning Locomotion Skills Using DeepRL: Does the Choice of Action Space Matter? In Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Computer Animation, Los Angeles, CA, USA, 28–30 July 2017. [Google Scholar]

- Bellegarda, G.; Byl, K. Training in Task Space to Speed Up and Guide Reinforcement Learning. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 2693–2699. [Google Scholar] [CrossRef]

- Chen, S.; Zhang, B.; Mueller, M.W.; Rai, A.; Sreenath, K. Learning Torque Control for Quadrupedal Locomotion. arXiv 2022, arXiv:2203.05194. [Google Scholar]

- Aractingi, M.; Léziart, P.A.; Flayols, T.; Perez, J.; Silander, T.; Souères, P. Controlling the Solo12 Quadruped Robot with Deep Reinforcement Learning. Sci. Rep. 2023, 13, 11945. [Google Scholar] [CrossRef] [PubMed]

- Majid, A.Y.; Saaybi, S.; van Rietbergen, T.; François-Lavet, V.; Prasad, R.V.; Verhoeven, C. Deep Reinforcement Learning Versus Evolution Strategies: A Comparative Survey. arXiv 2021, arXiv:2110.01411. [Google Scholar] [CrossRef] [PubMed]

- Atanassov, V.; Ding, J.; Kober, J.; Havoutis, I.; Santina, C.D. Curriculum-Based Reinforcement Learning for Quadrupedal Jumping: A Reference-free Design. arXiv 2024, arXiv:2401.16337. [Google Scholar]

- Yang, Y.; Meng, X.; Yu, W.; Zhang, T.; Tan, J.; Boots, B. Continuous Versatile Jumping Using Learned Action Residuals. In Proceedings of the Machine Learning Research PMLR, Philadelphia, PA, USA, 15–16 June 2023; Volume 211, pp. 770–782. [Google Scholar]

- Vezzi, F.; Ding, J.; Raffin, A.; Kober, J.; Della Santina, C. Two-Stage Learning of Highly Dynamic Motions with Rigid and Articulated Soft Quadrupeds. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024. [Google Scholar]

- Henderson, P.; Hu, J.; Romoff, J.; Brunskill, E.; Jurafsky, D.; Pineau, J. Towards the systematic reporting of the energy and carbon footprints of machine learning. J. Mach. Learn. Res. 2020, 21, 10039–10081. [Google Scholar]

- Mock, J.W.; Muknahallipatna, S.S. A comparison of ppo, td3 and sac reinforcement algorithms for quadruped walking gait generation. J. Intell. Learn. Syst. Appl. 2023, 15, 36–56. [Google Scholar] [CrossRef]

- Shafiee, M.; Bellegarda, G.; Ijspeert, A. ManyQuadrupeds: Learning a Single Locomotion Policy for Diverse Quadruped Robots. arXiv 2024, arXiv:2310.10486. [Google Scholar]

- Zador, A.M. A critique of pure learning and what artificial neural networks can learn from animal brains. Nat. Commun. 2019, 10, 3770. [Google Scholar] [CrossRef] [PubMed]

- Shen, H.; Yosinski, J.; Kormushev, P.; Caldwell, D.G.; Lipson, H. Learning Fast Quadruped Robot Gaits with the RL PoWER Spline Parameterization. Cybern. Inf. Technol. 2013, 12, 66–75. [Google Scholar] [CrossRef]

- Kim, T.; Lee, S.H. Quadruped Locomotion on Non-Rigid Terrain using Reinforcement Learning. arXiv 2021, arXiv:2107.02955. [Google Scholar]

- Ji, Y.; Li, Z.; Sun, Y.; Peng, X.B.; Levine, S.; Berseth, G.; Sreenath, K. Hierarchical Reinforcement Learning for Precise Soccer Shooting Skills using a Quadrupedal Robot. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 1479–1486. [Google Scholar] [CrossRef]

- Grzes, M. Reward Shaping in Episodic Reinforcement Learning; ACM: New York, NY, USA, 2017. [Google Scholar]

- Focchi, M.; Roscia, F.; Semini, C. Locosim: An Open-Source Cross-PlatformRobotics Framework. In Synergetic Cooperation between Robots and Humans, Proceedings of the CLAWAR 2023, Florianopolis, Brazil, 2–4 October 2023; Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2024; pp. 395–406. [Google Scholar] [CrossRef]

- Budhiraja, R.; Carpentier, J.; Mastalli, C.; Mansard, N. Differential Dynamic Programming for Multi-Phase Rigid Contact Dynamics. In Proceedings of the IEEE International Conference on Humanoid Robots, Beijing, China, 6–9 November 2018. [Google Scholar]

- Mastalli, C.; Budhiraja, R.; Merkt, W.; Saurel, G.; Hammoud, B.; Naveau, M.; Carpentier, J.; Righetti, L.; Vijayakumar, S.; Mansard, N. Crocoddyl: An Efficient and Versatile Framework for Multi-Contact Optimal Control. In Proceedings of the IEEE International Conference on Robotics and Automation, Paris, France, 31 May–31 August 2020; pp. 2536–2542. [Google Scholar] [CrossRef]

- Carpentier, J.; Saurel, G.; Buondonno, G.; Mirabel, J.; Lamiraux, F.; Stasse, O.; Mansard, N. The Pinocchio C++ library—A fast and flexible implementation of rigid body dynamics algorithms and their analytical derivatives. In Proceedings of the IEEE International Symposium on System Integrations (SII), Paris, France, 14–16 January 2019. [Google Scholar]

- Gangapurwala, S.; Campanaro, L.; Havoutis, I. Learning Low-Frequency Motion Control for Robust and Dynamic Robot Locomotion. arXiv 2022, arXiv:2209.14887. [Google Scholar]

- Zhao, T.Z.; Kumar, V.; Levine, S.; Finn, C. Learning Fine-Grained Bimanual Manipulation with Low-Cost Hardware. arXiv 2023, arXiv:2304.13705. [Google Scholar]

- Jeon, S.H.; Heim, S.; Khazoom, C.; Kim, S. Benchmarking Potential Based Rewards for Learning Humanoid Locomotion. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 9204–9210. [Google Scholar] [CrossRef]

- Bengio, Y.; Louradour, J.; Collobert, R.; Weston, J. Curriculum learning. In Proceedings of the 26th Annual International Conference on Machine Learning, Montreal, QC, Canada, 14–18 June 2009; Volume 382, pp. 41–48. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).