Feature Detection of Non-Cooperative and Rotating Space Objects through Bayesian Optimization

Abstract

1. Introduction

- We design a reward function that combines two components: the detection reward and the sinusoidal reward. The detection reward uses historical feature detection data to assign rewards to the chaser states. The sinusoidal reward uses the predicted feature locations to compute the difference between the ideal and actual chaser states and assigns rewards to the chaser states based on that difference. Simulation results with the combined reward model show that the proposed algorithm drives the chaser toward successful feature detection.

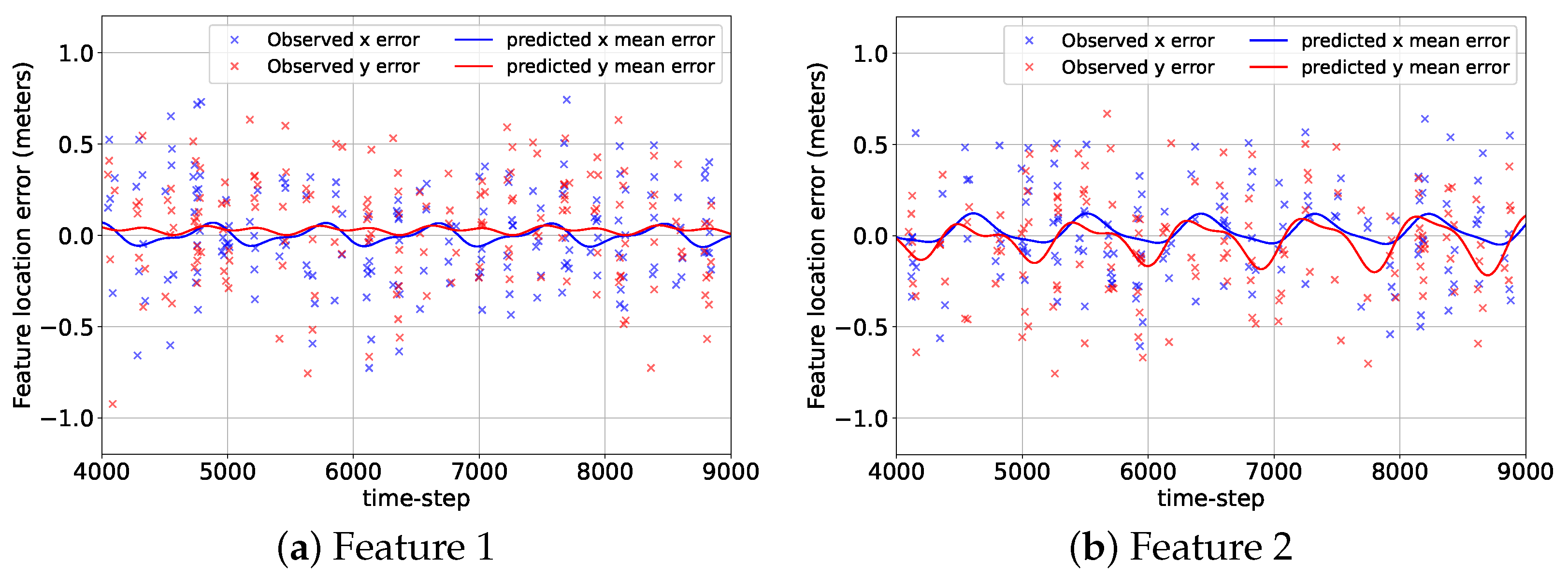

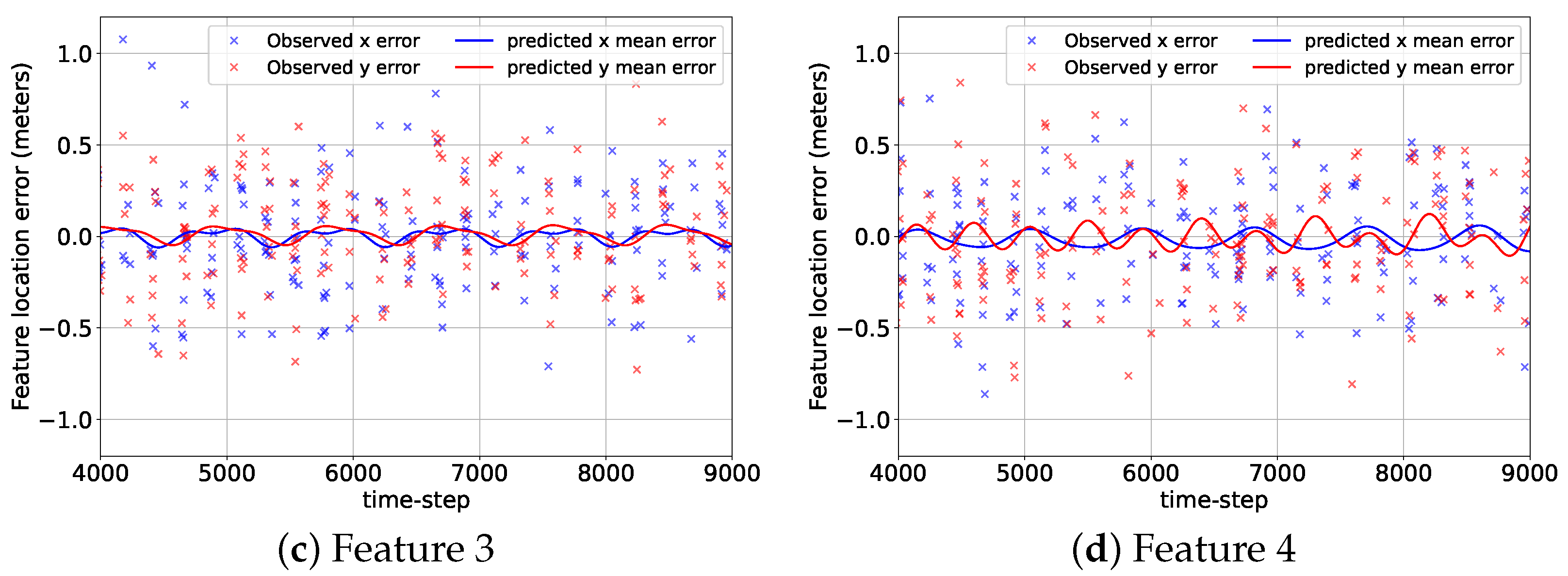

- We create data-driven models based on Gaussian Process (GP) regression to predict the feature positions, which are used in the sinusoidal reward calculation. Because the feature positions are predicted by the GP models, the proposed algorithm can be executed without a physics model.

- We use the Fast Fourier Transform (FFT) to estimate the target rotational period and use the estimated period as the initial guess of the periodicity hyper-parameter when training the GP models. This informed initialization yields GP models with highly accurate predictions.

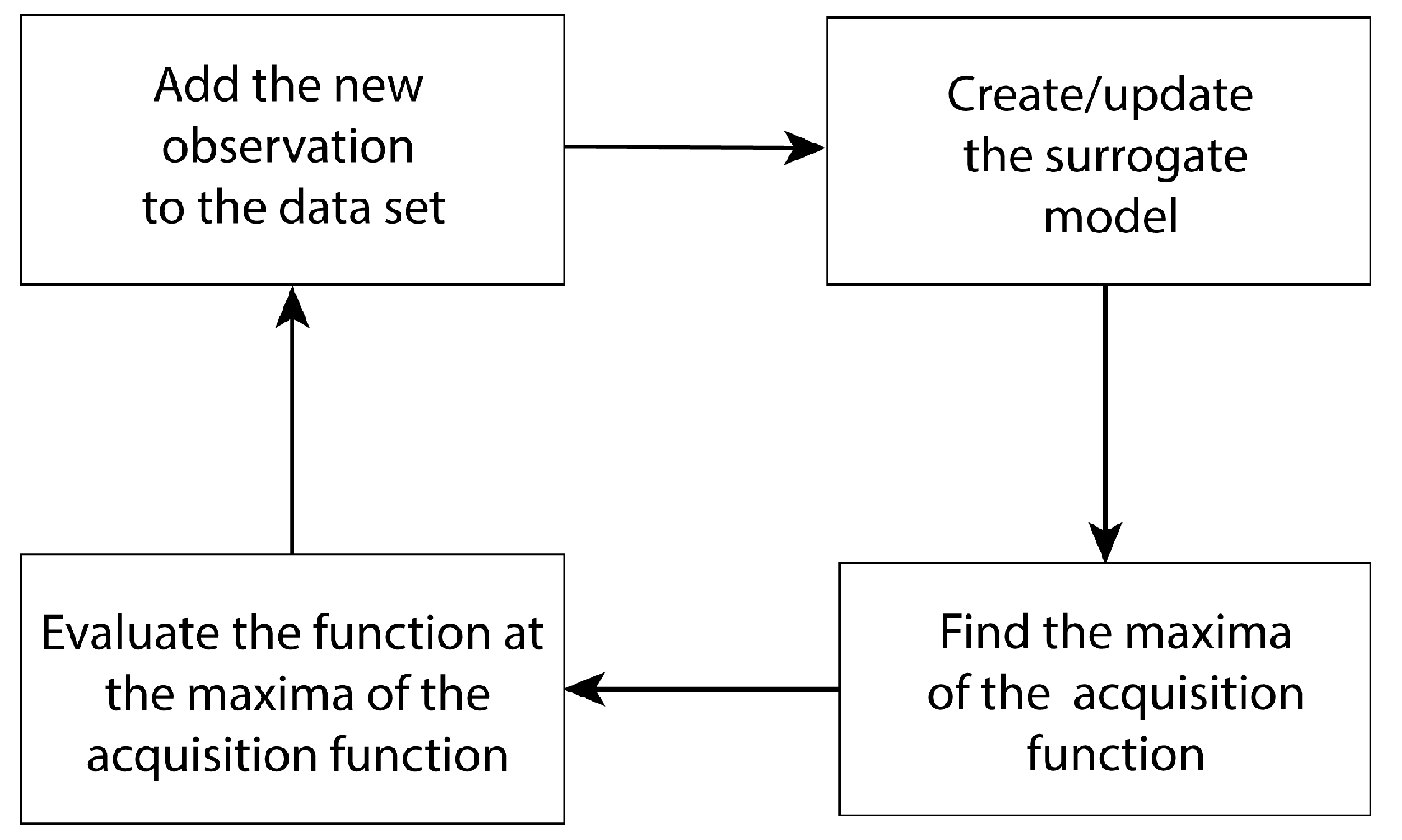

- We utilize a GP for creating a reward distribution model over the chaser states. Using this model as the surrogate model and the Upper Confidence Bound (UCB) function as the acquisition function, we employ the Bayesian Optimization (BO) technique to determine the appropriate camera directional angles. We demonstrate that BO with the reward distribution model using a GP can determine the camera directional angles that lead to satisfactory feature detection performance.
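The last contribution, a GP surrogate over rewards combined with a UCB acquisition function, can be illustrated with a minimal sketch. Everything below is an assumption made for illustration rather than the authors' implementation: the state is reduced to two normalized camera angles, the kernel is a plain squared-exponential, the hyper-parameters are fixed instead of trained, and the toy reward and the names `rbf_kernel`, `gp_posterior`, and `ucb_select` are hypothetical.

```python
import numpy as np

def rbf_kernel(A, B, length_scale=1.0, variance=1.0):
    """Squared-exponential kernel between the rows of A and the rows of B."""
    sq_dist = (np.sum(A**2, axis=1)[:, None]
               + np.sum(B**2, axis=1)[None, :]
               - 2.0 * A @ B.T)
    return variance * np.exp(-0.5 * np.maximum(sq_dist, 0.0) / length_scale**2)

def gp_posterior(X_train, y_train, X_query,
                 length_scale=1.0, variance=1.0, noise=1e-3):
    """Posterior mean and standard deviation of a zero-mean GP at X_query."""
    K = rbf_kernel(X_train, X_train, length_scale, variance)
    K += noise * np.eye(len(X_train))
    K_s = rbf_kernel(X_train, X_query, length_scale, variance)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    # The prior variance of an RBF kernel is constant on the diagonal.
    var = np.maximum(variance - np.sum(v**2, axis=0), 0.0)
    return mean, np.sqrt(var)

def ucb_select(X_train, y_train, candidates, kappa=2.0, **gp_args):
    """Return the candidate that maximizes mean + kappa * std, plus all scores."""
    mean, std = gp_posterior(X_train, y_train, candidates, **gp_args)
    ucb = mean + kappa * std
    return candidates[np.argmax(ucb)], ucb

# Toy data (assumed): rewards observed at 40 previously visited camera-angle pairs.
rng = np.random.default_rng(0)
X_train = rng.uniform(-1.0, 1.0, size=(40, 2))
y_train = np.exp(-4.0 * np.sum((X_train - np.array([0.3, -0.2]))**2, axis=1))

# Action space (assumed): a coarse grid of candidate camera-angle pairs.
grid = np.linspace(-1.0, 1.0, 21)
candidates = np.array([[a, b] for a in grid for b in grid])

best_action, ucb = ucb_select(X_train, y_train, candidates,
                              kappa=2.0, length_scale=0.5)
print("selected camera angles (normalized):", best_action)
```

The parameter `kappa` sets the balance between exploiting states with a high predicted reward and exploring states where the surrogate is uncertain; with `kappa=0` the selection reduces to the maximum of the posterior mean.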

2. Methodology

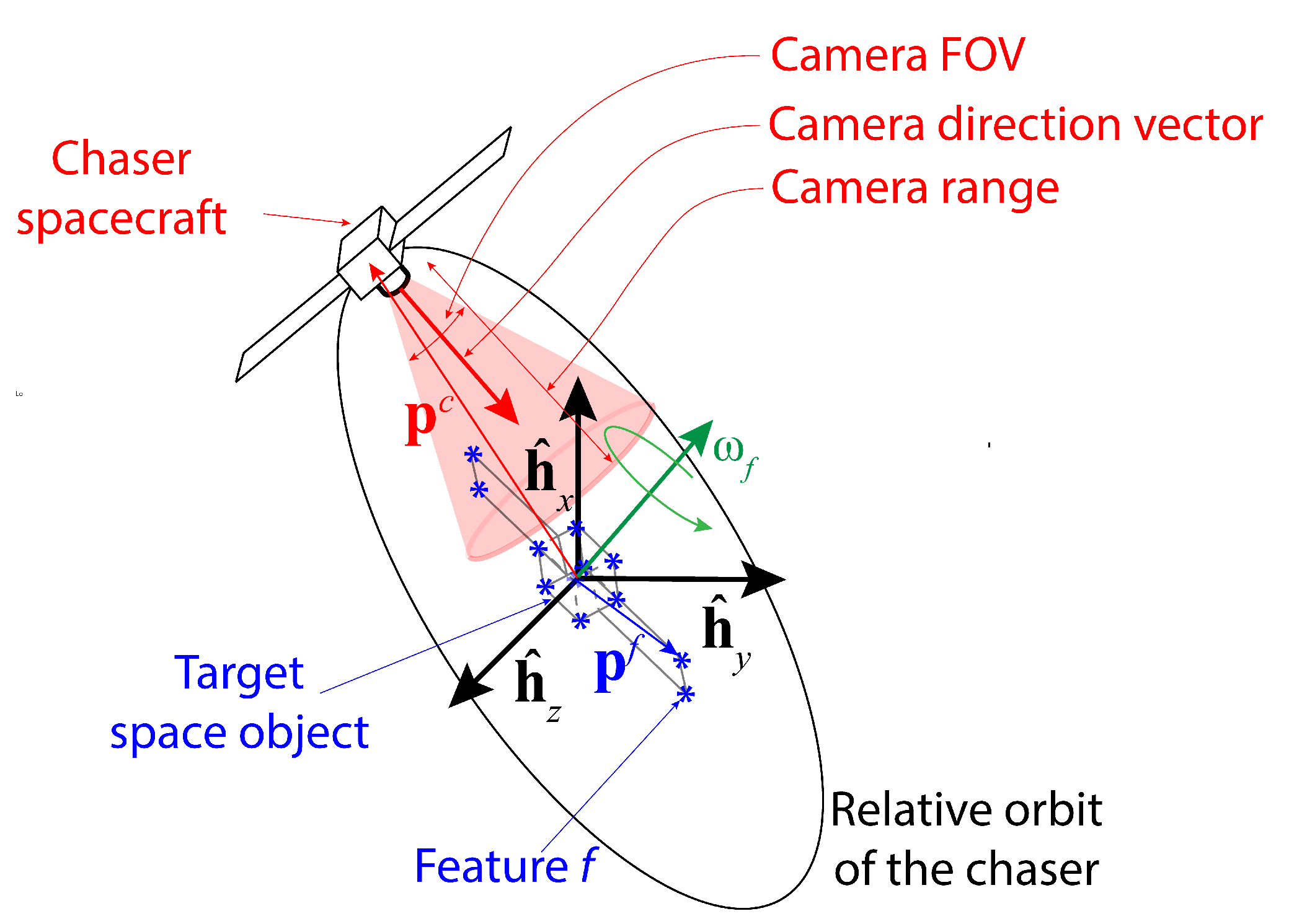

2.1. Problem Specification in the 3D Spatial Domain

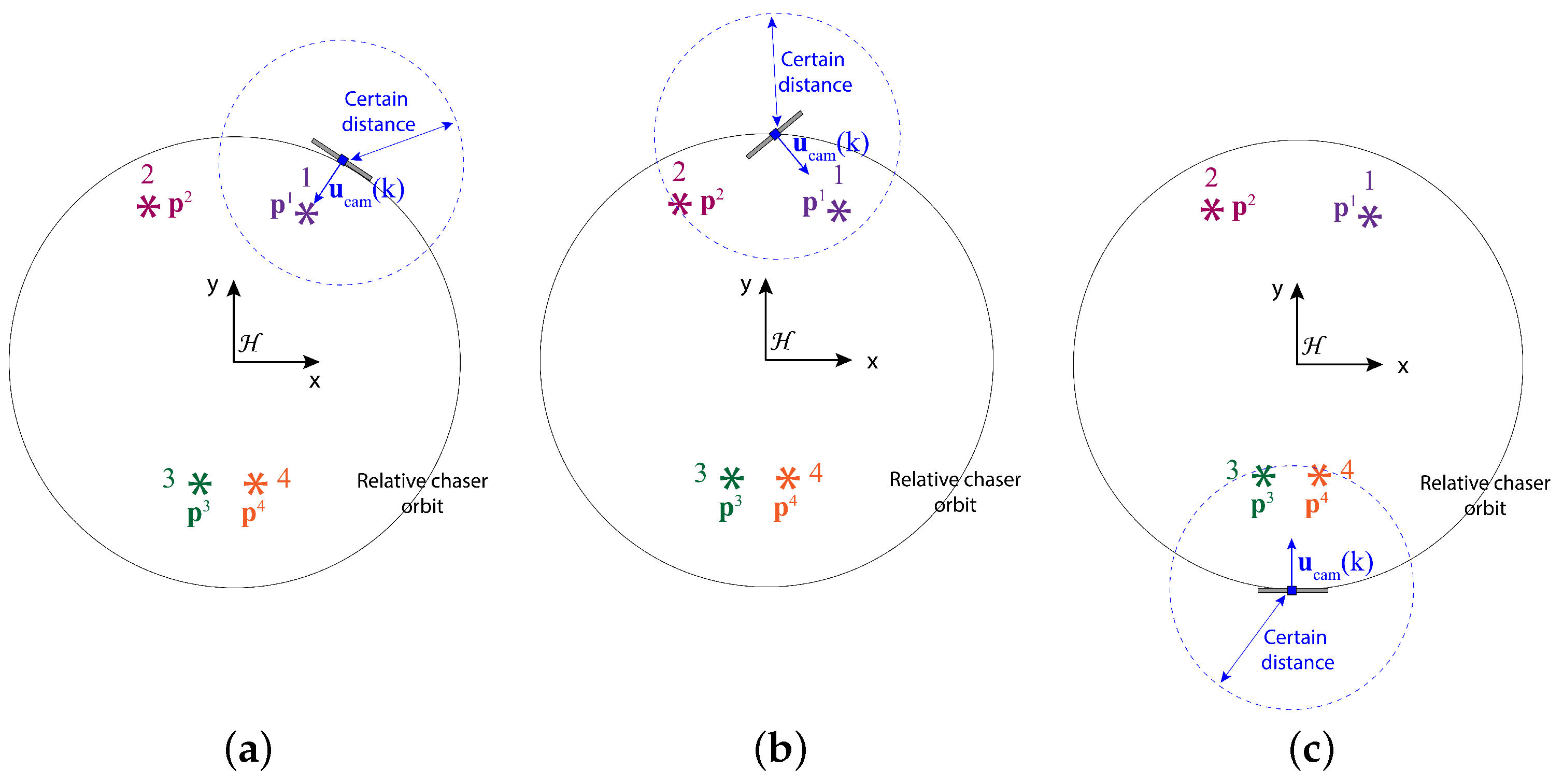

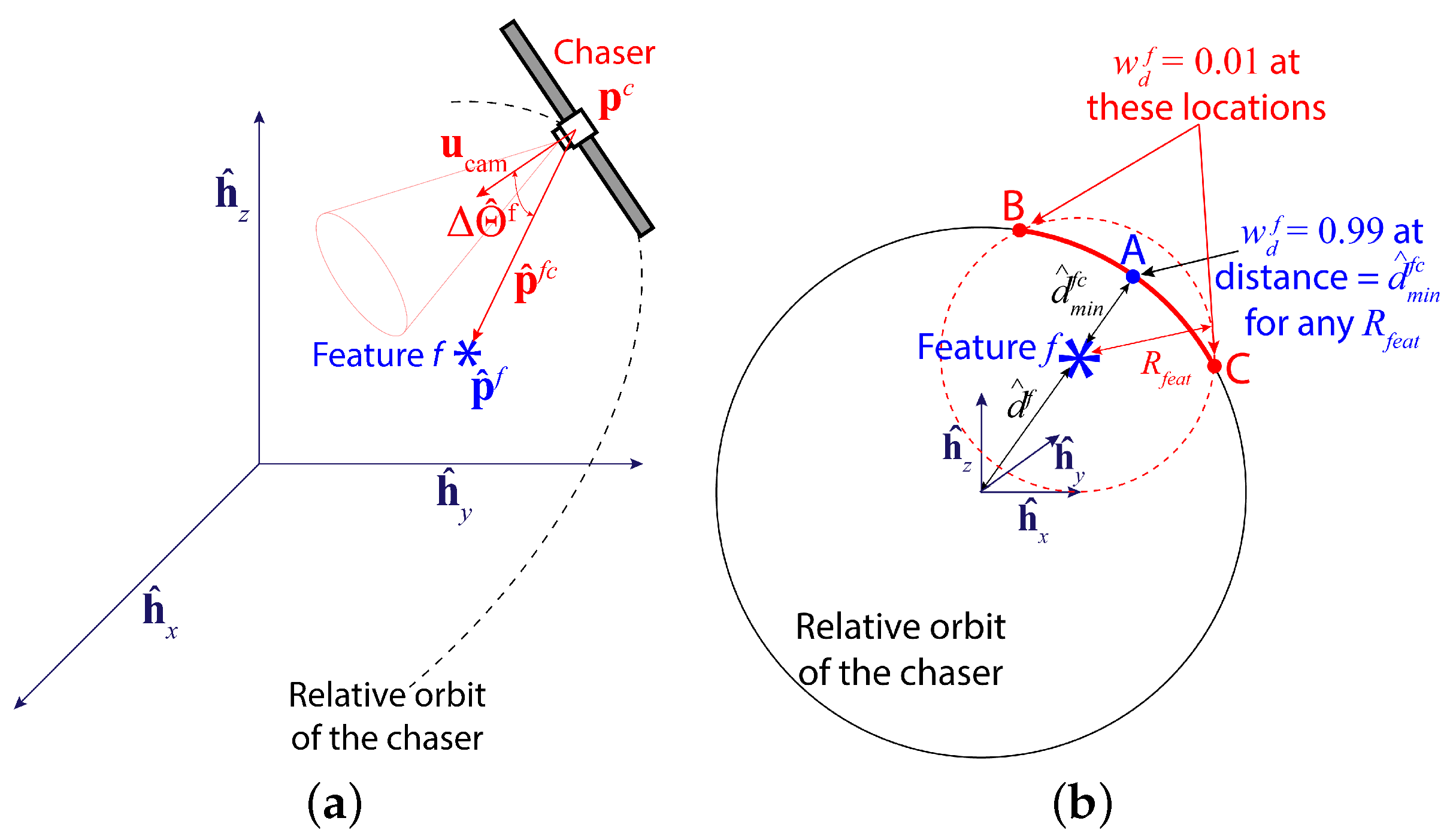

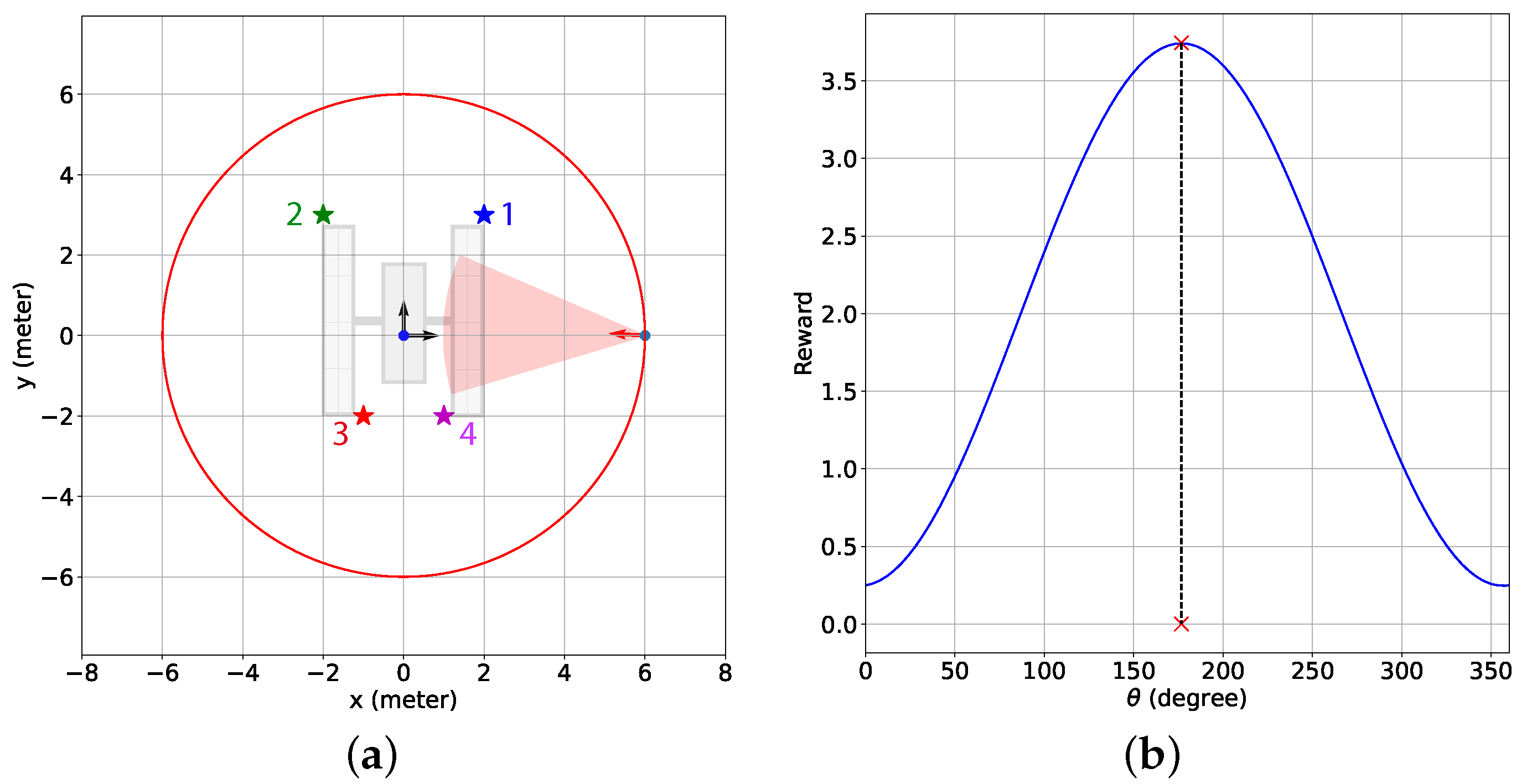

- The camera is pointed directly toward a feature f if the remaining features are farther than a certain distance from the chaser (see Figure 2a). In this case, the optimal camera directional angles equal the true ideal camera directional angles for feature f, that is, the angles at which the camera direction vector points exactly toward feature f. The true ideal camera directional angles are defined from the relative position of feature f with respect to the chaser, with both angles expressed in degrees.

- If two or more features are within that range but are not simultaneously detectable, the chaser should point the camera toward the closest feature (see Figure 2b).

- If two or more features are within that range and are simultaneously detectable, the chaser should point the camera in the direction that detects the maximum number of features (see Figure 2c). In this work, for the case of two features p and q, the optimal camera directional angles are defined through distance weights associated with features p and q, which are calculated from the distances between each feature and the chaser. Because of these weights, the optimal camera directional angles lean toward the ideal camera directional angles of the in-range feature that is closer to the chaser. The design of the distance-based weights is described in Section 2.3.2.
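The geometry behind these three cases can be sketched in a few lines. The sketch below rests on assumptions that are not taken from the paper: the two camera directional angles are treated as azimuth (measured in the x-y plane from the +x axis, in [0, 360) degrees) and elevation (measured from the x-y plane, in [-90, 90] degrees), the distance weights are simple inverse distances rather than the weights of Section 2.3.2, and the blending is done on unit direction vectors instead of on the angles themselves to avoid wrap-around at 0/360 degrees. All function names are hypothetical.

```python
import numpy as np

def ideal_camera_angles(rel_pos):
    """Azimuth and elevation (degrees) of the line of sight along rel_pos.

    Assumed convention: azimuth in [0, 360) from the +x axis in the x-y plane,
    elevation in [-90, 90] from the x-y plane.
    """
    x, y, z = np.asarray(rel_pos, dtype=float)
    azimuth = np.degrees(np.arctan2(y, x)) % 360.0
    elevation = np.degrees(np.arctan2(z, np.hypot(x, y)))
    return azimuth, elevation

def direction_from_angles(azimuth, elevation):
    """Unit camera direction vector for the given angles (degrees)."""
    az, el = np.radians(azimuth), np.radians(elevation)
    return np.array([np.cos(el) * np.cos(az),
                     np.cos(el) * np.sin(az),
                     np.sin(el)])

def weighted_camera_angles(rel_p, rel_q):
    """Blend the lines of sight to features p and q with distance weights.

    Hypothetical weighting: inverse distance, so the closer feature dominates.
    Not meaningful if the two lines of sight are exactly opposite.
    """
    rel_p = np.asarray(rel_p, dtype=float)
    rel_q = np.asarray(rel_q, dtype=float)
    d_p, d_q = np.linalg.norm(rel_p), np.linalg.norm(rel_q)
    w_p, w_q = 1.0 / d_p, 1.0 / d_q
    blended = w_p * rel_p / d_p + w_q * rel_q / d_q
    return ideal_camera_angles(blended)

# Feature p is 1 unit away along +x; feature q is 2 units away along +y.
az, el = weighted_camera_angles([1.0, 0.0, 0.0], [0.0, 2.0, 0.0])
print(f"blended azimuth = {az:.2f} deg, elevation = {el:.2f} deg")
```

In this example the blended azimuth falls between the two ideal azimuths (0 and 90 degrees) and sits closer to feature p, which is the nearer of the two.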

2.2. Camera Measurement Model

2.3. Reward Model

2.3.1. Feature Detection Reward

2.3.2. Sinusoidal Reward

2.3.3. Combined Reward (r)

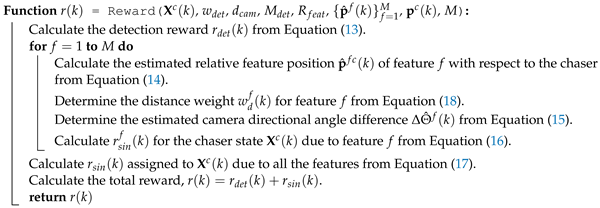

Algorithm 1: Working mechanism of the Reward() function

2.4. Gaussian Process (GP) Models

2.4.1. GP-Feature Models

Algorithm 2: Working mechanism of the findFeaturePeriod() function
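As a rough illustration of the idea behind the findFeaturePeriod() function named in Algorithm 2, the sketch below estimates a dominant period from the FFT of one position component of a feature. It is a simplified stand-in under stated assumptions, not the authors' algorithm: the samples are assumed to be uniformly spaced in time with no gaps, the signal and noise level are synthetic, and the name `find_feature_period` and its parameters are hypothetical.

```python
import numpy as np

def find_feature_period(samples, dt):
    """Estimate the dominant period of a uniformly sampled signal via the FFT.

    samples: 1-D sequence of one feature position component over time.
    dt: sampling interval (same time unit as the returned period).
    """
    samples = np.asarray(samples, dtype=float)
    centered = samples - samples.mean()          # remove the constant offset
    spectrum = np.abs(np.fft.rfft(centered))     # one-sided magnitude spectrum
    freqs = np.fft.rfftfreq(len(centered), d=dt)
    peak = np.argmax(spectrum[1:]) + 1           # skip the zero-frequency bin
    return 1.0 / freqs[peak]

# Synthetic example (assumed values): a feature coordinate oscillating with a
# 20 s period, sampled every 0.5 s for 200 s, with small measurement noise.
rng = np.random.default_rng(1)
dt = 0.5
t = np.arange(400) * dt
signal = np.sin(2.0 * np.pi * t / 20.0) + 0.05 * rng.standard_normal(t.size)

period_guess = find_feature_period(signal, dt)
print(f"estimated period: {period_guess:.2f} s")
```

The frequency resolution of this estimate is 1/(N·dt), so it is only as fine as the record is long; that is consistent with using the result as an initial guess for the periodicity hyper-parameter rather than as a final value.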

2.4.2. GP-Reward Model

2.5. Limitations of the GP Models

2.6. Bayesian Optimization (BO)

2.7. Simulation Workflow

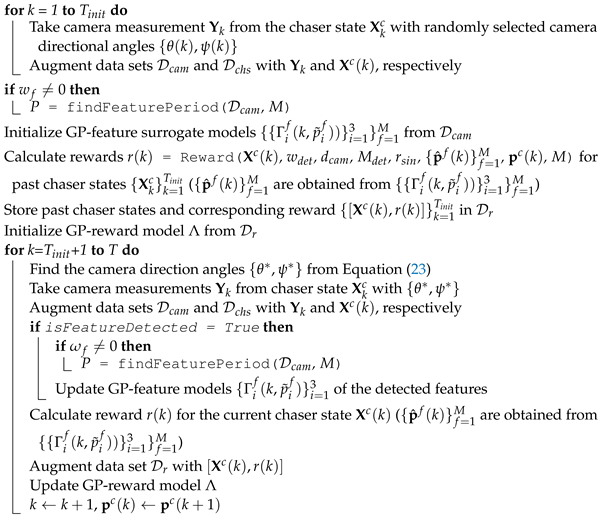

Algorithm 3: Bayesian Optimization-based SOCRAFT algorithm for detecting features of a non-cooperative and rotating target

Initialize the chaser trajectory, total steps T, total steps for initial data collection, camera FOV, camera range, maximum reward, detection reward weight, radius of influence, and total number of features M. Initialize the three data sets, the first with maximum size S.

3. Results and Discussion

3.1. Common Simulation Setup

3.2. Two-Dimensional Case

3.2.1. Simulation Setup

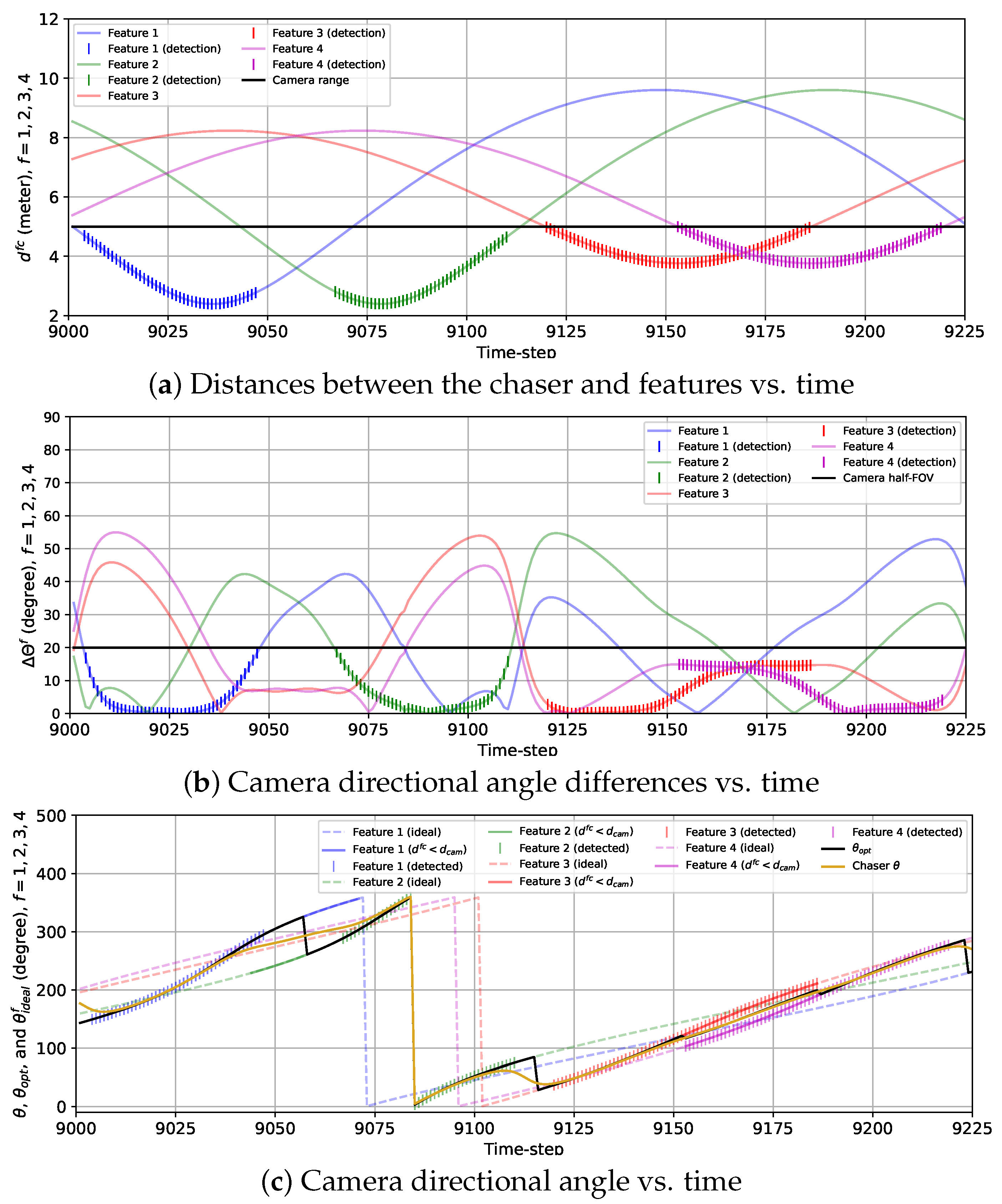

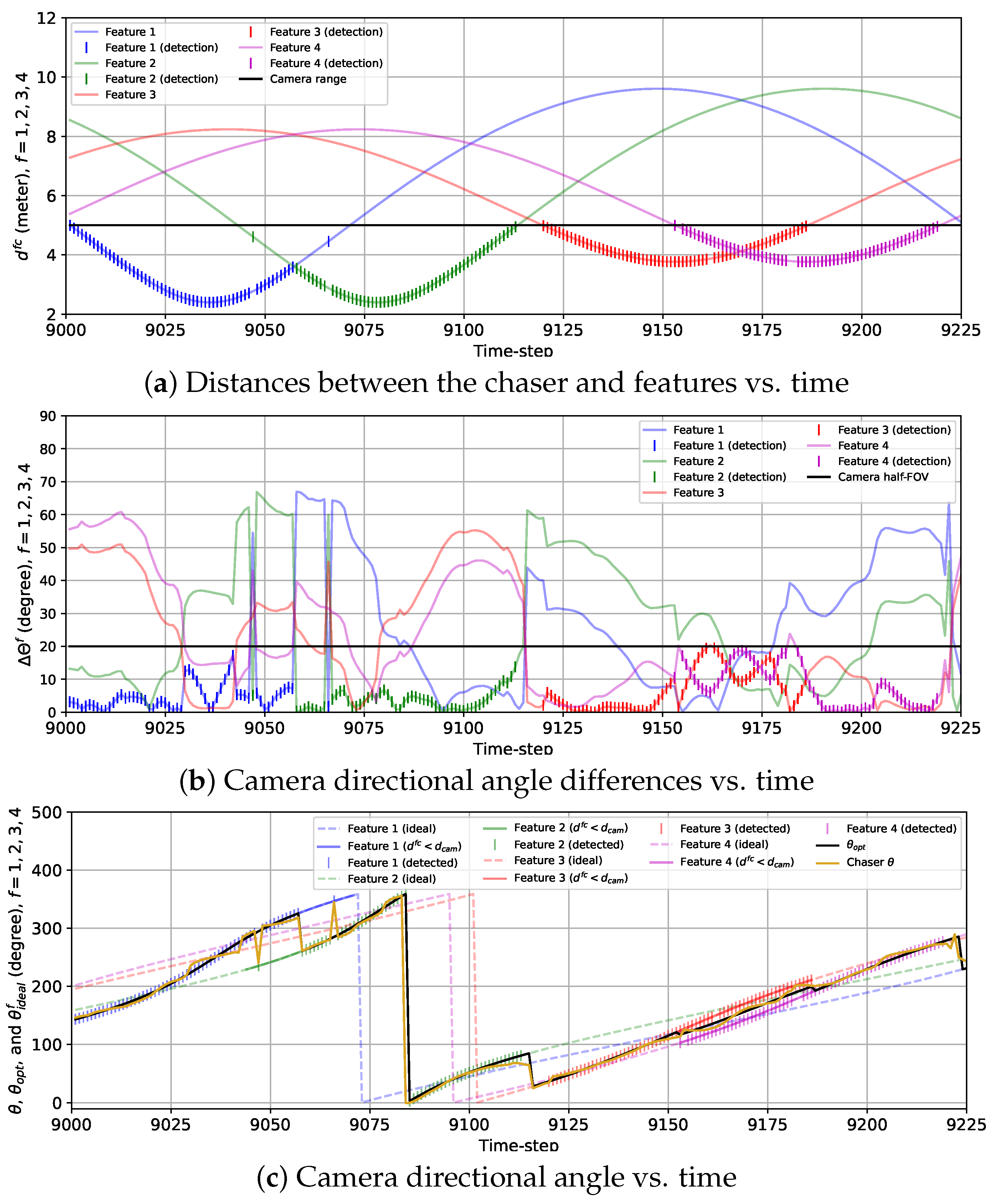

3.2.2. Simulation Results

3.3. Three-Dimensional Case

3.3.1. Simulation Setup

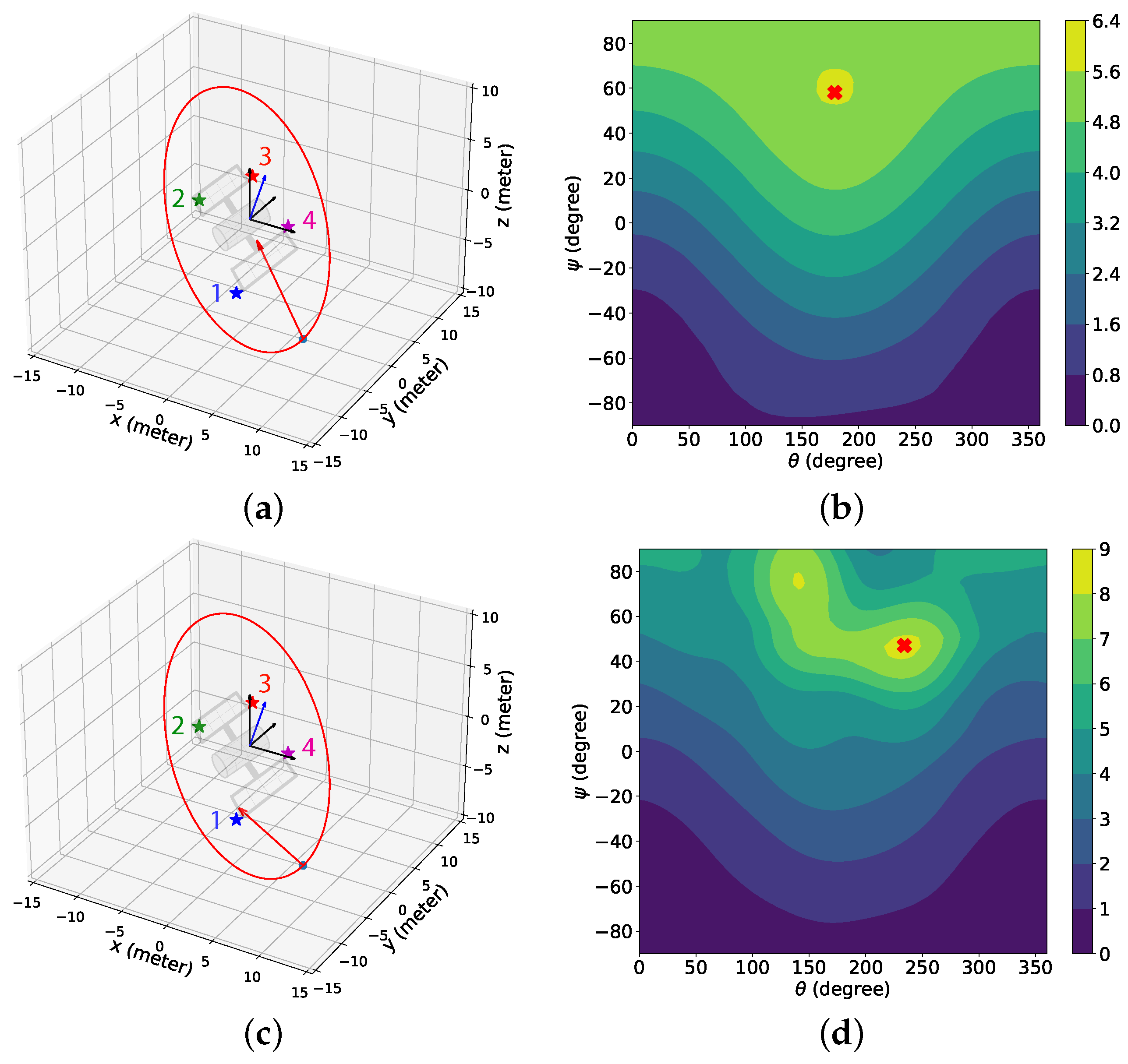

3.3.2. Simulation Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BO | Bayesian Optimization |
| GP | Gaussian Process |
| FFT | Fast Fourier Transform |
| SOCRAFT | Space Object Chaser-Resident Assessment Feature Tracking |
| FOV | Field of View |
| LIDAR | Light Detection and Ranging |
| LEO | Low Earth Orbit |
| RSO | Resident Space Object |
| NASA | National Aeronautics and Space Administration |
| ISAM | In-Space Servicing, Assembly, and Manufacturing |
| PE | Pursuit–Evasion |
| SDA | Space Domain Awareness |
| SLAM | Simultaneous Localization and Mapping |
| RANSAC | Random Sample Consensus |
| SIFT | Scale Invariant Feature Transform |
| ISS | International Space Station |
| ASLAM | Active Simultaneous Localization and Mapping |
| MPC | Model Predictive Control |
| SBMPO | Sampling-Based Model Predictive Optimization |
| EKF | Extended Kalman Filter |
| UKF | Unscented Kalman Filter |
| ML | Machine Learning |
| RL | Reinforcement Learning |

Nomenclature

| Symbol | Description |
|---|---|
| | Target Hill frame |
| , P | Angular velocity and rotational period of the target, respectively |
| , | Positions of the chaser and feature f in the target Hill frame |
| | Relative position of feature f with respect to the chaser in the target Hill frame |
| | Spatial components of the relative position of feature f |
| k | Time step index |
| | Time instance at time step k |
| , T | Total time steps for the initial data collection and the complete duration of the simulation, respectively |
| , , , , | Integral constants associated with the Clohessy–Wiltshire equations |
| x, y, z | Spatial components of the chaser position |
| , | Camera directional angle components |
| , | Optimal camera directional angle components |
| , | Ideal camera directional angle components for feature f given that the camera direction vector is exactly pointed toward feature f |
| | Unit vector in the direction of the camera orientation in the target Hill frame |
| | Chaser state |
| | Distance between the chaser and feature f |
| | Minimum distance between the chaser and feature f |
| | Camera range |
| | Camera field of view |
| | Angle between and |
| | White Gaussian measurement noise |
| | Standard deviation of the measurement noise |
| | Output from the measurement model |
| , | Data sets for storing and , respectively |
| | Data sets for storing the chaser states and the associated rewards |
| S | Maximum size of |
| M, | Number of total and detected features, respectively |
| , , r | Detection, total sinusoidal, and combined rewards, respectively |
| | Sinusoidal reward assigned to due to feature f |
| | Constant maximum reward assigned for each detected feature |
| | Constant weight for adjusting the priority of the detection reward |
| | Distance weight associated with feature f |
| | Radius of influence |
| | Gaussian Process model for predicting the ith position component of feature f |
| | Gaussian Process model for estimating the reward distribution over the chaser states |
| , K | Kernel functions associated with the GP-feature and GP-reward models, respectively |
| , | Smoothness hyper-parameters in the kernel functions |
| , | Length-scale hyper-parameters in the kernel functions |
| | Frequency with the highest magnitude from the FFT analysis |
| | Optimal action according to BO |
| | Action space |
| | The Upper Confidence Bound acquisition function |
| , | Mean and standard deviation of r from the GP-reward model |
| | Estimated value |
| | Measured value |

Appendix A. Derivation of the Expression of the Reverse Sigmoid Function in Equation (18)

Appendix B. Derivation of the Necessary Conditions for Creating a Natural Closed Relative Chaser Orbit of Circular Shape

- in Equation (2).

- , and satisfies the constraint .

- or degrees

- and


| Time Step | Noisy Feature Location | Feature Index |
|---|---|---|
| 1 | | |
| 2 | | |
| ⋮ | ⋮ | ⋮ |
| k | | |

| Time Step | Input: Chaser Location Components | Input: Camera Dir. | Output: Reward |
|---|---|---|---|
| 1 | | | |
| 2 | | | |
| ⋮ | ⋮ | ⋮ | ⋮ |
| k | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kabir, R.H.; Bai, X. Feature Detection of Non-Cooperative and Rotating Space Objects through Bayesian Optimization. Sensors 2024, 24, 4831. https://doi.org/10.3390/s24154831
