Abstract

Infrared images hold significant value in applications such as remote sensing and fire safety. However, infrared detectors often face the problem of high hardware costs, which limits their widespread use. Advancements in deep learning have spurred innovative approaches to image super-resolution (SR), but comparatively few efforts have been dedicated to the exploration of infrared images. To address this, we design the Residual Swin Transformer and Average Pooling Block (RSTAB) and propose the SwinAIR, which can effectively extract and fuse the diverse frequency features in infrared images and achieve superior SR reconstruction performance. By further integrating SwinAIR with U-Net, we propose the SwinAIR-GAN for real infrared image SR reconstruction. SwinAIR-GAN extends the degradation space to better simulate the degradation process of real infrared images. Additionally, it incorporates spectral normalization, dropout, and artifact discrimination loss to reduce the potential image artifacts. Qualitative and quantitative evaluations on various datasets confirm the effectiveness of our proposed method in reconstructing realistic textures and details of infrared images.

1. Introduction

Infrared imaging utilizes infrared detectors to capture images, differentiating target objects from the background based on the disparities found in radiated heat. This technique effectively addresses the constraints encountered in visible imaging, such as limited object penetration and varying weather conditions, thus playing a pivotal role in diverse fields including climate research [1], disaster warning [2], rescue [3] and resource exploration [4]. However, the resolution of infrared images depends on the quantity and size of the dedicated infrared detector array. The hardware limitation can restrict the imaging quality.

Image super-resolution (SR) can transform low-resolution (LR) images into high-resolution (HR) ones without enhancing optical detector sensitivity or developing complex optical imaging systems, significantly reducing costs. Recent advancements in deep learning have demonstrated exceptional performance in utilizing SR for image enhancement.

Early deep learning-based image SR methods (e.g., SRCNN [5], VDSR [6], and EDSR [7]) rely on convolutional neural networks (CNNs) to learn the mapping from LR images to HR images. However, since convolutional kernels can only perceive local information from the input image, deep networks must be stacked to gradually enlarge the receptive field. CNNs may therefore be limited in handling global information in images. Recently, transformer-based methods [8,9,10,11,12] have become widely popular in the field of image SR. They leverage a self-attention mechanism to effectively capture long-range dependencies and handle multi-scale features in images, thus achieving better SR performance. Despite this, the above methods rely only on custom degradation models, such as bicubic interpolation (BI), for paired training. Such models tend to overlook the complex degradation processes present in real-world images, resulting in overly smooth SR results.

Nowadays, blind-image SR networks (e.g., BSRGAN [13] and Real-ESRGAN [14]) have gained attention for their ability to handle real-world SR tasks. These methods generate training pairs using unknown degradations, which leads to more robust and generalizable models that better meet real-world requirements. However, these works primarily focus on visible images. Few studies have extended image SR to the field of infrared images.

To address the SR challenge in infrared images, we design the Residual Swin Transformer and Average Pooling Block (RSTAB) and construct the novel Infrared Image Super-Resolution model based on Swin Transformer [8] and Average Pooling [15], SwinAIR. SwinAIR combines the strengths of CNN and transformer, allowing for the sufficient extraction of features from infrared images. To achieve SR reconstruction of real infrared images, we further integrate the SwinAIR with the generative adversarial network (GAN) and build the SwinAIR-GAN, which can recover realistic textures and features matching the real infrared images. The primary contributions of this study are as follows:

- We explore a novel infrared image SR reconstruction model, SwinAIR. We introduce the Residual Swin Transformer and Average Pooling Block into the deep feature extraction module of SwinAIR. This configuration effectively extracts both low- and high-frequency infrared features while concurrently fusing them. Comparative results with existing methods demonstrate that our model exhibits superior performance in the SR reconstruction of infrared images.

- We combine SwinAIR with the U-Net [16] to construct SwinAIR-GAN, further exploring the problem of SR reconstruction for real infrared images. SwinAIR-GAN utilizes SwinAIR as the generator network and employs U-Net as the discriminator network. Comparative results with similar methods demonstrate that our model can generate infrared images with better visual effects and more realistic features and details.

- We incorporate spectral normalization, dropout, and artifact discrimination loss to minimize possible artifacts during the restoration process of real infrared images and enhance the generalization ability of SwinAIR-GAN. We also expand the degradation space of the degradation model to emulate the degradation process of real infrared images more accurately. These improvements enable the generation of infrared images that closely align with the textures and details of real-world images while avoiding over-smoothing.

- We establish an infrared data acquisition system that can simultaneously capture LR and HR infrared images of corresponding scenes, addressing the issue of lacking reference images in the real infrared image SR reconstruction task.

The rest of this paper is structured as follows. Section 2 provides a brief overview of related studies, and Section 3 offers a detailed description of the proposed network. In Section 4, we validate the effectiveness of our network by testing it on various datasets and conducting ablation experiments. Finally, the conclusions are presented in Section 5.

2. Related Works

Infrared image SR methods are derived from visible image SR methods. In this section, we briefly review the image SR reconstruction research status related to our work.

2.1. Traditional Infrared Image Super-Resolution Reconstruction Methods

Traditional infrared image SR methods, similar to visible image SR methods, can be categorized into frequency domain-based, dictionary-based, and other methods. Frequency domain-based methods decompose the infrared image into the spatial domain and the frequency domain [17], processing the image separately in each domain. For example, Choi et al. [18] distinguished the edge pixels of infrared images and utilized visible images as guidance to enhance the high-frequency information of infrared images. Mao et al. [19] transformed the infrared image SR problem into a sparse signal reconstruction problem based on differential operations, significantly enhancing the algorithm’s speed. Dictionary-based methods [20,21,22,23,24,25] focus on constructing the mapping relationship between LR and HR image patches. By leveraging the relationships between image patches, these methods retain the pattern information of LR images in HR images. This type of method has garnered researchers’ attention for its interpretability. Other traditional methods include iterative methods [26,27], regularization methods [28], and so on.

With the advancement of deep learning technology, transformer and GAN technologies have begun to be widely applied in the field of image SR reconstruction, demonstrating significant advantages.

2.2. Transformer-Based Image Super-Resolution Reconstruction Methods

Transformer was initially proposed by Vaswani et al. [29] to address sequence modeling in natural language processing tasks. The self-attention mechanism allows the transformer to effectively capture global dependencies. Additionally, its parallel computing capability accelerates training and enhances efficiency. With the development of vision [30,31], detection [32,33], and video-vision [34,35] transformers, superior results have been achieved by transformers in various visual tasks.

In the field of image SR reconstruction, there are mainly two types of transformer-based frameworks. The first type utilizes the transformer structure entirely, such as the end-to-end network proposed by Chen et al. [9]. However, this framework may lack adaptability to biases in some cases. The second type combines the transformer with a CNN, leveraging the strengths of the CNN in capturing local features and of the transformer in modeling global dependency relationships. This integration enables better capture of both local details and global structures in the image. For example, Liu et al. [8] introduced the Swin Transformer backbone, which replaces the standard multi-head self-attention with a shifted-window multi-head self-attention for dense hierarchical feature map prediction, enabling efficient interactions among non-overlapping local windows while addressing the challenges posed by larger-scale images and huge training datasets. Liang et al. [10] built upon this idea and proposed SwinIR, which has demonstrated impressive performance in various tasks, including image SR and dehazing. Subsequent studies [11,12] have built on this foundation and reported better performance. Cao et al. [36] took SwinIR as the architecture and proposed the CFMB-T model for SR reconstruction of infrared remote sensing images. Yi et al. [37] further utilized a hybrid convolution–transformer network for feature extraction, and their HCTIR model made notable progress in infrared image deblurring.

Despite the promising performance of transformer-based methods in image SR reconstruction, strategies that combine the transformer with CNN for deep feature extraction are still limited. This limitation often results in networks overlooking high-frequency information present in images. The problem is particularly pronounced when dealing with infrared images that involve blurring and a lack of high-frequency details. To address this issue, we propose SwinAIR, which better integrates the transformer with CNN to effectively capture both low- and high-frequency information, thereby enhancing the SR reconstruction capability for infrared images.

2.3. GAN-Based Image Super-Resolution Reconstruction Methods

The GAN was proposed by Goodfellow et al. in 2014 [38]. It consists of a generator network and a discriminator network that are trained adversarially. The generator network progressively creates more realistic samples, while the discriminator network continuously refines its ability to differentiate between real and generated images. Training ideally concludes when the discriminator network can no longer reliably distinguish between the two, at which point the generator produces high-quality samples.

Ledig et al. [39] first applied the GAN to image SR reconstruction and introduced the SRGAN. The SRGAN incorporates perceptual loss, which provides more detailed and lifelike SR images. Subsequently, Yan et al. [40] optimized the image quality assessment approach and used it to improve the loss function of SRGAN, further enhancing its performance. Wang et al. [41] introduced the ESRGAN model in 2018, which incorporates a residual dense block into its generator network and employs a relative discriminator network. By enhancing perceptual loss and introducing interpolation, ESRGAN achieves exceptional perceptual reconstruction effects. Based on the ESRGAN, several newer networks have been developed, including BSRGAN [13], RFB-ESRGAN [42], and Real-ESRGAN [14].

Liu et al. [43] introduced the natural image gradient prior. They used visible images from corresponding scenes as guidance to generate infrared images with improved subjective visual quality. Huang et al. [44] proposed HetSRWGAN, which incorporates heterogeneous convolution and adversarial training. The reconstructed infrared images using this method achieve higher objective evaluation metrics. They also introduced the lightweight PSGAN [45] using a multi-stage transfer learning strategy. Liu et al. [46] designed a generator network with recursive attention modules, significantly improving the SR quality of vehicle infrared images. Lee et al. [47] proposed the Style Transfer Super-Resolution Generative Adversarial Network, STSRGAN, enhancing the quality of LR infrared images containing very small targets.

Although the aforementioned GAN-based methods have shown considerable potential, their training processes are often unstable and can produce unintended artifacts. To generate more realistic infrared images, we employ the spectral normalization-based U-Net [16] as the discriminator network and add the artifact discrimination loss function in our SwinAIR-GAN.

3. Proposed Method

In this section, we will comprehensively introduce the SwinAIR and SwinAIR-GAN along with their technical details.

3.1. SwinAIR

3.1.1. Overall Structure

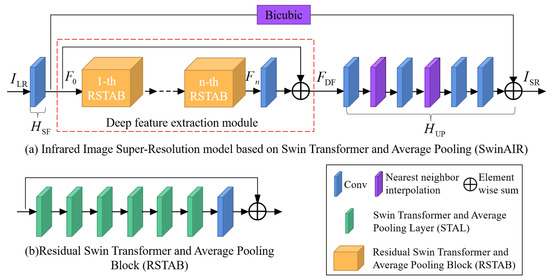

The structure of SwinAIR is displayed in Figure 1. Infrared images generally have lower resolution and contain more low-frequency information than visible images. Therefore, the input LR infrared image first undergoes shallow feature extraction and is passed directly to the end of the deep feature extraction network. This helps to better preserve its low-frequency information, thereby improving the performance of SwinAIR.

Figure 1.

Structure of our proposed Infrared Image Super-Resolution model based on Swin Transformer and Average Pooling, SwinAIR. Firstly, the input LR infrared image $I_{LR}$ passes through the shallow feature extraction module to extract the shallow feature map $F_{SF}$; then, $F_{SF}$ is further extracted and refined by the deep feature extraction module. We obtain $F_n$ after $n$ RSTABs. After $F_n$ undergoes convolutional operations, it is further combined with $F_{SF}$ through a residual connection to obtain the deep feature map $F_{DF}$. Finally, $F_{DF}$ is input into the upsampling module to generate the output SR infrared image $I_{SR}$. The interpolation operation here is bicubic.

Concurrently, the shallow features are input to the deep feature extraction module to obtain the deep features. We adopt the hierarchical design of the deep feature extraction module from the Swin Transformer [8]. This structure allows for the extraction and integration of features at different scales in the infrared image, helping the network to better learn critical information and reduce noise.

Ultimately, the shallow features and deep features are merged and fed into the upsampling module to generate the SR infrared image.

In the shallow feature extraction module, the LR infrared image is processed by a 3 × 3 convolutional layer to extract shallow feature information as follows:

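$$F_{SF} = H_{SF}(I_{LR}),$$

where $I_{LR} \in \mathbb{R}^{H \times W \times C_{in}}$ denotes the LR infrared image, and $H$, $W$, and $C_{in}$ indicate the height, width, and channel number of the LR infrared image, respectively. $H_{SF}(\cdot)$ represents the shallow feature extraction module, and $F_{SF}$ represents the shallow feature map.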

In the deep feature extraction module, multiple stacked RSTABs (as shown in Figure 1b) and a 3 × 3 convolutional layer are employed to further extract deep features. Residual connections are also used for feature aggregation to obtain deep features, and this process can be simplified as

$$F_{DF} = H_{DF}(F_{SF}),$$

where $H_{DF}(\cdot)$ corresponds to the deep feature extraction module applied to the shallow feature map $F_{SF}$, and $F_{DF}$ represents the deep feature map. The deep feature extraction module not only expands the receptive field by stacking convolutional layers but also combines the advantages of both transformer and CNN to effectively extract low- and high-frequency image information. With local and global residual learning, the problem of gradient degradation in deep networks is mitigated, significantly reducing the parameters and overall training time.

Finally, the feature map obtained from the deep feature extraction module is reconstructed layer by layer according to a specified upsampling factor that follows the order of the upsampling module (as shown in Figure 1a). Simultaneously, the upsampled LR infrared image is moved to the end of the upsampling module, where it is added to the reconstructed feature map to produce the final SR infrared image:

where represents the upsampling module, is the SR infrared image, and , , , and indicate the height, width, and channel number of the SR infrared image, respectively (similar to ). The specific steps of the upsampling reconstruction process can be expressed as

where denotes a 3 × 3 convolutional layer, represents the nearest neighbor interpolation, denotes the bicubic interpolation, represents the reconstructed feature map and signifies the dropout operation. Dropout is a regularization technique originally proposed to address overfitting issues in classification networks. A recent study has demonstrated that dropout can enhance the generalizability of SR networks [48]. Therefore, we apply dropout before the last convolutional layer in the upsampling module in SwinAIR with a probability of 0.5. The effectiveness of this approach is confirmed by ablation experiments in Section 4.4.2.

3.1.2. Deep Feature Extraction Module

Drawing inspiration from previous work [8,49], we develop the deep feature extraction module for SwinAIR. It is mainly composed of several RSTABs, which combine the strengths of transformer and CNN to effectively extract low- and high-frequency information from infrared images.

Specifically, the deep feature extraction module consists of $n$ RSTABs and a 3 × 3 convolutional layer with residual connections. The intermediate feature map obtained from the $i$-th RSTAB, $F_i$, and the final output deep feature map, $F_{DF}$, are given as follows:

$$F_i = H_{RSTAB_i}(F_{i-1}), \quad i = 1, 2, \ldots, n,$$

$$F_{DF} = H_{conv}(F_n) + F_0,$$

where $F_0$ denotes the shallow feature map $F_{SF}$, $H_{RSTAB_i}(\cdot)$ represents the $i$-th RSTAB, and $H_{conv}(\cdot)$ represents the final 3 × 3 convolutional layer. By introducing a convolutional layer at the end of the deep feature extraction module, the inductive bias of convolutional operations is incorporated into the transformer, providing a more robust foundation for the subsequent aggregation of shallow and deep features [10].

As illustrated in Figure 1b, each RSTAB is composed of six Swin Transformers and Average Pooling Layers (STALs) and a 3 × 3 convolutional layer with the residual connection. This allows local features to be transmitted and fused across different layers, enabling the network to deeply learn the mapping relationship between LR and HR infrared images, thereby accelerating the network’s learning and convergence speed.

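For the given input feature map of the $i$-th RSTAB, $F_{i,0} = F_{i-1}$, the intermediate output feature map $F_i$ can be further defined as follows:

$$F_{i,j} = H_{STAL_{i,j}}(F_{i,j-1}), \quad j = 1, 2, \ldots, 6,$$

$$F_i = H_{conv_i}(F_{i,6}) + F_{i,0},$$

where $H_{STAL_{i,j}}(\cdot)$ denotes the $j$-th STAL in the $i$-th RSTAB, and $F_{i,j}$ represents the feature map output by the $j$-th STAL of the $i$-th RSTAB. $H_{conv_i}(\cdot)$ represents the final 3 × 3 convolutional layer in the $i$-th RSTAB.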
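The nested residual wiring described above can be sketched as follows. This is a minimal structural sketch rather than the authors' implementation: `stal` and `conv3x3` are hypothetical placeholders (a scaled identity and an identity) standing in for the real layers, and the number of RSTABs (`num_rstabs=4`) is an assumed value, since the text only calls it n. Only the placement of the local and global residual connections follows the description.

```python
import numpy as np

def stal(x):
    # Hypothetical stand-in for one STAL; the real layer combines HFEL and STL outputs.
    return 0.5 * x

def conv3x3(x):
    # Hypothetical stand-in for a shape-preserving 3x3 convolution.
    return x

def rstab(x, num_stals=6):
    # One RSTAB: six STALs, a convolution, and a local residual connection.
    y = x
    for _ in range(num_stals):
        y = stal(y)
    return conv3x3(y) + x

def deep_feature_extraction(f_sf, num_rstabs=4):
    # n RSTABs, a convolution, and a global residual connection
    # back to the shallow feature map.
    f = f_sf
    for _ in range(num_rstabs):
        f = rstab(f)
    return conv3x3(f) + f_sf

f_sf = np.ones((8, 8, 4))            # toy shallow feature map (H, W, C)
f_df = deep_feature_extraction(f_sf)
```

Because every block adds its input back to its output, the shallow features reach the end of the module unchanged even when the inner layers attenuate the signal, which is the property the text relies on to preserve low-frequency information.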

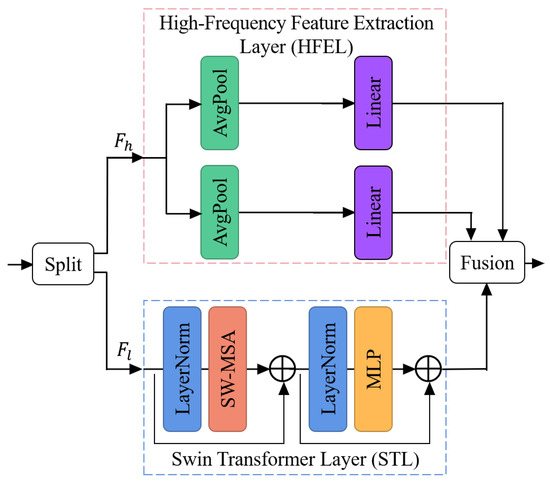

The internal structure of STAL is shown in Figure 2. Each STAL has two sections: the High-Frequency Feature Extraction Layer (HFEL), which consists of two parallel average pooling and fully connected layers, and the Swin Transformer Layer (STL) [8]. The use of channel separation strategies allows the network to independently process low- and high-frequency information in infrared images, mitigating interference during the learning of different structural features. This approach also reduces computational complexity and accelerates the convergence of the network.

Figure 2.

Structure of the Swin Transformer and Average Pooling Layer (STAL). There are two ways to combine CNN with the transformer: serial or parallel. The serial method means that each layer can process either low- or high-frequency information but not both. Therefore, to allow each layer to process both types of information simultaneously, a parallel structure with channel separation is used to integrate the CNN and transformer. The feature map is initially divided into and , which are then separately fed into the High-Frequency Feature Extraction Layer (HFEL) and the Swin Transformer Layer (STL). In STL, SW-MSA and MLP represent the self-attention module based on the shift-window mechanism and the multi-layer perceptron module, respectively.

Specifically, for the $j$-th STAL of the $i$-th RSTAB, we assume the input feature map $X \in \mathbb{R}^{H \times W \times C}$, where $H$, $W$, and $C$ indicate the height, width, and channel number of $X$. $X$ is partitioned channel-wise into $X_h \in \mathbb{R}^{H \times W \times C_h}$ and $X_l \in \mathbb{R}^{H \times W \times C_l}$ ($C_h + C_l = C$); then, $X_h$ and $X_l$ are independently fed to the HFEL and the STL.

With the sensitivity of the average pooling layers and the ability of the fully connected layers to capture details [49], the HFEL comprises two parallel paths to perform high-frequency information extraction, each containing an average pooling layer and a fully connected layer. $X_h$ is divided into two channel-wise maps $X_{h1}$ and $X_{h2}$. $X_{h1}$ and $X_{h2}$ are separately processed in the parallel paths to generate their respective outputs $Y_{h1}$ and $Y_{h2}$, as follows:

$$Y_{h1} = FC(AP(X_{h1})), \quad Y_{h2} = FC(AP(X_{h2})),$$

where $AP(\cdot)$ signifies the average pooling layer and $FC(\cdot)$ symbolizes the fully connected layer.

To decrease the computational burden associated with the standard transformer for image processing, we use STL for low-frequency feature extraction. As shown in Figure 2, STL primarily consists of the self-attention module based on the shift-window mechanism (SW-MSA) and the multi-layer perceptron module (MLP). SW-MSA calculates multi-head self-attention within local windows of the image and shifts the windows between layers. This process captures the correlations between different local regions of the image, thereby extracting local structures and texture information. MLP combines non-linear transformations to better capture the semantic information of the image. For the STL input $X_l$, the processing can be described as shown below:

$$\hat{X}_l = H_{SW\text{-}MSA}(LN(X_l)) + X_l,$$

$$Y_l = H_{MLP}(LN(\hat{X}_l)) + \hat{X}_l,$$

where $Y_l$ represents the feature map after processing by the STL, and $\hat{X}_l$ is the intermediate output of the attention branch. $LN(\cdot)$ denotes the layer normalization operation, $H_{SW\text{-}MSA}(\cdot)$ represents the SW-MSA module, and $H_{MLP}(\cdot)$ represents the MLP module.
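The shift-window mechanism can be illustrated with a small NumPy sketch. It shows only the window partitioning and the cyclic shift in the style of the Swin Transformer [8]; the attention computation and the attention mask for wrapped-around regions are omitted. The window size of 4 and shift of 2 are illustrative assumptions, not the settings used in SwinAIR.

```python
import numpy as np

def window_partition(x, window_size):
    # Split an (H, W, C) map into non-overlapping
    # (window_size, window_size, C) windows.
    h, w, c = x.shape
    x = x.reshape(h // window_size, window_size, w // window_size, window_size, c)
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, window_size, window_size, c)

def shifted_window_partition(x, window_size, shift):
    # Cyclically shift the map before partitioning so that the new windows
    # straddle the borders of the unshifted ones.
    shifted = np.roll(x, shift=(-shift, -shift), axis=(0, 1))
    return window_partition(shifted, window_size)

x = np.arange(8 * 8).reshape(8, 8, 1)      # toy feature map, one channel
regular = window_partition(x, window_size=4)
shifted = shifted_window_partition(x, window_size=4, shift=2)
```

Self-attention is computed independently inside each window, so alternating between the regular and the shifted partition lets information flow across window borders without the cost of global attention.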

Finally, the low-frequency information feature map after processing by the STL, $Y_l$, and the high-frequency information feature maps after processing by the HFEL, $Y_{h1}$ and $Y_{h2}$, are fused along the channel dimension to get the STAL output $Y$, which can be represented as

$$Y = H_{fuse}(Y_l, Y_{h1}, Y_{h2}),$$

where $H_{fuse}(\cdot)$ represents the fusion operation.
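The channel-separated, parallel structure of a STAL can be sketched as below. Several details are assumptions made for illustration only: the pooling is 3 × 3 with stride 1 and edge padding, pooling is applied before the fully connected layer, the fusion is a plain channel concatenation, and `stl_placeholder` is a hypothetical identity standing in for the Swin Transformer Layer. The channel counts are arbitrary.

```python
import numpy as np

def avg_pool3x3(x):
    # 3x3 average pooling, stride 1, edge padding (spatial size is kept).
    h, w, _ = x.shape
    p = np.pad(x, ((1, 1), (1, 1), (0, 0)), mode="edge")
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0

def fully_connected(x, weight):
    # Per-pixel linear map over the channel dimension.
    return x @ weight

def stl_placeholder(x):
    # Hypothetical stand-in for the Swin Transformer Layer.
    return x

def stal(x, c_h, w1, w2):
    x_h, x_l = x[..., :c_h], x[..., c_h:]        # channel-wise separation
    x_h1, x_h2 = np.split(x_h, 2, axis=-1)       # two parallel HFEL paths
    y_h1 = fully_connected(avg_pool3x3(x_h1), w1)
    y_h2 = fully_connected(avg_pool3x3(x_h2), w2)
    y_l = stl_placeholder(x_l)                   # low-frequency branch
    return np.concatenate([y_l, y_h1, y_h2], axis=-1)

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 12))
w1 = rng.standard_normal((2, 2))
w2 = rng.standard_normal((2, 2))
y = stal(x, c_h=4, w1=w1, w2=w2)
```

Because the two branches see disjoint channel groups, each layer handles both frequency ranges at once while the attention branch operates on fewer channels than the full feature map.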

3.1.3. Loss Function

SwinAIR uses L1 loss as the loss function. The L1 loss calculates the pixel-wise differences between SR and HR images as follows:

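$$L_1 = \frac{1}{hwc} \sum_{i=1}^{h} \sum_{j=1}^{w} \sum_{k=1}^{c} \left| I_{SR}(i, j, k) - I_{HR}(i, j, k) \right|,$$

where $h$, $w$, and $c$ denote the height, width, and channel number of the image, respectively. $I_{SR}(i, j, k)$ and $I_{HR}(i, j, k)$ represent the pixel values of the SR and HR images at the spatial location $(i, j, k)$, respectively.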
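As a small worked example, the L1 loss can be computed as the mean absolute difference over all pixels and channels. The sketch assumes that the loss is averaged over height, width, and channels; the array values are arbitrary.

```python
import numpy as np

def l1_loss(sr, hr):
    # Mean absolute pixel difference over height, width, and channels.
    sr = np.asarray(sr, dtype=np.float64)
    hr = np.asarray(hr, dtype=np.float64)
    return np.abs(sr - hr).mean()

# Toy 2 x 2 single-channel images with values in [0, 1].
sr = np.array([[[0.2], [0.4]], [[0.6], [0.8]]])
hr = np.array([[[0.0], [0.5]], [[0.5], [1.0]]])
loss = l1_loss(sr, hr)   # absolute differences 0.2, 0.1, 0.1, 0.2 -> mean 0.15
```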

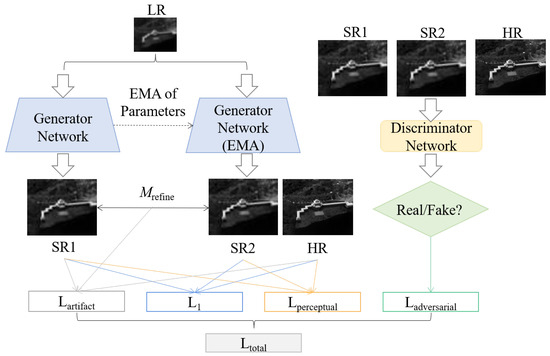

3.2. SwinAIR-GAN

To generate infrared images that more closely align with real physical characteristics, we further design SwinAIR-GAN based on SwinAIR. The proposed SwinAIR-GAN structure for real infrared image SR is depicted in Figure 3. We use SwinAIR as the generator network and a spectral normalization-based U-Net as the discriminator network.

Figure 3.

SwinAIR-GAN method. The generator network generates the SR infrared feature map SR1 from the LR infrared image and applies an exponential moving average (EMA) to the parameters to obtain the infrared feature map SR2. Various loss functions are then computed for SR1, SR2, and the HR infrared image. Finally, the corresponding discriminator network judges the authenticity of the generated infrared image.
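The EMA step mentioned in the caption can be sketched as a running average of the generator parameters. The dictionary of scalar parameters and the decay values below are illustrative assumptions; the decay actually used for SwinAIR-GAN is not stated here.

```python
def ema_update(ema_params, params, decay=0.999):
    # Blend the running average with the latest generator parameters.
    return {name: decay * ema_params[name] + (1.0 - decay) * value
            for name, value in params.items()}

# Toy example: one scalar "parameter" that stays at 1.0 during training.
ema = {"w": 0.0}
for step in range(3):
    ema = ema_update(ema, {"w": 1.0}, decay=0.5)
# After three updates: 0.5, 0.75, 0.875
```

The averaged parameters change more slowly than the raw ones, so the output generated from them (SR2 in the caption) tends to be more stable than the output of the current generator (SR1).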

3.2.1. Generator Network

The generator network utilizes our proposed SwinAIR, which effectively extracts and reconstructs information from infrared images. Detailed information about SwinAIR can be found in Section 3.1.

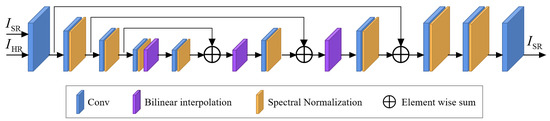

3.2.2. Discriminator Network

The discriminator network (as shown in Figure 4) employs a U-Net architecture. However, the complex degradation space and structure can increase training instability. To mitigate this risk, spectral normalization [50] is used to stabilize training. This approach can also alleviate the introduction of unnecessary artifacts during GAN training, thereby achieving a balance between local image detail enhancement and artifact suppression.
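To make the idea of spectral normalization [50] concrete, the sketch below rescales a convolution weight so that its largest singular value is approximately 1, using power iteration. It is a standalone NumPy illustration, not the discriminator code: the weight shape, iteration count, and seeds are assumptions, and deep learning frameworks typically run a single power-iteration step per training update instead of many.

```python
import numpy as np

def spectral_normalize(weight, num_iters=200, seed=0):
    # Estimate the largest singular value by power iteration, then divide by it.
    w = weight.reshape(weight.shape[0], -1)
    u = np.random.default_rng(seed).standard_normal(w.shape[0])
    for _ in range(num_iters):
        v = w.T @ u
        v /= np.linalg.norm(v) + 1e-12
        u = w @ v
        u /= np.linalg.norm(u) + 1e-12
    sigma = u @ w @ v
    return weight / sigma

rng = np.random.default_rng(1)
kernel = rng.standard_normal((16, 8, 3, 3))   # (out, in, kh, kw) convolution weight
normalized = spectral_normalize(kernel)
top_singular_value = np.linalg.svd(normalized.reshape(16, -1), compute_uv=False)[0]
```

Bounding the largest singular value of each layer limits how sharply the discriminator output can change with its input, which is the mechanism behind the stabilizing effect described above.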

Figure 4.

Structure of the discriminator network. We adopt a U-Net structure based on spectral normalization.

The discriminator network primarily comprises 10 convolutional layers, 8 spectral normalization layers, and 3 bilinear interpolation layers. Each of the first 9 convolutional layers is followed by a Leaky ReLU activation function. Table 1 provides the detailed information about each convolutional layer in the discriminator network.

Table 1.

Detailed information about the convolutional layers in the discriminator network.

3.2.3. Loss Function

Appropriate loss functions are crucial to quantifying the differences between SR and HR images and optimizing network training. Conventional networks use a combination of per-pixel, perceptual, and adversarial losses in real-image SR tasks. To enhance image details and eliminate artifacts, the SwinAIR-GAN incorporates additional artifact discrimination loss. Below, we will discuss these different loss functions in more detail.

L1 Loss. The information about L1 loss can be found in Section 3.1.3.

Perceptual Loss. In contrast to L1 loss, perceptual loss aims to make SR infrared images more visually similar to HR infrared images rather than just minimizing pixel differences. This process is expressed as follows:

where , , and denote the height, width, and channel numbers of the feature map, respectively. and represent the pixel values of the SR and HR feature maps extracted from the layer of the pretrained network at the spatial location , respectively.

Adversarial Loss. Adversarial loss is primarily used to generate realistic SR images. The adversarial losses of SwinAIR-GAN are represented as follows:

where and represent the adversarial losses of the generator network and discriminator network, respectively. and denote the probabilities the SR and HR images are trained to be classified as real images, respectively.

Artifact Discrimination Loss. Artifact discrimination loss is used to minimize SR image artifacts [51] as follows:

where refers to the SR image obtained through the generator network, and represents the refined feature map.

Overall loss function. Taking the aforementioned losses into account, the comprehensive loss function of the SwinAIR-GAN is as follows:

where , and represent the proportionate contributions of each loss type (i.e., , perceptual, adversarial and artifact discrimination types) to the comprehensive loss function. Based on experience, we set , , and .

3.2.4. Degradation Model

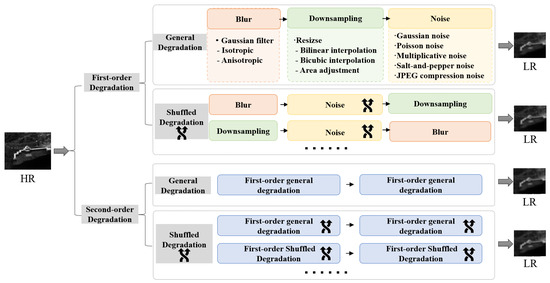

Classical SR networks commonly employ bicubic downsampling to simulate image degradation. However, this approach has difficulty dealing with the complex and unknown degradations in real infrared images, where issues such as blurring and ghosting are commonplace. To tackle this problem, we extend the degradation space (as shown in Figure 5) to more effectively simulate the degradation process of real infrared images.

Figure 5.

The degradation model. We expand the degradation space and consider first- and second-order degradation processes to emulate the real degradation of infrared images more accurately.

Typically, image degradation can be represented by a general model, such as the following:

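$$I_{LR} = (I_{HR} \otimes k)\downarrow_s + n_\sigma,$$

where $I_{HR}$ and $I_{LR}$ are the HR image and its degraded LR counterpart, $k$ denotes the blur kernel, $\otimes$ denotes the convolution operation, $\downarrow$ denotes the down-sampling operation, $s$ is the scaling factor, and $n_\sigma$ represents noise (typically additive white Gaussian) with a standard deviation of $\sigma$.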

To expand the degradation space, we consider both first- and second-order degradations. For the first-order degradation process, general and shuffled degradations are applied. The general degradation refers to the sequential application of blur, downsampling, and noise in sets of two or three. The shuffled degradation involves the random ordering of the degradation steps. For the second-order degradation process, the same two degradation types are considered. The general degradation comprises two first-order degradation processes, while the shuffled degradation involves randomly shuffling blur, downsampling, and noise for each of the two first-order degradation processes.
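The difference between general and shuffled, first- and second-order degradation can be sketched as follows. The three operations are deliberately simplified stand-ins chosen for illustration (a 3 × 3 box blur, plain decimation by 2, and additive Gaussian noise); they are assumptions, not the kernels, resizing methods, or noise models of the proposed degradation model, and all three steps are always applied here.

```python
import numpy as np

def blur(img):
    # 3x3 box blur as a simple stand-in for the Gaussian blur kernels.
    h, w = img.shape
    padded = np.pad(img, 1, mode="edge")
    return sum(padded[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0

def downsample(img, scale=2):
    # Plain decimation as a stand-in for interpolation-based resizing.
    return img[::scale, ::scale]

def make_noise(rng, sigma=0.01):
    # Additive Gaussian noise as a stand-in for the full noise model.
    return lambda img: img + rng.normal(0.0, sigma, img.shape)

def first_order(img, rng, shuffled):
    steps = [blur, downsample, make_noise(rng)]
    # General degradation keeps the fixed order; shuffled degradation permutes it.
    order = rng.permutation(len(steps)) if shuffled else range(len(steps))
    for index in order:
        img = steps[index](img)
    return img

def second_order(img, rng, shuffled):
    # Two first-order passes, each with its own (possibly shuffled) ordering.
    return first_order(first_order(img, rng, shuffled), rng, shuffled)

rng = np.random.default_rng(0)
hr = rng.random((32, 32))
lr_general = first_order(hr, rng, shuffled=False)    # 32x32 -> 16x16
lr_shuffled = second_order(hr, rng, shuffled=True)   # 32x32 -> 8x8
```

Shuffling matters because the operations do not commute: noise added before blurring is smoothed, whereas noise added after downsampling stays sharp, so different orderings yield visibly different LR images from the same HR input.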

In the degradation model, blur, noise, and downsampling are the three key factors affecting image degradation. By expanding the degradation space of these factors, true degradation processes are approximated to improve the applicability and generalizability of the network. Our proposed degradation model considers these key factors that affect the degradation of infrared images as follows:

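Blur. In our model, isotropic and anisotropic Gaussian blur kernels are used. A Gaussian blur kernel $k$ with a kernel size of $2t + 1$ can be represented as follows:

$$k(i, j) = \frac{1}{N} \exp\left(-\frac{1}{2} C^{T} \Sigma^{-1} C\right), \quad C = [i, j]^{T},$$

where $\Sigma$ denotes the covariance matrix, $C$ represents the spatial coordinates, $N$ is a normalization constant, $i, j \in [-t, t]$, and each element $k(i, j)$ is sampled from a Gaussian distribution. $\Sigma$ can be further expressed as

$$\Sigma = R \begin{bmatrix} \sigma_1^2 & 0 \\ 0 & \sigma_2^2 \end{bmatrix} R^{T}, \quad R = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix},$$

where $\sigma_1$ and $\sigma_2$ represent the standard deviations along the two main axes (their squares are the eigenvalues of the covariance matrix), and $\theta$ is the rotation angle. When $\sigma_1 = \sigma_2$, $k$ is an isotropic Gaussian blur kernel. Otherwise, $k$ is an anisotropic Gaussian blur kernel.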
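A Gaussian blur kernel of this form can be generated with a few lines of NumPy. The sketch follows the standard construction (covariance built from a rotation and two axis standard deviations, kernel normalized to sum to 1); the half-size `t`, the standard deviations, and the angle below are arbitrary illustrative values, not the sampling ranges of the degradation model.

```python
import numpy as np

def gaussian_kernel(t, sigma1, sigma2, theta):
    # Build a (2t+1) x (2t+1) kernel from the covariance R diag(s1^2, s2^2) R^T.
    rot = np.array([[np.cos(theta), -np.sin(theta)],
                    [np.sin(theta),  np.cos(theta)]])
    cov = rot @ np.diag([sigma1 ** 2, sigma2 ** 2]) @ rot.T
    inv_cov = np.linalg.inv(cov)
    coords = np.arange(-t, t + 1)
    i, j = np.meshgrid(coords, coords, indexing="ij")
    c = np.stack([i, j], axis=-1).astype(np.float64)     # (2t+1, 2t+1, 2)
    exponent = -0.5 * np.einsum("...a,ab,...b->...", c, inv_cov, c)
    kernel = np.exp(exponent)
    return kernel / kernel.sum()    # normalization so the kernel sums to 1

iso = gaussian_kernel(t=3, sigma1=1.5, sigma2=1.5, theta=0.0)
aniso = gaussian_kernel(t=3, sigma1=3.0, sigma2=1.0, theta=np.pi / 4)
```

With equal standard deviations the rotation has no effect and the kernel is radially symmetric; with unequal ones the kernel is elongated along the rotated axis, which is what allows it to mimic directional blur.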

The Gaussian blur kernel configurations are hyperparameters. Different settings affect the blurring of infrared images during degradation and the complexity of the model. Based on previous works (e.g., BSRGAN [13] and Real-ESRGAN [14]) and the characteristics of infrared images, we experiment with various settings combinations and ultimately determine the optimal settings.

For the kernel size, we uniformly sample from to obtain varying degrees of blurring. For the isotropic Gaussian kernel, we uniformly sample the standard deviation from . For the anisotropic Gaussian kernel, we uniformly sample the standard deviation from and with equal probability, and we uniformly sample the rotation angle from to simulate motion blurring at different angles.

By randomly using these Gaussian kernels with different configurations during the degradation, we achieve a variety of complex blurring effects that closely simulate the degradation process of real infrared images, thus effectively optimizing the performance of SwinAIR-GAN.

Noise. The noise in infrared images can usually be classified into two categories: random noise and fixed pattern noise. Random noise includes two types of stationary random white noise: Gaussian noise and Poisson noise. Fixed pattern noise is primarily characterized by image non-uniformity and blind elements. Non-uniform and scanning-array blind elements contribute to multiplicative noise, whereas staring-array blind elements result in salt-and-pepper noise. Therefore, we consider four types of noise for processing: Gaussian noise, Poisson noise, multiplicative noise, and salt-and-pepper noise. We also include JPEG compression noise to expand the degradation space.

Gaussian noise is random noise with a probability density function (PDF) that follows a Gaussian distribution. The PDF of Gaussian noise is given by

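$$p(x) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right),$$

where $x$ represents the grayscale value of the image noise, $\mu$ represents the mean value of $x$, and $\sigma$ represents the standard deviation of $x$.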

Poisson noise follows a Poisson distribution, and its intensity is proportional to that of the image. The noises at different pixel points are independent of one another. The PDF of Poisson noise is given by

$$P(x = k) = \frac{\lambda^{k} e^{-\lambda}}{k!},$$

where $\lambda$ is the expected value, and $k = 0, 1, 2, \ldots$.

Multiplicative noise is produced by random variations in channel characteristics and is related to the signal through multiplication. This type of noise follows a Rayleigh or gamma distribution. In infrared images, the multiplicative noise caused by non-uniformity arises from many sources that are mixed together in a unified form. In a focal plane detector array, the response of each detection element to a mixture of various non-uniform factors can be expressed as follows:

$$x_m = g_m I + o_m, \quad m = 1, 2, \ldots, M,$$

where $x_m$ represents the response of the $m$-th detection element, $I$ represents the incident infrared radiation, and $M$ represents the number of detection elements in the array. The non-uniformity of each detection element is influenced by the combined impact of the gain, $g_m$, and offset, $o_m$, of each unit.

Salt-and-pepper noise is caused by changes in signal pulse intensity. It includes two variations: high-intensity salt-like noise represented by white dots and low-intensity pepper-like noise represented by black dots. Typically, they both occur simultaneously. Dieickx et al. [52] suggested that the blind elements of a staring array manifest as salt-and-pepper noise. The PDF of salt-and-pepper noise in infrared images can be represented as follows:

$$p(x) = \begin{cases} P_{p}, & x = x_{\min} \ (\text{pepper}), \\ P_{s}, & x = x_{\max} \ (\text{salt}), \\ 1 - P_{p} - P_{s}, & \text{otherwise}, \end{cases}$$

where $P_{p}$ and $P_{s}$ represent the density distributions of pepper noise and salt noise, respectively, and $x_{\min}$ and $x_{\max}$ denote the lowest and highest gray levels.
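A minimal sketch of salt-and-pepper corruption is given below: each pixel is independently set to the lowest gray level with one probability, to the highest gray level with another, and left unchanged otherwise. The names `p_pepper` and `p_salt` and the 8-bit extremes 0 and 255 are assumptions of this example.

```python
import random


def add_salt_and_pepper(image, p_pepper=0.01, p_salt=0.01, rng=None):
    """Set pixels to 0 (pepper) or 255 (salt) with the given probabilities."""
    rng = rng or random.Random()
    noisy = []
    for row in image:
        out_row = []
        for pixel in row:
            u = rng.random()  # uniform in [0, 1)
            if u < p_pepper:
                out_row.append(0)
            elif u < p_pepper + p_salt:
                out_row.append(255)
            else:
                out_row.append(pixel)
        noisy.append(out_row)
    return noisy


patch = [[128] * 8 for _ in range(8)]
noisy_patch = add_salt_and_pepper(patch, p_pepper=0.05, p_salt=0.05, rng=random.Random(0))
```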

JPEG compression is a widely used lossy compression technique for digital images, which reduces storage and transmission requirements by discarding unimportant components. The quality of the compressed image is determined by the quality factor q (). A higher q value corresponds to a lower compression ratio and better image quality. When q > 90, the image quality is considered great, and there will be no obvious artifacts introduced. In our degradation model, the range for the JPEG quality factor is set to within .

Downsampling. Downsampling is a fundamental operation used to change the image size and generate LR images. There are four interpolation methods for image resizing: nearest neighbor, bilinear, bicubic, and area adjustment. Nearest neighbor interpolation introduces pixel deviation issues [53]; hence, we focus primarily on the other three.

The different resizing methods have their own effects on images. Our degradation model randomly selects from downsampling, upsampling, and maintaining the original size to diversify the degradation space. Assuming that the scaling factor is s, the image size is first changed by a (), resulting in an intermediate-sized image. After degradation, the image is resized by a scaling factor of $1/(as)$, so that the final image size equals the original size divided by s, as required by the target downsampling scale.
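The size bookkeeping of this two-stage resizing can be sketched as follows. A first factor a is chosen by randomly shrinking, enlarging, or keeping the image, and the second resize must then compensate so that the final size is the original size divided by s, which corresponds to a second factor of 1/(a·s). The function names and the two sampling ranges are hypothetical placeholders, not the ranges used in our degradation model.

```python
import random


def pick_first_scale(rng, down_range=(0.5, 1.0), up_range=(1.0, 2.0)):
    """Randomly choose the first-stage factor a: shrink, enlarge, or keep.

    Both ranges are hypothetical placeholders for illustration.
    """
    mode = rng.choice(["down", "up", "keep"])
    if mode == "down":
        return rng.uniform(*down_range)
    if mode == "up":
        return rng.uniform(*up_range)
    return 1.0


def two_stage_sizes(height, width, s, a):
    """Return the intermediate size, the final size, and the second-stage factor."""
    mid = (max(1, round(height * a)), max(1, round(width * a)))
    final = (height // s, width // s)  # target LR size for scale s
    second_factor = 1.0 / (a * s)      # compensates the first resize
    return mid, final, second_factor


a = pick_first_scale(random.Random(0))
mid_size, lr_size, factor = two_stage_sizes(256, 256, s=4, a=a)
```

Computing the final size directly from the original size, rather than by multiplying the rounded intermediate size, avoids off-by-one mismatches with the HR target.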

4. Experimental Details and Results

In this section, we provide a detailed introduction to the experimental details and validation results of SwinAIR and SwinAIR-GAN. Both quantitative and qualitative analyses demonstrate the superiority of our proposed method for infrared image SR reconstruction.

4.1. Datasets and Metrics

Training sets. Due to the scarcity of high-quality public infrared image datasets available for training, we utilize visible image datasets as the training set based on previous work [45]. Specifically, we use the DV2K [54] (DIV2K [55] + Flickr2K [7]) dataset, which contains 3450 images rich in texture and detail.

Test sets. To maximize the generalization of test scenarios and thoroughly validate the effectiveness of the proposed method, we employ six datasets as the test sets for SwinAIR: the CVC-14 dataset [56], the Flir dataset [57], the Iray universal infrared dataset [58] (Iray-384), the Iray infrared maritime ship dataset [59] (Iray-ship), the Iray infrared aerial photography dataset [60] (Iray-aerial photography), and the Iray infrared security dataset [61] (Iray-security). The CVC-14 dataset [56], the Flir dataset [57], and the Iray-384 dataset [58] mainly consist of road traffic scene images, while the Iray-ship dataset [59], the Iray-aerial photography dataset [60], and the Iray-security dataset [61] consist of maritime ship scenes, aerial street scenes, and security surveillance scenes, respectively. For SwinAIR-GAN, we use the ASL-TID dataset [57], the Iray-384 dataset [58] and a self-built dataset as the test sets. The ASL-TID dataset [57] primarily includes scenes with pedestrians, cats and horses, and our self-built dataset comprises 138 images covering various scenes such as buildings, roads, vehicles and pedestrians.

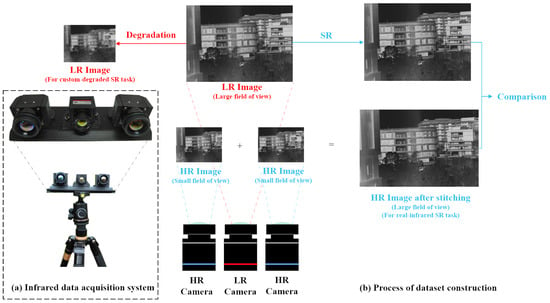

The purpose of creating our own dataset is to address the challenge of lacking reference images in real infrared image SR tasks, which makes it difficult to thoroughly compare the texture and detail recovery effects of different methods. Specifically, we build an infrared data acquisition system consisting of an LR Iray infrared camera and two HR Chengdu Jinglin infrared cameras. The parameters and models of the cameras are detailed in Table 2. The specific process of acquiring infrared image data using the above-mentioned system is shown in Figure 6. We place three cameras parallel to each other with the LR Iray infrared camera in the middle and the two HR Chengdu Jinglin infrared cameras on the sides. Different degradation methods are applied to infrared images captured by the Iray camera across various scenes to construct LR–HR image pairs (in the custom-degraded SR task, the infrared images captured by the Iray camera are considered as HR images relative to the degraded images). Simultaneously, we perform parallax correction on the images captured by the two Chengdu Jinglin infrared cameras and then stitch them together to obtain images with a higher resolution than the original ones captured by the Iray camera. These higher-resolution images will be used as reference images in real infrared SR tasks (i.e., direct SR reconstruction of images captured by the Iray camera) to evaluate the effectiveness of different methods in reconstructing details and textures from unknown degraded infrared images.

Table 2.

Detailed parameters of the infrared data acquisition system.

Figure 6.

Our infrared data acquisition system. (a) System hardware. (b) Process of dataset construction. For SR tasks with custom degradation, images captured by the Iray camera are used as HR reference images and to calculate metrics. Due to differences in images captured by different cameras, we use no-reference metrics for the quantitative analysis of SR reconstruction quality for real SR tasks. The HR images captured by the Chengdu Jinglin cameras are stitched together to serve only as reference images for real SR tasks, assessing whether the SR reconstructed images are consistent with real textures and details.

Evaluation metrics. To quantitatively evaluate the SR performance of our proposed method, we use two full-reference image quality metrics, peak signal-to-noise ratio (PSNR) and structural similarity (SSIM), for SwinAIR. To further evaluate the realism of the infrared images generated by SwinAIR-GAN, we also use two no-reference image quality metrics, natural image quality evaluator (NIQE) and perceptual index (PI), in addition to PSNR and SSIM. All evaluation metrics are computed on the luminance channel after converting the reconstructed images to the YCbCr color space. We assess the efficiency of SwinAIR using floating-point operations (FLOPs) and inference speed.
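For reference, PSNR on the luminance channel can be sketched as below. The luma conversion uses the ITU-R BT.601 studio-range coefficients that are common in SR evaluation code; whether this exact variant matches our evaluation scripts is an assumption, as are the function names.

```python
import math


def rgb_to_y(r, g, b):
    """BT.601 luma of an 8-bit RGB pixel, in the studio range [16, 235]."""
    return 16.0 + (65.481 * r + 128.553 * g + 24.966 * b) / 255.0


def psnr(reference, reconstructed, peak=255.0):
    """Peak signal-to-noise ratio (dB) between two equally sized gray images."""
    squared_errors = [
        (x - y) ** 2
        for ref_row, rec_row in zip(reference, reconstructed)
        for x, y in zip(ref_row, rec_row)
    ]
    mse = sum(squared_errors) / len(squared_errors)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


hr = [[100.0, 110.0], [120.0, 130.0]]
sr = [[101.0, 111.0], [121.0, 131.0]]  # every pixel off by one gray level
score = psnr(hr, sr)
```

With a uniform error of one gray level the mean squared error is 1, so the score equals 20·log10(255), roughly 48.13 dB.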

4.2. Model and Training Settings

Model settings. For SwinAIR, the number of RSTABs (n) and the number of STALs (m) are set to 6. The window size of the SW-MSA in STL is 8 with the number of attention heads set to 6. The weights for the high-frequency channel () and the low-frequency channel () are set to 1/3 and 2/3, respectively. For SwinAIR-GAN, the degradation model parameters are detailed in Section 3.2.4. The generator network parameters are the same as those of SwinAIR, and the discriminator network settings are provided in Table 1.

Training settings. We use the Adam method to optimize our SwinAIR and SwinAIR-GAN, with the three hyperparameters of Adam, , , and , set to , , and , respectively. The initial learning rate is set to and decays using a multi-step strategy. The number of iterations for model updates is set to . All trainings are implemented in PyTorch and conducted on a server equipped with 8 Nvidia A100 GPUs with a batch size of 32. The input LR image size is set to 64 × 64. During the training process, the dataset is augmented using seven data augmentation techniques, including random horizontal flipping, random vertical flipping, and random 90° rotations.
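The flip and rotation augmentations can be sketched with plain nested lists. Combining an optional horizontal flip, an optional vertical flip, and an optional 90° rotation yields eight distinct orientations of a patch, seven of which differ from the input; reading the "seven techniques" this way is an assumption of this example, as are the function names.

```python
import random


def hflip(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror the image top to bottom."""
    return [row[:] for row in img[::-1]]


def rot90(img):
    """Rotate the image by 90 degrees counter-clockwise."""
    return [list(col) for col in zip(*img)][::-1]


def augment(img, flip_h, flip_v, rotate):
    """Apply the selected flips and rotation in a fixed order."""
    if flip_h:
        img = hflip(img)
    if flip_v:
        img = vflip(img)
    if rotate:
        img = rot90(img)
    return img


def random_augment(img, rng):
    """Apply each operation independently with probability 0.5."""
    return augment(img, rng.random() < 0.5, rng.random() < 0.5, rng.random() < 0.5)


patch = [[1, 2], [3, 4]]
augmented = random_augment(patch, random.Random(0))
```

For paired training data, the same three random decisions must be applied to the LR patch and its HR counterpart so that the pair stays aligned.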

4.3. SwinAIR

4.3.1. Comparisons with the State-of-the-Art Methods

We compare our SwinAIR method with existing mainstream classical image SR methods on multiple datasets to evaluate their performance in reconstructing infrared images. All LR images are degraded using bicubic downsampling.

Quantitative Evaluations. We evaluate the SR reconstruction performance of SwinAIR and other methods on infrared images across six datasets at different scales. The quantitative comparison results are shown in Table 3. Our network achieves superior reconstruction performance with fewer parameters. At the ×2 and ×3 scales, our method achieves the best metrics across all datasets except for the CVC-14 dataset [56], where the results are second best. At the ×4 scale, our method achieves the best performance across all test sets while reducing the parameters by 19% compared to SwinIR. We also compare the efficiency of SwinAIR with several well-performing methods at the scale, and the results are shown in Table 4. The FLOPs and inference speed measurements are performed on an NVIDIA A100 GPU with the input image size set to . To ensure the accuracy of the inference speed measurement, we first perform 50 warm-up inferences, which are followed by 100 inferences to calculate the average inference time. Compared to CNN-based methods (e.g., EDSR [7] and SRFBN [62]), transformer-based methods (e.g., SwinIR [10], HAT [12] and SwinAIR) achieve better performance at the cost of reduced inference speed. Nonetheless, the use of the shift-window mechanism can effectively reduce their computational complexity. Additionally, due to the implementation of channel separation strategies, SwinAIR achieves the lowest computational complexity among the compared methods.
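The timing protocol described above (warm-up runs followed by averaged timed runs) can be sketched as follows. The helper name `average_inference_ms` and the dummy workload are hypothetical; in a real GPU measurement the callable would wrap the model's forward pass.

```python
import time


def average_inference_ms(run_once, warmup=50, repeats=100):
    """Average wall-clock time per call in milliseconds after warm-up runs.

    `run_once` is any zero-argument callable. On a GPU, it should also wait
    for the device to finish (e.g., torch.cuda.synchronize() in PyTorch),
    otherwise asynchronous kernel launches make the timing too optimistic.
    """
    for _ in range(warmup):  # warm-up runs are executed but not timed
        run_once()
    start = time.perf_counter()
    for _ in range(repeats):
        run_once()
    elapsed = time.perf_counter() - start
    return elapsed * 1000.0 / repeats


def dummy_forward():
    """Stand-in workload used only to exercise the timer."""
    return sum(i * i for i in range(1000))


latency_ms = average_inference_ms(dummy_forward, warmup=50, repeats=100)
```

Excluding the warm-up runs keeps one-off costs such as memory allocation and kernel selection out of the reported average.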

Table 3.

Quantitative comparison results achieved by different methods on the CVC-14 [56], Flir [57], Iray-384 [58], Iray-ship [59], Iray-aerial photography [60], and Iray-security [61] datasets. We use PSNR (dB)/SSIM * as the evaluation metrics. Params represents the parameters of the methods. Bold and underlined numbers indicate the best and second-best results, respectively.

Table 4.

Efficiency comparison results achieved by different methods. FLOPs (G) and inference speed (ms) * are used as metrics for evaluating computational complexity and real-time performance, respectively.

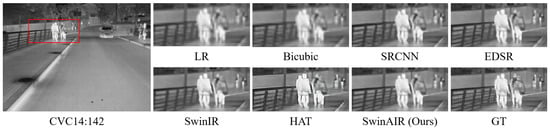

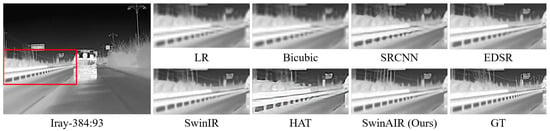

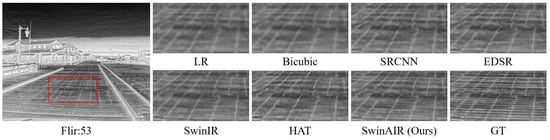

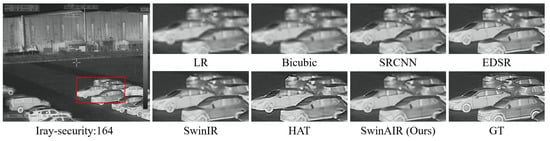

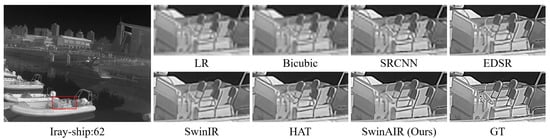

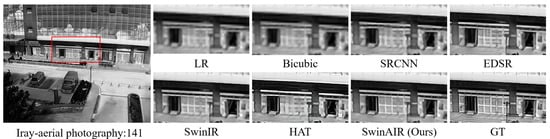

Visual comparison. We compare the visual reconstruction results of different methods at the ×4 scale on different datasets. Compared with other methods, our proposed SwinAIR demonstrates superior visual effects and is able to better restore accurate details and textures.

For instance, in the restoration of fence details (Figure 7 and Figure 8), bicubic, SRCNN [5], and EDSR [7] produce visually less appealing blurry images. HAT [12] generates overly sharpened images with speckled and serrated textures. SwinIR [10] and SwinAIR perform better overall, but SwinIR [10] introduces distortions and twists at intersections of distant fence lines. Only SwinAIR can reconstruct such detailed features consistently with reference images. In the restoration of ground textures (Figure 9), all methods except SwinAIR fail to accurately restore the closely spaced horizontal lines at the bottom. Similar situations can also be observed in the contours of cars and ships (Figure 10 and Figure 11), and the lines of door frames (Figure 12), where SwinAIR shows superior capability in recovering fine and contour features. This demonstrates that handling different frequency features separately can effectively enhance the network’s performance.

Figure 7.

Visual comparison results achieved by different methods on the CVC-14 dataset [56] at the ×4 scale. GT represents the original HR image in the red box of the leftmost image. Our proposed method can restore more realistic fence details.

Figure 8.

Visual comparison results achieved by different methods on the Iray-384 dataset [58] at the ×4 scale. GT represents the original HR image in the red box of the leftmost image. Our proposed method can restore more realistic fence details.

Figure 9.

Visual comparison results achieved by different methods on the Flir dataset [57] at the ×4 scale. GT represents the original HR image in the red box of the leftmost image. Our proposed method can restore closely spaced ground textures.

Figure 10.

Visual comparison results achieved by different methods on the Iray-security dataset [61] at the ×4 scale. GT represents the original HR image in the red box of the leftmost image. Our proposed method can restore more realistic vehicle contours.

Figure 11.

Visual comparison results achieved by different methods on the Iray-ship dataset [59] at the ×4 scale. GT represents the original HR image in the red box of the leftmost image. Our proposed method can restore more realistic ship contours.

Figure 12.

Visual comparison results achieved by different methods on the Iray-aerial photography dataset [60] at the ×4 scale. GT represents the original HR image in the red box of the leftmost image. Our proposed method can restore more realistic lines of door frames.

4.3.2. Ablation Study

To further validate the effectiveness of each component in SwinAIR and the rationality of parameter selection, we conduct extensive ablation experiments. The models are retrained on the DV2K [54] dataset and reevaluated on the CVC-14 dataset [56] at the ×4 scale.

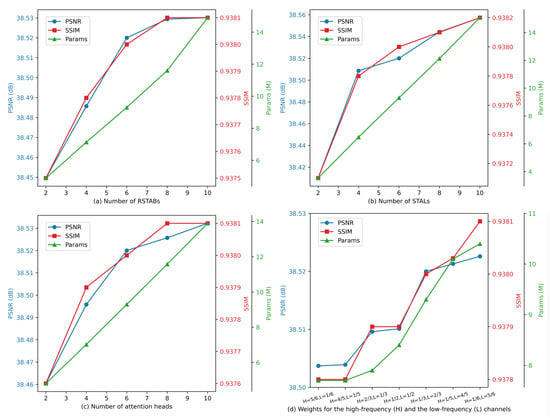

Number of RSTABs. Initially, as the number of RSTABs increases, the network's ability to capture features improves, and its performance rises steadily. However, when the number of RSTABs increases further, the performance gains begin to saturate while the number of parameters continues to grow rapidly. The relation between network performance and parameters with varying numbers of RSTABs is shown in Figure 13a. To balance the performance and parameters of SwinAIR, we ultimately set the number of RSTABs to 6.

Figure 13.

We evaluate the impact of different module configurations and parameter selections on the performance of SwinAIR using PSNR (dB), SSIM, and the number of parameters (M) as performance metrics. In the figures, params represent the number of parameters, H and L denote the weights of high-frequency and low-frequency channels, respectively. (a) The impact of the number of RSTABs on the performance of SwinAIR. (b) The impact of the number of STALs within each RSTAB on the performance of SwinAIR. (c) The impact of the number of attention heads in the STL on the performance of SwinAIR. (d) The impact of different weights of the high-frequency and the low-frequency channels on the performance of SwinAIR.

Number of STALs in RSTAB. As shown in Figure 13b, the performance of SwinAIR starts to plateau when the number of STALs reaches 4 and saturates when the number reaches 6. There is little difference in performance between having 6 and 8 STALs. Therefore, we ultimately set the number of STALs to 6.

Number of attention heads. Using more attention heads can enhance the network’s ability to capture complex features, but it also introduces additional computational overhead. By analyzing the impact of different numbers of attention heads on network performance, as shown in Figure 13c, we find that setting the number of attention heads to 6 achieves a good balance between performance and parameters.

Different weights of the high-frequency and the low-frequency channels. The selection of channel separation weights is related to the distribution of high-frequency and low-frequency information in infrared images. As shown in Figure 13d, we notice that as the weight of the low-frequency channel increases, the network performance continuously improves. This indicates that there is still a substantial amount of learnable low-frequency information in the images. However, due to the high complexity of the low-frequency feature processing structure (STL), the model’s parameters also increase accordingly. When the low-frequency channel weight exceeds 2/3, further increasing the weight provides minimal performance gain. Therefore, we ultimately set the high-frequency channel weight to 1/3 and the low-frequency channel weight to 2/3.

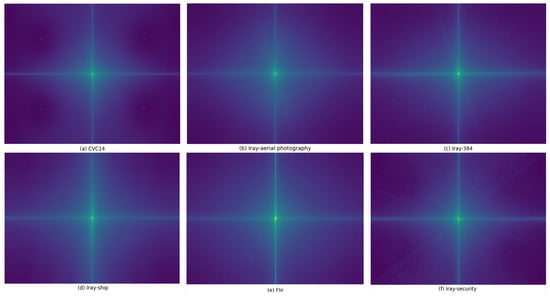

Different datasets have varied devices, shooting environments, and objects, which may affect the optimal selection of channel separation weights. To further verify the reasonableness of channel separation weight settings, we perform frequency domain analysis on different datasets. Specifically, we conduct the Fourier transform on the images in each dataset and compute the average spectrum to represent the overall distribution of frequency information in the dataset. The comparison results are shown in Figure 14. Although the spectrograms of different datasets vary in detail, the overall range and intensity of frequency information distribution are similar. This demonstrates that the channel separation weights can be applied to different types of infrared images.
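The frequency-domain comparison can be sketched as follows: each image is transformed with a 2D discrete Fourier transform, the zero-frequency term is moved to the center, and the log-magnitude spectra are averaged over the dataset. The naive transform below is written for clarity and is only practical for very small images; the function names and the log(1 + |F|) scaling are assumptions of this example.

```python
import cmath
import math


def dft1(seq):
    """Naive 1D discrete Fourier transform."""
    n = len(seq)
    return [
        sum(seq[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def dft2(image):
    """2D DFT computed as row-wise then column-wise 1D transforms."""
    h, w = len(image), len(image[0])
    rows = [dft1(row) for row in image]
    cols = [dft1([rows[y][x] for y in range(h)]) for x in range(w)]
    return [[cols[x][y] for x in range(w)] for y in range(h)]


def center_spectrum(spec):
    """Move the zero-frequency term to index (h // 2, w // 2)."""
    h, w = len(spec), len(spec[0])
    return [[spec[(y - h // 2) % h][(x - w // 2) % w] for x in range(w)] for y in range(h)]


def average_log_spectrum(images):
    """Average of log(1 + |F|) over equally sized grayscale images."""
    h, w = len(images[0]), len(images[0][0])
    total = [[0.0] * w for _ in range(h)]
    for image in images:
        centered = center_spectrum(dft2(image))
        for y in range(h):
            for x in range(w):
                total[y][x] += math.log1p(abs(centered[y][x]))
    return [[value / len(images) for value in row] for row in total]


flat = [[1.0] * 4 for _ in range(4)]
spectrum = average_log_spectrum([flat, flat])
```

A constant image contains only the zero-frequency component, so all of its energy appears at the center of the averaged spectrum.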

Figure 14.

Frequency domain analysis of different datasets. We perform the Fourier transform and spectrum centralization on the images in the datasets. The central part of the spectrogram represents low-frequency information, while the edges represent high-frequency information. The overall range of the spectrum distribution is similar across different datasets.

Different structures of the HFEL. Pooling layers in CNNs can reduce data dimensionality and extract high-frequency information from images. We validate the impact of using different pooling layers and different numbers of branches within the HFEL on SwinAIR’s performance. The results are shown in Table 5. The results indicate that the average pooling layer outperforms the max pooling layer. Additionally, the structure with two parallel pooling and fully connected layers significantly outperforms the single-branch structure. Interestingly, we find that using both average and max pooling layers together does not perform as well as using either one alone. We hypothesize that this is due to the mismatch and difficulty in fusing features extracted by different pooling layers. Therefore, we ultimately adopt a structure with two parallel average pooling and fully connected layers in the HFEL.

Table 5.

Comparison results of using different types of pooling layers and different numbers of branches within the HFEL.

4.4. SwinAIR-GAN

4.4.1. Comparisons with the State-of-the-Art Methods

We compare the SwinAIR-GAN method with other GAN-based image SR methods across multiple datasets to thoroughly evaluate its performance in restoring real infrared images. For the sake of fairness, we compare methods only with similar types of loss functions due to the strong correlation between evaluation metrics and the loss functions of GAN-based image SR methods [64].

Quantitative Evaluations. We evaluate the SR reconstruction performance of SwinAIR-GAN and other GAN-based methods on infrared images across three datasets. We validate the image restoration performance under both bicubic downsampling (BI degradation) and Gaussian blur downsampling (BD degradation) scenarios at the ×4 scale. The quantitative comparison results are shown in Table 6. Additionally, we perform the ×4 scale SR reconstruction directly on the images in the dataset to validate the SR reconstruction performance of different methods on unknown degraded real scenes. We use no-reference evaluation metrics, NIQE and PI, for quantitative analysis. The quantitative comparison results are shown in Table 7. Apart from achieving second-best results on the ASL-TID dataset [57] for unknown degraded real scenes, SwinAIR-GAN outperforms other models of the same category across all other datasets under various degradation conditions.

Table 6.

Quantitative comparison results achieved by different GAN-based methods on the self-built, Iray-384 [58], and ASL-TID [57] datasets. BI and BD denote the degradation modes using bicubic downsampling and Gaussian blur downsampling, respectively. We use PSNR (dB)/SSIM * as the evaluation metrics. Params represents the parameters of the methods. Bold and underlined numbers indicate the best and second-best results, respectively.

Table 7.

Quantitative comparison results achieved by different GAN-based methods on the self-built, Iray-384 [58], and ASL-TID [57] datasets. We perform ×4 SR reconstruction on the HR images in the dataset to validate the reconstruction performance of different methods on unknown degradation. NIQE/PI * are used as the evaluation metrics. Params represents the parameters of the methods. Bold and underlined numbers indicate the best and second-best results, respectively.

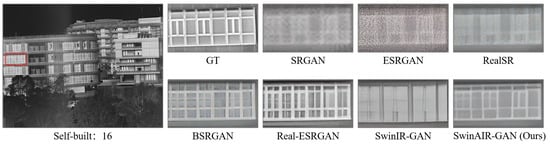

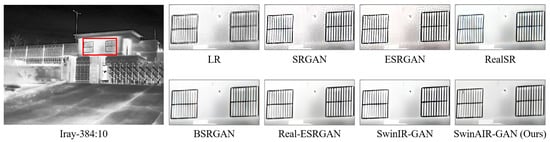

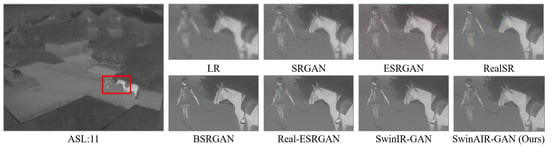

Visual comparison. We compare the visual reconstruction results of different methods for real infrared images on multiple datasets at the ×4 scale. Compared with other methods, our proposed SwinAIR-GAN is able to restore details and textures more accurately and achieve better visual effects closer to the real images. For example, in Figure 15, only SwinAIR-GAN restores texture details consistent with the real scene. Other methods either introduce a lot of noise (e.g., ESRGAN [41], RealSR [54]) or generate incorrect details and textures (e.g., Real-ESRGAN [14], BSRGAN [13]). In other scenarios, our method recovers texture details that are more visually accurate and consistent with the LR image (e.g., the restoration of window lines on the left side in Figure 16 and the restoration of the person’s arm in Figure 17).

Figure 15.

Visual comparison results achieved by different methods for real infrared images on the self-built dataset at the ×4 scale. GT represents the reference image in the red box of the leftmost image, which is obtained by stitching images from two HR cameras. We perform SR reconstruction on the corresponding real-scene images captured by the LR camera and observe the texture differences with the GT images to evaluate performance.

Figure 16.

Visual comparison results achieved by different methods for real infrared images on the Iray-384 dataset [58] at the ×4 scale. LR represents the original image in the red box of the leftmost image.

Figure 17.

Visual comparison results achieved by different methods for real infrared images on the ASL-TID dataset [57] at the ×4 scale. LR represents the original image in the red box of the leftmost image.

4.4.2. Ablation Study

We conduct further ablation experiments on SwinAIR-GAN to validate the effectiveness of the dropout operation and the artifact discrimination loss. The models are retrained on the DV2K [54] dataset and reevaluated on the self-built dataset at the ×4 scale. We quantitatively assess the experimental results under custom degradation (using PSNR (dB)/SSIM as reference metrics) and unknown degradation (using NIQE/PI as reference metrics).

Effectiveness of the dropout operation. The dropout operation included in SwinAIR-GAN leads to improved performance across all evaluation metrics compared with the same model without the dropout operation. The specific results can be found in Table 8. For real infrared images, the dropout operation results in an NIQE reduction of 0.2964 and a PI reduction of 0.1173. Furthermore, there are significant improvements in PSNR and SSIM under various degradations.

Table 8.

Comparison of results with and without the dropout operation.

Effectiveness of the artifact discrimination loss. The SwinAIR-GAN incorporating the artifact discrimination loss exhibits lower NIQE/PI scores in the real infrared SR task and higher PSNR (dB)/SSIM scores in the custom-degraded SR task than the model without it. The detailed results are shown in Table 9.

Table 9.

Comparison of results with and without the artifact discrimination loss.

5. Conclusions

In this study, we devise a novel method, SwinAIR, for infrared image SR reconstruction. Through our carefully designed RSTAB, SwinAIR is capable of extracting both low- and high-frequency information from infrared images, enabling effective resolution enhancement. Building on this foundation, we further explore the SR reconstruction task for real infrared images by integrating SwinAIR with the U-Net to create SwinAIR-GAN. SwinAIR-GAN expands the degradation space to better simulate the process of real infrared image degradation. In addition, spectral normalization, the dropout operation, and the artifact discrimination loss are added to further enhance its performance. To better evaluate the reconstruction effects of different methods on real infrared images, we build an infrared data acquisition system. This system captures corresponding LR and HR infrared images of the same scene, addressing the lack of reference images in real infrared image SR tasks. Extensive comparative experiments on various datasets demonstrate the effectiveness of SwinAIR and SwinAIR-GAN. Although our method achieves superior performance and delivers visually striking results, there may still be differences between the images reconstructed by SwinAIR-GAN and the real infrared images due to the lack of high-quality infrared datasets [65]. Recent studies have shown that transfer learning can effectively improve network performance when datasets are scarce [45,66,67]. In the future, we will consider employing this technique, as well as expanding the training dataset, to further explore new methods for the real infrared image SR reconstruction task.

Author Contributions

Methodology, F.H.; Validation, J.W.; Writing—original draft, Y.L.; Writing— review and editing, X.Y.; Supervision, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Henn, K.A.; Peduzzi, A. Surface Heat Monitoring with High-Resolution UAV Thermal Imaging: Assessing Accuracy and Applications in Urban Environments. Remote Sens. 2024, 16, 930. [Google Scholar] [CrossRef]

- Chen, X.; Letu, H.; Shang, H.; Ri, X.; Tang, C.; Ji, D.; Shi, C.; Teng, Y. Rainfall Area Identification Algorithm Based on Himawari-8 Satellite Data and Analysis of its Spatiotemporal Characteristics. Remote Sens. 2024, 16, 747. [Google Scholar] [CrossRef]

- Cheng, L.; He, Y.; Mao, Y.; Liu, Z.; Dang, X.; Dong, Y.; Wu, L. Personnel Detection in Dark Aquatic Environments Based on Infrared Thermal Imaging Technology and an Improved YOLOv5s Model. Sensors 2024, 24, 3321. [Google Scholar] [CrossRef]

- Calvin, W.M.; Littlefield, E.F.; Kratt, C. Remote sensing of geothermal-related minerals for resource exploration in Nevada. Geothermics 2015, 53, 517–526. [Google Scholar] [CrossRef]

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part IV 13. Springer: Berlin/Heidelberg, Germany, 2014; pp. 184–199. [Google Scholar]

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654. [Google Scholar]

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Mu Lee, K. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144. [Google Scholar]

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 10012–10022. [Google Scholar]

- Chen, H.; Wang, Y.; Guo, T.; Xu, C.; Deng, Y.; Liu, Z.; Ma, S.; Xu, C.; Xu, C.; Gao, W. Pre-trained image processing transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12299–12310. [Google Scholar]

- Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. Swinir: Image restoration using swin transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 1833–1844. [Google Scholar]

- Zhang, D.; Huang, F.; Liu, S.; Wang, X.; Jin, Z. Swinfir: Revisiting the swinir with fast fourier convolution and improved training for image super-resolution. arXiv 2022, arXiv:2208.11247. [Google Scholar]

- Chen, X.; Wang, X.; Zhou, J.; Qiao, Y.; Dong, C. Activating more pixels in image super-resolution transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 22367–22377. [Google Scholar]

- Zhang, K.; Liang, J.; Van Gool, L.; Timofte, R. Designing a practical degradation model for deep blind image super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 4791–4800. [Google Scholar]

- Wang, X.; Xie, L.; Dong, C.; Shan, Y. Real-esrgan: Training real-world blind super-resolution with pure synthetic data. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 1905–1914. [Google Scholar]

- Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, part III 18. Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241. [Google Scholar]

- Wang, J.; Ralph, J.F.; Goulermas, J.Y. An analysis of a robust super resolution algorithm for infrared imaging. In Proceedings of the 2009 Proceedings of 6th International Symposium on Image and Signal Processing and Analysis, Salzburg, Austria, 16–18 September 2009; pp. 158–163. [Google Scholar]

- Choi, K.; Kim, C.; Kang, M.H.; Ra, J.B. Resolution improvement of infrared images using visible image information. IEEE Signal Process. Lett. 2011, 18, 611–614. [Google Scholar] [CrossRef]

- Mao, Y.; Wang, Y.; Zhou, J.; Jia, H. An infrared image super-resolution reconstruction method based on compressive sensing. Infrared Phys. Technol. 2016, 76, 735–739. [Google Scholar] [CrossRef]

- Deng, C.Z.; Tian, W.; Chen, P.; Wang, S.Q.; Zhu, H.S.; Hu, S.F. Infrared image super-resolution via locality-constrained group sparse model. Acta Phys. Sin. 2014, 63, 044202. [Google Scholar] [CrossRef]

- Yang, X.; Wu, W.; Hua, H.; Liu, K. Infrared image recovery from visible image by using multi-scale and multi-view sparse representation. In Proceedings of the 2015 11th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS), Bangkok, Thailand, 23–27 November 2015; pp. 554–559. [Google Scholar]

- Yang, X.; Wu, W.; Liu, K.; Zhou, K.; Yan, B. Fast multisensor infrared image super-resolution scheme with multiple regression models. J. Syst. Archit. 2016, 64, 11–25. [Google Scholar] [CrossRef]

- Song, P.; Deng, X.; Mota, J.F.; Deligiannis, N.; Dragotti, P.L.; Rodrigues, M.R. Multimodal image super-resolution via joint sparse representations induced by coupled dictionaries. IEEE Trans. Comput. Imaging 2019, 6, 57–72. [Google Scholar] [CrossRef]

- Yao, T.; Luo, Y.; Hu, J.; Xie, H.; Hu, Q. Infrared image super-resolution via discriminative dictionary and deep residual network. Infrared Phys. Technol. 2020, 107, 103314. [Google Scholar] [CrossRef]

- Wang, Y.; Wang, L.; Liu, B.; Zhao, H. Research on blind super-resolution technology for infrared images of power equipment based on compressed sensing theory. Sensors 2021, 21, 4109.

- Alonso-Fernandez, F.; Farrugia, R.A.; Bigun, J. Iris super-resolution using iterative neighbor embedding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 153–161.

- Ahmadi, S.; Burgholzer, P.; Jung, P.; Caire, G.; Ziegler, M. Super resolution laser line scanning thermography. Opt. Lasers Eng. 2020, 134, 106279.

- Wang, Y.; Zhang, J.; Wang, L. Compressed sensing super-resolution method for improving the accuracy of infrared diagnosis of power equipment. Appl. Sci. 2022, 12, 4046.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Lin, X.; Sun, S.; Huang, W.; Sheng, B.; Li, P.; Feng, D.D. EAPT: Efficient attention pyramid transformer for image processing. IEEE Trans. Multimed. 2021, 25, 50–61.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2020; pp. 213–229.

- Ma, C.; Zhuo, L.; Li, J.; Zhang, Y.; Zhang, J. Cascade transformer decoder based occluded pedestrian detection with dynamic deformable convolution and Gaussian projection channel attention mechanism. IEEE Trans. Multimed. 2023, 25, 1529–1537.

- Arnab, A.; Dehghani, M.; Heigold, G.; Sun, C.; Lučić, M.; Schmid, C. ViViT: A video vision transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 11–17 October 2021; pp. 6836–6846.

- Junayed, M.S.; Islam, M.B. Consistent video inpainting using axial attention-based style transformer. IEEE Trans. Multimed. 2022, 25, 7494–7504.

- Cao, Y.; Li, L.; Liu, B.; Zhou, W.; Li, Z.; Ni, W. CFMB-T: A cross-frequency multi-branch transformer for low-quality infrared remote sensing image super-resolution. Infrared Phys. Technol. 2023, 133, 104861.

- Yi, S.; Li, L.; Liu, X.; Li, J.; Chen, L. HCTIRdeblur: A hybrid convolution-transformer network for single infrared image deblurring. Infrared Phys. Technol. 2023, 131, 104640.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

- Yan, B.; Bare, B.; Ma, C.; Li, K.; Tan, W. Deep objective quality assessment driven single image super-resolution. IEEE Trans. Multimed. 2019, 21, 2957–2971.

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Loy, C.C. ESRGAN: Enhanced super-resolution generative adversarial networks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Shang, T.; Dai, Q.; Zhu, S.; Yang, T.; Guo, Y. Perceptual extreme super-resolution network with receptive field block. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 440–441.

- Liu, S.; Yang, Y.; Li, Q.; Feng, H.; Xu, Z.; Chen, Y.; Liu, L. Infrared image super resolution using GAN with infrared image prior. In Proceedings of the 2019 IEEE 4th International Conference on Signal and Image Processing (ICSIP), Wuxi, China, 19–21 July 2019; pp. 1004–1009.

- Huang, Y.; Jiang, Z.; Wang, Q.; Jiang, Q.; Pang, G. Infrared image super-resolution via heterogeneous convolutional WGAN. In Proceedings of the PRICAI 2021: Trends in Artificial Intelligence: 18th Pacific Rim International Conference on Artificial Intelligence, PRICAI 2021, Hanoi, Vietnam, 8–12 November 2021; Proceedings, Part II 18. Springer: Berlin/Heidelberg, Germany, 2021; pp. 461–472.

- Huang, Y.; Jiang, Z.; Lan, R.; Zhang, S.; Pi, K. Infrared image super-resolution via transfer learning and PSRGAN. IEEE Signal Process. Lett. 2021, 28, 982–986.

- Liu, Q.M.; Jia, R.S.; Liu, Y.B.; Sun, H.B.; Yu, J.Z.; Sun, H.M. Infrared image super-resolution reconstruction by using generative adversarial network with an attention mechanism. Appl. Intell. 2021, 51, 2018–2030.

- Lee, I.H.; Chung, W.Y.; Park, C.G. Style transformation super-resolution GAN for extremely small infrared target image. Pattern Recognit. Lett. 2023, 174, 1–9.

- Kong, X.; Liu, X.; Gu, J.; Qiao, Y.; Dong, C. Reflash dropout in image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 6002–6012.

- Si, C.; Yu, W.; Zhou, P.; Zhou, Y.; Wang, X.; Yan, S. Inception transformer. Adv. Neural Inf. Process. Syst. 2022, 35, 23495–23509.

- Miyato, T.; Kataoka, T.; Koyama, M.; Yoshida, Y. Spectral normalization for generative adversarial networks. arXiv 2018, arXiv:1802.05957.

- Liang, J.; Zeng, H.; Zhang, L. Details or artifacts: A locally discriminative learning approach to realistic image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5657–5666.

- Dierickx, B.; Meynants, G. Missing pixel correction algorithm for image sensors. In Advanced Focal Plane Arrays and Electronic Cameras II; SPIE: Bellingham, WA, USA, 1998; Volume 3410, pp. 200–203.

- Zhang, K.; Van Gool, L.; Timofte, R. Deep unfolding network for image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3217–3226.

- Ji, X.; Cao, Y.; Tai, Y.; Wang, C.; Li, J.; Huang, F. Real-world super-resolution via kernel estimation and noise injection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 13–19 June 2020; pp. 466–467.

- Timofte, R.; Agustsson, E.; Van Gool, L.; Yang, M.H.; Zhang, L. NTIRE 2017 challenge on single image super-resolution: Methods and results. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 114–125.

- González, A.; Fang, Z.; Socarras, Y.; Serrat, J.; Vázquez, D.; Xu, J.; López, A.M. Pedestrian detection at day/night time with visible and FIR cameras: A comparison. Sensors 2016, 16, 820.

- Portmann, J.; Lynen, S.; Chli, M.; Siegwart, R. People detection and tracking from aerial thermal views. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 1794–1800.

- Iray-384 Image Database. Available online: http://openai.iraytek.com/apply/Universal_video.html/ (accessed on 21 May 2024).

- Iray-Ship Image Database. Available online: http://openai.raytrontek.com/apply/Sea_shipping.html/ (accessed on 21 May 2024).

- Iray-Aerial Photography Image Database. Available online: http://openai.iraytek.com/apply/Aerial_mancar.html/ (accessed on 21 May 2024).

- Iray-Security Image Database. Available online: http://openai.iraytek.com/apply/Infrared_security.html/ (accessed on 21 May 2024).

- Li, Z.; Yang, J.; Liu, Z.; Yang, X.; Jeon, G.; Wu, W. Feedback network for image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3867–3876.

- Zhang, K.; Zuo, W.; Zhang, L. Learning a single convolutional super-resolution network for multiple degradations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3262–3271.

- Sajjadi, M.S.; Schölkopf, B.; Hirsch, M. EnhanceNet: Single image super-resolution through automated texture synthesis. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4491–4500.

- Liang, S.; Song, K.; Zhao, W.; Li, S.; Yan, Y. DASR: Dual-Attention Transformer for infrared image super-resolution. Infrared Phys. Technol. 2023, 133, 104837.

- Wei, W.; Sun, Y.; Zhang, L.; Nie, J.; Zhang, Y. Boosting one-shot spectral super-resolution using transfer learning. IEEE Trans. Comput. Imaging 2020, 6, 1459–1470.

- Zhang, B.; Ma, M.; Wang, M.; Hong, D.; Yu, L.; Wang, J.; Gong, P.; Huang, X. Enhanced resolution of FY4 remote sensing visible spectrum images utilizing super-resolution and transfer learning techniques. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 7391–7399.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).