Fourier Ptychographic Microscopy Reconstruction Method Based on Residual Local Mixture Network

Abstract

1. Introduction

- To address poor reconstruction quality and low efficiency, a mixture attention network is introduced, which reduces computational complexity and reconstruction time while improving image quality;

- To suppress the noise and artifacts that arise during reconstruction, a Gaussian diffusion model is introduced to simulate data diffusion, which smooths noise, reduces coherent artifacts, and improves the quality and accuracy of the reconstructed images.

2. Methods

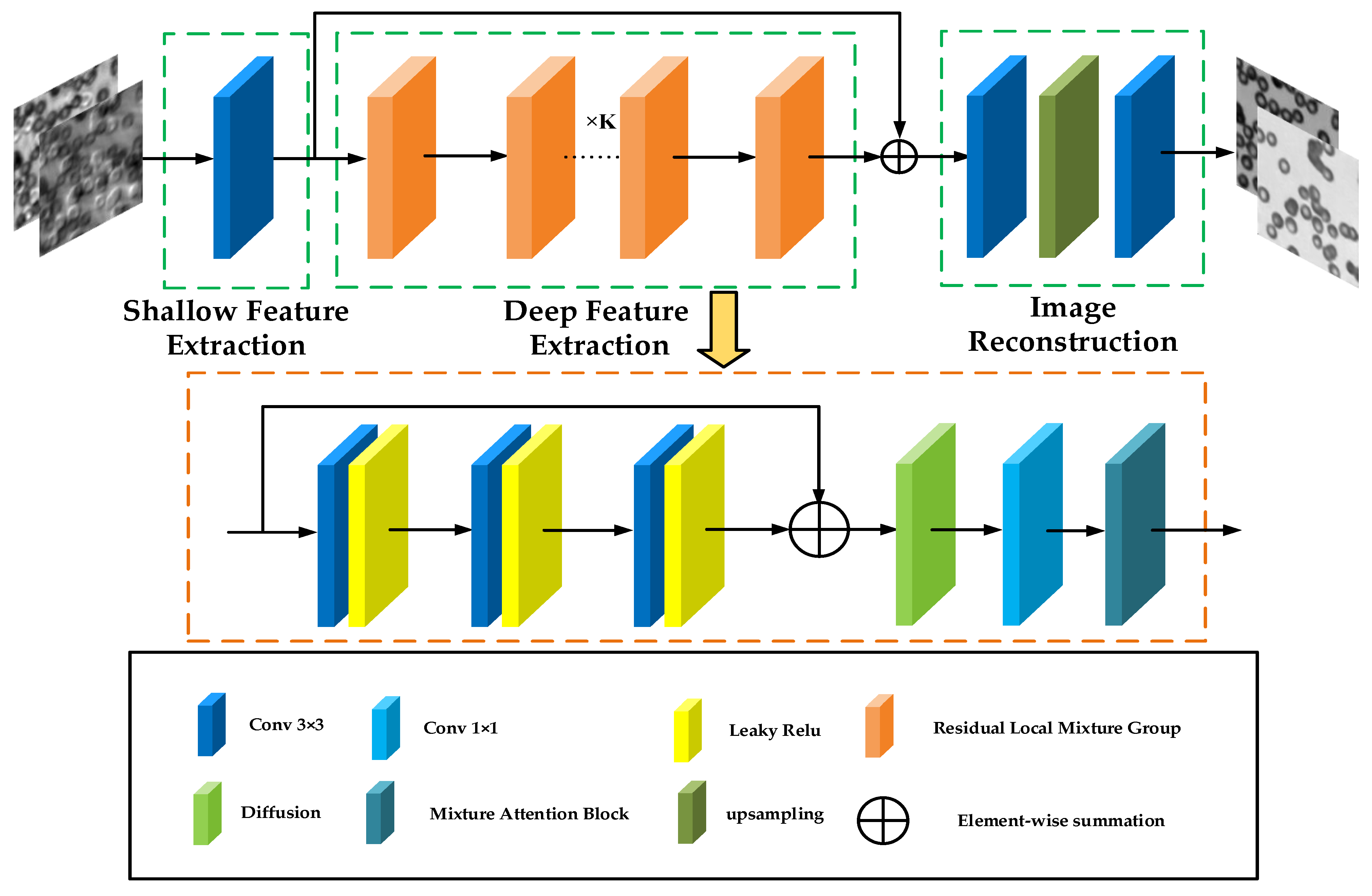

2.1. Network Architecture

2.2. Residual Local Mixture Group

2.3. Mixture Attention Block

2.4. Enhanced Spatial Attention (ESA)

2.5. SE Layer
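
As an illustration only, and assuming "SE" denotes the standard squeeze-and-excitation channel attention (global average pooling, a two-layer bottleneck, and sigmoid gating), one forward pass can be sketched in NumPy. The weight shapes, the reduction ratio of 4, and the function name `se_layer` are hypothetical and are not taken from this work; biases are omitted for brevity.

```python
import numpy as np

def se_layer(features, w_reduce, w_expand):
    """Squeeze-and-excitation over a (channels, height, width) feature map.

    w_reduce: (channels // r, channels) bottleneck weights (assumed shape).
    w_expand: (channels, channels // r) expansion weights (assumed shape).
    """
    squeezed = features.mean(axis=(1, 2))               # squeeze: global average pool -> (C,)
    hidden = np.maximum(w_reduce @ squeezed, 0.0)        # ReLU bottleneck -> (C // r,)
    gates = 1.0 / (1.0 + np.exp(-(w_expand @ hidden)))   # sigmoid gates in (0, 1) -> (C,)
    return features * gates[:, None, None]               # excitation: rescale each channel

# Synthetic feature map and random weights, purely for demonstration.
rng = np.random.default_rng(0)
channels, reduction = 8, 4
x = rng.standard_normal((channels, 16, 16))
w1 = rng.standard_normal((channels // reduction, channels)) * 0.1
w2 = rng.standard_normal((channels, channels // reduction)) * 0.1
y = se_layer(x, w1, w2)
print(y.shape)
```

Because every gate lies strictly between 0 and 1, the layer can only attenuate channels relative to one another; it reweights features rather than creating new ones.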

2.6. Diffusion Model

2.7. Evaluation Metrics
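
The result tables in this article report PSNR in decibels alongside SSIM. As a minimal sketch of the first metric, PSNR can be computed as $10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$, where MSE is the mean squared error between the reference and the reconstruction. The function name `psnr`, the `data_range` parameter, and the assumption that images are normalized to [0, 1] are illustrative choices, not details taken from this work. SSIM is not sketched here.

```python
import numpy as np

def psnr(reference, reconstruction, data_range=1.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images."""
    reference = np.asarray(reference, dtype=np.float64)
    reconstruction = np.asarray(reconstruction, dtype=np.float64)
    if reference.shape != reconstruction.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((reference - reconstruction) ** 2)
    if mse == 0:
        return float("inf")  # identical images: PSNR is unbounded
    return float(10.0 * np.log10(data_range ** 2 / mse))

# Synthetic example: a random "ground truth" and a lightly perturbed copy.
rng = np.random.default_rng(0)
truth = rng.random((64, 64))
noisy = np.clip(truth + rng.normal(0.0, 0.01, truth.shape), 0.0, 1.0)
print(f"PSNR: {psnr(truth, noisy):.2f} dB")
```

A uniform error of 0.1 on a unit-range image gives an MSE of 0.01 and therefore a PSNR of 20 dB, which is a convenient sanity check for any implementation.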

3. Experiment

3.1. Dataset

3.2. Optimizer Comparison Experiment

3.3. Ablation Experiment

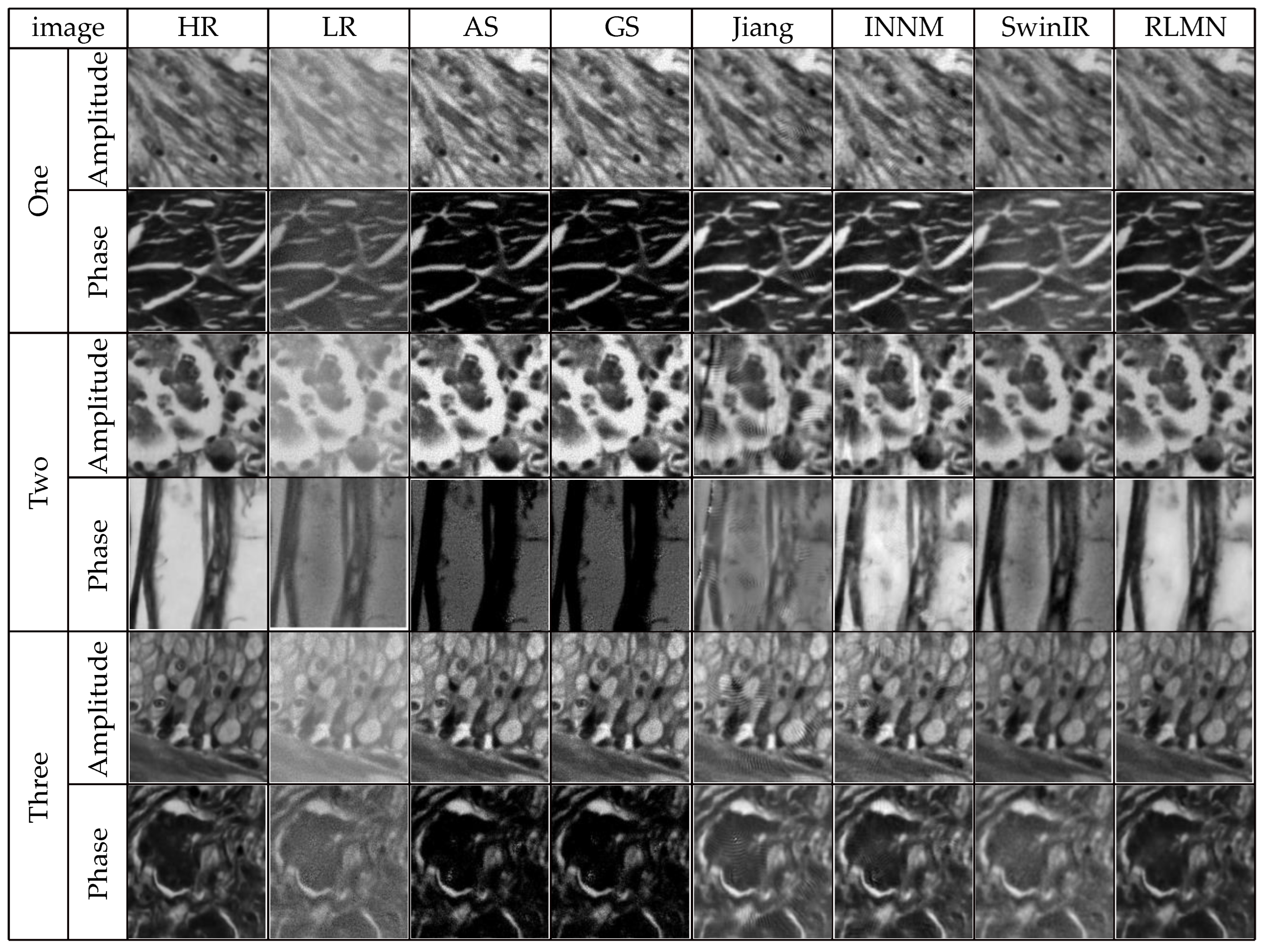

3.4. Comparative Experiment under Identical Noise Conditions

3.5. Comparative Experiment under Different Noise Conditions

3.6. Comparative Experiment on Real Images

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Image | Adagrad PSNR (dB) / SSIM | Adamax PSNR (dB) / SSIM | AdamW PSNR (dB) / SSIM |
|---|---|---|---|
| Amplitude | 28.00 / 0.6033 | 33.95 / 0.9305 | 34.91 / 0.9534 |
| Phase | 24.10 / 0.5268 | 23.84 / 0.8892 | 31.90 / 0.9568 |

| Image | RLDN PSNR (dB) / SSIM | RLMAN PSNR (dB) / SSIM | RLMN PSNR (dB) / SSIM |
|---|---|---|---|
| Amplitude | 34.33 / 0.9511 | 34.48 / 0.9508 | 35.25 / 0.9636 |
| Phase | 19.73 / 0.9344 | 24.11 / 0.9358 | 25.51 / 0.9568 |

| Image | | AS PSNR (dB) / SSIM | GS PSNR (dB) / SSIM | Jiang PSNR (dB) / SSIM | INNM PSNR (dB) / SSIM | SwinIR PSNR (dB) / SSIM | RLMN PSNR (dB) / SSIM |
|---|---|---|---|---|---|---|---|
| One | Amplitude | 19.39 / 0.5218 | 19.40 / 0.5245 | 25.72 / 0.9123 | 23.73 / 0.8732 | 33.81 / 0.9203 | 35.47 / 0.9615 |
| | Phase | 18.98 / 0.3462 | 18.95 / 0.3433 | 22.63 / 0.9223 | 22.51 / 0.8890 | 15.90 / 0.6971 | 31.82 / 0.9612 |
| Two | Amplitude | 18.09 / 0.5336 | 18.09 / 0.5379 | 25.34 / 0.8540 | 23.17 / 0.8239 | 29.75 / 0.8839 | 35.25 / 0.9636 |
| | Phase | 7.25 / 0.1214 | 7.24 / 0.1209 | 17.52 / 0.7145 | 19.24 / 0.7821 | 12.19 / 0.7386 | 25.51 / 0.9568 |
| Three | Amplitude | 20.15 / 0.5318 | 19.90 / 0.5336 | 21.87 / 0.8278 | 19.89 / 0.8553 | 34.64 / 0.9246 | 35.91 / 0.9630 |
| | Phase | 18.06 / 0.3017 | 18.03 / 0.2997 | 21.92 / 0.8088 | 23.41 / 0.8809 | 15.42 / 0.6945 | 30.21 / 0.9485 |

| Image | | AS PSNR (dB) / SSIM | GS PSNR (dB) / SSIM | Jiang PSNR (dB) / SSIM | INNM PSNR (dB) / SSIM | SwinIR PSNR (dB) / SSIM | RLMN PSNR (dB) / SSIM |
|---|---|---|---|---|---|---|---|
| 1 × 10⁻⁴ | Amplitude | 14.27 / 0.5692 | 14.26 / 0.5668 | 17.87 / 0.7975 | 19.51 / 0.7406 | 27.09 / 0.8916 | 39.80 / 0.9761 |
| | Phase | 19.99 / 0.3437 | 20.00 / 0.3449 | 24.53 / 0.9312 | 26.46 / 0.9165 | 18.70 / 0.5780 | 29.58 / 0.9426 |
| 2 × 10⁻⁴ | Amplitude | 16.95 / 0.5155 | 16.97 / 0.5157 | 21.71 / 0.8686 | 19.77 / 0.7516 | 33.54 / 0.8979 | 34.71 / 0.9467 |
| | Phase | 15.98 / 0.2410 | 15.97 / 0.2384 | 20.03 / 0.8930 | 21.63 / 0.8931 | 17.27 / 0.7961 | 32.67 / 0.9642 |
| 3 × 10⁻⁴ | Amplitude | 20.19 / 0.6137 | 20.20 / 0.6107 | 23.50 / 0.8957 | 24.07 / 0.8781 | 33.84 / 0.9346 | 35.80 / 0.9645 |
| | Phase | 16.76 / 0.2516 | 16.80 / 0.2534 | 20.16 / 0.8054 | 19.31 / 0.7462 | 16.98 / 0.7365 | 26.46 / 0.9483 |

| Model | Iterations | Reconstruction Time (s) |
|---|---|---|
| AS | 50 | 2.4987 |
| GS | 50 | 2.5133 |
| Jiang | 50 | 89.49 |
| INNM | 50 | 398.30 |
| SwinIR | 0 | 0.597 |
| RLMN | 0 | 0.315 |
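
To make the timing comparison easier to read, the reconstruction times in the table above can be converted into speedup factors relative to RLMN. The snippet below is only an arithmetic convenience: the dictionary `times` copies the reported values, and the helper name `speedup_over` is hypothetical.

```python
# Reconstruction times in seconds, copied from the timing table above.
times = {
    "AS": 2.4987,
    "GS": 2.5133,
    "Jiang": 89.49,
    "INNM": 398.30,
    "SwinIR": 0.597,
    "RLMN": 0.315,
}

def speedup_over(baseline, method="RLMN", table=times):
    """Return how many times faster `method` is than `baseline`."""
    return table[baseline] / table[method]

for name in times:
    if name != "RLMN":
        print(f"RLMN vs {name}: {speedup_over(name):.1f}x faster")
```

By these reported figures, RLMN is roughly 1.9 times faster than SwinIR and about 8 times faster than the 50-iteration AS and GS reconstructions.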

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Wang, Y.; Li, J.; Wang, X. Fourier Ptychographic Microscopy Reconstruction Method Based on Residual Local Mixture Network. Sensors 2024, 24, 4099. https://doi.org/10.3390/s24134099
