Abstract

One of the biggest challenges for computers is collecting data on human behavior, such as interpreting human emotions. Traditionally, this process is carried out with computer vision or multichannel electroencephalograms. However, these approaches require heavy computational resources that are usually located far from the final users or from where the data are collected. In contrast, sensors can capture muscle reactions and respond on the spot, keeping information local without relying on powerful computers. Therefore, this research addresses the recognition of the six primary human emotions using electromyography sensors in a portable device. The sensors are placed on specific facial muscles to detect happiness, anger, surprise, fear, sadness, and disgust. The experimental results showed that the Cortex-M0 microcontroller provides enough computational capability to store a deep learning model with a classification score of 92%. Furthermore, we demonstrate the necessity of collecting data in natural environments and of processing them through a machine learning pipeline.

1. Introduction

In recent years, humans and machines have worked closely to understand the human brain and how it controls body movements [1,2]. Specifically, machines try to recognize human behavior through muscle activity in order to interact with people [3,4]. Facial muscles play a fundamental role in human communication, including communication with machines, since facial expressions carry a very high percentage of nonverbal communication: 55% of a message is transmitted through facial expressions, 38% through intonation, and only 7% through words [5]. Hence, smooth communication between people and machines requires interfaces, commonly sensors or cameras. However, cameras rely on remote sensing to collect data from humans, and in some scenarios these techniques cannot describe the muscle movements people make when reacting with a specific emotion [6]. Cameras can recognize emotions by applying deep learning techniques trained on thousands of images, but these techniques require heavy computational resources, mainly because the deep model applies filters to find patterns and discard unnecessary image information. Furthermore, when cameras are deployed in uncontrolled environments, luminosity variations and vibrations degrade the image quality and the machine’s ability to recognize human emotions. As a result, cameras struggle to detect the voluntary and involuntary messages produced by the nervous system in response to emotions [7].

Conversely, modern data acquisition systems collect data on human behavior through electrical measurements of muscle activity. Emotion recognition methods (ERMs) attempt to recognize facial expressions [8,9] in different situations to determine a person’s internal emotional state, i.e., their subjective experience of how they feel [10]. ERM techniques focus on gathering information from physiological signals, such as the electroencephalogram (EEG), electromyogram (EMG), and electrocardiogram (ECG) [11]. Ekman [12] proposed the existence of six basic facial emotions: anger, disgust, fear, happiness, sadness, and surprise. These emotions follow specific physiological response patterns that can be captured by ERM techniques such as EMG analysis. Collecting EMG signals requires small electrodes (i.e., sensors) that detect slight voltage variations in the facial muscles [13]. In addition, facial muscles, which lie under the subcutaneous tissue of the face and neck, have a single motor unit associated with movements of different facial regions, and these units contribute to the representation of facial expressions [14].

Taking the above into account, the research goal is to present an EMG electronic device that can classify the most relevant human emotions locally [15]. The system uses three sensors placed on specific facial muscles to collect data and build a dataset. Then, hardware and software filters are developed to reduce noise and unpredictable slight contractions of the facial muscles. Next, three machine learning (ML) approaches are designed to identify the method with the highest classification accuracy and low computational consumption. In short, the main contributions are as follows:

- An extensive literature review is carried out to select the minimum number of sensors and samples needed to classify six human emotions, showing that EMG analysis is an adequate alternative in harsh environments where cameras struggle to capture high-quality images of people.

- A thorough ML analysis is performed using three approaches to determine the one with the lightest workload on the electronic device. To this end, analog signals are converted into different data structures to fit the training phase of the ML algorithms.

- An electronic system design is presented that combines hardware and software into an ML application, keeping a high classification score and lower power consumption than cameras.

The rest of the manuscript is structured as follows: Section 2 presents related works and background on facial muscles. Then, Section 3 presents the electronic design, focusing on the location of the sensors. Afterwards, Section 4 describes the machine learning pipeline. Next, the results are discussed in Section 5. Finally, Section 6 presents the conclusions of the paper and future work.

2. Background

This section summarizes earlier work at the intersection of data acquisition and the deep learning techniques used for emotion recognition (Section 2.1). Section 2.2 then describes the facial muscles to explain the location of the sensors.

2.1. Early Works on EMG in the Field of Emotion Recognition

In computer-vision-based detection, Thiam et al. [16] developed their own data classification pipeline, including three cameras to build a human emotion database, with an accuracy of about 94%. In addition, Perusquía-Hernández et al. [17] introduced a device for micro-smile recognition using signal processing and neural networks. These works show that cameras need controlled environments and an external server to deploy deep learning models. On the other hand, earlier studies such as [11,18,19,20,21] present emotion recognition approaches with classifiers such as support vector machines (SVMs) and convolutional neural networks (CNNs), mainly using ECG and EMG signals. More recent works such as [22,23,24] have presented extensive methods for emotion recognition from EEG signals, achieving 97% accuracy; however, they rely on existing datasets to develop their neural network models [25]. Finally, Pham et al. [8] presented a fine-tuned CNN model for emotion recognition.

In the EMG domain, Jiang et al. [26] presented one of the first approaches to recognizing emotions through EMG signals. Later, works such as [13,27,28] presented electronic devices to acquire EMG signals from several facial muscles. These studies used SVMs and neural networks, reaching accuracies from about 91% up to 99%, but they relied on thousands of samples and ran the experiments on computers; the devices were developed only for data collection. Furthermore, in [29], the authors presented a multichannel EMG analysis with a long short-term memory (LSTM) classifier to recognize eight emotions with 95% average accuracy. Another study [30] also focused on recognizing facial expressions from EMG signals.

These works rely on complex systems, and applying them in natural conditions would be challenging, since they depend on the number of sensors and of emotions collected. In addition, they may need high computational resources and specific hardware in controlled environments. Few of them export the ML model to the device itself, which limits their portability.

Other relevant works include [31,32,33]. In [31], a method for reading human facial expressions with a wearable device is presented; it combines independent component analysis (ICA) and an artificial neural network (ANN), and identifies smiling and nonsmiling faces by measuring bioelectrical signals on the side of the face. However, EMG information from the corrugator supercilii and zygomaticus major muscles was not recorded. Similarly, ref. [32] used a wearable, noninvasive method to study facial muscle activation associated with masked smiles, enjoyment smiles, and social smiles, using EMG recording, ICA, and a short training procedure. However, according to [33], ref. [32] does not report the degree of agreement (i.e., concordance) between facial EMG data and subjective valence.

In contrast to [31,32], ref. [33] provides evidence that emotional valence can be assessed with a wearable facial EMG device while the wearer is moving. The wearable device developed in [33] recorded the corrugator supercilii and zygomaticus major muscles.

Finally, the wearable devices mentioned above are electronic systems placed on the head, near the cerebral cortex. From our point of view, it would be useful to study the emotions people experience when using battery-powered electronic devices near the cerebral cortex, and how these devices affect people’s health. In this research, we keep the electrical part of the measurement system away from the user. In this way, we avoid any interference with brain function while classifying people’s expressions. We also use both hardware and software filtering to reduce the noise and the complexity of the ML model. Lastly, the models presented in this work can be ported to portable, low-cost electronic systems with low computational resources.

2.2. Facial Muscles

The facial muscles are fibers (elastic tissue) that support the sensory organs in their activities, for example, chewing, smelling, or hearing. Motor neurons control this process by sending electrical pulses that instruct the muscles to maintain contraction. This electrical activity is called the motor unit action potential (MUAP), which represents the duration, electrical amplitude, and phase of muscle contraction. Typical MUAP duration is between 5 and 15 ms [34]. The facial muscles are divided into three groups: (1) the top group, which generates eyebrow movement and frowning and mainly allows eye opening; (2) the middle group, which is closely involved in mouth movements and helps phonation, with some particular muscles enabling chewing; and (3) the bottom group, which generates facial movements of the soft tissues of the lips [35].

Facial expressions are recognized from physiological signals generated by the central nervous system through MUAP analysis. Electrical levels are low when the muscle is at rest, and muscle contraction occurs when a nerve impulse raises them [36]. The Facial Action Coding System (FACS) indexes facial expressions in action units (AUs) that can be matched with the biomechanical information obtained from the MUAP analysis of EMG signals. This approach splits the face into individual movements, each tied to specific muscle activation, to describe emotions [37,38]. In addition, FACS scores intensity by appending the letters A–E (from minimal to maximal intensity) to the action unit number. The target emotions that FACS helps to determine are surprise, sadness, happiness, anger, disgust, and fear. Lastly, Table 1 summarizes the muscles people commonly use to express emotions according to FACS.
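The intensity notation described above can be illustrated with a small parser. This is a minimal sketch, assuming codes are written as an action-unit number with an optional `AU` prefix and an optional trailing intensity letter (e.g., `AU12C`); the function name `parse_action_unit` and the numeric 1–5 mapping of A–E are illustrative choices, not part of any FACS tooling or of this work.

```python
import re
from typing import Optional, Tuple

# Assumed numeric mapping: A = minimal intensity ... E = maximal intensity.
INTENSITY_LEVELS = {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5}

# Assumed notation: optional "AU" prefix, action-unit number, optional intensity letter.
AU_PATTERN = re.compile(r"^(?:AU)?(\d+)([A-E])?$")


def parse_action_unit(code: str) -> Tuple[int, Optional[int]]:
    """Split a code such as 'AU12C' into (action-unit number, intensity 1-5 or None)."""
    match = AU_PATTERN.match(code.strip().upper())
    if match is None:
        raise ValueError(f"not a FACS action-unit code: {code!r}")
    letter = match.group(2)
    return int(match.group(1)), (INTENSITY_LEVELS[letter] if letter else None)


for code in ("AU12C", "4", "au1e"):
    print(code, "->", parse_action_unit(code))
```

For example, `parse_action_unit("AU12C")` returns `(12, 3)`, i.e., action unit 12 (lip corner puller, produced by the zygomaticus major) at intensity C; a code without a letter returns `None` for the intensity.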

Table 1.

Muscles involved in the generation of emotions by FACS (accessed on 15 October 2023) [2].

3. Electronic Design

Section 3.1 describes how the sensors are set up for data collection. Then, Section 3.2 describes the electronic system.

3.1. Sensors’ Location

EMG sensors are composed of two units. The first unit consists of electrodes that collect electrical fluctuations in the muscles. EMG sensors are usually built with three electrodes: two are placed on the muscle, one at its top and one in its middle, and the third acts as the reference (ground), commonly placed over bone, where there is no muscle electrical activity [39]. The second unit conditions the incoming data from the electrodes; internally, it has an instrumentation amplifier with a gain of 200×, a bandpass filter with lower and upper 3 dB cutoff frequencies of 20 Hz and 498 Hz, respectively, and a rectification circuit [40].
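The frequency behavior of the second unit can be approximated numerically. The following is a minimal sketch, assuming a second-order analog Butterworth response (the order and topology of the sensor's filter are not stated here); it designs a band-pass filter with the 20 Hz and 498 Hz cutoffs using SciPy and reports the gain of the whole stage, including the 200× amplifier, at a few frequencies. The rectification circuit is not modeled.

```python
import numpy as np
from scipy import signal

GAIN = 200.0        # instrumentation-amplifier gain stated in the text
F_LOW_HZ = 20.0     # lower 3 dB cutoff
F_HIGH_HZ = 498.0   # upper 3 dB cutoff
ORDER = 2           # assumption: the filter order is not specified

# Analog Butterworth band-pass; with analog=True the band edges are given in rad/s.
b, a = signal.butter(ORDER, [2 * np.pi * F_LOW_HZ, 2 * np.pi * F_HIGH_HZ],
                     btype="bandpass", analog=True)


def stage_gain(freq_hz: float) -> float:
    """Amplifier gain times the filter's magnitude response at freq_hz."""
    _, h = signal.freqs(b, a, worN=[2 * np.pi * freq_hz])
    return GAIN * float(np.abs(h[0]))


center_hz = np.sqrt(F_LOW_HZ * F_HIGH_HZ)  # geometric center of the passband
for f in (5.0, F_LOW_HZ, center_hz, F_HIGH_HZ, 2000.0):
    print(f"{f:8.1f} Hz -> gain {stage_gain(f):7.2f}")
```

The printout shows the gain dropping to about 141 (200/√2) at both cutoffs and reaching 200 at the geometric center of the band, about 99.8 Hz, while frequencies well outside the band are strongly attenuated.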

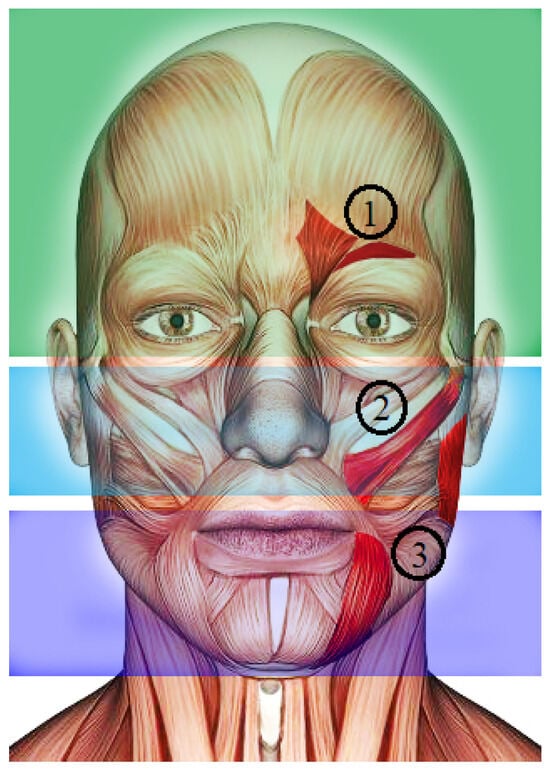

Following the EMG sensor configuration, Table 1 shows the primary muscles activated in the target facial expressions. Some muscles take part in several expressions. Therefore, we placed three EMG sensors on the muscles that participate most in describing each facial expression. Sensor 1 was placed between the Orbicularis oculi and Corrugator supercilii muscles, which lie in the forehead above the eyebrow and are the primary muscles involved in the negative emotions of sadness, anger, and disgust. Sensor 2 was placed on the Zygomaticus major muscle, located in the cheek near the mouth, which is highly active in happiness, fear, and surprise. Finally, sensor 3 collects data from the Depressor Anguli Oris and Masseter muscles; both lie in the lower part of the mouth, towards the jaw, and act mainly in surprise, fear, happiness, and sadness [37]. With this sensor selection, we aim to ensure that at least two sensors are activated in each facial expression. Figure 1 shows the sensors’ locations (Figure 1 was purchased by the authors from depositphotos at https://sp.depositphotos.com/41323531/stock-photo-male-anatomy-face.html (accessed on 21 November 2023) and modified to suit the proposed research).

Figure 1.

Location of three EMG sensors to collect data. Sensor 1: Orbicularis oculi and the Corrugator supercilii muscles; Sensor 2: Zygomaticus major; Sensor 3: Depressor Anguli Oris and Masseter muscles. Green section: top face muscles; Blue section: middle face muscles; Purple section: bottom face muscles.

3.2. Electronic System Description

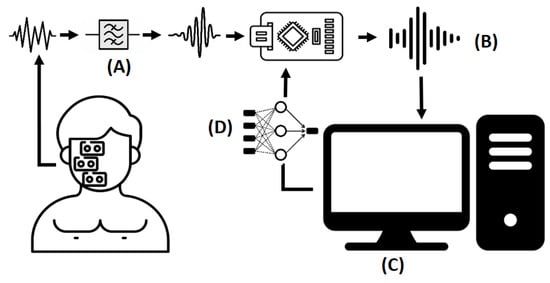

Once each sensor’s location is defined, the next step is designing the portable electronic device. Based on the hardware’s functionalities and replicability requirements, MyoWare muscle biosensors were selected to collect EMG signals. These sensors are placed in a bipolar configuration, with at least 2 cm of separation between them, to avoid noise caused by electrical interference. To avoid sending the sensor’s raw signal directly to the microcontroller, an extra operational amplifier in a voltage follower configuration couples the signal to the analog-to-digital converter and reduces noise from unpredictable facial movements. However, even after rectification, some DC components, which appear as signal peaks, remain in the EMG signal, so further signal filtering is needed. The Arduino Nano IoT was chosen as the microcontroller to process the EMG signals. The Arduino sent these signals to the computer, which stored them and trained the ML models. Finally, once an ML model was selected, it was optimized to run on the microcontroller and make decisions locally. Figure 2 shows the resulting electronic system design.

Figure 2.

Electronic system design. Step (A): Sensors gather data and analog filtering is carried out. Step (B): The microcontroller receives the EMG signals and converts them into digital signals to send to the computer. Step (C): The computer stores the EMG signals to train ML models. Step (D): The inference is allocated to the microcontroller.

4. Machine Learning Pipeline

With the electronic prototype described above, EMG data can be collected from people. However, although data play a fundamental role in discovering facial expression patterns, an adequate ML pipeline is needed to select ML models that fit the data and to test them with proper performance metrics. We defined the ML pipeline with the following steps: data collection, data preprocessing, data preparation, and model design. The following section then describes how to evaluate the models, optimize them, and export them to the electronic device.

4.1. Data Collection

For the data collection stage, 30 people (18 men and 12 women) used cleaning/shaving products to remove beards and make-up, and new electrodes were used for each person. The sampling frequency was 25 Hz, and the system took 300 samples in 4 s; the first and last seconds usually contained errors due to a person’s delayed response in activating their muscles. People then rested for 4 s between trials to eliminate the effect of muscle fatigue [41]. This process was performed ten times, with people intentionally producing each emotion according to their own criteria, yielding 300 instances per expression. Afterward, participants were shown images so that they expressed emotions naturally under the same experimental conditions. A Lenovo ThinkPad P50s laptop, whose specifications are listed in Table 2, was used to store the data. Figure 3 shows the sensors’ locations and the six facial expressions considered in this research.
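The sample counts in this protocol can be checked with a short calculation. This assumes that the 25 Hz figure is the per-channel sampling rate, that all 4 s of each recording are kept, and that the stimulus-driven session has the same size as the posed one; under these assumptions, the totals match the [100][3] sample shape and the 3600 samples reported in Section 4.2.

$$
\begin{aligned}
N_{\text{sensor}} &= f_s\,T = 25\ \text{Hz} \times 4\ \text{s} = 100 \text{ samples per sensor},\\
N_{\text{recording}} &= 3 \times N_{\text{sensor}} = 300 \text{ samples},\\
N_{\text{expression}} &= 30 \text{ participants} \times 10 \text{ repetitions} = 300 \text{ instances per session},\\
N_{\text{total}} &= 6 \text{ expressions} \times 2 \text{ sessions} \times 300 = 3600.
\end{aligned}
$$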

Table 2.

Lenovo ThinkPad P50s system specifications.

Figure 3.

Samples reshaped into one-dimensional array.

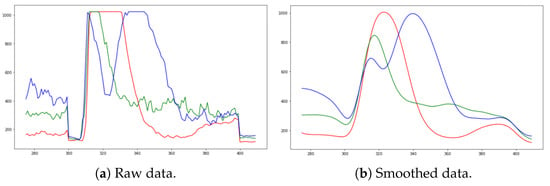

4.2. Data Preprocessing

Once the EMG signals were collected and stored on the computer, the dataset was built. It covers six facial expressions: happiness, anger, surprise, fear, disgust, and sadness. Each sample is a two-dimensional array of 100 time steps from each of the 3 EMG sensors (i.e., [100][3]), giving 3600 labeled samples in total. However, plotting these samples shows that noise components were injected into the analog signal. Therefore, software smoothing filters were applied to improve the signal, and the signal-to-noise ratio (SNR) was used to select the most suitable smoothing algorithm [42,43,44]. The results are shown in Table 3, together with a statistical description of the signal: mean, standard deviation (SD), and coefficient of variation. Based on these statistics, the Gaussian filter was selected, since it yields less variability in the smoothed samples and a better SNR. Figure 4 shows an example of how the Gaussian filter smooths the signal and reduces the noise.
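The smoothing and scoring steps can be sketched in Python. This is a minimal sketch under stated assumptions: the Gaussian width `sigma` is a hypothetical value, the noise is estimated as the difference between the raw and smoothed signals, and SNR is computed as a power ratio in decibels; the exact SNR definition behind Table 3 is not given here. The synthetic signal only stands in for a [100][3] EMG sample.

```python
import numpy as np
from scipy.ndimage import gaussian_filter1d


def smooth_and_score(raw: np.ndarray, sigma: float = 2.0) -> dict:
    """Gaussian-smooth each sensor column of a [100][3] sample and score the result."""
    raw = raw.astype(float)
    smoothed = gaussian_filter1d(raw, sigma=sigma, axis=0)  # smooth along time
    residual = raw - smoothed                               # noise estimate (assumption)
    snr_db = 10.0 * np.log10(np.mean(smoothed**2, axis=0) / np.mean(residual**2, axis=0))
    mean = smoothed.mean(axis=0)
    sd = smoothed.std(axis=0)
    return {"smoothed": smoothed, "snr_db": snr_db,
            "mean": mean, "sd": sd, "cv": sd / mean}  # cv: coefficient of variation


# Synthetic stand-in: one activation burst per sensor plus white noise, in ADC units.
rng = np.random.default_rng(0)
t = np.arange(100)
clean = 300.0 + 200.0 * np.exp(-((t - 50) / 15.0) ** 2)
raw = clean[:, None] + rng.normal(0.0, 20.0, size=(100, 3))

result = smooth_and_score(raw)
print("SNR (dB) per sensor:", np.round(result["snr_db"], 1))
print("Coefficient of variation:", np.round(result["cv"], 3))
```

A comparison like the one in Table 3 would repeat this scoring on the output of each candidate filter and keep the one with the higher SNR and lower variability.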

Table 3.

SNR analysis of the smoothing algorithms applied to the EMG analog signals.

Figure 4.

Anger representation by EMG signals. Y-axis: Analog-to-digital converter resolution; X-axis: Sample size. Sensor 1: (–); Sensor 2: (–); Sensor 3: (–).

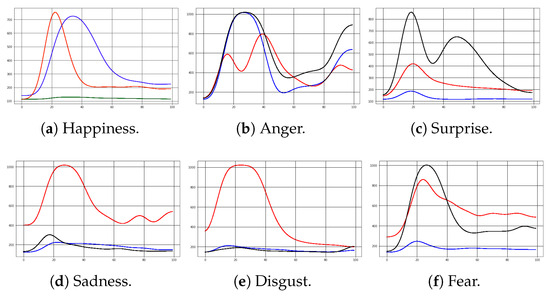

4.3. Data Preparation

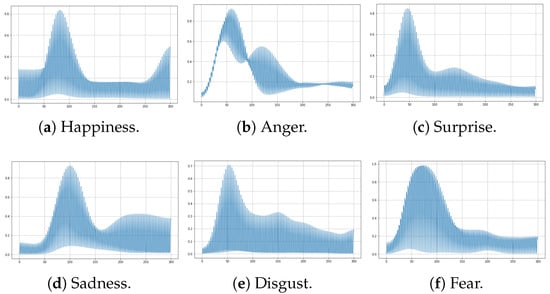

Once the EMG data are clean, they must be reshaped to suit the ML models. Deep learning methods accept multidimensional inputs, matching how the dataset is already organized (i.e., each sample is a [100][3] array). However, the classical ML methods described in the following sections require one-dimensional input. Therefore, the dataset was also reshaped into one-dimensional data by merging the three sensors into one signal: the signals from sensors 2 and 3 were appended after that of sensor 1, so each sample became a [300][1] array. Both datasets were split across participants into training and test sets of 80% and 20%, respectively, to obtain generalized models and unbiased results. This procedure was repeated ten times with random splits to obtain balanced classification results. Figure 5 and Figure 6 show samples of the multidimensional and one-dimensional data for each facial expression.
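Both preparation steps can be sketched with NumPy and scikit-learn. This is a minimal sketch, not the authors' code: `to_one_dimensional` is a hypothetical helper that appends sensors 2 and 3 after sensor 1, and scikit-learn's `GroupShuffleSplit` is one way to obtain ten random 80/20 splits in which no participant appears in both sets. The random arrays are placeholders with the dataset's shapes (30 participants × 120 recordings = 3600 samples).

```python
import numpy as np
from sklearn.model_selection import GroupShuffleSplit


def to_one_dimensional(sample: np.ndarray) -> np.ndarray:
    """Turn a [100][3] sample into [300]: sensor 1, then sensor 2, then sensor 3."""
    # Column-major ("F") order reads column 0 fully, then column 1, then column 2.
    return sample.flatten(order="F")


# Placeholder data with the dataset's shapes; replace with the real recordings.
rng = np.random.default_rng(0)
X_multi = rng.random((3600, 100, 3))
y = rng.integers(0, 6, size=3600)                 # six expression labels
participants = np.repeat(np.arange(30), 120)      # participant ID of each sample

X_flat = np.stack([to_one_dimensional(s) for s in X_multi])  # shape (3600, 300)

# Ten random splits; test_size=0.2 applies to participants (groups), not samples.
splitter = GroupShuffleSplit(n_splits=10, test_size=0.2, random_state=42)
splits = list(splitter.split(X_flat, y, groups=participants))
for train_idx, test_idx in splits:
    shared = np.intersect1d(participants[train_idx], participants[test_idx])
    assert shared.size == 0  # no participant appears in both sets
```

Splitting by participant rather than by sample keeps recordings of the same person out of both sets, which is what makes the test accuracy an estimate for unseen users.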

Figure 5.

Multidimensional EMG signals. Y-axis: Analog-to-digital converter resolution; X-axis: Sample size. Sensor 1: (–); Sensor 2: (–); Sensor 3: (–) or (–).

Figure 6.

One-dimensional EMG signal. Y-axis: Analog-to-digital converter resolution; X-axis: Sample size.

4.4. Model Design

This subsection presents the supervised classification algorithms, trained with the one-dimensional training set, and the neural network models, trained with the multidimensional and one-dimensional training sets.

4.4.1. Supervised Classification Algorithms

Classification algorithms can learn using different mathematical approaches. Reviewed works (e.g., [24,45,46]) mention the most common classification methods, grouped by approach:

- Distance-based: K-nearest neighbors (KNN).

- Model-based: Support vector machine (SVM).

- Density-based: Bayesian classifier (BC).

- Heuristic: Decision tree (DT).
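The four families listed above map directly onto standard scikit-learn estimators. The following is a minimal sketch, assuming default hyperparameters and Gaussian naive Bayes as the Bayesian classifier; the synthetic data from `make_classification` only mimic the one-dimensional input shape (300 features, six classes) and say nothing about the accuracies reported in Section 5.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Placeholder data with the one-dimensional shape used here: 300 features, 6 classes.
X, y = make_classification(n_samples=600, n_features=300, n_informative=20,
                           n_classes=6, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

models = {
    "KNN (distance-based)": KNeighborsClassifier(n_neighbors=5),
    "SVM (model-based)": SVC(kernel="rbf"),
    "Naive Bayes (density-based)": GaussianNB(),
    "Decision tree (heuristic)": DecisionTreeClassifier(random_state=0),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)  # mean accuracy on the test split
    print(f"{name}: accuracy = {scores[name]:.2f}")
```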

4.4.2. Neural Networks

Neural networks learn to extract relevant features as data pass through their architecture, with each neuron updating its weights during training so that the network outputs class probabilities. Therefore, they can learn from time series, sound signals, and images. Since the dataset is available in two forms (multidimensional and reshaped one-dimensional input), two neural network models were deployed with similar structures but different input shapes. The first model, called DP1, is shown in Table 4. The DP1 architecture is a sequential model of dense layers, meaning that each layer is fully connected to its preceding layer. The rectified linear unit (ReLU) activation is used for sparse activation of neurons, efficient computation, and better gradient propagation. Then, a flatten layer converts the multidimensional output into a vector. Finally, a dense layer with six neurons and a softmax activation function is added. Softmax maps the output vector to values in the interval (0, 1) that add up to 1, so they can be interpreted as probabilities. In this research, we do not use CNN architectures because, in preliminary tests with several architectures, they did not reach the accuracy of the standard neural networks.
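For reference, the two activation functions named above are defined as follows, where $\mathbf{z}$ is the vector of six pre-activation outputs of the last dense layer:

$$
\mathrm{ReLU}(z) = \max(0, z), \qquad
\operatorname{softmax}(\mathbf{z})_k = \frac{e^{z_k}}{\sum_{j=1}^{6} e^{z_j}}, \quad k = 1, \dots, 6.
$$

Each softmax output is positive and the six outputs sum to 1, which is why they can be read as class probabilities.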

Table 4.

Proposed multidimensional architecture of the neural network (DP1).

The second neural network model, DP2, uses an architecture similar to the first except for the flatten layer, since its input is already a one-dimensional array (see Table 5). Neither model includes a dropout layer; both architectures were obtained through trial-and-error experiments aimed at avoiding overfitting and reducing model complexity.

Table 5.

Proposed one-dimensional architecture of the neural network (DP2).
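The difference between DP1 and DP2 is mostly one of tensor shapes, which the following NumPy forward pass makes explicit. This is a minimal sketch, assuming a single hidden dense layer of hypothetical width 16 and random untrained weights (the actual layer sizes are those in Tables 4 and 5). As in common frameworks such as Keras, a dense layer applied to a [100][3] input acts on the last axis only, which is why DP1 needs a flatten layer before its six-neuron softmax output and DP2 does not.

```python
import numpy as np

rng = np.random.default_rng(0)
HIDDEN = 16      # hypothetical hidden width
N_CLASSES = 6    # six facial expressions


def relu(z: np.ndarray) -> np.ndarray:
    return np.maximum(0.0, z)


def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - np.max(z))  # subtract the max for numerical stability
    return e / e.sum()


def dense(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Fully connected layer acting on the last axis of x."""
    return x @ w + b


x_multi = rng.random((100, 3))            # one [100][3] sample
x_flat = x_multi.flatten(order="F")       # the same sample as [300]

# DP1-style: dense per time step -> flatten -> dense softmax output.
h1 = relu(dense(x_multi, rng.normal(size=(3, HIDDEN)), np.zeros(HIDDEN)))  # (100, 16)
h1_flat = h1.reshape(-1)                                                   # (1600,)
p_dp1 = softmax(dense(h1_flat, rng.normal(scale=0.01, size=(h1_flat.size, N_CLASSES)),
                      np.zeros(N_CLASSES)))

# DP2-style: the input is already one-dimensional, so no flatten layer is needed.
h2 = relu(dense(x_flat, rng.normal(size=(300, HIDDEN)), np.zeros(HIDDEN)))  # (16,)
p_dp2 = softmax(dense(h2, rng.normal(scale=0.1, size=(HIDDEN, N_CLASSES)),
                      np.zeros(N_CLASSES)))

print("DP1 class probabilities:", np.round(p_dp1, 3))
print("DP2 class probabilities:", np.round(p_dp2, 3))
```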

5. Results

This section presents the evaluation of the ML models using classification metrics derived from the confusion matrix. Then, the selected ML models are optimized to fit in the microcontroller’s memory and make inferences locally. Finally, the electronic device is presented.

5.1. Evaluation of ML Models

The ML models’ performance on the test set was evaluated through the confusion matrix, which provides the following classification metrics: precision, recall, F1-score, and accuracy. Table 6 shows that the KNN and SVM algorithms are unsuitable for recognizing facial expressions, since their performance is lower than expected; both techniques struggled to identify surprise and disgust. Conversely, naive Bayes and the decision tree achieve high scores in classifying facial expressions, even though their precision in detecting disgust is below 90%. Among the deep learning models, DP2 performed similarly to the classification algorithms (accuracy of about 93%), whereas DP1 achieved the highest accuracy, recall, and precision. Consequently, the decision tree algorithm and the neural network models DP1 and DP2 were quantized and exported to the microcontroller’s memory to test them in a real-world environment by predicting the labels of incoming data (i.e., making inferences locally). Naive Bayes was not considered in further stages, since its model could not be quantized and would need to be trained on the device, which demands high computational resources.
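Quantization typically converts 32-bit floating-point weights into 8-bit integers, roughly quartering a model's storage. The following is a minimal sketch of per-tensor affine int8 quantization, a common post-training scheme; it is an assumption for illustration, since the toolchain and scheme used for DP1, DP2, and the decision tree are not specified here. `quantize_int8` and `dequantize` are hypothetical helper names.

```python
import numpy as np


def quantize_int8(weights: np.ndarray):
    """Per-tensor affine quantization: float weights -> (int8 values, scale, zero point)."""
    w_min = min(float(weights.min()), 0.0)  # include 0.0 so it stays exactly representable
    w_max = max(float(weights.max()), 0.0)
    scale = (w_max - w_min) / 255.0 or 1.0  # 256 int8 levels; avoid a zero scale
    zero_point = int(round(-128 - w_min / scale))  # integer that represents 0.0
    q = np.clip(np.round(weights / scale) + zero_point, -128, 127).astype(np.int8)
    return q, scale, zero_point


def dequantize(q: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    """Map int8 values back to approximate float32 weights."""
    return (q.astype(np.float32) - zero_point) * scale


rng = np.random.default_rng(0)
w = rng.normal(size=(300, 16)).astype(np.float32)  # placeholder dense-layer weights
q, scale, zero_point = quantize_int8(w)
error = np.max(np.abs(dequantize(q, scale, zero_point) - w))
print(f"{w.nbytes} bytes -> {q.nbytes} bytes, max error {error:.4f} (scale {scale:.4f})")
```

The reconstruction error stays on the order of one quantization step (`scale`), which is the accuracy cost traded for the smaller flash footprint.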

Table 6.

Evaluation of ML classification models: Precision (Prec.), recall (Rec.), F1-score (F1-sco.), and accuracy (Acc.).
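The metrics in Table 6 follow from the confusion matrix in the usual way: precision divides each diagonal entry by its column sum, recall divides it by its row sum, and the F1-score is their harmonic mean. The helper below is a minimal NumPy sketch of these per-class definitions; the 3-class matrix is a hypothetical toy example, not data from this study.

```python
import numpy as np


def metrics_from_confusion(cm: np.ndarray) -> dict:
    """Per-class precision, recall, F1 and overall accuracy.

    cm[i, j] counts samples whose true class is i and whose predicted class is j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    predicted = cm.sum(axis=0)  # column sums: samples predicted as each class
    actual = cm.sum(axis=1)     # row sums: samples truly in each class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return {"precision": precision, "recall": recall, "f1": f1,
            "accuracy": tp.sum() / cm.sum()}


# Hypothetical 3-class example (rows: true class, columns: predicted class).
cm = np.array([[8, 2, 0],
               [1, 9, 0],
               [0, 0, 10]])
m = metrics_from_confusion(cm)
print({k: np.round(v, 3) for k, v in m.items()})
```

In this toy case, 27 of 30 samples lie on the diagonal, so the accuracy is 0.9, and class 0 has precision 8/9 and recall 0.8.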

5.2. Model Optimization and Deployment

A real-world test was designed to collect data from five women and ten men who were not involved in training the ML models. Participants first expressed different facial expressions without external stimuli; afterward, they were shown images and reacted to them naturally. First, the decision tree algorithm was placed in the microcontroller’s memory to predict people’s facial expressions. This model needs 4 Kbytes of flash and 2 Kbytes of RAM and takes 2 s per inference. Its accuracy is 79% when people are not stimulated by external factors and 85% when they are. Second, the DP1 model was placed in the microcontroller’s memory to run the same test; it uses 150 Kbytes of flash and 10 Mbytes of RAM and makes decisions in 8 s, recognizing facial expressions with 88% and 92% accuracy, respectively. For its part, DP2 uses 120 Kbytes of flash and 6.5 Mbytes of RAM, makes decisions in 5.5 s, and recognizes facial expressions with 86% and 90% accuracy, respectively. Therefore, DP2 was selected for the final device since, although its accuracy is lower than that of DP1, it consumes 55 mW rather than 80 mW, a large reduction in power consumption with similar performance.
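The trade-off behind this choice can also be expressed as energy per inference, $E = P\,t$. This is a rough estimate, assuming the reported power draw (80 mW for DP1, 55 mW for DP2) applies throughout each inference time (8 s and 5.5 s, respectively):

$$
E_{\text{DP1}} = 80\ \text{mW} \times 8\ \text{s} = 640\ \text{mJ}, \qquad
E_{\text{DP2}} = 55\ \text{mW} \times 5.5\ \text{s} = 302.5\ \text{mJ} \approx 0.47\,E_{\text{DP1}}.
$$

Under this assumption, DP2 uses less than half the energy of DP1 per decision, in addition to its lower power draw.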

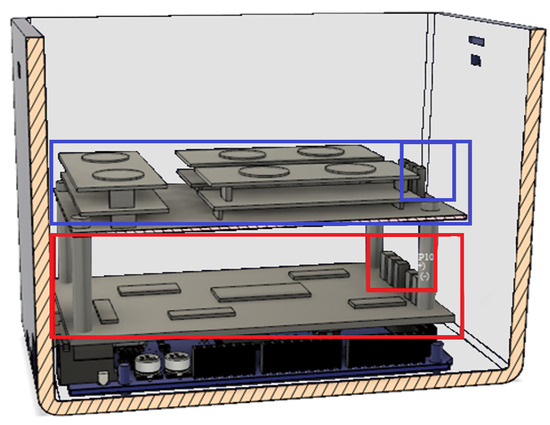

5.3. Electronic Device

After selecting the ML model, the next step was to design the PCB and the device’s case. To build a robust electronic board, we designed two shields that connect to the carrier board’s male headers. The first shield was placed on top of the carrier board and contains the analog amplifiers that couple the EMG signals to the analog-to-digital pins of the microcontroller. The second shield, for the sensors, was placed on top of the first. Both shields were powered by an external supply and connected by jumpers. The system is shown in Figure 7.

Figure 7.

Digital electronic system. Blue rectangle: sensors’ board; small blue and red boxes: jumpers; red rectangle: noise filter board placed over the microcontroller.

In addition, Figure 8 shows the 3D-printed case of the device. LEDs on top of the case indicate when the electronic system is collecting data and making decisions. A USB connection is also provided to power the system, recharge the battery, and send data to the computer.

Figure 8.

Emotion recognition electronic case. (a) Sensors’ PCB board. (b) Electronic case with electrodes.

6. Conclusions and Future Work

In this paper, the proposed facial expression recognition system based on EMG sensors and ML methods, which integrates an ML pipeline and an electronic system design, performed very well in classifying the six principal facial expressions. A deep learning model with a multidimensional array as input proved to be an acceptable solution compared to classical classification methods. When the multidimensional data were reshaped into one-dimensional data, the model achieved similar performance with lower power consumption, an important improvement for battery-powered electronic devices. Additionally, the deep learning model was quantized and compiled into the microcontroller to avoid sending information to the cloud. Therefore, the electronic system is portable and can send information to computers with low computational resources, since the model does not need to be updated. Furthermore, the ML pipeline includes an adequate data collection step, with EMG sensors placed on the face according to the Facial Action Coding System, which describes the specific facial muscle activation. As a result, the electronic system was built in a case with a noise cancellation board between the sensors and the microcontroller.

Moreover, the deep learning model correctly recognizes over 90% of human expressions in women and men. It also demonstrated that posed expressions without stimuli can be detected with sensors, and showed that natural facial expressions are standardized across people. Finally, this research provides a new perspective on recognizing human expressions with a portable device. Although the dataset contains both spontaneous and posed emotions, there were almost no variations between them, and we do not attribute this to EMG sensor errors. However, when the user needs to respond naturally, the system can detect those minor electrical variations.

In future work, the proposed electronic system will be further expanded and optimized for use in medical treatments, with samples of people who have low facial mobility or facial muscle limitations.

Author Contributions

P.A.S.-D.: original draft preparation, investigation, and software; P.D.R.-M.: methodology, formal analysis, and writing–review and editing; W.H.: resources, project administration, supervision, writing–review and editing, and funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This research and the APC were funded by the Universidad de Las Americas (UDLA), Quito, Ecuador (Research project: IEA.WHP.21.02).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wang, M.; Lee, W.; Shu, L.; Kim, Y.S.; Park, C.H. Development and Analysis of an Origami-Based Elastomeric Actuator and Soft Gripper Control with Machine Learning and EMG Sensors. Sensors 2024, 24, 1751.

- Donato, G.; Bartlett, M.S.; Hager, J.C.; Ekman, P.; Sejnowski, T.J. Classifying Facial Actions. IEEE Trans. Pattern Anal. Mach. Intell. 1999, 21, 974–989.

- Alarcão, S.M.; Fonseca, M.J. Emotions Recognition Using EEG Signals: A Survey. IEEE Trans. Affect. Comput. 2019, 10, 374–393.

- Bonifati, P.; Baracca, M.; Menolotto, M.; Averta, G.; Bianchi, M. A Multi-Modal Under-Sensorized Wearable System for Optimal Kinematic and Muscular Tracking of Human Upper Limb Motion. Sensors 2023, 23, 3716.

- Dino, H.I.; Abdulrazzaq, M.B. Facial Expression Classification Based on SVM, KNN and MLP Classifiers. In Proceedings of the 2019 International Conference on Advanced Science and Engineering (ICOASE), Zakho-Duhok, Iraq, 2–4 April 2019; pp. 70–75.

- Doheny, E.P.; Goulding, C.; Flood, M.W.; Mcmanus, L.; Lowery, M.M. Feature-Based Evaluation of a Wearable Surface EMG Sensor Against Laboratory Standard EMG During Force-Varying and Fatiguing Contractions. IEEE Sens. J. 2020, 20, 2757–2765.

- Degirmenci, M.; Ozdemir, M.A.; Sadighzadeh, R.; Akan, A. Emotion Recognition from EEG Signals by Using Empirical Mode Decomposition. In Proceedings of the 2018 Medical Technologies National Congress (TIPTEKNO), Magusa, Cyprus, 8–10 November 2018; pp. 1–4.

- Pham, T.D.; Duong, M.T.; Ho, Q.T.; Lee, S.; Hong, M.C. CNN-Based Facial Expression Recognition with Simultaneous Consideration of Inter-Class and Intra-Class Variations. Sensors 2023, 23, 9658.

- Bian, Y.; Küster, D.; Liu, H.; Krumhuber, E.G. Understanding Naturalistic Facial Expressions with Deep Learning and Multimodal Large Language Models. Sensors 2024, 24, 126.

- Borelli, G.; Jovic Bonnet, J.; Rosales Hernandez, Y.; Matsuda, K.; Damerau, J. Spectral-Distance-Based Detection of EMG Activity From Capacitive Measurements. IEEE Sens. J. 2018, 18, 8502–8509.

- Song, T.; Zheng, W.; Song, P.; Cui, Z. EEG Emotion Recognition Using Dynamical Graph Convolutional Neural Networks. IEEE Trans. Affect. Comput. 2020, 11, 532–541.

- Ekman, P. Universal Facial Expresions of Emotion. Calif. Ment. Health Res. Dig. 1970, 8, 46. [Google Scholar] [CrossRef]

- Cai, Y.; Guo, Y.; Jiang, H.; Huang, M.C. Machine-learning approaches for recognizing muscle activities involved in facial expressions captured by multi-channels surface electromyogram. Smart Health 2018, 5, 15–25. [Google Scholar] [CrossRef]

- Chen, S.; Gao, Z.; Wang, S. Emotion recognition from peripheral physiological signals enhanced by EEG. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 2827–2831. [Google Scholar] [CrossRef]

- Kamavuako, E.N. On the Applications of EMG Sensors and Signals. Sensors 2022, 22, 7966. [Google Scholar] [CrossRef] [PubMed]

- Thiam, P.; Kessler, V.; Amirian, M.; Bellmann, P.; Layher, G.; Zhang, Y.; Velana, M.; Gruss, S.; Walter, S.; Traue, H.C.; et al. Multi-Modal Pain Intensity Recognition Based on the SenseEmotion Database. IEEE Trans. Affect. Comput. 2021, 12, 743–760. [Google Scholar] [CrossRef]

- Perusquía-Hernández, M.; Hirokawa, M.; Suzuki, K. A Wearable Device for Fast and Subtle Spontaneous Smile Recognition. IEEE Trans. Affect. Comput. 2017, 8, 522–533. [Google Scholar] [CrossRef]

- Guendil, Z.; Lachiri, Z.; Maaoui, C.; Pruski, A. Multiresolution framework for emotion sensing in physiological signals. In Proceedings of the 2016 2nd International Conference on Advanced Technologies for Signal and Image Processing (ATSIP), Monastir, Tunisia, 21–23 March 2016; pp. 793–797. [Google Scholar] [CrossRef]

- Ghare, P.S.; Paithane, A. Human emotion recognition using non linear and non stationary EEG signal. In Proceedings of the 2016 International Conference on Automatic Control and Dynamic Optimization Techniques (ICACDOT), Pune, India, 9–10 September 2016; pp. 1013–1016. [Google Scholar] [CrossRef]

- Shin, J.; Maeng, J.; Kim, D.H. Inner Emotion Recognition Using Multi Bio-Signals. In Proceedings of the 2018 IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia), JeJu, Korea, 24–26 June 2018; pp. 206–212. [Google Scholar] [CrossRef]

- Wang, X.h.; Zhang, T.; Xu, X.m.; Chen, L.; Xing, X.f.; Chen, C.L.P. EEG Emotion Recognition Using Dynamical Graph Convolutional Neural Networks and Broad Learning System. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 1240–1244. [Google Scholar] [CrossRef]

- Kollias, D.; Zafeiriou, S. Exploiting Multi-CNN Features in CNN-RNN Based Dimensional Emotion Recognition on the OMG in-the-Wild Dataset. IEEE Trans. Affect. Comput. 2021, 12, 595–606. [Google Scholar] [CrossRef]

- Zhao, Y.; Yang, J.; Lin, J.; Yu, D.; Cao, X. A 3D Convolutional Neural Network for Emotion Recognition based on EEG Signals. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Song, T.; Liu, S.; Zheng, W.; Zong, Y.; Cui, Z.; Li, Y.; Zhou, X. Variational Instance-Adaptive Graph for EEG Emotion Recognition. IEEE Trans. Affect. Comput. 2021, 14, 343–356. [Google Scholar] [CrossRef]

- Li, G.; Ouyang, D.; Yuan, Y.; Li, W.; Guo, Z.; Qu, X.; Green, P. An EEG Data Processing Approach for Emotion Recognition. IEEE Sens. J. 2022, 22, 10751–10763. [Google Scholar] [CrossRef]

- Jiang, M.; Rahmani, A.M.; Westerlund, T.; Liljeberg, P.; Tenhunen, H. Facial Expression Recognition with sEMG Method. In Proceedings of the 2015 IEEE International Conference on Computer and Information Technology; Ubiquitous Computing and Communications; Dependable, Autonomic and Secure Computing; Pervasive Intelligence and Computing, Liverpool, UK, 26–28 October 2015; pp. 981–988. [Google Scholar] [CrossRef]

- Mithbavkar, S.A.; Shah, M.S. Recognition of Emotion Through Facial Expressions Using EMG Signal. In Proceedings of the 2019 International Conference on Nascent Technologies in Engineering (ICNTE), Navi Mumbai, India, 4–5 January 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Das, P.; Bhattacharyya, J.; Sen, K.; Pal, S. Assessment of Pain using Optimized Feature Set from Corrugator EMG. In Proceedings of the 2020 IEEE Applied Signal Processing Conference (ASPCON), Kolkata, India, 7–9 October 2020; pp. 349–353. [Google Scholar] [CrossRef]

- Mithbavkar, S.A.; Shah, M.S. Analysis of EMG Based Emotion Recognition for Multiple People and Emotions. In Proceedings of the 2021 IEEE 3rd Eurasia Conference on Biomedical Engineering, Healthcare and Sustainability (ECBIOS), Tainan, Taiwan, 28–30 May 2021; pp. 1–4. [Google Scholar] [CrossRef]

- Ang, L.; Belen, E.; Bernardo, R.; Boongaling, E.; Briones, G.; Coronel, J. Facial expression recognition through pattern analysis of facial muscle movements utilizing electromyogram sensors. In Proceedings of the 2004 IEEE Region 10 Conference TENCON 2004, Chiang Mai, Thailand, 24 November 2004; Volume C. pp. 600–603. [Google Scholar] [CrossRef]

- Gruebler, A.; Suzuki, K. A Wearable Interface for Reading Facial Expressions Based on Bioelectrical Signals. In Proceedings of the International Conference on Kansei Engineering and Emotion Research 2010 (KEER2010), Paris, France, 2–4 March 2010; p. 247. [Google Scholar]

- Inzelberg, L.; Rand, D.; Steinberg, S.; David-Pur, M.; Hanein, Y. A Wearable High-Resolution Facial Electromyography for Long Term Recordings in Freely Behaving Humans. Sci. Rep. 2018, 8, 2058. [Google Scholar] [CrossRef] [PubMed]

- Sato, W.; Murata, K.; Uraoka, Y.; Shibata, K.; Yoshikawa, S.; Furuta, M. Emotional valence sensing using a wearable facial EMG device. Sci. Rep. 2021, 11, 5757. [Google Scholar] [CrossRef]

- Preston, D.C.; Shapiro, B.E. 15-Basic Electromyography: Analysis of Motor Unit Action Potentials. In Electromyography and Neuromuscular Disorders, 3rd ed.; Preston, D.C., Shapiro, B.E., Eds.; W.B. Saunders: London, UK, 2013; pp. 235–248. [Google Scholar] [CrossRef]

- Parsaei, H.; Stashuk, D.W. EMG Signal Decomposition Using Motor Unit Potential Train Validity. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 21, 265–274. [Google Scholar] [CrossRef]

- Ekman, P.; Rosenberg, E.L. What the Face Reveals: Basic and Applied Studies of Spontaneous Expression Using the Facial Action Coding System (FACS); Oxford University Press: New York, NY, USA, 2012; pp. 1–672. [Google Scholar] [CrossRef]

- Lewinski, P.; Den Uyl, T.M.; Butler, C. Automated facial coding: Validation of basic emotions and FACS AUs in facereader. J. Neurosci. Psychol. Econ. 2014, 7, 227–236. [Google Scholar] [CrossRef]

- Gokcesu, K.; Ergeneci, M.; Ertan, E.; Gokcesu, H. An Adaptive Algorithm for Online Interference Cancellation in EMG Sensors. IEEE Sens. J. 2019, 19, 214–223. [Google Scholar] [CrossRef]

- Ahmed, O.; Brifcani, A. Gene Expression Classification Based on Deep Learning. In Proceedings of the 2019 4th Scientific International Conference Najaf (SICN), Al-Najef, Iraq, 29–30 April 2019; pp. 145–149. [Google Scholar] [CrossRef]

- Turgunov, A.; Zohirov, K.; Nasimov, R.; Mirzakhalilov, S. Comparative Analysis of the Results of EMG Signal Classification Based on Machine Learning Algorithms. In Proceedings of the 2021 International Conference on Information Science and Communications Technologies (ICISCT), Tashkent, Uzbekistan, 3–5 November 2021; pp. 1–4. [Google Scholar] [CrossRef]

- Hou, C.; Cai, F.; Liu, F.; Cheng, S.; Wang, H. A Method for Removing ECG Interference From Lumbar EMG Based on Signal Segmentation and SSA. IEEE Sens. J. 2022, 22, 13309–13317. [Google Scholar] [CrossRef]

- Choi, Y.; Lee, S.; Sung, M.; Park, J.; Kim, S.; Choi, Y. Development of EMG-FMG Based Prosthesis With PVDF-Film Vibrational Feedback Control. IEEE Sens. J. 2021, 21, 23597–23607. [Google Scholar] [CrossRef]

- Rosero-Montalvo, P.D.; López-Batista, V.F.; Peluffo-Ordóñez, D.H. A New Data-Preprocessing-Related Taxonomy of Sensors for IoT Applications. Information 2022, 13, 241. [Google Scholar] [CrossRef]

- Kowalski, P.; Smyk, R. Review and comparison of smoothing algorithms for one-dimensional data noise reduction. In Proceedings of the 2018 International Interdisciplinary PhD Workshop (IIPhDW), Świnouście, Poland, 9–12 May 2018; pp. 277–281. [Google Scholar] [CrossRef]

- Rosero-Montalvo, P.D.; Fuentes-Hernández, E.A.; Morocho-Cayamcela, M.E.; Sierra-Martínez, L.M.; Peluffo-Ordóñez, D.H. Addressing the Data Acquisition Paradigm in the Early Detection of Pediatric Foot Deformities. Sensors 2021, 21, 4422. [Google Scholar] [CrossRef] [PubMed]

- Ergin, T.; Ozdemir, M.A.; Akan, A. Emotion Recognition with Multi-Channel EEG Signals Using Visual Stimulus. In Proceedings of the 2019 Medical Technologies Congress (TIPTEKNO), Izmir, Turkey, 3–5 October 2019; pp. 1–4. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).